Abstract

Background

It is unclear how well comprehensive evaluations conducted before clinical clerkships (CCs), such as the objective structured clinical examination (OSCE) and computer-based testing (CBT), reflect the performance of medical students in CCs. Here we retrospectively analyzed correlations between OSCE and CBT scores and CC performance.

Methods

Ethical approval was obtained from our institutional review board. We analyzed correlations between OSCE and CBT scores and CC performance in 94 medical students who took the OSCE and CBT as 4th-year students in 2017 and participated in the basic CC as 5th-year students in 2018.

Results

Total scores for OSCE and CBT were significantly correlated with CC performance (P<0.001, each). More specifically, medical interview and chest examination components of the OSCE were significantly correlated with CC performance (P = 0.001, each), while the remaining five components of the OSCE were not.

Conclusion

Our findings suggest that the OSCE and CBT play important roles in predicting CC performance in the Japanese medical education context. Among the OSCE components, medical interview and chest examination appeared to be important for predicting CC performance.

Introduction

Seamless medical education, in which students gradually acquire professional abilities from their undergraduate years through their postgraduate training, is important from the perspective of outcome-based education [1]. To achieve this goal, effective clinical training methods are needed that allow a smooth transition from undergraduate medical education to basic skill acquisition as a postgraduate [2].

In Japan, clinical clerkships (CCs) form the basis of clinical training. In contrast to conventional clinical training, which involves only observation without practice, CCs have students participate as members of a medical team and perform actual medical procedures and care. The range of procedures that students may perform is defined, and these procedures are carried out under the supervision of an instructing doctor [3]. This enables students to acquire practical clinical skills, and it requires them to have a sense of identity and personal responsibility [4]. Clinical training throughout the various departments of a hospital is carried out in the form of CCs, which are driven by curricula for diagnosis and treatment [5].

Assuring the basic clinical competency of medical students before CCs is essential from a medical safety perspective. To validate this competency, the objective structured clinical examination (OSCE) and computer-based testing (CBT) were introduced in 2005 as standardized tests organized by the Common Achievement Tests Organization (CATO) [http://www.cato.umin.jp/]. The OSCE evaluates clinical and communication skills using simulated patients and simulators [6][7], and the CBT evaluates basic clinical knowledge. The OSCE and CBT are mandatory for 4th-year students in Japanese medical schools. Students who pass both examinations are recognized as “student doctors” by the Association of Japanese Medical Colleges, and only after this certification can they participate in CCs.

Although previous studies have assessed CC performance using the mini-clinical evaluation exercise (mini-CEX) [8][9], the relationship between OSCE or CBT scores and CC performance has not been fully validated. Furthermore, no study has evaluated which skill components measured in the OSCE reflect student performance in CCs in the Japanese medical education context.

The present study therefore aimed to retrospectively analyze the correlations of CBT scores, and of total and component OSCE scores, with CC performance in the Japanese medical education context.

Material and methods

Ethical considerations

This study was approved by the Research Ethics Committee of Osaka Medical College (No.2806). All data were fully anonymized before we accessed them, and the research ethics committee waived the requirement for informed consent.

Study population

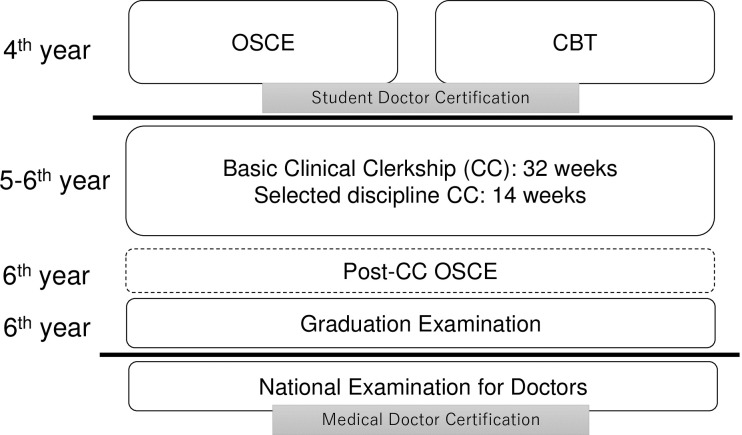

As at other medical schools in Japan, medical students at Osaka Medical College take the OSCE and CBT in their 4th year and participate in CCs in their 5th and 6th years. Before the OSCE and CBT, students have completed all basic and clinical medicine lectures as well as simulation-based skills training. After completing their CCs, students take the graduation examination. From 2020, CATO will formally introduce a post-CC OSCE to evaluate the clinical skills developed during CCs (Fig 1).

Fig 1. Schematic summarizing relationships between objective structured clinical examination (OSCE), computer-based testing (CBT), and clinical clerkships (CCs) at Osaka Medical College.

Subjects of the present study were medical students at Osaka Medical College who were 4th-year students in 2017 and 5th-year students in 2018. Students who did not advance to the 5th year in 2018 were excluded.

Study measures

OSCE content and evaluation

The OSCE evaluates various aspects of clinical competency and covers seven themes: medical interview, head and neck examination, chest examination, abdominal examination, neurological examination, emergency response, and basic clinical technique. It is carried out at seven stations: one station is dedicated to a 10-min medical interview, and each of the remaining six stations assesses a physical examination or basic skill in 5 min. Within these 5 or 10 minutes, students perform core clinical skills such as medical interviewing and physical examination [10].

In the present study, student performance at each station was evaluated by two examiners using a checklist, and the score for each OSCE component was the average of the two examiners' scores. The checklist covers communication, medical safety, and consultation skills. For standardization, examiners underwent approximately three hours of evaluator training based on common text and video materials provided by CATO. Each student was examined at all seven stations, and the total OSCE score was calculated as the average of the seven station scores. Student identity is strictly verified by checking names and ID numbers, and examiners from other universities are routinely invited to validate internal evaluations during the OSCE.
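The scoring rule above (the mean of two examiners at each station, then the mean of the seven stations) can be sketched in Python as follows. This is an illustration under stated assumptions, not CATO's scoring software: the station labels, the function names, and the premise that checklist scores are already expressed as percentages are ours. Because every student sits all seven stations, the cohort mean of the total score equals the mean of the seven component means, and the component averages in Table 1 do reproduce the reported total OSCE average of 87.5.

```python
from statistics import mean

# Hypothetical labels for the seven OSCE stations described in the text.
STATIONS = (
    "medical interview",
    "head and neck examination",
    "chest examination",
    "abdominal examination",
    "neurological examination",
    "emergency response",
    "basic clinical technique",
)


def station_score(examiner_a: float, examiner_b: float) -> float:
    """Component score for one station: the mean of the two examiners' scores."""
    return (examiner_a + examiner_b) / 2


def total_osce_score(paired_scores: dict[str, tuple[float, float]]) -> float:
    """Total OSCE score: the unweighted mean of the seven station scores."""
    missing = [s for s in STATIONS if s not in paired_scores]
    if missing:
        raise ValueError(f"missing stations: {missing}")
    return mean(station_score(*paired_scores[s]) for s in STATIONS)


# Hypothetical student whose two examiners gave 85 and 90 at every station.
student = {s: (85.0, 90.0) for s in STATIONS}
print(total_osce_score(student))  # 87.5

# Consistency check against the component averages reported in Table 1.
table1_component_means = [80.3, 92.2, 81.8, 94.3, 90.8, 90.6, 82.4]
print(round(mean(table1_component_means), 1))  # 87.5
```

Averaging rather than summing keeps the total on the same percentage scale as each component, which is what makes the Table 1 columns directly comparable.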

CBT content and evaluation

The CBT consists of multiple-choice questions and extended matching items, and students answer 320 questions on basic clinical knowledge over six hours. Only 240 of these questions, whose difficulty and discriminating power had been validated using previously pooled data, are used for evaluation; the remaining 80 are trial questions that are not scored. The questions are standardized by CATO and cover clinical disciplines and the related basic medical sciences. CBT scores are machine-calculated, and the scoring rate was used for evaluation.
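The description above implies that only the 240 validated items count toward a student's score. Assuming that the "scoring rate" is the plain percentage of those 240 items answered correctly (the text does not give CATO's exact formula, so this is an assumption), the calculation might look like the sketch below. The `Item` record and its field names are hypothetical.

```python
from dataclasses import dataclass

SCORED_ITEMS = 240  # items with validated difficulty and discriminating power
TRIAL_ITEMS = 80    # trial items that do not count toward the score


@dataclass(frozen=True)
class Item:
    """Hypothetical record of one CBT response."""
    item_id: int
    is_trial: bool
    correct: bool


def scoring_rate(responses: list[Item]) -> float:
    """Percentage of scored (non-trial) items answered correctly.

    Assumes plain percent-correct; CATO's actual scoring procedure may differ.
    """
    scored = [r for r in responses if not r.is_trial]
    n_trial = len(responses) - len(scored)
    if len(scored) != SCORED_ITEMS or n_trial != TRIAL_ITEMS:
        raise ValueError("expected 240 scored items and 80 trial items")
    return 100 * sum(r.correct for r in scored) / SCORED_ITEMS


# Hypothetical student: 192 of 240 scored items correct; trial answers are ignored.
responses = [Item(i, is_trial=False, correct=i < 192) for i in range(SCORED_ITEMS)]
responses += [Item(SCORED_ITEMS + i, is_trial=True, correct=True) for i in range(TRIAL_ITEMS)]
print(scoring_rate(responses))  # 80.0
```

Filtering out trial items before dividing ensures that answers to unvalidated questions cannot raise or lower the reported rate.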

Clinical clerkship (CC) content and evaluation

Medical students participate in a basic CC during their 5th year. The basic CC consists of rotations through all clinical departments of the hospital over 32 weeks, with each rotation lasting about one to two weeks. After completing the basic CC, students select a discipline they wish to study for 14 weeks (Figure).

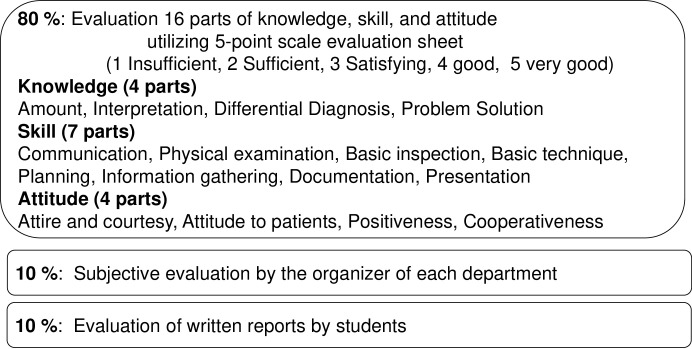

During the CCs, supervising (teaching) doctors in each department evaluate students' clinical skills using an evaluation sheet based on the mini-CEX and Direct Observation of Procedural Skills (DOPS) [11][12]. The CC score consists of a 5-point evaluation sheet with 16 items (80%), a subjective evaluation by the organizer of each department (10%), and a written report (10%) (Fig 2).

Fig 2. Contents of clinical clerkships (CC) evaluation in our college.

The CC score consists of a 5-point evaluation sheet with 16 items (80%), a subjective evaluation by the organizer (10%), and a written report (10%).

Scores for each CC are collected by the medical education center and are used to calculate an average score. In this study, we used the basic CC (32 weeks) score, since all medical students are required to participate in the basic CC.
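The 80/10/10 weighting and the averaging across rotations can be expressed as a short calculation. The sketch below is illustrative only. The source does not state how the sixteen 5-point ratings are converted to a percentage or how the other two parts are scaled, so we assume that the ratings are summed and divided by the maximum of 80, that the organizer's evaluation and the report are already on a 0–100 scale, and that each rotation counts equally in the basic CC average. All function names are hypothetical.

```python
from statistics import mean

N_ITEMS = 16      # items on the 5-point evaluation sheet
MAX_RATING = 5
WEIGHTS = {"sheet": 0.80, "organizer": 0.10, "report": 0.10}


def sheet_percent(ratings: list[int]) -> float:
    """Assumed conversion: sum of the 16 ratings as a percentage of the maximum (80)."""
    if len(ratings) != N_ITEMS or not all(1 <= r <= MAX_RATING for r in ratings):
        raise ValueError("expected 16 ratings between 1 and 5")
    return 100 * sum(ratings) / (N_ITEMS * MAX_RATING)


def rotation_score(ratings: list[int], organizer_pct: float, report_pct: float) -> float:
    """Score for one department's CC: 80% sheet, 10% organizer, 10% report."""
    return (WEIGHTS["sheet"] * sheet_percent(ratings)
            + WEIGHTS["organizer"] * organizer_pct
            + WEIGHTS["report"] * report_pct)


def basic_cc_score(rotation_scores: list[float]) -> float:
    """Basic CC score: assumed unweighted mean over all department rotations."""
    return mean(rotation_scores)


# Hypothetical rotation: every item rated 4 (80%), organizer 70, report 90.
score = rotation_score([4] * N_ITEMS, organizer_pct=70, report_pct=90)
print(round(score, 2))  # 80.0
print(basic_cc_score([score, 76.0, 78.0]))
```

If rotations of different lengths were weighted by their duration instead, `basic_cc_score` would become a weighted mean; the text does not say which rule is used.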

Statistical analysis

Statistical analyses were performed using JMP® 11 (SAS Institute Inc., Cary, NC, USA). Correlations were assessed using Pearson's correlation coefficient. Data are presented as mean ± SD, and P < 0.05 was considered statistically significant.
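The analyses were run in JMP. For readers who want to reproduce the calculation elsewhere, the sketch below computes Pearson's r and its two-sided P value from the t distribution with n - 2 degrees of freedom, using only the Python standard library. The incomplete-beta routine follows the standard continued-fraction (Lentz) method and is not JMP's implementation; the function names are ours. With n = 94, the sketch gives P ≈ 0.001 for r = 0.329 (chest examination, Table 2) and P ≈ 0.08 for r = 0.179 (chest examination vs CBT, Table 3), in line with the reported values.

```python
import math


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson's product-moment correlation coefficient."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need two samples of equal length, n >= 3")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def _beta_cf(a: float, b: float, x: float, max_iter: int = 300, eps: float = 3e-14) -> float:
    """Continued fraction for the incomplete beta function (modified Lentz method)."""
    tiny = 1e-300
    c = 1.0
    d = 1.0 - (a + b) * x / (a + 1.0)
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        # Even and odd coefficients of the continued fraction for step m.
        for aa in (m * (b - m) * x / ((a - 1.0 + m2) * (a + m2)),
                   -(a + m) * (a + b + m) * x / ((a + m2) * (a + 1.0 + m2))):
            d = 1.0 + aa * d
            d = 1.0 / (d if abs(d) > tiny else tiny)
            c = 1.0 + aa / c
            c = c if abs(c) > tiny else tiny
            delta = d * c
            h *= delta
        if abs(delta - 1.0) < eps:
            break
    return h


def _reg_inc_beta(a: float, b: float, x: float) -> float:
    """Regularized incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    front = math.exp(math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                     + a * math.log(x) + b * math.log1p(-x))
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _beta_cf(a, b, x) / a
    return 1.0 - front * _beta_cf(b, a, 1.0 - x) / b


def pearson_p_value(r: float, n: int) -> float:
    """Two-sided P for H0: rho = 0, with t = r * sqrt((n - 2) / (1 - r**2)) on n - 2 df.

    P(|T| >= |t|) = I_{df/(df + t^2)}(df/2, 1/2), and df/(df + t^2) equals 1 - r^2.
    """
    if n < 3:
        raise ValueError("n must be at least 3")
    if abs(r) >= 1.0:
        return 0.0
    df = n - 2
    return _reg_inc_beta(df / 2.0, 0.5, 1.0 - r * r)


print(round(pearson_r([1, 2, 3, 4], [1, 3, 2, 4]), 3))  # 0.8
for r in (0.329, 0.377, 0.179):
    print(r, round(pearson_p_value(r, 94), 4))
```

Working from r and n alone is enough to check the P values in Tables 2 and 3, since the test statistic depends on nothing else.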

Results

We analyzed the scores of 94 medical students who took the OSCE and CBT in 2017 and participated in the basic CC in 2018. As shown in Table 1, mean scores were 87.5 ± 3.9 for the total OSCE, 80.1 ± 7.5 for the CBT, and 78.0 ± 2.1 for the CC.

Table 1. Scores for objective structured clinical examination (OSCE), computer-based testing (CBT), and clinical clerkship (CC).

| | Medical interview | Head and neck examination | Chest examination | Abdominal examination | Neurological examination | Emergency response | Basic technique | Total OSCE score | Computer-based testing | Clinical clerkship |
|---|---|---|---|---|---|---|---|---|---|---|
| Average | 80.3 | 92.2 | 81.8 | 94.3 | 90.8 | 90.6 | 82.4 | 87.5 | 80.1 | 78.0 |
| SD | 9.9 | 6.6 | 10.8 | 4.9 | 7.6 | 6.6 | 5.8 | 3.9 | 7.5 | 2.1 |

Correlations of OSCE and CBT scores with CC scores

Correlations of OSCE and CBT scores with CC scores are shown in Table 2. Total scores for OSCE and CBT were significantly correlated with CC scores (P<0.001 each). When analyzed by OSCE components, medical interview and chest examination scores were significantly correlated with CC scores (P = 0.001, each), while the remaining five component scores were not.

Table 2. Correlations of objective structured clinical examination (OSCE) and computer-based testing scores with clinical clerkship (CC) scores.

| | Medical interview | Head and neck examination | Chest examination | Abdominal examination | Neurological examination | Emergency response | Basic technique | Total OSCE score | Computer-based testing |
|---|---|---|---|---|---|---|---|---|---|
| R | 0 | 0.059 | 0.329 | 0.166 | 0.158 | 0.124 | 0.161 | 0.377 | 0.346 |
| Coefficient | 0.075 | 0.004 | 0.108 | 0.028 | 0.025 | 0.015 | 0.026 | 0.141 | 0.120 |
| P | 0.001* | 0.570 | 0.001* | 0.109 | 0.130 | 0.234 | 0.122 | <0.001* | <0.001* |

*P<0.05.

Correlations between OSCE and CBT scores

Total OSCE scores were not significantly correlated with CBT scores, and neither was any individual OSCE component score (Table 3).

Table 3. Correlations between scores of objective structured clinical examination (OSCE) components and computer-based testing (CBT).

| | Medical interview | Head and neck examination | Chest examination | Abdominal examination | Neurological examination | Emergency response | Basic technique | Total OSCE score |
|---|---|---|---|---|---|---|---|---|
| R | -0.045 | 0.0618 | 0.179 | -0.021 | -0.059 | 0.044 | 0.04 | 0.063 |
| Coefficient | 0.021 | 0.004 | 0.032 | 0.0005 | 0.003 | 0.002 | 0.002 | 0.004 |
| P | 0.667 | 0.560 | 0.085 | 0.841 | 0.579 | 0.672 | 0.702 | 0.549 |

Discussion

Our study showed that total scores for the OSCE and CBT were significantly correlated with CC scores. This suggests that the OSCE and CBT can serve as indicators of CC performance in Japanese medical education. In the component analysis, medical interview and chest examination scores were significantly correlated with CC scores.

Physical examination and medical interviewing are essential skills, and the information they yield is important for diagnosis and treatment during CCs [13][14]. In clinical settings, physical findings are not infrequently overlooked or evaluated incorrectly. Incorrect assessment of physical findings can lead to diagnostic errors, which may result in adverse outcomes for patients [15][16]. Accordingly, from the perspectives of clinical competency and outcome-based education, assuring the quality of both the technical and non-technical skills of medical students before CCs is essential.

In the present study, total scores for the OSCE and CBT showed modest but significant correlations with CC scores (r = 0.377 and 0.346, respectively). These data support the use of the OSCE and CBT to assure competency before students participate in CCs. Interestingly, no component of the OSCE was significantly correlated with the CBT score. This suggests that the OSCE and CBT evaluate different aspects of competency, and that combining both could give a better picture of medical students' competency before CCs.

When OSCE components were considered individually, medical interview and chest examination components were significantly correlated with CC performance, while the remaining five components were not. One potential explanation for this is that, of the seven components of the OSCE, medical interviews and chest examinations are performed the most often during CCs. Thus, focusing training on these skills may contribute to better CC performance [17][18].

In contrast, the components other than medical interview and chest examination were not significantly correlated with CC performance. One possible reason is the lack of opportunities to use these skills during CCs. For example, medical students are not permitted to perform emergency responses such as advanced life support alone [19][20]. Because newly certified physicians are nonetheless expected to have these basic clinical competencies, educational methods that compensate for this gap are warranted [21].

To address this problem, we believe that simulation-based education (SBE) can compensate for the lack of opportunities to exercise these skills [22]. As SBE methods have been developed and are widely used to teach both technical and non-technical skills in medical education [23][24], combining SBE with CCs could help maximize the competency of medical students. For example, students could rehearse on a simulator a resuscitation they observed in the emergency ward. Such a combination may enhance CC performance.

Medical educators are therefore encouraged to improve CC programs by incorporating SBE to compensate for clinical skills that students rarely practice during CCs [25]. SBE can also be used for formative assessment to improve teaching and learning in clinical settings.

This study has several limitations. First, as data were obtained from a single institution, our findings may not be generalizable to other medical schools [26][27]. However, our results likely apply to medical schools in Japan, given the core medical curriculum adopted throughout the country. Second, CBT scores were generally high with little variation, which might have biased the correlation analysis. Third, to make the correlation analysis more accurate, we excluded students who did not progress to the 5th year, because we considered that the content of CCs may differ from year to year; however, this exclusion may itself have introduced bias. Fourth, we calculated the total OSCE score as the average of the seven stations, which may lack statistical justification. Lastly, we evaluated only the overall CC score. Correlations between the OSCE and CBT and specific aspects of CC performance may provide further insight into how these instruments relate to students' actual clinical practice. In this regard, it will be interesting to evaluate the relationship between CC performance and post-CC OSCE scores once the post-CC OSCE is implemented in the 2020 curriculum year.

In conclusion, our findings suggest that the OSCE and CBT play important roles in predicting CC performance. Among the OSCE components, medical interview and chest examination appeared particularly relevant for predicting CC performance.

Supporting information

(XLSX)

Data Availability

The data underlying the results presented in the study are available in the Supporting Information.

Funding Statement

The authors have no affiliation with any manufacturer of any device described in the manuscript and declare no financial interest in relation to the material described in the manuscript. Financial support for the study was provided by Osaka Medical College which had no role in study design, data collection and analysis, publication decisions, or manuscript preparation.

References

1. Ellaway RH, Graves L, Cummings BA (2016) Dimensions of integration, continuity and longitudinality in clinical clerkships. Med Educ 50:912–921. 10.1111/medu.13038

2. Hudson JN, Poncelet AN, Weston KM, Bushnell JA, Farmer EA (2017) Longitudinal integrated clerkships. Med Teach 39:7–13. 10.1080/0142159X.2017.1245855

3. Takahashi N, Aomatsu M, Saiki T, Otani T, Ban N (2018) Listen to the outpatient: qualitative explanatory study on medical students' recognition of outpatients' narratives in combined ambulatory clerkship and peer role-play. BMC Med Educ 18:229. 10.1186/s12909-018-1336-6

4. Murata K, Sakuma M, Seki S, Morimoto T (2014) Public attitudes toward practice by medical students: a nationwide survey in Japan. Teach Learn Med 26:335–343. 10.1080/10401334.2014.945030

5. Okayama M, Kajii E (2011) Does community-based education increase students' motivation to practice community health care?—a cross sectional study. BMC Med Educ 11:19. 10.1186/1472-6920-11-19

6. Tagawa M, Imanaka H (2010) Reflection and self-directed and group learning improve OSCE scores. Clin Teach 7:266–270. 10.1111/j.1743-498X.2010.00377.x

7. Ishikawa H, Hashimoto H, Kinoshita M, Fujimori S, Shimizu T, Yano E (2006) Evaluating medical students' non-verbal communication during the objective structured clinical examination. Med Educ 40:1180–1187. 10.1111/j.1365-2929.2006.02628.x

8. Humphrey-Murto S, Côté M, Pugh D, Wood TJ (2018) Assessing the Validity of a Multidisciplinary Mini-Clinical Evaluation Exercise. Teach Learn Med 30:152–161. 10.1080/10401334.2017.1387553

9. Rogausch A, Beyeler C, Montagne S, Jucker-Kupper P, Berendonk C, Huwendiek S, et al. (2015) The influence of students’ prior clinical skills and context characteristics on mini-CEX scores in clerkships–a multilevel analysis. BMC Med Educ 15:208.

10. Tagawa M, Imanaka H (2010) Reflection and self-directed and group learning improve OSCE scores. Clin Teach 7:266–270. 10.1111/j.1743-498X.2010.00377.x

11. Lörwald AC, Lahner FM, Mooser B, Perrig M, Widmer MK, Greif R, et al. (2019) Influences on the implementation of Mini-CEX and DOPS for postgraduate medical trainees' learning: A grounded theory study. Med Teach 41:448–456. 10.1080/0142159X.2018.1497784

12. Lörwald AC, Lahner FM, Nouns ZM, Berendonk C, Norcini J, Greif R, et al. (2018) The educational impact of Mini-Clinical Evaluation Exercise (Mini-CEX) and Direct Observation of Procedural Skills (DOPS) and its association with implementation: A systematic review and meta-analysis. PLoS One 13:e0198009. 10.1371/journal.pone.0198009

13. Bowen JL (2006) Educational strategies to promote clinical diagnostic reasoning. N Engl J Med 355:2217–2225. 10.1056/NEJMra054782

14. Nomura S, Tanigawa N, Kinoshita Y, Tomoda K (2015) Trialing a new clinical clerkship record in Japanese clinical training. Adv Med Educ Pract 6:563–565. 10.2147/AMEP.S90295

15. Shikino K, Ikusaka M, Ohira Y, Miyahara M, Suzuki S, Hirukawa M, et al. (2015) Influence of predicting the diagnosis from history on the accuracy of physical examination. Adv Med Educ Pract 6:143–148. 10.2147/AMEP.S77315

16. Kirkman MA, Sevdalis N, Arora S, Baker P, Vincent C, Ahmed M (2015) The outcomes of recent patient safety education interventions for trainee physicians and medical students: a systematic review. BMJ Open 5:e007705. 10.1136/bmjopen-2015-007705

17. Kassam A, Cowan M, Donnon T (2016) An objective structured clinical exam to measure intrinsic CanMEDS roles. Med Educ Online 21:31085. 10.3402/meo.v21.31085

18. Eftekhar H, Labaf A, Anvari P, Jamali A, Sheybaee-Moghaddam F (2012) Association of the pre-internship objective structured clinical examination in final year medical students with comprehensive written examinations. Med Educ Online 17:15958.

19. Couto LB, Durand MT, Wolff ACD, Restini CBA, Faria M Jr, Romão GS, et al. (2019) Formative assessment scores in tutorial sessions correlates with OSCE and progress testing scores in a PBL medical curriculum. Med Educ Online 24:1560862. 10.1080/10872981.2018.1560862

20. Dong T, Saguil A, Artino AR Jr, Gilliland WR, Waechter DM, Lopreaito J, et al. (2012) Relationship between OSCE scores and other typical medical school performance indicators: a 5-year cohort study. Mil Med 177:44–46. 10.7205/milmed-d-12-00237

21. Mukohara K, Kitamura K, Wakabayashi H, Abe K, Sato J, Ban N (2004) Evaluation of a communication skills seminar for students in a Japanese medical school: a non-randomized controlled study. BMC Med Educ 4:24. 10.1186/1472-6920-4-24

22. Hough J, Levan D, Steele M, Kelly K, Dalton M (2019) Simulation-based education improves student self-efficacy in physiotherapy assessment and management of paediatric patients. BMC Med Educ 16:463.

23. Offiah G, Ekpotu LP, Murphy S, Kane D, Gordon A, O'Sullivan M, et al. (2019) Evaluation of medical student retention of clinical skills following simulation training. BMC Med Educ 19:263. 10.1186/s12909-019-1663-2

24. Dong T, Zahn C, Saguil A, Swygert KA, Yoon M, Servey J, et al. (2017) The Associations Between Clerkship Objective Structured Clinical Examination (OSCE) Grades and Subsequent Performance. Teach Learn Med 29:280–285. 10.1080/10401334.2017.1279057

25. Schiekirka S, Reinhardt D, Heim S, Fabry G, Pukrop T, Anders S, et al. (2012) Student perceptions of evaluation in undergraduate medical education: a qualitative study from one medical school. BMC Med Educ 12:45. 10.1186/1472-6920-12-45

26. Näpänkangas R, Karaharju-Suvanto T, Pyörälä E, Harila V, Ollila P, Lähdesmäki R, et al. (2016) Can the results of the OSCE predict the results of clinical assessment in dental education? Eur J Dent Educ 20:3–8. 10.1111/eje.12126

27. Sahu PK, Chattu VK, Rewatkar A, Sakhamuri S (2019) Best practices to impart clinical skills during preclinical years of medical curriculum. J Educ Health Promot 8:57. 10.4103/jehp.jehp_354_18