Abstract

Virtual realities are powerful tools to analyze and manipulate interactions between animals and their environment and to enable measurements of neuronal activity during behavior. In many species, however, optical access to the brain and/or the behavioral repertoire are limited. We developed a high-resolution virtual reality for head-restrained adult zebrafish, which exhibit cognitive behaviors not shown by larvae. We noninvasively measured activity throughout the dorsal telencephalon by multiphoton calcium imaging. Fish in the virtual reality showed regular swimming patterns and were attracted to animations of conspecifics. Manipulations of visuo-motor feedback revealed neurons that responded selectively to the mismatch between the expected and the actual visual consequences of motor output. Such error signals were prominent in multiple telencephalic areas, consistent with models of predictive processing. A virtual reality system for adult zebrafish therefore provides opportunities to analyze neuronal processing mechanisms underlying higher brain functions including decision making, associative learning, and social interactions.

Introduction

Virtual realities (VRs) are powerful tools to examine how brains interact with the world through behavior1–8. For example, VR arenas allow experimenters to systematically modify the sensory consequences of behavioral outputs in order to analyze how brains generate predictions and plan behaviors9. Because animals in a VR can be physically restrained, neuronal activity can be measured during behavior using optical or electrophysiological methods that cannot be applied in freely moving animals2,10–14. VRs therefore offer unique opportunities for mechanistic analyses of brain functions such as object perception, learning and memory, and social behavior.

Higher brain functions involve transformations of activity patterns across distributed populations of neurons that can be measured using optical methods15–17. In small animals such as Drosophila, most neurons are accessible to high-resolution optical imaging approaches12, allowing for exhaustive and detailed analyses of neuronal population dynamics. In vertebrates, however, the fraction of optically accessible neurons is often severely constrained. Exceptions are small teleosts such as Danionella translucida, which is sufficiently small and transparent for optical activity measurements even at adult stages18. However, the behavioral repertoire of Danionella has not been explored in detail and molecular tools are only beginning to be developed. In zebrafish, optical measurements of neuronal activity have been performed at embryonic and larval stages up to seven days post fertilization13,16,19–22. At these stages, fish are transparent and can be restrained in agarose, but neuronal circuits are immature and the behavioral repertoire is dominated by reflex-like sensory-motor behaviors with limited potential for plasticity. Adult zebrafish have a broader behavioral repertoire that includes social interactions, complex innate behaviors, and various forms of learning and cognitive behaviors that do not occur in larvae20,23–26. In principle, adult zebrafish brains are still small enough to permit high-resolution optical imaging and dense reconstructions of neuronal wiring diagrams throughout many brain areas20,27,28. The adult zebrafish is therefore a promising vertebrate model for mechanistic analyses of higher brain functions. However, methods for high-resolution activity measurements during complex behaviors are still lacking.

Activity measurements in adult zebrafish have previously been performed in brain explants29, precluding simultaneous analyses of behavior. Here we developed procedures for two-photon calcium imaging of neural activity in head-restrained adult zebrafish during behavior in a high-resolution VR. We confirmed that adult zebrafish in the VR show regular spontaneous swimming and affiliative behavior toward movies of conspecifics. By perturbing visuo-motor feedback during self-generated movements, we found neurons in multiple telencephalic brain areas that responded specifically to the mismatch between the expected and the actual visual input, consistent with models of predictive processing30–32.

Results

Head fixation and virtual reality for adult zebrafish

Because adult zebrafish cannot be immobilized in agarose we developed a method for stable head fixation. The skull of adult zebrafish consists of five major sets of bones, four of which are thin (<100 μm) and do not provide sufficient stability. The fifth set, in contrast, is very solid and surrounds the brain ventrally and laterally from the nostrils to the spinal cord (Fig. 1a,b). We used tissue glue and dental cement to bilaterally attach small L-shaped head bars to this set of bones at a specific site lateral to the cerebellum that is not covered by muscle or connective tissue (Supplementary Fig. 1).

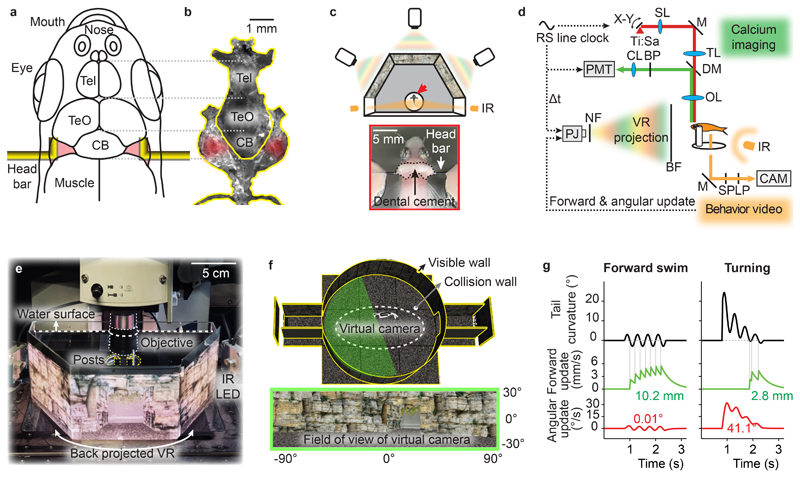

Figure 1. Head fixation of adult zebrafish and closed-loop virtual reality.

a, Attachment sites of L-shaped bars for head fixation. Tel: telencephalon; TeO: optic tectum; CB: cerebellum.

b, Photograph of dissected set of stable skull bones. Red shading indicates attachment sites.

c, Top: Schematic of VR projected by three projectors onto a panoramic screen with a 180° field of view. Arrow depicts head-fixed fish. Bottom: dorsal view of head-fixed fish.

d, Synchronization of closed-loop VR and two-photon imaging. The photomultiplier tube (PMT) and the light-emitting diodes (LEDs) of the projector are gated in a non-overlapping manner using the line clock of the 8 kHz resonant scanner (RS) as a trigger. BF: back-projection film; BP: band pass filter; CAM: camera; CL: collimating lens; DM: dichroic mirror; IR: infrared light source; LP: long pass filter; M: mirror; NF: notch filter; OL: objective lens; PJ: projector; SL: scan lens; SP: short-pass filter; Ti:Sa: titanium-sapphire laser; TL: tube lens.

e, Back-projection of the VR onto a semi-hexagonal tank under the microscope (photograph of setup).

f, 3D model of the VR consisting of a cylindrical arena, a flat floor and two tunnels (top). Walls were textured with naturalistic rocks and plants (bottom). Three virtual cameras together capture a 180° field of view (green shading). An invisible collision wall (dashed line) kept virtual cameras at a distance from the VR boundaries to prevent pixelation of the texture.

g, Algorithm to update the virtual cameras based on tail movements of head-fixed animals. Forward movement in the VR (green) is triggered by the zero crossings of the caudal tail curvature (black). Angular movement in the VR (red) is proportional to the caudal tail curvature after low-pass filtering. Numbers show total forward movement (10.2 mm and 2.8 mm) and rotation (0.01° and 41.1°) in the examples shown at default gain settings.

We immobilized individual fish by attaching the head bars to a solid mount. We then placed the fish in a 3D virtual environment that was defined using open-source software for game development and projected onto the walls of a custom-made tank (180° field of view; Fig. 1c-f; Supplementary Fig. 2). High resolution for presentations of naturalistic visual stimuli was achieved by three projectors arranged at 60° (Fig. 1c,e). The virtual environment consisted of a circular arena with textured surfaces and two short tunnels (Fig. 1f). The virtual movement of the fish was restricted to an elliptic area in the center by an invisible wall, preventing fish from entering the tunnels. We detected tail movements of the fish by video imaging from below using infrared illumination. To create a closed-loop VR, the virtual swimming trajectory of head-fixed fish in a horizontal plane was predicted from the curvature of the tail and the projection was updated accordingly in real time (50 Hz). Symmetric tail undulations resulted in forward translation in the VR, which was implemented by triggering translations on the zero crossings of the caudal tail curvature. Asymmetric tail movements resulted in an initial change in heading direction, followed by a brief forward movement (Fig. 1g).
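The update rule of Fig. 1g can be illustrated with a minimal sketch. It assumes a caudal tail-curvature trace sampled at the 50 Hz update rate, a fixed forward step per zero crossing, and a first-order exponential low-pass filter for the angular update; the function names, the filter type and the parameters `forward_step`, `angular_gain` and `alpha` are hypothetical stand-ins, not the values or code used in the actual setup.

```python
import numpy as np


def lowpass(x, alpha):
    """First-order exponential low-pass filter (the filter type is an assumption)."""
    y = np.empty(len(x), dtype=float)
    acc = 0.0
    for i, v in enumerate(x):
        acc = alpha * v + (1.0 - alpha) * acc
        y[i] = acc
    return y


def update_virtual_pose(curvature, forward_step=1.0, angular_gain=1.0, alpha=0.2):
    """Integrate a virtual trajectory from a caudal tail-curvature trace.

    curvature: 1D array, one sample per VR update (e.g. 50 Hz).
    forward_step: hypothetical translation (mm) per zero crossing.
    angular_gain: hypothetical rotation (deg per frame per unit filtered curvature).
    Returns x, y (mm), heading (deg) per frame and the number of zero crossings.
    """
    c = np.asarray(curvature, dtype=float)
    sign = np.sign(c)
    # A zero crossing occurs when the curvature changes sign between frames.
    crossing = np.zeros(c.size, dtype=bool)
    crossing[1:] = sign[1:] * sign[:-1] < 0
    # Heading changes in proportion to the low-pass filtered curvature.
    heading = np.cumsum(angular_gain * lowpass(c, alpha))
    # Forward translation is triggered only on zero crossings.
    step = np.where(crossing, forward_step, 0.0)
    rad = np.deg2rad(heading)
    x = np.cumsum(step * np.cos(rad))
    y = np.cumsum(step * np.sin(rad))
    return x, y, heading, int(crossing.sum())
```

With this rule, symmetric undulations produce many zero crossings but little net rotation, whereas a sustained unilateral bend rotates the heading without triggering translation.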

It is not possible to reproduce the exact kinematics of natural motor behavior in the VR because head-fixation results in reactive forces on the body that do not occur during free swimming. Moreover, virtual swimming was restricted to a horizontal plane and fish received no vestibular feedback. Our goal was therefore to establish conditions in which fish swim spontaneously in the VR and show specific behavioral responses to sensory stimuli. When we decoupled the VR from motor output (open-loop), head-fixed fish often showed irregular, high-amplitude tail beats (“struggling”) and subsequently entered a prolonged period of inactivity. In closed-loop, we initialized gains to default values and fine-adjusted them for each fish to minimize struggling and inactivity (Supplementary Fig. 3). Under these conditions, fish performed repetitive swims over long periods of time, resembling the discrete “bout-and-glide” swimming pattern of unrestrained zebrafish (Supplementary Figs. 4,5).

Behavioral responses to naturalistic visual stimuli

We next examined behavioral responses to visual stimuli. Freely swimming adult zebrafish are visually attracted to conspecifics behind a transparent divider33,34 or to images and movies of other zebrafish35. We tracked freely swimming adult fish in a rectangular tank while we presented visual stimuli behind the two opposing short walls for 20 s. One stimulus was a movie of a shoal of three adult zebrafish in a tank, whereas the other stimulus was a static image of the empty tank (Supplementary Fig. 6a). During stimulus presentation, fish spent more time near the movie of the shoal, as visualized by probability maps (Supplementary Fig. 6b, n = 64 fish). Quantitative analysis of a side preference index confirmed that fish spent significantly more time on the side where the movie was presented (Supplementary Fig. 6c, p = 10⁻¹⁰, paired t-test versus pre-movie period, n = 64 fish). Hence, freely swimming fish were attracted to movies of conspecifics, consistent with previous results.

Head-fixed fish in the VR in the absence of stimulus presentations frequently circled the environment, similar to the spontaneous behavior of unrestrained fish. When we presented stimuli in the two tunnels (Fig. 2a; duration: 40 s – 300 s), fish frequently stopped circling when they encountered the tunnel showing the shoal and performed persistent directional swims towards the shoal (Fig. 2b–d, Supplementary Video 1). We did not observe this behavior when fish encountered the tunnel showing the empty tank. The fraction of time spent close to the stimulus was higher when the shoal was present (Fig. 2e; 16.7% vs. 10.4%; p = 0.038, Wilcoxon rank sum test) and the side preference index was significantly biased towards the shoal (Fig. 2f; p = 0.0096, t-test, n = 46 fish). These results demonstrate that visual attraction to movies of conspecifics was retained in the VR.
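The two position measures used here follow the definitions in the legend of Fig. 2e,f: the side preference index (SPI) is the mean normalized position along the long axis (between −1 and 1), ΔSPI subtracts the SPI measured when both tunnels show the empty tank, and "near the movie" means the first of 10 bins along the long axis. A minimal sketch, assuming (hypothetically) that +1 denotes the end showing the movie:

```python
import numpy as np


def side_preference_index(pos):
    """Mean normalized position along the long axis, in [-1, 1].

    Assumed convention (hypothetical): +1 is the end showing the movie.
    """
    return float(np.mean(np.asarray(pos, dtype=float)))


def delta_spi(pos_movie, pos_control):
    """SPI(movie vs. empty tank) minus SPI(empty tank vs. empty tank)."""
    return side_preference_index(pos_movie) - side_preference_index(pos_control)


def fraction_near_stimulus(pos, n_bins=10):
    """Fraction of samples in the bin of the long axis closest to the movie.

    With the movie at +1 and n_bins equal bins on [-1, 1], that bin is
    [1 - 2 / n_bins, 1].
    """
    pos = np.asarray(pos, dtype=float)
    return float(np.mean(pos >= 1.0 - 2.0 / n_bins))
```

Subtracting the control SPI removes any side bias that is present without a movie, so ΔSPI isolates the stimulus-induced shift.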

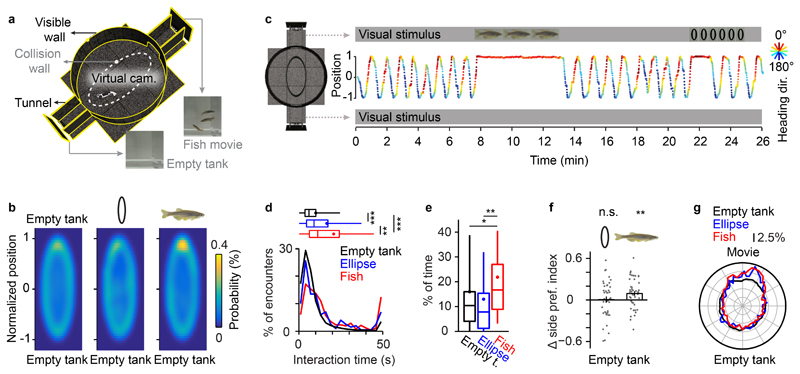

Figure 2. Behavioral responses to movies in the closed-loop VR.

a, Schematic of the VR. Head-restrained fish navigated within the transparent collision wall (dashed line) and had visual access to movies presented at the end of the tunnels.

b, Probability of fish position during presentation of different visual stimuli at the end of the tunnels (n = 46 fish). Visual stimuli were (1) a movie of conspecifics in a fish tank, (2) a movie of vertical ellipses that recapitulated the trajectories of conspecifics on the same background, and (3) a static image of a fish tank (empty tank). Between stimulus presentations, the fish tank image was presented at the end of the tunnels.

c, Example of the behavior of an adult zebrafish. Y-axis shows normalized position along the long axis of the VR; colors indicate heading direction. Similar behavior was observed in 9 fish.

d, Distribution of interaction time with visual stimuli. The median interaction time with the empty tank was 5.9 s (25th – 75th percentile = 3.5 s - 10.0 s); the median interaction time with the ellipse movie was 8.9 s (25th – 75th percentile = 4.4 s - 17.15 s); the median interaction time with the fish movie was 11.1 s (25th – 75th percentile = 6.1 s - 23.9 s). Interaction time was significantly longer in response to fish movies than to ellipse movies (p = 0.0056, two-sided Wilcoxon rank sum test, n = 515 encounters with fish movies and 200 encounters with ellipse movies) and to the empty tank condition (p = 10⁻⁴⁷, two-sided Wilcoxon rank sum test, n = 6864 encounters with tunnels without movie). Interactions with ellipse movies were significantly longer than in the empty tank condition (p = 10⁻⁹, two-sided Wilcoxon rank sum test). Box plots show median, 25th and 75th percentiles. Dots and error bars show mean ± s.d. Asterisks indicate p < 0.05 (*), p < 0.01 (**), p < 0.001 (***). Interaction time was defined as the time that the visual stimulus remained within the frontal visual field (−45° to 45°) during each encounter. Data were collected from 46 fish.

e, Fraction of time spent near the movie (first of 10 bins along the long axis) under different stimulus conditions. Fish spent significantly more time near the stimulus when the movie contained conspecifics, as compared to the ellipse movie (two-sided Wilcoxon rank sum test, p = 0.002; n = 46 fish) and to the control condition (two-sided Wilcoxon rank sum test, p = 0.038; n = 46 fish). Box plots, error bars and asterisks are defined as in d.

f, Stimulus-induced change in side preference index (SPI). Head-restrained animals exhibited a significant bias towards movies of conspecifics (p = 0.0096, two-sided t-test, n = 46 fish) but not towards ellipse movies (p = 0.81, two-sided t-test, n = 46 fish). The SPI is defined as the mean of the normalized position along the long axis (between -1 and 1) during stimulus presentation. The change in SPI is defined as ΔSPI = SPI (movie vs. empty tank) - SPI (empty tank vs. empty tank). Error bars represent mean ± s.e.m. and asterisks indicate p < 0.01 (**).

g, Body orientation during presentation of conspecifics or ellipses. Body orientation was significantly biased towards the movies of conspecifics (p ≤ 0.001, Kuiper’s test, n = 3091 orientation measures) and ellipses (p ≤ 0.001, Kuiper’s test, n = 1201 orientation measures). Body orientation was measured every 30 s. Radial unit represents 2.5% of total recording time.
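The interaction-time definition in the legend of Fig. 2d (time during which the stimulus stays within −45° to 45° of the heading) can be sketched as a run-length computation. This sketch assumes that the stimulus bearing relative to the heading is available for every frame and that each contiguous run inside the frontal field counts as one encounter; both are assumptions rather than the published procedure.

```python
import numpy as np


def interaction_times(rel_angle_deg, fs, half_field=45.0):
    """Durations (s) of contiguous runs with the stimulus in the frontal field.

    rel_angle_deg: stimulus bearing relative to heading, one value per frame.
    fs: frame rate (Hz). Each run is treated as one encounter (assumption).
    """
    # Wrap angles to [-180, 180) so that e.g. 350 deg becomes -10 deg.
    a = (np.asarray(rel_angle_deg, dtype=float) + 180.0) % 360.0 - 180.0
    inside = np.abs(a) <= half_field
    # Pad with False so every run has a detectable start and end.
    padded = np.concatenate(([False], inside, [False])).astype(int)
    d = np.diff(padded)
    starts = np.flatnonzero(d == 1)
    ends = np.flatnonzero(d == -1)
    return (ends - starts) / fs
```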

When we replaced each of the three fish in the movie by a vertical black ellipse of matching size that followed the same motion patterns, fish occasionally also stopped circling in the VR when they encountered the movie (Fig. 2c). However, this behavior was rare and circling usually resumed during movie presentation. The fraction of time spent close to the movie was not significantly different from the no-movie condition, and we did not observe a significant change in the side preference index (Fig. 2b-f, fraction of time near movie = 7.8%, p = 0.36, Wilcoxon rank sum test; side preference index: p = 0.81, t-test, n = 46 fish). Nevertheless, the heading direction was significantly biased towards the movie of ellipses (Fig. 2g, p < 0.001 for ellipse movie vs. empty tank and fish movie vs. empty tank, respectively, Kuiper’s test). Adult zebrafish therefore oriented towards the movie of ellipses without actively approaching it. These results reveal different behavioral responses to movies of conspecifics and abstract shapes, illustrating the potential of the VR for systematic analyses of behavioral responses to complex visual stimuli.
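The orientation comparisons rely on Kuiper's test, which is suited to circular data because its statistic does not depend on where the circle is cut. Below is a minimal two-sample sketch that uses the asymptotic tail formula Q(λ) = 2 Σ (4j²λ² − 1) exp(−2j²λ²). Which exact variant and software the authors used is not stated here, so this illustrates the test in general, not their implementation.

```python
import numpy as np


def _kuiper_q(lam, terms=100):
    """Asymptotic tail probability of the Kuiper distribution."""
    if lam < 0.4:  # the series is unreliable here and Q is ~1
        return 1.0
    j = np.arange(1, terms + 1)
    q = 2.0 * np.sum((4.0 * j**2 * lam**2 - 1.0) * np.exp(-2.0 * j**2 * lam**2))
    return float(min(max(q, 0.0), 1.0))


def kuiper_two_sample(a_deg, b_deg):
    """Two-sample Kuiper test for angles in degrees; returns (V, p)."""
    a = np.sort(np.mod(a_deg, 360.0))
    b = np.sort(np.mod(b_deg, 360.0))
    grid = np.concatenate((a, b))
    # Empirical CDFs of both samples evaluated at every observed angle.
    fa = np.searchsorted(a, grid, side="right") / a.size
    fb = np.searchsorted(b, grid, side="right") / b.size
    # V sums the largest deviations in both directions (rotation invariant).
    v = np.max(fa - fb) + np.max(fb - fa)
    ne = a.size * b.size / (a.size + b.size)
    lam = (np.sqrt(ne) + 0.155 + 0.24 / np.sqrt(ne)) * v
    return float(v), _kuiper_q(lam)
```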

Imaging neuronal population activity during behavior

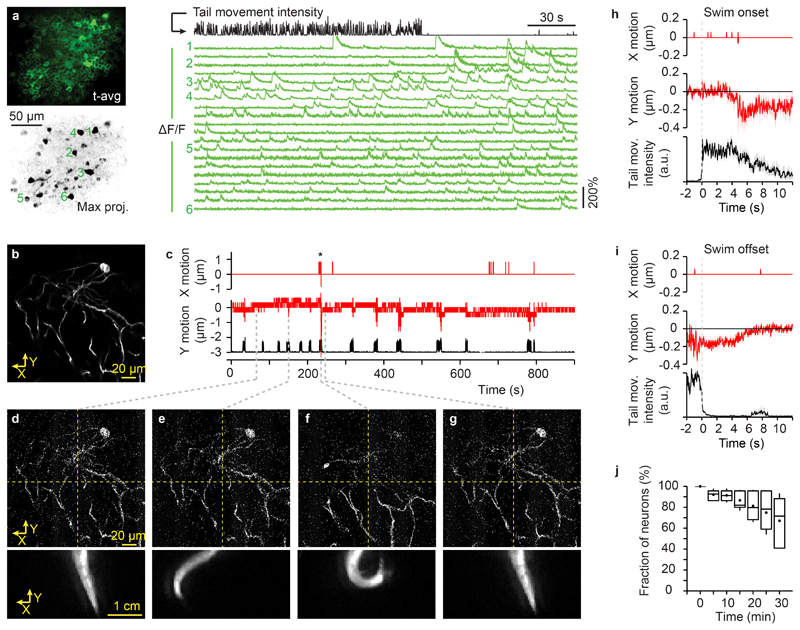

To explore in vivo imaging in adult zebrafish, we measured point spread functions and imaged neurons using a two-photon microscope. Although the skull affected optical resolution (Supplementary Fig. 7), we could resolve individual somata and neurites to depths of more than 200 μm below the brain surface (not including the thickness of the skull; Supplementary Figs. 8,9), which comprises approximately 30% of the pallium. To measure neuronal activity in the dorsal telencephalon, we performed two-photon calcium imaging in Tg(neuroD:GCaMP6f) fish36. Light from the VR was temporally separated from fluorescence detection by gating the projectors' LEDs and the photomultiplier tube in a mutually exclusive manner (Fig. 1d). Maximum projections of image series (8000 frames at 30 Hz) along the time axis usually revealed no obvious blurring (Fig. 3a), indicating that motion artifacts were negligible. In addition, we analyzed image motion in Tg(SAGFF212C:Gal4), Tg(UAS:ArchT-GFP) fish37, which express the fluorescent transmembrane protein ArchT-GFP in sparsely distributed telencephalic neurons (Supplementary Fig. 10). We acquired image series when the VR was off and fish showed episodes of struggling that were longer and more vigorous than regular swim events (Supplementary Fig. 11). Nonetheless, image shifts were small (usually <0.3 μm; rarely >1 μm) and reversible after cessation of tail movements (Fig. 3b-i; Supplementary Video 2). To assess long-term stability, we manually tracked individual somata and found that 67 ± 8% of somata could be identified throughout image series of 30 min (n = 224 neurons from 8 fish; Fig. 3j). A linear fit yielded a median half-life of 49 min for individual neurons without adjustments of the focal plane.
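A half-life from a linear fit is the time at which the fitted fraction of trackable neurons reaches 0.5. The sketch below assumes, as one possible reading, a least-squares line constrained to start at f(0) = 1, so f(t) = 1 − k·t and t½ = 0.5/k; applying it per field of view and taking the median is likewise an assumption.

```python
import numpy as np


def half_life_linear(t_min, frac_tracked):
    """Time (min) at which a fitted line f(t) = 1 - k * t reaches 0.5.

    The constraint f(0) = 1 is an assumption of this sketch.
    """
    t = np.asarray(t_min, dtype=float)
    loss = 1.0 - np.asarray(frac_tracked, dtype=float)
    # Least-squares slope for a line through the origin: k = sum(t*y) / sum(t*t).
    k = np.sum(t * loss) / np.sum(t * t)
    return 0.5 / k
```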

Figure 3. Quantification of brain motion during motor output.

a, Simultaneous recording of neuronal activity and behavior in Tg(neuroD:GCaMP6f) fish. Left: time-averaged raw fluorescence (top) and maximum intensity projection of mean-subtracted image series, revealing active neurons (bottom). Right: traces of calcium signals (ΔF/F) from individual neurons (green; numbers indicate neurons in the maximum projection image) together with the simultaneously recorded tail movement intensity (black). Experiments with similar results were repeated in 26 fish.

b, Time-averaged fluorescence image of GFP-expressing interneurons acquired in an adult Tg(SAGFF212C:Gal4), Tg(UAS:ArchT-GFP) zebrafish during behavior (30 s; single focal plane). Recordings of brain motion and tail movement (b - g) were repeated in 2 fish.

c, Image motion in the field of view in b (red) in X (medial-lateral direction) and Y (rostro-caudal direction) during tail movements (black). Positive values of X and Y correspond to leftward and forward motion, respectively. The recording was performed in the dark. Asterisk: an episode of U-shaped tail bends (at approximately 230 s). Image motion was measured by cross correlation of individual, raw images to the mean of a stable image series. The resolution of the measurement is 0.82 μm in X and 0.32 μm in Y.

d–g, Selected single frames of a two-photon imaging time series (33 ms per frame; top, raw data; Supplementary Video 2) and the simultaneously recorded video frames of the tail (bottom). Grey dashed lines in c indicate the times of the respective frames.

h, Mean image displacement in X and Y aligned on swim onset. Shading indicates s.e.m. (n = 40 swim events).

i, Image displacement in X and Y aligned on swim offset.

j, Fraction of neurons that could be continuously identified as a function of recording time (manual tracking of 224 neurons in 10 fields of view from 8 fish). Box plots show median, 25th and 75th percentiles. Dots and error bars show mean ± s.d.
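The legend of Fig. 3c states that image motion was measured by cross-correlating individual raw frames with the mean of a stable image series. A minimal sketch of that idea using FFT-based circular cross-correlation is shown below. It returns integer-pixel shifts only and assumes that the stated measurement resolution (0.82 μm in X, 0.32 μm in Y) equals the pixel size; the sign convention of Fig. 3c (positive = leftward/forward) is not reproduced.

```python
import numpy as np


def estimate_shift(frame, reference):
    """Integer-pixel (dy, dx) displacement of frame relative to reference.

    Uses circular cross-correlation via FFT; the peak location is the shift s
    for which frame ~ np.roll(reference, s, axis=(0, 1)).
    """
    f = np.asarray(frame, dtype=float)
    r = np.asarray(reference, dtype=float)
    f -= f.mean()
    r -= r.mean()
    xcorr = np.fft.ifft2(np.fft.fft2(f) * np.conj(np.fft.fft2(r))).real
    dy, dx = np.unravel_index(np.argmax(xcorr), xcorr.shape)
    ny, nx = xcorr.shape
    # Map peak indices beyond half the image size to negative shifts.
    if dy > ny // 2:
        dy -= ny
    if dx > nx // 2:
        dx -= nx
    return int(dy), int(dx)


def shift_um(frame, reference, um_per_px_y=0.32, um_per_px_x=0.82):
    """Displacement in micrometers, assuming pixel size = stated resolution."""
    dy, dx = estimate_shift(frame, reference)
    return dy * um_per_px_y, dx * um_per_px_x
```

Sub-pixel precision would require interpolating around the correlation peak, which this sketch omits.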

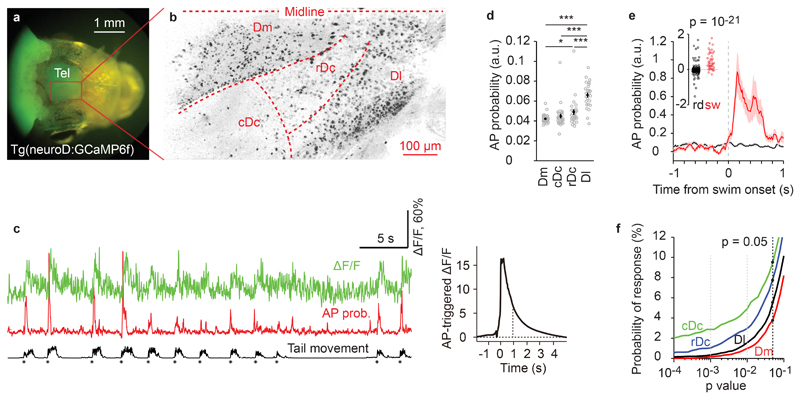

We measured neuronal activity in different fields of view in the dorsal telencephalon of individual Tg(neuroD:GCaMP6f) fish (Fig. 4a,b; Supplementary Fig. 9; first 200 μm; 7 image series with a total of 54000 frames per field of view). We distinguished four telencephalic areas: (1) Dm (medial zone of the dorsal telencephalon; likely to be homologous to the basolateral amygdala and related areas38), (2) the dorsorostral (rDc) and (3) dorsocaudal (cDc) parts of area Dc (central zone of the dorsal telencephalon; presumably related to isocortex39,40), and (4) the dorsal part of Dl (lateral zone of the dorsal telencephalon; potentially related to hippocampus41). During spontaneous swimming in the VR, individual neurons in all brain areas showed sparse and discrete fluorescence transients. We first highlighted active neurons by a maximum projection of pixel intensities along the time axis of each image series (267 s; 8000 frames) after subtracting the mean (Fig. 3a). This analysis revealed a region in Dl with a high density of active neurons (Fig. 4b). Consistent with this observation, spontaneous neuronal activity was significantly higher in Dl than in other regions (p < 0.001, two-sided t-test, n = 26 fish, Fig. 4d).
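The highlighting step described above, a maximum projection along time after subtracting the mean, can be written in a few lines. This sketch assumes that the image series is an array of shape (time, y, x); pixels that are transiently brighter than their own average stand out, while static structure cancels.

```python
import numpy as np


def active_pixel_map(stack):
    """Maximum over time of the mean-subtracted image series (T, Y, X)."""
    stack = np.asarray(stack, dtype=float)
    return (stack - stack.mean(axis=0)).max(axis=0)
```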

Figure 4. Region-specific neuronal activity in the telencephalon of adult zebrafish.

a, Wide-field fluorescence image of the head of an adult zebrafish expressing neuroD:GCaMP6f.

b, Neuronal activity pattern of adult zebrafish forebrain imaged using a two-photon microscope through the intact skull after removal of the skin. Active neurons (dark) were revealed by a maximum projection of pixel intensities along the time axis of a mean-subtracted image series (90 s). Similar activity patterns were observed in 26 fish.

c, Calcium signals of a swim-modulated neuron (ΔF/F; green), estimated AP probability (red), and intensity of tail movement (black). Asterisks depict onsets of swim events. Right: AP-triggered average of calcium transient. Dashed line depicts decay time constant of 0.9 s. Swim-modulated neurons were identified in 26 fish.

d, Mean AP probability in different brain regions and animals. Each circle represents one fish (n = 26; error bars represent s.e.m.). Neural activity was significantly higher in Dl than in other forebrain areas (two-sided t-test, p = 10⁻¹³, 10⁻⁷ and 10⁻⁵ for comparison to Dm, cDc and rDc, respectively). Activity in rDc was significantly higher than in Dm (two-sided t-test, p ≤ 0.014). Other pairwise comparisons were not significant (two-sided t-test, p = 0.25 for Dm compared to cDc, p = 0.26 for cDc compared to rDc). Asterisks indicate p < 0.05 (*) and p < 0.001 (***).

e, Mean AP probability of a swim-modulated neuron triggered on swim onsets (red; n = 126 events) and on random time points (black; n = 500). Shading shows s.e.m.; inset shows the difference in AP probability between 0.5 s time windows immediately before and after the onset of swim events. A neuron was considered swim-modulated when the distribution of swim-triggered responses was significantly higher (p < 0.05; one-sided t-test) than that of randomly triggered responses.

f, Probability that the activity of individual neurons is modulated by swimming as a function of detection stringency (p-value of one-sided t-test as described in e). Dashed black line depicts probability of swim modulation for stringency of p < 0.05 in cDc (9.4%), rDc (7.9%), Dl (5.5%) and Dm (3.7%). Data were pooled from 26 fish.

To determine whether activity of individual neurons was modulated by swimming, we first estimated the fluctuations in action potential (AP) probability underlying the observed somatic calcium transients using a convolutional neural network (“Elephant”)42 (Fig. 4c). We then calculated the difference in AP probability, Δp, between 0.5 s time windows before and after the onset of swim bouts for individual neurons. As a control, we triggered the same analysis on random time points. Neurons were considered swim-modulated when the distribution of Δp was significantly greater for swim-triggered than for randomly triggered windows (one-sided t-test, p < 0.05; Fig. 4e). The probability of observing swim-modulated activity was higher in cDc (9.4%, 158/1675 neurons) and rDc (7.9%, 233/2941) than in Dl (5.5%, 438/7967) or Dm (3.7%, 275/7449; Fig. 4f).
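The swim-modulation criterion can be sketched as follows. The sketch takes an AP-probability trace (for example, the network output), onset indices and the sampling rate. It draws 500 random trigger points, matching the number of random time points in Fig. 4e, and compares Δp distributions with SciPy's `ttest_ind` using `alternative="greater"` (available since SciPy 1.6). The choice of a pooled-variance t-test and the function names are assumptions.

```python
import numpy as np
from scipy import stats


def delta_p(ap_prob, onsets, fs, win_s=0.5):
    """Mean AP probability after each onset minus mean before (win_s windows)."""
    ap = np.asarray(ap_prob, dtype=float)
    w = int(round(win_s * fs))
    out = []
    for i in onsets:
        if i - w < 0 or i + w > ap.size:
            continue  # skip events whose windows extend beyond the recording
        out.append(ap[i:i + w].mean() - ap[i - w:i].mean())
    return np.array(out)


def is_swim_modulated(ap_prob, swim_onsets, fs, win_s=0.5, n_random=500,
                      alpha=0.05, seed=0):
    """One-sided test: swim-triggered delta-p greater than randomly triggered."""
    rng = np.random.default_rng(seed)
    w = int(round(win_s * fs))
    rand_onsets = rng.integers(w, len(ap_prob) - w, size=n_random)
    d_swim = delta_p(ap_prob, swim_onsets, fs, win_s)
    d_rand = delta_p(ap_prob, rand_onsets, fs, win_s)
    res = stats.ttest_ind(d_swim, d_rand, alternative="greater")
    return bool(res.pvalue < alpha), float(res.pvalue)
```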

Analysis of predictive processing in the virtual reality

We exploited the VR to analyze predictive neural processing in zebrafish by manipulating sensory-motor feedback. The theoretical framework of predictive processing assumes that brains generate internal models of the world and suppress responses to predictable sensory inputs through top-down projections. As a consequence, bottom-up projections transmit error signals, rather than primary sensory information, and these error signals are used to update the internal model30,32. This process has been proposed to allow for experience-dependent learning of structures in the world and to drive not only sensory-motor behavior but also cognitive processes such as the inference of the mental states and actions of other individuals43. A key prediction of classical predictive processing models is that unexpected sensory inputs elicit neuronal responses that cannot be explained by sensory inputs or motor output alone but encode the mismatch between the expected and the actual sensory input. Error signals consistent with this assumption have been described in various vertebrate brain areas32,44–46. However, detailed characterizations of these error signals and the underlying circuitry are rare47.

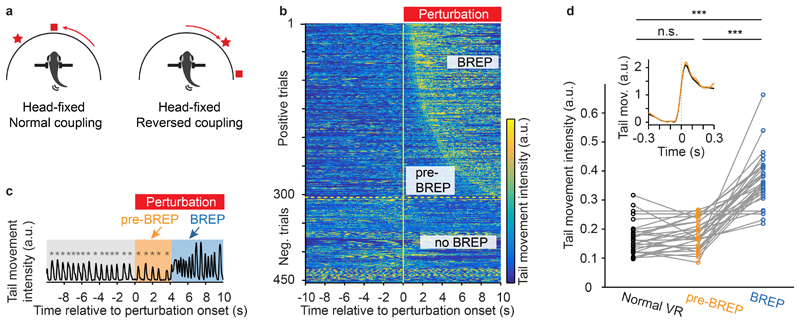

We generated brief episodes of visuomotor mismatch by introducing left-right reversals to the angular update of the VR for 5 – 10 s without modifying forward swimming (Fig. 5a, Supplementary Video 3; mean duration: 7.5 s; mean inter-event interval: 5 min). Hence, the VR rotated opposite to the expected direction during swim bouts. These perturbations frequently triggered a distinct sequence of motor outputs with a variable delay (Supplementary Video 4; Supplementary Figs. 11, 12). Behavioral responses typically started with 1 – 5 unilateral bends of the caudal tail with increasing frequency and amplitude that resembled J-turns, a motor motif that rotates the body axis48. These movements were followed by vigorous and irregular movements of the entire tail. Using these motor features we manually annotated behavioral responses to perturbation (BREPs) in each trial (Fig. 5b). We then divided the time after perturbation onset into two phases: the pre-BREP period, corresponding to the delay between perturbation onset and the BREP, and the BREP period, corresponding to the time between BREP onset and the end of the 10 s analysis time window.
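The partition of each perturbation trial into pre-BREP and BREP periods, together with the alignment used for trials without a BREP (Fig. 6d, median delay of positive trials), can be expressed as follows. Times are in seconds, and the function names are hypothetical.

```python
import statistics


def split_perturbation_periods(pert_onset, brep_onset, window=10.0):
    """Return the (pre-BREP, BREP) intervals of one positive trial.

    The pre-BREP period runs from perturbation onset to BREP onset; the BREP
    period runs from BREP onset to the end of the analysis window.
    """
    end = pert_onset + window
    if not pert_onset <= brep_onset < end:
        raise ValueError("BREP onset must fall inside the analysis window")
    return (pert_onset, brep_onset), (brep_onset, end)


def reference_delay(positive_delays):
    """Median BREP delay of positive trials, used to align negative trials."""
    return statistics.median(positive_delays)
```

For a negative trial, a pseudo-onset at `pert_onset + reference_delay(delays)` then plays the role of the BREP onset.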

Figure 5. Behavioral responses to visual feedback mismatch.

a, Schematic showing VR updates during normal and reversed visuomotor coupling. Reversals of 5 - 10 s with a mean duration of 7.5 s were introduced every 2 – 7 minutes with a mean inter-event interval of 5 minutes. Symbols represent hypothetical visual cues in the VR.

b, Tail movement intensity (color-coded) in different perturbation trials (rows) as a function of time (x-axis). A visuomotor perturbation was initiated at t = 0. Trials from 26 fish were sorted by the onset of the behavioral response (BREP). The intensity of tail movements was quantified by the mean of the absolute pixel-wise difference between successive video frames.

c, Example trace (from b) of tail movement intensity before the VR perturbation (normal VR), during perturbation but before behavioral responses (pre-BREP period), and during behavioral responses (BREP period). Asterisks indicate the onset of swim events. Intensity of tail movement was quantified as in b.

d, Intensity of tail movement (quantified as in b) during normal visuomotor coupling, during the pre-BREP period, and during the BREP period. Each plot symbol represents one fish (normal VR vs. pre-BREP, p = 0.07; normal VR vs. BREP, p = 10⁻¹⁰; pre-BREP vs. BREP, p = 10⁻⁹; two-sided pairwise t-test; n = 26 fish). Asterisks indicate p < 0.001 (***). Inset, mean intensity of tail movement triggered on the onset of swim events during normal visuomotor coupling (black) and during the pre-BREP period (yellow). The mean and s.e.m. were calculated over perturbation trials (n = 303 positive trials from 26 fish) after averaging swim events within each trial.
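Tail movement intensity, as defined in the legend of Fig. 5b, is the mean absolute pixel-wise difference between successive video frames. A direct sketch, assuming the video is an array of shape (time, height, width):

```python
import numpy as np


def tail_movement_intensity(frames):
    """Mean absolute pixel-wise difference between successive frames.

    frames: array (T, H, W); returns T - 1 values.
    """
    # Convert to float first: differences of uint8 frames would wrap around.
    f = np.asarray(frames, dtype=float)
    return np.abs(np.diff(f, axis=0)).mean(axis=(1, 2))
```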

The duration of the pre-BREP period varied substantially between trials (Supplementary Fig. 12; median = 2.1 s, 25th - 75th percentile = 1.2 – 4.1 s). During this period, animals continued to perform short periodic swims (Fig. 5c) with an intensity and temporal structure that were not significantly different from swims during normal visuomotor coupling (Fig. 5d, pairwise t-test, p = 0.07). Tail movement intensity during the subsequent BREP period, in contrast, was significantly higher than the intensity during normal visuomotor coupling (Fig. 5d; pairwise t-test against normal coupling, p < 0.001, n = 26 animals; Supplementary Fig. 11). During the pre-BREP period, fish therefore received unexpected visual input without an obvious change in motor output.

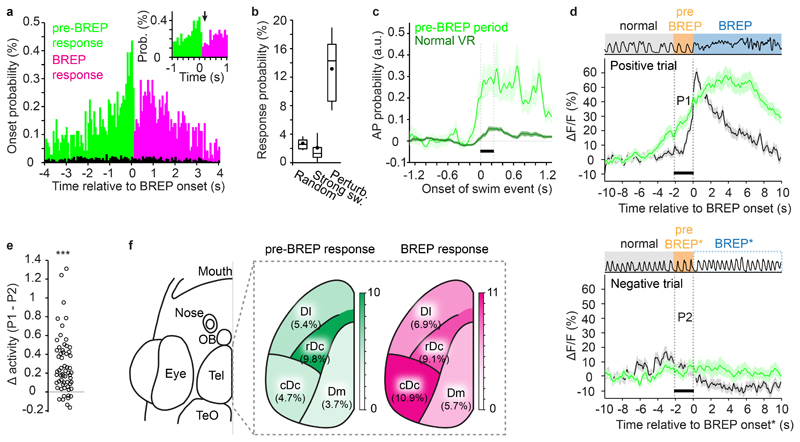

We measured neuronal activity during brief VR perturbations in 2 – 8 fields of view per fish to sample activity in Dm, cDc, rDc and Dl (1 – 7 perturbations per field of view). We detected a significant response to the perturbation in 882 out of 7090 neuron-perturbation pairs (12.4%) from 26 animals (Dm 9.2%, 233/2520 neuron-perturbation pairs; cDc 15.0%, 121/805; rDc 19.4%, 171/882; Dl 12.4%, 357/2883; see Methods for the definition of responses). To further characterize this activity, we first clustered the 882 responses based on their temporal activity profile and found that the average activity was increased before the onset of the BREP in 5 out of 11 clusters (Supplementary Fig. 13). Hence, a substantial fraction of neuronal responses preceded the BREP.

Analyses of individual neuronal responses confirmed this observation (Fig. 6a). To compare these responses to activity related to spontaneous swims, we identified swim events similar to BREPs during normal visuomotor coupling (“spontaneous strong swims”; swim duration >2 s). Across fish, the probability of detecting a response following the onset of a strong swim was 2.5 ± 0.6 % (mean ± s.e.m., n = 26 fish; total of 64600 neuron-swim pairs) and not significantly different from the probability of observing a significant response around randomly chosen time points (2.6 ± 0.2 %, mean ± s.e.m., n = 26 fish; total of 35450 time points; p = 0.13, two-sided t-test). Both of these probabilities were significantly lower than the probability of observing a response following a VR perturbation (13.4 ± 1.1%, mean ± s.e.m., n = 26 fish; total of 7090 neuron-perturbation pairs; p = 10⁻¹⁴ for both comparisons, two-sided t-test; Fig. 6b). VR perturbations therefore evoked neuronal responses that are unlikely to reflect only enhanced motor output.
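The exact response criterion is given in the Methods, which are not part of this section. The legend of Fig. 6b describes it only as a change in ΔF/F within adjacent 10 s time windows. The sketch below is a hypothetical stand-in that compares the two windows with a two-sided Mann-Whitney U test (`scipy.stats.mannwhitneyu`). Because neighbouring ΔF/F samples are autocorrelated, such a test is not strictly valid, and the threshold `alpha` is an arbitrary choice.

```python
import numpy as np
from scipy import stats


def detect_response(dff, t0, fs, win_s=10.0, alpha=0.01):
    """Hypothetical criterion: dF/F differs between [t0 - w, t0) and [t0, t0 + w).

    dff: 1D dF/F trace; t0: event index; fs: frame rate (Hz).
    Returns False if either window extends beyond the recording.
    """
    x = np.asarray(dff, dtype=float)
    w = int(round(win_s * fs))
    if t0 < w or t0 + w > x.size:
        return False
    before, after = x[t0 - w:t0], x[t0:t0 + w]
    p = stats.mannwhitneyu(after, before, alternative="two-sided").pvalue
    return bool(p < alpha)
```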

Figure 6. Prediction error signals in the zebrafish forebrain.

a, Distribution of neuronal response onset times relative to BREP onset. Green: responses that started after perturbation onset but before BREP onset (pre-BREP responses; n = 382); red: responses that started after BREP onset (BREP responses; n = 500). Inset shows abrupt change in response onset probability at BREP onset. Black: probability of response onsets around the onsets of spontaneous strong swims in the normal VR.

b, Probability of observing a response (change in ΔF/F within adjacent 10 s time windows) at randomly selected time points (2.60 ± 0.17 %; mean ± s.e.m.; n = 26 fish), around the onset of spontaneous strong swims in a normal VR (2.45 ± 0.55 %), and around the onset of visuomotor perturbations (13.4 ± 1.05 %). Spontaneous strong swims are swim events >2 s in duration. Box plots show median, 25th and 75th percentiles. Dots and error bars show mean ± s.d.

c, AP probability of swim-modulated pre-BREP responses (n = 63) triggered on swim onset during normal visuomotor coupling (dark) and during the pre-BREP period (light, shading indicates s.e.m.). Bar depicts the first 230 ms when tail movement was indistinguishable between the two conditions (Fig. 5d).

d, Activity of the same set of pre-BREP neurons in positive trials (124 trials; aligned on BREP onset) and negative trials (106 trials). Green: Average response, black: tail movement intensity, during positive (top) and negative (bottom) trials. The analysis included only neurons that showed pre-BREP responses in at least 50% of the positive trials, and that were also recorded in at least one negative trial (n = 65 neurons, shading indicates s.e.m.). Activity in negative trials was aligned to the median delay of BREPs in positive trials because animals did not show a BREP. Bars indicate the median delay (2.06 s) between perturbation onset and BREP onset in positive trials. Data from 13 fish.

e, Activity difference between the pre-BREP period of positive trials (P1 in d) and the corresponding time window in negative trials (P2 in d). Each symbol represents a neuron (n = 65). The mean difference was significantly greater than zero (p = 10⁻⁷, two-sided pairwise t-test). Asterisks indicate p < 0.001 (***).

f, Probability of observing the onset of responses to visuomotor perturbation during the pre-BREP and BREP period in the dorsal forebrain. Total number of neuron-perturbation pairs in Dm, cDc, rDc and Dl were 2520, 805, 882, and 2883, respectively. Control is shown in Supplementary Fig. 14.

For further analyses, we divided responses into two subsets depending on whether their onset occurred before the transition in motor behavior (pre-BREP responses, n = 382) or thereafter (BREP responses, n = 500; Supplementary Fig. 13). During normal visuomotor coupling, activity was modulated by swimming in 16.5% (63/382) of the pre-BREP responses but only in 2.8% (14/500) of the BREP responses. Hence, the probability of swim modulation was substantially higher in pre-BREP responses than across all responses to VR perturbations (5.6%, p < 0.001, χ² test). Consistent with this observation, the mean activity increased sharply at the onset of swim bouts in pre-BREP responses but not in BREP responses (Supplementary Fig. 13).
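The percentages above can be recomputed directly from the counts. As an illustration only, the sketch below also applies a Pearson χ² test without continuity correction to the 2 × 2 table of swim-modulated versus non-modulated pre-BREP and BREP responses. This is not the comparison reported in the text, which is pre-BREP responses against all responses (5.6%), and the text does not say whether a continuity correction was used. The helper name `chi2_2x2` is hypothetical.

```python
import math


def chi2_2x2(a, b, c, d):
    """Pearson chi-square (no continuity correction) for [[a, b], [c, d]].

    Returns the statistic and the p-value for 1 degree of freedom.
    """
    n = a + b + c + d
    rows = (a + b, c + d)
    cols = (a + c, b + d)
    observed = ((a, b), (c, d))
    stat = 0.0
    for i in range(2):
        for j in range(2):
            expected = rows[i] * cols[j] / n
            stat += (observed[i][j] - expected) ** 2 / expected
    # For 1 d.o.f., P(chi2 > x) = P(|Z| > sqrt(x)) = erfc(sqrt(x / 2)).
    p = math.erfc(math.sqrt(stat / 2))
    return stat, p


# (swim-modulated, not swim-modulated) counts taken from the text
pre_brep = (63, 382 - 63)
brep = (14, 500 - 14)
stat, p = chi2_2x2(*pre_brep, *brep)
print(f"pre-BREP {63 / 382:.1%}, BREP {14 / 500:.1%}, chi2 = {stat:.1f}, p = {p:.1e}")
```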

The modulation of pre-BREP responses by swimming may reflect sensory responses to visual input or motor-related activity. Alternatively, pre-BREP responses may represent an error signal that arises from a mismatch between the expectation of the animal and the actual sensory feedback. To distinguish between these possibilities, we identified pre-BREP responses that were swim-modulated (n = 63) and compared the swim-triggered average AP probability under two conditions: (1) spontaneous swims during normal visuomotor coupling, and (2) swims during the pre-BREP period. The swim-modulated activity was substantially higher during the pre-BREP period (Fig. 6c), whereas the intensity and temporal structure of motor output were indistinguishable from spontaneous swims during normal visuomotor coupling (Fig. 5d). Hence, enhanced activity during the pre-BREP period cannot be explained by changes in motor output or visual flow amplitude, implying that it was caused by the unexpected direction of visual flow during active swimming.
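A swim-triggered average of the kind compared here can be sketched as follows. This is a minimal illustration, not the authors' analysis code. It assumes that swim onsets have already been converted from 50 fps video frames to 30 Hz imaging frames, and the function name `triggered_average` is hypothetical. Running it on the same neurons separately for spontaneous swims and for pre-BREP swims yields the two curves being compared.

```python
def triggered_average(trace, onsets, pre, post):
    """Average trace segments from onset - pre to onset + post (in frames).

    Onsets whose window would extend beyond the trace are skipped.
    Returns an empty list if no complete segment is available.
    """
    segments = [trace[t - pre:t + post] for t in onsets
                if t - pre >= 0 and t + post <= len(trace)]
    if not segments:
        return []
    # zip(*segments) groups samples at the same lag across all swims
    return [sum(col) / len(segments) for col in zip(*segments)]


# Example: AP probability of one neuron (30 Hz) and two swim onsets
ap_prob = [0.0, 0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7]
print(triggered_average(ap_prob, onsets=[2, 5], pre=1, post=2))
```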

We next identified neurons that responded during the pre-BREP phase when the visuomotor perturbation evoked a BREP (positive trials) and examined their activity during trials when the perturbation failed to evoke a BREP (negative trials; n = 65 neurons). Neurons showed a strong increase in neuronal activity in positive trials but not in negative trials (Fig. 6d,e; pairwise t-test, p < 0.001). The activity in positive trials increased prior to the onset of BREPs (Fig. 6d) and predicted the occurrence of the BREP (Supplementary Fig. 13f), consistent with the hypothesis that responses during the pre-BREP phase represent a behaviorally relevant prediction error.

In all telencephalic areas examined, changes in neuronal activity occurred more frequently during the pre-BREP or BREP phase (Fig. 6f) than at randomly chosen time points (Supplementary Fig. 14). Pre-BREP responses were more abundant in rDc than in other brain areas, while BREP responses were more abundant in both rDc and cDc (Fig. 6f). These results demonstrate that a substantial fraction of telencephalic neurons respond to a mismatch between expectation and sensory feedback, thus representing a sensory-motor prediction error.

Discussion

We established a closed-loop VR system for optical measurements of neuronal activity during behavior in head-fixed adult zebrafish. Traditionally, higher brain functions of vertebrates are studied in rodents and primates but the large size of the brain severely constrains the fraction of optically accessible neurons in these species. Large-scale imaging of neuronal activity is possible in larval zebrafish16,22 but their potential to study associative learning, social interactions or cognitive behaviors is limited. Other small vertebrates such as Danionella translucida18 are not well established as animal models. We therefore focused on adult zebrafish, which exhibit a rich repertoire of behaviors and allow for imaging of neuronal population activity throughout the dorsal pallium with single-neuron resolution. We measured activity across brain areas that are difficult to target simultaneously in mammals, including the proposed homologs of the basolateral amygdala (Dm), the isocortex (Dc) and part of the hippocampus (Dl). Conceivably, non-invasive optical access to deeper structures could be achieved using adaptive optics or three-photon imaging49,50. Hence, activity measurements in behaving adult zebrafish offer opportunities to analyze neuronal population activity during complex behaviors. Moreover, the zebrafish brain offers exceptional opportunities to study the ontogeny and maturation of neural circuit functions in a vertebrate.

Large-scale optical measurements of neuronal activity have recently been achieved in freely moving larval zebrafish using a specialized tracking technique22, but this approach cannot easily be scaled to adults. Juvenile and adult zebrafish can be examined in a VR designed for freely moving animals8, but this method does not permit simultaneous activity measurements. A VR for head-fixed zebrafish cannot fully emulate physical reality, and fundamental constraints restrict the potential for kinematic analyses of motor output3,8. Nonetheless, swimming behavior of head-fixed adult zebrafish in the closed-loop VR resembled free swimming in the home tank, and fish showed differential and directional behavioral responses to visual stimuli. Our approach therefore enables measurements and manipulations of neuronal population activity in adult zebrafish during complex behaviors.

We briefly and specifically manipulated interactions of animals with their environment to test the hypothesis that telencephalic neurons represent sensorimotor prediction errors, which are key elements in models of predictive processing30,32. Prominent error signals were observed in multiple areas of the dorsal telencephalon, indicating that error detection is a prominent computation in the teleost brain. It is presently unknown how and where these error signals are computed, and how they are related to alertness or arousal. Our results provide a starting point to address these questions by dissecting the mechanisms of error detection at the level of individual neurons and circuits.

Online Methods

Animal models

All experiments were performed in adult (5 – 21 months old) zebrafish (Danio rerio) of both sexes. Fish were raised and kept under standard laboratory conditions (26–27°C; 13 h/11 h light/dark cycle). All experiments were approved by the Veterinary Department of the Canton Basel-Stadt (Switzerland).

Calcium imaging was performed using Tg(neuroD:GCaMP6f) fish36 in a nacre background. Tg(SAGFF212C:Gal4), Tg(UAS:ArchT-GFP) fish were created from Tg(SAGFF212C:Gal4), Tg(UAS:GFP) fish and Tg(UAS:ArchT-GFP) fish by multiple crossings. Tg(SAGFF212C:Gal4), Tg(UAS:GFP) fish were created by the gene trap method described by Asakawa et al.51. ArchT-GFP encodes a fusion protein consisting of the light-sensitive proton-pump archaerhodopsin-3 (Arch52) and GFP. Tg(UAS:ArchT-GFP) fish were generated using the Tol2Kit53, involving a multisite recombination reaction (Invitrogen Multisite Gateway manual Version D, 2007) between p5E–UAS (5xUAS and E1b minimal promoter54), pME–ArchT-GFP (first-generation archaerhodopsin-3 fused to GFP52), and p3E–polyA as entry vectors, and pDestTol2CG2 as destination vector53.

Overview of the experimental procedure

Light-weight head bars were fabricated from stainless steel syringe needles and attached to the skull of the fish using tissue glue and dental cement under anesthesia. After the head bars were glued to mounting posts, the anesthetic was removed and fish were transferred to the VR environment under the two-photon microscope. During the subsequent 20 – 30 min, fish were allowed to habituate to the closed-loop VR, and gains were adjusted manually until the animal exhibited quasi-periodic swimming behavior. Multiple calcium imaging sessions, separated by 15 min, were then performed in each fish. Within each session, series of 54000 frames (30 min) were acquired and subsequently divided into shorter series (usually 8000 frames; 267 s) for analysis. The field of view was constant in each session and covered one or more forebrain regions. VR perturbations (left-right reversal) were introduced either automatically every 7 min, each lasting 10 s, or manually every 2 – 7 min, each lasting 5 – 10 s. Between imaging sessions, a new field of view was chosen while animals continued to behave in the closed-loop VR.

Head-fixation of adult zebrafish for in vivo imaging

Fish were anesthetized in 0.03% tricaine methanesulfonate (MS-222), wrapped in moist tissue and placed under a dissection microscope. Subsequently, 0.01% MS-222 was continuously delivered into the mouth through a small cannula. The surgical procedure (Supplementary Fig. 1) took approximately 50 min and included gluing head bars to the skull (30 min), removing the skin above the forebrain to improve optical access (5 min), and gluing head bars to the mounting posts (15 min). After surgery, basic behaviors of the animal including saccades, locomotion, feeding and social affiliation remained robust (Supplementary Video 5). Step-by-step instructions are provided as a Supplementary Protocol.

Virtual reality

A 3D model of the virtual reality system was built using the modelling software Wings 3D. The Python-based game engine Panda3D was used to update the location and angle of the virtual cameras in the VR. A cluster of three virtual cameras was used to capture three adjacent 60° × 60° (height × width) fields of view side by side (total of 60° × 180°, height × width). Forward swims translated into a forward movement of the virtual cameras, and right (left) turns translated into clockwise (counter-clockwise) rotation of the virtual cameras. Collision detection was included to keep the animal at a distance from visible boundaries to prevent pixelation of textures. The virtual cameras were enclosed by an invisible collision sphere that was pushed back by the invisible collision wall in the 3D model. The camera cluster therefore stopped moving when it hit the wall head-on, or slid smoothly along the wall when it struck the wall at an angle. The VR also permitted the presentation of dynamic textures such as movies of conspecifics or ellipses inside the tunnels.

Because the scaling between distance units in the VR and physical distance is arbitrary, it is not possible to define absolute distances in the VR without additional information for calibration. Assuming a scaling factor of 5 mm/VR unit, which results in a realistic size of fish in movie displays (approximately 25 mm), the diameter of the arena would be 30 cm, and the long and short axes of the invisible collision boundary would be 20 cm and 10 cm, respectively.

The movie of conspecifics contained two females and one male with a maximal angular size of 20°. The ellipse movie consisted of three vertically oriented ellipses of similar size that followed the same motion path. The VR was projected onto back projection film (DILAND SCREEN, DGP) on the outside of a custom-built, semi-hexagonal tank made of polymethyl methacrylate (10 cm per side, 8 cm high). Three projectors (AAXA P3, with RGB LEDs and LCoS display) arranged at 60° projected segments of the VR onto the three sides of the semi-hexagon. The red, green and blue light-emitting diodes (LEDs) of the projectors were gated by the line trigger signal (TTL) from the resonant scanner (Cambridge Technology, CRS 8 kHz) of the two-photon microscope using a custom-built circuit55 to achieve fast rise and fall times of the LED output.

Tail tracking and inference of virtual movement

The tail of the head-fixed fish was illuminated from the side using an infrared light source (805 nm, Roithner Lasertechnik, RLT80810MG) and monitored from below using a camera (Point Grey, Dragonfly2). A short-pass filter (<875 nm, Edmund Optics, #86-106) and a long-pass filter (>700 nm, Edmund Optics, #43-949) were positioned in front of the camera to block infrared light from the femtosecond laser and visible light from the projectors, respectively. Camera settings, real-time behavioral analysis, visuomotor gain control and movie presentations in the VR were controlled by a custom program written in LabVIEW (National Instruments; https://github.com/HUANGKUOHUA/Zebrafish-in-virtual-reality.git). Tail movements were recorded at a resolution of 90 × 70 pixels (lateral × rostro-caudal) at 50 Hz. The shape of the tail was analyzed in real time and represented by seven equally spaced points along the tail using a modified version of a custom routine56. The curvature and the undulation frequency of the caudal part of the tail were quantified to infer the swimming trajectory and to update the position and angle of the virtual cameras. Every zero-crossing of the tail curvature triggered a forward movement that decayed exponentially (decay constant, τ = 190 ms). Thus, fast symmetric tail undulations were most efficient in driving forward swims in the VR. The amplitude of turns was derived from the bending direction and the curvature of the caudal tail. In each video frame, a right bend triggered a right turn in proportion to the tail curvature, followed by an exponential decay (τ = 190 ms). A sequence of unilateral tail bends was therefore most efficient in driving rotations. Linear and angular updates of the VR occurred only during active swimming, which was defined by the intensity of tail movements (see below), so that an inactive fish with a curved tail did not cause a rotation of the VR.

where α is the angular gain, c is the caudal curvature of the tail, and rα is the decay constant (0.9).

where β is the forward gain, δ = 1 if a zero-crossing of the caudal tail curvature occurs and δ = 0 otherwise, and rβ is the decay constant (0.9).
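The update rules that these definitions refer to can be sketched as follows. This is a minimal sketch, and the exact form is an assumption: it uses first-order recursions applied once per 50 Hz video frame, ω_t = rα ω_{t−1} + α c_t for the angular drive and v_t = rβ v_{t−1} + β δ_t for the forward drive. The form is at least consistent with the numbers stated above. A per-frame factor of 0.9 at 20 ms per frame corresponds to τ = −20 ms / ln 0.9 ≈ 190 ms.

The default gains (α = 2, β = 0.3) are taken from the next paragraph. How activity gating was implemented is also an assumption: here only the inputs are gated, so residual drive keeps decaying. The function names are hypothetical and are not part of the published LabVIEW software.

```python
import math

FRAME_DT_MS = 20.0  # tail camera runs at 50 Hz, i.e. 20 ms per video frame


def time_constant_ms(r, dt_ms=FRAME_DT_MS):
    """Exponential time constant implied by a per-frame decay factor r."""
    return -dt_ms / math.log(r)


def update_vr(curvatures, active, alpha=2.0, beta=0.3, r_alpha=0.9, r_beta=0.9):
    """Per-frame angular (omega) and forward (v) drive of the virtual cameras.

    Assumed form: omega_t = r_alpha * omega_{t-1} + alpha * c_t
                  v_t     = r_beta  * v_{t-1}     + beta  * delta_t
    curvatures: signed caudal tail curvature per video frame
    active: per-frame booleans, True while the fish is actively swimming
    """
    omega, v = 0.0, 0.0
    omegas, vs = [], []
    prev_c = None
    for c, is_active in zip(curvatures, active):
        # delta_t = 1 when the caudal curvature changes sign (zero-crossing)
        delta = 1 if prev_c is not None and prev_c * c < 0 else 0
        prev_c = c
        # Assumption: inputs are gated by activity, so a resting fish with a
        # bent tail adds no rotation; previous drive still decays.
        c_in = c if is_active else 0.0
        d_in = delta if is_active else 0
        omega = r_alpha * omega + alpha * c_in
        v = r_beta * v + beta * d_in
        omegas.append(omega)
        vs.append(v)
    return omegas, vs


print(f"tau = {time_constant_ms(0.9):.0f} ms")
```

Alternating curvatures (symmetric undulations) produce a zero-crossing on every frame, and hence sustained forward drive, while the angular terms largely cancel. A run of same-sided bends produces no zero-crossings but accumulates angular drive. Both outcomes match the qualitative behavior described above.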

The gains of the forward swims and turns in the VR were initially set to default values: angular gain = 2, forward gain = 0.3. During the first 20 – 30 min in the VR and before collection of behavioral and imaging data, swimming behavior was observed by the experimenter. If struggling or inactivity was observed, gains were modified slightly, and further fine gain adjustments were made thereafter (Supplementary Fig. 3). These adjustments were made because we expected optimal gain settings to vary between individuals due to differences in strength, body shape and other parameters.

VR combined with two-photon microscopy

Adult zebrafish were head-fixed and positioned at the rear center of the water-filled VR chamber (water depth 7 cm), ca. 3 cm below the water surface. A 16x water-immersion objective with a working distance of 3 mm (Nikon, CFI75 LWD 16xW) or a 20x water-immersion objective with a working distance of 1.7 mm (Zeiss, Plan-Apochromat 20xW) was used for two-photon imaging. A custom-designed water-proof sleeve allowed for immersion of objectives >3 cm below the water surface. A custom-built multiphoton microscope with resonant scanners was used to acquire series of images with 512 × 512 pixels at a rate of 30 Hz using custom-written software based on ScanImage36,57. Because the photomultiplier tube (PMT) was gated on alternating lines, the effective resolution was 512 × 256 pixels at 30 Hz. GCaMP6f fluorescence was imaged through the intact skull using an excitation wavelength of 920 nm with a power of 50 mW at the sample. The microscope did not include compensation for group velocity dispersion or other optical components designed to optimize imaging depth.

In contrast to a head-fixed rodent, where the objective front lens can be shielded from ambient light by the skull and head plate10, a substantial number of photons from the virtual reality entered the objective lens. We therefore temporally separated calcium imaging from the VR by gating the LEDs of the projectors and the fluorescence-detecting PMT (H11706P-40, Hamamatsu) in a non-overlapping manner. The TTL line clock of the resonant scanner (Cambridge Technology, CRS 8 kHz) was used as a gating signal to switch between VR illumination and fluorescence detection on alternating lines using a data acquisition board with a retriggering function (National Instruments, PCIe-6321). The projector LEDs were switched on for 24 μs during the turnaround period of the resonant scanner while the shutter circuit of the PMT was switched to low gain. To further reduce optical contamination, a notch filter (514.5/25 nm, OD 4, Edmund Optics) was positioned in front of each projector and an additional band-pass filter (510/50 nm, Chroma) was positioned in front of the PMT. Between imaging sessions, the resonant scanner was not scanning and the projector LEDs were triggered by an 8 kHz TTL signal generated by a data acquisition board (National Instruments) to maintain the VR. The frame count of the two-photon recording (30 Hz) was stored in each video frame (50 fps) to ensure precise frame-to-frame mapping between behavioral recordings and activity measurements.
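Each 50 fps video frame carries the current 30 Hz two-photon frame count. Behavioral signals can therefore be resampled onto the imaging time base by grouping video frames by that count. A minimal sketch, assuming the count has already been read out of each video frame; `behavior_per_imaging_frame` is a hypothetical helper, not part of the published software. With roughly 50/30 ≈ 1.7 video frames per imaging frame, each imaging frame averages one or two video frames.

```python
from collections import defaultdict


def behavior_per_imaging_frame(frame_counts, values):
    """Average a 50 fps behavioral signal within each 30 Hz imaging frame.

    frame_counts: two-photon frame count stored in each video frame
    values: behavioral measurement (e.g. tail movement intensity) per video frame
    Returns {imaging_frame: mean value}, ordered by imaging frame.
    """
    sums = defaultdict(float)
    counts = defaultdict(int)
    for frame, x in zip(frame_counts, values):
        sums[frame] += x
        counts[frame] += 1
    return {frame: sums[frame] / counts[frame] for frame in sorted(sums)}


print(behavior_per_imaging_frame([0, 0, 1, 1, 2], [1.0, 3.0, 2.0, 4.0, 5.0]))
```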

Behavioral analysis in head-restrained adult zebrafish

To analyze the probability of animal position in the VR, the virtual position of the animal was labeled by a pixel value of 1 on a background of zeros and averaged across recording frames. The resulting residence probability map was convolved with a normalized Gaussian mask (σ = 2 cm). The side preference index (SPI) of each animal was calculated as the mean of the normalized position (between −1 and 1) along the long axis of the VR during the analysis time window (90 s). ΔSPI = SPI (movie vs. empty tank) − SPI (empty tank vs. empty tank). Movies of conspecifics and ellipses were presented for 40 – 300 s.
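The SPI and ΔSPI definitions can be sketched as below. Several details are assumptions: positions are given along the long axis with 0 at the center and ±`half_length` at the ends, +1 denotes the side on which the movie is shown, and values beyond the ends are clipped. The function names are hypothetical.

```python
def side_preference_index(positions, half_length):
    """Mean normalized position along the long axis, in [-1, 1].

    positions: position along the long axis (0 = center), any unit
    half_length: distance from the center to either end, same unit
    """
    # Assumption: +1 is the movie side; out-of-range values are clipped
    normalized = [max(-1.0, min(1.0, x / half_length)) for x in positions]
    return sum(normalized) / len(normalized)


def delta_spi(pos_with_movie, pos_control, half_length):
    """dSPI = SPI(movie vs. empty tank) - SPI(empty tank vs. empty tank)."""
    return (side_preference_index(pos_with_movie, half_length)
            - side_preference_index(pos_control, half_length))


print(delta_spi([10, 10, -10, 10], [0, 5, -5, 0], half_length=10))
```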

Intensity of tail movements was quantified as the mean of the absolute difference in pixel values between adjacent video frames. To detect swim events, the tail movement intensity trace was binarized using a threshold of three standard deviations calculated from the lower half of the distribution. Binarized events separated by <100 ms were fused. The onset of a swim event was defined as the onset of these binarized events. The duration of a swim event was defined as the interval between adjacent swim onsets. Each swim event thus consisted of an active period (with tail movement) followed by an inactive period (without tail movement).
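The swim-detection steps can be sketched as follows. The text does not say how the threshold is anchored, so this sketch assumes the mean plus three s.d. of the lower half of the intensity distribution. The default sampling rate of 50 Hz is taken from the tail-tracking section; for the freely swimming recordings, `fs` would be 10 or 30 Hz. The function name is hypothetical.

```python
import statistics


def detect_swims(intensity, fs=50.0, n_sd=3.0, min_gap_s=0.1):
    """Return swim onsets (frame indices) and swim durations (s)."""
    lower_half = sorted(intensity)[: len(intensity) // 2]
    # Assumption: threshold = mean + n_sd * s.d. of the lower half
    threshold = statistics.mean(lower_half) + n_sd * statistics.stdev(lower_half)
    active = [x > threshold for x in intensity]
    # Collect runs of supra-threshold frames as [start, stop) intervals
    events, start = [], None
    for i, a in enumerate(active):
        if a and start is None:
            start = i
        elif not a and start is not None:
            events.append([start, i])
            start = None
    if start is not None:
        events.append([start, len(active)])
    # Fuse events separated by less than min_gap_s
    fused = []
    for ev in events:
        if fused and ev[0] - fused[-1][1] < min_gap_s * fs:
            fused[-1][1] = ev[1]
        else:
            fused.append(ev)
    onsets = [ev[0] for ev in fused]
    # Swim duration = interval to the next onset (undefined for the last swim)
    durations = [(b - a) / fs for a, b in zip(onsets, onsets[1:])]
    return onsets, durations
```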

The onset of BREPs was determined by visual inspection of the behavioral video. Usually, a transition in motor behavior was detected because fish started to perform unilateral bends of the caudal tail followed by vigorous and often bilateral movements of the whole tail, including the rostral trunk of the body (Supplementary Video 4). In some cases, fish clearly responded to VR perturbations with increased motor output, but an abrupt transition in tail beat structure was not detectable. In such cases, BREP onset was set to the third tail flick after the VR perturbation.

Behavioral analysis in freely-swimming adult zebrafish

Responses to movies of conspecifics and abstract shapes were analyzed in 64 freely swimming adult zebrafish. The behavior of individual freely swimming fish was recorded in a rectangular tank (L × W × H = 20 × 10 × 15 cm, water depth 10 cm) for one hour at 10 Hz. The long walls of the tank were made of white PVC with matt surfaces. The short walls were made of anti-reflection glass (LUXAR, Glas Trösch, Switzerland) to prevent interactions of fish with their mirror image. The bottom was covered with a diffusor to prevent reflections. An infrared LED panel illuminated the tank from below for video imaging from above. Movies were identical to those used in the VR. Each movie was presented to the animal for 20 s on LCD monitors behind the short walls of the tank. Four tanks were positioned in parallel to increase the throughput of the assay. Animals in different tanks could not see each other and were presented with independent movies.

Analysis of spontaneous swimming behavior in the absence of movies was performed with a different group of fish (n = 18). The behavior of individual freely swimming fish was recorded in a rectangular tank (L × W × H = 30 × 15 × 20 cm, water depth 10 cm) for one hour at 30 Hz. The center of mass of the animal was labeled with a pixel value of 1 on a background of zeros, and the average of all recording frames was convolved with a normalized Gaussian mask (σ = 2 cm) to generate the residence probability map.

To measure the intensity of tail movements, an image patch with the fish at the center (100 by 100 pixels) was extracted from each video frame and the mean of the absolute difference in pixel values between adjacent frames was calculated. The side preference index, swim event onset and swim event period were defined by the procedure described above.

Definition of brain regions in the dorsal pallium

Anatomical definitions of canonical subdivisions of the dorsal pallium in adult zebrafish vary somewhat between previous studies39,40. We adopted the definitions of Aoki et al.40 with minor modifications because this definition best matched landmarks in the fluorescence images of the dorsal telencephalon from Tg(neuroD:GCaMP6f) fish. At the end of the experiment, two-photon image stacks of the dorsal pallium were acquired in each fish, and forebrain regions were delineated manually based on anatomical features. Dm was separated from other forebrain regions by the sulcus ypsilonformis. cDc was separated from rDc by a boundary that was visible in the neuroD:GCaMP6f expression (Supplementary Fig. 9). This boundary appears to correspond to a boundary in parvalbumin expression40. Dl was lateral to rDc at the rostral end of the forebrain but covered rDc at a more caudal position39 (Supplementary Fig. 9).

Post-processing of calcium imaging data and extraction of neuronal ROIs

In each session, a single field of view was recorded for 30 min at 30 Hz. The 54000 frames were then separated into six files with 8000 frames per file (267 seconds) and one file with 6000 frames. For visualization, images were smoothed by a mild 2D Gaussian filter (s.d. 1 pixel in each direction).
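The file sizes follow from simple arithmetic. 54000 frames at 30 Hz is 30 min, and splitting into 8000-frame files yields six full files (8000 / 30 ≈ 267 s each) plus a remainder of 6000 frames. A short sketch with a hypothetical helper:

```python
FS_HZ = 30.0  # two-photon frame rate


def split_into_files(n_frames, frames_per_file=8000):
    """Consecutive (start, stop) frame ranges; the last file keeps the remainder."""
    return [(start, min(start + frames_per_file, n_frames))
            for start in range(0, n_frames, frames_per_file)]


files = split_into_files(54000)
print(len(files), "files;", files[-1][1] - files[-1][0], "frames in the last one")
print(f"{54000 / FS_HZ / 60:.0f} min per session, {8000 / FS_HZ:.0f} s per full file")
```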

Motion artifacts comprised two main types that were easily detectable by maximum projections of image series along the time axis because they resulted in blurring. The first type was a transient movement of the brain in both lateral (x, y) and axial (z) directions during episodes of struggling. These image shifts were usually small (<1 μm) and reversible (Fig. 3b-g). No obvious image shifts were observed during spontaneous swim events or during BREPs, which are smaller in intensity and duration (Supplementary Fig. 11). In rare cases, struggling resulted in a change in the z-position that was not fully reversible. Such a shift, as well as large transient shifts in any direction, resulted in substantial blurring of the maximum projection. The second type was a slow drift (<1 μm/min) in the horizontal plane towards the rostral direction that was observed occasionally. Usually, this drift did not substantially influence the analysis of neuronal responses to VR perturbations because the image displacement within the 20 s analysis time window was small. Nonetheless, any files that showed obvious blurring in the maximum projection were excluded from further analyses (4.8% of all files).

ROIs were drawn independently in different files. It is therefore possible that the same neuron appeared more than once in the analysis when ROIs in different files represented the same neuron. In matrices representing the activity of neurons as a function of time, we estimated that each neuron was represented, on average, by 2.9 entries. For the analysis of the same pre-BREP neurons during positive and negative trials (Fig. 6d,e), however, activity traces were concatenated for neurons that were identified reliably across all seven files of a session. This ensured that the same neurons were analyzed during positive and negative trials. ROIs for single neurons were selected manually in ImageJ58, and time traces were averaged across all pixels for each ROI. For calculation of ΔF/F0 traces, F0 was determined as the mean of the lowest 25% of values in the fluorescence trace. ΔF/F0 traces were deconvolved to extract relative action potential (AP) probabilities using the Elephant algorithm42 (https://git.io/vNbsz).
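The ΔF/F0 normalization can be sketched as below. It assumes that "the lowest 25%" means the 25% smallest raw fluorescence values of the ROI trace. The Elephant deconvolution step is not reproduced, and the function name is hypothetical.

```python
def delta_f_over_f(trace, fraction=0.25):
    """dF/F0 with F0 = mean of the lowest `fraction` of fluorescence values."""
    n_low = max(1, int(len(trace) * fraction))
    f0 = sum(sorted(trace)[:n_low]) / n_low
    return [(f - f0) / f0 for f in trace]


print(delta_f_over_f([1, 1, 2, 2, 3, 3, 4, 4]))
```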

Analysis of neuronal activity modulated by VR perturbation

To determine whether a neuron was responsive to the VR perturbation, the difference in ΔF/F between the 10 s time windows before and after perturbation onset was calculated. The observed response was compared to the response distribution triggered by 500 random time points on the same activity trace. The neuron was considered responsive in this perturbation trial when the response exceeded the mean of the randomly sampled distribution by two s.d. Each combination of an ROI and a VR perturbation is defined as a neuron-perturbation pair. Each neuron therefore contributed n neuron-perturbation pairs, where n is the number of VR perturbations that were applied while imaging the neuron.
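The responsiveness criterion can be sketched as follows. The sketch assumes ΔF/F sampled at 30 Hz. It also assumes that random time points are drawn uniformly from positions where both 10 s windows fit inside the trace, because the text does not specify how random points were restricted. The function names are hypothetical.

```python
import random
import statistics


def window_response(trace, onset, win):
    """Mean dF/F in [onset, onset + win) minus mean in [onset - win, onset)."""
    before = trace[onset - win:onset]
    after = trace[onset:onset + win]
    return statistics.mean(after) - statistics.mean(before)


def is_responsive(trace, onset, fs=30.0, win_s=10.0, n_random=500, n_sd=2.0, seed=0):
    """True if the response at `onset` exceeds the null mean by n_sd s.d."""
    win = int(round(win_s * fs))
    rng = random.Random(seed)
    # Null distribution: the same window difference at random time points
    null = [window_response(trace, rng.randrange(win, len(trace) - win + 1), win)
            for _ in range(n_random)]
    threshold = statistics.mean(null) + n_sd * statistics.stdev(null)
    return window_response(trace, onset, win) > threshold
```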

To investigate how many neurons responded during behavioral transitions in the absence of VR perturbations, we identified “spontaneous strong swims” (swim duration >2 s) during normal visuomotor coupling. A time interval was randomly sampled from the pre-BREP period and paired with the spontaneous strong swim to define an artificial onset. This artificial onset was used to identify responsive neurons by the procedure described above. Each combination of an ROI and a swim event is defined as a neuron-swim pair. Each neuron therefore contributed n neuron-swim pairs, where n is the number of swims that were performed while imaging the neuron.

To determine the response onset of individual neurons (Fig. 6a), the activity trace from −10 s to +10 s around the perturbation onset was smoothed using a median filter with a 150 ms time span. The lower 70% of the activity trace before the perturbation was used to calculate the baseline and s.d. Rising edges were detected in the post-perturbation activity trace using a threshold of 5 s.d. The first rising edge followed by a mean activity over the next 1 s higher than 3 s.d. was defined as the first calcium transient. The combination of criteria for height (5 s.d.) and duration (3 s.d. for 1 s) reliably separated responses from noise. Starting from the rising edge of the response event, a backward search identified the first time point at which activity was lower than 2 s.d. This time point was set as the response onset of the neuron to the respective perturbation.
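The onset-detection procedure can be sketched as follows, under several assumptions:

- All thresholds are expressed relative to the baseline mean (mean + k s.d.).
- The 150 ms median filter corresponds to 5 samples at 30 Hz and is applied to the ±10 s segment.
- The backward search stops at the first sample below mean + 2 s.d.

These details and the function names are assumptions. Edge handling of the median filter is simplified.

```python
import statistics


def median_filter(x, k):
    """Running median with an odd window k (window shrinks at the edges)."""
    h = k // 2
    return [statistics.median(x[max(0, i - h):i + h + 1]) for i in range(len(x))]


def response_onset(trace, onset_idx, fs=30.0):
    """Response onset in s after perturbation onset, or None if no response."""
    w = int(round(10 * fs))
    if onset_idx < w or onset_idx + w > len(trace):
        raise ValueError("need 10 s of data on both sides of the perturbation")
    k = max(1, int(round(0.15 * fs)) | 1)  # odd kernel of ~150 ms (5 at 30 Hz)
    seg = median_filter(trace[onset_idx - w:onset_idx + w], k)
    pre, post = seg[:w], seg[w:]
    low = sorted(pre)[:max(2, int(0.7 * len(pre)))]  # lower 70% of baseline
    mu, sd = statistics.mean(low), statistics.stdev(low)
    one_s = int(round(fs))
    for i in range(1, len(post)):
        rising = post[i - 1] < mu + 5 * sd <= post[i]
        if rising and statistics.mean(post[i:i + one_s]) > mu + 3 * sd:
            j = i
            # Walk back to the first sample below mean + 2 s.d.
            while j > 0 and post[j] >= mu + 2 * sd:
                j -= 1
            return j / fs
    return None
```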

Clustering of activity traces was performed by affinity propagation59.

Statistical analysis

We tested for significant differences in the mean using a one- or two-sided t-test, for differences in the median using a two-sided Wilcoxon rank sum test, for differences in fractions using a χ² test and for differences between angular distributions using Kuiper’s test.

Supplementary Material

Acknowledgements

We thank G. Keller and M. Leinweber for comments on the manuscript; P. Argast, P. Buchmann and P. Zmarz (Friedrich Miescher Institute for Biomedical Research, Basel, Switzerland) for technical support; A. Prendergast and C. Wyart (Institut du Cerveau et de la Moelle Épinière, Paris, France) for sharing Tg(neuroD:GCaMP6f) fish; E. Boyden (Massachusetts Institute of Technology, Cambridge, USA) and B. Roska (Friedrich Miescher Institute for Biomedical Research, Basel, Switzerland) for sharing reagents (ArchT-GFP); and the Friedrich group for stimulating discussions. This work was supported by the Novartis Research Foundation (R.W.F.), by a Novartis Institutes for Biomedical Research Presidential Postdoctoral Fellowship (K.H.), by the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation programme (grant agreement No 742576), by the Swiss National Science Foundation (R.W.F.; 310030B_152833/1; 135196/1), by a fellowship from the Boehringer Ingelheim Fonds (P.R.), and by the NBRP and NBRP/Fundamental Technologies Upgrading Program from AMED (K.K.).

Footnotes

Reporting Summary

Further information on research design is available in the Nature Research Reporting Summary linked to this article.

Data availability

Original image series and virtual reality presentations that support the findings of this study are too large to be included in the publication. These data are available from the corresponding author upon reasonable request.

Code availability

Software for controlling VR and coordinating VR with two-photon imaging can be downloaded at https://github.com/HUANGKUOHUA/Zebrafish-in-virtual-reality.git.

Author contributions

K.H. developed the methodology, designed and performed experiments, analyzed data, and wrote the manuscript. P.R. developed methodology and wrote the manuscript. T.F. and K.K. created and analyzed transgenic fish. T.B. supervised the project. R.W.F. supervised the project, analyzed data and wrote the manuscript.

Competing interests

The authors declare no competing interests.

References

- 1. Holscher C. Rats are able to navigate in virtual environments. J Exp Biol. 2005;208:561–569. doi: 10.1242/jeb.01371.

- 2. Harvey CD, Collman F, Dombeck DA, Tank DW. Intracellular dynamics of hippocampal place cells during virtual navigation. Nature. 2009;461:941–946. doi: 10.1038/nature08499.

- 3. Minderer M, Harvey CD, Donato F, Moser EI. Virtual reality explored. Nature. 2016;533:324–325. doi: 10.1038/nature17899.

- 4. Reiser MB, Dickinson MH. A modular display system for insect behavioral neuroscience. J Neurosci Methods. 2008;167:127–139. doi: 10.1016/j.jneumeth.2007.07.019.

- 5. Kim SS, Rouault H, Druckmann S, Jayaraman V. Ring attractor dynamics in the Drosophila central brain. Science. 2017;356:849–853. doi: 10.1126/science.aal4835.

- 6. Dombeck DA, Reiser MB. Real neuroscience in virtual worlds. Curr Opin Neurobiol. 2012;22:3–10. doi: 10.1016/j.conb.2011.10.015.

- 7. Larsch J, Baier H. Biological Motion as an Innate Perceptual Mechanism Driving Social Affiliation. Curr Biol. 2018;28:3523–3532.e4. doi: 10.1016/j.cub.2018.09.014.

- 8. Stowers JR, et al. Virtual reality for freely moving animals. Nat Methods. 2017;14:995–1002. doi: 10.1038/nmeth.4399.

- 9. Keller GB, Bonhoeffer T, Hübener M. Sensorimotor Mismatch Signals in Primary Visual Cortex of the Behaving Mouse. Neuron. 2012;74:809–815. doi: 10.1016/j.neuron.2012.03.040.

- 10. Dombeck DA, Khabbaz AN, Collman F, Adelman TL, Tank DW. Imaging Large-Scale Neural Activity with Cellular Resolution in Awake, Mobile Mice. Neuron. 2007;56:43–57. doi: 10.1016/j.neuron.2007.08.003.

- 11. Maimon G, Straw AD, Dickinson MH. Active flight increases the gain of visual motion processing in Drosophila. Nat Neurosci. 2010;13:393–399. doi: 10.1038/nn.2492.

- 12. Seelig JD, et al. Two-photon calcium imaging from head-fixed Drosophila during optomotor walking behavior. Nat Methods. 2010;7:535–540. doi: 10.1038/nmeth.1468.

- 13. Ahrens MB, et al. Brain-wide neuronal dynamics during motor adaptation in zebrafish. Nature. 2012;485:471–477. doi: 10.1038/nature11057.

- 14. Picardo MA, et al. Population-Level Representation of a Temporal Sequence Underlying Song Production in the Zebra Finch. Neuron. 2016;90:866–876. doi: 10.1016/j.neuron.2016.02.016.

- 15. Kerr JND, Denk W. Imaging in vivo: watching the brain in action. Nat Rev Neurosci. 2008;9:195–205. doi: 10.1038/nrn2338.

- 16. Ahrens MB, Orger MB, Robson DN, Li JM, Keller PJ. Whole-brain functional imaging at cellular resolution using light-sheet microscopy. Nat Methods. 2013;10:413–420. doi: 10.1038/nmeth.2434.

- 17.Panier T, et al. Fast functional imaging of multiple brain regions in intact zebrafish larvae using Selective Plane Illumination Microscopy. Front Neural Circuits. 2013;7. doi: 10.3389/fncir.2013.00065.

- 18.Schulze L, et al. Transparent Danionella translucida as a genetically tractable vertebrate brain model. Nat Methods. 2018;15:977–983. doi: 10.1038/s41592-018-0144-6.

- 19.Portugues R, Severi KE, Wyart C, Ahrens MB. Optogenetics in a transparent animal: circuit function in the larval zebrafish. Curr Opin Neurobiol. 2013;23:119–126. doi: 10.1016/j.conb.2012.11.001.

- 20.Friedrich RW, Jacobson GA, Zhu P. Circuit Neuroscience in Zebrafish. Curr Biol. 2010;20:R371–R381. doi: 10.1016/j.cub.2010.02.039.

- 21.Muto A, Ohkura M, Abe G, Nakai J, Kawakami K. Real-Time Visualization of Neuronal Activity during Perception. Curr Biol. 2013;23:307–311. doi: 10.1016/j.cub.2012.12.040.

- 22.Kim DH, et al. Pan-neuronal calcium imaging with cellular resolution in freely swimming zebrafish. Nat Methods. 2017;14:1107–1114. doi: 10.1038/nmeth.4429.

- 23.Buske C, Gerlai R. Shoaling develops with age in Zebrafish (Danio rerio). Prog Neuropsychopharmacol Biol Psychiatry. 2011;35:1409–1415. doi: 10.1016/j.pnpbp.2010.09.003.

- 24.Frank T, Mönig NR, Satou C, Higashijima S, Friedrich RW. Associative conditioning remaps odor representations and modifies inhibition in a higher olfactory brain area. Nat Neurosci. 2019;22:1844–1856. doi: 10.1038/s41593-019-0495-z.

- 25.Gerlach G, Lysiak N. Kin recognition and inbreeding avoidance in zebrafish, Danio rerio, is based on phenotype matching. Anim Behav. 2006;71:1371–1377.

- 26.Valente A, Huang K-H, Portugues R, Engert F. Ontogeny of classical and operant learning behaviors in zebrafish. Learn Mem. 2012;19:170–177. doi: 10.1101/lm.025668.112.

- 27.Wanner AA, Genoud C, Masudi T, Siksou L, Friedrich RW. Dense EM-based reconstruction of the interglomerular projectome in the zebrafish olfactory bulb. Nat Neurosci. 2016;19:816–825. doi: 10.1038/nn.4290.

- 28.Friedrich RW, Genoud C, Wanner AA. Analyzing the structure and function of neuronal circuits in zebrafish. Front Neural Circuits. 2013;7. doi: 10.3389/fncir.2013.00071.

- 29.Zhu P, Fajardo O, Shum J, Zhang Schärer Y-P, Friedrich RW. High-resolution optical control of spatiotemporal neuronal activity patterns in zebrafish using a digital micromirror device. Nat Protoc. 2012;7:1410–1425. doi: 10.1038/nprot.2012.072.

- 30.Rao RPN, Ballard DH. Predictive coding in the visual cortex: a functional interpretation of some extra-classical receptive-field effects. Nat Neurosci. 1999;2:79–87. doi: 10.1038/4580.

- 31.Bastos AM, et al. Canonical Microcircuits for Predictive Coding. Neuron. 2012;76:695–711. doi: 10.1016/j.neuron.2012.10.038.

- 32.Keller GB, Mrsic-Flogel TD. Predictive Processing: A Canonical Cortical Computation. Neuron. 2018;100:424–435. doi: 10.1016/j.neuron.2018.10.003.

- 33.Dreosti E, Lopes G, Kampff AR, Wilson SW. Development of social behavior in young zebrafish. Front Neural Circuits. 2015;9. doi: 10.3389/fncir.2015.00039.

- 34.Stednitz SJ, et al. Forebrain Control of Behaviorally Driven Social Orienting in Zebrafish. Curr Biol. 2018;28:2445–2451.e3. doi: 10.1016/j.cub.2018.06.016.

- 35.Gerlai R. Animated images in the analysis of zebrafish behavior. Curr Zool. 2017;63:35–44. doi: 10.1093/cz/zow077.

- 36.Rupprecht P, Prendergast A, Wyart C, Friedrich RW. Remote z-scanning with a macroscopic voice coil motor for fast 3D multiphoton laser scanning microscopy. Biomed Opt Express. 2016;7:1656. doi: 10.1364/BOE.7.001656.

- 37.Koide T, et al. Olfactory neural circuitry for attraction to amino acids revealed by transposon-mediated gene trap approach in zebrafish. Proc Natl Acad Sci. 2009;106:9884–9889. doi: 10.1073/pnas.0900470106.

- 38.Lal P, et al. Identification of a neuronal population in the telencephalon essential for fear conditioning in zebrafish. BMC Biol. 2018;16. doi: 10.1186/s12915-018-0502-y.

- 39.Mueller T, Dong Z, Berberoglu MA, Guo S. The dorsal pallium in zebrafish, Danio rerio (Cyprinidae, Teleostei). Brain Res. 2011;1381:95–105. doi: 10.1016/j.brainres.2010.12.089.

- 40.Aoki T, et al. Imaging of Neural Ensemble for the Retrieval of a Learned Behavioral Program. Neuron. 2013;78:881–894. doi: 10.1016/j.neuron.2013.04.009.

- 41.Rodríguez F, et al. Conservation of Spatial Memory Function in the Pallial Forebrain of Reptiles and Ray-Finned Fishes. J Neurosci. 2002;22:2894–2903. doi: 10.1523/JNEUROSCI.22-07-02894.2002.

- 42.Berens P, et al. Community-based benchmarking improves spike rate inference from two-photon calcium imaging data. PLOS Comput Biol. 2018;14:e1006157. doi: 10.1371/journal.pcbi.1006157.

- 43.Koster-Hale J, Saxe R. Theory of Mind: A Neural Prediction Problem. Neuron. 2013;79:836–848. doi: 10.1016/j.neuron.2013.08.020.

- 44.Blakemore S-J, Wolpert DM, Frith CD. Central cancellation of self-produced tickle sensation. Nat Neurosci. 1998;1:635–640. doi: 10.1038/2870.

- 45.Cullen KE. Vestibular processing during natural self-motion: implications for perception and action. Nat Rev Neurosci. 2019;20:346–363. doi: 10.1038/s41583-019-0153-1.

- 46.Schultz W, Dayan P, Montague PR. A Neural Substrate of Prediction and Reward. Science. 1997;275:1593–1599. doi: 10.1126/science.275.5306.1593.

- 47.Attinger A, Wang B, Keller GB. Visuomotor Coupling Shapes the Functional Development of Mouse Visual Cortex. Cell. 2017;169:1291–1302.e14. doi: 10.1016/j.cell.2017.05.023.

- 48.McElligott MB, O’Malley DM. Prey Tracking by Larval Zebrafish: Axial Kinematics and Visual Control. Brain Behav Evol. 2005;66:177–196. doi: 10.1159/000087158.

- 49.Wang T, et al. Three-photon imaging of mouse brain structure and function through the intact skull. Nat Methods. 2018;15:789–792. doi: 10.1038/s41592-018-0115-y.

- 50.Ji N. Adaptive optical fluorescence microscopy. Nat Methods. 2017;14:374–380. doi: 10.1038/nmeth.4218.

- 51.Asakawa K, et al. Genetic dissection of neural circuits by Tol2 transposon-mediated Gal4 gene and enhancer trapping in zebrafish. Proc Natl Acad Sci. 2008;105:1255–1260. doi: 10.1073/pnas.0704963105.

- 52.Han X, et al. A High-Light Sensitivity Optical Neural Silencer: Development and Application to Optogenetic Control of Non-Human Primate Cortex. Front Syst Neurosci. 2011;5. doi: 10.3389/fnsys.2011.00018.

- 53.Kwan KM, et al. The Tol2kit: A multisite gateway-based construction kit for Tol2 transposon transgenesis constructs. Dev Dyn. 2007;236:3088–3099. doi: 10.1002/dvdy.21343.

- 54.Distel M, Wullimann MF, Koster RW. Optimized Gal4 genetics for permanent gene expression mapping in zebrafish. Proc Natl Acad Sci. 2009;106:13365–13370. doi: 10.1073/pnas.0903060106.

- 55.Leinweber M, et al. Two-photon Calcium Imaging in Mice Navigating a Virtual Reality Environment. J Vis Exp. 2014:50885. doi: 10.3791/50885.

- 56.Huang K-H, Ahrens MB, Dunn TW, Engert F. Spinal Projection Neurons Control Turning Behaviors in Zebrafish. Curr Biol. 2013;23:1566–1573. doi: 10.1016/j.cub.2013.06.044.

- 57.Pologruto TA, Sabatini BL, Svoboda K. ScanImage: Flexible software for operating laser scanning microscopes. Biomed Eng OnLine. 2003;2:13. doi: 10.1186/1475-925X-2-13.

- 58.Schneider CA, Rasband WS, Eliceiri KW. NIH Image to ImageJ: 25 years of image analysis. Nat Methods. 2012;9:671–675. doi: 10.1038/nmeth.2089.

- 59.Frey BJ, Dueck D. Clustering by Passing Messages Between Data Points. Science. 2007;315:972–976. doi: 10.1126/science.1136800.

Associated Data
