Abstract

Background:

Postlingually deafened adult cochlear implant (CI) users routinely display large individual differences in the ability to recognize and understand speech, especially in adverse listening conditions. Although individual differences have been linked to several sensory (“bottom-up”) and cognitive (“top-down”) factors, little is currently known about the relative contributions of these factors in high- and low-performing CI users.

Purpose:

The aim of the study was to investigate differences in sensory functioning and neurocognitive functioning between high- and low-performing CI users on the Perceptually Robust English Sentence Test Open-set (PRESTO), a high-variability sentence recognition test containing sentence materials produced by multiple male and female talkers with diverse regional accents.

Research Design:

CI users with accuracy scores in the upper (HiPRESTO) or lower quartiles (LoPRESTO) on PRESTO in quiet completed a battery of behavioral tasks designed to assess spectral resolution and neurocognitive functioning.

Study Sample:

Twenty-one postlingually deafened adult CI users, with 11 HiPRESTO and 10 LoPRESTO participants.

Data Collection and Analysis:

A discriminant analysis was carried out to determine the extent to which measures of spectral resolution and neurocognitive functioning discriminate HiPRESTO and LoPRESTO CI users. Auditory spectral resolution was measured using the Spectral-Temporally Modulated Ripple Test (SMRT). Neurocognitive functioning was assessed with visual measures of working memory (digit span), inhibitory control (Stroop), verbal learning and memory (California Verbal Learning Test), speed of lexical/phonological access (Test of Word Reading Efficiency), and nonverbal reasoning (Raven’s Progressive Matrices).

Results:

HiPRESTO and LoPRESTO CI users were discriminated primarily by performance on the SMRT and secondarily by the Raven’s test. No other neurocognitive measures contributed substantially to the discriminant function.

Conclusions:

High- and low-performing CI users differed in spectral resolution and, to a lesser extent, in nonverbal reasoning. These findings suggest that the extreme groups are distinguished by global factors, namely the richness of the sensory information and domain-general nonverbal intelligence, rather than by specific neurocognitive processing operations related to speech perception and spoken word recognition. Thus, although both bottom-up and top-down information contribute to speech recognition performance, low-performing CI users may not be sufficiently able to rely on neurocognitive skills specific to speech recognition to enhance processing of spectrally degraded input in adverse conditions involving high talker variability.

Keywords: adverse conditions, cochlear implants, individual differences, speech recognition

INTRODUCTION

Cochlear implants (CIs) are auditory prosthetic devices that restore a sense of hearing, albeit a degraded one, to individuals with moderate-to-profound sensorineural hearing loss. CIs have been a very effective medical treatment, and many postlingually deafened adult CI recipients achieve a high level of speech understanding in quiet listening conditions. Despite the overall success of CIs, enormous individual differences in outcomes are routinely observed in this patient population at CI centers around the world (Lazard et al, 2012; Blamey et al, 2013), and rates of “poor” speech understanding are high; for example, 13% of patients score <10% correct in recognizing words in sentences in quiet (Lenarz et al, 2012). The factors underlying individual differences in speech recognition have not been fully explained and integrated, which poses a major clinical problem for optimizing CI outcomes.

Variability in basic auditory sensitivity (e.g., spectral and temporal resolution) may help predict individual differences in speech recognition in postlingually deafened adult CI users (e.g., Moberly et al, 2018). CI users rely on a signal that is highly degraded in spectrotemporal detail because of the limitations of the electrode–nerve interface and the relatively broad electrical stimulation of the auditory nerve. In particular, spectral information, which is important for phonetic perception, is poorly conveyed by the CI device, limiting speech recognition abilities (Henry et al, 2005). However, spectral resolution across the electrode array appears to vary across individual implant users (Won et al, 2007). Differences in spectral resolution and speech recognition have typically been associated with patient-related demographic characteristics, such as age, age at implantation, duration of deafness, and residual hearing, as well as with device- or surgery-related factors, such as implant model, implant settings, and surgical technique. Regardless of the underlying factors, poorer spectral resolution may contribute to increased difficulty in recognizing speech for some CI users (Henry et al, 2005; Won et al, 2007; Moberly et al, 2018). CI users with good spectral resolution may be better able to use the acoustic-phonetic spectral detail provided by modern CI devices (Croghan et al, 2017).

Beyond differences in the initial “bottom-up” sensory input, related to physiologic or device-related factors, there also appear to be differences in “top-down” processing, that is, in the capacity of individual CI users to make use of neurocognitive processes and language knowledge to understand the degraded sensory information. Neurocognitive and linguistic skills have been found to be related to individual differences in speech recognition in hearing-impaired adults (e.g., Arehart et al, 2013) and adult CI users (e.g., Heydebrand et al, 2007; Lazard et al, 2010; Holden et al, 2013). Yet, compared with demographic and device-related factors, the involvement and relative contributions of specific neurocognitive skills in speech recognition in diverse listener populations are still unclear. Some specific neurocognitive skills, such as working memory capacity, appear to be more strongly related to speech perception and recognition than more general cognitive abilities, such as nonverbal intelligence (for a review, see Akeroyd, 2008). Working memory (Lyxell et al, 1998; Tao et al, 2014), inhibitory control (Moberly et al, 2016b), verbal learning and memory (Pisoni et al, 2018), and processing speed (Tinnemore et al, 2018) have all been linked to individual differences in speech recognition among adult CI users. In addition, although a strong relation has not been established, nonverbal reasoning skills have recently been found to be associated with individual performance among postlingually deafened adult CI users, independently of age (Mattingly et al, 2018).

Individual differences among CI users may be further exacerbated in the adverse listening conditions commonly encountered in daily life. Normal-hearing (NH) listeners are able to recognize and understand speech under an enormously wide range of adverse and challenging conditions, such as noise, reverberation, or competing talkers. To communicate successfully in real-world conditions, listeners must rapidly adapt not only to these diverse listening environments but also to highly variable acoustic properties of the vocal source, reflecting the speaker’s gender, age, regional dialect, speaking rate, and speaking style (e.g., Pisoni, 1997). Although NH listeners appear to adjust quickly and almost effortlessly to such acoustic differences (e.g., Johnsrude et al, 2013; Souza et al, 2013), talker variability places substantial perceptual and cognitive load on basic speech recognition processes: spoken materials with high talker variability require greater cognitive processing resources than materials with lower talker variability (e.g., Nusbaum and Magnuson, 1997). As such, high-variability speech with multiple talkers has been shown to be more challenging for NH listeners to recognize (e.g., Mullennix et al, 1989). Furthermore, studies with NH listeners have also revealed large individual differences in performance and have suggested several basic perceptual and neurocognitive skills as factors underlying multitalker sentence recognition (e.g., Tamati et al, 2013; Tamati and Pisoni, 2014).

Auditory sensitivity and neurocognitive skills may make different relative contributions to speech recognition for CI users in general and, more specifically, for high- versus low-performing CI users in testing conditions with high talker variability. Early research on CI speech recognition focused on high-performing CI users (or “stars”) as a way to demonstrate the efficacy or limitations of CIs, whereas more recent research has investigated speech recognition performance in low-performing CI users (e.g., Moberly et al, 2016a; Pisoni et al, 2017). Although no standard criteria exist for defining high and low performers, previous studies have observed substantial variability across CI users: some CI users obtain near-ceiling performance on sentence recognition in quiet, whereas others perform quite poorly (e.g., <10% correct) on the same task (Lenarz et al, 2012). In principle, high- and low-performing CI users might represent two distinct groups of patients whose outcomes may be more specifically predicted by differences in underlying neurocognitive functioning and auditory skills. These two groups often show large differences in speech recognition performance: low-performing CI users are more susceptible to noise (e.g., Fu and Nogaki, 2005) and to other sources of signal degradation, such as speech variability (e.g., Tamati et al, 2019). Furthermore, although both groups perform more poorly under adverse listening conditions, some high-performing CI users appear to be better able to take advantage of top-down compensatory mechanisms (Bhargava et al, 2014). These performance differences suggest that the extreme groups of listeners may rely on different underlying information-processing mechanisms that reflect the sensory and neurocognitive resources available to them.

Differences between high and low performers in auditory sensitivity to spectral and temporal properties of speech and/or in neurocognitive skills may lead them to adopt different perceptual strategies. Indeed, there is some evidence that CI users demonstrate individual differences in their perceptual strategies, such as the weights they assign to spectral and temporal cues (Winn et al, 2012; Bhargava et al, 2014; Moberly et al, 2014), resulting in group differences not only in speech recognition accuracy but also in the underlying mechanisms. Although previous research has assumed that both auditory sensitivity and neurocognitive functioning contribute to speech recognition performance in CI users (e.g., Bhargava et al, 2014), these factors may not contribute equally to performance differences between high and low performers. The specific neurocognitive and auditory skills associated with high or low performance remain unclear, particularly with respect to whether these groups are distinguished by more general auditory sensitivity and/or neurocognitive skills or by specific subskills related to speech perception and recognition.

The Current Study

The aim of the present study was to examine the relative contributions of spectral resolution and several neurocognitive skills to differentiating speech recognition outcomes between high- and low-performing experienced CI users, defined by performance in the top versus bottom quartile of sentence recognition scores in our study population. For the present study, the relative speech recognition skills of the CI users were determined using scores from the Perceptually Robust English Sentence Test Open-set (PRESTO; Gilbert et al, 2013). PRESTO maximizes talker variability by incorporating multiple talkers, genders, and regional accents. The PRESTO materials have been shown to be more challenging to recognize than sentence materials with lower talker variability, such as the Hearing in Noise Test (HINT; Nilsson et al, 1994) and AzBio (Spahr et al, 2012) sentences, for both NH listeners and hearing-impaired listeners with CIs (Gilbert et al, 2013). In addition, PRESTO materials yield large individual differences in performance, which have been found to be related to several neurocognitive skills (Tamati et al, 2013; Moberly et al, 2018). Taken together, these earlier studies suggest that high-variability speech recognition cannot be attributed to peripheral hearing acuity or audibility alone and instead reflects complex interactions of auditory sensitivity and neurocognitive processes.

A discriminant function analysis was carried out to determine the extent to which measures of auditory sensitivity, specifically auditory spectral resolution, and neurocognitive functioning discriminate the high- versus low-performing CI users on PRESTO. Auditory spectral resolution was measured with a behavioral test of spectral resolution, specifically, the Spectral-Temporally Modulated Ripple Test (SMRT; Aronoff and Landsberger, 2013). Several neurocognitive functions were measured, including working memory, inhibitory control, verbal learning and memory, lexical/phonological processing speed, and a global measure of nonverbal reasoning. Based on previous studies, we predicted that spectral resolution would discriminate the high- and low-performing groups. More specifically, if spectral resolution primarily determines whether a CI user will achieve a high or low level of speech recognition in adverse conditions, then the contribution of spectral resolution to the discriminant function should be the greatest among the measures. For the neurocognitive skills, we anticipated two possible outcomes. If poor spectral resolution and weak neurocognitive functioning combine to result in extremely low performance (and good spectral resolution and strong cognitive skills combine for high performance), then neurocognitive skills would be expected to help discriminate the groups. Alternatively, if low performers receive such a poor signal that they cannot benefit from neurocognitive skills, regardless of their respective individual abilities, then measures of neurocognitive functioning may not contribute to the discriminant function. Thus, spectral resolution was expected to be the most useful primary factor in discriminating the groups, with a potential secondary contribution of neurocognitive functioning.

METHODS

Participants

Data from 21 postlingually deafened adult CI users were included in the present study. All of these CI users had participated in a larger study on speech, language, and neurocognitive skills, which, at the time of the present study, included 44 postlingually deafened adult CI users (Moberly et al, 2017; Kramer et al, 2018; Moberly et al, 2018). Of the 21 CI users in the present study, 11 scored within the upper quartile (HiPRESTO) and 10 within the lower quartile (LoPRESTO) of PRESTO scores in this larger group of 44 CI users (which overlaps with participant groups reported in earlier studies), after adjusting for those who did not or could not complete the SMRT (n = 4). The 11 HiPRESTO CI users included 5 female and 6 male participants, aged 45–70 years (M = 60; standard deviation [SD] = 8), and the 10 LoPRESTO CI users included 4 female and 6 male participants, aged 52–81 years (M = 65; SD = 10).

All participants were native English speakers with at least a high school diploma or equivalent, relatively normal general language proficiency (word reading scores within two SD of the normative mean on the Wide Range Achievement Test; Wilkinson and Robertson, 2006), and no evidence of significant cognitive impairment on a written version of the Mini-Mental State Examination (Folstein et al, 1975). Additional demographic information for the CI participants is provided in Table 1. The 11 HiPRESTO CI users included five bilateral and six unilateral users (five with contralateral hearing aid use), and the 10 LoPRESTO CI users included two bilateral and eight unilateral users (four with contralateral hearing aid use). The HiPRESTO CI users were on average 53.7 (SD = 9.1) years old at their first CI, with a total duration of hearing loss (from age of initial hearing loss to present) of 32.8 (SD = 14.8) years, whereas the LoPRESTO CI users were on average 59.9 (SD = 11.7) years old at their first CI, with a total duration of hearing loss of 39.5 (SD = 19.2) years. Socioeconomic status (SES) was assessed using a measure developed by Nittrouer and Burton (2005), which incorporates occupational and educational levels; higher scores indicate higher SES (possible range: 1–64). The HiPRESTO CI users scored on average 26.7 (SD = 8.8) on this measure, whereas the LoPRESTO CI users scored on average 28.7 (SD = 13.7). As Table 1 shows, the HiPRESTO and LoPRESTO groups had similar mean demographic characteristics, but with large individual differences within both groups.

Table 1.

Participant Demographics and Group Means (SD)

| Group | Participant Number | Gender | Age (Years) | Age of First CI (Years) | SES (out of 64) | Device | Side of Implant | Current Contralateral Hearing Aid Use | Etiology of Hearing Loss | Duration of Hearing Loss (Years) | Better Ear PTA (dB HL) | PRESTO Score (% Correct Keywords) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| HiPRESTO | 1 | M | 61 | 55 | 24 | Cochlear | Right | Yes | Unknown | 16 | 111.25 | 76.61 |
| HiPRESTO | 2 | M | 70 | 68 | 42 | Cochlear | Left | Yes | Unknown, progressive loss as adult | 30 | 93.75 | 76.61 |
| HiPRESTO | 3 | F | 67 | 58 | 9 | Cochlear | Bilateral | No | Genetic, Meniere’s disease | 31 | 120.00 | 79.03 |
| HiPRESTO | 4 | M | 55 | 50 | 30 | Cochlear | Bilateral | No | Unknown, progressive loss as an adult | 34 | 120.00 | 79.84 |
| HiPRESTO | 5 | M | 66 | 60 | 18 | Cochlear | Left | No | Meniere’s disease | 52 | 80.00 | 81.25 |
| HiPRESTO | 6 | F | 57 | 48 | 25 | Cochlear | Right | Yes | Genetic, progressive loss as child/adult | 50 | 82.50 | 81.45 |
| HiPRESTO | 7 | M | 59 | 57 | 24 | Cochlear | Bilateral | No | Sudden loss | 4 | 120.00 | 84.38 |
| HiPRESTO | 8 | F | 45 | 44 | 30 | Advanced Bionics | Right | Yes | Unknown, progressive loss as child | 39 | 88.75 | 84.68 |
| HiPRESTO | 9 | F | 65 | 54 | 24 | Cochlear | Bilateral | No | Genetic | 24 | 120.00 | 86.61 |
| HiPRESTO | 10 | F | 66 | 62 | 35 | Cochlear | Right | Yes | Genetic, progressive loss as adult, noise exposure, sudden loss in the right ear | 31 | 78.75 | 88.84 |
| HiPRESTO | 11 | F | 50 | 35 | 33 |  | Bilateral | No | Unknown, progressive loss as child | 50 | 117.50 | 95.16 |
| HiPRESTO Mean (SD) |  |  | 60.1 (7.8) | 53.7 (9.1) | 26.7 (8.8) |  |  |  |  | 32.8 (14.8) | 102.95 (18.06) | 83.1 (5.6) |
| LoPRESTO | 1 | M | 76 | 74 | 35 | Cochlear | Left | Yes | Ototoxicity (amikacin) | 53 | 96.25 | 4.02 |
| LoPRESTO | 2 | F | 52 | 46 | 42 | Cochlear | Right | Yes | Unknown, born with HL | 52 | 113.75 | 22.58 |
| LoPRESTO | 3 | M | 60 | 52 | 36 | Cochlear | Bilateral | No | Measles | 54 | 105.00 | 25.81 |
| LoPRESTO | 4 | M | 59 | 54 | 6 | Cochlear | Right | No | Genetic | 44 | 108.75 | 29.84 |
| LoPRESTO | 5 | F | 55 | 44 | 15 | Cochlear | Left | No | Sudden, otosclerosis, progressive as adult | 30 | 120.00 | 32.26 |
| LoPRESTO | 6 | M | 81 | 80 | 49 | Cochlear | Left | Yes | Unknown, progressive as adult | 13 | 75.00 | 33.06 |
| LoPRESTO | 7 | F | 64 | 59 | 24 | Cochlear | Left | Yes | Unknown, progressive as child | 55 | 95.00 | 33.87 |
| LoPRESTO | 8 | F | 62 | 59 | 14 | Cochlear | Bilateral | No | Sepsis, ototoxicity | 4 | 95.00 | 34.68 |
| LoPRESTO | 9 | F | 76 | 68 | 30 | Cochlear | Left | No | Progressive loss as adult, probable autoimmune | 31 | 108.75 | 37.05 |
| LoPRESTO | 10 | F | 65 | 63 | 36 | Cochlear | Right | No | Genetic, progressive as adult | 59 | 86.25 | 41.94 |
| LoPRESTO Mean (SD) |  |  | 65.0 (9.6) | 59.9 (11.7) | 28.7 (13.7) |  |  |  |  | 39.5 (19.2) | 100.38 (13.51) | 29.5 (10.5) |

F = female; M = male; PTA = pure-tone average.

Participants wore their typical hearing prostheses (both CIs for bilateral CI participants, or the CI plus any contralateral hearing aid for bimodal CI participants) during all testing except the unaided audiogram. Testing with typical hearing prostheses was carried out to obtain more ecologically valid measures of sentence recognition and auditory functioning, that is, scores reflecting how much linguistic and auditory information the listener could reasonably be expected to receive and process in a quiet listening environment. All participants provided informed written consent before participation and received $15 per hour for participation. The local institutional review board approved the study protocol, and all testing took place at The Ohio State University.

Measures and Procedures

All measures were collected as part of a larger study on speech, language, and cognitive skills in postlingually deafened adult CI users. Testing was carried out at the Eye and Ear Institute of The Ohio State University Wexner Medical Center in sound-proof booths and acoustically insulated rooms. Visual stimuli were presented on paper or on a touch screen monitor (Keytec, Inc, Richardson, TX) placed two feet in front of the participant. Auditory stimuli were presented at 68 dB SPL via a Roland MA-12C loudspeaker (Roland Corp, Los Angeles, CA) placed 1 m from the participant at 0° azimuth. Responses were audio-visually recorded with a Sony HDR-PH260 High Definition Handycam (Sony Corp, Tokyo, Japan) for later offline scoring. The measures used in the present study are described in the following paragraphs. For detailed information on the general approach, materials, and collection procedures, see Moberly et al (2017), Kramer et al (2018), and Moberly et al (2018).

Sentence Recognition

To determine group membership, PRESTO sentences (Gilbert et al, 2013) were used to assess high-variability sentence recognition among the CI users. The PRESTO sentences were originally selected from the Texas Instruments/Massachusetts Institute of Technology Speech Corpus (TIMIT; Garofolo et al, 1993). PRESTO maximizes talker variability by including sentences produced by multiple male and female talkers with different regional accents. The original PRESTO sentence lists were balanced for talker gender, keyword frequency, and keyword familiarity, with no repeated talkers. For the present study, participants were asked to repeat 32 sentences, the first 2 of which served as practice; scores were the percentage of keywords correct across the final 30 sentences. As discussed earlier, quartile scores from a larger distribution of 44 CI users were used to define the HiPRESTO and LoPRESTO groups. For the purposes of the present study, five participants were removed before analysis because of unavailable PRESTO keyword scores (n = 1) or SMRT scores (n = 4). Overall, the remaining 39 CI users had a mean score of 57.8% (SD = 21.7) keywords correct on PRESTO. The 11 HiPRESTO CI users had a mean score of 83.1% (SD = 5.6) keywords correct, whereas the 10 LoPRESTO CI users had a mean score of 29.5% (SD = 10.5) keywords correct.
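The scoring and group-assignment steps can be sketched as follows. This is a minimal illustration, not the study's scoring script: the helper names are hypothetical, the keyword counts and scores are invented, and the quartile definition (Python's default `statistics.quantiles`, which uses the "exclusive" method) is an assumption, because the text does not state which percentile method was used.

```python
import statistics


def percent_keywords_correct(sentence_results, n_practice=2):
    """Percent keywords correct, excluding the first n_practice (practice) sentences.

    sentence_results: list of (keywords_correct, keywords_total) tuples in
    presentation order. Hypothetical helper for illustration only.
    """
    scored = sentence_results[n_practice:]
    correct = sum(c for c, _ in scored)
    total = sum(t for _, t in scored)
    return 100.0 * correct / total


def assign_extreme_groups(scores):
    """Label each score 'HiPRESTO' (>= Q3), 'LoPRESTO' (<= Q1), or None (middle half).

    Assumption: quartile cut points from statistics.quantiles(..., n=4),
    whose default method is 'exclusive'.
    """
    q1, _median, q3 = statistics.quantiles(scores, n=4)
    labels = []
    for s in scores:
        if s >= q3:
            labels.append("HiPRESTO")
        elif s <= q1:
            labels.append("LoPRESTO")
        else:
            labels.append(None)  # middle two quartiles are not analyzed
    return labels


# Invented data, for illustration only.
print(percent_keywords_correct([(0, 4), (0, 4), (3, 4), (1, 4)]))
example_scores = [12.0, 25.5, 31.0, 40.2, 48.9, 55.0, 60.3, 66.7, 72.1, 80.4, 85.0, 91.2]
print(assign_extreme_groups(example_scores))
```

Different quartile definitions can move a participant who lies exactly at a cut point into or out of an extreme group, so the choice of method matters most for borderline scores.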

Spectral Resolution

The CI users’ spectral resolution was assessed using the SMRT (Aronoff and Landsberger, 2013). The stimuli consisted of 202 pure-tone frequency components whose amplitudes were spectrally modulated by a sine wave. The SMRT was carried out as a three-interval, two-alternative forced-choice task in which two of the intervals contained a reference signal with 20 ripples per octave (RPO) and one contained the target signal; listeners selected the deviant (target) signal. The target signal, initially set at 0.5 RPO, was varied using a one-up/one-down adaptive procedure with a step size of 0.2 RPO. A ripple detection threshold was calculated from the last six reversals of each run, with the first three runs discarded as practice. A higher threshold (in RPO) represents better spectral resolution. For more details on the task, see Aronoff and Landsberger (2013).
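The one-up/one-down adaptive track described above can be simulated as in the following sketch. Only the start value (0.5 RPO), the step size (0.2 RPO), and the six-reversal average come from the text. The listener is a hypothetical, noiseless model that detects the target whenever its ripple density is below a fixed "true" threshold, and the stopping rule (10 reversals), trial cap, and lower floor on RPO are illustrative assumptions rather than reported SMRT parameters.

```python
def run_staircase(listener, start=0.5, step=0.2, n_reversals=10, n_average=6, max_trials=500):
    """One-up/one-down adaptive track on target ripple density (RPO).

    listener(rpo) -> True if the target interval was chosen correctly.
    A correct response raises the target RPO (harder: closer to the 20-RPO
    reference); an incorrect response lowers it. Returns the mean RPO of the
    last n_average reversals. Assumed (not from the study): the stopping rule
    n_reversals, the max_trials cap, and the floor of one step.
    """
    rpo = start
    prev_direction = None
    reversals = []
    for _ in range(max_trials):
        direction = 1 if listener(rpo) else -1
        if prev_direction is not None and direction != prev_direction:
            reversals.append(rpo)  # the track changed direction at this level
            if len(reversals) >= n_reversals:
                break
        prev_direction = direction
        # Round to suppress floating-point drift from repeated 0.2 steps.
        rpo = max(step, round(rpo + direction * step, 6))
    if len(reversals) < n_average:
        raise RuntimeError("not enough reversals to estimate a threshold")
    last = reversals[-n_average:]
    return sum(last) / len(last)


def make_idealized_listener(true_threshold):
    """Hypothetical noiseless listener: correct whenever rpo < true_threshold."""
    return lambda rpo: rpo < true_threshold


print(run_staircase(make_idealized_listener(2.0)))
```

With this idealized listener the track oscillates between 1.9 and 2.1 RPO, so the estimate is about 2.0 RPO. A real listener responds probabilistically, and a one-up/one-down rule then tracks the ripple density at which the target is chosen correctly about half of the time.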

Neurocognitive Functioning

Working Memory Capacity: Working memory capacity was assessed using a computerized version of a visual digit span task, based on the original auditory digit span task from the Wechsler Intelligence Scale for Children, Fourth Edition, Integrated (Wechsler, 2004). Participants were presented visually with lists of 2 to 7 digits and were instructed to reproduce each list in the same order by touching the digits in a 3 × 3 matrix on the touch screen. The score was the total number of correct items, which was used in the final data analysis.

Inhibitory Control: A computerized Stroop test (http://millisecond.com), based on the original version by Stroop (1935), was used to assess inhibitory control. On each trial, a color word was presented on the computer screen in a font color that either matched or did not match the named color, and the participant was asked to press the key corresponding to the font color, not to the name of the color word. Response times were calculated for both congruent trials (matching color word and font color) and incongruent trials (mismatching color word and font color). An interference score was calculated by subtracting the mean response time of the congruent trials from the mean response time of the incongruent trials. Response times from the congruent trials and interference scores were used for analysis as measures of processing speed and inhibitory control, respectively.
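The two Stroop scores can be computed per participant as in this minimal sketch. The helper name and response times are hypothetical, and because the text does not say whether error trials or outlying response times were excluded, the sketch simply uses all trials supplied.

```python
from statistics import mean


def stroop_scores(congruent_rts, incongruent_rts):
    """Return (control_rt, interference) in milliseconds.

    control_rt: mean RT on congruent trials (used as a processing-speed measure).
    interference: mean incongruent RT minus mean congruent RT (inhibitory
    control; larger values mean more interference).
    Assumption: all trials are included; no error or outlier trimming.
    """
    control_rt = mean(congruent_rts)
    interference = mean(incongruent_rts) - control_rt
    return control_rt, interference


# Hypothetical response times (ms) for one participant.
print(stroop_scores([1000, 1100, 1200], [1250, 1300, 1350]))  # (1100, 200)
```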

Verbal Learning and Memory: The California Verbal Learning Test, Version II (CVLT; Delis et al, 2000), was used to assess verbal learning and memory, using a visual version created and validated in adult CI users by Pisoni et al (2018). Participants first saw a list of 16 familiar words from four semantic categories (List A), presented one at a time on the computer screen. List A was presented in the same order five times, and after each presentation participants recalled as many words as possible, as a measure of repetition learning and free recall. The fifth presentation of List A was followed by an interference list of 16 new words from four semantic categories (List B). Participants recalled the List B words as a measure of proactive interference. After the presentation and recall of List B, participants recalled List A again, as a measure of retroactive interference from List B. Finally, after all recall trials, a yes/no recognition memory test with the List A items was administered to assess storage of items without retrieval demands. For the present study, three measures of CVLT performance were used for analysis: CVLT T1/T5 (based on the total words correctly recalled on the first and fifth trials of List A), List B (total words correctly recalled from the List B presentation), and CVLT Y/N recognition discriminability (hits versus total false positives in the recognition memory test). (CVLT scores for one LoPRESTO CI user were not available; to carry out the discriminant analysis, group averages for the three CVLT scores were used for this participant.)
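The group-mean substitution used for the participant with missing CVLT scores can be sketched as follows. The helper and the values are hypothetical, and the sketch assumes that "group averages" means the mean of the participant's own group (here, LoPRESTO), computed from the participants whose scores were available.

```python
def impute_group_means(rows, groups):
    """Replace missing values (None) with the column mean of the row's own group.

    rows: list of score lists (e.g., [T1/T5, List B, Y/N]); groups: group label per row.
    Assumption: means are computed only from non-missing values in that group.
    """
    n_cols = len(rows[0])
    group_means = {}
    for g in set(groups):
        members = [r for r, rg in zip(rows, groups) if rg == g]
        col_means = []
        for j in range(n_cols):
            vals = [r[j] for r in members if r[j] is not None]
            col_means.append(sum(vals) / len(vals) if vals else None)
        group_means[g] = col_means
    return [[group_means[g][j] if v is None else v for j, v in enumerate(r)]
            for r, g in zip(rows, groups)]


# Made-up CVLT-style scores: [T1/T5, List B, Y/N discriminability].
rows = [[50, 30, 3.0], [None, None, None], [52, 28, 2.8], [48, 40, 3.3]]
groups = ["LoPRESTO", "LoPRESTO", "LoPRESTO", "HiPRESTO"]
print(impute_group_means(rows, groups))
```

Group-mean substitution keeps the participant in the analysis but pulls that case toward its group centroid, which can slightly favor correct classification of that case.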

Lexical/Phonological Processing Speed: Lexical/phonological processing speed was assessed with the Test of Word Reading Efficiency, Version 2 (TOWRE; Torgesen et al, 1999). The TOWRE is a measure of word reading accuracy and fluency that assesses the participant’s ability to accurately recognize familiar real words and to phonologically decode nonwords. In separate subtests, participants read aloud as many real words as possible from a 108-word list and as many nonwords as possible from a 66-nonword list, each within 45 seconds. The two scores used in the present study were calculated based on the number of whole words and the number of whole nonwords correctly read aloud.

Nonverbal Reasoning: The Raven’s Progressive Matrices test was used to measure nonverbal intelligence or abstract visuospatial reasoning (Raven, 2000). Participants were presented with incomplete visual patterns on a touch screen monitor and were asked to complete the visual pattern by selecting the best option from a closed set of alternatives. Participants completed as many items as possible in ten minutes; scores were number of correct items.

Statistical Analysis

The aim of the present study was to explore group differences between low-performing and high-performing CI users on high-variability sentence recognition assessed with PRESTO. First, means and SDs for the HiPRESTO and LoPRESTO groups were calculated for each measure, and an independent samples t-test was carried out to confirm group differences in PRESTO scores. Second, Pearson correlations were calculated to examine the relations among the measures to be used in the discriminant analysis. Third, a discriminant function analysis was carried out to determine the extent to which the measures of spectral resolution and neurocognitive functioning could discriminate LoPRESTO and HiPRESTO CI users. Discriminant function analysis uses a linear combination of the independent (predictor) variables to separate the groups defined by the dependent variable, here HiPRESTO versus LoPRESTO, and indicates which predictors contribute most to the observed between-group differences.
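The two-group discriminant function and the leave-one-out check can be sketched with NumPy as below. This is an illustrative re-implementation, not the SPSS procedure used in the study: the helper names are hypothetical, prior probabilities proportional to group sizes are an assumption (equal priors are a common alternative), and the toy data are invented. In the study, `X` would presumably hold the 10 measures listed in Table 3 for the 21 participants.

```python
import numpy as np


def fit_lda(X, y, priors=None):
    """Fit a two-group linear discriminant with a pooled within-group covariance.

    X: (n, p) predictors; y: (n,) labels coded 0/1.
    Returns (w, b); a case is assigned to group 1 when x @ w + b > 0.
    Assumption: priors default to the group proportions in the fitted data.
    """
    X0, X1 = X[y == 0], X[y == 1]
    m0, m1 = X0.mean(axis=0), X1.mean(axis=0)
    n0, n1 = len(X0), len(X1)
    # Pooled within-group covariance matrix (divisor n - 2).
    S = ((X0 - m0).T @ (X0 - m0) + (X1 - m1).T @ (X1 - m1)) / (n0 + n1 - 2)
    w = np.linalg.solve(S, m1 - m0)  # discriminant weights S^-1 (m1 - m0)
    if priors is None:
        priors = (n0 / (n0 + n1), n1 / (n0 + n1))
    # Cut point midway between the group means, shifted by the log prior ratio.
    b = -(w @ (m0 + m1)) / 2 + np.log(priors[1] / priors[0])
    return w, b


def classify(X, w, b):
    """Predicted 0/1 labels for the rows of X."""
    return (X @ w + b > 0).astype(int)


def discriminant_stats(X, y, w):
    """Eigenvalue, Wilks' lambda, and canonical correlation of the function."""
    z = X @ w  # discriminant scores
    grand = z.mean()
    ss_between = sum(len(z[y == g]) * (z[y == g].mean() - grand) ** 2 for g in (0, 1))
    ss_within = sum(((z[y == g] - z[y == g].mean()) ** 2).sum() for g in (0, 1))
    eigenvalue = ss_between / ss_within
    wilks = ss_within / (ss_within + ss_between)
    canonical_r = np.sqrt(ss_between / (ss_within + ss_between))
    return eigenvalue, wilks, canonical_r


def loo_accuracy(X, y):
    """Leave-one-out: refit without case i, then classify case i."""
    hits = 0
    for i in range(len(y)):
        keep = np.arange(len(y)) != i
        w, b = fit_lda(X[keep], y[keep])
        hits += int(classify(X[i:i + 1], w, b)[0] == y[i])
    return hits / len(y)


# Hypothetical, well-separated toy data (NOT the study's data):
# two predictors, five cases per group.
X = np.array([[0, 0], [1, 0], [0, 1], [1, 1], [0.5, 0.2],
              [5, 5], [6, 5], [5, 6], [6, 6], [5.5, 5.3]], dtype=float)
y = np.array([0] * 5 + [1] * 5)

w, b = fit_lda(X, y)
print("resubstitution accuracy:", (classify(X, w, b) == y).mean())
print("leave-one-out accuracy:", loo_accuracy(X, y))
print("eigenvalue, Wilks' lambda, canonical r:", discriminant_stats(X, y, w))
```

Comparing resubstitution accuracy with leave-one-out accuracy shows how much of the apparent classification accuracy depends on each case having been used to derive the function, which is why the cross-validated figure is the more conservative one for a small sample.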

RESULTS

Means and SDs for each measure are displayed in Table 2. An independent samples t-test showed that the difference in overall PRESTO performance between the HiPRESTO and LoPRESTO groups was significant [t(19) = 14.83, p < 0.001], confirming the group selection criteria.
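As a check on the group comparison, Student's pooled-variance t statistic can be recomputed from the individual PRESTO scores listed in Table 1. The sketch assumes equal variances, which gives df = 11 + 10 − 2 = 19, matching the reported degrees of freedom. The helper name is hypothetical.

```python
from math import sqrt
from statistics import mean, variance


def pooled_t(a, b):
    """Student's independent-samples t (equal variances assumed) and its df."""
    na, nb = len(a), len(b)
    # Pooled variance from the two sample variances (n - 1 denominators).
    sp2 = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2)
    t = (mean(a) - mean(b)) / sqrt(sp2 * (1 / na + 1 / nb))
    return t, na + nb - 2


# PRESTO scores (% keywords correct) from Table 1.
hi = [76.61, 76.61, 79.03, 79.84, 81.25, 81.45, 84.38, 84.68, 86.61, 88.84, 95.16]
lo = [4.02, 22.58, 25.81, 29.84, 32.26, 33.06, 33.87, 34.68, 37.05, 41.94]
t, df = pooled_t(hi, lo)
print(f"t({df}) = {t:.2f}")  # t(19) = 14.83
```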

Table 2.

Mean (SD) Scores for LoPRESTO and HiPRESTO Groups

| Measure | LoPRESTO (n = 10) | HiPRESTO (n = 11) |
|---|---|---|
| Sentence recognition |  |  |
| PRESTO keywords correct (%) | 29.5 (10.5) | 83.1 (5.6) |
| Neurocognitive functioning |  |  |
| Working memory capacity |  |  |
| Digit span (# correct) | 47.5 (20.9) | 47.3 (20.6) |
| Information-processing speed and inhibitory control |  |  |
| Stroop control (congruent) condition (msec) | 1,148.9 (250) | 1,077.7 (245) |
| Stroop interference (msec) | 142.7 (293.0) | 194.0 (118.6) |
| Speed of phonological and lexical access |  |  |
| TOWRE nonwords (% nonwords correct) | 68.8 (11.9) | 76.8 (7.3) |
| TOWRE words (% words correct) | 72.0 (9.6) | 79.3 (5.7) |
| Verbal learning and memory |  |  |
| CVLT T1/T5 (# correct) | 50.9 (10.5) | 49.1 (10.5) |
| CVLT List B (# correct) | 29.3 (11.8) | 36.4 (12.1) |
| CVLT Y/N discriminability (hits vs. false positives) | 2.9 (0.66) | 3.2 (0.64) |
| Nonverbal reasoning |  |  |
| Raven’s (# correct) | 9.7 (3.6) | 14.7 (4.1) |
| Spectral resolution |  |  |
| SMRT (ripple-resolution threshold, RPO) | 1.1 (0.4) | 3.6 (1.6) |

Correlational analyses were carried out to explore the relations between the measures, shown in Table 3. Overall, very few strong correlations emerged among the measures. The SMRT scores (spectral resolution) were correlated with the TOWRE word scores (r = 0.45, p = 0.039) and Raven’s scores (r = 0.72, p < 0.001). Raven’s scores were moderately correlated with the Stroop control scores (r = −0.52, p = 0.015). Finally, some significant relations emerged for the CVLT measures: CVLT T1/T5 scores were moderately correlated with CVLT List B scores (r = 0.56, p = 0.01) and CVLT Y/N recognition discriminability scores (r = 0.65, p = 0.002). Because the measures were not consistently and strongly related, all selected measures were used in the discriminant analysis.
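The correlations reported here are Pearson r values; each can be converted to a t statistic with df = n − 2 to test whether it differs from zero. A minimal sketch follows (hypothetical helper names). With n = 21, r = 0.45 gives t ≈ 2.20 on 19 df; the two-tailed p-value would then be obtained from the t distribution, for example as `2 * scipy.stats.t.sf(abs(t), df)`.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def r_to_t(r, n):
    """t statistic (df = n - 2) for testing H0: rho = 0."""
    return r * sqrt((n - 2) / (1 - r ** 2))


print(pearson_r([1, 2, 3], [2, 4, 6]))
print(round(r_to_t(0.45, 21), 2))
```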

Table 3.

Pearson Correlations between Measures Across All Participants

| Measure | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|
| 1. Digit span |  |  |  |  |  |  |  |  |  |
| 2. Stroop control | −0.03 |  |  |  |  |  |  |  |  |
| 3. Stroop interference | 0.03 | 0.11 |  |  |  |  |  |  |  |
| 4. TOWRE nonwords | 0.07 | −0.09 | 0.22 |  |  |  |  |  |  |
| 5. TOWRE words | −0.28 | 0.09 | 0.42 | 0.22 |  |  |  |  |  |
| 6. CVLT T1/T5 | 0.06 | −0.22 | −0.19 | 0.02 | −0.29 |  |  |  |  |
| 7. CVLT List B | 0.09 | −0.34 | −0.01 | 0.03 | 0.04 | 0.56 |  |  |  |
| 8. CVLT Y/N discriminability | 0.06 | −0.34 | 0.00 | 0.04 | −0.36 | 0.65 | 0.37 |  |  |
| 9. Raven’s | 0.18 | −0.52 | 0.19 | 0.32 | 0.30 | 0.16 | 0.23 | 0.44 |  |
| 10. SMRT | −0.18 | −0.40 | −0.01 | 0.18 | 0.45 | 0.18 | 0.28 | 0.39 | 0.72 |

Note: r values are reported. Statistically significant (<0.05) correlations are in bold.

Results of the discriminant analysis showed that the measures significantly discriminated the LoPRESTO and HiPRESTO groups, Wilks’ λ = 0.21, p = 0.014. The Wilks’ λ statistic (provided in SPSS) represents the proportion of the variance not explained by group differences; it ranges from 0.0 to 1.0, and lower values emerge when within-group variability is small compared with the total variability, indicating that the groups differ. The discriminant function had an eigenvalue of 3.92 and a canonical correlation of 0.89. The eigenvalue represents the discriminating ability of the function, with larger values indicating stronger discrimination; the canonical correlation represents the correlation between the discriminant scores and group membership, with values closer to 1.00 indicating stronger discrimination. Overall, 100% of the original cases were correctly classified, which is superior to random assignment based on prior group membership probabilities (Tabachnick and Fidell, 2001). Because classification accuracy can be inflated when sample sizes are small, leave-one-out cross-validation was also used. This method classifies each case using the function derived from all other cases and thus assesses how well the model generalizes. With cross-validation, 76.2% of the cases were correctly classified, suggesting that the model is relatively stable.
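The summary statistics above are linked. With two groups there is a single discriminant function, so Wilks’ λ = 1/(1 + eigenvalue) = 1 − R_c², where R_c is the canonical correlation. The sketch below applies these identities to the reported eigenvalue. It also computes one common chance benchmark, the proportional chance criterion (the sum of squared group proportions); treating this as the meaning of "random assignment based on prior probabilities" is our assumption. The reported eigenvalue gives λ ≈ 0.20 and R_c ≈ 0.89, and 1 − 0.89² ≈ 0.21, close to the reported λ. The cross-validated rate of 76.2% corresponds to 16 of the 21 cases.

```python
import math
from typing import Sequence


def wilks_lambda(eigenvalue: float) -> float:
    """Wilks' lambda for a single discriminant function: 1 / (1 + eigenvalue)."""
    return 1.0 / (1.0 + eigenvalue)


def canonical_correlation(eigenvalue: float) -> float:
    """Canonical correlation for a single function: sqrt(eigenvalue / (1 + eigenvalue))."""
    return math.sqrt(eigenvalue / (1.0 + eigenvalue))


def proportional_chance(group_sizes: Sequence[int]) -> float:
    """Expected accuracy when cases are assigned at random in proportion to group size."""
    total = sum(group_sizes)
    return sum((k / total) ** 2 for k in group_sizes)


EIGENVALUE = 3.92        # reported eigenvalue of the discriminant function
GROUP_SIZES = [11, 10]   # HiPRESTO, LoPRESTO

print(f"Wilks' lambda from eigenvalue: {wilks_lambda(EIGENVALUE):.2f}")
print(f"canonical correlation:         {canonical_correlation(EIGENVALUE):.2f}")
print(f"1 - 0.89**2:                   {1 - 0.89 ** 2:.2f}")
print(f"proportional chance accuracy:  {proportional_chance(GROUP_SIZES):.3f}")
print(f"cross-validated accuracy:      {16 / 21:.1%}")
```

Against a chance benchmark of roughly 50% for groups of 11 and 10, both the resubstitution (100%) and cross-validated (76.2%) accuracies are well above chance.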

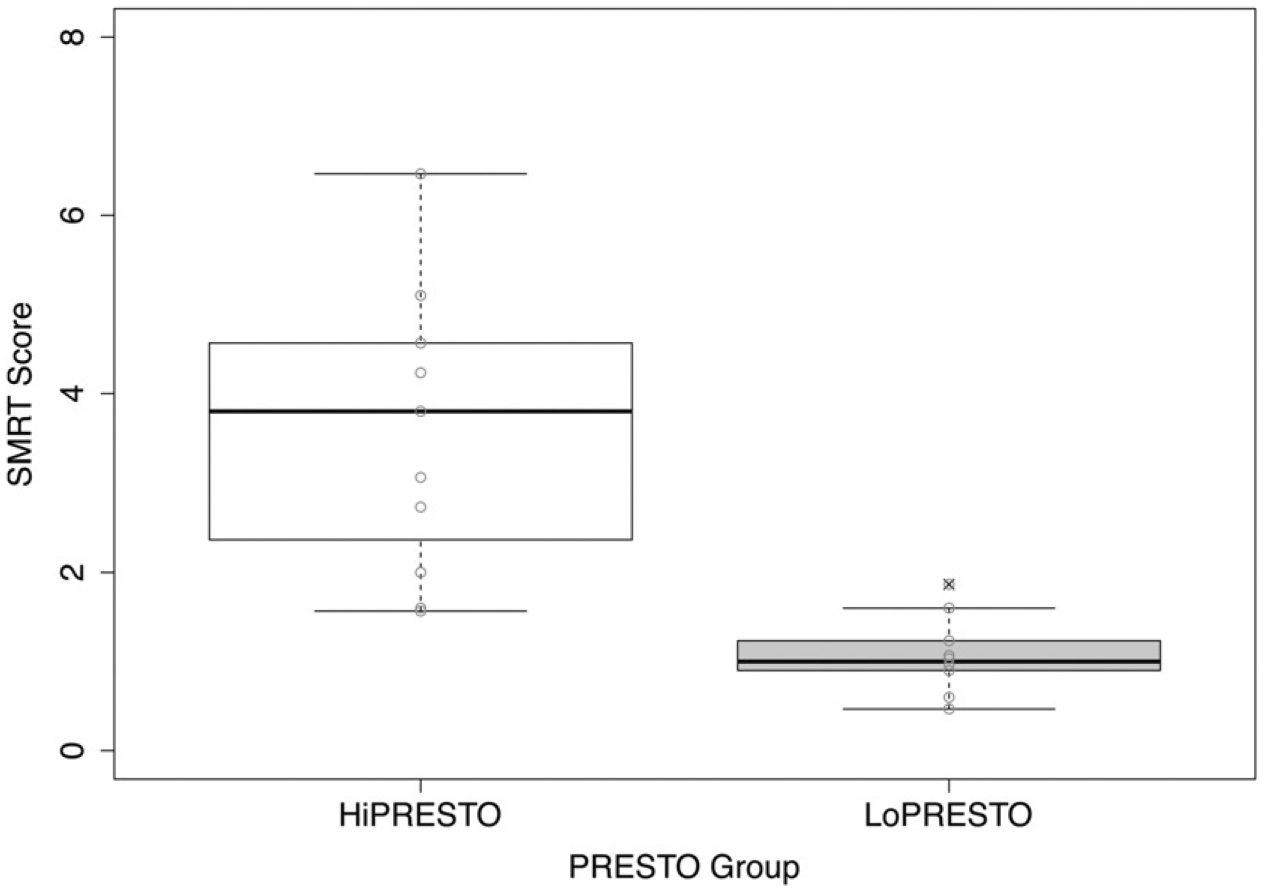

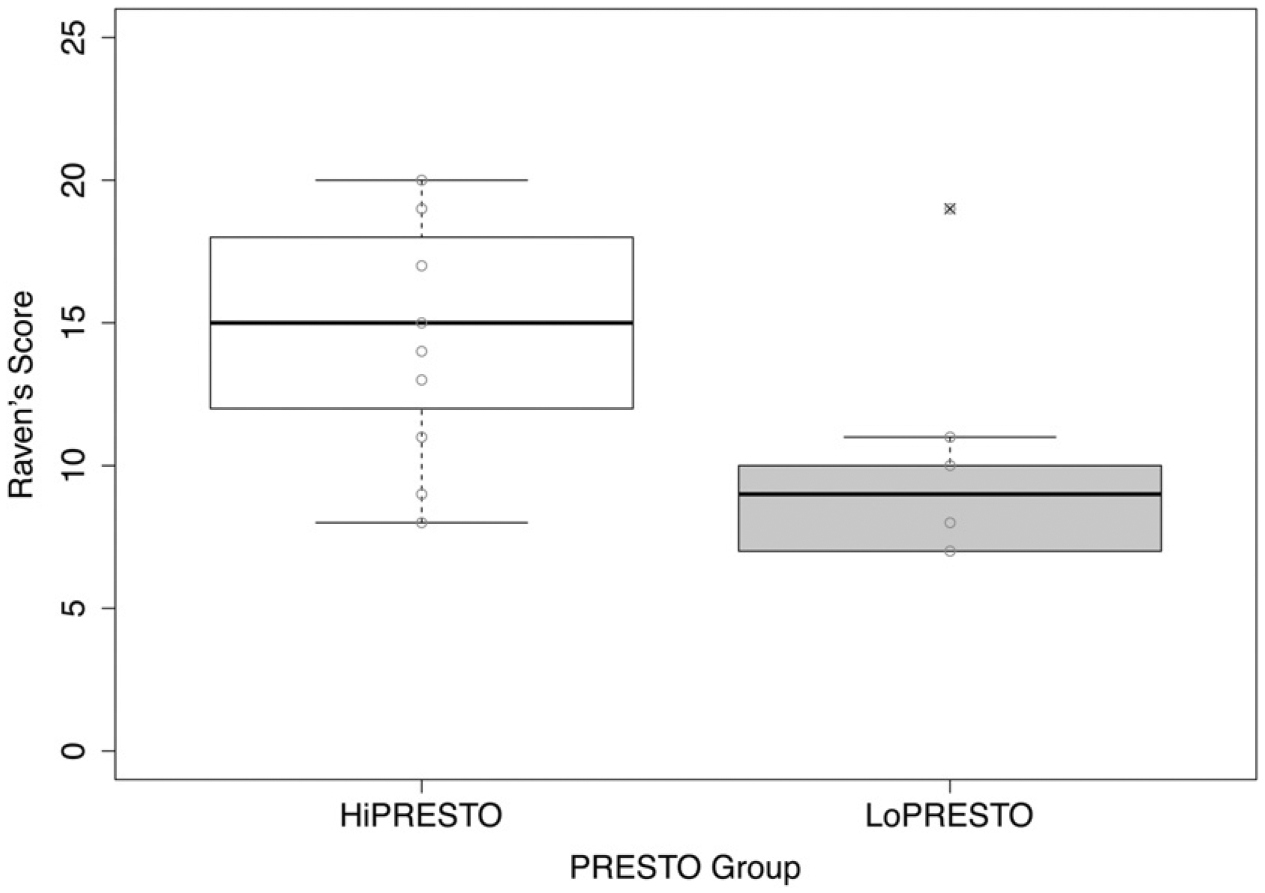

Structure matrix coefficients are listed in Table 4, indicating the relative importance of the measures in predicting group membership. Structure matrix coefficients, representing the correlation between independent variables and the discriminant function, are generally considered to be important if greater than |0.30|. The results indicate that LoPRESTO and HiPRESTO groups are primarily discriminated by SMRT scores (0.58) and Raven’s scores (0.35). Figures 1 and 2 show the SMRT and Raven’s scores, respectively, for HiPRESTO and LoPRESTO groups. Although other measures contribute to the discriminant function, they were relatively less important than these two primary measures to discriminate the LoPRESTO and HiPRESTO groups. Thus, the HiPRESTO group is characterized by high spectral resolution and stronger nonverbal reasoning skills.
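To make the difference between raw discriminant weights and structure coefficients concrete, the following sketch fits a two-group Fisher linear discriminant on invented two-predictor data. The names `LOW` and `HIGH` are hypothetical, and these are not the study's data. The sketch estimates leave-one-out accuracy and computes structure coefficients as pooled within-group correlations between each predictor and the discriminant scores, which, as far as we know, is how SPSS computes its structure matrix. Equal priors and a midpoint cut-off are simplifying assumptions; the classification rule used in the actual analysis may differ in detail.

```python
import math
from statistics import mean

# Hypothetical (spectral-resolution score, reasoning score) pairs; invented data.
LOW = [(1.0, 10), (1.2, 12), (0.8, 9), (1.1, 11), (0.9, 13)]
HIGH = [(3.0, 11), (3.4, 13), (2.8, 10), (3.2, 12), (3.1, 9)]


def project(w, p):
    """Discriminant score of case p under weights w."""
    return w[0] * p[0] + w[1] * p[1]


def pooled_covariance(groups):
    """Pooled within-group covariance, returned as (a, b, d) of [[a, b], [b, d]]."""
    sxx = sxy = syy = 0.0
    dof = 0
    for g in groups:
        mx = mean(p[0] for p in g)
        my = mean(p[1] for p in g)
        for x, y in g:
            sxx += (x - mx) ** 2
            sxy += (x - mx) * (y - my)
            syy += (y - my) ** 2
        dof += len(g) - 1
    return sxx / dof, sxy / dof, syy / dof


def fit_lda(low, high):
    """Fisher weights w = S^-1 (mean_high - mean_low) plus a midpoint cut-off."""
    a, b, d = pooled_covariance([low, high])
    det = a * d - b * b
    dx = mean(p[0] for p in high) - mean(p[0] for p in low)
    dy = mean(p[1] for p in high) - mean(p[1] for p in low)
    w = ((d * dx - b * dy) / det, (a * dy - b * dx) / det)
    cut = (mean(project(w, p) for p in low) + mean(project(w, p) for p in high)) / 2
    return w, cut


def loo_accuracy(low, high):
    """Refit without each case in turn, then classify the held-out case."""
    correct = 0
    for label, group in (("low", low), ("high", high)):
        for i, case in enumerate(group):
            rest = group[:i] + group[i + 1:]
            w, cut = fit_lda(rest, high) if label == "low" else fit_lda(low, rest)
            predicted = "high" if project(w, case) > cut else "low"
            correct += predicted == label
    return correct / (len(low) + len(high))


def structure_coefficients(low, high):
    """Pooled within-group correlation of each predictor with the discriminant scores."""
    w, _ = fit_lda(low, high)
    coefs = []
    for k in (0, 1):
        sxs = sxx = sss = 0.0
        for g in (low, high):
            xs = [p[k] for p in g]
            ss = [project(w, p) for p in g]
            mx, ms = mean(xs), mean(ss)
            sxs += sum((x - mx) * (s - ms) for x, s in zip(xs, ss))
            sxx += sum((x - mx) ** 2 for x in xs)
            sss += sum((s - ms) ** 2 for s in ss)
        coefs.append(sxs / math.sqrt(sxx * sss))
    return coefs


print(f"LOO accuracy: {loo_accuracy(LOW, HIGH):.0%}")
print("raw weights:", [round(v, 2) for v in fit_lda(LOW, HIGH)[0]])
print("structure coefficients:", [round(c, 2) for c in structure_coefficients(LOW, HIGH)])
```

With these invented numbers, the second predictor receives a nonzero raw weight (about −5.8) but a structure coefficient of essentially zero, because the two groups have identical means on it. The first predictor's structure coefficient is about 0.82. For two groups, structure coefficients are proportional to each predictor's standardized mean difference between groups. This is one reason they, rather than raw weights, are used to rank predictors, as in Table 4.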

Table 4.

Structure Matrix Coefficients

| Measure | Structure Matrix Coefficients | Rank |
|---|---|---|
| SMRT | 0.58 | 1 |
| Raven’s | 0.35 | 2 |
| TOWRE words | 0.25 | 3 |
| TOWRE nonwords | 0.22 | 4 |
| CVLT List B | 0.16 | 5 |
| CVLT Y/N discriminability | 0.12 | 6 |
| Stroop control | −0.08 | 7 |
| Stroop interference | 0.06 | 8 |
| CVLT T1/T5 | −0.04 | 9 |
| Digit span | 0.00 | 10 |

Figure 1.

SMRT scores for HiPRESTO and LoPRESTO groups. The boxes extend from the lower to the upper quartile (the interquartile range, IQR), and the midline indicates the median. The whiskers extend to the highest and lowest values lying within 1.5 times the IQR of the box edges. Light gray circles represent individual scores. Circles containing an “x” indicate outliers, that is, data points lying more than 1.5 times the IQR beyond the box edges.

Figure 2.

Same as Figure 1, except that Raven’s scores are shown for HiPRESTO and LoPRESTO groups.
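The box-plot convention in the figure captions (Tukey's 1.5 × IQR rule) can be sketched as follows. The quartile method used by the plotting software is not stated, so the `method="inclusive"` choice in `statistics.quantiles` is an assumption, and the input values are hypothetical.

```python
from statistics import median, quantiles


def tukey_boxplot(values):
    """Box-plot summary: quartiles, whiskers within 1.5 x IQR, and outliers beyond."""
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    low_fence, high_fence = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    inside = [v for v in values if low_fence <= v <= high_fence]
    return {
        "q1": q1,
        "median": median(values),
        "q3": q3,
        "whisker_low": min(inside),    # lowest value not beyond the lower fence
        "whisker_high": max(inside),   # highest value not beyond the upper fence
        "outliers": sorted(v for v in values if v < low_fence or v > high_fence),
    }


# Hypothetical scores, not the study's data.
print(tukey_boxplot([1, 2, 3, 4, 5, 6, 7, 8, 100]))
```

Here the quartiles are 3 and 7, so the fences lie at −3 and 13; the value 100 is drawn as an outlier and the upper whisker stops at 8.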

DISCUSSION

The goal of the present study was to determine whether high- and low-performing postlingually deafened adult CI users could be discriminated by measures of auditory spectral resolution and neurocognitive functioning. The discriminant function analysis, using a measure of spectral resolution and several measures of neurocognitive skills, demonstrated that spectral resolution, primarily, and nonverbal reasoning, secondarily, discriminated CI users with high and low performance on PRESTO, a high-variability sentence recognition test. High-performing users were characterized by better spectral resolution and stronger nonverbal reasoning, whereas low-performing users were generally characterized by poorer spectral resolution and weaker nonverbal reasoning. These results suggest that both spectral resolution and neurocognitive functioning are important for discriminating high- and low-performing CI users in speech recognition under conditions of high talker variability, with spectral resolution as the greatest contributor to group differences.

Our first hypothesis was that spectral resolution would contribute the most to discriminating the high and low performers on PRESTO. This hypothesis was supported: spectral resolution was the primary contributor to the discriminant function. HiPRESTO users obtained significantly higher (better) SMRT thresholds than LoPRESTO users, although the SMRT thresholds of the HiPRESTO group should be interpreted with caution, given the potential for spectral distortion of SMRT stimuli with high RPO values when presented through a CI processor (DiNino and Arenberg, 2018). The contribution of spectral resolution to speech recognition is well established. Studies using acoustic vocoder simulations of CI hearing have demonstrated that speech perception and recognition decline with decreasing spectral resolution (i.e., with a decreasing number of spectral channels) (e.g., Fu et al, 1998; Friesen et al, 2001). In addition, among CI users, individual differences in spectral resolution have been related to individual differences in vowel and consonant perception (Henry et al, 2005), word recognition (Won et al, 2010; Drennan et al, 2014), and sentence recognition (Moberly et al, 2018), especially in noise (Won et al, 2007). The role of spectral resolution may be amplified in conditions with high talker variability, because listeners are forced to rely primarily on bottom-up cues when perceptual normalization for talker and accent variability is difficult (Clopper, 2012). The present results replicate those previous findings, suggesting that spectral resolution is important in accounting for individual differences in CI speech recognition. The present study extends previous findings by demonstrating that spectral resolution is particularly important for distinguishing high and low performance in speech recognition in adverse conditions with high talker variability.
However, it remains unclear whether the relative importance of spectral resolution for discriminating the high- and low-performing groups depends on the use of a high-variability sentence recognition test. Further research should examine how auditory sensitivity to the spectral and temporal properties of speech contributes to speech recognition under different adverse listening conditions.

Regarding neurocognitive functioning, we predicted that neurocognitive skills might not discriminate the high- and low-performing groups if the signal were so degraded for the low-performing CI users that they could not benefit from compensatory top-down skills. However, if neurocognitive functioning still plays a role even with poor spectral resolution, such that the two combine to produce either extremely low or extremely high performance, then neurocognitive skills would be expected to help discriminate the groups. Our results showed that neurocognitive abilities also contributed to the discriminant function, supporting the second hypothesis. In particular, nonverbal reasoning, as measured by the Raven’s Progressive Matrices test, helped discriminate the low- and high-performing CI users. Note, however, that because the focus of the present study was to determine whether auditory spectral resolution and neurocognitive functioning discriminate high and low performers on PRESTO, rather than to explain individual differences, scores from the neurocognitive tests were not adjusted for demographic factors such as age, SES, and gender. Given possible associations between demographic factors and cognitive skills (e.g., Kramer et al, 2018), some of the differences between groups could be attributable to these factors. Moreover, had normalized scores been used, the discriminant function might have had a weaker discriminating ability.

Nonverbal reasoning has not consistently emerged as a significant predictor of speech recognition in CI users (e.g., Akeroyd, 2008; but see Knutson et al, 1991; Holden et al, 2013). Moberly et al (2018) found that the strongest neurocognitive predictor of speech recognition among a similar group of CI users was nonverbal reasoning, measured with the same Raven’s Progressive Matrices test as in the present study. In both studies, a timed version of the Raven’s test was used: participants had to complete as many items as possible within ten minutes. Thus, this measure may also incorporate processing speed, beyond nonverbal reasoning. If this were the case, we would expect scores on the Raven’s test to be related to other neurocognitive measures involved in processing speech, including the timed Stroop and TOWRE measures. Scores on the Raven’s test showed moderate, but not significant, correlations with the TOWRE measures (r = 0.32 for nonwords and r = 0.30 for words) and a stronger, significant correlation with the Stroop control measure from congruent trials, which involves processing speed (r = −0.52), in the predicted negative direction (slower Stroop responses associated with fewer correct Raven’s items). Although all of these tasks may involve processing speed to some extent, the group means did not differ substantially, and no strong relation between these measures and the discriminant function emerged. The lack of a relation between the discriminant function and specific processing operations related to speech recognition, such as working memory (see Akeroyd, 2008), may be explained by the focus on the extreme groups. The LoPRESTO group may be receiving such a poor signal that these specific cognitive domains, which may account for some variability among a larger distribution of CI users with better spectral resolution, are not engaged in supporting speech processing in this group.
By contrast, domain-general nonverbal reasoning as measured by the Raven’s test, which reflects the ability to reason and solve novel problems, may underlie performance across all the auditory tasks in the present study, including the SMRT. Performance on the SMRT and the Raven’s test was also strongly correlated in this group (r = 0.72), as shown in Table 3. In addition to procedural commonalities in task performance, performance on the SMRT and the Raven’s test may be influenced by the same underlying mechanisms; specifically, long durations of deafness without substantial auditory input may contribute to both poorer spectral resolution and weaker nonverbal reasoning. However, given that the HiPRESTO and LoPRESTO groups did not vary greatly in duration of deafness or other typical demographic factors (see Table 1), the factor(s) underlying the relation between SMRT and nonverbal reasoning remain unclear. Alternatively, because the present study involved a specific group of experienced adult postlingually deafened CI users, the observed relations among spectral resolution, nonverbal reasoning, and PRESTO performance may not be representative of the larger, more diverse population of adult CI users with varying degrees of experience with their CIs. Nonetheless, given that the present study was designed to test broadly whether both auditory sensitivity and neurocognitive skills contribute to group differences among high- and low-performing CI users, and not to identify which specific domains do so, the results suggest that, in addition to spectral resolution, a domain-general global measure of nonverbal intelligence discriminates higher and lower performance on speech recognition in adverse conditions.

Regardless of the specific neurocognitive skills involved and how they may be related to demographic factors, the results suggest that neurocognitive functioning contributes to low and high performance together with auditory spectral resolution. To better understand the contribution of these factors, we examined individual performance on the spectral resolution and nonverbal reasoning measures in more detail. The HiPRESTO group seemed to benefit from both better spectral resolution and stronger nonverbal reasoning. Of the HiPRESTO CI users, 8/11 ranked in the top 11 in spectral resolution scores, with 3/11 falling in the middle of the overall distribution. Similarly, 8/11 ranked in the top 11 in nonverbal reasoning scores, with 3/11 falling in the middle of the overall distribution. The LoPRESTO group was more variable, at least in nonverbal reasoning. For spectral resolution, 7/10 low-performing CI users also ranked in the bottom 10, with 3/10 falling in the middle of the overall distribution. For nonverbal reasoning, however, only 2/10 LoPRESTO CI users ranked in the bottom 10, with 7/10 in the middle and 1/10 in the top of the overall distribution. Interestingly, the LoPRESTO CI user with strong nonverbal reasoning had one of the worst spectral resolution scores (0.60 on the SMRT). Thus, the contributory role of neurocognitive functioning to speech recognition among the low performers may be relatively limited and constrained by the amount of useful acoustic-phonetic information that the CI provides for higher levels of processing.

With reduced sensory input, listeners tend to use perceptual strategies that rely on top-down linguistic or contextual information (e.g., Pichora-Fuller et al, 1995; Janse and Ernestus, 2011). Although most CI users may be able to engage neurocognitive resources to compensate for a degraded signal, the ability to apply these compensatory mechanisms (and potentially benefit from stronger neurocognitive skills) is likely to be reduced for CI users with poor spectral resolution. The findings from the present study suggest a limited role for cognitive skills in CI users with poor auditory sensitivity (Collison et al, 2004) and imply a reduced ability to rely on top-down compensation mechanisms for CI users with lower overall speech recognition skills (e.g., Chatterjee et al, 2010; Bhargava et al, 2014). These limitations may lead low-performing CI users to adopt different perceptual strategies from those of high-performing users when completing complex speech recognition tasks, with further individual differences within the group reflecting variability in neurocognitive skills. The consequences of differences in spectral resolution between high- and low-performing CI users, and within the low-performing group, may vary across types of adverse conditions that demand different degrees of reliance on bottom-up or top-down information. Additional research should examine the perceptual strategies of high- versus low-performing CI users and their reliance on top-down compensation mechanisms in a variety of adverse conditions.

Taken together, the results of the present study emphasize the importance of both auditory sensitivity and neurocognitive functioning in accounting for individual differences in CI speech recognition in adverse conditions with high talker variability. To obtain high sentence recognition accuracy in these conditions, good spectral resolution and strong cognitive skills appear to be necessary. Overall, low-performing CI users seem to be limited by a combination of poor spectral resolution and weak cognitive skills. However, spectral resolution may play the most important and limiting role for low-performing CI users; some CI users may be restricted by very poor spectral resolution, regardless of their individual cognitive capacities. These findings have potential clinical implications for the development of more effective rehabilitative treatment protocols for adult postlingually deafened CI users. High-performing CI users, and possibly those falling in the middle of the distribution, may benefit from a broad treatment protocol involving both adjustments to the sensory input and training in neurocognitive and linguistic skills. Because the signal is already rich enough for them to engage top-down mechanisms effectively, neurocognitive or linguistic training may improve their ability to deal with degraded signals in adverse listening conditions. Conversely, low-performing CI users may benefit more from improvements in sensory information. For these users, strengthening neurocognitive or linguistic skills through training would not be expected to improve recognition without at least some improvement in sensory information. These users may primarily benefit from improvements in signal processing, through new processing or speech coding strategies, or from auditory training that specifically targets spectral resolution, such as training with spectral ripple noise.
Future research should further examine the contributions of auditory sensitivity and neurocognitive functioning in a variety of challenging and adverse conditions, to help predict speech recognition outcomes and to inform rehabilitative treatments for postlingually deafened adult CI users.

Acknowledgments

Collection of data was primarily supported by the National Institutes of Health, National Institute on Deafness and Other Communication Disorders (NIDCD) Career Development Award 5K23DC015539-02, and the American Otological Society Clinician-Scientist Award to Aaron C. Moberly. Preparation of the manuscript was also supported in part by VENI Grant No. 275-89-035 from the Netherlands Organization for Scientific Research (NWO) and funding from the President’s Postdoctoral Scholars Program (PPSP) at The Ohio State University awarded to Terrin N. Tamati.

Abbreviations:

- CI: cochlear implant
- CVLT: California Verbal Learning Test
- HiPRESTO: group of CI users in the upper quartile of the performance distribution on PRESTO
- LoPRESTO: group of CI users in the lower quartile of the performance distribution on PRESTO
- NH: normal hearing
- PRESTO: Perceptually Robust English Sentence Test Open-set
- RPO: ripples per octave
- SD: standard deviation
- SMRT: Spectral-Temporally Modulated Ripple Test
- TOWRE: Test of Word Reading Efficiency
- WRAT: Wide Range Achievement Test

REFERENCES

- Akeroyd MA. (2008) Are individual differences in speech reception related to individual differences in cognitive ability? A survey of twenty experimental studies with normal and hearing-impaired adults. Int J Audiol 47:S53–S71.

- Arehart KH, Souza P, Baca R, Kates JM. (2013) Working memory, age and hearing loss: susceptibility to hearing aid distortion. Ear Hear 34:251–260.

- Aronoff JM, Landsberger DM. (2013) The development of a modified spectral ripple test. J Acoust Soc Am 134:EL217–EL222.

- Bhargava P, Gaudrain E, Başkent D. (2014) Top–down restoration of speech in cochlear-implant users. Hear Res 309:113–123.

- Blamey P, et al. (2013) Factors affecting auditory performance of postlingually deaf adults using cochlear implants: an update with 2251 patients. Audiol Neurootol 18:36–47.

- Chatterjee M, Peredo F, Nelson D, Başkent D. (2010) Recognition of interrupted sentences under conditions of spectral degradation. J Acoust Soc Am 127:EL37–EL41.

- Clopper CG. (2012) Effects of dialect variation on the semantic predictability benefit. Lang Cogn Process 27:1002–1020.

- Collison EA, Munson B, Carney AE. (2004) Relations among linguistic and cognitive skills and spoken word recognition in adults with cochlear implants. J Speech Lang Hear Res 47:496–508.

- Croghan NBH, Duran SI, Smith ZM. (2017) Re-examining the relationship between number of cochlear implant channels and maximal speech intelligibility. J Acoust Soc Am 142:EL537.

- Delis DC, Kramer J, Kaplan E, Ober BA. (2000) California Verbal Learning Test, 2nd ed. San Antonio, TX: The Psychological Corporation.

- DiNino M, Arenberg JG. (2018) Age-related performance on vowel identification and the spectral-temporally modulated ripple test in children with normal hearing and with cochlear implants. Trends Hear 22:1–20.

- Drennan WR, Anderson ES, Won JH, Rubinstein JT. (2014) Validation of a clinical assessment of spectral ripple resolution for cochlear-implant users. Ear Hear 35:E92–E98.

- Folstein MF, Folstein SE, McHugh PR. (1975) “Mini-mental state”. A practical method for grading the cognitive state of patients for the clinician. J Psychiatr Res 12:189–198.

- Friesen LM, Shannon RV, Başkent D, Wang X. (2001) Speech recognition in noise as a function of the number of spectral channels: comparison of acoustic hearing and cochlear implants. J Acoust Soc Am 110:1150–1163.

- Fu QJ, Nogaki G. (2005) Noise susceptibility of cochlear implant users: the role of spectral resolution and smearing. J Assoc Res Otolaryngol 6:19–27.

- Fu QJ, Shannon RV, Wang X. (1998) Effects of noise and spectral resolution on vowel and consonant recognition: acoustic and electric hearing. J Acoust Soc Am 104:3586–3596.

- Garofolo JS, Lamel LF, Fisher WM, Fiscus JG, Pallett DS, Dahlgren NL. (1993) The DARPA TIMIT Acoustic-Phonetic Continuous Speech Corpus. Philadelphia, PA: Linguistic Data Consortium.

- Gilbert JL, Tamati TN, Pisoni DB. (2013) Development, reliability and validity of PRESTO: a new high-variability sentence recognition test. J Am Acad Audiol 24:1–11.

- Henry BA, Turner CW, Behrens A. (2005) Spectral peak resolution and speech recognition in quiet: normal hearing, hearing impaired and cochlear implant listeners. J Acoust Soc Am 118:1111–1121.

- Heydebrand G, Hale S, Potts L, Gotter B, Skinner M. (2007) Cognitive predictors of improvements in adults’ spoken word recognition six months after cochlear implant activation. Audiol Neurootol 12:254–264.

- Holden LK, Finley CC, Firszt JB, Holden TA, Brenner C, Potts LG, Gotter BD, Vanderhoff SS, Mispagel K, Heydebrand G, Skinner MW. (2013) Factors affecting open-set recognition in adults with cochlear implants. Ear Hear 34:342–360.

- Janse E, Ernestus M. (2011) The roles of bottom-up and top-down information in the recognition of reduced speech: evidence from listeners with normal and impaired hearing. J Phonet 39:330–343.

- Johnsrude IS, Mackey A, Hakyemez H, Alexander E, Trang HP, Carlyon RP. (2013) Swinging at a cocktail party: voice familiarity aids speech perception in the presence of a competing voice. Psychol Sci 24:1995–2004.

- Knutson JF, Gantz BJ, Hinrichs JV, Schartz HA, Tyler RS, Woodworth G. (1991) Psychological predictors of audiological outcomes of multichannel cochlear implants: preliminary findings. Ann Otol Rhinol Laryngol 100:817–822.

- Kramer S, Vasil KJ, Adunka OF, Pisoni DB, Moberly AC. (2018) Cognitive functions in adult cochlear implant users, cochlear implant candidates, and normal-hearing listeners. Laryngoscope Investig Otolaryngol 3:304–310.

- Lazard DS, Lee HJ, Gaebler M, Kell CA, Truy E, Giraud AL. (2010) Phonological processing in post-lingual deafness and cochlear implant outcome. Neuroimage 49:3443–3451.

- Lazard DS, et al. (2012) Pre-, per- and postoperative factors affecting performance of postlingually deaf adults using cochlear implants: a new conceptual model over time. PLoS One 7:e48739.

- Lenarz M, Sönmez H, Joseph G, Büchner A, Lenarz T. (2012) Cochlear implant performance in geriatric patients. Laryngoscope 122:1361–1365.

- Lyxell B, Andersson J, Andersson U, Arlinger S, Bredberg G, Harder H. (1998) Phonological representation and speech understanding with cochlear implants in deafened adults. Scand J Psychol 39:175–179.

- Mattingly JK, Castellanos I, Moberly AC. (2018) Nonverbal reasoning as a contributor to sentence recognition outcomes in adults with cochlear implants. Otol Neurotol 39(10):e956–e963.

- Moberly AC, Bates C, Harris MS, Pisoni DB. (2016a) The enigma of poor performance by adults with cochlear implants. Otol Neurotol 37:1522–1528.

- Moberly AC, Houston DM, Castellanos I. (2016b) Non-auditory neurocognitive skills contribute to speech recognition in adults with cochlear implants. Laryngoscope Investig Otolaryngol 1:154–162.

- Moberly AC, Castellanos I, Vasil KJ, Adunka OF, Pisoni DB. (2017) “Product” versus “process” measures in assessing speech recognition outcomes in adults with cochlear implants. Otol Neurotol 39:E195–E202.

- Moberly AC, Lowenstein JH, Tarr E, Caldwell-Tarr A, Welling DB, Shahin AJ, Nittrouer S. (2014) Do adults with cochlear implants rely on different acoustic cues for phoneme perception than adults with normal hearing? J Speech Lang Hear Res 57:566–582.

- Moberly AC, Vasil KJ, Wucinich T, Safdar N, Boyce L, Roup C, Holt R, Adunka O, Castellanos I, Shafiro V, Houston D, Pisoni DB. (2018) How does aging affect recognition of spectrally degraded speech? Laryngoscope 128(5, Suppl). doi:10.1002/lary.27457.

- Mullennix JW, Pisoni DB, Martin CS. (1989) Some effects of talker variability on spoken word recognition. J Acoust Soc Am 85:365–378.

- Nilsson M, Soli SD, Sullivan JA. (1994) Development of the Hearing in Noise Test for the measurement of speech reception thresholds in quiet and in noise. J Acoust Soc Am 95:1085–1099.

- Nittrouer S, Burton LT. (2005) The role of early language experience in the development of speech perception and phonological processing abilities: evidence from 5-year-olds with histories of otitis media with effusion and low socioeconomic status. J Commun Disord 38:29–63.

- Nusbaum HC, Magnuson JS. (1997) Talker normalization: phonetic constancy as a cognitive process. In: Johnson K, Mullennix JW, eds. Talker Variability in Speech Processing. San Diego, CA: Academic Press, 109–132.

- Pichora-Fuller MK, Schneider B, Daneman M. (1995) How young and old listeners listen to and remember speech in noise. J Acoust Soc Am 97:593–608.

- Pisoni DB. (1997) Some thoughts on “normalization” in speech perception. In: Johnson K, Mullennix JW, eds. Talker Variability in Speech Processing. San Diego, CA: Academic Press, 9–32.

- Pisoni DB, Broadstock A, Wucinich T, Safdar N, Miller K, Hernandez LR, Vasil K, Boyce L, Davies A, Harris MS, Castellanos I, Xu H, Kronenberger WG, Moberly AC. (2018) Verbal learning and memory after cochlear implantation in postlingually deaf adults: some new findings with the CVLT-II. Ear Hear 39:720–745.

- Pisoni DB, Kronenberger WG, Harris MS, Moberly AC. (2017) Three challenges for future research on cochlear implants. World J Otorhinolaryngol Head Neck Surg 3:240–254.

- Raven J. (2000) The Raven’s progressive matrices: change and stability over culture and time. Cogn Psychol 41:1–48.

- Souza P, Gehani N, Wright R, McCloy D. (2013) The advantage of knowing the talker. J Am Acad Audiol 24:689–700.

- Spahr AJ, Dorman MF, Litvak LM, Van Wie S, Gifford RH, Loizou PC, Loiselle LM, Oakes T, Cook S. (2012) Development and validation of the AzBio sentence lists. Ear Hear 33:112–117.

- Stroop JR. (1935) Studies of interference in serial verbal reactions. J Exp Psychol 18:643–662.

- Tabachnick BG, Fidell LS. (2001) Using Multivariate Statistics. 4th ed. Boston, MA: Allyn and Bacon.

- Tamati TN, Pisoni DB. (2014) Non-native speech recognition in high-variability listening conditions using PRESTO. J Am Acad Audiol 25:869–892.

- Tamati TN, Gilbert JL, Pisoni DB. (2013) Some factors underlying individual differences in speech recognition on PRESTO: a first report. J Am Acad Audiol 24:616–634.

- Tamati TN, Janse E, Başkent D. (2019) Perceptual discrimination of speaking style under cochlear implant simulation. Ear Hear 40:63–76.

- Tao D, Deng R, Jiang Y, Galvin JJ, Fu QJ, Chen B. (2014) Contribution of auditory working memory to speech understanding in Mandarin-speaking cochlear implant users. PLoS One 9:e99096.

- Tinnemore AR, Zion DJ, Kulkarni AM, Chatterjee M. (2018) Children’s recognition of emotional prosody in spectrally degraded speech is predicted by their age and cognitive status. Ear Hear 39:874–880.

- Torgesen JK, Wagner RK, Rashotte CA. (1999) Test of Word Reading Efficiency. Austin, TX: Pro-Ed.

- Wechsler D. (2004) WISC-IV: Wechsler Intelligence Scale for Children, Integrated: Technical and Interpretive Manual. San Antonio, TX: Harcourt Brace and Company.

- Wilkinson GS, Robertson GJ. (2006) Wide Range Achievement Test. 4th ed. Lutz, FL: Psychological Assessment Resources.

- Winn MB, Won JH, Moon IJ. (2012) Assessment of spectral and temporal resolution in cochlear implant users using psychoacoustic discrimination and speech cue categorization. Ear Hear 37:E377–E390.

- Won JH, Drennan WR, Kang RS, Rubinstein JT. (2010) Psychoacoustic abilities associated with music perception in cochlear implant users. Ear Hear 31:796–805.

- Won JH, Drennan WR, Rubinstein JT. (2007) Spectral-ripple resolution correlates with speech reception in noise in cochlear implant users. J Assoc Res Otolaryngol 8:384–392.