Abstract

We systematically reviewed evidence from Embase, Medline, and the Cochrane Library on the diagnostic accuracy and clinical impact of commercially available rapid (results in <3 hours) molecular diagnostics for respiratory viruses compared with conventional molecular tests. Study quality was assessed using the Quality Assessment of Diagnostic Accuracy Studies criteria for diagnostic test accuracy (DTA) studies, and the Cochrane Risk of Bias tool and the Risk of Bias in Nonrandomized Studies of Interventions tool for randomized and observational impact studies, respectively. Sixty-three DTA reports (56 studies) were meta-analyzed, yielding a pooled sensitivity of 90.9% (95% confidence interval [CI], 88.7%–93.1%) and a pooled specificity of 96.1% (95% CI, 94.2%–97.9%) for the detection of influenza virus (n = 29), respiratory syncytial virus (RSV) (n = 1), influenza virus and RSV (n = 19), or a viral panel including influenza virus and RSV (n = 14). The 15 included impact studies (5 randomized) were very heterogeneous, and their results were therefore inconclusive. Nevertheless, we suggest that implementation of rapid diagnostics in hospital care settings be considered.

Keywords: rapid test, molecular diagnostics, diagnostic accuracy, impact, review

Commercially available rapid molecular tests for respiratory viruses show a pooled sensitivity of 91% (95% confidence interval [CI], 89%–93%) and a pooled specificity of 96% (95% CI, 94%–98%). Evidence on the clinical impact of implementing rapid molecular tests for respiratory viruses is heterogeneous and inconclusive.

Acute respiratory tract infections (RTIs) carry a high disease burden and are the third leading cause of death worldwide [1, 2]. Respiratory viruses are the predominant causative pathogens in patients hospitalized with acute RTI, accounting for 50%–66% of microbiological etiologies [3–5]. Rapid identification of a viral etiology may improve patient management by informing decisions on antibiotic treatment, antiviral therapy, hospital admission, length of stay, and infection-control measures to prevent further transmission [2, 6]. By reducing unnecessary antibiotic prescriptions, it may also help avoid unnecessary costs and limit antimicrobial resistance [7–10].

About a decade ago, the transition from conventional techniques such as viral culture and immunoassays to reverse-transcription polymerase chain reaction (RT-PCR) did not reduce overall antibiotic use in hospitalized patients with lower RTI [6]. Although faster than conventional techniques, RT-PCR–based diagnostics still took up to 48 hours from sampling to result [6], whereas rapid diagnostics with turnaround times of <1 hour are now available [11].

Whether these rapid methods improve patient outcomes, however, is still debated. First, a wide range of rapid tests is available, with large differences in diagnostic accuracy. Previous reviews of the accuracy of rapid tests for respiratory viruses either included a heterogeneous group of tests, combining ultrarapid but less sensitive antigen-based tests with more sensitive but slightly slower molecular tests [11–13]; compared rapid tests with outdated techniques such as viral culture or immunoassays [13]; focused on only 1 or 2 viral pathogens, mostly influenza virus [11]; or focused on 1 specific assay [14, 15]. To guide physicians and hospitals in choosing rapid diagnostic tools and in valuing and interpreting their results, a diagnostic test accuracy (DTA) review of available rapid molecular tests against the best available reference standard (RT-PCR or other molecular methods) is essential. Second, even for tests with high accuracy, studies reach conflicting conclusions on whether implementing these tests improves patient outcomes. A review of the clinical impact of rapid molecular tests that summarizes these studies and assesses sources of heterogeneity to explain the discrepant results is therefore needed.

In this review, we provide an overview of available rapid molecular tests that can detect respiratory viruses within 3 hours. We systematically assess the quality of DTA studies and meta-analyze their results, and we systematically review studies evaluating the clinical impact of rapid molecular testing for respiratory viruses.

METHODS

We followed the guidance of the Cochrane DTA Working Group [16]. This systematic review was registered in the PROSPERO database (CRD42017057881). A systematic literature search for both DTA and clinical impact studies (Supplementary Materials 1A) was performed in Medline, Embase, and the Cochrane Library on 31 August 2017. Inclusion and exclusion criteria and the screening process are described in Supplementary Materials 1B and 1C, respectively. Data were extracted systematically for both DTA and clinical impact studies (Supplementary Materials 1D and 1E). Methodological quality of the included studies was assessed using the Quality Assessment of Diagnostic Accuracy Studies (QUADAS-2) criteria [17] for DTA studies, the Cochrane Risk of Bias tool [18] for randomized clinical impact studies, and the Risk of Bias in Nonrandomized Studies of Interventions (ROBINS-I) tool [19] for nonrandomized clinical impact studies.

Statistical Analysis

Sensitivity and specificity were calculated from 2 × 2 contingency tables for all index tests in the included DTA studies. Sensitivities and specificities of individual studies, with their 95% confidence intervals (CIs), were presented in paired forest plots. We used a bivariate random-effects model to meta-analyze the logit-transformed sensitivities and specificities, obtaining summary estimates with 95% confidence and prediction regions. This model accounts for the precision with which sensitivity and specificity were estimated in each study, using the binomial distribution (ie, a weighted average), and incorporates any additional between-study variability beyond chance (ie, random effects). Results were plotted in receiver operating characteristic (ROC) space with 95% confidence and 95% prediction regions. The 95% confidence region reflects the precision of the pooled point estimate, whereas the 95% prediction region indicates where the results of a new, large study evaluating the diagnostic accuracy of the same rapid assay would be expected to fall. In these plots, sensitivity and specificity estimates of the most frequently studied assays were pooled per assay. Heterogeneity between studies was explored by subgroup analyses using bivariate random-effects regression, comparing study populations, assays, target viruses, study designs, and study quality. For clinical impact studies, the quality of included studies was summarized descriptively. Results of clinical impact studies were not pooled quantitatively but were presented per clinical outcome, ordered by study quality. All analyses were performed in RStudio, and ROC plots were made using Stata version 11.
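The sketch below makes the pooling step concrete. It pools per-study proportions on the logit scale with a random-effects model, for example sensitivity as TP/(TP + FN). It returns a 95% confidence interval and an approximate 95% prediction interval. This is a simplified univariate substitute for the bivariate model described above, not that model. It pools sensitivity or specificity one at a time, ignores their correlation, and estimates between-study variance with the DerSimonian–Laird moment estimator. The function name `pool_logit_random_effects`, the 0.5 continuity correction, and the normal (rather than t) quantile for the prediction interval are all assumptions made for illustration. The counts in the usage lines are hypothetical.

```python
import math
from statistics import NormalDist


def expit(x: float) -> float:
    """Inverse logit: map a log-odds value back to a proportion."""
    return 1.0 / (1.0 + math.exp(-x))


def pool_logit_random_effects(events, totals, level=0.95):
    """Hypothetical helper: DerSimonian-Laird random-effects pooling of
    logit-transformed proportions (a univariate simplification, NOT the
    bivariate model). Returns (pooled, ci_low, ci_high, pi_low, pi_high)."""
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for level=0.95
    logits, variances = [], []
    for x, n in zip(events, totals):
        x, n = float(x), float(n)
        if x == 0 or x == n:
            # Assumed 0.5 continuity correction so the logit stays finite.
            x, n = x + 0.5, n + 1.0
        logits.append(math.log(x / (n - x)))
        # Approximate within-study variance of a logit-transformed proportion.
        variances.append(1.0 / x + 1.0 / (n - x))

    # Fixed-effect (inverse-variance) estimate and Cochran's Q statistic.
    w = [1.0 / v for v in variances]
    sum_w = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, logits)) / sum_w
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, logits))
    c = sum_w - sum(wi * wi for wi in w) / sum_w
    k = len(logits)
    # DerSimonian-Laird between-study variance, truncated at zero.
    tau2 = max(0.0, (q - (k - 1)) / c) if k > 1 and c > 0 else 0.0

    # Random-effects weights add tau^2 to every within-study variance.
    w_re = [1.0 / (v + tau2) for v in variances]
    mu = sum(wi * yi for wi, yi in zip(w_re, logits)) / sum(w_re)
    se = math.sqrt(1.0 / sum(w_re))
    # The prediction interval adds the between-study spread tau^2 to se^2.
    pi_half = z * math.sqrt(tau2 + se * se)
    return (expit(mu), expit(mu - z * se), expit(mu + z * se),
            expit(mu - pi_half), expit(mu + pi_half))


# Hypothetical TP counts and TP + FN totals for three studies (not review data).
pooled, lo, hi, pi_lo, pi_hi = pool_logit_random_effects([45, 88, 30], [50, 95, 36])
print(f"sensitivity {pooled:.3f} (95% CI {lo:.3f}-{hi:.3f}; "
      f"95% PI {pi_lo:.3f}-{pi_hi:.3f})")
```

The prediction interval is always at least as wide as the confidence interval. It collapses onto the confidence interval only when the estimated between-study variance is zero. This mirrors the distinction the text draws between the confidence and prediction regions in ROC space.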

RESULTS

Diagnostic Accuracy

After screening (Figure 1), 63 separate reports were included in the meta-analysis from 56 individual DTA study publications (Supplementary Materials 2). The main characteristics of the included DTA reports are described in Table 1. The median sample size in these reports was 95 patients (interquartile range [IQR], 49–196). The included reports evaluated 13 commercial molecular rapid diagnostic tests. Of these, the most frequently studied tests were the Alere i Influenza A&B assay (Alere, Scarborough, Maine; 14 reports), Cobas Liat Influenza A/B (Roche Diagnostics, Indianapolis, Indiana; 5 reports), FilmArray (BioFire Diagnostics, Salt Lake City, Utah; 10 reports), Cepheid Xpert Flu Assay (Cepheid, Sunnyvale, California; 9 reports), Simplexa Flu A/B & Respiratory Syncytial Virus (RSV) kit (Focus Diagnostics, Cypress, California; 9 reports), and Verigene Respiratory Virus Plus (Nanosphere, Northbrook, Illinois; 5 reports).

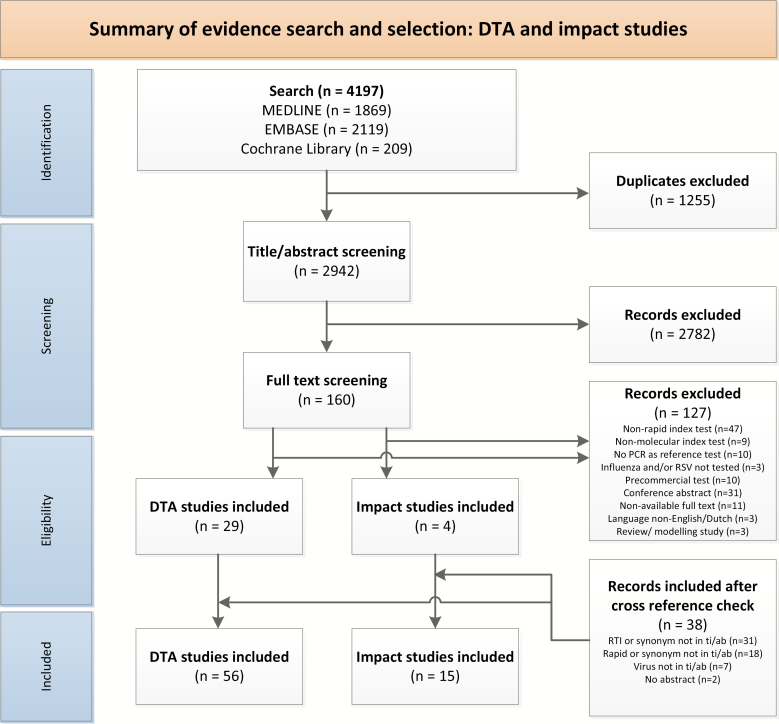

Figure 1.

Preferred Reporting Items for Systematic Reviews and Meta-analyses (PRISMA) flowchart. Abbreviations: DTA, diagnostic test accuracy; PCR, polymerase chain reaction; RSV, respiratory syncytial virus; RTI, respiratory tract infection; ti/ab, title/abstract.

Table 1.

Characteristics of the Reports (N = 63) from the 56 Included Diagnostic Test Accuracy Studies

| Characteristic | No. (%) |
|---|---|
| Study design | |
| Cohort study | 28 (44.4) |
| Case-control study | 28 (44.4) |
| Partially cohort and partially case-control | 7 (11.1) |
| Data collection | |
| Prospective | 25 (39.7) |
| Retrospective | 29 (46.0) |
| Both prospective and retrospective | 9 (14.3) |
| Virus evaluated | |
| Influenza A and B<sup>a</sup> | 29 (46.0) |
| Influenza A, B, and RSV<sup>b</sup> | 20 (31.7) |
| Panel of viruses<sup>c</sup> | 14 (22.2) |
| Study population | |
| Children | 8 (12.7) |
| Adults<sup>d</sup> | 7 (11.1) |
| Children and adults | 26 (41.3) |
| Not reported | 22 (34.9) |
| Patient symptoms | |
| Patients with ILI or symptoms of an RTI<sup>e</sup> | 36 (57.1) |
| Symptoms not described | 27 (42.9) |
| Tests evaluated | |
| AdvanSure (LG Life Sciences)<sup>f</sup> | 3 (4.8) |
| Alere i Influenza A&B assay (Alere) | 14 (22.2) |
| Aries Flu A/B & RSV assay (Luminex)<sup>f</sup> | 2 (3.2) |
| Cobas Liat Influenza A/B (Roche Diagnostics) | 5 (7.9) |
| Enigma MiniLab (Enigma Diagnostics Ltd)<sup>f</sup> | 1 (1.6) |
| FilmArray (BioFire Diagnostics) | 10 (15.9) |
| Cepheid Xpert Flu Assay (Cepheid) | 9 (14.3) |
| ePlex RP Panel (GenMark Diagnostics)<sup>f</sup> | 1 (1.6) |
| PLEX-ID Flu Assay (Abbott Molecular)<sup>f</sup> | 1 (1.6) |
| RIDAGENE Flu & RSV kit (R-Biopharm AG)<sup>f</sup> | 1 (1.6) |
| Roche RealTime (Roche Diagnostics)<sup>f</sup> | 2 (3.2) |
| Simplexa Flu A/B & RSV kit (Focus Diagnostics) | 9 (14.3) |
| Verigene Respiratory Virus Plus test (Nanosphere) | 5 (7.9) |
| Reference standard | |
| In-house or laboratory-developed RT-PCR | 22 (34.9) |
| Commercial RT-PCR<sup>g</sup> | 41 (65.1) |

See Supplementary Materials 2 for the reference list of studies.

Abbreviations: ILI, influenza-like illness; RSV, respiratory syncytial virus; RTI, respiratory tract infection; RT-PCR, reverse-transcription polymerase chain reaction.

<sup>a</sup>Among these studies, 1 study (Salez et al, 2013) validated the Cepheid Xpert Flu Assay only for influenza B.

<sup>b</sup>Among these studies, 1 study (Peters et al, 2017) validated the Alere i Influenza A&B assay only for RSV.

<sup>c</sup>FilmArray (15 viral targets): RSV-A, RSV-B, influenza A/H1, influenza A/H3, influenza untypable, influenza B, parainfluenza virus types 1–4, human metapneumovirus (hMPV), adenovirus, enterovirus/rhinovirus, coronavirus NL63, and coronavirus HKU1; in some studies, this panel was only partially validated. AdvanSure (14 viral targets): RSV-A, RSV-B, influenza A, influenza B, parainfluenza virus types 1–3, hMPV, bocavirus, adenovirus, rhinovirus, and coronaviruses OC43, 229E, and NL63. ePlex RP panel (21 viral targets): RSV-A, RSV-B, RSV untypable, influenza A/H1, influenza 2009 A/H1N1, influenza A/H3, influenza A untypable, influenza B, parainfluenza virus types 1–4, hMPV, bocavirus, adenovirus, enterovirus/rhinovirus, Middle East respiratory syndrome coronavirus, and coronaviruses OC43, 229E, NL63, and HKU1.

<sup>d</sup>Two adult studies included only immunocompromised patients (Steensels et al, 2017 and Hammond et al, 2012).

<sup>e</sup>Among studies that included symptomatic patients, 14 included patients with ILI (8 cohort studies, 4 case-control studies, and 2 partially cohort and partially case-control studies), 21 included patients with symptoms of an upper or lower RTI, and 2 included patients with symptoms that were not further specified.

<sup>f</sup>Full affiliations of index tests not mentioned in the text: AdvanSure (LG Life Sciences, Seoul, Korea), Aries Flu A/B & RSV assay (Luminex Corporation, Austin, Texas), Enigma MiniLab Influenza A/B & RSV (Enigma Diagnostics, Salisbury, United Kingdom), ePlex respiratory pathogen panel (GenMark Diagnostics, Carlsbad, California), PLEX-ID Flu assay (Abbott Molecular, Des Plaines, Illinois), RIDAGENE Flu & RSV kit (R-Biopharm AG, Darmstadt, Germany), and Roche RealTime Ready Influenza AH1N1 Detection Set (Roche Diagnostics, Indianapolis, Indiana).

<sup>g</sup>One study (Popowitch, 2013) used 2 different commercial PCR methods or a composite reference standard requiring concordance of at least 2 multiplex PCR methods.

The quality of the included DTA studies (n = 56) was assessed using the QUADAS-2 criteria and is summarized in Supplementary Figure 1. The main quality concern was patient selection: only a minority (35%) of included studies clearly described their selection criteria and/or used a cohort design to include patients or specimens. Regarding flow, 17% of studies used samples that were frozen between index and reference testing, used multiple different molecular reference standards, and/or excluded samples with invalid results on either the index test or the reference standard. Regarding the index test, in most studies it was unclear whether results were interpreted without knowledge of the reference standard results.

Overall, the pooled sensitivity of all rapid molecular tests was 90.9% (95% CI, 88.7%–93.1%) and the pooled specificity was 96.1% (95% CI, 94.2%–97.9%). Forest plots of sensitivity and specificity for all included studies are shown in Figure 2, and ROC plots of the most frequently assessed assays in Figure 3. Subgroup analyses were conducted to explore heterogeneity in sensitivity and specificity (Table 2). Sensitivity of the individual index tests ranged from 81.6% (95% CI, 75.4%–87.9%) for the Alere i Influenza A&B assay to 99.0% (95% CI, 98.3%–99.6%) for the Simplexa Flu A/B & RSV kit (P < .001). The specificity of assays detecting a panel of viruses (eg, FilmArray, AdvanSure, and the ePlex RP panel) was significantly lower than that of assays detecting only influenza virus and/or RSV (88.8% vs 97.4% and 96.4%; P = .009). Cohort studies showed higher sensitivity than case-control studies (94.7% vs 88.8%; P = .009). The pooled sensitivity of studies that included only children (n = 8) was 93.0% (95% CI, 91.5%–94.5%), compared with 79.8% (95% CI, 70.7%–88.9%) in studies of adults (n = 7) (P = .01). In contrast, pooled specificity was higher in adult studies (98.6% [95% CI, 95.5%–100%]) than in child studies (80.8% [95% CI, 73.1%–88.4%]) (P = .001).
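Pooled sensitivity and specificity alone do not tell a clinician how often a positive or negative result is correct. That also depends on how common the virus is among the patients being tested. The sketch below applies Bayes' theorem to the pooled estimates reported above. The prevalence values of 10% and 30% are hypothetical placeholders, not figures from this review. `predictive_values` is an illustrative helper name.

```python
def predictive_values(sensitivity: float, specificity: float, prevalence: float):
    """Return (PPV, NPV) via Bayes' theorem for a given pre-test prevalence."""
    tp = sensitivity * prevalence              # true positives per tested patient
    fp = (1 - specificity) * (1 - prevalence)  # false positives
    tn = specificity * (1 - prevalence)        # true negatives
    fn = (1 - sensitivity) * prevalence        # false negatives
    return tp / (tp + fp), tn / (tn + fn)


# Pooled estimates from this review; the prevalences are hypothetical examples.
for prevalence in (0.10, 0.30):
    ppv, npv = predictive_values(0.909, 0.961, prevalence)
    print(f"prevalence {prevalence:.0%}: PPV {ppv:.1%}, NPV {npv:.1%}")
```

Under these assumed prevalences, the PPV rises from about 72% at 10% prevalence to about 91% at 30%. The NPV stays above 95% in both cases. A positive result is therefore more informative during periods of high viral circulation.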

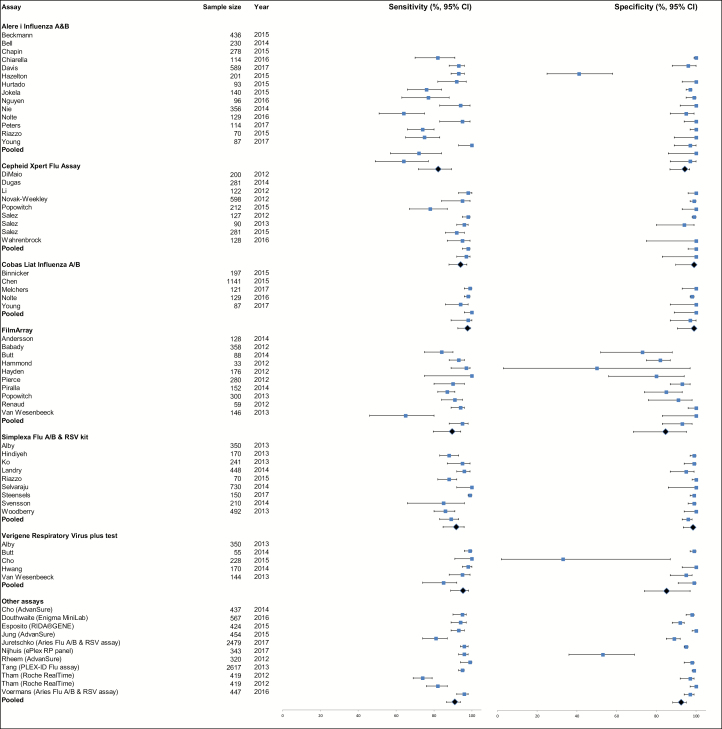

Figure 2.

Forest plot of sensitivity (left) and specificity (right) (% with 95% confidence interval) for all study reports (N = 63), stratified and pooled per assay (top to bottom). One study (Salez 2012) included no virus-negative samples, so its specificity could not be calculated and it was excluded from the pooled specificity analysis. Four studies had exceptionally low specificity because their case-control design included very few virus-negative patients: 37 negative patients, of whom 22 tested false positive with the Alere i Influenza A&B assay (Chapin 2015); 2 negative patients, of whom 1 tested false positive with FilmArray (Butt 2014); 3 negative patients, of whom 2 tested false positive with the Verigene Respiratory Virus Plus test (Butt 2014); and 29 negative patients, of whom 10 tested false positive with the ePlex RP panel (Nijhuis 2017). See Supplementary Materials 2 for the reference list of studies. Abbreviation: CI, confidence interval.
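The low specificities flagged in the Figure 2 caption follow directly from the small numbers of virus-negative patients. The sketch below recomputes each study's specificity, TN/(TN + FP), from the counts given in the caption. It attaches a Wilson score 95% interval to each estimate. The Wilson interval is an assumed choice made for illustration; the forest plots may use a different interval method, so these bounds need not match Figure 2 exactly.

```python
import math


def specificity_with_wilson_ci(negatives: int, false_positives: int, z: float = 1.96):
    """Specificity = TN / (TN + FP), with a Wilson score interval."""
    tn = negatives - false_positives
    p = tn / negatives
    denom = 1 + z * z / negatives
    center = (p + z * z / (2 * negatives)) / denom
    half = z * math.sqrt(p * (1 - p) / negatives + z * z / (4 * negatives ** 2)) / denom
    return p, max(0.0, center - half), min(1.0, center + half)


# (study, assay, virus-negative patients, false positives) as listed in the caption.
outliers = [
    ("Chapin 2015", "Alere i Influenza A&B", 37, 22),
    ("Butt 2014", "FilmArray", 2, 1),
    ("Butt 2014", "Verigene RV Plus", 3, 2),
    ("Nijhuis 2017", "ePlex RP panel", 29, 10),
]
for study, assay, n_neg, fp in outliers:
    spec, lo, hi = specificity_with_wilson_ci(n_neg, fp)
    print(f"{study} ({assay}): {spec:.1%} (95% CI {lo:.1%}-{hi:.1%}), n = {n_neg}")
```

The two Butt 2014 estimates rest on only 2 and 3 negative samples. Their intervals therefore cover most of the range from 0 to 1. Such reports can look like outliers while being expected to carry little weight in a binomial pooled specificity.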

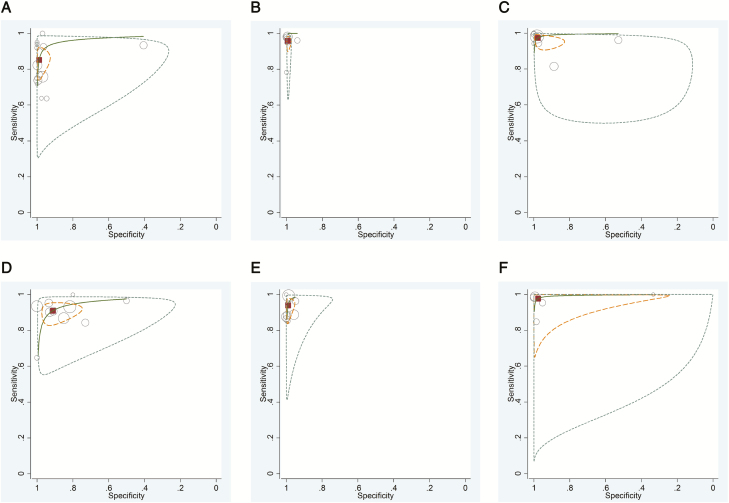

Figure 3.

Receiver-operating characteristic (ROC) curve plots of most frequently evaluated rapid molecular diagnostic tests: Alere i Influenza A&B assay (A), Cepheid Xpert Flu Assay (B), Cobas Liat Influenza A/B (C), FilmArray (D), Simplexa Flu A/B & Respiratory Syncytial Virus kit (E), and Verigene Respiratory Virus Plus test (F). The size of the circles indicates the sample size of the individual studies. The pooled summary estimate is represented by the square, the 95% confidence region by the finely dotted lines, the 95% prediction region by the striped lines, and the ROC curve by the continuous line.

Table 2.

Accuracy Estimates From Subgroup Analyses Using Bivariate Random-effects Regression

| Characteristic | No. of Studies | Pooled Sensitivity, % (95% CI) | P Value<sup>a</sup> | Pooled Specificity, % (95% CI) | P Value<sup>a</sup> |
|---|---|---|---|---|---|
| Population age group | | | | | |
| Children | 8 | 93.0 (91.5–94.5) | .010 | 80.8 (73.1–88.4) | .001 |
| Adults | 7 | 79.8 (70.7–88.9) | | 98.6 (95.5–100) | |
| Population symptoms | | | | | |
| Respiratory/ILI | 34 | 90.4 (87.2–93.7) | .655 | 96.2 (93.6–98.7) | .478 |
| Unclear | 29 | 91.4 (88.6–94.2) | | 94.8 (91.9–97.7) | |
| Viruses | | | | | |
| Influenza | 29 | 87.9 (83.7–92.1) | .078<sup>b</sup> | 97.4 (94.2–100) | .009<sup>b</sup> |
| Influenza + RSV | 19 | 94.1 (90.9–97.4) | | 96.4 (93.6–99.2) | |
| Panel of viruses | 14 | 91.8 (88.7–95.0) | | 88.8 (82.7–95.0) | |
| Index test | | | | | |
| Alere i Influenza A&B | 14 | 81.6 (75.4–87.9) | <.001<sup>c</sup> | 94.0 (86.0–100) | .623 |
| Cobas Liat Influenza A/B | 5 | 98.1 (90.8–100) | | 99.7 (88.5–100) | |
| FilmArray | 10 | 89.2 (86.4–92.0) | | 96.1 (90.5–100) | |
| Simplexa Flu A/B & RSV | 9 | 99.0 (98.3–99.6) | | 98.2 (93.3–100) | |
| Verigene RV Plus test | 5 | 96.2 (88.0–100) | | 97.1 (87.6–100) | |
| Cepheid Xpert Flu | 9 | 94.9 (91.1–98.6) | | 100 (97.8–100) | |
| Study design | | | | | |
| Cohort | 28 | 94.7 (92.5–96.8) | .009 | 96.5 (94.3–98.8) | .147 |
| Case-control | 28 | 88.8 (85.2–92.5) | | 91.2 (84.5–97.9) | |
| Prospective or retrospective study | | | | | |
| Prospective | 25 | 91.4 (89.2–93.6) | .461 | 95.9 (93.4–98.5) | .200 |
| Retrospective | 29 | 89.7 (86.0–93.4) | | 91.9 (85.7–98.1) | |

Abbreviations: CI, confidence interval; ILI, influenza-like illness; RSV, respiratory syncytial virus.

<sup>a</sup>P values compare pooled sensitivity or specificity across groups, using an independent-samples t test for 2 groups and 1-way analysis of variance for >2 groups.

<sup>b</sup>Post hoc Tukey honestly significant difference (HSD) tests showed significant differences between influenza and panel of viruses (P = .008) and between influenza + RSV and panel of viruses (P = .036), but not between influenza and influenza + RSV.

<sup>c</sup>Post hoc Tukey HSD tests showed significant differences between the Alere i Influenza A&B assay and Cobas Liat Influenza A/B (P = .001), Simplexa Flu A/B & RSV (P < .001), Verigene RV Plus (P = .007), and Cepheid Xpert Flu (P = .002). Differences between the other assays were not significant.

Clinical Impact

After screening (Figure 1), we included 15 clinical impact studies [1, 20–33], whose characteristics are described in Table 3. In 2 studies, the rapid molecular test was combined with procalcitonin measurement [21, 30]. Two studies implemented guidelines for treatment decisions based on the rapid test results [20, 21]. In the other studies, treatment recommendations and antibiotic stewardship were unchanged, or the treatment consequences of rapid testing were not described. Five studies were randomized diagnostic impact trials [1, 20, 21, 24, 25], 6 used a nonrandomized before-after design [23, 26–29, 32], and 4 were observational noncomparative studies [22, 30, 31, 33]. Only 1 study included patients from more than 1 center [22]. Three studies [1, 20, 31] placed the rapid test at the point of care, whereas the others performed it in the microbiology laboratory. Seven studies were sponsored by the manufacturer of the diagnostic test [20, 21, 23–25, 28, 29]. The median number of included patients per study was 300 (IQR, 121–630), and most studies (n = 9) included only adult patients [1, 20, 21, 24–26, 28, 30, 34]. FilmArray was the most frequently used intervention test (11 of 15 studies) [1, 20, 21, 24, 25, 27–31, 33].

Table 3.

Characteristics of Studies Included in the Review of Clinical Impact Studies (n = 15)

| Characteristic | No. (%) |
|---|---|
| Study design | |
| Randomized controlled trial | 5 (33.3) |
| Cohort study with before-after design | 6 (40.0) |
| Cohort study without control group | 4 (26.7) |
| Single-center study | 14 (93.3) |
| Study population | |
| Children | 2 (13.3) |
| Adults | 9 (60.0) |
| Children and adults | 2 (13.3) |
| Not reported | 2 (13.3) |
| Sample size | |
| Eligible patients, median (IQR)<sup>a</sup> | 475 (232–945) |
| Included patients, median (IQR) | 300 (121–630) |
| Intervention group patients, median (IQR) | 151 (72–347) |
| Control group patients, median (IQR)<sup>b</sup> | 149 (50–205) |
| Symptoms of patients | |
| Patients with ILI or symptoms of RTI | 10 (66.7) |
| Symptoms unclear | 5 (33.3) |
| Tests evaluated | |
| Alere i Influenza A&B assay | 1 (6.7) |
| FilmArray<sup>c</sup> | 11 (73.3) |
| Cepheid Xpert Flu assay | 2 (13.3) |
| Simplexa Flu A/B & RSV kit | 1 (6.7) |
| Reference standard | |
| In-house or laboratory-developed RT-PCR and/or other routine viral pathogen test | 11 (73.3) |
| No comparison for clinical outcomes | 4 (26.7) |
| Clinical outcomes | |
| Antibiotics | 11 (73.3) |
| Oseltamivir | 5 (33.3) |
| Hospital admission | 4 (26.7) |
| Length of hospital stay | 7 (46.7) |
| Isolation measures | 3 (20.0) |
| Safety outcomes | 6 (40.0) |
| No. of radiographs and other investigations | 2 (13.3) |
| Turnaround time | 10 (66.7) |

Data are presented as No. (%) unless otherwise indicated.

Abbreviations: ILI, influenza-like illness; IQR, interquartile range; RSV, respiratory syncytial virus; RTI, respiratory tract infection; RT-PCR, reverse-transcription polymerase chain reaction.

<sup>a</sup>In 4 studies, the number of eligible patients was unclear (Chu 2015, Keske 2017, Muller 2016, and Xu 2013).

<sup>b</sup>In 4 studies, no control group was used for comparison (Busson 2017, Keske 2017, Timbrook 2015, and Xu 2013).

<sup>c</sup>In 2 studies (Branche 2015 and Timbrook 2015), the FilmArray was (partially) combined with procalcitonin measurement as the diagnostic intervention.

The quality assessment of all impact studies is summarized in Supplementary Figure 2. All nonrandomized studies were at risk of confounding bias and of bias in outcome measurement.

The results of the impact studies were very heterogeneous. Clinical outcomes for each study are categorized and summarized in Table 4, with higher-quality studies listed first. Turnaround time was significantly shorter with rapid molecular tests than with reference molecular techniques in all studies that assessed it (n = 10) [1, 20, 23–25, 27–29, 31, 32]. Overall, implementation of rapid molecular tests did not decrease the number of antibiotic prescriptions or the duration of antibiotic treatment. Only 1 multivariable-adjusted before-after study [23] reported a significantly lower percentage of antibiotic prescriptions in patients tested with the Simplexa Flu A/B & RSV kit during the second season than in patients tested with the laboratory-developed RT-PCR during the first season. One other before-after study [29] reported a significant reduction in the duration of antibiotic treatment. Neither study adjusted for differences in the proportion of influenza virus–positive patients, which was significantly higher during the second (intervention) season. Oseltamivir prescribing was more appropriate in influenza virus–positive patients according to 1 randomized study [1] and 1 nonrandomized study [26]. Two other nonrandomized comparative studies showed no effect of rapid testing on oseltamivir prescriptions [23, 28]. Rapid molecular testing did not reduce the number of hospital admissions [1, 22, 26, 28]. However, 2 studies, 1 of them randomized, showed a shorter length of hospital stay among admitted patients [1, 28]. Length of hospital stay was not reduced in 4 other studies, 2 of them randomized [20, 21, 23, 29]. These randomized studies, however, were smaller than, and potentially underpowered compared with, the randomized study that showed a significant effect [1].
Safety outcomes such as mortality, serious adverse events, and intensive care unit admissions and/or readmissions did not differ between the intervention and control groups [1, 20, 21, 23, 29, 30]. In terms of efficiency, 1 study reported lower therapy costs with a rapid molecular test [24], and 2 studies reported fewer chest radiographs in influenza virus–positive patients [22, 28]. Two studies found no effect on the use of isolation facilities [1, 29]. In contrast, 1 unadjusted before-after study reported a significant reduction in mean droplet isolation days and in isolation days for suspected influenza (0.4 vs 2.7 days; P < .001) [32]. The same study found a nonsignificant increase in isolation days for confirmed influenza virus infection (1.1 vs 0.9 days; P = .16) [32].

Table 4.

Overview of Clinical Outcomes Presented in Included Clinical Impact Studies (n = 15)

| Outcome per study (author, year, country) | Study design | Sample size (n) | Effect, intervention vs control or odds ratio (OR) | P value | Conclusion |
|---|---|---|---|---|---|
| Antibiotic prescriptions | | | | | |
| Brendish, 2017 (UK) | RCT (1:1) | 714 | 84% vs 83% | .84 | No decrease in antibiotic prescriptions |
| Andrews, 2017 (UK) | RCT (quasi<sup>a</sup>) | 522<sup>b</sup> | 75% vs 77% | .99 | |
| Chu, 2015 (USA) | Before-after, multivariate<sup>c</sup> | 350 | 63% vs 76% | <.001 | |
| Rogers, 2014 (USA) | Before-after, univariate | 1136 | 72% vs 73% | .61 | |
| Rappo, 2016 (USA) | Before-after, univariate | 337<sup>d</sup> | 66% vs 61% | .35 | |
| Linehan, 2017 (Ireland) | Before-after, univariate | 67<sup>e</sup> | 33% vs 76% | <.001 | |
| Busson, 2017 (Belgium) | Cohort, no control group | 69 | Antibiotic prescriptions avoided in 36.2% of patients | - | |
| Keske, 2017 (Turkey) | Cohort, no control group | 359<sup>d</sup> | 45% of virus-positive patients received antibiotics | - | |
| Duration of antibiotic therapy | | | | | |
| Branche, 2015 (USA) | RCT (1:1) | 300 | Median 3 days [IQR 1–7] vs 4 [0–8] | .71 | No decrease in duration of antibiotic therapy |
| Brendish, 2017 (UK) | RCT (1:1) | 714 | Mean 7.2 days [SD 5.1] vs 7.7 [4.9] | .32 | |
| Andrews, 2017 (UK) | RCT (quasi<sup>a</sup>) | 522<sup>b</sup> | Median 6 days [IQR 4–7] vs 6 [5–7.3] | .23 | |
| Gilbert, 2016 (USA) | RCT (quasi<sup>f</sup>) | 127 | Mean 1053/1000 patient-days [SD 657] vs 472/1000 [1667] | .07 | |
| Gelfer, 2015 (USA) | RCT (quasi<sup>f</sup>) | 18<sup>d</sup> | Mean 683/1000 patient-days [SD 317] vs 917/1000 [220] | .052 | |
| Rogers, 2014 (USA) | Before-after, univariate | 1136 | Mean 2.8 days [SD 1.6] vs 3.2 [1.6] | .003 | |
| Rappo, 2016 (USA) | Before-after, univariate | 212<sup>e</sup> | Median 1 vs 2 days | .24 | |
| Keske, 2017 (Turkey) | Cohort, no control group | 160<sup>d</sup> | Mean 6.5 days [SD 3.7] in virus-positive patients | - | |
| Oseltamivir prescriptions | | | | | |
| Brendish, 2017 (UK) | RCT (1:1) | 714 | 18% vs 14% | .16 | More appropriate oseltamivir use in influenza-positive patients |
| | | 94<sup>e</sup> | 91% vs 65% | .003 | |
| Chu, 2015 (USA) | Before-after, univariate | 350 | 55% vs 45% | .05 | |
| | | 40<sup>e</sup> | 100% vs 100% | 1.00 | |
| | | 136<sup>g</sup> | 45% vs 43% | .60 | |
| Rappo, 2016 (USA) | Before-after, univariate | 212<sup>e</sup> | 61% vs 61% | .96 | |
| Linehan, 2017 (Ireland) | Before-after, univariate | 68<sup>e</sup> | 95% vs 72% | <.01 | |
| Xu, 2013 (USA) | Cohort, no control group | 97<sup>e</sup> | 81% of influenza-positive patients received oseltamivir | - | |
| Length of hospital stay | | | | | |
| Branche, 2015 (USA) | RCT (1:1) | 300 | Median 4 vs 4 days | NS | Reduction in length of hospital stay |
| Brendish, 2017 (UK) | RCT (1:1) | 714 | Mean 5.7 days [SD 6.3] vs 6.8 [7.7]<sup>h</sup> | .044 | |
| Andrews, 2017 (UK) | RCT (quasi<sup>a</sup>) | 545 | Median 4.1 days [IQR 2.0–9.1] vs 3.3 [1.7–7.9] | .28 | |
| Rappo, 2016 (USA) | Before-after, multivariate<sup>i</sup> | 212<sup>e</sup> | Median 1.6 days [IQR 0.3–4.8] vs 2.1 [0.4–5.6] | .040 | |
| Rogers, 2014 (USA) | Before-after, univariate | 1136 | Mean 3.2 days [SD 1.6] vs 3.4 [1.7] | .16 | |
| Chu, 2015 (USA) | Before-after, univariate | 350 | Median 4 days [range 1–164] vs 5 [0–117] | .33 | |
| Timbrook, 2015 (USA) | Cohort, no control group | 601<sup>d</sup> | Median 1 day [IQR 0–3] in virus-positive patients | - | |
| Hospital admissions | | | | | |
| Brendish, 2017 (UK) | RCT (1:1) | 714 | 92% vs 92% | .94 | No reduction in hospital admissions |
| Rappo, 2016 (USA) | Before-after, univariate | 337<sup>d</sup> | 76% vs 74% | .60 | |
| Linehan, 2017 (Ireland) | Before-after, univariate | 69<sup>e</sup> | 45% vs 88% | <.001 | |
| Busson, 2017 (Belgium) | Cohort, no control group | 69 | 5.8% of hospitalizations avoided | - | |
| Safety | | | | | |
| Branche, 2015 (USA) | RCT (1:1) | 300 | No difference in in-hospital deaths, SAEs, new pneumonia cases, or 90-day post-hospitalization visits | NS | Safety is not affected |
| Brendish, 2017 (UK) | RCT (1:1) | 714 | 30-day readmission 13% vs 16% | .28 | |
| | | | 30-day mortality 3% vs 5% | .15 | |
| | | | ICU admission 3% vs 2% | .36 | |
| Andrews, 2017 (UK) | RCT (quasi<sup>a</sup>) | 545 | 30-day readmission 19% vs 20% | .70 | |
| | | | 30-day mortality 4% vs 4% | .79 | |
| Rogers, 2014 (USA) | Before-after, univariate | 1136 | Mortality 0% vs 0% | 1.00 | |
| | | | ICU admission 0% vs 0% | 1.00 | |
| Chu, 2015 (USA) | Before-after, univariate | 350 | Mortality 2% vs 4% | .68 | |
| | | | ICU admission 31% vs 25% | .19 | |
| Timbrook, 2015 (USA) | Cohort, no control group | 601<sup>d</sup> | ICU admission in 8.8% of virus-positive patients | - | |
| (1) Costs; (2a) no. of / (2b) any additional chest radiographs; (3a) use of / (3b) time in isolation facilities | | | | | |
| Gilbert, 2016 (USA) | RCT (quasi<sup>f</sup>) | 127 | (1) $8308/1000 patient-days [SD 10165] vs $11890/1000 [11712] | .02 | Potential reduction in costs and additional X-rays |
| Rappo, 2016 (USA) | Before-after, multivariate<sup>i</sup> | 188<sup>e</sup> | (2a) Median 1 [IQR 1–1] vs 1 [1–2] | .005 | |
| Busson, 2017 (Belgium) | Cohort, no control group | 28<sup>e</sup> | (2b) 25% reduction of X-rays in influenza-positive patients | - | |
| Brendish, 2017 (UK) | RCT (1:1) | 385<sup>j</sup> | (3a) 33% vs 25% | .12 | |
| | | 50<sup>e</sup> | 74% vs 57% | .24 | |
| Rogers, 2014 (USA) | Before-after, univariate | 1136 | (3b) 2.9 days [SD 1.6] vs 3.0 [1.7] | .27 | |
| Muller, 2016 (Canada) | Before-after, univariate | 125 | (3b) Droplet isolation: 3.5 days vs 6.0 | <.001 | |
| Turnaround time | | | | | |
| Brendish, 2017 (UK) | RCT (1:1) | 714 | Mean 2.3 hours [SD 1.4] vs 37.1 [21.5] | <.001 | Significantly faster |
| Andrews, 2017 (UK) | RCT (quasi<sup>a</sup>) | 545 | Median 19 hours [IQR 8.1–31.7] vs 39.5 [25.4–57.6]<sup>k</sup> | <.001 | |
| Gilbert, 2016 (USA) | RCT (quasi<sup>f</sup>) | 127 | Mean 2.1 hours [SD 0.7] vs 26.5 [15] | <.001 | |
| Gelfer, 2015 (USA) | RCT (quasi<sup>f</sup>) | 59 | Mean 1.8 hours [SD 0.3] vs 26.7 [16] | <.001 | |
| Chu, 2015 (USA) | Before-after, multivariate<sup>c</sup> | 350 | Median 1.7 hours [range 0.8–11.4] vs 25.2 [2.7–55.9] | <.001 | |
| Rogers, 2014 (USA) | Before-after, univariate | 1136 | Mean 6.4 hours [SD 4.9] vs 18.7 [8.2]<sup>l</sup> | <.001 | |
| Pettit, 2015 (USA) | Before-after, univariate | 1102 | Mean 3.1 hours vs 46.4 | <.001 | |
| Rappo, 2016 (USA) | Before-after, univariate | 212<sup>e</sup> | Median 1.7 hours [IQR 1.6–2.2] vs 7.7 [0.8–14] | .015 | |
| Muller, 2016 (Canada) | Before-after, univariate | 125 | Mean 3.6 hours vs 35.0 | - | |
| Xu, 2013 (USA) | Cohort, no control group | 2537 | Median 1.4 hours | - | |

Abbreviations: ED, emergency department; ICU, intensive care unit; IQR, interquartile range; NS, not significant; PCR, polymerase chain reaction; RCT, randomized controlled trial; SAE, serious adverse event; SD, standard deviation.

ᵃ Quasi-randomized: rapid viral molecular testing on even days of the month and reference laboratory PCR testing on odd days.

ᵇ Analysis of antibiotic prescription was performed in 522/545 patients because data on antibiotic prescriptions were missing for 13 patients in the control arm and 10 in the intervention arm.

ᶜ Multivariate analysis adjusted for the confounders age, location of sample collection, receipt of influenza vaccine, immunosuppressed status, and pregnancy.

ᵈ Subgroup analysis in virus-positive patients. In the study by Gelfer (2015), only virus-positive patients who received antimicrobials were included. In the study by Keske (2017), the virus-positive subgroup included only inpatients, and the analysis of duration of antibiotic therapy included only patients with inappropriate antibiotic use.

ᵉ Subgroup analysis in influenza-positive patients. In the study by Busson (2017), only influenza-positive patients who were tested with the rapid molecular test during working hours and who were still in the ED when the result became available were included. In the study by Rappo (2016), only influenza-positive patients who received a chest radiograph were included in the multivariate analysis of the number of chest radiographs.

ᶠ Quasi-randomized: rapid viral molecular testing and reference laboratory PCR testing were used in alternating weeks.

ᵍ Subgroup analysis in influenza-negative patients.

ʰ Adjusted for in-hospital mortality.

ⁱ Multivariate analysis adjusted for the confounders age, immunosuppressed status, asthma, and ICU admission.

ʲ Data on isolation facility use were available only for patients included during the second season of inclusion.

ᵏ In the study by Andrews (2017), patients were admitted to an Acute Medical Unit or Medical Assessment Centre before inclusion in the study. Turnaround time was calculated from admission to result and therefore also covers the time from admission until the swab was actually taken (during which eligibility for inclusion was assessed and informed consent was obtained).

ˡ In the study by Rogers (2014), patients were included both in the emergency department and after admission, leading to a longer time to result.

DISCUSSION

In our meta-analysis of DTA studies, rapid molecular tests for respiratory viruses were accurate, with a pooled sensitivity of 90.9% (95% CI, 88.7%–93.1%) and a pooled specificity of 96.1% (95% CI, 94.2%–97.9%). In our subgroup analysis, the Cobas Liat Influenza A/B system performed best for the detection of influenza virus, with a sensitivity of 98.1%, and the Simplexa Flu A/B & RSV kit performed best for the detection of influenza virus and RSV, with a sensitivity of 99.0%. The FilmArray, which simultaneously tests for a panel of 15 viruses, had a sensitivity of 89.2%. Overall, molecular tests had better sensitivity in children than in adults, presumably due to higher viral loads in children [35]. Studies on the clinical impact of rapid molecular testing varied widely in design and quality. Nevertheless, they consistently reported shorter turnaround times. In addition, the majority of the (high-quality) studies observed a reduced length of hospital stay, increased appropriate use of oseltamivir in influenza virus–positive patients, and a potential reduction in costs and additional radiographs compared with conventional molecular methods. No effect was seen on antibiotic prescriptions, duration of antibiotic therapy, use of in-hospital isolation measures, or the number of hospital admissions.
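The pooled estimates above are built from per-study 2×2 tables of the rapid test against the reference molecular test. As a minimal sketch of that per-study step only (not the pooling model used in this review), sensitivity and specificity with Wilson score 95% intervals can be computed as below. The class `TwoByTwo`, the helper functions, and the counts are hypothetical illustrations, not data from any included study.

```python
import math
from dataclasses import dataclass


@dataclass
class TwoByTwo:
    """Hypothetical counts of a rapid test against the reference molecular test."""
    tp: int  # rapid positive, reference positive
    fp: int  # rapid positive, reference negative
    fn: int  # rapid negative, reference positive
    tn: int  # rapid negative, reference negative


def wilson_interval(successes: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion (z = 1.96 gives ~95%)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p = successes / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return centre - half, centre + half


def accuracy(t: TwoByTwo) -> dict[str, tuple[float, float, float]]:
    """Return (estimate, lower, upper) for sensitivity and specificity."""
    sens = t.tp / (t.tp + t.fn)  # share of reference-positive samples detected
    spec = t.tn / (t.tn + t.fp)  # share of reference-negative samples called negative
    return {
        "sensitivity": (sens, *wilson_interval(t.tp, t.tp + t.fn)),
        "specificity": (spec, *wilson_interval(t.tn, t.tn + t.fp)),
    }


# Hypothetical study: 100 reference-positive and 200 reference-negative samples.
study = TwoByTwo(tp=91, fp=8, fn=9, tn=192)
for name, (est, lo, hi) in accuracy(study).items():
    print(f"{name}: {est:.1%} (95% CI {lo:.1%} to {hi:.1%})")
```

A meta-analysis would then combine these per-study pairs across studies, typically with a model that accounts for the correlation between sensitivity and specificity; that step is not reproduced here.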

This is the first systematic review to compare and pool the diagnostic accuracy of multiple rapid molecular assays and to analyze clinical outcomes. Other systematic reviews on this topic have either included nonrapid molecular assays [36, 37], focused only on 1 or 2 particular assays [14, 15, 38], or also included nonmolecular rapid tests, which have lower sensitivity than molecular assays [11, 12, 39, 40]. Studies have shown superior accuracy of molecular assays compared with rapid antigen tests [11], and pooling the results of assays that use different underlying techniques gives pessimistic estimates of the diagnostic accuracy of molecular tests [41]. Potential practical concerns about molecular tests compared with antigen tests, such as higher costs, longer turnaround times, and more complicated procedures, have largely been overcome by recent technological innovations [4]. Because molecular tests are replacing antigen-based rapid assays, future comparisons should use molecular assays as the gold standard. In this review we included both pathogen-specific singleplex assays and multiplex assays detecting a range of respiratory viruses, whereas most reviews and studies focus on assays that detect only 1 or 2 pathogens, mainly influenza virus [11, 38, 40] and sometimes RSV [12]. Viral pathogens other than influenza virus and RSV also carry a high burden of disease [42], and their detection may have clinical consequences, such as antiviral treatment [43] and the application of isolation measures in a hospital setting. Depending on the clinical setting and patient population, assays that detect a panel of viruses may therefore be of particular interest when rapid tests are to replace conventional molecular tests.

When deciding which rapid test to implement, the overall diagnostic accuracy of a multiplex test may then be more important than its accuracy for individual pathogens, whereas current diagnostic accuracy reviews often report sensitivity and specificity per virus rather than per assay [11, 12, 39]. However, judging discrepant viral results in the same way for multiplex and singleplex assays will result in poorer diagnostic accuracy, mainly specificity, for multiplex assays. Therefore, when comparing available rapid molecular assays (for example, Simplexa Flu A/B & RSV and FilmArray), it should be kept in mind that differences in diagnostic accuracy between these assays can result from testing a different number of viral pathogens, while the diagnostic accuracy per individual viral pathogen may be similar.
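The penalty for multiplex assays can be made concrete with a deliberately simple, hypothetical model. Assume every target has the same per-target specificity and that false-positive calls occur independently across targets; both are illustrative assumptions, not findings of this review. A reference-negative sample is then called negative at the assay level only if all k targets are negative, so assay-level specificity is the per-target specificity raised to the power k. The function name `assay_level_specificity` and the 99.5% value are hypothetical.

```python
def assay_level_specificity(per_target_specificity: float, n_targets: int) -> float:
    """Probability that none of n_targets reference-negative targets is called positive.

    Illustrative assumption: equal per-target specificity and independent errors.
    """
    if not 0.0 <= per_target_specificity <= 1.0:
        raise ValueError("specificity must be in [0, 1]")
    if n_targets < 1:
        raise ValueError("n_targets must be >= 1")
    return per_target_specificity ** n_targets


# Same hypothetical per-target specificity (99.5%) for different panel sizes:
# 1 target (singleplex), 3 targets (eg, influenza A, influenza B, RSV), 15 targets (panel).
for k in (1, 2, 3, 15):
    print(f"{k:>2} targets: assay-level specificity {assay_level_specificity(0.995, k):.1%}")
```

Under these assumptions, a 15-target panel with 99.5% per-target specificity would have an assay-level specificity of roughly 93%, compared with 99.5% for a single-target assay, even though each target performs identically.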

Previous studies assessing the effect of testing with conventional multiplex assays providing results within 24–48 hours showed no effect on antibiotic treatment and hospital length of stay [6, 44]. However, more rapid testing for respiratory viruses might have a greater impact on clinical outcomes, because results are available before the treating physician has established any initial treatment or management. To our knowledge, this is the first review to specifically assess the clinical impact of rapid molecular tests, rather than rapid antigen tests, without restricting it to the detection of influenza virus and RSV [45, 46]. The included studies, even the high-quality randomized studies [1, 20, 21, 24, 25], show heterogeneous results. The location of the rapid test, which was at the point of care in only 3 studies, may affect turnaround times and thereby clinical outcomes. Apart from other differences in design, analysis, and power, differences in implementation strategy might partially explain these discrepancies. First, education and training of personnel and physicians on the implemented rapid test, its diagnostic accuracy, and its potential effects on clinical outcomes may influence its clinical impact [33]. Second, a combination of a rapid test and a result-based guideline on subsequent clinical management options might have more impact than a stand-alone diagnostic test, even though the 2 studies describing the implementation of such a diagnostic bundle did not show any significant effects, which might be partially explained by limited adherence to the guidelines [20, 21]. A complicating factor is that identification of a viral pathogen in a respiratory tract sample does not necessarily establish causation [2]. Third, combining a rapid test with another diagnostic test, such as procalcitonin [21, 30] or other biomarker-based assays [47], may make the rapid viral test result more persuasive in distinguishing a bacterial from a viral causative pathogen. However, current evidence for the effect of combining respiratory viral testing and procalcitonin on clinical outcomes is disappointing [21].

Strengths of our systematic review and meta-analysis of DTA studies are that we followed a standardized protocol for study inclusion, quality assessment, data extraction, and statistical analysis. To be as complete as possible, we did not exclude studies with a less optimal design (eg, case-control studies). We evaluated heterogeneity using subgroup analyses. Furthermore, we assessed the clinical impact of rapid molecular testing for respiratory viruses. Because quantitative pooling of clinical impact results was not feasible due to heterogeneity in study design and quality, we drew overall conclusions for clinical endpoints assessed by at least 2 studies, based on the majority vote of the studies with the highest quality and power. An overview of available clinical impact studies may also have important implications for the design of future clinical impact studies. Our review also has some limitations. First, because of poor reporting in DTA studies, information needed for our subgroup analyses was missing. Second, there was substantial residual heterogeneity between DTA studies that could not be explained by our subgroup analyses. This residual heterogeneity, and thereby differences in diagnostic accuracy, might have been caused by, for example, differences in sample types [48] or in the duration of clinical symptoms and associated viral loads of included patients, factors that were poorly reported. Furthermore, in an assay-level comparison of diagnostic accuracy, multiplex assays are disadvantaged: the more viruses an assay tests for, the greater the chance of a discrepant result with the reference test. Therefore, as mentioned before, when interpreting the results of a head-to-head comparison of the accuracy of different assays, the number of tested pathogens should also be taken into account and results should be interpreted carefully.

In conclusion, rapid molecular tests for viral pathogen detection provide accurate results. Even though results on the clinical impact of rapid diagnostic tests are conflicting, there is high-quality evidence that rapid testing might decrease the length of hospital stay and increase appropriate use of oseltamivir in influenza virus–positive patients, without leading to adverse outcomes. We therefore suggest considering implementation of rapid molecular tests in hospital settings and recommend that further high-quality randomized studies be performed.

Supplementary Data

Supplementary materials are available at Clinical Infectious Diseases online. Consisting of data provided by the authors to benefit the reader, the posted materials are not copyedited and are the sole responsibility of the authors, so questions or comments should be addressed to the corresponding author.

Notes

Author contributions. L. M. V. contributed to the design, search, screening, data extraction, data analysis, data interpretation, production of the figures, and writing of the report. A. H. L. B. contributed to the search, screening, data interpretation, and writing of the report. J. B. R., R. S., A. R.-B., and A. I. M. H. contributed to data interpretation and writing of the report. J. J. O. contributed to design, data interpretation, and writing of the report.

Potential conflicts of interest. All authors: No reported conflicts. All authors have submitted the ICMJE Form for Disclosure of Potential Conflicts of Interest. Conflicts that the editors consider relevant to the content of the manuscript have been disclosed.

References

- 1. Brendish NJ, Malachira AK, Armstrong L, et al. Routine molecular point-of-care testing for respiratory viruses in adults presenting to hospital with acute respiratory illness (ResPOC): a pragmatic, open-label, randomised controlled trial. Lancet Respir Med 2017; 5:401–11.

- 2. Zumla A, Al-Tawfiq JA, Enne VI, et al. Rapid point of care diagnostic tests for viral and bacterial respiratory tract infections—needs, advances, and future prospects. Lancet Infect Dis 2014; 14:1123–35.

- 3. Clark TW, Medina MJ, Batham S, Curran MD, Parmar S, Nicholson KG. Adults hospitalised with acute respiratory illness rarely have detectable bacteria in the absence of COPD or pneumonia; viral infection predominates in a large prospective UK sample. J Infect 2014; 69:507–15.

- 4. Brendish NJ, Schiff HF, Clark TW. Point-of-care testing for respiratory viruses in adults: the current landscape and future potential. J Infect 2015; 71:501–10.

- 5. Jain S, Williams DJ, Arnold SR, et al.; CDC EPIC Study Team. Community-acquired pneumonia requiring hospitalization among U.S. children. N Engl J Med 2015; 372:835–45.

- 6. Oosterheert JJ, van Loon AM, Schuurman R, et al. Impact of rapid detection of viral and atypical bacterial pathogens by real-time polymerase chain reaction for patients with lower respiratory tract infection. Clin Infect Dis 2005; 41:1438–44.

- 7. Gonzales R, Malone DC, Maselli JH, Sande MA. Excessive antibiotic use for acute respiratory infections in the United States. Clin Infect Dis 2001; 33:757–62.

- 8. Smith SM, Fahey T, Smucny J, Becker L. Antibiotics for acute bronchitis. Cochrane Database Syst Rev 2014; 3:CD000245.

- 9. McCullers JA. The co-pathogenesis of influenza viruses with bacteria in the lung. Nat Rev Microbiol 2014; 12:252–62.

- 10. Hawkey PM. The growing burden of antimicrobial resistance. J Antimicrob Chemother 2008; 62:1–9.

- 11. Merckx J, Wali R, Schiller I, et al. Diagnostic accuracy of novel and traditional rapid tests for influenza infection compared with reverse transcriptase polymerase chain reaction. Ann Intern Med 2017; 167:395–409.

- 12. Bruning A, Leeflang M, Vos J, et al. Rapid tests for influenza, respiratory syncytial virus, and other respiratory viruses: a systematic review and meta-analysis. Clin Infect Dis 2017; 65:1026–32.

- 13. Chartrand C, Tremblay N, Renaud C, Papenburg J. Diagnostic accuracy of rapid antigen detection tests for respiratory syncytial virus infection: systematic review and meta-analysis. J Clin Microbiol 2015; 53:3738–49.

- 14. Babady NE. The FilmArray respiratory panel: an automated, broadly multiplexed molecular test for the rapid and accurate detection of respiratory pathogens. Expert Rev Mol Diagn 2013; 13:779–88.

- 15. Salez N, Nougairede A, Ninove L, Zandotti C, de Lamballerie X, Charrel RN. Xpert Flu for point-of-care diagnosis of human influenza in industrialized countries. Expert Rev Mol Diagn 2014; 14:411–8.

- 16. Deeks JJ, Bossuyt PM, Gatsonis C, et al. Cochrane handbook for systematic reviews of diagnostic test accuracy version 1.0. 2010. Available at: http://srdta.cochrane.org/. Accessed 9 February 2017.

- 17. Whiting PF, Rutjes AWS, Westwood ME, et al. QUADAS-2: a revised tool for the quality assessment of diagnostic accuracy studies. Ann Intern Med 2011; 155:529–36.

- 18. Higgins JPT, Altman DG, Gøtzsche PC, et al. The Cochrane Collaboration’s tool for assessing risk of bias in randomised trials. BMJ 2011; 343:1–9.

- 19. Sterne JA, Hernán MA, Reeves BC, et al. ROBINS-I: A tool for assessing risk of bias in non-randomised studies of interventions. BMJ 2016; 355:4–10.

- 20. Andrews D, Chetty Y, Cooper BS, et al. Multiplex PCR point of care testing versus routine, laboratory-based testing in the treatment of adults with respiratory tract infections: a quasi-randomised study assessing impact on length of stay and antimicrobial use. BMC Infect Dis 2017; 17:1–11.

- 21. Branche AR, Walsh EE, Vargas R, et al. Serum procalcitonin measurement and viral testing to guide antibiotic use for respiratory infections in hospitalized adults: a randomized controlled trial. J Infect Dis 2015; 212:1692–700.

- 22. Busson L, Mahadeb B, De Foor M, Vandenberg O, Hallin M. Contribution of a rapid influenza diagnostic test to manage hospitalized patients with suspected influenza. Diagn Microbiol Infect Dis 2017; 87:238–42.

- 23. Chu HY, Englund JA, Huang D, et al. Impact of rapid influenza PCR testing on hospitalization and antiviral use: a retrospective cohort study. J Med Virol 2015; 87:2021–6.

- 24. Gilbert D, Gelfer G, Wang L, et al. The potential of molecular diagnostics and serum procalcitonin levels to change the antibiotic management of community-acquired pneumonia. Diagn Microbiol Infect Dis 2016; 86:102–7.

- 25. Gelfer G, Leggett J, Myers J, Wang L, Gilbert DN. The clinical impact of the detection of potential etiologic pathogens of community-acquired pneumonia. Diagn Microbiol Infect Dis 2015; 83:400–6.

- 26. Linehan E, Brennan M, O’Rourke S, et al. Impact of introduction of Xpert flu assay for influenza PCR testing on obstetric patients: a quality improvement project. J Matern Fetal Neonatal Med 2018; 31:1016–20.

- 27. Pettit NN, Matushek S, Charnot-Katsikas A, et al. Comparison of turnaround time and time to oseltamivir discontinuation between two respiratory viral panel testing methodologies. J Med Microbiol 2015; 64:312–3.

- 28. Rappo U, Schuetz AN, Jenkins SG, et al. Impact of early detection of respiratory viruses by multiplex PCR assay on clinical outcomes in adult patients. J Clin Microbiol 2016; 54:2096–103.

- 29. Rogers BB, Shankar P, Jerris RC, et al. Impact of a rapid respiratory panel test on patient outcomes. Arch Pathol Lab Med 2015; 139:636–41.

- 30. Timbrook T, Maxam M, Bosso J. Antibiotic discontinuation rates associated with positive respiratory viral panel and low procalcitonin results in proven or suspected respiratory infections. Infect Dis Ther 2015; 4:297–306.

- 31. Xu M, Qin X, Astion ML, et al. Implementation of FilmArray respiratory viral panel in a core laboratory improves testing turnaround time and patient care. Am J Clin Pathol 2013; 139:118–23.

- 32. Muller MP, Junaid S, Matukas LM. Reduction in total patient isolation days with a change in influenza testing methodology. Am J Infect Control 2016; 44:1346–9.

- 33. Keske Ş, Ergönül Ö, Tutucu F, Karaaslan D, Palaoğlu E, Can F. The rapid diagnosis of viral respiratory tract infections and its impact on antimicrobial stewardship programs. Eur J Clin Microbiol Infect Dis 2018; 37:779–83.

- 34. Ducharme FM, Zemek R, Chauhan B, et al. Determinants of oral corticosteroid responsiveness in wheezing asthmatic youth (doorway): a multicentre prospective cohort study of children with acute moderate or severe asthma exacerbations. Am J Respir Crit Care Med 2015; 191:S314–5.

- 35. Granados A, Peci A, McGeer A, Gubbay JB. Influenza and rhinovirus viral load and disease severity in upper respiratory tract infections. J Clin Virol 2017; 86:14–9.

- 36. Cohen-Bacrie S, Halfon P. Prospects for molecular point-of-care diagnosis of lower respiratory infections at the hospital’s doorstep. Future Virol 2013; 8:43–56.

- 37. Doan Q, Enarson P, Kissoon N, Klassen TP, Johnson DW. Rapid viral diagnosis for acute febrile respiratory illness in children in the emergency department. Cochrane Database Syst Rev 2009; 4:CD006452.

- 38. Huang HS, Tsai CL, Chang J, Hsu TC, Lin S, Lee CC. Multiplex PCR system for the rapid diagnosis of respiratory virus infection: systematic review and meta-analysis. Clin Microbiol Infect 2018; 24:1055–63.

- 39. Chartrand C, Leeflang MM, Minion J, Brewer T, Pai M. Accuracy of rapid influenza diagnostic tests: a meta-analysis. Ann Intern Med 2012; 156:500–11.

- 40. Moore C. Point-of-care tests for infection control: should rapid testing be in the laboratory or at the front line? J Hosp Infect 2013; 85:1–7.

- 41. Vos LM, Riezebos-Brilman A, Hoepelman AIM, Oosterheert JJ. Rapid tests for common respiratory viruses. Clin Infect Dis 2017; 65:1958–9.

- 42. Gaunt ER, Harvala H, McIntyre C, Templeton KE, Simmonds P. Disease burden of the most commonly detected respiratory viruses in hospitalized patients calculated using the disability adjusted life year (DALY) model. J Clin Virol 2011; 52:215–21.

- 43. McKimm-Breschkin JL, Jiang S, Hui DS, Beigel JH, Govorkova EA, Lee N. Prevention and treatment of respiratory viral infections: presentations on antivirals, traditional therapies and host-directed interventions at the 5th ISIRV Antiviral Group conference. Antiviral Res 2018; 149:118–42.

- 44. Vallières E, Renaud C. Clinical and economical impact of multiplex respiratory virus assays. Diagn Microbiol Infect Dis 2013; 76:255–61.

- 45. Ko F, Drews SJ. The impact of commercial rapid respiratory virus diagnostic tests on patient outcomes and health system utilization. Expert Rev Mol Diagn 2017; 17:917–31.

- 46. Egilmezer E, Walker GJ, Bakthavathsalam P, et al. Systematic review of the impact of point-of-care testing for influenza on the outcomes of patients with acute respiratory tract infection. Rev Med Virol 2018; 28:1995.

- 47. van Houten CB, de Groot JAH, Klein A, et al. A host-protein based assay to differentiate between bacterial and viral infections in preschool children (OPPORTUNITY): a double-blind, multicentre, validation study. Lancet Infect Dis 2017; 17:431–40.

- 48. Kim C, Ahmed JA, Eidex RB, et al. Comparison of nasopharyngeal and oropharyngeal swabs for the diagnosis of eight respiratory viruses by real-time reverse transcription-PCR assays. PLoS One 2011; 6:2–7.
