Abstract

HIV-1C is the most prevalent subtype of HIV-1 and accounts for over half of HIV-1 infections worldwide. Host genetic influence of HIV infection has been previously studied in HIV-1B, but little attention has been paid to the more prevalent subtype C. To understand the role of host genetics in HIV-1C disease progression, we perform a study to assess the association between longitudinally collected measures of disease and more than 100,000 genetic markers located on chromosome 6. The most common approach to analyzing longitudinal data in this context is linear mixed effects models, which may be overly simplistic in this case. On the other hand, existing flexible and nonparametric methods either require densely sampled points, restrict attention to a single SNP, lack testing procedures, or are cumbersome to fit on the genome-wide scale. We propose a functional principal variance component (FPVC) testing framework which captures the nonlinearity in the CD4 and viral load with low degrees of freedom and is fast enough to carry out thousands or millions of times. The FPVC testing unfolds in two stages. In the first stage, we summarize the markers of disease progression according to their major patterns of variation via functional principal components analysis (FPCA). In the second stage, we employ a simple working model and variance component testing to examine the association between the summaries of disease progression and a set of single nucleotide polymorphisms. We supplement this analysis with simulation results which indicate that FPVC testing can offer large power gains over the standard linear mixed effects model.

Key words and phrases. Genomic association studies, HIV disease progression, functional principal component analysis, longitudinal data, mixed effects models, variance component testing

1. Introduction.

An important goal of large-scale genomic association studies is to explore susceptibility to complex diseases. These studies have led to identification of many genomic regions as putatively harboring disease susceptibility alleles for a wide range of disorders. For patients with a particular disease, association studies have also been performed to identify genetic variants associated with progression of disease. The disease progression is often monitored by longitudinally measured biological markers. Such longitudinal measures allow researchers to more clearly characterize clinical outcomes that cannot necessarily be captured in one or even a few measurements.

We are motivated by a large-scale association study of HIV-1 Subtype-C (HIV-1C) progression in sub-Saharan African individuals. HIV-1C is the most prevalent subtype of HIV-1 and accounts for over half of HIV-1 infections worldwide [Geretti (2006)]. Sub-Saharan Africa, where HIV-1C dominates, was home to an estimated 69% of people living with HIV in 2012 [UNAIDS (2012)]. While several human leukoucyte antigen (HLA) alleles [e.g., Fellay et al. (2007), van Manen et al. (2009), Migueles et al. (2000)] and other loci have been identified to be associated with AIDS progression in European males infected by HIV-1B [Fellay et al. (2007), O’Brien and Hendrickson (2013)], comparatively little research has focused on host genetic influence in this African population and subtype.

In this study, we seek to relate the longitudinal progression of these two markers—log10 CD4 (lCD4) count and log10 viral load (lVL)—to a set of approximately 100,000 single nucleotide polymorphisms (SNPs) located on chromosome 6 in two independent cohorts of treatment-naive individuals in Botswana. We focus on chromosome 6 because it houses the HLA region of genes, which are known to impact immune function. Throughout this paper, we will use y = (y1, …, yr)⊤ to denote the longitudinal outcome (in the context of this study, either lCD4 or lVL), measured at times t = (t1, …, tr)⊤. We will furthermore let a set of genetic markers of interest be denoted z and any potential covariates be x.

The most common approach to analyzing longitudinal data of this kind is to use linear mixed effects (LME) models [Laird and Ware (1982)], which relate y linearly to z, x, and t with both fixed and random effects. However, in the case of HIV progression measured by lCD4 and lVL (and in many other practical situations), such a linear relationship is likely to be overly simplistic. To incorporate nonlinear trajectories, many generalizations of LMEs have been proposed. Typically, methods assume that y is a noisy realization of a smooth underlying function Y(·). These methods include nonlinear or nonparametric LMEs using a fixed spline basis expansion [Guo (2002), Lindstrom and Bates (1990), Rice and Wu (2001)] for t and adjusting for z. Functional regression methods have been proposed for densely sampled trajectories [Chiou, Müller and Wang (2003)] for a single z as well as for irregularly spaced longitudinal data where z is also allowed to change over time [Yao, Müller and Wang (2005b)]. In these methods, estimation proceeds via kernel smoothing for both the population mean function and the covariance process of Y(·). Krafty et al. (2008) proposed an iterative procedure for fitting functional regression models which accounts for within-subject covariance but does not estimate random effects. Similarly, Reiss, Huang and Mennes (2010) proposed a ridge-based estimator for the case when y is measured on a common, fine grid of points for all subjects, but requires z to be univariate and also ignores random effects. In a similarly dense setting Morris and Carroll (2006) proposed wavelet-based mixed effects models, and inference procedures for the random effects were developed in Antoniadis and Sapatinas (2007).

While some of these methods can be adapted to test for association, none of them are suitable for our study for the following reasons. First, some require restrictive assumptions about the density of measurements [e.g., Morris and Carroll (2006)] which are clearly not met here. Further, all of these methods were developed with estimation and regression in mind. While many of them could in principle be used to derive testing procedures, the validity of their inference procedures often relies on the model assumptions concerning the distribution of y given the SNPs and the covariates. Under model mis-specification, the resulting test may fail to maintain type I error. Additionally, these procedures would require fitting a complex iterative or smoothing-based model thousands or millions of times for genome-wide studies and hence become computationally infeasible. We propose a novel testing procedure which is valid regardless of the true distribution of y, is fast to fit and has a simple limiting distribution, despite the fact that we account for nonlinearity in y in a manner akin to previous functional regression methods. To do this, we first capture the nonlinearity in y using functional principal components analysis (FPCA) [Castro, Lawton and Sylvestre (1986), Hall, Müller and Wang (2006), Krafty et al. (2008), Rice and Silverman (1991), Yao, Müller and Wang (2005a)]. Using an eigenfunction decomposition of the smoothed covariance function of Y(·), we approximate each patient’s Y(·) by a weighted average of the estimated eigenfunctions, with weights corresponding to functional principal component loadings or scores. Then borrowing a variance component test framework and using these scores as pseudo-outcomes, we construct a Functional Principal Variance Component (FPVC) test that can capture the nonlinear trajectories without requiring a normality assumption or fitting individual functional regression models. The test statistics can be approximated by a mixture of chi-squares, and the small number of eigenfunctions needed to approximate the trajectories can result in a test statistic with low degrees of freedom.

Since the data of interest are sampled at irregular time intervals, we use the best linear unbiased predictor (BLUP) to estimate the scores. The BLUP was also the basis for FPCA with sparse longitudinal data in the principal analysis via conditional expectation (PACE) method [Yao, Müller and Wang (2005a)] under a normality assumption. Here, we use BLUP to motivate our testing procedure but do not require normality for the validity of the FPVC test. The test statistic can be derived through the variance component testing framework and viewed as a summary measure of the overall covariance between the estimated subject-specific scores, which characterize the person’s trajectory, and the genetic markers. Similar variance component tests have previously been proposed for standard linear and logistic regressions with observed single outcomes [Wu et al. (2011)].

The primary virtues of FPVC testing are threefold. First, we separate the procedure into two stages of distinct complexity to make it feasible at large scale. In the first stage, we model y flexibly using FPCA and obtain a succinct summary of disease progression for each patient, once and for all. In the second stage, we perform a rather simple model at large scale. Thus, we segregate the computationally complex stage (which need occur only once) from the large-scale stage (which could require the same computation on the order of millions of times in, e.g., genome-wide association studies). Second, the summary of y that we obtain from FPCA is the most succinct summary possible, as the eigenfunctions identified by FPCA are the functions that explain the most variability in y. Third, our theoretical results suggest that the null distribution of the FPVC test statistic reduces to a simple mixture of χ2 distributions. The variability due to estimating the eigenfunctions does not contribute to the null distribution of the test statistic asymptotically at the first order [see (11) and the derivation of the asymptotic null distribution].

In Section 2, we describe FPCA and introduce FPVC testing and our main theoretical results. In Section 3, we give details about the association study for HIV progression. In Section 4, we discuss simulation results, and in Section 5 we discuss further implications of our procedure.

2. Functional principal variance component testing.

2.1. The test statistic.

In this section, we propose a testing procedure for assessing the association between a set of genetic markers z and a longitudinally measured outcome y, adjusting for covariates x. Let the data for analysis consist of n independent random vectors , where is a vector of outcome measurements taken at times , is a closed and bounded interval, zi = (zi1, …, zip)⊤ is a vector of genetic markers of interest, and xi = (1, xi1, …, xiq)⊤ is a vector of additional covariates that are potentially related to the outcome, all measured on person i. For each i, we take to be distributed as (z⊤, x⊤)⊤.

Our goal is to test the null hypothesis

| (1) |

where is a set of genetic factors to test, identified by the index set . Special cases include marginal testing, as in traditional genome-wide association studies, where for some j ∈ {1, …, p}, or set-based testing where are the indices of the SNPs in a gene or some other related set.

To model the longitudinal trajectory, we assume that yir is a noisy sample of a smooth underlying function Yi(·), evaluated at the point tir,

which following the logic of Yao, Müller and Wang (2005a) can be written as a linear combination of its population mean E{Y(·)} and a set of eigenfunctions {ϕk(·)}

| (2) |

where ξik is the FPCA score associated with the kth eigenfunction, E(ξik) = 0, Var(ξik) = λk, and the eigenfunctions are ordered such that the kth explains the kth most variance in Y(·). Thus, the relationship between yi and xi and zi must be captured by the only random quantity in (2), the vector of random coefficients . Therefore, testing (1) is equivalent to testing

However, direct assessment of the association between and is difficult since are unobservable and could be infinity.

Most methods of estimating a function like Yi(·) require an explicit tuning of the smoothness of the resulting estimator. Here that corresponds to choosing a (typically small) number K of eigenfunctions as an approximation

where K < ∞ could be chosen such that the first K directions capture a proportion of the variation at least as large as . Our simulation results (see Section 4) suggest that the performance of FPVC testing is not very sensitive to the choice of K provided that is close to 1. Since are not observable or generally estimable due to sparse sampling of measurement times, we instead infer about the association between Yi(·) and zi based on the best linear unbiased predictor (BLUP),

| (3) |

where , , and such that , Cov{Y(s),Y(t)} = G(s, t), and δrl = I{r=l}. In the PACE method of Yao, Müller and Wang (2005a), was obtained as E(ξik|yi) under the assumption that ξik and εir are jointly normal, but we don’t require normality here. We simply take as an observable and reasonable approximation to ξik even if normality does not hold, as has been argued in Robinson (1991) and Jiang (1998).

Thus, we propose to test (1) by testing

Taking note that the association we seek to test is conditional on x, one may construct a test for by regressing onto . However, this is only valid if the effect of xi on Yi(·) is captured fully based on the model relating xi and , which may not be true in general. To remove the effect of xi without imposing a strong assumption on how xi affects Yi(·), we instead choose to model the conditional expectation of zij given xi, , and center as where for any j

To form the test statistic for H0, we propose to summarize the overall association between Y(·) and based on the Frobenius norm of the standardized covariance between and

| (4) |

Though Q0 takes a simple form and can be motivated naturally as an estimated covariance (and can thus be considered model-free), it can also be viewed as a variance component score test statistic similar to those considered previously for other regression models [Commenges and Andersen (1995), Lin (1997)]. Details on the derivation of the variance component score test statistic are given in Section 2.2.

Both and involve various nuisance parameters that remain to be estimated. First, under mild regularity conditions which are outlined in the Supplementary Material [Agniel et al. (2016)], we can use FPCA to estimate the relevant quantities via local linear smoothing as in Hall, Müller and Wang (2006) and Yao, Müller and Wang (2005a). Subsequently, we can estimate by

| (5) |

for , , and . To estimate , various approaches can be taken depending on the nature of x. For example, when x is discrete, can be estimated empirically. With continuous x, we may impose a parametric model with

| (6) |

and obtain as , where is an estimate of a finite-dimensional parameter θj. To take two examples that commonly come up in genomics, if zj takes values in {0, 1}, for example, under the dominant model, then zj can be modeled using logistic regression, and if zj takes values in {0, 1, 2}, then we may use a binomial generalized linear model or a proportional odds model. There are two reasons to prefer to remove the effect of x from z rather than from ξ: first, it may in general be easier to specify a model for z rather than ξ because of the limited range of z, and, second, this formulation facilitates asymptotic analysis without the need to derive the asymptotic distributions for the estimated FPCA scores. Finally, based on and , our proposed test statistic is

| (7) |

where and .

2.2. Connection to mixed effects models.

In this section, we demonstrate that one can arrive at the quantity (4) via a more familiar mixed effects model. Consider the model

| (8) |

| (9) |

where B is a K × s matrix with (k, j)th entry βkj and Λ = diag(λ1, …, λK). We can obtain Q0 as the variance component score test statistic for H0 : B = 0. Specifically, let βkj = ηνkj and we consider a working model such that {νkj} are independently distributed with E(νkj) = 0 and . Under this working model, H0 : B = 0 is equivalent to

This formulation follows the logic of variance component score tests that have been proposed previously [Wu et al. (2011)] and recalls, for example, the likelihood ratio test proposed in Crainiceanu and Ruppert (2004). To obtain the variance component test statistic, rewrite the model as

for centered outcome , error vector , and random effects ei = (ei1, …, eiK)⊤ ~ N(0, Λ). Then

where and is the ri × ri identity matrix.

The log-likelihood for yμi can then be written

Because the target of inference is η, we marginalize over the nuisance parameter ν conditional on the observed data to obtain where the expectation is taken over the distribution of ν. We follow the argument in Commenges and Andersen (1995) and note that the score at the null value is 0: .So we instead consider the score with respect to η2, , and we show in the Supplementary Material [Agniel et al. (2016)] that this score can be written

up to a scaling constant.

To obtain finally Q0, we standardize by n−1 and drop the second term because it converges to a constant, yielding the score statistic

taking note of the form of from (3). Thus, our proposed test statistic can be obtained as a variance component test under a normal mixed model framework. We can also view Q0 as a simple summary of the overall covariance between the scores of the FPCA and the genetic markers. We next derive the null distribution of the FPVC test statistic without requiring the normal mixed model to hold.

2.3. Estimating the null distribution of the test statistic.

To obtain p-values for FPVC testing, we must identify the null distribution of Q. To this end, we show in Agniel et al. (2016) that the key quantity in Q

is asymptotically equivalent to

under H0, that is, for each j and k. The key idea for deriving the null distribution of qkj is that, since is approximately mean 0 conditional on xi, the variability due to approximating by does not contribute any additional noise to qkj (compared to ) at the first order under H0. Thus, we can obtain the limiting distribution of Q by analyzing the quantity .

To characterize the null distribution of , we need to account for the variability in the estimated model parameters for in . Without loss of generality, we assume that for each j

| (10) |

where is some (q + 1)-dimensional function of xi with . It follows that

| (11) |

where , , and . We show in Agniel et al. (2016) that the limiting null distribution of Q is a mixture of random variables, , with mixing coefficients determined by the eigenvalues of the covariance matrix of . So finally we obtain a p-value for the association between the set and Y(·) as , where is an empirical estimate of al.

By a similar argument, one could construct an asymptotically equivalent test statistic by estimating in two stages. Instead of obtaining an estimator directly from FPCA via equation (5), FPCA can be used to estimate only μ(·) and . By plugging the estimated and into the mixed model (8), one can obtain what we will call the re-fitted test statistic

| (12) |

where is the BLUP from the model with Cov(ξi) = D, for some unspecified positive definite matrix D. By the same argument above, estimation of by contributes no additional variability to the test statistic at the first order. It follows that

and hence has the same limiting null distribution as Q. Not surprisingly, simulation results suggest that the performance of is quite similar to the performance of Q. This equivalence indicates that effectively our proposed testing procedure uses FPCA to estimate potentially nonlinear bases and assesses the effect of genetic markers by fitting a mixed model with these basis functions. The test statistics also can be viewed as a simple summary of covariances, and—since we estimate the null distribution without relying on the normality assumption required by the mixed models—our testing procedure remains valid regardless of the adequacy of the mixed model.

2.4. Combining multiple sources of outcome information.

In the HIV progression study, we seek to test the overall association between SNPs and both lCD4 and lVL simultaneously because more and distinct information about HIV progression is captured in both measures than in either one alone. FPVC testing, as outlined above, can be easily adapted to perform a test for the overall association between and all outcomes of interest. To use information in multiple outcomes, , we simply perform FPCA separately on each y(m) and obtain FPCA scores for each person and each outcome. Subject i’s scores for would be , as in (5), and the full set of scores for person i would be . Then we simply proceed by testing

as before based on

| (13) |

Since each outcome may be measured on a different scale, one may use scaling or weighting to allow scores from each outcome to contribute similarly to the test statistic. See Section 5 for further discussion of scaling/weighting.

3. Association study for HIV progression.

In this study, two independent cohorts were recruited in Botswana to detect sets of SNPs related to HIV disease progression as measured by lCD4 and lVL. The first cohort, which we will denote BHP010, was a natural history observational prospective cohort study recruited from clinics in Gaborone. This cohort included HIV-1C-infected individuals with CD4 cell counts above 400 cells per μl and not yet qualified for the Botswana highly active antiretroviral treatment (HAART) program. Patients were not enrolled if they were younger than 18, had an active AIDS-defining illness requiring the initiation of HAART, presented with an AIDS-related malignancy, or previously had been exposed to HAART during pregnancy or breast feeding.

Follow-up visits occurred at approximately three-month intervals with an additional visit one month after enrollment. VL was generally collected at six-month intervals, and most patients in this cohort do not have VL measurements after two years of follow-up. Follow-up began in 2005 and lasted for up to 255 weeks. The mean follow-up time was 41 months. At least two CD4 measurements were required for measuring disease progression, and 449 patients satisfied this criterion. Of these, 366 were women, and the median age at baseline was 34 years old with an interquartile range (IQR) of (28, 39). In 2008, 143 patients were genotyped on an Illumina LCG BeadChip, the chip used in the second cohort. After exclusions for quality control—call rate greater than 0.99, genotype-derived gender matching listed gender—137 individuals were included in the association study.

The second cohort we will denote BHP011. This cohort came from a randomized, multifactorial, double-blind placebo-controlled trial conducted between December 2004 and July 2009 [Baum et al. (2013)]. The purpose of the trial was to determine the efficacy of micronutrient supplementation (supplementation of multivitamin, selenium, or both) in improving immune function in HIV-1C-infected individuals. It was composed of 878 treatment-naive patients with CD4 higher than 350 cells/μl, as well as body mass index (BMI) greater than 18 for women and 18.5 for men (calculated as weight in kilograms divided by height in meters squared), age of 18 years or older, no current AIDS-defining conditions or history of AIDS-defining conditions, and no history of endocrine or psychiatric disorders.

Patients were followed up for a maximum of 169 weeks. They returned to clinics approximately every three months to measure CD4 and approximately every six months to measure VL. The mean follow-up time was 696 days. Of these, 838 had at least two CD4 measurements, and 613 were women. The median age at baseline was 33 years old with an interquartile range (IQR) of (28, 39). In this cohort, 326 individuals were genotyped on Illumina LCG BeadChips, with 320 entered into the association study after quality control exclusions.

FPCA was performed on each cohort separately for both lCD4 and lVL. Patients who were not genotyped were included for the estimation of FPCA. Three eigenfunctions were chosen for lCD4 and two for lVL in each cohort, which corresponds to 99% of proportion of variance explained for each. The form of the eigenfunctions look similar for both lCD4 and lVL in each cohort and lend themselves to reasonable interpretations [see Agniel et al. (2016) for plotted eigenfunctions]. The first eigenfunction tends to serve as a mean shift or an intercept; the second eigenfunction acts something like a slope; and the third eigenfunction behaves approximately as a quadratic term. The vector of estimated “re-fitted” scores [refer to (12)] for each individual to be used in testing can be written where is the estimated score corresponding to the kth estimated eigenfunction of lCD4 when m = 1 and lVL when m = 2.

A total of 155,007 SNPs on chromosome 6 were genotyped. After requiring less than 5% missingness and at least five individuals with any minor alleles in each cohort, p = 108,665 SNPs, zi = (zi1, …, zip) remained for association testing, where the ordering in zi corresponds to position on the chromosome. The dominant model was used for analysis such that zij = 1 if any minor alleles are present and zij = 0 if none are present. Missing values were imputed as the minor allele frequency for that SNP. To gain power by pooling information in nearby SNPs, sets of 10 contiguous SNPs were constructed as for j = 1, …, 108, 656. Here the choice of 10 merely serves as example for illustration and sets could in principle be constructed with more SNPs, but due to the small sample size in each cohort, we kept the size of the sets modest. Tests were performed on each cohort separately, and p-values were combined using the Fisher method. The false discovery rate was controlled at 0.1 using the Benjamini–Hochberg procedure [Benjamini and Hochberg (1995)], which is expected to remain valid since although the moving window construction of sets induces high correlations for nearby regions, SNP sets in distant regions are not expected to be correlated [Storey, Taylor and Siegmund (2004)].

Tests were adjusted for age and gender to remove any possible confounding, so that we are testing for the effect of SNP sets on disease progression conditional on age and gender. Logistic regression was used to remove their effects. The method appears to be robust to this specification, as results were not markedly changed either when no adjustment was made or by specifying a probit model (results not shown).

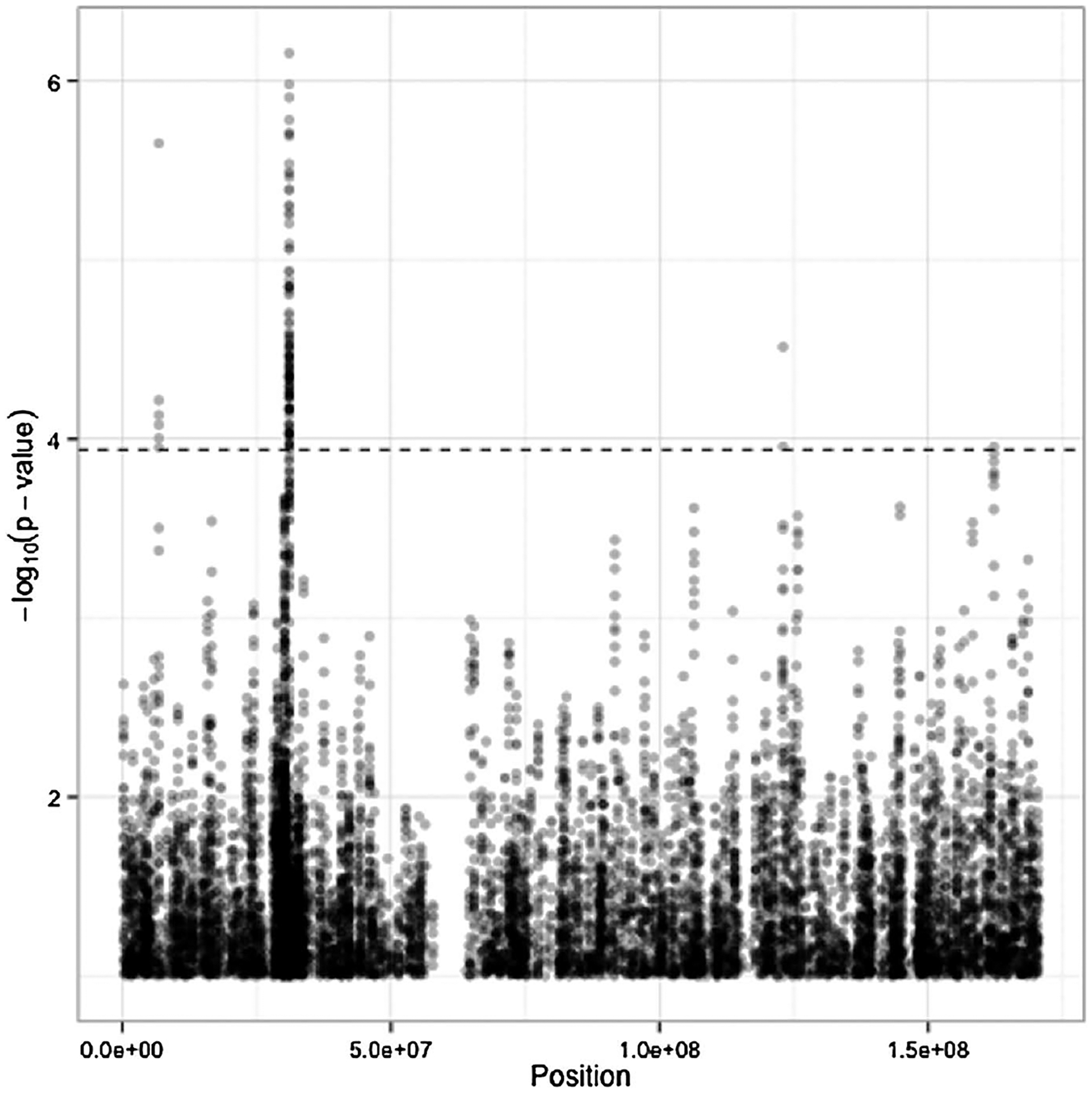

The Manhattan plot for the 108,656 tests is given in Figure 1. In all, 126 tests passed the FDR threshold, corresponding to four broad regions of the chromosome. Six contiguous tests rejected in the region between positions 6,784,416 and 6,793,116 (region 1), which fall between the LY86 and BTF3P7 genes; 117 tests rejected between positions 31,022,266 and 31,080,899 (region 2), including SNPs on the HCG22 and C6orf15 genes; two tests rejected between 122,990,817 and 123,014,708 (region 3) on the PKIB gene; and one test rejected representing SNPs in the region between 162,250,522 and 162,254,546 (region 4) including SNPs on the PARK2 gene. Notably, the C6orf15 gene has been reported to be associated with susceptibility to follicular lymphoma [Skibola et al. (2009)], and genes in linkage disequilibrium with HCG22 and C6orf15 have demonstrated associations to total white blood cell counts [Nalls et al. (2011)] and multiple myeloma [Chubb et al. (2013)].

Fig. 1.

Manhattan plot for set-based testing on chromosome 6. Position on x-axis for each test is determined by the middle SNP (5th of 10) in the set. The dotted line corresponds to the threshold for rejection at FDR 0.1.

Furthermore, regions 1, 3, and 4—whose −log10 p-values are depicted in Figure 2(a), (c), and (d), respectively—only have strong signals in one of the two cohorts. Region 1 demonstrates association largely in BHP010, as can be seen in the figure where the large triangles in the figure (representing the set-based p-value in BHP010) tend to lie above the large dots (representing the combined set-based p-value), while the diamonds for BHP011 tend to be very low. Conversely, in regions 3 and 4 the association is apparent only in BHP011. Whereas in region 2, associations tend to be strong in both cohorts, and the combined p-values tend to be lower (higher in the figure) than either of the component p-values.

Fig. 2.

P-values in significant regions on the −log10 scale. Large symbols correspond to set-based tests, and for illustration small symbols correspond to tests for individual SNPs. Triangles represent p-values computed in BHP010, and diamonds BHP011. Circles represent combined set-based p-values, which are of primary interest and are connected by lines. Combined p-values that are below the FDR threshold are in color, as are their corresponding component p-values.

To better understand the outcome of the test, we looked at average disease progression within groups of patients with similar minor allele burden. To do this, we identified the SNP set with the smallest p-value, which lay in the HCG22 portion of region 2 and included the following SNPs: rs2535308, rs2535307, rs2535306, rs2535305, rs3130955, rs2535304, rs12527394, rs2535303, kgp9442190, and rs3130959. Patients were grouped according to the number of loci among these 10 at which they had any minor alleles. Within these groups, we averaged the estimated mean lCD4 and lVL in each cohort over time . As a demonstration, the results for those with 2, 3, 8, and 9 loci with minor alleles are depicted in Figure 3. We selected these groups to demonstrate burden extremes (very few individuals had 0 or 1 loci affected, so they were not shown).

Fig. 3.

Disease progression by minor allele burden in most significant SNP set. Estimated lCD4 and lVL are grouped by number of loci in SNP set with any minor alleles and averaged. For clarity, just those individuals with 2, 3, 8, and 9 loci are included. Lines correspond to estimates of the conditional mean based on FPCA.

The healthiest group included those with only 2 and 3 affected loci, who had higher and more stable CD4 and lower and gently increasing VL throughout the study period in each cohort. Those with 3 affected loci tended to have a more negative CD4 slope and higher and increasing VL over the study period. Those with 9 affected loci in general had the worst progression: low and declining CD4 counts in both cohorts, and high and relatively stable VL in both cohorts. Those with 8 affected loci tend to fall in the middle. Smoothing the raw data directly in each of these groups yielded similar results and nearly identical conclusions (results not shown).

4. Simulation results.

We have performed simulation studies to assess the finite sample performance of our proposed testing procedure and compare its power to the standard linear-mixed-model-based procedures. For simplicity, we focused on a single marker z in the absence of covariates and two potential functional outcomes generated from

where , j = 0, 1 are independent and identically distributed (i.i.d.) random effects and are i.i.d. errors, for m = 1, 2. For each subject i, we generate the number of observations from a Poisson distribution ri ~ Poisson(λ) + 2, and we generate tir uniformly over the time interval (0, 2π). The parameter β controls the magnitude of the genetic effect. The parameter α controls the linearity of the genetic effect—when α = 0 the genetic effect is entirely linear, and when α = 1 the effect is entirely nonlinear. The parameter γ controls the complexity of the mean process and the amount of inter-subject variability: when γ = 0, the mean process is relatively simple but the inter-subject variability is high, and when γ = 1 the mean process is complex and the inter-subject variability is low. The genetic factor zi is generated according to a binomial(2, 0.1), with 0.1 the minor allele frequency.

We examined the performance of the FPVC test statistic Q [defined in (13), here denoted by “FPCA”] and its asymptotically equivalent counterpart [defined in the context of a single outcome in (12), here denoted “Re-fitted”]. For the purposes of comparison, we also examined the performance of a similar test statistic that does not use FPVC but instead employs a pre-specified basis. Consider the test statistic , where is the BLUP from the linear mixed model yir = β0 + β1tir + ξi1 + ξi2tir + εir. In the following, we denote results for Qlin by “Linear”.

The number of FPCA scores for the mth outcome, Km, was selected as the smallest K such that the fraction of variation explained (FVE), , was at least . To ensure that the scores for each outcome contributed comparably to the test statistics, we centered and scaled each outcome as , prior to obtaining and , where and .

In the following we report power as the proportion of 1000 simulations for which the testing procedure produced a p-value below 0.05 to demonstrate the relative performance of the various testing procedures. To ensure that the asymptotic null distribution of the test statistic yields a valid testing procedure, we evaluate the entire distribution of p-values under the null hypothesis, including at levels much lower than 0.05.

4.1. Type I error.

In the following we take λ = 6. The empirical type I error rates for testing at the 0.05 level ranged from 0.040 (γ = 0.25) to 0.048 (γ = 0) for Q; from 0.036 (γ = 1) to 0.047 (γ = 0) for ; and from 0.040 (γ = 0.75) to 0.059 (γ = 1) for Qlin. However, levels much smaller than 0.05 are necessary to control error rates in large-scale testing. Thus, to establish the validity of our testing procedure for performing many tests, we establish that the resulting p-values are approximately uniform under the null hypothesis. We performed 106 simulations under the null, with n = 200, γ = 1, and α = 0, and we obtained the type I error of FPVC testing at each of the following levels: 1 × 10−6, …, 9 × 10−6, 1 × 10−5, …, 9 × 10−5, 1 × 10−4, …, 9 × 10−4, 1 × 10−3, …, 9 × 10−3. Results are depicted in Figure 4. The Figure shows that the level is preserved at all levels of testing. Further simulations would provide better approximations of type I error rates at smaller levels, but these results suggest that the asymptotic null distribution fits quite well in small samples.

Fig. 4.

Empirical type I error rates for tests performed at various levels, based on 106 simulations.

4.2. Power.

In Figure 5, we display results for n = 100 and all levels of γ and α. There, the figure demonstrates that, despite the fact that the true effect was linear when α = 0, both FPC-based tests dominate the linear-based tests, and the advantage of using FPVC, in terms of power, did not disappear, even when the true effect was linear. Notably, as γ varied, we saw power gains by using the FPVC-based Q and , with the gains increasing as γ approached 1 and the functional form of became more complex, and the need to flexibly model it increased.

Fig. 5.

Power to detect β using Q (FPVC), (FPVC Re-fitted), and Qlin (Linear). β values are listed on the x-axis.

In all of our simulations, the FPVC methods dominated the linear method in terms of power while maintaining desirable type I error rates. We wanted to ensure that the improvement we were seeing was not simply due to the fact that the linear model used only two scores, a random intercept and a random slope , for each outcome whereas the FPVC-based methods used Km scores, where Km was often selected larger than 2. Thus, we also considered the performance of scores based on fixed-basis expansions of t, using either polynomial or spline bases.

Specifically, we fit models with K = 2, 3, …, 6 degrees of freedom. For the polynomial setting we used bases corresponding to the model for a centered and scaled version of tir. For the spline basis, we used cubic B-splines constructed with the specified degrees of freedom with the bs function in the splines R package. Because, in some sense, FPCA does model selection by choosing the basis that explains the most variability in y, we also perform model selection on the pre-specified bases to ensure a fair comparison. We select the model with the lowest AIC and use the from that model in the testing procedure. We will call the test statistic based on B-splines QB and the model based on polynomial bases Qp.

Results are found in Figure 6. We found that there were some situations when using the pre-specified B-spline basis could outperform the FPVC tests, particularly when γ was near 0 (low mean complexity) and α was near 1 (linear genetic effect). However, the polynomial basis never outperformed FPVC. Further, as the complexity of the trajectory γ increased, the desirability of FPVC testing always increased, suggesting that in simple problems, using a pre-specified basis may be preferable, but for complex effects and complex trajectories, FPVC will likely be preferred. In general, if the complexity of the trajectory is unknown, FPVC testing offers a generally powerful method for all settings that is insensitive to tuning parameter selection.

Fig. 6.

Power to detect β using Q (FPVC), QB (B-splines), and Qp (Polynomial). β values are listed on the x-axis.

5. Discussion.

We have proposed functional principal variance component testing, a FPCA-based testing procedure for assessing the association between a set of genetic variants and a complexly varying longitudinal outcome y that is feasible on the genome-wide scale, allowing adjustment for other covariates. Unlike the standard mixed-model-based approaches, we do not model the trajectories parametrically but use the data to identify the most parsimonious summaries of the trajectory patterns via FPCA. We subsequently test the association between the random coefficients ξi and the markers of interest using a test statistic motivated by variance component testing. Our procedure could potentially be much more powerful than procedures based on pre-specified bases, which might suffer power loss due to either high degrees of freedom or inability to capture the complexity in the trajectories. Furthermore, our FPVC testing is computationally efficient as we are able to perform thousands or even millions of tests quickly by separating the time-intensive FPCA from the testing. This makes our method feasible on the genome-wide scale where millions of marginal tests may be necessary. As an example, computing test statistics and p-values for FPVC testing typically takes less than 0.1 seconds for a set of 10 SNPs and both lCD4 and lVL combined on a Macbook Pro. Conversely, fitting a single linear mixed effects model for only lCD4 with a random effect for a small pre-specified B-spline basis takes more than two seconds. At the genome-wide scale we would observe a speed-up on the order of hours. Code for FPVC testing is available at https://github.com/denisagniel/fpvc.

It is important to note that while we make mild assumptions on the longitudinal outcome y to obtain the form of our proposed test statistic, the validity of FPVC testing requires no assumption about the relationship between y and . FPVC testing remains valid even if the working mixed model (8) fails to hold. Additionally, while one can motivate the quantity as the conditional expectation of ξik under a normality assumption on ξik and εir, testing based on Q remains valid even when this normality fails to hold since the estimated eigenvalues and eigenfunctions from functional PCA converge uniformly to their limits [Hall, Müller and Wang (2006)]. In fact, one can consider FPVC model-free in that the test statistic Q could be motivated simply as an estimated covariance. Furthermore, we assume that the errors εir are i.i.d. with mean 0 and variance σ2, but some relaxation of this assumption is possible for some “degree of weak dependence and in cases of nonidentical distribution” [Hall, Müller and Wang (2006)], while still maintaining the validity of our procedure.

FPVC testing can also simultaneously consider multiple sources of outcome information to better characterize complex phenotypes. With multiple longitudinal outcomes, one might wish to ensure that scores for all outcomes are roughly on the same scale, so that each outcome contributes comparably to the test statistic. To this end, one may consider a weighted version of (13) as

where ωm are nonnegative outcome-specific weights that can be pre-specified or data-adaptive. Alternatively, in the absence of relevant weights, one can simply scale each y(m) so that the magnitude of is comparable across different values of m. Let where and . Then obtain via FPCA on and construct the test statistic . Such a strategy appears to work well in simulation studies.

While we use FPCA to summarize the longitudinal trajectories for the purpose of testing with low degrees of freedom, in principle another suitably parsimonious nonparametric method could be used instead. For example, if observations were measured on a common, fine grid of points, then one could imagine using the methods in Morris and Carroll (2006) to first regress y on t, obtain the random effects estimates (similar to the ξi employed here), and use these estimates in testing. However, no available approaches are as widely applicable as our FPCA-based approach, which can be used even when data are sparsely observed; other approaches may not have as small effective degrees of freedom as an FPCA-based method, and the resulting testing procedure may be more sensitive to correct tuning.

Supplementary Material

Footnotes

SUPPLEMENTARY MATERIAL

Supplementary proofs and plots (DOI: 10.1214/18-AOAS1135SUPP; .pdf). We provide the derivation of the form of the score statistic, proof of its null distribution, and supporting assumptions. And we include the form of the eigenfunctions for the HIV data analysis.

Contributor Information

Denis Agniel, RAND Corporation, 1776 Main St., Santa Monica, California 90401, USA; Department of Biomedical Informatics, Harvard Medical School, 10 Shattuck St, Boston, Massachusetts 02115, USA.

Tianxi Cai, Department of Biostatistics, Harvard T. H. Chan School of Public Health, 655 Huntington Ave, Boston, Massachusetts 02115, USA.

REFERENCES

- Agniel D, Xie W, Essex M and Cai T (2018). Supplement to “Functional principal variance component testing for a genetic association study of HIV progression.” DOI: 10.1214/18-AOAS1135SUPP. [DOI] [PMC free article] [PubMed]

- Antoniadis A and Sapatinas T (2007). Estimation and inference in functional mixed-effects models. Comput. Statist. Data Anal 51 4793–4813. [Google Scholar]

- Baum MK, Campa A, Lai S, Martinez SS, Tsalaile L, Burns P, Farahani M, Li Y, Van Widenfelt E, Page JB et al. (2013). Effect of micronutrient supplementation on disease progression in asymptomatic, antiretroviral-naive, HIV-infected adults in Botswana: A randomized clinical trial. JAMA 310 2154–2163. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Benjamini Y and Hochberg Y (1995). Controlling the false discovery rate: A practical and powerful approach to multiple testing. J. Roy. Statist. Soc. Ser. B 57 289–300. [Google Scholar]

- Castro PE, Lawton WH and Sylvestre EA (1986). Principal modes of variation for processes with continuous sample curves. Technometrics 28 329–337. [Google Scholar]

- Chiou J-M, Müller H-G and Wang J-L (2003). Functional quasi-likelihood regression models with smooth random effects. J. R. Stat. Soc. Ser. B. Stat. Methodol 65 405–423. [Google Scholar]

- Chubb D, Weinhold N, Broderick P, Chen B, Johnson DC, Försti A, Vijayakrishnan J, Migliorini G, Dobbins SE, Holroyd A et al. (2013). Common variation at 3q26. 2, 6p21. 33, 17p11. 2 and 22q13. 1 influences multiple myeloma risk. Nat. Genet 45 1221–1225. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Commenges D and Andersen PK (1995). Score test of homogeneity for survival data. Lifetime Data Anal. 1 145–159. [DOI] [PubMed] [Google Scholar]

- Crainiceanu CM and Ruppert D (2004). Likelihood ratio tests in linear mixed models with one variance component. J. R. Stat. Soc. Ser. B. Stat. Methodol 66 165–185. [Google Scholar]

- Fellay J, Shianna KV, Ge D, Colombo S, Ledergerber B, Weale M, Zhang K, Gumbs C, Castagna A, Cossarizza A et al. (2007). A whole-genome association study of major determinants for host control of HIV-1. Science 317 944–947. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Geretti AM (2006). HIV-1 subtypes: Epidemiology and significance for HIV management. Curr. Opin. Infect. Dis 19 1–7. [DOI] [PubMed] [Google Scholar]

- Guo W (2002). Functional mixed effects models. Biometrics 58 121–128. [DOI] [PubMed] [Google Scholar]

- Hall P, Müller H-G and Wang J-L (2006). Properties of principal component methods for functional and longitudinal data analysis. Ann. Statist 34 1493–1517. [Google Scholar]

- Jiang J (1998). Asymptotic properties of the empirical BLUP and BLUE in mixed linear models. Statist. Sinica 8 861–885. MR1651513 [Google Scholar]

- Joint United Nations Programme on HIV/AIDS (UNAIDS) (2012). Global Report: UNAIDS Report on the Global AIDS Epidemic: 2012. UNAIDS. [Google Scholar]

- Krafty RT, Gimotty PA, Holtz D, Coukos G and Guo W (2008). Varying coefficient model with unknown within-subject covariance for analysis of tumor growth curves. Biometrics 64 1023–1031. [DOI] [PubMed] [Google Scholar]

- Laird NM and Ware JH (1982). Random-effects models for longitudinal data. Biometrics 963–974. [PubMed] [Google Scholar]

- Lin X (1997). Variance component testing in generalised linear models with random effects. Biometrika 84 309–326. [Google Scholar]

- Lindstrom MJ and Bates DM (1990). Nonlinear mixed effects models for repeated measures data. Biometrics 46 673–687. [PubMed] [Google Scholar]

- Migueles SA, Sabbaghian MS, Shupert WL, Bettinotti MP, Marincola FM, Martino L, Hallahan CW, Selig SM, Schwartz D, Sullivan J et al. (2000). HLA B* 5701 is highly associated with restriction of virus replication in a subgroup of HIV-infected long term nonprogressors. Proc. Natl. Acad. Sci. USA 97 2709–2714. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Morris JS and Carroll RJ (2006). Wavelet-based functional mixed models. J. R. Stat. Soc. Ser. B. Stat. Methodol 68 179–199. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Nalls MA, Couper DJ, Tanaka T, van Rooij FJ, Chen M-H, Smith AV, Toniolo D, Zakai NA, Yang Q, Greinacher A et al. (2011). Multiple loci are associated with white blood cell phenotypes. PLoS Genet. 7 e1002113–e1002113. [DOI] [PMC free article] [PubMed] [Google Scholar]

- O’Brien SJ and Hendrickson SL (2013). Host genomic influences on HIV/AIDS. Genome Biol. 14 201. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Reiss PT, Huang L and Mennes M (2010). Fast function-on-scalar regression with penalized basis expansions. Int. J. Biostat 6 28. [DOI] [PubMed] [Google Scholar]

- Rice JA and Silverman BW (1991). Estimating the mean and covariance structure nonparametrically when the data are curves. J. Roy. Statist. Soc. Ser. B 53 233–243. [Google Scholar]

- Rice JA and Wu CO (2001). Nonparametric mixed effects models for unequally sampled noisy curves. Biometrics 57 253–259. [DOI] [PubMed] [Google Scholar]

- Robinson GK (1991). That BLUP is a good thing: The estimation of random effects. Statist. Sci 6 15–51. [Google Scholar]

- Skibola CF, Bracci PM, Halperin E, Conde L, Craig DW, Agana L, Iyadurai K, Becker N, Brooks-Wilson A, Curry JD et al. (2009). Genetic variants at 6p21. 33 are associated with susceptibility to follicular lymphoma. Nat. Genet 41 873–875. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Storey JD, Taylor JE and Siegmund D (2004). Strong control, conservative point estimation and simultaneous conservative consistency of false discovery rates: A unified approach. J. R. Stat. Soc. Ser. B. Stat. Methodol 66 187–205. [Google Scholar]

- van Manen D, Kootstra NA, Boeser-Nunnink B, Handulle MA, van’t Wout AB and Schuitemaker H (2009). Association of HLA-C and HCP5 gene regions with the clinical course of HIV-1 infection. AIDS 23 19–28. [DOI] [PubMed] [Google Scholar]

- Wu MC, Lee S, Cai T, Li Y, Boehnke M and Lin X (2011). Rare-variant association testing for sequencing data with the sequence kernel association test. Am. J. Hum. Genet 89 82–93. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Yao F, Müller H-G and Wang J-L (2005a). Functional data analysis for sparse longitudinal data. J. Amer. Statist. Assoc 100 577–590. [Google Scholar]

- Yao F, Müller H-G and Wang J-L (2005b). Functional linear regression analysis for longitudinal data. Ann. Statist 33 2873–2903. [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.