Despite most journalists being liberal, they do not discriminate against conservatives in what news they choose to cover

Abstract

Is the media biased against conservatives? Although a dominant majority of journalists identify as liberals/Democrats and many Americans and public officials frequently decry supposedly high and increasing levels of media bias, little compelling evidence exists as to (i) the ideological or partisan leanings of the many journalists who fail to answer surveys and/or identify as independents and (ii) whether journalists’ political leanings bleed into the choice of which stories to cover that Americans ultimately consume. Using a unique combination of a large-scale survey of political journalists, data from journalists’ Twitter networks, election returns, a large-scale correspondence experiment, and a conjoint survey experiment, we show definitively that the media exhibits no bias against conservatives (or liberals for that matter) in the news that they choose to cover. This shows that journalists’ individual ideological leanings have unexpectedly little effect on the vitally important, but, up to this point, unexplored, early stage of political news generation.

INTRODUCTION

Unbiased political media coverage is vital for a healthy democracy (1). Most Americans want their news free from political bias; a dominant majority (78%) of Americans believe that it is never acceptable for a news organization to favor one political party over another when reporting the news (2). Journalists hold strong norms to eschew bias in their coverage of politics (3). However, when asked about the coverage of news organizations in America, less than half of Americans can identify a source that they believe reports the news objectively, less than 30% trust the media to get the facts straight, and less than 20% trust the media to report the news without bias (4). Since 1989, the number of Americans stating that there is a great deal of bias in news coverage has nearly doubled (4). Simply put, many Americans believe that the news media do a poor job of separating facts from opinion (4). With the strong influence that the media exerts on citizens (5–7), the increased salience of fake news (8–10), and the “unprecedented” levels of violence against journalists (11), understanding the potential biases of the media is vital.

Ideological bias is central to the concerns that Americans harbor about the news media. Concerns about liberal media bias are widespread. Many Americans believe that liberal media bias is prevalent and pernicious. According to a 2017 Gallup poll, 64% of Americans believe the media favors the Democratic Party (compared to 22% who said they believed it favored the Republican Party). Consternation over the liberal bias in the mainstream media runs rampant, making its way into commentary of the state of the news media from political pundits (12) and academics (13), into too many social media discussions to even begin to mention, and even into the stages of presidential debates and town halls. There are reasons to expect that this perspective may comport with reality. Some evidence suggests that journalists have more liberal views than the general public (14). Given this, we might expect political ideology to fundamentally shape journalists’ views about what is and is not newsworthy (15). However, it is also possible that the public perceives ideological bias in what journalists choose to cover because they are psychologically motivated to see bias in the news (16).

Does ideological bias actually shape what news journalists choose to cover? Although we know something about ideological biases in how the news is covered (the slant of the news that is covered or presentation bias), we know very little about the potential role of ideological bias in what is covered. Previous research has focused almost exclusively on presentation bias in the news, but bias can also arise earlier: in the selection of news to cover. Ideological leanings might alter journalist evaluations of the newsworthiness of a particular story (15). Despite their best attempts to maintain high standards of objectivity, journalists may omit news stories that do not adhere to their own (most likely liberal) predispositions. This type of gatekeeping bias in the earlier stages of news story generation would be vitally important, were it to exist, because the topics focused on in the news influence what is on the political agenda and how people evaluate political information (17, 18). After all, the news media are integral to informing marginalized segments of the population about politics (19–21).

Identifying gatekeeping bias in news coverage, however, has proven to be incredibly difficult. In part, this is because identifying the full population of news from which journalists could select stories is difficult. Scholars of media coverage only view the final product and do not observe the full set of stories that might have been available in the world for journalists to potentially cover. Analysis of gatekeeping bias from published stories suffers from the fallacy of selecting on the dependent variable. Perceptions of biases in what journalists cover could be the result of true media biases, or they could also just be the result of an underlying set of stories that journalists have to select from that are ideologically skewed (22). Perhaps the “truth” itself has a liberal (or conservative) bias.

We overcome this stubborn obstacle by examining how journalists respond to a potential news story available in their media market. This study tests for ideological bias (specifically gatekeeping bias), which occurs before the creation of news content. Our research combines data from five sources: a large survey of journalists, a conjoint experiment embedded in our survey, election returns, Twitter data about journalist networks, and a novel correspondence experiment design. Hence, our study addresses two substantial problems in the study of media bias.

First, using Twitter data, we are able to estimate the ideology of half of the journalists in our sample, nearly five times larger than any previous study of journalists. In this dataset, we show that journalists are overwhelmingly liberal, perhaps even more so than surveys have suggested. Most journalists are far to the left of even the average (Twitter-using) American.

Second, our work addresses the nagging problem of an unknown composition of potential news stories through the use of a correspondence experiment. This experiment presented journalists with a potential news story (a candidate running for the state legislature) that varied only in its ideological content (i.e., the ideology of the candidate). While this design may not generalize to all potential news stories, it does allow us to test for bias in a vitally important step in the news generation process: gatekeeping bias, in this case, related to what journalists choose to cover on the campaign trail. Given the role that news stories play in providing much-needed attention to potential candidates, withholding coverage can be thought of as a powerful gatekeeping tool where partisan bias may come into play (17, 18). With this unique design, we show that, contrary to popular narratives and despite the fact that journalists skew to the left, there is little to no liberal bias in what reporters choose to cover. Our well-powered correspondence experiment allows us to confidently rule out even very slight biases against conservatives. This implies that journalists do not exhibit ideological gatekeeping bias: that liberal media bias does not manifest itself in the vital early stage of news generation despite strong reasons to think it might.

RESULTS

To test for ideological bias in the news that journalists choose to cover, we combine the five data sources just mentioned. The survey of journalists allows us to see whether journalists, indeed, skew in the liberal direction. Previous studies have tended to show that this is the case (14, 23). To replicate and extend previous surveys, we collected our list of journalists using the U.S. Newspaper List (usnpl.com), a comprehensive national media directory of newspapers, television stations, and radio stations operating in the United States. This allowed us to identify the full sample of newspapers in each state. Using this site, a team of four researchers visited the website or Facebook page of every newspaper in each state and searched for the email addresses of political journalists and editors between May 2017 and July 2017. In many cases, this team was able to identify journalists who were explicitly assigned to a political beat. However, when no reporter at a newspaper was explicitly designated as a political reporter, the email addresses of all reporters were collected. This process resulted in a sampling frame of just more than 13,500 journalists with working email addresses. We invited these individuals to participate in the survey by email in late August and early September 2017. A total of 1511 journalists responded to the survey for a response rate of 11.3% [among the emails that did not bounce, our response rate was 13.1%, a rate almost double that of other recent surveys of journalists (24)]. Among other things, the survey asked the reporters to disclose their political ideology.

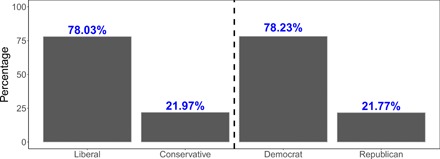

Consistent with previous surveys of journalists, we find that a majority of surveyed journalists (54% not including self-identified independents who indicated that they leaned toward a party; 78% including independents who leaned toward a party) do have ideological leanings and preferences. Figure 1 shows the breakdown of journalists’ self-reported ideology and partisan preferences. For the partisan preferences, we asked individuals who identified as independents to indicate which party they leaned toward. As can be seen, among journalists willing to identify a partisan or ideological preference, Democrats/liberals are much more numerous than Republicans/conservatives. While there is certainly an ethos of independence among this group, a majority of journalists are willing to self-report being attached to a specific political direction, and among this group, a dominant majority of journalists affiliate with the left.

Fig. 1. Ideological composition of journalists (survey).

The figure displays the ideological/partisan leanings of journalists among those willing to attach themselves to a specific ideological/partisan direction. Among all surveyed journalists, 60% indicate being Democrats or Democratic leaners and 23% identify as independents (46% identify as independents when including independents who lean toward a party). This data comes from our survey of journalists (2017; N = 1511). As a reference, Willnat and Weaver (23) report 79% of partisan identifiers as being Democrats.

There are, however, two large problems with using surveys of journalists, as previous work has done, to measure their ideology. First, many journalists report being independents. (Despite asking them to which party they leaned, 23% still self-identified as pure independents.) Second and perhaps more importantly, despite having a high response rate for surveys of this nature, many journalists choose not to respond to surveys. This decision could be directly related to their willingness to divulge their partisan and ideological leanings. The truth is that surveys leave a large number of journalists without ideological scores. Hence, there is a great benefit to understanding where a larger pool of journalists falls on the ideological spectrum, something that no study has achieved in the past.

To do so, we use our second dataset: information on the comprehensive list of people whom journalists follow on Twitter. To collect this information, we searched for each of the journalists in our sampling frame on Twitter using their name, their email address, and the outlet for which they worked. Once we had this information, we applied the widely used approach developed and validated by Barberá (25), which relies on Bayesian ideal point estimation. The logic of this methodological technique is that individuals display their preferences (in this case, for ideological homogeneity) through their actions (in this case, who they follow on Twitter), just as they do with many revealed preferences. Barberá (25) shows that this approach produces ideology measures that are strongly related to individual self-reported measures of ideology and validated party registration records among both the public and elites. (We show that this also holds true among the journalists who answered our survey; see fig. S4 in the Supplementary Materials.) As Barberá (25) notes, this approach comes with the distinct advantage that it “allows us to estimate ideology for more actors than any existing alternative, at any point in time and across many polities.” This is true in our case; this method allows us to have much more coverage than any previous effort at measuring journalist ideology, providing us with the ideology of a full 50% of journalists in our sampling frame. (Most surveys of journalists have response rates less than 10%.)
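The intuition behind a spatial following model of this kind can be illustrated with a minimal sketch. It assumes the functional form commonly attributed to (25), in which the probability that user i follows account j falls with the squared distance between their ideal points; the parameter names and values below are illustrative assumptions, not estimates from this study, and the actual approach estimates all parameters jointly with Bayesian methods rather than plugging them in.

```python
import math


def follow_probability(theta_i, phi_j, alpha_j, beta_i, gamma):
    """Probability that user i follows account j in a spatial following model.

    theta_i: ideal point of the user (e.g., a journalist)  [assumed name]
    phi_j:   ideal point of the followed account            [assumed name]
    alpha_j: popularity of account j                        [assumed name]
    beta_i:  user i's general propensity to follow accounts [assumed name]
    gamma:   weight placed on ideological distance          [assumed name]
    """
    squared_distance = (theta_i - phi_j) ** 2
    linear_predictor = alpha_j + beta_i - gamma * squared_distance
    # Inverse-logit link maps the linear predictor to a probability.
    return 1.0 / (1.0 + math.exp(-linear_predictor))


# Illustrative values only: a left-leaning user is more likely to follow
# an ideologically close account than an ideologically distant one.
near = follow_probability(theta_i=-1.0, phi_j=-1.0, alpha_j=0.0, beta_i=0.0, gamma=1.0)
far = follow_probability(theta_i=-1.0, phi_j=1.0, alpha_j=0.0, beta_i=0.0, gamma=1.0)
```

In this sketch, observed following decisions are the data, and the ideal points are the quantities that an estimation routine would recover.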

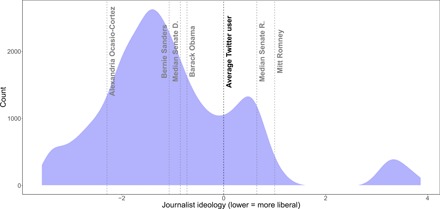

Figure 2 shows the distribution of ideological positions of journalists based on their Twitter interactions. As can be seen, journalists are dominantly liberal and often fall far to the left of Americans. A full 78.1% of journalists are more liberal than the average Twitter user. Moreover, 66% are even more liberal than former President Obama, 62.3% are to the left of the median Senate Democrat (in the 114th Congress), and a full 14.5% are more liberal than Alexandria Ocasio-Cortez (one of the most liberal members of the House).

Fig. 2. Ideological composition of journalists (Twitter networks).

The figure displays a kernel density of ideological/partisan leanings of journalists based on the people they choose to follow on Twitter. The measure uses the Bayesian ideal point approach by Barberá (25). N = 6801.

In short, journalists are overwhelmingly liberal/Democrats, and many journalists appear to be far to the left of the average American. However, being liberal and expressing liberal gatekeeping bias in the choice of news to cover are clearly two different things. After all, journalists state that they strongly value objectivity in reporting the news (26). Does the strong ideological skew that we observe actually influence the potential news that journalists choose to cover?

To test this possibility, in the spring of 2018, we ran a correspondence experiment of roughly 13,500 journalists in our sampling frame. Correspondence experiments are widely used in many contexts to test for bias (27). However, to our knowledge, this constitutes one of the first correspondence experiments of journalists [the only exception of which we are aware is by Graves et al. (28), who examined the effect of various messages on the fact-checking behavior of journalists rather than looking for partisan or ideological bias].

Correspondence experiments are built on the premise that one can elicit and measure bias by providing individuals with a standardized task. The condition that one desires to test for bias is then randomized. If individuals behave differently toward individuals of different backgrounds (in this case, the ideological leanings of the candidate running for office), then we can infer discrimination. As in all correspondence experiments, the primary outcome here is whether an individual responded to the inquiry. We avoid measures of response quality, as these are only observed among those who respond, and hence, they are especially susceptible to posttreatment bias (29).

While correspondence experiments do not capture all forms of potential bias, they come with the distinct advantage of being couched in an experimental design that allows us to rule out other potential factors [an aspect distinct from Implicit Attitude Tests (IATs), resentment scales, or other means of measuring individual bias]. If journalists systematically exhibited ideological bias in the political news that they choose to cover, then we would expect to see differences in response rates across the treatment conditions. If journalists’ gatekeeping decisions were shaped by their own ideological positions, those of the news organization for which they work, or those of their readership, then we would expect to see heterogeneous treatment effects along these dimensions.

To run our correspondence experiment, we created an artificial campaign email address for a fictitious candidate for the state legislature. We emailed the journalists on our list, asking them to cover the potential candidate. Covering campaigns and the people who run in them is a vital part of political journalists’ jobs. A short follow-up survey conducted in October 2019 (full details are available in the Supplementary Materials) on the relative interest in different types of news stories confirmed that this sort of request would be common and generally thought of as newsworthy. At the same time, a story on this topic would not be so important that it eliminates journalist discretion about whether to cover the topic depending on the journalist’s perception of the nature of the story, the presence of other ongoing news stories, and the time required to follow up on the story. In short, this story appears to be something that is generally considered newsworthy but is subject to journalist discretion and is exactly the type of story where gatekeeping biases could be manifest.

Our email appeared to be from a campaign staffer, indicating that the candidate was about to announce his candidacy within the next week and asking whether the journalist would be interested in sitting down with the candidate sometime in the following week to discuss his candidacy and vision for state government. The text in each of the emails was identical except for the bio of the candidate that we included at the end of the message. In the brief bio, we randomly varied the candidate’s ideological description. Each email described the candidate as being a “conservative Republican,” a “moderate Republican,” a “moderate Democrat,” or a “progressive Democrat.” (We landed on four labels to maximize statistical power.) We chose these labels to magnify the difference between the ideologies of the candidates running in the primary; the progressive/conservative modifiers signal ideological strength. The full text variation is detailed in fig. S1. As we describe in Materials and Methods, we found no evidence that journalists believed that the candidate was fictitious.

Overall, we received responses from 18.3% of journalists (22% among those that did not bounce), which is slightly on the lower end of response rates in correspondence studies (30), but indistinguishable from correspondence studies of members of Congress [that have seen a 19% response rate; see (31)], mayors in the United States [10% response rate; see (32)], and elected officials in South Africa [21% response rate; see (33)]. (That our overall response rate was on the lower side likely reflects that many correspondence studies are conducted on elected officials who have staffs to help them respond to their emails; most journalists do not have such a luxury.)

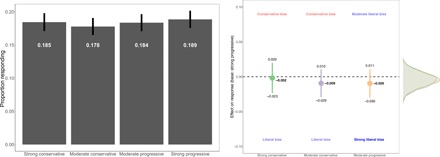

Figure 3 shows the results of our correspondence experiment. It displays the causal effect of candidate ideology on the probability of receiving a response to the campaign’s inquiry about setting up an interview to cover the candidate. To do so, it makes two comparisons. In the panel on the left, it shows mean response rates by treatment condition. In the panel on the right, it provides coefficient plots benchmarking response patterns to the base category of a strong progressive Democrat. (The conclusions that we are able to draw are the same if we use a different left-out category.)

Fig. 3. Effect of candidate ideology on journalist responses.

The figure displays raw response rates by treatment condition (left) and the coefficients from a regression that benchmarks the three treatments listed to a strong progressive (right). Bars (left) display mean levels; points (right) are coefficient estimates. Lines surrounding points/bars are 95% confidence intervals. Both are labeled in the figures. The figure also labels the direction of ideological biases, be they liberal or conservative. The distributions to the right show results from permutation tests that randomly shuffle the data and estimate a treatment effect for each shuffle. The model includes controls for journalist’s position, topical focus, gender, and percent Democrat in their constituency, along with state fixed effects. Model N = 13,443.
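The logic of such a permutation test can be sketched in a few lines. This is a simplified illustration under stated assumptions: it compares two conditions using a raw difference in response rates, whereas the model described in the caption also includes controls and state fixed effects. The function names, the two-sided P value rule, and the default of 1000 shuffles are illustrative choices.

```python
import random


def diff_in_response_rates(responded, treated):
    """Mean response among treated units minus mean response among the rest."""
    treated_outcomes = [y for y, d in zip(responded, treated) if d]
    control_outcomes = [y for y, d in zip(responded, treated) if not d]
    return (sum(treated_outcomes) / len(treated_outcomes)
            - sum(control_outcomes) / len(control_outcomes))


def permutation_test(responded, treated, n_shuffles=1000, seed=0):
    """Shuffle treatment labels to build a null distribution of effects."""
    rng = random.Random(seed)
    observed = diff_in_response_rates(responded, treated)
    labels = list(treated)  # copy so the caller's list is not modified
    null_effects = []
    for _ in range(n_shuffles):
        rng.shuffle(labels)  # breaks any link between treatment and outcome
        null_effects.append(diff_in_response_rates(responded, labels))
    # Two-sided P value: share of shuffles at least as extreme as observed.
    extreme = sum(abs(e) >= abs(observed) for e in null_effects)
    return observed, null_effects, extreme / n_shuffles
```

An observed estimate that sits in the middle of `null_effects` is what one would expect if the treatment labels carried no information about the outcome.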

As can be seen, there is no statistical or substantive difference in the probability of a journalist responding to the email based solely on the treatment conditions. Comparing the two poles, strong conservative candidates are, on average, a mere 0.2 percentage points less likely to get a response than strong progressive candidates. This effect is minuscule (being equivalent to 0.47% of an SD) and is far from significantly different from 0 (P = 0.87). (The same holds true comparing the other treatment conditions.) This null effect is very precisely estimated: Using equivalence testing (34), we can confidently (P < 0.05) rule out bias in favor of a progressive candidate greater than 2.35 percentage points (which is a paltry 6% of an SD). The right panel of Fig. 3 offers another way of seeing how notable the null is. There, we plot the distribution of coefficient estimates from 1000 permutation tests or random shuffles of the data. As can be seen, the coefficient estimates fall right in the middle of the distributions from random data shuffles. This suggests that our effects are no different from what we would see with random chance and no relationship between our independent (ideological treatment conditions) and dependent (response to the inquiry) variables. Another (imperfect) way to benchmark our effects is to compare them to other forms of bias shown by correspondence studies. While knowing what treatment is most comparable to a partisan manipulation is difficult, this approach allows us to get some sense of the substantive size of our effects. Although the literature has branched out in recent years, most correspondence studies have looked for racial discrimination (30, 35), thus making the evidentiary base for this form of bias the strongest in the correspondence study literature. According to a recent meta-analysis of these studies in (30), the average racial minority discriminatory effect in correspondence studies is 9.4 percentage points. That means that the maximum feasible size of liberal media bias (based on the bottom of our 95% confidence intervals for the treatment effect on the left) is only 24.4% of the size of the average level of discrimination toward minorities. Our average treatment effect is a paltry 2.1% of the meta-analytic pooled average treatment effect for racial minorities. This difference is not only highly statistically distinct but also substantively meaningful.
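For readers unfamiliar with equivalence testing, the following is a minimal sketch of the two one-sided tests (TOST) logic for a difference in two response rates, using a normal approximation. It is an illustration under stated assumptions, not the estimation reported here: it ignores the controls, fixed effects, and clustered SEs used in the models, and the counts in the usage example are hypothetical. The helper that converts a bound into SD units assumes that the SD in question is that of the binary response indicator.

```python
from math import sqrt
from statistics import NormalDist


def tost_two_proportions(x1, n1, x2, n2, margin):
    """TOST for whether |p1 - p2| is smaller than `margin`.

    Returns the difference in proportions and the TOST P value
    (the larger of the two one-sided P values).
    """
    p1, p2 = x1 / n1, x2 / n2
    diff = p1 - p2
    se = sqrt(p1 * (1 - p1) / n1 + p2 * (1 - p2) / n2)
    # H0 (lower): diff <= -margin; rejected when diff is well above -margin.
    p_lower = 1 - NormalDist().cdf((diff + margin) / se)
    # H0 (upper): diff >= +margin; rejected when diff is well below +margin.
    p_upper = NormalDist().cdf((diff - margin) / se)
    return diff, max(p_lower, p_upper)


def bound_in_sd_units(bound, response_rate):
    """Express a bound (as a proportion) relative to the SD of a binary outcome."""
    return bound / sqrt(response_rate * (1 - response_rate))


# Hypothetical counts for two arms of roughly equal size.
diff, p_tost = tost_two_proportions(x1=615, n1=3360, x2=608, n2=3360, margin=0.0235)
# A 2.35 percentage point bound relative to an 18.3% overall response rate.
share_of_sd = bound_in_sd_units(0.0235, 0.183)
```

A small TOST P value supports the claim that any true difference lies inside the chosen margin, which is a stronger statement than merely failing to reject a difference of zero.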

In short, despite being dominantly liberals/Democrats, journalists do not seem to be exhibiting liberal media bias (or conservative media bias) in what they choose to cover. This null is vitally important, showing that, overall, journalists do not display political gatekeeping bias in what they choose to cover.

A possible reason why we observe no discrimination in our correspondence experiment is that the ideological makeup of a community influences response patterns. Given market demand, a reporter working for a newspaper whose subscribers are conservative (for example) might feel more pressure to cover an emerging conservative candidate than they would an emerging progressive candidate, given their desire to bring in potential readers (and the accompanying additional revenue that would come with this).

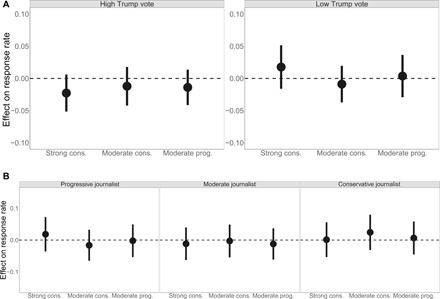

To test this possibility, we incorporate data from the 2016 presidential election and look for heterogeneous treatment effects by presidential vote share. (In the Supplementary Materials, we test for heterogeneities by journalist-perceived newspaper ideology; the results are the same.) Figure 4A shows the results from this test. It splits counties at the median level of presidential vote share. Figure 4A shows that there appears to be very little difference in treatment effects by the underlying composition of the surrounding area. None of the interaction terms are significant at traditional levels (moderate progressive, P = 0.42; moderate conservative, P = 0.85; and strong conservative, P = 0.081). These differences are also not substantively interesting; the effects among subgroups are all small, and (using equivalence testing) they all allow us to rule out even moderately sized effects. In short, we find that a journalist working for a newspaper in a county that voted for Trump is just as likely to respond to a request for an interview with a progressive candidate as they are to a request from a conservative candidate. This shows that even despite powerful economic incentives from the readership of one’s newspapers, journalists still show no signs of ideological gatekeeping bias in what they choose to cover.
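The median-split comparison can be sketched as follows. This is a simplified illustration under stated assumptions: it contrasts only two conditions with raw differences in response rates, whereas the models behind Fig. 4A are regressions with controls and all four treatment conditions. The record keys (`trump_share`, `conservative`, `responded`) are hypothetical names, and each half of the split must contain both conditions for the rates to be defined.

```python
from statistics import median


def response_gap(rows):
    """Response rate for conservative-candidate emails minus progressive ones."""
    cons = [r["responded"] for r in rows if r["conservative"]]
    prog = [r["responded"] for r in rows if not r["conservative"]]
    return sum(cons) / len(cons) - sum(prog) / len(prog)


def effects_by_median_split(rows):
    """Estimate the gap below and above the median Trump vote share."""
    cutoff = median(r["trump_share"] for r in rows)
    low = [r for r in rows if r["trump_share"] <= cutoff]
    high = [r for r in rows if r["trump_share"] > cutoff]
    gap_low = response_gap(low)
    gap_high = response_gap(high)
    # The interaction is how much the gap changes across the two halves.
    return {"low": gap_low, "high": gap_high, "interaction": gap_high - gap_low}
```

An interaction near zero in this sketch corresponds to the pattern reported above: the treatment effect does not depend on the partisan makeup of the surrounding area.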

Fig. 4. Experimental effect by social context and journalist ideology.

Correspondence experiment effects by newspaper readership and journalist ideology are shown. Both panels display the coefficients from a regression that benchmarks the three treatments listed to a strong progressive. Black lines are 95% confidence intervals; points are coefficient estimates. Both models control for journalist’s position, topical focus, and gender. Panel (A) breaks the regression models by Trump vote share in the 2016 election. Panel (B) models are broken into terciles by the Twitter ideology scores. Model N (top left) = 6717; model N (top right) = 6726; model N (bottom left) = 2233; model N (bottom center) = 2242; model N (bottom right) = 2307.

Although we do not find evidence of broad, systematic ideological bias or ideological bias depending on the ideology of the potential readership, one might expect that individual biases would shape response patterns. Put differently, while we do not find conservative or liberal candidates to be systematically disadvantaged overall, there are strong theoretical reasons to expect that political reporters will be more responsive to candidates with whom they share their political ideology. After all, research into the psychological underpinnings driving personal interactions suggests that individuals strongly prefer to associate with those with whom they are ideologically aligned (36). If this were occurring, then we might not see evidence of bias overall, but instead, we would see polarized coverage. If journalists were exhibiting biased behavior, then progressive-leaning journalists should be less likely to do a news story on conservative political candidates (and vice versa).

Figure 4B shows our treatment effects by journalist ideology (which are broken into terciles, with the bottom tercile representing the most liberal journalists, the middle representing more moderate journalists, and the top tercile representing relatively conservative journalists). As seen in Fig. 4B, we find that journalists, regardless of their own ideology, treat candidates from different ideological backgrounds the same. (We find the same result if we use self-reported ideology.)

Last, we replicate our finding of no liberal media bias in a conjoint experiment embedded in our original survey of journalists. The conjoint task presented journalist respondents with two pairs of hypothetical candidates who were announcing their candidacy for governor in the state. We indicated to journalists that they were in a situation where the timing of the announcements, their location, and the staffing limitations of the paper were such that the newspaper was unable to have a reporter at both announcements. After displaying basic information about each candidate, we asked respondents to indicate which of the two candidate announcements they would send a reporter to cover in person. We randomized the political party of the candidate along with other characteristics of that individual (see the Supplementary Materials for more information on this experiment). Each respondent was shown two scenarios.

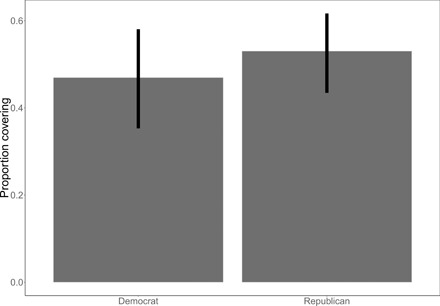

As we show in Fig. 5, when presented with various attributes of a potential story, the partisan nature of that story has no effect on whether journalists report that they would be willing to cover that story. If anything, they are more predisposed to cover Republican candidates. However, this effect is not statistically significant. Using equivalence testing, we can rule out any meaningful levels of liberal media bias with a very high degree of confidence. This suggests that the null effect that we observe is not unique to the specific nature of the correspondence study.

Fig. 5. Effect of candidate ideology on journalist responses (conjoint experiment).

The figure displays favorable coverage indicated by the two partisan conditions in the conjoint experiment that was embedded in our survey of journalists. Bars indicate mean levels; lines show 95% confidence intervals (P = 0.17). Experimental N = 3276. The other conditions randomized in the conjoint experiment had to do with the race, gender, candidate quality, social class, campaign manager connections and experience, and issue being addressed.

DISCUSSION

Narratives of the media being biased against conservatives and toward liberals have come to dominate modern discussions of the media. A majority of Americans think that the media favors Democrats and that journalists are liberal and identify with the Democratic Party. There is evidence that the public is not wrong; most journalists are far to the left of a typical American, regardless of whether we measure their ideology using surveys or the observed-behavior approach that we have used above. However, no research (up to this point) has explored whether ideological biases bleed into a crucial stage of the news-generation process: when journalists make vital decisions about what to cover. Here, we have shown that despite theoretical reasons for bias and popular narratives, journalists show no signs of ideological gatekeeping biases. Our results show that despite the overwhelmingly liberal composition of the media, there is no evidence of liberal media bias in the news that political journalists choose to cover.

These results paint a relatively positive view of the journalistic profession, one that is often missed in popular discussions about the potential for media bias. Some may wonder why we observe no evidence of political bias in what journalists choose to cover, when some previous studies have shown that there is political bias in how journalists cover the news. One possibility is that studies showing news media bias rely on national newspapers or cable news, whereas we study political reporting of both national and local news outlets. While it is hard to know for sure what mechanisms are driving our findings, our results are consistent with self-policing by journalists, oversight by newspaper managers [who may not be as liberal as journalists themselves; see (6, 37–39)] that constrains journalists in the news stories that they choose to cover (3, 37), or both. As we have discussed in our test for heterogeneous treatment effects, there are strong economic and individual ideological pressures for journalists to exhibit bias in what they choose to cover. The fact that they do not suggests that some other strong force, perhaps the ethos of ideological balance that is often discussed in journalism training programs, constrains these powerful individual and economic forces. Future work would do well to explore why these forces do not constrain how the news is covered in national news outlets.

Regardless of the exact reasons for a lack of ideological bias, our results provide concrete evidence that counters popular narratives advanced by political pundits, academics, and even President Trump himself. Although President Trump has repeatedly claimed that the media chooses to cover only topics that are detrimental to his campaign, presidency, and followers, we find little evidence to support the idea that journalists across the United States are ideologically biased in choosing what political news to cover.

MATERIALS AND METHODS

In the correspondence experiment, we clustered on city and newspaper to minimize potential stable unit treatment value assumption (SUTVA) violations among reporters, because larger newspapers have more reporters and reporters in smaller cities may contact each other even when they work for different newspapers. (For this reason, in our models in the paper, we cluster our SEs at the same level.) We then randomly assigned each journalist in the full sample to receive one of four possible emails. To avoid having our messages marked as spam, we sent out our emails in randomly ordered batches of 400 per day. To guard against other potential SUTVA violations, we made sure that journalists from the same newspaper received their email on the same day.
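The assignment and scheduling steps described above can be illustrated with a minimal Python sketch. It is not the authors' replication code: the arm labels, the function name `assign_and_schedule`, the input format, and the seed are all hypothetical, and the sketch assumes that the treatment draw is made independently for each journalist and that 400 is a soft daily cap (a single newspaper with more than 400 journalists would still be sent on one day).

```python
import random
from collections import defaultdict

# Hypothetical labels for the four email versions (not taken from the paper).
ARMS = ["email_1", "email_2", "email_3", "email_4"]
BATCH_SIZE = 400  # target number of emails per day, as described in the text


def assign_and_schedule(journalists, seed=0):
    """Assign an email version and a send day to each journalist.

    journalists: list of (journalist_id, newspaper) tuples.
    Returns (arm, day_of): two dicts keyed by journalist_id.
    """
    rng = random.Random(seed)

    # Assumption: each journalist independently draws one of the four emails.
    arm = {jid: rng.choice(ARMS) for jid, _ in journalists}

    # Group journalists by newspaper so a newsroom is never split across days.
    by_paper = defaultdict(list)
    for jid, paper in journalists:
        by_paper[paper].append(jid)

    # Put the newspapers in random order, then fill days up to the cap.
    papers = sorted(by_paper)
    rng.shuffle(papers)

    day_of = {}
    day, used = 0, 0
    for paper in papers:
        staff = by_paper[paper]
        # Start a new day if this newsroom would push the batch past the cap.
        if used > 0 and used + len(staff) > BATCH_SIZE:
            day += 1
            used = 0
        for jid in staff:
            day_of[jid] = day
        used += len(staff)
    return arm, day_of
```

Scheduling whole newsrooms rather than individuals is what keeps colleagues from receiving the request on different days, which is the spillover concern raised in the text.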

Although our requests were sent out almost 6 months after the initial survey, it is important to demonstrate that the requests were perceived as real rather than related to a particular research project. To gauge how the emails were received, we had a team of research assistants read and code all of the email responses. Our results suggest that the emails were perceived as credible. Over 75% of the responses included a follow-up question requesting more details about the individual’s candidacy (such as what specific district he was running in, whether he was running for state House or Senate, when and where he would announce his candidacy, and requests for more details on his professional background), 10% immediately tried to schedule an interview, 11% referred us to another department or journalist at the newspaper, and almost 3% requested a photograph that they could use in a story. None showed any indication that they believed the emails were part of a study or were noncredible.

We received Institutional Review Board (IRB) approval at both Cornell College and Brigham Young University-Idaho. The IRB determined that the deception and time required in our studies were minimal compared to the potential benefits of this study.

Acknowledgments

We are grateful for the feedback from scholars at the University of Michigan, Brigham Young University, and the Midwest Political Science Association’s (2019) Annual Meeting. We are also thankful to the following research assistants: A. Alvarino, W. Anderson, T. Buddle, M. Christiansen, D. Fernandez, A. Kohl, J. Nunamaker, M. Stern, Z. Stoll, and M. Valdez. Funding: The authors have no funding sources to disclose. Author contributions: All authors contributed equally to this project. H.J.G.H. and M.R.M. conceived the original survey of journalists and the conjoint experiment. H.J.G.H. collected the journalists’ email addresses and Twitter handles and carried out the original survey of journalists. M.R.M. performed the Twitter network analysis. H.J.G.H., J.B.H., and M.R.M. conceived the correspondence study. M.R.M. carried out the correspondence study. H.J.G.H., J.B.H., and M.R.M. conducted the analysis. H.J.G.H., J.B.H., and M.R.M. contributed to writing the manuscript. Competing interests: The authors declare that they have no competing interests. Data and materials availability: All data needed to evaluate the conclusions in the paper are present in the paper and/or the Supplementary Materials. Additional data related to this paper may be requested from the authors. The replication data/code are posted at the Harvard Dataverse site for this article.

SUPPLEMENTARY MATERIALS

Supplementary material for this article is available at http://advances.sciencemag.org/cgi/content/full/6/14/eaay9344/DC1

REFERENCES AND NOTES

- 1. R. A. Dahl, What political institutions does large-scale democracy require? Polit. Sci. Q. 120, 187–197 (2005).

- 2. A. Mitchell, K. Simmons, K. Matsa, L. Silver, “Publics globally want unbiased news coverage, but are divided on whether their news media deliver,” Pew Research Center, 2018.

- 3. W. Dean, “Understanding bias” (Technical Report, 2018).

- 4. Knight Foundation, “American Views: Trust, media and democracy” (2018).

- 5. S. DellaVigna, E. Kaplan, The Fox News effect: Media bias and voting. Q. J. Econ. 122, 1187–1234 (2007).

- 6. G. J. Martin, J. McCrain, Local news and national politics. Am. Polit. Sci. Rev. 113, 372–384 (2019).

- 7. G. J. Martin, A. Yurukoglu, Bias in cable news: Persuasion and polarization. Am. Econ. Rev. 107, 2565–2699 (2017).

- 8. A. Guess, B. Nyhan, J. Reifler, Selective exposure to misinformation: Evidence from the consumption of fake news during the 2016 US presidential campaign. Eur. Res. Counc. 9, 1–14 (2018).

- 9. D. M. J. Lazer, M. A. Baum, Y. Benkler, A. J. Berinsky, K. M. Greenhill, F. Menczer, M. J. Metzger, B. Nyhan, G. Pennycook, D. Rothschild, M. Schudson, S. A. Sloman, C. R. Sunstein, E. A. Thorson, D. J. Watts, J. L. Zittrain, The science of fake news. Science 359, 1094–1096 (2018).

- 10. S. Vosoughi, D. Roy, S. Aral, The spread of true and false news online. Science 359, 1146–1151 (2018).

- 11. Reporters Without Borders, “Worldwide round-up of journalists killed, detained, held hostage, or missing in 2018” (Technical Report, 2018).

- 12. B. Shapiro, Primetime Propaganda: The True Hollywood Story of How the Left Took Over Your TV (Broadside Books, 2011).

- 13. T. Groseclose, Left Turn: How Liberal Media Bias Distorts the American Mind (St. Martin’s Press, 2011).

- 14. K. Dautrich, T. H. Hartley, How the News Media Fail American Voters: Causes, Consequences, and Remedies (Columbia Univ. Press, 1999).

- 15. D. McQuail, Media Performance: Mass Communication and the Public Interest (Sage, 1992).

- 16. W. L. Miller, Media and Voters: The Audience, Content, and Influence of Press and the Television at the 1987 General Election (Oxford Univ. Press, 1991).

- 17. A. E. Boydstun, Making the News: Politics, the Media, and Agenda Setting (University of Chicago Press, 2013).

- 18. H. J. Hassell, The Party’s Primary: Control of Congressional Nominations (Cambridge Univ. Press, 2017).

- 19. P. J. Tichenor, G. A. Donohue, C. N. Olien, Mass media flow and differential growth in knowledge. Public Opin. Q. 34, 159–170 (1970).

- 20. G. King, B. Schneer, A. White, How the news media activate public expression and influence national agendas. Science 358, 776–780 (2017).

- 21. J. N. Druckman, The implications of framing effects for citizen competence. Polit. Behav. 23, 225–256 (2001).

- 22. T. Groeling, S. Kernell, Is network news coverage of the president biased? J. Polit. 60, 1063–1087 (1998).

- 23. L. Willnat, D. H. Weaver, American Journalist in the Digital Age: Key Findings (School of Journalism, Indiana Univ., 2014).

- 24. S. C. McGregor, L. Molyneux, Twitter’s influence on news judgment: An experiment among journalists. Journalism 2018, 10.1177/1464884918802975 (2018).

- 25. P. Barberá, Birds of the same feather tweet together: Bayesian ideal point estimation using Twitter data. Polit. Anal. 23, 76–91 (2015).

- 26. S. Allan, News Culture (McGraw-Hill, 2010).

- 27. D. Neumark, R. J. Banke, K. D. Van Nort, Sex discrimination in restaurant hiring: An audit study. Q. J. Econ. 111, 915–941 (1996).

- 28. L. Graves, B. Nyhan, J. Reifler, Understanding innovations in journalistic practice: A field experiment examining motivations for fact-checking. J. Commun. 66, 102–138 (2016).

- 29. J. M. Montgomery, B. Nyhan, M. Torres, How conditioning on posttreatment variables can ruin your experiment and what to do about it. Am. J. Polit. Sci. 62, 760–775 (2018).

- 30. M. Costa, How responsive are political elites?: A meta-analysis of experiments on public officials. J. Exp. Polit. Sci. 4, 241–254 (2017).

- 31. D. M. Butler, C. F. Karpowitz, J. C. Pope, A field experiment on legislators’ home styles: Service versus policy. J. Polit. 74, 474–486 (2012).

- 32. N. Carnes, J. Holbein, Do public officials exhibit social class biases when they handle casework? Evidence from multiple correspondence experiments. PLOS ONE 14, e0214244 (2019).

- 33. G. H. McClendon, Race and responsiveness: An experiment with South African politicians. J. Exp. Polit. Sci. 3, 60–74 (2016).

- 34. D. Lakens, Equivalence tests: A practical primer for t tests, correlations, and meta-analyses. Soc. Psychol. Personal. Sci. 8, 355–362 (2017).

- 35. L. Quillian, D. Pager, O. Hexel, A. H. Midtbøen, Meta-analysis of field experiments shows no change in racial discrimination in hiring over time. Proc. Natl. Acad. Sci. U.S.A. 114, 10870–10875 (2017).

- 36. S. Iyengar, G. Sood, Y. Lelkes, Affect, not ideology: A social identity perspective on polarization. Public Opin. Q. 76, 405–431 (2012).

- 37. W. Breed, Social control in the newsroom: A functional analysis. Soc. Forces 33, 326–335 (1955).

- 38. C. Budak, S. Goel, J. M. Rao, Fair and balanced? Quantifying media bias through crowdsourced content analysis. Public Opin. Q. 80, 250–271 (2016).

- 39. E. Bakshy, S. Messing, L. A. Adamic, Exposure to ideologically diverse news and opinion on Facebook. Science 348, 1130–1132 (2015).
