Abstract

Hearing loss is a widespread condition that is linked to declines in quality of life and mental health. Hearing aids remain the treatment of choice, but, unfortunately, even state-of-the-art devices provide only limited benefit for the perception of speech in noisy environments. While traditionally viewed primarily as a loss of sensitivity, it is now clear that hearing loss has additional effects that cause complex distortions of sound-evoked neural activity that cannot be corrected by amplification alone. Here we describe the effects of hearing loss on neural activity in order to illustrate the reasons why current hearing aids are insufficient and to motivate the use of new technologies to explore directions for improving the next generation of devices.

Keywords: hearing loss, hearing aids, auditory system, speech perception

Hearing loss is a serious problem without an adequate solution

Current estimates suggest that approximately 500 million people worldwide suffer from hearing loss [1]. This impairment is not simply an inconvenience: hearing loss impedes communication, leads to social isolation, and has been linked to increased risk of cognitive decline and mortality. In fact, a recent commission identified hearing loss as the most important modifiable risk factor for dementia, accounting for nearly 10% of overall risk [2].

Despite the severe consequences of hearing loss, only 10-20% of older people with significant impairment use a hearing aid [3]. Several factors contribute to this poor uptake (psychological, social, etc.), but one of the most important is the lack of benefit provided by current devices in noisy environments [4]. The common complaint of those with hearing loss, “I can hear you, but I can’t understand you”, is echoed by hearing aid users and non-users alike. Inasmuch as the purpose of a hearing aid is to facilitate communication and reduce social isolation, devices that do not enable the perception of speech in typical social settings are inadequate.

What does the ear do? The simple answer: amplification, compression, and frequency analysis

The cochlea transforms the mechanical signal that enters the ear into an electrical signal that is sent to the brain via the auditory nerve (AN; Figure 1A). Incoming sound causes vibrations of the basilar membrane (BM) that runs along the length of the cochlea. As the BM moves, the inner hair cells (IHCs) that are attached to it release neurotransmitter onto nearby AN fibers to elicit electrical activity (Figure 1B).

Figure 1. The view of cochlear function and dysfunction that is implicit in the design of current hearing aids.

(A) A schematic diagram illustrating the decomposition of incoming sound into its constituent frequencies on the cochlea. The frequency tuning of the cochlea (which is spiral shaped, but unrolled here for illustration) changes gradually along its length such that BM movement and AN activity are driven by high frequencies at the basal end, near the interface with the middle ear, and low frequencies at the apical end.

(B) A schematic diagram showing a cross-section of the cochlea, with key components labelled (adapted, with permission, from Ashmore, Physiol Rev, 88: 173–210, 2008).

(C) A schematic diagram illustrating the active amplification and compression provided by OHCs. OHCs amplify the BM movement elicited by weak sounds to compress the range of BM movement across all sound levels (left panel, black line) and make use of the full dynamic range of the AN (right panel, black line). Without the amplification provided by OHCs, sensitivity to weak sounds is lost completely, and the AN activity elicited by strong sounds is decreased.

(D) A schematic diagram illustrating frequency analysis in the cochlea. The top panel shows the frequency content of incoming sound consisting of 4 distinct frequencies. The bottom panel shows the AN activity elicited by the sound along the length of the cochlea (black). The colored lines indicate the preferred frequency of the AN fibers at each cochlear position. The positions are specified relative to the basal end of a typical human cochlea.

Weak sounds do not drive BM movement strongly enough to elicit AN activity and, thus, require active amplification by outer hair cells (OHCs), which provide feedback to reinforce the passive movement of the BM (Figure 1B). The amplification provided by OHCs decreases as sounds become stronger, resulting in a compression of incoming sound. This compression enables sound levels spanning more than 6 orders of magnitude to be encoded within the limited dynamic range of AN activity (Figure 1C, black lines).
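The level-dependent amplification described above can be illustrated with a toy input-output function. This is a minimal sketch rather than a published cochlear model: the piecewise-linear shape, the name `bm_response_db`, and all parameter values (60 dB of maximum gain, a 20 dB knee, a compressive slope of 0.25 dB per dB) are assumptions chosen only for illustration.

```python
def bm_response_db(level_db, max_gain_db=60.0, knee_db=20.0,
                   slope=0.25, ohc_function=1.0):
    """Toy BM response (dB, arbitrary reference) to a sound at level_db.

    ohc_function scales the active gain: 1.0 is a healthy ear and
    0.0 is an ear with no OHC amplification. All values are assumed.
    """
    # The passive BM response grows linearly: 1 dB of output per dB of input.
    passive_db = level_db
    gain_at_low_levels_db = ohc_function * max_gain_db
    if level_db <= knee_db:
        # Weak sounds receive the full active gain.
        gain_db = gain_at_low_levels_db
    else:
        # Above the knee the gain shrinks as level grows, never below zero,
        # so the response grows at only `slope` dB per dB (compression).
        gain_db = max(0.0, gain_at_low_levels_db
                      - (1.0 - slope) * (level_db - knee_db))
    return passive_db + gain_db


# Compare a healthy ear with one lacking OHC amplification.
for level in (0, 20, 60, 100):
    healthy = bm_response_db(level)
    impaired = bm_response_db(level, ohc_function=0.0)
    print(f"{level:3d} dB in: healthy {healthy:5.1f} dB, no OHCs {impaired:5.1f} dB")
```

In this sketch, a 100 dB range of input levels is squeezed into a 40 dB range of healthy responses, whereas the response without OHC gain simply tracks the input. The toy curves coincide at the highest levels, which is a simplification of the schematic in Figure 1C.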

The mechanical properties of the BM change gradually along its length, creating tonotopy -- a systematic variation in the sound frequency to which each point in the cochlea is preferentially sensitive. Because of tonotopy, the amplitude of BM movement and subsequent AN activity at different points along the cochlea reflect the power at which different frequencies are present in the incoming sound. In the parts of the cochlea that are preferentially sensitive to low frequencies, the frequency content of incoming sound is also reflected in phase-locked BM movement and AN activity that tracks the sound on a cycle-by-cycle basis. Thus, the signal sent to the brain by the ear is, to a first approximation, a frequency analysis (Figure 1D).
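The mapping between cochlear position and preferred frequency can be made concrete with the Greenwood function, a widely used empirical fit that is not part of the text above. The sketch below assumes the commonly quoted human constants (A = 165.4, a = 2.1, k = 0.88, with position expressed as a fraction of cochlear length measured from the apex); the function names are hypothetical.

```python
import math


def greenwood_frequency_hz(fraction_from_apex, A=165.4, a=2.1, k=0.88):
    """Preferred frequency (Hz) at a cochlear position.

    Position is a fraction of total length: 0.0 at the apex (low
    frequencies) and 1.0 at the base (high frequencies). The constants
    are the commonly quoted human values and are an assumption here.
    """
    if not 0.0 <= fraction_from_apex <= 1.0:
        raise ValueError("position must lie between 0 (apex) and 1 (base)")
    return A * (10.0 ** (a * fraction_from_apex) - k)


def greenwood_position(frequency_hz, A=165.4, a=2.1, k=0.88):
    """Inverse map: fraction of cochlear length from the apex."""
    return math.log10(frequency_hz / A + k) / a


# Where along the cochlea do a few frequencies map?
for f in (250, 1000, 4000, 8000):
    print(f"{f:5d} Hz -> {greenwood_position(f):.2f} of the length from the apex")
```

Under these assumed constants the map spans roughly 20 Hz at the apex to about 20 kHz at the base, consistent with the base-to-apex ordering of high to low frequencies shown in Figure 1A.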

What is hearing loss? The simple answer: decreased sensitivity

Hearing loss has many causes including genetic mutations, ototoxic drugs, noise exposure, and aging [1]. The most common forms of hearing loss are typically associated with a loss of sensitivity in which weak sounds no longer elicit any AN activity, while strong sounds elicit less AN activity than they would in a healthy ear (Figure 1C, gray lines). This loss of sensitivity most often results from the dysfunction of OHCs, which can suffer direct damage (sensory hearing loss) or be impaired indirectly due to degeneration of the stria vascularis, the heavily vascularized wall of the cochlea that provides the energy to support active amplification (metabolic hearing loss).

The effects of hearing loss are typically most pronounced in cochlear regions that are sensitive to high frequencies where OHCs normally provide the greatest amount of amplification. While a number of attempts have been made to identify distinct phenotypes of hearing loss, a recent systematic analysis of a large cohort revealed a continuum of patterns from flat loss that impacted all frequencies equally to sloping loss that increased from low to high frequencies [5].

Hearing aids restore sensitivity, but fail to restore normal perception

Most current hearing aids serve primarily to artificially replace the amplification and compression that are no longer provided by OHCs through multi-channel wide dynamic range compression (WDRC; see glossary). This approach enhances the perception of weak sounds, but, unfortunately, is not sufficient to restore the perception of speech in noisy environments [6,7]. Many current hearing aids also include additional features -- speech processors, directional microphones, frequency transforms, etc. -- that can be useful in certain situations but provide only modest additional benefits overall [8–12].

The assumption that is implicit in the design of current hearing aids is that hearing loss is primarily a loss of sensitivity that can be solved by simply restoring neural activity to its original level. However, this is a dramatic oversimplification: hearing loss does not simply weaken neural activity, it profoundly distorts it. Speech perception is dependent not only on the overall level of neural activity, but also on the specific patterns of activity across neurons over time [13]. Current hearing aids fail to restore normal perception because they fail to restore a number of important aspects of these patterns [14–17] (Figure 2, Key Figure).

Figure 2. Hearing aids must transform incoming sound to correct the distortions in neural activity patterns caused by hearing loss.

(A) A schematic diagram illustrating the distortion of the signal that is elicited in the brain by a damaged ear. In a healthy auditory system (top row), the word ‘Hello’ spoken at a moderate intensity elicits a specific pattern of activity across neurons over time and results in an accurate perception of the word ‘Hello’. In a damaged ear, the same word elicits activity that is both weaker overall and has a different pattern, resulting in impaired perception.

(B) A schematic diagram illustrating the correction of distorted neural activity by a hearing aid. With a hearing aid that provides only amplification (top row), the word ‘Hello’ spoken at a moderate intensity is amplified to a high intensity. This results in a restoration of the overall level of neural activity, but does not correct for the distortion in the pattern of activity across neurons over time and, thus, does not restore normal perception. An ideal hearing aid (bottom row) would transform the word ‘Hello’ into a different sound in order to restore not only the overall level of neural activity, but also the pattern of activity across neurons over time.

What does the ear do? The real answer: nonlinear signal processing

The idea that the ear performs a frequency analysis of incoming sound is insufficient because the cochlea is highly nonlinear. The amplification and compression provided by OHCs is a form of nonlinearity, but it is relatively simple and, at least in theory, can be restored by current hearing aids. However, each OHC is capable of modulating BM movement not only in the region of the cochlea to which it is attached, but also at other locations. Consequently, sound coming into a healthy ear is subject to complex nonlinear processing that creates cross-frequency interactions. Because of these interactions, the degree to which any particular frequency in an incoming sound is amplified depends not only on the power at that frequency, but also on the power at other frequencies.

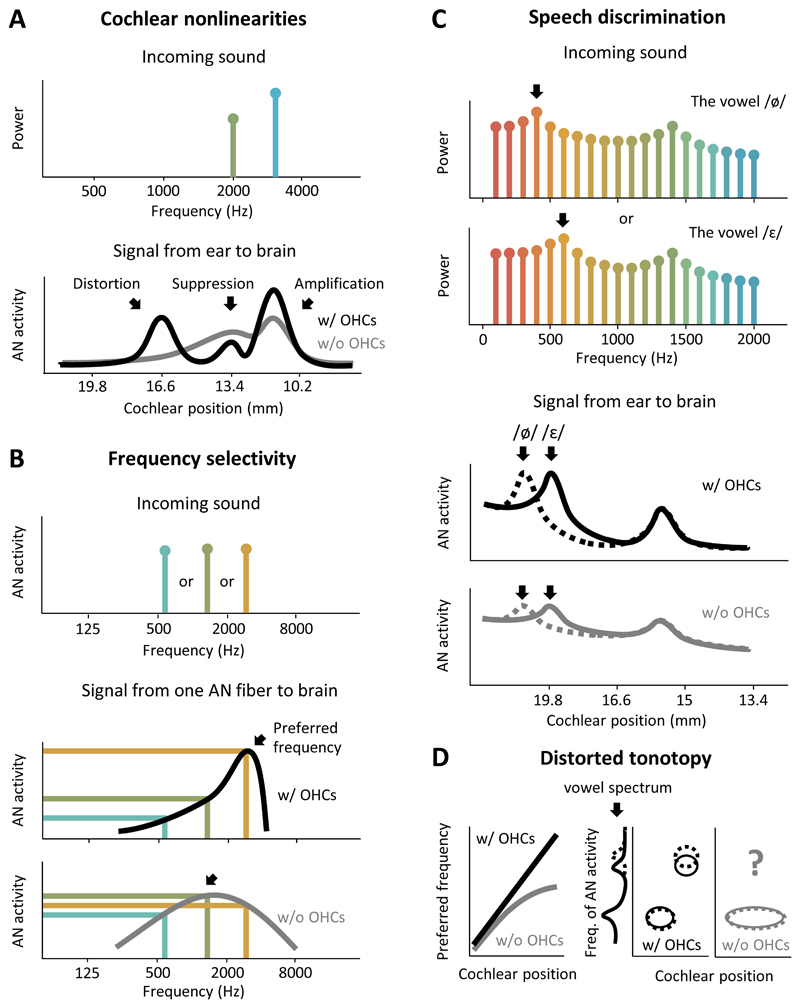

Because of cross-frequency interactions, the pattern of AN activity elicited by an incoming sound deviates substantially from that which would correspond to simple frequency analysis in a number of ways (Figure 3A, black line). One is the creation of distortion products: interactions between two frequencies that are present in an incoming sound can create additional BM movement and AN activity at a point in the cochlea that is normally sensitive to a third frequency that is not actually present in the sound. Another is suppression: the ability of OHCs at one location to reduce BM movement at nearby locations. This suppression sharpens frequency tuning and results in a local winner-take-all interaction on the BM that selectively amplifies the dominant frequencies in incoming sound. This selectivity is critical in noisy environments where the important frequencies in speech might otherwise be obscured [18–20].
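The creation of a distortion product can be demonstrated with a deliberately simple stand-in for cochlear nonlinearity. The sketch below assumes a memoryless cubic nonlinearity, y = x - c*x^3, which is a textbook device and not a model of OHC mechanics; the tone frequencies, the coefficient c, and all function names are illustrative assumptions. It shows only that a nonlinearity creates energy at a frequency (here 2*f1 - f2) that is absent from the input, and it does not attempt to capture suppression.

```python
import math


def tone_amplitude(signal, freq_hz, sample_rate):
    """Amplitude of the component at freq_hz via a single-bin Fourier
    projection. Exact when the signal holds a whole number of cycles."""
    n = len(signal)
    re = sum(x * math.cos(2 * math.pi * freq_hz * i / sample_rate)
             for i, x in enumerate(signal))
    im = sum(x * math.sin(2 * math.pi * freq_hz * i / sample_rate)
             for i, x in enumerate(signal))
    return 2.0 * math.hypot(re, im) / n


def two_tone(f1, f2, sample_rate, duration_s=1.0):
    """Sum of two unit-amplitude cosines."""
    n = int(sample_rate * duration_s)
    return [math.cos(2 * math.pi * f1 * i / sample_rate)
            + math.cos(2 * math.pi * f2 * i / sample_rate)
            for i in range(n)]


def cubic_nonlinearity(signal, c=0.1):
    """Toy memoryless nonlinearity y = x - c*x**3 (an assumption)."""
    return [x - c * x ** 3 for x in signal]


SAMPLE_RATE = 8000          # Hz, assumed
F1, F2 = 1000, 1200         # Hz, the two tones present in the input
DP = 2 * F1 - F2            # 800 Hz, not present in the input

sound = two_tone(F1, F2, SAMPLE_RATE)
response = cubic_nonlinearity(sound)

dp_in = tone_amplitude(sound, DP, SAMPLE_RATE)
dp_out = tone_amplitude(response, DP, SAMPLE_RATE)
print(f"amplitude at {DP} Hz: input {dp_in:.4f}, after nonlinearity {dp_out:.4f}")
```

For unit-amplitude tones, expanding the cubic term gives a component of amplitude 3c/4 at 2*f1 - f2, so the nonlinear response contains a third frequency even though the input contains only two.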

Figure 3. The loss of cochlear nonlinearities distorts the signal that the ear sends to the brain.

(A) A schematic diagram illustrating several important cochlear nonlinearities controlled by OHCs. The top panel shows the frequency content of an incoming sound consisting of 2 distinct frequencies, one of which is slightly stronger than the other. The bottom panel shows the AN activity elicited by the sound along the length of the cochlea (black). OHCs amplify the stronger frequency, suppress the weaker frequency, and create a distortion at a third frequency. Without OHCs (gray), these nonlinearities are eliminated and the signal that is sent to the brain from the ear is reduced to a simple, and only weakly selective, frequency analysis.

(B) A schematic diagram illustrating the effects of OHC dysfunction on the frequency selectivity of a single AN fiber. The top panel shows the frequency content of incoming sound consisting of one of 3 distinct frequencies. The bottom panels show the activity elicited in a single AN fiber by incoming sound as a function of frequency with (black) and without (gray) OHCs. OHCs amplify a particular preferred frequency (arrow) while suppressing nearby frequencies to provide sharp frequency tuning and high differential sensitivity, illustrated by large differences in the activity elicited by each of the three different frequencies. Without OHCs, frequency tuning is broad, the preferred frequency shifts toward the lower preferred frequency of the passive BM movement (arrow), and differential sensitivity is lost.

(C) A schematic diagram illustrating the effects of OHC dysfunction on the AN activity elicited by speech. The top panel shows the frequency content of two vowels, /ø/ and /τ/, which differ only in the position of their low-frequency peak (first formant). Note that the frequency axis in this panel is linear rather than logarithmic. The bottom panels show the AN activity elicited by the two vowels (solid, dashed) along the length of the cochlea with (black) and without (gray) OHCs. OHCs amplify the dominant frequencies in the vowels while suppressing other frequencies to selectively amplify the frequency peaks. Without OHCs, this selective amplification is lost and the difference in the AN activity elicited by the two vowels is greatly diminished.

(D) Schematic diagrams illustrating the effects of OHC dysfunction on tonotopy. As illustrated in the left panel, in a healthy ear (black) there is a gradual and consistent change in preferred frequency along the length of the cochlea. Without OHCs, the preferred frequency of each fiber shifts toward lower frequencies. Because OHC dysfunction is typically more pronounced in the regions of the cochlea that are sensitive to higher frequencies, this results in a distorted tonotopy in which low frequencies are overrepresented and AN fibers that were previously sensitive to high frequencies respond only to low frequencies. The consequences of this distorted tonotopy on the AN activity elicited by speech are illustrated in the center and right panels. In a healthy ear (black), the AN activity at each part of the cochlea is dominated by the nearest peak in the frequency spectrum of the incoming sound. If two vowels (solid and dashed) differ in their high-frequency peak (second formant), that difference will be reflected in the activity of AN fibers in the part of the cochlea that is preferentially sensitive to high frequencies. Without OHCs (gray), however, most of the cochlea becomes preferentially sensitive to low frequencies and information about the high-frequency peak is lost entirely.

What is hearing loss? The real answer: a profound distortion of neural activity patterns

Loss of cross-frequency interactions

Because cross-frequency interactions are dependent on OHCs, they are also eliminated by the same OHC dysfunction that decreases sensitivity. As a result, the AN activity patterns that are sent to the brain from a damaged ear are qualitatively different from the patterns that the brain has learned to expect from a healthy ear (Figure 3A, gray line). Unfortunately, these distorted patterns do not provide a sufficient basis for perception in noisy environments: without the nonlinear processing provided by cross-frequency interactions, the patterns elicited by different sounds are less distinct and less robust to background noise [21].

OHC amplification and suppression sharpen the frequency tuning of the BM such that AN fibers become highly selective for their preferred frequency (Figure 3B, black line). This sharp tuning enables the entire dynamic range of each fiber to be utilized on a narrow range of frequencies such that different frequencies are easily distinguished based on the activity that they elicit. However, when OHC function is impaired, the BM loses its sharp tuning and AN fibers use less of their dynamic range on a wider range of frequencies (Figure 3B, gray line). For complex sounds such as speech, this results in a smearing of the activity pattern across AN fibers, making it difficult for the brain to differentiate between the patterns elicited by similar sounds, especially in noisy environments [21,22] (Figure 3C).

OHC impairment also causes a shift in the preferred frequency of each fiber toward the lower preferred frequency of the passive BM movement (Figure 3B, arrows). Because OHC impairment is typically more pronounced in regions of the cochlea that are sensitive to higher frequencies, this results in distorted tonotopy in which much of the cochlea is sensitive to only low frequencies [23] (Figure 3D). This distortion greatly reduces the information that the brain receives about high frequencies, which are critical for the perception of speech in noisy environments [24].

Hidden hearing loss

In addition to their effects on OHCs, many forms of hearing loss impact the AN itself [25]. In particular, recent studies have drawn attention to a previously unrecognized form of AN degeneration: damage to the peripheral axon or the IHC synaptic terminal (Figure 4A), which results in a loss of function. This synaptopathy can occur long before loss of the AN cell body itself [26,27] and has been termed “hidden hearing loss” [28] because its effects are not evident in standard clinical audiometric tests. These tests measure only sensitivity to weak sounds, while hidden hearing loss appears to be selective for those AN fibers with a high activation threshold that are sensitive only to strong sounds [29].

Figure 4. Hidden hearing loss distorts the neural activity elicited by strong sounds, particularly in noisy environments.

(A) A schematic diagram showing the anatomy of the AN. The AN is composed of bipolar spiral ganglion neurons (SGNs). Each SGN sends its peripheral axon to synapse with an IHC and its central axon to synapse with neurons in the cochlear nucleus of the brainstem. In hidden hearing loss (bottom row), there is a degeneration of the IHC synapses and peripheral axons, but the SGN cell bodies and central axons remain largely intact. This degeneration is selective for high-threshold fibers (colors indicate fiber threshold as in C). The box indicates the region shown in B.

(B) An image showing a degeneration of AN fiber terminals in the cochlea of a 67-year-old female (adapted, with permission, from Viana et al., Hearing Res, 327: 78-88, 2015). The top panel in each column shows the maximum projection of a confocal z-stack immunostained for neurofilament (green). The bottom panel in each column shows a cross-section through the image stack at the location denoted by the dashed line. The left and right columns show images from the regions of the cochlea that were preferentially sensitive to 1 kHz and 8 kHz, respectively. The number of fiber terminals in both regions is significantly below that which would be expected based on SGN cell body counts, but while the excess terminal loss in the left column is relatively mild, the excess terminal loss in the right column is severe. The scale bar indicates 50 μm.

(C) A schematic diagram showing AN activity as a function of sound level for fibers with different thresholds (colors) and for the entire fiber population (black, gray). In a healthy ear (left panel), fibers with different thresholds provide differential sensitivity across all sound levels. In an ear with hidden hearing loss (right panel), selective degeneration of high-threshold fibers results in a loss of differential sensitivity to changes in amplitude at high sound levels.

(D) A schematic diagram showing the effects of hidden hearing loss on the signal that the ear sends to the brain. The left panels show the level of incoming sound as a function of time. The middle panels show the AN activity as a function of sound level for fibers with three different thresholds and the entire fiber population (colors as in C). The right panels show the AN activity over time for each fiber and the entire fiber population with and without hidden hearing loss (colors as in C). Without high-threshold fibers, the brain receives little information about amplitude modulations in strong sounds in a quiet environment (top row), or about amplitude modulations in any sound in a noisy environment (bottom row).

Even in a healthy ear, OHC amplification is not sufficient to compress incoming sound into the dynamic range of an individual AN fiber. Thus, differential sensitivity across a wide range of sound levels is achieved only through dynamic range fractionation -- parallel processing in different populations of fibers, each of which has a different activation threshold and provides sensitivity over a relatively small range (Figure 4C). Because high-threshold fibers provide differential sensitivity to strong sounds, their loss has important implications for the perception of speech in noisy environments [30]. Strong sounds saturate low-threshold fibers such that they become maximally active and are no longer sensitive to small changes in sound amplitude (Figure 4D; note that information about sound frequency may still be transmitted by these fibers through their temporal patterns). Thus, when high-threshold fibers are compromised, changes in the amplitude of strong sounds are poorly reflected in the signal that the ear sends to the brain. Direct evidence linking hidden hearing loss to perceptual deficits in humans is still lacking; however, the indirect evidence that is available from humans is largely consistent with the direct evidence from animals [31,32] and the renewed interest in this area will likely lead to further advances in the near future.
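Dynamic range fractionation, and the consequence of losing high-threshold fibers, can be sketched with toy rate-level functions. Everything in this example is an illustrative assumption: the piecewise-linear fiber response, the 30 dB dynamic range per fiber, the three thresholds, and the function names. It is meant only to show why such a loss leaves sensitivity to weak sounds untouched while removing differential sensitivity at high levels.

```python
def fiber_rate(level_db, threshold_db, dynamic_range_db=30.0, max_rate=1.0):
    """Toy rate-level function: silent below threshold, saturated
    dynamic_range_db above it, and linear in between (assumed shape)."""
    x = (level_db - threshold_db) / dynamic_range_db
    return max_rate * min(1.0, max(0.0, x))


def population_rate(level_db, thresholds_db):
    """Summed activity of fibers with the given thresholds."""
    return sum(fiber_rate(level_db, t) for t in thresholds_db)


def sensitivity(level_db, thresholds_db, step_db=5.0):
    """Change in population activity for a small increase in level."""
    return (population_rate(level_db + step_db, thresholds_db)
            - population_rate(level_db, thresholds_db))


# Assumed thresholds (dB) for low-, medium-, and high-threshold fibers.
HEALTHY = [0.0, 30.0, 60.0]
HIDDEN_LOSS = [0.0, 30.0]   # high-threshold fibers lost

for level in (10, 40, 70):
    print(f"{level} dB: healthy {sensitivity(level, HEALTHY):.3f}, "
          f"hidden loss {sensitivity(level, HIDDEN_LOSS):.3f}")
```

In this sketch the two populations respond identically to weak and moderate sounds, which is why a test of detection thresholds would not reveal the loss, but at 70 dB the remaining fibers are saturated and a 5 dB change in level produces no change in population activity.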

Brain plasticity

The effects of hearing loss also extend beyond the ear into the brain itself [33]. One widely observed effect of hearing loss is a decrease in inhibitory tone, mediated by changes in GABAergic and glycinergic neurotransmission throughout the brain. Hearing loss weakens the signal from the ear to the brain, and the subsequent downregulation of inhibitory neurotransmission is thought to be a form of homeostatic plasticity that effectively amplifies the input from the ear to restore brain activity to its original level [34]. This decrease in inhibition can improve some aspects of perception (e.g. the detection of weak sounds), but it may also have unfortunate consequences.

One effect that is of particular relevance to hearing aids is loudness recruitment, an abnormally rapid growth in brain activity (and, thus, perceived loudness) with increasing sound level [35]. This loudness recruitment distorts fluctuations in sound level that are critical for speech perception [36] and, when combined with hearing loss, leaves only a small range of levels in which sounds are both audible and comfortable. The plasticity that follows hearing loss may also impair the perception of speech in other ways [37]; for example, if the degree of hearing loss varies with frequency, as is often the case, plasticity can also result in a reorganization of the tonotopic maps within the brain, further distorting the representation of the frequencies for which the loss of sensitivity is largest [38].

Central processing deficits

The most commonly observed auditory deficit with a distinct central component is impaired temporal processing, e.g. failure to detect a short pause within an ongoing sound [39], which is highly dependent on the balance between excitation and inhibition within the brain [40]. Impaired temporal processing decreases sensitivity to interaural time differences (ITDs) [41,42] and prevents the use of spatial cues to solve the so-called “cocktail party problem” of separating out one talker from a group [43]. Hearing aids do little to improve sound localization and, indeed, often make matters worse by distorting spatial cues [41,44].

Temporal processing is also critical for speech perception independent of localization. Much of speech perception in noisy environments appears to be mediated by listening in the ‘dips’ -- short periods during which the noise is weak. Temporal processing also allows multiple talkers to be separated by voice pitch, which is essential for solving the cocktail party problem. Hearing loss impairs the ability to perceive small differences in pitch and, importantly, to separate two talkers based on voice pitch [45–47]. Impaired pitch-processing arises partly from the cochlear dysfunction discussed above [47,48], but changes in central brain areas also appear to play a role [49,50].

Beyond auditory processing deficits: the role of cognitive factors

The combined peripheral and central effects of hearing loss described above result in a distorted neural representation of speech. However, the perceptual problems suffered by many listeners, particularly those who are older, often go far beyond those that would be predicted based on hearing loss alone, even when impairments in the processing of both weak and strong sounds are considered [49]. In recent years, it has become clear that the ultimate impact of a distorted neural representation on speech perception, as well as the efficacy of attempts to correct it, are strongly dependent on cognitive factors [51].

The past decade has seen the development of a conceptual model for understanding the interaction between auditory and cognitive processes during speech perception [52,53]. During active listening, neural activity patterns from the central auditory system are sent to language centers where they are matched to stored representations of different speech elements. When listening to speech in quiet through a healthy auditory system, the match between the incoming neural activity patterns and the appropriate stored representations occurs automatically on a syllable-by-syllable basis, and requires little or no contribution from cognitive processes. However, when listening to speech in a noisy background through an impaired auditory system, the incoming neural activity patterns will be distorted and the match to stored representations may no longer be clear. This problem may be compounded during long-term hearing loss as stored representations become less robust [54].

When the match between incoming neural activity patterns and stored representations is not clear, cognitive processes are engaged: executive function focuses selective attention toward the speaker of interest and away from other sounds to reduce interference from background noise; working memory stores neural activity patterns for several seconds so that information can be integrated across multiple syllables; linguistic circuits take advantage of contextual cues to narrow the set of possible matches and infer missing words. This model explains why much of the variance in speech perception performance in older listeners is explained by differences in cognitive function [49,55]: high cognitive function can compensate for distortions in incoming neural activity patterns, while low cognitive function can compound them.

Importantly, the effects of cognitive function on speech perception persist even with hearing aids. Many of the advanced processing strategies that are used by modern hearing aids can distort incoming speech. While listeners with high cognitive function may be able to ignore these distortions and take advantage of the improvements in sound quality, those with low cognitive function may find the distortions distracting [56,57]. Our understanding of the impact of cognitive factors on the efficacy of hearing aids has advanced dramatically in recent years; while many questions remain unresolved, there are already a number of issues that should be considered when designing new devices (Box 1).

Box 1: Cognitive factors and hearing aid efficacy.

Recent advances in our understanding of the interactions between auditory and cognitive processes during speech perception present a number of opportunities for improving hearing aid efficacy.

Improving the efficacy of current hearing aids

Aggressive signal processing strategies that distort the acoustic features of incoming speech seem to largely benefit listeners with high cognitive function. Can cognitive measures be included in hearing aid fitting to determine the optimal form of signal processing for a given listener? What are the appropriate clinical tests of cognitive function for this purpose?

Improving the efficacy of future hearing aids

The design of new signal processing strategies should be informed by our new understanding of cognitive factors. Are distortions of some acoustic features more distracting than distortions of others? Are certain combinations of distortions particularly distracting? Furthermore, the benefit of any signal processing strategy for a given listener may vary with the degree to which cognitive processes are engaged. Can new hearing aids be designed to control signal processing dynamically based on cognitive load? Can cognitive load be estimated accurately through analysis of incoming sound, or through simultaneous measurements of physiological signals?

Improving rehabilitation and training programs

If cognitive function is a major determinant of hearing aid efficacy, then cognitive training may have the potential to improve speech perception. Do the benefits of cognitive training transfer to improved speech perception for hearing aid users? Can cognitive training help listeners to make use of signal processing strategies that they would otherwise find distracting? It is also possible that cognitive training in the earliest stages of hearing loss may be beneficial. Can cognitive training before hearing aid use improve initial and/or ultimate efficacy?

Concluding remarks and future perspectives

To restore normal auditory perception, hearing aids must not only provide amplification, but also transform incoming sound to correct the distortions in neural activity that result from the loss of cross-frequency interactions in the cochlea, hidden hearing loss, brain plasticity, and central processing deficits. This is, of course, much easier said than done. First of all, with extensive cochlear damage -- e.g. dead regions where IHCs are lost [58] -- full restoration of perception may not be possible (see Outstanding Questions). Even in people with only mild or moderate impairment, identifying the transformation required to create the desired neural activity is extremely difficult.

Fortunately, there are several recent advances that may facilitate progress. Our understanding of the distortions caused by hearing loss is rapidly advancing [59], and as the nature of these distortions becomes clearer it will be easier to identify transformations to compensate for them. It should also be possible to take advantage of new techniques for machine learning that are already transforming other areas of medicine [60]. Deep neural networks that can learn complex nonlinear relationships directly from data may be able to identify transformations that have eluded human engineers. The data requirements for these approaches exceed current experimental capabilities, but new technology for large-scale recording of neural activity may be able to satisfy them [61].

Large-scale recordings of neural activity can also be used to tackle another major challenge: the idiosyncratic nature of hearing loss. Every individual will suffer from a different pattern of cochlear damage, resulting in a unique distortion of neural activity. However, because studies of neural activity are typically based on averaging small-scale recordings across individuals, we do not yet have the knowledge required to treat each individual optimally in a personalized manner. Large-scale recordings may help to overcome this problem by allowing for a complete characterization of activity in each individual. This information should also improve our ability to infer the pattern of underlying cochlear damage from non-invasive or minimally invasive clinical tests [62].

Since hearing aids are likely to continue to be the primary treatment for hearing loss for years to come, it is critical that we continue to work toward developing devices that can restore normal auditory perception. Achieving this goal will be challenging, and hearing aids may never be sufficient for those with severe cochlear damage. But if the next generation of devices is designed to treat hearing loss as a distortion of activity patterns in the brain, rather than a loss of sensitivity in the ear, dramatic improvements for those with mild or moderate impairment are possible. Together with higher uptake due to increasing social acceptance of wearable devices, improved access through decreased regulation [63], and the development of over-the-counter personal sound amplification products (PSAPs) [64], we have an opportunity to improve the health and wellbeing of a huge number of people in the near future.

Glossary

Multi-channel wide dynamic range compression (WDRC)

The processing scheme used in most current hearing aids. In this scheme, the amount of amplification and compression provided by the hearing aid depends on the frequency of the incoming sound. In a typical hearing aid fitting procedure, the loss of sensitivity is measured at several different frequencies, and the amount of amplification and compression provided by the hearing aid for each frequency is adjusted according to a prescribed formula to improve audibility without causing discomfort.
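
As a rough illustration of the scheme described above, the following minimal sketch computes the static input/output level for a few frequency channels. The channel centre frequencies, gains, compression thresholds, and compression ratios are hypothetical values chosen only for illustration; they are not taken from any prescription formula, and real devices also apply time-varying (attack/release) smoothing that is omitted here.

```python
from dataclasses import dataclass


@dataclass
class Channel:
    """Hypothetical settings for one frequency channel (illustrative only)."""
    center_hz: float     # nominal centre frequency of the channel
    gain_db: float       # gain applied to sounds below the compression threshold
    threshold_db: float  # input level (dB SPL) above which compression begins
    ratio: float         # compression ratio: dB of input change per dB of output change


def wdrc_output_level(input_db: float, ch: Channel) -> float:
    """Static input/output rule for a single channel, in dB.

    Below the threshold the channel is linear (fixed gain). Above it, each
    additional dB of input adds only 1/ratio dB of output, so quiet sounds
    receive more gain than loud ones.
    """
    if input_db <= ch.threshold_db:
        return input_db + ch.gain_db
    excess_db = input_db - ch.threshold_db
    return ch.threshold_db + ch.gain_db + excess_db / ch.ratio


# Assumed example fitting: more gain and more compression at high frequencies,
# where the loss of sensitivity is assumed to be greater.
channels = [
    Channel(center_hz=500.0, gain_db=10.0, threshold_db=50.0, ratio=1.5),
    Channel(center_hz=2000.0, gain_db=20.0, threshold_db=45.0, ratio=2.0),
    Channel(center_hz=4000.0, gain_db=30.0, threshold_db=40.0, ratio=3.0),
]

if __name__ == "__main__":
    for ch in channels:
        for level_db in (40.0, 60.0, 80.0):
            out_db = wdrc_output_level(level_db, ch)
            print(f"{ch.center_hz:6.0f} Hz: {level_db:4.0f} dB in, {out_db:5.1f} dB out")
```

In this sketch the effective gain in each channel shrinks as the input level rises, which is how such a scheme can improve audibility for soft sounds without making loud sounds uncomfortable.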

Voice pitch

The primary frequency of vocal cord vibration. Typical values for men, women, and children are 125 Hz, 200 Hz, and 275 Hz, respectively. However, voice pitch varies widely across individuals and, thus, is an important cue for solving the “cocktail party problem” of separating the voices of multiple talkers. The processing of pitch relies on mechanisms in the cochlea and central auditory areas that are compromised by hearing loss.

Interaural time difference (ITD)

The primary cue for the localization of low-frequency sounds such as speech. When a sound reaches one ear before the other, the ITD indicates the location in space from which the sound originated. However, even when sounds are located to the side of the head, ITDs are extremely small (< 1 ms); thus, sensitivity to ITDs relies on highly precise temporal processing in central auditory areas that is compromised by hearing loss.
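
To give a sense of the magnitudes involved, the sketch below estimates the ITD using the classic spherical-head (Woodworth) approximation, ITD = (a/c)(θ + sin θ). This model and the parameter values (head radius a of about 8.75 cm, speed of sound c of about 343 m/s) are assumptions introduced here for illustration and are not part of the original text; the approximation applies to azimuths θ between 0° (straight ahead) and 90° (directly to one side).

```python
import math

# Assumed illustrative constants (not from the original text).
HEAD_RADIUS_M = 0.0875        # approximate radius of an adult head
SPEED_OF_SOUND_M_S = 343.0    # speed of sound in air at about 20 degrees C


def itd_seconds(azimuth_deg: float) -> float:
    """Spherical-head (Woodworth) estimate of the ITD for a distant source.

    azimuth_deg is the source angle from straight ahead, between 0 and 90.
    """
    if not 0.0 <= azimuth_deg <= 90.0:
        raise ValueError("azimuth_deg must be between 0 and 90")
    theta = math.radians(azimuth_deg)
    return (HEAD_RADIUS_M / SPEED_OF_SOUND_M_S) * (theta + math.sin(theta))


if __name__ == "__main__":
    for azimuth in (0.0, 15.0, 45.0, 90.0):
        print(f"azimuth {azimuth:4.0f} deg: ITD = {itd_seconds(azimuth) * 1e6:6.1f} microseconds")
```

Under these assumptions, the estimate stays below 1 ms even for a source directly to the side, which illustrates why the underlying temporal processing must be so precise.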

Personal sound amplification product (PSAP)

A hearing device that is available over-the-counter and is not specifically labeled as a treatment for hearing loss. PSAPs are generally less expensive than hearing aids, but use many of the same technologies and can achieve comparable performance.

Outstanding questions

How good can a hearing aid possibly be, i.e. what is the maximum perceptual improvement that an ideal hearing aid can achieve for a given level or form of hearing loss?

What features of neural activity patterns are critical for the perception of speech in noisy environments and how are they distorted by hearing loss?

Do different forms of hearing loss, e.g. noise-induced or age-related, result in different distortions in neural activity patterns?

Can specific patterns of distortion in neural activity be inferred from non-invasive or minimally invasive clinical tests? How can hearing aids be personalized to correct specific distortions?

How should an ideal hearing aid transform incoming sound to elicit neural activity patterns that restore normal perception?

Can the performance of a hearing aid be improved through training or rehabilitation programs that facilitate beneficial plasticity in central auditory areas? Can the early adoption of hearing aids before significant hearing loss prevent the occurrence of detrimental plasticity in central auditory areas?

Trends Box

Hearing loss is now widely recognized as a major cause of disability and a primary risk factor for dementia, but most cases still go untreated. Uptake of hearing aids is poor, partly because they provide little benefit in typical social settings.

The effects of hearing loss on neural activity in the ear and brain are complex and profound. Current hearing aids can restore overall activity levels to normal, but are ultimately insufficient because they fail to compensate for distortions in the specific patterns of neural activity that encode information about speech.

Recent advances in electrophysiology and machine learning, together with a changing regulatory landscape and increasing social acceptance of wearable devices, should improve the performance and uptake of hearing aids in the near future.

Acknowledgements

We thank A. Forge and J. Ashmore for helpful discussions. This work was supported in part by the Wellcome Trust (200942/Z/16/Z) and the US National Science Foundation (NSF PHY-1125915).

References

- 1. Wilson BS, et al. Global hearing health care: new findings and perspectives. Lancet Lond Engl. 2017. doi: 10.1016/S0140-6736(17)31073-5.

- 2. Livingston G, et al. Dementia prevention, intervention, and care. Lancet Lond Engl. 2017. doi: 10.1016/S0140-6736(17)31363-6.

- 3. Davis A, et al. Aging and Hearing Health: The Life-course Approach. The Gerontologist. 2016;56(2):S256–267. doi: 10.1093/geront/gnw033.

- 4. McCormack A, Fortnum H. Why do people fitted with hearing aids not wear them? Int J Audiol. 2013;52:360–368. doi: 10.3109/14992027.2013.769066.

- 5. Allen PD, Eddins DA. Presbycusis phenotypes form a heterogeneous continuum when ordered by degree and configuration of hearing loss. Hear Res. 2010;264:10–20. doi: 10.1016/j.heares.2010.02.001.

- 6. Larson VD, et al. Efficacy of 3 commonly used hearing aid circuits: A crossover trial. NIDCD/VA Hearing Aid Clinical Trial Group. JAMA. 2000;284:1806–1813. doi: 10.1001/jama.284.14.1806.

- 7. Humes LE, et al. A comparison of the aided performance and benefit provided by a linear and a two-channel wide dynamic range compression hearing aid. J Speech Lang Hear Res JSLHR. 1999;42:65–79. doi: 10.1044/jslhr.4201.65.

- 8. Brons I, et al. Effects of noise reduction on speech intelligibility, perceived listening effort, and personal preference in hearing-impaired listeners. Trends Hear. 2014;18. doi: 10.1177/2331216514553924.

- 9. Cox RM, et al. Impact of advanced hearing aid technology on speech understanding for older listeners with mild to moderate, adult-onset, sensorineural hearing loss. Gerontology. 2014;60:557–568. doi: 10.1159/000362547.

- 10. Hopkins K, et al. Benefit from non-linear frequency compression hearing aids in a clinical setting: The effects of duration of experience and severity of high-frequency hearing loss. Int J Audiol. 2014;53:219–228. doi: 10.3109/14992027.2013.873956.

- 11. Magnusson L, et al. Speech recognition in noise using bilateral open-fit hearing aids: the limited benefit of directional microphones and noise reduction. Int J Audiol. 2013;52:29–36. doi: 10.3109/14992027.2012.707335.

- 12. Picou EM, et al. Evaluation of the effects of nonlinear frequency compression on speech recognition and sound quality for adults with mild to moderate hearing loss. Int J Audiol. 2015;54:162–169. doi: 10.3109/14992027.2014.961662.

- 13. Young ED. Neural representation of spectral and temporal information in speech. Philos Trans R Soc B Biol Sci. 2008;363:923–945. doi: 10.1098/rstb.2007.2151.

- 14. Moore BC. Perceptual consequences of cochlear hearing loss and their implications for the design of hearing aids. Ear Hear. 1996;17:133–161. doi: 10.1097/00003446-199604000-00007.

- 15. Sachs MB, et al. Biological basis of hearing-aid design. Ann Biomed Eng. 2002;30:157–168. doi: 10.1114/1.1458592.

- 16. Schilling JR, et al. Frequency-shaped amplification changes the neural representation of speech with noise-induced hearing loss. Hear Res. 1998;117:57–70. doi: 10.1016/s0378-5955(98)00003-3.

- 17. Dinath F, Bruce IC. Hearing aid gain prescriptions balance restoration of auditory nerve mean-rate and spike-timing representations of speech. Conf Proc IEEE Eng Med Biol Soc; 2008. pp. 1793–1796.

- 18. Recio-Spinoso A, Cooper NP. Masking of sounds by a background noise--cochlear mechanical correlates. J Physiol. 2013;591:2705–2721. doi: 10.1113/jphysiol.2012.248260.

- 19. Sachs MB, Young ED. Effects of nonlinearities on speech encoding in the auditory nerve. J Acoust Soc Am. 1980;68:858–875. doi: 10.1121/1.384825.

- 20. Sachs MB, et al. Auditory nerve representation of vowels in background noise. J Neurophysiol. 1983;50:27–45. doi: 10.1152/jn.1983.50.1.27.

- 21. Young ED. Neural Coding of Sound with Cochlear Damage. In: Le Prell CG, et al., editors. Noise-Induced Hearing Loss. Vol. 40. Springer; New York: 2012. pp. 87–135.

- 22. Baer T, Moore BCJ. Effects of spectral smearing on the intelligibility of sentences in noise. J Acoust Soc Am. 1993;94:1229–1241. doi: 10.1121/1.408640.

- 23. Henry KS, et al. Distorted Tonotopic Coding of Temporal Envelope and Fine Structure with Noise-Induced Hearing Loss. J Neurosci. 2016;36:2227–2237. doi: 10.1523/JNEUROSCI.3944-15.2016.

- 24. Moore BCJ. A review of the perceptual effects of hearing loss for frequencies above 3 kHz. Int J Audiol. 2016;0:1–8. doi: 10.1080/14992027.2016.1204565.

- 25. Liberman MC, Kujawa SG. Cochlear synaptopathy in acquired sensorineural hearing loss: Manifestations and mechanisms. Hear Res. 2017;349:138–147. doi: 10.1016/j.heares.2017.01.003.

- 26. Felix H, et al. Degeneration pattern of human first-order cochlear neurons. Adv Otorhinolaryngol. 2002;59:116–123. doi: 10.1159/000059249.

- 27. Sergeyenko Y, et al. Age-related cochlear synaptopathy: an early-onset contributor to auditory functional decline. J Neurosci. 2013;33:13686–13694. doi: 10.1523/JNEUROSCI.1783-13.2013.

- 28. Schaette R, McAlpine D. Tinnitus with a normal audiogram: physiological evidence for hidden hearing loss and computational model. J Neurosci. 2011;31:13452–13457. doi: 10.1523/JNEUROSCI.2156-11.2011.

- 29. Furman AC, et al. Noise-induced cochlear neuropathy is selective for fibers with low spontaneous rates. J Neurophysiol. 2013;110:577–586. doi: 10.1152/jn.00164.2013.

- 30. Costalupes JA, et al. Effects of continuous noise backgrounds on rate response of auditory nerve fibers in cat. J Neurophysiol. 1984;51:1326–1344. doi: 10.1152/jn.1984.51.6.1326.

- 31. Bharadwaj HM, et al. Individual differences reveal correlates of hidden hearing deficits. J Neurosci. 2015;35:2161–2172. doi: 10.1523/JNEUROSCI.3915-14.2015.

- 32. Liberman MC, et al. Toward a Differential Diagnosis of Hidden Hearing Loss in Humans. PLoS ONE. 2016;11:e0162726. doi: 10.1371/journal.pone.0162726.

- 33. Tremblay KL, Miller CW. How neuroscience relates to hearing aid amplification. Int J Otolaryngol. 2014;2014:641652. doi: 10.1155/2014/641652.

- 34. Gourévitch B, et al. Is the din really harmless? Long-term effects of non-traumatic noise on the adult auditory system. Nat Rev Neurosci. 2014;15:483–491. doi: 10.1038/nrn3744.

- 35. Cai S, et al. Encoding intensity in ventral cochlear nucleus following acoustic trauma: implications for loudness recruitment. J Assoc Res Otolaryngol JARO. 2009;10:5–22. doi: 10.1007/s10162-008-0142-y.

- 36. Moore BCJ, et al. Effect of loudness recruitment on the perception of amplitude modulation. J Acoust Soc Am. 1996;100:481–489.

- 37. Peelle JE, Wingfield A. The Neural Consequences of Age-Related Hearing Loss. Trends Neurosci. 2016;39:486–497. doi: 10.1016/j.tins.2016.05.001.

- 38. Syka J. Plastic changes in the central auditory system after hearing loss, restoration of function, and during learning. Physiol Rev. 2002;82:601–636. doi: 10.1152/physrev.00002.2002.

- 39. Humes LE, et al. Measures of hearing threshold and temporal processing across the adult lifespan. Hear Res. 2010;264:30–40. doi: 10.1016/j.heares.2009.09.010.

- 40. Frisina RD. Aging changes in the central auditory system. The Oxford Handbook of Auditory Science: The Auditory Brain. 2010;2:418–438.

- 41. Akeroyd MA. An Overview of the Major Phenomena of the Localization of Sound Sources by Normal-Hearing, Hearing-Impaired, and Aided Listeners. Trends Hear. 2014;18. doi: 10.1177/2331216514560442.

- 42. King A, et al. The effects of age and hearing loss on interaural phase difference discrimination. J Acoust Soc Am. 2014;135:342–351. doi: 10.1121/1.4838995.

- 43. Marrone N, et al. The effects of hearing loss and age on the benefit of spatial separation between multiple talkers in reverberant rooms. J Acoust Soc Am. 2008;124:3064–3075. doi: 10.1121/1.2980441.

- 44. Brown AD, et al. Time-Varying Distortions of Binaural Information by Bilateral Hearing Aids. Trends Hear. 2016;20. doi: 10.1177/2331216516668303.

- 45. Arehart KH, et al. Double-vowel perception in listeners with cochlear hearing loss: differences in fundamental frequency, ear of presentation, and relative amplitude. J Speech Lang Hear Res JSLHR. 2005;48:236–252. doi: 10.1044/1092-4388(2005/017).

- 46. Chintanpalli A, et al. Effects of age and hearing loss on concurrent vowel identification. J Acoust Soc Am. 2016;140:4142. doi: 10.1121/1.4968781.

- 47. Oxenham AJ. Pitch perception and auditory stream segregation: implications for hearing loss and cochlear implants. Trends Amplif. 2008;12:316–331. doi: 10.1177/1084713808325881.

- 48. Lorenzi C, et al. Speech perception problems of the hearing impaired reflect inability to use temporal fine structure. Proc Natl Acad Sci U S A. 2006;103:18866–18869. doi: 10.1073/pnas.0607364103.

- 49. Humes LE, Dubno JR. Factors Affecting Speech Understanding in Older Adults. In: Gordon-Salant S, et al., editors. The Aging Auditory System. Springer; New York: 2010. pp. 211–257.

- 50. Martin JS, Jerger JF. Some effects of aging on central auditory processing. J Rehabil Res Dev. 2005;42:25–44. doi: 10.1682/jrrd.2004.12.0164.

- 51. Arlinger S, et al. The emergence of cognitive hearing science. Scand J Psychol. 2009;50:371–384. doi: 10.1111/j.1467-9450.2009.00753.x.

- 52. Rönnberg J, et al. The Ease of Language Understanding (ELU) model: theoretical, empirical, and clinical advances. Front Syst Neurosci. 2013;7:31. doi: 10.3389/fnsys.2013.00031.

- 53. Poeppel D, et al. Speech perception at the interface of neurobiology and linguistics. Philos Trans R Soc Lond B Biol Sci. 2008;363:1071–1086. doi: 10.1098/rstb.2007.2160.

- 54. Rönnberg J, et al. Hearing loss is negatively related to episodic and semantic long-term memory but not to short-term memory. J Speech Lang Hear Res JSLHR. 2011;54:705–726. doi: 10.1044/1092-4388(2010/09-0088).

- 55. Füllgrabe C, et al. Age-group differences in speech identification despite matched audiometrically normal hearing: contributions from auditory temporal processing and cognition. Front Aging Neurosci. 2014;6:347. doi: 10.3389/fnagi.2014.00347.

- 56. Lunner T, et al. Cognition and hearing aids. Scand J Psychol. 2009;50:395–403. doi: 10.1111/j.1467-9450.2009.00742.x.

- 57. Arehart KH, et al. Working memory, age, and hearing loss: susceptibility to hearing aid distortion. Ear Hear. 2013;34:251–260. doi: 10.1097/AUD.0b013e318271aa5e.

- 58. Moore BC. Dead regions in the cochlea: diagnosis, perceptual consequences, and implications for the fitting of hearing aids. Trends Amplif. 2001;5:1–34. doi: 10.1177/108471380100500102.

- 59. Henry KS, Heinz MG. Effects of sensorineural hearing loss on temporal coding of narrowband and broadband signals in the auditory periphery. Hear Res. 2013;303:39–47. doi: 10.1016/j.heares.2013.01.014.

- 60. Obermeyer Z, Emanuel EJ. Predicting the Future — Big Data, Machine Learning, and Clinical Medicine. N Engl J Med. 2016;375:1216–1219. doi: 10.1056/NEJMp1606181.

- 61. Shobe JL, et al. Brain activity mapping at multiple scales with silicon microprobes containing 1,024 electrodes. J Neurophysiol. 2015;114:2043–2052. doi: 10.1152/jn.00464.2015.

- 62. Dubno JR, et al. Classifying human audiometric phenotypes of age-related hearing loss from animal models. J Assoc Res Otolaryngol JARO. 2013;14:687–701. doi: 10.1007/s10162-013-0396-x.

- 63. Warren E, Grassley C. Over-the-Counter Hearing Aids: The Path Forward. JAMA Intern Med. 2017;177:609–610. doi: 10.1001/jamainternmed.2017.0464.

- 64. Reed NS, et al. Personal Sound Amplification Products vs a Conventional Hearing Aid for Speech Understanding in Noise. JAMA. 2017;318:89–90. doi: 10.1001/jama.2017.6905.