Abstract

Recent studies, conducted almost exclusively in primates, have shown that several cortical areas usually associated with modality-specific sensory processing are subject to influences from other senses. Here we demonstrate using single-unit recordings and estimates of mutual information that visual stimuli can influence the activity of units in the auditory cortex of anesthetized ferrets. In many cases, these units were also acoustically responsive and frequently transmitted more information in their spike discharge patterns in response to paired visual-auditory stimulation than when either modality was presented by itself. For each stimulus, this information was conveyed by a combination of spike count and spike timing. Even in primary auditory areas (primary auditory cortex [A1] and anterior auditory field [AAF]), ~15% of recorded units were found to have nonauditory input. This proportion increased in the higher level fields that lie ventral to A1/AAF and was highest in the anterior ventral field, where nearly 50% of the units were found to be responsive to visual stimuli only and a further quarter to both visual and auditory stimuli. Within each field, the pure-tone response properties of neurons sensitive to visual stimuli did not differ in any systematic way from those of visually unresponsive neurons. Neural tracer injections revealed direct inputs from visual cortex into auditory cortex, indicating a potential source of origin for the visual responses. Primary visual cortex projects sparsely to A1, whereas higher visual areas innervate auditory areas in a field-specific manner. These data indicate that multisensory convergence and integration are features common to all auditory cortical areas but are especially prevalent in higher areas.

Keywords: cross-modal processing, ferret, information theory, retrograde labeling, sensory convergence, visual

Introduction

Perception of real-world events frequently depends on the synthesis of information from different sensory systems. Revealing where in the brain sensory signals are combined and integrated is key to understanding the basis by which cross-modal processing influences behavior. Recently, studies in both human (Calvert et al. 1999; Giard and Peronnet 1999; Foxe et al. 2000, 2002; Molholm et al. 2002, 2004; Murray et al. 2005) and nonhuman (Schroeder et al. 2001; Schroeder and Foxe 2002; Brosch et al. 2005; Ghazanfar et al. 2005) primates have provided evidence for multisensory convergence within cortical areas that have hitherto been regarded as modality specific. It is unclear, however, whether this unexpected involvement of low-level cortical areas in multisensory processing is a feature common to mammalian brains or whether primates are exceptional in this respect. Although it has been reported that the cat visual cortex also receives auditory inputs (Morrell 1972; Fishman and Michael 1973), the most detailed investigation in a nonprimate species found that neurons sensitive to other modalities are rare within visual, auditory, and somatosensory areas of the rat cortex but are relatively plentiful at the borders of these zones (Wallace et al. 2004).

Evidence for multisensory integration in humans is based on imaging or electroencephalographic/magnetoencephalographic studies (Calvert et al. 1999; Giard and Peronnet 1999; Foxe et al. 2000, 2002; Molholm et al. 2002, 2004; Murray et al. 2005), which suffer from low spatial resolution and therefore cannot precisely localize regions of convergence. Moreover, intracranial recordings in monkeys are often based on local field potentials and/or multiunit activity (Schroeder et al. 2001; Schroeder and Foxe 2002; Fu et al. 2003; Ghazanfar et al. 2005). Although these methods allow activity to be localized to particular cortical fields and layers, it can be difficult to determine whether multisensory responses are elicited by individual neurons or by a combination of modality-specific neurons found in close proximity. Furthermore, when using measures of summed activity, it is not possible to correlate the presence of multisensory input with the response characteristics of individual neurons.

Visual influences on neurons in auditory cortex have been reported in awake primates at the single-neuron level, but it was proposed that these emerged as a result of the behavioral training received by the animals (Brosch et al. 2005). Thus, the extent to which individual neurons in low-level cortical areas traditionally viewed as modality specific are normally involved in multisensory processing remains uncertain. Moreover, all previous electrophysiological studies in this field have investigated multisensory convergence and integration by comparing the number of spikes evoked by different stimuli. Studies of visual (Van Rullen et al. 1998), auditory (Furukawa et al. 2000; Brugge et al. 2001; Nelken et al. 2005), and somatosensory (Panzeri et al. 2001; Johansson and Birznieks 2004) processing have now shown that stimulus information can also be encoded by temporal features of the spike discharge pattern. Thus, a full characterization of the sensitivity of neurons to multisensory stimuli requires analytical approaches to be used that take into account different neural coding schemes.

In this study, we show using single-unit recordings and estimates of mutual information (MI) between stimuli and spike trains that units sensitive to visual stimulation are widespread in both primary and nonprimary areas of ferret auditory cortex and that these neurons frequently transmit more information in response to bisensory stimulation than to either auditory or visual stimuli presented by themselves. We also show that ferret auditory cortex receives inputs from several visual cortical areas and from parietal cortex, which could provide the basis for these nonauditory response properties.

Materials and Methods

Animal Preparation

All animal procedures were approved by the local ethical review committee and performed under license from the UK Home Office in accordance with the Animals (Scientific Procedures) Act 1986. Nineteen adult pigmented ferrets (Mustela putorius) were used in this study. Eight of these were used exclusively for electrophysiological recordings, and 11 were used for neuroanatomical tract-tracing experiments. All animals received regular otoscopic examinations prior to the experiment to ensure that both ears were clean and disease free.

Anesthesia was induced by 2 mL/kg intramuscular injection of alphaxalone/alphadolone acetate (Saffan; Schering-Plough Animal Health, Welwyn Garden City, UK). The left radial vein was cannulated, and a continuous infusion (5 mL/h) of a mixture of medetomidine (Domitor; 0.022 mg/kg/h; Pfizer, Sandwich, UK) and ketamine (Ketaset; 5 mg/kg/h; Fort Dodge Animal Health, Southampton, UK) in physiological saline containing 5% glucose was provided throughout the experiment. The infusate was supplemented with 0.5 mg/kg/h dexamethasone (Dexadreson; Intervet UK Ltd, Milton Keynes, UK) and 0.06 mg/kg/h atropine sulfate (C-Vet Veterinary Products, Leyland, UK) in order to reduce the risk of cerebral edema and bronchial secretions, respectively. A tracheal cannula was implanted so that the animal could be placed on a ventilator, and body temperature, end-tidal CO2, and the electrocardiogram were monitored throughout. The right pupil was dilated by topical application of atropine sulfate and protected with a zero-refractive power contact lens.

The animal was placed in a stereotaxic frame, and the temporal muscles on both sides were retracted to expose the dorsal and lateral parts of the skull. For terminal recording experiments, a metal bar was cemented and screwed into the right side of the skull, holding the head without further need of a stereotaxic frame. On the left side, the temporal muscle was largely removed to gain access to the auditory cortex, which is bounded by the suprasylvian sulcus (Fig. 1) (Kelly et al. 1986). For tract-tracing experiments, custom-made hollow ear bars were used to maintain stable head position without the need for a headbar. The suprasylvian and pseudosylvian sulci were exposed by a craniotomy. The overlying dura was removed and the cortex covered with silicone oil. The animal was then transferred to a small table in an anechoic chamber (IAC Ltd, Winchester, UK).

Figure 1.

(A) Lateral view of the ferret brain showing the major sulci and gyri. (B) Sensory cortical areas in the ferret. The locations of known auditory, visual, somatosensory, and posterior parietal fields are indicated (Manger et al. 2002, 2004, 2005; Ramsay and Meredith 2004; Bizley et al. 2005). ASG, anterior sigmoid gyrus; as, ansinate sulcus; cns, coronal sulcus; crs, cruciate sulcus; LG, lateral gyrus; ls, lateral sulcus; OB, olfactory bulb; OBG, orbital gyrus; prs, presylvian sulcus; PSG, posterior sigmoid gyrus; pss, pseudosylvian sulcus; SSG, suprasylvian gyrus; sss, suprasylvian sulcus; A1, primary auditory cortex; AAF, anterior auditory field; PPF, posterior pseudosylvian field; PSF, posterior suprasylvian field; ADF, anterior dorsal field; AVF, anterior ventral field; fAES, anterior ectosylvian sulcal field; PPr, rostral posterior parietal cortex; 3b, primary somatosensory cortex; S2, secondary somatosensory cortex; S3, tertiary somatosensory cortex; D, dorsal; R, rostral. Scale bar is 5 mm in (A) and 1 mm in (B).

Stimuli

Acoustic stimuli were generated using TDT system 3 hardware (Tucker-Davis Technologies, Alachua, FL). In 3 recording experiments and all tract-tracing experiments, acoustic stimuli were presented via a closed-field electrostatic speaker (EC1, Tucker-Davis Technologies). In the remaining recording experiments, a Panasonic headphone driver (RPHV297, Panasonic, Bracknell, UK) was used. The electrostatic drivers had a flat frequency output to >30 kHz, whereas the output of the Panasonic drivers extended to 25 kHz. Closed-field calibrations were performed using a one-eighth inch condenser microphone (Brüel and Kjær, Naerum, Denmark), placed at the end of a model ferret ear canal, to create an inverse filter that ensured the driver produced a flat (less than ±5 dB) output. All acoustic stimuli were presented contralaterally.

Pure-tone stimuli were used to obtain frequency-response areas (FRAs), both to characterize individual units and to determine tonotopic gradients in order to identify in which cortical field any given recording was made. The tone frequencies used ranged, in one-third octave steps, from 500 Hz to 24 kHz (Panasonic driver) or 500 Hz to 30 kHz (TDT EC1 driver) and were 100 ms in duration (5 ms cosine ramped). Intensity levels were varied between 10 and 80 dB SPL in 10 dB increments. This totaled 150-200 frequency-level combinations, each of which was presented pseudorandomly ⩾3 times at a rate of once per second. Broadband noise bursts (40 Hz-30 kHz bandwidth and cosine ramped with a 10 ms rise/fall time), generated afresh on every trial, were used as a search stimulus in order to establish whether each unit was acoustically responsive.

The visual stimulus was a diffuse light flash, which was varied in intensity from 0.3 to 70 cd/m2, calibrated with a Tektronix J16 photometer (Bracknell, UK), and presented from a light-emitting diode (LED) that was usually fixed at a distance of 10 cm from the contralateral eye so that it illuminated virtually the whole contralateral visual field. In order to determine whether units were acoustically and/or visually responsive, 100-ms noise bursts and light flashes were presented separately or simultaneously at a rate of once per second. These stimuli were interspersed with a no-stimulus condition, with presentation pseudorandomized, and each stimulus configuration presented 20-40 times. To eliminate any possibility that responses recorded following presentation of visual stimuli were artifacts of our experimental design, we confirmed, using a Brüel and Kjær one-eighth inch microphone and type 2610 measuring amplifier, that no sound was emitted when the visual stimuli were switched on and off and that these responses disappeared when the LED was active but covered up. In 2 animals, visual receptive fields were mapped using a flashing LED mounted on a robotic arm (TDT), which allowed the stimuli to be presented at different angles at a distance of 1 m from the animal’s eye.

Data Acquisition

Recordings were made with silicon probe electrodes (Neuronexus Technologies, Ann Arbor, MI). In 5 animals, we used electrodes with a 4 × 4 configuration (4 active sites on 4 parallel probes, with a horizontal and a vertical spacing of 200 μm). In a small number of recordings in one of these animals, and for all recordings in a further 3 animals, a single shank electrode was used with 16 active sites spaced at 150-μm intervals. The electrodes were positioned so that they entered the cortex approximately orthogonal to the surface of the ectosylvian gyrus (EG). Recordings were made in all auditory cortical fields that were identified in the ferret by Bizley et al. (2005), although, because the anterior ectosylvian sulcus (AES) is known to be a multisensory area (Ramsay and Meredith 2004), there was a sampling bias toward the rostral fields on both the middle ectosylvian gyrus (MEG) and anterior ectosylvian gyrus (AEG).

The neuronal recordings were band-pass filtered (500 Hz-5 kHz), amplified (up to 20 000×), and digitized at 25 kHz. Data acquisition and stimulus generation were performed using BrainWare (Tucker-Davis Technologies).

Data Analysis

Spike sorting was performed off-line. The noise level in the signal was averaged over the preceding second and the trigger level for detecting a spike automatically adjusted to be 3 times this level. Single units were isolated from the digitized signal by manually clustering data according to spike features such as amplitude, width, and area. We also inspected autocorrelation histograms, and only cases in which the interspike interval histograms revealed a clear refractory period were classed as single units.

Data analysis was performed in MATLAB (MathWorks Inc., Natick, MA). Visual latencies were typically, although not always, longer than auditory latencies, and responses to both stimulus modalities lasted for up to 200 ms after stimulus onset. In order to classify whether a unit was responsive to auditory and/or visual stimuli, 2 methods of analysis were used. First, a 2-way analysis of variance (ANOVA) of spike counts over a 200-ms window was performed, in which the 2 binary factors were the presence/absence of an auditory stimulus and the presence/absence of a visual stimulus. This allowed us to quantify whether individual units responded to each form of stimulation and whether there was any interaction resulting from the combined presentation of light and sound. Second, measures of MI were calculated using methods described by Nelken et al. (2005). Briefly, the stimulus S and neural response R were treated as random variables, and the MI between them, I(S;R), measured in bits, was calculated as a function of their joint probability p(s, r) and defined as

$$I(S;R) = \sum_{s,r} p(s,r)\,\log_2 \frac{p(s,r)}{p(s)\,p(r)} \tag{1}$$

where p(s) and p(r) are the marginal distributions (Cover and Thomas 1991). The MI is zero if the 2 variables are independent (i.e., p(s, r) = p(s)p(r) for every r and s) and is positive otherwise. Because naive MI estimators may suffer from both under- and overestimation, the “adaptive direct” algorithm, described fully and validated in Nelken et al. (2005), was applied. The MI estimated from full responses is hard to compute due to the high dimensionality of all possible spiking responses, leading to large under-sampled matrices and to large bias. The simplest approach is to use coarser binning, but this will result in lower MI estimates relative to that of the raw data. To overcome this problem, the spike train was resampled at a number of resolutions to produce a new train containing a 0 in each time bin lacking any spikes and 1 in each bin containing ⩾1 spike. The naive MI (including the bias) was computed initially using a matrix based on a large number of bins, each with a low probability. The matrix was then reduced, step by step, by joining the rows and columns to create coarser binning and the MI and bias recomputed. The reduction continued until only a single row or column remained, resulting in a set of decreasing MI values and a corresponding set of decreasing bias values. The MI was estimated by the largest difference between the 2.
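As a point of reference for the definition of I(S;R), the following Python sketch computes a naive plug-in estimate of MI from paired stimulus labels and discretized responses. It is not the adaptive direct algorithm of Nelken et al. (2005) and applies no bias correction; the function name `plugin_mutual_information` and the toy data are illustrative assumptions, not part of the original analysis.

```python
import math
from collections import Counter


def plugin_mutual_information(stimuli, responses):
    """Naive plug-in estimate of I(S;R) in bits (no bias correction).

    stimuli, responses: equal-length sequences of hashable labels, one
    pair per trial (e.g., a stimulus condition and a spike count).
    """
    if len(stimuli) != len(responses) or not stimuli:
        raise ValueError("need equal-length, non-empty sequences")
    n = len(stimuli)
    joint = Counter(zip(stimuli, responses))   # counts for p(s, r)
    count_s = Counter(stimuli)                 # counts for p(s)
    count_r = Counter(responses)               # counts for p(r)
    mi = 0.0
    for (s, r), c in joint.items():
        # p(s,r) / (p(s) p(r)) simplifies to c * n / (count_s * count_r)
        mi += (c / n) * math.log2(c * n / (count_s[s] * count_r[r]))
    return mi


# Toy data: two equiprobable stimuli
locked = plugin_mutual_information(["A", "A", "V", "V"], [1, 1, 0, 0])
unrelated = plugin_mutual_information(["A", "A", "V", "V"], [0, 1, 0, 1])
```

In the toy data, responses that perfectly distinguish 2 equiprobable stimuli carry 1 bit, whereas responses that are independent of the stimulus carry 0 bits.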

To estimate the MI for the present data set, responses were classified according to the presence or absence of an auditory or visual stimulus (i.e., for a total of 4 stimulus conditions). The spike train was then binned at several time resolutions, ranging from 8 to 256 ms. Because binning is a data reduction step, the maximal MI over all temporal resolutions was considered as the best estimate of the true MI. To assess whether the obtained value was significant, stimuli and responses were randomized and the MI recalculated. The data were bootstrapped in this manner 100 times, and the 99th percentile was extracted from the resulting distribution. If the MI calculated from the data exceeded this value, it was considered to be significant.
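The randomization test described above can be sketched as follows. This is a minimal illustration, assuming a plug-in MI estimator in place of the adaptive direct algorithm, a nearest-rank definition of the 99th percentile, and a single response statistic per trial; the function names, the fixed random seed, and the toy data are hypothetical.

```python
import math
import random
from collections import Counter


def plugin_mi(stimuli, responses):
    """Naive plug-in MI in bits (stand-in for the adaptive direct estimate)."""
    n = len(stimuli)
    joint = Counter(zip(stimuli, responses))
    cs, cr = Counter(stimuli), Counter(responses)
    return sum((c / n) * math.log2(c * n / (cs[s] * cr[r]))
               for (s, r), c in joint.items())


def shuffle_threshold(stimuli, responses, n_shuffles=100, percentile=99, seed=0):
    """Percentile of the MI distribution after breaking stimulus-response pairing."""
    rng = random.Random(seed)           # fixed seed: an assumption, for repeatability
    shuffled = list(responses)
    null = []
    for _ in range(n_shuffles):
        rng.shuffle(shuffled)           # randomize responses relative to stimuli
        null.append(plugin_mi(stimuli, shuffled))
    null.sort()
    rank = (percentile * len(null) + 99) // 100   # nearest-rank percentile (integer ceil)
    return null[rank - 1]


def is_significant(stimuli, responses, **kwargs):
    """True if the observed MI exceeds the shuffled-data percentile."""
    return plugin_mi(stimuli, responses) > shuffle_threshold(stimuli, responses, **kwargs)


# Toy data: 4 stimulus conditions, 20 trials each, spike count locked to condition
conditions = ["none", "A", "V", "AV"] * 20
counts = [0, 2, 1, 3] * 20
result = is_significant(conditions, counts)
```

A unit whose response never varies across trials yields an MI of 0 for both the real and the shuffled data and is therefore not classed as significant under this sketch.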

To test for an interaction when the 2 stimuli were presented together, the nonstimulus trials were removed from consideration. Then the MI between the responses and the binary classification of unisensory/ bisensory stimulation was calculated. This MI is significant when the distribution of responses to bisensory stimulation is different from the distribution of responses to visual and auditory stimuli presented separately. Because this would also be the case for modality-specific neurons, the MI was reestimated after removing possible unisensory auditory effects by randomly intermixing the responses to the light alone with those to the light and sound presented together. The MI was then reestimated after removing the possible contribution of unisensory visual effects by randomizing the sound with the light-sound trials. In both cases, bootstrap was used to build a distribution of MI values. If the MI for the real data exceeded both estimated confidence limits, it was concluded that a significant multisensory interaction was present in the neuronal response. Therefore, units classified as “bisensory” either had a spiking response to both modalities of stimulation when presented independently or had a significant response to one modality of stimulation, which was modulated by the presence of the second stimulus modality that did not, alone, produce a significant response.

In general, we found that the 2-way ANOVA based on the spike counts and the MI analysis produced results in good accordance with each other (>70% of units were classified in the same manner by each type of test). However, examination of raster plots and derived significance values suggested that the MI analysis was slightly more sensitive, presumably because it takes into account spike timing patterns as well as spike counts (see below). Furthermore, the ANOVA assumes linearity in the contributions of each factor and classified a small population of units that clearly showed nonlinear interactions between the visual and auditory stimuli as unisensory. By contrast, the MI analysis captured such interactions. Consequently, the MI values were used to classify the responses of the cortical units.

In order to quantify the contribution of spike timing information to the MI estimates, a simplified timing statistic, the mean response latency, was computed (see Nelken et al. 2005). The mean response latency is simply the mean latency of all spikes within the response window and would be equal to the first spike latency if there was only one spike. MI was calculated for each stimulus condition using either the spike count (the total number of spikes over a 200-ms response window) or the mean response latency. It has been shown that, although neither of these statistics alone can capture the full information in spike trains recorded from auditory cortex, jointly they can convey all the available information about the stimuli that is present in the neural response (Nelken et al. 2005).
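The 2 reduced response statistics can be computed per trial as in the Python sketch below. It assumes spike times are given in milliseconds relative to stimulus onset and that a trial without spikes has no defined latency (returned here as `None`); how such trials were treated in the original analysis is not stated, and the function name is illustrative.

```python
def spike_count_and_mean_latency(spike_times_ms, window_ms=200.0):
    """Return (spike count, mean response latency) for one trial.

    spike_times_ms: spike times in ms relative to stimulus onset.
    Only spikes inside [0, window_ms) are considered.
    """
    in_window = [t for t in spike_times_ms if 0.0 <= t < window_ms]
    count = len(in_window)
    # Mean latency of all spikes in the window; undefined when there are none
    mean_latency = sum(in_window) / count if count else None
    return count, mean_latency


# One spike at 250 ms falls outside the 200-ms response window
count, latency = spike_count_and_mean_latency([12.0, 20.0, 250.0])
```

With a single spike in the window, the mean response latency returned by this sketch equals the first spike latency, as noted in the text.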

The type and magnitude of the multisensory interaction was quantified as in previous studies in this field (Newman and Hartline 1981; King and Palmer 1985; Populin and Yin 2002) using the following formula:

$$\text{Response modulation (\%)} = \frac{r_{A+V} - (r_A + r_V)}{r_A + r_V} \times 100 \tag{2}$$

where $r_{A+V}$ is the number of spikes evoked by combined visual-auditory stimulation, $r_A$ the number evoked by auditory stimulation alone, and $r_V$ the number evoked by visual stimulation alone. As in previous studies in which cross-modal interactions have been analyzed using this formula, spike counts were corrected for spontaneous activity by subtracting the spike counts in an equivalent window prior to stimulus presentation from that used to calculate the response. Response modulation values of 0 indicate a simple summation of unisensory influences, whereas values >0 indicate superadditive interactions and values below this indicate cross-modal occlusion or subadditive interactions.
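A minimal Python sketch of the response modulation index follows. It assumes that the index is the difference between the bisensory response and the sum of the unisensory responses, expressed as a percentage of that sum, which is consistent with the interpretation of values of 0, >0, and <0 given above; the function and parameter names are hypothetical.

```python
def response_modulation(r_av, r_a, r_v, spont_av=0.0, spont_a=0.0, spont_v=0.0):
    """Percentage modulation of the bisensory response relative to the
    sum of the unisensory responses (assumed normalization).

    r_av, r_a, r_v: spike counts in the response window.
    spont_*: spike counts in an equivalent pre-stimulus window, subtracted
    to correct for spontaneous activity.
    """
    driven_av = r_av - spont_av
    unisensory_sum = (r_a - spont_a) + (r_v - spont_v)
    if unisensory_sum == 0:
        raise ValueError("sum of unisensory responses is zero; index undefined")
    return 100.0 * (driven_av - unisensory_sum) / unisensory_sum


additive = response_modulation(15, 10, 5)        # bisensory equals the sum
superadditive = response_modulation(30, 10, 5)   # bisensory is twice the sum
subadditive = response_modulation(10, 10, 5)     # bisensory is below the sum
```

Under this assumed normalization, a bisensory response twice as large as the summed unisensory responses gives a value of 100, and purely additive responses give 0.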

Response latencies were calculated from the pooled poststimulus time histogram (PSTH) containing the responses to the appropriate stimulus. Minimum response latencies were computed as the time at which the pooled response first crossed a critical value defined as 20% of the difference between the spontaneous and peak firing rates (as in Bizley et al. 2005).
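The minimum-latency criterion can be sketched in Python as below. The sketch assumes that the critical value lies 20% of the way from the spontaneous rate to the peak rate, that the PSTH starts at stimulus onset, and that latency is reported as the start time of the first bin exceeding the criterion; these details and the function name are assumptions rather than the published implementation.

```python
def minimum_latency(psth, bin_ms, spontaneous_rate, fraction=0.2):
    """Time (ms) of the first PSTH bin exceeding the critical value.

    psth: firing rate per bin, first bin starting at stimulus onset.
    Critical value (assumed): spontaneous + fraction * (peak - spontaneous).
    Returns None if the peak does not exceed the spontaneous rate.
    """
    peak = max(psth)
    if peak <= spontaneous_rate:
        return None
    threshold = spontaneous_rate + fraction * (peak - spontaneous_rate)
    for i, rate in enumerate(psth):
        if rate > threshold:
            return i * bin_ms   # start time of the first suprathreshold bin
    return None


# 5-ms bins, spontaneous rate 2 spikes/s, peak 40 spikes/s: threshold is 9.6
latency_ms = minimum_latency([2, 2, 3, 10, 40, 20], bin_ms=5, spontaneous_rate=2)
```

In this toy histogram the fourth bin is the first to exceed the criterion, so the sketch returns a latency of 15 ms.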

Verification of Recording Sites

Following the completion of recordings, animals were perfused (see below). The cortex was removed, gently flattened between glass coverslips, and cryoprotected in 30% sucrose, after which 50-μm tangential sections were cut and stained for Nissl substance. This enabled the location of the recording sites to be examined, thereby ensuring that none of the electrode penetrations had passed through the suprasylvian sulcus and into the adjacent suprasylvian gyrus. Because it was not possible to make lesions at the recording sites, we could not identify which cortical layers the recorded units were located in. However, the tracks made by the silicon probe electrodes were clearly visible in the Nissl-stained sections, and depth measurements were derived from the microdrive readings from the point at which the electrodes entered the cortex.

Tracers

Aseptic surgical techniques were used in all tracer injection experiments. Tracers used were 10% dextran tetramethylrhodamine (10 000 MW, Fluororuby [FR]; Molecular Probes Inc., Eugene, OR), 10% dextran biotin fixable (biotinylated dextran amine [BDA], 10 000 and 3000 MW; Molecular Probes), and 1% cholera toxin subunit β (CTβ, List Biological Laboratories, Campbell, CA).

Tracer injections were, in most cases, made in physiologically identified cortical regions (see Table 1). When physiological verification was not possible, the locations of the tracer injections were assigned to a particular cortical field based on our previous descriptions of ferret auditory cortex (Bizley et al. 2005). A glass micropipette was lowered, and BDA, FR, or CTβ were injected, in most cases, by iontophoresis using a positive current of 5 μA and a duty cycle of 7 s for a duration of 15 min. In a small number of cases, FR and CTβ were injected by pressure with a nanoejector (Nanoject II; Drummond Scientific Company, Broomall, PA). Once the injections were complete, the micropipette was withdrawn, the dura lifted back in place, and the piece of cranium that had previously been removed replaced. Sutures were placed in the remaining temporal muscle and skin, so that they could be returned to their preoperative positions. The animals received intraoperative and subsequent postoperative analgesia with Vetergesic (0.15 mL of buprenorphine hydrochloride, intramuscularly; Alstoe Animal Health, Melton Mowbray, UK).

Table 1.

Details of tracer injections made in auditory cortex

| Animal | Injection site | BF (kHz) | Tracer | Plane |
|---|---|---|---|---|
| F0252 | MEG | 15 | FR | Flattened |
| | MEG | 1 | BDA | |
| F0404 | MEG | 7 | BDA | Flattened |
| | MEG | 7 | CTβ | |
| F0268 | MEG | 7 | BDA | Flattened |
| | MEG | 7 | FR | |
| | MEG | 7 | CTβ | |
| F0504 | PEG | | BDA | Flattened |
| F0505 | PEG | | CTβ | Flattened |
| | AEG | | BDA | |
| F0522 | MEG | | FR | Coronal |
| F0510 | PEG | | CTβ | Coronal |
| F0533 | PPF | 7 | CTβ | Coronal |
| | VP | Broad, low | BDA | |
| F0532 | MEG | 20 | BDA | Coronal |
| | MEG | 20 | FR | |
| F0536 | MEG | 2 | BDA | Coronal |
| | MEG | 19 | FR | |
| F0535 | ADF | 10 | BDA | Coronal |
| | AVF | Noise only | FR | |

Note: VP, ventroposterior area; BF, best frequency of units recorded at the injection site.

Tissue Processing

Survival times were between 2 and 4 weeks, after which transcardial perfusion followed terminal overdose with Euthatal (400 mg/kg of pentobarbital sodium; Merial Animal Health Ltd, Harlow, UK). The blood vessels were washed with 300 mL of 0.9% saline followed by 1 L of fresh 4% paraformaldehyde in 0.1 M phosphate buffer, pH 7.4 (PB). The brain was dissected from the skull, maintained in the same fixative for several hours, and then immersed in 30% sucrose solution in 0.1 M PB for 3 days. In 5 cases, the 2 hemispheres were dissected and gently flattened between 2 glass slides. In those cases, the cortex was later sectioned in the tangential plane; 6 other brains were sectioned in the standard coronal plane. Sections (50-μm thick) were cut on a freezing microtome, and 6 or 7 sets of serial sections were collected in 0.1 M PB. Every third series of sections was used to analyze the tracer labeling.

FR and CTβ were visualized with immunohistochemistry reactions, whereas BDA was reacted only with avidin biotin peroxidase (Vectastain Elite ABC Kit; Vector Laboratories, Burlingame, CA). Sections were washed several times in 10 mM phosphate-buffered saline (PBS) with 0.1% Triton X-100 (PBS-Tx) and incubated overnight at 4 °C in the primary antibody (FR: anti-tetramethylrhodamine, rabbit immunoglobulin G [IgG]; Molecular Probes; dilution 1:6000; CTβ: goat-anti-CTβ, dilution 1:15 000). After washing 3 times in PBS-Tx, sections were incubated for 2 h in the biotinylated secondary antibody (biotinylated goat anti-rabbit IgG H + L [FR] or rabbit-anti-goat [CTβ], dilution 1:200; Vector Laboratories) at room temperature. Sections were once again washed and incubated for 90 min in avidin biotin peroxidase, washed in PBS, and then incubated with the chromogen solution, 3,3′-diaminobenzidine (DAB; Sigma-Aldrich Company Ltd, Dorset, UK). Sections were incubated in 0.4 mM DAB and 9.14 mM H2O2 in 0.1 M PB until the reaction product was visualized. When BDA and FR or CTβ were injected in the same animal, the BDA was first visualized with ABC followed by DAB enhanced with 2.53 mM nickel ammonium sulfate. The second tracer (FR or CTβ) was subsequently visualized using the appropriate protocol with DAB only as the chromogen. Reactions were stopped by rinsing the sections several times in 0.1 M PB. Sections were mounted on gelatinized glass slides, air dried, dehydrated, and coverslipped.

For every animal, one set of serial sections was counterstained with 0.2% cresyl violet, another set was selected to visualize cytochrome oxidase (CO) activity, and a third set was used to perform SMI32 immunohistochemistry to aid identification of different cortical areas and laminae. CO staining was obtained after 12 h incubation with 4% sucrose, 0.025% cytochrome C (Sigma-Aldrich), and 0.05% DAB in 0.1 M PB at 37 °C. To stain neurofilament H in neurons, we used a monoclonal mouse anti-SMI32 (dilution 1:4000; Sternberger Monoclonals, Inc., Lutherville, MD). After immersion for 60 min in a blocking serum solution with 5% normal horse serum, the sections were incubated overnight at 5 °C with the mouse antibody and 2% normal horse serum in 10 mM PBS. Mouse biotinylated secondary antibody was used after brief washings in 10 mM PBS (mouse ABC kit, dilution 1:200 in PBS with 2% normal horse serum; Vector Laboratories). Immunoreaction was followed by several washings in PBS, incubation in ABC, and visualization using DAB with nickel-cobalt intensification (Adams 1981).

Histological Analysis

Sections were analyzed with a Leica DMR microscope fitted with a digital Leica camera using TWAIN software (Leica Microsystems, Heerbrugg, Switzerland). The locations of the labeled cells were plotted using a camera lucida onto drawings of the cortex produced from adjacent Nissl-stained sections.

Results

Ferret auditory cortex lies on the EG, where 6 different fields have been defined physiologically (Bizley et al. 2005) (Fig. 1). As in other species, these fields can be divided into primary like, or core, areas and nonprimary, or belt, areas. The primary auditory cortex (A1) and the anterior auditory field (AAF) are tonotopically organized core areas. Of the remaining 4 belt areas, 2 fields, the posterior pseudosylvian and suprasylvian fields (PPF and PSF), are tonotopically organized and lie on the posterior bank of the EG. Finally, 2 nontonotopically organized areas are located on the AEG. The anterior dorsal field (ADF) responds well to tones and is likely to be a belt area, whereas the response properties and anatomical connectivity (Bizley et al. 2005 and unpublished observations) of the anterior ventral field (AVF) suggest that this is a higher sensory area.

Data are presented here from recordings made from 756 single units in the left EG whose responses were significantly modulated by acoustic, visual, and/or multisensory stimulation (Bajo et al. 2006). Many other acoustically responsive units were recorded in these animals, but these were not tested with visual stimuli and are not considered further here. Data from each animal were initially examined separately, and, after ensuring that a consistent trend in the distribution of these responses was present in all animals, the data were pooled across subjects. Recording sites were assigned to different cortical fields based on their locations on the surface of the EG plus subsequent histology, as well as the frequency tuning and other response properties of the units (Bizley et al. 2005).

The raster plots in Figure 2 illustrate the range of responses of these units to the standard stimuli used to investigate visual-auditory interactions. These stimuli comprised 100 ms noise bursts presented to the contralateral ear, 100 ms light flashes presented within the contralateral visual field, or both presented simultaneously. The symbols at the top right of each panel indicate whether significant responses or visual-auditory interactions were observed (according to the MI values, see Materials and Methods). In Figure 2A, the unit responded robustly to acoustic stimulation, whereas the visual stimulus was ineffective in changing the spike discharge pattern of the unit, either by itself or in combination with the sound. By contrast, Figure 2B,C shows units whose responses were clearly modulated by both auditory and visual stimulation. In each case, bisensory stimulation evoked spike discharges in which components of the responses to each stimulus modality could be discerned by virtue of their different temporal firing patterns. Figure 2D shows a unit that responded to visual but not to auditory stimulation. In Figure 2E, the unit did not respond to visual stimulation alone, although its auditory response was enhanced when light flashes were presented simultaneously. Finally, Figure 2F shows an example of a unit in which the only significant response was obtained with combined visual-auditory stimulation.

Figure 2.

Raster plots showing the range of response types recorded in auditory cortex. Responses of single units to broadband noise (A), a diffuse light flash (V) and combined auditory-visual stimulation (AV) are shown. (A) Example of a robust auditory response. (B, C) Units that responded to both stimuli presented separately. (D) Unit that responded only to visual stimulation. (E, F) Units that showed a clear enhancement of their unisensory responses when combined visual-auditory stimulation was used. Symbols indicate significant responses defined using MI measures. Units (A, B, and F) were located in field PSF, (C, E) in ADF, and (D) in PPF.

Cortical Location of Modality-Specific and Multisensory Units

All units were classified as auditory, visual, or bisensory. Visual-auditory units included those exhibiting a clear spiking response to both stimulus modalities (e.g., Fig. 2B,C) and those for which the MI analysis revealed a significant interaction, that is, where responses to bisensory stimulation were significantly different from those evoked in either unisensory condition (e.g., Fig. 2E,F). Recording sites were plotted onto an image of the exposed cortex to form maps showing the location of each response type. Maps for 2 animals are shown in Figure 3A,B. In these plots, the blue dots indicate the location of units that were classified as unisensory auditory, green triangles the location of the unisensory visual units, and red diamonds the location of units displaying bisensory responses. Different cortical fields on the EG have been delimited (dashed lines) on the basis of the frequency-tuning properties of all the acoustically responsive units recorded in these animals (see Fig. 1 and Bizley et al. 2005). Often different symbols overlap due to the multiple recording sites on a single probe (this is especially true for Fig. 3B because in this animal recordings were made with a 16-channel single-shaft probe).

Figure 3.

Location of auditory (blue dots), visual (green triangles), and bisensory (red diamonds) units plotted across the surface of the auditory cortex in 2 different animals (A, B). Cortical field boundaries (derived from measurements of unit best frequency and other response properties) are indicated by dashed lines. Recordings made within the ventral bank of the suprasylvian sulcus are shown “unfolded,” as indicated by the dotted lines in (A). Superimposed symbols indicate that multiple units were recorded at one site or, more commonly, that recordings were made at several depths. The recordings shown in (A) were made with a 4 × 4 silicon probe configuration, whereas those in (B) were obtained with a single linear probe with 16 recording sites. Recording sites in (B) have been jittered so that symbols are not completely overlapping. (C) Proportions of units responsive to each stimulus modality in each field (data pooled from all 6 animals). The total number of units recorded in each field is indicated at the top of each column. Bisensory units have been subdivided into 2 categories: those in which there was a response to both modalities of unisensory stimulation and those in which only one modality of stimulation produced a significant response when the stimuli were presented in isolation, but the addition of the other stimulus modality significantly modulated the response to the effective stimulus.

The most striking feature in Figure 3 is the incidence of bisensory units in each of the identified auditory cortical fields. Unisensory visual units were also widespread and were even found near the edges of the primary auditory fields, A1 and AAF, at the tip of the EG. Some of these units extended into the ventral bank of the suprasylvian sulcus, where AAF is located (see unfolded regions near the tip of the gyrus in Fig. 3A), but were not located on the suprasylvian gyrus. Figure 3C shows the proportions of each response type found in each cortical field. It should be noted that there was a sampling bias (for reasons discussed in the Materials and Methods) toward the anterior fields (AAF, ADF, AVF), and therefore, the total number of units recorded in these regions is higher than in the fields located on the posterior side of the EG. Even in primary auditory areas (A1 and AAF), ~15% of recorded units were found to have nonauditory input. This proportion increased in the higher level fields that lie ventral to A1/AAF and was highest in area AVF, where nearly 50% of the units were found to be responsive to visual stimuli only and a further quarter to both visual and auditory stimuli.

Response Latencies

First spike response latencies were calculated for the visual response in all units whose responses were significantly modulated by light alone (n = 148), and auditory first spike latencies were calculated for all bisensory units exhibiting a significant unisensory response following contralateral stimulation with broadband noise bursts (n = 113). These are plotted, for each cortical area, in Figure 4A,B. Units ranged in their visual first spike latency from ~40 to >200 ms, whereas most auditory latencies were <50 ms. Visual latencies in AAF were significantly shorter than those in most other cortical fields, whereas the longest latencies were found in field AVF. Although there were no significant interareal differences in auditory response latencies for this population of cells, the distribution of first spike latencies across different cortical areas followed a similar pattern to that previously described with pure-tone stimuli (Bizley et al. 2005), with the posterior fields (PPF and PSF) having the longest latencies.

Figure 4.

First spike latencies of auditory (A) and visual responses (B) of the multisensory units (i.e., units with significant responses to each modality). These are plotted separately for the 6 auditory fields that have been described in the ferret (see Fig. 1). Box plots depict the interquartile range and the central bar indicates the median response with the location of the notch showing the distribution of values around this. Kruskal-Wallis tests revealed significant (P < 0.05) interareal latency differences for the visual responses only. Significant differences, analyzed using Tukey-Kramer post hoc tests, between the latencies in each area are indicated by the horizontal bars.

Spatial Receptive Fields

In 2 of the animals, an LED mounted on a robotic arm at a distance of 1 m from the animal’s head was used to map the visual spatial receptive fields. This visual stimulus was, in some cases, accompanied by simultaneous presentation of a contralateral noise burst. Figure 5A shows how the visual response of a unit recorded in AAF varied with the azimuthal angle of the LED; its response was clearly restricted to a region of contralateral space. The visual azimuth response profile of another unit, this time from ADF, is depicted in Figure 5B. The magnitude of the visual response is shown in the presence and absence of auditory stimulation. Again, the visual response was clearly tuned to the anterior contralateral quadrant and a significant interaction was found between the location of the light and the presence of the sound (P < 0.001), indicating that the addition of the auditory stimulus sharpened the spatial selectivity of the visual response. A total of 39 bisensory cells had their visual spatial receptive fields mapped in this fashion. In 31 of these units, there was a significant effect on the response of varying the location of the LED (2-way ANOVA with light position and presence of sound as factors). Azimuth profiles showing how the responses of all these units varied as the location of the robotic-arm-mounted LED was changed while presenting noise to the contralateral ear are plotted in Figure 5C. Although some units showed regions of increased and decreased activity at different LED locations, the majority were contralaterally tuned, as can be seen in the average spatial response profile.

Figure 5.

Visual receptive fields in auditory cortex. (A) Raster plot showing the response of a unit recorded in AAF to stationary light flashes from an LED at different stimulus directions. This unit did not respond to sound, but a neighboring unit recorded with the same electrode had a best frequency of 20 kHz. The visual spatial receptive field was restricted in both azimuth and elevation (data not shown for elevation, but responses at the best azimuth were strongest for positions on or below the horizon). (B) Azimuth response profile for a different unit, recorded in ADF, which was also tuned for visual stimulus location. This visual response was significantly modulated by simultaneous stimulation of the contralateral ear. (C) Azimuth response profiles for the 31 (of 39) cells that were spatially tuned. Normalized spike rates in response to the arm-mounted LED and a contralateral noise burst are plotted (gray). The auditory stimulus location was therefore constant, whereas the azimuthal angle of the visual stimulus alone was varied. The mean spatial receptive field is overlaid in black.

Varying Stimulus Intensity

Because we wished to sample as many units as possible with our multielectrode arrays, we typically used a fairly intense visual stimulus in order to determine the incidence of visually sensitive units in different auditory cortical fields. In a number of cases, however, we also used much lower stimulus levels and found that these were also effective in generating responses. Auditory and visual thresholds are plotted for 31 bisensory units in Figure 6A. Many of these had very low thresholds. The thresholds for a further 21 visual units recorded from a range of auditory cortical fields are also plotted. An example of a bisensory unit with a low threshold for each stimulus modality is shown in Figure 6B. This unit, which had clear responses to both auditory and visual stimulation presented separately, had a visual response threshold of 0.5 cd/m² and an auditory threshold of 34 dB SPL.

Figure 6.

(A) Auditory and visual response thresholds for 31 units (filled circles), and visual thresholds for a further 21 visual units (crosses on the x axis indicate that these latter units were not responsive to acoustic stimulation). (B) Raster plot showing the response of a single unit recorded in AVF to auditory and visual stimuli of increasing intensity. These stimuli were presented in a randomly interleaved fashion and are shown with the earliest presentations of each intensity combination positioned above the later ones. Note the clear latency separation of the response to each stimulus, with the visual response having a much longer latency than the response to sound. Suprathreshold stimuli were presented at a fixed intensity in one modality, whereas the intensity of the other stimulus was varied, as indicated by the values to the left of the plot. This unit had a visual threshold of 0.5 cd/m² and an auditory threshold of 34 dB SPL.

Multisensory Interactions in Auditory Cortex

The magnitude of the visual-auditory interactions exhibited by units that were classified as bisensory was quantified using equation (2). This measures how different the multisensory response is from the linear sum of the responses to unisensory stimulation. Therefore, a bisensory unit that sums its inputs linearly (i.e., an additive interaction) or a unisensory unit whose response is unmodulated by the other stimulus modality will be deemed to show no cross-modal facilitation or occlusion according to this equation.
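
As an illustration of how such a response modulation value behaves, the following minimal sketch assumes that equation (2) takes the commonly used percentage form 100 × (AV − (A + V)) / (A + V), where A, V, and AV are the spontaneous-corrected responses to auditory, visual, and combined stimulation. The exact form of equation (2) is given in Materials and Methods and is not reproduced here, so the formula, the function name, and the example rates below are assumptions made for illustration only.

```python
def response_modulation(av, a, v):
    """Percentage deviation of the bisensory response from the linear sum A + V.

    Assumed form (not confirmed by this section):
        100 * (AV - (A + V)) / (A + V)
    Inputs are mean evoked responses corrected for spontaneous firing.
    """
    linear_sum = a + v
    if linear_sum == 0:
        # The ratio is undefined when the unisensory responses sum to zero.
        raise ValueError("linear sum of unisensory responses is zero")
    return 100.0 * (av - linear_sum) / linear_sum


# Hypothetical spike rates (spikes/s), chosen only to show the three outcomes.
additive = response_modulation(av=30.0, a=20.0, v=10.0)       # 0.0, linear summation
sublinear = response_modulation(av=24.0, a=20.0, v=10.0)      # -20.0, occlusion
superadditive = response_modulation(av=45.0, a=20.0, v=10.0)  # 50.0, facilitation
```

Under this assumed form, a small denominator inflates the value, so weak unisensory responses can yield very large percentages even when the absolute change in firing is modest.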

The responses of the 6 units that are shown in Figure 2 are replotted (as mean ± standard error of mean spike rates for each stimulus condition) in Figure 7, together with their response modulation values from equation (2). Units B, C, E, and F were classified as bisensory, either because they gave significant responses to both visual and auditory stimuli or because the responses to bisensory stimulation conveyed significantly more information than either of the unisensory responses. Units B and C exhibited sublinear interactions or cross-modal occlusion. By contrast, a significant facilitation or superadditive interaction was observed in the responses of units E and F; in the most extreme case (unit F), neither visual nor auditory stimuli were effective at driving the neuron, whereas bisensory stimulation produced a clear response. Such extreme cases of facilitation were relatively uncommon and were usually observed when the responses to unisensory stimulation were particularly weak.

Figure 7.

Cross-modal interactions in auditory cortex. Data from the same units plotted in Figure 2 are replotted as spike rates ± standard error of mean (corrected for spontaneous firing) for each of the 3 stimulation conditions with the percentage of response modulation values calculated using equation (2). The response modulation value reflects the difference between the linear sum of the unisensory responses and the response to bisensory stimulation.

Figure 8A shows the distribution of cross-modal response modulation values for units classified as bisensory. Most units exhibited linear or sublinear interactions in their spike discharge rates, and in only a few cases were superadditive effects of the sort shown in Figure 7E,F observed. When looking for bisensory interactions, the intensity of both stimuli was kept constant at a relatively high value. The incidence of cross-modal facilitation in the superior colliculus is known to decrease as stimulus intensity is increased (Meredith and Stein 1986; Stanford et al. 2005), so it is possible that a greater incidence of superadditivity would have been observed had weaker stimuli been used. There were no apparent differences in the distribution of such responses across cortical areas (data not shown).

Figure 8.

Magnitude of cross-modal interactions in auditory cortex. (A) Bar graph showing the distribution of response modulation values (from eq. 2) derived from a comparison of evoked spike counts with each stimulus condition for all bisensory units. (B) Modulation values calculated in the same way but using the MI values rather than spike counts.

In order to compare the cross-modal response modulation values obtained with spike counts to the MI estimates, we used the same formula (eq. 2) but substituted the spike counts by the corresponding MI values (in bits). The distribution of response modulation values (Fig. 8B) was very similar, and both measures tended to reveal the same type of cross-modal interaction for individual units.

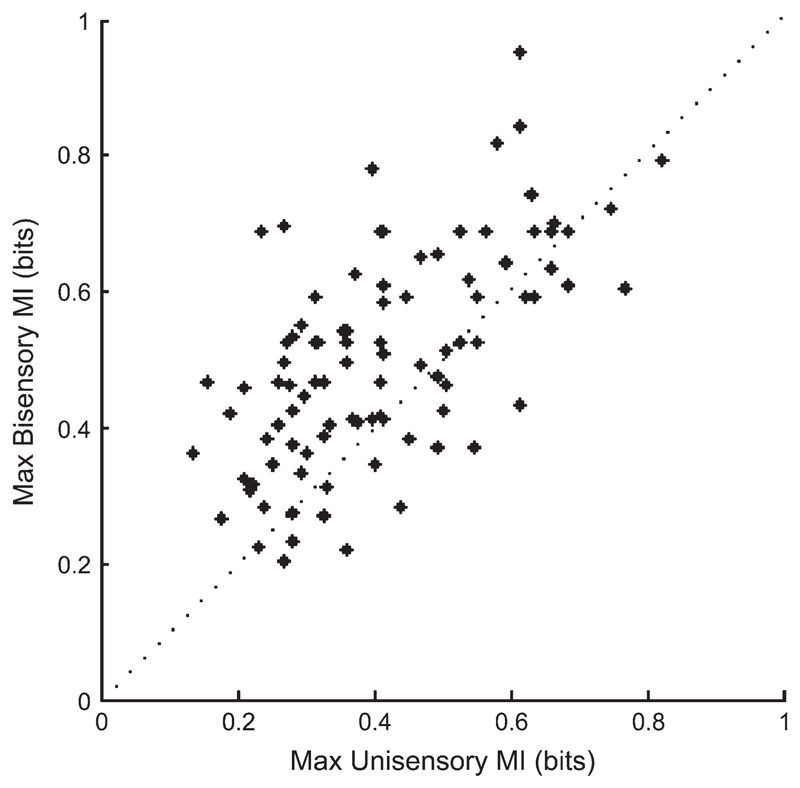

By calculating the MI associated with each stimulus condition (compared with spontaneous activity), we were able to show that in the majority of units classified as “bisensory” there was an increase in the transmitted information when combined visual-auditory stimuli were presented compared with the most effective unisensory stimulus. Figure 9 plots the MI value obtained in the bisensory condition against that for the most effective unisensory condition. The majority of points fell above the line of unity, indicating that there was more information in the response when visual and auditory stimulation was combined.

Figure 9.

Scatterplot comparing the MI values for the most effective unisensory condition and the bisensory condition for all units that responded to both stimulus modalities. Points above the line indicate that more information is transmitted in response to combined visual-auditory stimulation than in the unisensory condition.

Information in Spike Timing

As previously mentioned, we found that the MI analysis provided a more sensitive index for the presence of multisensory responses than ANOVA tests based on spike count. We explicitly tested the hypothesis that this is the case because the MI analysis takes into account stimulus-related variations in spike timing as well as the overall spike count. It has previously been shown for auditory cortex that spike count and mean response latency can together capture the total information in the full spike discharge pattern (Nelken et al. 2005). The mean response latency is a reduced timing measure and is the average latency of all spikes in the response window and would be equal to the first spike latency when there is only one spike. We therefore calculated the MI based on each of these 2 measures for all our recorded responses and compared the relative contributions of spike timing and spike count.
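
The comparison between count-based and timing-based information can be sketched as follows. This is a simplified illustration rather than the estimator used in the study: it uses a plain plug-in MI estimate with no bias correction, a hypothetical 20-ms bin width for the mean response latency, and invented spike times. It shows a case in which spike count carries no information about the presence of the stimulus, whereas the mean response latency does.

```python
import numpy as np


def mean_response_latency(spike_times, window):
    """Average latency (ms) of all spikes inside the response window; NaN if none."""
    t0, t1 = window
    spikes = np.asarray(spike_times, dtype=float)
    spikes = spikes[(spikes >= t0) & (spikes < t1)]
    return float(spikes.mean()) if spikes.size else float("nan")


def mutual_information(stimulus, response):
    """Plug-in MI (bits) between two equal-length sequences of discrete labels."""
    _, s_idx = np.unique(np.asarray(stimulus).ravel(), return_inverse=True)
    _, r_idx = np.unique(np.asarray(response).ravel(), return_inverse=True)
    s_idx, r_idx = s_idx.ravel(), r_idx.ravel()
    joint = np.zeros((s_idx.max() + 1, r_idx.max() + 1))
    np.add.at(joint, (s_idx, r_idx), 1)           # joint histogram of label pairs
    joint /= joint.sum()                          # joint probability p(s, r)
    p_s = joint.sum(axis=1, keepdims=True)        # marginal p(s)
    p_r = joint.sum(axis=0, keepdims=True)        # marginal p(r)
    nz = joint > 0                                # skip empty cells (0 * log 0 = 0)
    return float(np.sum(joint[nz] * np.log2(joint[nz] / (p_s @ p_r)[nz])))


# Hypothetical data: 4 spontaneous trials (label 0) and 4 stimulus trials (label 1).
# Every trial contains 2 spikes, but only the stimulus trials are time-locked.
window = (0.0, 200.0)  # ms
trials = [[10, 150], [60, 190], [30, 90], [120, 180],   # spontaneous
          [40, 50], [42, 52], [38, 48], [41, 55]]       # stimulus
labels = [0, 0, 0, 0, 1, 1, 1, 1]

counts = [len(t) for t in trials]
bin_width = 20.0  # ms, assumed discretization of the latency measure
latency_bins = [int(mean_response_latency(t, window) // bin_width) for t in trials]

mi_count = mutual_information(labels, counts)          # identical counts: no information
mi_latency = mutual_information(labels, latency_bins)  # timing separates the conditions
```

With small numbers of trials a plug-in estimate of this kind is biased upward, so real data would need a bias correction or a shuffle-based control before values are compared across units.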

This analysis is presented in Figure 10A, which plots, for all units in which there was a significant response for that stimulus, the relative information in spike count and spike timing. Points lying above the line of unity indicate that the unit transmits more information in its mean response latency than in its spike count. The MI calculated from mean response latencies exceeded the MI from spike counts in 56% of auditory responses (crosses), 70% of visual responses (diamonds), and 52% of bisensory (triangles) responses. The most dramatic differences were obtained when the spike count information was relatively low due to there being little difference between the stimulus-evoked response and the spontaneous activity. Although the recorded spike counts suggested that the stimulus was relatively ineffective in these cases, there was often clear time-locked activity in response to the stimulus, which the mean response latency measure was able to extract. The response of one such unit is shown in Figure 10B.

Figure 10.

Decomposing the stimulus-related information in the spike discharge patterns. (A) The MI (in bits) calculated from spike counts plotted against the MI value obtained using mean response latency for all units in which the relevant response was previously classified as significant. × denotes auditory responses, ∇ denotes visual responses, ◇ denotes responses to bisensory stimulation. (B) Example raster plot in which the MI obtained using mean response latency (0.44 bits for the bisensory condition) exceeded that obtained using spike counts (0.13 bits) alone.

Altering Stimulus Onset Times

A closer examination of the nature of the multisensory interactions was performed by altering the relative timing of the auditory and visual stimuli. In 241 units in which there was no visual response and no multisensory interaction was detectable following simultaneous presentation of the 2 stimuli, ~6% (14 units) showed suppression of the auditory response when the visual stimulus was presented either 100 or 200 ms prior to the acoustic stimulus. An example of such a neuron is shown in Figure 11. This unit responded robustly to a noise burst but was unaffected by a light stimulus, presented either alone or simultaneously with the sound. However, presentation of the visual stimulus 100 or 200 ms prior to the auditory stimulus caused a significant suppression of the response to noise. Such data show that, in some units, multisensory interactions can be observed only in response to very specific stimulus configurations.

Figure 11.

Cross-modal interactions depend on the relative timing of the visual and auditory stimuli. (A) Raster plot showing how the response of a bisensory unit varied when the sound was delayed relative to the light or vice versa. Stimulus conditions: A, auditory stimulus alone; V, visual stimulus alone; AV, simultaneous light and sound; VdA, light delayed relative to sound; VAd, sound delayed relative to light. The vertical lines indicate stimulus onset timing (light gray for light, dark gray for sound). (B) Mean (±standard deviation) evoked spike count for the visual stimulus alone, auditory stimulus alone, and for bisensory stimulation with the sound delayed relative to the light. The spike count changed significantly (Kruskal-Wallis test, P = 0.003) across these conditions, and post hoc tests revealed that the values obtained with the light alone and with interstimulus intervals of 100 and 200 ms were significantly lower than the spike counts recorded in the other bisensory stimulus conditions.

Distribution in Cortical Depth

Information about the origin of visual inputs to auditory cortex can be obtained by examining the laminae in which multisensory interactions occur. Because the silicon probe electrodes used in these experiments do not allow an accurate histological reconstruction of the depths of the recording sites, we are unable to match recordings to specific cortical laminae. However, as the probes had multiple recording sites and recordings were made orthogonal to the surface of the cortex, it was possible to examine the relative depth of the multisensory responses.

We examined the distribution of unisensory and bisensory responses across the recording sites on our silicon probes (both the 4 × 4 and 16 × 1 configuration of recording sites). Visually sensitive units could be found at all the cortical depths sampled. Without histological verification of individual recording sites or a current source density analysis of local field potentials, it was not possible to confirm the laminar origin of these recordings. Nevertheless, these data suggest that visual inputs to auditory cortex are not restricted to particular layers.

Auditory Response Characteristics of Bisensory Neurons

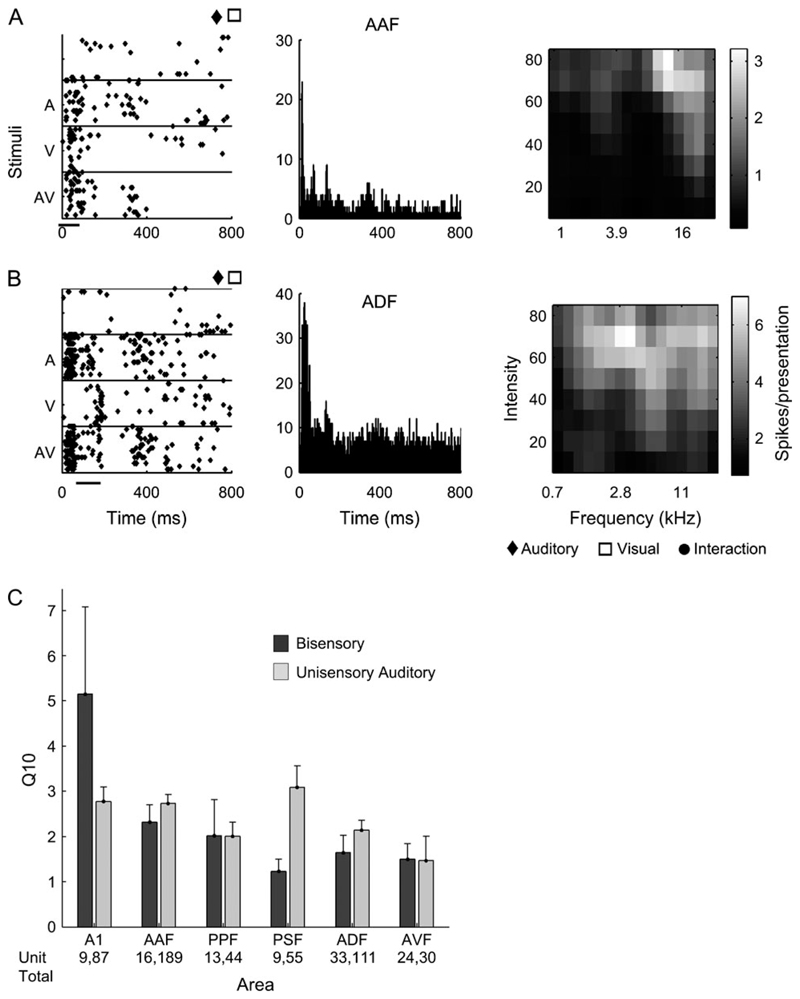

The frequency-tuning properties of all acoustically responsive units were assessed in order to examine the characteristics of those units that also received visual inputs. This was done by measuring the Q10 (best frequency divided by the bandwidth at 10 dB above threshold; high values indicate narrow frequency tuning). A 2-way ANOVA (with cortical area and response modality as factors) revealed a significant difference in tuning between different areas (F(5,614) = 3.93, P < 0.01), but not between the auditory and visual-auditory units (F(1,614) = 0.23, P = 0.23).
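
For reference, the Q10 measure can be computed as in the short sketch below, which uses the conventional definition (best frequency divided by the bandwidth of the tuning curve 10 dB above threshold). The function name, argument names, and example frequencies are hypothetical and are not taken from the recordings described here.

```python
def q10(best_frequency_khz, low_edge_khz, high_edge_khz):
    """Q10 = best frequency / bandwidth measured 10 dB above threshold.

    low_edge_khz and high_edge_khz are the lower and upper frequency limits
    of the response area at 10 dB above threshold.
    """
    bandwidth = high_edge_khz - low_edge_khz
    if bandwidth <= 0:
        raise ValueError("upper edge must exceed lower edge")
    return best_frequency_khz / bandwidth


# Hypothetical units with the same best frequency but different bandwidths.
sharp_unit = q10(best_frequency_khz=8.0, low_edge_khz=7.0, high_edge_khz=9.0)   # 4.0
broad_unit = q10(best_frequency_khz=8.0, low_edge_khz=4.0, high_edge_khz=12.0)  # 1.0
```

The more sharply tuned unit yields the larger value, which is why high Q10 values indicate narrow frequency tuning.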

Figure 12A,B plots the responses of 2 units selected to demonstrate that bisensory neurons exhibited a range of frequency-tuning characteristics, which were usually typical of the field in which they were recorded. The first column shows the raster plot of each unit in response to auditory, visual, and combined visual-auditory stimulation. The central column shows the pooled PSTH in response to 3 repetitions of each of the pure-tone stimuli used to construct the FRA, which is shown in the third column. Unit A is from AAF, and unit B is from ADF. These units have FRAs that are highly typical for their respective fields, with the AAF unit having a short latency onset response and sharp frequency tuning, whereas the ADF response was more sustained and broadly tuned. Both of these units were also visually responsive but did not exhibit significant cross-modal interactions. A comparison of the Q10 values for unisensory auditory and bisensory units recorded in each of 6 cortical fields is shown in Figure 12C. No significant differences in auditory frequency tuning were found according to whether the units were sensitive to visual stimulation or not.

Figure 12.

(A, B) Two examples of FRAs recorded from bisensory units. The left column shows the raster plots in response to auditory, visual, and bisensory stimuli (same conventions as Fig. 2). The middle column shows the pooled PSTH for 3 repetitions of each of the pure-tone stimuli used to construct the FRAs, which are shown in the right column. (A) Unit recorded in AAF. (B) Unit recorded in ADF. (C) Histograms showing the distribution of Q10 values for unisensory auditory and bisensory units in each of the 6 cortical fields.

Anatomical Connectivity: Potential Cortical Sources of Visual Input

A total of 20 separate tracer injections were made into the auditory cortices of 11 ferrets as indicated in Table 1. In all these cases, the injection sites spanned all cortical layers but did not spread to the white matter. To serve the aim of this investigation, only connections between auditory cortex and other nonauditory sensory cortical areas will be described. Visual, somatosensory, and parietal areas of the ferret cortex were identified on the basis of previous anatomical and physiological studies (Innocenti et al. 2002; Manger et al. 2002, 2004).

The number of labeled neurons and the quality of filling varied with the tracer injected, presumably reflecting differences in the size of the injection sites and in the sensitivity and diffusion characteristics of different tracers. Although this prevented us from making quantitative comparisons of the projections arising from different injection sites, the use of different tracers avoided the limitations associated with any particular tracer and allowed us to inject two or more different tracers in the same cortex. In each case, these injections resulted in labeling in nonauditory sensory areas that was predominantly ipsilateral to the injection site and, for injections placed in the same areas, comparable in its distribution among different tracers. Retrograde labeling was also examined outside of the cerebral cortex, which, as expected, revealed labeling in different parts of the medial geniculate body, but not in the lateral geniculate or in the midbrain. Labeling was observed in the suprageniculate nucleus after tracer injections into areas on the anterior bank (ADF and AVF, data not shown).

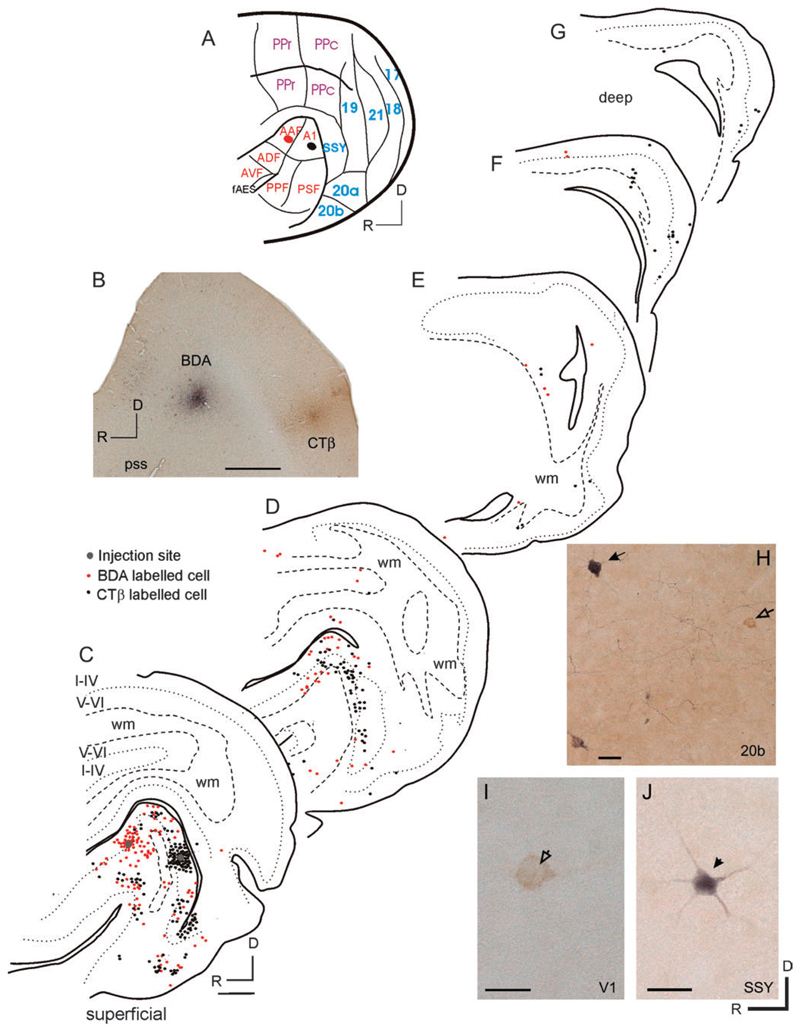

In the cortex, retrogradely labeled cells were found in several nonauditory areas after tracer injections in the EG (Figs 13-15). Labeled cells were consistently observed in visual areas 17, 18, 19, and 20, as well as the suprasylvian cortex (SSY), and posterior parietal cortex, although their distribution varied according to the location of the injection sites. These cells were located mainly in cortical layers III and V.

Figure 13.

Cortical inputs to the MEG. (A) Schematic of the ferret brain showing the sensory and posterior parietal areas. Previously described visual areas are labeled in blue, parietal in purple, and auditory in red. The CTβ and BDA injection sites in the MEG are represented by the red and black regions, respectively. (B) Photomicrograph of a flattened tangential section of the ferret EG showing the injection sites. Deposits of tracer were made by iontophoresis into frequency-matched locations (best frequency, 7 kHz) in the caudal and rostral MEG. (C-G) Drawings of flattened tangential sections of the cortex, ordered from lateral (C) to medial (G). CTβ-labeled cells are shown in red and BDA-labeled cells in black. Every fifth section (50-μm thick) was examined, but, for the purpose of illustration, labeling from pairs of sections was collapsed onto single sections. wm, white matter. Dotted lines mark the limit between layers IV and V; dashed lines delimit the white matter. Scale bars, 1 mm. (H-J) Examples of retrogradely labeled cells in area 20 (H), area 17 (I), and area SSY (J). The open arrow illustrates a CTβ-labeled cell, the closed arrows show BDA-labeled cells. Terminal fields were also visible in these sections. Scale bars, 50 μm.

Figure 15.

Cortical inputs to higher level auditory areas on the AEG. (A) Schematic of the ferret brain showing the sensory and posterior parietal areas (as in Fig. 13). The location of the CTβ injection site in the AEG is shown in black. The vertical lines represent the rostrocaudal level of the coronal sections in (C-I). (B) Photomicrographs of a coronal section showing the injection site. Scale bar, 1 mm. (C-I) Drawings of coronal sections of the cortex, ordered from rostral (C) to caudal (I). CTβ-labeled cells are shown in black. (J, K) Examples of retrogradely labeled cells in PPc and area 21, respectively. HP, hippocampus. Scale bars, 50 μm.

Figure 13 shows the location of retrogradely labeled cells in the cortex following injections of 2 different tracers in the MEG, where the primary auditory fields, A1 and AAF, are located. Sparse labeling was found in areas 17, 18, and 20 (Fig. 13C-G), caudal posterior parietal cortex (PPc, Fig. 13D), and in SSY (Fig. 13D). The greatest number of labeled cells was found in area 20, which has been subdivided into areas 20a and 20b (Manger et al. 2004). Labeling was densest in area 20b, the smaller and more anterior of the 2 fields, with fewer labeled cells present in area 20a. Labeling in areas 17 and 18 was found near their dorsal and ventral borders, corresponding to where the peripheral visual field is represented (Law et al. 1988). Occasional cells were observed in the AES cortex, which, in the ferret, lies within the anterior bank of the pseudosylvian sulcus and receives inputs from primary visual and somatosensory areas (Ramsay and Meredith 2004; Manger et al. 2005). Tracer injections placed into the MEG in other animals produced patterns of labeling consistent with those shown in Figure 13. Injections into both caudal MEG, corresponding to A1, and more rostral MEG, corresponding to AAF, produced very similar patterns of labeling in nonauditory areas.

Figure 14 shows the pattern of cortical labeling observed after injections of BDA and CTβ were placed in the AEG and posterior ectosylvian gyrus (PEG), respectively. The labeling produced by each tracer was almost entirely nonoverlapping, indicating that the higher level auditory cortical areas located on these 2 sides of the EG have different sources of input. The injection of CTβ in the PEG resulted in extensive labeling in the MEG, together with some in AES cortex and in the most rostral and ventral parts of the AEG, which probably correspond to limbic areas (Fig. 14D,E). As in the MEG, the predominant nonauditory input to the PEG originated in areas 20a and 20b (Fig. 14E). Scattered cells were also observed in SSY and area 19 (Fig. 14F), although labeling in primary visual areas and PPc was virtually absent. The injection of CTβ in this animal was made in the center of the PEG (Fig. 14A,C), probably spanning the common low-frequency border of fields PPF and PSF (Bizley et al. 2005). More restricted injections into either one of these fields yielded similar patterns of labeling to those in Figure 14.

Figure 14.

Cortical inputs to higher level auditory areas on the AEG and PEG. (A) Schematic of the ferret brain showing the sensory and posterior parietal areas (as in Fig. 13). The black circle represents an injection of BDA made by iontophoresis into the AEG, and the red circle represents the site of the CTβ injection in the PEG. (B, C) Photomicrographs of flattened tangential sections showing the injection sites in the AEG and PEG, respectively. (D-H) Drawings of flattened tangential sections of the cortex, ordered from lateral (D) to medial (H). CTβ-labeled cells are shown in red and BDA-labeled cells in black. Scale bars, 1 mm. The centers of the injection sites are illustrated by gray circles. (I, J) Examples of retrogradely labeled cells in area 20 and area SSY. Scale bars, 50 μm. Other details as in Figure 13.

In contrast to the MEG and PEG, the primary nonauditory input to the AEG arose from SSY (Figs 14E and 15C,D). Sparser labeling was observed in areas 20a and 20b (Figs 14E and 15D), PPc (Figs 14F,H and 15C,D), and occasionally in areas 17 and 18 (Figs 14F and 15F-H). The single CTβ injection illustrated in Figure 15 was placed in the center of the AEG, did not spread as far as the pseudosylvian sulcus, and therefore did not include AES cortex. In contrast, the BDA injection into the AEG shown in Figure 14 did extend into the sulcus, most likely including AES cortex. Nevertheless, the pattern of labeling that resulted from these 2 injections was very similar.

In summary, these tracer injections reveal the presence of projections to auditory cortex from several different visual cortical areas. The strongest inputs to auditory fields located on both the MEG and the PEG arise from area 20. Additionally, MEG receives weak, direct projections from primary visual areas, whereas PEG is innervated by higher visual areas. This differs from the AEG, where the largest input originates in SSY.

Discussion

The traditional view that integration of information across the senses occurs in the cortex only after substantial modality-specific processing has taken place has recently been cast into doubt by the discovery that multisensory interactions are prevalent in low-level cortical areas (reviewed by Schroeder and Foxe 2005). Our data build on this growing body of neuroimaging, electrophysiological, and anatomical evidence in primates and other species, by showing that unisensory visual and visual-auditory neurons are widely distributed in ferret auditory cortex. We also identified a possible substrate for these responses by showing that auditory cortex receives inputs from several visual cortical areas. We found some visually sensitive neurons in all 6 auditory areas that were studied, including the primary fields A1 and AAF. Although the incidence of these neurons increased in higher areas on the AEG, their presence in all these fields suggests that multisensory convergence is a general feature of auditory, and perhaps all sensory, cortical areas.

The animals used in this study were anesthetized and had not undergone any behavioral training. This enabled us to carry out the large numbers of recordings necessary to detect and document visually responsive neurons and to investigate multisensory integration without having to consider the additional modulating factors of behavioral state or prior training, which are known to influence multisensory interactions at a cellular level (e.g., Bell et al. 2003; Brosch et al. 2005). Previous studies in both passive awake (Schroeder et al. 2001; Schroeder and Foxe 2002) and anesthetized (Fu et al. 2003) monkeys have revealed the presence of visual and somatosensory influences on the belt areas of auditory cortex. However, the only reports of multisensory integration in core areas have come from studies of behaving animals (Brosch et al. 2005; Ghazanfar et al. 2005). For example, when monkeys were trained to perform an auditory discrimination task that was triggered by a visual stimulus, responses to the cue light were observed (Brosch et al. 2005). Our data suggest that the substrate for these responses is present and active even in animals that have not undergone any form of multisensory training.

Previous multisensory investigations in the carnivore brain have focused on AES cortex (Wallace et al. 1992; Benedek et al. 1996; Manger et al. 2005) and the rostral aspect of the lateral suprasylvian sulcus (Toldi and Feher 1984; Wallace et al. 1993), which both contain a mixture of modality-specific and multisensory neurons. These are, however, regarded as “association areas,” and there have previously been no reports of multisensory convergence within putatively unisensory fields of the carnivore auditory cortex. Indeed, the absence of visual activity has been used as a defining characteristic of nonprimary auditory areas (e.g., in differentiating the secondary auditory cortex from AES in the cat [Clarey and Irvine 1990]). Although the highest incidence of visually responsive neurons was found in AVF, which lies close to the presumed location of AES (Ramsay and Meredith 2004; Manger et al. 2005), we found that multisensory convergence is a property of at least 15-20% of units in each of the auditory areas that have so far been characterized in the ferret. As in the study of visual-auditory interactions in auditory cortex by Ghazanfar et al. (2005), we found a higher incidence of multisensory interactions in the higher order cortical fields than in the core areas.

There are at least 2 possible reasons why we were able to observe a greater prevalence of visual influences in auditory cortex than in previous studies in other species. First, the use of multielectrode recording arrays allowed us to sample large numbers of units in a relatively unbiased fashion within a single animal. Second, instead of simply comparing the number of spikes evoked by different stimuli, as all previous studies of multisensory processing have done, we estimated the information transmitted by the units about the type of stimulus presented. Although the multisensory responses of individual units were often classified in the same manner by both approaches (i.e., as superadditive, additive, or subadditive), the MI estimates were more sensitive. This is most likely because the MI analysis takes into account spike timing as well as spike count. Indeed, by comparing the relative amounts of information in 2 reduced measures of the spike train (the spike count and the mean response latency), we were able to show that the majority of units transmit more information in the timing of their responses than in the overall spike counts. This was particularly the case for stimuli that, in terms of a change in firing rate, were weakly effective. Thus, the quantification of responses to multisensory stimuli needs to take into account not only the number of spikes evoked by different stimuli (Stanford et al. 2005) but also at least a coarse measure of the timing of those spikes.
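The point that a reduced timing measure can carry stimulus information when spike counts do not can be illustrated with a toy calculation. The following is a minimal sketch only, assuming discrete response bins and a naive plug-in estimator with no bias correction; the function name `mutual_information`, the stimulus labels, and the toy data are hypothetical and are not the analysis or the recordings used in this study.

```python
import math
from collections import Counter


def mutual_information(stimuli, responses):
    """Naive plug-in estimate of I(S;R) in bits from paired discrete samples.

    Illustrative assumption: no correction for limited-sampling bias,
    which a real analysis of neural data would normally require.
    """
    n = len(stimuli)
    if n == 0 or n != len(responses):
        raise ValueError("stimuli and responses must be non-empty and equal in length")
    joint = Counter(zip(stimuli, responses))  # counts of (stimulus, response) pairs
    n_s = Counter(stimuli)                    # counts of each stimulus
    n_r = Counter(responses)                  # counts of each response value
    mi = 0.0
    for (s, r), c in joint.items():
        # p(s,r) * log2( p(s,r) / (p(s) * p(r)) ), written with raw counts
        mi += (c / n) * math.log2(c * n / (n_s[s] * n_r[r]))
    return mi


# Hypothetical toy unit: 4 auditory ("A") and 4 visual ("V") trials.
stimuli = ["A"] * 4 + ["V"] * 4
# Spike counts have the same distribution for both stimuli ...
spike_counts = [2, 3, 2, 3, 2, 3, 2, 3]
# ... but the binned mean latency differs (0 = early bin, 1 = late bin).
latency_bins = [0, 0, 0, 0, 1, 1, 1, 1]

mi_count = mutual_information(stimuli, spike_counts)
mi_latency = mutual_information(stimuli, latency_bins)
print(f"MI from spike count: {mi_count:.2f} bits")
print(f"MI from latency:     {mi_latency:.2f} bits")
```

In this contrived case the spike count carries no information about stimulus identity, whereas the latency bin identifies the stimulus perfectly (1 bit for 2 equiprobable stimuli). Real spike trains fall between these extremes, and plug-in estimates from small trial numbers are biased upward, so the sketch conveys the logic of the comparison rather than a usable analysis pipeline.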

Some of the clearest evidence for responses in auditory cortex to visual and somatosensory stimuli has come from studies in which local field potentials and/or multiunit activity have been measured (Schroeder et al. 2001; Schroeder and Foxe 2002; Fu et al. 2003; Ghazanfar et al. 2005). Although these recording methods are useful for detecting the presence of sparsely distributed neurons or, in the case of local field potentials, for examining laminar variations in neuronal stimulus processing, single-unit recordings are required in order to probe the inputs to and integrative properties of individual neurons. By using this approach, we can conclude that we did indeed record from units with converging visual and auditory inputs, rather than from closely intermingled unisensory neurons. This also facilitated an examination of the other response characteristics of the units. Neurons whose activity was modulated by visual stimuli usually exhibited frequency-tuning properties that were characteristic of the cortical fields in which they were located, further supporting the claim that multisensory integration is a general feature of the auditory cortex.

Sensitivity to visual stimuli could already be a feature of the ascending input to auditory cortex or arise as a result of lateral or feedback connections from other cortical areas. There have been several reports of somatosensory or visual influences on neuronal activity in subcortical auditory structures (Aitkin et al. 1981; Young et al. 1992; Doubell et al. 2003; Komura et al. 2005; Shore and Simic 2005; Musacchia et al. 2006). Moreover, following tracer injections in auditory cortex, we observed retrograde labeling in the suprageniculate nucleus of the thalamus, which is known to receive auditory, somatosensory, and visual inputs (Hicks et al. 1986; Benedek et al. 1997; Eördegh et al. 2005). Local field potential recordings from monkey auditory belt areas have shown that somatosensory responses have a similar feedforward profile to the auditory responses, that is, beginning in layer IV and then spreading to extragranular layers (Schroeder et al. 2001). There is therefore clear evidence that nonauditory inputs can arise as a result of multisensory convergence either at a subcortical level or within the cortex itself.

Visual activation of the caudomedial area of monkey auditory cortex has a different laminar profile, however, indicative of inputs terminating in the supragranular and infragranular layers, but not in layer IV (Schroeder and Foxe 2002). This pattern is more consistent with inputs from other cortical fields. In their single-unit recording study of rat cerebral cortex, Wallace et al. (2004) reported that neurons responsive to different combinations of visual, auditory, and somatosensory stimuli were restricted to layers V and VI, although modality-specific units were distributed across all cortical layers. This differs from our observation that units sensitive to visual stimulation were encountered throughout the full depth of auditory cortex.

Examination of response latencies can also help to identify potential sources of input. As expected, response latencies to visual stimuli were generally much longer than those to sound. They were also longer than those reported to appropriately oriented sinusoidal gratings in ferret V1 (Alitto and Usrey 2004), but more in line with those recorded in area SSY (Philipp et al. 2005). Although response latency depends on many factors, such as stimulus contrast, the relatively long values observed in the present study are consistent with projections to auditory cortex arising predominantly from higher visual cortical areas.

Direct evidence for this is provided by our finding that both primary and nonprimary auditory fields are innervated by neurons in visual and posterior parietal areas of ferret cortex. Anatomical studies in primates have shown that different regions of auditory cortex, including A1, project to V1 and V2 (Falchier et al. 2002; Rockland and Ojima 2003). However, evidence for reciprocal connections has so far been lacking. Our anatomical results provide the first evidence for projections from early visual areas to auditory cortex, which could therefore underlie the visual responses recorded in the present study. It is interesting to note that the only projections from areas 17 and 18 were to the MEG, where the primary auditory fields are found, and that different areas on the suprasylvian gyrus provide the main sources of visual input to the higher level auditory fields on the PEG and AEG.

An important, and as yet unanswered, question relates to the properties of the visual responses in auditory cortex. Receptive field sizes and visual field representation vary among the cortical areas that project to auditory cortex (Law et al. 1988; Manger et al. 2002, 2004; Cantone et al. 2005). In a limited number of recordings, we mapped the spatial extent of the visual receptive fields and found, in most cases, that these were broadly tuned to regions of space within the anterior contralateral quadrant. This finding, together with the low stimulus thresholds observed where these were measured, makes it extremely unlikely that the responses to the LEDs arose from nonspecific arousal effects. Interestingly, the addition of a broadband stimulus delivered to the contralateral ear resulted in a sharpening of the visual spatial tuning, suggesting that the neurons were integrating information from the 2 senses in ways that might enhance stimulus localization. To date, relatively little is known about the receptive field properties of neurons in higher visual areas of the ferret beyond receptive field size, location, and sensitivity for stimulus motion. Further studies are clearly required to examine these properties in both visual and auditory areas of the cortex.

It has been suggested that somatosensory and visual inputs into auditory cortex might facilitate auditory localization, due to their greater spatial precision, or be involved in “resetting” auditory cortical activity to enhance responses to subsequent auditory input (Schroeder and Foxe 2005). Auditory cortex is certainly required for normal sound localization accuracy (Heffner HE and Heffner RS 1990; Malhotra et al. 2004; Smith et al. 2004), and spatial information is thought to be encoded in a distributed fashion across different cortical fields (Furukawa et al. 2000). This is therefore consistent with widespread visual inputs within auditory cortex. However, areas 20a and 20b, which provide the largest input to both MEG and PEG, are thought to belong to the ventral “what” visual processing stream in ferrets (Manger et al. 2004). By contrast, the largest visual input to auditory fields on the AEG comes from area SSY, which overlaps with the recently described posterior suprasylvian (PSS) area (Philipp et al. 2006). Area PSS contains a high incidence of direction-selective neurons and is therefore likely to be part of the dorsal “where” processing stream. Further characterization of these visual inputs could help to unravel the functional organization of auditory cortex.

Acknowledgments

This work was funded by a Wellcome Trust studentship and a travel grant from the Interdisciplinary Center for Neural Computation of the Hebrew University to JKB and by Wellcome Senior and Principal Research Fellowships to AJK. IN was supported by a Volkswagen grant and a GIF grant.

Funding to pay the Open Access publication charges for this article was provided by the Wellcome Trust.

Notes

Conflict of Interest: None declared.

References

- Adams JC. Heavy metal intensification of DAB-based HRP reaction product. J Histochem Cytochem. 1981;29:775. doi: 10.1177/29.6.7252134.

- Aitkin LM, Kenyon CE, Philpott P. The representation of the auditory and somatosensory systems in the external nucleus of the cat inferior colliculus. J Comp Neurol. 1981;196:25–40. doi: 10.1002/cne.901960104.

- Alitto HJ, Usrey WM. Influence of contrast on orientation and temporal frequency tuning in ferret primary visual cortex. J Neurophysiol. 2004;91:2797–2808. doi: 10.1152/jn.00943.2003.

- Bajo VM, Nodal FR, Bizley JK, Moore DR, King AJ. The Ferret Auditory Cortex: Descending projections to the inferior colliculus. Cereb Cortex. 2006 doi: 10.1093/cercor/bhj164.

- Bell AH, Corneil BD, Munoz DP, Meredith MA. Engagement of visual fixation suppresses sensory responsiveness and multisensory integration in the primate superior colliculus. Eur J Neurosci. 2003;18:2867–2873. doi: 10.1111/j.1460-9568.2003.02976.x.

- Benedek G, Fischer-Szatmari L, Kovacs G, Perenyi J, Katoh YY. Visual, somatosensory and auditory modality properties along the feline suprageniculate-anterior ectosylvian sulcus/insular pathway. Prog Brain Res. 1996;112:325–334. doi: 10.1016/s0079-6123(08)63339-7.

- Benedek G, Pereny J, Kovacs G, Fischer-Szatmari L, Katoh YY. Visual, somatosensory, auditory and nociceptive modality properties in the feline suprageniculate nucleus. Neurosci. 1997;78:179–189. doi: 10.1016/s0306-4522(96)00562-3.

- Bizley JK, Nodal FR, Nelken I, King AJ. Functional organization of ferret auditory cortex. Cereb Cortex. 2005;15:1637–1653. doi: 10.1093/cercor/bhi042.

- Brosch M, Selezneva E, Scheich H. Nonauditory events of a behavioral procedure activate auditory cortex of highly trained monkeys. J Neurosci. 2005;25:6797–6806. doi: 10.1523/JNEUROSCI.1571-05.2005.

- Brugge JF, Reale RA, Jenison RL, Schnupp J. Auditory cortical spatial receptive fields. Audiol Neurootol. 2001;6:173–177. doi: 10.1159/000046827.

- Calvert GA, Brammer MJ, Bullmore ET, Campbell R, Iversen SD, David AS. Response amplification in sensory-specific cortices during crossmodal binding. Neuroreport. 1999;10:2619–2623. doi: 10.1097/00001756-199908200-00033.

- Cantone G, Xiao J, McFarlane N, Levitt JB. Feedback connections to ferret striate cortex: direct evidence for visuotopic convergence of feedback inputs. J Comp Neurol. 2005;487:312–331. doi: 10.1002/cne.20570.