Abstract

Anterior cingulate cortex (ACC) carries a wealth of value-related information necessary for regulating behavioral flexibility and persistence. It signals error and reward events informing decisions about switching or staying with current behavior. During decisions it encodes the average value of exploring alternative choices (search value), even after controlling for response selection difficulty, and, during learning, the degree to which internal models of the environment and current task must be updated. ACC value signals are in part derived from the history of recent reward simultaneously integrated over multiple time scales thereby enabling comparison of experience over the recent and more extended past. Such ACC signals may instigate attentionally demanding and difficult processes such as behavioral change via interactions with prefrontal cortex. However, the signal in ACC instigating behavioral change need not itself be a conflict/difficulty signal.

Despite many prominent reports relating dorsal anterior cingulate cortex activity (dACC, or rostral cingulate zone: RCZa1; figs.1b,2,3e) to behavior and cognition both in health and disease2, a general theory of its function remains elusive because there is no single factor linking change in stimuli or behavior to neural activity. According to most current theories, dACC plays a key role in behavioral flexibility but there are disputes about its specific contribution. Imagine you are exploring a complex environment when you encounter a valuable item (e.g. an employment offer for a job-seeker or fruit for a foraging monkey). You may either engage with that item or ignore it if the environment is sufficiently rich to make trying elsewhere tempting or more valuable. In such situations, we argue dACC signals information such as the average value of the environment (“search value”), influencing whether you continue your search, potentially entering a sequence of new actions, or remain with the item encountered3. DACC activity also reflects other information determining behavioral change such as how well things have been going (average reward rate) recently over multiple time scales4–6. DACC activity also occurs when animals7,8 or people9 update models of the current task or environment so that new patterns of behavior can emerge. While evidence for encoding of such information in dACC activity is comparatively recent, there is already broad consensus that dACC activity in many species reflects outcomes of decisions - successes and errors - and whether such feedback indicates a need for behavioral change10–18.

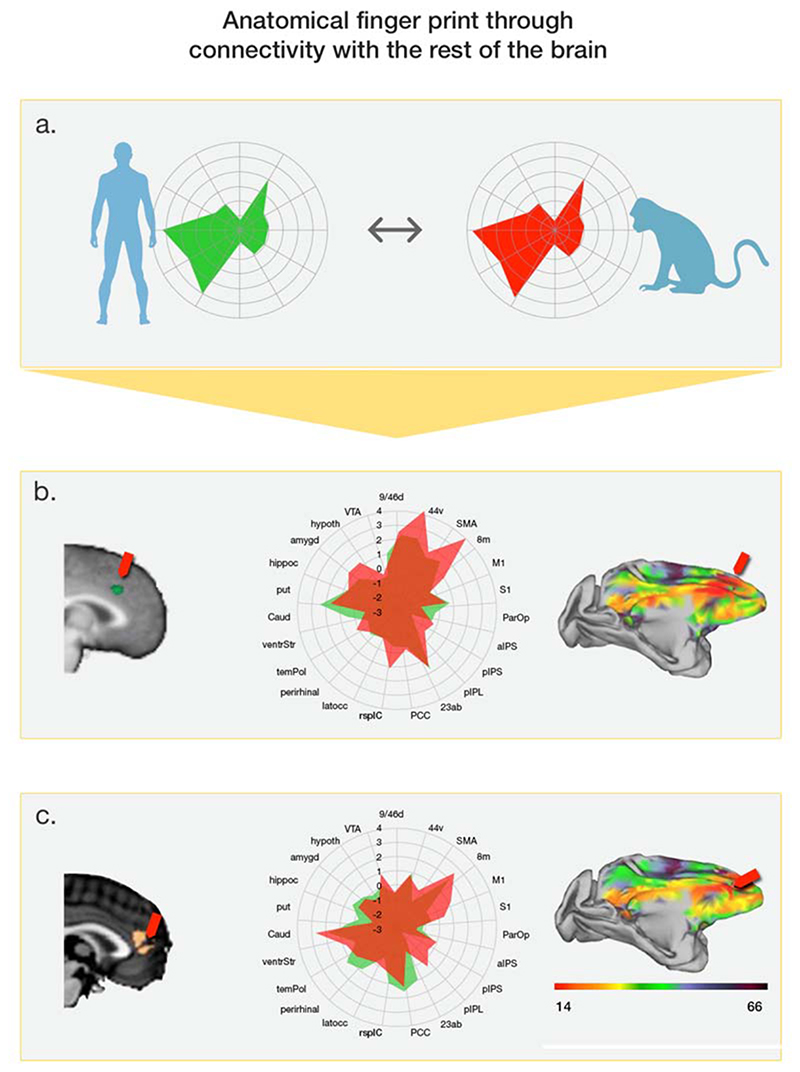

Figure 1. Comparing dACC and pgACC in humans and macaques.

(a) Every brain region has a distinctive “fingerprint” of connections. To compare brain areas in humans and macaques we first identify the fingerprint of the human area. This is estimated from its fMRI-derived resting state activity correlations with other brain areas (left). There is strong positive coupling with the area marked on the circumference when the green line is close to the circumference. The fingerprint can then be compared with fingerprints of every frontal area in the macaque. The best matching fingerprint from the other species is shown in red on the right. Comparison of fingerprints suggests (b) dACC and (c) pgACC similarities in humans and macaques1. In each case task-related human brain activity is shown on the left. Activity in dACC from Behrens and colleagues12 is shown in b. Panel c shows activity in pgACC covarying with participants’ general willingness to forage amongst alternative choices despite costs recorded by Kolling and colleagues3 (far left) and activity recorded by McGuire and Kable48 (to its right) also in pgACC and adjacent dorsomedial prefrontal cortex that is related to moment-to-moment variation in the value of persisting in a choice through a time delay. The anatomical names used by the two sets of authors differed but the activations’ proximity highlights the fact that it is the same region that is active in both studies. In each case the center shows fingerprints for the same areas based on a set of 23 key brain regions for the human (green) and best matching macaque area (red). On the right heat maps show the strength of fingerprint correspondence for all voxels in the macaque frontal lobe (red indicates strong correspondence and arrows indicate peak correspondence). DACC and pgACC are associated with different patterns of resting state connectivity but in each case corresponding areas are found in the macaque.

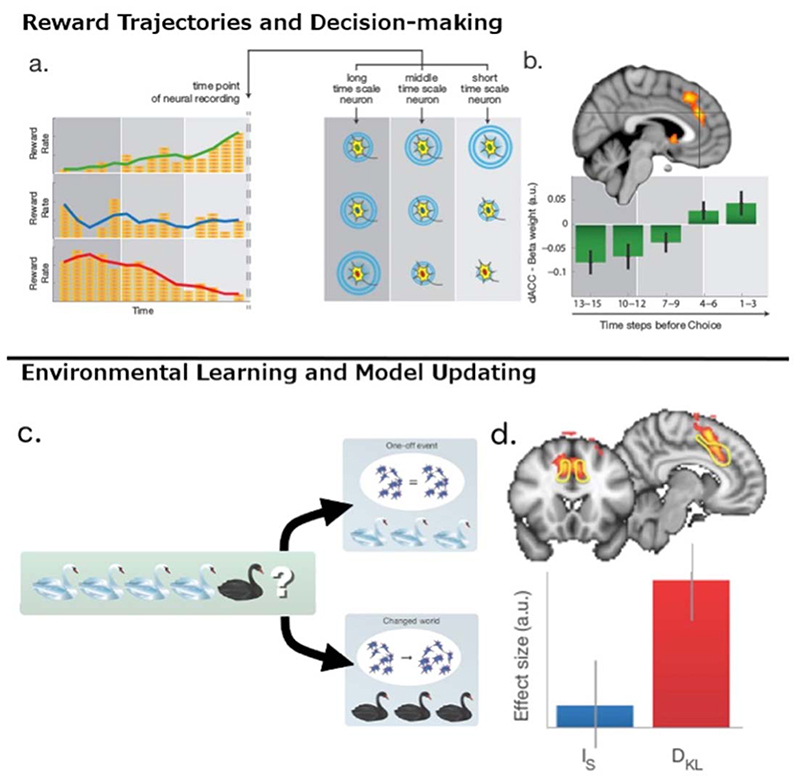

Figure 2. The derivation of value signals in dACC and the presence of model updating signals in dACC.

(a) Deriving value signals from the history of past rewards in macaque and human dACC over multiple time scales. (left) The value of a choice can be estimated from the history of rewards associated with it. A choice may be associated first with high value (many coins, red line), low value (green), or medium value (blue). Changes in reward rates over time mean that red and green option values reverse over time. (right) The activity of neurons in macaque dACC reflects the history of rewards received over different time scales allowing the simultaneous representation of value estimates over different time periods4,6. A neuron sensitive to reward over longer time scales will be more active, all other things being equal, when a choice is initially associated with high levels of reward (bottom) than low levels (top). A neuron sensitive to short term reward histories, all other things being equal, will be more active when recent experience has been good (top) rather than bad (bottom). (b) Human dACC also reflects reward history over different time scales simultaneously. The relative weight and sign assigned to more recent and more distant reward history suggest a comparison that effectively allows for the projection of future expected reward trajectories (has reward been encountered more frequently recently than over the longer term average?) that could guide decisions to keep with a default or to change5. (c) DACC is active when internal models are updated not just when task difficulty increases because surprising events occur. Imagine a naturalist who has only ever observed white swans. On first visiting a new country they come across a black swan for the first time. Should they treat this new swan as an outlier and continue to expect that the next swan they see will be white as usual? Alternatively should they update their model of the new environment and expect to see more black swans?
In the first case it may be difficult to know how to respond to the surprising new event but the neural representation of the environment remains constant. In the second case the neural representation is reconfigured. (d) Whole-brain cluster-corrected fMRI analysis indicated a region spanning dACC and adjacent pre-SMA in which there was a significant effect of model updating (contrast shows all voxels with a parametric effect of DKL). The ROI denoted by the yellow line is the dACC region of interest analysed in the lower part of the panel to show mean effect size for surprise (IS) and updating (DKL) (error bars are SEM). Adapted from9.

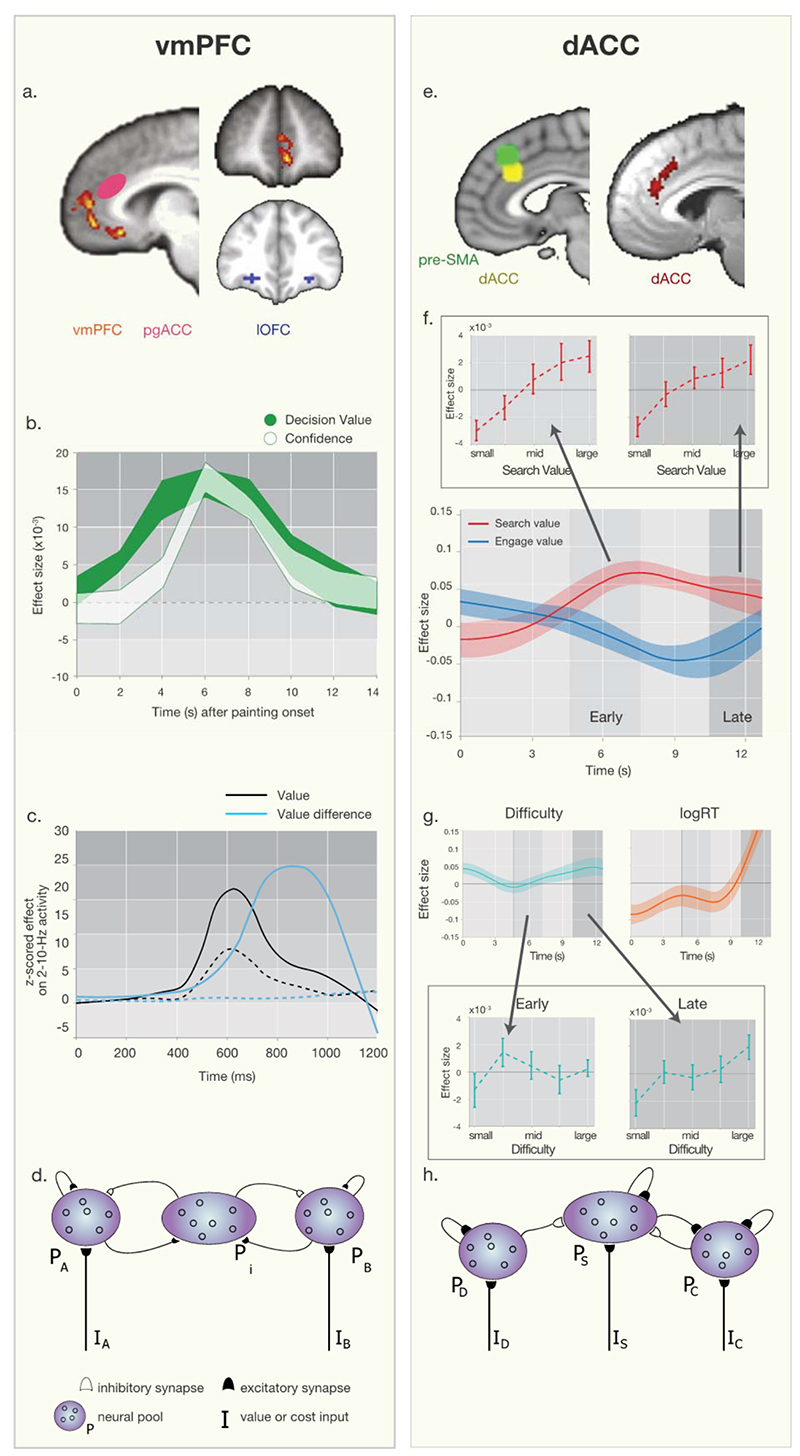

Figure 3. Comparing vmPFC and dACC value signals and decision-making processes.

Both vmPFC (left column) and dACC (right column) are anatomically distinct from adjacent areas. In both areas multiple signals are present but can be explained in relation to biophysically plausible neural network models. (a) Whole brain cluster-corrected value difference (chosen-unchosen option value) signal in vmPFC50 (orange) is anatomically distinct from reward activity in more lateral OFC1 (blue on coronal section) and pgACC (sometimes also called “vmPFC”48, magenta oval on sagittal section). (b) fMRI time course analysis reveals vmPFC activity reflects both decision value (green) and confidence (white) [adapted from55]. (c) MEG recordings show vmPFC activity first reflects the sum of the values (black) of the possible choices and then the difference (blue) in the choices’ values (solid lines are correct trials, dashed lines are errors; adapted from61) but these different signals can be explained by (d) a neural network model60. PA and PB are two pools of neurons in which activity is a function of the value of options A and B respectively. There are recurrent excitatory connections within both pools but between pools interactions are inhibitory and mediated by pool PI. The inhibitory interneurons instantiate a competitive “value comparison” process that leaves a single pool in an attractor state and a decision is made. (e) Left panel shows yellow dACC ROI from which signals were extracted. The region is anatomically distinct from the location of difficulty effects in or near pre-SMA (green)20. Right panel shows the whole brain cluster-corrected effect of search value in dACC in red, even after controlling for difficulty and log(RT)3 (peak MNI: x=-4mm, y=36mm, z=26mm). (f) fMRI timecourse analysis of dACC reveals effects of search value (red) followed by engage value (blue) even after controlling for later effects of other factors (g) such as logRT (red) and difficulty (blue).
Insets in panel f show the BOLD signal binned by different levels of search value, illustrating that a search value signal emerges early and is sustained until late in the trial, but insets in panel g show that, using a similar binning approach, difficulty effects emerge only later. Arrows linking insets to timecourses indicate approximate time of binning analysis. (h) Network model of dACC explaining the sequence of activity in f. Here, similarly to the network model in d, distinct neural populations receive different value input and interact with each other via mutual inhibition and excitation. However, we believe that, compared to the symmetric representation of different option values in d, this network model of dACC has a larger population that represents the value of the environment and is sensitive to environmental context and meta changes such as volatility (referred to as PS, for a population that can represent search value). Due to dACC’s well established signals related to costs such as effort and pain, we believe such representations interact with a neural population here referred to as PC (i.e. a population signaling costs). Furthermore, the value of sticking with a default option is also implemented here as PD (i.e. a population signaling a pull or bias toward a default), a self-sustaining neural population that inhibits populations representing the value of exploration or the overall environment (PS). Note, however, that this inhibitory impact on dACC might not be implemented as a symmetric interaction and might originate from remote regions. Furthermore, as for panel d, one very important remaining question is how those neural populations trigger appropriate responses after a decision has been reached. In this model population PS might simply initiate behavioral adaptation and exploratory behavior, as well as a mode in which there is increased plasticity, model updating, and learning.

By contrast a prominent theory has proposed dACC “diversity can be understood in terms of a single underlying function: allocation of control”19. Arguably it is non-trivial to identify the controlled process in many naturalistic settings. To return to our example, one could argue that continuing to explore and ignoring a tempting item encountered requires control, or equally that precisely the opposite behavior, engaging with the item encountered and ignoring distracting influences of potentially valuable alternatives, requires control. A third, more recent suggestion is that dACC signals the need for control when both options are almost identical in value20. Consequently, in one version of this account19 foraging-related value signals are discussed as determining the value of exerting control19. However, in a more recent version20 the existence of such signals in dACC is questioned and instead it is proposed “dACC activity can be most parsimoniously and accurately interpreted as reflecting choice difficulty alone”20.

Our argument is not that difficulty/conflict does not modulate dACC activity. Indeed such modulation is seen in most brain regions concerned with decision-making. Rather we argue that difficulty or control allocation is insufficient to account for all dACC activity. Moreover, we argue below that difficulty’s/conflict’s impact on dACC signals may be a side effect of its role in evaluating behavioral change and model updating, not the other way around.

Anatomy of ACC and medial frontal cortex in primates and rodents

Although human dACC has been suggested to be unique21, its somatotopy22,23 and activity coupling with other brain areas1, which reflects anatomical connections24, suggest important resemblances with monkey dACC (fig.1). A region’s connectivity fingerprint is critical in constraining its function because connections determine the information regions receive and the influence they wield over other areas. Some of the areas dACC interacts with, such as frontal pole, have changed during evolution25,27, but dACC’s overall connectivity fingerprint remains similar in humans and other primates. Although there is no exact equivalent of primate dACC in rodents there are similarities between the anatomy of area 24, of which dACC is a part, and area 24 in rodent ACC28. While rodent-primate ACC correspondences are not precise they are stronger than for any granular prefrontal area29.

In both humans and macaques dACC is distinguished from adjacent medial frontal cortex such as the pre-supplementary motor area (pre-SMA). Although both pre-SMA and dACC share connections, for example with dorsolateral prefrontal cortex (dlPFC)30,31, dACC is more strongly connected with subcortical regions coding reward and value including amygdala31, much of striatum32, dopaminergic and serotonergic transmitter systems33, and adjacent ACC areas such as perigenual ACC (pgACC) which in turn is special in its ability to influence dopamine31,34,35 via connections to the striosome. Also, unlike pre-SMA, dACC may exert direct influences over motor output; dACC projects to primary motor cortex and spinal cord30. Therefore, while we might expect dACC and pre-SMA to sometimes be co-active, perhaps with dlPFC, the different connections suggest this will not always be true. Furthermore, if we are interested in how value signals are translated into behavioral change and persistence then we should focus on dACC.

Value signals in frontal cortex and dACC

When monkeys make decisions, dACC neuron activity reflects choice value in terms of potential rewards and effort costs36,42. Sometimes value signals arise later than in other areas such as orbitofrontal cortex but other times they are more prevalent and arise earlier in dACC36,42.

We are beginning to understand how value signals arise within dACC; they reflect the recency-weighted history of previously chosen rewards. DACC neurons have activity reflecting reward history with different time constants (fig.2a,b)4,6. Such reward history signals reflecting different time constants are also detectable in human dACC where they can be compared to predict future rewards and guide decisions to persist or change behavior5. Independent of difficulty effects, dACC can compute the value of persisting in the current environment, rather than switching away from it5. Furthermore, dACC lesions impair the use of reward history dependent value to determine the balance between behavioral persistence and change43.
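As a purely illustrative sketch of how reward history might be integrated over several time scales at once, the following Python snippet computes exponentially weighted reward traces with different time constants and contrasts a short with a long trace. The leaky-integrator form, the function name `reward_traces`, the time constants, and the reward sequence are all assumptions made for illustration; they are not taken from the cited studies.

```python
import numpy as np

def reward_traces(rewards, time_constants):
    """Exponentially weighted reward history, one trace per time constant (in trials)."""
    rewards = np.asarray(rewards, dtype=float)
    traces = np.zeros((len(time_constants), len(rewards)))
    for i, tau in enumerate(time_constants):
        alpha = 1.0 - np.exp(-1.0 / tau)  # per-trial update rate implied by tau
        value = 0.0
        for t, r in enumerate(rewards):
            value += alpha * (r - value)  # leaky integration of reward
            traces[i, t] = value
    return traces

# Hypothetical reward sequence: no reward for 20 trials, then 5 rewarded trials
rewards = [0] * 20 + [1] * 5
short, long_ = reward_traces(rewards, time_constants=[2.0, 20.0])

# Positive when recent experience has been better than the longer-term average
trend = short[-1] - long_[-1]
print(f"short trace {short[-1]:.3f}, long trace {long_[-1]:.3f}, trend {trend:.3f}")
```

In this toy example the short time-constant trace rises quickly after rewards begin while the long trace lags, so their difference is positive; a comparison of this kind is one simple way to express whether things have been improving recently relative to the more extended past.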

Several additional lines of evidence demonstrate value-related activity in dACC neurons is unrelated to response selection difficulty. For example, response selection becomes easier as monkeys progress through a sequence of actions towards a reward [accuracy increases and reaction times (RTs) decrease] but many dACC neurons increase their activity44. Moreover, despite repeated attempts it has been impossible to identify dACC single neuron activity encoding difficulty in monkeys10,11,45,46.

DACC neurons responsive to conflict or cognitive load have been claimed in humans47. However, arguably the key contrast of behavioral conditions supporting the claim might not just reflect conflict but the possibility of alternative courses of actions; increased neural activity is predicted by most theories if the contrast is between conditions varying in number of response associations, number of distracting alternative courses of action, and effort costs. However, after careful testing of a large sample of dACC neurons in monkeys in an experiment focusing directly on difficulty, not a single neuron actually coding response difficulty per se was found45. Instead each neuron with activity related to a particular response became active whenever there was even partial evidence for that response. Therefore, neurons encoded actions or action values and may also have signaled alternative task goals, but never difficulty. However, as a result of such coding many neurons became active in conflict situations and their aggregate activity gave the impression of a difficulty signal that could not be dissociated from that expected from true difficulty neurons by a technique such as fMRI.

Similarly Ebitz and Platt46 pointed out that while dACC neurons reflected a potentially valuable goal that might become an alternative focus of monkey behavior (a function for which we argue dACC is critical) “action conflict signals were absent”. As already noted, however, we are not really concerned with whether conflict/difficulty signals are present but instead our claim is that any such effects are insufficient to explain away evidence of other signals in dACC. What is clear is that, in monkey dACC, value signals exist without clear relation to difficulty.

Of course value signals are also found beyond dACC: in parietal cortex, the dopaminergic system, striatum, and amygdala. There has been a surprising tendency, however, to assert that, within frontal cortex, value signals exist only in ventromedial prefrontal cortex (vmPFC)48. However, at least three different “vmPFC” regions show specific value and decision-related activity and have distinct roles in behavior: areas 1349, 1450,51, and pgACC48,52,53 (fig.3a). It should therefore come as no surprise if value signals exist in other frontal areas such as dACC. It is, however, likely that any value signals in dACC will, as elsewhere, have distinctive features.

Human dACC and the value of behavioral change

Neurophysiological experiments suggest individual dACC neurons carry value signals but that aggregate population activity also reflects difficulty. Two recent reports explain how an fMRI experiment should be conducted when there may be multiple influences on a brain area’s activity54,55. Both focused on vmPFC rather than dACC. One factor was value and the other was decision confidence (approximately the inverse of difficulty20,55). Both factors influenced brain activity and temporal evolution of their effect was visualized with a General Linear Model (GLM) timecourse analysis55(fig.3b).

An analogous approach can be used to identify activity related to the value of exploring an environment, a behavior called foraging3. Participants decided whether to engage with a default option or whether they preferred to explore alternative options. The decision to explore depended on search value (average value of alternatives) and engage value (value of the default options).

The experimental design and schedule ensured search value and difficulty shared little variance (<2.5%). Therefore brain activity could be securely related to either difficulty or search value. Using whole brain GLM analysis, including control regressors of difficulty and log(RT), search value was linked with dACC (fig.3e). In addition there was a smaller and later negative effect of engage value on dACC (fig.3f). Therefore dACC has just the signals needed for determining value of behavioral exploration. Marginally significant effects of difficulty and log(RT) occurred in dACC towards the end of the decision period (fig.3g). An equivalent analysis in which dACC activity was binned by search value or difficulty suggested similar conclusions. Difficulty effects may be stronger in or anterior to pre-SMA (fig.3e)20; many other experiments link pre-SMA and adjacent SEF to difficult response selection56,57.
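The logic of this analysis can be sketched with simulated data. The snippet below is a minimal illustration, not the analysis pipeline used in the study: it computes the variance shared by two regressors as their squared correlation and then fits an ordinary least squares GLM containing search value together with difficulty and log(RT) as control regressors. All variable names, the random regressors, and the simulated signal are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
n_trials = 200

# Hypothetical trial-wise regressors (simulated, not data from the study)
search_value = rng.normal(size=n_trials)
difficulty = rng.normal(size=n_trials)
log_rt = rng.normal(size=n_trials)

# Variance shared by two regressors = squared Pearson correlation
shared = np.corrcoef(search_value, difficulty)[0, 1] ** 2

# Simulated signal driven by search value only, plus noise
signal = 0.8 * search_value + rng.normal(scale=0.5, size=n_trials)

# GLM: signal ~ intercept + search value + difficulty + log(RT)
X = np.column_stack([np.ones(n_trials), search_value, difficulty, log_rt])
betas, *_ = np.linalg.lstsq(X, signal, rcond=None)

print(f"shared variance: {100 * shared:.2f}%")
print("betas (intercept, search value, difficulty, logRT):", np.round(betas, 3))
```

When regressors share little variance, as in this simulation, the estimated effect of search value is largely unaffected by including the control regressors, which is why a low-correlation design allows activity to be attributed to one factor or the other.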

Interpreting multiple signals in dACC

It can be difficult to know what conclusions to draw when different experiments provide evidence for presence or absence of signals in dACC but again we can find inspiration in the manner in which controversies regarding vmPFC activity have been resolved. Various experiments had suggested that during decision making vmPFC activity reflects the sum of values of possible choices, the choices’ difference in value, or simply the value of the choice ultimately taken50,58,59. By using a biophysical neural network model of the decision process60 it was possible to reconcile these claims and demonstrate they may correspond to signals in a decision-making circuit generated at different time points during evolution of a decision61 (fig.3c,d). Pools of neurons code for the value of each potential choice and become active in proportion to its value. Recurrent excitation between neurons in each pool and inhibition between pools ensures the network moves to an attractor state in which one pool, representing one option, remains active and a “choice” is made. Model activity first reflects the sum of choice values and then difference in choice values. High temporal resolution recordings show the same is true of vmPFC61.

Variants of this model are likely applicable in many cortical areas concerned with selection. Drawing on work linking comparator processes to dACC59 and our own studies3,25,26 we propose a neural network model of dACC (fig.3h) predicting presence of both value signals and difficulty effects. In such a hypothetical network variance in activity is related to search value first and then, slightly later, to engage value. When these values are closer together the decision is difficult and the network takes longer to move into an attractor state. This means that later in time during decision making, variance in activity correlates with difficulty and RT even though there are no units explicitly signaling “difficulty”. Difficulty correlates arise because difficulty affects the temporal dynamics of the comparison process. Interestingly, this is the time-varying activity pattern observed in dACC (fig.3f,g). Note that, in the absence of high temporal resolution measurements, the properties and sign of predicted difficulty effects depend on assumptions made about the model and how it is reflected in the time-integrated fMRI signal. For example, if high firing attractor states last after reaction time, then one might see a negative effect of difficulty in fMRI, whereas if activity diminishes as soon as a threshold is crossed one might expect a positive correlate. Either way it is clear that merely measuring a correlate of difficulty does not mean an area’s primary function is to signal difficulty.
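The way in which a difficulty correlate can emerge without any unit coding difficulty can be illustrated with a deliberately simplified sketch. The code below is not the biophysical model referred to above; it is a noise-free, two-pool rate model with leak and mutual inhibition, in which a choice is registered once the difference between pools crosses a threshold. The function name `decide` and all parameter values are assumptions chosen for illustration.

```python
def decide(value_a, value_b, leak=1.0, inhibition=2.0, threshold=0.5,
           dt=0.001, max_time=20.0):
    """Simulate two mutually inhibiting pools; return (winner, decision_time)."""
    a = b = 0.0
    n_steps = int(max_time / dt)
    for step in range(1, n_steps + 1):
        # Each pool is driven by its own value input, decays through leak,
        # and is suppressed by the activity of the competing pool.
        da = (-leak * a - inhibition * b + value_a) * dt
        db = (-leak * b - inhibition * a + value_b) * dt
        a = max(a + da, 0.0)  # firing rates cannot be negative
        b = max(b + db, 0.0)
        if abs(a - b) >= threshold:
            return ("A" if a > b else "B"), step * dt
    return None, max_time  # no decision reached within max_time

winner_easy, time_easy = decide(1.0, 0.5)  # values far apart
winner_hard, time_hard = decide(1.0, 0.9)  # values close together

print(f"easy: winner {winner_easy}, time {time_easy:.2f}")
print(f"hard: winner {winner_hard}, time {time_hard:.2f}")
```

Both pools receive only value input, yet the network takes longer to separate when the two values are similar. Any measure that integrates activity over the decision period would therefore covary with difficulty, even though nothing in the model represents difficulty explicitly.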

Another prediction of such models is that if a decision is very easy, because options have very different values, then it may not be possible to detect value signals in the network’s aggregate activity with a low temporal resolution technique such as fMRI. In some experiments examining very easy decisions20, the brevity of the comparative process makes any detectable value effects in fMRI unlikely. Moreover, we know that faster and more efficient alternative selection mechanisms can be used if choices can be made with very simple heuristics61. In other words, no decision-related value signals are expected if there is no real decision to make.

Foraging, task switching, and updating of internal models

“Search value”, the average value of an environment, is signaled in dACC. In many natural situations animals don’t choose between simultaneously presented options but instead decide whether to engage with sequentially presented options as they are encountered62. Engaging incurs an opportunity cost because potential opportunities to pursue better options are lost. We have therefore pointed out that search value (indexing potential opportunity costs) signals in dACC could guide foraging.

Importantly, our theory is not that dACC activity is simply synonymous with foraging value. Although, like others63, we have drawn inspiration from consideration of foraging problems primates evolved to solve, it is important to appreciate that many neuroeconomic decisions people make in modern environments involve similar factors. A job-seeker considering one position and foregoing alternatives is making a decision about opportunity costs. Similar signals could guide task switching. The opportunity cost of alternatives makes maintaining engagement in a particular task difficult and so it should be possible to integrate search value-related ideas into models of cognitive control that focus on dACC-dlPFC interactions64–66.

By the same token, not every task with a link to naturalistic decision-making can be performed by dACC alone. PgACC activity is also related to participants’ general willingness to forage amongst alternative choices despite costs3 (fig.1c). In another recent experiment48 without a requirement for search value to guide behavior (and therefore perhaps not surprisingly no dACC activity) the need to persevere or to continue engaging with the current environment again led to pgACC activity. Homologous regions are also involved in cost/benefit decision making in monkeys and rodents35,52,67.

DACC is also implicated in attention switching when it is driven by the updating of internal models of behavior68. In an fMRI experiment, two types of unexpected events occurred. On model update trials subjects responded to a target in an unexpected location and its color indicated future targets would appear nearby. However, on surprise only trials, differently colored targets in an unexpected location indicated one-off events and no need to update internal models of where future targets would appear. We quantified and carefully dissociated model updating and difficulty of responding in this experiment9. Difficulty is equivalent to the surprise associated with a particular target location characterized by its Shannon information IS. Model updating is captured by the Kullback-Leibler divergence (DKL) between the posterior and the prior probability estimates of target location. DACC activity occurred on update trials, as a function of DKL, but not on surprise only trials even though both were associated with RT increases (fig.2c,d). Once again behavioral change-related activity is found in dACC but difficulty itself has little explanatory power. Detailed descriptions of rodent ACC neuron activity during discarding and updating of internal models have also been reported7,8.
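The distinction between surprise and model updating can be made concrete with a small numerical sketch. The snippet below computes the Shannon information of an observation under a prior and the Kullback-Leibler divergence between a posterior and a prior for a hypothetical belief over four target locations. The probability values are invented for illustration, and the direction of the divergence (posterior relative to prior) is an assumption about the convention intended.

```python
import numpy as np

def shannon_surprise(prior, observed_index):
    """I_S = -log2 p(observation) under the prior, in bits."""
    return float(-np.log2(prior[observed_index]))

def kl_divergence(posterior, prior):
    """D_KL(posterior || prior) in bits; terms with zero posterior mass contribute 0."""
    posterior = np.asarray(posterior, dtype=float)
    prior = np.asarray(prior, dtype=float)
    mask = posterior > 0
    return float(np.sum(posterior[mask] * np.log2(posterior[mask] / prior[mask])))

# Hypothetical belief over four target locations before the trial
prior = np.array([0.7, 0.1, 0.1, 0.1])

# The target appears at an unlikely location (index 3) in both trial types
surprise = shannon_surprise(prior, 3)

# Surprise-only trial: the event is treated as a one-off, belief is unchanged
posterior_surprise_only = prior.copy()
# Update trial: belief shifts toward the newly indicated location
posterior_update = np.array([0.1, 0.1, 0.1, 0.7])

dkl_surprise_only = kl_divergence(posterior_surprise_only, prior)
dkl_update = kl_divergence(posterior_update, prior)

print(f"I_S = {surprise:.3f} bits on both trial types")
print(f"D_KL surprise-only = {dkl_surprise_only:.3f}, update = {dkl_update:.3f}")
```

In this toy case the two trial types are equally surprising, but only the update trial has a non-zero divergence between posterior and prior, which is the sense in which the two quantities can be dissociated.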

Lesions and inactivation of dACC

There might be selection difficulty during decisions between sticking or switching to an alternative. However, investigations of dACC disruption in humans and macaques have revealed complicated impairments that cannot be related in a simple way to difficulty69–72. Unlike lesions to other frontal areas, dACC lesions have little impact on cognitive control; instead impairments are most prominent when decisions concern assessment of the relative value of behavioral persistence versus change43,73,74. Perhaps the most striking deficits seen after bilateral lesions, even when circumscribed to the cingulate sulcus’s ventral bank, however, are failures to act at all75; it is difficult to account for such dramatic effects with subtle arguments about detecting difficulty. Such profound failures are, however, expected if an average sense of the value of possibilities afforded by the environment is lost.

Summary and Future Directions

DACC is active both during decisions and when decision outcomes are assessed. Value, model update, and outcome-related activity in dACC have in common that they all regulate behavioral adaptation and persistence. Although behavioral adaptation may, in turn, entail difficult response selection, dACC activity reflects more than just need or value of control. Moreover, the actual process of behavioral adaptation may be implemented not just in dACC but through dACC’s interactions with dlPFC64–66.

Surprisingly, despite considerable debate about dACC, there have been few attempts to understand adjacent ACC regions. Understanding the precise anatomical arrangements of activity patterns is important because sometimes differing views of function can be reconciled if they are focused on different subdivisions of medial frontal cortex. As we have noted (fig.3) value and difficulty effects are prominent in adjacent but different areas. Understanding dACC in the context of interactions with pgACC, adjacent gyral ACC, and posterior cingulate cortex, not just in relation to dlPFC, will be important. Ecological foraging theory suggests additional ways of thinking about the decisions we have evolved to take and this, together with neuroeconomic analysis methods, can be exploited to design new decision-making paradigms that may clarify ACC activity. Interpreting the activity that we find, however, will only be possible in the context of computational descriptions (fig.3). Importantly, such models not only make qualitative predictions about presence or absence of neural activity but suggest specific patterns of temporal dynamics strongly suggestive of particular choice mechanisms (fig.3). Finally, detailed neurophysiological and lesion/inactivation studies will be needed to aid interpretation of human neuroimaging studies of dACC.

References

- 1.Neubert FX, Mars RB, Sallet J, Rushworth MF. Connectivity reveals relationship of brain areas for reward-guided learning and decision making in human and monkey frontal cortex. Proceedings of the National Academy of Sciences of the United States of America. 2015 doi: 10.1073/pnas.1410767112. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Behrens TE, Fox P, Laird A, Smith SM. What is the most interesting part of the brain? Trends Cogn Sci. 2013;17:2–4. doi: 10.1016/j.tics.2012.10.010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Kolling N, Behrens TE, Mars RB, Rushworth MF. Neural mechanisms of foraging. Science. 2012;336:95–98. doi: 10.1126/science.1216930. 336/6077/95 [pii] [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Seo H, Lee D. Temporal filtering of reward signals in the dorsal anterior cingulate cortex during a mixed-strategy game. J Neurosci. 2007;27:8366–8377. doi: 10.1523/JNEUROSCI.2369-07.2007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Wittmann M, et al. Predicting how your luck will change: decision making driven by multiple time-linked reward representations in anterior cingulate cortex. Nature Communications. 2016 doi: 10.1038/ncomms12327. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Bernacchia A, Seo H, Lee D, Wang XJ. A reservoir of time constants for memory traces in cortical neurons. Nature neuroscience. 2011;14:366–372. doi: 10.1038/nn.2752. nn.2752 [pii] [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Tervo DG, et al. Behavioral variability through stochastic choice and its gating by anterior cingulate cortex. Cell. 2014;159:21–32. doi: 10.1016/j.cell.2014.08.037. [DOI] [PubMed] [Google Scholar]

- 8.Karlsson MP, Tervo DG, Karpova AY. Network resets in medial prefrontal cortex mark the onset of behavioral uncertainty. Science. 2012;338:135–139. doi: 10.1126/science.1226518. [DOI] [PubMed] [Google Scholar]

- 9.O’Reilly JX, et al. Dissociable effects of surprise and model update in parietal and anterior cingulate cortex. Proceedings of the National Academy of Sciences of the United States of America. 2013;110:E3660–3669. doi: 10.1073/pnas.1305373110. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Ito S, Stuphorn V, Brown JW, Schall JD. Performance monitoring by the anterior cingulate cortex during saccade countermanding. Science. 2003;302:120–122. doi: 10.1126/science.1087847.

- 11.Emeric EE, et al. Performance monitoring local field potentials in the medial frontal cortex of primates: anterior cingulate cortex. Journal of neurophysiology. 2008;99:759–772. doi: 10.1152/jn.00896.2006.

- 12.Behrens TE, Woolrich MW, Walton ME, Rushworth MF. Learning the value of information in an uncertain world. Nature neuroscience. 2007;10:1214–1221. doi: 10.1038/nn1954.

- 13.Quilodran R, Rothe M, Procyk E. Behavioral shifts and action valuation in the anterior cingulate cortex. Neuron. 2008;57:314–325. doi: 10.1016/j.neuron.2007.11.031.

- 14.Narayanan NS, Cavanagh JF, Frank MJ, Laubach M. Common medial frontal mechanisms of adaptive control in humans and rodents. Nature neuroscience. 2013;16:1888–1895. doi: 10.1038/nn.3549.

- 15.Holroyd CB, Coles MG. The neural basis of human error processing: reinforcement learning, dopamine, and the error-related negativity. Psychol Rev. 2002;109:679–709. doi: 10.1037/0033-295X.109.4.679.

- 16.Warren CM, Hyman JM, Seamans JK, Holroyd CB. Feedback-related negativity observed in rodent anterior cingulate cortex. J Physiol Paris. 2015;109:87–94. doi: 10.1016/j.jphysparis.2014.08.008.

- 17.Phillips JM, Everling S. Event-related potentials associated with performance monitoring in non-human primates. Neuroimage. 2014;97:308–320. doi: 10.1016/j.neuroimage.2014.04.028.

- 18.Scholl J, et al. The Good, the Bad, and the Irrelevant: Neural Mechanisms of Learning Real and Hypothetical Rewards and Effort. J Neurosci. 2015;35:11233–11251. doi: 10.1523/JNEUROSCI.0396-15.2015.

- 19.Shenhav A, Botvinick MM, Cohen JD. The expected value of control: an integrative theory of anterior cingulate cortex function. Neuron. 2013;79:217–240. doi: 10.1016/j.neuron.2013.07.007.

- 20.Shenhav A, Straccia MA, Cohen JD, Botvinick MM. Anterior cingulate engagement in a foraging context reflects choice difficulty, not foraging value. Nature neuroscience. 2014;17:1249–1254. doi: 10.1038/nn.3771.

- 21.Cole MW, Yeung N, Freiwald WA, Botvinick M. Cingulate cortex: diverging data from humans and monkeys. Trends Neurosci. 2009;32:566–574. doi: 10.1016/j.tins.2009.07.001.

- 22.Amiez C, Petrides M. Neuroimaging Evidence of the Anatomo-Functional Organization of the Human Cingulate Motor Areas. Cereb Cortex. 2012 doi: 10.1093/cercor/bhs329.

- 23.Procyk E, et al. Midcingulate Motor Map and Feedback Detection: Converging Data from Humans and Monkeys. Cereb Cortex. 2014 doi: 10.1093/cercor/bhu213.

- 24.O’Reilly JX, et al. Causal effect of disconnection lesions on interhemispheric functional connectivity in rhesus monkeys. Proceedings of the National Academy of Sciences of the United States of America. 2013;110:13982–13987. doi: 10.1073/pnas.1305062110.

- 25.Kolling N, Wittmann M, Rushworth MF. Multiple neural mechanisms of decision making and their competition under changing risk pressure. Neuron. 2014;81:1190–1202. doi: 10.1016/j.neuron.2014.01.033.

- 26.Boorman ED, Rushworth MF, Behrens T. Ventromedial prefrontal and anterior cingulate cortex adopt choice and default reference frames during sequential multialternative choice. J Neurosci. 2013;33:2242–2253. doi: 10.1523/JNEUROSCI.3022-12.2013.

- 27.Neubert FX, Mars RB, Thomas AG, Sallet J, Rushworth MF. Comparison of Human Ventral Frontal Cortex Areas for Cognitive Control and Language with Areas in Monkey Frontal Cortex. Neuron. 2014 doi: 10.1016/j.neuron.2013.11.012.

- 28.Vogt B. Cingulate Neurobiology and Disease. In: Vogt BA, editor. Oxford University Press; 2009.

- 29.Passingham RE, Wise SP. The Neurobiology of the Prefrontal Cortex: Anatomy, Evolution, and the Origin of Insight. Oxford University Press; 2012.

- 30.Strick PL, Dum RP, Picard N. Motor areas on the medial wall of the hemisphere. Novartis Foundation Symposium; 1998. pp. 64–75.

- 31.Van Hoesen GW, Morecraft RJ, Vogt BA. Neurobiology of Cingulate Cortex and Limbic Thalamus. In: Vogt BA, Gabriel M, editors. Birkhauser; 1993.

- 32.Haber SN, Kim KS, Mailly P, Calzavara R. Reward-related cortical inputs define a large striatal region in primates that interface with associative cortical connections, providing a substrate for incentive-based learning. J Neurosci. 2006;26:8368–8376. doi: 10.1523/JNEUROSCI.0271-06.2006.

- 33.Porrino LJ, Goldman-Rakic PS. Brainstem innervation of prefrontal and anterior cingulate cortex in the rhesus monkey revealed by retrograde transport of HRP. J Comp Neurol. 1982;205:63–76. doi: 10.1002/cne.902050107.

- 34.Eblen F, Graybiel AM. Highly restricted origin of prefrontal cortical inputs to striosomes in the macaque monkey. J Neurosci. 1995;15:5999–6013. doi: 10.1523/JNEUROSCI.15-09-05999.1995.

- 35.Friedman A, et al. A Corticostriatal Path Targeting Striosomes Controls Decision-Making under Conflict. Cell. 2015;161:1320–1333. doi: 10.1016/j.cell.2015.04.049.

- 36.Kennerley SW, Dahmubed AF, Lara AH, Wallis JD. Neurons in the frontal lobe encode the value of multiple decision variables. J Cogn Neurosci. 2009;21:1162–1178. doi: 10.1162/jocn.2009.21100.

- 37.Kennerley SW, Behrens TE, Wallis JD. Double dissociation of value computations in orbitofrontal and anterior cingulate neurons. Nature neuroscience. 2011;14:1581–1589. doi: 10.1038/nn.2961.

- 38.Rudebeck PH, Mitz AR, Chacko RV, Murray EA. Effects of amygdala lesions on reward-value coding in orbital and medial prefrontal cortex. Neuron. 2013;80:1519–1531. doi: 10.1016/j.neuron.2013.09.036.

- 39.Hayden BY, Pearson JM, Platt ML. Neuronal basis of sequential foraging decisions in a patchy environment. Nature neuroscience. 2011;14:933–939. doi: 10.1038/nn.2856.

- 40.Blanchard TC, Hayden BY. Neurons in dorsal anterior cingulate cortex signal postdecisional variables in a foraging task. J Neurosci. 2014;34:646–655. doi: 10.1523/JNEUROSCI.3151-13.2014.

- 41.Cai X, Padoa-Schioppa C. Neuronal encoding of subjective value in dorsal and ventral anterior cingulate cortex. J Neurosci. 2012;32:3791–3808. doi: 10.1523/JNEUROSCI.3864-11.2012.

- 42.Luk CH, Wallis JD. Choice Coding in Frontal Cortex during Stimulus-Guided or Action-Guided Decision-Making. J Neurosci. 2013;33:1864–1871. doi: 10.1523/JNEUROSCI.4920-12.2013.

- 43.Kennerley SW, Walton ME, Behrens TE, Buckley MJ, Rushworth MF. Optimal decision making and the anterior cingulate cortex. Nature neuroscience. 2006;9:940–947. doi: 10.1038/nn1724.

- 44.Shidara M, Richmond BJ. Anterior cingulate: single neuronal signals related to degree of reward expectancy. Science. 2002;296:1709–1711. doi: 10.1126/science.1069504.

- 45.Nakamura K, Roesch MR, Olson CR. Neuronal activity in macaque SEF and ACC during performance of tasks involving conflict. Journal of neurophysiology. 2005;93:884–908. doi: 10.1152/jn.00305.2004.

- 46.Ebitz RB, Platt ML. Neuronal activity in primate dorsal anterior cingulate cortex signals task conflict and predicts adjustments in pupil-linked arousal. Neuron. 2015;85:628–640. doi: 10.1016/j.neuron.2014.12.053.

- 47.Sheth SA, et al. Human dorsal anterior cingulate cortex neurons mediate ongoing behavioural adaptation. Nature. 2012;488:218–221. doi: 10.1038/nature11239.

- 48.McGuire JT, Kable JW. Medial prefrontal cortical activity reflects dynamic re-evaluation during voluntary persistence. Nature neuroscience. 2015;18:760–766. doi: 10.1038/nn.3994.

- 49.Cai X, Padoa-Schioppa C. Contributions of orbitofrontal and lateral prefrontal cortices to economic choice and the good-to-action transformation. Neuron. 2014;81:1140–1151. doi: 10.1016/j.neuron.2014.01.008.

- 50.Boorman ED, Behrens TE, Woolrich MW, Rushworth MF. How green is the grass on the other side? Frontopolar cortex and the evidence in favor of alternative courses of action. Neuron. 2009;62:733–743. doi: 10.1016/j.neuron.2009.05.014.

- 51.Strait CE, Blanchard TC, Hayden BY. Reward value comparison via mutual inhibition in ventromedial prefrontal cortex. Neuron. 2014;82:1357–1366. doi: 10.1016/j.neuron.2014.04.032.

- 52.Amemori K, Graybiel AM. Localized microstimulation of primate pregenual cingulate cortex induces negative decision-making. Nature neuroscience. 2012;15:776–785. doi: 10.1038/nn.3088.

- 53.Wittmann M, et al. Self-other-mergence in frontal cortex during cooperation and competition. Neuron. 2016 doi: 10.1016/j.neuron.2016.06.022.

- 54.De Martino B, Fleming SM, Garrett N, Dolan RJ. Confidence in value-based choice. Nature neuroscience. 2013;16:105–110. doi: 10.1038/nn.3279.

- 55.Lebreton M, Abitbol R, Daunizeau J, Pessiglione M. Automatic integration of confidence in the brain valuation signal. Nature neuroscience. 2015;18:1159–1167. doi: 10.1038/nn.4064.

- 56.Isoda M, Hikosaka O. Switching from automatic to controlled action by monkey medial frontal cortex. Nature neuroscience. 2007;10:240–248. doi: 10.1038/nn1830.

- 57.Mars RB, et al. Short-latency influence of medial frontal cortex on primary motor cortex during action selection under conflict. J Neurosci. 2009;29:6926–6931. doi: 10.1523/JNEUROSCI.1396-09.2009.

- 58.Daw ND, O’Doherty JP, Dayan P, Seymour B, Dolan RJ. Cortical substrates for exploratory decisions in humans. Nature. 2006;441:876–879. doi: 10.1038/nature04766.

- 59.Hare TA, Schultz W, Camerer CF, O’Doherty JP, Rangel A. Transformation of stimulus value signals into motor commands during simple choice. Proceedings of the National Academy of Sciences of the United States of America. 2011;108:18120–18125. doi: 10.1073/pnas.1109322108.

- 60.Wang XJ. Decision making in recurrent neuronal circuits. Neuron. 2008;60:215–234. doi: 10.1016/j.neuron.2008.09.034.

- 61.Hunt LT, et al. Mechanisms underlying cortical activity during value-guided choice. Nature neuroscience. 2012 doi: 10.1038/nn.3017.

- 62.Freidin E, Kacelnik A. Rational choice, context dependence, and the value of information in European Starlings (Sturnus vulgaris). Science. 2011;334:1000–1002. doi: 10.1126/science.1209626.

- 63.Pearson JM, Watson KK, Platt ML. Decision making: the neuroethological turn. Neuron. 2014;82:950–965. doi: 10.1016/j.neuron.2014.04.037.

- 64.Rothé M, Quilodran R, Sallet J, Procyk E. Coordination of high gamma activity in anterior cingulate and lateral prefrontal cortical areas during adaptation. J Neurosci. 2011;31:11110–11117. doi: 10.1523/JNEUROSCI.1016-11.2011.

- 65.Logiaco L, Quilodran R, Procyk E, Arleo A. Spatiotemporal Spike Coding of Behavioral Adaptation in the Dorsal Anterior Cingulate Cortex. PLoS biology. 2015;13:e1002222. doi: 10.1371/journal.pbio.1002222.

- 66.Voloh B, Valiante TA, Everling S, Womelsdorf T. Theta-gamma coordination between anterior cingulate and prefrontal cortex indexes correct attention shifts. Proceedings of the National Academy of Sciences of the United States of America. 2015;112:8457–8462. doi: 10.1073/pnas.1500438112.

- 67.Rudebeck PH, Walton ME, Smyth AN, Bannerman DM, Rushworth MF. Separate neural pathways process different decision costs. Nature neuroscience. 2006;9:1161–1168. doi: 10.1038/nn1756.

- 68.Posner MI, Petersen SE. The Attention System of the Human Brain. Annu Rev Neurosci. 1990;13:25–42. doi: 10.1146/annurev.ne.13.030190.000325.

- 69.Fellows LK, Farah MJ. Is anterior cingulate cortex necessary for cognitive control? Brain. 2005;128:788–796. doi: 10.1093/brain/awh405.

- 70.Rushworth MFS, Hadland KA, Gaffan D, Passingham RE. The effect of cingulate cortex lesions on task switching and working memory. J Cogn Neurosci. 2003;15:338–353. doi: 10.1162/089892903321593072.

- 71.Shima K, Tanji J. Role for cingulate motor area cells in voluntary movement selection based on reward. Science. 1998;282:1335–1338. doi: 10.1126/science.282.5392.1335.

- 72.Mansouri FA, Buckley MJ, Tanaka K. Mnemonic function of the dorsolateral prefrontal cortex in conflict-induced behavioral adjustment. Science. 2007;318:987–990. doi: 10.1126/science.1146384.

- 73.Camille N, Tsuchida A, Fellows LK. Double dissociation of stimulus-value and action-value learning in humans with orbitofrontal or anterior cingulate cortex damage. J Neurosci. 2011;31:15048–15052. doi: 10.1523/JNEUROSCI.3164-11.2011.

- 74.Buckley MJ, et al. Dissociable components of rule-guided behavior depend on distinct medial and prefrontal regions. Science. 2009;325:52–58. doi: 10.1126/science.1172377.

- 75.Thaler D, Chen Y, Nixon PD, Stern C, Passingham RE. The functions of the medial premotor cortex (sma) I. Simple learned movements. Exp Brain Res. 1995;102:445–460. doi: 10.1007/BF00230649.