Abstract

Virtually every system today confronts the cybersecurity threat, and the system architect must have the ability to integrate security features and functions as integral elements of a system. In this chapter, we survey this large, complex, and rapidly evolving subject with the goal of giving the reader a level of understanding that will enable incorporation of cybersecurity within an MBSE process and effective interaction with security experts. We begin by introducing the subject and describing the primary aspects of the current cybersecurity environment. We define fundamental terminology and concepts used in the cybersecurity community, and we describe the basic steps to include cybersecurity risk in an overall risk management process, which is a central SE responsibility. We then list some of the primary sources of information, guidance, and standards upon which a systems engineer can and should draw. Next, we summarize the major aspects of incorporating security controls in a system architecture and design to achieve an acceptable level of security risk for a system. We extend this to the increasingly important world of service-oriented, network-based, and distributed systems. We conclude with a brief presentation of the application of MBSAP to the specific issues of cybersecurity and summarize the characteristics of a Secure Software Development Life Cycle aimed at creating software with minimum flaws and vulnerabilities. We illustrate the application of cybersecurity principles and practices using the Smart Microgrid example. Chapter Objective: the reader will be able to apply the MBSAP methodology to systems and enterprises that require protection of sensitive data and processes against the growing cybersecurity threat and to work effectively with cybersecurity specialists to achieve effective secure system solutions.

The Cybersecurity Challenge

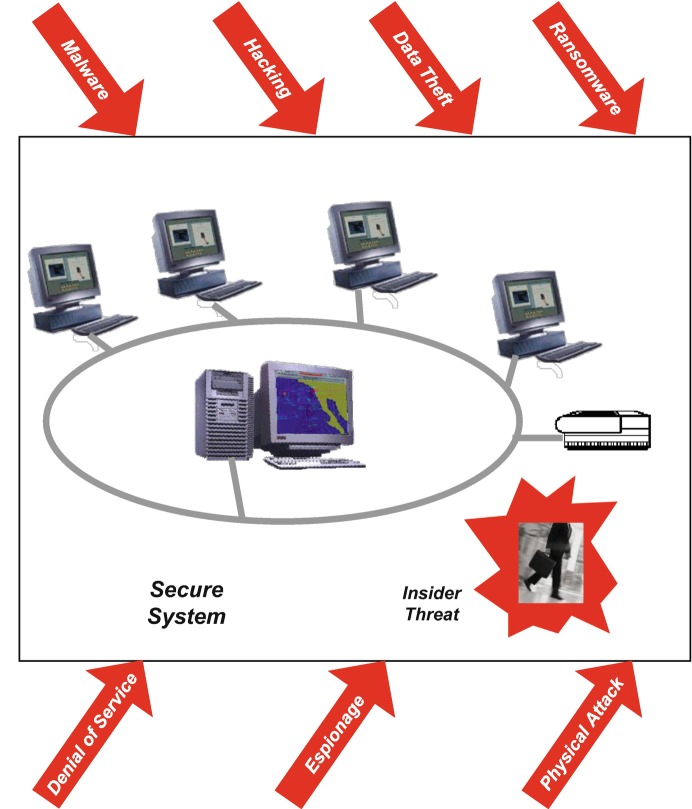

This chapter provides a brief, high-level summary of a very large and complicated topic: protecting information-intensive systems against attack, compromise, corruption, theft, unauthorized use, and other malicious acts. The overall term for this is cybersecurity, which was referred to in the past as Information Assurance.1 Scarcely a day passes without a major news report on computer crime or another incident, from things as basic as defacing Web sites to identity theft, crippling virus attacks, diverted bank accounts, ransomware, and compromise of sensitive operational data and intellectual property. Systems with large numbers of users, geographically distributed locations, and networked access are especially vulnerable. Security professionals, many of whom have specialized training and hold credentials like Certified Information Systems Security Professional (CISSP), fight a never-ending battle to defeat criminals, hackers, terrorists, foreign intelligence services, and deranged people who derive perverse satisfaction from releasing viruses, Trojans, worms, and other “malware.” Even a system with robust safeguards against external attack may be vulnerable to the “insider threat” from personnel with authorized access who have become disaffected, have taken money from a criminal or hostile agency, or are simply poorly trained and careless. Any organization or enterprise that relies on information processing is a potential target, and the reality is that most systems of any size or significance have already been penetrated. Figure 10.1 suggests the range of threats confronting secure systems.

Fig. 10.1. Multiple threats to secure systems

Appendix G tabulates some common attack methods and possible mitigations. A few examples from recent reports on cyberattacks and data breaches will serve to highlight the challenge [1].

Common estimates are that the number of new threats is doubling every year, and the global cost of data breaches is measured in trillions of dollars per year.

Many, perhaps most, common attacks could be defeated with available and affordable safeguards that victims simply fail to deploy; as an example, the average time from publication of a software patch or other mitigation to close a newly discovered vulnerability to the actual installation of the protective measure by both private and public sector organizations is months or even years, when it should be hours or days.

The top ten system vulnerabilities account for approximately 85% of data breaches; some have been around for as long as several years but continue to be exploited.

Roughly 80% of successful attacks originate with external threat agents, but the majority also involve either deliberate or accidental actions by “insiders,” i.e., members or employees of the victimized organization; a common example is an attack that starts with “phishing” to trick an insider into revealing information or downloading malware, giving the attacker access to the system.

A cybersecurity provider recently reported that ten out of ten mobile devices and applications used for payments at stores, theaters, hotels, etc. had major security weaknesses such as failing to encrypt Social Security Numbers.

Relatively new and more sophisticated threats are proliferating; one such threat is a “ransomware” attack in which a criminal attacker encrypts a victim’s databases and demands payment to restore them.

Online security across the board is so bad that most successful hackers don’t actually need to employ hacking methods; they simply enter the victim’s network through unprotected public interfaces.

Just as a perfectly safe airplane is one with so much strength and redundancy that it’s too heavy to fly, a perfectly secure information system would simply deny access to anyone. Cybersecurity is therefore a balancing act involving an adequate level of protection against known or postulated threats while still allowing systems and their users to carry out their legitimate functions and accomplish their business objectives or operational missions. For example, a classic dilemma facing a security architect today is the unanticipated user, an external party who is not known to a system a priori, and may very well not even be physically located at the system, but who nevertheless has permissions granted by a higher authority to access functions and data. The security design must then ensure that such legitimate users are granted access, while imposters are blocked with acceptably low error rates.

Since this is a book about system architecture and architecture-centric systems engineering, the emphasis of this chapter is on ways to implement adequate safeguards against cyberattacks within the context of an overall balanced solution to customer needs. The discussion is primarily about architectural approaches, especially layered defense design patterns for security, as well as selection of security controls (safeguards or countermeasures) and the products that implement them. We necessarily pay less attention to procedural aspects of security such as enforcing strong passwords and providing security awareness training, although these are absolutely essential elements of an effective cybersecurity posture.

Given that absolute security is impossible, the approach taken today by security policy-makers and architects is based on risk management. A typical security architecture begins with the most thorough analysis possible of the sources and methods of system attack. The security architect then follows an orderly process of selecting and applying protective measures while ensuring that a system can still accomplish its purpose with acceptable impacts on performance, cost, reliability, and long-term evolution. Security design often uses products with defined assurance levels and employs design patterns, security protocols, and standards to achieve an acceptable level of security risk. This chapter describes a typical security architecture process and introduces some terminology and design approaches. The objective is to enable a general system architect to appreciate the dimensions of the security challenge, to interact more effectively with security specialists, and to ensure compliance with security policies. There are frequent references to Department of Defense (DoD) security policies and practices both because of the importance of protecting classified information and because much of the security technology and many standards and implementation methods, which are used throughout information technology (IT) systems, derive from the experience and investments of the Defense community.

Basic Concepts

Elements of an Attack

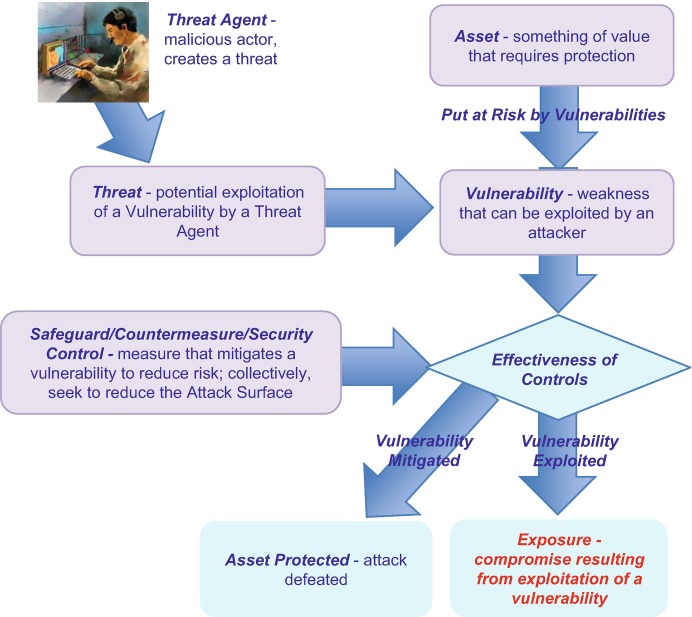

Increasingly sophisticated attacks on information systems are often characterized as cybercrime, cyber-espionage, and even cyberwarfare. Figure 10.2 is a graphical portrayal of the main elements of a cyberattack and introduces some standard terms. An Asset is any information, process, or other system content that requires protection. Assets are potentially at risk if the system has Vulnerabilities that an attacker can exploit. Collectively, these vulnerabilities constitute the system’s Attack Surface, and a primary goal of secure architecture is to minimize this. An asset such as a database typically has Attributes that are the specific items sought by an attacker. A Threat is created by a Threat Agent who seeks to exploit a vulnerability to steal, corrupt, delete, or otherwise harm an asset. If the system has safeguards, properly called Security Controls, that adequately mitigate the vulnerability, the asset is protected; otherwise, there can be an Exposure or data breach. The structure in Fig. 10.2 is the basis for the Vocabulary for Event Reporting and Incident Sharing (VERIS) [2], which asks “What Threat Actor took what Action on what Asset to compromise what Attribute,” referred to as the 4 As, and is widely used in reporting and compiling information on cybersecurity events.

Fig. 10.2. Elements of a cyberattack
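The “4 As” question can be pictured as a simple record type. The following is a minimal sketch only; the class name, field values, and `summarize` helper are illustrative assumptions and do not reproduce the actual VERIS schema.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Incident:
    """One security event described by the 4 As (simplified, hypothetical)."""
    actor: str      # who acted, e.g. "external" or "insider"
    action: str     # what was done, e.g. "phishing"
    asset: str      # what was targeted, e.g. "customer database"
    attribute: str  # what was compromised, e.g. "confidentiality"


def summarize(incidents, field):
    """Count incidents by one of the four fields."""
    return Counter(getattr(incident, field) for incident in incidents)


# Invented sample data, for illustration only.
incidents = [
    Incident("external", "phishing", "mail server", "confidentiality"),
    Incident("insider", "misuse", "customer database", "confidentiality"),
    Incident("external", "ransomware", "file server", "availability"),
]
by_actor = summarize(incidents, "actor")
by_attribute = summarize(incidents, "attribute")
```

Recording every event in one consistent shape is what makes it possible to compile and compare incident data across organizations.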

Published work on information security, of which a paper by Whitmore [3] is a typical example, generally highlights the following as the principal factors in the success or failure of a system that is trusted to handle sensitive information:

Clarity and completeness of requirements, including validation by stakeholders, especially the ultimate system users

Trustworthiness of the components and policies used in system implementation

Satisfaction of requirements by the system design and implementation

Correct operation and maintenance of the system to preserve security characteristics

Understanding of the environment in which the system operates, including the threats that security mechanisms must counter

The remainder of this chapter explores these aspects of system security and discusses some of the current approaches in use by security architects and engineers.

Although classified information is most often associated with military systems, any organization that has sensitive data needing protection should apply a consistent scheme for sorting that information into levels of importance or sensitivity and clearly labeling it so that appropriate safeguards can be applied. The US DoD defines classification levels, such as For Official Use Only (FOUO, also called Controlled Unclassified Information), Confidential, Secret, and Top Secret, primarily on the basis of the damage to national security that would result from information compromise. Intelligence agencies typically require more stringent control and protection for their information, which is often achieved through “compartments” that have very strict access requirements and higher levels of physical, administrative, and technical security measures. Civil agencies and commercial enterprises typically define sensitivity levels, e.g., Restricted, Confidential, Private, etc., for information such as intellectual property, personal and financial data, strategic planning, and compliance with laws governing information protection. A good data classification system concentrates the most stringent security controls on the most sensitive information, especially when it is impractical to give everything the highest level of protection.
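The idea of concentrating the strongest controls on the most sensitive information can be sketched as an ordered set of levels with cumulative control requirements. The level ordering and the control names below are assumptions chosen for illustration; a real scheme would come from the organization's own policy.

```python
from enum import IntEnum


class Sensitivity(IntEnum):
    """Hypothetical sensitivity levels, ordered from least to most sensitive."""
    PUBLIC = 0
    RESTRICTED = 1
    CONFIDENTIAL = 2
    PRIVATE = 3


# Controls introduced at each level (illustrative names, not a standard).
CONTROLS_ADDED_AT = {
    Sensitivity.PUBLIC: set(),
    Sensitivity.RESTRICTED: {"access control"},
    Sensitivity.CONFIDENTIAL: {"encryption at rest"},
    Sensitivity.PRIVATE: {"audit logging", "multi-factor authentication"},
}


def required_controls(level):
    """Each level inherits every control required at the levels below it."""
    required = set()
    for lower in Sensitivity:
        if lower <= level:
            required |= CONTROLS_ADDED_AT[lower]
    return required
```

For example, `required_controls(Sensitivity.CONFIDENTIAL)` yields access control plus encryption at rest, while public data requires nothing beyond the default.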

Categories of Threat Agents

The threat agents in Fig. 10.2 come in a wide variety of forms. The following is a representative tabulation.

Insiders – people within the targeted organization who may be either malicious (deliberately seeking to do damage, commit theft, etc.) or inadvertent (careless, poorly trained, etc.); these are the most dangerous because they are already inside system defenses and have access to targeted assets.

Hackers, Thrill Seekers, and Individual Criminals – individuals or small groups whose motivation may range from ideology to financial gain to an adrenaline rush from cracking a system.

Criminal Organizations – organizations looking to compromise systems for purposes of theft, blackmail, data ransom, or other criminal objectives; stolen data is commonly traded on the Dark or Black Web, a group of clandestine peer-to-peer networks (“darknets”) using the public Internet but with measures to control access and prevent users from being identified or traced.

Terrorists – a variety of criminal organization that, in addition to cybercrime, may seek to compromise target systems as part of a political, ideological, or simply psychopathological campaign.

Advanced Persistent Threat (APT) – the most sophisticated category of attackers, often state-sponsored for purposes of military or commercial espionage; APTs commonly have extensive financial and technical resources to execute elaborate campaigns extending over long periods, employing mixtures of tactics, and seeking to thoroughly infiltrate, and even take control of, target systems.

Fundamental Security Concepts

System, network, and enterprise security is customarily discussed in terms of a set of fundamental concepts. These include:

Confidentiality – the ability to preserve secrecy by preventing unauthorized access to sensitive data, whether stored (“at rest”), being communicated (“in transit”), being processed (“in use”), or being created (e.g., by being intercepted by an attacker’s tool such as a keyboard sniffer)

Integrity – the ability to preserve content by preventing an unauthorized party from modifying, corrupting, inserting, deleting, or duplicating data

Availability – the ability to ensure timely access to protected data and functions by authorized recipients

Authentication/Identity – the ability to prove with adequate certainty that a party is who the party claims to be and has an identity that is known to the system or enterprise for purposes such as Access Control

Authorization/Access Control – the ability to restrict access to sensitive data to authenticated recipients who possess the necessary access permissions and need-to-know

Non-Repudiation – the ability to prove that a given party took part in an information transaction despite that party’s attempts to deny involvement

Audit – the ability to detect, inspect, record, analyze, and report events associated with security mechanisms, which may be important in determining that a compromise or other unauthorized action has occurred, verifying that security procedures have been followed, and detecting events or trends that may reveal hostile activity

Confidentiality, Integrity, and Availability are the primary cybersecurity concerns and are, with a certain amount of irony, referred to as CIA. An additional term and concept that is increasingly stressed is Resilience, which is the overarching characteristic of a secure system that implements all of the listed concepts, is able to defeat both existing and emerging threats, can maintain essential functions during and after an attack, and can maintain an acceptable level of risk as the threat environment evolves.
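As one concrete illustration of the Integrity concept, a keyed hash (HMAC) lets a recipient detect any modification of a message. This is a minimal sketch using only the Python standard library; the function names `sign` and `verify` and the sample message are assumptions made for the example.

```python
import hashlib
import hmac
import secrets


def sign(key: bytes, message: bytes) -> bytes:
    """Compute an HMAC-SHA256 tag over the message."""
    return hmac.new(key, message, hashlib.sha256).digest()


def verify(key: bytes, message: bytes, tag: bytes) -> bool:
    """Recompute the tag and compare in constant time."""
    return hmac.compare_digest(sign(key, message), tag)


key = secrets.token_bytes(32)          # secret shared by sender and receiver
message = b"transfer 100 to account 42"
tag = sign(key, message)

unchanged_ok = verify(key, message, tag)                         # intact message
tampered_ok = verify(key, b"transfer 900 to account 42", tag)    # altered message
```

Because both parties hold the same key, this technique supports integrity and origin authentication between them, but it provides neither confidentiality nor non-repudiation; those require encryption and digital signatures, respectively.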

Elements of Resilient Cybersecurity

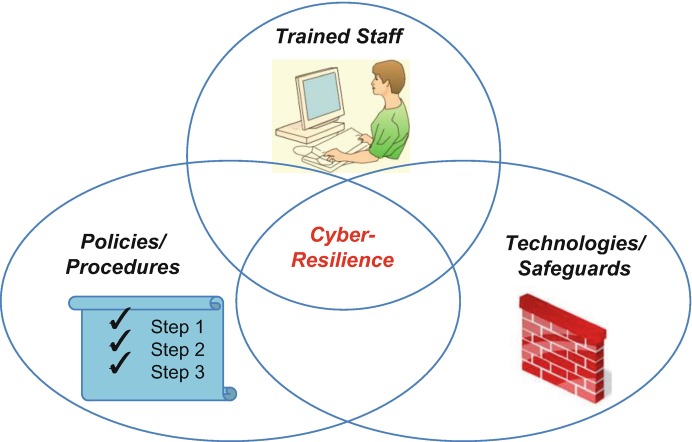

To achieve a cyber-resilient system architecture and implementation while maintaining acceptable system performance, cost, and reliability, the security architect employs proven designs, mechanisms, products, and procedures. All too often, organizations and managers assume that cybersecurity begins and ends with technical safeguards such as firewalls. In reality, an effective security solution requires all three of the elements sketched in Fig. 10.3. They include:

Personnel who are trained, motivated, and empowered to perform their duties in a secure and reliable fashion, including all levels of management, system users, and system support staff

Processes and procedures that implement a security policy and maintain the effectiveness of safeguards

Technologies that implement security controls and that evolve to keep pace with an ever-changing threat environment

Fig. 10.3. Essential elements of an effective cybersecurity solution

A second threefold taxonomy is useful in describing the security controls or safeguards employed to protect a system. Securing a system that has human involvement normally requires a mix of technical, physical, and procedural security measures.

- Technical measures – these can be quite diverse and may include:

  - Firewalls and other protective devices at the system boundary to protect against unauthorized information flows in or out of the system

  - Intrusion detection devices to detect, log, and analyze unauthorized attempts to access a system, often resulting in alarms so that timely defensive measures can be taken

  - Public Key Infrastructure (PKI)

  - Auditing of activity logs, system configuration changes, and other events to detect and respond to suspicious or prohibited actions

  - Security testing, including simulated hostile attacks, to verify the robustness of information protection and identify deficiencies that must be corrected

  - Policy servers that automate the application of rules governing the operation of security mechanisms

  - Access control mechanisms to enforce user privileges and restrict unauthorized use of resources and data

  - Lockdown (“hardening”) of servers and other computers by disabling any operating system functions, ports, utilities, or other capabilities that are not absolutely necessary and that may create security vulnerabilities

- Physical measures – these place sensitive information or other protected resources behind barriers, which range from property fences, controlled access points, badges, door locks, and guards to alarms and locked equipment enclosures; they also include supporting provisions such as backup power and air conditioning.

- Procedural measures – these deal with secure operations and practices aimed at maintaining the effectiveness of security controls and eliminating vulnerabilities caused by human error. A typical example is a requirement to erase sensitive software and data from a computer at the completion of a work period or task. Others include automatic locking of computers after a period of inactivity and regular, timely system scans to detect suspicious behavior or altered system configurations. Such procedures may be documented in an Automated Information System (AIS) directive. A very important category of procedural security involves training system users to practice good security “hygiene” such as frequently changing passwords and detecting and defeating “phishing” attacks that try to trick a user into disclosing sensitive information or loading malicious software.

Any protective measure is likely to eventually be compromised or circumvented. As a result, traditional defensive configurations that tend to be static and to protect only the system boundary are evolving toward more dynamic and sophisticated approaches. Layered defense approaches such as Defense-in-Depth (DiD) and Zero-Trust Architecture, described below, are an important advance over previous security implementations. The basic idea is that an attacker, to reach sensitive system content, must penetrate a series of barriers, which can be individually managed and updated to deal with newly detected threats and methods of attack. Another important cybersecurity trend involves anti-malware tools that go beyond detecting a threat based on comparing a message or file to a library of threat signatures and seek to analyze a message and any attached files to determine its functions, spot the presence of malicious code or Web sites, and block an attempted attack.
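The layered-defense idea, in which a request must pass every barrier in turn, can be sketched as an ordered chain of independent checks. The layer names, the `Request` fields, the addresses, and the permission table below are all hypothetical and are kept deliberately simple.

```python
from typing import Callable, List, NamedTuple, Optional, Tuple


class Request(NamedTuple):
    source_ip: str
    user: str
    authenticated: bool
    resource: str


# Illustrative data only (the IP addresses come from documentation ranges).
BLOCKED_IPS = {"203.0.113.7"}
PERMISSIONS = {"alice": {"reports"}, "bob": {"reports", "payroll"}}


def boundary_filter(req: Request) -> bool:
    return req.source_ip not in BLOCKED_IPS


def authentication(req: Request) -> bool:
    return req.authenticated


def authorization(req: Request) -> bool:
    return req.resource in PERMISSIONS.get(req.user, set())


# Each layer can be updated or replaced without touching the others.
LAYERS: List[Tuple[str, Callable[[Request], bool]]] = [
    ("boundary", boundary_filter),
    ("authentication", authentication),
    ("authorization", authorization),
]


def first_blocking_layer(req: Request) -> Optional[str]:
    """Return the name of the first layer that rejects the request, or None."""
    for name, check in LAYERS:
        if not check(req):
            return name
    return None
```

A request from a blocked address never reaches the authentication check, and an authenticated user without the right permission is still stopped at the last layer, which is the essence of requiring an attacker to defeat a series of barriers.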

Yet another evolving security approach seeks to be proactive and is based on continuous, even real-time, monitoring of user behavior and cybersecurity events coupled with dynamic responses to minimize or contain attempted intrusions [3, 4]. This could involve deploying agents (see Chap. 14) at various locations within an information enterprise to measure events such as password change attempts (which may indicate a password breaking attack), failed log-on attempts, blocked data packets, or data objects failing integrity checks. Agent reports can alert operational personnel to an abnormal situation and can trigger automated responses such as blocking suspected network addresses. Logging and analysis of messages and other traffic, particularly amounts, combinations, or timing of data downloads that have not been previously seen, can reveal patterns that may indicate the early stages of a cyberattack. A number of commercial products based on inspection and sophisticated statistical analysis of network activity are now available and have proved effective. Continuous testing of security controls and application software is essential to detect and mitigate vulnerabilities, especially new ones from the ever-evolving threat environment.
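The kind of monitoring agent described above can be illustrated with a sliding-window counter of failed log-on attempts that blocks an address once a threshold is crossed. This is a sketch under stated assumptions: the class name, the thresholds, and the requirement that timestamps arrive in non-decreasing order are all choices made for the example, not features of any particular product.

```python
from collections import defaultdict, deque


class FailedLogonMonitor:
    """Block an address after too many failed log-ons within a time window."""

    def __init__(self, max_failures=5, window_seconds=60.0):
        self.max_failures = max_failures
        self.window_seconds = window_seconds
        self._failures = defaultdict(deque)  # address -> recent failure times
        self.blocked = set()

    def record_failure(self, address, timestamp):
        """Record one failure; return True if the address is now blocked.

        Assumes timestamps for a given address are non-decreasing.
        """
        events = self._failures[address]
        events.append(timestamp)
        # Discard failures that have aged out of the window.
        while events and timestamp - events[0] > self.window_seconds:
            events.popleft()
        if len(events) >= self.max_failures:
            self.blocked.add(address)
        return address in self.blocked


monitor = FailedLogonMonitor(max_failures=3, window_seconds=10.0)
burst = [monitor.record_failure("198.51.100.9", t) for t in (0.0, 1.0, 2.0)]
slow = [monitor.record_failure("198.51.100.20", t) for t in (0.0, 20.0, 40.0)]
```

Three failures within two seconds trigger a block, whereas the same number spread over forty seconds does not; in practice the thresholds would be tuned to balance detection against false alarms.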

Cybersecurity Domains

Security professionals typically organize the cybersecurity discipline using the domains of the CISSP Common Body of Knowledge (CBK). The following paragraphs briefly summarize these2 and give the equivalent of an executive summary of the subject.

Access Control – measures to ensure only authorized persons or other entities (“subjects”) can get possession of protected content (“objects”). They are based on file permissions, program permissions, and data rights that are tailored to each individual system user’s need for such content and embedded in a user account. Access control includes the process by which individuals receive authorizations to access particular system content and authentication of the identity of a subject requesting access to an object.

Telecommunications and Network Security – measures to ensure cybersecurity when protected objects are transmitted. This includes network architecture and design, trusted network components, secure channels, and protection against network attacks.

Information Security Governance and Risk Management – security policy, guidance, and direction that a system must follow to attain an Authority to Operate (ATO) with sensitive data. This commonly dictates security constraints and procedures that must be followed, security functions vs. operational or business goals and missions, policy compliance and enforcement, phases of the information life cycle, and governance of third parties such as component vendors, personnel security, education and training, metrics, and resources, especially budgets and skilled personnel.

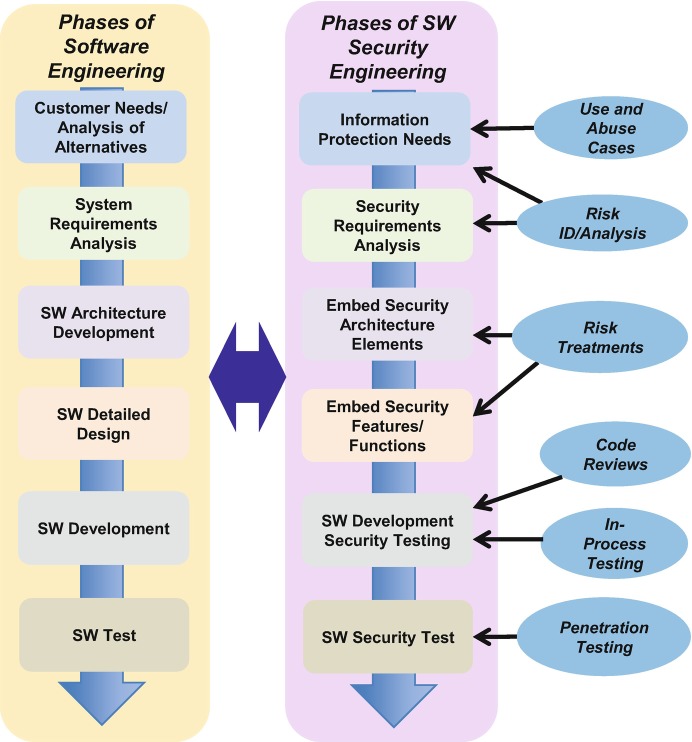

Secure Software Development Life Cycle – building cybersecurity into system and application software from the outset. This includes determining information protection needs, establishing security requirements, developing secure software architecture and design, software security testing, and assessing protection effectiveness. Software security controls must be applied and tested in a development environment that is isolated from production systems.

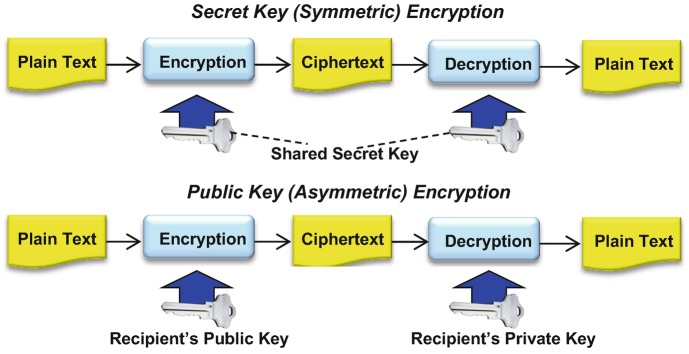

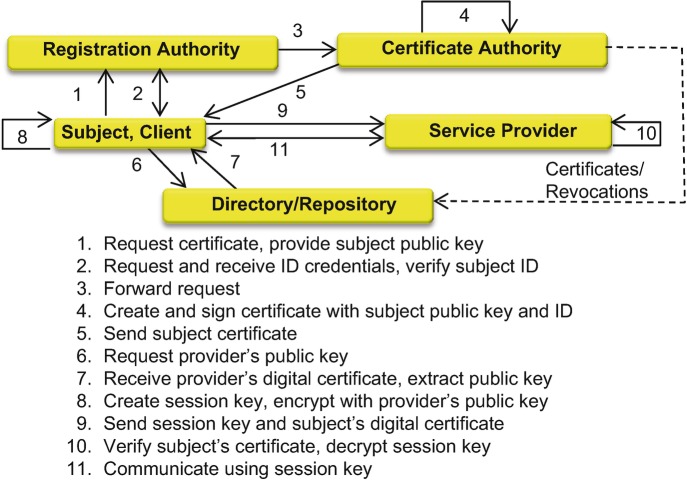

Cryptography – converting plaintext into unreadable ciphertext using complex algorithms. This domain includes tailored applications of cryptography, the life cycle of cryptographic materials such as encryption keys, basic and advanced cryptographic concepts, encryption algorithms (“ciphers”), public and private keys, digital signatures, non-repudiation (generally using digital signatures), attack methods, cryptography for network security, cryptography for secure application software, PKI, issues with digital certificates, and information hiding (what and when to hide). The protection of the crypto keys is more important than the strength of the cryptography itself; hence a good Key Management Plan matters more than the strength of a cipher.

Security Architecture and Design – embedded features and functions that implement security controls and eliminate or mitigate vulnerabilities. This includes models and concepts, model-based evaluation, security capabilities, vulnerabilities, countermeasures, selection of trusted components, and mechanisms to establish and maintain the required level of system security over time.

Security Operations – ongoing activities to enforce policies and procedures, detect and prevent or mitigate attacks, and maintain a system security posture. This includes operations security, resource protection, incident response, attack prevention and response, management of software vulnerabilities and patches to mitigate them, change and configuration management, and procedures to preserve system security resilience and fault tolerance.

Business Continuity and Disaster Recovery Planning – measures to minimize the organizational and mission impacts of cyberattacks and natural disasters. This includes requirements, impact analysis, backup and recovery strategies, disaster recovery, and testing of plans for continuity and recovery.

Legal, Regulations, Investigations, and Compliance – legal issues, ethics, investigations and evidence, forensics, compliance procedures, contracts, and procurements.

Physical (Environmental) Security – measures to establish and maintain a secure environment for sensitive resources and processes. This includes site and facility design, perimeter security, internal security, facility security, equipment security, privacy, and safety.

Cybersecurity Foundations

An effective, affordable, and resilient cybersecurity solution begins with two fundamental principles.

Security Policy and Requirements – The first essential is a policy that concisely describes the goals assigned to the security function of an organization and how those goals will be met with available or planned resources, together with specific, verifiable security requirements. A good starting point is a Security System Concept of Operations (SECOPS), which complements an overall Concept of Operations (CONOPS) by providing a focused definition of security requirements levied on the components or functions of a system with rationale for each. For example, the SECOPS would describe the required level of access control and what the components responsible for user account management, privilege control, and user authentication must do. A SECOPS often includes “Abuse Cases,” which are analogous to normal Use Cases except that they describe behaviors associated with a cyberattack.

The SECOPS may incorporate or be the basis for a Security Policy Document (SPD). A security policy should be stated in a form that is useful to security stakeholders and includes an overall security policy and governance strategy. The policy is typically elaborated in a Security Plan (SP) that spells out specific policy for all system elements, defines an acceptable risk threshold, and gives an overview of system security requirements and the existing or planned controls to satisfy those requirements. Special Publication SP 800-18, Guide for Developing Security Plans for Federal Information Systems, Rev. 1, from the National Institute of Standards and Technology (NIST) [5] has general guidance applicable to security planning in both public and private sectors.

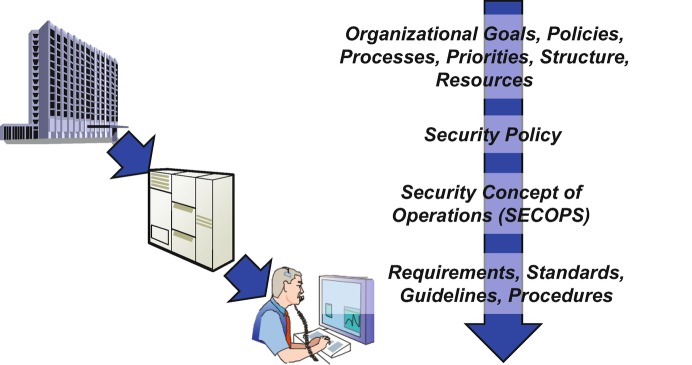

Ultimately, a secure system needs clear, unambiguous, effective, and verifiable requirements for security features and functions as part of an overall requirements baseline to support development, acquisition, integration, test, operational support, and other activities. Federal Information Processing Standards Publication 200 (FIPS 200) [6] lays out minimum acceptable requirements in 17 areas and can be used as a template for developing security requirements. Figure 10.4 suggests the flowdown from overall organizational policy and goals to focused security policy, a SECOPS, and ultimately security requirements and implementation guidance.

Fig. 10.4. Effective cybersecurity originates in overall organizational policy and flows down to security requirements and implementation

Secure System Life Cycle – The best and least expensive cybersecurity solution results when security is “baked in” to a system design from the outset. A secure life cycle starts with making security requirements a core part of the overall system requirements baseline and continues through all phases of development, integration, test, production, fielding, and operational support. All too often, an attempt is made to graft cybersecurity safeguards onto an existing system, but this is virtually guaranteed to be much harder to do and to yield a less resilient and more costly result. A secure life cycle is particularly important for application software and is further discussed under that topic later in this chapter.

These principles may seem obvious but they are, in fact, widely ignored. A recent analysis of the cybersecurity of US Air Force systems uncovered two pervasive trends in risk management [7]:

Failing to adequately capture the impact to operational missions, a violation of the first basic principle

Implementing cybersecurity as an add-on to existing systems, versus designing it in, a violation of the second

The report goes on to recommend better definition of cybersecurity goals; clear roles and responsibilities with adequate authority to act; more comprehensive and better prioritized security controls across systems and enterprises; improved access to cybersecurity expertise; continuous assessment and reporting of security status; better threat data; and, perhaps most significantly, holding individuals responsible for violation of cybersecurity policies. None of this is new or surprising. Similar findings come up repeatedly in reviews of nominally secure systems in Government, industry, and elsewhere, especially after cyberattacks. Clearly, the security community has a long way to go in getting system owners to take the cyber threat seriously and to deal with it effectively.

It should be clear from this preliminary survey of cybersecurity that this is a multidimensional problem and a process that continues for the entire operational lifetime of a system or enterprise. Just a few of the matters requiring diligent attention and adequate resources are promptly installing software patches, managing user accounts, acting quickly to detect and mitigate new vulnerabilities, maintaining the security awareness and defensive training of personnel, validating the trust level of new system components before installation, and monitoring system resources and activities to detect and defeat hostile actions. A good basic agenda for establishing and maintaining cyber resilience is spelled out in the Critical Security Control (CSC) list maintained by the Council on CyberSecurity [8]. The remainder of this chapter explores the aspects of cybersecurity that are most prominent in system architecture and in MBSE.

Cybersecurity Risk Management

Risk management is a core activity of Systems Engineering, based on assessing the probability of occurrence of potential adverse events or conditions and the consequences of such occurrences. A risk management process usually consists of:

Risk identification

Risk assessment in terms of probability of occurrence and consequence of occurrence

Identification of risks requiring mitigation

Planning and budgeting for risk reduction activities

Tracking the progress of risk mitigation until a risk is retired or a decision is made to accept it
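The steps listed above can be sketched as a small risk register. The 1-to-5 scales, the scoring rule (probability times consequence), and the mitigation threshold are common conventions assumed here for illustration; the text does not prescribe them, and the sample risks are invented.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    probability: int   # assumed scale: 1 (rare) to 5 (almost certain)
    consequence: int   # assumed scale: 1 (negligible) to 5 (severe)
    status: str = "open"   # "open", "retired", or "accepted"

    @property
    def score(self) -> int:
        # Assumed scoring rule: product of the two ratings.
        return self.probability * self.consequence


def needing_mitigation(register, threshold):
    """Open risks at or above the threshold, highest score first."""
    selected = [r for r in register if r.status == "open" and r.score >= threshold]
    return sorted(selected, key=lambda r: r.score, reverse=True)


register = [
    Risk("Unpatched web server", probability=4, consequence=4),
    Risk("Lost backup media", probability=2, consequence=5),
    Risk("Lobby kiosk defaced", probability=3, consequence=1),
]
to_mitigate = needing_mitigation(register, threshold=10)

# Tracking: once mitigation succeeds, the risk is retired and drops off the list.
register[0].status = "retired"
remaining = needing_mitigation(register, threshold=10)
```

The threshold plays the role of the acceptable-risk level: risks scoring below it are accepted, and those above it receive planning and budget for reduction.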

In dealing with cybersecurity, risk management is focused on protected assets, threats, vulnerabilities, and risk mitigation using security controls. It begins with risk identification and analysis, which then supports selection of security controls to achieve an acceptable risk level. This, in turn, is the basis for securing approval to operate the system. Accordingly, risk analysis is an essential component of defining security policy and requirements. The goal is to apply security controls intelligently to mitigate the most important risks while preserving an operationally effective, affordable, and supportable system. Risk management continues over the life of a system to deal with changes to the system itself and with the emergence of new vulnerabilities and threats.

Cybersecurity risk management deals with four basic elements.

Risk – a measure of the likelihood of occurrence of an adverse event such as loss of sensitive information and of the potential resulting consequences to a system or organization that is the target of an attack. This is conceptually equivalent to the probability and consequence of occurrence used in general risk management.

Threat – the potential danger of a compromise that is associated with a vulnerability. The term is commonly associated with an entity (Threat Agent), event, or circumstance that has the potential to adversely affect an organization/target by exploiting a vulnerability.

Vulnerability – a flaw in a target that can potentially be exploited by a threat. This is often due to the absence or weakness of a security control or countermeasure.

Exploit – an event in which a threat agent leverages a vulnerability to carry out an attack or otherwise induce adverse consequences for a target. An exploit typically results in an Exposure/Compromise/Data Breach, i.e., an instance of the target being exposed to loss.

The basic risk relationship can be expressed by considering an individual risk as a function of a specific vulnerability and a threat that arises from the possibility that the vulnerability may be discovered and exploited. We can state this simply as:

| $\mathrm{Risk}_n = f(\mathrm{Threat}_n, \mathrm{Vulnerability}_n)$ | 10.1 |

where the nth risk, $\mathrm{Risk}_n$, results from the nth threat associated with the nth vulnerability.

Cybersecurity risks are associated with information assets (data, systems, processes, etc.) whose value is such that they require protection. The sum of vulnerabilities (or, equivalently, of potential attack vectors) constitutes an Attack Surface. Managing cyber risks then comes down to minimizing the Attack Surface. This is made difficult by the reality of a diverse, growing threat environment; a full listing of known vulnerabilities and attack methods would fill a small book, and a complete description would fill a large one.

The first step in cybersecurity risk management is risk identification (RI). This begins with asset valuation, meaning a consistent approach to determine both quantitative (cost) and qualitative (relative importance) values. Factors include costs to acquire or develop an asset, initial and recurring data maintenance costs, importance of assets to the organization and to others (including criminals), and public value in terms of things like intellectual property. Next comes threat analysis, involving identification and definition of known and potential threats, consequences of an exploitation, the estimated frequency of threat events, and the probability that a potential threat will materialize. The third element of RI is vulnerability assessment. This can draw on a variety of sources, including published lists of vulnerabilities, a history of security events, and results of vulnerability testing.

Now comes risk analysis (RA), which can be quantitative or qualitative. Quantitative RA has advantages in devising an optimum cybersecurity solution but requires more information and more effort. It attempts to assign a numerical value or cost to assets and threats to support cost/benefit analysis and a clearer, more concise characterization of particular risks. NIST SP 800-30, Risk Management Guide for IT Systems [9], defines a nine-step risk assessment process. The analysis begins with the asset valuations and assessments of threats and vulnerabilities from RI. For each combination of an asset, a vulnerability, and a threat, an Annualized Loss Expectancy (ALE) is calculated as:

| $\mathrm{ALE} = \mathrm{SLE} \times \mathrm{ARO}$ | 10.2 |

where SLE is the Single Loss Expectancy and ARO is the Annualized Rate of Occurrence, stated as the expected number of occurrences of a specific threat in a 12-month period. SLE is determined as:

| $\mathrm{SLE} = \text{Asset Value} \times \mathrm{EF}$ | 10.3 |

where EF is the Exposure Factor, consisting of the fraction of total Asset Value expected to be lost in a single security event. Obviously, many of the values used in these calculations are estimates, although careful tracking of published security data and analysis of an organization’s security history and of recent event logs can make the estimates more accurate.
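The SLE and ALE calculations can be illustrated with a short sketch. The class name `RiskScenario`, its field names, and the sample figures are assumptions made for illustration only; real values would come from the asset valuation and threat analysis performed during RI.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RiskScenario:
    """One asset/vulnerability/threat combination (hypothetical structure)."""
    name: str
    asset_value: float      # total value of the asset, in dollars
    exposure_factor: float  # EF: fraction of asset value lost in one event
    aro: float              # ARO: expected occurrences per 12-month period

    def sle(self) -> float:
        """Single Loss Expectancy = Asset Value x EF."""
        if not 0.0 <= self.exposure_factor <= 1.0:
            raise ValueError("exposure factor must be between 0 and 1")
        return self.asset_value * self.exposure_factor

    def ale(self) -> float:
        """Annualized Loss Expectancy = SLE x ARO."""
        if self.aro < 0:
            raise ValueError("ARO cannot be negative")
        return self.sle() * self.aro


# Illustrative numbers only: a $400,000 asset, 25% lost per event,
# with one event expected every two years (ARO = 0.5).
scenario = RiskScenario("customer database breach", 400_000.0, 0.25, 0.5)
print(f"SLE = ${scenario.sle():,.0f}")
print(f"ALE = ${scenario.ale():,.0f}")
```

Because the inputs are estimates, it is often useful to rerun such a calculation with low and high values of EF and ARO to see how sensitive the ALE is to each assumption.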

The next step is to evaluate the feasibility and cost of possible security controls. It can be difficult to arrive at the true total cost of a safeguard. In addition to the cost of developing or purchasing a product that implements a control, there are likely to be costs associated with planning, implementation, and maintenance; operating costs (including skilled personnel); and costs associated with economic impacts such as effects on productivity. Once a reasonable total cost is obtained, the value of a given control against a given threat can be computed as:

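| $\text{Control Value} = \mathrm{ALE}_{\text{before control}} - \mathrm{ALE}_{\text{after control}} - \text{Annual Cost of Control}$ | 10.4 |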
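As a rough sketch of this cost/benefit step, the function below assumes the commonly used form in which the value of a control is the reduction in ALE it achieves minus its annualized total cost. The function name and the sample figures are hypothetical.

```python
def control_value(ale_before: float, ale_after: float, annual_cost: float) -> float:
    """Net annual value of a security control against one threat.

    Assumed form: (ALE before control) - (ALE after control) - (annual cost).
    A positive result suggests the control pays for itself; a negative
    result suggests that accepting or otherwise treating the risk may be
    more economical.
    """
    if min(ale_before, ale_after, annual_cost) < 0:
        raise ValueError("ALE and cost values cannot be negative")
    return ale_before - ale_after - annual_cost


# Illustrative numbers only: a control that cuts ALE from $50,000 to
# $10,000 and costs $15,000 per year to buy, operate, and maintain.
value = control_value(ale_before=50_000.0, ale_after=10_000.0, annual_cost=15_000.0)
print(f"Net annual value of control: ${value:,.0f}")
```

The annual cost passed in should reflect the full set of costs discussed above (planning, implementation, maintenance, operations, and productivity effects), not just the purchase price.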

Qualitative RA is a heuristic method (Delphi technique) based on scenarios. It does not attach absolute numerical costs, but typically uses scoring by knowledgeable people on a 1–5 or 1–10 scale of threat severity, potential loss, effectiveness of protective measures, etc. These subjective assessments can be compiled to get some insight into the relative importance of risks and the relative cost and effectiveness of countermeasures. When quantitative RA is not feasible, qualitative analysis is better than nothing.
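One simple way to compile such subjective scores is sketched below. It assumes a 1–5 scale and uses the median of the reviewers' scores as the consensus value; both the aggregation rule and the sample risks are illustrative assumptions rather than a prescribed method.

```python
from statistics import median


def rank_risks(scores: dict[str, list[int]], scale_max: int = 5) -> list[tuple[str, float]]:
    """Rank risks by the median of the scores assigned by a review panel.

    `scores` maps a risk name to the individual scores (1..scale_max)
    given by each reviewer. Returns (risk, consensus score) pairs,
    highest score first.
    """
    ranked = []
    for risk, values in scores.items():
        if not values:
            raise ValueError(f"no scores supplied for {risk!r}")
        if any(not 1 <= v <= scale_max for v in values):
            raise ValueError(f"scores for {risk!r} must be in 1..{scale_max}")
        ranked.append((risk, float(median(values))))
    return sorted(ranked, key=lambda item: item[1], reverse=True)


# Hypothetical panel of three reviewers scoring threat severity.
panel_scores = {
    "phishing of staff credentials": [4, 5, 4],
    "insider data theft": [3, 3, 4],
    "denial of service on public site": [2, 1, 2],
}
for risk, score in rank_risks(panel_scores):
    print(f"{score:.1f}  {risk}")
```

The median is used here because it is less affected by a single outlying opinion than the mean, which suits small panels.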

With the results of RI and RA in hand, risk management moves to decisions about how each risk should be treated. There are four basic options, representing the available safeguards:

Risk Reduction – application of security controls or countermeasures to mitigate vulnerabilities and thereby reduce risk

Risk Assignment or Transference – movement of a risk to a third party, e.g., by using insurance

Risk Avoidance – action to eliminate the situation that gives rise to a risk, e.g., by disposing of assets or modifying vulnerable processes

Risk Acceptance – deciding to live with a risk when the cost of mitigating it exceeds the potential consequences of a compromise

Several factors may bear on risk treatment decisions. In addition to the cost/benefit associated with implementing a security control, which may indicate accepting the risk or pursuing risk avoidance, some of these considerations are:

Legal liability – becomes important when there are statutory requirements or civil liabilities associated with failure to implement security controls.

Published guidance – publications from NIST and other standards bodies offer guidance on optimum risk treatments.

Operational impact – may be a factor if failure to mitigate a risk has potential operational impacts such as added cost for security surveillance, reduced productivity, or the need to implement work-arounds in affected processes.

Collateral risk – a situation in which a proposed security control to deal with one risk actually introduces new vulnerabilities and thus leads to other collateral risks.

From all these considerations, the last step is to decide which risk treatments to apply based on an integrated assessment of the most effective protection strategy within the constraints of available resources and system impacts. Another way to say this is that we seek to reduce a system’s Attack Surface to the feasible minimum. The remaining residual risk, which might be quantified by summing the ALEs of the untreated risks or simply described in qualitative terms, must correspond to the acceptable risk level as defined in policy and approved by senior management of the organization. Security policy and requirements are ultimately based on achieving this level, and it is essential that responsible authorities in the organization approve it as being acceptable. This is then the basis for granting or withholding permission to operate the system with sensitive data and processes. RI, RA, security controls, and assessment of residual risk should be repeated as necessary in the face of the constantly changing threat environment, specifically whenever new threats, new vulnerabilities, and changes to system content and processes make the previous analysis no longer valid.
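The quantitative form of the residual risk check can be sketched as follows. The function names, the dictionary of remaining ALEs, and the threshold are hypothetical; in practice the acceptable level comes from approved policy, not from the analyst.

```python
def residual_risk(remaining_ales: dict[str, float]) -> float:
    """Sum the ALEs that remain after risk treatment decisions.

    `remaining_ales` maps each risk to the ALE still expected once the
    chosen treatment is in place (for an accepted, untreated risk this is
    simply its original ALE).
    """
    if any(ale < 0 for ale in remaining_ales.values()):
        raise ValueError("ALE values cannot be negative")
    return sum(remaining_ales.values())


def within_acceptable_level(remaining_ales: dict[str, float], acceptable_level: float) -> bool:
    """True when total residual risk does not exceed the approved level."""
    return residual_risk(remaining_ales) <= acceptable_level


# Illustrative figures only.
remaining = {
    "lost laptop (accepted)": 4_000.0,
    "web defacement (accepted)": 2_500.0,
    "database breach (after added controls)": 10_000.0,
}
print(f"Residual risk: ${residual_risk(remaining):,.0f}")
print("Within approved level:", within_acceptable_level(remaining, 20_000.0))
```

Rerunning this check whenever the threat environment or system content changes is one lightweight way to flag when the earlier analysis is no longer valid.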

A number of systematic threat modeling approaches are available. One is the Microsoft Threat Modeling Process, which is supported by a free tool and is complemented by threat analysis tools [10]. Once a set of security objectives for a system has been established, this process goes through a sequence of characterizing and decomposing applications to discover trust boundaries, data flows, entry and exit points, and external dependencies that potentially introduce vulnerabilities. These are then analyzed in terms of known threats and attack vectors, using methods such as attack tree analysis, resulting in a prioritized threat list. Finally, actual and potential vulnerabilities are identified, and the analysis results are fed back to the application characterization stage to support design changes, addition of countermeasures, or other steps to mitigate the threats. Other threat modeling methodologies include:

STRIDE – this model uses six basic threat categories: Spoofing Identity, Tampering with Data, Repudiation, Information Disclosure, Denial of Service, and Elevation of Privilege; it then identifies mitigation strategies for each.

DREAD – this approach scores each identified threat from 1 to 10 in terms of Damage Potential, Reproducibility, Exploitability, Affected Users, and Discoverability and then computes overall risk by dividing the sum of these scores by 5.
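The five-factor scoring just described (rate each factor from 1 to 10, then divide the sum by 5) can be sketched in a few lines. The class name, field names, and sample ratings are assumptions for illustration.

```python
from dataclasses import dataclass, astuple


@dataclass(frozen=True)
class ThreatScore:
    """Five 1-10 ratings for a single identified threat (hypothetical structure)."""
    damage_potential: int
    reproducibility: int
    exploitability: int
    affected_users: int
    discoverability: int

    def __post_init__(self) -> None:
        # Reject ratings outside the 1-10 scale described in the text.
        for value in astuple(self):
            if not 1 <= value <= 10:
                raise ValueError("each rating must be between 1 and 10")

    def overall(self) -> float:
        """Overall risk: the sum of the five ratings divided by 5."""
        return sum(astuple(self)) / 5


# Illustrative ratings for a hypothetical SQL injection threat.
sql_injection = ThreatScore(
    damage_potential=8,
    reproducibility=6,
    exploitability=7,
    affected_users=9,
    discoverability=5,
)
print(f"Overall risk score: {sql_injection.overall():.1f}")
```

Sorting a list of such objects by `overall()` gives a prioritized threat list of the kind the threat modeling process calls for.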

Cybersecurity Guidance and Resources

Organizations that develop, own, and authorize secure information systems commonly establish policies and other directives governing these systems and their operations. The flowdown of security policy from overall organizational policies and objectives was described earlier. These policies often draw upon a growing body of standards published by Government, industry, and other bodies. System architects and engineers need to be familiar with this aspect of cybersecurity.

Table 10.1 lists some of the more prominent sources of cybersecurity data and guidance. The list contains Government agencies, industry consortia, professional societies, and others. Collectively, they offer current cybersecurity intelligence, tools and methods, standards, training, and many other resources of great value to the secure system architect, as well as later to the security staff that must support and maintain adequate security over a system’s operational lifetime.

Table 10.1.

Sources of cybersecurity information and guidance

| Source | Information provided |

|---|---|

| Web Application Security Consortium (WASC) | Best practice security standards, tools, resources, and information, e.g., Web application scanner evaluation criteria |

| Open Web Application Security Project (OWASP) | Tools, articles, other resources; development/testing/code review procedures, list of top risks |

| Department of Homeland Security (DHS) | Best practice tools, guidelines, rules, principles, other resources; Build Security In (BSI) initiative; provides CWE (below) as a service; SW assurance Common Body of Knowledge; comprehensive guidance on secure SW development at buildsecurityin.us-cert.gov |

| Institute of Electrical and Electronics Engineers (IEEE), Computer Society (CS), Center for Secure Design (CSD) | Recent initiative aimed at providing a variety of artifacts for secure system development, e.g., “How to Avoid the Top Ten Software Security Flaws” |

| ISO/IEC 27034 | Standard guidance on integrating security in processes for managing applications |

| System Administration, Networking, and Security Institute (SANS) | Education, certification, reference materials, conferences dealing with information security |

| Council on CyberSecurity | Established in 2013; publishes a list of prioritized Critical Security Controls (CSCs) that are widely used as a measure of cyber resilience (www.counciloncybersecurity.org) |

| National Institute of Standards and Technology (NIST) | Federal Government lead for cybersecurity, e.g., Risk Management Framework (RMF); multiple standards and best practice publications, including SP 800 series and Federal Information Processing Standards (FIPS) |

| National Security Agency (NSA) | Published the original “Rainbow Books” on computer and network security; operates the Center for Assured SW |

| SW Assurance Metrics and Tool Evaluation (SAMATE) | Provided by DHS and NIST |

| Common Attack Pattern Enumeration and Classification (CAPEC) | Sponsored by DHS and MITRE (http://capec.mitre.org/index.html); lists common attack patterns, comprehensive schema, and classification taxonomy; ~500 entries (so far) |

| National Vulnerability Database (NVD); NIST SP 800-53 | Federal Government repository of standards-based vulnerability management data; uses the Security Content Automation Protocol (SCAP) which includes the Extensible Configuration Checklist Description Format (XCCDF); supports automation of vulnerability management, security measurement, and compliance; includes databases of security checklists, security-related software flaws, misconfigurations, product names, and impact metrics; supports the Information Security Automation Program (ISAP); provides a list of Common Vulnerabilities and Exposures (CVEs) and scores CVEs to quantify the risk of vulnerabilities, calculated from a set of equations based on metrics such as access complexity and availability of a remedy; includes a Common Platform Enumeration (CPE) dictionary |

As Table 10.1 suggests, there is a large and diverse community striving to cope with the ever-growing and constantly changing cyber threat environment. As part of these activities, a number of standards and taxonomies have been developed.

Vocabulary for Event Recording and Incident Sharing (VERIS) – a set of metrics designed to provide a common language for describing security incidents in a structured and repeatable manner [2]. VERIS helps organizations collect and share useful incident-related information.

Common Weakness Enumeration (CWE) – a common language for describing software security weaknesses in architecture, design, or code; this is the standard measure for SW security tools targeting these weaknesses and a common baseline standard for identification, mitigation, and prevention of software weaknesses [11].

National Vulnerability Database (NVD) – a Government repository of standards-based vulnerability management data, described in NIST Special Publication 800-53.

- Security Content Automation Protocol (SCAP) specifications – some of these are listed in Table 10.1; they include:

- Common Vulnerabilities and Exposures (CVE)

- Common Configuration Enumeration (CCE)

- Open Vulnerability Assessment Language (OVAL)

- Common Platform Enumeration (CPE)

- Common Vulnerability Scoring System (CVSS)

- Extensible Configuration Checklist Description Format (XCCDF)

Common Attack Pattern Enumeration and Classification (CAPEC) – a dictionary and classification taxonomy of known attacks that can be used by analysts, developers, testers, and educators to advance community understanding and enhance defenses.

Other – multiple publicly available security taxonomies, research reports, checklists, newsletters, blogs, and other data sources.

Secure Architecture and Design

Given an effective cybersecurity policy and associated set of requirements, a sub-discipline of Systems Engineering that is generally referred to as System Security Engineering (SSE) comes into play as part of the overall MBSAP flow from requirements to an OV, LV, and PV. At every step, security requirements are allocated to a progressively more detailed system structure, and security functions and processes are modeled as part of the Behavioral Perspective. The overall strategy recommended in this book can be called “layered defense,” and the layers of this structure are defined in parallel with other architecture development activities. Layered defense has two complementary elements:

Defense in Depth (DiD) – deployment of diverse and mutually supportive security controls at various points in the architecture to create, in effect, concentric shells of protection that an attacker must defeat to reach protected assets. Later paragraphs and Appendix F go into more detail on this approach. DiD can be thought of as security in depth.

Zero-Trust Architecture – a strategy that extends and refines DiD architectures with fine-grained segmentation, strict access control, strict privilege management, and advanced protective devices, all based on a rule that no person and no resource is trusted without adequate verification. Zero-Trust represents a broad approach to secure systems.

Secure Architecture and Design Process

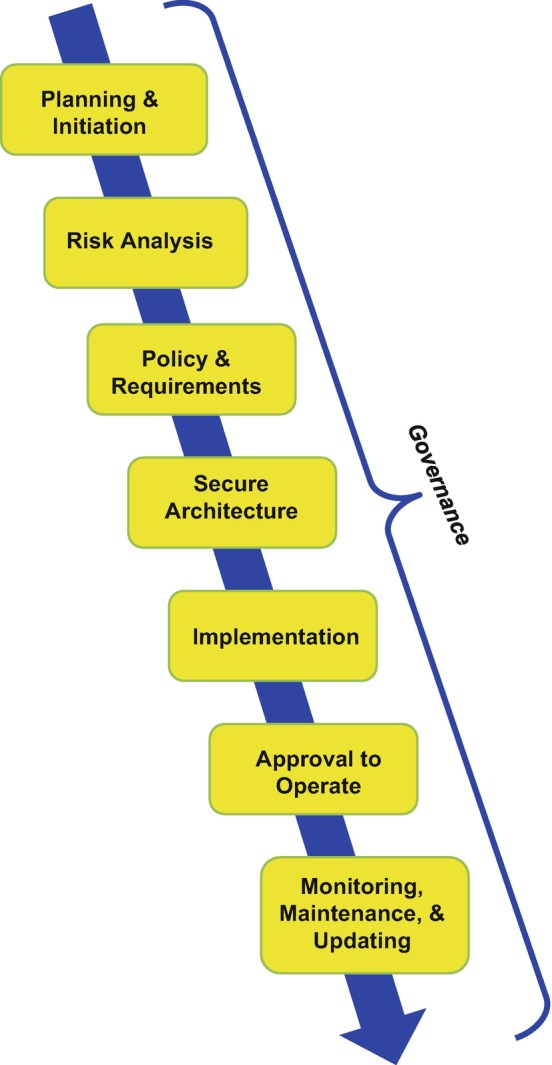

Figure 10.5 shows the primary steps of a typical end-to-end security architecture process. As with architecture strategy in general, this flow is highly iterative, and results at any step may cause a return to an earlier step to correct a problem, refine a requirement, perform additional analysis on a discovered issue, and so forth. By making this an integral part of an MBSAP effort, security architecture becomes an integral part of the overall architecture of a secure system or enterprise, not something that is ad hoc or independent. Cybersecurity requirements, functions, and standards will impact many aspects of the system and may be the dominant factor in decisions such as product selections. Security testing involves a distinct set of events, but it must be integrated with the overall test plan to ensure all required data is collected at minimum cost and effort. Similar considerations apply throughout the MBSAP process.

Fig. 10.5.

Basic flow of a security architecture process

The following paragraphs summarize the primary actions at each step in Fig. 10.5.

Planning – as part of basic program planning, establish the methodology, resources, schedule, and other basics of the security architecture development; this includes securing commitment from program management, customers, and other stakeholders.

Threat Analysis and Risk Assessment – perform the analysis activities described earlier under Risk Management.

Security Policy and Requirements – once the RI, RA, and risk treatment analyses are complete, create a security policy, SP, SECOPS, and specific security requirements.

- Cybersecurity Architecture – define the security architecture under MBSAP, including:

- Selecting and prescribing security boundaries and mapping them to system structure and partitioning.

- Defining and modeling the implementation of selected security controls at each level of a layered defense structure.

- Defining and modeling specific security functions and services; for example, security services like user authentication and access control are usually made available to both infrastructure and application components of a system.

- Defining metrics to be used to assess the quality and policy compliance of the security architecture; another NIST Special Publication, 800-55 [12], offers guidance on the selection and application of security metrics.

- Defining roles and responsibilities of system users, security managers, and others.

- Defining other aspects of the security architecture such as required trust levels for various system components; this must obviously be done in close collaboration with the system architecture development as a whole, and there will be security content in the OV, LV, and PV.

- Cybersecurity Implementation – as described in more detail in the next section, implement the security architecture within the PV, including:

- Selecting and configuring components and processes that implement security controls

- Verifying that system components have appropriate required trust levels based on the nature of the threat and the sensitivity of protected information and that selected products comply

- Developing security-related architecture artifacts and documentation

- All other aspects of building the system in conformity to requirements and the security architecture

Security Verification and Testing – once the implementation is complete, accomplish the analysis and testing to verify that the required risk level has been achieved. Regardless of whether a system has a Government or a private customer, adequate testing must be planned and conducted as an integral part of the total test program. Ultimately, a body of evidence is presented to an appropriate authority to support an approval to operate decision. This is further discussed under System and Component Trust Evaluation below.

- Monitoring, Maintenance, and Upgrading – during system operation, provide the resources and procedures for monitoring functions such as event logging, configuration audits, and vulnerability testing. Since technology, requirements, and threats continuously evolve, even the best security architecture and implementation will require periodic updating and retesting to maintain assurance that the required risk level is still being met. Plans for long-term system support and upgrading need to account for the inevitable updates and problem fixes associated with system security components and functions. Specific activities in this area include:

- Configuration management to protect against unknown or unapproved content

- Prompt installation of software patches as soon as released to deal with new vulnerabilities

- Technology refreshment as cybersecurity components, like any other products, evolve, become obsolescent, and require replacement or upgrading

- Addition, upgrading, or reconfiguration of protective measures to deal with new or changing threats and policies

- Education, training, and security awareness of personnel to ensure the human element of the cybersecurity posture does not degrade as personnel and system functions change

Governance – as shown in Fig. 10.5, implement governance at every step and throughout a system’s lifetime. Valuable guidance is provided in NIST Special Publication 800-100 [13], which is targeted to Government agencies and policies but provides useful insight for private security architects and managers as well; Chap. 3 is especially relevant to secure system development. This document stresses the importance of clear individual and organizational roles and responsibilities, regular monitoring of security features and policy compliance, periodic reassessment of the effectiveness of security controls and procedures using measurements and metrics, rigorous configuration management and change control, and continuous analysis of incidents, supported by auditing of system logs.
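The configuration management activity listed under Monitoring, Maintenance, and Upgrading can be illustrated with a minimal sketch that compares the current content of a system against an approved baseline of cryptographic hashes. The function names, item names, and in-memory representation are assumptions; an operational tool would read files or packages from the system and protect the baseline itself from tampering.

```python
import hashlib


def digest(content: bytes) -> str:
    """SHA-256 fingerprint of one configuration item."""
    return hashlib.sha256(content).hexdigest()


def find_unapproved(baseline: dict[str, str], current: dict[str, bytes]) -> dict[str, str]:
    """Compare current items with the approved baseline.

    `baseline` maps item name -> approved SHA-256 hex digest.
    `current` maps item name -> content actually found on the system.
    Returns item name -> finding ("unknown item", "modified", "missing").
    """
    findings: dict[str, str] = {}
    for name, content in current.items():
        if name not in baseline:
            findings[name] = "unknown item"
        elif digest(content) != baseline[name]:
            findings[name] = "modified"
    for name in baseline.keys() - current.keys():
        findings[name] = "missing"
    return findings


# Hypothetical baseline captured at approval time.
approved = {
    "app.cfg": digest(b"mode=secure"),
    "service.bin": digest(b"\x00\x01release-build"),
}
# Hypothetical state found during a later audit.
found = {
    "app.cfg": b"mode=debug",   # changed since approval
    "extra.dll": b"unknown",    # never approved
}
for item, finding in sorted(find_unapproved(approved, found).items()):
    print(f"{item}: {finding}")
```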

A number of Government and private sector organizations issue guidance on design of a resilient cybersecurity solution. Rozanski and Woods [14], writing about software architecture from a largely commercial point of view, suggest the following elements of a security approach:

Least Privilege – grant system users only those accesses and capabilities required to perform their roles.

Secure the Weakest Link – focus protection on system elements that are most vulnerable and most likely to be attacked.

Defense in Depth – this is essentially layered defense.

Separate and Compartmentalize – structure and manage information such that access to a given data area does not accidentally expose information that should be separately controlled.

Keep Security Designs Simple – avoid implementations that are hard for system users and administrators to work with and therefore invite cheating, shortcuts, or work-arounds.

Use Secure Defaults – ensure that if failures or anomalies force a system to revert to default configurations and settings, these still provide adequate protection.

Fail Securely – similarly, ensure that if a system fails or shuts down, it does so in a state that does not expose sensitive content; a typical example is to encrypt all data at rest (i.e., in nonvolatile storage); this is the security equivalent of “fail-safe.”

Start Securely – the inverse principle is that upon startup, a system must activate all protective measures before accessing or exposing sensitive information.

Assume External Entities Are Untrusted – implement protections that provide adequate assurance of the identity, trust level, and access privileges of any external entity trying to use the system.

Audit Sensitive Events – provide the capability to detect and log all security-related events, and implement a rigorous process of analysis and auditing to make full use of the recorded event data.
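Two of the principles above, protective defaults and failing in a protected state, can be sketched together in an access check that denies whenever the policy lookup fails or returns anything other than an explicit approval. The function name and the lookup callables are hypothetical.

```python
from typing import Callable


def is_access_allowed(
    user: str,
    resource: str,
    policy_lookup: Callable[[str, str], object],
) -> bool:
    """Return True only on an explicit, successful approval.

    Any failure in the lookup (exception, timeout surfaced as an error,
    malformed answer) results in denial rather than exposure.
    """
    try:
        decision = policy_lookup(user, resource)
    except Exception:
        # Failure path: the policy service is unavailable or broken,
        # so the safe outcome is to deny access.
        return False
    # Default path: anything other than the literal True is a denial.
    return decision is True


# Hypothetical lookups used to exercise the three paths.
def healthy_lookup(user: str, resource: str) -> bool:
    return (user, resource) == ("alice", "payroll")


def broken_lookup(user: str, resource: str) -> bool:
    raise ConnectionError("policy service unreachable")


print(is_access_allowed("alice", "payroll", healthy_lookup))
print(is_access_allowed("bob", "payroll", healthy_lookup))
print(is_access_allowed("alice", "payroll", broken_lookup))
```

A production version would also log each denial and each lookup failure so that the events are available for later audit.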

The Department of Defense focuses on the following essential aspects of cybersecurity policy for the DoD Information Network (DoDIN), supporting the Global Information Grid (GIG); these apply to most private sector cybersecurity situations as well:

Identification and Authentication – the policy requires both non-forgeable credentials and the use of a Strength of Mechanism assessment to keep the risk that unauthorized individuals gain access to classified systems and data at an acceptable level.

Access Control – the policy directs that traditional Role-Based Access Control (RBAC) give way to more powerful and flexible approaches such as Risk-Adaptive Access Control (RAdAC) as described later in this chapter.

Privilege Management – the policy calls for adaptive and flexible privilege management to ensure all authorized users have timely access to the resources needed for mission accomplishment in accordance with the local operational situation.

Trusted Platforms – the GIG requires that participants implement TCPs, also described below.

Encryption – the policy prescribes migration to a “black core” in which sensitive information is always encrypted when it is outside a secure environment; ideally, data is encrypted at the computer that produces it and decrypted at the computer that consumes it.

Such policy requirements could drive anything from the selection of biometric means for user authentication to the development of new algorithms for real-time adjustment of individual privileges. For example, most security guidance documents now call for two-factor or multifactor authentication, involving two or more independent means of verifying identity, in place of sole reliance on passwords that are all too frequently compromised. In a private sector context, a security architect might well be called upon to implement controls that give citizens of multiple countries in a global company or enterprise access to appropriate data and processes while ensuring no export control laws or company policies on information release are violated. These examples illustrate the importance of very thorough analysis of policies and security requirements early in the architecture cycle, followed by allocation to the elements of an emerging system architecture and design, to achieve the objective of embedding security features and functions in the system.
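The multifactor requirement mentioned above can be sketched as a simple policy check. This sketch approximates "independent means" by requiring verified factors from at least two different categories (something known, something held, something inherent); the factor names, the category table, and the function name are illustrative assumptions.

```python
# Hypothetical mapping of authentication factors to independence categories.
FACTOR_CATEGORIES = {
    "password": "knowledge",
    "pin": "knowledge",
    "hardware_token": "possession",
    "phone_app_code": "possession",
    "fingerprint": "inherence",
}


def satisfies_mfa(verified_factors: set[str], required_categories: int = 2) -> bool:
    """True when the verified factors span enough distinct categories.

    Two factors from the same category (e.g., a password and a PIN) are
    not treated as independent. Unrecognized factors are ignored.
    """
    categories = {
        FACTOR_CATEGORIES[factor]
        for factor in verified_factors
        if factor in FACTOR_CATEGORIES
    }
    return len(categories) >= required_categories


print(satisfies_mfa({"password", "pin"}))             # same category
print(satisfies_mfa({"password", "hardware_token"}))  # two categories
```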

Layered Defense Architecture

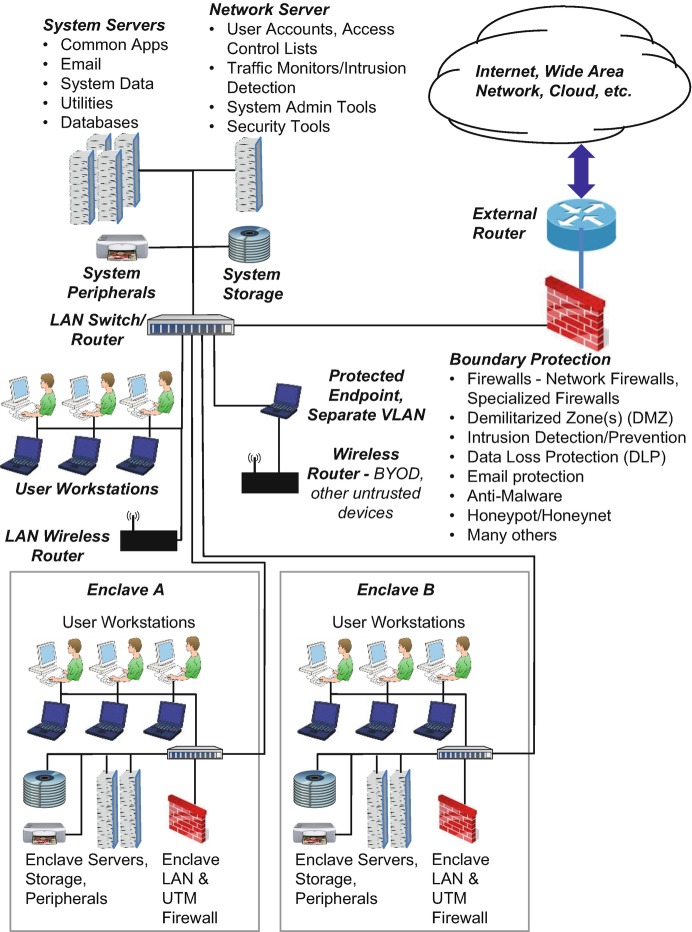

To provide a basis for discussing layered defense, Fig. 10.6 shows a notional networked enterprise to which a variety of risk mitigations can be applied. This is at least representative of the contents of a wide assortment of real-world information systems. As used in MBSAP, layered defense has two components: a DiD structure of successive layers of protective mechanisms and Zero-Trust Architecture, which enforces segregation and strict access controls for all sensitive content.

Fig. 10.6.

Notional networked enterprise requiring defense-in-depth protection

Layered defense begins as a dimension of the secure system architecture and continues in the selection and deployment of products and processes for security controls at various points. This is a much larger topic than can be fully treated in a single chapter. Accordingly, the emphasis here is on key aspects of secure system implementation that are likely to be important to a general systems engineer charged with satisfying the full scope of system requirements, including security. The following paragraphs describe some important considerations in devising a DiD structure for a system like that in Fig. 10.6. Table 10.2 lists a typical set of layers in a DiD structure for such a system with representative safeguards and functions. Appendix F gives an extensive set of security control definitions for these layers, many of which are referenced in Fig. 10.6.

Table 10.2.

Examples of defense-in-depth protective measures

| Protective layers | Protective measures | Protective functions |
|---|---|---|
| System Boundary or Perimeter | Firewall(s) | Block unauthorized information transfers and scan for malicious or prohibited content |
| | External Networking | Allow connections only to trusted addresses |
| | Demilitarized Zone (DMZ) | Isolate system resources from external access; provide address space for protective devices and tools |
| | Intrusion Detection System/Intrusion Prevention System (IDS/IPS) | Monitor external activity and log unapproved traffic; IPS blocks untrusted messages |
| | Encryption/Decryption in Boundary Protection Devices | Ensure sensitive (“red”) content is converted to unreadable (“black”) before release from a protected environment |
| | Data Loss Prevention | Detect and block unauthorized efforts to extract (“exfiltrate”) data |
| | Honeypot/Honeynet | Trap would-be attackers in a “sacrificial” server; gather data for attack analysis |
| | Anti-Virus/Anti-Malware | Detect/defeat malware at system boundary |
| Network | Network-Based Intrusion Protection/Access Control | Monitor network traffic, detect suspicious or prohibited use, block unauthorized use |
| | Enclave Segregation with Firewalls | Provide customized protection for separate network enclaves |
| | Anti-Virus | Detect/defeat malware in network traffic |
| | Labeled/Protected Messaging | Restrict traffic to authorized participants, prevent/detect message corruption or tampering |
| | Message Integrity Checking | Detect tampering with network traffic |
| | Encryption | Eliminate unprotected data on the network |
| Endpoint | Operating System (OS) Lockdown/Hardening | Disable functions not required for system operations that may create vulnerabilities |
| | Host System Security (HSS) | Detect/prevent unauthorized or suspicious access attempts, malware, other hostile actions |
| | User Accounts | Enforce access controls, user authentication, principle of least privilege, etc. |
| | OS Control File Protection | Prevent unauthorized changes to controls |
| | Administrative Separation of Privileges | Restrict access to security controls and settings |
| | Firewalls/Intrusion Detection | Customize protection for endpoint devices |
| Application Software | Logging and Auditing | Detect suspicious/prohibited access to apps |
| | Software Testing and Trusted Software | Rigorously analyze and test software to preclude malicious code and other vulnerabilities; detect/prevent unauthorized modifications |
| | Specialized Firewall(s), e.g., Web Application and XML | Apply customized protection to applications |
| | Content Monitoring/Filtering | Detect suspicious or unauthorized processing |
| Data | Logging and Auditing | Detect suspicious or prohibited access to data |
| | Encryption of Data at Rest | Ensure even theft or other physical compromise cannot obtain sensitive data |
| | Data Loss Prevention (DLP) | Block unauthorized data access/exfiltration |

Boundary Security. A Boundary Security System (BSS) protects the point of entry from the external network environment and implements functions like those listed in Fig. 10.6. Some specific examples include:

Router – may provide some protective functions such as address filtering on messages.

Firewalls – a variety of types can be deployed to inspect various aspects of in- and outbound traffic and block suspicious or unauthorized messages.

Demilitarized Zone (DMZ) – internal and external firewalls can be used to create a “buffer zone”3 between the untrusted external environment and sensitive system resources; this can host a variety of protective measures.

Intrusion Detection System/Intrusion Prevention System (IDS/IPS) – specialized device or software that detects and (if an IPS) blocks and records suspicious activity.

Data Loss Prevention (DLP) – identifies, monitors, and protects data in use, data in motion, and data at rest through deep content inspection and other sophisticated techniques.

Proxy Servers – servers that allow external users to request and receive services such as Email and applications without being granted direct access to the actual servers and data.

Anti-Virus/Anti-Malware – software that tries to detect malicious software and prevent it from executing.

Honeypot – a “sacrificial” server that mimics real system resources to attract an attacker and gather information; multiple honeypots create a “honeynet.”
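The address filtering function mentioned for boundary devices can be sketched with Python's standard `ipaddress` module. The trusted network ranges below are documentation-only example addresses, and the function name is an assumption; a real boundary device applies such rules in its own configuration language rather than in application code.

```python
import ipaddress

# Hypothetical allowlist of trusted source networks (documentation ranges).
TRUSTED_NETWORKS = [
    ipaddress.ip_network("192.0.2.0/24"),
    ipaddress.ip_network("198.51.100.16/28"),
]


def is_trusted_source(source: str) -> bool:
    """Allow a connection only if its source address is on the allowlist.

    Malformed addresses are rejected rather than passed through.
    """
    try:
        address = ipaddress.ip_address(source)
    except ValueError:
        return False
    return any(address in network for network in TRUSTED_NETWORKS)


for candidate in ["192.0.2.17", "198.51.100.20", "203.0.113.5", "not-an-address"]:
    verdict = "allow" if is_trusted_source(candidate) else "block"
    print(f"{candidate}: {verdict}")
```

Address filtering of this kind is only one layer; source addresses can be spoofed, which is one reason the boundary also relies on the other measures listed above.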

System Resources. There is a set of overall system resources, including servers, workstations, storage, peripherals, and a local area network (LAN) router. These provide functions needed by all system users such as account management and common software applications. All these components are candidates for protection using tailored security controls.

The specialized functions of the enterprise are isolated in enclaves, each with its own LAN and a Unified Threat Management (UTM) firewall. Only two enclaves are shown, but there would typically be an enclave with restricted access for each individual department or other organizational element of the enterprise. This segmentation is a core principle of Zero-Trust Architecture. As with all cybersecurity devices, it’s important that candidate UTM products be carefully evaluated for their protective capabilities, especially against the assessed threats to a system.

The system LAN has a wireless router for connection of approved (trusted) devices. There is also a wireless router for other access, such as by personal (Bring Your Own Device, or BYOD) devices. This router is protected by a dedicated processor that implements a variety of security controls and establishes a virtual LAN (VLAN), as described in Chap. 9, that is separate from the system network.

The other key element of layered defense is a Zero-Trust (ZT) Architecture [15], and Table 10.3 gives some of the main features for a system like that in Fig. 10.6. The name “Zero-Trust” derives from the basic principle that no one and nothing is trusted beyond a single authenticated instance. ZT complements and strengthens DiD by adding stringent controls, especially for access to sensitive content. For example, a system that makes an authentication and authorization decision for every request for access to sensitive content provides a higher level of assurance than one that requires only one-time access approval for an entire user session.

Table 10.3.

Features of a Zero-Trust Architecture

| Protective features | Protective functions |
|---|---|
| Zero-Trust | No system users or resources (applications, databases, etc.) are trusted a priori; Every resource access, installation action, etc. requires explicit authentication and authorization |
| Address control | All resources are protected by strong access controls, regardless of location (e.g., multifactor authentication of users); Enforces highly granular access to resources (specific permissions) |
| Network segmentation | Network is divided into multiple segments enclosing related resources and functions; Enclaves have distinct protected perimeters and enforce access controls; Enclaves are protected by multifunction devices such as a Unified Threat Management (UTM) firewall^a |
| Network security | Implements a secure packet forwarding engine; Monitors all network traffic for suspicious activity |

^a Forrester Research has coined the term “Segmentation Gateway” for this device

All of the DiD and ZT features in Tables 10.2 and 10.3 could be applied to a system like the one in Fig. 10.6, depending on the assets to be protected and the risks to be mitigated. One of the major objectives of adding a Zero-Trust network to a DiD architecture is to minimize the damage an attacker can do after successfully penetrating a system. Every attempt at “lateral movement” from one resource area or enclave to another must defeat a layered defensive structure within the security perimeter of that enclave. Additionally, every attempt to access a protected asset requires strong authentication of the party requesting access and fine-grained authorization (access permission) for every specific asset. DiD with Zero-Trust, using advanced safeguards such as those that apply statistical behavior analysis and artificial intelligence, represents the current state of the art in secure architecture.
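The per-request authorization principle described above can be illustrated with a minimal sketch. The class `ZeroTrustGate`, the user name, and the asset names are hypothetical and chosen only for illustration; a real system would rely on multifactor authentication and a managed permission store rather than in-memory sets.

```python
from dataclasses import dataclass, field


@dataclass
class ZeroTrustGate:
    """Hypothetical authorization gate; all names are illustrative only."""
    # (user, asset) pairs that are currently permitted
    grants: set = field(default_factory=set)
    # users whose identity has been verified (stand-in for strong authentication)
    authenticated: set = field(default_factory=set)

    def authorize(self, user: str, asset: str) -> bool:
        """Decide a single request; no approval is carried over between calls."""
        return user in self.authenticated and (user, asset) in self.grants


gate = ZeroTrustGate()
gate.authenticated.add("alice")
gate.grants.add(("alice", "finance/ledger"))

first = gate.authorize("alice", "finance/ledger")     # asset with an explicit grant
lateral = gate.authorize("alice", "engineering/cad")  # asset in another enclave, no grant
gate.grants.discard(("alice", "finance/ledger"))      # permission revoked mid-session
after_revoke = gate.authorize("alice", "finance/ledger")
```

Because each call is evaluated against the current grants, the attempt to reach an asset in another enclave is refused, and a revocation takes effect on the very next request instead of at the end of a session.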

As an example of secure architecture strategy based on deployment of multiple, complementary safeguards, Jones and Horowitz [16] have published an approach to protecting critical utility infrastructure, especially nuclear power plants. Basic elements of their proposal are:

Use of diverse and redundant subsystems, increasing the effort an attacker must make to defeat a given layer of protection; this can also draw out a “signature” by which an attacker can be identified and recognized.

Configuration “hopping” to restore a compromised system by switching to an alternative configuration.

Data consistency checking using techniques such as voting among parallel diverse components.

Tactical forensics to distinguish true attacks from false alarms or natural failures.
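Of the elements listed above, data consistency checking by voting lends itself to a short sketch. The following is a generic strict-majority voter, not the specific mechanism published by Jones and Horowitz; the function names and the sample readings are assumptions made for illustration, and real-valued sensor data would need a tolerance band rather than exact equality.

```python
from collections import Counter
from typing import Hashable, List, Optional, Sequence


def majority_vote(readings: Sequence[Hashable]) -> Optional[Hashable]:
    """Return the value reported by a strict majority, or None if there is none."""
    if not readings:
        return None
    value, count = Counter(readings).most_common(1)[0]
    return value if count > len(readings) / 2 else None


def dissenters(readings: Sequence[Hashable], agreed: Hashable) -> List[int]:
    """Indices of the components whose reading disagrees with the agreed value."""
    return [i for i, r in enumerate(readings) if r != agreed]


# Three diverse components report the same quantity; the third has been tampered with.
readings = [230, 230, 250]
agreed = majority_vote(readings)
suspects = dissenters(readings, agreed)
no_consensus = majority_vote([1, 2, 3])
```

A disagreeing component is only flagged as suspect here; deciding whether the cause is an attack, a false alarm, or a natural failure is the role of the forensics step.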

Secure System Implementation

Once an approved security architecture is in hand, the next stage of the process in Fig. 10.5 is implementation. We assume here that a set of security controls and other risk treatments has been selected and embodied in an architecture with the appropriate features and functions. These must now be realized with hardware and software components, procedures, staff training, and other elements of a cybersecurity solution. The system must then be tested to confirm that security controls function as designed, that no known vulnerabilities remain open, and that the required residual risk level has been achieved. The following paragraphs discuss implementation of these controls in the context of the enterprise in Fig. 10.6.

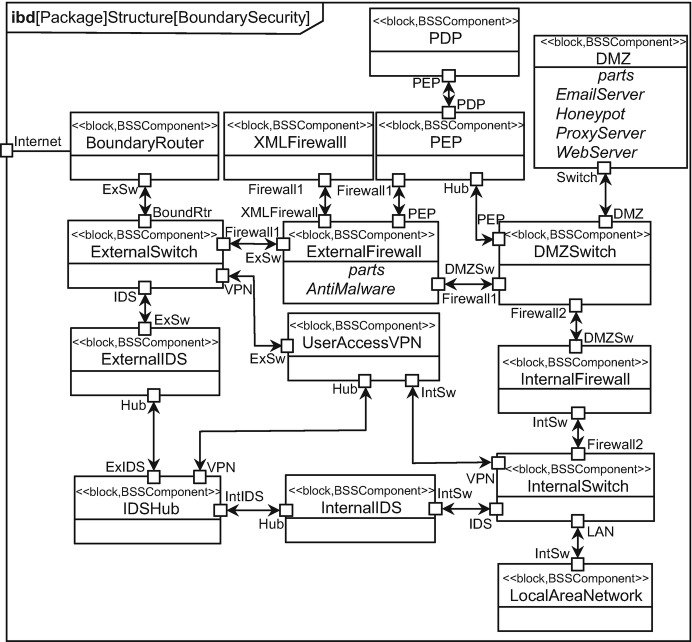

Boundary Security

A DiD design begins with protective measures at the system or enclave perimeter as described in Sect. 10.5.2. In an older security architecture, boundary security may well be the primary defense. This approach has been called “crunchy outside, chewy inside” and is now thoroughly obsolete. There is no such thing as a standard BSS design, but Fig. 10.7 shows some typical components in an Internal Block Diagram. The Blocks associated with the BSS have a corresponding stereotype. The system connects to the outside world, which might be a public network like the Internet, a private wide area network (WAN), a cloud environment provided by a third party, or some other external environment, through a Boundary Router. There may also be other channels such as encrypted point-to-point links, and Fig. 10.7 includes a Virtual Private Network (VPN) server used by authorized parties outside the enterprise. This and other networking approaches are discussed in Chap. 9. There may be an external Network Switch if the Boundary Router needs this functionality. The Boundary Router normally uses the Border Gateway Protocol to establish a session with another autonomous system on the network. It may participate in the security scheme by filtering incoming traffic, e.g., by applying Time of Day limits to exclude messages that fall outside established time parameters.

Fig. 10.7.

Typical elements of a boundary security system, forming the perimeter security layer of a DiD implementation

Perhaps the most ubiquitous security component is a firewall, which is a specialized computer, running trusted software, that applies a range of techniques to incoming and outgoing traffic to identify and block unauthorized or threatening messages.

The firewall has rule sets that can be programmed by security managers to establish the basis for allowing or preventing messages from passing, as well as an Access Control List (ACL) that identifies authorized external sites and users.

Firewalls apply a variety of filtering techniques, usually at the packet and protocol or application levels, based on user-supplied rules and parameters. A packet filtering firewall, which can be a software component running in a sophisticated boundary router, permits, rejects, and drops packets based on parameters such as IP address and port number. A proxy firewall runs proxy gateways for mail and other kinds of traffic using rules for context, authorization, and authentication. These, and other firewall types, can be combined to meet specific security requirements. A sophisticated boundary security system may have a DMZ with both external and internal firewalls, which is further described in a later paragraph. We also note that any firewall will produce a certain number of false positives (legitimate access requests that are blocked) and false negatives (malicious requests that are allowed through). System and Network Administrators may be required to “tune” the firewall rules to achieve acceptable levels of these as defined by organizational security policy. For example, it might be acceptable to have 1% of legitimate traffic denied if that’s the consequence of blocking 99.9% of malicious traffic.
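A minimal sketch of packet filtering and of the tuning trade-off just described follows. The `Rule` structure, the first-match-wins ordering, the default-deny fallback, and the sample addresses (taken from ranges reserved for documentation) are assumptions for illustration and do not represent any particular firewall product.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import Optional


@dataclass(frozen=True)
class Rule:
    action: str                 # "permit" or "deny"
    source: str                 # CIDR block, e.g. "203.0.113.0/24"
    port: Optional[int] = None  # None matches any destination port


def filter_packet(rules, src_ip: str, dst_port: int) -> str:
    """First matching rule wins; packets that match no rule are denied."""
    for rule in rules:
        in_net = ip_address(src_ip) in ip_network(rule.source)
        if in_net and rule.port in (None, dst_port):
            return rule.action
    return "deny"


def error_rates(decisions):
    """decisions: iterable of (is_malicious, action) pairs.

    Returns (share of legitimate traffic denied, share of malicious traffic permitted).
    """
    decisions = list(decisions)
    legit = [a for malicious, a in decisions if not malicious]
    bad = [a for malicious, a in decisions if malicious]
    legit_denied = legit.count("deny") / len(legit) if legit else 0.0
    bad_permitted = bad.count("permit") / len(bad) if bad else 0.0
    return legit_denied, bad_permitted


RULES = [
    Rule("deny", "203.0.113.0/24"),         # hypothetical blocked network
    Rule("permit", "0.0.0.0/0", port=443),  # HTTPS from any other source
]

blocked = filter_packet(RULES, "203.0.113.7", 443)
allowed = filter_packet(RULES, "198.51.100.4", 443)
default = filter_packet(RULES, "198.51.100.4", 23)

# Labeled sample matching the example in the text: 1 of 100 legitimate requests
# denied, 999 of 1000 malicious requests blocked.
sample = ([(False, "permit")] * 99 + [(False, "deny")]
          + [(True, "deny")] * 999 + [(True, "permit")])
legit_denied, bad_permitted = error_rates(sample)
```

Measuring both rates against labeled traffic gives administrators a concrete basis for comparing candidate rule sets with the thresholds set by organizational security policy.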

As Fig. 10.7 indicates, a firewall may be supported by a variety of specialized security controls. The External Firewall is shown as including an Anti-Malware Part and interacting with an XML Firewall that specifically scans XML traffic and protects XML-based interfaces. The Internal Firewall is shown as incorporating DLP, which is often deployed at multiple layers of a DiD structure. The dual firewalls in Fig. 10.7 are complemented by Internal and External Intrusion Detection Systems, connected by a Hub. An IDS detects unauthorized traffic by determining patterns of activity and matching them to known signatures that are identified with known vulnerabilities or attack vectors. It can apply a variety of algorithms at the packet, message, protocol, and application layers and may be set up to respond to network attacks, data-driven attacks, attempts to subvert access controls and privilege management, unauthorized log-ons, and unauthorized access requests. The IDS may also supplement malware protection software in dealing with Trojans, worms, and other attacks. A more powerful component is an Intrusion Prevention System (IPS) that actively blocks “bad” traffic. To remain effective, an IPS must receive regular updates as new threats are identified; this is analogous to keeping malware detection libraries current.
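The difference between detecting and blocking can be shown with a deliberately simple signature matcher. The `Inspector` class and the two byte patterns are hypothetical; production systems use far richer signatures and, as noted above, analysis at several protocol layers.

```python
from dataclasses import dataclass, field

# Hypothetical signature library: signature name -> byte pattern sought in a payload.
SIGNATURES = {
    "sql-injection": b"' OR 1=1",
    "path-traversal": b"../../",
}


@dataclass
class Inspector:
    prevent: bool = False  # False: detect and record only; True: also block
    signatures: dict = field(default_factory=lambda: dict(SIGNATURES))
    alerts: list = field(default_factory=list)

    def inspect(self, payload: bytes) -> bool:
        """Record any signature hits; return True if the payload may pass."""
        hits = [name for name, pat in self.signatures.items() if pat in payload]
        self.alerts.extend(hits)
        return not (hits and self.prevent)


attack = b"GET /../../etc/passwd"

ids = Inspector()                # detection only
ids_passes = ids.inspect(attack)

ips = Inspector(prevent=True)    # detection plus blocking
ips_passes = ips.inspect(attack)
clean_passes = ips.inspect(b"GET /index.html")

# A signature update makes a previously unseen pattern detectable.
ips.signatures["new-threat"] = b"EVIL"
updated_passes = ips.inspect(b"payload EVIL")
```

The final lines mirror the point about regular updates: the same payload would have passed unnoticed before the new signature was added.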

Both public and private sector systems have begun to implement the DMZ concept as a way to make information available to authorized external users while protecting core resources. A DMZ typically provides a data buffer and task queue. It resides in a separate enclave with its own address space and may have an external Domain Name System (DNS) server to process external user identities. A DMZ can be implemented with one or two firewalls, the latter being more secure. Figure 10.7 shows the DMZ, accessed through a DMZ Switch, lying between the External and Internal Firewalls and hosting functions like an Email Server, a Web Server, and one or more Proxy Servers that expose data and processes from system servers without allowing direct access to those resources. An Email Gateway is a server that accepts, forwards, delivers, and stores messages on behalf of system users. It typically also scans messages and attachments for viruses and other malicious content and can perform filtering to eliminate unwanted traffic (the notorious “spam”). A DMZ typically creates a “holding pen” for traffic to which the firewall can send suspicious incoming messages for additional processing before delivery or deletion. A further feature shown in the figure is a Honeypot that mimics actual system resources to trap malicious intruders and allow defenders to record information and to observe the details of an attempted penetration.

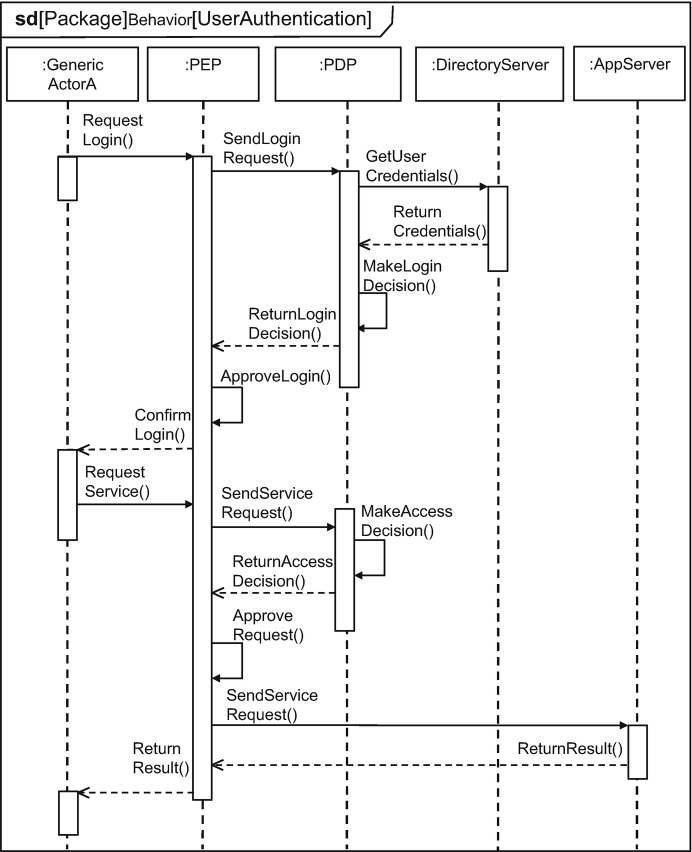

For many years, cybersecurity was based on applying rigid rules governing which users could access what information and processes. This makes it hard to accommodate the dynamic situations common in modern enterprises subject to sophisticated cyberattacks and can create a large administrative burden to fine-tune the privileges of a large and constantly changing user base. The current trend is toward policy-based security management in which rule sets take into account a variety of circumstances and make near real-time adjustments. For example, a specific individual might need access to specific data for a specific period of time, after which this privilege should be revoked. Or the access rules might need to become more stringent in response to a detected hostile penetration attempt. One implementation uses a Policy Enforcement Point (PEP), shown interacting with the External Firewall in Fig. 10.7, which is usually a dedicated computer that mediates user access requests, message traffic, and other activities on the basis of a set of policy attributes. In a typical situation, a user would request access to an application or data set, and the PEP would obtain the user’s certificate and other attributes (see Access Control below); would apply current policy, provided by a Policy Definition Point (PDP), to determine if the requested access is allowed; and, if so, would authorize the requested activity. The PDP server gives System and Security Administrators important tools to manage access control.
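The two situations used as examples above, a time-limited privilege and rules that tighten after a detected penetration attempt, can be sketched as follows. The classes `Grant`, `PolicyPoint`, and `EnforcementPoint`, the numeric threat level, and the sample user and resource are assumptions for illustration; certificate handling and attribute retrieval are omitted entirely.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass(frozen=True)
class Grant:
    user: str
    resource: str
    expires: datetime      # the privilege lapses at this time
    max_threat_level: int  # the grant is suspended above this alert level


class PolicyPoint:
    """Stand-in for the PDP: holds current policy and the current alert level."""

    def __init__(self):
        self.grants = []
        self.threat_level = 0

    def permits(self, user: str, resource: str, now: datetime) -> bool:
        return any(
            g.user == user and g.resource == resource
            and now < g.expires and self.threat_level <= g.max_threat_level
            for g in self.grants
        )


class EnforcementPoint:
    """Stand-in for the PEP: mediates each request against current policy."""

    def __init__(self, policy: PolicyPoint):
        self.policy = policy

    def request(self, user: str, resource: str, now: Optional[datetime] = None) -> bool:
        now = now or datetime.now()
        return self.policy.permits(user, resource, now)


t0 = datetime(2024, 1, 1, 9, 0)
pdp = PolicyPoint()
pdp.grants.append(Grant("bob", "payroll-db", t0 + timedelta(hours=8), max_threat_level=1))
pep = EnforcementPoint(pdp)

in_window = pep.request("bob", "payroll-db", now=t0 + timedelta(hours=1))
expired = pep.request("bob", "payroll-db", now=t0 + timedelta(hours=9))
pdp.threat_level = 2  # a penetration attempt has been detected
under_attack = pep.request("bob", "payroll-db", now=t0 + timedelta(hours=1))
```

Keeping the policy in one place means an administrator changes a grant or the alert level once, and every subsequent request mediated by the enforcement point reflects the change.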

On the inner edge of the BSS, an Internal Switch connects traffic that passes the Internal Firewall, along with VPN traffic and data from the IDS, to the system LAN. This is the next DiD level, with security controls like those listed in Table 10.2. The other layers have obvious mappings to endpoint devices connected to the network, to application software running on servers in the enclaves, and to data, wherever it exists and whether it is in use, in motion, or at rest. Space prevents a description of these layers as detailed as that just given for the Boundary or Perimeter Security Layer. However, Appendix F gives more detail about the candidate controls at each level that are summarized in the table. Because many cyberattacks seek to exploit weaknesses in Web-facing software, an important example is the use of a Web Application Firewall (WAF) using Deep Packet Inspection (DPI) either as part of the overall system BSS or in conjunction with the access point to an enclave.

Trusted Computing Platform (TCP)