Abstract

Groll and Thomson's evaluation of the effectiveness of Ontario's Universal Influenza Immunization Campaign used per capita cases of laboratory-confirmed influenza. We argue that these data are susceptible to various biases and should not be used as an outcome measure. Laboratory data are traditionally used to identify the presence of influenza activity rather than to identify levels of influenza activity. A better measure of viral activity is the proportion of influenza tests positive; whereas the weekly proportion of tests positive was relatively consistent, a marked increase over time in the numbers of laboratory-confirmed cases paralleled an increase in the number of tests performed. Regardless, for evaluating universal influenza immunization program effectiveness, other established and available measures employed in previous studies describing the epidemiology of influenza should be used instead of laboratory data.

Keywords: Influenza, Universal immunization, Public health

In their evaluation of Ontario's Universal Influenza Immunization Campaign, Groll and Thomson state that there is a lack of high-quality influenza outcome data in Ontario; instead, they examined the effectiveness of the program using per capita cases of laboratory-confirmed influenza [1]. Public health agencies traditionally use these laboratory data to identify the presence of influenza activity (based on exceeding thresholds for case counts or for the proportion of tests positive) and to characterize circulating strains, but there are good reasons why they are not used to identify levels of influenza activity.

The most important reason that per capita case counts are a suboptimal outcome measure for evaluating the effect of the immunization program is that they are susceptible to ascertainment bias [2]. Heightened awareness of influenza and other respiratory infections has led to increased requests for testing. Given the increased attention in recent years to both interpandemic and pandemic influenza, as well as to emerging diseases such as severe acute respiratory syndrome (SARS), it is not surprising that physicians may be ordering more influenza tests in their diagnostic work-up of patients with acute respiratory illness, and public health professionals more tests in their investigations of respiratory outbreaks.

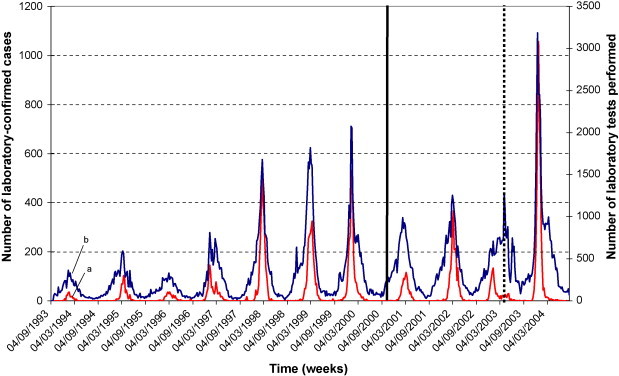

Evidence for increased testing over time is illustrated in Fig. 1. This figure plots weekly surveillance data (obtained from the same source as Groll and Thomson's) for influenza A and B in Ontario from 1993 to 2004, and compares the number of tests performed for influenza (by viral culture or direct antigen detection) with the number of laboratory-confirmed cases. Annual peaks corresponding to the influenza season are apparent in both the number of tests performed and the number of cases. It is also fairly evident that the number of tests performed for influenza has increased over time, with a sudden increase coinciding with, and persisting since, the SARS outbreak that occurred in Ontario in the spring of 2003 [3], [4]. The increase in the number of laboratory-confirmed cases over time parallels the increase in the number of tests performed.

Fig. 1.

Comparison of weekly number of laboratory-confirmed cases of influenza A and B (a) with number of tests for influenza performed (b) for Ontario, 1993–2004. Introduction of Ontario's Universal Influenza Immunization Program (UIIP) in October 2000 is indicated by the solid vertical line and the outbreak of severe acute respiratory syndrome (SARS) in the spring of 2003 is indicated by the dashed vertical line.

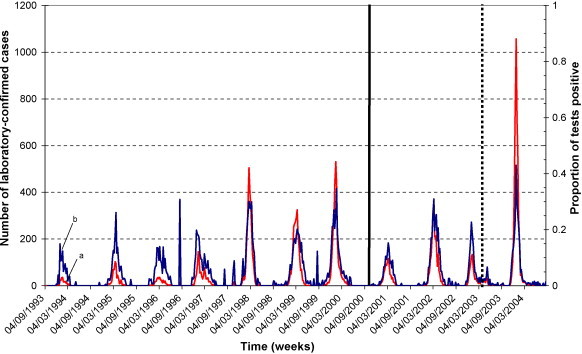

A better measure of viral activity is the proportion of influenza tests positive (the number of laboratory-confirmed cases divided by the number of tests performed). This is illustrated in Fig. 2, which compares the number of laboratory-confirmed cases with the proportion of influenza tests that were positive. Again, annual peaks corresponding to the influenza season are evident for both the number of cases and the proportion of tests positive. However, the peaks in the proportion of tests positive are fairly consistent over time, ranging between 0.15 and 0.45, whereas the peaks in the number of cases increase dramatically, from fewer than 50 to over 1000 cases per week. The relative stability of the proportion of tests positive, coupled with the increase in laboratory-confirmed cases, suggests that the increase in cases is attributable to more tests being performed.

Fig. 2.

Comparison of weekly number of laboratory-confirmed cases of influenza A and B (a) with proportion of tests positive (b) for Ontario, 1993–2004. Introduction of the UIIP is indicated by the solid vertical line and the SARS outbreak by the dashed vertical line.
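To make the definition of proportion positive concrete, the following minimal Python sketch computes it for two hypothetical peak weeks. The `WeeklyLabReport` type, the `proportion_positive` function and all of the counts are illustrative assumptions, not the surveillance data behind Fig. 2; they simply show how confirmed case counts can rise tenfold with testing volume while the proportion of tests positive stays fixed.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class WeeklyLabReport:
    """One week of laboratory surveillance counts (hypothetical structure)."""
    tests_performed: int
    confirmed_cases: int


def proportion_positive(report: WeeklyLabReport) -> Optional[float]:
    """Laboratory-confirmed cases divided by tests performed.

    Returns None when no tests were performed, because the proportion is undefined.
    """
    if report.tests_performed == 0:
        return None
    return report.confirmed_cases / report.tests_performed


# Hypothetical peak weeks, NOT the Ontario surveillance data: testing volume
# grows tenfold while the share of tests that are positive stays the same.
early_peak = WeeklyLabReport(tests_performed=150, confirmed_cases=45)
late_peak = WeeklyLabReport(tests_performed=1500, confirmed_cases=450)

for label, week in [("early", early_peak), ("late", late_peak)]:
    share = proportion_positive(week)
    print(f"{label}: cases={week.confirmed_cases}, proportion positive={share:.2f}")
# early: cases=45, proportion positive=0.30
# late: cases=450, proportion positive=0.30
```

Returning `None` for weeks with no tests, rather than 0, keeps an undefined proportion from being mistaken for a genuine absence of influenza.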

On an unrelated note, there is an error in Fig. 1 of Groll and Thomson's paper: the y-axis label should read cases per 1,000,000 population, not per 100,000 population. For December 2003, 2394 cases of influenza were reported in a population of 12,256,645 (as of 1 July 2003). This corresponds to a monthly incidence of 195 cases per 1,000,000 population, which accurately reflects the data in the graph. That only about 2 cases of influenza per 10,000 people are identified during months of influenza activity, when actual rates of disease approach 5 per 100 people, further raises concern that any laboratory-derived measure of influenza activity is vulnerable to ascertainment and sampling biases.
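The incidence arithmetic in the preceding paragraph can be checked with a short Python sketch. The helper name `incidence_per` is hypothetical; the only inputs are the December 2003 case count and the 1 July 2003 population quoted in the text, plus the approximate figure of 5 per 100 for actual disease rates, which makes the final ratio a rough indication only.

```python
def incidence_per(cases: int, population: int, per: int) -> float:
    """Cases per `per` people, e.g. per=1_000_000 for a rate per million."""
    return cases / population * per


# The two figures quoted in the text.
DEC_2003_CASES = 2394
ONTARIO_POPULATION_JUL_2003 = 12_256_645

per_million = incidence_per(DEC_2003_CASES, ONTARIO_POPULATION_JUL_2003, 1_000_000)
per_100k = incidence_per(DEC_2003_CASES, ONTARIO_POPULATION_JUL_2003, 100_000)
per_10k = incidence_per(DEC_2003_CASES, ONTARIO_POPULATION_JUL_2003, 10_000)

# Actual rates "approach 5 per 100" (approximate), expressed per million.
APPROX_TRUE_RATE_PER_MILLION = 5 / 100 * 1_000_000
undercount_ratio = APPROX_TRUE_RATE_PER_MILLION / per_million

print(f"per 1,000,000: {per_million:.0f}")  # 195
print(f"per 100,000: {per_100k:.1f}")  # 19.5
print(f"per 10,000: {per_10k:.1f}")  # 2.0
print(f"approx. true/confirmed ratio: {undercount_ratio:.0f}")  # 256
```

A rate of about 19.5 per 100,000 is what the graph would have to show if its axis label were correct; plotted values of roughly 195 are consistent only with a rate per 1,000,000.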

Groll and Thomson did examine potential ascertainment bias, but given the level of concern and its importance to the study findings, they did not go far enough. They should have disclosed more information about laboratory testing and performed additional analyses to examine this bias. Better still, they should have used other established and available measures employed in previous studies describing the epidemiology of influenza, such as hospitalizations, mortality, emergency department use and ambulatory physician visits.

References

1. Groll D.L., Thomson D.J. Incidence of influenza in Ontario following the Universal Influenza Immunization Campaign. Vaccine. 2006;24(24):5245–5250. doi: 10.1016/j.vaccine.2006.03.067.

2. Last J.M. A dictionary of epidemiology. 4th ed. New York: Oxford University Press; 2001.

3. Poutanen S.M., Low D.E., Henry B. Identification of severe acute respiratory syndrome in Canada. N Engl J Med. 2003;348(20):1995–2005. doi: 10.1056/NEJMoa030634.

4. Svoboda T., Henry B., Shulman L. Public health measures to control the spread of the severe acute respiratory syndrome during the outbreak in Toronto. N Engl J Med. 2004;350(23):2352–2361. doi: 10.1056/NEJMoa032111.