Significance

An outstanding goal in cognitive neuroscience is to understand the relationship between neurophysiological processes and conscious experiences. More or less implicitly, it is assumed that common supramodal processes may underlie conscious perception in different sensory modalities. We tested this idea directly using decoding analysis on brain activity following near-threshold stimulation, investigating common neural correlates of conscious perception between different sensory modalities. Our results across all tested sensory modalities revealed the specific dynamics of a supramodal brain network that, interestingly, includes task-unrelated primary sensory cortices. Our findings provide direct evidence of a common distributed activation pattern related to conscious access in different sensory modalities.

Keywords: consciousness, perception, near-threshold stimulation, multivariate decoding analysis, magnetoencephalography

Abstract

An increasing number of studies highlight common brain regions and processes involved in mediating conscious sensory experience. While most studies have been performed in the visual modality, it is implicitly assumed that similar processes are involved in other sensory modalities. However, the existence of supramodal neural processes related to conscious perception has not been convincingly shown so far. Here, we aim to directly address this issue by investigating whether neural correlates of conscious perception in one modality can predict conscious perception in a different modality. In two separate experiments, we presented participants with successive blocks of near-threshold tasks involving subjective reports of tactile, visual, or auditory stimuli during the same magnetoencephalography (MEG) acquisition. Using decoding analysis between sensory modalities in the poststimulus period, our first experiment uncovered supramodal spatiotemporal neural activity patterns predicting conscious perception of the weak stimulation. Strikingly, these supramodal patterns included activity in primary sensory regions not directly relevant to the task (e.g., neural activity in visual cortex predicting conscious perception of auditory near-threshold stimulation). We carefully replicate our results in a control experiment that furthermore shows that the relevant patterns are independent of the type of report (i.e., whether conscious perception was reported by pressing or withholding a button press). Using standard paradigms for probing neural correlates of conscious perception, our findings reveal a common signature of conscious access across sensory modalities and illustrate the temporally late and widespread broadcasting of neural representations, even into task-unrelated primary sensory processing regions.

While the brain can process an enormous amount of sensory information in parallel, only some information can be consciously accessed, playing an important role in the way we perceive and act in our surrounding environment. An outstanding goal in cognitive neuroscience is thus to understand the relationship between neurophysiological processes and conscious experiences. However, despite tremendous research efforts, the precise brain dynamics that enable certain sensory information to be consciously accessed remain unresolved. Nevertheless, progress has been made in research focusing on isolating neural correlates of conscious perception (1), in particular suggesting that conscious perception of external stimuli, at least if operationalized as reportability (2), crucially depends on the engagement of a widely distributed brain network (3). To study neural processes underlying conscious perception, neuroscientists often expose participants to near-threshold (NT) stimuli that are matched to their individual perceptual thresholds (4). In NT experiments, perception varies from trial to trial such that around 50% of the stimuli presented at NT intensity are consciously perceived. Because the intensity is fixed, physical differences between stimuli within the same modality can be excluded as a determining factor leading to reportable sensation (5). Although numerous methods have been used to investigate conscious perception of external events, most studies target a single sensory modality. However, any specific neural pattern identified as a correlate of consciousness requires evidence that it generalizes to some extent, e.g., across sensory modalities. We argue that this has not been convincingly shown so far.

In the visual domain, it has been shown that reportable conscious experience is present when primary visual cortical activity extends toward hierarchically downstream brain areas (6), requiring the activation of frontoparietal regions to become fully reportable (7). Nevertheless, a recent magnetoencephalography (MEG) study using a visual masking task revealed early activity in primary visual cortices as the best predictor of conscious perception (8). Other studies have shown that neural correlates of auditory consciousness relate to the activation of fronto-temporal rather than fronto-parietal networks (9, 10). Additionally, recurrent processing among primary somatosensory, secondary somatosensory, and premotor cortices has been suggested as a potential neural signature of tactile conscious perception (11, 12). Indeed, recurrent processing between higher- and lower-order cortical regions within a specific sensory system is theorized to be a marker of conscious processing (6, 13, 14). Alternative theories, such as the global workspace framework (15) extended by Dehaene et al. (16), postulate that frontoparietal engagement aids in “broadcasting” relevant information throughout the brain, making it available to various cognitive modules. Various electrophysiological experiments have shown that this process is relatively late (∼300 ms) and could be related to increased evoked brain activity after stimulus onset, such as the so-called P300 signal (17–19). Such late brain activity seems to correlate with perceptual consciousness and could reflect the global broadcasting of an integrated stimulus representation that makes it conscious. Taken together, theories and experimental findings argue in favor of various “signatures” of consciousness, from recurrent activity within sensory regions to global broadcasting of information with engagement of fronto-parietal areas. Even though this is usually implicitly assumed, it remains unclear whether similar spatiotemporal neural activity patterns are linked to conscious access across different sensory modalities.

In the current study, we investigated conscious perception in different sensory systems using multivariate analysis of MEG data. Our working assumption is that brain activity related to conscious access has to be independent of the sensory modality; i.e., supramodal consciousness-related neural processes need to exhibit spatiotemporal generalization. Such a hypothesis is ideally tested by applying decoding methods to electrophysiological signals recorded while probing conscious access in different sensory modalities. The application of multivariate pattern analysis (MVPA) to electroencephalography (EEG) and MEG measurements offers increased sensitivity in detecting experimental effects distributed over space and time (20–23). MVPA is often used in combination with a searchlight method (24, 25), which involves sliding a small spatial window over the data to reveal areas containing decodable information. The combination of both methods provides spatiotemporal detection of optimal decodability, determining where, when, and for how long a specific pattern is present in brain activity. Such multivariate decoding analyses have been proposed in consciousness research as a complement to conventional univariate approaches for identifying neural activity predictive of conscious experience at the single-trial level (26).
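To make the searchlight idea concrete, here is a minimal sketch in Python/NumPy. It is not the pipeline used in this study: the nearest-class-mean classifier, the spherical neighborhood defined by a Euclidean `radius`, the even/odd train/test split, and the toy source positions and data are all illustrative assumptions. Each source receives the accuracy obtained when decoding only from the sources inside its neighborhood.

```python
import numpy as np

def neighbors_within(positions, radius):
    """Indices of all sources within `radius` of each source (itself included)."""
    dist = np.linalg.norm(positions[:, None, :] - positions[None, :, :], axis=-1)
    return [np.flatnonzero(row <= radius) for row in dist]

def centroid_accuracy(X_tr, y_tr, X_te, y_te):
    """Nearest-class-mean classifier: a simple stand-in, not the study's classifier."""
    mu0, mu1 = X_tr[y_tr == 0].mean(axis=0), X_tr[y_tr == 1].mean(axis=0)
    pred = np.linalg.norm(X_te - mu1, axis=1) < np.linalg.norm(X_te - mu0, axis=1)
    return float(np.mean(pred.astype(int) == y_te))

def searchlight(X, y, positions, radius, train_idx, test_idx):
    """X: trials x sources at one time point. Returns one accuracy per source,
    decoding only from that source's spatial neighborhood."""
    acc = np.empty(X.shape[1])
    for s, nb in enumerate(neighbors_within(positions, radius)):
        acc[s] = centroid_accuracy(X[np.ix_(train_idx, nb)], y[train_idx],
                                   X[np.ix_(test_idx, nb)], y[test_idx])
    return acc

# Toy data: 30 sources on a line; only sources 0-4 carry a "detected" effect.
rng = np.random.default_rng(0)
positions = np.column_stack([np.arange(30.0), np.zeros(30), np.zeros(30)])
y = np.repeat([0, 1], 100)              # 0 = undetected, 1 = detected
X = rng.normal(size=(200, 30))
X[100:, :5] += 1.0                      # effect restricted to the first five sources
idx = np.arange(200)
acc = searchlight(X, y, positions, radius=1.5, train_idx=idx[::2], test_idx=idx[1::2])
```

In the toy data, sources whose neighborhood lies inside the informative block decode well above chance, while distant sources stay near 50%.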

Here, we acquired MEG data while each participant performed three standard NT tasks in three sensory modalities, with the aim of characterizing supramodal brain mechanisms of conscious perception. In the first experiment, we show how neural patterns related to perceptual consciousness can be generalized over space and time within and, most importantly, between different sensory systems by using classification analysis on source-level reconstructed brain activity. In an additional control experiment, we replicate the main findings and exclude the possibility that our observed patterns are due to response preparation/selection, despite the need to report stimulus detection on every trial in our experiments.

Results

Behavior.

We investigated participants’ detection rates for NT, sham (absent stimulation), and catch (stimulation intensity above perceptual threshold) trials separately for the initial and the control experiment. Catch and sham trials were used to control for false alarm and correct rejection rates across the experiment.

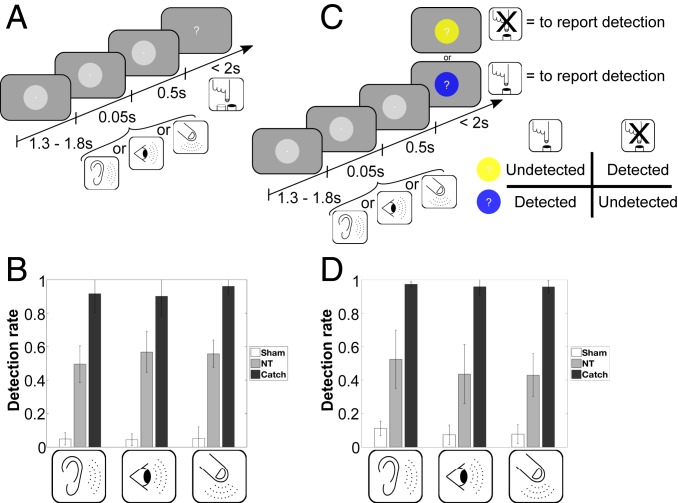

During the initial experiment, participants had to wait for a response screen and press a button on each trial to report their perception (Fig. 1A). During the control experiment, however, a specific response screen was used to control for motor response mapping. On each trial, participants had to apply the response mapping indicated by the color of the circle surrounding the question mark on the response screen (Fig. 1C).

Fig. 1.

Experimental designs and behavioral results. (A and B) Initial experiment. (C and D) Control experiment. (A) After a variable intertrial interval between 1.3 and 1.8 s, during which participants fixated on a central white dot, a tactile/auditory/visual stimulus (depending on the run) was presented for 50 ms at individual perceptual threshold intensity. Stimulus presentation was followed after 500 ms by an on-screen question mark, and participants indicated their perception by pressing one of two buttons (i.e., stimulation was “present” or “absent”) with their right hand. (B and D) The group average detection rates for NT stimulation were around 50% across the different sensory modalities. Sham trials in white (no stimulation) and catch trials in black (high-intensity stimulation) were significantly different from the NT condition in gray within the same sensory modality for both experiments. Error bars depict the SD. (C) Identical timing parameters were used in the control experiment; however, a specific response screen design was used to control for motor response mapping. On each trial, participants had to apply the response mapping indicated by the color of the circle surrounding the question mark on the response screen. Two colors (blue or yellow) were used and presented in random order during the control experiment. One color was associated with the response mapping rule “press the button only if there is a stimulation” (i.e., a button press reports a detected near-threshold stimulus), and the other color was associated with the opposite response mapping, “press a button only if there is no stimulation” (i.e., a button press reports an undetected near-threshold stimulus). The association between each response mapping and a specific color (blue or yellow) was fixed within a participant but randomly assigned across participants.

For the initial experiment and across all participants (n = 16), detection rates for NT experimental trials were 50% (SD: 11%) for auditory runs, 56% (SD: 12%) for visual runs, and 55% (SD: 8%) for tactile runs. The detection rates for the catch trials were 92% (SD: 11%) for auditory runs, 90% (SD: 12%) for visual runs, and 96% (SD: 5%) for tactile runs. The mean false alarm rates in sham trials were 4% (SD: 4%) for auditory runs, 4% (SD: 4%) for visual runs, and 4% (SD: 7%) for tactile runs (Fig. 1B). Detection rates of NT experimental trials in all sensory modalities significantly differed from those of catch trials (auditory, T15 = −14.44, P < 0.001; visual, T15 = −9.47, P < 0.001; tactile, T15 = −20.16, P < 0.001) or sham trials (auditory, T15 = 14.66, P < 0.001; visual, T15 = 16.99, P < 0.001; tactile, T15 = 20.66, P < 0.001).
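The reported statistics (T15 for n = 16 here, T13 for n = 14 in the control experiment) have n − 1 degrees of freedom, which is consistent with paired comparisons across participants. Assuming a paired-samples t-test on per-participant detection rates (the exact test is not specified in this section), the computation can be sketched as follows. The helper names and the rates below are hypothetical and are not the study's data.

```python
import numpy as np

def detection_rate(reports):
    """Fraction of trials reported as 'present' (1) among trials of one type."""
    return float(np.mean(np.asarray(reports, float)))

def paired_t(a, b):
    """Paired-samples t statistic and degrees of freedom (n - 1) for a vs. b."""
    d = np.asarray(a, float) - np.asarray(b, float)
    t = d.mean() / (d.std(ddof=1) / np.sqrt(d.size))
    return float(t), d.size - 1

# Hypothetical per-participant detection rates (4 participants, illustration only).
nt_rates = [0.50, 0.60, 0.40, 0.55]
catch_rates = [0.90, 0.95, 0.90, 1.00]
t, df = paired_t(nt_rates, catch_rates)   # negative t: NT detected less often than catch
```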

Similar results were observed for the control experiment across all participants (n = 14). Detection rates for NT experimental trials were 52% (SD: 17%) for auditory runs, 43% (SD: 17%) for visual runs, and 42% (SD: 12%) for tactile runs. The detection rates for the catch trials were 97% (SD: 2%) for auditory runs, 95% (SD: 5%) for visual runs, and 95% (SD: 4%) for tactile runs. The mean false alarm rates in sham trials were 11% (SD: 4%) for auditory runs, 7% (SD: 6%) for visual runs, and 7% (SD: 6%) for tactile runs (Fig. 1D). Detection rates of NT experimental trials in all sensory modalities significantly differed from those of catch trials (auditory, T13 = −9.64, P < 0.001; visual, T13 = −10.78, P < 0.001; tactile, T13 = −14.75, P < 0.001) or sham trials (auditory, T13 = 7.85, P < 0.001; visual, T13 = 6.24, P < 0.001; tactile, T13 = 9.75, P < 0.001). Overall, the behavioral results are comparable to those of other studies (27, 28). Individual reaction times and performance are reported in SI Appendix, Table S2.

Event-Related Neural Activity.

To compare poststimulus processing for “detected” and “undetected” trials, evoked responses were calculated at the source level for the initial experiment. As a general pattern over all sensory modalities, source-level event-related fields (ERFs) averaged across all brain sources show that stimuli reported as detected resulted in pronounced poststimulus neuronal activity, whereas unreported stimuli did not (Fig. 2A). Similar general patterns were observed for the control experiment with identical univariate analysis (SI Appendix, Fig. S2). ERFs differed significantly over the averaged time course, with time windows that depended on the sensory modality targeted by the stimulation. Auditory stimuli reported as detected elicited significant differences compared to undetected trials, first between 190 and 210 ms, then between 250 and 425 ms, and finally between 460 and 500 ms after stimulus onset (Fig. 2 A, Left). Visual stimuli reported as detected elicited a large increase in ERF amplitude compared to undetected trials from 230 to 250 ms and from 310 to 500 ms after stimulus onset (Fig. 2 A, Center). Tactile stimuli reported as detected elicited an early increase in ERF amplitude between 95 and 150 ms and then a later activation between 190 and 425 ms after stimulus onset (Fig. 2 A, Right). In our protocol, such an early ERF difference for tactile NT trials could be due to the experimental setup: auditory and visual target stimuli emerged from background stimulation (constant white noise and screen display), whereas tactile stimuli remained isolated transient sensory targets (Materials and Methods).
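Turning per-time-point statistics into significant windows like those reported above can be sketched as follows, assuming one p-value per time point for the detected-versus-undetected contrast and Bonferroni correction across time points. The caption of Fig. 2 reports Bonferroni-corrected windows, but the underlying test is not detailed in this section; the function name and the p-values are hypothetical toy values, not study data.

```python
import numpy as np

def significant_windows(times_ms, pvals, alpha=0.05):
    """Bonferroni-correct one p-value per time point and return the contiguous
    runs of significant time points as (first_ms, last_ms) tuples."""
    times_ms, pvals = np.asarray(times_ms), np.asarray(pvals, float)
    sig = pvals * pvals.size < alpha        # Bonferroni: multiply by number of tests
    windows, start = [], None
    for i, is_sig in enumerate(sig):
        if is_sig and start is None:
            start = i                       # a significant run begins
        elif not is_sig and start is not None:
            windows.append((times_ms[start], times_ms[i - 1]))
            start = None                    # the run ended at the previous sample
    if start is not None:                   # run still open at the last sample
        windows.append((times_ms[start], times_ms[-1]))
    return windows

# Toy p-values (not study data): 5 ms sampling from 0 to 500 ms.
times = np.arange(0, 505, 5)
p = np.ones(times.size)
p[20:31] = 1e-5      # 100-150 ms survives correction
p[60:81] = 1e-5      # 300-400 ms survives correction
p[10] = 0.01         # nominally significant, but not after Bonferroni
windows = significant_windows(times, p)   # [(100, 150), (300, 400)]
```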

Fig. 2.

Event-related responses to NT trials for different sensory modalities: auditory (Left), visual (Center), and tactile (Right). (A) Source-level absolute value (baseline corrected for visualization purposes) of the group event-related average (solid line) and SEM (shaded area) in the detected (red) and the undetected (blue) condition for all brain sources. Significant time windows are marked with solid lines at the bottom (black line: PBonferroni-corrected < 0.05) for the contrast of detected vs. undetected trials. The corresponding source localization maps for the averaged time periods are shown in B. (B) Source reconstruction of the significant time periods marked in A for the contrast of detected vs. undetected trials, masked at Pcluster-corrected < 0.05.

Source localization of these specific time periods of interest was performed for each modality (Fig. 2B). The auditory condition shows significant early source activity mainly localized to bilateral auditory cortices, superior temporal sulcus, and right inferior frontal gyrus, whereas the late significant component was mainly localized to right temporal gyrus, bilateral precentral gyrus, and left inferior and middle frontal gyrus. For the visual condition, a large activation can be observed, including primary visual areas, fusiform gyrus and calcarine sulcus, and a large fronto-parietal network including bilateral inferior frontal gyrus, inferior parietal sulcus, and cingulate cortex. The early contrast of tactile evoked responses shows a large difference in brain activation, including primary and secondary somatosensory areas but also a large involvement of right frontal activity. The late contrast of tactile evoked responses shows brain activation including left frontal gyrus, left inferior parietal gyrus, bilateral temporal gyrus, and supplementary motor area.

Differences between detected and undetected stimuli emerge most clearly after 150 to 200 ms (see topographies in SI Appendix, Fig. S5). This differs from the comparison of catch versus sham trials, where early differences (i.e., prior to 150 ms) can be observed (SI Appendix, Figs. S1 and S5). The difference in latency between tactile stimulation processing and the other modalities is less pronounced for this comparison, where the target is clearly present or absent. At the sensor level, for all sensory modalities the differences start relatively focal (SI Appendix, Fig. S5) over putatively sensory processing regions and become increasingly widespread with time. These later evoked response effects are descriptively similar to those observed for the near-threshold stimuli, underlining their putative relevance for enabling conscious access.

Decoding and Multivariate Searchlight Analysis across Time and Brain Regions.

We investigated the generalization of brain activation over time within and between the different sensory modalities. To this end, we performed a multivariate analysis of reconstructed source-level brain activity from the initial experiment. Time-generalization analysis, presented as a time-by-time matrix between 0 and 500 ms after stimulus onset, shows significant decoding accuracy for each condition (Fig. 3A). We trained and tested our classifiers on 50% of the dataset, with the same proportion of trials coming from each separate run of the acquisition, to compensate for potential fatigue or habituation effects over the course of the experiments. The black cells on the diagonal of Fig. 3A show cross-validated decoding within the same sensory modality, whereas the off-diagonal red cells show decoding between different sensory modalities. Within each cell, data along the diagonal (dotted line) show the average classifier accuracy when the same time point is used for training and testing, whereas off-diagonal data reveal the classifier's ability to generalize when training and testing time points differ. Indeed, we observed that a classifier trained on a specific time point generalized its decoding performance over several time points (see off-diagonal significant decoding inside each cell in Fig. 3A). To quantify this result, we computed the average duration of significant decoding on testing time points for each training time point (Fig. 3B). On average, for decoding within the same modality, classifier generalization started after 200 ms, and maximum classification accuracy was observed after 400 ms (Fig. 3 B, Top).
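Temporal generalization, together with a run-balanced half split, can be sketched with plain NumPy as below. The nearest-class-mean classifier, the helper names, and the toy data are illustrative assumptions; the classifier and exact cross-validation scheme used in the study are not specified in this section. Entry `[i, j]` of the output is the accuracy of a classifier trained at time `i` and tested at time `j`, so the matrix diagonal corresponds to the dotted line in Fig. 3A.

```python
import numpy as np

def split_half_by_run(runs, rng):
    """Put a random half of each run's trials in the training set and the rest in
    the test set, so both sets sample every run in the same proportion."""
    runs = np.asarray(runs)
    train = []
    for r in np.unique(runs):
        idx = rng.permutation(np.flatnonzero(runs == r))
        train.extend(idx[: idx.size // 2].tolist())
    train = np.sort(np.array(train))
    return train, np.setdiff1d(np.arange(runs.size), train)

def time_generalization(X_tr, y_tr, X_te, y_te):
    """X_*: trials x sources x time points. Entry [i, j] is the accuracy of a
    nearest-class-mean classifier trained at time i and tested at time j."""
    n_times = X_tr.shape[2]
    acc = np.empty((n_times, n_times))
    for i in range(n_times):
        mu0 = X_tr[y_tr == 0, :, i].mean(axis=0)
        mu1 = X_tr[y_tr == 1, :, i].mean(axis=0)
        for j in range(n_times):
            Xj = X_te[:, :, j]
            pred = np.linalg.norm(Xj - mu1, axis=1) < np.linalg.norm(Xj - mu0, axis=1)
            acc[i, j] = np.mean(pred.astype(int) == y_te)
    return acc

# Toy data: 3 runs x 40 trials, 20 sources, 10 time points; a sustained
# "detected" pattern in sources 0-4 from time point 6 onward.
rng = np.random.default_rng(1)
runs = np.repeat([0, 1, 2], 40)
y = np.tile([0, 1], 60)
X = rng.normal(size=(120, 20, 10))
X[y == 1, :5, 6:] += 1.5
train, test = split_half_by_run(runs, rng)
acc = time_generalization(X[train], y[train], X[test], y[test])
```

Because the toy pattern is stable from time point 6 onward, the lower-right block of `acc` is high both on and off the diagonal, which is the signature of temporal generalization; early cells stay near chance.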

Fig. 3.

Time-by-time generalization analysis within and between sensory modalities (for NT trials). The 3 × 3 matrices of decoding results are represented over time (from stimulation onset to 500 ms after). (A) Each cell presents the result of the searchlight MVPA with time-by-time generalization analysis where classifier accuracy was significantly above chance level (50%) (masked at Pcorrected < 0.005). For each temporal generalization matrix, a classifier was trained at a specific time sample (vertical axis: training time) and tested on all time samples (horizontal axis: testing time). The black dotted line corresponds to the diagonal of the temporal generalization matrix, i.e., a classifier trained and tested on the same time sample. This procedure was applied to each combination of sensory modalities; e.g., the Top row shows decoding by classifiers trained on the auditory modality and tested on auditory, visual, and tactile data (Left, Center, and Right columns, respectively) for the two classes: detected and undetected trials. The cells outlined with black axes (on the diagonal) correspond to within-modality decoding, whereas the cells outlined with red axes correspond to between-modality decoding. (B) Summary of average time generalization and decoding performance over time for all within-modality analyses (Top: average based on the three black cells of A) and between-modality analyses (Bottom: average based on the six red cells of A). For each training time point on the x axis, the average duration of the classifier’s ability to significantly generalize on testing time points was computed and reported on the y axis. Additionally, normalized average significant classifier accuracies over all testing times for a given training time point are represented as a color-scale gradient.
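The 3 × 3 within/between layout can be illustrated at a single time point with the sketch below. The helper names are hypothetical, the nearest-class-mean classifier is a stand-in, and the diagonal uses a simple even/odd split rather than the run-stratified split described in the text. Diagonal cells use held-out trials of the same modality; off-diagonal cells apply a classifier trained on all trials of the row modality to all trials of the column modality. The toy data contain a shared class-dependent pattern plus class-independent, modality-specific offsets, so cross-modal decoding can succeed only through the shared component.

```python
import numpy as np

def fit(X, y):
    """Class means for a nearest-class-mean classifier (a stand-in classifier)."""
    return X[y == 0].mean(axis=0), X[y == 1].mean(axis=0)

def score(means, X, y):
    """Accuracy of assigning each trial to the closer class mean."""
    mu0, mu1 = means
    pred = np.linalg.norm(X - mu1, axis=1) < np.linalg.norm(X - mu0, axis=1)
    return float(np.mean(pred.astype(int) == y))

def cross_modal_matrix(data):
    """data: {modality: (X, y)} at one time point, X = trials x sources.
    Diagonal: train on even trials, test on odd trials of the same modality.
    Off-diagonal: train on the row modality, test on the column modality."""
    mods = list(data)
    M = np.empty((len(mods), len(mods)))
    for i, a in enumerate(mods):
        Xa, ya = data[a]
        for j, b in enumerate(mods):
            if a == b:
                M[i, j] = score(fit(Xa[0::2], ya[0::2]), Xa[1::2], ya[1::2])
            else:
                Xb, yb = data[b]
                M[i, j] = score(fit(Xa, ya), Xb, yb)
    return mods, M

# Toy data: sources 0-4 carry a shared "detected" pattern in every modality;
# each modality also has its own class-independent offset on a separate block.
rng = np.random.default_rng(2)
data = {}
for k, name in enumerate(["auditory", "visual", "tactile"]):
    y = np.repeat([0, 1], 60)
    X = rng.normal(size=(120, 20))
    X[:, 5 + 5 * k: 10 + 5 * k] += 1.0   # modality-specific offset (all trials)
    X[y == 1, :5] += 1.5                 # shared supramodal pattern
    data[name] = (X, y)
mods, M = cross_modal_matrix(data)
```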

Early differences specific to the tactile modality were captured by the classification analysis, which showed significant decoding accuracy already after 100 ms without strong time generalization for this sensory modality, whereas the auditory and visual conditions show significant decoding starting only around 250 to 300 ms after stimulus onset. Such early dynamics specific to the tactile modality could explain the off-diagonal accuracy in all between-modality decoding analyses involving the tactile modality (Fig. 3A). Interestingly, time-generalization analysis of between-modality decoding (red cells in Fig. 3A) revealed significant maximal generalization at around 400 ms (Fig. 3 B, Bottom). In general, the time-generalization analysis revealed time clusters restricted to late brain activity, with maximal decoding accuracy on average after 300 ms for all conditions. The similarity of this time cluster across all three sensory modalities suggests the generality of such brain activation.
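The summary in Fig. 3B can be approximated for a single time-by-time matrix as sketched below. Two points are assumptions rather than details stated in this section: the generalization duration is taken as the number of significant testing time points times the sampling step, and the "normalized" accuracy is taken as a min-max scaling across training time points. The function name and the toy matrix are hypothetical.

```python
import numpy as np

def generalization_summary(acc, sig, step_ms):
    """acc, sig: training-time x testing-time arrays (accuracy, significance mask).
    Returns, per training time point, (1) the duration in ms over which decoding
    generalizes significantly and (2) the mean significant accuracy min-max
    scaled to [0, 1] across training times (rows without significant cells get 0)."""
    counts = sig.sum(axis=1)
    duration = counts * step_ms
    sums = np.where(sig, acc, 0.0).sum(axis=1)
    mean_acc = np.divide(sums, counts, out=np.zeros(sums.shape), where=counts > 0)
    scaled = np.zeros(sums.shape)
    valid = counts > 0
    if valid.any():
        lo, hi = mean_acc[valid].min(), mean_acc[valid].max()
        scaled[valid] = (mean_acc[valid] - lo) / (hi - lo) if hi > lo else 1.0
    return duration, scaled

# Toy 4 x 4 matrix (rows: training time, columns: testing time), 10 ms sampling.
acc = np.array([[0.50, 0.50, 0.50, 0.50],
                [0.50, 0.60, 0.60, 0.50],
                [0.50, 0.60, 0.70, 0.70],
                [0.50, 0.50, 0.70, 0.80]])
sig = acc > 0.55          # stand-in for a properly corrected significance mask
duration, scaled = generalization_summary(acc, sig, step_ms=10)
```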

Restricted to the respective significant time clusters (Fig. 3A), we investigated the underlying brain sources resulting from the searchlight analysis within and between conditions (Fig. 4). Decoding within the same sensory modality revealed higher significant accuracy in the relevant sensory cortex for each modality (Fig. 4, brain plots on diagonal). In addition, searchlight decoding in the auditory modality also revealed a strong involvement of visual cortices (Fig. 4, Top row and Left column), while somatosensory decoding revealed the involvement of parietal regions such as the precuneus (Fig. 4, Bottom row and Right column). By contrast, searchlight decoding between different sensory modalities revealed higher decoding accuracy in fronto-parietal brain regions in addition to diverse primary sensory regions (Fig. 4, brain plots off diagonal).

Fig. 4.

Spatial distribution of significant searchlight MVPA decoding within and between sensory modalities. Shown are source brain maps for average decoding accuracy restricted to the corresponding significant time-by-time cluster from the time-generalization analysis (cf. Fig. 3A). Brain maps were thresholded to show only the top 10% of significant decoding accuracies for each respective time-by-time cluster. Black line contouring separates all between-modality decoding brain maps from the within-modality cross-validation decoding maps on the diagonal.
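The display threshold can be sketched as follows, assuming that "only 10% maximum significant decoding accuracy" means keeping the upper decile of significant source accuracies (an interpretation, not a detail confirmed in this section). The function name and the toy accuracies are hypothetical.

```python
import numpy as np

def top_fraction_map(acc, significant, fraction=0.10):
    """Keep only the highest `fraction` of significant accuracies (the upper decile
    of significant values by default); every other source is set to NaN."""
    out = np.full(acc.shape, np.nan)
    vals = acc[significant]
    if vals.size == 0:
        return out
    cutoff = np.quantile(vals, 1.0 - fraction)
    keep = significant & (acc >= cutoff)
    out[keep] = acc[keep]
    return out

acc = 0.5 + np.arange(100) / 1000                     # toy accuracies for 100 sources
all_sig = top_fraction_map(acc, np.ones(100, bool))   # keeps the 10 highest sources
half_sig = top_fraction_map(acc, np.arange(100) < 50) # decile of the 50 significant ones
```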

Decoding and Multivariate Searchlight Analysis over All Sensory Modalities.

We further investigated the generalizability of brain activity patterns across all sensory modalities in a single analysis by decoding detected versus undetected trials over all blocks together (Fig. 5A). Initially, we performed this analysis with data from the first experiment and, separately, with data from the control experiment to replicate our findings and control for potential motor response bias (SI Appendix, Fig. S3). Because the response mapping was revealed only after stimulus presentation and varied randomly across trials during the control experiment, neural patterns during relevant periods putatively cannot be confounded by response selection/preparation. Importantly, the analyses of the control experiment in SI Appendix, Fig. S3 B and C used identical data; only the assignment of trials to the two classes for decoding differed: “detected versus undetected” (SI Appendix, Fig. S3B) or “response versus no response” (SI Appendix, Fig. S3C). Only decoding of conscious report (i.e., detected versus undetected) showed significant time-by-time clusters (SI Appendix, Fig. S3 A and B). This result rules out a confounding influence of the motor report and again strongly suggests the existence of a common supramodal pattern related to conscious perception.
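The two label sets used on the identical control-experiment data can be derived from the reported percept and the color-cued rule described in Fig. 1C. The sketch below is a hypothetical helper, not the authors' code: a button press occurs exactly when the report matches the cued rule, so with a randomly drawn cue the “response” labels are decoupled from the “detected” labels.

```python
import numpy as np

def decoding_labels(detected, press_if_present):
    """detected: per-trial report that the stimulus was present (bool).
    press_if_present: True if the trial's color cue meant "press only if there is
    a stimulation", False if it meant "press only if there is no stimulation".
    Returns (detected vs. undetected, response vs. no response) as 0/1 arrays."""
    detected = np.asarray(detected, bool)
    press_if_present = np.asarray(press_if_present, bool)
    pressed = detected == press_if_present   # press iff the report matches the cued rule
    return detected.astype(int), pressed.astype(int)

report, response = decoding_labels([1, 1, 0, 0], [1, 0, 1, 0])
# report   -> [1, 1, 0, 0]
# response -> [1, 0, 0, 1]

# With the cue drawn at random, the two label sets agree on about half of the
# trials, so one labeling carries little information about the other.
rng = np.random.default_rng(3)
det = rng.random(1000) < 0.5
cue = rng.random(1000) < 0.5
r1, r2 = decoding_labels(det, cue)
agreement = float(np.mean(r1 == r2))
```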

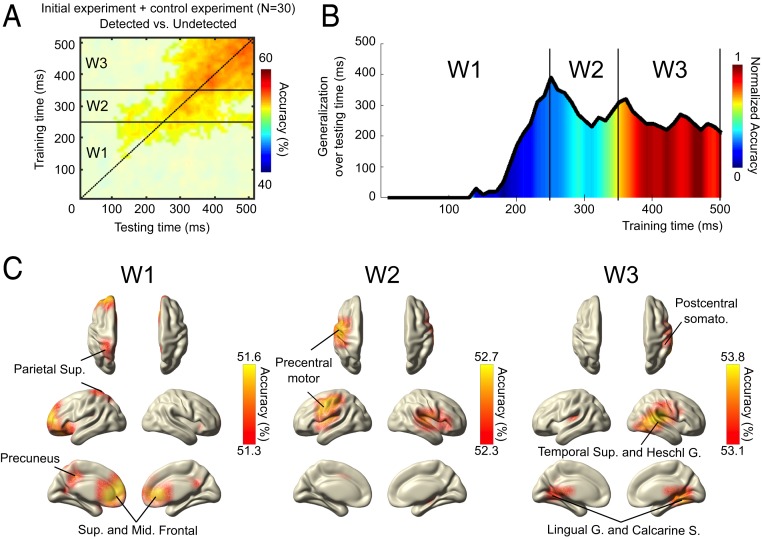

Fig. 5.

Time-by-time generalization and brain searchlight decoding analysis across all sensory modalities (for NT trials). Shown are compiled results for both initial and control experiments. (A) Decoding results represented over time (from stimulation onset to 500 ms after). Shown is the result of the searchlight MVPA with time-by-time generalization analysis of “detected” versus “undetected” trials across all sensory modalities. The plot shows the time clusters where classifier accuracy was significantly above chance level (50%) (masked at Pcorrected < 0.005). The black dotted line corresponds to the diagonal of the temporal generalization matrix, i.e., a classifier trained and tested on the same time sample. Horizontal black lines separate time windows (W1, W2, and W3). (B) Summary of average time generalization and decoding performance over time (A). For each training time point on the x axis, the average duration of the classifier’s ability to significantly generalize on testing time points was computed and reported on the y axis. Additionally, normalized average significant classifier accuracies over all testing times for a given training time point are represented as a color-scale gradient. Based on this summary, three time windows were defined to explore the spatial distribution of searchlight decoding (W1, [0–250] ms; W2, [250–350] ms; W3, [350–500] ms). (C) Spatial distribution of significant searchlight MVPA decoding for the significant time clusters depicted in A and B. Brain maps were thresholded to show only the top 10% of significant (Pcorrected < 0.005) decoding accuracies for each respective time-by-time cluster.

We investigated the similarity of time-generalization results by merging data from both experiments (Fig. 5A). We tested for significant temporal dynamics of brain activity patterns across all our data, taking into account that less stable or less similar patterns would not survive group statistics. Overall, the ability of a classifier to generalize across time seems to increase linearly after a critical time point around 100 ms. Whereas the early patterns (<250 ms) are rather short-lived, temporal generalizability increases and reaches stable values after ∼350 ms (Fig. 5B). To follow up on potential generators underlying these temporal patterns, we depicted the searchlight results from three time windows (W1, W2, and W3) defined from the time-generalization decoding and the distribution of normalized accuracy over time (Fig. 5C). W1, from stimulation onset to 250 ms, contains the first significant searchlight decoding found in this analysis; W2, from 250 to 350 ms, corresponds to the first generalization period, where decoding accuracy is low; and W3, from 350 to 500 ms, corresponds to the second time-generalization period, where higher decoding accuracy was found (Fig. 5B). The results highlight precuneus, insula, anterior cingulate cortex, and frontal and parietal regions as mainly involved during the first significant time window (W1), while in the second time window (W2) the main significant cluster is located over left precentral motor cortices. Interestingly, the late time window (W3) shows stronger decoding over primary sensory cortices, where accuracy is highest: lingual gyrus and calcarine sulcus, superior temporal and Heschl’s gyri, and right postcentral gyrus (Fig. 5C). The sources depicted by the searchlight analysis suggest a strong overlap with functional brain networks related to attention and saliency detection (29), especially during the earliest time periods (W1 and W2) (SI Appendix, Fig. S4).
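Averaging searchlight accuracy within the three windows can be sketched as below. The W1/W2/W3 bounds come from the text; how the searchlight maps were aggregated within each window (over training times, testing times, or significant cells only) is not specified in this section, so averaging a sources × time array over each window, with half-open windows except for the last, is an assumption. The names and toy values are hypothetical.

```python
import numpy as np

WINDOWS_MS = {"W1": (0, 250), "W2": (250, 350), "W3": (350, 500)}

def window_maps(acc, times_ms, windows=WINDOWS_MS):
    """acc: sources x time points of searchlight accuracy. Averages each source
    over each window; windows are half-open [start, end) except the last, which
    also includes its end point."""
    times_ms = np.asarray(times_ms)
    names = list(windows)
    maps = {}
    for k, name in enumerate(names):
        start, end = windows[name]
        upper = times_ms <= end if k == len(names) - 1 else times_ms < end
        maps[name] = acc[:, (times_ms >= start) & upper].mean(axis=1)
    return maps

times = np.arange(0, 501, 10)                 # 0, 10, ..., 500 ms
acc = np.full((2, times.size), 0.5)           # toy accuracies for 2 sources
acc[0, times >= 350] = 0.6                    # source 0: late effect only
acc[1, times < 250] = 0.55                    # source 1: early effect only
maps = window_maps(acc, times)
```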

Discussion

For a neural process to be a strong contender as a neural correlate of consciousness, it should show some generalization, e.g., across sensory modalities. Despite being implicitly assumed, this has never been directly tested. To address this important issue, we used a standard NT paradigm targeting three different sensory modalities to explore common spatiotemporal brain activity related to conscious perception using multivariate and searchlight analysis. Our results and conclusions depend intimately on the use of a “report-based paradigm,” in contrast to “no-report paradigms” (30). Participants performed a detection task and reported their perception on each trial. It has been shown that such protocols can elicit additional late (after 300 ms) brain activity components compared to other paradigms (31). Our findings on poststimulus evoked responses are in line with previous studies for each specific sensory modality, showing stronger brain activation when the stimulation was reported as perceived (27, 28, 32). Importantly, by exploiting the advantages of decoding, we provide direct evidence of common electrophysiological correlates of conscious access across sensory modalities.

ERF Time-Course Differences across Sensory Modalities.

Our first results suggest significant temporal and spatial differences when a univariate contrast between detected and undetected trials was used to investigate sensory-specific evoked responses. At the source level, the global group average activity revealed different significant time periods, depending on the sensory modality targeted, in which modulations of evoked responses related to detected trials can be observed (Fig. 2A). In the auditory and visual modalities, we found mainly significant differences after 200 ms. In the auditory domain, perception- and attention-modulated sustained responses around 200 ms from sound onset were found in bilateral auditory and frontal regions using MEG (33, 34). In the visual domain, a previous MEG study confirmed awareness-related effects from 240 to 500 ms after target presentation (35).

Our results show early differences in the transient responses (for the contrast detected versus undetected) in the somatosensory domain compared to the other sensory modalities; such early differences have previously been identified using EEG at around 100 and 200 ms (36). Moreover, previous MEG studies have shown early brain signal amplitude modulation (<200 ms) related to tactile perception in NT tasks (28, 37, 38). Such differences are less pronounced for the contrast between catch and sham trials across sensory modalities (SI Appendix, Fig. S1). The early ERF difference for tactile NT trials could be due to the experimental setup, in which auditory and visual target stimuli emerged from background stimulation (constant white noise and screen display), whereas tactile stimuli remained isolated transient sensory targets. Despite these differences, the time-generalization analysis was able to capture similar brain activity occurring at different timescales across these three sensory modalities.

Source localizations performed with univariate contrasts for each sensory modality suggest differences in network activation, with some involvement of similar brain regions in late time windows, such as inferior frontal gyrus, inferior parietal gyrus, and supplementary motor area. However, qualitatively similar topographic patterns observed in such analyses cannot unequivocally be interpreted as similar brain processes. The important question is whether neural activity patterns within a specific sensory modality can be used to decode a subjective report of stimulation in a different sensory context. The multivariate decoding analyses described next aimed to answer this question.

Identification of Common Brain Activity across Sensory Modalities.

Multivariate decoding analysis was used to characterize spatiotemporal similarities across these different sensory systems more precisely. In general, stable characteristics of brain signals have been proposed to reflect a transient stabilization of distributed cortical networks involved in conscious perception (39). Using the high temporal resolution of the MEG signal and time-generalization analysis, we investigated the stability and time dynamics of brain activity related to conscious perception across sensory systems. In addition to the temporal analysis, we used source-level analysis as an indication of the possible brain origin of the effects. Time-generalization analysis can reveal similar brain activity between modalities even when significant ERF modulations occur at different latencies. As expected, between-modality time-generalization analysis involving tactile runs showed significant off-diagonal decoding, owing to the early significant brain activity in the tactile modality (Fig. 3A). This result suggests that brain activity patterns related to conscious perception in the tactile domain are similar to those in the auditory and visual modalities but occur earlier.

Overall, decoding results revealed a significant time cluster starting around 300 ms with high classifier accuracy, which speaks in favor of a late neural response related to conscious report. Indeed, a classifier trained at a given time point on data from one sensory modality generalized its decoding performance over several time points, within the same or another sensory modality. This result speaks in favor of supramodal brain activity patterns that are consistent and stable over time. In addition, the searchlight analysis across brain regions provides an attempt to depict the brain network activated during these significant time-generalization clusters. As in multiple other studies using decoding (22, 23, 40, 41), the average accuracy can be relatively low and yet remain significant at the group level. Note, however, that contrary to many other cognitive neuroscience studies using decoding (41, 42), we did not apply the practice of “subaveraging” trials to create “pseudo”-single trials, which naturally boosts average decoding accuracy (43). The statistical rigor of our approach is further underlined by the fact that the reported decoding results are restricted to highly significant effects (Pcorrected < 0.005; Materials and Methods). Critically, applying the identical, very conservative statistical thresholds, we replicated our results in a second control experiment that contrasted conscious perception reports independently of motor response activity (SI Appendix, Fig. S3). Our results are consistent with previous studies underlining the importance of late activity patterns as crucial markers of conscious access (7, 44) and decision-making processes (10, 45).

Because our protocol included a low number of “catch” and “sham” trials, we concentrated our analysis on the near-threshold stimulus contrast (detected vs. undetected). However, interesting questions remain regarding the processing of undetected targets compared with the absence of stimuli. Future experiments should address such questions by balancing the number of sham (“target absent”) and near-threshold trials, so that the processing of undetected targets can be investigated across modalities with decoding techniques similar to those presented here.

Furthermore, we explored the brain regions underlying the time dynamics of conscious report by using source-level searchlight decoding. Given the limitations of such MEG analyses, and using a spatially coarse grid resolution for computational efficiency (3 cm), we restricted the depiction of results to the top 10% of decoding accuracies across all searchlight brain regions. Some of the brain regions found in our searchlight analysis, namely deep brain structures such as the insula and anterior cingulate cortex, are shared with other functional brain networks such as the salience network (46, 47). The superior frontal cortex and parietal cortex have also been found to be activated by attention-demanding cognitive tasks (48). Hence, we emphasize that one cannot conclude from our study that the network identified in Fig. 5C is exclusively devoted to conscious report. Indeed, decoding catch versus sham trials also yields a between-modality temporal decoding pattern similar to that of the near-threshold analysis, speaking in favor of a similar ignition of a common network during normal perception of high-contrast stimuli for the three sensory modalities targeted by our experiment (SI Appendix, Fig. S6). Although this additional analysis must be interpreted with caution because of the low number of trials for these conditions in our protocol (SI Appendix, Table S1), it is informative regarding the involvement of normal perception-processing brain networks in our task. The brain networks identified in this study share common brain regions and dynamics with the attentional and salience networks, which remain relevant mechanisms for performing an NT task. Interestingly, this part of the network seems to be more involved during the initial part of the process, prior to the involvement of motor brain regions (Fig. 5C and SI Appendix, Fig. S4).

Our analysis also identified some brain regions involved in motor planning, such as the precentral gyrus, which could in principle relate to the upcoming button press used to report the subjective perception of the stimulus. We specifically targeted such motor preparation bias in the control experiment, in which participants could not predict a priori how to report a conscious percept (i.e., by pressing or withholding a button press) until the response prompt appeared. Importantly, we did not find any significant decoding when trials were sorted by response type (i.e., with or without an actual button press), in contrast to sorting by the subjective report of detection (SI Appendix, Fig. S3 B and C). The involvement of motor regions could thus reflect generic motor planning (49) or decision-related activity in such forced-choice paradigms (50, 51).

Late Involvement of All Primary Sensory Cortices.

Some within-modality decoding results highlighted the involvement of primary cortices of other, task-unrelated sensory modalities. For instance, during auditory near-threshold stimulation, the highest decoding accuracy of neural activity predicting conscious perception was found in auditory cortices but also in visual cortices (Fig. 4, Top row and Left column). Our final analysis further confirmed that primary sensory regions are strongly involved in decoding conscious perception across sensory modalities. Moreover, these regions were mainly found during the last time period investigated, following the initial involvement of frontoparietal areas (Fig. 5). These results suggest that sensory cortices of one modality contain sufficient information to decode conscious perceptual access in a different sensory modality, and they point to a late, active role of primary cortices across three different sensory systems (Fig. 5). One study reported successful decoding of visual object categories in early somatosensory cortex using functional MRI (fMRI) and multivariate pattern analysis (52). Another fMRI experiment suggested that sensory cortices are modulated via a common supramodal frontoparietal network, attesting to the generality of the attentional mechanism toward expected auditory, tactile, and visual information (53). In our study, we further show that local brain activity from different sensory regions follows a specific dynamic that generalizes over time, allowing the decoding of the behavioral outcome of subjective perception in another sensory modality. These results speak in favor of intimate cross-modal interactions in perception (54).
Our results replicate, across several modalities within a single study, earlier reports that conscious access to near-threshold stimuli is not caused by differences in early, mainly bottom–up-driven activation, but rather involves later, widespread, and reentrant neural patterns (2, 3, 6).

Finally, our results suggest that primary sensory regions remain important at late latencies after stimulus onset for resolving stimulus perception across different sensory modalities. We propose that this network could enhance the processing of behaviorally relevant signals, here the sensory targets. Although the integration of classically unimodal primary sensory cortices into a processing hierarchy of sensory information is well established (55), some studies suggest multisensory roles of primary cortical areas (56, 57).

It remains unknown how such multisensory responses relate to an individual’s unisensory conscious percepts in humans. Since sensory modalities are usually interwoven in real life, our finding of a supramodal network that may subserve both conscious access and attentional functions has higher ecological validity than results from previous studies of conscious perception in a single sensory modality.

Our results also bear on an ongoing debate in neuroscience about the extent to which multisensory integration already emerges in primary sensory areas (57, 58). Animal studies have provided compelling evidence that the neocortex is essentially multisensory (59). Here, our findings speak in favor of multisensory interactions in primary and associative cortices. Interestingly, a previous fMRI study using multivariate decoding revealed distinct mechanisms governing audiovisual integration in primary and associative cortices, needed for spatial orienting and interactions in a multisensory world (60).

Conclusion

We characterized common patterns over time and space, suggesting that consciousness-related brain activity generalizes across different sensory NT tasks. This study reports significant spatiotemporal decoding across different sensory modalities in a near-threshold perception experiment. Our results speak in favor of the existence of stable, supramodal brain activity patterns, distributed over time and involving seemingly task-unrelated primary sensory cortices. The stability of brain activity patterns across the sensory modalities presented in this study is, to date, the most direct evidence of a common network activation leading to conscious access (2). Moreover, our findings add to recent remarkable demonstrations of decoding and time-generalization methods applied to MEG (21–23, 61) and show a promising application of MVPA techniques to source-level searchlight analysis focused on the temporal dynamics of conscious perception. Our study paves the way for future investigations using techniques with more precise spatial resolution, such as functional magnetic resonance imaging, to depict the involved brain network in detail.

Materials and Methods

Participants.

Twenty-five healthy volunteers took part in the initial experiment conducted in Trento, and 21 healthy volunteers took part in the control experiment performed in Salzburg. All participants had normal or corrected-to-normal vision and no neurological or psychiatric disorders. Three participants from the initial experiment and one participant from the control experiment were excluded from the analysis because excessive artifacts in the MEG data left an insufficient number of trials per condition after artifact rejection (fewer than 30 trials for at least one condition). Additionally, in each experiment six participants were excluded because the false alarm rate exceeded 30% and/or the near-threshold detection rate was above 85% or below 15% for at least one sensory modality (due to threshold identification failure or, in the control experiment, difficulty applying the response-button mapping), which also left fewer than 30 trials for at least one relevant condition (detected or undetected) in one sensory modality. The remaining 16 participants (11 females, mean age 28.8 y; SD, 3.4 y) of the initial experiment and 14 participants (9 females, mean age 26.4 y; SD, 6.4 y) of the control experiment reported normal tactile and auditory perception. The ethics committees of the University of Trento and the University of Salzburg, respectively, approved the experimental protocols, and each participant gave written informed consent.
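The behavioral exclusion criteria above combine several thresholds with a logical OR across modalities. As a minimal sketch, assuming per-modality summary statistics have already been computed, they can be expressed as a single predicate; the dictionary keys and the function name are hypothetical and not taken from the authors' analysis code.

```python
# Hypothetical sketch of the participant exclusion rules described above.
# Thresholds come from the text; field names are illustrative assumptions.
MIN_TRIALS = 30                # minimum trials for each relevant condition
MAX_FALSE_ALARM = 0.30         # maximum false alarm rate
MIN_HIT, MAX_HIT = 0.15, 0.85  # acceptable near-threshold detection rate


def keep_participant(per_modality: dict) -> bool:
    """Return True if a participant passes all criteria in every modality.

    per_modality maps a modality label ("T", "A", "V") to a dict with the
    hypothetical keys 'fa_rate', 'hit_rate', 'n_detected', 'n_undetected'.
    """
    for stats in per_modality.values():
        if stats["fa_rate"] > MAX_FALSE_ALARM:
            return False
        if not MIN_HIT <= stats["hit_rate"] <= MAX_HIT:
            return False
        if min(stats["n_detected"], stats["n_undetected"]) < MIN_TRIALS:
            return False
    return True


ok = {"fa_rate": 0.05, "hit_rate": 0.55, "n_detected": 120, "n_undetected": 100}
print(keep_participant({"T": ok, "A": ok, "V": ok}))                      # True
print(keep_participant({"T": ok, "A": ok, "V": {**ok, "hit_rate": 0.9}}))  # False
```

A single failing modality is enough to exclude a participant, mirroring the "for at least one sensory modality" wording.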

Stimuli.

To ensure that participants did not hear any auditory cues caused by the piezo-electric stimulator during tactile stimulation, binaural white noise was presented throughout the entire experiment (training blocks included). Auditory stimuli were presented binaurally using MEG-compatible tubal in-ear headphones (SOUNDPixx; VPixx Technologies). Short bursts of white noise with a length of 50 ms were generated with Matlab and multiplied by a Hanning window to obtain a soft onset and offset. Participants had to detect these short white noise bursts presented near their hearing threshold (27). The intensity of these transient auditory targets was determined before the experiment so that they emerged from the constant background white noise. Visual stimuli were Gabor ellipsoids (tilted 45°; 1.4° radius; frequency, 0.1 Hz; phase, 90; sigma of Gaussian, 10) back-projected on a translucent screen by a Propixx DLP projector (VPixx Technologies) at a refresh rate of 180 frames per second. On the black screen background, a centered gray fixation circle (2.5° radius) with a central white dot served as the fixation point. The stimuli were presented for 50 ms in the center of the screen at a viewing distance of 110 cm. Tactile stimuli were delivered as a 50-ms stimulation to the tip of the left index finger, using one finger module of a piezo-electric stimulator (Quaerosys) with 2 × 4 rods, which can be raised to a maximum of 1 mm. The module was attached to the finger with tape, and the participant’s left hand was cushioned to prevent any unintended pressure on the module (28). For the control experiment (conducted in another laboratory, i.e., Salzburg), the stimulation setups were otherwise identical, but we used a different MEG/MRI-compatible vibrotactile stimulator system (CM3; Cortical Metrics).
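The auditory target construction (a 50-ms white noise burst tapered by a Hanning window) can be sketched in a few lines. This is an illustration only: the sampling rate (44.1 kHz), the uniform noise distribution, and the `amplitude` parameter are assumptions, and the original stimuli were generated in Matlab.

```python
import numpy as np


def hann_noise_burst(duration_s=0.05, fs=44100, amplitude=1.0, seed=None):
    """Return a white noise burst tapered by a Hann window.

    fs (sampling rate) and amplitude are illustrative assumptions; in the
    experiment, burst intensity was set per participant near threshold.
    """
    rng = np.random.default_rng(seed)
    n = int(round(duration_s * fs))           # 50 ms at 44.1 kHz -> 2205 samples
    noise = rng.uniform(-1.0, 1.0, n)         # flat-spectrum (white) noise
    return amplitude * noise * np.hanning(n)  # soft onset and offset


burst = hann_noise_burst(seed=1)
print(burst.shape)  # (2205,)
```

The window brings the burst smoothly to zero at both ends, which avoids the broadband click that an abrupt onset would add to a near-threshold target.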

Task and Design.

The participants performed three blocks of an NT perception task. Each block included three separate runs (100 trials each), one for each sensory modality: tactile (T), auditory (A), and visual (V). A short break (∼1 min) separated runs, and longer breaks (∼4 min) were provided after each block. Within a block, runs followed the same order for a given subject, and this order was pseudorandomized across subjects (i.e., subject 1 = TVA-TVA-TVA; subject 2 = VAT-VAT-VAT; …). Participants were asked to fixate on a central white dot in a gray central circle at the center of the screen throughout the whole experiment to minimize eye movements.
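The run-ordering scheme (one modality order per subject, repeated in all three blocks) can be sketched as follows. How orders were pseudorandomized across subjects is not specified beyond the examples above, so drawing one of the six possible orders at random is an assumption, as is the function name.

```python
import itertools
import random

MODALITIES = "TVA"  # tactile, visual, auditory


def run_sequence(rng: random.Random, n_blocks: int = 3) -> list:
    """Draw one run order per subject and repeat it in every block.

    Assumption: pseudorandomization is modeled as a random draw among the
    six possible orders; a counterbalanced design could instead cycle
    through the orders by subject index.
    """
    orders = ["".join(p) for p in itertools.permutations(MODALITIES)]
    order = rng.choice(orders)
    return [order] * n_blocks


print("-".join(run_sequence(random.Random(0))))
```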

A short training run with 20 trials was conducted to ensure that participants had understood the task. Then, in three separate training sessions prior to the main experiment, participants’ individual perceptual thresholds (tactile, auditory, and visual) were determined in the shielded room. For the initial experiment, a one-up/one-down staircase procedure with two randomly interleaved staircases (one upward and one downward) with fixed step sizes was used. For the control experiment, we used a Bayesian active sampling protocol to estimate the psychometric slope and threshold for each participant (62). Once determined by these procedures, near-threshold stimulation intensities remained fixed across all blocks of the experiment for a given participant. All stimulation intensities can be found in SI Appendix, Table S1.
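For readers unfamiliar with interleaved staircases, the following sketch shows a one-up/one-down procedure with two randomly interleaved tracks and a fixed step. The response callback, the simulated logistic observer, and the rule for reading out the threshold (mean of the last trials of both tracks) are illustrative assumptions, not the parameters used in the experiment.

```python
import numpy as np


def interleaved_staircases(respond, start_low, start_high, step, n_trials, seed=None):
    """Two randomly interleaved one-up/one-down staircases with a fixed step.

    respond(intensity) -> bool stands in for the participant's yes/no report
    (hypothetical callback). One staircase starts below threshold and climbs
    ("up"), the other starts above and descends ("down"). A one-up/one-down
    rule converges on the ~50% detection point.
    """
    rng = np.random.default_rng(seed)
    level = {"up": start_low, "down": start_high}
    history = {"up": [], "down": []}
    for _ in range(n_trials):
        name = "up" if rng.random() < 0.5 else "down"  # random interleaving
        x = level[name]
        history[name].append(x)
        # Detected -> lower the intensity; missed -> raise it.
        level[name] = x - step if respond(x) else x + step
    return history


def threshold_estimate(history, last_k=20):
    """Average the last k intensities of both staircases (assumed readout)."""
    tail = history["up"][-last_k:] + history["down"][-last_k:]
    return float(np.mean(tail))


obs_rng = np.random.default_rng(1)


def simulated_observer(x):
    """Hypothetical observer: logistic psychometric function, threshold 0.5."""
    return obs_rng.random() < 1.0 / (1.0 + np.exp(-(x - 0.5) / 0.05))


hist = interleaved_staircases(simulated_observer, 0.1, 0.9, step=0.05, n_trials=200, seed=0)
print(round(threshold_estimate(hist), 2))
```

Starting one track below and one above threshold, and interleaving them unpredictably, reduces the chance that participants anticipate the direction of intensity changes.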

The main experiment consisted of a detection task (Fig. 1A). At the beginning of each run, participants were told that on each trial a weak stimulus (tactile, auditory, or visual, depending on the run) could be presented at random time intervals. Five hundred milliseconds after target stimulus onset, an on-screen question mark prompted participants to indicate whether they had perceived the stimulus (maximal response time: 2 s). Responses were given using MEG-compatible response boxes with the right index and middle fingers (response-button mapping was counterbalanced across participants). Trials were then classified into hits (detected stimulus) and misses (undetected stimulus) according to the participants’ answers. Trials with no response were rejected. Catch (above perceptual threshold stimulation intensity) and sham (absent stimulation) trials were used to monitor false alarm and correct rejection rates across the experiment. Overall, there were nine runs with 100 trials each (in total 300 trials for each sensory modality). Each trial started with a variable interval (1.3 to 1.8 s, randomly distributed) followed by a 50-ms stimulus: an experimental near-threshold stimulus (80 per run), a sham stimulus (10 per run), or a catch stimulus (10 per run). Each run lasted ∼5 min, and the whole experiment lasted ∼1 h.
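The composition of a single run (80 near-threshold, 10 sham, and 10 catch trials in random order, each preceded by a 1.3 to 1.8 s interval) and the hit/miss labeling can be sketched as below. A uniform interval distribution and the function names are assumptions.

```python
import numpy as np


def make_run(rng, n_nt=80, n_sham=10, n_catch=10, iti_range=(1.3, 1.8)):
    """Build one shuffled run of (trial_type, pre-stimulus interval in s).

    The uniform interval distribution is an assumption; the text only says
    the 1.3 to 1.8 s interval was randomly distributed.
    """
    types = np.array(["nt"] * n_nt + ["sham"] * n_sham + ["catch"] * n_catch)
    rng.shuffle(types)
    itis = rng.uniform(iti_range[0], iti_range[1], size=types.size)
    return list(zip(types.tolist(), itis.tolist()))


def label_nt_trial(said_yes):
    """Map a near-threshold report to a class; None (no response) is rejected."""
    if said_yes is None:
        return None
    return "hit" if said_yes else "miss"


run = make_run(np.random.default_rng(0))
print(len(run), sum(t == "nt" for t, _ in run))  # 100 80
```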

Identical timing parameters were used in the control experiment. However, a specific response screen design was used to control for motor response mapping. On each trial, participants had to apply a response mapping indicated by the color of the circle surrounding the question mark on the response screen. Two colors (blue or yellow) were presented in random order across trials. One color was associated with the rule “press the button only if there was a stimulation” (for the near-threshold condition, detected), and the other color with the opposite rule “press the button only if there was no stimulation” (for the near-threshold condition, undetected). The association between response mapping and color was fixed for each participant but randomly assigned across participants. Importantly, because the response mapping was revealed only after stimulus presentation, in a manner unpredictable for the individual, neural patterns during the relevant periods should not be confounded by response selection or preparation. Both experiments were programmed in Matlab using the open source Psychophysics Toolbox (63).
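The logic of the color-cued response mapping can be made explicit with two small functions: one gives the required action for a given report and cue color, the other recovers the report from the observed action. The parameter `press_if_detected_color` is hypothetical and stands for the participant-specific color assignment.

```python
import random


def should_press(detected: bool, color: str, press_if_detected_color: str) -> bool:
    """Correct action under the color-cued mapping of the control experiment.

    press_if_detected_color is the participant-specific color (hypothetical
    parameter) meaning "press only if there was a stimulation"; the other
    color means "press only if there was no stimulation".
    """
    return detected if color == press_if_detected_color else not detected


def decode_report(pressed: bool, color: str, press_if_detected_color: str) -> bool:
    """Recover the detection report from the observed press and the cue color."""
    return pressed if color == press_if_detected_color else not pressed


rng = random.Random(0)
for detected in (True, False):
    color = rng.choice(["blue", "yellow"])  # cue drawn at random on each trial
    pressed = should_press(detected, color, "blue")
    assert decode_report(pressed, color, "blue") == detected
```

Because the cue is drawn at random after the stimulus, a button press alone carries no information about the detection report, which is what allows report and motor response to be decoded separately.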

MEG Data Acquisition and Preprocessing.

MEG was recorded at a sampling rate of 1 kHz using a 306-channel (204 first-order planar gradiometers, 102 magnetometers) VectorView MEG system for the first experiment in Trento and a Triux MEG system for the control experiment in Salzburg (both Elekta-Neuromag Ltd.) in a magnetically shielded room (AK3B; Vakuumschmelze). Before the experiments, individual head shapes were acquired for each participant, including fiducials (nasion and preauricular points) and around 300 digitized points on the scalp, with a Polhemus Fastrak digitizer. Head positions relative to the MEG sensors were continuously monitored within each run using five coils. Head movements did not exceed 1 cm within and between blocks.

Data were analyzed using the FieldTrip toolbox (64) and the CoSMoMVPA toolbox (65) in combination with MATLAB 8.5 (MathWorks). First, a high-pass filter at 0.1 Hz (FIR filter with a transition bandwidth of 0.1 Hz) was applied to the continuous data. The data were then segmented from 1,000 ms before to 1,000 ms after target stimulation onset and down-sampled to 512 Hz. Trials containing physiological or acquisition artifacts were rejected: a semiautomatic artifact detection routine identified statistical outlier trials and channels in the datasets using a set of summary statistics (variance, maximum absolute amplitude, maximum z value), and these trials and channels were removed from each dataset. The data were then visually inspected, and any remaining trials and channels with artifacts were removed manually. Across subjects, an average of five channels (SD, 2) were rejected; bad channels were excluded from the whole dataset. A detailed report of the remaining number of trials per condition for each participant can be found in SI Appendix, Table S1. Finally, in all further analyses and within each sensory modality for each subject, an equal number of detected and undetected trials was randomly selected to prevent any bias across conditions (66).
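Two preprocessing steps above lend themselves to a short sketch: flagging outlier trials from summary statistics, and randomly subsampling trials so that detected and undetected classes are equal in size. The z threshold of 3 and the way the statistics are combined are assumptions; the published routine was semiautomatic, ran in FieldTrip, and was followed by visual inspection.

```python
import numpy as np


def flag_outlier_trials(data, z_thresh=3.0):
    """Flag trials whose summary statistics are outliers across trials.

    data: trials x channels x time. The three statistics mirror those named
    in the text; the z threshold is an illustrative assumption.
    """
    variance = data.var(axis=(1, 2))
    max_abs = np.abs(data).max(axis=(1, 2))
    z = (data - data.mean(axis=0)) / data.std(axis=0)  # z over trials, per channel/time
    max_z = np.abs(z).max(axis=(1, 2))
    stats = np.column_stack([variance, max_abs, max_z])
    stats_z = (stats - stats.mean(axis=0)) / stats.std(axis=0)
    return np.any(np.abs(stats_z) > z_thresh, axis=1)


def equalize_classes(labels, rng):
    """Randomly subsample so every class has as many trials as the rarest one."""
    labels = np.asarray(labels)
    classes = np.unique(labels)
    n_min = min(np.sum(labels == c) for c in classes)
    keep = [rng.choice(np.flatnonzero(labels == c), n_min, replace=False) for c in classes]
    return np.sort(np.concatenate(keep))


rng = np.random.default_rng(0)
trials = rng.normal(size=(50, 10, 100))
trials[7] *= 10  # one artifact-laden trial
print(np.flatnonzero(flag_outlier_trials(trials)))
labels = np.array([1] * 70 + [0] * 40)  # 1 = detected, 0 = undetected
idx = equalize_classes(labels, rng)
print(np.bincount(labels[idx]))  # [40 40]
```

Equalizing class sizes keeps chance level at 50%, so a classifier cannot reach above-chance accuracy simply by favoring the more frequent response.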

Source Analyses.

Neural activity evoked by stimulus onset was investigated by computing event-related fields (ERFs). For all source-level analyses, the preprocessed data were low-pass filtered at 30 Hz and projected to source level using a linearly constrained minimum variance (LCMV) beamformer (67). For each participant, realistically shaped single-shell head models (68) were computed by coregistering the participant’s head shape either with their structural MRI or, when no individual MRI was available (three participants in the initial experiment and two in the control experiment), with a standard brain from the Montreal Neurological Institute (MNI), warped to the individual head shape. A grid with 1.5-cm resolution based on an MNI template brain was morphed into the brain volume of each participant. A common spatial filter (for each grid point and each participant) was computed using the lead fields and the common covariance matrix, taking into account the data from both conditions (detected and undetected, or catch and sham) for each sensory modality separately. The covariance window for the beamformer filter was 200 ms pre- to 500 ms poststimulus. Using this common filter, the spatial power distribution was then estimated for each trial separately. The resulting data were averaged relative to stimulus onset in all conditions (detected, undetected, catch, and sham) for each sensory modality. For visualization purposes only, a baseline correction was applied to the averaged source-level data by subtracting the mean of the window from 200 ms prestimulus to stimulus onset. Source localization was restricted to time windows of interest, defined by significant differences between the event-related fields of the two conditions over time for each sensory modality. All source images were interpolated from the original resolution onto an inflated surface of an MNI template brain available within the Caret software package (69).
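The common-filter logic can be illustrated with a textbook unit-gain LCMV formulation, in which the filter for a grid point with lead field `L` and data covariance `C` is `W = (L^T C^-1 L)^-1 L^T C^-1`. The sketch pools trials from both conditions into one covariance and applies the same filters to every trial, so condition differences cannot stem from different filters. The regularization amount, array shapes, and function names are assumptions, and FieldTrip's implementation differs in details.

```python
import numpy as np


def common_covariance(trials_a, trials_b):
    """Covariance pooled over the trials of both conditions (channels x channels).

    trials_*: trials x channels x time, already cut to the covariance window
    (here assumed to be -200 to 500 ms).
    """
    data = np.concatenate([trials_a, trials_b], axis=0)
    data = data - data.mean(axis=2, keepdims=True)  # demean each trial
    return np.einsum("kct,kdt->cd", data, data) / (data.shape[0] * data.shape[2])


def lcmv_filters(leadfields, cov, reg=0.05):
    """Unit-gain LCMV filters W = (L^T C^-1 L)^-1 L^T C^-1 for every grid point.

    leadfields: sources x channels x 3 (free orientation).
    reg: Tikhonov regularization as a fraction of mean sensor power
    (an assumed value, not reported in the text).
    Returns sources x 3 x channels.
    """
    n_chan = cov.shape[0]
    cov_reg = cov + reg * np.trace(cov) / n_chan * np.eye(n_chan)
    cov_inv = np.linalg.inv(cov_reg)
    filters = []
    for L in leadfields:
        gram = L.T @ cov_inv @ L  # 3 x 3
        filters.append(np.linalg.solve(gram, L.T @ cov_inv))
    return np.stack(filters)


rng = np.random.default_rng(0)
n_chan, n_src, n_time = 32, 5, 200
lf = rng.normal(size=(n_src, n_chan, 3))
det = rng.normal(size=(40, n_chan, n_time))
undet = rng.normal(size=(40, n_chan, n_time))
W = lcmv_filters(lf, common_covariance(det, undet))
source_det = np.einsum("soc,kct->ksot", W, det)  # trials x sources x 3 x time
print(np.allclose(W[0] @ lf[0], np.eye(3)))      # True: unit gain at the target source
```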
The respective MNI coordinates and labels of localized brain regions were identified with an anatomical brain atlas (AAL atlas; ref. 70) and a network parcellation atlas (29). Source analysis of MEG data is an inherently underdetermined problem, and no unique solution exists. Furthermore, source leakage cannot be avoided, further reducing the accuracy of any analysis. Finally, we remind the reader that we do not expect a spatial precision better than 3 cm for our results, because we used standard LCMV source localization with a 1.5-cm grid. In other words, source plots should be seen only as suggestive rather than conclusive evidence for the underlying brain regions.

MVPA Decoding.

MVPA decoding was performed for the period 0 to 500 ms after stimulus onset based on normalized (z-scored) single-trial source data down-sampled to 100 Hz (i.e., time steps of 10 ms). We used multivariate pattern analysis as implemented in CoSMoMVPA (65) to identify when, and which, common network between sensory modalities is activated during the near-threshold detection task. We defined two classes for decoding based on the behavioral outcome of the task (detected and undetected). For decoding within the same sensory modality, single-trial source data were randomly assigned to one of two chunks (each containing half of the original data).

For decoding of all sensory modalities together, single-trial source data were pseudorandomly assigned to one of two chunks, with half of the original data for each sensory modality in each chunk. Data were classified using a twofold cross-validation procedure, in which a naive Bayes classifier predicted trial conditions in one chunk after training on data from the other chunk. For decoding between different sensory modalities, single-trial source data of one modality were assigned to the testing chunk, and trials from the other modalities were assigned to the training chunk. The number of target categories (e.g., detected/undetected) was balanced in each training partition and for each sensory modality. Trials were partitioned equally into chunks (i.e., each chunk used for classification contained the same proportion of trials from the three runs and blocks for each modality). Training and testing partitions always contained different sets of data.
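The cross-validation scheme can be sketched with a Gaussian naive Bayes classifier written in NumPy. This is a simplified stand-in for the CoSMoMVPA classifier, assuming a Gaussian likelihood per feature; it shows stratified twofold cross-validation within a modality and train-on-one, test-on-another decoding between modalities. The synthetic "shared pattern" data and all function names are hypothetical.

```python
import numpy as np


def gnb_fit(X, y):
    """Gaussian naive Bayes: per-class feature means, variances, and priors."""
    classes = np.unique(y)
    mu = np.stack([X[y == c].mean(axis=0) for c in classes])
    var = np.stack([X[y == c].var(axis=0) for c in classes]) + 1e-9
    prior = np.array([np.mean(y == c) for c in classes])
    return classes, mu, var, prior


def gnb_predict(model, X):
    classes, mu, var, prior = model
    # Independent Gaussian log-likelihoods summed over features, plus log prior.
    sq = (X[:, None, :] - mu[None]) ** 2 / var[None]
    ll = -0.5 * (np.log(2 * np.pi * var)[None] + sq).sum(axis=2)
    return classes[np.argmax(ll + np.log(prior)[None], axis=1)]


def twofold_within(X, y, rng):
    """Stratified twofold cross-validation within one modality."""
    chunk = np.empty(len(y), dtype=int)
    for c in np.unique(y):
        idx = rng.permutation(np.flatnonzero(y == c))
        chunk[idx] = np.arange(idx.size) % 2  # split each class evenly over chunks
    accs = [np.mean(gnb_predict(gnb_fit(X[chunk != k], y[chunk != k]), X[chunk == k])
                    == y[chunk == k]) for k in (0, 1)]
    return float(np.mean(accs))


def between_modalities(X_train, y_train, X_test, y_test):
    """Train on one (or several pooled) modalities, test on a held-out modality."""
    return float(np.mean(gnb_predict(gnb_fit(X_train, y_train), X_test) == y_test))


rng = np.random.default_rng(0)
y = np.repeat([0, 1], 60)       # 0 = undetected, 1 = detected
pattern = rng.normal(size=20)   # hypothetical shared "supramodal" pattern
tactile = rng.normal(size=(120, 20)) + np.outer(y, pattern)
auditory = rng.normal(size=(120, 20)) + np.outer(y, pattern)
print(twofold_within(tactile, y, rng), between_modalities(tactile, y, auditory, y))
```

When training pools several modalities, their trial arrays can simply be concatenated along the trial axis (e.g., with `np.concatenate`) before calling `between_modalities`.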

First, the temporal generalization method was used to explore the ability of each classifier trained at a given time point in the training set to generalize to every time point in the testing set (21). In this analysis, we used local neighborhood features in time (time radius of 10 ms: for each time step, the data points of the previous and next time samples were included as additional features). We generated temporal generalization matrices of task decoding accuracy (detected/undetected), mapping the time at which the classifier was trained against the time at which it was tested. Generalization of decoding accuracy over time was computed using all trials, separately for each within- or between-modality decoding condition. The reported average accuracy of the classifier for each time point corresponds to the group average of the individual effect size, that is, the ability of the classifiers to discriminate detected from undetected trials. We summarized time generalization by keeping only significant accuracies for each sensory modality decoding. Significant classifier accuracies were normalized between 0 and 1,

$$\hat{x}_t = \frac{x_t - \min(x)}{\max(x) - \min(x)}, \qquad [1]$$

where $x$ is a variable of all significant decoding accuracies and $x_t$ is a given significant accuracy at time $t$. Normalized accuracies were then averaged across significant testing time points and decoding conditions. The number of significant classifier generalizations across testing time points and the corresponding averaged normalized accuracies were reported along the training time dimension (Figs. 3B and 5B). For all significant time points previously identified, we performed a “searchlight” analysis across brain sources and a time neighborhood structure, using local neighborhood features in source and time space with a time radius of 10 ms and a source radius of 3 cm. All significant searchlight accuracy results were averaged over time, and only the top 10% of significant accuracies were reported on brain maps for each sensory modality decoding condition (Fig. 4) or for all conditions together (Fig. 5C).
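The temporal generalization procedure and the normalization of Eq. 1 can be sketched together. The classifier is again a minimal Gaussian naive Bayes with equal priors (an assumption justified by the balanced classes), a radius of one sample at 100 Hz implements the 10-ms time neighborhood, and edge padding at the epoch borders is an assumption. Function names and the synthetic data are hypothetical.

```python
import numpy as np


def _fit(X, y):
    classes = np.unique(y)
    mu = np.stack([X[y == c].mean(0) for c in classes])
    var = np.stack([X[y == c].var(0) for c in classes]) + 1e-9
    return classes, mu, var


def _predict(model, X):
    classes, mu, var = model  # equal priors: classes are balanced
    ll = -0.5 * (np.log(var)[None] + (X[:, None] - mu[None]) ** 2 / var[None]).sum(-1)
    return classes[ll.argmax(1)]


def temporal_generalization(train, y_train, test, y_test, radius=1):
    """Accuracy matrix (train time x test time); data are trials x features x time."""
    width = 2 * radius + 1

    def pad(d):  # repeat edge samples so every time point has `width` neighbors
        return np.pad(d, ((0, 0), (0, 0), (radius, radius)), mode="edge")

    def window(d, t):  # features at time t plus its neighbors, flattened
        return d[:, :, t:t + width].reshape(d.shape[0], -1)

    tr, te = pad(train), pad(test)
    n_t = train.shape[2]
    acc = np.empty((n_t, n_t))
    for i in range(n_t):
        model = _fit(window(tr, i), y_train)  # train once per time point
        for j in range(n_t):
            acc[i, j] = np.mean(_predict(model, window(te, j)) == y_test)
    return acc


def summarize_significant(acc, sig):
    """Eq. 1 normalization of significant accuracies, then, per training time,
    the number of significant test points and their mean normalized accuracy.

    acc, sig: conditions x train time x test time (sig is a boolean mask).
    """
    n_sig = sig.sum(axis=(0, 2))
    norm = np.zeros(acc.shape)
    if sig.any():
        x = acc[sig]
        span = np.ptp(x) if np.ptp(x) > 0 else 1.0
        norm[sig] = (x - x.min()) / span  # Eq. 1
    mean_norm = np.divide(norm.sum(axis=(0, 2)), n_sig,
                          out=np.zeros(n_sig.shape), where=n_sig > 0)
    return n_sig, mean_norm


rng = np.random.default_rng(0)
y = np.repeat([0, 1], 40)
data = rng.normal(size=(80, 4, 20))
data[y == 1, :, 5:15] += 2.0  # hypothetical effect between samples 5 and 14
order = rng.permutation(80)
tr_idx, te_idx = order[:40], order[40:]
acc = temporal_generalization(data[tr_idx], y[tr_idx], data[te_idx], y[te_idx])
```

Off-diagonal cells with high accuracy, such as training at sample 10 and testing at sample 12, are what indicate that a pattern is stable over time rather than transient.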

Finally, we applied the same type of analysis to all sensory modalities together by pooling all blocks with detected and undetected NT trials (equalized within each sensory modality). For the control experiment, we equalized trials based on the 2 × 2 design of detection report (detected or undetected) and type of response (“button press = response” or “no response”), so that each category (i.e., class) contained the same number of trials for each sensory modality. We then performed a similar decoding analysis with two different class definitions: either detected vs. undetected or response vs. no response (SI Appendix, Fig. S3 B and C).
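Balancing the 2 × 2 design of the control experiment amounts to subsampling every report × response cell to the size of the smallest one, so that the same trial subset can be labeled either by report or by response. The binary coding and the function name in this sketch are assumptions.

```python
import numpy as np


def equalize_2x2(report, response, rng):
    """Subsample trials so all four report x response cells have equal counts.

    report: 1 = detected, 0 = undetected; response: 1 = button press,
    0 = no press (binary coding is an assumption). Apply within each modality.
    """
    report, response = np.asarray(report), np.asarray(response)
    cells = [np.flatnonzero((report == r) & (response == b)) for r in (0, 1) for b in (0, 1)]
    n = min(c.size for c in cells)
    return np.sort(np.concatenate([rng.choice(c, n, replace=False) for c in cells]))


rng = np.random.default_rng(0)
report = rng.integers(0, 2, size=300)
response = rng.integers(0, 2, size=300)
keep = equalize_2x2(report, response, rng)
# The same balanced subset supports both class definitions:
labels_report, labels_response = report[keep], response[keep]
```

With all four cells equal, report and response are uncorrelated in the retained trials, so decoding one label cannot ride on the other.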

Statistical Analysis.

Detection rates for the experimental trials were statistically compared to those from the catch and sham trials, using dependent samples t tests. Concerning the MEG data, the main statistical contrast was between trials in which participants reported a stimulus detection and trials in which they did not (detected vs. undetected).

The evoked response at the source level was tested at the group level for each sensory modality. To eliminate the sign ambiguity of source polarity, statistics were computed on the absolute values of source-level event-related responses. Based on the global average across all grid points, we first identified relevant time periods with maximal difference between conditions (detected vs. undetected) by performing group analyses with sequential dependent-samples t tests between 0 and 500 ms after stimulus onset, using a sliding window of 30 ms with 10 ms overlap. P values were corrected for multiple comparisons using Bonferroni correction. Then, to identify the spatial generators contributing to this effect, the detected and undetected conditions were contrasted for the specific time periods using nonparametric cluster-based permutation tests with Monte Carlo randomization across grid points, controlling for multiple comparisons (71).
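The first, time-resolved step of this procedure can be sketched as paired t tests on window-averaged global source activity, with 30-ms windows overlapping by 10 ms (hence a 20-ms step), followed by Bonferroni correction over the number of windows. The function name, the α of 0.05, and the synthetic data are assumptions; the text does not state the uncorrected α.

```python
import numpy as np
from scipy.stats import ttest_rel


def sliding_window_ttests(det, undet, times, t_min=0.0, t_max=0.5,
                          win=0.030, overlap=0.010, alpha=0.05):
    """Paired t tests on windowed means with Bonferroni correction.

    det, undet: subjects x time, the global average over grid points of the
    absolute source activity per condition. A 30-ms window with 10-ms
    overlap gives a 20-ms step.
    """
    step = win - overlap
    starts = np.arange(t_min, t_max - win + 1e-9, step)
    out = []
    for s in starts:
        sel = (times >= s) & (times < s + win)
        t_val, p_val = ttest_rel(det[:, sel].mean(axis=1), undet[:, sel].mean(axis=1))
        out.append({"start": s, "t": t_val, "p": p_val})
    for row in out:  # Bonferroni over all windows
        row["p_bonf"] = min(row["p"] * len(out), 1.0)
        row["significant"] = row["p_bonf"] < alpha
    return out


rng = np.random.default_rng(0)
times = np.arange(0, 0.5, 1 / 512)  # 512-Hz samples
undet = rng.normal(size=(16, times.size))
det = rng.normal(size=(16, times.size))
det[:, (times >= 0.2) & (times < 0.3)] += 1.0  # hypothetical late effect
res = sliding_window_ttests(det, undet, times)
print([round(r["start"], 2) for r in res if r["significant"]])
```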

The multivariate searchlight results discriminating between conditions were tested at the group level by comparing the individual accuracy maps against chance level (50%) using a nonparametric approach implemented in CoSMoMVPA (65), with 10,000 permutations to generate a null distribution. The significance threshold was set at P < 0.005, with cluster-level correction for multiple comparisons using a threshold-free clustering method (72), which has been used and validated for MEG/EEG data (40, 73). The time-generalization results at the group level were thresholded using a mask with corrected z score > 2.58 (i.e., Pcorrected < 0.005) (Figs. 3A and 5A). Time points exceeding this threshold were identified and reported for each training time point to visualize how long time generalization remained significant over the testing data (Figs. 3B and 5B). Significant accuracy brain maps resulting from the searchlight analysis on the previously identified time points were reported for each decoding condition, depicting the top 10% of averaged accuracies for each significant decoding cluster (Figs. 4 and 5).
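As a rough illustration of a permutation test against chance for accuracy maps, the sketch below builds a null distribution by randomly flipping the sign of each subject's accuracy-minus-chance map. It deliberately substitutes a maximum-statistic correction for the threshold-free clustering used in the paper, so it approximates rather than reproduces that pipeline; a one-sided P < 0.005 corresponds to the z > 2.58 threshold mentioned above. Function names and data are hypothetical.

```python
import numpy as np


def sign_flip_maxstat(acc, chance=0.5, n_perm=10000, alpha=0.005, seed=None):
    """One-sided group test of accuracy maps against chance.

    acc: subjects x ... (e.g., train time x test time). The null distribution
    comes from random sign flips of each subject's (accuracy - chance) map;
    family-wise correction uses the maximum statistic across all cells
    (a simpler substitute for threshold-free cluster enhancement).
    """
    rng = np.random.default_rng(seed)
    diff = acc - chance
    n_sub = diff.shape[0]
    observed = diff.mean(axis=0)
    null_max = np.empty(n_perm)
    for k in range(n_perm):
        signs = rng.choice([-1.0, 1.0], size=n_sub)
        null_max[k] = (np.tensordot(signs, diff, axes=1) / n_sub).max()
    exceed = (null_max[:, None] >= observed.ravel()[None, :]).sum(axis=0)
    p_corr = ((exceed + 1) / (n_perm + 1)).reshape(observed.shape)
    return p_corr < alpha, p_corr


rng = np.random.default_rng(0)
acc = 0.5 + 0.02 * rng.normal(size=(16, 10, 10))  # 16 subjects, 10 x 10 matrix
acc[:, 5, 5] += 0.10                              # hypothetical above-chance cell
mask, p = sign_flip_maxstat(acc, n_perm=2000, seed=1)
print(np.argwhere(mask))
```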

Data Availability.

A down-sampled (to 100 Hz) version of the data is available at the OSF public repository (https://osf.io/E5PMY/). The original nonresampled raw data are available, upon reasonable request, from the corresponding author. Data analysis code is available at the corresponding author’s GitLab repository (https://gitlab.com/gaetansanchez).

Supplementary Material

Acknowledgments

This work was supported by the European Research Council (ERC) (Window to Consciousness [WIN2CON], ERC Starting Grant [StG] 283404). We thank Julia Frey for her great support during data collection.

Footnotes

The authors declare no competing interest.

This article is a PNAS Direct Submission.

Data deposition: A down-sampled (to 100 Hz) version of the raw data is available at the OSF public repository (https://osf.io/E5PMY/). The original nonresampled data are available, upon reasonable request, from the corresponding author. Data analysis code is available at the corresponding author’s GitLab repository (https://gitlab.com/gaetansanchez).

This article contains supporting information online at https://www.pnas.org/lookup/suppl/doi:10.1073/pnas.1912584117/-/DCSupplemental.

References

1. Crick F., Koch C., A framework for consciousness. Nat. Neurosci. 6, 119–126 (2003).

2. Dehaene S., Changeux J.-P., Experimental and theoretical approaches to conscious processing. Neuron 70, 200–227 (2011).

3. Naghavi H. R., Nyberg L., Common fronto-parietal activity in attention, memory, and consciousness: Shared demands on integration? Conscious. Cogn. 14, 390–425 (2005).

4. Foley J. M., Legge G. E., Contrast detection and near-threshold discrimination in human vision. Vision Res. 21, 1041–1053 (1981).

5. Ruhnau P., Hauswald A., Weisz N., Investigating ongoing brain oscillations and their influence on conscious perception - network states and the window to consciousness. Front. Psychol. 5, 1230 (2014).

6. Lamme V. A. F., Towards a true neural stance on consciousness. Trends Cogn. Sci. 10, 494–501 (2006).

7. Dehaene S., Changeux J.-P., Naccache L., Sackur J., Sergent C., Conscious, preconscious, and subliminal processing: A testable taxonomy. Trends Cogn. Sci. 10, 204–211 (2006).

8. Andersen L. M., Pedersen M. N., Sandberg K., Overgaard M., Occipital MEG activity in the early time range (<300 ms) predicts graded changes in perceptual consciousness. Cereb. Cortex 26, 2677–2688 (2016).

9. Brancucci A., Lugli V., Perrucci M. G., Del Gratta C., Tommasi L., A frontal but not parietal neural correlate of auditory consciousness. Brain Struct. Funct. 221, 463–472 (2016).

10. Joos K., Gilles A., Van de Heyning P., De Ridder D., Vanneste S., From sensation to percept: The neural signature of auditory event-related potentials. Neurosci. Biobehav. Rev. 42, 148–156 (2014).

11. Auksztulewicz R., Spitzer B., Blankenburg F., Recurrent neural processing and somatosensory awareness. J. Neurosci. 32, 799–805 (2012).

12. Auksztulewicz R., Blankenburg F., Subjective rating of weak tactile stimuli is parametrically encoded in event-related potentials. J. Neurosci. 33, 11878–11887 (2013).

13. Tallon-Baudry C., On the neural mechanisms subserving consciousness and attention. Front. Psychol. 2, 397 (2012).

14. van Gaal S., Lamme V. A. F., Unconscious high-level information processing: Implication for neurobiological theories of consciousness. Neuroscientist 18, 287–301 (2012).

15. Baars B. J., Global workspace theory of consciousness: Toward a cognitive neuroscience of human experience. Prog. Brain Res. 150, 45–53 (2005).

16. Dehaene S., Charles L., King J.-R., Marti S., Toward a computational theory of conscious processing. Curr. Opin. Neurobiol. 25, 76–84 (2014).

17. Sergent C., Baillet S., Dehaene S., Timing of the brain events underlying access to consciousness during the attentional blink. Nat. Neurosci. 8, 1391–1400 (2005).

18. Fisch L., et al., Neural “ignition”: Enhanced activation linked to perceptual awareness in human ventral stream visual cortex. Neuron 64, 562–574 (2009).

19. Melloni L., Schwiedrzik C. M., Müller N., Rodriguez E., Singer W., Expectations change the signatures and timing of electrophysiological correlates of perceptual awareness. J. Neurosci. 31, 1386–1396 (2011).

20. Cichy R. M., Pantazis D., Oliva A., Resolving human object recognition in space and time. Nat. Neurosci. 17, 455–462 (2014).

21. King J.-R., Dehaene S., Characterizing the dynamics of mental representations: The temporal generalization method. Trends Cogn. Sci. 18, 203–210 (2014).

22. Tucciarelli R., Turella L., Oosterhof N. N., Weisz N., Lingnau A., MEG multivariate analysis reveals early abstract action representations in the lateral occipitotemporal cortex. J. Neurosci. 35, 16034–16045 (2015).

23. Wutz A., Muschter E., van Koningsbruggen M. G., Weisz N., Melcher D., Temporal integration windows in neural processing and perception aligned to saccadic eye movements. Curr. Biol. 26, 1659–1668 (2016).

24. Kriegeskorte N., Goebel R., Bandettini P., Information-based functional brain mapping. Proc. Natl. Acad. Sci. U.S.A. 103, 3863–3868 (2006).

25. Haynes J.-D., et al., Reading hidden intentions in the human brain. Curr. Biol. 17, 323–328 (2007).

26. Sandberg K., Andersen L. M., Overgaard M., Using multivariate decoding to go beyond contrastive analyses in consciousness research. Front. Psychol. 5, 1250 (2014).

27. Leske S., et al., Prestimulus network integration of auditory cortex predisposes near-threshold perception independently of local excitability. Cereb. Cortex 25, 4898–4907 (2015).

28. Frey J. N., et al., The tactile window to consciousness is characterized by frequency-specific integration and segregation of the primary somatosensory cortex. Sci. Rep. 6, 20805 (2016).

29. Gordon E. M., et al., Generation and evaluation of a cortical area parcellation from resting-state correlations. Cereb. Cortex 26, 288–303 (2016).

30. Tsuchiya N., Wilke M., Frässle S., Lamme V. A. F., No-report paradigms: Extracting the true neural correlates of consciousness. Trends Cogn. Sci. 19, 757–770 (2015).

31. Pitts M. A., Metzler S., Hillyard S. A., Isolating neural correlates of conscious perception from neural correlates of reporting one’s perception. Front. Psychol. 5, 1078 (2014).

32. Karns C. M., Knight R. T., Intermodal auditory, visual, and tactile attention modulates early stages of neural processing. J. Cogn. Neurosci. 21, 669–683 (2009).

33. Kauramäki J., et al., Two-stage processing of sounds explains behavioral performance variations due to changes in stimulus contrast and selective attention: An MEG study. PLoS One 7, e46872 (2012).

34. Zoefel B., Heil P., Detection of near-threshold sounds is independent of EEG phase in common frequency bands. Front. Psychol. 4, 262 (2013).

35. Wyart V., Tallon-Baudry C., Neural dissociation between visual awareness and spatial attention. J. Neurosci. 28, 2667–2679 (2008).

36. Ai L., Ro T., The phase of prestimulus alpha oscillations affects tactile perception. J. Neurophysiol. 111, 1300–1307 (2014).

37. Palva S., Linkenkaer-Hansen K., Näätänen R., Palva J. M., Early neural correlates of conscious somatosensory perception. J. Neurosci. 25, 5248–5258 (2005).

38. Wühle A., Mertiens L., Rüter J., Ostwald D., Braun C., Cortical processing of near-threshold tactile stimuli: An MEG study. Psychophysiology 47, 523–534 (2010).

39. Schurger A., Sarigiannidis I., Naccache L., Sitt J. D., Dehaene S., Cortical activity is more stable when sensory stimuli are consciously perceived. Proc. Natl. Acad. Sci. U.S.A. 112, E2083–E2092 (2015).

40. Turella L., et al., Beta band modulations underlie action representations for movement planning. Neuroimage 136, 197–207 (2016).

41. Martin Cichy R., Khosla A., Pantazis D., Oliva A., Dynamics of scene representations in the human brain revealed by magnetoencephalography and deep neural networks. Neuroimage 153, 346–358 (2017).

42. Kaiser D., Oosterhof N. N., Peelen M. V., The neural dynamics of attentional selection in natural scenes. J. Neurosci. 36, 10522–10528 (2016).

43. Grootswagers T., Wardle S. G., Carlson T. A., Decoding dynamic brain patterns from evoked responses: A tutorial on multivariate pattern analysis applied to time series neuroimaging data. J. Cogn. Neurosci. 29, 677–697 (2017).

44. Sergent C., Dehaene S., Neural processes underlying conscious perception: Experimental findings and a global neuronal workspace framework. J. Physiol. Paris 98, 374–384 (2004).

45. Polich J., Updating P300: An integrative theory of P3a and P3b. Clin. Neurophysiol. 118, 2128–2148 (2007).

46. Kucyi A., Hodaie M., Davis K. D., Lateralization in intrinsic functional connectivity of the temporoparietal junction with salience- and attention-related brain networks. J. Neurophysiol. 108, 3382–3392 (2012).

47. Chen T., Cai W., Ryali S., Supekar K., Menon V., Distinct global brain dynamics and spatiotemporal organization of the salience network. PLoS Biol. 14, e1002469 (2016).

48. Menon V., Uddin L. Q., Saliency, switching, attention and control: A network model of insula function. Brain Struct. Funct. 214, 655–667 (2010).

49. Rubia K., et al., Mapping motor inhibition: Conjunctive brain activations across different versions of go/no-go and stop tasks. Neuroimage 13, 250–261 (2001).

50. Donner T. H., Siegel M., Fries P., Engel A. K., Buildup of choice-predictive activity in human motor cortex during perceptual decision making. Curr. Biol. 19, 1581–1585 (2009).

51. Mostert P., Kok P., de Lange F. P., Dissociating sensory from decision processes in human perceptual decision making. Sci. Rep. 5, 18253 (2015).

52. Smith F. W., Goodale M. A., Decoding visual object categories in early somatosensory cortex. Cereb. Cortex 25, 1020–1031 (2015).

53. Langner R., et al., Modality-specific perceptual expectations selectively modulate baseline activity in auditory, somatosensory, and visual cortices. Cereb. Cortex 21, 2850–2862 (2011).

54. Pooresmaeili A., et al., Cross-modal effects of value on perceptual acuity and stimulus encoding. Proc. Natl. Acad. Sci. U.S.A. 111, 15244–15249 (2014).

55. Felleman D. J., Van Essen D. C., Distributed hierarchical processing in the primate cerebral cortex. Cereb. Cortex 1, 1–47 (1991).

56. Lemus L., Hernández A., Luna R., Zainos A., Romo R., Do sensory cortices process more than one sensory modality during perceptual judgments? Neuron 67, 335–348 (2010).

57. Liang M., Mouraux A., Hu L., Iannetti G. D., Primary sensory cortices contain distinguishable spatial patterns of activity for each sense. Nat. Commun. 4, 1979 (2013).

58. Vetter P., Smith F. W., Muckli L., Decoding sound and imagery content in early visual cortex. Curr. Biol. 24, 1256–1262 (2014).

59. Ghazanfar A. A., Schroeder C. E., Is neocortex essentially multisensory? Trends Cogn. Sci. 10, 278–285 (2006).

60. Rohe T., Noppeney U., Distinct computational principles govern multisensory integration in primary sensory and association cortices. Curr. Biol. 26, 509–514 (2016).

61. King J.-R., Pescetelli N., Dehaene S., Brain mechanisms underlying the brief maintenance of seen and unseen sensory information. Neuron 92, 1122–1134 (2016).

62. Kontsevich L. L., Tyler C. W., Bayesian adaptive estimation of psychometric slope and threshold. Vision Res. 39, 2729–2737 (1999).

63. Brainard D. H., The psychophysics toolbox. Spat. Vis. 10, 433–436 (1997).

64. Oostenveld R., Fries P., Maris E., Schoffelen J.-M., FieldTrip: Open source software for advanced analysis of MEG, EEG, and invasive electrophysiological data. Comput. Intell. Neurosci. 2011, e156869 (2010).

65. Oosterhof N. N., Connolly A. C., Haxby J. V., CoSMoMVPA: Multi-modal multivariate pattern analysis of neuroimaging data in Matlab/GNU octave. Front. Neuroinform. 10, 27 (2016).

66. Gross J., et al., Good practice for conducting and reporting MEG research. Neuroimage 65, 349–363 (2013).

67. Van Veen B. D., van Drongelen W., Yuchtman M., Suzuki A., Localization of brain electrical activity via linearly constrained minimum variance spatial filtering. IEEE Trans. Biomed. Eng. 44, 867–880 (1997).

68. Nolte G., The magnetic lead field theorem in the quasi-static approximation and its use for magnetoencephalography forward calculation in realistic volume conductors. Phys. Med. Biol. 48, 3637–3652 (2003).

69. Van Essen D. C., et al., An integrated software suite for surface-based analyses of cerebral cortex. J. Am. Med. Inform. Assoc. 8, 443–459 (2001).

70. Tzourio-Mazoyer N., et al., Automated anatomical labeling of activations in SPM using a macroscopic anatomical parcellation of the MNI MRI single-subject brain. Neuroimage 15, 273–289 (2002).

71. Maris E., Oostenveld R., Nonparametric statistical testing of EEG- and MEG-data. J. Neurosci. Methods 164, 177–190 (2007).

72. Smith S. M., Nichols T. E., Threshold-free cluster enhancement: Addressing problems of smoothing, threshold dependence and localisation in cluster inference. Neuroimage 44, 83–98 (2009).

73. Pernet C. R., Latinus M., Nichols T. E., Rousselet G. A., Cluster-based computational methods for mass univariate analyses of event-related brain potentials/fields: A simulation study. J. Neurosci. Methods 250, 85–93 (2015).
