Abstract

The amygdala is known as a key brain region involved in the explicit and implicit processing of emotional faces, and plays a crucial role in salience detection. Not until recently was the mismatch negativity (MMN), a component of the event‐related potentials to an odd stimulus in a sequence of stimuli, utilized as an index of preattentive salience detection of emotional voice processing. However, their relationship remains to be delineated. This study combined the fMRI scanning and event‐related potential recording by examining amygdala reactivity in response to explicit and implicit (backward masked) perception of fearful and angry faces, along with recording MMN in response to the fearfully and angrily spoken syllables dada in healthy subjects who varied in trait anxiety (STAI‐T). Results indicated that the amplitudes of fearful MMN were positively correlated with left amygdala reactivity to explicit perception of fear, but negatively correlated with right amygdala reactivity to implicit perception of fear. The fearful MMN predicted STAI‐T along with left amygdala reactivity to explicit fear, whereas the association between fearful MMN and STAI‐T was mediated by right amygdala reactivity to implicit fear. These findings suggest that amygdala reactivity in response to explicit and implicit threatening faces exhibits opposite associations with emotional MMN. In terms of emotional processing, MMN not only reflects preattentive saliency detection but also stands at the crossroads of explicit and implicit perception. Hum Brain Mapp 38:140–150, 2017. © 2016 Wiley Periodicals, Inc.

Keywords: mismatch negativity, amygdala reactivity, explicit and implicit emotional processing

INTRODUCTION

General consensus holds that the amygdala is a key brain region involved in the explicit and implicit processing of emotional faces, and plays a crucial role in salience detection [Adolphs, 2010]. The explicit and implicit processing of emotional faces can be elicited by conscious and non‐conscious (backward masked) perception, respectively [Tamietto and de Gelder, 2010]. Voices, like faces, convey socially relevant information [Belin et al., 2004]. Only recently has the mismatch negativity (MMN) been utilized to index the salience of emotional voice processing [Cheng et al., 2012; Schirmer et al., 2005]. MMN in response to pure tones may reflect the borderline between automatic and attention‐dependent processes [Näätänen et al., 2011]. It is thus reasonable to propose that amygdala reactivity in response to explicit and implicit emotional processing should be associated with emotional MMN.

The magnitude of amygdala reactivity in response to threatening (angry, fearful) faces has been positively associated with individual variability in the indices of trait anxiety [Etkin et al., 2004; Most et al., 2006]. Using fMRI in conjunction with backward masked stimulus presentation represents the way toward determining the role of the amygdala in implicit (non‐conscious) processing [Whalen et al., 1998]. Amygdala reactivity exhibits lateralization according to the awareness level, as shown by the left for explicit (conscious) and the right for implicit (non‐conscious) processing [Morris et al., 1998]. In addition, the serotonin transporter polymorphism (5‐HTTLPR), known as a potential genetic contributor to trait anxiety, can account for 4.6% to 10% of the variance in the amygdala reactivity to threatening faces [Munafò et al., 2008; Murphy et al., 2013]. Among the various models proposed for 5‐HTTLPR‐dependent modulation of amygdala reactivity, the tonic model explains higher negative emotionality in risk allele carriers in terms of higher deactivation of amygdala responses to neutral stimuli, whereas the phasic model posits higher responses to threatening stimuli per se [Canli and Lesch, 2007; Canli et al., 2005].

MMN is a component of the event‐related potential (ERP) elicited by an odd stimulus in a sequence of stimuli, regardless of whether the subjects are paying attention to the sequence [Näätänen et al., 1978]. Lying at the borderline between automatic and attention‐dependent processes, MMN can lead to conscious perception of auditory changes [Näätänen et al., 2011]. Recent studies suggest that, in addition to many basic features of sounds such as frequency, duration, or phonetic content, MMN can also be utilized as an index of the salience of emotional voice processing [Chen et al., 2014; Cheng et al., 2012; Fan and Cheng, 2014; Fan et al., 2013]. In particular, one fMRI study reported that the unexpected presence of emotionally spoken voices embedded in a passive auditory oddball paradigm could evoke a hemodynamic response in the amygdala [Schirmer et al., 2008]. Testosterone administration altered MMN in response to emotional syllables, but not to acoustically matched nonvocal sounds, lending support to the involvement of the amygdala in the generator sources of emotional MMN [Chen et al., 2015].

To delineate the relationship between amygdala reactivity and emotional MMN, we combined fMRI scanning and ERP recording by examining the amygdala reactivity in response to explicit and implicit (backward masked) perception of angry and fearful faces, as well as recording MMN to angrily and fearfully spoken syllables dada in healthy volunteers, who varied in trait anxiety. If emotional MMN were comparable with the electrophysiological analogue of amygdala reactivity, we hypothesized that the hemodynamic response to threatening faces would be associated with the amplitude of MMN to threatening syllables. Considering MMN as the borderline between automatic and attention‐dependent processes in audition [Näätänen et al., 2011], we proposed that such associations would be distinct between explicit and implicit processing. Furthermore, we performed path analyses to examine the directionality of emotional MMN influences on amygdala reactivity and trait anxiety.

MATERIALS AND METHODS

Participants

Thirty healthy volunteers (16 males), aged 20 to 34 (23.9 ± 3.0) years, participated in the study after providing written informed consent. All participants completed both the ERP and fMRI experiments. All participants were ethnic Chinese and right‐handed. All participants were prescreened to exclude comorbid psychiatric/neurological disorders (e.g., dementia, seizures), history of head injury, and alcohol or substance abuse or dependence within the past 5 years. All of them had normal peripheral hearing bilaterally (pure tone average threshold < 15 dB HL) and normal or corrected‐to‐normal vision at the time of testing. This study was approved by the Ethics Committee of National Yang‐Ming University Hospital and conducted in accordance with the Declaration of Helsinki.

Stimuli

The auditory stimuli for the ERP recording were the meaningless syllables dada, produced by a female speaker from a performing arts school with three sets of emotional (fearful, angry, neutral) prosodies. Within each set of emotional prosody, the speaker produced the syllables dada more than 10 times. Syllables were edited to become equally long (550 msec) and loud (min: 57 dB, max: 62 dB; mean: 59 dB) with the use of Cool Edit Pro 2.0 (Syntrillium Software Corporation, Phoenix, Arizona, USA) and Sound Forge 9.0 (Sony Corporation, Tokyo, Japan). Each syllable set was rated for emotionality on a 5‐point Likert scale. The syllables that were identified as the most fearful, the most angry, and the most emotionless were selected as the stimuli. The ratings on the Likert scale (mean ± SD) of fearful, angry, and neutral syllables were 4.34 ± 0.65, 4.26 ± 0.85, and 2.47 ± 0.87, respectively [see Chen et al., 2014, 2015, 2016a, 2016b; Cheng et al., 2012; Fan et al., 2013; Hung et al., 2013; Hung and Cheng, 2014 for validation].

The visual stimuli for the fMRI scanning consisted of black and white pictures of male and female faces showing fearful, angry, and neutral facial expressions, which were chosen from the Pictures of Facial Affect [Ekman and Friesen, 1976]. Faces were cropped into an elliptical shape to eliminate background, hair, and jewelry cues, and were oriented to maximize inter‐stimulus alignment of eyes and mouths.

Procedures

The sequence of ERP recording and fMRI scanning was counter‐balanced between participants. Participants were randomly assigned to one of two experimental sequences: half of them underwent the ERP recording first, and the other half underwent the fMRI scanning first. After ERP recording and fMRI scanning, the State‐Trait Anxiety Inventory (STAI) was administered to the subjects to determine their self‐reported anxiety levels [Spielberger et al., 1970]. The STAI consists of two 20‐item scales with a range of four possible responses to each item. One scale on state anxiety (STAI‐S) assesses anxiety in specific situations, including fear, nervousness, discomfort, and overarousal of the autonomic nervous system temporarily induced by situations perceived as dangerous. The other scale on trait anxiety (STAI‐T) assesses anxiety as a general trait, which is a relatively enduring disposition to feel stress, worry, and discomfort. Given that scores in the top range of trait anxiety might indicate individuals with unreported anxiety disorders, we used a structured clinical interview to ensure that no participant showed evidence of an anxiety disorder [First et al., 1996]. In addition, there was an interval of at least one week between ERP recording and fMRI scanning.

The ERP recording was conducted in a sound‐attenuated, dimly lit, and electrically shielded room. Stimuli were presented binaurally via two loudspeakers placed approximately 30 cm from the right and left sides of the subject's head. The sound pressure level (SPL) peaks of different types of stimuli were equalized to eliminate the effect of the substantially greater energy of the angry stimuli. The mean background noise level was around 35 dB SPL. During ERP recording, the participants were required to watch a silent movie with subtitles while task‐irrelevant emotional syllables in oddball sequences were presented. The oddball paradigm used fearfully and angrily spoken syllables dada as deviants and neutrally spoken syllables dada as standards. There were two blocks in total. Each block consisted of 450 trials, of which 80% were neutral syllables, 10% were fearful syllables, and the other 10% were angry syllables. The sequences of stimuli were quasi‐randomized such that successive deviant stimuli were avoided. The stimulus‐onset asynchrony was 1,200 ms, including a stimulus length of 550 ms and an inter‐stimulus interval of 650 ms.
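The quasi‐randomization described above can be illustrated with a minimal sketch. The function name, the seed, and the slot‐based construction are assumptions made for illustration; the original sequences were generated in Matlab and the exact algorithm is not reported. The sketch places each deviant directly after a distinct standard, which guarantees that no two deviants are adjacent.

```python
import random

SOA_MS = 1200  # stimulus-onset asynchrony: 550 ms syllable + 650 ms interval


def make_oddball_block(n_trials=450, p_fearful=0.10, p_angry=0.10, seed=0):
    """Return a list of trial labels with no two deviants in succession.

    Hypothetical helper: each deviant is inserted after a distinct standard,
    so every deviant is preceded by a neutral standard.
    """
    rng = random.Random(seed)
    n_fearful = round(n_trials * p_fearful)
    n_angry = round(n_trials * p_angry)
    n_deviants = n_fearful + n_angry
    n_standards = n_trials - n_deviants

    deviants = ["fearful"] * n_fearful + ["angry"] * n_angry
    rng.shuffle(deviants)

    # Pick which standards (1-based position among standards) are followed
    # by a deviant; positions are distinct, so deviants never touch.
    slots = set(rng.sample(range(1, n_standards + 1), n_deviants))

    sequence = []
    next_deviant = iter(deviants)
    for position in range(1, n_standards + 1):
        sequence.append("neutral")
        if position in slots:
            sequence.append(next(next_deviant))
    return sequence


block = make_oddball_block()
onsets_ms = [i * SOA_MS for i in range(len(block))]  # onset of each syllable
```

With the default arguments, one block contains 360 standards and 45 deviants of each type, and lasts 450 × 1.2 s = 540 s.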

For the fMRI scanning, the paradigm was derived from the work by Etkin et al. [2004]. The color identification task was explained to the subjects, and they were told to focus on the faces and identify their color within the fMRI scanner. Each stimulus presentation involved a 200 ms fixation to cue subjects to focus on the center of the screen, followed by a 400 ms blank screen and 200 ms of face presentation. Subjects then had 1,130 ms to respond with a key press indicating the color of the face. Non‐masked stimuli consisted of 200 ms of an emotional (fearful or angry) or neutral expression face, while backward masked stimuli consisted of 17 ms of an emotional or neutral face, followed by 183 ms of a neutral face mask belonging to a different individual, but of the same color and gender. 17 ms is sufficient to block the explicit recognition of emotional faces for most people [Kim et al., 2010; Milders et al., 2008]. Each epoch consisted of six trials of the same stimulus type, but randomized with respect to color and gender. The functional run had 12 epochs (two for each stimulus type) that were pseudo‐randomized for the stimulus type. To avoid stimulus order effects, we used two different counterbalanced run orders. Stimuli were presented using Matlab software (MathWorks, Sherborn, MA, USA) and were triggered by the first radio frequency pulse for the functional run. The stimuli were displayed on VisuaStim XGA LCD screen goggles (Resonance Technology, Northridge, CA). The screen resolution was 800 × 600, with a refresh rate of 60 Hz. Behavioral responses were recorded by the fORP interface unit and saved in the Matlab program. Prior to the functional run, subjects were trained in the color identification task using unrelated face stimuli that were cropped, colorized, and presented in the same manner as the non‐masked faces described above to avoid any learning effects during the functional run. 
Reaction time data for each stimulus type were determined only for trials where subjects correctly identified the color of the faces (0.94 ± 0.25 s). The average accuracy (± SEM) for all stimuli was 89% ± 1%. Reaction time difference scores were calculated by subtracting each subject's average reaction time for the IN (implicit neutral) or EN (explicit neutral) trials from the corresponding IF (implicit fearful) and IA (implicit angry), or EF (explicit fearful) and EA (explicit angry), average reaction times, respectively.
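As a minimal sketch of the difference scores described above, the function below subtracts the matching neutral baseline (EN for explicit, IN for implicit conditions) from each emotional condition for one subject. The function name and the input layout (a dictionary of correct‐trial reaction times in seconds keyed by condition code) are assumptions for illustration, and the example values are invented.

```python
from statistics import mean


def rt_difference_scores(correct_rts):
    """Emotional-minus-neutral reaction time differences for one subject.

    correct_rts: dict mapping 'EF', 'EA', 'EN', 'IF', 'IA', 'IN' to lists of
    reaction times (s) from correctly answered trials only.
    """
    baseline = {"E": mean(correct_rts["EN"]), "I": mean(correct_rts["IN"])}
    return {
        cond: mean(correct_rts[cond]) - baseline[cond[0]]
        for cond in ("EF", "EA", "IF", "IA")
    }


# Invented example data for a single hypothetical subject.
example = {
    "EN": [0.90, 1.00], "EF": [1.00, 1.10], "EA": [0.95, 0.95],
    "IN": [0.92, 0.96], "IF": [0.94, 0.98], "IA": [0.90, 0.94],
}
scores = rt_difference_scores(example)
```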

Immediately after fMRI scanning, subjects underwent the detection task, in which subjects were shown all of the stimuli again, alerted to the presence of emotional (fearful or angry) faces. Subjects were administered a forced‐choice test under the same presentation conditions as during scanning and asked to indicate whether they saw an emotional face or not. The detection task was designed to assess possible awareness of the masked emotional faces. The chance level for correct answers was 33.3%. Better than chance performance was determined by calculation of a detection sensitivity index (d′) based on the percentage of trials a masked stimulus was detected when presented [“hits” (H)] adjusted for the percentage of trials a masked stimulus was “detected” when not presented [“false alarms” (FA)]; [d′ = z‐score (percentage H) − z‐score (percentage FA), with chance performance = 0 ± 1.74] [Whalen et al., 2004]. Each subject's detection sensitivity was calculated separately for each of the stimulus categories and then averaged.
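The detection sensitivity index defined above, d′ = z(hit rate) − z(false‐alarm rate), can be sketched as follows. The function name and the count‐based interface are assumptions for illustration. Clipping rates of exactly 0 or 1 to 1/(2N) and 1 − 1/(2N) is a common convention that is assumed here because the inverse normal is undefined at those values; the source does not state how such cases were handled.

```python
from statistics import NormalDist


def d_prime(hits, misses, false_alarms, correct_rejections):
    """Detection sensitivity: z(hit rate) - z(false-alarm rate)."""
    n_signal = hits + misses
    n_noise = false_alarms + correct_rejections

    def clipped_rate(count, total):
        # Assumed convention: keep rates away from 0 and 1 so that the
        # inverse normal CDF stays finite.
        rate = count / total
        return min(max(rate, 1 / (2 * total)), 1 - 1 / (2 * total))

    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    return z(clipped_rate(hits, n_signal)) - z(clipped_rate(false_alarms, n_noise))
```

A subject who reports "emotional" equally often for masked emotional and masked neutral faces obtains d′ = 0, which corresponds to no awareness of the masked expression.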

Electroencephalogram Apparatus, Recording, and Data Analysis

The electroencephalogram (EEG) was continuously recorded from 32 scalp sites using electrodes mounted in an elastic cap and positioned according to the modified International 10–20 system, with the addition of two mastoid electrodes. The electrode at the right mastoid (A2) was used as the on‐line reference. Eye blinks and eye movements were monitored with electrodes located above and below the left eye. The horizontal electro‐oculogram was recorded from electrodes placed 1.5 cm lateral to the left and right external canthi. A ground electrode was placed on the forehead. Electrode/skin impedance was kept < 5 kΩ. Channels were re‐referenced off‐line to the average of left and right mastoid recordings [(A1 + A2)/2]. Signals were sampled at 250 Hz, band‐pass filtered (0.1–100 Hz), and epoched over an analysis time of 900 ms, including a 100 ms pre‐stimulus period used for baseline correction. An automatic artifact rejection system excluded from the average all trials containing transients exceeding ±100 μV at any recording electrode. Furthermore, the quality of ERP traces was ensured by careful visual inspection of every subject and trial and by applying an appropriate digital, zero‐phase shift band‐pass filter (0.1–50 Hz, 24 dB/octave). The first ten trials were omitted from the averaging to exclude unexpectedly large responses elicited by the initiation of the sequences. The paradigm was edited with Matlab software (The MathWorks). Each event in the paradigm was associated with a digital code that was sent to the continuous EEG, allowing off‐line segmentation and averaging of selected EEG periods for analysis. The ERP data were processed and analyzed with Neuroscan 4.3 (Compumedics, Australia).
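Three of the steps described above (linked‐mastoid re‐referencing, baseline correction over the 100 ms pre‐stimulus period, and amplitude‐based artifact rejection) can be sketched in plain Python. This is an illustration only: the actual processing was done in Neuroscan 4.3, the function names are hypothetical, and the order of operations within the pipeline is an assumption.

```python
from statistics import mean

FS_HZ = 250                                # sampling rate
BASELINE_MS = 100                          # pre-stimulus baseline
N_BASELINE = FS_HZ * BASELINE_MS // 1000   # 25 samples at 4 ms each


def rereference(channel, a1, a2):
    """Re-reference one channel to the mastoid average (A1 + A2) / 2.

    With A2 as the on-line reference, the recorded A2 trace is all zeros,
    so this amounts to subtracting half of the A1 trace.
    """
    return [v - (left + right) / 2.0 for v, left, right in zip(channel, a1, a2)]


def baseline_correct(epoch, n_baseline=N_BASELINE):
    """Subtract the mean of the pre-stimulus samples from the whole epoch."""
    offset = mean(epoch[:n_baseline])
    return [v - offset for v in epoch]


def has_artifact(epoch_by_channel, threshold_uv=100.0):
    """True if any sample on any channel exceeds +/- threshold_uv."""
    return any(
        abs(v) > threshold_uv
        for samples in epoch_by_channel.values()
        for v in samples
    )
```

An epoch flagged by `has_artifact` would be dropped before averaging; the remaining epochs would be averaged per condition to obtain the ERPs.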

The amplitudes of MMN were defined as the average within a 50‐ms time window surrounding the peak at the electrode sites (F3, Fz, F4, C3, Cz, C4). The peak of MMN was defined as the largest negativity in the deviant‐minus‐standard difference wave between 150 and 350 ms after stimulus onset. Only the standards preceding the deviants were included in the analysis. Statistical analysis of MMN was conducted using a repeated‐measures ANOVA with the within‐subject factors deviant type (fearful or angry), coronal site (left, midline, right), and anterior‐posterior site (frontal, central). Degrees of freedom were corrected using the Greenhouse‐Geisser method. Post hoc comparisons were conducted only when preceded by significant main effects.
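The MMN amplitude definition above can be sketched for a single electrode as follows. The sketch assumes averaged ERPs sampled at 250 Hz over an epoch that starts 100 ms before stimulus onset, as described in the recording section; the function name is hypothetical, and at 4 ms per sample the 50‐ms window is approximated by 6 samples on each side of the peak.

```python
import math

FS_HZ = 250            # sampling rate, 4 ms per sample
EPOCH_START_MS = -100  # epoch begins 100 ms before stimulus onset


def mmn_amplitude(deviant_erp, standard_erp, search_ms=(150, 350), window_ms=50):
    """Return (mean amplitude around the peak, peak latency in ms)."""
    # Difference wave: deviant minus standard.
    diff = [d - s for d, s in zip(deviant_erp, standard_erp)]

    # Sample indices that fall inside the search window.
    first = math.ceil((search_ms[0] - EPOCH_START_MS) * FS_HZ / 1000)
    last = math.floor((search_ms[1] - EPOCH_START_MS) * FS_HZ / 1000)

    # Peak = most negative point of the difference wave in that window.
    peak = min(range(first, last + 1), key=lambda i: diff[i])

    # Average over roughly window_ms centered on the peak.
    half = int(window_ms / 2 * FS_HZ / 1000)
    window = diff[max(0, peak - half): peak + half + 1]
    latency_ms = EPOCH_START_MS + peak * 1000 / FS_HZ
    return sum(window) / len(window), latency_ms
```

Averaging around the peak rather than taking the single peak sample makes the measure less sensitive to residual high‐frequency noise in the difference wave.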

fMRI Data Acquisition, Image Processing, and Analysis

Functional and structural MRI data were acquired on a 3T MRI scanner (Siemens Magnetom Tim Trio, Erlangen, Germany) equipped with a high‐resolution 12‐channel head array coil. A gradient‐echo, T2*‐weighted echoplanar imaging sequence with blood oxygen level‐dependent (BOLD) contrast was used for functional data. To optimize the BOLD signal in the amygdala [Morawetz et al., 2008], 29 interleaved slices were acquired along the AC‐PC plane, with a 96 × 128 matrix, 19.2 × 25.6 cm² field of view (FOV), and voxel size 2 × 2 × 2 mm, resulting in a total of 144 volumes for the functional run (TR = 2 s, TE = 36 ms, flip angle = 70°, slice thickness 2 mm, no gap). Parallel imaging (GRAPPA) with factor 2 was used to speed up acquisition. Structural data were acquired using a magnetization‐prepared rapid gradient echo sequence (TR = 2.53 s, TE = 3.03 ms, FOV = 256 × 224 mm², flip angle = 7°, matrix = 224 × 256, voxel size = 1.0 × 1.0 × 1.0 mm³, 192 sagittal slices/slab, slice thickness = 1 mm, no gap).
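The functional acquisition parameters above are mutually consistent, which a short arithmetic check makes explicit. The variable names are chosen for illustration; all numbers are taken from the paragraph above.

```python
# Functional EPI parameters as reported in the text.
fov_mm = (192.0, 256.0)   # 19.2 x 25.6 cm field of view
matrix = (96, 128)        # acquisition matrix
n_slices = 29
slice_thickness_mm = 2.0  # no gap between slices
n_volumes = 144
tr_s = 2.0

# In-plane voxel size is field of view divided by matrix size.
in_plane_mm = tuple(f / m for f, m in zip(fov_mm, matrix))

# Slab coverage along the slice direction and total run duration.
coverage_mm = n_slices * slice_thickness_mm
run_duration_s = n_volumes * tr_s
```

The in‐plane resolution works out to 2 × 2 mm, matching the stated voxel size; the slab covers 58 mm, and the functional run lasts 288 s.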

Image processing and analysis were carried out using SPM8 (Wellcome Department of Imaging Neuroscience, London, UK). Using MarsBar (see http://marsbar.sourceforge.net/), ROIs were drawn from the right and left amygdala according to a prior study [Dannlowski et al., 2007]. Signal across all voxels within a radius of 6 mm in these ROIs was averaged and evaluated for the masked and nonmasked emotional (fearful and angry) comparisons. Low‐frequency signal drift was corrected by applying a high‐pass temporal filter with a 128 s cutoff. The general linear model was used for statistical analyses. Regressors were created for each event type in a block design with 12 s “activity on” periods. These regressors were then convolved with a canonical hemodynamic response function. Shorthand [e.g., EF (explicit fearful) − EN (explicit neutral)] was used to indicate the contrasts of regressors (e.g., explicit fearful blocks > explicit neutral blocks). Error bars signify SEM. To isolate the effects of emotional content of stimuli from other aspects of the stimuli and the task, we subtracted neutral [EN (explicit neutral)] or masked neutral activity [IN (implicit neutral)] from emotional [EF (explicit fearful); EA (explicit angry)] or masked emotional activity [IF (implicit fearful); IA (implicit angry)], respectively. The explicit perception of fearful and angry faces was denoted as nonmasked fear and anger (EF − EN, EA − EN) and the implicit perception of fearful and angry faces as masked fear and anger (IF − IN, IA − IN). For these a priori ROIs, small volume corrections were applied with a statistical threshold of P = 0.05 (FWE corrected).

Relations between Emotional MMN, Amygdala Reactivity, and Trait Anxiety

Path analysis was performed to test the directionality of emotional MMN and amygdala reactivity influences on trait anxiety (STAI‐T). The fearful MMN was treated as the independent variable. Amygdala reactivity was treated as a latent variable, and errors were assumed to be uncorrelated. To avoid overfitting, models with more than two paths were not evaluated. The Bayesian information criterion (BIC) was used to evaluate model fit, with the best‐fitting model having the lowest BIC value [Raftery, 1993]. Moreover, the best‐fitting model was required to fulfill the criteria of a χ2 statistic corresponding to P > 0.05 and a standardized root mean square residual value of < 0.08. Statistical analyses were performed using SPSS 17.0 and IBM SPSS AMOS 23.0. To test whether the influence of emotional MMN on trait anxiety (STAI‐T) was dependent on its influence on amygdala reactivity, path analysis was performed with the aim of finding the best‐fitting model from three possible candidates: (1) fearful MMN → amygdala reactivity → STAI‐T; (2) fearful MMN → STAI‐T → amygdala reactivity; (3) fearful MMN → amygdala reactivity, fearful MMN → STAI‐T.

RESULTS

Behavioral Performance

The STAI assessments before fMRI scanning and ERP recording, respectively, indicated that neither the state anxiety scores [t(29) = −0.7, P = 0.95] nor the trait anxiety scores [t(29) = 0.13, P = 0.9] differed between the two experimental sessions.

For the color identification task within the fMRI scanner, the accuracy did not differ between explicit and implicit conditions [t(29) = −0.64, P > 0.05]. For the emotion detection task outside the fMRI scanner, according to a one‐tailed binomial model, scores of 28 hits (42.2% hit rate) and above were considered significantly over chance level (33%). All subjects performed above chance in the explicit condition (d′, mean ± SD: 1.51 ± 0.31), but below chance in the implicit condition (0.07 ± 0.09).

Neurophysiological Measures of Preattentive Discrimination of Emotional Voices

Preattentive discrimination of emotional voices was studied using MMN, determined by subtracting the neutral ERP from angry and fearful ERPs (Supporting Information Table s1). The three‐way repeated‐measures ANOVA showed main effects of deviant type (fearful vs. angry) [F(1, 29) = 5.49, P = 0.026, partial η² = 0.16] and coronal site (left, midline, right) [F(2, 58) = 19.39, P < 0.001, partial η² = 0.4]. MMN to fearful deviants (mean ± SE, 4.79 ± 0.367 μV) was significantly larger in amplitude than MMN to angry deviants (4.22 ± 0.327 μV). The midline electrodes (4.8 ± 0.35 μV) displayed larger MMN than the right (4.51 ± 0.35 μV) and left electrodes (4.16 ± 0.3 μV), irrespective of fearful or angry deviants. There was a significant interaction of anterior‐posterior × coronal site [F(2, 58) = 5.51, P = 0.006, partial η² = 0.16]. Post hoc analyses revealed that frontal and central electrodes were comparable at the right site (frontal: 4.41 ± 0.39 μV; central: 4.62 ± 0.32 μV), but differed significantly at the left (P = 0.003) and midline electrodes (P = 0.002). Additionally, the amplitudes of fearful MMN (fearful vs. neutral) were significantly associated with trait anxiety (r = −0.45, P = 0.01) (Fig. 1). Smaller amplitudes of fearful MMN predicted higher trait anxiety.
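The effect sizes that follow each P value in the ANOVA results above match partial eta squared recomputed from the reported F statistics and degrees of freedom. The short check below uses the standard identity partial η² = (F · df_effect) / (F · df_effect + df_error); the function name is chosen for illustration.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its dfs."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


deviant_type = partial_eta_squared(5.49, 1, 29)    # main effect of deviant type
coronal_site = partial_eta_squared(19.39, 2, 58)   # main effect of coronal site
interaction = partial_eta_squared(5.51, 2, 58)     # anterior-posterior x coronal
```

Rounded to two decimals, the three values are 0.16, 0.40, and 0.16, in agreement with the reported effect sizes.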

Figure 1.

Emotional MMN and trait anxiety. A. Subtracting neutral ERP from fearful and angry ERPs determines fearful and angry MMN, respectively. B. Weaker fearful MMN at Cz predicted more trait anxiety on the STAI‐T.

Amygdala Reactivity

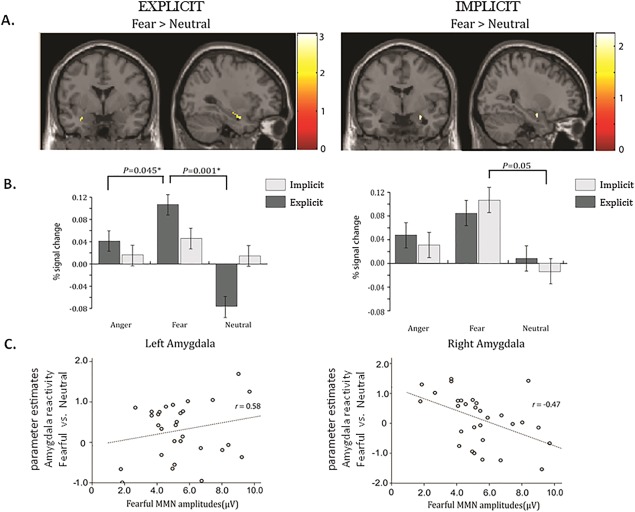

The voxel‐wise analysis showed significant activations in the fusiform gyrus to explicit and implicit perception of fearful faces (Supporting Information Table s2). Explicitly perceived, that is, nonmasked, fearful faces activated the left amygdala, whereas implicitly perceived, that is, masked, fearful faces activated the right amygdala (Fig. 2A). However, angry faces did not elicit any activation within the amygdala. ROI analyses confirmed that the explicitly perceived fearful relative to neutral faces [t(29) = 4.30, P = 0.001] elicited significantly stronger activation in the left amygdala, whereas implicitly perceived fearful relative to neutral faces elicited significantly stronger activation in the right amygdala [t(29) = 2.10, P = 0.05] (Fig. 2B).

Figure 2.

Explicit and implicit processing of fearful faces in left and right amygdala. A. The voxel‐wise analysis showed that explicitly perceived, that is, nonmasked, fearful faces activated the left amygdala, whereas implicitly perceived, that is, masked, fearful faces activated the right amygdala. B. The ROI analyses indicated that the explicitly perceived fearful relative to neutral faces (P = 0.001) elicited significantly stronger activation in the left amygdala, whereas implicitly perceived fearful relative to neutral faces elicited significantly stronger activation in the right amygdala (P = 0.05). C. Explicitly perceived negative emotionality (fearful vs. neutral) in the left amygdala was positively correlated with fearful MMN amplitudes (r = 0.58, P = 0.008), whereas the implicitly perceived emotionality in the right amygdala was negatively correlated with fearful MMN amplitudes (r = −0.47, P = 0.009). Fisher r‐to‐z transformation indicated that amygdala reactivity to explicitly and implicitly perceived emotionality independently predicted the fearful MMN amplitudes (Δz = 4.31, P < 0.01).

Additionally, the explicitly perceived negative emotionality (fearful vs. neutral) in the left amygdala was positively correlated with fearful MMN amplitudes (r = 0.58, P = 0.008), whereas the implicitly perceived emotionality in the right amygdala was negatively correlated with fearful MMN amplitudes (r = −0.47, P = 0.009) (Fig. 2C). Fisher r‐to‐z transformation indicated that the amygdala reactivity to explicitly and implicitly perceived emotionality independently predicted the fearful MMN amplitude (Δz = 4.31, P < 0.01).
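The Fisher r‐to‐z comparison reported above can be reproduced with a short sketch. It uses the formula for two correlations from independent samples, with n = 30 for each; this form is an assumption, chosen because it reproduces the reported Δz, and it is only an approximation here since both correlations were measured in the same participants. The function name is hypothetical.

```python
import math
from statistics import NormalDist


def compare_correlations(r1, n1, r2, n2):
    """Fisher r-to-z test for the difference between two correlations.

    Returns (z statistic, two-tailed P value), treating the two samples
    as independent.
    """
    z1, z2 = math.atanh(r1), math.atanh(r2)   # Fisher transformation
    se = math.sqrt(1 / (n1 - 3) + 1 / (n2 - 3))
    z = (z1 - z2) / se
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p


# Left amygdala (explicit) vs. right amygdala (implicit) correlations with
# fearful MMN amplitude, as reported in the text.
delta_z, p_value = compare_correlations(0.58, 30, -0.47, 30)
```

With these inputs the statistic is approximately 4.31, matching the reported Δz. A significant result indicates that the two correlation coefficients differ from each other.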

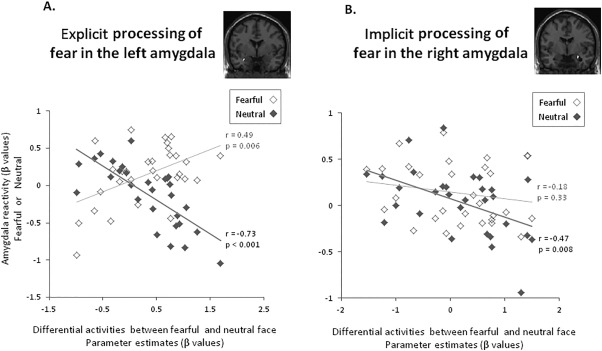

Furthermore, the amygdala reactivity to explicitly perceived emotionality (fearful vs. neutral faces) was positively correlated with the response to fearful faces (r = 0.49, P = 0.006) but negatively correlated with the response to neutral faces (r = −0.73, P < 0.001) (Fig. 3A). Fisher r‐to‐z transformation confirmed that the responses to fearful and to neutral faces each contributed independently to explicitly perceived emotionality (Δz = 5.38, P < 0.01). During the explicit processing of emotionality, a higher level of negative emotionality (fearful vs. neutral faces) was coupled with lower activation to neutral faces but higher activation to fearful faces. By contrast, the amygdala reactivity to implicitly perceived emotionality (fearful vs. neutral faces) was significantly correlated only with the response to neutral faces (r = −0.47, P = 0.008) and not with the response to fearful faces (r = −0.18, P = 0.33) (Fig. 3B). Fisher r‐to‐z transformation indicated that the correlation coefficients for the responses to neutral and fearful faces were not significantly different during implicitly perceived emotionality (Δz = 1.21, P = 0.23). During the implicit processing of emotionality, a higher level of negative emotionality (fearful vs. neutral faces) was coupled with lower activation to neutral faces.

Figure 3.

Negative emotionality (fearful vs. neutral) in the amygdala as a function of neutral and fearful face processing. A. The amygdala reactivity to explicitly perceived emotionality (fearful vs. neutral) was positively correlated with the response to fearful faces (r = 0.49, P = 0.006) but negatively correlated with the response to neutral faces (r = −0.73, P < 0.001). Fisher r‐to‐z transformation confirmed that the responses to fearful and to neutral faces each contributed independently to explicitly perceived emotionality (Δz = 5.38, P < 0.01). During the explicit processing of emotionally undefined stimuli, a higher level of negative emotionality (fearful vs. neutral faces) was coupled with lower activation to neutral faces but higher activation to fearful faces. B. By contrast, the amygdala reactivity to implicitly perceived emotionality (fearful vs. neutral) was negatively correlated only with the response to neutral faces (r = −0.47, P = 0.008), and was not correlated with the response to fearful faces (r = −0.18, P = 0.33). Fisher r‐to‐z transformation indicated that the correlation coefficients for the responses to neutral and fearful faces were not significantly different during implicitly perceived emotionality (Δz = 1.21, P = 0.23). During the implicit processing of emotionally undefined stimuli, a higher level of negative emotionality (fearful vs. neutral faces) was coupled with lower activation to neutral faces.

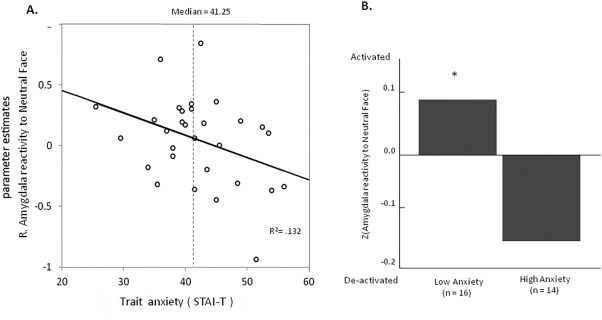

Figure 4A demonstrates that the amygdala reactivity to neutral faces was negatively correlated with the STAI‐T scores (trait anxiety) (r = −0.36, P = 0.048). We further divided participants into groups of relatively high and low trait anxiety based on a median split of STAI‐T scores (Median = 0.96; Mean ± SD = 0.96 ± 0.04). We normalized the individual amygdala reactivity and performed a non‐parametric one‐sample test (Wilcoxon signed‐rank test) separately for these groups. Results showed that the group with higher trait anxiety (n = 14) had a marginally significant de‐activation in the amygdala to neutral faces (Z = 1.32, P = 0.061), whereas the group with lower trait anxiety (n = 16) showed an increased activation in the amygdala to neutral faces (Z = 1.51, P = 0.022) (Fig. 4B).

Figure 4.

Amygdala reactivity to neutral stimuli and trait anxiety. A. Scatterplot showing the individual trait anxiety measurements plotted against the amygdala reactivity to neutral stimuli. The decreased amygdala activation to neutral stimuli, indicating higher baseline amygdala activation, was associated with higher levels of trait anxiety (r = −0.36, P = 0.048). B. The group of participants who reported higher trait anxiety than the median showed marginally significant de‐activation in the amygdala to neutral faces (Z = 1.32, P = 0.061), whereas the group of participants who reported lower trait anxiety showed increased activation in the amygdala to neutral faces (Z = 1.51, P = 0.022).

Relationship between Emotional MMN, Amygdala Reactivity, and Trait Anxiety

For the implicit condition, a multiple regression model predicting the right amygdala reactivity from fearful MMN at the selected electrodes indicated an independent contribution at C4 [β = −0.47, t(29) = 2.80, P = 0.009]. Such a relationship was not found in the left amygdala (P > 0.05). As for the explicit condition, a multiple regression model predicting the left amygdala reactivity from fearful MMN at the selected electrodes indicated an independent contribution at C3 [β = 0.39, t(29) = 2.29, P = 0.03]. Such a relationship was not found in the right amygdala (P > 0.05).

To test whether the influence of fearful MMN on trait anxiety (STAI‐T) was dependent on its influence on amygdala reactivity, path analysis was performed with the aim to find the best‐fitting model from three possible candidates: (1) fearful MMN → amygdala reactivity → STAI‐T; (2) fearful MMN → STAI‐T → amygdala reactivity; (3) fearful MMN → amygdala reactivity, fearful MMN → STAI‐T.

For the explicit condition, the lowest BIC value, indicating the best fit, was obtained for model (3) [P = 0.58; fearful MMN → amygdala reactivity; fearful MMN → STAI‐T]. Fearful MMN explained 15.8% of the variance in left amygdala reactivity and 24.7% of the variance in STAI‐T scores when the shared variance was partialed out (Supporting Information Figure s1A). As for the implicit condition, the best fit was obtained for model (1) [P = 0.25; fearful MMN → amygdala reactivity → STAI‐T]. Fearful MMN explained 16.5% of the variance in right amygdala reactivity, and right amygdala reactivity explained 13.2% of the variance in STAI‐T scores when the shared variance was partialed out (Supporting Information Figure s1B).

DISCUSSION

Previous literature suggests that the amygdala signals the emotional salience of faces and that MMN indexes the emotional salience of voices. Trait anxiety modulates the neural network underpinning the processing of emotional salience [Geng et al., 2015]. To clarify whether the amygdala reactivity in response to explicit and implicit emotional processing was associated with emotional MMN, we combined fMRI scanning and ERP recording in subjects who varied in trait anxiety.

Fearful MMN was negatively correlated with STAI‐T scores. Using a similar paradigm in individuals with autistic traits, a previous study reported that the amplitude of fearful MMN was negatively correlated with the severity of social deficits [Fan and Cheng, 2014]. Social deficits were closely coupled with anxiety [White and Roberson‐Nay, 2009]. It is thus reasonable to infer that participants with higher levels of self‐reported trait anxiety would be more likely to display reduced fearful MMN. Given its automatic nature, the emotional MMN might be used to index perceived emotional saliency associated with trait anxiety levels.

Amygdala reactivity to explicitly and implicitly perceived emotionality (fearful vs. neutral faces) was negatively correlated with the response to neutral faces. We interpret this association in terms of 5‐HTTLPR‐dependent modulation of amygdala reactivity. The tonic model attributes the higher negative emotionality of short allele carriers of the 5‐HTTLPR polymorphism to greater de‐activation of the amygdala in response to neutral stimuli, rather than to greater responses to threatening stimuli per se [Canli and Lesch, 2007]. In parallel, we found that the amygdala activation elicited by neutral faces was negatively associated with STAI‐T scores. During the implicit processing of emotionally undefined stimuli, a higher level of negative emotionality (fearful vs. neutral faces) was coupled with lower activation to neutral faces. Furthermore, individuals with the short allele tend to show higher levels of trait anxiety, as suggested by one meta‐analysis [Schinka et al., 2004]. It is therefore not surprising that participants who reported higher levels of trait anxiety showed a marginally significant de‐activation in the amygdala to neutral faces, whereas participants who reported lower trait anxiety showed increased activation in the amygdala to neutral faces.

Explicitly perceived fearful faces, relative to neutral faces, elicited significantly stronger activation in the left amygdala, whereas implicitly perceived fearful faces, relative to neutral faces, elicited significantly stronger activation in the right amygdala. In parallel, one previous fMRI study demonstrated that masked and unmasked presentations of angry faces produced significant activation in the right and left amygdala, respectively [Morris et al., 1998]. In assessing the influence of emotional cues on behavior, fearful and angry faces have frequently been presumed to signify threat to perceivers and are often combined into one category, that is, threatening faces [Marsh et al., 2005]. However, in the current study, angry faces did not elicit any activation within the amygdala. This finding supports the notion that fearful faces are particularly strong activators of the amygdala. Fearful faces might indicate an increased probability of threat, whereas angry faces could instantiate threat to a certain degree [Davis et al., 2011].

Notably, fearful MMN was found to have opposite correlations with left and right amygdala reactivity in response to explicit and implicit perception of fearful faces, respectively. Visual backward masking has often been used to study non‐conscious perception [Kouider and Dehaene, 2007]. The cognitive processes underlying conscious awareness may be better understood in terms of whether or not they depend on attention [Badgalyan, 2012]. The MMN paradigm classically requires participants to read a book or watch a movie. Although MMN has been supposed to be relatively automatic [Näätänen, 1992], available evidence about the effect of task demand on MMN is mixed. Some studies found that task load has no effect on MMN amplitude when auditory stimuli are ignored [Muller‐Gass et al., 2006; Otten et al., 2000]. In contrast, other studies suggested that task difficulty might affect the MMN response to a degree when varying visual tasks during continuous vigilance are used [Fan et al., 2013; Yucel et al., 2005; Zhang et al., 2006]. Theoretically, MMN is hypothesized to emerge on the borderline between automatic and attention‐dependent processes [Näätänen et al., 2011]. Furthermore, the path analyses indicated that whether amygdala reactivity arose from explicit or implicit processing could influence the directionality and strength of the associations between fearful MMN and STAI‐T. In the explicit condition, fearful MMN explained 15.8% of the variance in left amygdala reactivity along with 24.7% of the variance in STAI‐T scores. In the implicit condition, by contrast, fearful MMN explained 16.5% of the variance in right amygdala reactivity, and right amygdala reactivity explained 13.2% of the variance in STAI‐T scores. That is, in the explicit condition fearful MMN directly explained the variance in STAI‐T, whereas in the implicit condition the MMN effect on STAI‐T was mediated by right amygdala reactivity. In addition, the model fitting and path analyses revealed that the value of the Akaike information criterion (AIC) was approximately 2 for implicit processing, whereas it was higher than 4 for explicit processing. Given that a lower AIC value indicates a closer model fit, it is reasonable to infer that implicit perception explained the associations between fearful MMN and trait anxiety more clearly than did explicit perception.
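For reference, the two information criteria used in the model comparison are conventionally defined as below, where $\hat{L}$ is the maximized likelihood of a model, $k$ is its number of free parameters, and $n$ is the sample size. These are the textbook forms and are given only as an aid to reading; structural equation modeling software may report variants based on the model chi‐square, and which form underlies the values reported here is not specified in the text.

```latex
\mathrm{AIC} = 2k - 2\ln\hat{L}, \qquad \mathrm{BIC} = k\ln n - 2\ln\hat{L}
```

Under either criterion, the goodness‐of‐fit term $-2\ln\hat{L}$ is penalized by model complexity, so a lower value indicates a better trade‐off between fit and parsimony.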

Some limitations of this study must be acknowledged. First, imaging the amygdala with fMRI may be affected by multiple adverse factors, for instance, signal dropout resulting from magnetic field inhomogeneity and a low signal‐to‐noise ratio. The extent of head movement in relation to neutral or negative stimuli might also alter the amygdala signal. There are growing concerns about interpretations of amygdala function that rely on fMRI evidence alone. One recent study even reported that amygdala activations are likely confounded by signals originating in the basal vein of Rosenthal rather than in the amygdala itself [Boubela et al., 2015]. In this study, we examined the six parameters from SPM that describe the rigid‐body movements of each subject, and found that the movements were not statistically dependent on the neutral and emotional conditions (all P > 0.2). Second, given the sample size and ethnic background of the participants, generalization of the results may be limited. The present design may not be optimal, and future studies with multimodal imaging and larger samples are warranted.

Taken together, integrating the fMRI and ERP data, this study demonstrated that amygdala reactivity in response to explicit and implicit processing of emotional faces displays opposite associations with MMN to the unexpected presence of emotionally spoken voices embedded in a passive auditory oddball paradigm. We thus propose that emotional MMN should stand at the crossroads between explicit (conscious) and implicit (non‐conscious) emotional processing in the amygdala. Given its automaticity to perceived emotional saliency in terms of auditory changes [Garrido et al., 2009], emotional MMN might serve as a biomarker for trait anxiety.

Supporting information

ACKNOWLEDGMENT

None of the authors have any conflicts of interest to declare.

REFERENCES

- Adolphs R (2010): What does the amygdala contribute to social cognition? Ann N Y Acad Sci 1191:42–61.

- Badgalyan RD (2012): Nonconscious perception, conscious awareness and attention. Conscious Cogn 21:584–586.

- Belin P, Fecteau S, Bedard C (2004): Thinking the voice: Neural correlates of voice perception. Trends Cogn Sci 8:129–135.

- Boubela RN, Kalcher K, Huf W, Seidel EM, Derntl B, Pezawas L, Nasel C, Moser E (2015): fMRI measurements of amygdala activation are confounded by stimulus correlated signal fluctuation in nearby veins draining distant brain regions. Sci Rep 5:10499.

- Canli T, Lesch KP (2007): Long story short: The serotonin transporter in emotion regulation and social cognition. Nat Neurosci 10:1103–1109.

- Canli T, Omura K, Haas BW, Fallgatter A, Constable RT, Lesch KP (2005): Beyond affect: A role for genetic variation of the serotonin transporter in neural activation during a cognitive attention task. Proc Natl Acad Sci USA 102:12224–12229.

- Chen C, Lee YH, Cheng Y (2014): Anterior insular cortex activity to emotional salience of voices in a passive oddball paradigm. Front Hum Neurosci 8:743.

- Chen C, Chen CY, Yang CY, Lin CH, Cheng Y (2015): Testosterone modulates preattentive sensory processing and involuntary attention switches to emotional voices. J Neurophysiol 113:1842–1849.

- Chen C, Liu CC, Weng PY, Cheng Y (2016a): Mismatch negativity to threatening voices associated with positive symptoms in schizophrenia. Front Hum Neurosci 10:362.

- Chen C, Sung JY, Cheng Y (2016b): Neural dynamics of emotional salience processing in response to voices during the stages of sleep. Front Behav Neurosci 10:117.

- Cheng Y, Lee SY, Chen HY, Wang PY, Decety J (2012): Voice and emotion processing in the human neonatal brain. J Cogn Neurosci 24:1411–1419.

- Dannlowski U, Ohrmann P, Bauer J, Kugel H, Arolt V, Heindel W, Kersting A, Baune BT, Suslow T (2007): Amygdala reactivity to masked negative faces is associated with automatic judgmental bias in major depression: A 3T fMRI study. J Psychiatry Neurosci 32:423–429.

- Davis FC, Somerville LH, Ruberry EJ, Berry AB, Shin LM, Whalen PJ (2011): A tale of two negatives: Differential memory modulation by threat‐related facial expressions. Emotion 11:647–655.

- Ekman P, Friesen WV (1976): Pictures of Facial Affect. Palo Alto, CA: Consulting Psychologists Press.

- Etkin A, Klemenhagen KC, Dudman JT, Rogan MT, Hen R, Kandel ER, Hirsch J (2004): Individual differences in trait anxiety predict the response of the basolateral amygdala to unconsciously processed fearful faces. Neuron 44:1043–1055.

- Fan YT, Cheng Y (2014): Atypical mismatch negativity in response to emotional voices in people with autism spectrum conditions. PLoS One 9:e102471.

- Fan YT, Hsu YY, Cheng Y (2013): Sex matters: n‐back modulates emotional mismatch negativity. Neuroreport 24:457–463.

- First MB, Gibbon M, Spitzer RL, Williams JBW (1996): User's Guide for the Structured Clinical Interview for DSM‐IV Axis I Disorders ‐ Research Version (SCID‐I/P, version 2.0). New York: Biometrics Research Department, New York State Psychiatric Institute.

- Garrido MI, Kilner JM, Stephan KE, Friston KJ (2009): The mismatch negativity: A review of underlying mechanisms. Clin Neurophysiol 120:453–463.

- Geng H, Li X, Chen J, Gu R (2015): Decreased intra‐ and inter‐salience network functional connectivity is related to trait anxiety in adolescents. Front Behav Neurosci 9:350.

- Hung AY, Cheng Y (2014): Sex differences in preattentive perception of emotional voices and acoustic attributes. Neuroreport 25:464–469.

- Hung AY, Ahveninen J, Cheng Y (2013): Atypical mismatch negativity to distressful voices associated with conduct disorder symptoms. J Child Psychol Psychiatry 54:1016–1027.

- Kim MJ, Loucks RA, Neta M, Davis FC, Oler JA, Mazzulla EC, Whalen PJ (2010): Behind the mask: The influence of mask‐type on amygdala response to fearful faces. Soc Cogn Affect Neurosci 5:363–368.

- Kouider S, Dehaene S (2007): Levels of processing during non‐conscious perception: A critical review of visual masking. Philos Trans R Soc Lond B Biol Sci 362:857–875.

- Marsh AA, Ambady N, Kleck RE (2005): The effects of fear and anger facial expressions on approach‐ and avoidance‐related behaviors. Emotion 5:119–124.

- Milders M, Sahraie A, Logan S (2008): Minimum presentation time for masked facial expression discrimination. Cogn Emot 22:63–82.

- Morawetz C, Holz P, Lange C, Baudewig J, Weniger G, Irle E, Dechent P (2008): Improved functional mapping of the human amygdala using a standard functional magnetic resonance imaging sequence with simple modifications. Magn Reson Imaging 26:45–53.

- Morris JS, Ohman A, Dolan RJ (1998): Conscious and unconscious emotional learning in the human amygdala. Nature 393:467–470.

- Most SB, Chun MM, Johnson MR, Kiehl KA (2006): Attentional modulation of the amygdala varies with personality. Neuroimage 31:934–944.

- Muller‐Gass A, Stelmack RM, Campbell KB (2006): The effect of visual task difficulty and attentional direction on the detection of acoustic change as indexed by the Mismatch Negativity. Brain Res 1078:112–130.

- Munafò MR, Brown SM, Hariri AR (2008): Serotonin transporter (5‐HTTLPR) genotype and amygdala activation: A meta‐analysis. Biol Psychiatry 63:852–857.

- Murphy SE, Norbury R, Godlewska BR, Cowen PJ, Mannie ZM, Harmer CJ, Munafò MR (2013): The effect of the serotonin transporter polymorphism (5‐HTTLPR) on amygdala function: A meta‐analysis. Mol Psychiatry 18:512–520.

- Näätänen R (1992): Attention and Brain Function. Hillsdale, NJ: Erlbaum.

- Näätänen R, Gaillard AW, Mantysalo S (1978): Early selective‐attention effect on evoked potential reinterpreted. Acta Psychol (Amst) 42:313–329.

- Näätänen R, Kujala T, Winkler I (2011): Auditory processing that leads to conscious perception: A unique window to central auditory processing opened by the mismatch negativity and related responses. Psychophysiology 48:4–22.

- Otten LJ, Alain C, Picton TW (2000): Effects of visual attentional load on auditory processing. Neuroreport 11:875–880.

- Raftery AE (1993): Bayesian model selection in structural equation models. In: Bollen KA, Long JS, editors. Testing Structural Equation Models. Newbury Park, CA: Sage Publications; pp 163–180.

- Schinka JA, Busch RM, Robichaux‐Keene N (2004): A meta‐analysis of the association between the serotonin transporter gene polymorphism (5‐HTTLPR) and trait anxiety. Mol Psychiatry 9:197–202.

- Schirmer A, Striano T, Friederici AD (2005): Sex differences in the preattentive processing of vocal emotional expressions. Neuroreport 16:635–639.

- Schirmer A, Escoffier N, Zysset S, Koester D, Striano T, Friederici AD (2008): When vocal processing gets emotional: On the role of social orientation in relevance detection by the human amygdala. Neuroimage 40:1402–1410.

- Spielberger CD, Gorsuch RL, Lushene RE (1970): Manual for the State‐Trait Anxiety Inventory. Palo Alto, CA: Consulting Psychologists Press.

- Tamietto M, de Gelder B (2010): Neural bases of the non‐conscious perception of emotional signals. Nat Rev Neurosci 11:697–709.

- Whalen PJ, Rauch SL, Etcoff NL, McInerney SC, Lee MB, Jenike MA (1998): Masked presentations of emotional facial expressions modulate amygdala activity without explicit knowledge. J Neurosci 18:411–418.

- Whalen PJ, Kagan J, Cook RG, Davis FC, Kim H, Polis S, McLaren DG, Somerville LH, McLean AA, Maxwell JS, Johnstone T (2004): Human amygdala responsivity to masked fearful eye whites. Science 306:2061.

- White SW, Roberson‐Nay R (2009): Anxiety, social deficits, and loneliness in youth with autism spectrum disorders. J Autism Dev Disord 39:1006–1013.

- Yucel G, Petty C, McCarthy G, Belger A (2005): Graded visual attention modulates brain responses evoked by task‐irrelevant auditory pitch changes. J Cogn Neurosci 17:1819–1828.

- Zhang P, Chen X, Yuan P, Zhang D, He S (2006): The effect of visuospatial attentional load on the processing of irrelevant acoustic distractors. Neuroimage 33:715–724.
