Abstract

Recent research has shown that toddlers’ lexical representations are phonologically detailed, quantitatively much like those of adults. Studies in this article explore whether toddlers’ and adults’ lexical representations are qualitatively similar. Psycholinguistic claims (Lahiri & Marslen-Wilson, 1991; Lahiri & Reetz, 2002, 2010) based on underspecification (Kiparsky, 1982 et seq.) predict asymmetrical judgments in lexical processing tasks; these have been supported in some psycholinguistic research showing that participants are more sensitive to noncoronal-to-coronal (pop → top) than to coronal-to-noncoronal (top → pop) changes or mispronunciations. Three experiments using on-line visual world procedures showed that 19-month-olds and adults displayed sensitivities to both noncoronal-to-coronal and coronal-to-noncoronal mispronunciations of familiar words. No hints of any asymmetries were observed for either age group. There thus appears to be considerable developmental continuity in the nature of early and mature lexical representations. Discrepancies between the current findings and those of previous studies appear to be due to methodological differences that cast doubt on the validity of claims of psycholinguistic support for underspecification.

Keywords: lexical representation, developmental continuity, mispronunciation processing, phonological details, underspecification

Adults exhibit impressive abilities in recognizing words in familiar languages. They can segment words instantaneously from continuous speech streams, abstract native phonemes effortlessly from speech signals characterized by lack of invariants, and distinguish known words from novel words with very high accuracy.

For learners to acquire adult-like language proficiency, experience with the phonology of the native language plays a central role. Studies have consistently shown that infants possess global perceptual sensitivities that can be adapted to learn the phonological categories of any language. For example, before six months, infants can distinguish phonetic categories that are not used in their native language, and it is not until the end of the first year of life that infants are fully tuned to language-specific speech structures (e.g., Kuhl, Williams, Lacerda, Stevens, & Lindblom, 1992; Werker, Gilbert, Humphrey, & Tees, 1981; Werker & Tees, 1984; Werker & Lalonde, 1988). Whether early lexical knowledge comprises native phonological details and whether the details are adult-like both in amount and in nature have been topics of debate.

Several early studies suggested that early lexical representations might be holistic and vague, with little or no detailed phonological information. For instance, children as old as eight years were found to fail to discriminate native phonemes across a variety of tasks, including picture selection and phonological similarity judgment (Barton, 1976, 1980; Eilers & Oller, 1976; Garnica, 1973; Kay-Raining Bird & Chapman, 1998; Schvachkin, 1973). However, recent studies using less demanding experimental paradigms have shown that at least by the middle of the second year, lexical representations are detailed in terms of phonological features. Swingley & Aslin (2000) found that 14-month-olds are sensitive to 1-feature mispronunciations (“vaby”) of familiar words (“baby”). White & Morgan (2008) demonstrated that 19-month-old infants display graded sensitivity to varying degrees of onset mispronunciations of familiar words: as mispronunciations increasingly deviated from a correct form (/dog/) by one (/gog/), two (/kog/), or three features (/sog/), infants’ proportional looking to a referent of the familiar target word decreased. The same pattern of response was replicated for coda mispronunciations (Ren & Morgan, 2011), vowel mispronunciations (Mani & Plunkett, 2011) and lexical tone mispronunciations (Ren & Morgan, 2013). Using a slightly different paradigm, Nazzi (2005) showed that 20-month-olds can apply their detailed representation of native speech categories in learning minimal pairs of novel words (“pize” vs. “tize”). Adults have also been found to be affected by the degree of acoustic-phonetic mismatch during semantic priming (Connine, Titone, Deelman, & Blasko, 1997; Milberg, Blumstein, & Dworetzky, 1988) and visual word recognition (Tin, White, & Morgan, 2014). Thus, early and mature lexical representations appear to be commensurate in phonological detail.

However, although these previous studies have found striking developmental continuity in the amount of phonological detail in early and mature lexical representations, whether the nature of the represented detail is stable across development is still not known. In particular, phonological theories of underspecification (Archangeli, 1988; Avery & Rice, 1989; Kiparsky, 1982) have suggested that certain unmarked feature values, such as coronal place of articulation, may be left unspecified or empty in underlying lexical representations (Kean, 1975). Such arguments are primarily motivated by differences in phonological processes between coronal and noncoronal segments. For example, in Catalan (Mascaro, 1976), backwards place of articulation assimilation occurs only for coronal stops, as in (1),

(1). Stop assimilation in Catalan:

| Form | Gloss |
|---|---|
| se[t] | ‘seven’ |
| se[m] mans | ‘seven hands’ |
| se[p] forcs | ‘seven fires’ |
| se[b] beus | ‘seven voices’ |
| se[d] dones | ‘seven women’ |
| se[k] cases | ‘seven houses’ |

whereas labial and velar stops do not undergo such assimilation.

(2). Stop assimilation in Catalan:

| Form | Gloss |
|---|---|
| ca[p] | ‘no’ |
| ca[p] signe | ‘no sign’ |
| po[k] | ‘few’ |
| po[k] pa | ‘few bread’ |
| po[k] sol | ‘few sun’ |

Differences in phonological processes between coronal and noncoronal segments are also found in many other languages; in English place assimilation, for example, sweet [swi:t] girl is often pronounced as [swi:k] girl (Gaskell & Marslen-Wilson, 1996). Underspecification theory is thus in part an attempt to explain why pronunciations of nominally coronal segments in words are less faithful than are pronunciations of noncoronal segments.

Although underspecification was not advanced to explain speech perception, hypotheses predicting effects on spoken word recognition have been derived from this aspect of phonological theory (Lahiri & Marslen-Wilson, 1991; Lahiri & Reetz, 2002, 2010). For example, the Featurally Underspecified Lexicon (FUL) model (Lahiri & Reetz, 2002, 2010) predicts specific asymmetries in effects of mispronunciations. On this account, the place of articulation of the onset /d/ of the word duck is unspecified in lexical representation; consequently, mispronunciations in the onset of duck such as guck will not be incompatible with the underlying representation, and such mispronunciations should have minimal effects on lexical activation of duck. By contrast, the onset /g/ of the word goose is specified as [+velar] in the underlying lexical representation, so mispronunciations of the onset of goose such as doose will be incompatible with the underlying representation and thus will significantly disrupt lexical activation of goose.

Psycholinguistic studies using a variety of tasks have adduced evidence supporting predictions of the FUL model. For example, at temporally early stages of speech perception as measured by ERP, German-speaking adults display asymmetric discrimination for mispronunciations of familiar words with coronal or noncoronal onsets (Friedrich, Lahiri, & Eulitz, 2008), word-internal consonants (Friedrich, Eulitz, & Lahiri, 2006; Cornell, Lahiri & Eulitz, 2013), codas (Lahiri & van Coillie, 1999), and vowels (Lahiri & Reetz, 2010; Cornell, Lahiri, & Eulitz, 2011). Similar effects have also been found with English-speaking adults (Roberts, Wetterlin & Lahiri, 2013). Other studies have used gating and lexical decision procedures to demonstrate putative effects of underspecification in Bengali, English, and German (Gaskell & Marslen-Wilson, 1996; Lahiri & Marslen-Wilson, 1991; Lahiri & Reetz, 2010; Wheeldon & Waksler, 2004).

A recent series of studies by Mitterer (2011), however, failed to find the asymmetries predicted by the FUL model. Mitterer conducted a series of visual-world studies in which Dutch-speaking adults saw four printed words arrayed on a screen and heard targets that varied from onset competitors by one (Experiments 1-3) or two (Experiment 4) features (see also McQueen & Viebahn, 2007). Adults looked more towards competitor words than phonologically unrelated distractor words, but there were no differences depending on phonological relations between target and competitor onsets.

In this article, we present a series of studies examining possible asymmetries in toddlers’ processing of (mis)pronunciations of familiar words and compare toddlers’ processing with adults’ to examine the question of developmental continuity. That is, do toddlers’ lexical representations resemble adults’ mature lexical representations in phonological detail, specifically in featural (under)specification? A second goal of our studies was to ensure that the tasks used clearly tapped lexical representations. Unlike Mitterer (2011), we used a simplified visual-world procedure in which subjects saw pictured referents of familiar words. Associations between pronunciations and referents by definition involve the mental lexicon. By contrast, in languages with sub-logographic writing systems such as English, pronunciations can be readily generated for novel or nonce forms, and thus relations between pronunciations and printed forms need not rely on lexical entries. Use of pictured referents also allowed us to use the same procedure, with only minor modifications, with both toddlers and adults. This best enables us to address our fundamental goal: to determine whether the nature of phonological specification in the mental lexicon is stable across development.

On the underspecification account (Kiparsky, 1982 et seq.), unmarked values are assumed to be the default values. Certain considerations, both theoretical and empirical, therefore suggest that effects of underspecification might be observed most strongly in younger populations. For example, Optimality Theory (OT) analyses of child grammar (Gnanadesikan, 2004) have suggested that markedness constraints, which disprefer marked forms, outrank faithfulness constraints, which would have preserved those marked forms, in toddlers’ early phonology.

Language experience may attenuate effects of underspecification. Certain accounts of early lexical representation have suggested that the degree of phonological specification correlates positively with the toddler’s vocabulary size (Charles-Luce & Luce, 1990, 1995; Storkel, 2002; Walley, 1993, 2005). According to the Lexical Restructuring Model (Metsala & Walley, 1998), for example, word learning can increase the specificity of infants’ speech categorization and concomitantly increase the specificity with which individual words are represented in the lexicon. As the child’s vocabulary expands, the lexicon comes to contain more and more sets of similar sounding words. On such views, toddlers, whose vocabularies are markedly smaller, are more likely to have an underspecified lexicon than adults. Of course, adults know many more minimal pairs than do toddlers, many of which involve coronal vs. noncoronal contrasts (e.g., pop, top, cop); suppression of underspecification might help to avoid false-alarm over-recognition of items with coronal segments1.

To date, studies with infants and toddlers have found mixed evidence for early appearance of asymmetries predicted by underspecification. Dijkstra & Fikkert (2011), for example, habituated 6-month-old Dutch-learning infants to either repeated taan or paan tokens and then tested their ability to discriminate trials in which one or the other stimulus repeated versus trials in which the two stimuli alternated. Whereas infants habituated to paan discriminated the two types of trials, infants habituated to taan did not. Similarly, Tsuji, Mazuka, Cristia & Fikkert (2015) habituated 4-month-old Dutch-learning infants and 4-month-old Japanese-learning infants to either repeated ompa or onta tokens. Then, they tested the infants’ ability to discriminate trials in which one or the other stimulus repeated versus trials in which the two stimuli alternated. For both language groups, whereas infants habituated to noncoronal stimuli (paan, ompa) discriminated the two types of trials, those habituated to coronal stimuli (taan, onta) did not. In both studies, the authors interpreted their findings in terms of underspecification: when the standard of comparison was coronal (taan or onta), the coronal feature for place of articulation was unspecified, and the noncoronal counterpart (paan or ompa) was compatible with the standard. However, when the standard of comparison was noncoronal (paan or ompa), the labial place of articulation was specified, the coronal counterpart (taan or onta) was not compatible with the standard, and paan vs. taan and ompa vs. onta were both discriminable.

Using a preferential looking mispronunciation task, van der Feest and Fikkert (2015) found that 20- and 24-month-old Dutch-learning toddlers showed significant differences in proportional looking times to referents of familiar words beginning with labials depending on whether they were correctly pronounced or mispronounced with coronal onsets. However, toddlers did not show differences in looking times when familiar words beginning with coronals were mispronounced with labial onsets. Similarly, Tsuji, Fikkert, Yamane & Mazuka (2016) tested one group of Dutch-learning and one group of Japanese-learning 18-month-olds on their sensitivities to coronal mispronunciations of novel words. They found that infants from both language groups lacked sensitivity to such mispronunciations. The procedures of these two studies both involved considerable stimulus repetition. That is, each toddler heard the same target item in multiple pronunciation conditions. In van der Feest & Fikkert, six items were tested in correct pronunciations; of these six, four were also tested with place of articulation mispronunciations and four with voicing mispronunciations. Similarly, in Tsuji, Fikkert, Yamane & Mazuka, the two items were tested with correct pronunciations, mispronunciation changes to labial sounds, and mispronunciation changes to dorsal sounds.

Less clear support for underspecification comes from a series of studies by Fennell and colleagues testing 14-month-old English-learning infants on their sensitivities to different directions of novel word mispronunciations using the “switch task” (Stager & Werker, 1997). Consistent with the underspecification hypothesis, an initial study (Fennell, 2007) showed that infants detected a labial-to-coronal switch but failed to detect a coronal-to-labial switch. However, inconsistent with the underspecification hypothesis, a follow-up study (Fennell, van der Feest, & Spring, 2010) showed that 14-month-olds were better able to detect a coronal-to-velar switch than a velar-to-coronal switch. To explain such findings, the authors attributed the asymmetries to differences in acoustic variability among the segments in their experimental stimuli (/b/ < /d/ < /g/). Thus, the authors concluded that the asymmetries they observed might be better explained by acoustic properties of the segments than by their phonological status or (under)specification.

In this article, we examine possible asymmetries at the lexical level by comparing 19-month-old English-learning toddlers’ and English-speaking adults’ detection of correct and incorrect pronunciations of familiar words. We tested coronal-to-labial, labial-to-coronal, coronal-to-velar, and velar-to-coronal mispronunciations in an online word recognition task using a simplified version of the visual world paradigm. If toddlers’ lexical representations are unspecified for coronal place of articulation as predicted by the underspecification hypothesis, then we expect to see significant asymmetries: larger effects for labial-to-coronal and velar-to-coronal mispronunciations than for coronal-to-labial and coronal-to-velar mispronunciations. By using lexical specification as leverage, we hope to obtain a clearer picture of developmental (dis)continuity in the nature of lexical representations.

EXPERIMENT 1

White & Morgan (2008) introduced several refinements to the preferential looking mispronunciation task pioneered by Swingley & Aslin (2000). First, rather than displaying two referents with known labels in each trial, they paired a referent with a known label and a referent without a known label. The rationale for this modification was that known labels might reduce the sensitivity of the paradigm by pushing the interpretation of mispronunciations towards the correct forms. For example, if a toddler were to see pictures of a baby and a car and hear a mispronunciation vaby, because that pronunciation is not a possible label for the car, changes in looking time relative to the correct pronunciation baby might be limited. They also tested each item on only a single trial. This modification is of importance for studies of asymmetries in speech perception, because testing items repeatedly raises possibilities of carry-over effects and may change the perceived nature of the task. In particular, whereas hearing vaby once may interrogate whether it is an acceptable pronunciation of baby (a perceptual task), hearing these two pronunciations multiple times might be construed as asking whether vaby is a plausible mispronunciation of baby. To answer such a question, infants might need to draw on implicit metalinguistic knowledge. In this way, tasks with repetitions of stimuli may tap into different processing levels than on-line lexical processing.

Collectively, the modifications introduced by White & Morgan served to increase the power and sensitivity of the mispronunciation task: whereas earlier studies (Bailey & Plunkett, 2002; Swingley & Aslin, 2002) had failed to find graded effects depending on the numbers of features altered in mispronunciations, White & Morgan showed a strong linear effect of number of features altered in onset changes on toddlers’ looking times. Here, we use White & Morgan’s procedures to interrogate possible asymmetries in word recognition.

Experiments 1a and 1b were designed to establish whether 19-month-olds exhibit asymmetrical sensitivities to mispronunciations depending on whether the mispronunciations are from coronal to noncoronal segments or vice-versa. To address this question, we tested mispronunciations in consonantal onsets in Experiment 1a and codas in Experiment 1b, respectively.

Methods

Participants

Fifty-five 19-month-old English-learning infants were recruited from monolingual English-speaking families in Rhode Island and Massachusetts. Twenty-seven of these infants were tested on onset mispronunciations in Experiment 1a and twenty-eight were tested on coda mispronunciations in Experiment 1b. Nine participants were excluded from data analysis due to fussiness (4) or crying (3) or over 50% alternative language exposure (1). This left twenty-one 19-month-olds (mean age = 1;7;17) in Experiment 1a and twenty-six 19-month-olds (mean age = 1;7;23) in Experiment 1b, respectively.

Stimuli

Familiar labels comprised a set of words that are comprehended by the majority of infants by 14 months, according to the MacArthur CDI norms (Dale & Fenson, 1996). In each trial, infants saw two images, one depicting a referent of a familiar label, the other depicting a referent of an unfamiliar (to 19-month-olds) label. An example stimulus pair is depicted in Figure 1; a list of displayed objects is given in Appendix A for onset mispronunciations and in Appendix B for coda mispronunciations. Infants were tested in 18 trials. These included three trials with correctly pronounced coronal stops (e.g. dog or cat), three mispronounced coronal stops (e.g. gog or cak), three correctly pronounced noncoronal stops (e.g. cat or dog), three mispronounced noncoronal stops (e.g. tat or dod), three correctly pronounced familiar fillers (e.g. hand) and three novel fillers (e.g. wrench). Examples of (mis)pronunciations are given in Table 1 for onsets and Table 2 for codas. Familiarity of labels for target and distractor images was assessed via a parental questionnaire completed after the experiment session.

Figure 1.

Sample visual stimulus pair in Experiments 1a & 1b.

Table 1.

Sample stimuli for onset mispronunciations

| Audio Stimuli Samples | (Mis)pronunciation | Place of articulation |
|---|---|---|
| Where’s the duck? | Correct | Coronal |
| Where’s the guck? | Mispronounced | Noncoronal |
| Where’s the cat? | Correct | Noncoronal |
| Where’s the tat? | Mispronounced | Coronal |

Table 2.

Sample stimuli for coda mispronunciations

| Audio Stimuli Samples | (Mis)pronunciation | Place of articulation |
|---|---|---|
| Where’s the cat? | Correct | Coronal |
| Where’s the cak? | Mispronounced | Noncoronal |
| Where’s the duck? | Correct | Noncoronal |
| Where’s the dut? | Mispronounced | Coronal |

Mispronunciations involved only single-feature changes in place of articulation, and all mispronunciations resulted in non-words or in words judged unlikely to be familiar to infants at this age. Most of the words tested in the current study were monosyllabic, with only two exceptions (baby and table) for onsets in Experiment 1a due to the restricted vocabulary size of babies at 19 months. All stimuli were naturally produced by a trained female speaker of American English who produced the utterances with positive infant-directed affect. The mean length of target items across all conditions was 588.05 msec (SD = 78.58 msec); lengths of target items did not differ significantly across conditions, F(3, 92) = 0.036, p = 0.991. A complete list of the words used in the current study and their accompanying images is provided in Appendix A for onsets and Appendix B for codas.

Pairings of familiar and unfamiliar objects remained constant across subjects. Assignment of stimulus pairs to pronunciation condition was counterbalanced across subjects (filler trials were constant across subjects). Half of the coronal items were mispronounced with a corresponding labial stop, and half were mispronounced with a velar stop. These were counterbalanced so that one sub-group heard two labial mispronunciations and one velar mispronunciation, while the other heard one labial mispronunciation and two velar mispronunciations. Similarly, the noncoronal items were evenly divided between labials and velars; one sub-group heard one correct labial pronunciation and two correct velar pronunciations, while the other heard two correct labial pronunciations and one correct velar pronunciation. Each item was presented to each infant one time only, i.e., in either the correct form or the mispronounced form. Order of presentation was pseudorandomized online for each infant with the constraint that the first two trials always contained one correct filler trial and one novel filler trial.

Procedure

Testing was conducted in a sound-treated laboratory room. The parent sat with the child on his/her lap, while listening to instrumental music over noise-cancelling headphones to mask the audio stimuli. Approximately 90 cm in front of the child were two 51 cm flat-panel monitors mounted side-by-side, together subtending approximately 55 degrees of visual angle. A speaker was located centrally between the two monitors behind a pegboard panel. At the subjects’ eye level, a blue light was mounted on the panel between the two monitors. The subjects were observed over a closed-circuit video system and recorded on a digital camcorder at 30 fps for later off-line coding. Speech stimuli were played at conversational level (70 dB).

An intermodal preferential looking procedure (IPLP) similar to that used in White & Morgan (2008) was used during the experiment. Each trial began with the blue light flashing until the subject fixated at midline. At that point, the experimenter turned off the center light and initiated the salience phase. During the salience phase, one object with a known label and a second object with an unknown label were simultaneously displayed on the two monitors (see Figure 1). The two objects were displayed silently for 4 seconds to establish baseline looking preference. After the salience phase, the two monitors went dark, and infants’ attention was then recaptured to midline to avoid contingencies between side of fixation at the end of the salience period and at the beginning of the test period.

After recentralization, the experimenter initiated the test phase. During the test phase, the audio stimulus (Where’s the X?) was played, and immediately after that, the two visual stimuli were presented for 8 seconds. Following an interval of at least 1 second, the next trial commenced. Side of presentation of the familiar object was randomized between trials by the customized experimental software, but was consistent across salience and test phases within each single trial. The dependent measure was the change in proportional looking to the familiar object between the (silent) salience phase and the test phase. Of interest was whether looking behavior would differ as a function of the directions of mispronunciation.

Following the session, the parent completed the vocabulary questionnaire to verify his/her toddler’s comprehension and production of the stimulus items (familiar and unfamiliar).

Results and discussion

Results from the parental questionnaire indicated that the items selected were appropriate for this sample of 19-month-olds. On a scale with a maximum of 3 (1 = only visually familiar; 2 = visually familiar and label known; 3 = visually familiar, label known and produced), labels for target images (used as familiar words) received an average score of 2.462 for Experiment 1a (SD = .242) and 2.462 for Experiment 1b (SD = .273), indicating that they were highly familiar to toddlers. Labels of distractor images2 received an average score of 0.24 for Experiment 1a (SD = .235) and 0.301 for Experiment 1b (SD = .208), indicating that they were highly unfamiliar to toddlers. No labels of target images in Experiment 1a or 1b were scored 1. Labels of distractor images were scored 3 in 24 trials (of 360 total, 6.67%) in Experiment 1a, and in 32 trials (of 468 total, 6.84%) in Experiment 1b. These trials were all removed from further analyses.

Looking behavior was coded off-line frame-by-frame (1 frame = 33 msec) using in-house coding software. For the salience phase, looking behavior was coded for the entire 4 s duration of the phase. For the test phase, looking behavior was coded only for the 3 s following the onset of the first occurrence of the target word. This was done in order to include only subjects’ initial response to the target word.

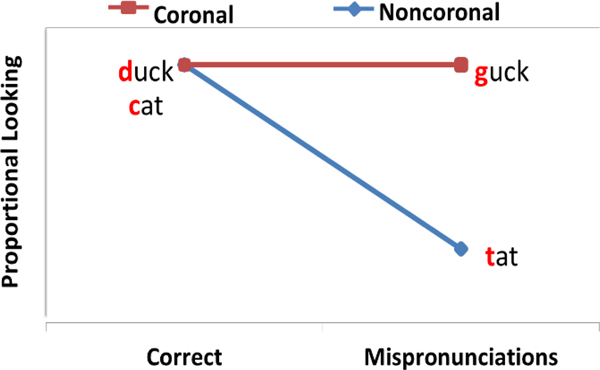

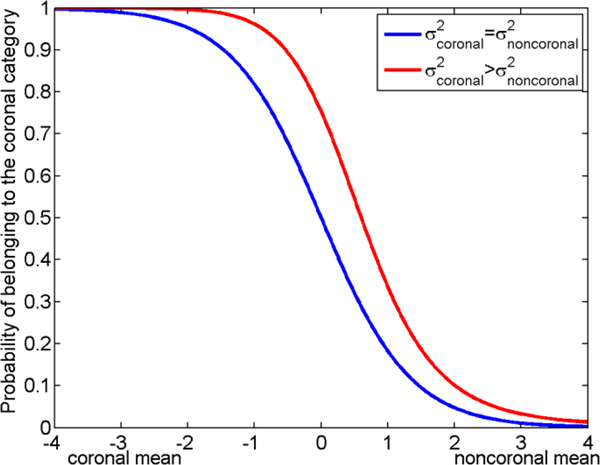

In the present case, two competing accounts make divergent predictions for the patterns of results that should be observed. On the underspecification (FUL) account, mispronunciations of segments that are underlyingly labial or velar should disrupt lexical recognition and access, whereas mispronunciations of underlyingly coronal segments should not; the data should follow the pattern shown in Figure 2. By contrast, on the account that segments are equivalently represented regardless of place of articulation, a main effect of (mis)pronunciation should obtain, but there should be no (mis)pronunciation-by-place of articulation interaction.

Figure 2.

Predicted results from the hypothesis of underspecification.

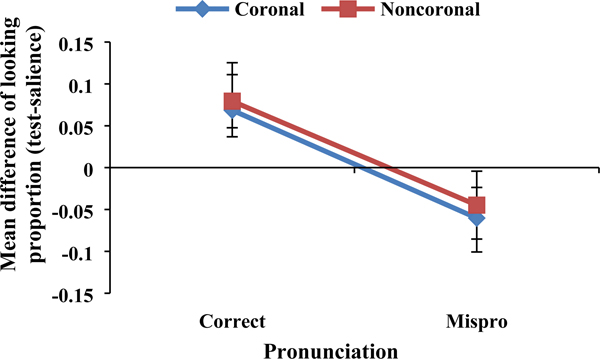

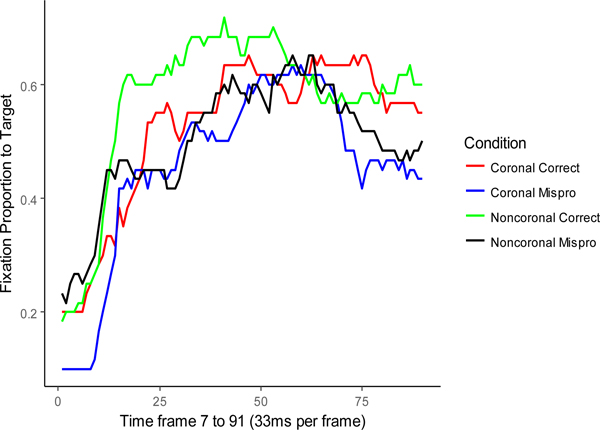

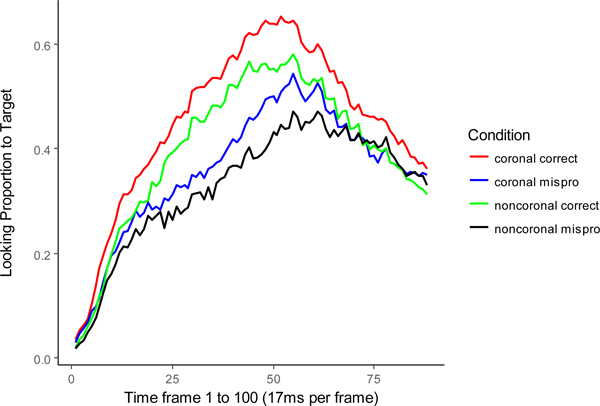

Overall summaries of the data for onsets in Experiment 1a and codas in Experiment 1b are shown in Figures 3 and 4, respectively. In both figures, proportional looking towards each of the objects in each trial was first computed over the total time the subject spent looking at both objects for that phase. Then, a difference score of proportional looking was computed using the following formula:
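In line with the description above and with the dependent measure defined in the Procedure (change in proportional looking to the familiar object between the salience phase and the test phase), the difference score can be written as follows. The symbols are notation introduced here for illustration: $T$ denotes looking time, superscripts mark the phase, and subscripts mark the familiar or unfamiliar object.

$$
\Delta P_{\mathrm{fam}} \;=\; \frac{T^{\mathrm{test}}_{\mathrm{fam}}}{T^{\mathrm{test}}_{\mathrm{fam}} + T^{\mathrm{test}}_{\mathrm{unfam}}} \;-\; \frac{T^{\mathrm{salience}}_{\mathrm{fam}}}{T^{\mathrm{salience}}_{\mathrm{fam}} + T^{\mathrm{salience}}_{\mathrm{unfam}}}
$$

A positive value indicates that proportional looking to the familiar object increased after the target was named; a value near zero indicates no change from baseline.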

Figure 3.

Experiment 1a: Toddlers’ sensitivities to different directions of onset mispronunciations. Error bars show two standard errors computed via subject-wise non-parametric bootstrap.

Figure 4.

Experiment 1b: Toddlers’ sensitivities to different directions of coda mispronunciations. Error bars show two standard errors computed via subject-wise non-parametric bootstrap.

This formula measures the change in looking toward the familiar object after the target was named. Such difference scores allowed us to use each stimulus pair as its own baseline, controlling for differences in visual salience or inherent preference for a particular stimulus in each pairing. It is clear from inspection that the interactions predicted by the underspecification account did not materialize in our data.
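The error bars in Figures 3 and 4 are described as two standard errors from a subject-wise non-parametric bootstrap. The text does not give the resampling details, so the following is only a minimal sketch of one common way to do this: resample subjects with replacement, recompute the condition mean each time, and take the standard deviation of those means. The function name, the number of resamples, the seed, and the example scores are all assumptions made for illustration.

```python
import random
import statistics


def bootstrap_se(subject_scores, n_resamples=2000, seed=0):
    """Standard error of the mean via a subject-wise non-parametric bootstrap.

    subject_scores: one difference score per subject for a single condition.
    n_resamples and seed are illustrative choices, not values from the study.
    """
    rng = random.Random(seed)
    n = len(subject_scores)
    means = []
    for _ in range(n_resamples):
        # Draw n subjects with replacement and record the resampled mean.
        resample = [subject_scores[rng.randrange(n)] for _ in range(n)]
        means.append(statistics.fmean(resample))
    # The spread of the resampled means estimates the standard error.
    return statistics.stdev(means)


# Hypothetical per-subject difference scores for one condition.
scores = [0.21, 0.05, 0.30, -0.02, 0.18, 0.12, 0.25, 0.09]
se = bootstrap_se(scores)
error_bar_half_width = 2 * se  # "two standard errors", as in the captions
```

Resampling whole subjects, rather than individual trials, keeps each subject's data together, which matches the "subject-wise" wording of the captions.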

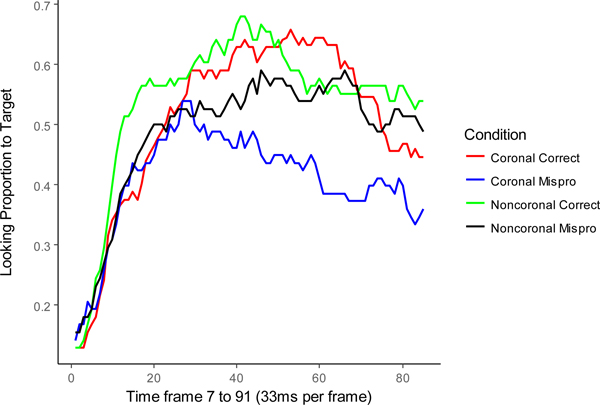

The dependent measure of our statistical analyses was participants’ success/failure to gaze at the target object at each time frame for each trial. In particular, if at a certain time frame of a trial the participant was looking at the target, we coded it as a 1 and otherwise we coded it as 0. The time course data can be found in Figure 5 for Experiment 1a and Figure 6 for Experiment 1b, respectively.
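The frame-wise coding described above can be sketched as follows: each frame of each trial becomes 1 if the gaze is on the target and 0 otherwise, and averaging across trials at each frame gives a time course like those in Figures 5 and 6. The gaze labels (`"target"`, `"distractor"`, `"away"`), the function names, and the toy trials are hypothetical; the in-house coding software is not described in the text.

```python
def code_frame(gaze):
    """Return 1 if the coded gaze for a frame is on the target, else 0."""
    return 1 if gaze == "target" else 0


def time_course(trials):
    """Proportion of trials with gaze on the target at each frame index.

    trials: list of trials, each a list of per-frame gaze labels.
    Trials are truncated to the shortest trial so frames line up.
    """
    n_frames = min(len(trial) for trial in trials)
    coded = [[code_frame(g) for g in trial[:n_frames]] for trial in trials]
    # zip(*coded) iterates over frames; each column holds one value per trial.
    return [sum(column) / len(coded) for column in zip(*coded)]


# Three hypothetical trials, four frames each (1 frame = 33 msec in the study).
trials = [
    ["away", "distractor", "target", "target"],
    ["target", "target", "target", "distractor"],
    ["distractor", "away", "target", "target"],
]
curve = time_course(trials)
```

In the analyses themselves the binary values enter the regression directly; the averaged curve is only a descriptive summary.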

Figure 5.

Experiment 1a: Time course data of toddlers’ sensitivities to different directions of onset mispronunciations

Figure 6.

Experiment 1b: Time course data of toddlers’ sensitivities to different directions of coda mispronunciations

Given the binary data in each time frame for each trial, we conducted Bayesian Logistic Regressions with Mixed Effects Modeling. By specifying priors that are appropriate for each account, Bayesian data analyses (Kruschke, 2010; Gelman, Carlin, Stern & Rubin, 2004) can be computed that yield estimates of the posterior probabilities of the parameters, providing a principled quantitative evaluation of the credibility of the hypotheses under discussion. Fixed effects of the model included pronunciation and place of articulation as two discrete variables, time as a continuous variable, and their interactions. Random effects of the model included random intercepts and slopes for both subjects and items. Mathematical details of the model can be found in Supplementary Material II. The time window used for Experiment 1a was between 233.31 msec and 1899.81 msec after the initiation of each trial, and the time window used for Experiment 1b was between 233.31 msec and 1733.16 msec after the initiation of each trial. Following standard practice, data from the first 233.31 msec were excluded from analyses to allow for programming and execution of initial saccades. After 1899.81 msec (1733.16 msec for Experiment 1b), on average participants had reached the highest looking proportion to the target objects. This suggests that participants at this time point had achieved the highest degree of lexical activation for all the experimental conditions.
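The fixed-effects structure described above can be sketched as a logistic regression on the binary gaze value $y_{sit}$ for subject $s$, item $i$, and time frame $t$. The notation is introduced here for illustration; the authoritative specification is the one in Supplementary Material II. Only random intercepts ($u_s$ for subjects, $w_i$ for items) are shown, because the text does not say which predictors carry the random slopes.

$$
\begin{aligned}
\operatorname{logit} P(y_{sit} = 1) ={}& \beta_0 + \beta_1\,\mathrm{Time}_t + \beta_2\,\mathrm{Pron}_{si} + \beta_3\,\mathrm{POA}_i \\
&+ \beta_4\,\mathrm{POA}_i\,\mathrm{Pron}_{si} + \beta_5\,\mathrm{Pron}_{si}\,\mathrm{Time}_t + \beta_6\,\mathrm{POA}_i\,\mathrm{Time}_t \\
&+ \beta_7\,\mathrm{POA}_i\,\mathrm{Pron}_{si}\,\mathrm{Time}_t + u_s + w_i
\end{aligned}
$$

Under the underspecification account, the place-by-pronunciation terms ($\beta_4$ and, over time, $\beta_7$) should differ from zero; under the equivalent-representation account, only the pronunciation terms ($\beta_2$, $\beta_5$) should.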

We first ran Bayesian Logistic Mixed Effects Regressions with non-informative priors. That is, conjugate priors were used for the parameters of the model: the coefficients of (mis)pronunciation, place of articulation, time, and the interactions among these factors were all given normal priors centered on zero. Standard deviations of the normal distributions were set to follow a half gamma distribution with the rate parameter and the shape parameter both set to 0.01. This Bayesian method is similar to null hypothesis significance testing (NHST) with Logistic Mixed Effects Modeling, except that the means and variances of the regression coefficients were specified with conjugate prior distributions. In this way, we obtain the posterior distributions of these parameters, which provide information about the observed effect sizes. JAGS code for Logistic Mixed Effects Modeling with non-informative priors is given in Appendix E. For readers unfamiliar with Bayesian data analyses, results of NHST Logistic Mixed Effects Modeling are given in Supplementary Material II. Posterior distributions of the coefficients are shown in Table 3 for Experiments 1a and 1b.

Table 3.

Posterior distributions of coefficients of Experiment 1a and 1b

| | Experiment 1a Infant Onset | | | | Experiment 1b Infant Coda | | | |
|---|---|---|---|---|---|---|---|---|
| Coefficients | Mean | SD | 95% HDI lower | 95% HDI upper | Mean | SD | 95% HDI lower | 95% HDI upper |
| Intercept | −0.01 | 0.35 | −0.68 | 0.68 | −0.50 | 0.18 | −0.85 | −0.15 |
| Time | 0.69 | 0.14 | 0.42 | 0.97 | 0.96 | 0.08 | 0.81 | 1.11 |
| Pronunciation | 0.11 | 0.37 | −0.62 | 0.84 | 0.68 | 0.16 | 0.37 | 0.99 |
| Place of Articulation (POA) | 0.02 | 0.45 | −0.85 | 0.90 | −0.28 | 0.20 | −0.67 | 0.12 |
| POA*Pronunciation | 0.16 | 0.70 | −1.22 | 1.54 | −0.06 | 0.14 | −0.32 | 0.21 |
| Pronunciation*Time | 0.22 | 0.26 | −0.28 | 0.72 | 0.38 | 0.11 | 0.17 | 0.59 |
| POA*Time | −0.13 | 0.18 | −0.49 | 0.22 | 0.06 | 0.10 | −0.13 | 0.26 |
| POA*Pronunciation*Time | −0.01 | 0.27 | −0.51 | 0.53 | −0.07 | 0.11 | −0.29 | 0.15 |

For both experiments, zero was included in the 95% highest density intervals (HDIs) of the posterior distributions for the three-way interaction of (mis)pronunciation by place of articulation by time. These results suggest that during word activation, toddlers do not differentiate mispronunciation effects according to the place of articulation of the target word, for either onsets or codas. Moreover, the HDI of the posterior distribution for the effect of (mis)pronunciation over time (i.e., the pronunciation by time interaction) excludes zero for Experiment 1b, suggesting that 19-month-olds in this experiment were sensitive to 1-feature mispronunciations of coda consonants in word recognition. By contrast, the HDI for the pronunciation by time interaction does not exclude zero for Experiment 1a. Therefore, the lack of a three-way interaction in this experiment could also indicate that 19-month-olds were not sensitive to 1-feature mispronunciations of onset consonants.

To examine this possibility and to further evaluate the underspecification and equivalent-representation accounts, we conducted Bayesian Model Comparisons. In particular, two sets of priors were established with equal probability, where one set supports the equivalent representation account and the other set supports the underspecification hypothesis. By specifying priors that are appropriate for each account, posterior probabilities were then computed for the observed data given each of the alternative accounts to provide a quantitative estimate of the relative credibility of the two accounts.

The two informed sets of priors were obtained through the following steps. First, we obtained data from Tin & Morgan (2014), who tested English-speaking adults on their sensitivities to 1-, 2- and 3-feature mispronunciations in word onset consonants. Using the correct pronunciation and 1-feature mispronunciation data from this study, we created two separate datasets, one supporting the underspecification hypothesis and the other supporting the equivalent representation account. In the underspecification data, participants’ responses to (mis)pronunciations of coronal segments were made equal to their correct counterparts, so that all of the effect of mispronunciation was assumed to reside in the noncoronal items. In the equivalent-representation data, participants’ responses to (mis)pronunciations of coronal segments were simply taken from their 1-feature mispronunciation counterparts in Tin & Morgan’s data, to model the absence of a (mis)pronunciation by place of articulation interaction. Then, the two datasets were fitted with the same logistic mixed effects model as the one used with non-informative priors, and the parameters of the model were estimated using Maximum Likelihood Estimation (MLE). The two resulting sets of coefficients were then entered into the Bayesian Model Comparison as two sets of prior distributions, with one set of coefficients representing the underspecification model and the other set representing the equivalent representation account. Specific values for the prior means of the coefficients under each model are given in Table 4. JAGS code for Bayesian Model Comparison can be found in Appendix F.

Table 4.

Prior coefficients in Bayesian model comparisons

| Coefficient | Model 1: Full-Specification | Model 2: Underspecification |
|---|---|---|
| Intercept | −3.440e-01 | −5.306e-01 |
| Time | 7.344e-01 | 1.396e+00 |
| Pronunciation | 8.746e-01 | 3.036e-13 |
| Place of Articulation (POA) | −1.447e-14 | −8.746e-01 |
| POA*Pronunciation | 1.750e-14 | 8.746e-01 |
| Pronunciation*Time | 6.620e-01 | 1.055e-14 |
| POA*Time | −8.674e-15 | −6.620e-01 |
| POA*Pronunciation*Time | 1.016e-14 | 6.620e-01 |

Given the onset data in Experiment 1a and the coda data in Experiment 1b, the posterior probability of the model based on parameters derived from the underspecification account (P(MU|D)) was 0 in both cases, whereas the posterior probability of the model based on parameters derived from the equivalent representation account (P(MER|D)) was 1 according to the MCMC estimation, which assumed the prior probabilities of the two sets of parameters to be equal. The ratio of these two probabilities yields a Bayes factor of infinity. A Bayes factor of this magnitude is typically interpreted as “very strong” (Kass & Raftery, 1995) or “decisive” (Jeffreys, 1961). Therefore, for the data in Experiment 1a and Experiment 1b, the equivalent representation parameters provide a credible account, whereas the underspecification parameters do not. Within the range of reasonable priors, there are no values that lead to anything other than support for an interpretation of equivalent specification.
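The step from posterior model probabilities to a Bayes factor can be sketched as follows. With equal prior model probabilities the prior odds are 1, so the Bayes factor equals the ratio of the posterior probabilities. The function names are hypothetical, and the thresholds in `kass_raftery_label` follow the commonly cited Kass and Raftery (1995) categories.

```python
import math


def bayes_factor(post_m1, post_m2, prior_m1=0.5, prior_m2=0.5):
    """Bayes factor for model 1 over model 2: posterior odds divided by prior odds."""
    if prior_m1 <= 0 or prior_m2 <= 0:
        raise ValueError("prior model probabilities must be positive")
    if post_m1 < 0 or post_m2 < 0 or (post_m1 == 0 and post_m2 == 0):
        raise ValueError("posterior probabilities must be non-negative and not both zero")
    if post_m2 == 0:
        # No MCMC samples favoured model 2, so the estimated ratio is unbounded.
        return math.inf
    prior_odds = prior_m1 / prior_m2
    return (post_m1 / post_m2) / prior_odds


def kass_raftery_label(bf):
    """Verbal label for a Bayes factor >= 1, after Kass & Raftery (1995)."""
    if bf < 1:
        raise ValueError("invert the comparison so that the Bayes factor is >= 1")
    if bf <= 3:
        return "not worth more than a bare mention"
    if bf <= 20:
        return "positive"
    if bf <= 150:
        return "strong"
    return "very strong"


# Equivalent representation (model 1) against underspecification (model 2).
bf = bayes_factor(1.0, 0.0)
print(bf, kass_raftery_label(bf))
```

A posterior probability of exactly 0 from MCMC means only that the sampler never visited that model in a finite run, so an infinite Bayes factor is best read as "larger than the run could resolve" rather than as a literal value.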

Contrary to accounts for both infants (Dijkstra & Fikkert, 2011; Tsuji et al., 2015) and adults (Lahiri & Reetz, 2010), all our analyses indicate that 19-month-olds equivalently represent coronal and noncoronal consonants. This inconsistency with previous findings may in part be due to our use of on-line measurements of processing of correct and incorrect pronunciations in an intermodal preferential looking procedure. Previous studies of underspecification in adults have typically used other tasks, such as the oddball paradigm in EEG, gating or semantic priming, to examine effects of mispronunciations. It is thus unclear whether the reported results reflect procedural differences or developmental changes in lexical representation between infants’ early lexical representation and adults’ mature lexical representation. To disentangle this, in Experiments 2a and 2b, we employed a visual world paradigm mimicking the intermodal preferential looking procedure used for infants to test adults on their immediate responses to mispronunciations.

EXPERIMENT 2

The purpose of the two experiments was to test whether adults asymmetrically represent coronals and non-coronals using an on-line word recognition task. Experiments 2a and 2b addressed this question for consonantal onsets and codas, respectively.

As noted earlier, Mitterer (2011), using a procedure similar to ours, failed to find evidence for predicted asymmetries. Our studies differ from Mitterer’s in several ways, most notably in using images rather than printed words and in examining effects of mispronunciations. We discuss ramifications of these differences later in Experiment 3.

Methods

Participants

Sixty-three monolingual English-speaking adults aged 19 to 37 were recruited from students and staff members at Brown University. Thirty-one adults were tested in Experiment 2a for onsets, and thirty-two were tested in Experiment 2b for codas. Seven participants were excluded from data analysis due to failure in the eye tracker calibration (4), failure to follow instructions (2), and discomfort with the experimental settings (1). This left twenty-eight participants in each experiment.

Stimuli

Familiar stimuli comprised a set of highly frequent objects with familiar names; the distractors comprised a set of rare or fantastical objects whose names were unlikely to be known. An example stimulus pair is depicted in Figure 7.

Figure 7.

Sample visual stimulus pair in Experiments 2a & 2b.

One hundred and eight trials were used, with seventy-two experimental trials and thirty-six filler trials. Among the seventy-two experimental trials, there were eighteen correct coronal trials (e.g. duck/cat), eighteen mispronounced coronal trials (e.g. guck/cak), eighteen correct noncoronal trials (e.g. cat/duck), and eighteen mispronounced noncoronal trials (e.g. tat/dut). Among the thirty-six filler trials, there were eighteen correct fillers (e.g. hand) and eighteen novel fillers (e.g. pruse). According to the Corpus of Contemporary American English (Davies, 2011), mean log frequencies were 3.82 (SD = 0.61) for items with coronal onsets, 4.01 (SD = 0.57) for items with noncoronal onsets, 4.11 (SD = 0.56) for items with coronal codas, and 3.73 (SD = 0.70) for items with noncoronal codas. Differences in frequencies were not significant for onset items (t (70) = −1.362, p = .177), but items with coronal codas occurred more frequently than items with noncoronal codas (t (70) = 2.591, p = .012). Because our selection of items was constrained by imageability and main effects of place of articulation were not of central interest, we judged these differences in item frequencies to be acceptable.
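The item-frequency comparisons can be approximated from the reported summary statistics alone. The sketch below computes a pooled-variance two-sample t statistic, assuming 36 items per group (72 experimental items, hence 70 degrees of freedom) and taking 3.73 to be the mean for noncoronal codas. Because the means and SDs are rounded to two decimals, the output matches the reported values only approximately (about −1.37 and 2.54). The function name `pooled_t` is hypothetical.

```python
import math


def pooled_t(mean1, sd1, n1, mean2, sd2, n2):
    """Two-sample t statistic with pooled variance; returns (t, degrees of freedom)."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / se, df


N_ITEMS = 36  # assumption: 72 experimental items split evenly by place of articulation

# Coronal vs. noncoronal onsets (mean log frequency, SD)
t_onset, df_onset = pooled_t(3.82, 0.61, N_ITEMS, 4.01, 0.57, N_ITEMS)
# Coronal vs. noncoronal codas
t_coda, df_coda = pooled_t(4.11, 0.56, N_ITEMS, 3.73, 0.70, N_ITEMS)

print(round(t_onset, 3), df_onset)
print(round(t_coda, 3), df_coda)
```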

All stimuli were naturally produced by a trained female speaker of American English who produced the utterances with positive affect. The mean length of target items across all conditions was 564.05 msec (SD = 70.84 msec); lengths of target items did not differ significantly across conditions, F (3, 140) = 1.036, p = 0.890. The sets of non-filler items were counterbalanced across two subgroups: the items that one subgroup heard correctly pronounced, the other group heard mispronounced, and vice-versa. Complete lists of words used in the current studies are shown in Appendix C for onsets and Appendix D for codas; accompanying images are provided in Supplementary Material I.

As in Experiment 1, mispronunciations only involved changes in place of articulation. All mispronunciations resulted in non-words in English. Following the rationale described in Experiment 1, each item was presented to each participant one time only.

Procedure

The procedure was similar to that used in Experiments 1a and 1b. The experiment began with detailed written instructions on the computer screen, in which participants were told to click on the image to which the spoken word referred, using a provided mouse. Each trial began with a salience phase, in which participants saw pairs of images with no accompanying speech. Following re-centering of the participant’s gaze on a fixation point, the pair of images reappeared, this time accompanied by a spoken label. In these experiments, the carrier phrase Where’s the was eliminated from the test phase; participants heard only the isolated label.³ Gaze was recorded by an ASL eye-tracker (all subjects in Experiment 2a, 20 subjects in Experiment 2b) or an SMI eye-tracker (eight subjects in Experiment 2b, after the ASL eye-tracker failed). For subjects tested on the ASL equipment, trials were terminated immediately after the mouse click. The SMI software did not allow trials to be terminated on mouse clicks; for subjects tested with this apparatus, trials continued for 4 seconds, at which point the fixation image for the next trial appeared on the screen. Regions of interest were defined based on the locations of the two images shown in each trial and were used to automatically code the eye-tracker output.

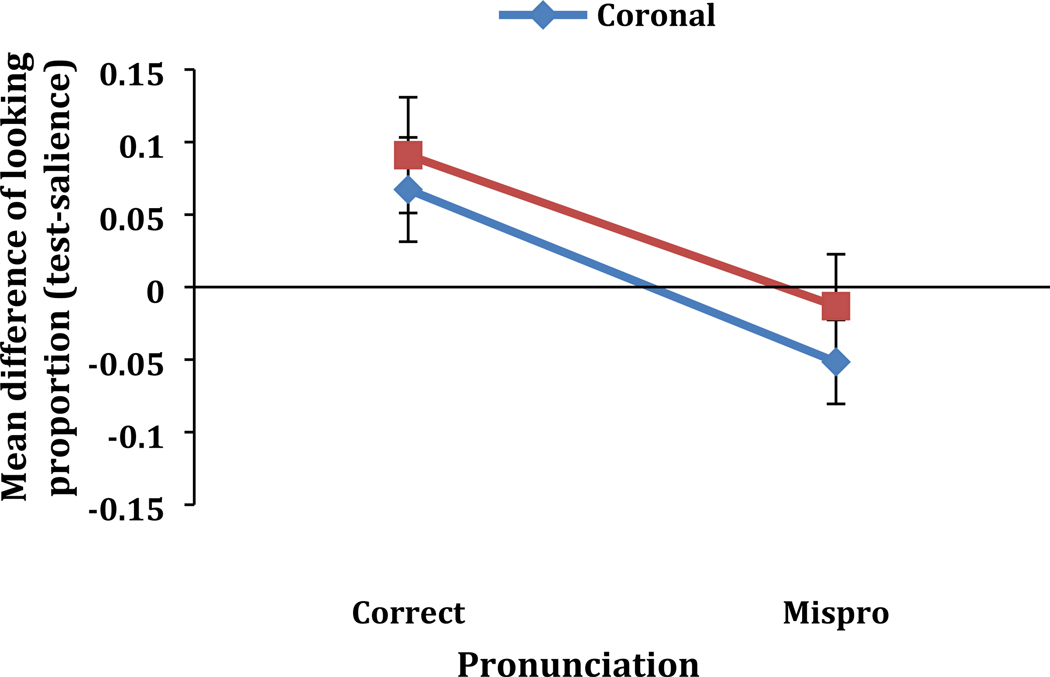

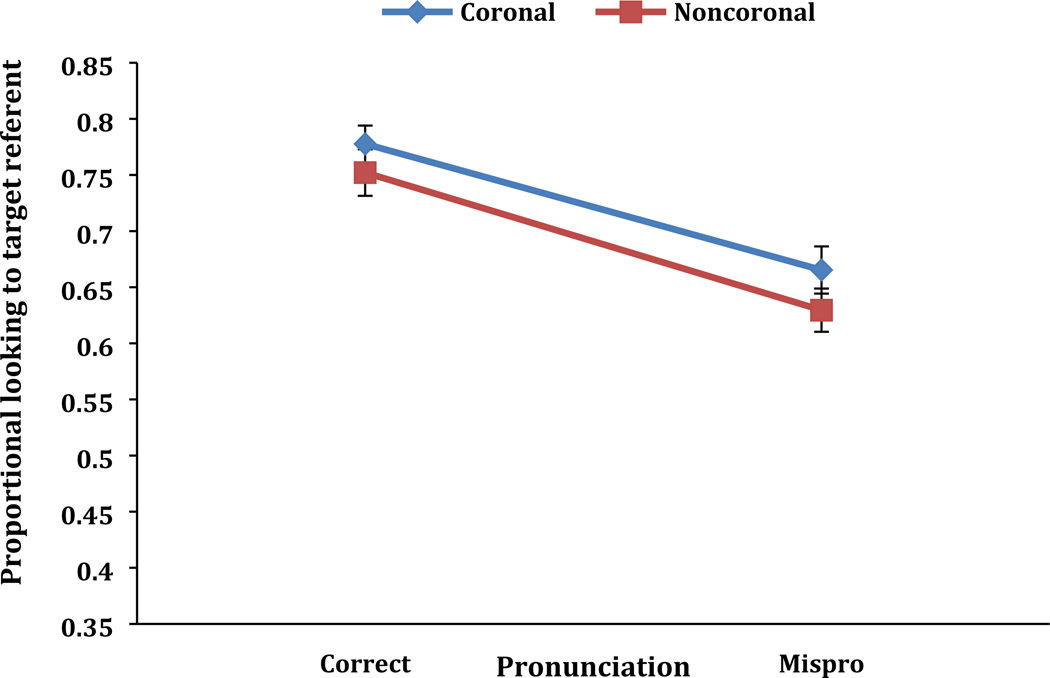

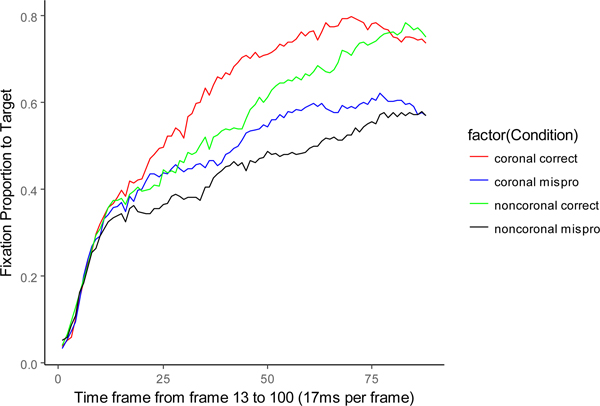

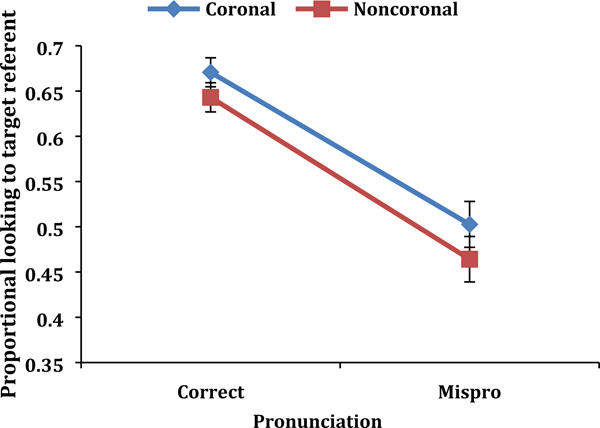

Results and discussion

Data were coded frame by frame using in-house software for the eye-tracking data, with each frame lasting 16.67 milliseconds. Overall summaries of the proportion of looking to the target are shown in Figures 8 and 9 for onsets in Experiment 2a and codas in Experiment 2b, respectively. It is clear from inspection that the underspecification account was again not well supported by our evidence.
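As a rough sketch of how frame-coded binary looks translate into proportions of looking to the target, the following code maps an analysis window in milliseconds onto frame indices and averages across trials within each frame. The function names, the convention that frame f starts at f × 16.67 msec, and the toy trials are assumptions for illustration; they do not describe the in-house software.

```python
FRAME_MS = 16.67  # frame duration reported in the text


def ms_to_frame(t_ms, frame_ms=FRAME_MS):
    """Index of the frame nearest to a time point (assumes frame f starts at f * frame_ms)."""
    return round(t_ms / frame_ms)


def proportion_to_target(trials, start_ms, end_ms, frame_ms=FRAME_MS):
    """Per-frame proportion of trials with a look to the target.

    trials: list of per-trial lists of 0/1 values, one value per frame
            (1 = gaze on target). Trials may differ in length, e.g. when a
            trial ends at the mouse click.
    Returns {frame_index: proportion} for frames inside the window (inclusive).
    """
    first, last = ms_to_frame(start_ms, frame_ms), ms_to_frame(end_ms, frame_ms)
    proportions = {}
    for f in range(first, last + 1):
        looks = [trial[f] for trial in trials if f < len(trial)]
        if looks:  # skip frames that no trial reaches
            proportions[f] = sum(looks) / len(looks)
    return proportions


# Toy data only: two trials in which gaze reaches the target at frames 13 and 20.
toy_trials = [[0] * 13 + [1] * 50, [0] * 20 + [1] * 43]
window = proportion_to_target(toy_trials, 216.71, 1016.87)
print(window[13], window[20])
```

The binary value at each frame, rather than the averaged proportion, is what enters the logistic regression; the proportions are what the summary figures display.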

Figure 8.

Experiment 2a: Adults’ sensitivities to different directions of onset mispronunciations. Error bars show two standard errors computed via subject-wise non-parametric bootstrap.

Figure 9.

Experiment 2b: Adults’ sensitivities to different directions of coda mispronunciations. Error bars show two standard errors computed via subject-wise non-parametric bootstrap.
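The error bars in Figures 8 and 9 come from a subject-wise non-parametric bootstrap. A minimal sketch of that idea is shown below: subjects are resampled with replacement, the condition mean is recomputed for each resample, and the standard deviation of those means serves as the standard error. The function name, the number of resamples, and the toy subject means are assumptions, not the authors' settings.

```python
import random
import statistics


def bootstrap_se(subject_means, n_boot=2000, seed=0):
    """Standard error of the group mean via resampling subjects with replacement."""
    rng = random.Random(seed)
    n = len(subject_means)
    boot_means = []
    for _ in range(n_boot):
        # Draw n subjects with replacement and recompute the group mean.
        resample = [subject_means[rng.randrange(n)] for _ in range(n)]
        boot_means.append(sum(resample) / n)
    return statistics.stdev(boot_means)


# Toy per-subject looking proportions for one condition (28 subjects); illustration only.
toy_subject_means = [i / 27 for i in range(28)]
se = bootstrap_se(toy_subject_means)
error_bar_half_width = 2 * se  # the figures plot two standard errors
print(round(se, 3), round(error_bar_half_width, 3))
```

Resampling whole subjects rather than individual trials keeps each subject's trials together, so the resulting standard error reflects between-subject variability.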

As in Experiment 1, we conducted Bayesian statistical analyses using the same mixed effects logistic regression. Time course data are depicted in Figure 10 and Figure 11 for Experiment 2a and 2b, respectively.

Figure 10.

Experiment 2a: Time course data of adults’ sensitivities to different directions of onset mispronunciations

Figure 11.

Experiment 2b: Time course data of adults’ sensitivities to different directions of coda mispronunciations

As in Experiment 1, we first conducted the Bayesian Mixed Effects Logistic Regressions using non-informative priors, and time windows were selected for analyses to capture lexical activation in all experimental conditions. In particular, the windows from 216.71 to 1016.87 msec and from 216.71 to 1667 msec after the initiation of each trial were selected for analyses in Experiments 2a and 2b, respectively.

Posterior distributions for comparisons testing the two main effects and the interaction are summarized for Experiment 2a and 2b in Table 5.

Table 5.

Posterior distributions of coefficients of Experiment 2a and 2b

| | Experiment 2a Adult Onset | | | | Experiment 2b Adult Coda | | | |
|---|---|---|---|---|---|---|---|---|
| Coefficients | Mean | SD | 95% HDI lower | 95% HDI upper | Mean | SD | 95% HDI lower | 95% HDI upper |
| Intercept | −0.39 | 0.16 | −0.69 | −0.08 | −0.50 | 0.18 | −0.85 | −0.15 |
| Time | 0.39 | 0.17 | 0.06 | 0.72 | 0.96 | 0.08 | 0.81 | 1.11 |
| Pronunciation | 0.58 | 0.22 | 0.16 | 1.00 | 0.68 | 0.16 | 0.37 | 0.99 |
| Place of Articulation (POA) | −0.21 | 0.14 | −0.47 | 0.06 | −0.28 | 0.20 | −0.67 | 0.12 |
| POA*Pronunciation | 0.03 | 0.30 | −0.56 | 0.59 | −0.06 | 0.14 | −0.32 | 0.21 |
| Pronunciation*Time | 0.50 | 0.17 | 0.16 | 0.83 | 0.38 | 0.11 | 0.17 | 0.59 |
| POA*Time | 0.22 | 0.19 | −0.16 | 0.62 | 0.06 | 0.10 | −0.13 | 0.26 |
| POA*Pronunciation*Time | −0.27 | 0.24 | −0.74 | 0.20 | −0.07 | 0.11 | −0.29 | 0.15 |

For both experiments, the 95% HDIs of the posterior distributions for (mis)pronunciation by time interaction exclude zero, suggesting that participants in both experiments were sensitive to 1-feature mispronunciations over time. By contrast, zero was not excluded from the HDIs of the posterior distributions for the (mis)pronunciation by place of articulation interaction for either experiment: these effects are not credibly different from zero.

We next ran Bayesian Model Comparisons to assess the underspecification and equivalent-representation accounts using the two informed sets of priors as in Experiment 1. Given the coda data in Experiment 2b, the posterior probability of the model based on parameters derived from the underspecification account (P(MU|D)) was 0, whereas the posterior probability of the model based on parameters derived from the equivalent representation account (P(MER|D)) was 1. The ratio of the two probabilities yields a decisive Bayes factor of infinity. Therefore, for the data in both Experiments 2a and 2b, the equivalent representation parameters provide a credible account of the data, whereas the underspecification parameters do not. We also conducted time course analyses for data in both experiments. The results are consistent with the previous analyses: no evidence for the predicted psycholinguistic effects of underspecification was found. Details can be found in Supplementary Material II.

Like 19-month-olds, when assessed in a task that measured on-line processing, adult participants were sensitive to one-feature mispronunciations. Taking the findings of Experiment 1 and Experiment 2 together, we conclude that toddlers’ early lexicon is adult-like in terms of phonological details, suggesting substantial developmental continuity between early and mature lexical representations.

Also like 19-month-olds, adults were not sensitive to the direction in which those mispronunciations took place. These findings suggest that for purposes of immediate processing, coronal and noncoronal onsets and codas are equally represented with phonological details at the lexical level for adults, contrary to predictions from the underspecification hypothesis.

EXPERIMENT 3

Does the visual world procedure used in Experiments 1 and 2 tap into underlying lexical representations? In our procedure, subjects saw images of possible referents in the salience phase before they heard any label in the test phase; both toddlers (Mani & Plunkett, 2010) and adults (Meyer, Belke, Telling, & Humphreys, 2007) can generate implicit labels upon seeing referents with known names. The task in Experiments 1 and 2 could thus be conceived as recruiting “a phono-lexical matching process between the heard label and the [implicit] label for the perceived object [that determines] the goodness-of-fit” (Mayor & Plunkett, 2014, p. 93). This suggests an alternative explanation for our failure to find asymmetries: if the implicit labels are full-fledged surface forms with values filled in for any features that are unspecified in underlying representations, our task amounts to asking subjects to compare one surface form to another, and no asymmetries would be predicted on any account.

This concern applies even more strongly to Mitterer’s (2011) studies, which used printed words as referents rather than images. Although printed referents circumvent limitations of imageability and allow a wider choice of stimulus words (McQueen & Viebahn, 2007), use of such referents introduces additional interpretive difficulties. First, orthographic similarities may influence competitor activations (Salverda & Tanenhaus, 2010). In Mitterer’s experiments, competitors differed from targets in the initial letter (and onset phone), but then shared several subsequent letters. Thus competition may have occurred at an orthographic level in addition to or even in place of phonological competition.

Salverda and Tanenhaus argue that “in the absence of sufficient preview time to read the printed words, orthographic rather than phonological similarity will mediate the mapping between an unfolding spoken word and potential printed referents” (p. 1109). To counteract unwanted orthographic effects, Mitterer allowed subjects additional time to read the printed words before hearing the spoken stimuli (personal communication, 7/26/2014). However, while increased preview time may have eliminated orthographic effects, it also made subjects more likely to generate representations of productive pronunciations, either through explicit subvocal rehearsal (Baddeley & Hitch, 1975) or via implicit access to articulatory codes for the printed stimuli (Wheat, Cornelissen, Frost, & Hansen, 2010; Klein et al., 2014). Again, if such productive phonological representations are fully specified surface forms, there will be no cause for asymmetric effects on any account.

To resolve this problem, we conducted a new version of the adult onset study. The stimuli and design were the same as in Experiment 2a, except that the salience phase was eliminated so that the pictures appeared synchronously with the onset of the label at the start of each trial. In this fashion, subjects were not afforded opportunities to generate implicit pronunciations but rather could only compare the heard label with existing lexical representations to determine goodness-of-fit.

Methods

Participants

Twenty-four monolingual English-speaking adults aged 19 to 57 were recruited from students and staff members at Brown University.

Stimuli

The same stimuli were used as in Experiment 2a.

Procedure

The salience phase used in earlier experiments was eliminated; only the test phase as described earlier was used. Participants’ eye-movements were recorded by an SMI eye-tracker. Each trial began with a fixation point in the center of the screen. Once the participant fixated the fixation point for 500 msec, the trial began automatically: the label was played and the two pictures appeared simultaneously.

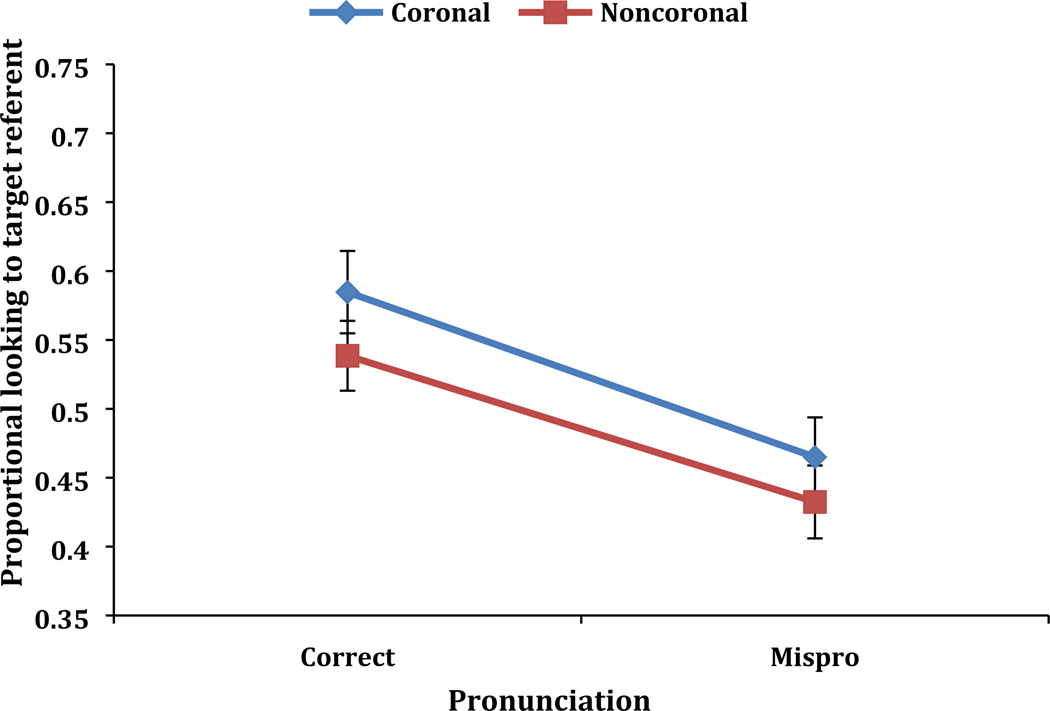

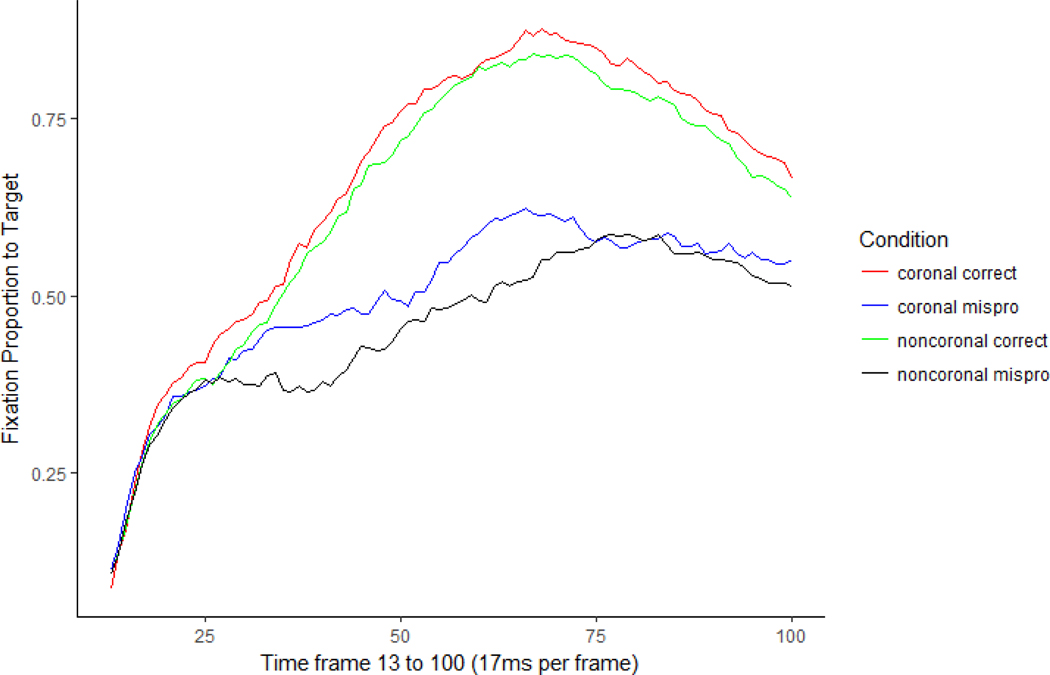

Results and Discussion

As in Experiment 2a, data were coded frame by frame using in-house software, with each frame lasting 16.67 milliseconds, and the dependent measure was participants’ success or failure in looking at the target object at each time frame. The time window used for data analyses was between 216.71 msec and 1066.88 msec after the onset of each trial, when word activation was still taking place in all four conditions. To provide a first inspection of the data, looking proportions to target in each condition are shown in Figure 12 and time course data are shown in Figure 13. It is clear from inspection that the underspecification account was again not well supported by our evidence.

Figure 12.

Adults’ sensitivities to different directions of onset mispronunciations when the baseline phase is removed from the task. Error bars show two standard errors computed via subject-wise non-parametric bootstrap.

Figure 13.

Time course of adults’ sensitivities to different directions of onset mispronunciations when the baseline phase is removed from the task.

As in Experiment 2, we conducted a series of Bayesian analyses to evaluate which model (underspecification (FUL) vs. equivalent specification) best captures the parameter distributions of the data. We first conducted an analysis using non-informative priors. Results for the posterior distribution of each estimated coefficient can be found in Table 6.

Table 6.

Posterior distributions of coefficients of Experiment 3

| Coefficient | Mean | SD | 95% HDI lower | 95% HDI upper |
|---|---|---|---|---|
| Intercept | −0.26 | 0.18 | −0.60 | 0.09 |
| Time | 0.45 | 0.10 | 0.25 | 0.64 |
| Pronunciation | 0.72 | 0.13 | 0.48 | 1.00 |
| Place of Articulation (POA) | −0.11 | 0.13 | −0.37 | 0.15 |
| POA*Pronunciation | 0.02 | 0.13 | −0.23 | 0.28 |
| Pronunciation*Time | 0.54 | 0.11 | 0.33 | 0.75 |
| POA*Time | −0.02 | 0.05 | −0.12 | 0.08 |
| POA*Pronunciation*Time | 0.01 | 0.03 | −0.06 | 0.07 |

We then specified priors that were appropriate for the underspecification and equivalent representation accounts to provide principled quantitative estimates of the relative credibility of the two accounts.

The posterior probability of the model based on parameters derived from the underspecification account (P(MU|D)) was 0.00009, whereas the posterior probability of the model based on parameters derived from the equivalent representation account (P(MER|D)) was 0.99991 according to the MCMC estimation. As in Experiment 2, we assumed the prior probabilities of the two sets of parameters to be equal. The ratio of these two probabilities (0.99991/0.00009) yields a decisive Bayes factor of approximately 11,110.

In this experiment, the salience phase was dropped from the procedure, so that the patterns of data cannot be ascribed to artifactual comparisons of surface forms but rather reflect the architecture of feature specification in the lexicon. Like Experiments 2a and 2b, Experiment 3 indicates that coronal and noncoronal features are equally specified in the adult lexicon and that psycholinguistic claims based on underspecification (i.e. FUL model) do not hold true.

GENERAL DISCUSSION

Given the central role of word learning in language acquisition, a critical question regarding developmental continuity is whether toddlers’ early lexical representations resemble adults’ mature lexical representations. Studies in recent years have shown that early and mature lexical representations do include similar quantities of phonological detail (Swingley & Aslin, 2000; White & Morgan, 2008). However, it has not previously been investigated whether the quality of phonological details in toddlers’ representations is also adult-like.

As a first attempt to investigate whether certain phonological features are consistently (under)specified across development, we have reported a series of studies, two with toddlers and three with adults, using a simplified visual-world procedure that directly and immediately taps into lexical activations. The results of our studies are uniform: for both toddlers and adults, for both onsets and codas, with and without prior exposure to images, looking to the referents of familiar words decreased when those words were mispronounced.

These results replicated findings in the literature that toddlers are sensitive to one-feature mispronunciations (Swingley & Aslin, 2000; White & Morgan, 2008), indicating that early lexical representations include very detailed information in terms of phonological features. Given that we also found the same results with adult listeners, we can conclude that toddlers’ early lexical representations are adult-like in terms of the amount of phonological details. Therefore, contrary to claims of phonological reorganization (Storkel, 2002; Walley, 2005) our findings suggest developmental continuity between toddlers’ early lexicon and adults’ mature lexicon.

More importantly, in no case did the nature of the mispronunciations make any difference: whether coronals were mispronounced as noncoronals or noncoronals were mispronounced as coronals, looking time to the familiar referent decreased in much the same proportion. Such findings suggest that coronal and noncoronal features are equally specified in both 19-month-olds’ and adults’ lexical representations. Our results are clearly inconsistent with predictions from underspecification-based accounts such as the Featurally Underspecified Lexicon model (Lahiri & Reetz, 2002, 2010). Our adult studies mirror those of Mitterer (2011), who tested Dutch-speaking adults. As we did, Mitterer consistently failed to find evidence for asymmetries predicted by the FUL model: looking to printed-word onset competitors differing from targets by one or two features was the same regardless of featural relations. Thus, across procedures, languages, and ages, converging results indicate that there are no differences in lexical representations of coronal versus noncoronal segments.

Nevertheless, as noted earlier, a number of studies have found evidence for the asymmetries predicted by accounts such as the FUL model. For example, in a gating task, Bengali speakers gave few CVN responses to early gates of CVN stimuli, consistent with the claim that oral vowels in Bengali are unspecified for nasality (Lahiri & Marslen-Wilson, 1991). Lahiri & van Coillie (1999) found that the German word for “railway”, bahn, could still prime the word for “train” when it was mispronounced as bahm. However, the word for “tree”, baum, did not prime the word for “bush” when it was mispronounced as baun. In several follow-up studies, Lahiri and colleagues replicated the same pattern of asymmetry for word onsets (Friedrich, Eulitz & Lahiri, 2008), medial positions (Friedrich, Eulitz & Lahiri, 2006), and vowels (Obleser, Lahiri & Eulitz, 2004; Lahiri & Reetz, 2010) using priming or lexical decision tasks. In addition, using the oddball paradigm, Cornell, Lahiri & Eulitz (2011, 2013) and Eulitz & Lahiri (2004) found similar patterns of asymmetry at an early stage of speech perception (around 250 milliseconds) for English consonant onsets and vowels and German vowels, respectively.

Van der Feest and Fikkert (2015) used an Intermodal Preferential Looking Procedure similar to ours and showed that 20- and 24-month-old Dutch-learning toddlers respond asymmetrically to place-of-articulation mispronunciations of coronal- and labial-initial familiar words. Using a novel word-learning task, Tsuji et al. (2016) also found a lack of sensitivity to mispronunciations of coronal words in 18-month-old Japanese and Dutch toddlers. Thus, across procedures, languages, and ages, converging results indicate that there are differences in representations of coronal versus noncoronal segments.

How can these contradictory findings be reconciled? One possible approach lies in considering the nature of the representations engaged in the various procedures. The visual world/mispronunciation task that we used here exploits relations between word-forms and referents – relations that are fundamentally lexical. We will argue below that some of the tasks that have yielded evidence apparently in support of lexical underspecification engage only phonetic representations, whereas others recruit, in addition, strategic, metalinguistic representations.

As also suggested by Mitterer, findings from studies that measure early stages of processing may reflect phonetic processing. Recent EEG studies examining underspecification have mostly used the oddball paradigm, in which participants passively listen to stimuli changing from standard to nonstandard forms (Cornell, Lahiri & Eulitz, 2011, 2013; Eulitz & Lahiri, 2004). In these studies, asymmetrical sensitivities are typically found at temporally very early stages. Moreover, no lexical activation is required for this sort of task: stimuli may comprise nonce items as well as existing words.

Nor is it clear whether results from studies with young infants supporting underspecification-based accounts can reasonably be construed as reflecting properties of lexical representations. Tsuji et al. (2015) tested 4-month-old Dutch-learning infants and Japanese-learning infants in a habituation paradigm on their sensitivities to different directions of changes between nonce items ompa and onta and found discrimination of the items when infants were habituated to ompa but not to onta. Similarly, Dijkstra & Fikkert (2011) tested six-month-old Dutch infants in a habituation paradigm on their sensitivities to different directions of changes between nonce items paan and taan and found discrimination of the items when infants were habituated to paan but not to taan. By six months, infants can form representations that have at least some lexical properties: they can use their own names and other highly familiar forms as anchors for segmentation (Bortfeld, Morgan, Golinkoff, & Rathbun, 2005), and they can associate some form of reference with well-known words (Bergelson & Swingley, 2012). Moreover, by eight months, infants can use syllable combinations extracted from statistical learning tasks in word-like fashions (Erickson, Thiessen, & Graf Estes, 2014; Saffran, 2001). However, there is no evidence that infants can do any of these things with the repeated, isolated forms characteristic of habituation stimuli, or that they assign them any sort of lexical representation. The conservative interpretation, thus, is that infants treat such stimuli as mere phonetic sequences.

If subjects are processing stimuli solely at a phonetic level in these procedures, it is possible that the asymmetries found in these studies may reflect statistical or distributional differences between speech categories in competition. Coronal sounds are more frequent and more variable as a class than are noncoronal sounds; we argue that either of these properties is sufficient for producing asymmetrical judgments.

Phoneticians and phonologists have often noted that, cross-linguistically, coronal sounds are more frequent in occurrence than noncoronal sounds (e.g., Maddieson, 1984; Keating, 1990). To supplement these observations, we tabulated the frequency of occurrence of relevant classes of sounds in the CALLHOME database for several languages in diverse language families, including Arabic (Kilany, Gadalla, Yacoub, & McLemore, 2002), Japanese (Kobayashi, Crist, Kaneko, & McLemore, 1996), and Spanish (Garrett, Morton, & McLemore, 1996). Results from our tabulations are shown in Table 7. In each of these languages, coronal segments as a class are at least twice as frequent as either labial or velar segments. The same pattern generally holds with respect to stop consonants as a class and with respect to minimally contrastive pairs of segments.

Table 7.

Frequencies of segments in CALLHOME transcripts by place of articulation

| Language | Segments | Labial | Coronal | Velar |
|---|---|---|---|---|
| Arabic | All consonants | 91,409 | 222,774 | 94,624 |
| Arabic | Stops | 25,592 | 54,279 | 38,544 |
| Japanese | All consonants | 57,513 | 236,813 | 99,760 |
| Japanese | Stops | 15,854 | 62,241 | 79,117 |
| Spanish | All consonants | 101,717 | 320,167 | 53,483 |
| Spanish | Stops | 44,366 | 62,961 | 43,005 |

Japanese is exceptional: velar stops (in particular, /k/ and /ky/) occur more often than coronal stops (in particular, /t/). Thus, Japanese may provide an interesting test-bed for teasing apart effects of processing at featural and segmental levels.⁴ As discussed previously, Tsuji et al. (2015) showed that Japanese-learning 4-month-olds display asymmetric perceptions of coronal and labial segments, discriminating changes from a labial to a coronal but not vice versa. This finding is consistent with the frequency-based account proposed here (see Table 7): changes from less frequent categories to more frequent categories are easier to detect than the reverse. The critical question here is whether this effect is reversed when coronal and velar segments are considered in similar speech perception tasks that do not involve lexical activation.
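The frequency comparisons drawn from Table 7 can be checked with a few lines of arithmetic. The sketch below simply transcribes the counts from the table and computes the ratio of coronal to labial and coronal to velar tokens; the names `TABLE_7` and `coronal_ratios` are illustrative and not part of the original tabulation.

```python
# Counts transcribed from Table 7 (CALLHOME transcripts),
# stored as (labial, coronal, velar) for each language and segment class.
TABLE_7 = {
    ("Arabic", "All consonants"): (91_409, 222_774, 94_624),
    ("Arabic", "Stops"): (25_592, 54_279, 38_544),
    ("Japanese", "All consonants"): (57_513, 236_813, 99_760),
    ("Japanese", "Stops"): (15_854, 62_241, 79_117),
    ("Spanish", "All consonants"): (101_717, 320_167, 53_483),
    ("Spanish", "Stops"): (44_366, 62_961, 43_005),
}


def coronal_ratios(counts):
    """Return (coronal/labial, coronal/velar) for one row of the table."""
    labial, coronal, velar = counts
    return coronal / labial, coronal / velar


for (language, segments), counts in TABLE_7.items():
    vs_labial, vs_velar = coronal_ratios(counts)
    print(f"{language:<9}{segments:<15}"
          f"coronal/labial = {vs_labial:.2f}  coronal/velar = {vs_velar:.2f}")
```

A ratio above 2 in an "All consonants" row corresponds to the claim that coronals are at least twice as frequent as the comparison class; a coronal/velar ratio below 1 in the Japanese "Stops" row corresponds to the exception noted above.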

If we conceive of speech sound categories as attractors (see also Tuller et al., 1994) with strength proportional to the experienced frequency of constituent features or sounds, then categories with higher frequencies of occurrence may tend to pull in, or assimilate, exemplars of less frequent speech categories, especially when the two categories are close to each other in perceptual space (Anderson, Morgan, & White, 2003). Since coronal sounds (and the coronal place of articulation) occur more often, noncoronal sounds are more likely to be perceived as coronal sounds than vice versa. Feldman, Griffiths & Morgan (2009) elaborated this idea on a more quantitative basis. Conceiving of speech perception as a process that uses Bayesian inference to reconstruct the stimulus from noise, their computational model suggested that, given a set of speech categories c and observed data d, the posterior probability of any given speech category is given by Bayes’ rule: $P(c_i \mid d) = \frac{P(d \mid c_i)\,P(c_i)}{\sum_j P(d \mid c_j)\,P(c_j)}$. Differences in the frequencies of phonetic categories correspond to differences in their prior probabilities $P(c_i)$. This difference, as modeled by a bias term of magnitude $\log \frac{P(c_1)}{P(c_2)}$, can cause a shift in the discriminative boundary between the two categories under comparison ($c_1$ and $c_2$): the higher the prior of a specific category, the more likely that a given stimulus will be classified in this category. Therefore, in cases such as discrimination of repetitions of taan from alternations of taan and paan, multiple categories come into play, and this log ratio of priors influences the outcomes of competitions. Here, if the attractor for /t/ (or taan) is sufficiently more powerful than the attractor for /p/ (or paan), repetitions of taan and alternations of taan and paan may not be discriminable. On this view, the 6-month-olds tested by Dijkstra & Fikkert (like the 4-month-olds tested by Tsuji et al.) came to their study with somewhat more experience with coronals than with labials, though with relatively little experience overall. Habituation with taan served to strengthen further the relatively strong /t/ (taan) attractor, so that after habituation, infants’ ability to discriminate repetitions of taan from alternations of taan and paan was diminished. Other infants were habituated with paan; for these infants, habituation served to strengthen the relatively weak /p/ (paan) attractor, leveling the competitive field so that after habituation, infants’ ability to discriminate repetitions of paan from alternations of taan and paan was left intact (or perhaps enhanced). In the case of adult subjects in oddball tasks, well-established coronal attractors are stronger than noncoronal attractors so that changes from coronal standards to noncoronal (mis)pronunciations will be more difficult to detect.
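The effect of unequal priors on a category boundary can be illustrated with a minimal sketch. It assumes two one-dimensional Gaussian categories with equal variance, which is a simplification chosen here for illustration and not a reimplementation of the Feldman et al. model. Under that assumption the posterior follows from Bayes’ rule, and the point of equal posterior probability is displaced from the midpoint between the category means by an amount proportional to the log ratio of the priors. All numerical values (means, variance, priors) are hypothetical.

```python
import math


def posterior_c1(x, mu1, mu2, sigma, prior1):
    """P(c1 | x) for two equal-variance Gaussian categories via Bayes' rule.

    The Gaussian normalizing constants cancel because both categories
    share the same sigma, so only the exponential terms are needed.
    """
    prior2 = 1.0 - prior1
    like1 = math.exp(-((x - mu1) ** 2) / (2 * sigma ** 2))
    like2 = math.exp(-((x - mu2) ** 2) / (2 * sigma ** 2))
    return like1 * prior1 / (like1 * prior1 + like2 * prior2)


def boundary(mu1, mu2, sigma, prior1):
    """Stimulus value at which c1 and c2 are equally probable."""
    bias = math.log(prior1 / (1.0 - prior1))  # log ratio of the priors
    return (mu1 + mu2) / 2 + sigma ** 2 * bias / (mu2 - mu1)


# Hypothetical values: c1 is the more frequent category (prior 0.7).
mu_frequent, mu_rare, sigma = 0.0, 2.0, 1.0
print("boundary, equal priors:  ", boundary(mu_frequent, mu_rare, sigma, 0.5))
print("boundary, unequal priors:", boundary(mu_frequent, mu_rare, sigma, 0.7))
print("P(c1 | midpoint):        ", posterior_c1(1.0, mu_frequent, mu_rare, sigma, 0.7))
```

With equal priors the boundary sits at the midpoint between the means; raising the prior of one category moves the boundary toward the other category, so that a larger region of the stimulus space is assigned to the more frequent category.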

Coronal sounds may also have greater variability than their noncoronal counterparts, not so much due to their greater susceptibility to effects of coarticulation as to the fact that coronals appear in wider ranges of contexts and are more likely to be subject to phonological alternations than are noncoronals. When coarticulatory contexts are controlled, coronals may display less variability in place of articulation than do labials or velars (Sussman, McCaffrey, & Matthews, 1991; Sussman, Hoemeke, & McCaffrey, 1992). However, coronals typically appear in a much wider range of coarticulatory contexts than do noncoronals. In English, for example, of 45 possible onset clusters, only four (e.g., quick) do not include a coronal segment, while of 73 possible monomorphemic coda clusters, only three (e.g., stink) do not include a coronal segment. As we noted earlier, in Catalan, coronal nasals can assimilate in place to following stops, while velar or palatal nasals remain unchanged (Mascaro, 1976). Similarly, in English, coronal word-final obstruents may assimilate in place to following consonants, whereas noncoronal obstruents do not (Marslen-Wilson, Nix, & Gaskell, 1995). Thus, whereas sweet may appear in assimilated forms (sweep boy, sweek girl), deep and steak cannot (*deet tank, *steat dinner).

As a first approximation, segment variability can be estimated by computing the likelihood that a given underlying segment will surface as a different segment. Cohen Priva (2012) used a modified Levenshtein distance metric to align underlying (dictionary) forms and phonetic realizations in the Buckeye Natural Speech Corpus (Pitt, Johnson, Raymond, Hume & Fosler-Lussier, 2007) and to create an articulatory confusion matrix for English segments. Of the 43,915 underlying coronal stop tokens, 21,576 (49%) were pronounced as some other segment, whereas of the 64,288 underlying noncoronal stop tokens, only 2,997 (5%) were pronounced as an alternative segment, a significant difference, t(4) = 248.83, p < .001.⁵ Moreover, coronal stops have larger numbers of alternative manifestations (24 for /d/ and 19 for /t/) than do noncoronal stops (/b/, /g/, /p/, and /k/ each have 7 deviant forms). A chi-square goodness-of-fit test comparing against a theoretical expectation of 12.67 for the three voiced cells and 11 for the three unvoiced cells, i.e., testing against the hypothesis that number of variants does not vary across different places of articulation, yields a χ²(2) of 23.94, p < .001.
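The proportions and the chi-square statistic reported above can be recomputed directly from the counts given in the text. The sketch below does so with the standard library only. The degrees of freedom (2) are taken as reported. For 2 degrees of freedom the chi-square survival function has the closed form exp(-x/2), which is used for the p value. The t test is not reproduced because it requires per-segment data that are not given here. Variable names are illustrative.

```python
import math

# Token counts from the Buckeye-based confusion matrix, as reported in the text.
coronal_total, coronal_changed = 43_915, 21_576
noncoronal_total, noncoronal_changed = 64_288, 2_997

coronal_rate = coronal_changed / coronal_total
noncoronal_rate = noncoronal_changed / noncoronal_total
print(f"coronal stops realized as another segment:    {coronal_rate:.1%}")
print(f"noncoronal stops realized as another segment: {noncoronal_rate:.1%}")

# Number of alternative surface forms per stop, grouped by voicing.
variants = {
    "voiced": {"b": 7, "d": 24, "g": 7},
    "unvoiced": {"p": 7, "t": 19, "k": 7},
}

# Expected count in each cell is the mean within its voicing group,
# i.e., no difference across places of articulation (12.67 and 11).
chi_square = 0.0
for counts in variants.values():
    expected = sum(counts.values()) / len(counts)
    chi_square += sum((obs - expected) ** 2 / expected for obs in counts.values())

df = 2  # degrees of freedom as reported in the text
p_value = math.exp(-chi_square / 2)  # exact survival function when df == 2
print(f"chi-square({df}) = {chi_square:.2f}, p = {p_value:.2g}")
```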

Ren & Austerweil (2017) modeled effects of variability in the following way: If we conceive of speech sound categories as attractors with strength proportional to the experienced frequency of constituent sounds, then categories with broader variability are amenable to a wider range of sounds. Such categories may assimilate exemplars from less variable categories, especially when the two categories are in perceptual proximity. Because coronal sounds have greater variability than noncoronal sounds, they are more likely to assimilate noncoronal sounds than vice versa. Thus, coronals mispronounced as noncoronals are more acceptable in perception than vice versa. Their Bayesian model confirmed this hypothesis: categories with higher variability have higher posterior probabilities than categories with lower variability (Figure 14).

Figure 14.

Simulation of category assignments under the assumption of Gaussian distributions, with the variance of the broad category twice that of the narrow category. Graph excerpted from Ren & Austerweil (2017).
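The logic of the variability account can be sketched under the assumption stated in the Figure 14 caption: two Gaussian categories, with the broad category having twice the variance of the narrow one. The code below is an illustrative approximation rather than Ren & Austerweil's model. It assumes equal priors, and the category means and the narrow variance are hypothetical values chosen so that the categories are well separated.

```python
import math


def gaussian_pdf(x, mean, variance):
    """Density of a one-dimensional Gaussian at x."""
    return math.exp(-((x - mean) ** 2) / (2 * variance)) / math.sqrt(2 * math.pi * variance)


def posterior_broad(x, mean_narrow, mean_broad, var_narrow, var_broad):
    """P(broad category | x), assuming equal priors for the two categories."""
    narrow = gaussian_pdf(x, mean_narrow, var_narrow)
    broad = gaussian_pdf(x, mean_broad, var_broad)
    return broad / (narrow + broad)


# Hypothetical parameters: variance ratio of 2, as in the Figure 14 caption.
mean_narrow, mean_broad = 0.0, 4.0
var_narrow, var_broad = 1.0, 2.0

# Evaluate stimuli from the narrow mean to the broad mean, including the midpoint.
for x in (0.0, 1.0, 2.0, 3.0, 4.0):
    p = posterior_broad(x, mean_narrow, mean_broad, var_narrow, var_broad)
    print(f"x = {x:.1f}  P(broad | x) = {p:.3f}")
```

With these values, a stimulus exactly halfway between the two means is more likely to be assigned to the broad category, which is the sense in which the more variable category can assimilate ambiguous exemplars. This holds only when the means are sufficiently far apart relative to the variances; for closely spaced categories the narrow category's higher peak density can dominate at the midpoint.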

Perceptual asymmetries resembling those purportedly due to underspecification have been observed in other cognitive domains as well, where they have often been ascribed to effects of differences in variability. Quinn, Eimas & Rosenkrantz (1993), for example, found that 4-month-olds habituated to pictures of cat faces could easily detect a change to a picture of a dog face, but infants habituated to dog faces failed to detect a change to a cat face. A series of follow-up studies investigating this asymmetry confirmed that the dog stimuli were more variable in appearance and that when variability was equated across categories the asymmetry disappeared (Eimas, Quinn & Cowan, 1994; Mareschal, French & Quinn, 2000; Mareschal & Quinn, 2001). Similarly, in music perception, Delbé, Bigand & French (2006) examined effects of variability by training non-musician adults with two distributions of pitch sequences differing in variability and then testing them on sensitivities to the two directions of changes. Their results indicated that changes from the less variable category to the more variable category are more detectable than vice versa. Therefore, while underspecification may cause asymmetry, asymmetry is not necessarily a consequence of underspecification.

To recap, some of the tasks that have been used in studies supporting underspecification-based accounts such as the Featurally Underspecified Lexicon model engage only phonetic representations. During this stage of word recognition, speech perception is affected by the statistical properties of native speech categories, such as frequency of occurrence and variability of the speech category. We have argued that perceptual asymmetries can occur at this level, and may be the consequence of differences in statistical properties – frequency and/or variance – of the categories under comparison. Thus, rather than claiming that perceptual asymmetries originate from the lexical level where different phonological features are specified in different manners, we argue that asymmetries found in studies reviewed above are phonetic in nature. Moreover, rather than arguing that perceptual asymmetries are consequences of universal grammar, we argue that they may arise due to domain-general computational properties of cognition and depend on experience with input. Regardless of whether our account is correct, however, evidence from procedures such as the oddball task cannot bear on lexical underspecification, because such tasks do not necessarily engage lexical representations.

We note that some recent theoretical developments reject distinctions between pre-lexical and lexical representations (e.g., Arnold et al., 2017; Baayen, Shaoul, Willits, & Ramscar, 2015). On this view, asymmetric results such as those just described may arise from on-going adjustments of perception that are sensitive to both present and past statistical properties of experience (e.g., Marsolek, 2008), where the statistical properties that are relevant may depend on the nature of the task.

Other tasks that have been used to support underspecification-based accounts, such as lexical decision and semantic priming, however, clearly do engage lexical representations. Here the critical difference between studies that have found evidence consistent with predictions from underspecification and those that have not appears to involve the number of times items were presented for judgments. In both our studies and those of Mitterer (2011), items were presented for judgment one time only; the asymmetries characteristic of underspecification were not observed. Item repetition is central to some of the tasks that have supported psycholinguistic claims of underspecification. In ERP studies, some of which have also incorporated behavioral lexical decision tasks, items are repeated multiple times so that responses may be averaged, filtering noise. In gating studies, auditory stimuli are presented repeatedly, with progressively less truncation, while subjects are asked to guess which word they have heard. Lahiri & Marslen-Wilson (1991) report data on 11 gates per item. Priming and lexical decision procedures do not require item repetition, but in studies using these techniques that have supported underspecification, item repetition has been the rule. For example, in Roberts, Wetterlin & Lahiri (2013), in which a delayed semantic priming task was used, each testing item was repeated 4 times. In Friedrich, Lahiri and Eulitz (2008), Experiment 2, which used auditory priming, each testing item was also repeated 4 times. Similarly, in studies with toddlers somewhat similar to our own, both van der Feest and Fikkert (2015) and Tsuji et al. (2016)⁶ presented target items multiple times each. Most tellingly, lexical decision tasks have consistently failed to find evidence for underspecification when there was no item repetition (e.g., Friedrich, Lahiri, & Eulitz, 2006; Experiment 1 in Friedrich, Lahiri, & Eulitz, 2008).⁷

A voluminous literature has considered effects of item repetitions in memory and lexical decision tasks (e.g., Britt, Mirman, Kornilov, & Magnuson, 2014; Dutilh, Krypotos, & Wagenmakers, 2011; Forbach, Stanners, & Hochhaus, 1974; Rugg, 1990); a comprehensive review of this literature is beyond the scope of this article. However, item repetitions affect behavior in a variety of ways: latencies become shorter, speed of information processing increases, and response biases may be adjusted. Judging the same items repeatedly also gives subjects additional opportunities to invoke strategies and may fundamentally change the perceived nature of the task. For example, whereas hearing guck once may interrogate the degree to which it activates duck - a perceptual task - hearing these two pronunciations multiple times might be construed as asking whether guck is a plausible mispronunciation of duck. This latter is a metalinguistic task; subjects may use their (implicit) knowledge that coronals frequently assimilate in place to preceding or following segments, whereas noncoronal segments do so rarely if at all, to judge that guck may be a plausible mispronunciation of duck, but tat is not a plausible mispronunciation of cat. Tasks in which responses are influenced by supra-lexical, metalinguistic knowledge cannot provide decisive evidence on lexical underspecification: underspecification predicts asymmetries in judgments, but asymmetries in judgments are not all attributable to underspecification.