Abstract

To preserve competitive advantage in an increasingly digitalized environment, today’s organizations seek to assess their level of digital maturity. Given this practical relevance, a plethora of digital maturity models, designed to assess a company’s digital status quo, has emerged over the past few years. Largely developed and published by practitioners, the academic value of these models remains unclear. To shed light on their value in a broader sense, in this paper we critically evaluate 17 existing digital maturity models, identified through a systematic literature search (2011–2019), with regard to their validity of measurement. We base our evaluation on established academic criteria, such as generalizability or theory-based interpretation, that we apply to these models in a qualitative content analysis. Our analysis shows that most of the identified models do not conform to the established evaluation criteria. Based on these insights, we derive a detailed research agenda and suggest respective research questions and strategies.

Keywords: Digital maturity models, Measurement, Research agenda

Introduction

If we believe the press, consultancies, and the majority of researchers, being ‘digital’ is paramount for companies to stay competitive in today’s business world. The goal is to seize the opportunities of the ongoing digital transformation [1]. To evaluate the status quo of a company’s digitalization and to provide guidance for future investments, recent IS literature has established the term digital maturity. Following Chanias and Hess, digital maturity is “the status of a company’s digital transformation”; it describes “what a company has already achieved with regard to transformation efforts” [2]. Here, efforts include implemented changes from an operational point of view as well as acquired capabilities regarding the mastering of the transformation process. In this context, given the high practical relevance of the topic, a great variety of so-called digital maturity models (DMMs) has emerged in the past few years. According to Mettler [3], this trend will prevail as the demand for maturity models will further increase.

Largely developed and published by consultancies in a practical setting, DMMs aim, on the one hand, at measuring the current level of a company’s digitalization and, on the other hand, at providing a model path to digital maturity. However, due to their practical nature and the lack of external quality assessments such as peer reviews, the generalizability and consistency of DMMs remain largely unclear. The constant publication of new maturity models for similar fields of application suggests a certain arbitrariness and does not help to clarify this issue.

An analysis of the corresponding literature shows that the academic community does not yet provide comprehensive answers to these questions either. Although some scholars attempt to measure digitalization based on more concrete metrics (e.g. [4]), these metrics are neither comprehensive nor generalizable.

To provide not only practical but also substantial theoretical value, DMMs should conform to several academic requirements such as a common understanding of their underlying concepts. However, although the terms digital and digitalization of a company have existed for many years, they still remain abstract. Related concepts are context-dependent (e.g., society, company, individual) and vary according to the perspectives on the topic (e.g., human, process, technology). This lack of a common and concrete definition leads to a certain ambiguity already when it comes to the basic task of DMMs: measuring a company’s level of digitalization. In this context, questions arise such as:

What are relevant variables to measure digitalization? How can they be quantified? How can a certain comparability between companies be ensured? How can we investigate if a certain level of digitalization affects the performance of a company?

Unfortunately, the authors of existing models only rarely reveal their motivation behind the procedures and results of their measurement [5].

Given their high practical relevance and popularity, we have set out to evaluate existing DMMs with regard to their conformity to the above-mentioned quality standards and thus their theoretical value. Determining the status quo of digitalization is the main goal of DMMs. Without such an assessment, DMMs cannot provide a comprehensive path to higher levels of maturity. In our analysis, we thus focus on the quality of the measurement of a company’s digitalization carried out by the individual models. In doing so, we follow the request for further scholarly inquiry on digital maturity, in order to contribute to a more profound understanding of the ongoing sociotechnical phenomenon of digital transformation [6]. Furthermore, this work is in line with existing works that call for a deeper understanding, analysis and differentiation of IS maturity models and their usefulness for practitioners [7, 8]. With our undertaking we also answer the call to identify and remedy shortcomings of existing maturity models [5]. The research question we have set out to answer is:

To what extent do DMMs respect quality standards in their measurement of a company’s degree of digitalization?

To answer the research question, we have selected established measurement criteria as basis for the evaluation of the DMMs [9]. In this paper, we will first provide a definition and background information regarding DMMs. Then, we identify quality criteria for the process of measurement and derive a catalogue of requirements from established literature. Based on this catalogue, we will evaluate and compare relevant DMMs identified through a systematic literature search. Finally, after a discussion of this evaluation, we propose a research agenda to remedy shortcomings of existing maturity models and provide guidance for future research.

Theoretical Background

Digital Maturity Models

The term maturity can be defined as “the ability to respond to the environment in an appropriate manner through management practices” [10]. According to Scott and Bruce, maturity with regard to an organization is a “reflection of the appropriateness of its measurement and management practices in the context of its strategic objectives and in response to environmental change” [11]. Rosemann and de Bruin describe maturity more pragmatically as “a measure to evaluate the capabilities of an organization in regard to a certain discipline” [12, p. 2]. Scholars agree that the organization’s reaction to its external environment is generally learned rather than instinctive. However, the maturity of an organization does not necessarily relate to its age.

The evaluation of a firm’s maturity marks one of the key points in the process of achieving a higher level of organizational performance [11]. Defining and assessing the maturity of different types of organizational resources allows organizations to evaluate their capabilities with respect to different business areas. In this context, for example, the maturity of certain organizational processes, objects or technologies can be the focus of such a reflection. Based on this concept, maturity models are multistage frameworks to describe a typical path in the development of organizational capabilities [12]. Here, the different levels are conceptualized in terms of evolutionary stages [13]. Maturity models can be described as normative reference models. In general, they are applied in organizations in order to assess the current status quo of a set of capabilities, to derive measures for improvement and prioritize them accordingly [1]. Through a set of benchmark variables and indicators for each stage, the matrix frameworks offer a possibility to make the progress of an object of interest towards a desired state tangible [14]. The relationship between maturity and organizational performance is properly understood, e.g. higher maturity leads to higher performance [15].

Given the simplistic character of maturity models and their practical value, several frameworks have emerged over the last decades across all disciplines [8]. Most recently, in the context of digitization, researchers and practitioners have set out to assess the digital maturity of organizations [2].

Despite their relevance and popularity in various management disciplines, the development and application of maturity models still bear a number of shortcomings [16]. The most prominent point of criticism concerns the poor theoretical basis and empirical evidence of maturity models [17]. Furthermore, de Bruin et al. claim that particularly limited documentation on the development of the maturity model may contribute to the lack of validity and rigor [19]. In response, IS researchers have increasingly set out to determine guidelines for the development of maturity models that are intended to bolster more rigorous design processes [18]. However, regarding the measurement procedures of maturity models, existing academic literature does not yet provide specific quality criteria. In the following, we thus derive a set of requirements for that purpose based on established literature in the field.

Criteria of a Valid Measurement Process

The procedure of measurement can be considered as one of the main components of the research process itself [19]. Ferris provides a comprehensive definition of the term measurement: “It is an empirical process, using an instrument, effecting a rigorous and objective mapping of an observable into a category in a model of the observable that meaningfully distinguishes the manifestation from other possible and distinguishable manifestations” [22, p. 101].

The quality of measurement is determined by its validity. The concept of validity refers to the substance, the proximity to the truth, of inferences made when justifying and interpreting observed scores. The validity of measurement is evaluated through the underlying assertions, building a complex net of arguments to back up the findings. The judgement about validity is thus made based on so-called validity arguments [19]. According to the Standards for Educational and Psychological Testing “validity is the most important consideration in test evaluation” [20]. However, Kane points out that like many virtues, it is more honored than practised [24]. Therefore, Kane et al. have developed a set of requirements designed to warrant a certain level of validity in performance measurement [9].

The five requirements are: (1) Observation, (2) Generalizability, (3) Theory-based Interpretation, (4) Extrapolation, and (5) Implications [9, 21, 22]. Brühl offers an updated and comprehensive overview of these criteria. After having consulted additional literature in this field, as suggested by Becker et al. [4], we have chosen the five guidelines defined by Kane et al. and Brühl as theoretical foundation and thus as the basis for our argument [19, 25]. The requirements catalogue for a valid measurement process in DMMs is the following:

Observation:

This argument ensures that the measurement of a single indicator is in line with the predefined measurement procedure [19]. In other words, this requirement makes sure that, on the one hand, the appropriate indicator for the subject matter is measured and, on the other hand, that it is measured correctly. In this context, the target domain needs to be defined first. The target domain designates the full range of performances actually observed and included in the interpretation. It differs from a wider range of observations that is not subject to interpretation. A target’s expected score over all possible performances in the target domain is defined as the target score. To determine the target domain and lay the foundation for the target score, the definition of the subject matter to be interpreted should be as specific as possible [9]. As we have already pointed out, the target domain of interest with regard to a company’s digitalization tends to be very broad. Central points of evaluation for the DMMs derived from the argument of observation are thus:

Definition of the phenomenon

Definition of target domain

Predefined measurement procedure to determine target scores.

Generalizability:

This requirement consists in a statistical generalization. Researchers generalize from the observed score, which is based on actual performance, to the expected score over all similar tasks of the target domain. In this context, it is assumed that observed scores are “based on random or at least representative samples” [9]. It is thus implied that a certain measurement is valid at any point in time; all context-specific factors are set aside [19]. For this procedure, the measurement needs to involve a sample of performances from the target domain. Generalizability increases as the sample of independent observations increases. Also, standardization of the assessment procedure tends to improve the generalizability of the scores. This argument leads us to a set of three evaluation criteria for the DMMs:

Measurement approach

Sample size of independent observations

Degree of standardization in the measurement procedure.

Theory-Based Interpretation:

This argument stresses that measurement requires a sound theoretical framework. This means that every single indicator should have a ‘theoretical’ connection to the overall construct [19]. Assumptions that are made need to be backed up by corresponding theory. Given the practical setting the DMMs largely stem from, we will focus in our evaluation on a single key point:

Theoretical basis of the model/measurement procedure.

Extrapolation:

This concept is important for scenarios in which the measured construct is put into relation with other constructs [19]. An example here could be that the level of digital maturity identified by the DMM is put in relation with the performance or competitiveness of a company. In this context, especially the plausibility of inferences is of importance to guarantee the validity of the measurement. With regards to extrapolation we thus focus on:

Assumed connections between maturity level and other constructs

Plausibility of inferences to argue for such a relationship.

Implications:

This criterion is relevant if decisions are to be made based on the measured construct [19]. Here, again the transparency and plausibility of inferences made to justify these decisions are key. A thorough chain of arguments is necessary to back up the path from the initial status quo of digitalization that is measured towards higher levels of digital maturity. For this argument, we thus focus on:

Justification of steps on the path towards maturity.

With regards to the design of our evaluation and comparison of the DMMs we follow Becker et al.’s established work in the field of IT maturity models [5]. We will take these criteria as the basis for evaluating the DMMs identified through the next step (see Sect. 3.2).

Research Design

Literature Review for Selecting Digital Maturity Models

Vom Brocke et al. state that the searching process of the literature review “must be comprehensibly described” so that “readers can assess the exhaustiveness of the review and other scholars can more confidently (re)use the results in their own research” [23]. In this context, researchers of the IS field suggest a systematic and structured way of identifying and reviewing the literature [24].

We searched through an 8-year period (2011 to 2019) in ten leading IS journals, five major IS conferences and two additional databases (Business Source Premier and Google Scholar). The chosen timeframe appears to be especially relevant, as the first so-called DMM was published by a consultancy in 2011. The outlets and databases have been selected based on the experience reported by Poeppelbuss et al. [12], who investigated existing IS maturity models.

The search terms used in our systematic review were constructed based on the PICO criteria (Population, Intervention, Comparison and Outcomes). These criteria are usually applied as guidelines in the medical field to frame the research question by identifying keywords and formulating search strings. Kitchenham and Charters deem the PICO criteria particularly suitable when conducting a systematic review in the academic context of Information Systems [25]. In addition to deriving major search terms through PICO, we identified synonyms and alternative spellings for these keywords by consulting both experts and literature of this field [26]. As a result, the following keywords were compiled: “maturity model”, “stages of growth model”, “stage model”, “change model”, “transformation model”, and “grid”. In addition, we framed the literature search using “Information Technology” and “Digital*”. Lastly, when constructing the search strings, we used the Boolean operators AND and OR: AND to link the major terms derived in the first step, and OR to incorporate the synonyms and alternative spellings. We first applied the search strings to the IS journals and conferences to check the accuracy of the search terms. As a result, we added “grid” as a search term and then applied the search string to the electronic databases.
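The construction of such a search string can be illustrated with a minimal Python sketch. The keywords are those listed above; the grouping into two blocks, the function names, and the exact query syntax are assumptions made for illustration, since the search strings actually submitted to each outlet are not reported here.

```python
# Keywords as listed in the text. Splitting them into a "model" group and a
# "framing" group is an assumption made for this illustration.
MODEL_TERMS = [
    "maturity model",
    "stages of growth model",
    "stage model",
    "change model",
    "transformation model",
    "grid",
]
FRAMING_TERMS = ["Information Technology", "Digital*"]


def or_group(terms):
    """Join synonyms with OR; quote phrases, leave wildcard terms unquoted."""
    parts = [t if "*" in t else f'"{t}"' for t in terms]
    return "(" + " OR ".join(parts) + ")"


def build_search_string(model_terms, framing_terms):
    """Link the two synonym groups with AND."""
    return or_group(model_terms) + " AND " + or_group(framing_terms)


search_string = build_search_string(MODEL_TERMS, FRAMING_TERMS)
print(search_string)
```

Individual databases differ in how they treat quoting and wildcards, so a string built this way would likely need adapting per outlet.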

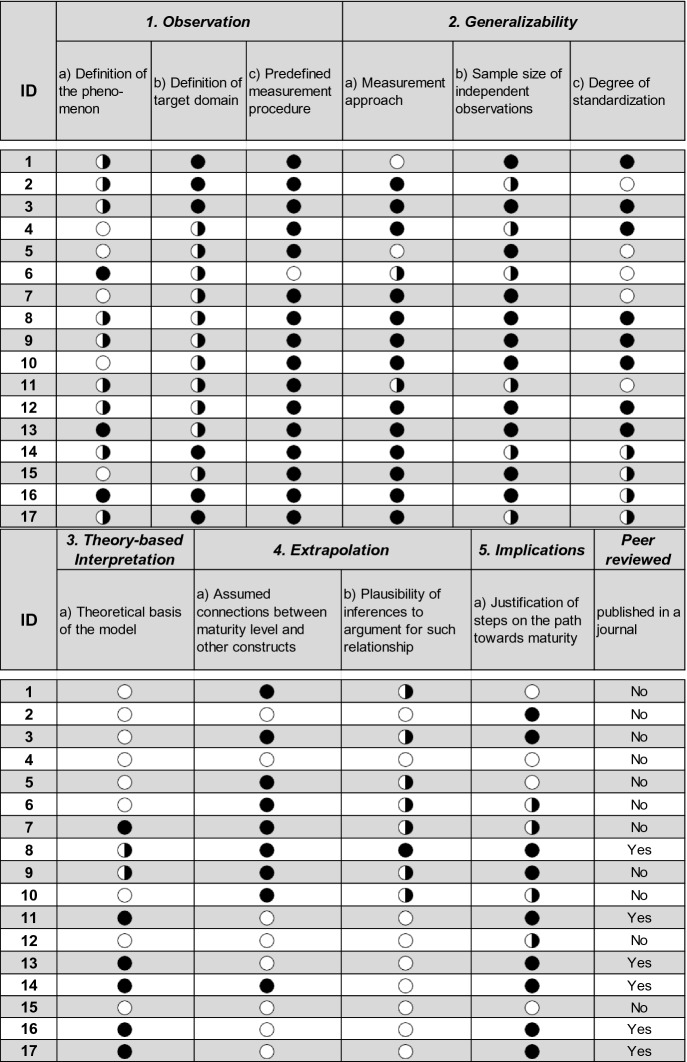

Content Analysis Design

All selected DMMs (see Table 1) were subject to a content analysis. The overarching objective was to identify whether they fulfill the quality criteria defined in Sect. 2.2. We conducted a deductive category assignment in which we considered the corresponding sub-dimensions and assigned one of the following “codes” (see Table 2): A black (‘filled’) Harvey ball indicates that the respective sub-dimension is completely fulfilled. A black-and-white (‘partly filled’) Harvey ball means that the sub-dimension is only fulfilled to some extent. A white (‘blank’) Harvey ball was assigned in case the sub-dimension is not fulfilled at all or not applicable to the model.

Table 1.

Identified digital maturity models

| ID | Reference | Name of DMM | Year |
|---|---|---|---|
| 1 | [27] | Industry digitization index | 2011 |
| 2 | [28] | Digital transformation maturity | 2011 |
| 3 | [29] | Digital maturity matrix | 2012 |
| 4 | [30] | Status of digitalization | 2013 |
| 5 | [31] | Digital quotient | 2015 |
| 6 | [32] | Digital transformation index | 2015 |
| 7 | [33] | Digitale Reife | 2016 |
| 8 | [6] | Stages in digital business transformation | 2016 |
| 9 | [34] | Digital maturity & transformation report | 2016 |
| 10 | [35] | Digital maturity model 4.0 | 2016 |
| 11 | [36] | Digital maturity model for telecom | 2016 |
| 12 | [37] | Industry 4.0 readiness | 2017 |
| 13 | [1] | Digital maturity in traditional industries | 2017 |
| 14 | [38] | Maturity assessment for industry 4.0 | 2018 |
| 15 | [39] | Digitalisierungsindex Mittelstand 2018 | 2018 |
| 16 | [40] | Strategic factors enabling digital maturity | 2019 |
| 17 | [41] | Maturity model of digital transformation | 2019 |

Table 2.

Analysis of digital maturity models - overview

For example (see Table 2): DMM 2 has provided a definition for “digital transformation”, but not for “digital transformation maturity”. Therefore, the sub-dimension 1(a) Definition of the phenomenon has only partially been fulfilled and is marked with a black-and-white Harvey ball.

Following established criteria of qualitative research, this procedure was carried out by two researchers independently. They agreed in 94% of the cases. The remaining cases were discussed among the authors until consensus was reached.
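The coding and agreement check described above can be sketched as follows. This is a minimal illustration, not the authors' actual procedure: the `Code` enum, the function names, and the toy codings are hypothetical. Only the partial rating of DMM 2 on sub-dimension 1(a) is taken from the text; the other entries and the sub-dimension labels "1b" and "3a" are invented for the example.

```python
from enum import Enum


class Code(Enum):
    """The three Harvey ball codes used in the content analysis."""
    FULFILLED = "filled"        # black Harvey ball
    PARTIAL = "partly filled"   # black-and-white Harvey ball
    NOT_FULFILLED = "blank"     # white Harvey ball (also: not applicable)


def percent_agreement(coder_a, coder_b):
    """Share of (model, sub-dimension) cells that both coders rated equally."""
    if coder_a.keys() != coder_b.keys():
        raise ValueError("both coders must rate the same cells")
    if not coder_a:
        raise ValueError("no cells to compare")
    matches = sum(coder_a[cell] == coder_b[cell] for cell in coder_a)
    return 100.0 * matches / len(coder_a)


def disagreements(coder_a, coder_b):
    """Cells that still have to be discussed until consensus is reached."""
    return sorted(cell for cell in coder_a if coder_a[cell] != coder_b[cell])


# Hypothetical toy data: keys are (DMM ID, sub-dimension label).
coder_a = {
    (2, "1a"): Code.PARTIAL,        # example given in the text
    (2, "1b"): Code.FULFILLED,      # invented for illustration
    (2, "3a"): Code.NOT_FULFILLED,  # invented for illustration
    (8, "1a"): Code.FULFILLED,      # invented for illustration
}
coder_b = dict(coder_a)
coder_b[(2, "3a")] = Code.PARTIAL   # one invented disagreement

print(percent_agreement(coder_a, coder_b))  # 75.0
print(disagreements(coder_a, coder_b))      # [(2, '3a')]
```

Simple percent agreement does not correct for agreement expected by chance; whether a chance-corrected coefficient was also computed is not stated in the text.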

Findings

The findings of our analysis are summarized in Table 2. Only three of the 17 analyzed models provide definitions for digital maturity. Five models give no definition of the concept of digital maturity itself or of related concepts such as digital transformation or digitization. However, all of the models offer a number of different dimensions to describe the target domain. Dimensions of the target domain are, for example, customer experience, operational processes, business models and digital capabilities. Furthermore, the vast majority of the DMMs have a predefined measurement approach as well as a fairly large sample. A large share of the models does not provide any information about the degree of standardization in the measurement undertaken. Nine of the analyzed DMMs fail to provide a theoretical basis for their work. In most cases, when assumptions with regard to connections between maturity levels and other constructs are made, these are not backed by plausible argumentation. Also, four of the models fail to justify the order of the consecutive steps towards digital maturity. In total, six models are peer reviewed and published in academic outlets.

Research Agenda

Based on our analysis, we suggest a first draft of a future research agenda for the field of DMMs (see Table 3). The research agenda is the result of the discussion of our findings and corresponding potential avenues for further research.

Table 3.

Suggested research agenda

| Category | Selected research problems/questions | Proposed research strategies/methods |
|---|---|---|
| Definitions and measurement procedure | How to define digital maturity? What are the dimensions of DM? | Conceptual research; content validity checks (e.g., interviews) |
| | How to design a measurement procedure for DM? | Construct development |
| Theoretical framework | How to link/position DMM to existing theories? | Conceptual research |
| | Is there a need for a new DMM theory? What could such a theory look like? | Explorative (theory development); grounded theory |
| Empirical aspects | How to ensure generalizability? | Large data sets, cross-company analysis (multiple case study design) |
| | Evolution of DM over time? | Longitudinal studies |

The main challenge researchers should focus on is developing precise conceptual definitions of DMM-related terms. The current situation with multiple understandings and definitions of the core terms hinders the development of a measurement model [42]. Thus, scholars should first put effort into defining digital maturity. We consider this conceptual work the most important step for improving the research field around DMMs as it lays the foundation for subsequent tasks. In particular, definitions form the core of theoretical frameworks [43] that have to be developed to increase the quality of DMM research.

Having discussed required conceptual definitions and theoretical frameworks, we can now take a closer look at the empirical aspects. Most existing DMMs are tested with real data, but the quality of the methods and approaches applied differs widely or cannot be evaluated at all. For example, in most of the analyzed papers, the data collection procedure is not transparently explained. Thus, our first call with regard to empirical aspects is to increase transparency of both sampling and data collection. Furthermore, depending on the focus of the model, it could be beneficial to concentrate on specific data groups such as certain company sizes or certain cultures. The second call concerns the methods applied to analyse the data. In most cases, DMMs can be considered quantitative in nature, which is particularly useful when the goal is, for example, a comparison between different companies. However, a qualitative approach could contribute to the measurement quality of a company’s digital maturity. Qualitative research settings have proven to be especially useful in the process of DMM development [6].

The scope of our suggested research agenda clearly shows that there are a number of significant shortcomings with regard to the measurement validity of existing DMMs. Correspondingly, the overall quality and theoretical value of DMMs is put to the test.

With our research agenda we contribute on a theoretical level by identifying and remedying shortcomings of existing maturity models in the field of IS [5]. On a practical level, we provide managers with a much needed critical evaluation of these popular tools [8]. We acknowledge that due to the practical nature of DMMs, the exhaustiveness of the literature review is questionable. Future research could thus include a systematic literature review of additional practitioners’ outlets and search terms to provide a more comprehensive overview. We hope that this research agenda will inspire researchers to further investigate the topic of DMMs, which will ultimately lead to a higher practical and theoretical value of these useful models.

Contributor Information

Marié Hattingh, Email: marie.hattingh@up.ac.za.

Machdel Matthee, Email: machdel.matthee@up.ac.za.

Hanlie Smuts, Email: hanlie.smuts@up.ac.za.

Ilias Pappas, Email: ilias.pappas@uia.no.

Yogesh K. Dwivedi, Email: ykdwivedi@gmail.com

Matti Mäntymäki, Email: matti.mantymaki@utu.fi.

Tristan Thordsen, Email: tthordsen@escpeurope.eu.

Matthias Murawski, Email: mmurawski@escpeurope.eu.

Markus Bick, Email: mbick@escpeurope.eu.

References

- 1. Remane, G., Hanelt, A., Wiesboeck, F., Kolbe, L.: Digital maturity in traditional industries–an exploratory analysis. In: Proceedings of the 25th European Conference on Information Systems (ECIS) (2017)

- 2. Chanias S, Hess T. How digital are we? Maturity models for the assessment of a company’s status in the digital transformation. Manag. Rep./Institut für Wirtschaftsinformatik und Neue Medien. 2016;2:1–14.

- 3. Mettler T. Thinking in terms of design decisions when developing maturity models. Int. J. Strateg. Decis. Sci. (IJSDS) 2010;1:76–87. doi: 10.4018/jsds.2010100105.

- 4. Kotarba M. Measuring digitalization–key metrics. Found. Manag. 2017;9:123–138. doi: 10.1515/fman-2017-0010.

- 5. Becker J, Knackstedt R, Pöppelbuß J. Developing maturity models for IT management. Bus. Inf. Syst. Eng. 2009;1:213–222. doi: 10.1007/s12599-009-0044-5.

- 6. Berghaus, S., Back, A.: Stages in digital business transformation: results of an empirical maturity study. In: MCIS, p. 22 (2016)

- 7. Proença D, Borbinha J. Maturity models for information systems-a state of the art. Procedia Comput. Sci. 2016;100:1042–1049. doi: 10.1016/j.procs.2016.09.279.

- 8. Becker, J., Niehaves, B., Pöppelbuß, J., Simons, A.: Maturity models in IS research. In: 18th European Conference on Information Systems, ECIS2010‐0320 (2010)

- 9. Kane M, Crooks T, Cohen A. Validating measures of performance. Educ. Meas.: Issues Pract. 1999;18:5–17. doi: 10.1111/j.1745-3992.1999.tb00010.x.

- 10. Bititci US, Garengo P, Ates A, Nudurupati SS. Value of maturity models in performance measurement. Int. J. Prod. Res. 2015;53:3062–3085. doi: 10.1080/00207543.2014.970709.

- 11. Pedrini CN, Frederico GF. Information technology maturity evaluation in a large Brazilian cosmetics industry. Int. J. Bus. Adm. 2018;9:15.

- 12. Poeppelbuss J, Niehaves B, Simons A, Becker J. Maturity models in information systems research: literature search and analysis. CAIS. 2011;29:1–15. doi: 10.17705/1CAIS.02927.

- 13. Rosemann, M., de Bruin, T.: Towards a business process management maturity model. In: ECIS 2005 Proceedings, vol. 37 (2005)

- 14. Lahrmann, G., Marx, F., Winter, R., Wortmann, F.: Business intelligence maturity: development and evaluation of a theoretical model. In: 2011 44th Hawaii International Conference on System Sciences (HICSS), pp. 1–10. IEEE (2011)

- 15. Dooley K, Subra A, Anderson J. Maturity and its impact on new product development project performance. Res. Eng. Des. 2001;13(1):23–29. doi: 10.1007/s001630100003.

- 16. Mettler, T., Blondiau, A.: HCMM-a maturity model for measuring and assessing the quality of cooperation between and within hospitals. In: 2012 25th International Symposium on Computer-Based Medical Systems (CBMS), pp. 1–6. IEEE (2012)

- 17. Carvalho, J.V., Rocha, Á., van de Wetering, R., Abreu, A.: A maturity model for hospital information systems. J. Bus. Res. (2017)

- 18. Solli-Sæther H, Gottschalk P. The modeling process for stage models. J. Organ. Comput. Electron. Commer. 2010;20:279–293. doi: 10.1080/10919392.2010.494535.

- 19. Brühl R. Wie Wissenschaft Wissen schafft – Wissenschaftstheorie für Sozial- und Wirtschaftswissenschaften. Konstanz: UVK Verlagsgesellschaft/Lucius & Lucius; 2015.

- 20. APA, A.: NCME: standards for educational and psychological testing. Washington, DC, American Psychological Association, American Educational Research Association, National Council on Measurement in Education (1985)

- 21. Kane MT. Validation. Educ. Meas. 2006;4:17–64.

- 22. Kane MT. An argument-based approach to validity. Psychol. Bull. 1992;112:527. doi: 10.1037/0033-2909.112.3.527.

- 23. Vom Brocke, J., Simons, A., Niehaves, B., Riemer, K., Plattfaut, R., Cleven, A.: Reconstructing the giant: on the importance of rigour in documenting the literature search process. In: ECIS, vol. 9, pp. 2206–2217 (2009)

- 24. Bandara, W., Miskon, S., Fielt, E.: A systematic, tool-supported method for conducting literature reviews in information systems. In: Proceedings of the 19th European Conference on Information Systems (ECIS 2011) (2011)

- 25. Kitchenham, B., Charters, S.: Guidelines for performing systematic literature reviews in software engineering (2007)

- 26. Lasrado, L.A., Vatrapu, R., Andersen, K.N. (eds.): Maturity models development in IS research: a literature review (2015)

- 27. Friedrich, R., Gröne, F., Koster, A., Le Merle, M.: Measuring industry digitization: Leaders and laggards in the digital economy (2011). https://www.strategyand.pwc.com/report/measuring-industry-digitization-leaders-laggards

- 28. Westerman G, Calméjane C, Bonnet D, Ferraris P, McAfee A. Digital transformation: a roadmap for billion-dollar organizations. MIT Cent. Digital Bus. Capgemini Consult. 2011;1:1–68.

- 29. Westerman G, Tannou M, Bonnet D, Ferraris P, McAfee A. The digital advantage: how digital leaders outperform their peers in every industry. MITSloan Manag. Capgemini Consult. MA. 2012;2:2–23.

- 30. Becker, W., Ulrich, P., Vogt, M.: Digitalisierung im Mittelstand-Ergebnisbericht einer Online-Umfrage. Univ., Lehrstuhl für Betriebswirtschaftslehre, insbes. Unternehmensführung und Controlling (2013)

- 31. Catlin, T., Scanlan, J., Willmott, P.: Raising your digital quotient. McKinsey Q. 1–14 (2015)

- 32. Berger, R.: The digital transformation of industry (2015). www.rolandberger.com/publications/publication_pdf/roland_berger_digital_transformation_of_industry_20150315.pdf

- 33. Arreola González, A., et al.: Digitale Transformation: Wie Informations-und Kommunikationstechnologie etablierte Branchen grundlegend verändert–Der Reifegrad von Automobilindustrie, Maschinenbau und Logistik im internationalen Vergleich. Abschlussbericht des vom Bundesministerium für Wirtschaft und Technologie geförderten Verbundvorhabens „IKT-Wandel“ (Steuerkreis: Grolman von, H., Krcmar, H., Kuhn, K.-J., Picot, A., Schätz, B.) (2016)

- 34. Berghaus, S., Back, A., Kaltenrieder, B.: Digital maturity & transformation report 2016 (2016). https://crosswalk.ch/dmtr2016-delivery

- 35. Gill, M., VanBoskirk, S.: Digital Maturity Model 4.0. Benchmarks: Digital Transformation Playbook (2016)

- 36. Valdez-de-Leon, O.: A digital maturity model for telecommunications service providers. Technol. Innov. Manag. Rev. 6 (2016)

- 37. Lichtblau, K., et al.: Studie: Industrie 4.0 Readiness. http://www.impuls-stiftung.de/documents/3581372/4875835/Industrie+4.0+Readniness+IMPULS+Studie+Oktober+2015.pdf/447a6187-9759-4f25-b186-b0f5eac69974 (2017)

- 38. Colli M, Madsen O, Berger U, Møller C, Wæhrens BV, Bockholt M. Contextualizing the outcome of a maturity assessment for Industry 4.0. IFAC-PapersOnLine. 2018;51:1347–1352. doi: 10.1016/j.ifacol.2018.08.343.

- 39. Deutsche Telekom, A.G.: Digitalisierungsindex Mittelstand 2018. Der digitale Status Quo des deutschen Mittelstands, pp. 2–3 (2018)

- 40. Salviotti, G., Gaur, A., Pennarola, F.: Strategic factors enabling digital maturity: an extended survey (2019)

- 41. Ifenthaler D, Egloffstein M. Development and implementation of a maturity model of digital transformation. TechTrends. 2019;64:1–8. doi: 10.1007/s11528-019-00457-4.

- 42. MacKenzie SB, Podsakoff PM, Podsakoff NP. Construct measurement and validation procedures in MIS and behavioral research: integrating new and existing techniques. MIS Q. 2011;35:293–334. doi: 10.2307/23044045.

- 43. Whetten DA. What constitutes a theoretical contribution? Acad. Manag. Rev. 1989;14:490–495. doi: 10.5465/amr.1989.4308371.