Abstract

Diagnosis and evaluation of early gastric cancer (EGC) using endoscopic images is important; however, it has some limitations. In several studies, the application of convolutional neural networks (CNNs) greatly enhanced the effectiveness of endoscopy. To maximize clinical usefulness, it is important to determine the optimal method of applying CNNs for each organ and disease. A lesion-based CNN is a type of deep learning model designed to learn the entire lesion from endoscopic images. This review describes the application of lesion-based CNN technology in the diagnosis of EGC.

Keywords: Artificial intelligence, Convolutional neural networks, Early gastric cancer, Endoscopy, Invasion depth

INTRODUCTION

Endoscopy has played an important role in gastrointestinal (GI) tract examination because it enables clinicians to directly observe the GI tract. However, the accuracy of its diagnostic results is limited by the experience of the practitioner and complex environmental factors of the GI tract [1]. Therefore, there is an increasing interest in the field of endoscopic imaging regarding a method for improving the accuracy of diagnosis.

Artificial intelligence (AI) based on deep learning is making remarkable progress in various medical fields. Endoscopic imaging is one of the most effective applications of AI-based analytics in the medical field [2,3]. A convolutional neural network (CNN) is a deep learning architecture that is best known for its application in imaging data analysis [4]. Recently, several authors have reported successful application of CNNs to GI-endoscopic image analysis [5-8]. Among various diseases of the GI tract, early gastric cancer (EGC) has certain special characteristics. This review focuses on the application of lesion-based CNN in EGC.

CONVOLUTIONAL NEURAL NETWORK IN ENDOSCOPIC IMAGING

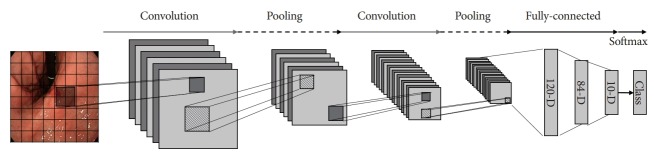

CNN technology is modeled on the way the visual cortex of the human brain recognizes images. A CNN can be divided into components that extract features from images and components that classify the data. The feature extraction part comprises repeated convolutional and pooling layers. A convolutional layer is typically composed of a filter and an activation function. The filter performs a convolution operation to extract features from the input data. As the input image passes through consecutive convolutional layers, the filter responses build on one another, creating increasingly descriptive and sophisticated feature detectors. Once a feature map is extracted, the activation function converts it to non-linear values. Because the growing number of features can lead to overfitting, the pooling layer resizes (downsamples) the feature map to produce a smaller layer. The key features of the image are thus extracted through iterations of the convolutional and pooling layers. Subsequently, classification is performed by the fully connected layers, which are the last layers of the CNN [9]. Fig. 1 shows an example of the classification of GI-endoscopic images by a CNN.
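
The pipeline described in this section (convolution, activation, pooling, then a fully connected classifier) can be illustrated with a minimal sketch. It is not the model used in any of the cited studies: the toy 6x6 "image", the two hand-written filters, the weights, and the two class labels are all hypothetical, and plain Python lists are used so that the example runs without third-party libraries.

```python
import math

def conv2d(image, kernel):
    """Slide one filter over a single-channel image (no padding, stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]

def relu(fmap):
    """Activation function: keep positive responses, set the rest to zero."""
    return [[max(v, 0.0) for v in row] for row in fmap]

def max_pool(fmap, size=2):
    """Downsample by keeping the maximum of each size x size block."""
    h, w = len(fmap) // size, len(fmap[0]) // size
    return [[max(fmap[i * size + a][j * size + b]
                 for a in range(size) for b in range(size))
             for j in range(w)]
            for i in range(h)]

def softmax(scores):
    """Turn raw class scores into probabilities that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def classify(image, kernels, fc_weights, fc_bias):
    """Feature extraction (conv -> ReLU -> pool), then one fully connected layer."""
    features = []
    for kernel in kernels:
        pooled = max_pool(relu(conv2d(image, kernel)))
        features.extend(v for row in pooled for v in row)
    scores = [sum(w * f for w, f in zip(row, features)) + b
              for row, b in zip(fc_weights, fc_bias)]
    return softmax(scores)

# Toy 6x6 image with a bright vertical stripe (hypothetical data).
image = [[1.0 if c in (2, 3) else 0.0 for c in range(6)] for r in range(6)]
vertical_edge = [[-1.0, 0.0, 1.0]] * 3
horizontal_edge = [[-1.0] * 3, [0.0] * 3, [1.0] * 3]
kernels = [vertical_edge, horizontal_edge]

# 6x6 input -> 4x4 after convolution -> 2x2 after pooling: 4 features per filter.
fc_weights = [[0.5] * 8, [-0.5] * 8]  # two hypothetical classes
fc_bias = [0.0, 0.0]
probabilities = classify(image, kernels, fc_weights, fc_bias)
```

In a trained CNN the filters and fully connected weights are learned from data rather than written by hand, and many filters are stacked over several layers; the sketch only shows how the layers hand their outputs to one another.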

Fig. 1. Simple example of a deep learning convolutional neural network using an early gastric cancer detection model.

The CNN was first developed by Fukushima in 1980 [10]. In 1995, Lo et al. reported the detection of lung nodules using a CNN [11]. At the time, however, the approach was largely set aside because of limited computational power. About 17 years later, CNNs overcame these limitations through the improved computational performance brought by graphics processing units and through the application of deep learning methods. Deep learning allows more efficient learning through multiple intermediate layers between the input and output layers. In 2012, Krizhevsky et al. designed a deep CNN (AlexNet, from the SuperVision group) and won the ImageNet Large Scale Visual Recognition Challenge with an overwhelming performance [9]. Since then, numerous authors have studied medical image analysis using deep CNNs [12-14].

Most examinations and diagnoses of GI tract diseases are performed using endoscopy [1,15]. Therefore, accurate analysis of endoscopic images is important, and the application of CNN can be considerably useful. CNN has been applied for the detection and pathological classification of colon polyps [16-19]. Karnes et al. added narrow-band imaging (NBI) to improve the accuracy of polyp detection [20]. Byrne et al. developed a system for real-time assessment of colon polyps in a video format [19]. Additionally, CNN was applied in upper endoscopy to detect gastritis caused by Helicobacter pylori infection [21] and classify the subtypes of Barrett’s esophagus [22]. Moreover, it is currently being applied for the detection and classification of GI tract cancer, including cancer of the esophagus, stomach, and colon [5,6,23].

CHARACTERISTICS OF EARLY GASTRIC CANCER IN ENDOSCOPIC EXAMINATION

The role of endoscopic examination in the diagnosis of EGC includes the detection and characterization of lesions. Awareness of the signs of suspicious lesions is important. However, some EGC lesions can be difficult to detect because they cause only extremely subtle changes on endoscopic examination [24]. Furthermore, EGC detection is complicated by chronic inflammation or intestinal metaplasia of the surrounding mucosa. Recently, some authors reported that image enhancement technology, such as magnifying NBI, improves the ability to identify mucosal abnormalities [25-27]. However, this technology is not yet widely used because it requires additional experience, special equipment, and time.

Accurate detection of EGC requires both knowledge and technical expertise [28]. The endoscopic technique must not leave blind spots during examination, and air insufflation should be adjusted to adequately reveal the lesions. These technical aspects are reflected in the endoscopic image. However, owing to the nature of endoscopic examination, these conditions cannot all be standardized because both the practitioner and the patient’s condition vary. This lack of standardization can be an important limitation for image-based research. Because the images acquired via endoscopy cannot be fully standardized, it is important to select high-quality images that adequately reveal the features of the lesion.

Pre-treatment prediction of the invasion depth (T-staging) has become increasingly important because it is an essential factor in determining the treatment method for EGC [29]. Some authors have reported that conventional endoscopy is comparable to endoscopic ultrasound for predicting the invasion depth of EGC [30]. Abe et al. reported a depth-predicting score for differentiated-type EGC [31]. Tumor size greater than 30 mm, remarkable redness, uneven surface, and margin elevation were associated with deep submucosal cancers [31]. However, this approach can be challenging because these features are extremely subtle in EGC. Therefore, there has been an increasing interest in the field of medical imaging regarding modalities for predicting the depth of EGC.

Gastric cancer shows greater histological heterogeneity than other cancers. Even tumors confined to the mucosa show histologic heterogeneity, which tends to increase with deeper invasion and increased tumor diameter [32]. Such mixed histology has been reported to be associated with more aggressive behavior than other histological types [33,34]. To date, the histological heterogeneity of EGC cannot be accurately predicted without biopsy. Because of this heterogeneity, it is important to identify the entire lesion when evaluating EGC.

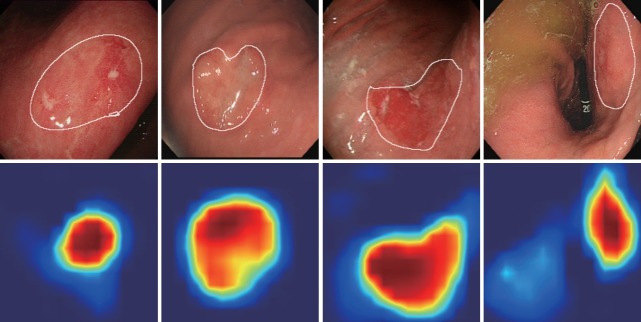

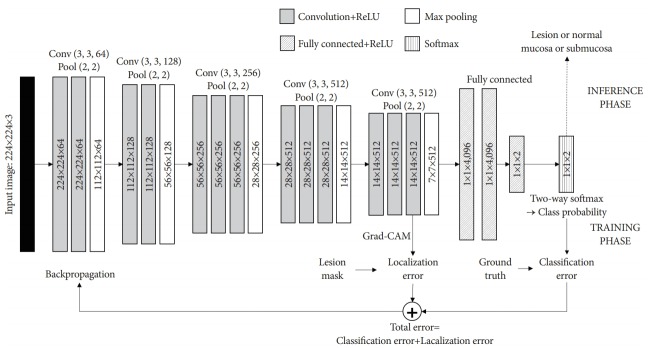

LESION-BASED CONVOLUTIONAL NEURAL NETWORK

Fundamentally, deep neural networks are black boxes, meaning that it is not known how the network produces its output. Therefore, the results are analyzed using statistical methods. For example, various measures, such as sensitivity, specificity, and accuracy, are used to analyze the results and identify problems in the network. However, we can only estimate how the network reaches its judgements, while the forward process of the network remains unclear. This limitation can lead to critical errors in the learning process involving endoscopic images. For example, an AI that is focused only on classification for EGC diagnosis or depth prediction might incorrectly recognize basic normal structures, such as the pyloric channel or esophagogastric junction, as lesions. Therefore, the validity of the process needs to be confirmed instead of merely accepting the classification result of the AI. To design more reliable deep learning systems, there has been an increasing interest in the interpretability of AI. Selvaraju et al. proposed the gradient-weighted class activation mapping (Grad-CAM) approach for producing “visual explanations” for the decisions reached by a CNN [35]. Grad-CAM produces a visual explanation via gradient-based localization in deep learning networks. Neural networks learn by back-propagating gradients; Grad-CAM uses these gradients as weights applied to the feature maps of the layer that needs to be visualized. Applying the Grad-CAM method makes it possible to check whether the network is properly trained and whether the entire lesion is evaluated within the endoscopic image. During the training process, the CNN learns from the localization errors and constructs heatmaps for the regions on which it focuses. The blue and red colors on the Grad-CAM indicate lower and higher activation values, respectively (Fig. 2). Fig. 3 shows an example of the lesion-based CNN algorithm with the Grad-CAM method.
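
The gradient weighting behind Grad-CAM can be sketched in a few lines. Following the general formulation of Selvaraju et al. [35], each feature map of the chosen layer is weighted by the spatial average of the gradient of the class score with respect to that map, the weighted maps are summed, and negative values are discarded. The sketch below is illustrative only: the activations and gradients are made-up numbers rather than outputs of a trained network, and the final scaling to the range 0 to 1 is an assumption made here for display purposes.

```python
def grad_cam(feature_maps, gradients):
    """Return a Grad-CAM heatmap scaled to the range [0, 1].

    feature_maps: K activation maps from the chosen convolutional layer.
    gradients:    K maps holding d(class score)/d(activation), same shapes.
    """
    height, width = len(feature_maps[0]), len(feature_maps[0][0])
    # Channel weight = global average of that channel's gradient map.
    weights = [sum(sum(row) for row in g) / (height * width) for g in gradients]
    # Weighted sum over channels, then ReLU to keep only positive evidence.
    heatmap = [[max(0.0, sum(w * a[i][j] for w, a in zip(weights, feature_maps)))
                for j in range(width)]
               for i in range(height)]
    # Scale so that the strongest activation equals 1 (assumed convention).
    peak = max(max(row) for row in heatmap)
    if peak > 0:
        heatmap = [[v / peak for v in row] for row in heatmap]
    return heatmap

# Hypothetical 2-channel, 2x2 activations and gradients.
feature_maps = [[[1.0, 0.0], [0.0, 2.0]],
                [[0.0, 3.0], [1.0, 0.0]]]
gradients = [[[0.5, 0.5], [0.5, 0.5]],        # channel 0 supports the class
             [[-1.0, -1.0], [-1.0, -1.0]]]    # channel 1 argues against it
heatmap = grad_cam(feature_maps, gradients)
```

Here the heatmap is high only where channel 0 is active, because channel 1 receives a negative weight and its contribution is removed by the ReLU. In practice the heatmap is upsampled to the image size and rendered with a color scale such as the blue-to-red scale described above.
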
The Grad-CAM of the last convolutional layer was used to measure the Grad-CAM loss. Activated regions with high values were more often used as visual features in the following fully connected layers. By applying the Grad-CAM method to EGC, the entire lesion can be evaluated to reduce localization errors and improve performance. Yoon et al. named this process “lesion-based CNN” [36]. They reported that it showed better performance than other CNNs in the detection of EGC and depth prediction. The Grad-CAM loss was added to the existing cross-entropy loss to reduce localization errors. During training, the model was taught to properly detect EGCs while activating the EGC regions by simultaneously optimizing the cross-entropy and Grad-CAM losses. Thus, even with the same type of CNN, the result may vary depending on whether the Grad-CAM method is applied [36]. This system has some limitations. The hand-drawn margin of EGC may be inaccurate because it is not a pixel-by-pixel annotation; therefore, there is a potential for error. Additionally, in some complex cases, even experts find it difficult to accurately determine the margin of EGC through conventional endoscopy. In the future, a larger amount of training data and the application of image enhancement techniques, such as magnifying NBI, should be considered to improve the lesion-based CNN approach.
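
The idea of optimizing a classification loss and a localization loss together can be sketched as follows. The exact form of the Grad-CAM loss in [36] is not given in this review, so the sketch assumes a mean squared difference between a heatmap scaled to the range 0 to 1 and a binary lesion mask derived from the hand-drawn margin. The function names, the `weight` parameter, and all numbers are hypothetical.

```python
import math

def cross_entropy(probabilities, true_class):
    """Classification loss: negative log-probability of the correct class."""
    return -math.log(probabilities[true_class])

def grad_cam_loss(heatmap, lesion_mask):
    """Assumed localization loss: mean squared difference between the
    heatmap (values in [0, 1]) and the binary lesion mask."""
    diffs = [(h - m) ** 2
             for h_row, m_row in zip(heatmap, lesion_mask)
             for h, m in zip(h_row, m_row)]
    return sum(diffs) / len(diffs)

def total_loss(probabilities, true_class, heatmap, lesion_mask, weight=1.0):
    """Sum of both terms; `weight` is a hypothetical balancing factor."""
    return (cross_entropy(probabilities, true_class)
            + weight * grad_cam_loss(heatmap, lesion_mask))

# Two predictions with the same class probabilities but different focus.
probabilities = [0.8, 0.2]             # index 0 = hypothetical "EGC" class
lesion_mask = [[1.0, 0.0], [0.0, 1.0]]
on_lesion = [[0.9, 0.1], [0.0, 1.0]]   # heatmap overlaps the marked lesion
off_lesion = [[0.0, 1.0], [1.0, 0.0]]  # heatmap highlights the wrong region

loss_on = total_loss(probabilities, 0, on_lesion, lesion_mask)
loss_off = total_loss(probabilities, 0, off_lesion, lesion_mask)
```

Under these assumptions, both predictions have the same cross-entropy, but the one whose heatmap lies off the lesion is penalized more, which is the behavior the combined loss is meant to encourage during training.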

Fig. 2. Examples of gradient-weighted class activation mapping output extracted from each convolutional layer of the trained lesion-based convolutional neural network. The white lines on the first row indicate the actual early gastric cancer regions. The images on the second row represent the activated map extracted from the last convolutional layer of the network.

Fig. 3. Example of lesion-based convolutional neural network algorithm with gradient-weighted class activation mapping method. Grad-CAM, gradient-weighted class activation mapping.

CONCLUSIONS

CNN is an effective method to improve endoscopic image evaluation, and its application can significantly enhance the effectiveness of endoscopy. To maximize clinical usefulness, it is important to determine the optimal method of CNN application. Lesion-based CNN using Grad-CAM is one of the most effective AI-based methods for EGC diagnosis. However, the application of AI to endoscopic images should not stop at the level where its image classification performance is superior to that of humans; achieving optical biopsy should be the final objective of this approach. This can be achieved by improving the performance based on the characteristics of each organ and disease.

Footnotes

Conflicts of Interest: The authors have no financial conflicts of interest.

REFERENCES

- 1. Take I, Shi Q, Zhong Y-S. Progress with each passing day: role of endoscopy in early gastric cancer. Transl Gastrointest Cancer. 2015;4:423–428.

- 2. Zhu R, Zhang R, Xue D. Lesion detection of endoscopy images based on convolutional neural network features. In: 2015 8th International Congress on Image and Signal Processing (CISP); 2015 Oct 14-16; Shenyang, China. Piscataway (NJ). 2015. pp. 372–376.

- 3. Du W, Rao N, Liu D, et al. Review on the applications of deep learning in the analysis of gastrointestinal endoscopy images. IEEE Access. 2019;7:142053–142069.

- 4. Lecun Y, Bottou L, Bengio Y, Haffner P. Gradient-based learning applied to document recognition. Proceedings of the IEEE. 1998;86:2278–2324.

- 5. Hirasawa T, Aoyama K, Tanimoto T, et al. Application of artificial intelligence using a convolutional neural network for detecting gastric cancer in endoscopic images. Gastric Cancer. 2018;21:653–660. doi: 10.1007/s10120-018-0793-2.

- 6. Horie Y, Yoshio T, Aoyama K, et al. Diagnostic outcomes of esophageal cancer by artificial intelligence using convolutional neural networks. Gastrointest Endosc. 2019;89:25–32. doi: 10.1016/j.gie.2018.07.037.

- 7. Urban G, Tripathi P, Alkayali T, et al. Deep learning localizes and identifies polyps in real time with 96% accuracy in screening colonoscopy. Gastroenterology. 2018;155:1069–1078.e8. doi: 10.1053/j.gastro.2018.06.037.

- 8. Zhu Y, Wang QC, Xu MD, et al. Application of convolutional neural network in the diagnosis of the invasion depth of gastric cancer based on conventional endoscopy. Gastrointest Endosc. 2019;89:806–815.e1. doi: 10.1016/j.gie.2018.11.011.

- 9. Krizhevsky A, Sutskever I, Hinton GE, et al. Imagenet classification with deep convolutional neural networks. Advances in Neural Information Processing Systems. 2012:1097–1105.

- 10. Fukushima K. Neocognitron: a self organizing neural network model for a mechanism of pattern recognition unaffected by shift in position. Biol Cybern. 1980;36:193–202. doi: 10.1007/BF00344251.

- 11. Lo SB, Lou SA, Lin JS, Freedman MT, Chien MV, Mun SK. Artificial convolution neural network techniques and applications for lung nodule detection. IEEE Trans Med Imaging. 1995;14:711–718. doi: 10.1109/42.476112.

- 12. Han SS, Park GH, Lim W, et al. Deep neural networks show an equivalent and often superior performance to dermatologists in onychomycosis diagnosis: automatic construction of onychomycosis datasets by region-based convolutional deep neural network. PLoS One. 2018;13:e0191493. doi: 10.1371/journal.pone.0191493.

- 13. Larson DB, Chen MC, Lungren MP, Halabi SS, Stence NV, Langlotz CP. Performance of a deep-learning neural network model in assessing skeletal maturity on pediatric hand radiographs. Radiology. 2018;287:313–322. doi: 10.1148/radiol.2017170236.

- 14. Rajpurkar P, Irvin J, Zhu K, et al. CheXNet: radiologist-level pneumonia detection on chest x-rays with deep learning [Internet]. c2017. Available from: https://arxiv.org/abs/1711.05225.

- 15. Mannath J, Ragunath K. Role of endoscopy in early oesophageal cancer. Nat Rev Gastroenterol Hepatol. 2016;13:720–730. doi: 10.1038/nrgastro.2016.148.

- 16. Komeda Y, Handa H, Watanabe T, et al. Computer-aided diagnosis based on convolutional neural network system for colorectal polyp classification: preliminary experience. Oncology. 2017;93(Suppl 1):30–34. doi: 10.1159/000481227.

- 17. Zhang R, Zheng Y, Mak TW, et al. Automatic detection and classification of colorectal polyps by transferring low-level CNN features from nonmedical domain. IEEE J Biomed Health Inform. 2017;21:41–47. doi: 10.1109/JBHI.2016.2635662.

- 18. Lequan Y, Hao C, Qi D, Jing Q, Pheng Ann H. Integrating online and offline three-dimensional deep learning for automated polyp detection in colonoscopy videos. IEEE J Biomed Health Inform. 2017;21:65–75. doi: 10.1109/JBHI.2016.2637004.

- 19. Byrne MF, Chapados N, Soudan F, et al. Real-time differentiation of adenomatous and hyperplastic diminutive colorectal polyps during analysis of unaltered videos of standard colonoscopy using a deep learning model. Gut. 2019;68:94–100. doi: 10.1136/gutjnl-2017-314547.

- 20. Karnes WE, Alkayali T, Mittal M, et al. Automated polyp detection using deep learning: leveling the field. Gastrointest Endosc. 2017;85(5 Suppl):AB376–AB377.

- 21. Shichijo S, Nomura S, Aoyama K, et al. Application of convolutional neural networks in the diagnosis of Helicobacter pylori infection based on endoscopic images. EBioMedicine. 2017;25:106–111. doi: 10.1016/j.ebiom.2017.10.014.

- 22. Hong J, Park B, Park H. Convolutional neural network classifier for distinguishing Barrett’s esophagus and neoplasia endomicroscopy images. In: 2017 39th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC); 2017 Jul 11-15; Seogwipo, Korea. Piscataway (NJ). 2017. pp. 2892–2895.

- 23. Ito N, Kawahira H, Nakashima H, Uesato M, Miyauchi H, Matsubara H. Endoscopic diagnostic support system for cT1b colorectal cancer using deep learning. Oncology. 2019;96:44–50. doi: 10.1159/000491636.

- 24. Gotoda T. Macroscopic feature of “Gastritis-like cancer” with little malignant appearance in early gastric cancer. Stomach and Intestine. 1999;34:1495–1504.

- 25. Chiu PWY, Uedo N, Singh R, et al. An Asian consensus on standards of diagnostic upper endoscopy for neoplasia. Gut. 2019;68:186–197. doi: 10.1136/gutjnl-2018-317111.

- 26. Yao K, Doyama H, Gotoda T, et al. Diagnostic performance and limitations of magnifying narrow-band imaging in screening endoscopy of early gastric cancer: a prospective multicenter feasibility study. Gastric Cancer. 2014;17:669–679. doi: 10.1007/s10120-013-0332-0.

- 27. Fujiwara S, Yao K, Nagahama T, et al. Can we accurately diagnose minute gastric cancers (≤5 mm)? Chromoendoscopy (CE) vs magnifying endoscopy with narrow band imaging (M-NBI). Gastric Cancer. 2015;18:590–596. doi: 10.1007/s10120-014-0399-2.

- 28. Yao K. The endoscopic diagnosis of early gastric cancer. Ann Gastroenterol. 2013;26:11–22.

- 29. Goto O, Fujishiro M, Kodashima S, Ono S, Omata M. Outcomes of endoscopic submucosal dissection for early gastric cancer with special reference to validation for curability criteria. Endoscopy. 2009;41:118–122. doi: 10.1055/s-0028-1119452.

- 30. Choi J, Kim SG, Im JP, Kim JS, Jung HC, Song IS. Comparison of endoscopic ultrasonography and conventional endoscopy for prediction of depth of tumor invasion in early gastric cancer. Endoscopy. 2010;42:705–713. doi: 10.1055/s-0030-1255617.

- 31. Abe S, Oda I, Shimazu T, et al. Depth-predicting score for differentiated early gastric cancer. Gastric Cancer. 2011;14:35–40. doi: 10.1007/s10120-011-0002-z.

- 32. Luinetti O, Fiocca R, Villani L, Alberizzi P, Ranzani GN, Solcia E. Genetic pattern, histological structure, and cellular phenotype in early and advanced gastric cancers: evidence for structure-related genetic subsets and for loss of glandular structure during progression of some tumors. Hum Pathol. 1998;29:702–709. doi: 10.1016/s0046-8177(98)90279-9.

- 33. Yoon HJ, Kim YH, Kim JH, et al. Are new criteria for mixed histology necessary for endoscopic resection in early gastric cancer? Pathol Res Pract. 2016;212:410–414. doi: 10.1016/j.prp.2016.02.013.

- 34. Hanaoka N, Tanabe S, Mikami T, Okayasu I, Saigenji K. Mixed-histologic-type submucosal invasive gastric cancer as a risk factor for lymph node metastasis: feasibility of endoscopic submucosal dissection. Endoscopy. 2009;41:427–432. doi: 10.1055/s-0029-1214495.

- 35. Selvaraju RR, Cogswell M, Das A, Vedantam R, Parikh D, Batra D. Grad-CAM: visual explanations from deep networks via gradient-based localization. In: 2017 IEEE International Conference on Computer Vision (ICCV); 2017 Oct 22-29; Venice, Italy. Piscataway (NJ). 2017. pp. 618–626.

- 36. Yoon HJ, Kim S, Kim JH, et al. A lesion-based convolutional neural network improves endoscopic detection and depth prediction of early gastric cancer. J Clin Med. 2019;8:E1310. doi: 10.3390/jcm8091310.