Abstract

Quality improvement collaboratives (QICs) have long been used to facilitate group learning and implementation of evidence-based interventions (EBIs) in healthcare. However, few studies systematically describe implementation strategies linked to QIC success. To address this gap, we evaluated a QIC on colorectal cancer (CRC) screening in Federally Qualified Health Centers (FQHCs) by aligning standardized implementation strategies with collaborative activities and measuring implementation and effectiveness outcomes. In 2018, the American Cancer Society and North Carolina Community Health Center Association provided funding, in-person/virtual training, facilitation, and audit and feedback with the goal of building FQHC capacity to enact selected implementation strategies. The QIC evaluation plan included a pre-test/post-test single group design and mixed methods data collection. We assessed: 1) adoption, 2) engagement, 3) implementation of QI tools and CRC screening EBIs, and 4) changes in CRC screening rates. A post-collaborative focus group captured participants’ perceptions of implementation strategies. Twenty-three percent of North Carolina FQHCs (9/40) participated in the collaborative. Health Center engagement was high although individual participation decreased over time. Teams completed all four QIC tools: aim statements, process maps, gap and root cause analysis, and Plan-Do-Study-Act cycles. FQHCs increased their uptake of evidence-based CRC screening interventions and rates increased 8.0% between 2017 and 2018. Focus group findings provided insights into participants’ opinions regarding the feasibility and appropriateness of the implementation strategies and how they influenced outcomes. Results support the collaborative’s positive impact on FQHC capacity to implement QI tools and EBIs to improve CRC screening rates.

Keywords: Quality improvement, Colorectal neoplasms, Early detection of cancer, Capacity building, Implementation science, Community health centers

1. Background

Quality improvement collaboratives (QICs) emerged in the mid-1990s as a promising approach to facilitate group learning and systems change across a range of healthcare settings and topics. The Institute for Healthcare Improvement (IHI) “Breakthrough Series” model (The Breakthrough Series: IHI’s Collaborative Model for Achieving Breakthrough Improvement. Boston, MA, 2003) is the most widely used collaborative approach, and has been replicated with thousands of healthcare teams and organizations (Institute for Healthcare Improvement, n.d.). Federally Qualified Health Centers (FQHCs) have a long history of participation in collaboratives, most notably the Health Resources and Services Administration (HRSA)-funded Health Disparities Collaboratives for improving the quality of chronic care and reducing disparities. As the popularity of QICs rapidly increased, a call for a stronger evidence-base was issued in 2004 given the lack of rigorous study designs demonstrating positive effects on patient outcomes (Mittman, 2004).

Fifteen years later, randomized controlled trials of QICs are still rare, but systematic reviews provide evidence that QICs achieve positive, although limited and variable, improvements in processes of care and clinical outcomes (Chin, 2010; Schouten et al., 2008; Wells et al., 2018). Schouten and colleagues reviewed nine controlled studies: two had little to no effect, five had mixed effects, and two had positive effects on outcomes (Schouten et al., 2008). Chin reviewed experimental, quasi-experimental, and descriptive studies that evaluated the HRSA Health Disparities Collaboratives, and found that some had a positive impact on processes of care and clinical outcomes whereas others only improved processes of care (Chin, 2010). Wells and colleagues conducted a systematic review of 64 studies of QICs that varied in settings, topics, and populations; 83% reported improvements in primary outcome measures (Wells et al., 2018). Although these findings are encouraging, the authors noted that many studies provided insufficient description of the collaborative, its activities, and levels of engagement (Wells et al., 2018). The variability across QICs has been well documented (Schouten et al., 2008; Wells et al., 2018; Strating et al., 2011), creating an imperative for detailed descriptions of collaborative components so that reasons for variation can be studied and effective models replicated. As Nadeem and colleagues note, collaboratives provide an opportunity to intervene on multi-level factors that are known to influence outcomes, such as characteristics of the intervention, fit with providers and the organization, leadership, culture and climate, and alignment with the policy environment (Nadeem et al., 2016). Consequently, QICs in FQHCs can move beyond addressing diseases or conditions by increasing overall capacity to address population health (Chambers, 2018).

Implementation science, specifically the widely used compilation of implementation strategies published by Powell et al. (2015) and implementation outcomes published by Proctor et al. (2011), can support a more systematic exploration of how QICs lead to positive outcomes. The purpose of this paper is to explain how we applied an implementation science lens to our QIC evaluation by: 1) aligning collaborative and FQHC activities with implementation strategies from Powell’s taxonomy (Powell et al., 2015), and 2) measuring implementation and service outcomes as defined by Proctor (Proctor et al., 2011). Our goal is to describe the strategies used by the collaborative and explore the mechanisms through which the collaborative led to improvements in colorectal cancer (CRC) screening rates. The results will accelerate our understanding and application of effective QICs for not only cancer screening, but also broader prevention activities such as healthy eating and physical activity, thus improving quality of care, health outcomes, and population health.

2. Conceptual framework

In 2018, the American Cancer Society® (ACS) partnered with the North Carolina Community Health Center Association (NCCHCA) to sponsor a CRC screening QIC and invited UNC Chapel Hill, part of the Cancer Prevention and Control Research Network (CPCRN, www.cpcrn.org), to serve as the external evaluator. Fig. 1 provides an overview of the conceptual framework guiding the evaluation, with the implementation strategies informed by Powell (Powell et al., 2015) and the implementation and service outcomes informed by Proctor (Proctor et al., 2011).

Fig. 1.

Conceptual framework for the quality improvement collaborative.

The framework describes the implementation strategies employed at two levels: the level of the facilitation team (ACS and NCCHCA staff) that led the collaborative and the level of the implementation team (FQHC team members) that participated in the collaborative. The model also specifies the implementation, service, and population outcomes that the strategies were intended to achieve.

We applied terms from Powell’s taxonomy (Powell et al., 2015) to each of the implementation strategies listed in the conceptual framework and refer to them in the descriptions below (in italics).

2.1. Implementation strategies: collaborative facilitation team

The NCCHCA recruited 13 FQHCs to apply for the collaborative and selected nine to participate. They obtained formal commitments from the leadership of each FQHC in the form of a written agreement. ACS provided funding ($20,000 total) to cover transportation and lodging for FQHC staff to participate in the collaborative. Then, ACS staff conducted training for FQHC staff during two in-person collaborative meetings and monthly virtual meetings, with a focus on using quality improvement (QI) tools to implement CRC screening evidence-based interventions (EBIs). The QI tools were adapted from materials developed by the Institute for Healthcare Improvement, HRSA, and the Centers for Medicare and Medicaid Services (CMS) and included aim statements, current and future state process maps, root cause and gap analysis, and Plan-Do-Study-Act (PDSA) cycle templates (see Table 1). During the monthly virtual meetings, ACS shared graphs of each FQHC’s screening rates and facilitated discussions on barriers and facilitators (i.e., audit and provide feedback). ACS staff delivered phone and in-person coaching (i.e., provided facilitation) for each FQHC.

Table 1.

Quality improvement tools and activities^a.

| Tool | Activity |
|---|---|
| Aim statements | |
| Current and future state process maps | |
| Gap and root cause analysis | |
| Plan-Do-Study-Act (PDSA) cycles | |

a Templates available at http://www.ihi.org/resources/Pages/Tools/Quality-Improvement-Essentials-Toolkit.aspx.

2.2. Implementation strategies: implementation team

As depicted in Fig. 1, the collaborative’s strategies were intended to build FQHC teams’ capacity to select and apply implementation strategies. Each FQHC convened an implementation team of three members to participate in the collaborative. ACS recommended QI staff, but centers ultimately determined the composition of their teams. Teams used QI tools to identify barriers and facilitators to CRC screening and to implement Plan-Do-Study-Act (PDSA) cycles (i.e., conduct cyclical small tests of change). The implementation teams also selected CRC screening EBIs on which to focus.

2.3. Implementation outcomes

To have an impact, the collaborative needed to achieve several implementation outcomes: FQHC leadership adoption of the collaborative, FQHC team engagement in collaborative activities, FQHC system implementation of CRC screening EBIs, and implementation of QI tools and their spread to other types of screening and health conditions.

2.4. System and population outcomes

The goal of the collaborative was to improve CRC screening rates and thereby reduce colorectal cancer morbidity and mortality, with a particular focus on reducing disparities.

3. Methods

3.1. Evaluation design

We used a pre-test/post-test single group design and mixed methods data collection. Sources of data included self-administered questionnaires and inventories using PDF forms and SurveyMonkey®, copies of completed QI tools, Poll Everywhere questions during monthly virtual meetings, monthly and annual screening rates from FQHCs’ electronic health records, and published data from HRSA’s Uniform Data System (UDS). All data collected by ACS and the NCCHCA were deidentified and sent to the UNC evaluation team for analysis. The UNC evaluators also conducted a post-collaborative focus group to capture contextual data. The evaluation plan was reviewed by the University of North Carolina Institutional Review Board and determined to be Not Human Subjects Research.

3.2. Implementation outcome measures

The evaluation assessed the collaborative’s impact on implementation outcomes. Table 2 provides an overview of the implementation outcomes, how they align with those described in Proctor’s taxonomy, and how they were defined and measured.

Table 2.

Implementation outcomes.

| Proctor et al.’s implementation outcomes (Proctor et al., 2011) | Collaborative implementation outcomes | Definition | Measurement |
|---|---|---|---|
| Adoption - setting | Adoption | FQHCs that signed the participant agreement (n = 9) | Center demographics from the Uniform Data System (Health Resources and Services Administration Health Center Program: 2018. Health Center Data, n.d.-b) |
| Adoption - provider | Engagement | FQHC and individual attendance at in-person collaborative meetings and monthly calls | LobbyGuard software and Poll Everywhere item |
| Implementation fidelity | Implementation of QI tools | QI tools that were filled out and acted upon by FQHC staff during the collaborative | Log of completed QI tools |
| Penetration | Spread of QI tools | Tools that were applied to other screening/conditions or other populations | Baseline and follow-up inventory and post-collaborative focus group |
| Intervention fidelity | Implementation of CRC screening EBIs | Implementation of interventions recommended by the Guide to Community Preventive Services | Baseline and follow-up inventory |
| Effectiveness | CRC screening rates | Percentage of adults 50–75 years of age who had appropriate screening for colorectal cancer | The 2016, 2017, 2018 Uniform Data System (Health Resources and Services Administration Health Center Program: 2018. Health Center Data, n.d.-b) |
| Appropriateness | Influence of implementation strategies on CRC screening rates | Implementation staff perceptions of how collaborative strategies led to systems change and increase in screening rates | Post-collaborative focus group |

3.2.1. Adoption

Data were collected on the nine FQHC systems that agreed to participate in the collaborative. Descriptors of the FQHCs (including all clinical sites) and their patient populations were reported by the participating centers or extracted from the UDS 2017 Health Center Program Awardee Data (Health Resources and Services Administration Health Center Program: 2017 Health Center Data, n.d.-a).

3.2.2. Engagement

Data were collected on FQHC staff participation in collaborative activities. LobbyGuard Visitor Management Software was used to track in-person meeting attendance. For the monthly virtual meetings, attendance was monitored through a Poll Everywhere question.

3.2.3. Implementation and spread of QI tools

ACS staff maintained a log of completed QI tools from each FQHC. Spread of the QI tools was assessed via the online inventory described below and through the post-collaborative focus group.

3.2.4. Implementation of CRC screening EBIs

The ACS administered baseline and follow-up inventories that assessed each FQHC’s Fecal Immunochemical Test (FIT) kit and colonoscopy capacity (e.g., “Are you currently experiencing any problems with colonoscopy capacity?”), use of CRC screening EBIs (e.g., reminder systems, patient education), and implementation of QI tools (e.g., “What QI Process tools did you use for colorectal cancer screening improvement after the collaborative?”). The CRC screening EBIs included in the inventory were those recommended by the Guide to Community Preventive Services (Community Preventive Services Task Force, n.d.). The electronic 39-item baseline inventory was completed between January and February 2018, and the follow-up 45-item inventory was completed between February and March 2019.

3.2.5. Influence of implementation strategies on outcomes

Thirteen participants representing seven FQHCs attended an in-person 90-minute focus group in February 2019, at the end of the collaborative. A structured focus group guide was used to inquire about participants’ perceptions of how implementation strategies led to improvements in CRC screening, and how the collaborative model could be improved.

3.3. System outcome measures

3.3.1. Changes in colorectal cancer screening rates

For the participating FQHCs, annual CRC screening rates for 2016, 2017, and 2018 are from the UDS (Health Resources and Services Administration Health Center Program: 2018. Health Center Data, n.d.-b). The SurveyMonkey® questionnaire captured monthly rates on a rolling year schedule.

3.4. Analysis plan

We used a mixed methods approach for data collection and analysis. Descriptive statistics were calculated for adoption, engagement, implementation of QI tools and CRC screening EBIs. In the baseline and follow-up inventories, qualitative data from open-ended responses were used to provide context for the quantitative findings. The focus group discussion was recorded, transcribed, and coded by two independent coders, using a content analysis approach (Hsieh and Shannon, 2005). The two reviewers met to reconcile any discrepancies in coding and to summarize findings into themes. Content analysis was guided by elements of the evaluation model with the goal of exploring participants’ perceptions of implementation strategies and the relationship between those strategies and implementation outcomes. The identified themes were reviewed with members of the facilitation team. To assess changes in screening rates before the collaborative (2016–2017) and during the collaborative (2017–2018), we calculated weighted averages and compared the percent change between the two time periods.
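The weighted-average step can be illustrated with a minimal sketch. It assumes that each center's rate is weighted by its number of patients aged 50–74, which is not stated explicitly in the analysis plan, and it uses the 2017 values reported in Table 3 for the eight centers included in the rate analysis. The function names are hypothetical. Under these assumptions the result rounds to 32.7%, consistent with the weighted 2017 rate cited in the Discussion.

```python
# Illustrative sketch only. The weighting variable (patients aged 50-74) is an
# assumption; the text says only that weighted averages were calculated.

# 2017 values from Table 3: center -> (% screened for CRC, patients aged 50-74).
# FQHC "I" is omitted because it was excluded from the screening-rate analysis.
RATES_2017 = {
    "A": (41.3, 1280),
    "B": (35.6, 14924),
    "C": (19.8, 2419),
    "D": (12.8, 2921),
    "E": (25.9, 854),
    "F": (45.0, 5582),
    "G": (25.7, 1539),
    "H": (17.1, 1186),
}


def weighted_rate(centers):
    """Return the patient-weighted mean screening rate, in percent."""
    total_patients = sum(n for _, n in centers.values())
    return sum(rate * n for rate, n in centers.values()) / total_patients


def point_change(rate_before, rate_after):
    """Absolute change between two rates, in percentage points."""
    return rate_after - rate_before


def relative_change(rate_before, rate_after):
    """Change expressed as a percent of the earlier rate."""
    return 100.0 * (rate_after - rate_before) / rate_before


if __name__ == "__main__":
    print(round(weighted_rate(RATES_2017), 1))  # 32.7
```

Both a percentage-point helper and a relative-change helper are included because either convention could be applied when comparing the 2016–2017 and 2017–2018 periods.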

4. Results

4.1. Sample

Table 3 displays the characteristics of the nine participating FQHCs, which had between one and six clinical sites except for one large center with 23 clinical sites. In 2017, the number of patients per center between the ages of 50–74 ranged from 633 to 14,924. A large proportion of the FQHCs’ patients were racial minorities (mean = 65.6%), of Hispanic ethnicity (mean = 24.7%), or uninsured (mean = 36.7%). Each FQHC was asked to create a three-member implementation team; members included quality improvement staff, clinical administrators, and providers (physicians, mid-levels, nurses). Among those who completed the baseline inventory (n = 22), half had a low level of experience with quality improvement activities and a third had worked in an FQHC setting for less than one year.

Table 3.

Organizational characteristics of participating FQHCs (N = 9), 2017.

| FQHC | # of clinical sites | % of patients screened for CRC^a | # of patients ages 50–74^a | % racial minority^a,b | % Hispanic ethnicity^a,b | % uninsured^a,c |
|---|---|---|---|---|---|---|
| A | 2 | 41.3 | 1280 | 46.9 | 3.2 | 9.3 |
| B | 23 | 35.6 | 14,924 | 51.9 | 20.5 | 33.9 |
| C | 1 | 19.8 | 2419 | 82.4 | 39.3 | 54.5 |
| D | 5 | 12.8 | 2921 | 86.2 | 5.6 | 25.1 |
| E | 2 | 25.9 | 854 | 29.0 | 15.6 | 30.3 |
| F | 5 | 45.0 | 5582 | 76.7 | 50.5 | 48.6 |
| G | 6 | 25.7 | 1539 | 92.5 | 26.1 | 9.1 |
| H | 3 | 17.1 | 1186 | 83.5 | 29.5 | 74.7 |
| I | 1 | 32.9 | 633 | 40.9 | 32.4 | 44.5 |

a Data are from the 2017 UDS.

b Race and ethnicity are for the whole patient population.

c Insurance status is for patients 18 and over.

4.2. Implementation outcomes

4.2.1. Adoption

North Carolina had 40 FQHCs in 2018, 13 (32.5%) of which applied to participate in the QIC. Out of the 13, the ACS and the NCCHCA selected nine centers with the lowest screening rates and the capacity to fully engage in the QIC. Capacity was informally assessed based on the FQHCs’ successful participation in prior ACS and NCCHCA initiatives, verbal commitment to the QIC by center leadership, and priority the FQHC gave to CRC screening compared to other UDS clinical care measures.

4.2.2. Engagement

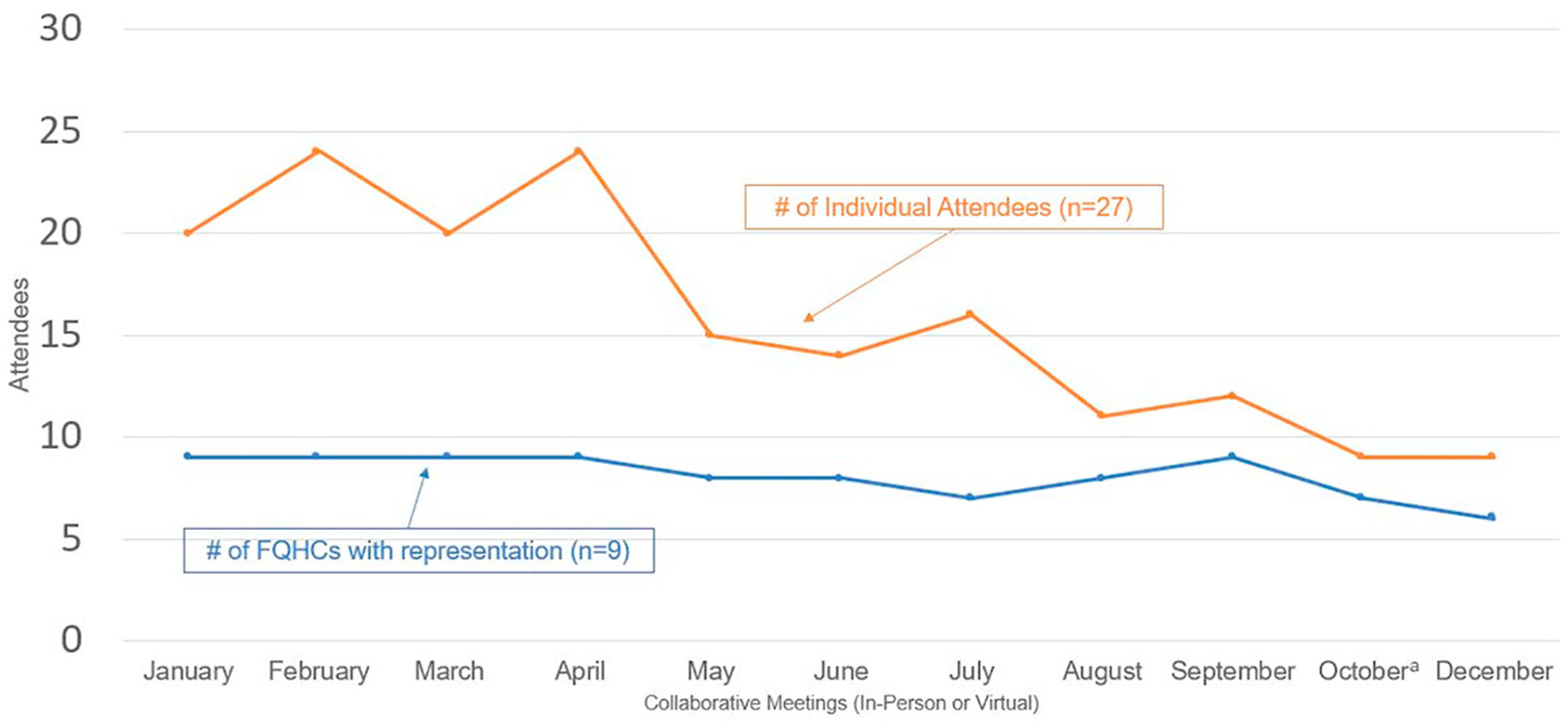

Fig. 2 displays FQHC representation and individual attendance for the two in-person collaborative trainings and the monthly virtual meetings. All FQHCs sent team members to both in-person meetings, with the proportion of team members attending ranging from 100% (27/27) at the first meeting to 89% (24/27) at the second meeting. FQHC representation at the virtual meetings was 100% (9/9) for the first four months, then dropped to 67% (6/9) by the end of the collaborative. Individual team member attendance at the virtual meetings peaked at 74% (20/27) for the first four months, then decreased steadily over time with a low of 41% (11/27) in October and December.

Fig. 2.

Quality improvement collaborative attendance, 2018.

a No call was held in November.

4.2.3. Implementation of QI tools

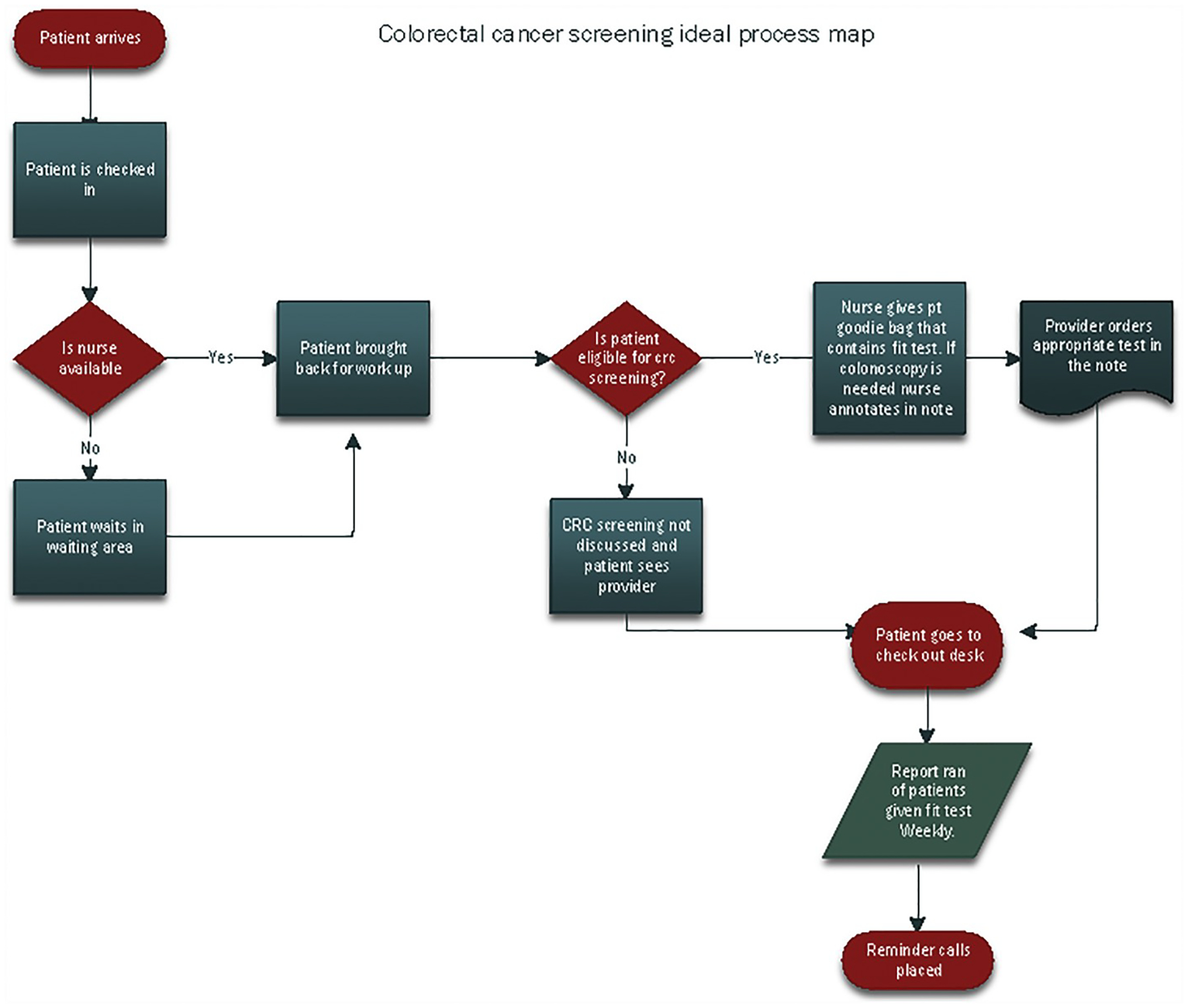

Most FQHC teams completed all the QI tools. All nine teams completed and submitted aim statements, eight of nine teams submitted their current state process map, and all nine submitted their future state process map. Eight of nine teams completed their gap and root cause analysis, and seven of nine submitted PDSA cycle worksheets. FQHC teams took different approaches to the process maps. For example, some FQHC teams completed a map for each clinical site while others completed one for the entire FQHC system. Some sites completed a map for one process while others completed maps for multiple processes (see Appendices A and B for sample process maps).

4.2.4. Implementation of CRC screening EBIs

As measured by the baseline and follow-up inventory, barriers to FIT-based CRC screening were reduced during the collaborative. Before the collaborative, two FQHC teams documented problems with limited testing supplies and patients not returning their stool cards. On the follow-up inventory, no sites reported problems with FIT kit capacity and all nine clinics were performing FIT kit tracking, compared to six at baseline. Problems with helping patients obtain colonoscopies, however, did not change over time. Three FQHC teams reported the exact same barriers on the inventory both pre- and post-collaborative, which included patients’ lack of insurance or inability to afford the procedure, too few providers who offer free or reduced-fee colonoscopies, and delays in processing paperwork for charity care. Table 4 displays the change in CRC screening EBIs that FQHCs were implementing before and after the collaborative. Standing orders for CRC screening were implemented by one additional FQHC. Patient reminders for screening increased for phone and mail, but not for text and patient portal. Provider prompts increased in the EHR, but not paper reminders. Reminders through huddles or nurse review decreased. Provider assessment and feedback increased, but not patient education.

Table 4.

CRC screening EBIs implemented by FQHCs (N = 9).

| EBI | Type | Yes (Pre) | Yes (Post) | Difference^a |
|---|---|---|---|---|
| CRC Screening Standing Orders | | 5 | 6 | 1 |
| CRC Screening Reminders for Patients | Live Phone | 3 | 5 | 2 |
| | Automated Phone | 1 | 2 | 1 |
| | Mail | 4 | 7 | 3 |
| | Text Message | 1 | 1 | 0 |
| | Patient Portal | 1 | 1 | 0 |
| Provider Prompts and Type | Provider Prompt | 5 | 6 | 1 |
| | Prompts in EHR | 1 | 4 | 3 |
| | Paper reminders in chart | 0 | 0 | 0 |
| | Review scheduled patients in huddle | 3 | 1 | −2 |
| | Nurse reviews screenings and alerts provider | 1 | 0 | −1 |
| Provider Assessment and Feedback | EHR can provide reports of those due for screening, screening rates, and screening modality | 4 | 7 | 3 |
| Patient education on colorectal cancer screening options | One-on-One | 9 | 9 | 0 |

a Green = increase, yellow = no change, red = decrease.
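As a quick arithmetic check, the pre/post counts in Table 4 can be tallied by direction of change. The sketch below is illustrative only: the row labels are shortened, the “mail” label for the third reminder row is inferred from the accompanying text, and the function name is hypothetical.

```python
# Pre/post counts of FQHCs (N = 9) using each EBI, transcribed from Table 4.
# Labels are shortened; "mail" is an inferred label (see the text of 4.2.4).
TABLE_4 = [
    ("Standing orders", 5, 6),
    ("Reminder: live phone", 3, 5),
    ("Reminder: automated phone", 1, 2),
    ("Reminder: mail", 4, 7),
    ("Reminder: text message", 1, 1),
    ("Reminder: patient portal", 1, 1),
    ("Provider prompt", 5, 6),
    ("Prompts in EHR", 1, 4),
    ("Paper reminders in chart", 0, 0),
    ("Review scheduled patients in huddle", 3, 1),
    ("Nurse reviews screenings and alerts provider", 1, 0),
    ("Provider assessment and feedback", 4, 7),
    ("One-on-one patient education", 9, 9),
]


def tally_changes(rows):
    """Count how many EBIs increased, stayed the same, or decreased."""
    counts = {"increase": 0, "no change": 0, "decrease": 0}
    for _, pre, post in rows:
        diff = post - pre
        if diff > 0:
            counts["increase"] += 1
        elif diff < 0:
            counts["decrease"] += 1
        else:
            counts["no change"] += 1
    return counts


if __name__ == "__main__":
    print(tally_changes(TABLE_4))
    # {'increase': 7, 'no change': 4, 'decrease': 2}
```

Seven of the 13 listed EBIs show an increase, four show no change, and two show a decrease.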

4.2.5. Spread

In the follow-up inventory, participants reported applying the QI tools to diabetes, vaccinations for HPV and pneumonia, and workflows such as scheduling and no-shows.

4.2.6. Influence of implementation strategies on outcomes

Table 5 summarizes qualitative themes related to each of the implementation strategies, with illustrative quotations. Obtaining a formal commitment from FQHC directors and providing funding for travel motivated FQHC team members to participate in the collaborative activities. The in-person trainings were the most frequently mentioned and most highly valued strategy. Participants appreciated the comprehensive overview of the QI process and tools. Participants also valued the opportunity that in-person trainings provided for them to interact with peers and obtain hands-on experience with the QI tools. They even suggested that trainers spend less time on CRC screening EBIs so that more time could be devoted to QI processes and tools. Participants found it challenging to make time for the monthly virtual meetings but valued the facilitation provided by ACS staff. The audit and feedback on their CRC screening rates was helpful because it generated friendly competition and increased team accountability. Lastly, they felt the QI tools were highly valuable, with the exception of the Fishbone Diagram (a visualization tool for categorizing the potential causes of a problem in order to identify its root causes) and the Five Whys worksheet (an iterative interrogative technique used to explore the cause-and-effect relationships underlying a particular problem).

Table 5.

Implementation strategies.

| Implementation strategies | Themes | Quotations |
|---|---|---|
| Formal commitment | Motivated engagement | “I said we signed the contract to be on these calls. We have to do this, because we said we were going to. And if we’re not going to then we shouldn’t have done it.” |
| Funding | Motivated engagement | “I’m not going to lie, girls, we’re broke. That we were able to get it paid for, that sealed the deal.” |
| Training: in-person meetings | Highly valued for providing: | They explained “why you have to go through all the steps, which in turn I could take to my team and say listen, guys, we have to do it this way because it makes more sense if we start it at the beginning instead of jumping to the end.” |
| | | “I really like the interactive collaboration and bouncing ideas off- oh, this worked for them; let’s try it for us.” “Because people would think of things that I didn’t think of and it would make it so, okay, almost like an idea think box that I could pick from.” |
| | | “By the time you actually got back to your health department, you had worked through this whole process and understood it, so that way you could start with your team and know what you were doing.” |
| Training: virtual meetings | Were difficult to prioritize over clinic needs | “When you have webinars in your health center, you get pulled away when there’s a crisis going on.” |
| Facilitation | Valued the personalized problem solving and support | “It was helpful to have an ACS member assigned to you because if I had questions, I could just send an email.” |
| Audit and feedback | Valued for: | “Them knowing how they were performing and showing them how they were performing, and it was just a motivator to do it and to get everybody on board and everybody to work together.” |
| | | “There are so many plates in the air all the time…so we couldn’t really lose momentum because we kept having to put in our data.” |
| Implementation team | Need to have the right people on the team | “It cannot just be QI people. There needs to be at least one clinical person on the team because those clinical people are the ones that have to document. They’re the ones that have to be in the face of the patient helping promote this.” |
| Using QI tools to identify barriers and facilitators | | “We only had one referral specialist. We realized that she needed help, right there in that room during the boot camp…so we did bring on key staff throughout the year because of that current state and future state [process map].” |
| | | “Maybe you don’t have to have the Five Whys and the Fishbone.” |
| | | “The Five Whys was kind of an out there, like where does it go?” |
| Using QI tools to conduct cyclical, small tests of change | PDSA cycles allowed participants to see if a change worked and demonstrate progress | “If I implement a PDSA, I can see immediately if that worked.” |

4.3. System outcomes

4.3.1. Changes in colorectal cancer screening rates

In 2017, eight of the participating FQHCs served a total of 31,338 patients ages 50–74 (the only year in which age-specific data were available from the ACS). The percentage of patients who received appropriate CRC screening increased for all but one of the FQHCs in 2017 (the year prior to the collaborative) and 2018 (the year in which the QI Collaborative took place). More importantly, the weighted average of the percentage of patients who had received appropriate screening increased by 8.0% from 2017 to 2018 as compared to 3.3% from 2016 to 2017 (see Table 6).

Table 6.

Colorectal cancer screening rates for participating FQHCs: 2016, 2017, 2018.


FQHC “I” was not in operation until 2017 and was excluded from the analysis.

5. Discussion

Evaluating QICs through an implementation science lens can promote standardized terminology and facilitate more detailed description of collaborative strategies, which allows for easier replication and synthesis of the model (Proctor et al., 2013). The goal of this paper was to use Powell’s (Powell et al., 2015) implementation strategies and Proctor’s (Proctor et al., 2011) implementation outcomes to describe the mechanisms through which the collaborative led to improvements in CRC screening rates. To further our understanding of these mechanisms, we used qualitative methods to capture participants’ perceptions of the collaborative activities and explain how they may have contributed to positive implementation outcomes.

5.1. The collaborative experience

In terms of engagement, we observed that individual participation in the virtual meetings declined over time, a pattern that has been observed in other QICs (Colon-Emeric et al., 2006). Requesting that all three implementation team members attend every meeting may have been too burdensome. However, nearly every FQHC sent at least one team member to all 11 collaborative gatherings. Focus group participants attributed this strong commitment to signing a contract and accepting additional funding. The composition of the participants on the implementation team varied between those who had substantial experience with quality improvement and those who had less experience and were new to the FQHC setting. While the study was not designed to analyze the influence of team member characteristics on implementation and outcomes, the consensus from the focus group findings was that the capacity-building was useful for all participants, regardless of their level of familiarity with quality improvement.

Almost all implementation teams provided documentation of how their clinics implemented QI tools, and they reported increased use of 7/13 CRC screening EBIs. A few of the obstacles to implementing EBIs that were mentioned in the focus group included lack of capacity in the EHR and slow uptake of patient portals. When asked about the content of the in-person trainings, focus group participants wanted the ACS to prioritize general QI capacity-building over specific information on CRC screening. One possible reason is that staff may have had a high level of knowledge about CRC screening EBIs prior to starting the QI Collaborative. A recent survey of FQHCs across eight states found that 77% were fully implementing at least one recommended CRC screening intervention from the Community Guide (Adams et al., 2018). Results from the follow-up inventory indicated that capacity for distributing FIT kits and following up with patients was improved as a result of the QIC. However, problems with colonoscopy capacity did not appear to be resolved at the end of twelve months. This is not surprising given that North Carolina does not have funds for diagnostic colonoscopies for the uninsured. FQHCs have had to develop relationships with local gastroenterology practices to donate endoscopy services or creatively manage revenue to supplement the cost of colonoscopies (Weiner et al., 2017). Barriers such as understaffing and lack of access to colonoscopy have broader organizational and policy-level implications that need to be addressed in future QIC research. Implementation science, with its emphasis on multi-level determinants and strategies, can inform how best to address these structural barriers.

Because the long-term goal for ACS is to replicate and disseminate the QIC model for all cancer screenings, there was an emphasis on collecting and systematically applying recommendations for improvement. In subsequent QICs, the ACS is incorporating the findings from this evaluation such as keeping the focus on quality improvement, increasing peer-to-peer best-practice sharing and troubleshooting, sharing identified screening rates every month, and having ACS staff conduct at least one site visit for every FQHC. Appendix C includes a table with recommendations from the post-collaborative focus group.

5.2. Impact on CRC screening rates

In the past several years, FQHCs have made significant strides in improving CRC screening among their patient population (National Colorectal Cancer Roundtable, n.d.; Riehman et al., 2018). In 2017, the FQHCs that participated in the QIC had an average weighted screening rate of 32.7%. Their rate was lower than that of the non-participating FQHCs in North Carolina (37.7%) and the national FQHC average (42.0%), which gave them room to improve. With one exception (FQHC B), the centers were relatively small with 6 or fewer clinical sites. Their patient populations, however, were diverse. As safety net providers, many served a patient population with a high proportion of racial/ethnic minorities and the uninsured, but three of the centers had less than 10% uninsured and/or less than 10% minorities. Despite this variability, all but one of the FQHCs increased their CRC screening rates over the one-year collaborative. There is evidence that the QIC had an impact as the weighted average of the percentage of patients who received appropriate screening was 4.7 points higher in 2018 than in 2017. Also, the percentage increase in rates for the cohort outpaced state-level and national trends. In North Carolina, the overall CRC screening rate for the 31 centers that did not participate in the collaborative increased by 1.8% from 2017 to 2018. For all FQHCs in the U.S., the rate increased 2.1% from 2017 to 2018 (Health Resources and Services Administration Health Center Program: 2018. Health Center Data, n.d.-b). Interestingly, a randomized controlled trial of a QIC in 23 primary care clinics did not demonstrate statistically significant differences in CRC screening rates. The authors state, “Advancing the knowledge base of QI interventions requires future reports to address how and why QI interventions work rather than simply measuring whether they work” (Shaw et al., 2013), which was the goal of this evaluation.

5.3. Strengths of the quality improvement collaborative model

One of the strengths of this QIC was that every center received facilitation (i.e., coaching) from an assigned ACS Primary Care Manager. All Care Managers had received extensive virtual training through the IHI Open School (http://www.ihi.org/education/IHIOpenSchool/) and in-person training through ACS and HealthTeamWorks (https://www.healthteamworks.org/). They provided core practice facilitator tasks noted by others (Bidassie et al., 2015) such as: context assessment; guiding PDSA cycles and use of process improvement tools; identifying resources and making referrals; holding teams accountable for plan implementation during site visits; and providing support and encouragement. Another strength was the quality and reputation of the facilitator team. In their taxonomy of attributes of successful collaboratives, AHRQ found that the degree of credibility of the host or convener and the leadership was an important component of the social system (Nix et al., 2018). The ACS is a well-respected organization with a high level of expertise in the topic area, and the NCCHCA is a trusted partner with the mission of serving its FQHC members. These two organizations were ideally suited to convene and support the QIC. Features of the QIC also mapped back to five essential features that determine short-term success, according to a study of 182 teams that participated in seven QICs (Strating et al., 2011) (see Table 7).

Table 7.

Essential collaborative features that determine short-term outcomes.

| Essential feature | CRC screening quality improvement collaborative |
|---|---|

The conceptual framework for the QIC could be generalized to preventive services beyond cancer screening such as nutrition and physical activity guidelines.

5.4. Strengths of the evaluation approach

This evaluation plan was guided by a conceptual model that specified implementation strategies and outcomes (e.g., adoption, engagement, and implementation of QI tools and CRC screening EBIs), which enhanced our ability to describe the collaborative activities in detail and link them to improvements in screening rates. Collecting completed QI tools such as aim statements, process maps, and gap and root cause analyses provided evidence that the teams were executing EBIs and improvement strategies in a more systematic way, as recommended by Leeman et al. (2019). Because we documented both the collaborative-level and the FQHC-level implementation strategies, the study findings contribute to a clearer pathway from the application of evidence to changes in the outcome. Wells et al. (2018) recommend that evaluations of QICs include site engagement measures, which we collected by measuring individual attendance and FQHC representation at all meetings. Nadeem et al. (2013) advised researchers to provide detailed information on collaborative components, which we laid out in Table 1 and included in the Appendices. Our mixed methods approach and close collaboration with ACS and NCCHCA in interpreting the evaluation results speak to Solberg’s (2005) observation that “Efforts to evaluate such QICs must therefore include those more subjective but important influences on care quality, not just particular changes in a few measures.” We specifically addressed implementation strategies and outcomes that are often missing from QIC clinical trials, thus promoting transparency and replicability.

5.5. Limitations

The results presented in this paper are based on an external evaluation of a real-world collaborative that was not part of a research study, and thus have some limitations. While selection bias may have played a role in the observed increases in CRC screening rates, randomly selecting FQHCs and risking a high dropout rate would have defeated the funder’s purpose of creating a sustainable learning cohort. Given the lack of randomization and a control group, caution must be taken when attributing improvements in screening solely to the QIC. To strengthen our conclusions, we applied weighted averages to assess differences in CRC screening rates among 2016, 2017, and 2018. We also assessed rates among the non-participating FQHCs in North Carolina. Another limitation is that the practice inventory was not a standardized instrument and, in a few cases, the pre- and post-collaborative inventories were completed by different team members. Future evaluations could benefit from standardized, validated measures completed by the same participants, when possible. Only seven of the nine FQHCs were able to travel to attend the in-person focus group, so two centers did not have their perspectives represented in the qualitative results. Sustainment of CRC screening EBIs is a critical implementation outcome that we have not addressed because post-collaborative data have not yet been collected. While nine organizations is a fairly small sample size, it is practical for the purposes of in-person meetings and on-site coaching. As this model is replicated across multiple cohorts of FQHCs, the ability to test for statistically significant changes in CRC screening rates will increase.

5.6. Conclusion

Our evaluation approach is novel in that we incorporated measures and outcomes from implementation science, which enabled us to apply standardized terminology to describe what we observed while also exploring the mechanisms through which the QIC led to improvements in CRC screening rates. For cancer screening specifically, the model is ripe for dissemination to states that have partnerships with the ACS, primary care organizations, and other key stakeholders. The ACS is currently adapting the QIC approach for cancer prevention and control initiatives in North Carolina, South Carolina, Virginia, and Florida. It has engaged the University of South Carolina CPCRN as the external evaluator of a QIC on HPV vaccination with FQHCs in South Carolina. Their evaluation plan builds on the activities described here and encompasses more frequent data collection (e.g., baseline, mid-program, and post-program) to monitor and assess intervention outcomes. Additional qualitative data collection will further elucidate contextual factors influencing the QI interventions and CRC screening interventions in the FQHCs, such as how the QI intervention promotes expanded social networks for FQHCs for future collaboration. The ACS, primary care associations, and FQHCs are nationwide, providing an infrastructure that can rapidly and efficiently replicate the QI Collaborative model to deliver life-saving CRC screening to our country’s most vulnerable populations.

Acknowledgements

This publication was supported by Cooperative Agreement Number U48-DP005017 from the Centers for Disease Control and Prevention’s Prevention Research Centers Program and the National Cancer Institute. The findings and conclusions in this publication are those of the authors and do not necessarily represent the official position of the funders. We would like to thank Dr. Ziya Gizlice for his assistance with the statistical analysis, Jennifer Richmond for her assistance with the bibliography, the reviewers for their thoughtful feedback, and the FQHC staff who generously participated in our evaluation. Publication of this supplement was supported by the Cancer Prevention and Control Research Network (CPCRN), University of North Carolina at Chapel Hill, and the following co-funders: Case Western Reserve University, Oregon Health & Science University, University of South Carolina, University of Iowa, University of Kentucky, University of Pennsylvania, and University of Washington.

Appendix A.

Sample process map

Appendix B.

Sample process map

Appendix C.

Recommendations for improvements

| Recommendations | |
|---|---|
| In-person and virtual meetings | |
| Data collection | |
| Quality improvement tools | |

Footnotes

Data statement

Data on colorectal cancer screening rates are publicly available from the Bureau of Primary Health Care, Health Resources and Services Administration website: https://bphc.hrsa.gov/uds/datacenter.aspx?q=d. All other evaluation data are either proprietary to the American Cancer Society® or not available in order to protect the confidentiality of the participants.

References

- Adams SA, Rohweder CL, Leeman J, et al., 2018. Use of evidence-based interventions and implementation strategies to increase colorectal cancer screening in Federally Qualified Health Centers. J. Community Health 43 (6), 1044–1052. 10.1007/s10900-018-0520-2.

- Bidassie B, Williams LS, Woodward-Hagg H, Matthias MS, Damush TM, 2015. Key components of external facilitation in an acute stroke quality improvement collaborative in the Veterans Health Administration. Implement. Sci. 10, 69. 10.1186/s13012-015-0252-y.

- Chambers DA, 2018. Commentary: increasing the connectivity between implementation science and public health: advancing methodology, evidence integration, and sustainability. Annu. Rev. Public Health 39, 1–4. 10.1146/annurev-publhealth-110717-045850.

- Chin MH, 2010. Quality improvement implementation and disparities: the case of the health disparities collaboratives. Med. Care 48 (8), 668–675. 10.1097/MLR.0b013e3181e3585c.

- Colon-Emeric C, Schenck A, Gorospe J, et al., 2006. Translating evidence-based falls prevention into clinical practice in nursing facilities: results and lessons from a quality improvement collaborative. J. Am. Geriatr. Soc. 54 (9), 1414–1418. 10.1111/j.1532-5415.2006.00853.x.

- Community Preventive Services Task Force. Cancer screening: multicomponent interventions-colorectal cancer. https://www.thecommunityguide.org/findings/cancer-screening-multicomponent-interventions-colorectal-cancer, Accessed date: 14 June 2019.

- Health Resources and Services Administration. Health Center Program: 2017 Health Center Data. https://bphc.hrsa.gov/uds/datacenter.aspx?q=d, Accessed date: 14 June 2019.

- Health Resources and Services Administration. Health Center Program: 2018 Health Center Data. https://bphc.hrsa.gov/uds/datacenter.aspx?q=d, Accessed date: 2 September 2019.

- Hsieh HF, Shannon SE, 2005. Three approaches to qualitative content analysis. Qual. Health Res. 15 (9), 1277–1288. 10.1177/1049732305276687.

- Institute for Healthcare Improvement. The breakthrough series: IHI’s collaborative model for achieving breakthrough improvement. http://www.ihi.org/resources/Pages/IHIWhitePapers/TheBreakthroughSeriesIHIsCollaborativeModelforAchievingBreakthroughImprovement.aspx, Accessed date: 14 June 2019.

- Leeman J, Askelson N, Ko LK, et al., 2019. Understanding the processes that Federally Qualified Health Centers use to select and implement colorectal cancer screening interventions: a qualitative study. Transl. Behav. Med.

- Mittman BS, 2004. Creating the evidence base for quality improvement collaboratives. Ann. Intern. Med. 140 (11), 897–901.

- Nadeem E, Olin SS, Hill LC, Hoagwood KE, Horwitz SM, 2013. Understanding the components of quality improvement collaboratives: a systematic literature review. Milbank Q. 91 (2), 354–394. 10.1111/milq.12016.

- Nadeem E, Weiss D, Olin SS, Hoagwood KE, Horwitz SM, 2016. Using a theory-guided learning collaborative model to improve implementation of EBPs in a state children’s mental health system: a pilot study. Admin. Pol. Ment. Health 43 (6), 978–990. 10.1007/s10488-016-0735-4.

- National Colorectal Cancer Roundtable. CRC screening rates reach 39.9% in FQHCs in 2016. https://nccrt.org/2016-uds-rates/, Accessed date: 14 June 2019.

- Nix M, McNamara P, Genevro J, et al., 2018. Learning collaboratives: insights and a new taxonomy from AHRQ’s two decades of experience. Health Aff. (Millwood) 37 (2), 205–212. 10.1377/hlthaff.2017.1144.

- Powell BJ, Waltz TJ, Chinman MJ, et al., 2015. A refined compilation of implementation strategies: results from the Expert Recommendations for Implementing Change (ERIC) project. Implement. Sci. 10, 21. 10.1186/s13012-015-0209-1.

- Proctor E, Silmere H, Raghavan R, et al., 2011. Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda. Admin. Pol. Ment. Health 38 (2), 65–76. 10.1007/s10488-010-0319-7.

- Proctor EK, Powell BJ, McMillen JC, 2013. Implementation strategies: recommendations for specifying and reporting. Implement. Sci. 8 (1), 139. 10.1186/1748-5908-8-139.

- Riehman KS, Stephens RL, Henry-Tanner J, Brooks D, 2018. Evaluation of colorectal cancer screening in Federally Qualified Health Centers. Am. J. Prev. Med. 54 (2), 190–196. 10.1016/j.amepre.2017.10.007.

- Schouten LM, Hulscher ME, van Everdingen JJ, Huijsman R, Grol RP, 2008. Evidence for the impact of quality improvement collaboratives: systematic review. BMJ 336 (7659), 1491–1494. 10.1136/bmj.39570.749884.BE.

- Shaw EK, Ohman-Strickland PA, Piasecki A, et al., 2013. Effects of facilitated team meetings and learning collaboratives on colorectal cancer screening rates in primary care practices: a cluster randomized trial. Ann. Fam. Med. 11 (3), 220–228. S221–228. 10.1370/afm.1505.

- Solberg LI, 2005. If you’ve seen one quality improvement collaborative. Ann. Fam. Med. 3 (3), 198–199. 10.1370/afm.304.

- Strating MM, Nieboer AP, Zuiderent-Jerak T, Bal RA, 2011. Creating effective quality-improvement collaboratives: a multiple case study. BMJ Qual. Saf. 20 (4), 344–350. 10.1136/bmjqs.2010.047159.

- The Breakthrough Series: IHI’s Collaborative Model for Achieving Breakthrough Improvement. Boston, MA.

- Weiner BJ, Rohweder CL, Scott JE, et al., 2017. Using practice facilitation to increase rates of colorectal cancer screening in community health centers, North Carolina, 2012–2013: feasibility, facilitators, and barriers. Prev. Chronic Dis. 14, E66. 10.5888/pcd14.160454.

- Wells S, Tamir O, Gray J, Naidoo D, Bekhit M, Goldmann D, 2018. Are quality improvement collaboratives effective? A systematic review. BMJ Qual. Saf. 27 (3), 226–240. 10.1136/bmjqs-2017-006926.