Abstract

We investigate a well-known phenomenon of variational approaches in image processing, where typically the best image quality is achieved when the gradient flow process is stopped before converging to a stationary point. This paradox originates from a tradeoff between optimization and modeling errors of the underlying variational model and holds true even if deep learning methods are used to learn highly expressive regularizers from data. In this paper, we take advantage of this paradox and introduce an optimal stopping time into the gradient flow process, which in turn is learned from data by means of an optimal control approach. After a time discretization, we obtain variational networks, which can be interpreted as a particular type of recurrent neural networks. The learned variational networks achieve competitive results for image denoising and image deblurring on a standard benchmark data set. One of the key theoretical results is the development of first- and second-order conditions to verify the optimal stopping time. A nonlinear spectral analysis of the gradient of the learned regularizer gives enlightening insights into the different regularization properties.

Keywords: Variational problems, Gradient flow, Optimal control theory, Early stopping, Variational networks, Deep learning

Introduction

In recent years, numerous image restoration tasks in computer vision such as denoising [38] or super-resolution [39] have benefited from a variety of pioneering and novel variational methods. In general, variational methods [9] aim at the minimization of an energy functional designed for a specific image reconstruction problem, where the energy minimizer defines the restored output image. For the considered image restoration tasks, the observed corrupted image is generated by a degradation process applied to the corresponding ground truth image, which is the real uncorrupted image.

In this paper, the energy functional is composed of an a priori known, task-dependent, and quadratic data fidelity term and a Field-of-Experts-type regularizer [36], whose building blocks are learned kernels and learned activation functions. This regularizer generalizes the prominent total variation regularization functional and is capable of accounting for higher-order image statistics. A classical approach to minimize the energy functional is a continuous-time gradient flow, which defines a trajectory emanating from a fixed initial image. Typically, the regularizer is adapted such that the end point image of the trajectory lies in the proximity of the corresponding ground truth image. However, even the general class of Field-of-Experts-type regularizers is not able to capture the entire complex structure of natural images, which is why the end point image may substantially differ from the ground truth image. To address this modeling deficiency, we advocate an optimal control problem using the gradient flow differential equation as the state equation and a cost functional that quantifies the distance between the ground truth image and the gradient flow trajectory evaluated at the stopping time T. Besides the parameters of the regularizer, the stopping time is an additional control parameter learned from data.

The main contribution of this paper is the derivation of criteria to automate the calculation of the optimal stopping time T for the aforementioned optimal control problem. In particular, we observe in the numerical experiments that the learned stopping time is always finite, even if the learning algorithm has the freedom to choose a larger stopping time.

For the numerical optimization, we discretize the state equation by means of the explicit Euler and Heun schemes. This results in an iterative scheme which can be interpreted as a static variational network [11, 19, 24], a subclass of deep learning models [26]. Here, the prefix “static” refers to regularizers that are constant with respect to time. In several experiments, we demonstrate that learned static variational networks terminated at the optimal stopping time outperform classical variational methods for image restoration tasks. Consequently, the early stopped gradient flow approach is better suited for image restoration problems and computationally more efficient than the classical variational approach.

A well-known major drawback of mainstream deep learning approaches is the lack of interpretability of the learned networks. In contrast, following [16], the variational structure of the proposed model allows us to analyze the learned regularizers by means of a nonlinear spectral analysis. The computed eigenpairs reveal insightful properties of the learned regularizers.

There have been several approaches in the literature to cast deep learning models as dynamical systems, in which the model parameters can be seen as control parameters of an optimal control problem. E [12] clarified that deep neural networks such as residual networks [21] arise from a discretization of a suitable dynamical system. In this context, the training process can be interpreted as the computation of the controls in the corresponding optimal control problem. In [27, 28], Pontryagin’s maximum principle is exploited to derive necessary optimality conditions for the optimal control problem in continuous time, which results in a rigorous discrete-time optimization. Certain classes of deep learning networks are examined as mean-field optimal control problems in [13], where optimality conditions of the Hamilton–Jacobi–Bellman type and the Pontryagin type are derived. The effect of several discretization schemes for classification tasks has been studied from the viewpoint of stability in [4, 7, 17], which leads to a variety of different network architectures that are empirically shown to be more stable.

The benefit of early stopping for iterative algorithms is examined in the literature from several perspectives. In the context of ill-posed inverse problems, early stopping of iterative algorithms is frequently considered and analyzed as a regularization technique. There is a variety of literature on the topic, and we therefore only mention the selected monographs [14, 23, 34, 41]. Frequently, early stopping rules for inverse problems are discussed in the context of the Landweber iteration [25] or its continuous analogue commonly referred to as Showalter’s method [44] and are based on criteria such as the discrepancy or the balancing principle.

In what follows, we provide an overview of recent advances related to early stopping. Raskutti et al. [37] exploit early stopping for nonparametric regression problems in reproducing kernel Hilbert spaces (RKHS) to prevent overfitting and derive a data-dependent stopping rule. Yao et al. [42] discuss early stopping criteria for gradient descent algorithms for RKHS and relate these results to the Landweber iteration. Quantitative properties of the early stopping condition for the Landweber iteration are presented in Binder et al. [5]. Zhang and Yu [45] prove convergence and consistency results for early stopping in the context of boosting. Prechelt [33] introduces several heuristic criteria for optimal early stopping based on the training and validation errors. Rosasco and Villa [35] investigate early stopping in the context of incremental iterative regularization and prove sample bounds in a stochastic environment. Matet et al. [30] exploit an early stopping method to regularize (strongly) convex functionals. In contrast to these approaches, we propose early stopping on the basis of finding a local minimum with respect to the time horizon of a properly defined energy.

To illustrate the necessity of early stopping for iterative algorithms, we revisit the established TV-$L^2$ denoising functional [38], which amounts to minimizing the variational problem

$$\nu\,|Du|(\Omega)+\frac12\int_\Omega(u-g)^2\,\mathrm{d}x$$

among all functions $u\in BV(\Omega)$, where $\Omega\subset\mathbb{R}^2$ denotes a bounded domain, $\nu>0$ is the regularization parameter and $g\in L^2(\Omega)$ refers to a corrupted input image. An elementary, yet very inefficient optimization algorithm relies on a gradient descent with step size $\eta>0$ using a finite difference discretization for the regularized functional ($\epsilon>0$)

$$u^{k+1}=u^k-\eta\left(\nu\,\nabla_h^\top\!\left(\frac{\nabla_hu^k}{\sqrt{|\nabla_hu^k|^2+\epsilon^2}}\right)+u^k-g\right),\tag{1}$$

where $\Omega_h$ denotes a lattice, $u^k,g:\Omega_h\to\mathbb{R}$ are discrete functions and $\nabla_h$ is a finite difference gradient operator with Neumann boundary constraint (for details see [8, Section 3]). For a comprehensive list of state-of-the-art methods to efficiently solve TV-based variational problems, we refer the reader to [9].
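The early stopping effect can be explored with a minimal NumPy sketch of the gradient descent (1) that records the PSNR after every step. The weighting $\nu$ on the TV term, the forward-difference discretization, the synthetic piecewise-constant image, the intensity range $[0,1]$ and the values of $\nu$, the step size $\eta$ and $\epsilon$ below are illustrative assumptions, not the settings behind Fig. 1; all function names are hypothetical.

```python
import numpy as np

def grad_h(u):
    """Forward-difference gradient with Neumann boundary (last difference set to 0)."""
    gx = np.zeros_like(u)
    gy = np.zeros_like(u)
    gx[:-1, :] = u[1:, :] - u[:-1, :]
    gy[:, :-1] = u[:, 1:] - u[:, :-1]
    return gx, gy

def grad_h_adjoint(px, py):
    """Adjoint of grad_h, i.e. the negative discrete divergence."""
    out = np.zeros_like(px)
    out[:-1, :] -= px[:-1, :]
    out[1:, :] += px[:-1, :]
    out[:, :-1] -= py[:, :-1]
    out[:, 1:] += py[:, :-1]
    return out

def tv_l2_step(u, g, nu, eta, eps):
    """One step of (1) for nu * TV_eps(u) + 0.5 * ||u - g||^2 (assumed weighting)."""
    gx, gy = grad_h(u)
    norm = np.sqrt(gx**2 + gy**2 + eps**2)
    return u - eta * (nu * grad_h_adjoint(gx / norm, gy / norm) + u - g)

def psnr(u, ref, peak=1.0):
    return 10.0 * np.log10(peak**2 / np.mean((u - ref) ** 2))

def run(g, ref, nu, eta, eps, iters):
    """PSNR after 0, 1, ..., iters gradient steps, started at u^0 = g."""
    u = g.copy()
    scores = [psnr(u, ref)]
    for _ in range(iters):
        u = tv_l2_step(u, g, nu, eta, eps)
        scores.append(psnr(u, ref))
    return np.array(scores)

# Synthetic piecewise-constant image corrupted by Gaussian noise (std 0.1).
rng = np.random.default_rng(0)
ref = np.zeros((64, 64))
ref[16:48, 16:48] = 1.0
g = ref + 0.1 * rng.standard_normal(ref.shape)

# eta = 0.02 is below 2 / (nu * 8 / eps + 1), so the descent is stable.
scores = run(g, ref, nu=0.05, eta=0.02, eps=0.01, iters=300)
best = int(np.argmax(scores))
print(f"best iteration {best}: {scores[best]:.2f} dB (noisy input: {scores[0]:.2f} dB)")
```

The index of the PSNR maximum plays the role of the optimal number of iterations. For a piecewise-constant toy image, TV is close to an ideal model, so the maximum may lie near the end of the run; natural images, as in Fig. 1, typically show a clearer interior peak.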

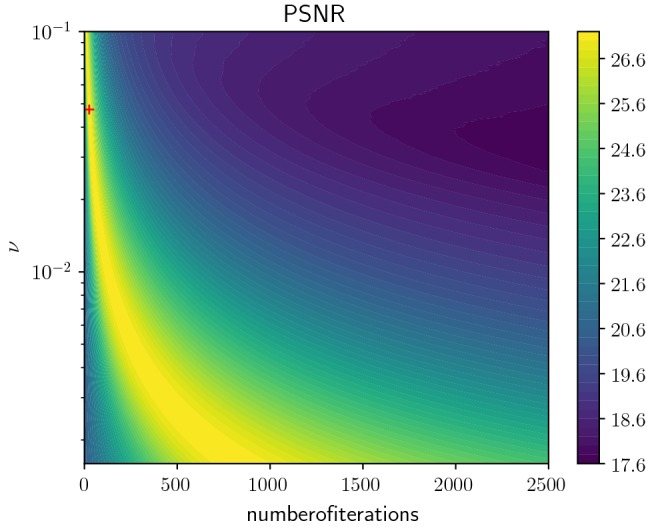

Figure 1 depicts the dependency of the peak signal-to-noise ratio (PSNR) on the number of iterations and the regularization parameter for the TV-$L^2$ problem (1) using fixed values of the step size and of the smoothing parameter, where the input image is corrupted by additive Gaussian noise with standard deviation 0.1. As the figure shows, for each regularization parameter there exists a unique optimal number of iterations at which the PSNR peaks. Beyond this point, the quality of the resulting image deteriorates due to staircasing artifacts, and fine texture patterns are smoothed out. The global maximum (26, 0.0474) is marked with a red cross; the associated image sequence is shown in Fig. 2 (left to right: input image, noisy image, restored images after 13, 26, 39, 52 iterations). If the gradient descent is considered as a discretization of a time continuous evolution process governed by a differential equation, then the optimal number of iterations translates to an optimal stopping time.

Fig. 1.

Contour plot of the peak signal-to-noise ratio depending on the number of iterations and the regularization parameter for TV-$L^2$ denoising. The global maximum is marked with a red cross (Color figure online)

Fig. 2.

Image sequence with globally best PSNR value. Left to right: input image, noisy image, restored images after 13, 26, 39, 52 iterations

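In this paper, we refer to the standard inner product in the Euclidean space $\mathbb{R}^n$ by $\langle\cdot,\cdot\rangle$. Let $\Omega\subset\mathbb{R}^n$ be a domain. We denote the space of continuous functions by $C^0(\Omega)$, the space of $k$-times continuously differentiable functions by $C^k(\Omega)$ for $k\ge1$, the Lebesgue space by $L^p(\Omega)$, $p\in[1,\infty]$, and the Sobolev space by $H^m(\Omega)=W^{m,2}(\Omega)$, $m\in\mathbb{N}$, where the latter space is endowed with the Sobolev (semi-)norm for $v\in H^m(\Omega)$ defined as $|v|_{H^m(\Omega)}=\|D^mv\|_{L^2(\Omega)}$ and $\|v\|_{H^m(\Omega)}=\big(\sum_{j=0}^m|v|_{H^j(\Omega)}^2\big)^{1/2}$. The identity matrix in $\mathbb{R}^{n\times n}$ is denoted by $\mathrm{Id}$, and $\mathbf{1}\in\mathbb{R}^n$ is the one vector.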

This paper is organized as follows: In Sect. 2, we argue that certain classes of image restoration problems can be perceived as optimal control problems, in which the state equation coincides with the evolution equation of static variational networks, and we prove the existence of solutions under quite general assumptions. Moreover, we derive a first-order necessary as well as a second-order sufficient condition for the optimal stopping time in this optimal control problem. A Runge–Kutta time discretization of the state equation results in the update scheme for static variational networks, which is discussed in detail in Sect. 3. In addition, we visualize the effect of the optimality conditions in a simple numerical example in $\mathbb{R}^2$ and discuss alternative approaches for the derivation of static variational networks. Finally, we demonstrate the applicability of the optimality conditions to two prototype image restoration problems in Sect. 4: denoising and deblurring.

Optimal Control Approach to Early Stopping

In this section, we derive a time continuous analog of static variational networks as gradient flows of an energy functional $\mathcal{E}$ composed of a data fidelity term $\mathcal{D}$ and a Field-of-Experts-type regularizer $\mathcal{R}$. The resulting ordinary differential equation is used as the state equation of an optimal control problem, in which the cost functional incorporates the squared $\ell^2$-distance of the state evaluated at the optimal stopping time to the ground truth as well as (box) constraints on the norms of the stopping time, the kernels and the activation functions. We prove the existence of minimizers of this optimal control problem under quite general assumptions. Finally, we derive first- and second-order optimality conditions for the optimal stopping time using a Lagrangian approach.

Let $x\in\mathbb{R}^n$ be a data vector, which is either a signal of length $n$ in 1D, an image of size $n_1\times n_2=n$ in 2D or spatial data of size $n_1\times n_2\times n_3=n$ in 3D. Since we are primarily interested in two-dimensional image restoration, we focus on this task in the rest of this paper and merely remark that all results can be generalized to the remaining cases. For convenience, we restrict to grayscale images; the generalization to color or multi-channel images is straightforward. In what follows, we analyze an energy functional of the form

$$\mathcal{E}[x]=\mathcal{D}[x]+\mathcal{R}[x]\tag{2}$$

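that is composed of a data fidelity term $\mathcal{D}$ and a regularizer $\mathcal{R}$ specified below. We incorporate the Field-of-Experts regularizer [36], which is a common generalization of the discrete total variation regularizer and is given by

$$\mathcal{R}[x]=\sum_{k=1}^{N_K}\sum_{i=1}^{m}\rho_k\big((K_kx)_i\big)$$

with kernels $K_k\in\mathbb{R}^{m\times n}$ and associated nonlinear functions $\rho_k:\mathbb{R}\to\mathbb{R}$ for $k=1,\dots,N_K$.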

Throughout this paper, we consider the specific data fidelity term

$$\mathcal{D}[x]=\frac12\|Ax-b\|_2^2$$

for fixed $A\in\mathbb{R}^{l\times n}$ and fixed $b\in\mathbb{R}^l$. We remark that various image restoration tasks can be cast in exactly this form for suitable choices of $A$ and $b$ [9].

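The gradient flow [1] associated with the energy $\mathcal{E}$ for a time $T>0$ reads as

$$\dot x(t)=-A^\top\big(Ax(t)-b\big)-\sum_{k=1}^{N_K}K_k^\top\Phi_k\big(K_kx(t)\big),\qquad t\in(0,T),\tag{3}$$

$$x(0)=x_0,\tag{4}$$

where $x\in C^1([0,T],\mathbb{R}^n)$ denotes the flow of $\mathcal{E}$ with $x_0\in\mathbb{R}^n$, the activation functions are $\phi_k=\rho_k'$, and the function $\Phi_k:\mathbb{R}^m\to\mathbb{R}^m$ is given by

$$\Phi_k(y)=\big(\phi_k(y_1),\dots,\phi_k(y_m)\big)^\top.$$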
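As a sanity check of the structure of (3), the following NumPy sketch evaluates its right-hand side for dense matrices and confirms by central finite differences that it equals the negative gradient of the energy (2). The potential $\rho(y)=\log(1+y^2)$ (hence $\phi(y)=2y/(1+y^2)$), the use of the same potential for all kernels, the random matrices and the function names are hypothetical choices made for illustration; they are not the learned functions of this paper.

```python
import numpy as np

def rho(y):
    # Hypothetical smooth potential, used for all kernels: rho(y) = log(1 + y^2).
    return np.log1p(y**2)

def phi(y):
    # Activation function phi = rho'.
    return 2.0 * y / (1.0 + y**2)

def energy(x, A, b, kernels):
    """E[x] = 0.5 * ||Ax - b||^2 + sum_k sum_i rho((K_k x)_i)."""
    return 0.5 * np.sum((A @ x - b) ** 2) + sum(np.sum(rho(K @ x)) for K in kernels)

def flow_rhs(x, A, b, kernels):
    """Right-hand side of (3): -A^T(Ax - b) - sum_k K_k^T Phi_k(K_k x)."""
    out = -A.T @ (A @ x - b)
    for K in kernels:
        out = out - K.T @ phi(K @ x)
    return out

rng = np.random.default_rng(1)
n, m, l = 6, 5, 4
A = rng.standard_normal((l, n))
b = rng.standard_normal(l)
kernels = [rng.standard_normal((m, n)) for _ in range(2)]
x = rng.standard_normal(n)

# Central finite differences of the energy, one coordinate direction at a time.
h = 1e-6
fd = np.array([(energy(x + h * e, A, b, kernels) - energy(x - h * e, A, b, kernels)) / (2 * h)
               for e in np.eye(n)])
print(np.max(np.abs(fd + flow_rhs(x, A, b, kernels))))  # small, since flow_rhs = -grad E
```

A small explicit step along `flow_rhs` therefore decreases the energy, which is the property the gradient flow (3) exploits.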

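For a fixed $N_w\in\mathbb{N}$ and an a priori fixed bounded open interval $I\subset\mathbb{R}$, we consider conforming basis functions $\psi_1,\dots,\psi_{N_w}$ with compact support in $I$. The vectorial function space for the activation functions is composed of $m$ identical component functions $\phi_k:\mathbb{R}\to\mathbb{R}$, which are given as the linear combination of $\psi_1,\dots,\psi_{N_w}$ with weight vector $w_k\in\mathbb{R}^{N_w}$, i.e.

$$\phi_k(y)=\sum_{j=1}^{N_w}w_{k,j}\,\psi_j(y).\tag{5}$$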
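The parametrization (5) with the quadratic B-spline basis used later in the experiments can be sketched as follows. The interval of centers, the number of basis functions and all function names are assumptions for illustration; note that the support of the basis extends by $1.5h$ beyond the outermost centers, and this enlarged interval plays the role of $I$ here. The sketch checks two standard properties of uniform quadratic B-splines: unit weights give a partition of unity, and weights equal to the centers reproduce the identity away from the boundary, so the activation can be initialized as a linear function.

```python
import numpy as np

def quad_bspline(t):
    """Uniform quadratic B-spline with support [-1.5, 1.5] (piecewise quadratic, C^1)."""
    t = np.abs(t)
    return np.where(t <= 0.5, 0.75 - t**2,
                    np.where(t <= 1.5, 0.5 * (1.5 - t) ** 2, 0.0))

def make_centers(lo, hi, n_w):
    """n_w equidistant centers in [lo, hi] and their spacing h."""
    centers = np.linspace(lo, hi, n_w)
    return centers, centers[1] - centers[0]

def activation(y, w, centers, h):
    """phi(y) = sum_j w_j psi_j(y) with psi_j(y) = B((y - c_j) / h), cf. (5)."""
    y = np.asarray(y, dtype=float)
    return quad_bspline((y[..., None] - centers) / h) @ w

centers, h = make_centers(-1.0, 1.0, 21)
ys = np.linspace(-1.0 + h, 1.0 - h, 101)      # stay away from the boundary splines
print(activation(0.3, centers, centers, h))   # weights w_j = c_j reproduce phi(y) = y
```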

We remark that in contrast to [4, 17], inverse problems for image restoration rather than image classification are examined. Thus, we incorporate in (3) the classical gradient flow with respect to the full energy functional in order to promote data consistency, whereas in the classification tasks, only the gradient flow with respect to the regularizer is considered.

In what follows, we analyze an optimal control problem, for which the state equation (3) and initial condition (4) will arise as equality constraints. The cost functional $J$ incorporates the squared $\ell^2$-distance of the flow evaluated at time $T$ and the ground truth state $x_g\in\mathbb{R}^n$ and is given by

$$J(T,K,\phi)=\frac12\big\|x(T)-x_g\big\|_2^2.$$

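We assume that the controls $T$, $K=(K_k)_{k=1}^{N_K}$ and $\phi=(\phi_k)_{k=1}^{N_K}$ satisfy the box constraints

$$0\le T\le T_{\max},\qquad\alpha(K_k)\le1,\qquad\beta(w_k)\le1,\tag{6}$$

as well as the zero mean condition

$$K_k\mathbf{1}=0.\tag{7}$$

Here, we have $k=1,\dots,N_K$, and we choose a fixed parameter $T_{\max}>0$. Further, $\alpha:\mathbb{R}^{m\times n}\to\mathbb{R}$ and $\beta:\mathbb{R}^{N_w}\to\mathbb{R}$ are continuously differentiable functions with non-vanishing gradient such that $\alpha(K)\to\infty$ and $\beta(w)\to\infty$ as $\|K\|\to\infty$ and $\|w\|\to\infty$. We include the condition (7) to reduce the dimensionality of the kernel space. Moreover, this condition ensures invariance with respect to gray-value shifts of image intensities, since $K_k(x+c\mathbf{1})=K_kx$ for all $c\in\mathbb{R}$.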

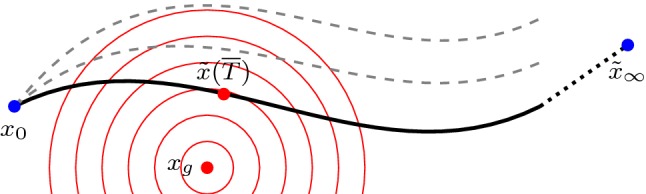

The particular choice of the cost functional originates from the observation that a visually appealing image restoration is obtained as the point on the trajectory of the flow that is closest to $x_g$ (reflected by the $\ell^2$-distance), subject to a moderate flow regularization as quantified by the box constraints. Figure 3 illustrates this optimization task for the optimal control problem. Among all trajectories of the ordinary differential equation (3) emanating from a fixed initial value $x_0$, one seeks the trajectory that is closest to the ground truth $x_g$ in terms of the squared Euclidean distance, as visualized by the energy isolines. Note that each trajectory is uniquely determined by the kernels $K$ and the activation functions $\phi$. The sink/stable node $x_\infty$ is an equilibrium point of the ordinary differential equation at which all eigenvalues of the Jacobian of the right-hand side of (3) have negative real parts [40].

Fig. 3.

Schematic drawing of the optimal trajectory (black curve) as well as suboptimal trajectories (gray dashed curves) emanating from $x_0$ with ground truth $x_g$, optimal restored image $x(T)$, sink/stable node $x_\infty$ and energy isolines (red concentric circles) (Color figure online)

The constraint $T\le T_{\max}$ on the stopping time is solely required for the existence theory. For the image restoration problems, a finite stopping time is always observed without this constraint. Hence, the optimal control problem reads as

$$\min_{T,K,\phi}\ J(T,K,\phi)=\frac12\big\|x(T)-x_g\big\|_2^2\tag{8}$$

subject to the constraints (6) and (7) as well as the nonlinear autonomous initial value problem (Cauchy problem) representing the state equation

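$$\dot x(t)=-A^\top\big(Ax(t)-b\big)-\sum_{k=1}^{N_K}K_k^\top\Phi_k\big(K_kx(t)\big),\qquad x(0)=x_0,\tag{9}$$

for $t\in(0,T)$. We refer to the minimizing time $T$ in (8) as the optimal early stopping time. To better handle this optimal control problem, we employ the reparametrization $\tilde x(s)=x(sT)$ for $s\in[0,1]$, which results in the equivalent optimal control problem

$$\min_{T,K,\phi}\ J(T,K,\phi)=\frac12\big\|\tilde x(1)-x_g\big\|_2^2\tag{10}$$

subject to (6), (7) and the transformed state equation

$$\dot{\tilde x}(s)=T\,f\big(\tilde x(s),K,\phi\big),\qquad\tilde x(0)=x_0,\tag{11}$$

for $s\in(0,1)$, where

$$f(x,K,\phi)=-A^\top(Ax-b)-\sum_{k=1}^{N_K}K_k^\top\Phi_k(K_kx).$$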

Remark 1

The long-term dynamics of the nonlinear autonomous state equation (11) are determined by the set of fixed points of $f$. A system is asymptotically stable at a fixed point $y$ if all eigenvalues of $D_xf(y,K,\phi)$ have strictly negative real parts [18, 40]. In the case of convex potential functions $\rho_k$ with unbounded support and a full rank matrix $A$, the autonomous differential equation (3) is asymptotically stable.

In the next theorem, we apply the direct method in the calculus of variations to prove the existence of minimizers for the optimal control problem.

Theorem 1

(Existence of solutions) Let . Then the minimum in (10) is attained.

Proof

Without loss of generality, we solely consider the case $N_K=1$ and omit the subscript.

Let be a minimizing sequence for J with an associated state such that (6), (7) and (11) hold true (the existence of is verified below). The coercivity of and implies and for fixed constants . Due to the finite dimensionality of and the boundedness of , we can deduce the existence of a subsequence (not relabeled) such that . In addition, using the bounds and we can pass to further subsequences if necessary to deduce for suitable such that . The state equation (11) implies

This estimate already guarantees that [0, 1] is contained in the maximum domain of existence of the state equation due to the linear growth of the right-hand side in [40, Theorem 2.17]. Moreover, Gronwall’s inequality [18, 40] ensures the uniform boundedness of for all and all , which in combination with the above estimate already implies the uniform boundedness of . Thus, by passing to a subsequence (again not relabeled), we infer that exists such that (the pointwise evaluation is possible due to the Sobolev embedding theorem), in and in . In addition, we obtain

as and holds true in a weak sense [18]. However, due to the continuity of the right-hand side, we can even conclude [18, Chapter I]. Finally, the theorem follows from the continuity of J along this minimizing sequence.

In the next theorem, a first-order necessary condition for the optimal stopping time is derived.

Theorem 2

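(First-order necessary condition for optimal stopping time) Let . Then for each stationary point $(\bar T,\bar K,\bar\phi)$ of $J$ with associated state $\bar x$ such that (6), (7) and (11) are valid, the equation

$$\int_0^1\big\langle\bar p(s),f\big(\bar x(s),\bar K,\bar\phi\big)\big\rangle\,\mathrm{d}s=0\tag{12}$$

holds true. Here, $\bar p$ denotes the adjoint state of $\bar x$, which is given as the solution to the ordinary differential equation

$$\dot{\bar p}(s)=-\bar T\,D_xf\big(\bar x(s),\bar K,\bar\phi\big)^\top\bar p(s),\qquad s\in(0,1),\tag{13}$$

with terminal condition

$$\bar p(1)=-\big(\bar x(1)-x_g\big).\tag{14}$$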

Proof

Again, without loss of generality, we restrict to the case $N_K=1$ and omit the subscript. Let $(\bar T,\bar K,\bar\phi)$ be a stationary point of J, which exists due to Theorem 1. The constraints (11), (6) and (7) can be written as

where (note that ) is given by

For multipliers in the space , we consider the associated Lagrange functional to minimize J incorporating the aforementioned constraints, i.e., for and we have

| 15 |

Following [22, 43], the Lagrange multiplier exists if J is Fréchet differentiable at , G is continuously Fréchet differentiable at and is regular, i.e.

| 16 |

The (continuous) Fréchet differentiability of J and G at can be proven in a straightforward manner. To show (16), we first prove the surjectivity of . For any , we have

The surjectivity of with initial condition given by follows from the linear growth in x, which implies that the maximum domain of existence coincides with . This solution is in general only a solution in the sense of Carathéodory [18, 40]. Since and have non-vanishing derivatives, the validity of (16) and thus the existence of the Lagrange multiplier follow.

The first-order optimality conditions with test functions , , and read as

| 17 |

| 18 |

| 19 |

The fundamental lemma of calculus of variations yields in combination with (17) and (19) for

| 20 |

| 21 |

in a distributional sense. Since the right-hand sides of (20) and (21) are continuous if , we can conclude [18, 40] and hence (21) holds in the classical sense. Finally, (18) and (19) imply

| 22 |

which proves (12) if (the case is trivial).
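For fixed kernels and activation functions, the integral in (12) admits a short interpretation. The following computation is a supplementary sketch, not part of the original proof; it assumes $f\in C^1$ and the adjoint conventions $\dot p(s)=-T\,D_xf(\tilde x(s),K,\phi)^\top p(s)$ and $p(1)=-(\tilde x(1)-x_g)$ for the state equation (11), with the arguments $K,\phi$ of $f$ suppressed. Since (11) is autonomous,

$$\frac{\mathrm{d}}{\mathrm{d}s}\big\langle p(s),f(\tilde x(s))\big\rangle=\big\langle\dot p(s),f(\tilde x(s))\big\rangle+\big\langle p(s),D_xf(\tilde x(s))\,\dot{\tilde x}(s)\big\rangle=-T\big\langle p,D_xf\,f\big\rangle+T\big\langle p,D_xf\,f\big\rangle=0.$$

Hence the integrand is constant in $s$, and with $\tilde x(1)=x(T)$ and $\dot x(T)=f(x(T))$,

$$\int_0^1\big\langle p(s),f(\tilde x(s))\big\rangle\,\mathrm{d}s=-\big\langle x(T)-x_g,f(x(T))\big\rangle=-\frac{\mathrm{d}}{\mathrm{d}T}\,\frac12\big\|x(T)-x_g\big\|_2^2.$$

Thus, for fixed $K$ and $\phi$, the first-order condition states that the velocity of the flow at the stopping time is orthogonal to the residual $x(T)-x_g$, which is precisely the closest-point interpretation illustrated in Fig. 3.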

The preceding theorem can easily be adapted for fixed kernels and activation functions leading to a reduced optimization problem with respect to the stopping time only:

Corollary 1

(First-order necessary condition for subproblem) Let $K_k$ and $\phi_k$ for $k=1,\dots,N_K$ be fixed with $(K,\phi)$ satisfying (6) and (7). We denote by $p$ the adjoint state (13). Then, for each stationary point $\bar T$ of the subproblem

$$\min_{T\in[0,T_{\max}]}J(T,K,\phi)\tag{23}$$

in which the associated state $\tilde x$ satisfies (11), the first-order optimality condition (12) holds true.

Remark 2

Under the assumptions of Corollary 1, a rescaling argument reveals the identities for

We conclude this section with a second-order sufficient condition for the partial optimization problem (23):

Theorem 3

(Second-order sufficient conditions for subproblem) Let . Under the assumptions of Corollary 1, $\bar T$ with associated state $\tilde x$ is a strict local minimum of (23) if a constant $C>0$ exists such that

| 24 |

for all satisfying and

| 25 |

Proof

As before, we restrict to the case $N_K=1$ and omit subscripts. Let us denote by L the version of the Lagrange functional (15) with fixed kernels and fixed activation functions to minimize J subject to the side conditions as specified in Corollary 1. Let be a local minimum of J. Furthermore, we consider arbitrary test functions , where we endow the Banach space with the norm for . Then,

Following [43, Theorem 43.D], J has a strict local minimum at $\bar T$ if the first-order optimality conditions discussed in Corollary 1 hold true and a constant $C>0$ exists such that

| 26 |

for all satisfying

and . The theorem follows from the homogeneity of order 2 in T in (26), which results in the modified condition (25).

Remark 3

All aforementioned statements remain valid when replacing the function space and the norm of the activation functions by suitable Sobolev spaces and Sobolev norms, respectively. Moreover, all statements only require minor modifications if, instead of the box constraints (6), nonnegative, coercive and differentiable functions of the norms of $T$, $K$ and $\phi$ are added to the cost functional $J$.

Time Discretization

The optimal control problem with the state equation originating from the gradient flow of the energy functional $\mathcal{E}$ was analyzed in Sect. 2. In this section, we show that static variational networks can be derived from a time discretization of the state equation using Euler’s or Heun’s method [2, 6]. To illustrate the concepts, we discuss the optimal control problem in $\mathbb{R}^2$ using fixed kernels and activation functions in Sect. 3.1. Finally, a literature overview of alternative ways to derive variational networks as well as their relations to other approaches is presented in Sect. 3.2.

Let $S\in\mathbb{N}$ be a fixed depth. For a stopping time $T>0$, we define the node points $s_i=i/S$ for $i=0,\dots,S$. Consequently, Euler’s explicit method for the transformed state equation (11) with fixed kernels and fixed activation functions reads as

$$x^{i+1}=x^i+\frac{T}{S}\,f\big(x^i,K,\phi\big)\tag{27}$$

for $i=0,\dots,S-1$ with $x^0=x_0$. The discretized ordinary differential equation (27) defines the evolution of the static variational network. We stress that this time discretization is closely related to residual neural networks with constant parameters in each layer. Here, $x^i$ is an approximation of $\tilde x(s_i)$, and the associated global error is bounded from above by

$$\max_{i=0,\dots,S}\big\|x^i-\tilde x(s_i)\big\|\le\frac{C}{S}\big(e^{TL_f}-1\big)$$

with $C=\frac{1}{2TL_f}\max_{s\in[0,1]}\|\ddot{\tilde x}(s)\|$, where $L_f$ denotes the Lipschitz constant of $f$ [2, Theorem 6.3]. In general, this global error bound tends to overestimate the actual global error. Improved error bounds can be derived either by a more refined local error analysis, which solely results in a better constant $C$, or by using higher-order Runge–Kutta methods. One prominent example of an explicit Runge–Kutta scheme with a quadratic order of convergence is Heun’s method [6], which is defined as

$$\bar x^{i}=x^i+\frac{T}{S}f\big(x^i,K,\phi\big),\qquad x^{i+1}=x^i+\frac{T}{2S}\Big(f\big(x^i,K,\phi\big)+f\big(\bar x^{i},K,\phi\big)\Big).\tag{28}$$
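Both schemes (27) and (28) can be sketched generically for any right-hand side; here the callable `f` absorbs the fixed kernels and activation functions. The diagonal linear test problem $\dot{\tilde x}=-T\Lambda\tilde x$ is a hypothetical choice used only to check the expected orders: doubling $S$ should roughly halve the Euler error and quarter the Heun error.

```python
import numpy as np

def euler(f, x0, T, S):
    """Explicit Euler for dx/ds = T f(x) on [0, 1] with S steps, cf. (27)."""
    x = np.array(x0, dtype=float)
    for _ in range(S):
        x = x + (T / S) * f(x)
    return x

def heun(f, x0, T, S):
    """Heun's method (explicit trapezoidal rule) for dx/ds = T f(x), cf. (28)."""
    x = np.array(x0, dtype=float)
    for _ in range(S):
        fx = f(x)
        x_bar = x + (T / S) * fx                   # Euler predictor
        x = x + (T / (2 * S)) * (fx + f(x_bar))    # trapezoidal corrector
    return x

lam = np.array([1.0, 3.0])

def f(x):
    """Hypothetical linear test right-hand side f(x) = -lam * x."""
    return -lam * x

x0, T = np.ones(2), 1.0
exact = np.exp(-lam * T) * x0

def error(method, S):
    return np.linalg.norm(method(f, x0, T, S) - exact)

ratio_euler = error(euler, 100) / error(euler, 200)
ratio_heun = error(heun, 100) / error(heun, 200)
print(ratio_euler, ratio_heun)    # close to 2 and 4, respectively
```

Heun’s method needs two evaluations of $f$ per step, so per layer it costs roughly twice as much as Euler’s method.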

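We abbreviate the right-hand side of the adjoint equation (13) as $T\,g(\tilde x(s),p(s),K,\phi)$ with

$$g(x,p,K,\phi)=-D_xf(x,K,\phi)^\top p.$$

The corresponding update schemes for the adjoint states are given by

$$p^S=-\big(x^S-x_g\big),\qquad p^{i}=p^{i+1}-\frac{T}{S}\,g\big(x^i,p^{i+1},K,\phi\big),\quad i=S-1,\dots,0,\tag{29}$$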

in the case of Euler’s method and

| 30 |

in the case of Heun’s method. We remark that in general implicit Runge–Kutta schemes are not efficient due to the complex structure of the Field-of-Experts regularizer.
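For fixed kernels and activation functions, the Euler adjoint recursion $p^S=-(x^S-x_g)$, $p^i=p^{i+1}+\frac{T}{S}D_xf(x^i)^\top p^{i+1}$ yields the exact derivative of the discrete cost with respect to the stopping time, $\frac{\mathrm{d}}{\mathrm{d}T}\frac12\|x^S-x_g\|_2^2=-\frac1S\sum_{i=0}^{S-1}\langle p^{i+1},f(x^i)\rangle$, which is a discrete counterpart of the integral in (12). The sketch below verifies this identity against a central finite difference. The two-dimensional right-hand side ($A=\mathrm{Id}$, a single forward-difference kernel, $\phi(y)=2y/(1+y^2)$), all numerical values and all function names are hypothetical and are not the example of Sect. 3.1.

```python
import numpy as np

# Hypothetical toy problem in R^2 (illustration only): A = Id, one
# forward-difference kernel K = [[-1, 1]] and phi(y) = 2y / (1 + y^2).
K = np.array([[-1.0, 1.0]])
b = np.array([0.2, 0.9])
x0 = np.array([0.0, 1.0])
xg = np.array([0.5, 0.6])

def phi(y):
    return 2.0 * y / (1.0 + y**2)

def dphi(y):
    return 2.0 * (1.0 - y**2) / (1.0 + y**2) ** 2

def f(x):
    """f(x) = -(x - b) - K^T phi(Kx)."""
    return -(x - b) - K.T @ phi(K @ x)

def jac_f(x):
    """Jacobian D_x f(x) = -Id - K^T diag(phi'(Kx)) K."""
    return -np.eye(2) - K.T @ np.diag(dphi(K @ x)) @ K

def forward(T, S):
    """Euler iterates x^0, ..., x^S of (27)."""
    xs = [x0.copy()]
    for _ in range(S):
        xs.append(xs[-1] + (T / S) * f(xs[-1]))
    return xs

def cost(T, S):
    return 0.5 * np.sum((forward(T, S)[-1] - xg) ** 2)

def first_order_condition(T, S):
    """(1/S) * sum_i <p^{i+1}, f(x^i)> computed with the Euler adjoint recursion."""
    xs = forward(T, S)
    p = -(xs[-1] - xg)                          # p^S
    acc = 0.0
    for i in range(S - 1, -1, -1):
        acc += p @ f(xs[i])                     # p currently holds p^{i+1}
        p = p + (T / S) * jac_f(xs[i]).T @ p    # p^i
    return acc / S

T, S, h = 2.0, 50, 1e-5
fd = (cost(T + h, S) - cost(T - h, S)) / (2 * h)
print(fd, -first_order_condition(T, S))   # agree up to finite-difference error
```

Scanning `first_order_condition` over a grid of stopping times and locating a sign change from positive to negative is one way to locate a local minimum of the discrete cost with respect to T.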

In all cases, we have to choose the step size $T/S$ such that the explicit Euler scheme is stable [6], i.e.,

$$\Big|1+\frac{T}{S}\lambda_i\Big|\le1\tag{31}$$

for all $i=1,\dots,n$, where $\lambda_i$ denotes the $i$th eigenvalue of the Jacobian of either $f$ or $g$. Note that this condition already implies the stability of Heun’s method. Thus, in the numerical experiments, we need to ensure a constant ratio of the stopping time T and the depth S to satisfy (31).
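Condition (31) can be turned into an explicit bound on the step $h=T/S$ once the Jacobian eigenvalues are known: $|1+h\lambda|^2=1+2h\,\mathrm{Re}\,\lambda+h^2|\lambda|^2\le1$ is equivalent to $h\le-2\,\mathrm{Re}\,\lambda/|\lambda|^2$. The sketch below (function names hypothetical; in practice the Jacobian would have to be evaluated at states along the trajectory) computes this bound, the resulting minimal depth for a given T, and numerically checks the statement about Heun’s method, which follows from $1+z+z^2/2=\big((1+z)^2+1\big)/2$.

```python
import numpy as np

def max_euler_step(jac, tol=1e-12):
    """Largest h with |1 + h*lam| <= 1 for all eigenvalues lam of jac, cf. (31)."""
    lam = np.linalg.eigvals(jac)
    lam = lam[np.abs(lam) > tol]          # zero eigenvalues satisfy (31) for every h
    if np.any(lam.real > tol):
        raise ValueError("eigenvalue with positive real part: no stable step exists")
    if lam.size == 0:
        return np.inf
    return float(np.min(-2.0 * lam.real / np.abs(lam) ** 2))

def min_depth(T, h_max):
    """Smallest depth S such that the step T / S does not exceed h_max."""
    return int(np.ceil(T / h_max))

def heun_factor(z):
    """Amplification factor |1 + z + z^2 / 2| of Heun's method for z = h * lam."""
    return np.abs(1.0 + z + 0.5 * z**2)

h_max = max_euler_step(-np.diag([1.0, 4.0]))
print(h_max, min_depth(10.0, h_max))

# Sample z = h * lam inside the Euler stability disk |1 + z| <= 1.
rng = np.random.default_rng(0)
z = -1.0 + rng.uniform(0, 1, 1000) * np.exp(1j * rng.uniform(0, 2 * np.pi, 1000))
print(heun_factor(z).max())   # does not exceed 1
```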

Optimal Control Problem in $\mathbb{R}^2$

In this subsection, we apply the first- and second-order criteria for the partial optimal control problem (see Corollary 1) to the simple, yet illuminative example in $\mathbb{R}^2$ with a single kernel ($N_K=1$). More general applications of the early stopping criterion to image restoration problems are discussed in Sect. 4. Below, we consider a regularized data fitting problem composed of a squared $\ell^2$-data term and a nonlinear regularizer incorporating a forward finite difference matrix operator with respect to the x-direction. In detail, we choose and

To compute the solutions of the state equation (11) and the adjoint equation (13), we use Euler’s explicit method with 100 equidistant steps. All integrals are approximated using a Gaussian quadrature of order 21. Furthermore, we optimize the stopping time over a finite discrete set of candidate values.

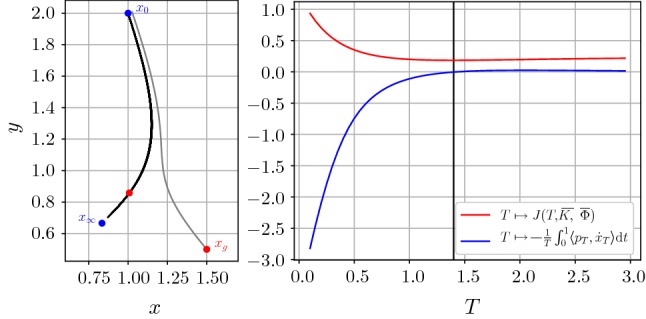

Figure 4 (left) depicts the trajectories of the state equation (black curves) for varying stopping times T, emanating from the initial value $x_0$, together with the sink/stable node. The end point of the optimal trajectory and the ground truth state $x_g$ are marked by red points. Moreover, the gray line indicates the trajectory of (the subscript denotes the solutions calculated with stopping time T) associated with the optimal stopping time. The dependency of the energy (red curve)

$$T\mapsto J(T,K,\phi)=\frac12\big\|\tilde x_T(1)-x_g\big\|_2^2$$

and of the first-order condition (12) (blue curve)

$$T\mapsto\int_0^1\big\langle p_T(s),f\big(\tilde x_T(s),K,\phi\big)\big\rangle\,\mathrm{d}s$$

on the stopping time T is visualized in the right plot of Fig. 4. Note that the black vertical line indicating the optimal stopping time $\bar T$ given by (12) crosses the energy plot at its minimum. The value of the second-order condition (24) in Theorem 3 is 0.071, which confirms that $\bar T$ is indeed a strict local minimum of the energy.

Fig. 4.

Left: Trajectories of the state equation for varying T (black curves), initial value $x_0$ (blue point), sink/stable node (blue point), ground truth state $x_g$ and end point of the optimal trajectory (red points). Right: plots of the energy (red) and of the first-order condition (blue) as functions of T (Color figure online)

Alternative Derivations of Variational Networks

We conclude this section with a brief review of alternative derivations of the defining equation (27) for variational networks. Inspired by the classical nonlinear anisotropic diffusion model by Perona and Malik [31], Chen and Pock [11] derive variational networks as discretized nonlinear reaction diffusion models with an a priori fixed number of iterations, in which the reaction term $A^\top(Ax-b)$ and the diffusion term $\sum_{k}K_k^\top\Phi_k(K_kx)$ coincide, up to sign, with the first and second expression of $f$ in (11), respectively. By exploiting proximal mappings, this scheme can also be used for non-differentiable data terms $\mathcal{D}$. In the same spirit, Kobler et al. [24] related variational networks to incremental proximal and incremental gradient methods. Following [19], variational networks result from a Landweber iteration [25] of the energy functional (2) using the Field-of-Experts regularizer. Structural similarities of variational networks and residual neural networks [21] are analyzed in [24]. In particular, residual neural networks (and thus also variational networks) are known to be less prone to the degradation problem, which is characterized by a simultaneous increase of the training/test error and the model complexity. Note that most of these approaches examine time-varying kernels and activation functions, and most of the aforementioned papers observe a benefit of early stopping.

Numerical Results for Image Restoration

In this section, we examine the benefits of early stopping for image denoising and image deblurring using static variational networks. In particular, we show that the first-order optimality condition yields the optimal stopping time. We do not verify the second-order sufficient condition discussed in Theorem 3, since in all experiments the first-order condition indicates an energy minimizing solution, and thus this verification is not required.

Image Reconstruction Problems

In the case of image denoising, we perturb a ground truth image $x_g\in\mathbb{R}^n$ by additive Gaussian noise

$$n\sim\mathcal{N}\big(0,\sigma^2\,\mathrm{Id}\big)$$

for a certain noise level $\sigma>0$, resulting in the noisy input image $g=x_g+n$. Consequently, the linear operator $A$ is given by the identity matrix, and the corrupted image $b$ as well as the initial condition $x_0$ coincide with the noisy image, i.e., $b=g$ and $x_0=g$.

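For image deblurring, we consider an input image $g=Ax_g+n$ that is corrupted by a Gaussian blur of the ground truth image $x_g$ and additive Gaussian noise $n\sim\mathcal{N}(0,\sigma^2\,\mathrm{Id})$. Here, $A\in\mathbb{R}^{n\times n}$ refers to the matrix representation of the normalized convolution filter with blur strength $\tau>0$ of the function

$$(y_1,y_2)\mapsto\frac{1}{2\pi\tau^2}\exp\Big(-\frac{y_1^2+y_2^2}{2\tau^2}\Big).$$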
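The degradation model can be sketched without ever forming $A$ as a matrix: the Gaussian is sampled on a square grid, normalized to sum one (so the factor $1/(2\pi\tau^2)$ cancels), and applied with symmetric boundary extension. The kernel radius, the boundary handling, the values $\tau=1.5$ and $\sigma=0.01$, the random stand-in image and the function names are assumptions for illustration, not the settings of the experiments.

```python
import numpy as np

def gaussian_kernel(tau, radius):
    """Sampled Gaussian exp(-(y1^2 + y2^2) / (2 tau^2)), normalised to sum 1."""
    r = np.arange(-radius, radius + 1)
    y1, y2 = np.meshgrid(r, r, indexing="ij")
    k = np.exp(-(y1**2 + y2**2) / (2.0 * tau**2))
    return k / k.sum()        # the prefactor 1 / (2 pi tau^2) cancels here

def blur(img, kernel):
    """x -> A x: filter with symmetric boundary extension.

    The kernel is point-symmetric, so correlation and convolution coincide.
    """
    rad = kernel.shape[0] // 2
    padded = np.pad(img, rad, mode="symmetric")
    n1, n2 = img.shape
    out = np.zeros((n1, n2))
    for a in range(kernel.shape[0]):
        for c in range(kernel.shape[1]):
            out += kernel[a, c] * padded[a:a + n1, c:c + n2]
    return out

rng = np.random.default_rng(0)
x_g = rng.uniform(0.0, 1.0, (32, 32))                       # stand-in ground truth
k = gaussian_kernel(tau=1.5, radius=4)
g = blur(x_g, k) + 0.01 * rng.standard_normal(x_g.shape)    # g = A x_g + n
```

Because the kernel sums to one, constant images are left unchanged, which is the sense in which the filter is normalized.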

Numerical Optimization

For all image reconstruction tasks, we use the BSDS 500 data set [29] with grayscale images in . We train all models on 200 train and 200 test images from the BSDS 500 data set and evaluate the performance on 68 validation images as specified by [36].

In all experiments, the activation functions (5) are parametrized using quadratic B-spline basis functions with equidistant centers in the interval . Let be the two-dimensional image of a corresponding data vector , . Then, the convolution of the image with a filter is modeled by applying the corresponding kernel matrix to the data vector u. We only use kernels of size . Motivated by the relation , we choose . Additionally, we use for a weight vector w associated with . Since all numerical experiments yield a finite optimal stopping time T, we omit the constraint $T\le T_{\max}$.

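For a given training set consisting of pairs $(g^j,x_g^j)$ of corrupted images $g^j$ and corresponding ground truth images $x_g^j$, we denote the associated index set by $\mathcal{I}$. To train the model, we consider the discrete energy functional

$$J_{\mathcal{B}}(T,K,\phi)=\frac{1}{2|\mathcal{B}|}\sum_{j\in\mathcal{B}}\big\|x^{S,j}-x_g^j\big\|_2^2\tag{32}$$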

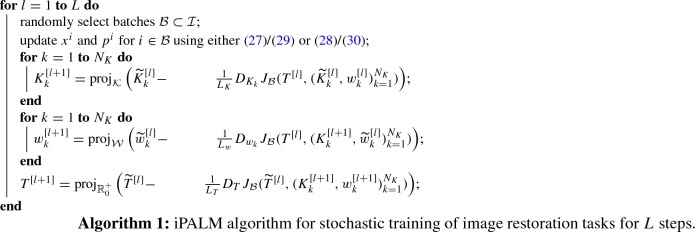

for a subset $\mathcal{B}\subset\mathcal{I}$, where $x^{S,j}$ denotes the terminal value of the Euler/Heun iteration scheme for the corrupted image $g^j$. In all numerical experiments, we use the iPALM algorithm [32] described in Algorithm 1 to optimize all parameters with respect to a randomly selected batch $\mathcal{B}$. Each batch consists of 64 image patches that are uniformly drawn from the training data set.

For an optimization parameter q representing either T, or , we use in the lth iteration step the over-relaxation

We denote by the Lipschitz constant that is determined by backtracking and by the orthogonal projection onto the corresponding set denoted by . Here, the constraint sets and are given by


Each component of the initial kernels in the case of image denoising is independently drawn from a Gaussian random variable with mean 0 and variance 1 such that . The learned optimal kernels of the denoising task are incorporated for the initialization of the kernels for deblurring. The weights of the activation functions are initialized such that around 0 for both reconstruction tasks.
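A single update of one parameter block $q$ (over-relaxation, gradient step with a backtracked Lipschitz estimate, projection) can be sketched as follows. This is a simplified variant with one extrapolated point and a fixed inertial parameter $\beta$, not a reproduction of Algorithm 1; the constraint set (zero-mean kernels in a Euclidean ball of radius 1), the quadratic test objective and all function names are assumptions for illustration. Projecting onto the zero-mean subspace and then rescaling onto the ball is the exact Euclidean projection onto their intersection, because the ball is centered at the origin, which lies in the subspace.

```python
import numpy as np

def project_zero_mean_ball(k, radius=1.0):
    """Euclidean projection onto {k : mean(k) = 0, ||k|| <= radius} (assumed set)."""
    k = k - k.mean()                      # projection onto the zero-mean subspace
    nrm = np.linalg.norm(k)
    return k if nrm <= radius else k * (radius / nrm)

def inertial_projected_step(F, grad_F, q, q_prev, beta, L, proj, grow=2.0, max_tries=60):
    """One over-relaxed projected gradient step with backtracking on L."""
    z = q + beta * (q - q_prev)           # over-relaxation
    Fz, gz = F(z), grad_F(z)
    for _ in range(max_tries):
        q_new = proj(z - gz / L)
        d = q_new - z
        # accept L once the quadratic upper bound (descent lemma) holds at q_new
        if F(q_new) <= Fz + np.sum(gz * d) + 0.5 * L * np.sum(d * d) + 1e-12:
            return q_new, L
        L *= grow
    return q_new, L

rng = np.random.default_rng(0)
target = rng.standard_normal((7, 7))

def F(k):
    return 0.5 * np.sum((k - target) ** 2)

def grad_F(k):
    return k - target

q_prev = q = np.zeros((7, 7))
L = 0.1                                   # deliberately too small: backtracking increases it
for _ in range(200):
    q_next, L = inertial_projected_step(F, grad_F, q, q_prev, 0.3, L, project_zero_mean_ball)
    q_prev, q = q, q_next
print(L, np.linalg.norm(q - project_zero_mean_ball(target)))
```

For this quadratic objective, the minimizer over the constraint set is the projection of `target`, which the iteration recovers.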

Results

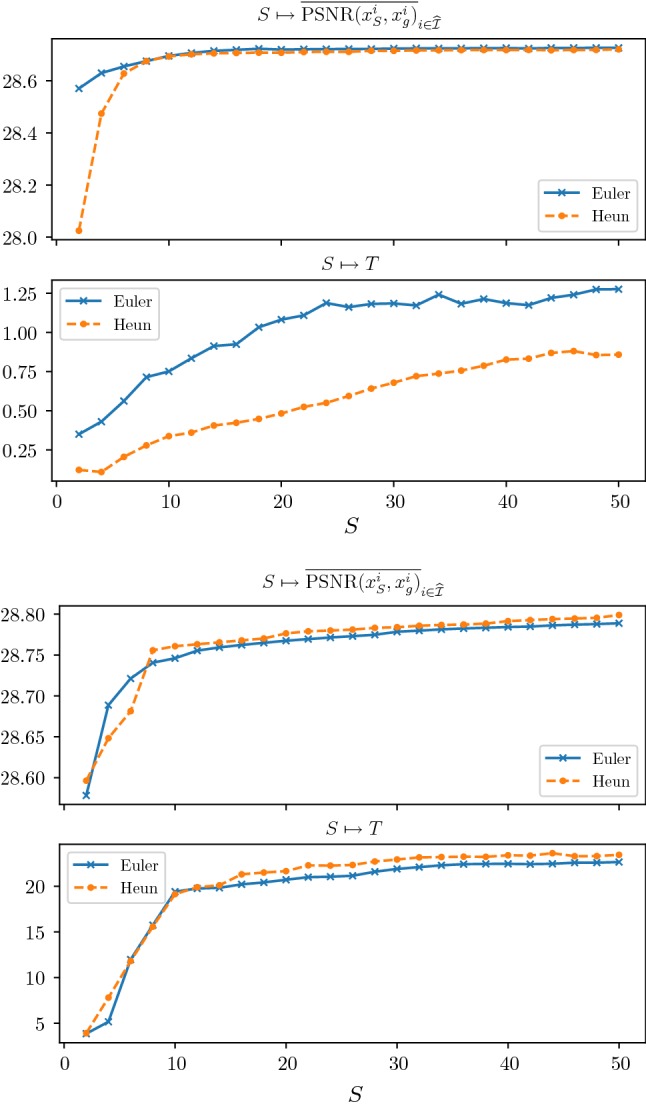

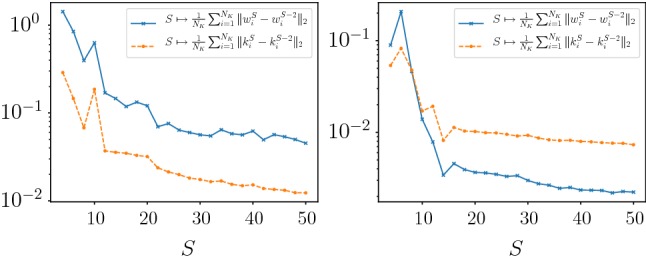

In the first numerical experiment, we train models for denoising and deblurring with kernels, a depth and training steps. Afterward, we use the calculated parameters and T as an initialization and train models for various depths and . Figure 5 depicts the average PSNR value with denoting the index set of the test images and the learned stopping time T as a function of the depth S for denoising (first two plots) and deblurring (last two plots). We observe that all plots converge for large S, with the PSNR curves increasing monotonically. Moreover, the optimal stopping time T is finite in all these cases, which empirically validates that early stopping is beneficial. Thus, we can conclude that beyond a certain depth S the performance increase in terms of PSNR is negligible, whereas a proper choice of the optimal stopping time is significant. The asymptotic value of T for increasing S in the case of image deblurring is approximately 20 times larger compared to image denoising due to the structure of the deblurring operator A. Figure 6 depicts the average -difference of consecutive convolution kernels and activation functions for denoising and deblurring as a function of the depth S. We observe that the differences decrease with larger values of S, which is consistent with the convergence of the optimal stopping time T for increasing S. Both time discretization schemes perform similarly; thus, in the following experiments, we solely present results calculated with Euler’s method due to its lower computation time.

Fig. 5.

Plots of the average PSNR value across the test set (first and third plot) as well as the learned optimal stopping time T (second and fourth plot) as a function of the depth S for denoising (top) and deblurring (bottom). All plots show the results for the explicit Euler and explicit Heun schemes

Fig. 6.

Average change of consecutive convolution kernels (solid blue) and activation functions (dotted orange) for denoising (left) and deblurring (right) in terms of the -norm (Color figure online)

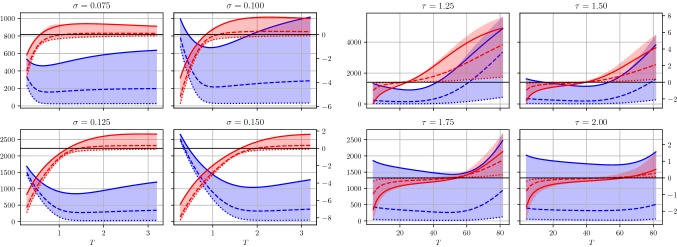

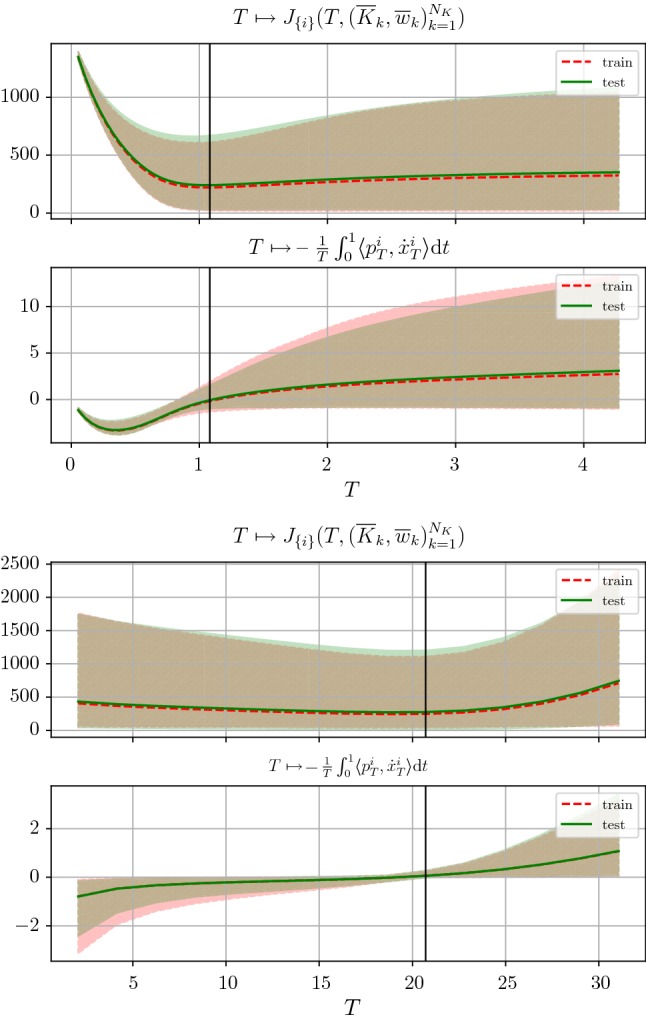

We next demonstrate the applicability of the first-order condition for the energy minimization in static variational networks using Euler’s discretization scheme with . Figure 7 depicts band plots along with the average curves among all training/test images of the functions

| 33 |

for all training and test images for denoising (first two plots) and deblurring (last two plots). We approximate the integral in the first-order condition (12) via

We observe that, for each test image, the first-order condition indicates the energy-minimizing stopping time. Note that all image-dependent stopping times are distributed around the average optimal stopping time, which is learned during training and highlighted by the black vertical line.
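Condition (12) is formulated via the adjoint state, which is not reproduced here. As an independent sanity check of what a first-order condition in T expresses, one can differentiate a cost of the form J(T) = ½‖x(T) − y‖², with y the ground truth, directly by the chain rule: dJ/dT = ⟨x(T) − y, x′(T)⟩, and a sign change from negative to nonnegative marks a local minimizer. The squared Euclidean cost, the linear toy flow x′ = −Lx (a 1D heat equation) and all function names below are assumptions for illustration, not the learned variational network.

```python
import numpy as np


def neumann_laplacian(n):
    """L = D^T D for 1D forward differences D (hypothetical stand-in for grad E)."""
    D = np.diff(np.eye(n), axis=0)
    return D.T @ D


def make_flow(L):
    """Exact solution of the linear toy flow x'(t) = -L x(t) via the eigendecomposition of L."""
    lam, V = np.linalg.eigh(L)
    return lambda x0, T: V @ (np.exp(-lam * T) * (V.T @ x0))


def cost(flow, x0, y, T):
    """J(T) = 0.5 * ||x(T) - y||^2: distance between the trajectory and the ground truth y."""
    r = flow(x0, T) - y
    return 0.5 * r @ r


def cost_derivative(flow, L, x0, y, T):
    """Chain rule: dJ/dT = <x(T) - y, x'(T)> = -<x(T) - y, L x(T)>."""
    x = flow(x0, T)
    return -(x - y) @ (L @ x)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n = 64
    y = (np.arange(n) >= n // 2).astype(float)  # piecewise-constant ground truth
    x0 = y + 0.1 * rng.standard_normal(n)       # noisy initial state
    L = neumann_laplacian(n)
    flow = make_flow(L)
    Ts = np.linspace(0.0, 20.0, 2001)
    dJ = np.array([cost_derivative(flow, L, x0, y, T) for T in Ts])
    # A sign change of dJ/dT from negative to nonnegative marks a local minimizer of J.
    crossings = np.flatnonzero((dJ[:-1] < 0) & (dJ[1:] >= 0))
    if crossings.size:
        k = crossings[0] + 1
        print(f"stopping time ~ {Ts[k]:.2f}, J = {cost(flow, x0, y, Ts[k]):.4f}, "
              f"J(0) = {cost(flow, x0, y, 0.0):.4f}")
```

Since the ground truth enters J, this check, like the adjoint-based condition, is only available on training or test data with known ground truth.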

Fig. 7.

Plots of the energies (first and third plot) and first-order conditions (second and fourth plot) for training and test set with for denoising (first pair of plots) and / for deblurring (last pair of plots). The average values across the training/test sets are indicated by the dotted red/solid green curves. The areas between the minimal and maximal function values for each T across the training/test sets are shaded in red and green, respectively (Color figure online)

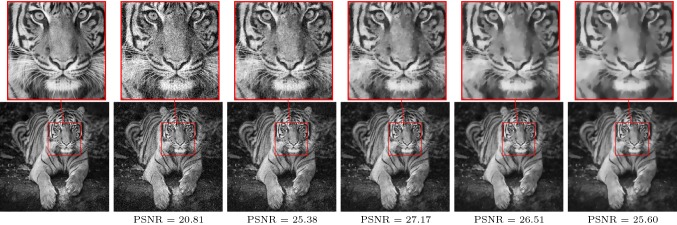

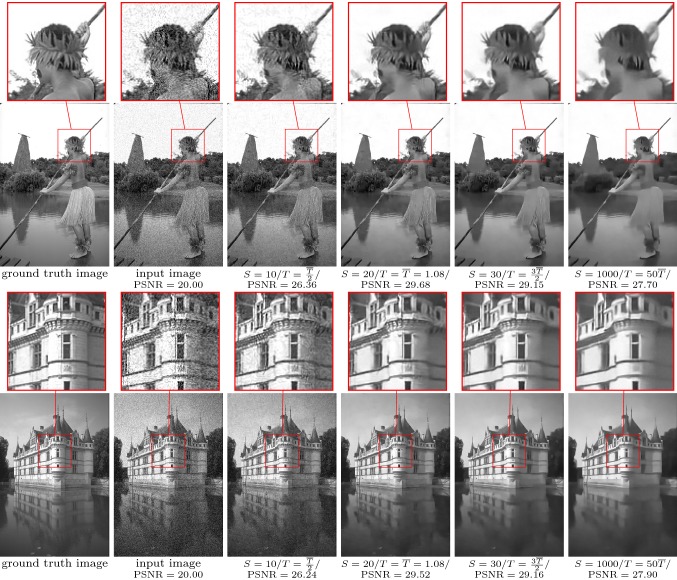

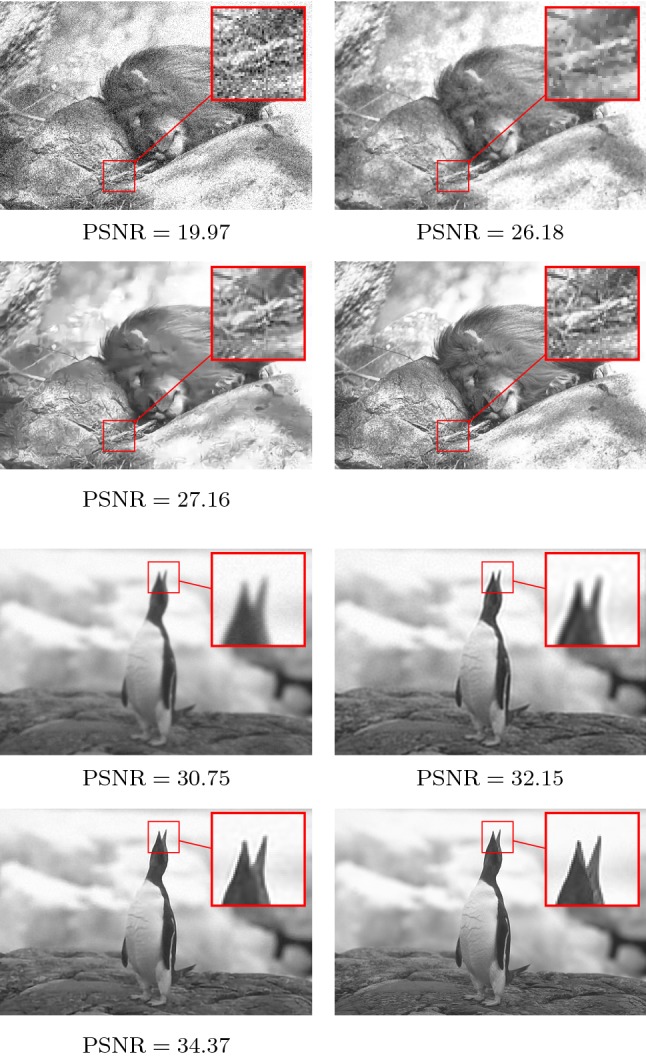

Figure 8 depicts two ground truth images (first column), the corresponding corrupted images g (second column) and the denoised images for (third to sixth column). The maximum PSNR values obtained for are 29.68 and 29.52, respectively. To ensure a sufficiently fine time discretization, we enforce , where for we set . Likewise, Fig. 9 contains the corresponding results for the deblurring task, where we again enforce a constant ratio of S and T. The PSNR value peaks around the optimal stopping time, with values of 29.52 and 27.80, respectively. We measured an average computation time of 8.687 ms for the deblurring task on an NVIDIA RTX 2080 Ti graphics card using the PyTorch machine learning framework.
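The PSNR values above follow the standard definition 10·log10(peak²/MSE). The sketch below shows this definition, the average over a test set, and a sweep over stopping times that keeps the depth S proportional to T, as described above. The intensity range [0, 1] (peak = 1), the ratio `steps_per_unit_time`, the callable `run_flow` and the toy heat flow are hypothetical placeholders, not the values or models used in the experiments.

```python
import numpy as np


def psnr(x, y, peak=1.0):
    """PSNR in dB of an estimate x w.r.t. ground truth y; peak is the maximal intensity."""
    mse = np.mean((np.asarray(x, float) - np.asarray(y, float)) ** 2)
    return np.inf if mse == 0 else 10.0 * np.log10(peak**2 / mse)


def mean_psnr(estimates, ground_truths, peak=1.0):
    """Average PSNR over a test set given as paired sequences of images."""
    return float(np.mean([psnr(x, y, peak) for x, y in zip(estimates, ground_truths)]))


def sweep_stopping_times(run_flow, x0, y, Ts, steps_per_unit_time):
    """PSNR of x(T) for each T, with the depth S chosen so that S/T stays (roughly) constant.

    run_flow(x0, T, S) is a hypothetical callable returning the state after S steps on [0, T].
    """
    scores = []
    for T in Ts:
        S = max(1, int(round(steps_per_unit_time * T)))  # enforce S proportional to T
        scores.append(psnr(run_flow(x0, T, S), y))
    best = int(np.argmax(scores))
    return Ts[best], scores


if __name__ == "__main__":
    def euler_heat(x0, T, S):
        # Hypothetical toy flow: explicit Euler for x' = -L x with the 1D Neumann Laplacian L.
        x, tau = x0.copy(), T / S
        for _ in range(S):
            d = np.diff(x)
            lap = np.zeros_like(x)
            lap[:-1] -= d  # together with the next line: (L x)_j = d_{j-1} - d_j
            lap[1:] += d
            x -= tau * lap
        return x

    rng = np.random.default_rng(0)
    y = (np.arange(128) >= 64).astype(float)
    x0 = np.clip(y + 0.1 * rng.standard_normal(128), 0.0, 1.0)
    T_best, scores = sweep_stopping_times(euler_heat, x0, y, np.linspace(0.1, 10.0, 100), 4.0)
    print(f"best T = {T_best:.1f}, PSNR = {max(scores):.2f} dB")
```

Keeping S/T fixed keeps the step size T/S roughly constant, so a longer trajectory is resolved as finely as a short one.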

Fig. 8.

From left to right: ground truth image, noisy input image (), restored images for with for image denoising. The zoom factor of the magnifying lens is 3

Fig. 9.

From left to right: ground truth image, blurry input image (, ), restored images for with for image deblurring. The zoom factor of the magnifying lens is 3

As desired, indicates the energy-minimizing time, and the average curves for the training and test sets nearly coincide, which indicates that the model generalizes to unseen test images. Although the average energy curve (33) is rather flat near the learned optimal stopping time, the proper choice of is indeed crucial, as the qualitative results in Figs. 8 and 9 show. In the case of denoising, for , the images remain noisy, whereas for too large T, local image patterns are smoothed out. For image deblurring, images computed with too small values of T remain blurry, while for ringing artifacts appear, and their intensity increases with larger T. For a corrupted image, the associated adjoint state requires knowledge of the ground truth for the terminal condition (14), which is in general not available. However, Fig. 7 shows that the learned average optimal stopping time yields the smallest expected error. Thus, for arbitrary corrupted images, is used as the stopping time.

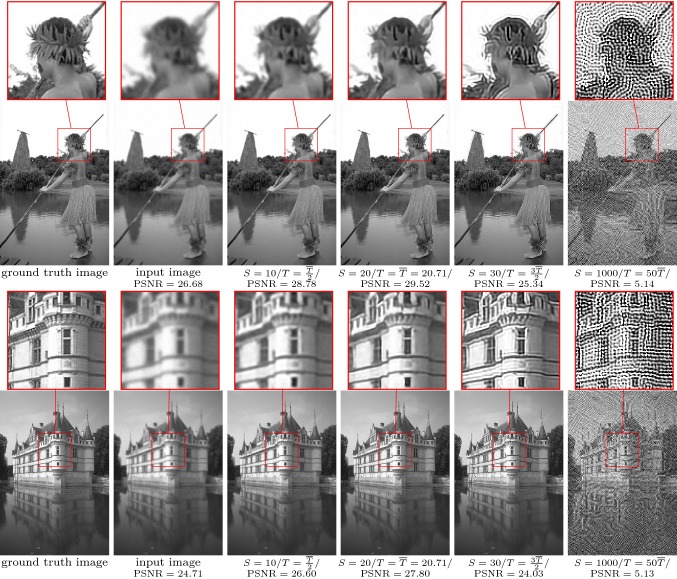

Figure 10 shows the energies (blue plots) and the first-order conditions (red plots) as functions of the stopping time T for all test images for denoising (left) and deblurring (right), degraded by noise levels and different blur strengths . In each plot, the curves of three prototypic images are shown. To properly balance the data fidelity term and the regularization energy for the denoising task, we add the factor to the data term, as is commonly motivated by Bayesian inference. For all noise levels and blur strengths , the same fixed pairs of kernels and activation functions trained with / and depth are used. Again, the first-order conditions indicate the degradation-dependent energy-minimizing stopping times. The optimal stopping time increases with the noise level and blur strength, which results from a larger distance between and and thus requires longer trajectories.

Fig. 10.

Band plots of the energies (blue plots) and first-order conditions (red plots) for image denoising (left) and image deblurring (right) and various degradation levels. In each plot, the curves of three prototypic images are shown (Color figure online)

Table 1 presents pairs of average PSNR values and optimal stopping times for the test set for denoising (top) and deblurring (bottom) for different noise levels and blur strengths . All results in the table are obtained using kernels and a depth . In both parts, the first row presents the results of optimizing all control parameters and T. In contrast, the second row shows the resulting PSNR values and optimal stopping times when only the stopping times are optimized, using kernels and activation functions pretrained for /. The third row presents the PSNR values and stopping times obtained with the reference models without any further optimization. Finally, the last row presents the results of the FISTA algorithm [3] for the TV- variational model [38] for denoising and of the IRcgls algorithm of the “IR Tools” package [15] for deblurring; neither method is data-driven, so neither requires training. For the TV- model, the weight parameter of the data term as well as the early stopping time are optimized by a simple grid search. We use the IRcgls algorithm to iteratively minimize with the conjugate gradient method until an early stopping rule based on the relative noise level of the residual is satisfied. Note that IRcgls is a Krylov subspace method designed as a general-purpose method for large-scale inverse problems (for further details see [15] and [20, Chapter 6] and the references therein). Figure 11 shows a comparison of the results of the FISTA algorithm for the TV-/the IRcgls algorithm and our method (with the optimal early stopping time) for /.
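For readers unfamiliar with CGLS, the following is a minimal NumPy sketch of conjugate gradients for least squares with the discrepancy principle as the early stopping rule: iterate until the residual norm drops below η times the relative noise level times ‖b‖. This is not the IR Tools implementation; the safety factor `eta = 1.01`, the zero initial guess, the assumption that the relative noise level is known, and the toy blur problem are illustrative choices.

```python
import numpy as np


def cgls_discrepancy(A, b, noise_level, eta=1.01, max_iter=500):
    """CGLS for min_x ||A x - b||^2 with early stopping by the discrepancy principle.

    Stops at the first iterate with ||b - A x_k|| <= eta * noise_level * ||b||, where
    noise_level is the (assumed known) relative noise level ||e|| / ||b||.
    Returns the iterate and the number of iterations performed.
    """
    x = np.zeros(A.shape[1])
    r = np.array(b, dtype=float)   # residual b - A x for the initial guess x = 0
    s = A.T @ r                    # residual of the normal equations
    p = s.copy()                   # search direction
    gamma = s @ s
    tol = eta * noise_level * np.linalg.norm(b)
    for k in range(1, max_iter + 1):
        q = A @ p
        alpha = gamma / (q @ q)
        x += alpha * p
        r -= alpha * q
        if np.linalg.norm(r) <= tol:  # discrepancy principle satisfied: stop early
            return x, k
        s = A.T @ r
        gamma_new = s @ s
        p = s + (gamma_new / gamma) * p
        gamma = gamma_new
    return x, max_iter


if __name__ == "__main__":
    # Hypothetical 1D deblurring problem: Gaussian blur matrix, box signal, 2% relative noise.
    rng = np.random.default_rng(0)
    n = 100
    t = np.arange(n)
    A = np.exp(-0.5 * ((t[:, None] - t[None, :]) / 2.0) ** 2)
    A /= A.sum(axis=1, keepdims=True)
    x_true = (np.abs(t - n // 2) < 20).astype(float)
    e = rng.standard_normal(n)
    e *= 0.02 * np.linalg.norm(A @ x_true) / np.linalg.norm(e)
    b = A @ x_true + e
    x, k = cgls_discrepancy(A, b, np.linalg.norm(e) / np.linalg.norm(b))
    rel_err = np.linalg.norm(x - x_true) / np.linalg.norm(x_true)
    print(f"stopped after {k} iterations, relative error {rel_err:.3f}")
```

Here the iteration count plays the role of the regularization parameter, which is the same early stopping principle that the learned stopping time T exploits.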

Table 1.

Average PSNR value of the test set for image denoising/deblurring with different degradation levels along with the optimal stopping time

| Image denoising | PSNR | T | PSNR | T | PSNR | T | PSNR | T |
|---|---|---|---|---|---|---|---|---|
| Full optimization of all controls | 30.05 | 0.724 | 28.72 | 1.082 | 27.72 | 1.445 | 26.95 | 1.433 |
| Optimization only of | 30.00 | 0.757 | – | – | 27.73 | 1.514 | 26.95 | 2.055 |
| Optimization only for | 29.74 | 1.082 | – | – | 26.51 | 1.082 | 22.73 | 1.082 |
| TV- | 28.60 | – | 27.32 | – | 26.38 | – | 25.66 | – |

| Image deblurring | PSNR | T | PSNR | T | PSNR | T | PSNR | T |
|---|---|---|---|---|---|---|---|---|
| Full optimization of all controls | 29.95 | 39.86 | 28.76 | 37.78 | 27.87 | 40.60 | 27.13 | 40.01 |
| Optimization only of | 29.73 | 23.86 | – | – | 27.69 | 47.72 | 26.71 | 51.70 |
| Optimization only for | 27.75 | 37.78 | – | – | 27.55 | 37.78 | 26.59 | 37.78 |
| IRcgls | 28.00 | – | 27.05 | – | 26.37 | – | 25.82 | – |

The first rows of each table present the results obtained by the optimization of all control parameters. The second rows show the results calculated with fixed , which were pretrained for /. In the third rows, we evaluate the models trained for / on different degradation levels without any further optimization. The last rows present PSNR values obtained with the TV- model [38] using a dual accelerated gradient descent and the IRcgls [15] algorithm, respectively
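The dual accelerated gradient descent used for the TV model can be illustrated by FISTA [3] applied to the dual of the ROF problem [38]. The sketch below is a 1D NumPy version for brevity; for images, D would be a 2D finite-difference operator and, for isotropic TV, the box projection would become a pointwise projection onto Euclidean balls. The weight `lam`, the iteration count and the 1D setting are illustrative assumptions, and early stopping would correspond to reducing `n_iter`.

```python
import numpy as np


def tv_denoise_1d_fista(g, lam, n_iter=3000):
    """1D TV-L2 (ROF) denoising, min_x 0.5*||x - g||^2 + lam*||D x||_1, via FISTA on the dual.

    Dual problem: min_{|p_i| <= lam} 0.5*||g - D^T p||^2, with primal recovery x = g - D^T p.
    """
    g = np.asarray(g, dtype=float)

    def D(x):   # forward differences, length n - 1
        return np.diff(x)

    def Dt(p):  # adjoint of D: (D^T p)_j = p_{j-1} - p_j with zero padding
        return np.concatenate(([0.0], p)) - np.concatenate((p, [0.0]))

    step = 0.25                  # 1 / Lipschitz constant, since ||D D^T|| <= 4
    p = np.zeros(g.size - 1)
    z, t = p.copy(), 1.0
    for _ in range(n_iter):
        grad = -D(g - Dt(z))                         # gradient of the dual objective at z
        p_new = np.clip(z - step * grad, -lam, lam)  # projection onto the box |p_i| <= lam
        t_new = 0.5 * (1.0 + np.sqrt(1.0 + 4.0 * t * t))
        z = p_new + ((t - 1.0) / t_new) * (p_new - p)  # FISTA extrapolation
        p, t = p_new, t_new
    return g - Dt(p)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    clean = (np.arange(200) >= 100).astype(float)
    noisy = clean + 0.1 * rng.standard_normal(200)
    restored = tv_denoise_1d_fista(noisy, lam=0.2, n_iter=500)
    print(f"max error noisy: {np.abs(noisy - clean).max():.3f}, "
          f"restored: {np.abs(restored - clean).max():.3f}")
```

Working on the dual avoids the nonsmooth TV term in the primal: the dual objective is smooth and the constraint set is a simple box, so each FISTA step needs only a gradient and a clipping operation.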

Fig. 11.

Corrupted input image (first/third row, left), restored images using the FISTA/IRcgls algorithm (first/third row, right), and the proposed method (second/fourth row, left) as well as the ground truth image (second/fourth row, right) for denoising (upper quadruple) and deblurring (lower quadruple). The zoom factor of the magnifying lens is 3

In Table 1, the PSNR values of the first and second rows are almost identical for image denoising despite differing optimal stopping times. Consequently, a model pretrained for a specific noise level can easily be adapted to other noise levels by modifying only the optimal stopping time. Neglecting the optimization of the stopping time leads to inferior results, as shown in the third rows, where the reference model was used for all degradation levels without any change. In the case of image deblurring, however, the model benefits from a full optimization of all controls, which is caused by the dependence of A on the blur strength. For the noise level 0.1, we obtain an average PSNR value of 28.72, which is on par with the corresponding results of [10, Table II]. We emphasize that their work performs a costly full minimization of an energy functional, whereas we only require a depth to compute comparable results.

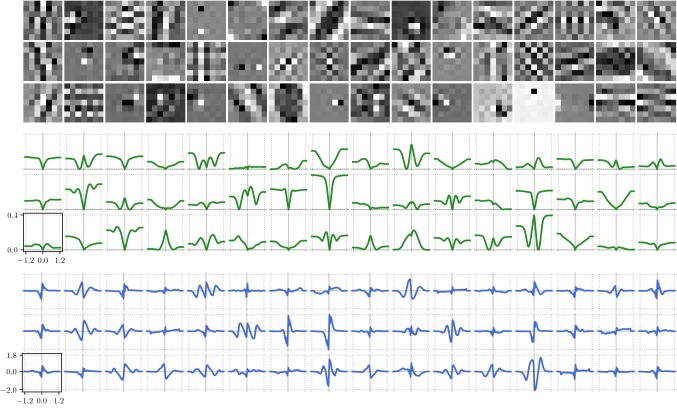

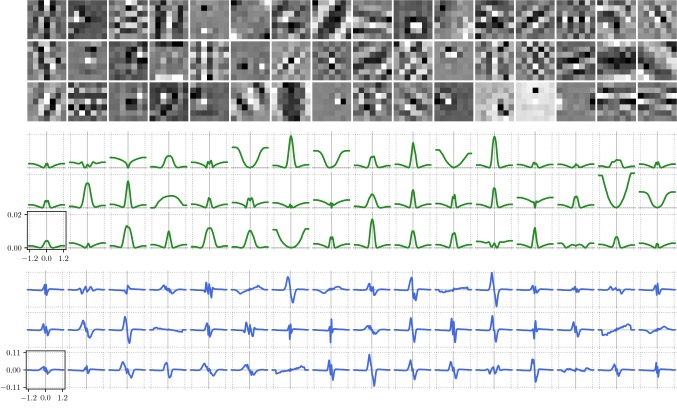

For the sake of completeness, Fig. 12 (denoising) and Fig. 13 (deblurring) show the resulting triplets of kernels (top), potential functions (middle) and activation functions (bottom) for a depth . The axes are scaled identically among all potential functions and among all activation functions, respectively. The potential functions are computed by numerical integration of the learned activation functions, where we choose the integration constant such that every potential function is bounded from below by 0. We observe a large variety of kernel structures, including bipolar forward operators in different orientations (e.g., 5th kernel in first row, 8th kernel in third row) and pattern kernels representing prototypic image textures (e.g., kernels in first column). Likewise, the learned potential functions can be assigned to several representative classes of common regularization functions, for instance, truncated total variation (8th function in second row of Fig. 12), truncated concave (4th function in third row of Fig. 12), double-well potential (10th function in first row of Fig. 12) or “negative Mexican hat” (8th function in third row of Fig. 12). The associated kernels of both tasks nearly coincide, whereas the potential and activation functions differ significantly. The activation functions for denoising tend to have higher amplitudes than those for deblurring, which results in a relatively stronger weighting of the regularizer for denoising.
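A minimal sketch of that post-processing step, assuming each activation function is available as samples φ(y) on a sorted grid (the grid and the test activation tanh below are hypothetical): integrate with the cumulative trapezoidal rule and shift the result so that its minimum over the grid is 0.

```python
import numpy as np


def potential_from_activation(y, phi):
    """Integrate samples phi(y) of an activation to a potential rho with rho' = phi.

    Uses the cumulative trapezoidal rule on the (sorted) grid y and fixes the integration
    constant such that min(rho) = 0, i.e. rho is bounded from below by 0 on the grid.
    """
    y = np.asarray(y, dtype=float)
    phi = np.asarray(phi, dtype=float)
    increments = 0.5 * (phi[1:] + phi[:-1]) * np.diff(y)  # trapezoid on each cell
    rho = np.concatenate(([0.0], np.cumsum(increments)))   # rho(y_0) = 0 before shifting
    return rho - rho.min()


if __name__ == "__main__":
    y = np.linspace(-3.0, 3.0, 601)
    # Hypothetical activation tanh(y); its exact potential log(cosh(y)) has minimum 0 at y = 0.
    rho = potential_from_activation(y, np.tanh(y))
    print(f"max deviation from log(cosh(y)): {np.abs(rho - np.log(np.cosh(y))).max():.1e}")
```

The shift by the minimum only fixes the additive constant; it does not change the gradient of the regularizer, which depends on the activation functions alone.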

Fig. 12.

Triplets of -kernels (top), potential functions (middle) and activation functions (bottom) learned for image denoising

Fig. 13.

Triplets of -kernels (top), potential functions (middle) and activation functions (bottom) learned for image deblurring

Spectral Analysis of the Learned Regularizers

Finally, to gain intuition about the learned regularizer, we perform a nonlinear eigenvalue analysis [16] of the gradient of the Field-of-Experts regularizer learned for and . To this end, we compute several generalized eigenpairs satisfying

for . Note that by omitting the data term, the forward Euler scheme (27) applied to the generalized eigenfunctions reduces to

| 34 |

where the contrast factor determines the global intensity change of the eigenfunction. We point out that due to the nonlinearity of the eigenvalue problem such a formula only holds locally for each iteration of the scheme.
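The reduction can be written out as follows under stated assumptions, since the corresponding formulas are not legible in this text: R denotes the learned Field-of-Experts regularizer (notation chosen for this sketch), a generalized eigenpair (λ, v) satisfies ∇R(v) = λ v, and the explicit Euler step without data term uses the step size T/S.

```latex
\begin{aligned}
x_{s+1} &= x_s - \tfrac{T}{S}\,\nabla R(x_s), \qquad x_s = v,\\
x_{s+1} &= v - \tfrac{T}{S}\,\lambda\, v = \Bigl(1 - \tfrac{T}{S}\,\lambda\Bigr)\, v .
\end{aligned}
```

Under these assumptions, the contrast factor is 1 − (T/S)λ: positive eigenvalues (with λ < S/T) reduce the contrast, and negative eigenvalues increase it. Unless ∇R is positively 1-homogeneous, the rescaled function (1 − (T/S)λ)v is in general no longer an eigenfunction, which is why the relation holds only for a single step.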

We compute generalized eigenpairs of size by solving

| 35 |

for all , where

denotes the generalized Rayleigh quotient, which is derived by minimizing (35) with respect to . The eigenfunctions are computed using an accelerated gradient descent with step size control [32]. All eigenfunctions are initialized with randomly chosen image patches of the test image data set, from which we subtract the mean. Moreover, in order to minimize the influence of the image boundary, we scale the image intensity values with a spatial Gaussian kernel. We run the algorithm for iterations, which is sufficient for reaching a residual of approximately for each eigenpair.
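To illustrate (35) and the Rayleigh quotient, here is a hedged NumPy sketch. It assumes that (35) is the squared residual ½‖∇R(v) − Λ(v) v‖², so that minimizing over λ gives Λ(v) = ⟨∇R(v), v⟩ / ‖v‖². For a self-contained example, the learned regularizer is replaced by a hypothetical quadratic one, R(v) = ½‖Dv‖² with 1D forward differences D, so that ∇R(v) = DᵀDv. Plain gradient descent with projection onto the unit sphere stands in for the accelerated scheme with step size control [32]; the unit-norm constraint is an assumption that excludes the trivial solution v = 0.

```python
import numpy as np


def rayleigh_quotient(grad_R, v):
    """Generalized Rayleigh quotient: the lambda minimizing ||grad_R(v) - lambda*v||^2."""
    return (grad_R(v) @ v) / (v @ v)


def find_eigenpair(grad_R, jac_T, v0, step=1.0 / 16.0, n_iter=5000):
    """Projected gradient descent on f(v) = 0.5*||grad_R(v) - Lambda(v) v||^2 with ||v|| = 1.

    jac_T(v, w) must return J(v)^T w, where J is the Jacobian of grad_R. Because Lambda(v)
    minimizes the residual over lambda, its derivative drops out of the gradient:
    grad f(v) = J(v)^T r - Lambda(v) r with r = grad_R(v) - Lambda(v) v.
    """
    v = v0 - v0.mean()          # subtract the mean, as for the image patches
    v = v / np.linalg.norm(v)   # assumption: unit norm, which excludes the trivial v = 0
    for _ in range(n_iter):
        lam = rayleigh_quotient(grad_R, v)
        r = grad_R(v) - lam * v
        v = v - step * (jac_T(v, r) - lam * r)
        v = v / np.linalg.norm(v)
    lam = rayleigh_quotient(grad_R, v)
    return lam, v, np.linalg.norm(grad_R(v) - lam * v)


if __name__ == "__main__":
    # Hypothetical quadratic regularizer R(v) = 0.5*||D v||^2, so grad_R(v) = D^T D v.
    n = 8
    D = np.diff(np.eye(n), axis=0)
    L = D.T @ D
    lam, v, res = find_eigenpair(lambda x: L @ x, lambda x, w: L.T @ w,
                                 np.random.default_rng(0).standard_normal(n))
    print(f"lambda = {lam:.6f}, residual = {res:.1e}")
    print("exact eigenvalues:", np.round(np.linalg.eigvalsh(L), 6))
```

For a learned nonlinear regularizer, `jac_T` would be a vector-Jacobian product, which automatic differentiation frameworks such as PyTorch provide; the step size would then need to be adapted, for instance by a line search.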

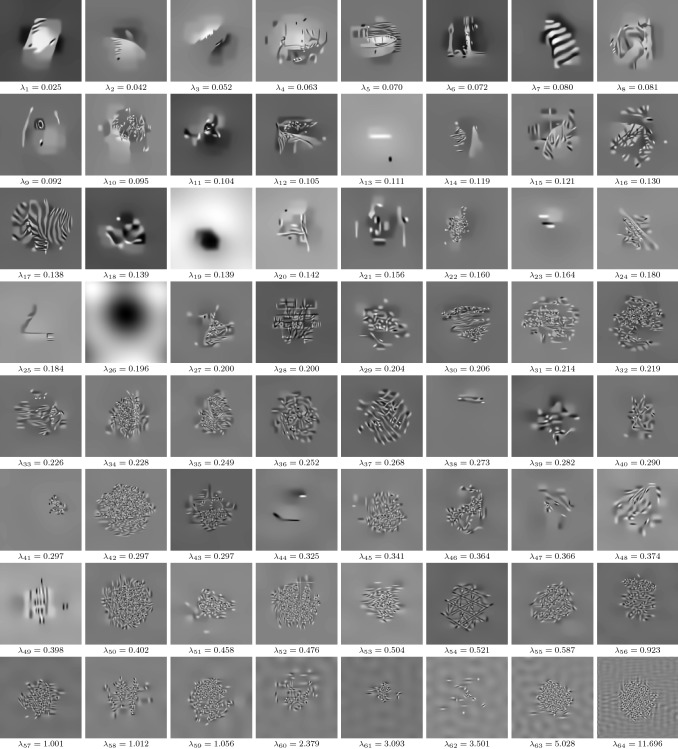

Figure 14 depicts the resulting pairs of eigenfunctions and eigenvalues for image denoising. Eigenfunctions corresponding to smaller eigenvalues in general represent more complex and smoother image structures. In particular, the first eigenfunctions can be interpreted as cartoon-like image structures with clearly separable interfaces. Most of the eigenfunctions associated with larger eigenvalues exhibit texture-like patterns of progressively increasing frequency. Finally, wave and noise structures are present in the eigenfunctions with the highest eigenvalues.

Fig. 14.

eigenpairs for image denoising, where all eigenfunctions have the resolution and the intensity of each eigenfunction is adjusted to [0, 1]

We remark that all eigenvalues lie in the interval . Since , the contrast factors in (34) lie in the interval [0.368, 0.999], which shows that the regularizer tends to decrease the contrast. Formula (34) also reveals that eigenfunctions with contrast factors close to 1 are preserved over several iterations. In summary, the learned regularizer tends to reduce the contrast of high-frequency noise patterns, but preserves the contrast of texture- and structure-like patterns.
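To make the argument about preservation over several iterations concrete, here is a small Python illustration under an idealization: the same contrast factor is applied repeatedly, although (34) only describes a single step because the eigenvalue problem is nonlinear. The factors are the interval endpoints stated above, plus a factor above 1 such as the value 1.030 reported for deblurring in the next paragraph.

```python
def intensity_after(contrast_factor, iterations):
    """Relative intensity of an eigenfunction after repeatedly applying one contrast factor.

    Idealized: treats the factor as constant over all iterations, whereas formula (34)
    only describes a single step of the scheme.
    """
    return contrast_factor ** iterations


if __name__ == "__main__":
    # Endpoints of the contrast-factor intervals stated in the text.
    for c in (0.368, 0.999, 1.030):
        print(c, [round(intensity_after(c, k), 4) for k in (1, 5, 10)])
```

After five iterations, a factor of 0.368 leaves less than 1% of the initial intensity (0.368^5 ≈ 0.0067), whereas a factor of 0.999 retains about 99.5%, and a factor above 1 amplifies the pattern.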

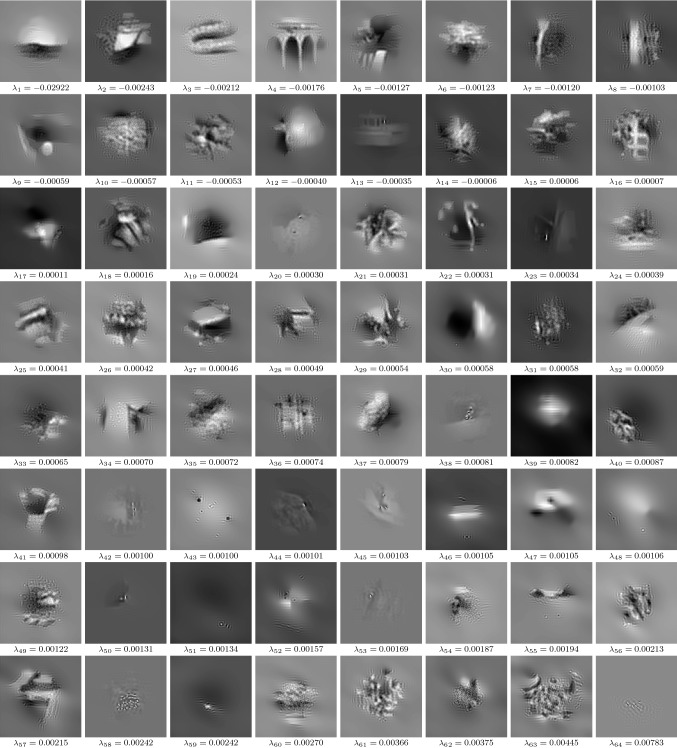

Figure 15 shows the eigenpairs for the deblurring task. All eigenvalues are relatively small and distributed around 0, so the corresponding contrast factors lie in the interval [0.992, 1.030]. Therefore, the learned regularizer can both decrease and increase the contrast. Moreover, most eigenfunctions are composed of smooth structures with a distinct overshooting behavior near image boundaries, which indicates that the learned regularizer tends to sharpen images.

Fig. 15.

eigenpairs for image deblurring, where all eigenfunctions have the resolution and the intensity of each eigenfunction is adjusted to [0, 1]

Conclusion

Starting from a parametric and autonomous gradient flow perspective of variational methods, we explicitly modeled the stopping time as a control parameter in an optimal control problem. Using a Lagrangian approach, we derived a first-order condition that automates the computation of the energy-minimizing optimal stopping time. A forward Euler discretization of the gradient flow led to static variational networks. Numerical experiments confirmed that a proper choice of the stopping time is of vital importance for the image restoration tasks in terms of PSNR. A nonlinear eigenvalue analysis of the gradient of the learned Field-of-Experts regularizer revealed interesting properties of its local regularization behavior. A comprehensive long-term spectral analysis in continuous time is left for future research.

Another direction for future research is the extension to dynamic variational networks, in which the kernels and activation functions evolve in time. However, a major issue of this extension arises from the continuation of the stopping time beyond its optimal point.

Acknowledgements

Open access funding provided by Graz University of Technology. We acknowledge support from the European Research Council under the Horizon 2020 program, ERC starting Grant HOMOVIS (No. 640156) and ERC advanced Grant OCLOC (No. 668998).

Biographies

Alexander Effland

received his BSc (2006-2010) in economics and his BSc (2007-2010), MSc (2010-2012) and PhD (2013-2017) in mathematics from the University of Bonn. After a Postdoc position at the University of Bonn, he moved to Graz University of Technology, where he is currently a postdoctoral researcher at the Institute of Computer Graphics and Vision. The focus of his research is mathematical image processing, calculus of variations and deep learning in computer vision.

Erich Kobler

received his BSc (2009-2013) and his MSc (2013-2015) in Information and Computer Engineering (Telematik) with a specialization in robotics and computer vision from Graz University of Technology. He is currently a PhD student in the group of Prof. Thomas Pock at the Institute of Computer Graphics and Vision, Graz University of Technology. His current research interests include computer vision, image processing, inverse problems, medical imaging, and machine learning.

Karl Kunisch

is a professor and head of the Department of Mathematics at the University of Graz, and Scientific Director of the Radon Institute of the Austrian Academy of Sciences in Linz. He received his PhD and Habilitation at the Technical University of Graz in 1978 and 1980, respectively. His research interests include optimization and optimal control, inverse problems and mathematical imaging, numerical analysis and applications, currently focusing on topics in the life sciences. Karl Kunisch spent three years at the Lefschetz Center for Dynamical Systems at Brown University, USA, held visiting positions at INRIA Rocquencourt and the Université Paris-Dauphine, and was a consultant at ICASE, NASA Langley, USA. Before joining the faculty at the University of Graz, he was professor of numerical mathematics at the Technical University of Berlin. Karl Kunisch is the author of two monographs and more than 340 papers. He is an editor of numerous journals, including SIAM Numerical Analysis and SIAM Optimization and Optimal Control, and the Journal of the European Mathematical Society.

Thomas Pock

received his MSc (1998-2004) and his PhD (2005-2008) in Computer Engineering (Telematik) from Graz University of Technology. After a Postdoc position at the University of Bonn, he moved back to Graz University of Technology, where he was an Assistant Professor at the Institute of Computer Graphics and Vision. In 2013, Thomas Pock received the START Prize of the Austrian Science Fund (FWF) and the German Pattern Recognition Award of the German Association for Pattern Recognition (DAGM), and in 2014, he received a Starting Grant from the European Research Council (ERC). Since June 2014, Thomas Pock has been a Professor of Computer Science at Graz University of Technology. The focus of his research is the development of mathematical models for computer vision and image processing as well as the development of efficient convex and non-smooth optimization algorithms.

Footnotes

Image by Nichollas Harrison (CC BY-SA 3.0).

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Alexander Effland, Email: alexander.effland@icg.tugraz.at.

Erich Kobler, Email: erich.kobler@icg.tugraz.at.

Karl Kunisch, Email: karl.kunisch@uni-graz.at.

Thomas Pock, Email: pock@icg.tugraz.at.

References

- 1.Ambrosio L, Gigli N, Savaré G. Gradient Flows in Metric Spaces and in the Space of Probability Measures. Basel: Birkhäuser; 2008.

- 2.Atkinson K. An Introduction to Numerical Analysis. 2. Hoboken: Wiley; 1989.

- 3.Beck A, Teboulle M. A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J. Imag. Sci. 2009;2:183–202. doi: 10.1137/080716542.

- 4.Benning, M., Celledoni, E., Ehrhardt, M., Owren, B., Schönlieb, C.-B.: Deep learning as optimal control problems: models and numerical methods. arXiv:1904.05657 (2019)

- 5.Binder A, Hanke M, Scherzer O. On the Landweber iteration for nonlinear ill-posed problems. J. Inv. Ill Posed Probl. 1996;4(5):381–390.

- 6.Butcher JC. Numerical Methods for Ordinary Differential Equations. 2. Hoboken: Wiley; 2008.

- 7.Chang, B., Meng, L., Haber, E., Ruthotto, L., Begert, D., Holtham, E.: Reversible architectures for arbitrarily deep residual neural networks. In: AAAI Conference on Artificial Intelligence (2018)

- 8.Chambolle, A., Caselles, V., Novaga, M., Cremers, D., Pock, T.: An introduction to total variation for image analysis. In: Theoretical Foundations and Numerical Methods for Sparse Recovery. Radon Series on Computational and Applied Mathematics, vol. 9, pp. 263–340 (2009)

- 9.Chambolle A, Pock T. An introduction to continuous optimization for imaging. Acta Numer. 2016;25:161–319. doi: 10.1017/S096249291600009X.

- 10.Chen Y, Ranftl R, Pock T. Insights into analysis operator learning: from patch-based sparse models to higher-order MRFs. IEEE Trans. Image Process. 2014;99(1):1060–1072. doi: 10.1109/TIP.2014.2299065.

- 11.Chen Y, Pock T. Trainable nonlinear reaction diffusion: a flexible framework for fast and effective image restoration. IEEE Trans. Pattern Anal. Mach. Intell. 2017;39(6):1256–1272. doi: 10.1109/TPAMI.2016.2596743.

- 12.Weinan E. A proposal on machine learning via dynamical systems. Commun. Math. Stat. 2017;5:1–11.

- 13.Weinan E, Han J, Li Q. A mean-field optimal control formulation of deep learning. Res. Math. Sci. 2019;6:10. doi: 10.1007/s40687-018-0172-y.

- 14.Engl HW, Hanke M, Neubauer A. Regularization of inverse problems. Volume 375 of Mathematics and its Applications. Dordrecht: Kluwer Academic Publishers Group; 1996.

- 15.Gazzola S, Hansen PC, Nagy JG. IR Tools: a MATLAB package of iterative regularization methods and large-scale test problems. Numer. Algorithms. 2018;81(3):773–811. doi: 10.1007/s11075-018-0570-7.

- 16.Gilboa G. Nonlinear Eigenproblems in Image Processing and Computer Vision. Berlin: Springer; 2018.

- 17.Haber E, Ruthotto L. Stable architectures for deep neural networks. Inverse Probl. 2017;34(1):014004. doi: 10.1088/1361-6420/aa9a90.

- 18.Hale JK. Ordinary Differential Equations. New York: Dover Publications; 1980.

- 19.Hammernik K, Klatzer T, Kobler E, Recht MP, Sodickson DK, Pock T, Knoll F. Learning a variational network for reconstruction of accelerated MRI data. Magn. Resonance Med. 2018;79(6):3055–3071. doi: 10.1002/mrm.26977.

- 20.Hansen, P. C.: Discrete inverse problems. In: Fundamentals of Algorithms, vol. 7. Society for Industrial and Applied Mathematics, Philadelphia, PA (2010)

- 21.He, K., Zhang, X., Ren, S., Sun, J.: Deep Residual Learning for Image Recognition. In: IEEE Conference on Computer Vision and Pattern Recognition, pp. 770–778 (2016)

- 22.Ito, K., Kunisch, K.: Lagrange multiplier approach to variational problems and applications. In: Advances in Design and Control, vol. 15. Society for Industrial and Applied Mathematics, Philadelphia, PA (2008)

- 23.Kaltenbacher B, Neubauer A, Scherzer O. Iterative Regularization Methods for Nonlinear Ill-Posed Problems. Berlin: Walter de Gruyter GmbH & Co. KG; 2008.

- 24.Kobler, E., Klatzer, T., Hammernik, K., Pock, T.: Variational networks: Connecting variational methods and deep learning. In: Pattern Recognition, pp. 281–293. Springer, Berlin (2017)

- 25.Landweber L. An iteration formula for Fredholm integral equations of the first kind. Am. J. Math. 1951;73(3):615–624. doi: 10.2307/2372313.

- 26.LeCun Y, Bengio Y, Hinton G. Deep learning. Nature. 2015;521:436–444. doi: 10.1038/nature14539.

- 27.Li Q, Chen L, Tai C, E W. Maximum principle based algorithms for deep learning. J. Mach. Learn. Res. 2018;18:1–29.

- 28.Li, Q., Hao, S.: An optimal control approach to deep learning and applications to discrete-weight neural networks. arXiv:1803.01299 (2018)

- 29.Martin, D., Fowlkes, C., Tal, D., Malik, J.: A database of human segmented natural images and its application to evaluating segmentation algorithms and measuring ecological statistics. In: International Conference on Computer Vision (2001)

- 30.Matet, S., Rosasco, L., Villa, S., Vu, B. L.: Don’t relax: early stopping for convex regularization. arXiv:1707.05422 (2017)

- 31.Perona P, Malik J. Scale-space and edge detection using anisotropic diffusion. IEEE Trans. Pattern Anal. Mach. Intell. 1990;12(7):629–639. doi: 10.1109/34.56205.

- 32.Pock T, Sabach S. Inertial proximal alternating linearized minimization (iPALM) for nonconvex and nonsmooth problems. SIAM J. Imag. Sci. 2016;9(4):1756–1787. doi: 10.1137/16M1064064.

- 33.Prechelt L. Early Stopping—But When? In Neural Networks: Tricks of the Trade. 2. Berlin: Springer; 2012. pp. 53–67.

- 34.Rieder A. Keine Probleme mit inversen Problemen. Braunschweig: Friedr. Vieweg & Sohn; 2003.

- 35.Rosasco L, Villa S. Learning with incremental iterative regularization. Adv. Neural Inf. Process. Syst. 2015;28:1630–1638.

- 36.Roth S, Black MJ. Fields of experts. Int. J. Comput. Vis. 2009;82(2):205–229. doi: 10.1007/s11263-008-0197-6.

- 37.Raskutti, G., Wainwright, M. J., Yu, B.: Early stopping for non-parametric regression: an optimal data-dependent stopping rule. In: 49th Annual Allerton Conference on Communication, Control, and Computing (Allerton), pp. 1318–1325 (2011)

- 38.Rudin L, Osher S, Fatemi E. Nonlinear total variation based noise removal algorithms. Phys. D. 1992;60:259–268. doi: 10.1016/0167-2789(92)90242-F.

- 39.Schulter, S., Leistner, C., Bischof, H.: Fast and accurate image upscaling with super-resolution forests. In: IEEE Conference on Computer Vision and Pattern Recognition, pp. 3791–3799 (2015)

- 40.Teschl G. Ordinary Differential Equations and Dynamical Systems. Providence: American Mathematical Society; 2012.

- 41.Tikhonov AN. Nonlinear Ill-Posed Problems. Netherlands: Springer; 1998.

- 42.Yao Y, Rosasco L, Caponnetto A. On early stopping in gradient descent learning. Constr. Approx. 2007;26(2):289–315. doi: 10.1007/s00365-006-0663-2.

- 43.Zeidler E. Nonlinear Functional Analysis and its Applications III: Variational Methods and Optimization. New York: Springer; 1985.

- 44.Zhang,Y., Hofmann, B.: On the second order asymptotical regularization of linear ill-posed inverse problems. Appl. Anal. (2018). 10.1080/00036811.2018.1517412

- 45.Zhang T, Yu B. Boosting with early stopping: convergence and consistency. Ann. Stat. 2005;33(4):1538–1579. doi: 10.1214/009053605000000255.