Abstract

A variational model for learning convolutional image atoms from corrupted and/or incomplete data is introduced and analyzed both in function space and numerically. Building on lifting and relaxation strategies, the proposed approach is convex and allows for simultaneous image reconstruction and atom learning in a general inverse problems context. Further, motivated by improved numerical performance, a semi-convex variant is also included in the analysis and the experiments of the paper. For both settings, fundamental analytical properties are proven in a continuous setting, which in particular ensure well-posedness and stability results for inverse problems. Exploiting convexity, globally optimal solutions are further computed numerically for applications with incomplete, noisy and blurry data, and numerical results are shown.

Keywords: Variational methods, Learning approaches, Inverse problems, Functional lifting, Convex relaxation, Convolutional Lasso, Machine learning, Texture reconstruction

Introduction

An important task in image processing is to achieve an appropriate regularization or smoothing of images or image-related data. In particular, this is indispensable for most application-driven problems in the field, such as denoising, inpainting, reconstruction, segmentation, registration or classification. Also beyond imaging, for general problem settings in the field of inverse problems, an appropriate regularization of unknowns plays a central role as it allows for a stable inversion procedure.

Variational methods and partial-differential-equation-based methods can now be regarded as classical regularization approaches of mathematical image processing (see, for instance, [5, 42, 54, 62]). An advantage of such methods is the existence of a well-established mathematical theory and, in particular for variational methods, a direct applicability to general inverse problems with provable stability and recovery guarantees [31, 32]. While piecewise smooth images in particular are typically well described by such methods, their performance for oscillatory or texture-like structures is often limited to prescribed patterns (see, for instance, [27, 33]).

Data-adaptive methods such as patch- or dictionary-based methods (see, for instance, [2, 13, 22, 23, 37]) on the other hand are able to exploit redundant structures in images independent of an a priori description and are, at least for some specific tasks, often superior to variational- and PDE-based methods. In particular, methods based on (deep) convolutional neural networks are inherently data adaptive (though data adaptation takes place in a preprocessing/learning step) and have advanced the state of the art significantly in many typical imaging applications in the past years [38].

Still, for data-adaptive approaches, neither a direct applicability to general inverse problems nor a corresponding mathematical understanding of stability results or recovery guarantees is available to the extent they are for variational methods. One reason for this lies in the fact that, for both classical patch- or dictionary-based methods and neural-network-based approaches, data adaptiveness (either online or in a training step) is inherently connected to the minimization of a non-convex energy. Consequently, standard numerical approaches such as alternating minimization or (stochastic) gradient descent can, at best, only be guaranteed to deliver stationary points of the energy and hence suffer from the risk of delivering suboptimal solutions.

The aim of this work is to provide a step toward bridging the gap between data-adaptive methods and variational approaches. As a motivation, consider a convolutional Lasso problem [39, 65] of the form

$$\min_{(c_i)_{i=1}^k,\,(p_i)_{i=1}^k}\ \mathcal{D}\Big(\sum_{i=1}^k c_i * p_i,\ f_0\Big) + \sum_{i=1}^k \|c_i\|_1 \quad \text{s.t.} \quad p_i \in \mathcal{E} \ \text{ for } i = 1, \dots, k. \tag{1}$$

Here, the goal is to learn image atoms $p_i$ (constrained to a set $\mathcal{E}$) and sparse coefficient images $c_i$ which, via a convolution, synthesize an image corresponding to the given data $f_0$ (with data fidelity being measured by $\mathcal{D}$). This task is strongly related to convolutional neural networks in many ways; see [46, 61, 65] and the paragraph Connections to Deep Neural Networks below for details. A classical interpretation of this energy minimization is that it allows for a sparse (approximate) representation of (possibly noisy) image data, but we will see that this viewpoint can be extended to include a forward model for inverse problems and a second image component of different structure. In any case, the difficulty here is the non-convexity of the energy in (1), which complicates both its analysis and its numerical solution. In order to overcome this, we build on a tensorial-lifting approach and a subsequent convex relaxation. Doing so and starting from (1), we introduce a convex variational method for learning image atoms from noisy and/or incomplete data in an inverse problems context. We further extend this model by a semi-convex variant that improves the performance in some applications. For both settings, we are able to prove well-posedness results in function space and, for the convex version, to compute globally optimal solutions numerically. In particular, classical stability and convergence results for inverse problems such as the ones of [31, 32] are applicable to our model, providing a stable recovery of both learned atoms and images from given, incomplete data.

Our approach allows for a joint learning of image atoms and image reconstruction in a single step. Nevertheless, it can also be regarded purely as an approach for learning image atoms from potentially incomplete data in a training step, after which the learned atoms can be further incorporated in a second step, e.g., for reconstruction or classification. It should also be noted that, while we show some examples where our approach achieves a good practical performance for image reconstruction compared to existing methods, the main purpose of this paper is to provide a mathematical understanding rather than an algorithm that achieves the best performance in practice.

Related Works Regarding the existing literature on data-adaptive variational learning approaches in imaging, we note that many recent approaches aim to infer either parameters or filters for variational methods from given training data; see, e.g., [14, 30, 36]. Moving such techniques further toward the architecture of neural networks, so-called variational networks [1, 35] learn not only model parameters but also components of the solution algorithm, such as step sizes or proximal mappings. We also refer to [41] for a recent work on combining variational methods and neural networks. While a function space theory is available for some of these methods, the learning step is still non-convex, and the above approaches can in general only be expected to provide locally optimal solutions.

In contrast to that, in a discrete setting, there are many recent directions of research which aim to overcome suboptimality in non-convex problems related to learning. In the context of structured matrix factorization (which can be interpreted as the underlying problem of dictionary learning/sparse coding in a discrete setting), the authors of [29] consider a general setting of which dictionary learning is a special case. Exploiting the existence of a convex energy that acts as a lower bound, they provide conditions under which local optima of the non-convex energy are globally optimal, thereby reducing the task of globally minimizing a non-convex energy to finding local optima with certain properties. In a similar spirit, a series of works in the context of dictionary learning (see [4, 55, 59] and the references therein) provide conditions (e.g., assuming incoherence) under which minimization algorithms (e.g., alternating between dictionary and coefficient updates) can be guaranteed to converge to a globally optimal dictionary with high probability. Regarding these works, it is important to note that, as discussed in Sect. 2.1 (see also [26]), the problem of dictionary learning is similar to, yet rather different from, the problem of learning convolutional image atoms as in (1), in the sense that the latter is shift-invariant since it employs a convolution to synthesize the image data (rather than comparing with a patch matrix). While results on structured matrix decomposition that allow for general data terms (such as [29]) can also be applied to convolutional sparse coding by including the convolution in the data term, this is not immediate for dictionary learning approaches.

Although differently motivated, the learning of convolutional image atoms is also related to blind deconvolution, where one aims to simultaneously recover a blurring kernel and a signal from blurry, possibly noisy measurements. While there is a large literature on this topic (see [15, 20] for a review), in particular lifting approaches that aim at a convex relaxation of the underlying bilinear problem in a discrete setting are related to our work. In this context, the goal is often to obtain recovery guarantees under some assumptions on the data. We refer to [40] for a recent overview, to [3] for a lifting approach that poses structural assumptions on both the signal and the blurring kernel and uses a nuclear-norm-based convex relaxation, and to [21] for a more generally applicable approach that employs the concept of atomic norms [19]. Moreover, the work [40] studies the joint blind deconvolution and demixing problem, which shares with (1) the objective of decomposing a signal into a sum of convolutions but is motivated in [40] by multiuser communication. There, the authors again pose some structural assumptions on the underlying signal but, in contrast to previous works, derive recovery guarantees for non-convex algorithms, which are computationally more efficient than those addressing a convex relaxation.

Connections to Deep Neural Networks Regarding a deeper mathematical understanding of deep convolutional neural networks, establishing a mathematical theory for convolutional sparse coding is particularly relevant due to a strong connection of the two methodologies. Indeed, it is easy to see that for instance in case and is fixed, the numerical solution of the convolutional sparse coding problem (1) via forward-backward splitting with a fixed number of iterations is equivalent to a deep residual network with constant parameters. Similarly, recent results (see [46, 47, 58]) show a strong connection of thresholding algorithms for multilayer convolutional sparse coding with feed-forward convolutional neural networks. In particular, this connection is exploited to transfer reconstruction guarantees from sparse coding to the forward pass of deep convolutional neural networks.
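The following minimal sketch illustrates the residual-network view under stated assumptions: a 1D signal, a single fixed atom `p` and a quadratic data term $\frac{1}{2}\|c * p - f\|_2^2$ (these choices, as well as all names and parameter values, are ours for illustration). Each unrolled forward-backward (ISTA) iteration adds a linear update to the skip connection `c` and then applies soft thresholding, i.e., it has the form of a residual block whose weights are identical in every layer.

```python
import numpy as np

def soft_threshold(x, t):
    """Proximal map of t * ||.||_1 (componentwise soft thresholding)."""
    return np.sign(x) * np.maximum(np.abs(x) - t, 0.0)

def synth(c, p):
    """Synthesis c * p with 'full' convolution (atoms may overlap the boundary)."""
    return np.convolve(c, p, mode="full")

def synth_adjoint(r, p):
    """Adjoint of c -> c * p, i.e. (K^T r)[x] = sum_y p[y] r[x + y]."""
    return np.correlate(r, p, mode="valid")

def lasso_energy(c, p, f, lam):
    """0.5 * ||c * p - f||^2 + lam * ||c||_1 (quadratic data term assumed)."""
    return 0.5 * np.sum((synth(c, p) - f) ** 2) + lam * np.sum(np.abs(c))

def ista_layers(f, p, lam, n_layers):
    """Forward-backward splitting with a fixed step size, unrolled for n_layers steps."""
    tau = 1.0 / np.sum(np.abs(p)) ** 2  # ||K|| <= ||p||_1, hence tau <= 1 / ||K||^2
    c = np.zeros(len(f) - len(p) + 1)
    energies = [lasso_energy(c, p, f, lam)]
    for _ in range(n_layers):
        # residual layer: skip connection c plus a fixed linear map of (c, f),
        # followed by the same soft-thresholding nonlinearity in every layer
        c = soft_threshold(c + tau * synth_adjoint(f - synth(c, p), p), tau * lam)
        energies.append(lasso_energy(c, p, f, lam))
    return c, energies

rng = np.random.default_rng(0)
p = np.array([1.0, 0.5]) / np.sqrt(1.25)  # hypothetical atom with unit 2-norm
c_true = np.zeros(50)
c_true[[10, 30]] = [3.0, -2.0]
f = synth(c_true, p) + 0.01 * rng.standard_normal(51)
c_hat, energies = ista_layers(f, p, lam=0.05, n_layers=200)
```

The step size $1/\|p\|_1^2$ is a safe choice because Young's inequality gives $\|c * p\|_2 \le \|p\|_1\|c\|_2$; with it, the recorded energies decrease monotonically, and the largest recovered coefficient sits at the position of the largest true spike.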

In this context, we also highlight [61], which very successfully employs filter learning in convolutional neural networks as regularization prior in image processing tasks. That is, [61] uses simultaneous filter learning and image synthesis for regularization, without prior training. The underlying architecture is strongly related to the energy minimization approach employed here, and again we believe that a deeper mathematical analysis of the latter will be beneficial to explain the success of the former.

Another direct relation to deep neural networks is given via deconvolutional neural networks as discussed in [65], which solve a hierarchy of convolutional sparse coding problems to obtain a feature representation of given image data. Last but not least, we also highlight that the approach discussed in this paper can be employed as feature encoder (again potentially also using incomplete/indirect data measurements), which provides a possible preprocessing step that is very relevant in the context of deep neural networks.

Outline of the Paper

In Sect. 2, we present the main ideas of our approach in a formal setting. This is done from two perspectives: once from the perspective of a convolutional Lasso approach and once from the perspective of patch-based methods. In Sect. 3, we then carry out an analysis of the proposed model in function space, where we motivate our approach via convex relaxation and derive well-posedness results. Section 4 then presents the model in a discrete setting and the numerical solution strategy, and Sect. 5 provides numerical results and a comparison to existing methods. Finally, an “Appendix” provides a brief overview of some results for tensor spaces that are used in Sect. 3. We note that, while the analysis of Sect. 3 is an important part of our work, the paper is structured such that readers only interested in the conceptual idea and the realization of our approach can skip Sect. 3 and focus on Sects. 2 and 4.

A Convex Approach to Image Atoms

In this section, we present the proposed approach to image-atom-learning and texture reconstruction, where we focus on explaining the main ideas rather than precise definitions of the involved terms. For the latter, we refer to Sect. 3 for the continuous model and Sect. 4 for the discrete setting.

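Our starting point is the convolutional Lasso problem [18, 65], which aims to decompose a given image $u$ as a sparse linear combination of basic atoms $(p_i)_{i=1}^k$ with coefficient images $(c_i)_{i=1}^k$ by inverting a sum of convolutions as follows:

$$\min_{(c_i)_i,\,(p_i)_i}\ \sum_{i=1}^k \|c_i\|_1 \quad \text{s.t.} \quad u = \sum_{i=1}^k c_i * p_i, \quad \|p_i\|_2 \le 1 \ \text{ for } i = 1, \dots, k.$$
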
It is important to note that, by choosing the $c_i$ to be composed of delta peaks, this allows the atoms to be placed at any position in the image. In [65], this model was used in the context of convolutional neural networks for generating image atoms and other image-related tasks. Subsequently, many works have dealt with the algorithmic solution of the resulting optimization problem, where the main difficulty lies in the non-convexity of the atom-learning step; we refer to [28] for a recent review.
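A small 2D sketch of this placement mechanism (the function name and the example atoms are hypothetical): a coefficient image that vanishes except for a few delta peaks synthesizes, via the sum of convolutions, scaled copies of the atoms at exactly those positions.

```python
import numpy as np

def place_atoms(coeffs, atoms):
    """Synthesize u = sum_i c_i * p_i ('full' 2D convolution) by overlap-adding
    scaled atoms at the nonzero entries of each coefficient image.
    Assumes all coefficient images share one shape and all atoms share one shape."""
    H, W = coeffs[0].shape
    h, w = atoms[0].shape
    u = np.zeros((H + h - 1, W + w - 1))
    for c, p in zip(coeffs, atoms):
        for i, j in zip(*np.nonzero(c)):
            # a delta peak of height c[i, j] at (i, j) places c[i, j] * p there
            u[i:i + h, j:j + w] += c[i, j] * p
    return u

p1 = np.array([[1.0, -1.0], [-1.0, 1.0]])  # hypothetical checkerboard atom
p2 = np.ones((2, 2)) / 2.0                 # hypothetical flat atom
c1 = np.zeros((6, 6))
c1[0, 0], c1[3, 4] = 1.0, 2.0
c2 = np.zeros((6, 6))
c2[5, 1] = -1.0
u = place_atoms([c1, c2], [p1, p2])
```

Only the nonzero coefficients are visited, which mirrors the interpretation of the coefficient images as sums of delta peaks.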

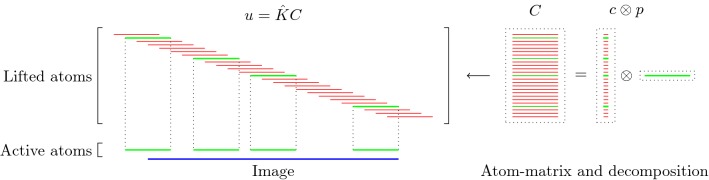

Our goal is to obtain a convex relaxation of this model that can be used both for learning image atoms from potentially noisy data and for image reconstruction tasks such as inpainting, deblurring or denoising. To this aim, we lift the model to the tensor product space of coefficient images and image atoms, i.e., the space of all tensors $C = \sum_i c_i \otimes p_i$, with $c_i \otimes p_i$ being a rank-1 tensor such that $(c_i \otimes p_i)(x, y) = c_i(x)\,p_i(y)$. We refer to Fig. 1 for a visualization of this lifting in a one-dimensional setting, where both coefficients and image atoms are vectors and $c_i \otimes p_i$ corresponds to a rank-one matrix. Notably, in this tensor product space, the convolution can be written as a linear operator $\hat K$ such that $\hat K(c \otimes p) = c * p$. Exploiting redundancies in the penalization of the $c_i$ and the constraint $\|p_i\|_2 \le 1$, and rewriting the above setting in the lifted tensor space as discussed in Sect. 3, we obtain the following minimization problem as a convex relaxation of the convolutional Lasso approach:

$$\min_{C}\ \|C\|_{1,2} \quad \text{s.t.} \quad u = \hat K C,$$
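In a 1D discretization, the lifting can be sketched as follows (names are illustrative): a rank-1 tensor is an outer product, and the lifted convolution $\hat K$ overlap-adds the rows of $C$, which makes it linear in $C$ while reproducing the bilinear convolution on simple tensors.

```python
import numpy as np

def lift(c, p):
    """Rank-1 tensor c (x) p as a matrix: rows = coefficient positions, columns = atom pixels."""
    return np.outer(c, p)

def lifted_conv(C):
    """Linear lifted operator K_hat: (K_hat C)[z] = sum_x C[x, z - x] (1D, 'full' support)."""
    n_c, n_p = C.shape
    out = np.zeros(n_c + n_p - 1)
    for x in range(n_c):
        out[x:x + n_p] += C[x]  # row x acts as an atom placed at position x
    return out

rng = np.random.default_rng(1)
c1, c2 = rng.standard_normal(8), rng.standard_normal(8)
p1, p2 = rng.standard_normal(3), rng.standard_normal(3)
C = lift(c1, p1) + lift(c2, p2)  # lifted variable built from two atoms (rank <= 2)
u = lifted_conv(C)
```

Because `lifted_conv` is linear, a sum of lifted atoms is mapped to the corresponding sum of convolutions, which is exactly what turns the bilinear synthesis into a linear constraint.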
where $\|C\|_{1,2}$ takes the 1-norm and 2-norm of $C$ in coefficient and atom direction, respectively. Now while a main feature of the original model was that the number of image atoms was fixed, this is no longer the case in the convex relaxation; fixing it would correspond to constraining the rank of the lifted variable $C$ (defined as the minimal number of simple tensors needed to decompose $C$) to be below a fixed number. As a convex surrogate, we add a penalization of the nuclear norm of $C$ to the above objective functional (here we refer to the nuclear norm of $C$ in the tensor product space, which, in the discretization of our setting, coincides with the classical nuclear norm of a matrix reshaping of $C$). Allowing also for additional linear constraints on $C$ via a linear operator $M$, and with $\hat K$ the lifted convolution operator, we arrive at the following convex norm that measures the decomposability of a given image $u$ into a sparse combination of atoms:

$$\mathcal{N}(u) = \min_{C}\ \|C\|_{1,2} + \nu\,\|C\|_* \quad \text{s.t.} \quad u = \hat K C, \quad MC = 0,$$

with $\|C\|_*$ the nuclear norm of $C$ and $\nu > 0$ a weighting parameter.
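Both penalties are easy to evaluate for a discretized lifted variable stored as a matrix (rows: coefficient positions, columns: atom pixels). The sketch below, with hypothetical helper names, builds two tensors with identical $\|\cdot\|_{1,2}$ cost, one using a single atom and one using a different atom at each of the two active positions; only the nuclear norm distinguishes them.

```python
import numpy as np

def norm_12(C):
    """||C||_{1,2}: 2-norm along the atom direction, then 1-norm over coefficient positions."""
    return np.sum(np.linalg.norm(C, axis=1))

def nuclear_norm(C):
    """Sum of the singular values of the matrix reshaping of C."""
    return np.sum(np.linalg.svd(C, compute_uv=False))

c = np.array([0.0, 2.0, 0.0, 0.0, -1.0, 0.0])  # sparse coefficients (two delta peaks)
p = np.array([3.0, 4.0]) / 5.0                 # unit-norm atom
C_rank1 = np.outer(c, p)

# Same row norms, but a different (orthogonal) unit-norm atom in row 4: two atoms instead of one.
q = np.array([4.0, -3.0]) / 5.0
C_rank2 = C_rank1.copy()
C_rank2[4] = -1.0 * q
```

For a rank-1 tensor one has $\|c \otimes p\|_{1,2} = \|c\|_1\|p\|_2$ and $\|c \otimes p\|_* = \|c\|_2\|p\|_2$; both tensors cost 3 in $\|\cdot\|_{1,2}$, whereas the nuclear norm increases from $\sqrt{5}$ to 3 when the second atom is introduced.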
Interestingly, this provides a convex model for learning image atoms, which seems quite effective for simple images admitting a sparse representation. In addition, this norm can in principle also be used as a prior for image reconstruction tasks in the context of inverse problems, for example via solving

$$\min_{u}\ \lambda\,\mathcal{D}(Au, f_0) + \mathcal{N}(u),$$

with $\mathcal{N}$ the atom-based norm above, $f_0$ some given corrupted data, $\mathcal{D}$ a data fidelity term, $A$ a forward operator and $\lambda > 0$ a parameter.

Fig. 1.

Visualization of the atom-lifting approach for 1D images. The green (thick) lines in the atom matrix correspond to nonzero (active) atoms and are placed in the image at the corresponding positions

Both the original motivation for our model and its convex variant have many similarities with dictionary learning and patch-based methods. The next section strives to clarify similarities and differences and provides an interesting, different perspective on our model.

A Dictionary-Learning-/Patch-Based Methods’ Perspective

In classical dictionary-learning-based approaches, the aim is to represent a rearranged matrix of image patches as a sparse combination of dictionary atoms. That is, with $u \in \mathbb{R}^{N}$ a vectorized version of an image and $P \in \mathbb{R}^{l \times nm}$ a patch matrix containing $l$ vectorized (typically overlapping) image patches of size $n \times m$, the goal is to obtain a decomposition $P \approx cp$, where $c \in \mathbb{R}^{l \times k}$ is a coefficient matrix and $p \in \mathbb{R}^{k \times nm}$ is a matrix of $k$ dictionary atoms such that $c_{i,j}$ is the coefficient for the $j$th atom in the representation of the $i$th patch. In order to achieve a decomposition of this form using only a sparse combination of dictionary atoms, a classical approach is to solve

$$\min_{c,\,p}\ \frac{\lambda}{2}\|P - cp\|_2^2 + \|c\|_1 + \mathcal{I}(p),$$

where $\mathcal{I}$ potentially puts additional constraints on the dictionary atoms, e.g., $\mathcal{I}$ is the indicator function ensuring that $\|p_j\|_2 \le 1$ for all $j$, with $p_j$ the $j$th row of $p$.

A difficulty with such an approach is again the bilinear and hence non-convex nature of the optimization problem, leading to potentially many non-optimal stationary points and making the approach sensitive to initialization.

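As a remedy, one strategy is to consider a convex variant (see, for instance, [6]). That is, rewriting the above minimization problem (and again using the ambiguity in the product $cp$ to eliminate the constraint), we arrive at the problem

$$\min_{c,\,p}\ \frac{\lambda}{2}\|P - cp\|_2^2 + \sum_{j=1}^k \|c_{:,j}\|_1\,\|p_j\|_2,$$

where $c_{:,j}$ denotes the $j$th column of $c$ and $p_j$ the $j$th row of $p$. A possible convexification in terms of the lifted matrix $C = cp$ is then given as

$$\min_{C}\ \frac{\lambda}{2}\|P - C\|_2^2 + \|C\|_{1,2} + \nu\,\|C\|_*, \tag{2}$$

where $\|C\|_{1,2} = \sum_i \|C_{i,:}\|_2$ sums the 2-norms of the rows of $C$, $\|C\|_*$ is the nuclear norm of the matrix $C$ and $\nu > 0$ is a parameter.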

A disadvantage of such an approach is that the selection of patches is a priori fixed and that the lifted matrix C has to approximate each patch. In the situation of overlapping patches, this means that individual rows of C have to represent different shifted versions of the same patch several times, which inherently contradicts the low-rank assumption.

It is now interesting to see our proposed approach in relation to these dictionary learning methods and the above-described disadvantage. Denote again by $\hat K$ the lifted version of the convolution operator, which in the discrete setting takes a lifted patch matrix as input and provides an image composed of overlapping patches as output. It is then easy to see that $\hat K^*$, the adjoint of $\hat K$, is in fact a patch selection operator and it holds that $P = \hat K^* u$. Using this identity, the approach in (2) can be rewritten as

$$\min_{C}\ \frac{\lambda}{2}\|\hat K^* u - C\|_2^2 + \|C\|_{1,2} + \nu\,\|C\|_*, \tag{3}$$

where we recall that $u$ is the original image. Considering the problem of finding an optimal patch-based representation of an image as the problem of inverting $\hat K$, we can see that the previous approach in fact first applies a right inverse of $\hat K$ and then decomposes the data. Taking this perspective, however, it seems much more natural to consider instead an adjoint formulation as

$$\min_{C}\ \frac{\lambda}{2}\|u - \hat K C\|_2^2 + \|C\|_{1,2} + \nu\,\|C\|_*. \tag{4}$$
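A 1D sketch of this relation, assuming that patches are taken at all positions of the extended domain with zero padding (helper names are hypothetical): `patch_matrix` plays the role of $\hat K^*$ and `synthesize` that of $\hat K$. For a periodic image built from a single pattern, the patch matrix contains all shifted copies of the pattern and has full rank, whereas a row-sparse rank-1 lifted variable synthesizes the same image exactly.

```python
import numpy as np

def patch_matrix(u, n):
    """K_hat^*: all length-n patches of u, including those overlapping the boundary
    (zero padded); row x is the patch starting at position x - (n - 1)."""
    padded = np.concatenate([np.zeros(n - 1), u, np.zeros(n - 1)])
    return np.array([padded[x:x + n] for x in range(len(u) + n - 1)])

def synthesize(C, N):
    """K_hat: overlap-add the rows of C and crop the result to the image domain of length N."""
    rows, n = C.shape
    full = np.zeros(rows + n - 1)
    for x in range(rows):
        full[x:x + n] += C[x]
    return full[n - 1:n - 1 + N]

pattern = np.array([3.0, 1.0, 0.0, 0.0])  # hypothetical atom of size n = 4
u = np.tile(pattern, 6)                   # periodic "texture" of length N = 24
P = patch_matrix(u, 4)                    # patch matrix that (3) has to approximate

# Row-sparse, rank-one lifted variable for (4): the atom is placed every 4 pixels,
# all rows corresponding to shifted copies stay empty.
C = np.zeros_like(P)
C[3::4] = pattern
```

In this discretization $\hat K \hat K^* = n\,\mathrm{Id}$ for patch size $n$, so $\hat K^*/n$ is a right inverse of $\hat K$, consistent with the interpretation of (3) given above.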

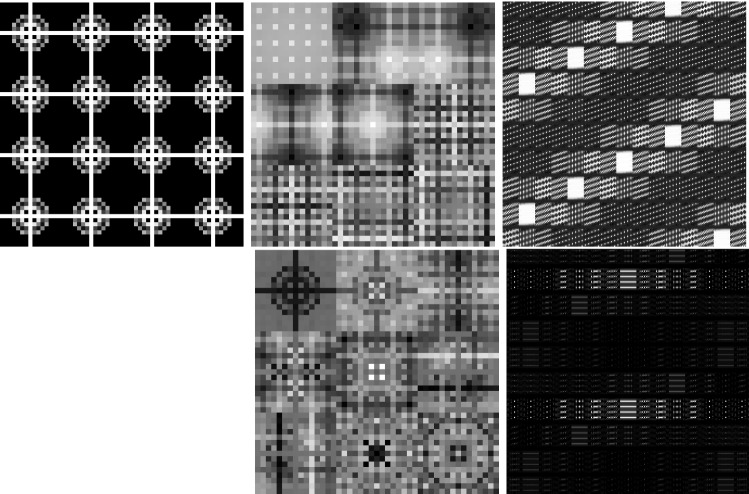

Indeed, this means that we do not fix the patch decomposition of the image a priori but rather allow the method itself to optimally select the position and size of patches. In particular, a given patch at an arbitrary location can be represented using only one row of C, while the other rows (corresponding to shifted versions) can be left empty. Figure 2 illustrates the resulting improvement by showing solutions of both of the above optimization problems for a particular test image, where the parameters are set such that the data errors of both methods are approximately the same. As can be seen, solving (3), which we call patch denoising, does not yield meaningful dictionary atoms, as the dictionary elements need to represent different, shifted versions of the single patch that makes up the image. In contrast, solving (4), which we call patch reconstruction, allows us to identify the underlying patch of the image, and the corresponding patch matrix is indeed row sparse. In this context, we also refer to [26], which makes similar observations and distinguishes between patch analysis (which is related to (3)) and patch synthesis (which is similar to (4)); however, [26] does not consider a convolutional but rather a matrix-based synthesis operator.

Fig. 2.

Patch-based representation of test images. Left: original image, middle: nine most important patches for each method (top: patch denoising, bottom: patch reconstruction), right: section of the corresponding patch matrices

The Variational Model

While the proposed model can, in principle, describe any kind of image, its convex relaxation in particular seems best suited for situations where the image can be described by only a few repeating atoms, as is the case, for instance, with texture images. Moreover, since we do not include rotations in the model, there are many simple situations, such as $u$ being the characteristic function of a disk, which would in fact require an infinite number of atoms. To overcome this, it seems beneficial to include an additional term which is rotationally invariant and takes care of piecewise smooth parts of the image. Denoting by $\mathcal{R}$ any regularization functional for piecewise smooth data and taking the infimal convolution of this functional with our atom-based norm $\mathcal{N}$, we then arrive at the convex model

$$\min_{u,\,v}\ \lambda\,\mathcal{D}(Au, f_0) + \mathcal{R}(u - v) + \mathcal{N}(v),$$

where $f_0$ denotes the given data, $\mathcal{D}$ a data fidelity term, $A$ the forward operator and $\lambda > 0$ a parameter, for reconstructing image data and learning image atom kernels from potentially noisy or incomplete measurements.

A particular example of this model is obtained when choosing the total variation (TV) functional [51] as the cartoon prior. In this setting, a natural choice for the operator M in the definition of the atom-based norm is to take the pointwise mean of the lifted variable in atom direction, which corresponds to constraining the learned atoms to have zero mean and enforces some kind of orthogonality between the cartoon and the texture part in the spirit of [43]. In our numerical experiments, in order to obtain an even richer model for the cartoon part, we use the second-order total generalized variation functional ($\mathrm{TGV}^2$) [7, 9] as cartoon prior and, in the spirit of a dual norm, use M to constrain the 0th and 1st moments of the atoms to be zero.
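One possible discretization of the moment constraint, given here as an assumption for illustration and not necessarily the scheme of Sect. 4: `M` stacks the discrete 0th moment and the two 1st moments of a flattened atom, and an arbitrary atom is made admissible by the Euclidean projection onto $\ker M$. Since constant and linear functions span the kernel of $\mathrm{TGV}^2$, this projection removes from each atom exactly the affine part that the cartoon prior represents at no cost.

```python
import numpy as np

def moment_operator(h, w):
    """Hypothetical discrete M: 0th moment and the two 1st moments of a flattened
    h x w atom, with coordinates centered at the atom's midpoint."""
    yy, xx = np.mgrid[0:h, 0:w]
    xx = xx - (w - 1) / 2.0
    yy = yy - (h - 1) / 2.0
    return np.stack([np.ones(h * w), xx.ravel(), yy.ravel()])  # shape (3, h * w)

def project_to_kernel(p, M):
    """Euclidean projection of an atom onto {q : M q = 0}."""
    v = p.ravel()
    v = v - M.T @ np.linalg.solve(M @ M.T, M @ v)
    return v.reshape(p.shape)

rng = np.random.default_rng(2)
M = moment_operator(5, 5)
p = rng.standard_normal((5, 5))
q = project_to_kernel(p, M)  # atom with zero mean and zero first moments
```

Any affine atom, such as a linear ramp, is projected to zero, which is the intended decoupling between the cartoon and texture components.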

We also remark that, as shown in the analysis of Sect. 3, while an $\ell^1$-type norm on the lifted variables indeed arises as convex relaxation of the convolutional Lasso approach, the addition of the nuclear norm is to some extent arbitrary; in fact, in the context of compressed sensing, it is known that a summation of two norms is suboptimal for a joint penalization of sparsity and rank [44]. (We refer to Remark 5 for an extended discussion.) Indeed, our numerical experiments also indicate that the performance of our method is to some extent limited by a suboptimal relaxation of a joint sparsity and rank penalization. To account for that, we also tested semi-convex potential functions (instead of the identity) for a penalization of the singular values in the nuclear norm. Since this provided a significant improvement in some situations, we also include this more general setting in our analysis and the numerical results.

The Model in a Continuous Setting

The goal of this section is to define and analyze the model introduced in Sect. 2 in a continuous setting. To this aim, we regard images as functions in the Lebesgue space with a bounded Lipschitz domain , and . Image atoms are regarded as functions in , with a second (smaller) bounded Lipschitz domain (either a circle or a square around the origin) and an exponent that is a priori allowed to take any value in , but will be further restricted below. We also refer to “Appendix” for further notation and results, in particular in the context of tensor product spaces, that will be used in this section.

As described in Sect. 2, the main idea is to synthesize an image via the convolution of a small number of atoms with corresponding coefficient images, where we think of the latter as a sum of delta peaks that define the locations where atoms are placed. For this reason, and also due to compactness properties, the coefficient images are modeled as Radon measures in the space , the dual of , where we denote

i.e., the extension of by . The motivation for using this extension of is to allow atoms also to be placed arbitrarily close to the boundary (see Fig. 1). We will further use the notation for an exponent and denote duality pairings between and and between and by , while other duality pairings (e.g., between tensor spaces) are denoted by . By , we denote standard and Radon norms whenever the domain of definition is clear from the context, otherwise we write , etc.

The Convolutional Lasso Prior

As a first step, we deal with the convolution operator that synthesizes an image from a pair of a coefficient image and an image atom in function space. Formally, we aim to define $K$ as

$$K(c, p)(x) = \int p(x - y)\,\mathrm{d}c(y),$$

where we extend p by zero outside of . An issue with this definition is that, in general, p is only defined Lebesgue almost everywhere and so we have to give a rigorous meaning to the integration of p with respect to an arbitrary Radon measure. To this aim, we define the convolution operator via duality (see [52]). For , we define by the functional on as dense subset of as

where always denotes the zero extension of the function or measure g outside their domain of definition. Now we can estimate with

Hence, by density we can uniquely extend to a functional in and we denote by the associated function in . Now in case p is integrable w.r.t. c and , we get by a change of variables and Fubini’s theorem that for any

Hence we get that in this case, and defining as

we get that K(c, p) coincides with the convolution of c and p whenever the latter is well defined. Note that K is bilinear and, as the previous estimate shows, there exists such that . Hence, , the space of bounded bilinear operators (see “Appendix”).
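For a coefficient measure that is a finite sum of weighted delta peaks, $c = \sum_j a_j \delta_{y_j}$, the estimate reduces to the triangle inequality: $\|K(c, p)\| \le \sum_j |a_j|\,\|p\| = \|c\|_{\mathcal{M}}\|p\|$, with $\|c\|_{\mathcal{M}}$ the Radon norm of $c$. The sketch below checks this, together with bilinearity, on a 1D grid in the discrete 2-norm; integer grid positions are an assumed discretization and the names are hypothetical.

```python
import numpy as np

def K_discrete(weights, positions, p, n_grid):
    """K(c, p) for c = sum_j a_j delta_{y_j} on a 1D grid:
    K(c, p)(x) = sum_j a_j p(x - y_j), with p extended by zero outside its support.
    Assumes y_j + len(p) <= n_grid for all positions."""
    out = np.zeros(n_grid)
    for a, y in zip(weights, positions):
        out[y:y + len(p)] += a * p
    return out

rng = np.random.default_rng(3)
weights = rng.standard_normal(5)
positions = [2, 9, 10, 20, 31]          # overlapping atoms at 9 and 10 are allowed
p = rng.standard_normal(6)
u = K_discrete(weights, positions, p, n_grid=40)
radon_norm = np.sum(np.abs(weights))    # ||c||_M for a sum of weighted delta peaks
```

In the continuous setting the constant additionally depends on the embedding between the Lebesgue spaces involved; on a grid with the same norm on both sides it equals one.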

Using the bilinear operator K and denoting by a fixed number of atoms, we now define the convolutional Lasso prior for an exponent and for as

| 5 |

and set it to $\infty$ if the constraint set above is empty. Here, we include an operator $M$ in our model that optionally allows additional constraints on the atoms to be enforced. A simple example of $M$ that we have in mind is an averaging operator, i.e., $M$ maps an atom $p$ to its mean value; hence, the constraint $Mp = 0$ corresponds to a zero-mean constraint.

A Convex Relaxation

Our goal is now to obtain a convex relaxation of the convolutional Lasso prior. To this aim, we introduce by and the lifting of the bilinear operator K and the linear operators I and M, with being the identity, to the projective tensor product space (see “Appendix”). In this space, we consider a reformulation as

| 6 |

where

Note that this reformulation is indeed equivalent. Next we aim to derive the convex relaxation of in this tensor product space. To this aim, we use the fact that, for a general function $f$, its convex, lower semi-continuous relaxation can be computed as the biconjugate $f^{**} = (f^*)^*$, where $f^*$ denotes the convex conjugate of $f$.
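In one dimension, the biconjugate can be approximated on grids, which gives a quick way to see the convexification at work. The sketch below (grid sizes and the double-well example are our choices) computes $f^{**}$ for $f(x) = (|x| - 1)^2$, whose convex, lower semi-continuous relaxation is $0$ on $[-1, 1]$ and coincides with $f$ elsewhere.

```python
import numpy as np

def conjugate(xs, fx, slopes):
    """Discrete Legendre-Fenchel transform f*(s) = max_x (s * x - f(x)) over the grid xs."""
    return np.max(slopes[:, None] * xs[None, :] - fx[None, :], axis=1)

xs = np.linspace(-3.0, 3.0, 601)
slopes = np.linspace(-8.0, 8.0, 1601)
f = (np.abs(xs) - 1.0) ** 2              # non-convex double well with minima at x = -1, 1
f_star = conjugate(xs, f, slopes)
f_bistar = conjugate(slopes, f_star, xs)  # biconjugate, evaluated back on xs
```

Being a maximum of affine functions, `f_bistar` is convex and lies below `f`; it fills the gap between the two wells with the flat segment at height zero.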

First we consider a relaxation of the functional . In this context, we need an additional assumption on the constraint set , which is satisfied, for instance, if or for , and which, in particular, will be fulfilled by the concrete setting we use later on.

Lemma 1

Assume that there exists a continuous, linear, norm-one projection onto . Then, the convex, lower semi-continuous relaxation of is given as

where if and else, and is the projective norm on given as

Proof

Our goal is to compute the biconjugate of . First we note that

and consequently

Hence, the assertion follows if we show that To this aim, we first show that , where we set . Let be such that and take , be such that for some and . Then, with P the projection to as in the assumption, we get that

Now recall that, according to [53, Theorem 2.9], we have with the norm . Taking arbitrary , , we get that and hence

and since was arbitrary, it follows that . Finally, by closedness of we get that and, since has the approximation property (see [24, Section VIII.3]), from [53, Proposition 4.6], it follows that , hence and by assumption . Consequently, , and since was arbitrary, the claimed inequality follows.

Now we show that , from which the claimed assertion follows by the previous estimate and taking the convex conjugate on both sides. To this aim, take such that

and take , such that for all n, i and

We then get

Now it can be easily seen that the last expression equals 0 in case for all c, p with . In the other case, we can pick with and such that and get for any that

Hence, the last line of the above equation is either 0 or infinity and equals

This result suggests that the convex, lower semi-continuous relaxation of (6) will be obtained by replacing with the projective tensor norm on and the constraint . Our approach to show this will in particular require us to ensure lower semi-continuity of this candidate for the relaxation, which in turn requires us to ensure a compactness property of the sublevel sets of the energy appearing in (6) and closedness of the constraints. To this aim, we consider a weak* topology on and rely on a duality result for tensor product spaces (see “Appendix”), which states that, under some conditions, the projective tensor product can be identified with the dual of the so-called injective tensor product . The weak* topology on is then induced by pointwise convergence in as dual space of . Contrary to what one would expect from the individual spaces, however, this can only be ensured for the case , which excludes the space for the image atoms. This restriction will also be required later on in order to show well-posedness of a resulting regularization approach for inverse problems, and hence, we will henceforth always consider the case that and use the identification (see “Appendix”).

As a first step toward the final relaxation result, and also as a crucial ingredient for well-posedness results below, we show weak* continuity of the operator on the space .

Lemma 2

Let . Then, the operator is continuous w.r.t. weak* convergence in and weak convergence in . Also, for any it follows that and, via the identification (see “Appendix”), can be given as .

Proof

First we note that for any , the function defined as (where we extend by 0 to ) is contained in . Indeed, continuity follows since by uniform continuity for any there exists a such that for any with and with

Also, taking to be the support of we get, with the extension of K by , for any that for any and hence in and .

Now for , taking to be a sequence converging to , we get that

Thus, can be approximated by a sequence of compactly supported functions and hence . Fixing now , we note that for any , the function is continuous; hence, we can define the linear functional

and get that is continuous on . Then, since it can be approximated by a sequence of simple tensors in the injective norm, which coincides with the norm in and, using Lemma 22 in “Appendix”, we get

Now by density of simple tensors in the projective tensor product, it follows that . In order to show the continuity assertion, take weak* converging to some . Then by the previous assertion we get for any that

hence weakly converges to Ku on a dense subset of which, together with boundedness of , implies weak convergence.

We will also need weak*-to-weak* continuity of , which is shown in the following lemma in a slightly more general situation than needed.

Lemma 3

Take and assume that with Z a reflexive space and define , where I is the identity on . Then is continuous w.r.t. weak* convergence in both spaces.

Proof

Take weak* converging to some and write . We note that, since Z is reflexive, it satisfies in particular the Radon-Nikodým property (see “Appendix”) and hence can be regarded as predual of and we test with . Then

where the convergence follows since , the predual of , and hence,

Now we can obtain the convex, lower semi-continuous relaxation of .

Lemma 4

With the assumptions of Lemma 1 and , the convex, l.s.c. relaxation of is given as

| 7 |

Proof

Again we first compute the convex conjugate:

Similarly, we see that , where

Now in the proof of Lemma 1, we have in particular shown that ; hence, if we show that is convex and lower semi-continuous, the assertion follows from . To this aim, take a sequence in converging weakly to some u for which, without loss of generality, we assume that

Now with such that , and we get that is bounded. Since admits a separable predual (see “Appendix”), this implies that admits a subsequence weak* converging to some C. By weak* continuity of and we get that and , respectively, and by weak* lower semi-continuity of , it follows that

which concludes the proof.

This relaxation result suggests using as in Equation (7) as a convex texture prior in the continuous setting. There is, however, an issue with that: such a functional cannot be expected to penalize the number of used atoms at all. Indeed, take some and assume that . Now note that we can split any summand as follows: Write with disjoint support such that . Then, we can rewrite

which gives a different representation of C by increasing the number of atoms without changing the cost of the projective norm. Hence, in order to maintain the original motivation of the approach to enforce a limited number of atoms, we need to add an additional penalty on C for the lifted texture prior.
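The splitting argument can be checked in a small discrete example. The following is a minimal sketch, assuming a 1-D setting with the ℓ1 norm on the coefficients and the ℓ2 norm on the atoms (the combination used for the discrete projective norm later in the paper). The helper `projective_cost` is hypothetical and only evaluates the cost of one given representation, not the infimum that defines the projective norm.

```python
import numpy as np

def projective_cost(coeffs, atoms):
    """Cost sum_i ||c_i||_1 * ||a_i||_2 of the representation C = sum_i c_i (x) a_i.

    Hypothetical helper: evaluates one representation, not the projective norm itself.
    """
    return sum(np.abs(c).sum() * np.linalg.norm(a) for c, a in zip(coeffs, atoms))

rng = np.random.default_rng(0)
c = rng.standard_normal(8)  # coefficient "image" (1-D for simplicity)
a = rng.standard_normal(3)  # atom

# Split c into two parts with disjoint support: c = c1 + c2.
mask = np.arange(8) < 4
c1, c2 = np.where(mask, c, 0.0), np.where(~mask, c, 0.0)

# Cost with one summand versus two summands sharing the same atom.
one_atom = projective_cost([c], [a])
two_atoms = projective_cost([c1, c2], [a, a])

# Both representations describe the same tensor C (written as a matrix).
C_one = np.outer(c, a)
C_two = np.outer(c1, a) + np.outer(c2, a)
```

Since the ℓ1 norm is additive over disjoint supports, the two costs coincide, so the projective cost does not discourage using more summands.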

Adding a Rank Penalization

Considering the discrete setting and the representation of the tensor C as a matrix, the number of used atoms corresponds to the rank of the matrix, for which it is well known that the nuclear norm constitutes a convex relaxation [25]. This construction can in principle also be transferred to general tensor products of Banach spaces via the identification (see Proposition 23 in “Appendix”)

and the norm

It is important to realize, however, that the nuclear norm of operators depends on the underlying spaces and in fact coincides with the projective norm in the tensor product space (see Proposition 23). Hence, adding the nuclear norm in does not change anything, and more generally, whenever one of the underlying spaces is equipped with an -type norm, we cannot expect a rank-penalizing effect (consider the example of the previous section).
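To illustrate why an ℓ1-type factor prevents a rank-penalizing effect while the Hilbert-space nuclear norm does not, the sketch below compares two discrete tensors written as matrices (rows index coefficient positions, columns index atom pixels). It assumes that the ℓ1/ℓ2 projective norm reduces to the sum of the row norms, as in the discrete setting of the paper; the function names are hypothetical.

```python
import numpy as np

def mixed_l1_l2(C):
    """l1 over rows (positions) of the l2 norm of each row (atom pixels)."""
    return np.linalg.norm(C, axis=1).sum()

def nuclear(C):
    """Sum of singular values (l2 in both factors)."""
    return np.linalg.svd(C, compute_uv=False).sum()

c = np.array([3.0, 1.0, 2.0, 0.5])
a = np.array([1.0, 0.0, 0.0, 0.0])  # unit-norm atom

C_rank1 = np.outer(c, a)  # the same atom is used at every position
C_full = np.diag(c)       # a different unit atom e_i at each position
```

Both tensors have the same mixed norm, although one has rank 1 and the other rank 4; only the nuclear norm, which is based on ℓ2 in both factors, prefers the rank-one tensor.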

On the other hand, going back to the nuclear norm of a matrix in the discrete setting, we see that it relies on orthogonality and an inner product structure and that the underlying norm is the Euclidean inner product norm. Hence, an appropriate generalization of a rank-penalizing nuclear norm needs to be built on a Hilbert space setting. Indeed, it is easy to see that any operator between Banach spaces with a finite nuclear norm is compact, and in particular for any with finite nuclear norm and Hilbert spaces, there are orthonormal systems , and uniquely defined singular values such that

Motivated by this, we aim to define an -based nuclear norm as an extended real-valued function on as a convex surrogate for a rank penalization. To this aim, we consider from now on the case . Remember that the tensor product of two spaces X, Y is defined as the vector space spanned by linear mappings on the space of bilinear forms on , which are given as . Now since can be regarded as a subspace of , also can be regarded as a subspace of . Further, defining for ,

we get that for a constant , and hence, also the completion can be regarded as subspace of . Further, can be identified with the space of nuclear operators as above such that

Using this, and introducing a potential function , we define for ,

| 8 |

We will mostly focus on the case , in which coincides with an extension of the nuclear norm and can be interpreted as convex relaxation of the rank. However, since we observed a significant improvement in some cases in practice by choosing to be a semi-convex potential function, i.e., a function such that is convex for sufficiently small, we include the more general situation in the theory.

Remark 5

(Sparsity and low-rank) It is important to note that restricts C to be contained in the smoother space and in particular does not allow for simple tensors with the ’s being composed of delta peaks. Thus, we observe some inconsistency between a rank penalization via the nuclear norm and a pointwise sparsity penalty, which is only visible in the continuous setting via regularity of functions. Nevertheless, such an inconsistency has already been observed in the finite-dimensional setting in the context of compressed sensing for low-rank AND sparse matrices, manifested in the poor performance of the sum of a nuclear norm and norm for exact recovery (see [44]). As a result, there exist many studies on improved, convex priors for the recovery of low-rank and sparse matrices, see, for instance, [19, 49, 50]. While such improved priors can be expected to be highly beneficial for our setting, the question does not seem to be solved in a way that can be readily applied here.

One direct way to circumvent this inconsistency would be to include an additional smoothing operator for C as follows: Take such that to be a weak*-to-weak* continuous linear operator and define the operator as , where denotes the identity in . Then one could alternatively use for penalizing the rank of C while still allowing C to be a general measure. Indeed, in the discrete setting, by also choosing S to be injective, we even obtain the equality (where we interpret C and SC as matrices). In practice, however, we did not observe an improvement by including such a smoothing and thus do not include it in our model.

Remark 6

(Structured matrix completion) We would also like to highlight the structured-matrix-completion viewpoint on the difficulty of low-rank and sparse recovery. In this context, the work [29] discusses conditions for global optimality of solutions to the non-convex matrix decomposition problem

| 9 |

where measures the loss w.r.t. some given data and allows to enforce structural assumptions on the factors U, V. For this problem, [29] shows that rank-deficient local solutions are global solutions to a convex minorant obtained by allowing k to become arbitrarily large (formally, choose ) and, consequently, also globally optimal for the original problem. Choosing if and infinity else, where is a discrete version of the lifted convolution operator, and , we see that (for simplicity ignoring the optional additional atom constraints) the convolutional Lasso prior of Equation (5) can be regarded as special case of (9). Viewed in this way, our above results show that the convex minorant obtained with is in fact the convex relaxation, i.e., the largest possible convex minorant, of the entire problem including the data- and the convolution term. But again, as discussed in Sect. 3.2, we cannot expect a rank-penalizing effect of the convex relaxation obtained in this way. An alternative, as mentioned in [29], would be to choose . Indeed, while in this situation it is not clear if the convex minorant with is the convex relaxation, the former still provides a convex energy from which one would expect a rank-penalizing effect. Thus, this would potentially be an alternative approach that could be used in our context with the advantage of avoiding the lifting but the difficulty of finding rank-deficient local minima of a non-convex energy.

Well-Posedness and a Cartoon–Texture Model

Including for as additional penalty in our model, we ultimately arrive at the following variational texture prior in the tensor product space , which is convex whenever is convex, in particular for .

| 10 |

where is a parameter balancing the sparsity and the rank penalty.

In order to employ as a regularization term in an inverse problems setting, we need to obtain some lower semi-continuity and coercivity properties. As a first step, the following lemma, which is partially inspired by techniques used in [10, Lemma 3.2], shows that, under some weak conditions on , defines a weak* lower semi-continuous function on .

Lemma 7

Assume that is lower semi-continuous, non-decreasing, that

for and that

there exist such that for .

Then, the functional defined as in (8) is lower semi-continuous w.r.t. weak* convergence in .

Proof

Take weak* converging to some for which, w.l.o.g., we assume that

We only need to consider the case that is bounded, otherwise the assertion follows trivially. Hence, we can write such that . Now we aim to bound in terms of . To this aim, first note that the assumptions in imply that for any there is such that for all . Also, for any i, n and via a direct contradiction argument it follows that there exists such that for all i, n. Picking such that for all , we obtain

hence is also bounded as a sequence in and admits a (non-relabeled) subsequence weak* converging to some , with being the predual space. By the inclusion and uniqueness of the weak* limit, we finally get and can write and . By lower semi-continuity of , this would suffice to conclude in the case . For the more general case, we need to show a pointwise lim-inf property of the singular values. To this aim, note that by the Courant–Fischer min–max principle (see, for instance, [12, Problem 37]) for any compact operator with Hilbert spaces and the k-th singular value of T sorted in descending order, we have

Now consider fixed. For any subspace V with , the minimum in the equation above is achieved, and hence, we can denote to be a minimizer and define such that . Since weak* convergence of a sequence to T in implies in particular for all x, by lower semi-continuity of the norm it follows that is lower semi-continuous with respect to weak* convergence. Hence, this is also true for the function , as it is the pointwise supremum of a family of lower semi-continuous functionals. Consequently, for the sequence it follows that . Finally, by monotonicity and lower semi-continuity of and Fatou’s lemma we conclude

The lemma below now establishes the main properties of that, in particular, allow it to be employed as a regularization term in an inverse problems setting.

Lemma 8

The infimum in the definition of (10) is attained and is convex and lower semi-continuous. Further, any sequence such that is bounded admits a subsequence converging weakly in .

Proof

The proof is quite standard, but we provide it for the reader's convenience. Take to be a sequence such that is bounded. Then, we can pick a sequence in such that , and

This implies that admits a subsequence weak* converging to some . Now by continuity of and we have that and that is bounded. Hence also admits a (non-relabeled) subsequence converging weakly to some . This already shows the last assertion. In order to show lower semi-continuity, assume that converges to some v and, without loss of generality, that

Now this is a particular case of the argumentation above; hence, we can deduce with as above that

which implies lower semi-continuity. Finally, specializing even more to the case that is the constant sequence , also the claimed existence follows.

In order to model a large class of natural images and to keep the number of atoms needed in the above texture prior low, we combine it with a second part that models cartoon-like images. Doing so, we arrive at the following model

| P |

where we assume to be a functional that models cartoon images, is a given data discrepancy, a forward model and we define the parameter balancing function

| 11 |

Now we get the following general existence result.

Proposition 9

Assume that is convex, lower semi-continuous and that there exists a finite-dimensional subspace such that for any , , ,

with and denoting the complement of U in . Further assume that , is convex, lower semi-continuous and coercive on the finite-dimensional space A(U) in the sense that for any two sequences , such that , is bounded and is bounded, also is bounded. Then, there exists a solution to (P).

Remark 10

Note that, for instance, in case satisfies a triangle inequality, the sequence in the coercivity assumption is not needed, i.e., can be chosen to be zero.

Proof

The proof is rather standard, and we provide only a short sketch. Take a minimizing sequence for (P). From Lemma 8, we get that admits a (non-relabeled) weakly convergent subsequence. Now we split and and by assumption get that is bounded. But since is bounded, so is and consequently also . Now we split again , where the latter denotes the complement of , and note that also is a minimizing sequence for (P). Hence, it remains to show that is bounded in order to get a bounded minimizing sequence. To this aim, we note that and that A is injective on this finite-dimensional space. Hence, for some , and by the coercivity assumption on the data term we finally get that is bounded. Hence, also admits a weakly convergent subsequence in and, by continuity of A as well as lower semi-continuity of all involved functionals, existence of a solution follows.

Remark 11

(Choice of regularization) A particular choice of regularization for in (P) that we consider in this paper is , with the second-order total generalized variation functional [9], and

with and for all , the complement of the first-order polynomials, and is invariant on first-order polynomials, the result of Proposition 9 applies.

Remark 12

(Norm-type data terms) We also note that the result of Proposition 9 in particular applies to for any or , where we extend the norms by infinity to whenever necessary. Indeed, lower semi-continuity of these norms is immediate for both and , and since the coercivity is only required on a finite-dimensional space, it also holds by equivalence of norms.

Remark 13

(Inpainting) At last we also remark that the assumptions of Proposition 9 also hold for an inpainting data term defined as

whenever has non-empty interior. Indeed, lower semi-continuity follows from the fact that convergent sequences admit pointwise convergent subsequences and the coercivity follows from finite dimensionality of U and the fact that has non-empty interior.

Remark 14

(Regularization in a general setting) We also note that Lemma 8 provides the basis for employing either directly or its infimal convolution with a suitable cartoon prior as in Proposition 9 for the regularization of general (potentially nonlinear) inverse problems and with multiple data fidelities, see, for instance, [31, 32] for general results in that direction.

The Model in a Discrete Setting

This section deals with the discretization of the proposed model and its numerical solution. For the sake of brevity, we provide only the main steps and refer to the publicly available source code [16] for all details.

We define to be the space of discrete grayscale images, to be the space of coefficient images and to be the space of image atoms for which we assume and, for simplicity, only consider a square domain for the atoms. The tensor product of a coefficient image and a atom is given as and the lifted tensor space is given as the four-dimensional space .

Texture Norm The forward operator K being the lifting of the convolution and mapping lifted matrices to the vectorized image space is then given as

and we refer to Fig. 1 for a visualization in the one-dimensional case. Note that by extending the first two dimensions of the tensor space to , we allow atoms to be placed at any position where they still affect the image, including positions partially outside the image boundary.
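A one-dimensional analogue of the lifted convolution operator K (cf. Fig. 1) can be sketched as follows. This is a minimal sketch under our own indexing assumptions: stride 1, atom start positions ranging from −(n − 1) to N − 1 so that atoms may partially leave the image on either side, and a hypothetical function name. For a rank-one tensor c ⊗ a it should reproduce the "valid" part of the ordinary convolution of c and a.

```python
import numpy as np

def lifted_conv_1d(C, N):
    """Hypothetical 1-D lifted convolution: image = sum of atoms placed at all positions.

    C has shape (N + n - 1, n); row p holds the (coefficient-weighted) atom whose
    first pixel sits at image position p - (n - 1).
    """
    P, n = C.shape
    assert P == N + n - 1, "expected one row per admissible atom position"
    padded = np.zeros(N + 2 * (n - 1))
    for p in range(P):
        # the atom stored in row p covers padded indices p, ..., p + n - 1
        padded[p:p + n] += C[p]
    # keep only the part that lies inside the image domain [0, N)
    return padded[n - 1:n - 1 + N]

# Usage: for a rank-one lifted tensor, K(c (x) a) is a convolution of c and a.
rng = np.random.default_rng(1)
N, n = 10, 3
c = rng.standard_normal(N + n - 1)
a = rng.standard_normal(n)
u = lifted_conv_1d(np.outer(c, a), N)
```

For general (not rank-one) C, each position may carry a different atom, which is exactly what makes the lifted operator linear in C.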

Also we note that, in order to reduce dimensionality and accelerate the computation, we introduce a stride parameter in practice which introduces a stride on the possible atom positions. That is, the lifted tensor space and forward operator are reduced in such a way that the grid of possible atom positions in the image is essentially . This reduces the dimension of the tensor space by a factor , while for it naturally does not allow for arbitrary atom positions anymore and for it corresponds to only allowing non-overlapping atoms. In order to allow for atoms being placed next to each other, it is important to choose to be a divisor of the atom-domain size n and we used and in all experiments of the paper. In order to avoid extensive indexing and case distinctions, however, we only consider the case here and refer to the source code [16] for the general case.

A straightforward computation shows that, in the discrete lifted tensor space, the projective norm corresponding to discrete and norms for the coefficient images and atoms, respectively, is given as a mixed 1-2 norm as

The nuclear norm for a potential on the other hand reduces to the evaluation of on the singular values of a matrix reshaping of the lifted tensors and is given as

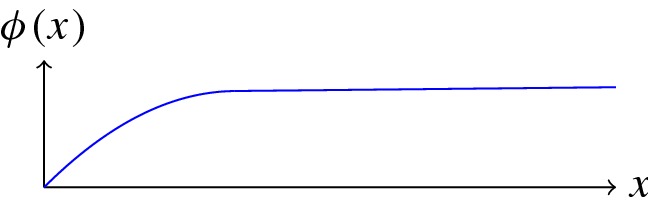

where denotes a reshaping of the tensor C to a matrix of dimensions . For the potential function , we consider two choices: Mostly we are interested in which yields a convex texture model and enforces sparsity of the singular values. A second choice we consider is given as

| 12 |

where , and , see Fig. 3. It is easy to see that fulfills the assumptions of Lemma 7 and that is semi-convex, i.e., is convex for . While the results of Sect. 3 hold for this setting even without the semi-convexity assumption, we cannot in general expect to obtain an algorithm that provably delivers a globally optimal solution in the semi-convex (or generally non-convex) case. The reason for using a semi-convex potential rather than an arbitrary non-convex one is twofold: First, for a suitably small stepsize the proximal mapping

is well defined and hence proximal-point-type algorithms are applicable at least conceptually. Second, since we employ on the singular values of the lifted matrices C, it will be important for numerical feasibility of the algorithm that the corresponding proximal mapping on C can be reduced to a proximal mapping on the singular values. While this is not obvious for a general choice of , it is true (see Lemma 15) for semi-convex with suitable parameter choices.

Fig. 3.

Visualization of the potential .
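Both texture penalties of the discrete model can be evaluated on a lifted tensor of shape (P, P, n, n) with a few lines of NumPy. The sketch assumes the identity potential by default (the convex case, in which the penalty is the nuclear norm of the reshaped matrix); a different potential can be passed as `phi`, but the concrete form of the semi-convex potential (12) is not reproduced here. The function name is hypothetical.

```python
import numpy as np

def texture_norms(C, phi=lambda s: s):
    """Return (mixed 1-2 norm, sum_i phi(sigma_i)) for a lifted tensor C of shape (P1, P2, n1, n2).

    Hypothetical helper; phi defaults to the identity (nuclear norm).
    """
    P1, P2, n1, n2 = C.shape
    # projective norm for l1 (positions) x l2 (atom pixels):
    # sum over all positions of the Euclidean norm of the atom stored there
    mixed = np.sqrt((C ** 2).sum(axis=(2, 3))).sum()
    # matrix reshaping: rows = positions, columns = atom pixels
    B = C.reshape(P1 * P2, n1 * n2)
    sigma = np.linalg.svd(B, compute_uv=False)
    return mixed, np.sum(phi(sigma))

# Usage on a rank-one lifted tensor c (x) a.
rng = np.random.default_rng(2)
c = rng.standard_normal((6, 6))
a = rng.standard_normal((3, 3))
C = c[:, :, None, None] * a[None, None, :, :]
mixed, nuc = texture_norms(C)
```

For a rank-one tensor, the mixed norm equals ‖c‖₁‖a‖₂ while the nuclear norm equals ‖c‖₂‖a‖₂, which shows once more that only the latter is insensitive to how the coefficient mass is spread.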

Cartoon Prior As cartoon prior we employ the second-order total generalized variation functional which we define for fixed parameters and a discrete image as

Here, and denote discretized gradient and symmetrized Jacobian operators, respectively, and we refer to [8] and the source code [16] for details on a discretization of . To ensure a certain orthogonality of the cartoon and texture parts, we further define the operator M, which incorporates atom constraints by evaluating the 0th and 1st moments of the atoms and which in the lifted setting yields

The discrete version of (P) is then given as

| DP |

where the parameter balancing functions are given as in (11) and the model depends on three parameters , with defining the trade-off between data and regularization, defining the trade-off between the cartoon and the texture parts and defining the trade-off between sparsity and low rank of the tensor C.
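The atom constraint encoded by M can be illustrated on a single n × n atom. Assuming that the constraint requires the 0th and 1st moments to vanish (which makes atoms orthogonal to first-order polynomials, the functions on which the TGV cartoon prior vanishes), the orthogonal projection onto the constraint set subtracts the least-squares affine fit. The helper names are hypothetical, and this is not necessarily how the source code [16] implements the constraint.

```python
import numpy as np

def moment_basis(n):
    """Orthonormal basis (columns) of span{1, x, y} on an n x n grid."""
    y, x = np.mgrid[0:n, 0:n].astype(float)
    A = np.stack([np.ones(n * n), x.ravel(), y.ravel()], axis=1)
    Q, _ = np.linalg.qr(A)  # reduced QR: Q has shape (n*n, 3)
    return Q

def project_zero_moments(atom):
    """Orthogonal projection of a square atom onto {0th and 1st moments = 0}."""
    n = atom.shape[0]
    Q = moment_basis(n)
    v = atom.ravel()
    return (v - Q @ (Q.T @ v)).reshape(n, n)

def moments(atom):
    """0th moment and the two 1st moments of a square atom."""
    n = atom.shape[0]
    y, x = np.mgrid[0:n, 0:n].astype(float)
    return np.array([atom.sum(), (x * atom).sum(), (y * atom).sum()])

# Usage on a random 15 x 15 atom.
rng = np.random.default_rng(3)
atom = rng.standard_normal((15, 15))
p = project_zero_moments(atom)
```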

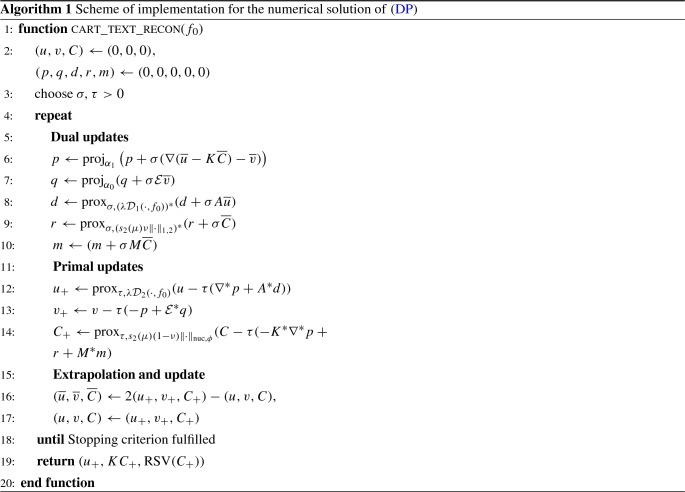

Numerical Solution For the numerical solution of (DP), we employ the primal–dual algorithm of [17]. Since the concrete form of the algorithm depends on whether the proximal mapping of the data term is explicit or not, in order to allow for a unified version as in Algorithm 1, we replace the data term by

where we assume the proximal mappings of to be explicit and, depending on the concrete application, set either or to be the constant zero function.

Denoting by the convex conjugate of a function g, with being the standard inner product, i.e., the sum of all pointwise products of entries of v and w, we reformulate (DP) as a saddle-point problem:

Here, the dual variables are in the image space of the corresponding operators, if and else, with a pointwise infinity norm on . The operator E and the functional are given as

and summarizes all the conjugate functionals as above. Applying the algorithm of [17] to this reformulation yields the numerical scheme as in Algorithm 1.
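The primal–dual algorithm of [17] alternates a dual proximal step, a primal proximal step and an extrapolation step. As a minimal sketch of this structure (not Algorithm 1 itself, which acts on the full saddle-point problem above), the following applies the same iteration to a toy 1-D total-variation denoising problem min_x ½‖x − f‖² + λ‖Dx‖₁ with forward differences D. The step sizes satisfy τσ‖D‖² < 1 using ‖D‖² ≤ 4, and all names are hypothetical.

```python
import numpy as np

def grad(x):
    """Forward differences D x (length len(x) - 1)."""
    return np.diff(x)

def grad_adj(y):
    """Adjoint D^T y of the forward differences, i.e. (D^T y)_i = y_{i-1} - y_i."""
    return np.concatenate([[0.0], y]) - np.concatenate([y, [0.0]])

def tv_denoise_pd(f, lam, tau=0.45, sigma=0.45, iters=5000):
    """Hypothetical toy primal-dual solver for min_x 0.5*||x - f||^2 + lam*||D x||_1.

    tau * sigma * ||D||^2 <= 0.45 * 0.45 * 4 = 0.81 < 1.
    """
    x = f.astype(float).copy()
    x_bar = x.copy()
    y = np.zeros(len(f) - 1)
    for _ in range(iters):
        # dual step: prox of the conjugate of lam*||.||_1 is the projection onto [-lam, lam]
        y = np.clip(y + sigma * grad(x_bar), -lam, lam)
        # primal step: prox of tau * 0.5*||. - f||^2 in closed form
        x_new = (x - tau * grad_adj(y) + tau * f) / (1.0 + tau)
        # extrapolation with theta = 1
        x_bar = 2.0 * x_new - x
        x = x_new
    return x

# Usage: a noise-free unit step with plateaus of length 10.
f = np.concatenate([np.zeros(10), np.ones(10)])
x = tv_denoise_pd(f, lam=0.5)
```

For this input, the exact minimizer moves each plateau towards the other by λ/10, i.e., to 0.05 and 0.95, which the iteration reproduces.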

Note that we set either such that the dual variable d is constant 0 and line 9 of the algorithm can be skipped, or we set such that the proximal mapping in line 13 reduces to the identity. The concrete choice of and the proximal mappings will be given in the corresponding experimental sections. All other proximal mappings can be computed explicitly and reasonably fast: The mappings and can be computed as pointwise projections to the -ball (see, for instance, [8]) and the mapping is a similar projection given as

Most of the computational effort lies in the computation of , which, as the following lemma shows, can be computed via an SVD and a proximal mapping on the singular values.

Lemma 15

Let be a differentiable and increasing function and be such that is convex on . Then, the proximal mapping of for parameter is given as

where is the SVD of and for

In particular, in case we have

and in case

we have that is convex whenever and in this case

Proof

At first note that it suffices to consider as a function on matrices and show the assertion without the reshaping operation. For any matrix B, we denote by the SVD of B and contains the singular values sorted in non-increasing order, where is uniquely determined by B and are chosen to be suitable orthonormal matrices.

We first show that is convex. For , matrices, we get by subadditivity of the singular values (see, for instance, [60]) that

Now with we get that , and thus, also is convex. Hence, first-order optimality conditions are necessary and sufficient and we get (using the derivative of the singular values as in [45]) with the derivative of that is equivalent to

and consequently to

which is equivalent to

as claimed. The other results follow by direct computation.
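In the convex case, the proximal mapping of Lemma 15 reduces to the well-known singular value soft-thresholding. A minimal sketch, assuming the identity potential and with hypothetical function names, including the reshaping of a lifted tensor to a (positions × atom pixels) matrix:

```python
import numpy as np

def prox_nuclear(B, tau):
    """Prox of tau * ||.||_* : soft-threshold the singular values of B."""
    U, s, Vt = np.linalg.svd(B, full_matrices=False)
    s_shrunk = np.maximum(s - tau, 0.0)
    # U * s_shrunk scales the j-th column of U by s_shrunk[j]
    return (U * s_shrunk) @ Vt

def prox_lifted(C, tau):
    """Apply prox_nuclear to a lifted tensor of shape (P1, P2, n1, n2) via reshaping."""
    P1, P2, n1, n2 = C.shape
    B = C.reshape(P1 * P2, n1 * n2)
    return prox_nuclear(B, tau).reshape(C.shape)

# Usage: a diagonal matrix diag(3, 1) with tau = 2 becomes diag(1, 0).
D = prox_nuclear(np.diag([3.0, 1.0]), 2.0)
```

The cost is dominated by one SVD of the reshaped matrix, which is why this step carries most of the computational effort.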

Note also that, in Algorithm 1, returns the part of the image that is represented by the atoms (the “texture part”), and stand for right-singular vectors of and returns the image atoms. For the sake of simplicity, we use a rather high, fixed number of iterations in all experiments but note that, alternatively, a duality-gap-based stopping criterion (see, for instance, [8]) could be used.
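Since the columns of the reshaped matrix index atom pixels, the atoms shown in the figures correspond to right-singular vectors. A sketch of extracting the k most important atoms from a lifted tensor, assuming the same (positions × atom pixels) reshaping as in the nuclear norm; `learned_atoms` is a hypothetical name, and the sign of each atom is arbitrary.

```python
import numpy as np

def learned_atoms(C, k=9):
    """Atoms (and singular values) for the k largest singular values of the reshaped tensor."""
    P1, P2, n1, n2 = C.shape
    B = C.reshape(P1 * P2, n1 * n2)
    _, s, Vt = np.linalg.svd(B, full_matrices=False)
    k = min(k, len(s))
    # right-singular vectors reshaped back to n1 x n2 images (unit l2 norm each)
    return Vt[:k].reshape(k, n1, n2), s[:k]

# Usage: for a rank-one tensor c (x) a, the first atom is +/- a / ||a||.
rng = np.random.default_rng(8)
c = rng.standard_normal((5, 5))
a = rng.standard_normal((3, 3))
C = c[:, :, None, None] * a[None, None, :, :]
atoms, s = learned_atoms(C, k=1)
```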

Numerical Results

In this section, we present numerical results obtained with the proposed method as well as its variants and compare to existing methods. We will mostly focus on the setting of (DP), where , and we use different data terms . Hence, the regularization term is convex and consists of for the cartoon part and a weighted sum of a nuclear norm and norm for the texture part. Besides this choice of regularization (called CT-cvx), we will compare to pure regularization (called TGV), the setting of (DP) with the semi-convex potential as in (12) (called CT-scvx) and the setting of (DP) with replaced by , i.e., only the texture norm is used for regularization, and (called TXT). Further, in the last subsection, we also compare to other methods as specified there. For CT-cvx and CT-scvx, we use the algorithm described in the previous section (where convergence can only be ensured for CT-cvx), and for the other variants we use an adaptation of the algorithm to the respective special case.

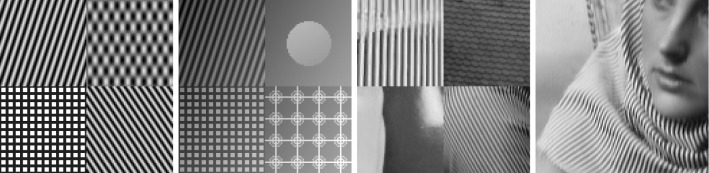

We fix the size of the atom domain to pixels and the stride to 3 pixels (see Sect. 4) for all experiments and use four different test images (see Fig. 4): The first two are synthetic images of size , containing four different blocks of size , whose size is a multiple of the chosen atom-domain size. The third and fourth images have size (not being a multiple of the atom-domain size), and the third image contains four sections of real images of size each (again not a multiple of the atom-domain size). All but the first image contain a mixture of texture and cartoon parts. The first four subsections consider only convex variants of our method (), and the last one considers the improvement obtained with a non-convex potential and also compares to other approaches.

Fig. 4.

Different test images we will refer to as: Texture, Patches, Mix, Barbara

Regarding the choice of parameters for all methods, we generally aimed to reduce the number of varying parameters for each method as much as possible, such that for each method and type of experiment, at most two parameters need to be optimized. Whenever we incorporate the second-order TGV functional for the cartoon part, we fix the parameters to . The method CT-cvx then essentially depends on the three parameters . We found that the choice of is rather independent of the data and type of experiment; hence, we leave it fixed for all experiments with incomplete or corrupted data, leaving our method with two parameters to be adapted: defining the trade-off between data and regularization and defining the trade-off between cartoon and texture regularization. For the semi-convex potential, we choose as with the convex one, fix and use two different choices of , depending on the type of experiment, hence again leaving two parameters to be adapted. A summary of the parameter choice for all methods is provided in Table 2.

Table 2.

Parameter choice for all methods and experiments used in the paper. The rows refer to decomposition (Dcp.), inpainting (Inp.), denoising (Den.) and deconvolution (Dcv.). Here, always defines the trade-off between data fidelity and regularization, defines the trade-off between cartoon and texture, defines the trade-off between the 1/2 norm and the penalization of singular values, and defines the degree of non-convexity for the semi-convex potential. Whenever a parameter was optimized over a certain range for each experiment, we write opt.

| CT-cvx | CT-scvx | TGV | TXT | BM3D | CL | CDL | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Dcp. | – | opt | 0.95 | – | 0.75 | |||||||||

| Inp. | – | opt | 0.975 | - | opt | 0.975 | 0.1 | – | – | 0.975 | ||||

| Den. | opt | opt | 0.975 | opt | opt | 0.975 | 2.0 | opt | opt | 0.975 | opt | opt | opt | opt |

| Dcv. | opt | opt | 0.975 | opt | ||||||||||

We also note that, whenever we tested a range of different parameters for any method presented below, we show the visually best results in the figures. These are generally not the ones delivering the best result in terms of peak signal-to-noise ratio (PSNR); for the sake of completeness, we therefore also provide in Table 1 the best PSNR obtained with each method and each experiment over the range of tested parameters.

Table 1.

Best PSNR result achieved with each method for the parameter test range as specified in Table 2

| | Texture | Patches | Mix | Barbara |
|---|---|---|---|---|
| Inpainting | | | | |
| TGV | 10.32 | 19.28 | 20.19 | 20.58 |
| TXT/CT-cvx | **17.59** | 25.55 | **23.38** | 23.48 |
| CT-scvx | – | **32.74** | – | **23.6** |
| Denoising | | | | |
| TGV | 11.83 | 23.96 | 23.74 | 23.99 |
| TXT/CT-cvx | **16.06** | 25.91 | **26.07** | 25.0 |
| CT-scvx | – | 29.4 | – | 25.56 |
| CL | – | 29.09 | – | 25.14 |
| BM3D | – | **30.82** | – | **28.15** |
| CDL | – | 27.96 | – | 25.24 |
| Deconvolution | | | | |
| TGV | – | – | 23.72 | 23.14 |
| CT-cvx | – | – | **24.52** | **23.34** |

The best result for each experiment is written in bold
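For reference, PSNR values such as those in Table 1 are commonly computed as 10 log10(R²/MSE). The paper does not state its exact convention, so the sketch below assumes R to be the intensity range of the ground truth; the function name is hypothetical.

```python
import numpy as np

def psnr(x, ref, data_range=None):
    """Peak signal-to-noise ratio (dB) of x w.r.t. the ground truth ref.

    Assumption: data_range defaults to max(ref) - min(ref).
    """
    ref = np.asarray(ref, dtype=float)
    x = np.asarray(x, dtype=float)
    if data_range is None:
        data_range = ref.max() - ref.min()
    mse = np.mean((x - ref) ** 2)
    return 10.0 * np.log10(data_range ** 2 / mse)

# Usage: a constant error of 0.1 on an image with range 1 gives an MSE of 0.01, i.e. 20 dB.
ref = np.array([0.0, 1.0])
value = psnr(ref + 0.1, ref)
```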

Image-Atom-Learning and Texture Separation

As a first experiment, we test the method CT-cvx for learning image atoms and texture separation directly on the ground truth images. To this aim, we use

and the proximal mapping of is a simple projection to . The results can be found in Fig. 5, where for the pure texture image we used only the texture norm (i.e., the method TXT) without the TGV part for regularization.

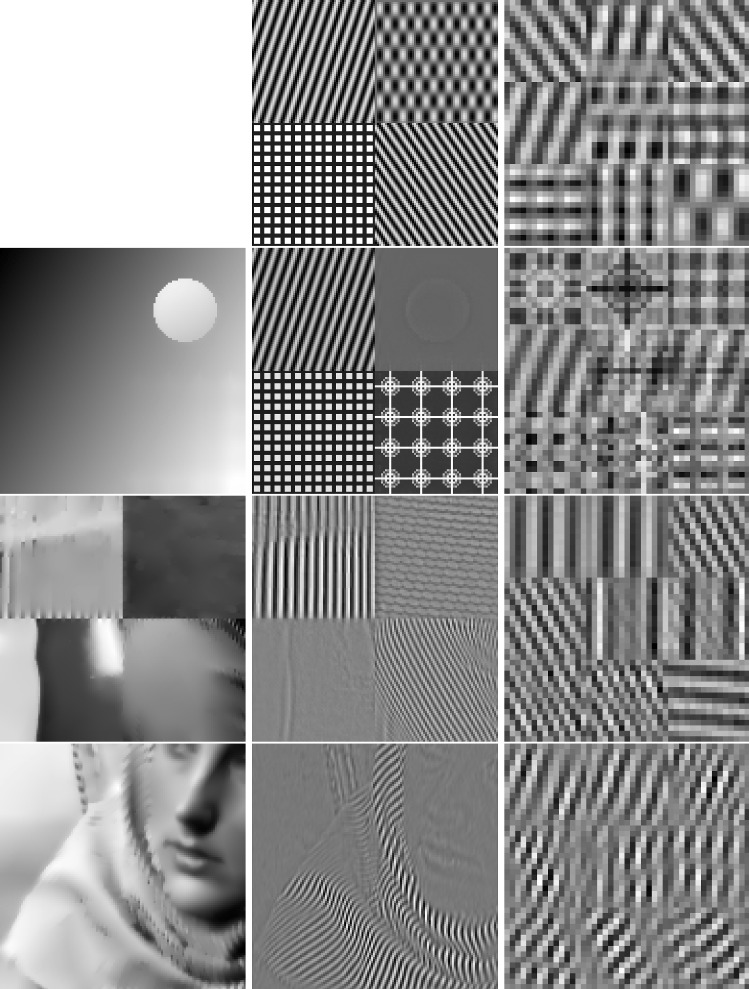

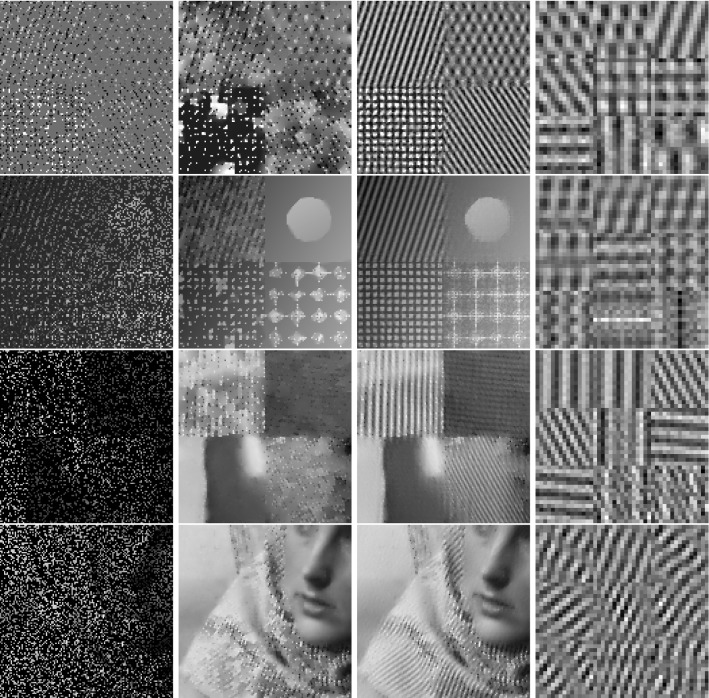

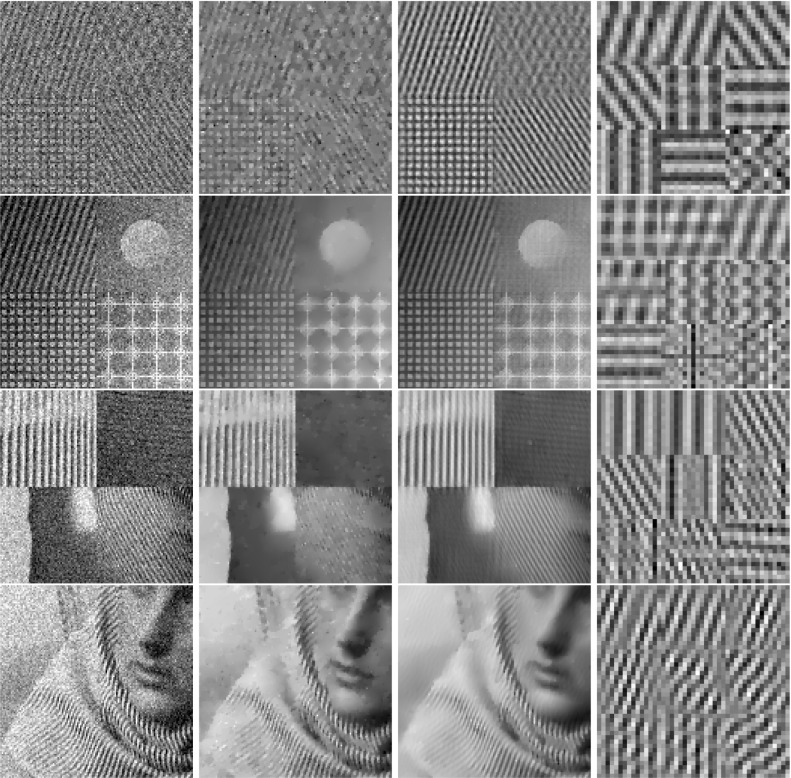

Fig. 5.

Cartoon–texture decomposition (rows 2–4) and nine most important learned atoms for different test images and the methods TXT (row 1) and CT-cvx (rows 2–4)

It can be observed that the proposed method achieves a good decomposition of cartoon and texture and is also able to learn the most important image structures effectively. While there are some repetitions of shifted structures in the atoms, the different structures are rather well separated, and the first nine atoms corresponding to the nine largest singular values still contain the most important features of the texture parts.

Inpainting and Learning from Incomplete Data

This section deals with the task of inpainting a partially available image and learning image atoms from these incomplete data. For reference, we also provide results with pure regularization (the method TGV). The data fidelity in this case is

with E the index set of known pixels and the proximal mapping of is a projection to on all points in E. Again we use only the texture norm for the first image (the method TXT) and the cartoon–texture functional for the others.
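With E given as a boolean mask, this proximal mapping is a simple projection that overwrites the known pixels with the data and leaves all other pixels untouched; it does not depend on the step size. A minimal sketch with a hypothetical function name and a randomly drawn mask:

```python
import numpy as np

def prox_inpainting(u, f, known):
    """Projection onto {u : u = f on E}, where E is the boolean mask `known`."""
    return np.where(known, f, u)

rng = np.random.default_rng(6)
f = rng.random((8, 8))            # observed data (only meaningful on E)
known = rng.random((8, 8)) < 0.2  # hypothetical mask with roughly 20% known pixels
u = rng.random((8, 8))            # current iterate
v = prox_inpainting(u, f, known)
```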

The results can be found in Fig. 6. For the first and third images, 20% of the pixels were given, while for the other two, 30% were given. It can be seen that our method is generally still able to identify the underlying pattern of the texture part and to reconstruct it reasonably well. Also, the learned atoms are reasonable and in accordance with the ones learned from the full data in the previous section. In contrast, the TGV reconstruction (which assumes piecewise smoothness) cannot recover the texture patterns. For the cartoon part, both methods are comparable. It can also be observed that the target-like structure in the bottom right of the second image is neither reconstructed well nor well identified by the atoms (only the eighth one contains parts of this structure). The reason might be that, due to the size of the repeating structure, there is not enough redundant information available to reconstruct it from the incomplete data. Concerning the optimal PSNR values of Table 1, we observe a rather strong improvement with CT-cvx compared to TGV.

Fig. 6.

Image inpainting from incomplete data. From left to right: Data, TGV-based reconstruction, proposed method (only TXT in first row), nine most important learned atoms. Rows 1, 3: 20% of pixels, rows 2, 4: 30% of pixels

Learning and Separation Under Noise

In this section, we test our method for image-atom-learning and denoising with data corrupted by Gaussian noise (with standard deviation 0.5 and 0.1 times the image range for the Texture and the other images, respectively). Again we compare to TGV regularization in this section (but also to other methods in Sect. 5.5) and use the texture norm for the first image (the method TXT). The data fidelity in this case is

and .

The results are provided in Fig. 7. It can be observed that even in the presence of rather strong noise, our method is able to capture some of the main features of the image in the learned atoms. Also, the quality of the reconstructed image is improved compared to TGV, in particular for the right-hand side of the Mix image, where the top left structure is only visible in the result obtained with CT-cvx. On the other hand, the circle of the Patches image obtained with CT-cvx contains some artifacts of the texture part. Regarding the optimal PSNR values of Table 1, the improvement with CT-cvx compared to TGV is still rather significant.

Fig. 7.

Denoising and atom learning. From left to right: Noisy data, TGV-based reconstruction, proposed method (only TXT for the first image), nine most important learned atoms

Deconvolution

This section deals with the learning of image features and image reconstruction in an inverse problem setting, where the forward operator is given as a convolution with a Gaussian kernel (standard deviation 0.25, kernel size pixels), and the data are degraded by Gaussian noise with standard deviation 0.025 times the image range. The data fidelity in this case is

with A being the convolution operator, and .
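The primal–dual scheme needs both A and its adjoint. A minimal sketch of a Gaussian blur and its adjoint with zero boundary extension follows; the kernel size, the boundary handling and the interpretation of the standard deviation in pixel units are assumptions, since they are not fully specified here, and the function names are hypothetical. A dot-product test confirms the adjoint.

```python
import numpy as np

def gaussian_kernel(size, std):
    """Normalized separable 2-D Gaussian kernel of odd size `size`."""
    r = size // 2
    t = np.arange(-r, r + 1, dtype=float)
    g = np.exp(-t ** 2 / (2.0 * std ** 2))
    h = np.outer(g, g)
    return h / h.sum()

def blur(u, h):
    """A u: 'same'-size correlation with h and zero boundary extension."""
    r = h.shape[0] // 2
    N1, N2 = u.shape
    up = np.pad(u, r)  # zero padding on all sides
    out = np.zeros_like(u, dtype=float)
    for a in range(h.shape[0]):
        for b in range(h.shape[1]):
            out += h[a, b] * up[a:a + N1, b:b + N2]
    return out

def blur_adjoint(v, h):
    """A^T v: scatter v back with the same weights, then crop the padding."""
    r = h.shape[0] // 2
    N1, N2 = v.shape
    vp = np.zeros((N1 + 2 * r, N2 + 2 * r))
    for a in range(h.shape[0]):
        for b in range(h.shape[1]):
            vp[a:a + N1, b:b + N2] += h[a, b] * v
    return vp[r:r + N1, r:r + N2]

# Usage: dot-product test <A u, v> = <u, A^T v> with a hypothetical 5 x 5 kernel.
rng = np.random.default_rng(7)
h = gaussian_kernel(5, 1.0)
u = rng.standard_normal((12, 10))
v = rng.standard_normal((12, 10))
lhs = np.sum(blur(u, h) * v)
rhs = np.sum(u * blur_adjoint(v, h))
```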

We show results for the Mix and the Barbara image and compare to TGV in Fig. 8. It can be seen that the improvement is comparable to the denoising case. In particular, the method is still able to learn reasonable atoms from the given blurry data, and the improvement is quite significant for the texture parts.

Fig. 8.

Reconstruction from blurry and noisy data. From left to right: Data, TGV, proposed, learned atoms

Comparison

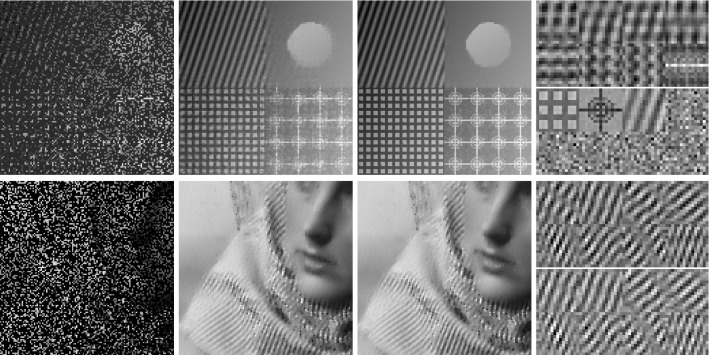

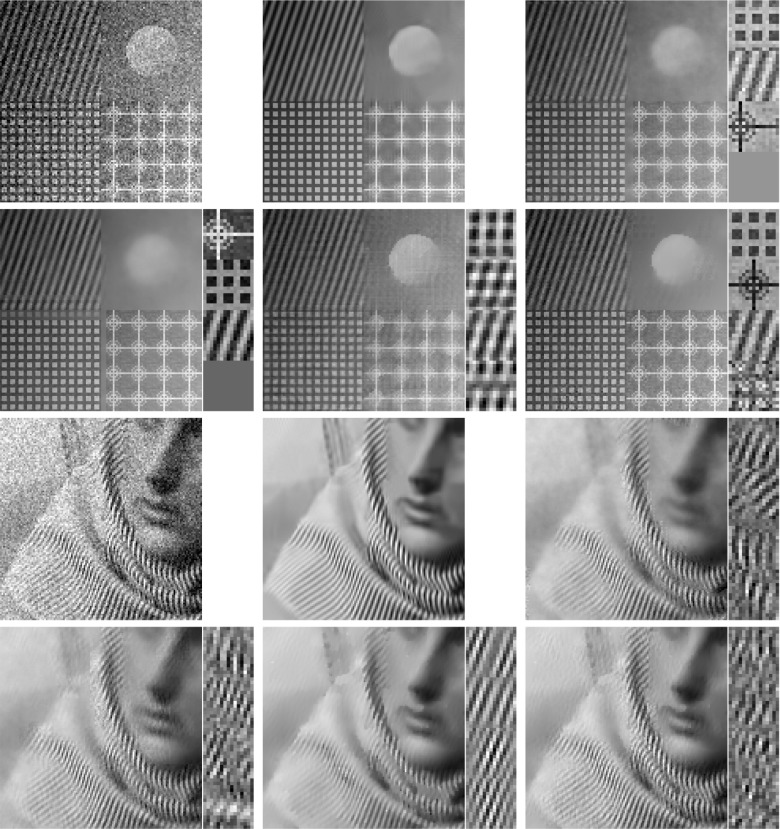

This section compares the method CT-cvx to its semi-convex variant CT-scvx and to other methods. First, we consider the learning of atoms from incomplete data and image inpainting in Fig. 9. It can be seen there that, for the Patches image, the semi-convex variant achieves an almost perfect result: it learns exactly the three atoms that compose the texture part of the image and inpaints the image very well. For the Barbara image, where more atoms are necessary to synthesize the texture part, the two methods yield similar results, and the learned atoms are also similar. These results are also reflected in the PSNR values of Table 1, where CT-scvx is more than 7 dB better for the Patches image and achieves only a slight improvement for Barbara.
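To put the reported decibel differences into perspective: assuming the standard definition PSNR = 10 log10(R²/MSE) with R the data range (the text does not spell out the exact definition used for Table 1), a gain of 7 dB corresponds to reducing the mean squared error by a factor of 10^0.7 ≈ 5. A minimal sketch; the function `psnr` is illustrative, not the authors' evaluation code.

```python
import numpy as np

def psnr(reference, estimate, data_range=None):
    """Peak signal-to-noise ratio in dB, 10 * log10(R^2 / MSE)."""
    reference = np.asarray(reference, dtype=float)
    estimate = np.asarray(estimate, dtype=float)
    if data_range is None:
        # Assumption: R is the range of the reference image.
        data_range = reference.max() - reference.min()
    mse = np.mean((reference - estimate) ** 2)
    return 10.0 * np.log10(data_range ** 2 / mse)

reference = np.zeros((4, 4))
reference[0, 0] = 1.0                 # data range R = 1
estimate = reference + 0.1            # MSE = 0.01
value = psnr(reference, estimate)     # 10 log10(1 / 0.01), about 20 dB
```

Dividing the error by sqrt(10) lowers the MSE by a factor of 10 and thus raises the PSNR by exactly 10 dB.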

Fig. 9.

Comparison of CT-cvx and CT-scvx for inpainting with 30% of the pixels given. From left to right: Data, convex, semi-convex, convex atoms (top), semi-convex atoms (bottom)

Next, we consider the semi-convex variant CT-scvx for denoising the Patches and Barbara images of Fig. 7. In this setting, other methods are also applicable, and we compare to our own implementation of a variant of the convolutional Lasso algorithm (called CL), to BM3D denoising [22] (called BM3D) and to a reference implementation for convolutional dictionary learning (called CDL). For CL, we strive to solve the non-convex optimization problem

where are coefficient images, are atoms and k is the number of used atoms. Note that we use the same boundary extension, atom-domain size and stride variable as in the methods CT-cvx and CT-scvx, and that denotes a discrete TV functional with a slight smoothing of the norm to make it differentiable (see the source code [16] for details). To solve this problem, we use an adaptation of the algorithm of [48]. For BM3D, we use the implementation obtained from [34]. For CDL, we use a convolutional dictionary learning implementation provided by the SPORCO library [56, 64]; more precisely, we adapted the convolutional dictionary learning example (cbpdndl_cns_gry), which uses a dictionary learning algorithm (dictlrn.cbpdndl.ConvBPDNDictLearn) based on the ADMM consensus dictionary update [28, 57]. Note that CDL addresses the same problem as CL; however, instead of including the TV component, the image is high-pass filtered prior to dictionary learning using Tikhonov regularization.
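The smoothed TV term used in CL can be sketched as follows. The precise smoothing used in [16] is not stated here; as an assumption, the Euclidean norm of the discrete gradient is replaced by sqrt(|·|² + ε²), and forward differences with Neumann boundary conditions are used. The names `grad`, `div` and `smoothed_tv` are illustrative. The gradient of the functional is obtained via the adjoint of the discrete gradient, which is what makes the smoothed term usable in a gradient-based scheme.

```python
import numpy as np

def grad(u):
    """Forward differences; the last difference in each direction is set to zero (Neumann)."""
    gx = np.zeros(u.shape)
    gy = np.zeros(u.shape)
    gx[:-1, :] = u[1:, :] - u[:-1, :]
    gy[:, :-1] = u[:, 1:] - u[:, :-1]
    return gx, gy

def div(px, py):
    """Discrete divergence, the negative adjoint of grad: <grad u, p> = -<u, div p>."""
    d = np.zeros(px.shape)
    d[:-1, :] += px[:-1, :]
    d[1:, :] -= px[:-1, :]
    d[:, :-1] += py[:, :-1]
    d[:, 1:] -= py[:, :-1]
    return d

def smoothed_tv(u, eps=1e-2):
    """Return sum_ij sqrt(|grad u|_ij^2 + eps^2) and its gradient with respect to u."""
    gx, gy = grad(u)
    mag = np.sqrt(gx ** 2 + gy ** 2 + eps ** 2)
    # Chain rule: d/du = grad^T (grad u / mag) = -div(grad u / mag).
    return mag.sum(), -div(gx / mag, gy / mag)

rng = np.random.default_rng(1)
u = rng.random((6, 5))
value, g = smoothed_tv(u)
```

Note that with this smoothing, a constant image has the small nonzero value (number of pixels) times ε rather than zero.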

Remark 16

We note that, while we provide the comparison to BM3D in order to have a reference on achievable denoising quality, we do not aim to propose an improved denoising method that is comparable to BM3D. In contrast to BM3D, our method constitutes a variational (convex) approach that is generally applicable to inverse problems and for which we were able to provide a detailed analysis in function space, such that, in particular, stability and convergence results for vanishing noise can be proven. Furthermore, beyond mere image reconstruction, we regard the ability of simultaneous image-atom-learning and cartoon–texture decomposition as an important feature of our approach.

Results for the Patches and Barbara images can be found in Fig. 10, where for CL and CDL we allowed for three atoms for the Patches image and tested 3, 5 and 7 atoms for the Barbara image, showing the best result, which was obtained with 7 atoms. Note that for all methods, parameters were chosen and optimized according to Table 2, and for the CDL method we also tested the standard setting of 64 atoms of size , which performed worse than the choice of 7 atoms. In this context, it is important to note that the implementation of CDL was designed for dictionary learning from a set of clean training images, for which it makes sense to learn a large number of atoms. When “misusing” the method for joint learning and denoising, it is natural that the number of admissible atoms needs to be constrained to achieve a regularizing effect.

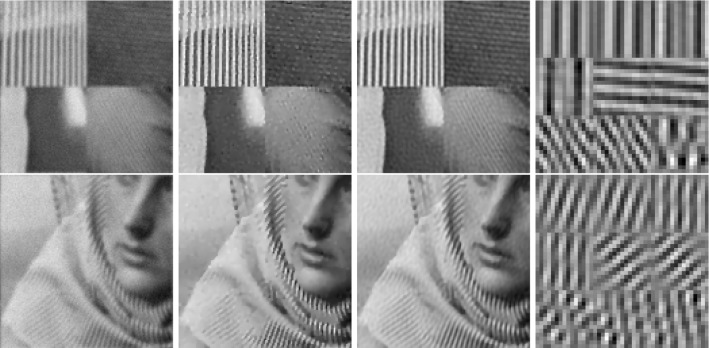

Fig. 10.

Comparison of different methods for denoising the Patches and Barbara images from Fig. 7. First row for each image, from left to right: Noisy data, BM3D, CDL. Second row for each image, from left to right: CL, CT-cvx and CT-scvx. The four most important learned atoms are shown to the right of the image, if applicable

Looking at Fig. 10, it can be seen that, as with the inpainting results, CT-scvx achieves a very strong improvement compared to CT-cvx for the Patches image (obtaining the atoms almost perfectly) and only a slight improvement for the Barbara image. Regarding the Patches image, the CL and CDL methods perform similarly to, but slightly worse than, CT-scvx. While these methods also identify the three main features correctly, the learned atoms are not centered, which leads to artifacts in the reconstruction and might be explained by the methods being stuck in a local minimum. For this image, the result of BM3D is comparable to, but slightly smoother than, the one of CT-scvx. In particular, the target-like structure in the bottom left is not reconstructed very well by BM3D, but it contains less remaining noise. For the Barbara image, BM3D delivers the best result, but a slight over-smoothing is visible. Regarding the PSNR values of Table 1, BM3D performs best and CT-scvx second best (better than CL and CDL), where, in accordance with the visual results, the difference between BM3D and CT-scvx is smaller for the Patches image than for Barbara.

Discussion

Using lifting techniques, we have introduced a (potentially convex) variational approach for learning image atoms from corrupted and/or incomplete data. An important part of our work is the analysis of the proposed model, which shows well-posedness results in function space for a general inverse problem setting. The numerical part shows that our model can indeed effectively learn image atoms from different types of data. While this works well also in a convex setting, moving to a semi-convex setting (which is also captured by our theory) yields a further, significant improvement. While the proposed method can also be regarded solely as an image reconstruction method, we believe its main feature is in fact the ability to learn image atoms from incomplete data in a mathematically well-understood framework. In this context, it is important to note that we expect our approach to work well whenever the non-cartoon part of the underlying image is well described by only a few filters. This is natural, since we learn only from a single dataset, and allowing for a large number of different atoms would remove the regularization effect of our approach.

As discussed in the introduction, the learning of convolutional image atoms is strongly related to deep neural networks, in particular also when using a multilevel setting. Motivated by this, future research questions are an extension of our method in this direction as well as its exploration for classification problems.

Acknowledgements

Open access funding provided by Austrian Science Fund (FWF). MH acknowledges support by the Austrian Science Fund (FWF) (Grant J 4112). TP is supported by the European Research Council under the Horizon 2020 program, ERC starting Grant Agreement 640156.

Biographies

Antonin Chambolle

studied at École Normale Supérieure (Paris) and has a PhD (1993) in applied mathematics from U. Paris Dauphine, supervised by Jean-Michel Morel. After a post-doc at SISSA, Trieste, he worked as a CNRS Junior Scientist at U. Paris Dauphine and then, since 2003, as a CNRS Junior and then Senior Scientist at CMAP, École Polytechnique, Paris. He has also been a French Government Fellow at Churchill College, U. Cambridge (DAMTP) in 2015–16. His research, mostly in mathematical analysis, focuses on calculus of variations, free boundary and free discontinuity problems, interface motion, and numerical optimization, with applications to (fracture) mechanics or imaging.

Martin Holler

received his MSc (2005–2010) and his PhD (2010–2013) with a “promotio sub auspiciis praesidentis rei publicae” in Mathematics from the University of Graz. After research stays at the University of Cambridge, UK, and the École Polytechnique, Paris, he currently holds a University Assistant position at the Institute of Mathematics and Scientific Computing of the University of Graz. His research interests include inverse problems and mathematical image processing, in particular the development and analysis of mathematical models in this context as well as applications in biomedical imaging, image compression and beyond.

Thomas Pock

received his MSc (1998–2004) and his PhD (2005–2008) in Computer Engineering (Telematik) from Graz University of Technology. After a Post-doc position at the University of Bonn, he moved back to Graz University of Technology, where he has been an Assistant Professor at the Institute for Computer Graphics and Vision. In 2013, Thomas Pock received the START prize of the Austrian Science Fund (FWF) and the German Pattern Recognition Award of the German Association for Pattern Recognition (DAGM), and in 2014, he received a Starting Grant from the European Research Council (ERC). Since June 2014, Thomas Pock has been a Professor of Computer Science at Graz University of Technology. The focus of his research is the development of mathematical models for computer vision and image processing as well as the development of efficient convex and non-smooth optimization algorithms.

A Appendix: Tensor Spaces

We recall here some basic results on tensor products of Banach spaces that will be relevant for our work. Most of these results are obtained from [24, 53], to which we refer for further information and a more complete introduction to the topic.

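Throughout this section, let X, Y, Z always be Banach spaces. By $X^*$, we denote the analytic dual of X, i.e., the space of bounded linear functionals from X to $\mathbb{R}$. By $\mathcal{L}(X, Z)$ and $\mathcal{B}(X \times Y, Z)$, we denote the spaces of bounded linear and bilinear mappings, respectively, where the norm for the latter is given by $\|B\| = \sup\{\|B(x, y)\|_Z : \|x\|_X \le 1, \|y\|_Y \le 1\}$. In case the image space is the reals, we write $\mathcal{L}(X)$ and $\mathcal{B}(X \times Y)$.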

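Algebraic Tensor Product The tensor product $x \otimes y$ of two elements $x \in X$, $y \in Y$ can be defined as a linear mapping on the space $\mathcal{B}(X \times Y)$ of bilinear forms on $X \times Y$ via

$$(x \otimes y)(A) = A(x, y) \quad \text{for } A \in \mathcal{B}(X \times Y).$$

The algebraic tensor product $X \otimes Y$ is then defined as the subspace of the space of linear functionals on $\mathcal{B}(X \times Y)$ spanned by elements $x \otimes y$ with $x \in X$, $y \in Y$.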

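Tensor Norms We will use two different tensor norms, the projective and the injective tensor norm (also known as the largest and smallest reasonable cross norm, respectively). The projective tensor norm on $X \otimes Y$ is defined for $u \in X \otimes Y$ as

$$\pi(u) = \inf\Big\{ \sum_{i=1}^n \|x_i\|_X \|y_i\|_Y : u = \sum_{i=1}^n x_i \otimes y_i \Big\}.$$

Note that $\pi$ is indeed a norm and $\pi(x \otimes y) = \|x\|_X \|y\|_Y$ (see [53, Proposition 2.1]). We denote by $X \hat{\otimes}_\pi Y$ the completion of the space $X \otimes Y$ equipped with this norm. The following result gives a useful representation of elements in $X \hat{\otimes}_\pi Y$ and their projective norm.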

Proposition 17

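For $C \in X \hat{\otimes}_\pi Y$ and $\delta > 0$, there exist bounded sequences $(x_n)_n \subset X$, $(y_n)_n \subset Y$ such that

$$C = \sum_{n=1}^\infty x_n \otimes y_n \quad \text{and} \quad \sum_{n=1}^\infty \|x_n\|_X \|y_n\|_Y < \pi(C) + \delta.$$

In particular,

$$\pi(C) = \inf\Big\{ \sum_{n=1}^\infty \|x_n\|_X \|y_n\|_Y : C = \sum_{n=1}^\infty x_n \otimes y_n \Big\}.$$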
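Now for the injective tensor norm, we note that elements of the tensor product $X \otimes Y$ can be viewed as bounded bilinear forms on $X^* \times Y^*$ by associating with a tensor $u = \sum_{i=1}^n x_i \otimes y_i$ the bilinear form $(\phi, \psi) \mapsto \sum_{i=1}^n \phi(x_i)\, \psi(y_i)$, where this association is unique (see [53, Section 1.3]). Hence, $X \otimes Y$ can be regarded as a subspace of $\mathcal{B}(X^* \times Y^*)$, and the injective tensor norm is the norm induced by this space. Thus, for $u = \sum_{i=1}^n x_i \otimes y_i$ the injective tensor norm is given as

$$\varepsilon(u) = \sup\Big\{ \Big| \sum_{i=1}^n \phi(x_i)\, \psi(y_i) \Big| : \phi \in X^*, \ \psi \in Y^*, \ \|\phi\|_{X^*} \le 1, \ \|\psi\|_{Y^*} \le 1 \Big\},$$

and the injective tensor product $X \hat{\otimes}_\varepsilon Y$ is defined as the completion of $X \otimes Y$ with respect to this norm.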
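In finite dimensions, both norms can be computed explicitly. For $X = \mathbb{R}^4$ and $Y = \mathbb{R}^3$ with Euclidean norms, identifying $x \otimes y$ with the outer product $x y^T$, it is a standard fact that the projective norm is the nuclear norm (sum of singular values) and the injective norm is the spectral norm (largest singular value). The sketch below is an illustration of that special case only, with arbitrary random data; it checks the cross-norm property, $\varepsilon \le \pi$, and that the singular value decomposition gives a representation attaining the infimum defining $\pi$ (cf. Proposition 17).

```python
import numpy as np

def projective_norm(U):
    """pi(U) on (R^m, |.|_2) (x) (R^n, |.|_2): the nuclear norm of the matrix U."""
    return np.linalg.norm(U, "nuc")

def injective_norm(U):
    """eps(U) on the same spaces: the spectral norm (largest singular value)."""
    return np.linalg.norm(U, 2)

rng = np.random.default_rng(0)
x, y = rng.standard_normal(4), rng.standard_normal(3)
simple = np.outer(x, y)            # the simple tensor x (x) y as a 4x3 matrix
U = rng.standard_normal((4, 3))    # a generic tensor

# The SVD U = sum_i s_i u_i (x) v_i has cost sum_i |s_i u_i| |v_i| = sum_i s_i,
# which attains the infimum in the definition of pi(U).
Us, s, Vt = np.linalg.svd(U, full_matrices=False)
svd_cost = sum(s[i] * np.linalg.norm(Us[:, i]) * np.linalg.norm(Vt[i]) for i in range(s.size))
# The column-wise representation U = sum_j U[:, j] (x) e_j is admissible but not optimal in general.
column_cost = sum(np.linalg.norm(U[:, j]) for j in range(U.shape[1]))
```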

Tensor Lifting The next result (see [53, Theorem 2.9]) shows that there is a one-to-one correspondence between bounded bilinear mappings from $X \times Y$ to Z and bounded linear mappings from $X \hat{\otimes}_\pi Y$ to Z.

Proposition 18

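For $B \in \mathcal{B}(X \times Y, Z)$ there exists a unique linear mapping $\hat{B} : X \hat{\otimes}_\pi Y \to Z$ such that $\hat{B}(x \otimes y) = B(x, y)$ for all $x \in X$, $y \in Y$. Further, $\hat{B}$ is bounded and the mapping $B \mapsto \hat{B}$ is an isometric isomorphism between the Banach spaces $\mathcal{B}(X \times Y, Z)$ and $\mathcal{L}(X \hat{\otimes}_\pi Y, Z)$.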

Using this isometry, for $B \in \mathcal{B}(X \times Y, Z)$ we will always denote by $\hat{B}$ the corresponding linear mapping on the tensor product.
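As a finite-dimensional illustration (not part of the original argument), take $X = \mathbb{R}^4$, $Y = \mathbb{R}^3$ with Euclidean norms and store tensors as 4×3 matrices, $x \otimes y \leftrightarrow x y^T$. A bilinear form $B(x, y) = x^T M y$ then lifts to the linear functional $\hat{B}(U) = \sum_{ij} M_{ij} U_{ij}$. Using the standard fact that the projective norm is the nuclear norm in this setting, the sketch checks $\hat{B}(x \otimes y) = B(x, y)$ and that $\|\hat{B}\| = \|B\|$ equals the largest singular value of M. The matrix M is an arbitrary example.

```python
import numpy as np

rng = np.random.default_rng(0)
M = rng.standard_normal((4, 3))      # arbitrary example defining B

def B(x, y):
    """Bounded bilinear form B(x, y) = x^T M y on R^4 x R^3."""
    return x @ M @ y

def B_hat(U):
    """Linear lifting of B to R^4 (x) R^3, tensors stored as 4x3 matrices."""
    return np.sum(M * U)

x, y = rng.standard_normal(4), rng.standard_normal(3)
U = rng.standard_normal((4, 3))
# ||B|| = sup_{|x|, |y| <= 1} |x^T M y| is the largest singular value of M; B_hat attains
# the same value at the rank-one tensor u_1 (x) v_1, which has unit projective norm.
Um, s, Vt = np.linalg.svd(M)
top = np.outer(Um[:, 0], Vt[0])
```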

The following result is provided in [53, Proposition 2.3] and deals with the extension of linear operators to the tensor product.

Proposition 19

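Let $S \in \mathcal{L}(X, W)$ and $T \in \mathcal{L}(Y, Z)$, where W is a further Banach space. Then there exists a unique operator $S \otimes T \in \mathcal{L}(X \hat{\otimes}_\pi Y, W \hat{\otimes}_\pi Z)$ such that $(S \otimes T)(x \otimes y) = Sx \otimes Ty$ for all $x \in X$, $y \in Y$. Furthermore, $\|S \otimes T\| = \|S\|\,\|T\|$.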
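With tensors in $\mathbb{R}^4 \otimes \mathbb{R}^3$ stored as 4×3 matrices and Euclidean norms on all factors, Proposition 19 can be made concrete: $S \otimes T$ acts as $U \mapsto S U T^T$, which in vectorized (row-major) form is the Kronecker product `np.kron(S, T)`. The sketch below, an illustration with arbitrary random operators, checks the defining identity on simple tensors and the norm identity with respect to the projective (here nuclear) norm: the bound $\|S U T^T\|_* \le \|S\|\,\|T\|\,\|U\|_*$ holds, and it is attained at the rank-one tensor of top right-singular vectors.

```python
import numpy as np

rng = np.random.default_rng(0)
S = rng.standard_normal((5, 4))      # S in L(R^4, R^5), arbitrary example
T = rng.standard_normal((2, 3))      # T in L(R^3, R^2), arbitrary example

def S_tensor_T(U):
    """(S (x) T)(U) = S U T^T for U in R^4 (x) R^3 stored as a 4x3 matrix."""
    return S @ U @ T.T

x, y = rng.standard_normal(4), rng.standard_normal(3)
U = rng.standard_normal((4, 3))
# Rank-one tensor of top right-singular vectors: it has unit nuclear norm and is
# mapped to sigma_max(S) * sigma_max(T) times a rank-one tensor of unit nuclear norm.
v_S = np.linalg.svd(S)[2][0]
v_T = np.linalg.svd(T)[2][0]
U_top = np.outer(v_S, v_T)
```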

Tensor Space Isometries The following lemma deals with the duality of the injective and the projective tensor products. To this aim, we need the notions of the Radon Nikodým property and the approximation property, for which we refer to [53, Sections 4 and 5] and [24] instead of defining them here. For our purposes, it is important to note that both properties hold for $L^p$-spaces with $1 < p < \infty$, that the Radon Nikodým property holds for reflexive spaces, and that, while we cannot expect the Radon Nikodým property to hold for and , the approximation property does.
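Finite-dimensional spaces are reflexive and have the approximation property, so Lemma 20 applies to them. For $X = \mathbb{R}^4$, $Y = \mathbb{R}^3$ with Euclidean norms (identified with their duals), the lemma reduces to the standard fact that the nuclear norm is the dual of the spectral norm under the Frobenius pairing, and on simple tensors the pairing is $x^*(x)\,y^*(y)$. A numerical sketch with arbitrary random data; the function `pairing` is illustrative.

```python
import numpy as np

def pairing(A, D):
    """Pairing of A in X* (x) Y* with D in X (x) Y: the Frobenius inner product."""
    return np.sum(A * D)

rng = np.random.default_rng(0)
a, b = rng.standard_normal(4), rng.standard_normal(3)   # functionals x*, y*
x, y = rng.standard_normal(4), rng.standard_normal(3)
A = rng.standard_normal((4, 3))
D = rng.standard_normal((4, 3))

# Dual norm of the injective (spectral) norm: sup { <A, D> : ||D||_2 <= 1 }.
# It equals the projective (nuclear) norm of A and is attained at D* = U V^T,
# where A = U diag(s) V^T is the reduced SVD.
Ua, s, Vt = np.linalg.svd(A, full_matrices=False)
D_star = Ua @ Vt
```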

Lemma 20

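Assume that either $X^*$ or $Y^*$ has the Radon Nikodým property and that either $X^*$ or $Y^*$ has the approximation property. Then

$$(X \hat{\otimes}_\varepsilon Y)^* = X^* \hat{\otimes}_\pi Y^*,$$

and for simple tensors $x^* \otimes y^* \in X^* \hat{\otimes}_\pi Y^*$ and $x \otimes y \in X \hat{\otimes}_\varepsilon Y$ the duality pairing is given as

$$\langle x^* \otimes y^*, x \otimes y \rangle = x^*(x)\, y^*(y).$$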

Proof

The identification of the duals is shown in [53, Theorem 5.33]. For the duality pairing, we first note that the action of an element on is given as the action of the associated bilinear form [53, Section 3.4], which for simple tensors can be given as

Now in case also is a simple tensor, i.e., , the action of this bilinear form can be given more explicitly [53, Section 1.3], which yields

The duality between the injective and projective tensor products will be used for compactness assertions on subsets of the latter. To this aim, we note in the following lemma that separability of the individual spaces transfers to the tensor product. As a consequence, in case $X^*$ and $Y^*$ satisfy the assumptions of Lemma 20 and both admit a separable predual, also $X^* \hat{\otimes}_\pi Y^*$ admits a separable predual, and hence bounded sets are weakly* compact.

Lemma 21

Let X, Y be separable spaces. Then both $X \hat{\otimes}_\pi Y$ and $X \hat{\otimes}_\varepsilon Y$ are separable.

Proof

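Take $\{x_n : n \in \mathbb{N}\}$ and $\{y_m : m \in \mathbb{N}\}$ to be dense countable subsets of X and Y, respectively. First note that it suffices to show that any simple tensor $x \otimes y$ can be approximated arbitrarily closely by $x_n \otimes y_m$ with $n, m \in \mathbb{N}$: indeed, simple tensors span $X \otimes Y$, which is dense in both completions, and finite linear combinations of the $x_n \otimes y_m$ with rational coefficients form a countable set. But this is true since (using [53, Propositions 2.1 and 3.1])

$$\|x \otimes y - x_n \otimes y_m\| \le \|(x - x_n) \otimes y\| + \|x_n \otimes (y - y_m)\| = \|x - x_n\|_X \|y\|_Y + \|x_n\|_X \|y - y_m\|_Y,$$

where $\|\cdot\|$ denotes either the projective or the injective norm.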
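The estimate in this proof, $\|x \otimes y - x_n \otimes y_m\| \le \|x - x_n\|_X \|y\|_Y + \|x_n\|_X \|y - y_m\|_Y$, can be checked numerically in the Euclidean case $X = \mathbb{R}^4$, $Y = \mathbb{R}^3$, where the projective and injective norms are the nuclear and spectral norms (a standard fact). As an illustrative assumption, the countable dense sets are taken to be vectors with dyadic rational entries; the helper `dyadic` is hypothetical. The sketch verifies the estimate for both norms and shows that the error vanishes as the approximation is refined.

```python
import numpy as np

rng = np.random.default_rng(0)
x, y = rng.standard_normal(4), rng.standard_normal(3)

def dyadic(v, k):
    """Nearest vector with entries in 2^-k Z; the union over k is countable and dense."""
    return np.round(v * 2 ** k) / 2 ** k

records = []
for k in (2, 6, 10, 14):
    xn, ym = dyadic(x, k), dyadic(y, k)
    # x (x) y - xn (x) ym = (x - xn) (x) y + xn (x) (y - ym)
    D = np.outer(x, y) - np.outer(xn, ym)
    bound = (np.linalg.norm(x - xn) * np.linalg.norm(y)
             + np.linalg.norm(xn) * np.linalg.norm(y - ym))
    # (projective norm, injective norm, right-hand side of the estimate)
    records.append((np.linalg.norm(D, "nuc"), np.linalg.norm(D, 2), bound))
```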

The following result, which can be obtained by direct modification of the result shown at the beginning of [53, Section 3.2], provides an equivalent representation of the injective tensor product in a particular case.

Lemma 22

Denote by the space of compactly supported continuous functions mapping from to X and denote by its completion with respect to the norm . Then, we have that

where the isometry is given as the completion of the isometric mapping defined for as