Abstract

Abnormal emotional brain responses in patients with facial nerve paralysis have not yet been reported. This study investigates this issue by applying a machine-learning algorithm that discriminates the emotional brain activity of patients with facial nerve paralysis from that of healthy controls. In addition, we use an emotion rating task to determine whether the two groups differ in their experience of emotions. MEG signals of 17 healthy controls and 16 patients with facial nerve paralysis were recorded in response to picture stimuli in three emotional categories (pleasant, unpleasant, and neutral). The machine learning technique selected for this study was logistic regression with LASSO regularization. We obtained significant classification performance in all three emotional categories. The best classification performance was achieved with features based on event-related fields in response to the pleasant category, with an accuracy of 0.79 (95% CI (0.70, 0.82)). We also found that patients with facial nerve paralysis rated pleasant stimuli significantly more positively than healthy controls. Our results indicate that the inability to make facial expressions due to peripheral motor paralysis of the face might cause abnormal emotional brain processing and experience of particular emotions.

Keywords: classification, emotion, facial nerve paralysis, LASSO, MEG

1. Introduction

Human facial expressions are an essential part of communication. Specific movements of the eyes, mouth, and brows can convey emotions that are universally understood [1,2]. Facial expressions are believed to directly influence subjective feelings: expressing an emotion strengthens it, whereas suppressing its expression weakens it [3]. This idea is called the facial feedback hypothesis (FFH), and Charles Darwin [4] was one of the first to suggest it. Several studies have supported the FFH. As reported earlier [5], receptors in the facial skin return information to the brain, and when this feedback reaches consciousness, it is perceived as emotion. Izard [6,7] also argued that the perception of an emotional stimulus activates central neural activity in the brain stem, limbic cortex, and hypothalamus; a signal is then sent from the hypothalamus to the facial muscles and subsequently to the brain stem, hypothalamus, limbic system, and thalamus. This concept is consistent with recent studies suggesting that deliberate imitation of facial expressions is linked to neuronal activation in limbic regions such as the amygdala [8,9,10,11], which is connected to the hypothalamus and brain stem regions [12].

The accounts above imply that facial expressions generate a feedback loop to the brain, but what happens to this loop if a person cannot produce facial expressions? The inability to produce facial expressions due to facial nerve injury is called facial nerve paralysis [13]. The facial nerve is involved in controlling facial asymmetries and expressions [14,15], which are mainly associated with the primary sensorimotor area [16,17]. Paralysis of the facial nerve leads to a loss of facial movement feedback and breaks the integrity of the sensorimotor circuit, resulting in impaired connectivity within the cortical facial motor network [18,19,20,21]. These consequences of facial nerve paralysis raise the question of whether healthy controls and patients with facial nerve paralysis differ in how they process emotional stimuli. To address this question, we consider one formulation of the FFH, the necessity hypothesis, which states that facial expressions are “necessary to produce emotional experience” [22]. If the necessity hypothesis is correct, a person with total facial paralysis should not experience emotions [23]. In line with this, a woman with total facial nerve paralysis and normal intelligence was previously reported to be unable to perform a facial expression recognition task [24]. In another study, patients with facial nerve paralysis made at least three more incorrect judgments than the mean of the healthy controls in a facial expression recognition task but showed no significant impairment [25]. In contrast, a case study of a woman with total facial paralysis showed no impairment in facial expression experience and recognition [23]. In a larger sample of 18 adults with total facial paralysis, another study [26] likewise reported no widespread deficits in facial expression recognition.

Overall, these studies provide a wealth of evidence both for and against the necessity hypothesis. Nonetheless, none of them measured the brain signals of patients with facial nerve paralysis and healthy controls in response to emotional stimuli, and, to our knowledge, differences between their emotional brain responses have not yet been reported. It is also unclear whether patients with facial nerve paralysis differ from healthy subjects in band-specific brain activity in response to emotional stimuli. In the present study, we investigate this question by applying a machine learning algorithm that classifies one second of brain activity (measured with MEG) as belonging to either a patient with facial nerve paralysis or a healthy control. The selected machine learning technique is logistic regression with LASSO regularization, which is widely used for classifying high-dimensional data and has achieved high accuracies in emotion classification studies (e.g., [27,28,29]). This method has also outperformed other classification methods in emotion classification studies. For instance, Kim and colleagues [27] reported emotion classification performance with logistic regression that was equivalent to or better than that of related works using support vector machines (SVM) and naïve Bayes. Moreover, an EEG study [28] found that logistic regression with LASSO regularization performed better in emotion classification and over-fitted less than logistic regression alone. The authors also found that their classification performance with LASSO-regularized logistic regression was higher than that reported by other studies using classifiers such as naïve Bayes, Bayes, and SVM. Caicedo and colleagues [29] also classified high vs. low valence and high vs. low arousal emotional brain responses from EEG signals.
They compared logistic regression with LASSO regularization, SVM, and neural networks (NN) and found that LASSO-regularized logistic regression yielded higher accuracies than SVM and NN in both the arousal and valence categories. Beyond this evidence, LASSO performs automatic feature selection by setting the regression coefficients of irrelevant predictors to zero, which often yields more accurate and interpretable estimates than univariate or stepwise methods [30,31,32]. Hence, we used logistic regression with LASSO regularization in our study. The classifications are based on event-related fields (ERFs) and on the power spectra of five brain frequency bands. In addition, we use the Self-Assessment Manikin (SAM; [33]) to determine whether the two groups differ in their experience of different emotions.

2. Materials and Methods

To classify the emotional brain responses of healthy controls and patients with facial nerve paralysis in three categories (pleasant, neutral, and unpleasant), we used the methodology described in the following subsections.

2.1. Subjects

Thirty-three subjects participated in the experiment: 17 healthy controls (11 females; aged 19–33 years; mean age 26.9 years) and 16 patients with facial nerve paralysis (14 females; aged 26–65 years; mean age 45.8 years). Patients were recruited from the Department of Otorhinolaryngology of Jena University Hospital. All subjects had normal or corrected-to-normal vision, and the healthy subjects had no history of neurological or psychiatric disorders. The Beck Depression Inventory (BDI) [34] was administered to the patients; its results and further information about the patients are given in Table 1. All subjects gave written informed consent, and the study was approved by the local Ethics Committee of Jena University Hospital (4415-04/15).

Table 1.

Characteristics of the facial nerve paralysis patients in this study.

| Patient No. | Gender ¹ | Side | Duration of Paralysis (months) | Degree of Paralysis | Type of Paralysis | Reason for Facial Paralysis ² | Beck Depression Inventory (BDI) Score | Depression Severity According to BDI |
|---|---|---|---|---|---|---|---|---|
| 1 | W | Left | 72 | Complete | Chronic | 3 | 10 | Mild |
| 2 | W | Right | 58 | Complete | Chronic | 1 | 12 | Mild |
| 3 | W | Right | 101 | Complete | Chronic | 1 | 1 | Minimal |
| 4 | W | Left | 40 | Complete | Chronic | 1 | 44 | Severe |
| 5 | W | Left | 29 | Complete | Chronic | 1 | 25 | Moderate |
| 6 | W | Right | 79 | Complete | Chronic | 1 | 3 | Minimal |
| 7 | M | Left | 35 | Complete | Acute | 2 | 6 | Minimal |
| 8 | W | Right | 23 | Complete | Acute | 1 | 7 | Minimal |
| 9 | W | Right | 71 | Complete | Chronic | 1 | 8 | Minimal |
| 10 | W | Left | 25 | Complete | Chronic | 1 | 13 | Mild |
| 11 | W | Right | 22 | Complete | Chronic | 2 | 3 | Minimal |
| 12 | W | Right | 21 | Complete | Acute | 1 | 17 | Mild |
| 13 | M | Right | 17 | Complete | Chronic | 1 | 2 | Minimal |
| 14 | W | Left | 99 | Complete | Chronic | 3 | 6 | Minimal |
| 15 | W | Left | 19 | Complete | Acute | 2 | 4 | Minimal |
| 16 | W | Left | 16 | Complete | Chronic | 3 | 15 | Mild |

¹ W: woman; M: man. ² 1 = idiopathic; 2 = inflammation; 3 = post-surgical.

2.2. Stimuli and Design

The stimuli consisted of 180 color pictures selected from the International Affective Picture System (IAPS; [35]), 60 from each of three emotional categories: pleasant, neutral, and unpleasant. The pictures were presented on a white screen in front of the subjects (viewing distance about 80 cm) in three blocks, each containing 20 pictures of each category in pseudo-randomized order. Each picture was presented for 6000 ms, followed by an inter-trial interval varying between 2000 and 6000 ms. The three blocks were presented successively, each followed by a short break that allowed the subject to relax. Subjects were asked to avoid eye blinks and eye movements while viewing the pictures and to remain as motionless as possible. The entire recording lasted about 45 min and was conducted in a magnetically shielded, sound-attenuated room in the Biomagnetic Center of Jena University Hospital.

After the measurement, all 180 pictures were presented again in the same order, and subjects were asked to rate the arousal and valence of each picture. Ratings used the Self-Assessment Manikin (SAM; [33]) on seven-point scales for arousal (1 to 7, relaxed to excited) and valence (1 to 7, pleasant to unpleasant). To test for rating differences between patients and healthy controls, the median ratings of all healthy controls for each picture were compared with the median ratings of the 14 patients for the same pictures using the Wilcoxon rank-sum test. The ratings of two patients were excluded because they did not fully participate in this step.
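The group comparison of ratings can be sketched in Python as follows. It assumes one reading of the text: for each picture, the median rating is taken over the controls and over the 14 patients, and the two resulting samples of per-picture medians are compared with a two-sided Wilcoxon rank-sum test. The helper names and the normal approximation with tie correction are our own illustrative choices; an exact test or a library routine (e.g., MATLAB's `ranksum`) may give slightly different p-values for small samples.

```python
import math
from statistics import median


def per_picture_medians(ratings):
    """ratings[s][p] is subject s's rating of picture p; returns one median per picture."""
    return [median(column) for column in zip(*ratings)]


def average_ranks(values):
    """1-based ranks; tied values share the average of the ranks they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared = (start + end) / 2 + 1  # mean of positions start+1 .. end+1
        for k in range(start, end + 1):
            ranks[order[k]] = shared
        start = end + 1
    return ranks


def rank_sum_test(x, y):
    """Two-sided Wilcoxon rank-sum test, normal approximation with tie correction.

    Returns (W, z, p), where W is the rank sum of sample x.
    """
    n1, n2 = len(x), len(y)
    n = n1 + n2
    pooled = list(x) + list(y)
    w = sum(average_ranks(pooled)[:n1])
    mean_w = n1 * (n + 1) / 2
    tie_sizes = {}
    for v in pooled:
        tie_sizes[v] = tie_sizes.get(v, 0) + 1
    tie_term = sum(t ** 3 - t for t in tie_sizes.values())
    var_w = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if var_w <= 0:  # every value tied: no evidence of a shift
        return w, 0.0, 1.0
    z = (w - mean_w) / math.sqrt(var_w)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail probability
    return w, z, p


# Toy example (hypothetical ratings): 3 controls and 2 patients rating 3 pictures.
controls = [[2, 1, 3], [1, 2, 2], [2, 2, 3]]
patients = [[1, 1, 2], [1, 1, 2]]
w, z, p = rank_sum_test(per_picture_medians(controls), per_picture_medians(patients))
```

Tie handling matters here because ratings lie on a 7-point scale; when all medians are tied (as for the neutral pictures, median 4), the sketch returns p = 1.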

2.3. Data Acquisition and Preprocessing

MEG recordings were obtained using a 306-channel helmet-shaped Elekta Neuromag MEG system (Vectorview, Elekta Neuromag Oy, Helsinki, Finland), comprising 204 gradiometers and 102 magnetometers. Only the data from the 102 magnetometers were analyzed, because in our study the magnetometers provided a higher signal-to-noise ratio (SNR) than the gradiometers. Moreover, because we applied the signal-space separation (SSS) method [39], which estimates the inside components from both the 102 magnetometers and the 204 gradiometers, analyzing either magnetometers or gradiometers leads to very similar result measures [36,37,38]. A 3D digitizer (3SPACE FASTRAK, Polhemus Inc., Colchester, VT, USA) was used to define the Cartesian head coordinate system. MEG was digitized at 24 bits with a sampling rate of 1 kHz. All channels were online low-pass filtered at 330 Hz and high-pass filtered at 0.1 Hz. MaxFilter Version 2.0.21 (Elekta Neuromag Oy, Finland), which implements SSS, was applied to the raw data, and the sensor-level data of all subjects were aligned to one reference subject; this yielded the same MEG channel positions for all subjects and allowed the robustness of sensors to be quantified across subjects. Epochs from 1000 ms before to 1500 ms after stimulus onset were then extracted for pre-processing. Baseline correction was applied using the first 1000 ms of each epoch (the pre-stimulus interval). Data were down-sampled to 250 Hz and band-pass filtered (1–80 Hz). Eye artifacts (EOG) and artifacts caused by the magnetic fields of the heartbeat (ECG) were removed using independent component analysis (ICA). Trials with excessive movement artifacts were identified by visual inspection and removed. Finally, 45 to 55 trials remained for each stimulus category per subject. The artifact-free data were low-pass filtered at 45 Hz to calculate event-related fields (ERFs).
Then the power spectra of the MEG data were calculated in five frequency bands: delta (1–4 Hz), theta (5–8 Hz), alpha (9–14 Hz), beta (15–30 Hz), and gamma (31–45 Hz). The entire analysis was performed using the FieldTrip toolbox [40] and MATLAB 9.3.0 (MathWorks, Natick, MA, USA).
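Two steps of this paragraph, baseline correction over the 1000-ms pre-stimulus interval and mean power in the five frequency bands, can be sketched with numpy for a single epoch at the down-sampled rate of 250 Hz. This assumes a plain (untapered) periodogram over the first post-stimulus second; the text does not state which spectral estimator was configured in FieldTrip, so this is an illustration rather than the authors' pipeline. The function names are hypothetical.

```python
import numpy as np

FS = 250  # Hz, sampling rate after down-sampling (from the text)
BANDS = {"delta": (1, 4), "theta": (5, 8), "alpha": (9, 14),
         "beta": (15, 30), "gamma": (31, 45)}  # inclusive limits in Hz


def baseline_correct(epoch, n_baseline):
    """Subtract each channel's mean over the first n_baseline (pre-stimulus) samples."""
    return epoch - epoch[:, :n_baseline].mean(axis=1, keepdims=True)


def band_power(segment, fs=FS, bands=BANDS):
    """Mean periodogram power per band for a (channels, samples) segment.

    With a 1-s segment the FFT bins fall on whole hertz, so every band
    covers an integer number of bins.
    """
    n_samples = segment.shape[1]
    freqs = np.fft.rfftfreq(n_samples, d=1.0 / fs)
    psd = np.abs(np.fft.rfft(segment, axis=1)) ** 2 / (fs * n_samples)
    return {name: psd[:, (freqs >= lo) & (freqs <= hi)].mean(axis=1)
            for name, (lo, hi) in bands.items()}


# Toy epoch: 102 channels from -1 s to +1.5 s at 250 Hz (625 samples),
# a 10 Hz oscillation on top of a constant offset.
t = np.arange(625) / FS - 1.0
epoch = np.tile(np.sin(2 * np.pi * 10 * t), (102, 1)) + 5.0
corrected = baseline_correct(epoch, n_baseline=250)  # 1000-ms pre-stimulus interval
post = corrected[:, 250:500]                         # first second after onset
powers = band_power(post)
```

Restricting the spectrum to exactly one post-stimulus second keeps the 1-Hz frequency resolution aligned with the integer band limits given in the text.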

2.4. Feature Extraction

The feature sets used in this study fall into two groups: features based on ERFs and features based on power spectra. These features and the classification method are explained in detail in the following subsections.

2.4.1. Features Based on ERFs

For each subject, we took the mean ERF power (i.e., the squared ERF) over the one-second post-stimulus window and across all stimuli of each emotion category as an observation. Thus, each subject provided three vector-valued observations: one for pleasant, one for neutral, and one for unpleasant. Each observation has 102 elements (one per magnetometer). Combining the observations of all 33 subjects into the feature matrix of one emotion category (e.g., pleasant), we obtained 33 observations with 102 predictors each. Therefore, based on ERF responses, we compiled three feature sets (one per emotion category), each with a dimensionality of 33 × 102.
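The ERF feature construction can be sketched as follows, assuming that "ERF power" means the square of the trial-averaged ERF, averaged over the first post-stimulus second (samples 250 to 499 of a 625-sample epoch at 250 Hz). The function names and the toy data are hypothetical; the output for one category is a 33 × 102 matrix, as described above.

```python
import numpy as np


def erf_power_feature(trials, post):
    """One subject, one category: trials has shape (n_trials, n_channels, n_samples).

    Averages the trials into the ERF, squares it, and averages over the
    post-stimulus samples, giving one value per channel.
    """
    erf = trials.mean(axis=0)                # ERF: (n_channels, n_samples)
    return (erf[:, post] ** 2).mean(axis=1)


def erf_feature_matrix(subject_trials, post):
    """Stack one observation per subject -> (n_subjects, n_channels) feature set."""
    return np.vstack([erf_power_feature(tr, post) for tr in subject_trials])


# Toy data for one category: 33 subjects, 2 trials each (real data: 45-55),
# 102 magnetometers, 625 samples (-1 s to +1.5 s at 250 Hz).
rng = np.random.default_rng(0)
subject_trials = [rng.standard_normal((2, 102, 625)) for _ in range(33)]
post = slice(250, 500)                       # 0 to 1 s after stimulus onset
X_pleasant = erf_feature_matrix(subject_trials, post)
y = np.r_[np.ones(17), np.zeros(16)]         # 1 = healthy control, 0 = patient
```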

2.4.2. Features Based on Power Spectra

To generate features based on power spectra, we took, for each subject, the mean power within a given frequency band over the one-second post-stimulus window and across all stimuli of each emotion category as an observation. Thus, each subject provided three vector-valued observations per frequency band (five bands): one for pleasant, one for neutral, and one for unpleasant. Each observation has 102 elements (one per magnetometer). Therefore, based on power spectra, we compiled 15 feature sets (three emotion categories × five frequency bands), each with a dimensionality of 33 × 102.
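The bookkeeping of the band-power feature sets can be illustrated with a hypothetical array of per-subject band powers indexed by category, band, and channel. Slicing it yields the 15 matrices of size 33 × 102, which together with the three ERF sets give the 18 feature sets used in the classification. The array layout and names are assumptions for illustration.

```python
import numpy as np

CATEGORIES = ("pleasant", "neutral", "unpleasant")
BANDS = ("delta", "theta", "alpha", "beta", "gamma")


def band_feature_sets(band_features):
    """band_features: (n_subjects, 3 categories, 5 bands, n_channels) array of
    mean post-stimulus band power. Returns one (n_subjects, n_channels) matrix
    per (category, band) pair."""
    return {(cat, band): band_features[:, ci, bi, :]
            for ci, cat in enumerate(CATEGORIES)
            for bi, band in enumerate(BANDS)}


feature_sets = band_feature_sets(np.zeros((33, 3, 5, 102)))
n_total = len(feature_sets) + len(CATEGORIES)  # 15 band-power sets + 3 ERF sets
```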

2.5. Feature Subset Selection and Classification

After feature extraction, we selected a subset of the features most strongly related to discriminating the two groups and applied the classifier to that subset. Selecting a subset is useful because, from a statistical point of view, irrelevant features may decrease classification accuracy [41]. However, exhaustively searching the entire feature set for discriminative subsets is computationally complex and time-consuming. Effective feature selection methods therefore help exclude non-discriminative features efficiently.

Here, we employed logistic regression regularized with one of the most popular penalties, the least absolute shrinkage and selection operator (LASSO; [42]), for both feature subset selection and classification. LASSO is widely used for classifying high-dimensional data (a large number of predictors and a small sample size) because it selects variables by forcing some regression coefficients to exactly zero, and it provides high classification accuracies [43].

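We coded the response variable of the logistic regression as 1 for healthy controls and 0 for patients with facial nerve paralysis. Let $\mathbf{y} = (y_1, \ldots, y_n)^\top$ be the response vector with $n$ elements (one per subject; here $n = 33$), and let $\mathbf{x}_1, \ldots, \mathbf{x}_n$ be the associated predictor vectors with $p$ predictors each (here $p = 102$). The probability that the $i$th subject is healthy (class 1) is estimated by Equation (1) [43]:

$$P(y_i = 1 \mid \mathbf{x}_i) = \frac{\exp\left(\beta_0 + \mathbf{x}_i^\top \boldsymbol{\beta}\right)}{1 + \exp\left(\beta_0 + \mathbf{x}_i^\top \boldsymbol{\beta}\right)} \tag{1}$$

where $\boldsymbol{\beta} = (\beta_1, \ldots, \beta_p)^\top$ and $\beta_0$ are the regression coefficients and the intercept, respectively. LASSO regression estimates $\boldsymbol{\beta}$ and $\beta_0$ by solving Equation (2) [43]:

$$(\hat{\beta}_0, \hat{\boldsymbol{\beta}}) = \underset{\beta_0, \boldsymbol{\beta}}{\arg\min} \left\{ -\frac{1}{n} \sum_{i=1}^{n} \left[ y_i \left(\beta_0 + \mathbf{x}_i^\top \boldsymbol{\beta}\right) - \log\left(1 + \exp\left(\beta_0 + \mathbf{x}_i^\top \boldsymbol{\beta}\right)\right) \right] + \lambda \sum_{j=1}^{p} \lvert \beta_j \rvert \right\} \tag{2}$$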

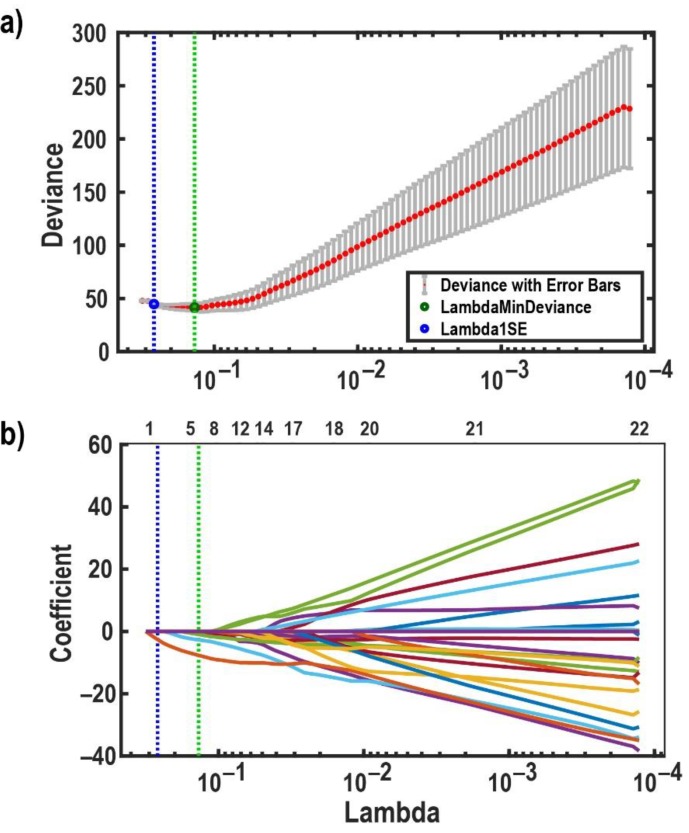

The penalty term, $\lambda \sum_{j=1}^{p} \lvert \beta_j \rvert$, penalizes large regression coefficients, where the regularization constant λ is a positive tuning parameter that controls the balance between model fit and the effect of the penalty term [44]. When $\lambda = 0$, Equation (2) reduces to maximum likelihood estimation; as λ tends toward infinity, the penalty term increasingly dominates the parameter estimates and forces all regression coefficients to zero. To determine the optimal value of λ, we used leave-one-subject-out cross-validation (33-fold). The optimal λ was the one with the minimum cross-validation error under the constraint that at least two regression coefficients are non-zero. This constraint resulted from pilot investigations, which revealed that patients and healthy controls could not be reliably discriminated based on univariate features. Since we defined 18 feature sets, we had to determine 18 values of λ; as an example, Figure 1 shows the λ determination for one feature set. The optimal λ of each feature set was used for feature subset selection and classification. Classification performance was evaluated by accuracy, sensitivity, and specificity. Accuracy is the ratio of correctly classified subjects to the total number of subjects. Sensitivity, or true positive rate, is the ratio of correct positives (healthy controls classified as healthy controls) to the total number of healthy controls. Specificity, or true negative rate, is the ratio of correct negatives (patients classified as patients) to the total number of patients. To assess our classification results, we performed 1000 repetitions of stratified 16-fold cross-validation to estimate simultaneous 95% confidence intervals for accuracy, sensitivity, and specificity (see Figure 2).
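To make Equation (2) and the λ selection concrete, here is a minimal numpy sketch; it is not the authors' MATLAB implementation. It minimizes the penalized objective by proximal gradient descent (ISTA), a solver chosen here for brevity, selects λ by leave-one-subject-out cross-validated deviance subject to at least two non-zero coefficients (applying this constraint to the full-data fit is our assumption), and computes accuracy, sensitivity, and specificity with healthy controls coded as 1. Features are assumed to be standardized beforehand; the λ grid, iteration counts, and toy data are hypothetical.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-np.clip(z, -500, 500)))


def fit_lasso_logistic(X, y, lam, n_iter=1000):
    """Minimise mean logistic loss + lam * sum|beta_j| (intercept unpenalised)
    by proximal gradient descent (ISTA) with a fixed step of 1/L."""
    n, p = X.shape
    beta0, beta = 0.0, np.zeros(p)
    X_aug = np.hstack([np.ones((n, 1)), X])
    step = 4.0 * n / np.linalg.norm(X_aug, 2) ** 2  # 1/L with L = ||X_aug||^2 / (4n)
    for _ in range(n_iter):
        resid = sigmoid(beta0 + X @ beta) - y        # loss gradient is X^T resid / n
        beta0 -= step * resid.mean()
        z = beta - step * (X.T @ resid) / n
        beta = np.sign(z) * np.maximum(np.abs(z) - step * lam, 0.0)  # soft-thresholding
    return beta0, beta


def loso_select_lambda(X, y, lambdas, min_nonzero=2, n_iter=1000):
    """Leave-one-subject-out CV: return (lambda, mean deviance) with the lowest
    held-out deviance among lambdas whose full-data fit keeps >= min_nonzero
    non-zero coefficients (None if no lambda qualifies)."""
    n = len(y)
    best = None
    for lam in lambdas:
        if np.count_nonzero(fit_lasso_logistic(X, y, lam, n_iter)[1]) < min_nonzero:
            continue
        deviance = 0.0
        for i in range(n):
            train = np.arange(n) != i
            b0, b = fit_lasso_logistic(X[train], y[train], lam, n_iter)
            p_i = np.clip(sigmoid(b0 + X[i] @ b), 1e-12, 1 - 1e-12)
            deviance -= 2 * (y[i] * np.log(p_i) + (1 - y[i]) * np.log(1 - p_i))
        if best is None or deviance / n < best[1]:
            best = (lam, deviance / n)
    return best


def classification_metrics(y_true, y_pred):
    """Accuracy, sensitivity (healthy = 1 recognised), specificity (patients = 0 recognised)."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    accuracy = np.mean(y_true == y_pred)
    sensitivity = np.mean(y_pred[y_true == 1] == 1)
    specificity = np.mean(y_pred[y_true == 0] == 0)
    return accuracy, sensitivity, specificity


# Toy data: 17 controls (1) and 16 patients (0), 20 features, 2 of them informative.
rng = np.random.default_rng(1)
y = np.r_[np.ones(17), np.zeros(16)]
X = rng.standard_normal((33, 20))
X[:, :2] += 1.5 * (2 * y - 1)[:, None]
lam, cv_dev = loso_select_lambda(X, y, [0.3, 0.1, 0.03], n_iter=300)
b0, b = fit_lasso_logistic(X, y, lam)
pred = (sigmoid(b0 + X @ b) >= 0.5).astype(float)
acc, sens, spec = classification_metrics(y, pred)
```

A production analysis would normally rely on a tested solver, for example MATLAB's `lassoglm` or scikit-learn's L1-penalized `LogisticRegression`, rather than a fixed-iteration ISTA loop.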

Figure 1.

Effects of the LASSO regularization tuning parameter λ on regression coefficients and deviance. (a) Cross-validation deviance of the fitted LASSO model as a function of λ, obtained by leave-one-subject-out cross-validation to determine the optimal value of λ. The Y-axis shows the cross-validation deviance corresponding to the values of λ on the X-axis. Red points show the mean cross-validation deviance, and each error bar shows ±1 standard deviation. The blue and green vertical dotted lines (in both panels) mark, respectively, the point with minimum deviance plus one standard deviation (blue circle) and the λ with the minimum deviance (green circle). (b) Coefficient paths of the fitted LASSO model as a function of λ, showing how λ controls the shrinkage of the LASSO coefficients. The numbers above the box give the number of non-zero coefficients remaining at the corresponding λ values on the X-axis. The Y-axis shows the classifier coefficients; each path corresponds to one regression coefficient. As λ increases toward the left side of the plot, the number of remaining non-zero coefficients approaches zero.

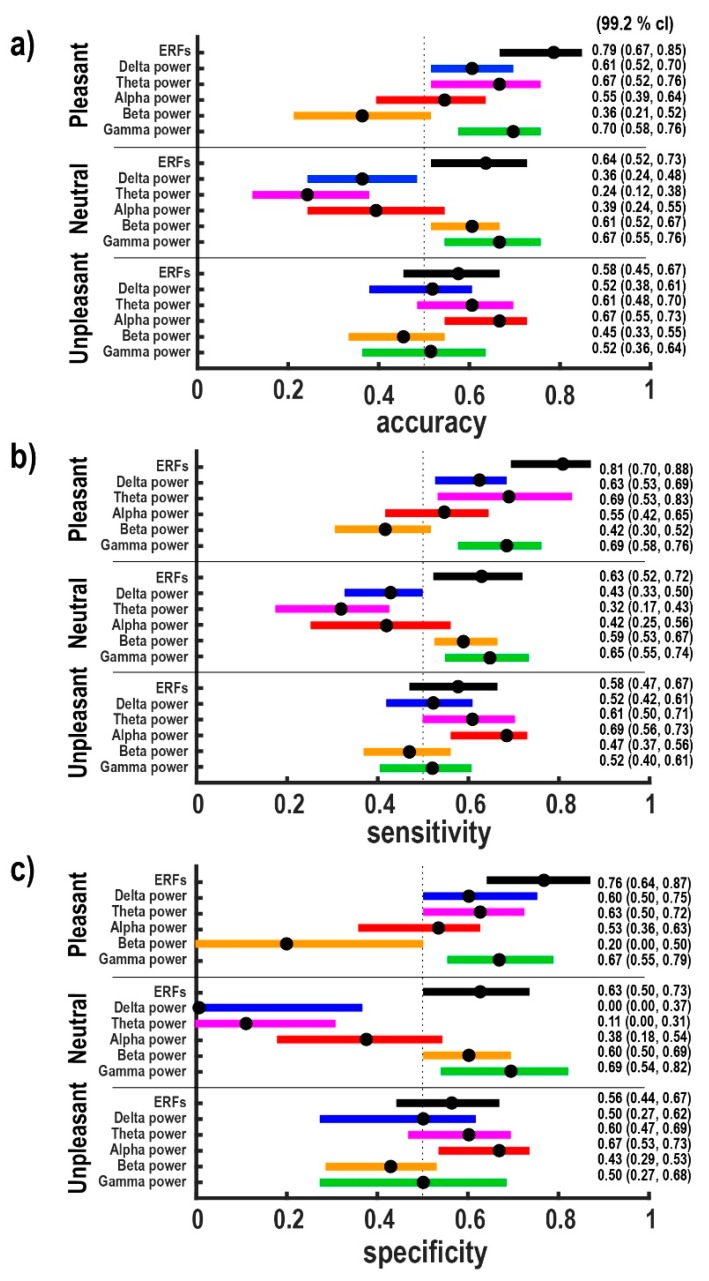

Figure 2.

Evaluation of classifier performance for all feature sets based on 1000 repetitions of stratified 16-fold cross-validation. Values represent medians and 95% simultaneous confidence intervals for (a) accuracy, (b) sensitivity, and (c) specificity. Circles mark the median values. The vertical dotted line marks chance-level performance. The highest classification performance was achieved with ERF-based features in the pleasant category.

3. Results

In this section, we assess the feasibility of classifying the emotional brain responses of healthy controls and patients with facial nerve paralysis. To this end, we report the classification performance obtained with each feature set. We then compare the arousal and valence ratings of the two groups to determine whether their experience of emotions differs.

3.1. Classification Results

Figure 2a depicts accuracies with 95% simultaneous confidence intervals for all feature sets. The simultaneous confidence intervals were computed at an individual confidence level of 99.2%, so that, with Bonferroni correction for six hypotheses, the simultaneous confidence level is 95%. As can be seen, the brain responses of the two groups can be discriminated in each category: in the pleasant category based on ERFs and on delta-, theta-, and gamma-band power; in the neutral category based on ERFs and on beta- and gamma-band power; and in the unpleasant category based on alpha-band power. The highest accuracy, 0.79 (95% CI (0.70, 0.82), 99.2% CI (0.67, 0.85)), was obtained for pleasant stimuli using ERF-based features directly. Across the three categories, the groups tend to be best distinguishable for pleasant stimuli.
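The Bonferroni arithmetic behind the 99.2% level is 1 − 0.05/6 ≈ 0.9917. The sketch below pairs it with one plausible reading of the evaluation procedure: repeat stratified 16-fold cross-validation (1000 times in the study), collect one accuracy per repetition, and report the median with a central percentile interval at the individual level. The percentile construction, the round-robin fold assignment, and the nearest-centroid stand-in classifier are assumptions for illustration; in the study, the LASSO model would take the classifier's place.

```python
import numpy as np

N_HYPOTHESES = 6                              # Bonferroni family size stated in the text
INDIVIDUAL_LEVEL = 1 - 0.05 / N_HYPOTHESES    # 0.99166..., reported as 99.2%


def stratified_folds(y, k, rng):
    """Shuffle each class and deal its indices round-robin into k folds."""
    folds = [[] for _ in range(k)]
    for cls in np.unique(y):
        for j, idx in enumerate(rng.permutation(np.flatnonzero(y == cls))):
            folds[j % k].append(idx)
    return [np.array(f, dtype=int) for f in folds]


def repeated_cv_accuracy(X, y, fit_predict, k=16, n_repeats=1000, seed=0):
    """Accuracy of each of n_repeats stratified k-fold cross-validation runs."""
    rng = np.random.default_rng(seed)
    accuracies = np.empty(n_repeats)
    for r in range(n_repeats):
        pred = np.empty_like(y)
        for test in stratified_folds(y, k, rng):
            train = np.setdiff1d(np.arange(len(y)), test)
            pred[test] = fit_predict(X[train], y[train], X[test])
        accuracies[r] = np.mean(pred == y)
    return accuracies


def percentile_interval(values, level=INDIVIDUAL_LEVEL):
    """Median and central percentile interval of the repeated-CV estimates."""
    tail = 100 * (1 - level) / 2
    low, med, high = np.percentile(values, [tail, 50, 100 - tail])
    return med, (low, high)


def nearest_centroid(X_train, y_train, X_test):
    """Stand-in classifier for this demo (not the LASSO model of the study)."""
    c1 = X_train[y_train == 1].mean(axis=0)
    c0 = X_train[y_train == 0].mean(axis=0)
    return (((X_test - c1) ** 2).sum(1) < ((X_test - c0) ** 2).sum(1)).astype(float)


rng = np.random.default_rng(2)
y = np.r_[np.ones(17), np.zeros(16)]
X = rng.standard_normal((33, 10)) + 0.8 * (2 * y - 1)[:, None]
accs = repeated_cv_accuracy(X, y, nearest_centroid, n_repeats=200)  # study: 1000
median_acc, (lo, hi) = percentile_interval(accs)
```

With 16 patients and 17 controls, dealing each class round-robin over 16 folds puts one patient and one or two controls in every test fold.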

To show that the accuracy values are not dominated by one of the groups, sensitivities and specificities with 95% simultaneous confidence intervals for all feature sets are presented in Figure 2b,c, respectively. As expected, given the similar group sizes of 16 and 17, the significance pattern of sensitivity and specificity resembles that of accuracy.

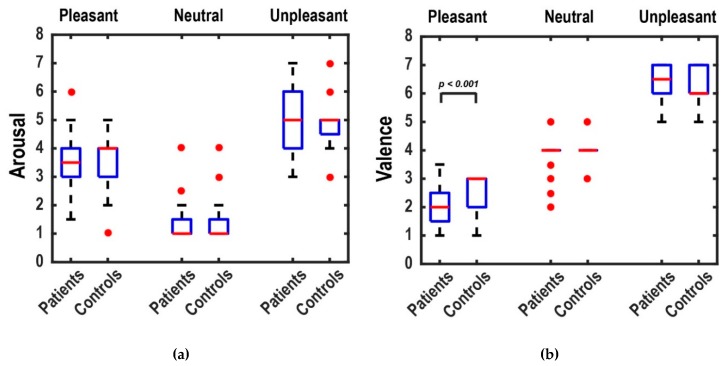

3.2. Ratings Results

The median ratings of patients and healthy controls for arousal and valence in the three picture categories are shown in Figure 3. To compare the median ratings between patients and controls, we performed the Wilcoxon rank-sum test, with the null hypothesis of equal medians. We found no significant differences in arousal ratings. For valence, healthy subjects gave significantly higher ratings than patients only for pleasant stimuli (). Because 1 indicates the most positive and 7 the most negative valence on the 7-point scale, this means that patients rated pleasant images significantly more positively than controls. Apart from some outliers, ratings of neutral stimuli did not vary between subjects (median rating = 4).

Figure 3.

Boxplots of the arousal (a) and valence (b) ratings of patients and healthy controls for each picture category, based on the median ratings of subjects for each picture category. Red lines mark the medians, and red circles represent outliers. Valence ratings for pleasant stimuli are significantly higher (i.e., less positive on the inverted valence scale) for healthy controls than for patients.

4. Discussion

Facial nerve paralysis is a common disorder of the main motor pathway that causes an inability to produce facial expressions. In the present study, we investigated the automatic classification of the brain responses of patients with facial nerve paralysis and healthy controls, using MEG signals recorded in response to three emotional categories of picture stimuli (pleasant, neutral, and unpleasant). We evaluated the feasibility of classifying the emotional brain reactions of these two groups by computing features based on ERFs and on power spectra in five brain frequency bands. Significant classification performance was obtained for all three emotional categories, and the highest was achieved with feature sets derived from ERFs in response to pleasant stimuli, with a median accuracy of 0.79 (95% CI (0.70, 0.82)). These results suggest that patients with facial nerve paralysis may have emotional brain responses that differ from those of healthy controls. However, when we compared the amplitudes of brain responses between patients and controls, considering ERFs and power spectra in each frequency band, we found no significant differences. We therefore propose that the differences lie in the patterns of emotional brain responses rather than in their amplitudes. One possible physiological explanation is that the loss of movement feedback in facial nerve paralysis affects the cortical motor network [18,19,20,21], which is responsible for generating patterned, emotion-specific changes in several systems, such as the limbic system [45]; consequently, these patterns may be altered. To the best of our knowledge, no studies have reported differences between the emotional brain responses of patients with facial nerve paralysis and healthy controls. However, our results are consistent with an earlier study reporting that blocking facial mimicry in healthy subjects alters neural activation in the amygdala in response to emotional stimuli [3].
Our results are also compatible with a very recent study that compared patients with facial nerve paralysis and healthy controls in the resting state and found an abnormal fractional amplitude of low-frequency fluctuation in emotion-related regions [46].

Our classification accuracies obtained with logistic regression with LASSO regularization cannot be directly compared with those of any previous study because, as mentioned above and to the best of our knowledge, ours is the first study to classify the emotional brain responses of patients with facial nerve paralysis versus healthy controls. However, they can be compared with the results of emotion classification studies. The accuracies achieved in our study (highest value: 0.79 (99.2% CI (0.67, 0.85))) are similar to or higher than those of many studies. For instance, an EEG study [47] used SVM to classify valence and arousal in human emotions evoked by visual stimuli and obtained classification accuracies between 54.7% and 62.6%. Another EEG study [48] also used SVM to classify four emotion categories (joy, anger, sadness, and pleasure), and the best accuracies were obtained for joy (86.2%). Using both SVM and a hidden Markov model, one EEG study [49] classified pleasant, unpleasant, and neutral emotion categories, with a highest mean accuracy of 62%. SVM was also used to classify joy, sadness, fear, and relaxed states, yielding an average accuracy of 41.7% [50]. Using naïve Bayes and Fisher discriminant analysis (FDA), another study [51] obtained an average accuracy of 58% in classifying three arousal categories of picture stimuli. One EEG study that proposed Bayesian network-based classifiers for emotion classification achieved a highest accuracy of 78.17% [52]. To our knowledge, only one study [53] has used MEG to classify human emotions; it used linear SVM classifiers and achieved a highest classification accuracy of 84%. Our classification accuracies are also similar to or higher than those of other studies using the same classification methods as ours.
For instance, Kim and colleagues [27] reported an accuracy of 78.57% using logistic regression to classify positive versus negative emotions, and they found that their results were more accurate than those of related works using SVM and naïve Bayes. Another EEG study [28] reported a maximum accuracy of 78.1% using logistic regression with LASSO regularization to classify valence and arousal. The authors noted that their results with LASSO-regularized logistic regression were better than those obtained with logistic regression alone and than those of other studies using classifiers such as naïve Bayes, Bayes, and SVM. Caicedo and colleagues [29] also used logistic regression with LASSO regularization to classify arousal and valence. They additionally used SVM and NN classifiers and achieved an accuracy of 78.2% with LASSO-regularized logistic regression, which was higher than with SVM and NN.

In our study, the classification accuracies obtained from brain responses to pleasant stimuli were higher than those obtained from responses to other stimuli. This finding suggests that the processing of pleasant emotions in patients with facial nerve paralysis differs significantly from that in healthy controls and that this difference is more pronounced than the differences in brain responses evoked by unpleasant or neutral stimuli. Why might facial nerve paralysis alter brain responses to pleasant stimuli more than those to unpleasant stimuli? One possible explanation is that people control their negative emotions more often than their positive emotions [54]. Healthy controls may therefore refrain from facial expressions more often when experiencing unpleasant emotions than when experiencing pleasant ones. Consequently, the facial feedback during unpleasant stimuli, and the brain responses it triggers, may not differ greatly between people who can produce facial expressions (healthy subjects) and people who cannot (patients with facial nerve paralysis).

In our emotion rating task, we used the International Affective Picture System (IAPS; [35]), which includes a wide range of emotional scenes such as nature, war, sports, and family, whereas previous studies used images of faces [23,24,25,26]. Using the Self-Assessment Manikin (SAM; [33]), we showed that patients with facial nerve paralysis gave significantly lower valence ratings for pleasant stimuli than healthy controls. Since valence is the positivity or negativity conveyed by an emotion [55], and lower values denote more positive valence on our scale, these ratings imply that pleasant stimuli were experienced more positively by patients than by healthy controls. Thus, our findings indicate that facial feedback plays an essential role in the normal experience of pleasant emotional images. This finding is in line with our classification results, which showed the most pronounced differences between the brain responses of the two groups for pleasant stimuli; these differences are reflected in the patients' different experience of pleasant stimuli. In contrast to our results, Davis and colleagues [56], who studied the suppression of emotional expressions in healthy controls, found that inhibiting facial expressions in healthy people makes no difference to positive emotional experiences but weakens negative ones. Different emotional experiences of patients with facial nerve paralysis and healthy controls have also been reported in earlier studies that used facial expression recognition tasks. Calder and colleagues [25] studied three patients with total facial nerve paralysis and compared their emotion recognition with that of 40 healthy controls. They reported that the patients made at least three times more wrong judgments than the average of the healthy controls, but showed no significant impairment.
Giannini and colleagues [24] reported the complete inability of a woman with total facial nerve paralysis in performing a facial expression recognition task. However, some studies found no different emotion recognition by patients with total facial nerve paralysis compared to healthy controls [23,26]. Accordingly, such studies suggest that facial feedback is not necessary to recognize facial expressions, which might oppose the necessity hypothesis. Our study does not allow us to support or oppose the previously described necessity hypothesis because it requires that we study patients with total facial nerve paralysis. However, our study demonstrates that the paralysis of the facial nerve causes changes in the emotional responses of the brain, especially during pleasant stimuli and these results were also reflected in the ratings of pleasant emotion in these patients. This finding is a strong argument for the importance of the ability to perform facial expressions to have normal brain emotional processing and experience of particular emotions.
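The statistical test behind the group difference in SAM valence ratings is not described in this section. Purely as an illustration, a two-sided permutation test on the difference in group means is sketched below; the choice of test, the function names, and the ratings are hypothetical. The test is agnostic to scale direction, so whether a lower SAM score means a more or a less positive rating has to be read from how the scale was coded.

```python
import random


def mean(values):
    return sum(values) / len(values)


def permutation_test(group_a, group_b, n_perm=10000, seed=0):
    """Two-sided permutation test for a difference in group means.

    Returns the observed difference (mean_a - mean_b) and a p-value.
    """
    rng = random.Random(seed)
    observed = mean(group_a) - mean(group_b)
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)  # randomly reassign group labels
        diff = mean(pooled[:n_a]) - mean(pooled[n_a:])
        if abs(diff) >= abs(observed):
            extreme += 1
    # Add-one correction: the observed labelling counts as one permutation,
    # so the p-value can never be exactly zero.
    return observed, (extreme + 1) / (n_perm + 1)


# Made-up per-subject mean valence scores (1-9 SAM scale) for two groups.
ratings_a = [2.1, 2.4, 1.9, 2.2, 2.0]
ratings_b = [3.0, 3.2, 2.9, 3.4, 3.1]
diff, p = permutation_test(ratings_a, ratings_b)
print(f"mean difference = {diff:.2f}, p = {p:.4f}")
```

A permutation test makes no normality assumption, which suits bounded ordinal ratings from small groups; a rank-based test such as Mann–Whitney U would be a common alternative.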

Across the different feature sets, we have demonstrated that brain responses to pleasant, unpleasant, and neutral stimuli in patients with facial nerve paralysis differ significantly from those in healthy controls. Moreover, we showed that these differences are associated with power in specific frequency bands. To our knowledge, no previous study has reported frequency-band differences in brain emotional responses between healthy subjects and patients with facial nerve paralysis. However, existing physiological evidence may explain some of these results. There is some evidence that gamma-band activity is associated with activation of the sensorimotor cortex, and gamma event-related synchronization (ERS) occurs in the sensorimotor cortex during unilateral movements of the fingers, toes, and tongue [57,58]; unilateral facial movements might therefore also produce gamma activity in the sensorimotor cortex. Because patients with facial nerve paralysis cannot fully perform facial expressions and have impaired connectivity within the sensorimotor cortex [18,19,20,21], their induced gamma activity might differ. Many recent experiments have also focused on the role of theta–gamma oscillations in cognitive neuroscience [59,60,61,62,63]. Theta–gamma interactions are thought to be associated with cortical sensory processing [64], and a hierarchy of oscillations, including delta, theta, and gamma oscillations, organizes sensory functions [65]. Impaired connectivity within the sensorimotor cortex caused by facial nerve paralysis might therefore disrupt these delta–theta–gamma oscillations. Moreover, alpha power has been shown to be suppressed when sensory and motor regions become engaged [63]. Given that the facial nerve is mainly associated with the primary sensorimotor area [16,17] and that its paralysis reduces connectivity within the cortical facial motor network [18,19,20,21], this reduced connectivity might explain the different alpha power in patients with facial nerve paralysis compared with healthy controls.
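Band-specific effects such as gamma ERS and alpha suppression are commonly quantified as power within a frequency band, expressed as percent change from a pre-stimulus baseline: (A − R)/R × 100, where R is the baseline power and A the power after the event, so negative values indicate desynchronization (ERD) and positive values synchronization (ERS). The sketch below illustrates that computation on synthetic sinusoids. The band edges, the direct-DFT spectrum, and the function names are assumptions for illustration and not the feature extraction used in this study.

```python
import cmath
import math

# Hypothetical band edges in Hz (lower edge inclusive, upper edge exclusive);
# the study's exact band definitions are not given in this section.
BANDS = {
    "delta": (1.0, 4.0),
    "theta": (4.0, 8.0),
    "alpha": (8.0, 13.0),
    "beta": (13.0, 30.0),
    "gamma": (30.0, 45.0),
}


def power_spectrum(x, fs):
    """Unscaled one-sided power spectrum via a direct DFT (O(n^2), fine for short epochs)."""
    n = len(x)
    freqs, power = [], []
    for k in range(n // 2 + 1):
        coef = sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        freqs.append(k * fs / n)
        power.append(abs(coef) ** 2 / n)
    return freqs, power


def band_power(x, fs, band):
    """Sum of spectral power over the DFT bins that fall inside one band."""
    lo, hi = BANDS[band]
    freqs, power = power_spectrum(x, fs)
    return sum(p for f, p in zip(freqs, power) if lo <= f < hi)


def erd_ers_percent(baseline_power, event_power):
    """Percent power change relative to baseline: negative values indicate
    event-related desynchronization (ERD), positive values synchronization (ERS)."""
    return (event_power - baseline_power) / baseline_power * 100.0


# Synthetic 1-s epochs sampled at 200 Hz: the "event" epoch has weaker 10 Hz (alpha)
# and stronger 40 Hz (gamma) activity than the "baseline" epoch.
fs = 200
t = [i / fs for i in range(fs)]
baseline = [2.0 * math.sin(2 * math.pi * 10 * s) + 0.5 * math.sin(2 * math.pi * 40 * s) for s in t]
event = [1.0 * math.sin(2 * math.pi * 10 * s) + 1.0 * math.sin(2 * math.pi * 40 * s) for s in t]

alpha_change = erd_ers_percent(band_power(baseline, fs, "alpha"), band_power(event, fs, "alpha"))
gamma_change = erd_ers_percent(band_power(baseline, fs, "gamma"), band_power(event, fs, "gamma"))
print(f"alpha: {alpha_change:.1f} %  gamma: {gamma_change:+.1f} %")
```

Halving the 10 Hz amplitude quarters its power, which appears as a 75 % alpha decrease (ERD); doubling the 40 Hz amplitude appears as a 300 % gamma increase (ERS). Because the ratio is taken within each band, the overall spectral scaling cancels out.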

The significant accuracies obtained when classifying the two groups' brain responses to neutral stimuli were an interesting and unexpected finding. Because neutral stimuli are assumed to have no emotional content, we did not expect the two groups to differ in their brain responses while viewing them. Nevertheless, we have shown that the ERFs of patients with facial nerve paralysis in response to neutral stimuli differ significantly from those of healthy controls and that these differences are associated with the beta and gamma bands. One possible explanation is that mood changes induced by viewing affective pictures influence the processing of neutral pictures, so presenting neutral images alone might yield more accurate results [66].

5. Limitations in the Study

There are some limitations in this study that should be considered in further research. First, the mean age of the patients was higher than that of the healthy subjects. This was because most patients with facial nerve paralysis were not interested in participating in the experiment, which left us little opportunity to match the two groups for mean age. These patients' unwillingness to participate in such studies might reflect a reluctance to communicate with other people or to appear in public [67,68]. Future research would benefit from age-matched groups. Second, future studies could include other emotional categories, such as anger, anxiety, or surprise, to classify a broader range of emotional states in these two groups. Third, we did not measure the brain activity of the two groups during the resting state. Including resting-state data in further studies would help answer two important questions: whether the two groups differ in brain activity even at baseline, and whether particular brain rhythms reflect differences between the groups at baseline.

6. Conclusions

This study shows that, for specific emotions, the emotional experiences and brain emotional responses of patients with facial nerve paralysis can be accurately distinguished from those of healthy controls. Our results suggest that the ability to perform facial expressions is necessary for normal emotional processing and the experience of emotions.

Acknowledgments

The authors gratefully thank S. Heginger and T. Radtke for technical assistance and E. Künstler for her valuable comments.

Author Contributions

Conceptualization, R.H., O.W.W. and C.M.K.; Data curation, M.K., L.L., P.B. and R.H.; Formal analysis, M.K., L.L. and P.B.; Funding acquisition, O.W.W. and C.M.K.; Investigation, M.K., S.B., L.L., T.G., P.B., R.H., O.W.W. and C.M.K.; Methodology, M.K., S.B., L.L., T.G., P.B. and C.M.K.; Project administration, O.W.W. and C.M.K.; Resources, R.H., O.W.W., G.F.V., O.G.-L. and C.M.K.; Supervision, O.W.W. and C.M.K.; Validation, M.K. and L.L.; Visualization, M.K.; Writing—original draft, M.K.; Writing—review & editing, M.K., S.B., L.L., T.G., P.B., R.H., O.W.W., G.F.V., O.G.-L. and C.M.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by BMBF (IRESTRA 16SV7209 and Schwerpunktprogramm BU 1327/4-1).

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Andrew R.J. The origins of facial expressions. Sci. Am. 1965;213:88–95. doi: 10.1038/scientificamerican1065-88.
2. Ekman P., Friesen W.V., O’Sullivan M. What the Face Reveals. Oxford University Press; New York, NY, USA: 1997. Smiles when lying; pp. 201–216.
3. Hennenlotter A., Dresel C., Castrop F., Ceballos-Baumann A.O., Wohlschläger A.M., Haslinger B. The link between facial feedback and neural activity within central circuitries of emotion—New insights from Botulinum toxin–induced denervation of frown muscles. Cereb. Cortex. 2008;19:537–542. doi: 10.1093/cercor/bhn104.
4. Darwin C.R. The Expression of the Emotions in Man and Animals. Appleton; New York, NY, USA: 1896.
5. Tomkins S.S. Theories of Emotion. Academic Press; Cambridge, MA, USA: 1980. Affect as amplification: Some Modifications in Theory; pp. 141–164.
6. Izard C.E. The Face of Emotion. Appleton-Century-Crofts; New York, NY, USA: 1971.
7. Izard C.E. Differential emotions theory and the facial feedback hypothesis of emotion activation: Comments on Tourangeau and Ellsworth’s “The role of facial response in the experience of emotion”. J. Personal. Soc. Psychol. 1981;58:487–498. doi: 10.1037/0022-3514.58.3.487.
8. Carr L., Iacoboni M., Dubeau M.C., Mazziotta J.C., Lenzi G.L. Neural mechanisms of empathy in humans: A relay from neural systems for imitation to limbic areas. Proc. Natl. Acad. Sci. USA. 2003;100:5497–5502. doi: 10.1073/pnas.0935845100.
9. Wild B., Erb M., Eyb M., Bartels M., Grodd W. Why are smiles contagious? An fMRI study of the interaction between perception of facial affect and facial movements. Psychiatry Res. Neuroimaging. 2003;123:17–36. doi: 10.1016/S0925-4927(03)00006-4.
10. Dapretto M., Davies M.S., Pfeifer J.H., Scott A.A., Sigman M., Bookheimer S.Y., Iacoboni M. Understanding emotions in others: Mirror neuron dysfunction in children with autism spectrum disorders. Nat. Neurosci. 2006;9:28. doi: 10.1038/nn1611.
11. Lee T.W., Josephs O., Dolan R.J., Critchley H.D. Imitating expressions: Emotion-specific neural substrates in facial mimicry. Soc. Cogn. Affect. Neurosci. 2006;1:122–135. doi: 10.1093/scan/nsl012.
12. LeDoux J.E. Emotion circuits in the brain. Annu. Rev. Neurosci. 2000;23:155–184. doi: 10.1146/annurev.neuro.23.1.155.
13. Coulson S.E., O’Dwyer N.J., Adams R.D., Croxson G.R. Expression of emotion and quality of life after facial nerve paralysis. Otol. Neurotol. 2004;25:1014–1019. doi: 10.1097/00129492-200411000-00026.
14. Spencer R.C., Irving M.R. Causes and management of facial nerve palsy. Br. J. Hosp. Med. 2016;77:686–691. doi: 10.12968/hmed.2016.77.12.686.
15. Hussain A., Nduka C., Moth P., Malhotra R. Bell’s facial nerve palsy in pregnancy: A clinical review. J. Obstet. Gynaecol. 2017;37:409–415. doi: 10.1080/01443615.2016.1256973.
16. Sessle B.J., Yao D., Nishiura H., Yoshino K., Lee J.C., Martin R.E., Murray G.M. Properties and plasticity of the primate somatosensory and motor cortex related to orofacial sensorimotor function. Clin. Exp. Pharmacol. Physiol. 2005;32:109–114. doi: 10.1111/j.1440-1681.2005.04137.x.
17. Svensson P., Romaniello A., Wang K., Arendt-Nielsen L., Sessle B.J. One hour of tongue-task training is associated with plasticity in corticomotor control of the human tongue musculature. Exp. Brain Res. 2006;173:165–173. doi: 10.1007/s00221-006-0380-3.
18. Klingner C.M., Volk G.F., Maertin A., Brodoehl S., Burmeister H.P., Guntinas-Lichius O., Witte O.W. Cortical reorganization in Bell’s palsy. Restor. Neurol. Neurosci. 2011;29:203–214. doi: 10.3233/RNN-2011-0592.
19. Klingner C.M., Volk G.F., Brodoehl S., Burmeister H.P., Witte O.W., Guntinas-Lichius O. Time course of cortical plasticity after facial nerve palsy: A single-case study. Neurorehabilit. Neural Rep. 2012;26:197–203. doi: 10.1177/1545968311418674.
20. Klingner C.M., Volk G.F., Brodoehl S., Witte O.W., Guntinas-Lichius O. The effects of deefferentation without deafferentation on functional connectivity in patients with facial palsy. Neuroimage Clin. 2014;6:26–31. doi: 10.1016/j.nicl.2014.08.011.
21. Klingner C.M., Brodoehl S., Witte O.W., Guntinas-Lichius O., Volk G.F. The impact of motor impairment on the processing of sensory information. Behav. Brain Res. 2019;359:701–708. doi: 10.1016/j.bbr.2018.09.016.
22. Fridlund A.J. Human Facial Expression: An Evolutionary View. Academic Press; New York, NY, USA: 1994.
23. Keillor J.M., Barrett A.M., Crucian G.P., Kortenkamp S., Heilman K.M. Emotional experience and perception in the absence of facial feedback. J. Int. Neuropsychol. Soc. 2002;8:130–135. doi: 10.1017/S1355617701020136.
24. Giannini A.J., Tamulonis D., Giannini M.C., Loiselle R.H., Spirtos G. Defective response to social cues in Möbius’ syndrome. J. Nerv. Ment. Dis. 1984;172:174–175. doi: 10.1097/00005053-198403000-00008.
25. Calder A.J., Keane J., Cole J., Campbell R., Young A.W. Facial expression recognition by people with Möbius syndrome. Cogn. Neuropsychol. 2000;17:73–87. doi: 10.1080/026432900380490.
26. Rives Bogart K., Matsumoto D. Facial mimicry is not necessary to recognize emotion: Facial expression recognition by people with Moebius syndrome. Soc. Neurosci. 2010;5:241–251. doi: 10.1080/17470910903395692.
27. Kim S., Li F., Lebanon G., Essa I. Beyond sentiment: The manifold of human emotions; Proceedings of the 16th International Conference on Artificial Intelligence and Statistics (AISTATS); Scottsdale, AZ, USA. 29 April–1 May 2013; pp. 360–369.
28. Fan M., Chou C.A. Recognizing affective state patterns using regularized learning with nonlinear dynamical features of EEG; Proceedings of the 2018 IEEE EMBS International Conference on Biomedical & Health Informatics (BHI); Las Vegas, NV, USA. 4–7 March 2018; pp. 137–140.
29. Caicedo-Acosta J., Cárdenas-Peña D., Collazos-Huertas D., Padilla-Buritica J.I., Castaño-Duque G., Castellanos-Dominguez G. Multiple-instance lasso regularization via embedded instance selection for emotion recognition; Proceedings of the International Work-Conference on the Interplay Between Natural and Artificial Computation; Almeria, Spain. 3–7 June 2019; pp. 244–251.
30. Meuleman B., Scherer K.R. Nonlinear appraisal modeling: An application of machine learning to the study of emotion production. IEEE Trans. Affect. Comput. 2013;4:398–411. doi: 10.1109/T-AFFC.2013.25.
31. Kauppi J.P., Kandemir M., Saarinen V.M., Hirvenkari L., Parkkonen L., Klami A., Hari R., Kaski S. Towards brain-activity-controlled information retrieval: Decoding image relevance from MEG signals. NeuroImage. 2015;112:288–298. doi: 10.1016/j.neuroimage.2014.12.079.
32. Addington J., Farris M., Stowkowy J., Santesteban-Echarri O., Metzak P., Kalathil M.S. Predictors of transition to psychosis in individuals at clinical high risk. Curr. Psychiatry Rep. 2019;21:39. doi: 10.1007/s11920-019-1027-y.
33. Lang P.J. Behavioral treatment and bio-behavioral assessment: Computer applications. Technol. Ment. Health Care Deliv. Syst. 1980:119–137.
34. Beck A.T., Ward C.H., Mendelson M., Mock J., Erbaugh J. An inventory for measuring depression. Arch. Gen. Psychiatry. 1961;4:561–571. doi: 10.1001/archpsyc.1961.01710120031004.
35. Lang P.J., Bradley M.M., Cuthbert B.N. International affective picture system (IAPS): Technical manual and affective ratings. NIMH Cent. Study Emot. Atten. 1997;1:39–58.
36. Taulu S., Simola J., Kajola M. Applications of the signal space separation method. IEEE Trans. Signal Process. 2005;53:3359–3372. doi: 10.1109/TSP.2005.853302.
37. Garcés P., López-Sanz D., Maestú F., Pereda E. Choice of magnetometers and gradiometers after signal space separation. Sensors. 2017;17:2926. doi: 10.3390/s17122926.
38. García-Pacios J., Garces P., del Río D., Maestu F. Tracking the effect of emotional distraction in working memory brain networks: Evidence from an MEG study. Psychophysiology. 2017;54:1726–1740. doi: 10.1111/psyp.12912.
39. Taulu S., Simola J. Spatiotemporal signal space separation method for rejecting nearby interference in MEG measurements. Phys. Med. Biol. 2006;51:1759. doi: 10.1088/0031-9155/51/7/008.
40. Oostenveld R., Fries P., Maris E., Schoffelen J.M. FieldTrip: Open source software for advanced analysis of MEG, EEG, and invasive electrophysiological data. Comput. Intell. Neurosci. 2011;2011:156869. doi: 10.1155/2011/156869.
41. Chandra B., Gupta M. An efficient statistical feature selection approach for classification of gene expression data. J. Biomed. Inform. 2011;44:529–535. doi: 10.1016/j.jbi.2011.01.001.
42. Tibshirani R. Regression shrinkage and selection via the lasso. J. R. Stat. Soc. Ser. B (Methodol.) 1996;58:267–288. doi: 10.1111/j.2517-6161.1996.tb02080.x.
43. Algamal Z.Y., Lee M.H. Penalized logistic regression with the adaptive LASSO for gene selection in high-dimensional cancer classification. Expert Syst. Appl. 2015;42:9326–9332. doi: 10.1016/j.eswa.2015.08.016.
44. Friedman J., Hastie T., Tibshirani R. Regularization paths for generalized linear models via coordinate descent. J. Stat. Softw. 2010;33:1–22. doi: 10.18637/jss.v033.i01.
45. Gothard K.M. The amygdalo-motor pathways and the control of facial expressions. Front. Neurosci. 2014;8:43. doi: 10.3389/fnins.2014.00043.
46. Han X., Li H., Wang X., Zhu Y., Song T., Du L., Sun S., Guo R., Liu J., Shi S., et al. Altered brain fraction amplitude of low frequency fluctuation at resting state in patients with early left and right Bell’s palsy: Do they have differences? Front. Neurosci. 2018;12:797. doi: 10.3389/fnins.2018.00797.
47. Petrantonakis P.C., Hadjileontiadis L.J. A novel emotion elicitation index using frontal brain asymmetry for enhanced EEG-based emotion recognition. IEEE Trans. Inf. Technol. Biomed. 2011;15:737–746. doi: 10.1109/TITB.2011.2157933.
48. Lin Y.P., Wang C.H., Jung T.P., Wu T.L., Jeng S.K., Duann J.R., Chen J.H. EEG-based emotion recognition in music listening. IEEE Trans. Biomed. Eng. 2010;57:1798–1806. doi: 10.1109/TBME.2010.2048568.
49. Schaaff K., Schultz T. Towards emotion recognition from electroencephalographic signals; Proceedings of the 2009 IEEE 3rd International Conference on Affective Computing and Intelligent Interaction and Workshops; Amsterdam, The Netherlands. 10–12 September 2009.
50. Takahashi K. Remarks on emotion recognition from bio-potential signals; Proceedings of the 2nd International Conference on Autonomous Robots and Agents; Palmerston North, New Zealand. 13–15 December 2004; pp. 1148–1153.
51. Chanel G., Kronegg J., Grandjean D., Pun T. Emotion assessment: Arousal evaluation using EEG’s and peripheral physiological signals; Proceedings of the International Workshop on Multimedia Content Representation, Classification and Security; Istanbul, Turkey. 11–13 September 2006; pp. 530–537.
52. Fan X.A., Bi L.Z., Chen Z.L. Using EEG to detect drivers’ emotion with Bayesian Networks; Proceedings of the IEEE 2010 International Conference on Machine Learning and Cybernetics; Qingdao, China. 11–14 July 2010; pp. 1177–1181.
53. Abadi M.K., Subramanian R., Kia S.M., Avesani P., Patras I., Sebe N. DECAF: MEG-Based Multimodal Database for Decoding Affective Physiological Responses. IEEE Trans. Affect. Comput. 2015;6:209–222. doi: 10.1109/TAFFC.2015.2392932.
54. Wallbott H.G., Scherer K.R. The Measurement of Emotions. Academic Press; Cambridge, MA, USA: 1989. Assessing emotion by questionnaire; pp. 55–82.
55. Kensinger E.A. Remembering emotional experiences: The contribution of valence and arousal. Rev. Neurosci. 2004;15:241–252. doi: 10.1515/REVNEURO.2004.15.4.241.
56. Davis J.I., Senghas A., Ochsner K.N. How does facial feedback modulate emotional experience? J. Res. Personal. 2009;43:822–829. doi: 10.1016/j.jrp.2009.06.005.
57. Pfurtscheller G., Neuper C., Kalcher J. 40-Hz oscillations during motor behavior in man. Neurosci. Lett. 1993;164:179–182. doi: 10.1016/0304-3940(93)90886-P.
58. Crone N.E., Miglioretti D.L., Gordon B., Lesser R.P. Functional mapping of human sensorimotor cortex with electrocorticographic spectral analysis. II. Event-related synchronization in the gamma band. Brain A J. Neurol. 1998;121:2301–2315. doi: 10.1093/brain/121.12.2301.
59. Schack B., Vath N., Petsche H., Geissler H.G., Möller E. Phase-coupling of theta–gamma EEG rhythms during short-term memory processing. Int. J. Psychophysiol. 2002;44:143–163. doi: 10.1016/S0167-8760(01)00199-4.
60. Axmacher N., Henseler M.M., Jensen O., Weinreich I., Elger C.E., Fell J. Cross-frequency coupling supports multi-item working memory in the human hippocampus. Proc. Natl. Acad. Sci. USA. 2010;107:3228–3233. doi: 10.1073/pnas.0911531107.
61. Voytek B., Canolty R.T., Shestyuk A., Crone N., Parvizi J., Knight R.T. Shifts in gamma phase–amplitude coupling frequency from theta to alpha over posterior cortex during visual tasks. Front. Hum. Neurosci. 2010;4:191. doi: 10.3389/fnhum.2010.00191.
62. Maris E., van Vugt M., Kahana M. Spatially distributed patterns of oscillatory coupling between high-frequency amplitudes and low-frequency phases in human iEEG. Neuroimage. 2011;54:836–850. doi: 10.1016/j.neuroimage.2010.09.029.
63. Lisman J.E., Jensen O. The theta-gamma neural code. Neuron. 2013;77:1002–1016. doi: 10.1016/j.neuron.2013.03.007.
64. Woolley D.E., Timiras P.S. Prepyriform electrical activity in the rat during high altitude exposure. Electroencephalogr. Clin. Neurophysiol. 1965;18:680–690. doi: 10.1016/0013-4694(65)90112-4.
65. Fujisawa S., Buzsáki G. A 4 Hz oscillation adaptively synchronizes prefrontal, VTA, and hippocampal activities. Neuron. 2011;72:153–165. doi: 10.1016/j.neuron.2011.08.018.
66. Keil A., Bradley M.M., Hauk O., Rockstroh B., Elbert T., Lang P.J. Large-scale neural correlates of affective picture processing. Psychophysiology. 2002;39:641–649. doi: 10.1111/1469-8986.3950641.
67. Li M.K.K., Niles N., Gore S., Ebrahimi A., McGuinness J., Clark J.R. Social perception of morbidity in facial nerve paralysis. Head Neck. 2016;38:1158–1163. doi: 10.1002/hed.24299.
68. Nellis J.C., Ishii L.E., Boahene K.D., Byrne P.J. Psychosocial Impact of Facial Paralysis. Curr. Otorhinolaryngol. Rep. 2018;6:151–160. doi: 10.1007/s40136-018-0196-2.