Abstract

The purpose of this study was to compare saccade detection characteristics in two mobile eye trackers with different sampling rates in a natural task. Gaze data of 11 participants were recorded with one 60 Hz and one 120 Hz mobile eye tracker and compared directly to the saccades detected by a 1000 Hz stationary tracker while a reading task was performed. Saccades and fixations were detected using a velocity-based algorithm, and their properties were analyzed. Results showed no significant difference in the number of detected fixations, but mean fixation durations differed between the 60 Hz mobile and the stationary eye tracker. The 120 Hz mobile eye tracker showed a significant increase in the detection rate of saccades and an improved estimation of the mean saccade duration compared to the 60 Hz eye tracker. In conclusion, for the detection and analysis of fast eye movements such as saccades, a 120 Hz mobile eye tracker is preferable to a 60 Hz one.

Keywords: eye movement, mobile eye tracking, saccades, reading

Introduction

The investigation of eye movements using eye tracking technology provides a powerful tool for different disciplines. Besides its role in scientific and clinical tasks, eye tracking is widely used for examining visual attention in marketing studies ( 1-3 ), adapting learning behavior in real-time situations ( 4 ) or enhancing the control modalities in computer games ( 5-7 ). Saccadic eye movements and their statistics are of particular interest.

They are, for instance, used to investigate eye movements during reading, scene perception and visual search tasks; see Rayner ( 42 ) for a review. Eye movement abnormalities such as corrective saccades in a smooth pursuit task were shown to supplement the clinical diagnosis of schizophrenia ( 8-10 ) and can be linked to cognitive deficits in word processing in schizophrenia patients ( 11 ). Furthermore, saccadic eye movements can be used as objective indicators in mental health screening ( 12 ), for instance for dyslexia ( 13-15 ) or autism ( 16, 17 ). This clearly shows the scientific and clinical importance of accurately detecting the characteristics of saccades. Such eye movement tests are often conducted under controlled conditions with high-accuracy eye trackers that require head stabilization and presentation of stimuli on a fixed display. This, however, is unlike normal visual perception, and it is therefore important to move to more day-to-day tasks. Eye movements in such tasks can only be measured with mobile eye trackers, and it is unclear how well these can measure and detect saccadic eye movements.

In the analysis of eye tracking data, the algorithm used to detect events such as fixations, blinks and saccades is the crucial factor. Algorithms can be classified into three main categories based on their threshold criteria: dispersion-, velocity- or acceleration-based ( 18-20 ), or a combination of these criteria. Velocity-threshold identification is the fastest algorithm (no back-tracking is required, unlike in dispersion algorithms); it differentiates fixations and saccades by their point-by-point velocities and requires only one parameter, the velocity threshold ( 18 ). When velocity-based algorithms are used to analyze eye tracking data, the sampling rate of the eye tracking signal becomes the limiting factor ( 21 ). During saccades, the eye moves very fast, and at low sampling rates too few samples of these fast movements may be available for correct detection. Because of their velocity characteristics, saccades can be classified as “outliers” in the velocity profile ( 22-24 ) and serve as a robust criterion in analyzing eye tracking data.

According to the Nyquist theorem, an eye tracker with a higher sampling rate can detect saccades of shorter duration than one with a lower sampling rate, because saccade detection has a minimum duration threshold of about two sampling intervals, i.e. the inverse of the Nyquist frequency. Thus, an increase in sampling frequency from 60 Hz to 120 Hz is specifically expected to increase the detection rate of saccades whose durations lie between approximately 16 and 33 ms. The main sequence of saccades shows a linear relationship between saccade amplitude and duration ( 24, 25 ). In reading, which is a common activity and highly important in modern day-to-day life, saccade distributions show a high number of small saccades ( 33 ), which would not be detected if their durations fall into the interval between 16 and 33 ms. Saccadic behavior in reading tasks is a well-studied and well-explained characteristic of human eye movements. The task-specific saccade distributions directly impact the detection rate of eye trackers with a limited sampling rate. Thus, specifically in this task, an appropriate choice of sampling frequency is crucial. We therefore assume that an eye tracker with a higher sampling rate detects more saccades in a reading task, as it will also detect short-duration saccades. We furthermore hypothesize that the estimation of mean saccade duration is more reliable when based on gaze data sampled at a higher rate, because more samples are available to reliably detect saccade start and end.

Studies examining eye movements typically rely on stationary eye trackers with high sampling rates. However, novel mobile eye trackers allow recordings in more natural scenarios, especially paradigms in which the subject behaves freely. With the increasing use of mobile eye trackers, it becomes essential to evaluate how well they can detect and measure saccadic eye movements. Thus, this study evaluates the impact of an increase in the sampling rate of a head-worn eye tracker designed for field studies from 60 Hz to 120 Hz in a real-world task of high research interest: reading ( 26 ).
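The sampling argument can be made concrete with a short calculation. The following is a minimal sketch, assuming for illustration that a saccade has to span at least two sampling intervals to be picked up; the function names are hypothetical and not part of the study's analysis code.

```python
def sampling_interval_ms(rate_hz: float) -> float:
    """Time between two consecutive gaze samples, in milliseconds."""
    return 1000.0 / rate_hz


def min_detectable_duration_ms(rate_hz: float, min_intervals: int = 2) -> float:
    """Shortest saccade duration covered by `min_intervals` sampling intervals.

    Assumption for illustration: a saccade needs at least two sampling
    intervals (the inverse of the Nyquist frequency) to be detected.
    """
    return min_intervals * sampling_interval_ms(rate_hz)


# Sampling rates of the two mobile trackers and the stationary reference.
for rate in (60, 120, 1000):
    print(f"{rate:>4} Hz: interval {sampling_interval_ms(rate):5.2f} ms, "
          f"minimum duration {min_detectable_duration_ms(rate):5.2f} ms")
```

Under this assumption, the two mobile devices differ exactly in the band of saccade durations between roughly 16 and 33 ms.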

Methods

Participants

Eleven eye-healthy participants with a mean age of 34.9 ± 9.9 years were included in the study. The participants had normal or corrected-to-normal vision. All participants were naïve to the purpose of the study. All procedures followed the tenets of the Declaration of Helsinki. Informed consent was obtained from all participants after explanation of the nature and possible consequences of the study.

Equipment and experimental procedure

Participants wore one of two mobile eye trackers (SMI ETG w, 60 Hz sampling; SMI ETG 2w, 120 Hz sampling, SensoMotoric Instruments GmbH, Teltow, Germany). In order to evaluate saccade detection in these eye trackers, participants placed their head in a chin rest and a stationary eye tracker (EyeLink 1000, SR Research Ltd., Mississauga, Canada) was used as a reference. This eye tracker was placed below the screen at a distance of 60 cm. For stimulus presentation, a visual display (VIEWPixx /3D, VPixx Technologies, Canada) at a distance of 70 cm was used. Both mobile eye trackers and the stationary eye tracker were calibrated and validated using a 3-point calibration pattern composed of three black rings on a gray background. The stationary and mobile eye trackers recorded the eye positions simultaneously. The stationary eye tracker was set to record binocularly at a sampling rate of 1000 Hz, and the two mobile eye trackers to either 60 Hz or 120 Hz binocular tracking. To minimize the influence of the IR signal from the stationary eye tracker on the tracking ability of the mobile eye tracking glasses, the power of the IR LED was reduced to a minimum of 50% intensity.

The mobile eye tracking glasses use infrared (IR) LEDs arranged in a ring pattern within the glasses frame, while the IR array of the stationary eye tracker produces a single dot pattern. Because the two reflection patterns differ in shape and intensity (see Figure 1), with the corneal reflex of the stationary eye tracker being much more intense, both reflections were distinguishable from each other and a simultaneous measurement was possible. Moreover, the 60 Hz and 120 Hz eye trackers are expected to be equally affected by any potential mutual interference.

Figure 1.

Comparison of the corneal infrared reflections from the stationary (red arrow) and the mobile (blue arrows) eye tracker. (a) represents the image from the stationary and (b) from the mobile eye tracker in simultaneous use.

Prior to the start of the experiment, participants were informed that they would read a text and would afterwards have to answer questions about it, to ensure attentive reading. To enable offline temporal synchronization between both eye trackers for the data analysis, a peripheral fixation point displaced by 25° from the left side of the text was displayed on the screen for three seconds. Subsequently, the sample text was presented. The letter size was set to 23 pixels, which corresponded to an angular size of 0.5° for capital letters in the font Helvetica. This relatively large letter size ensured that every normally sighted participant was able to read the text. Two sample texts were created: Text 1 contained information about the human visual system and comprised 237 words; Text 2 was about Tuebingen and the Eberhard Karls University Tuebingen and comprised 276 words. An example of the experimental procedure is given in Figure 2. The participants were instructed to read silently and at their normal reading speed. Subsequently, the participants confirmed or rejected five statements regarding the content of the text by pressing a button on the keyboard. All stimuli were programmed and displayed using the psychophysics toolbox (Psychtoolbox 3, Kleiner M, et al. 2007) in the Matlab programming language (Matlab, MathWorks Inc., Natick, Massachusetts).
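The relation between letter size in pixels and angular size can be checked with the standard visual-angle formula. The sketch below is illustrative only: the pixel pitch of the display is not stated in the text, so `PIXEL_PITCH_MM` is a placeholder assumption, and the function name is hypothetical.

```python
import math


def visual_angle_deg(size_mm: float, distance_mm: float) -> float:
    """Visual angle subtended by an object of `size_mm` seen from `distance_mm`."""
    return math.degrees(2.0 * math.atan(size_mm / (2.0 * distance_mm)))


# Values taken from the text: 23-pixel capital letters viewed at 70 cm.
LETTER_HEIGHT_PX = 23
VIEWING_DISTANCE_MM = 700.0
# Assumption: the pixel pitch is not given in the text; 0.27 mm is a placeholder.
PIXEL_PITCH_MM = 0.27

letter_angle = visual_angle_deg(LETTER_HEIGHT_PX * PIXEL_PITCH_MM, VIEWING_DISTANCE_MM)
print(f"Capital letter height: {letter_angle:.2f} deg")
```

With the assumed pixel pitch, the result lies close to the reported 0.5°; a different pitch would scale the angle almost linearly.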

Analysis

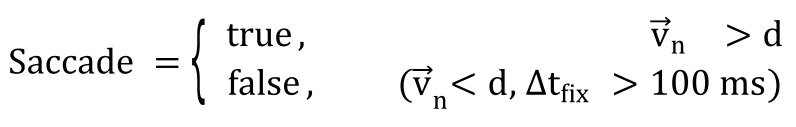

From the eye tracking data, the average number of fixations, their average duration, and the average number of saccades and their durations were calculated. Blinks were excluded from the dataset prior to analysis. Blinks were identified on the basis of the individual eye tracker criteria (the pupil size is very small or zero). Fixations and saccades were identified using an algorithm based on the velocity profile of the gaze data, see equation ( 1 ), calculated as the difference in horizontal eye position between successive samples divided by the intersample time interval ( 18, 27 ), without application of a running-average filter prior to the analysis. According to equation (2), a fixation is classified as a sequence of gaze points for which the eye-velocity signal $\vec{v}_n$ remains below a threshold of d = 60 °/sec for a minimum duration of ΔtFix = 100 ms (average fixation durations are around 200 – 300 ms ( 33 ; Starr & Rayner, 2001)). A single fixation and its associated duration were defined as the time interval in which equation (2) resulted in a ‘false’ outcome. In addition to the fixation duration, the absolute number of fixations was analyzed.

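$$\vec{v}_n = \frac{x_{n+1} - x_n}{\Delta t} \tag{1}$$

$$\left|\vec{v}_n\right| > d \tag{2}$$

Here, $x_n$ denotes the horizontal eye position of sample $n$ and $\Delta t$ the intersample time interval.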

Furthermore, the velocity profile of the gaze data was used for saccade detection. A saccade event was identified as the time interval where the condition of equation (2) was true (i.e., the intervals not assigned to a fixation). The local maximum in this saccade time interval was localized using Matlab and marked a saccade event. The saccade duration was calculated as the time interval during which the velocity of the eye remained above the velocity threshold d and equation (2) was true.
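The detection procedure described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the Matlab code used in the study: it uses the threshold d = 60 °/sec and the minimum fixation duration of 100 ms from the text, works on horizontal position only, and runs on a synthetic trace; all function names are hypothetical.

```python
from typing import List, Tuple

VELOCITY_THRESHOLD = 60.0  # deg/s, threshold d from the text
MIN_FIXATION_S = 0.100     # s, minimum fixation duration from the text


def point_velocities(x_deg: List[float], rate_hz: float) -> List[float]:
    """Horizontal velocity between successive samples, in deg/s."""
    dt = 1.0 / rate_hz
    return [(x1 - x0) / dt for x0, x1 in zip(x_deg, x_deg[1:])]


def runs(flags: List[bool]) -> List[Tuple[bool, int, int]]:
    """Group consecutive equal flags into (flag, start_index, length) runs."""
    out = []
    start = 0
    for i in range(1, len(flags) + 1):
        if i == len(flags) or flags[i] != flags[start]:
            out.append((flags[start], start, i - start))
            start = i
    return out


def detect_events(x_deg: List[float], rate_hz: float) -> Tuple[List[float], List[float]]:
    """Return (fixation_durations_s, saccade_durations_s) for a gaze trace."""
    dt = 1.0 / rate_hz
    fast = [abs(v) > VELOCITY_THRESHOLD for v in point_velocities(x_deg, rate_hz)]
    fixations, saccades = [], []
    for is_fast, _, length in runs(fast):
        duration = length * dt
        if is_fast:
            saccades.append(duration)      # velocity above threshold
        elif duration >= MIN_FIXATION_S:
            fixations.append(duration)     # slow and long enough
    return fixations, saccades


# Synthetic 120 Hz trace (not study data): fixation, 2-degree saccade, fixation.
trace_120 = [0.0] * 30 + [0.7, 1.4] + [2.0] * 30
print(detect_events(trace_120, 120.0))
# The same movement, keeping every second sample to mimic 60 Hz recording.
print(detect_events(trace_120[::2], 60.0))
```

Running the same synthetic movement at both rates illustrates how the estimated saccade duration depends on where the samples fall relative to saccade onset and offset.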

To analyze the difference in performance between the 60 Hz and the 120 Hz mobile tracking glasses, the relative differences in the number and duration of detected fixations and saccades between the mobile and the stationary eye tracker were calculated and evaluated. All calculations are based on the gaze data of the right eye. Normality of the data was investigated using the Shapiro-Wilk test. For normally distributed data, a t-test (power 1-β = 0.80) was performed to test for differences in detection ability; otherwise, a Wilcoxon rank test was performed. The critical p-value (α error) was set to 0.05, and the statistical analyses were performed using IBM SPSS Statistics 22 (IBM, Armonk, USA).
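The relative measures can be illustrated as follows. This sketch assumes that event counts of a mobile tracker are expressed as a percentage of the stationary reference and that durations are compared as a difference in milliseconds; the exact computation is not spelled out in the text, the function names are hypothetical, and the numbers are made up for illustration.

```python
from statistics import mean, stdev
from typing import List, Sequence


def relative_count_percent(mobile: Sequence[int], reference: Sequence[int]) -> List[float]:
    """Per-participant event count of a mobile tracker as a percentage of the reference."""
    return [100.0 * m / r for m, r in zip(mobile, reference)]


def duration_offset_ms(mobile: Sequence[float], reference: Sequence[float]) -> List[float]:
    """Per-participant mean-duration difference (mobile minus reference), in ms."""
    return [m - r for m, r in zip(mobile, reference)]


# Made-up numbers for three hypothetical participants; NOT data from the study.
saccades_mobile = [50, 60, 70]
saccades_reference = [100, 100, 100]

rel = relative_count_percent(saccades_mobile, saccades_reference)
print(f"relative detection: {mean(rel):.1f} ± {stdev(rel):.1f} %")
```

The per-participant values produced this way would then enter the normality check and the subsequent t-test or Wilcoxon rank test.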

Results

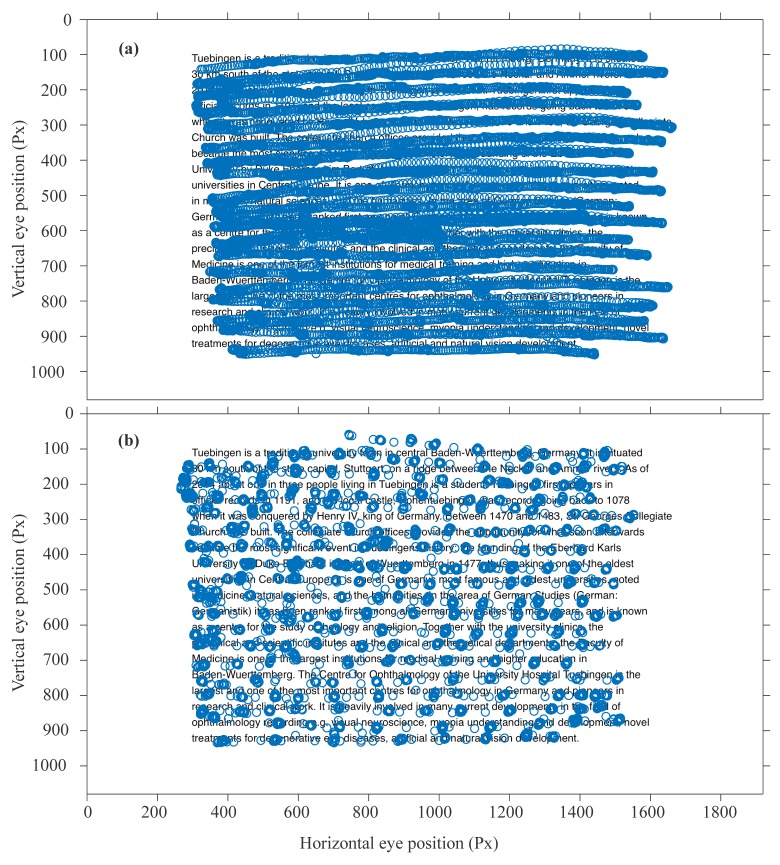

Figure 2 compares the eye movement data from the stationary eye tracker (a) with the data from the 120 Hz mobile eye tracker (b), superimposed on the text that was read; the data of the 60 Hz mobile eye tracker look similar. In order to plot the mobile eye tracking data (Figure 2b), the data were manually scaled using an empirically defined scaling factor. Figure 2 shows that the reduced sampling rate of the mobile eye tracker leads to a sparse representation of eye positions during saccade midflight.

Figure 2.

Raw gaze traces superimposed on the stimulus text. (a) shows the eye position recorded with a 1000 Hz eye tracker (EyeLink 1000) and (b) the eye position recorded at a sampling rate of 120 Hz (SMI mobile glasses). Gaze data and text were aligned manually by the author (horizontal and vertical stretching).

A larger number of saccades was detected with the 120 Hz than with the 60 Hz eye tracker (p = 0.011, two-sided t-test), see Table 1 and Figure 3. The 120 Hz mobile eye tracker also led to a more reliable estimation of mean saccade duration (Δ = 5.91 ms, p = 0.033, two-sided t-test), see Figure 3. Despite these differences, the number of saccades undetected by the stationary eye tracker but detected by the mobile eye trackers was very low and remained below 1% of the total number of correctly detected saccades. The data therefore show that saccade detection was generally adequate in the mobile eye trackers, but the 120 Hz eye tracker was better at measuring saccade duration than the 60 Hz eye tracker.

In contrast to the saccades, no significant difference in the number of fixations was found between the 60 Hz and the 120 Hz eye tracker (p = 0.110, Wilcoxon test). Statistical analysis also showed no significant difference between the 60 Hz and the 120 Hz devices in fixation durations (p = 0.088, paired t-test). Nevertheless, there is a trend towards more accurate fixation detection in the 120 Hz device when compared to the stationary eye tracker. Mean and standard deviation of the number and the duration of saccades and fixations are shown in Figure 3.

Figure 3.

Mean number of fixations (a) and saccades (b), +/- standard deviation (SD). (c) and (d) present the mean fixation and saccade durations, respectively. Asterisks indicate the significance level: * α < 0.05, *** α < 0.001

Table 1.

Relative comparison of two mobile eye trackers to a stationary eye tracker. Mean and standard deviation (SD) for the number and the duration of saccades and fixations. Asterisks indicate the significance level: * α < 0.05; n = 11

| Measure | 60 Hz mobile eye tracker (mean ± SD) | 120 Hz mobile eye tracker (mean ± SD) | Relative difference between mobile eye trackers |
| --- | --- | --- | --- |
| Number of saccades | 56.11 ± 12.44 % | 68.37 ± 13.97 % | 12.25 % * |
| Duration of saccades (ms) | -10.81 ± 7.51 ms | -4.89 ± 2.76 ms | 5.91 ms * |
| Number of fixations | 76.72 ± 18.67 % | 86.41 ± 15.43 % | 9.69 % |
| Duration of fixations (ms) | 10.55 ± 10.13 ms | 4.30 ± 14.33 ms | 6.25 ms |

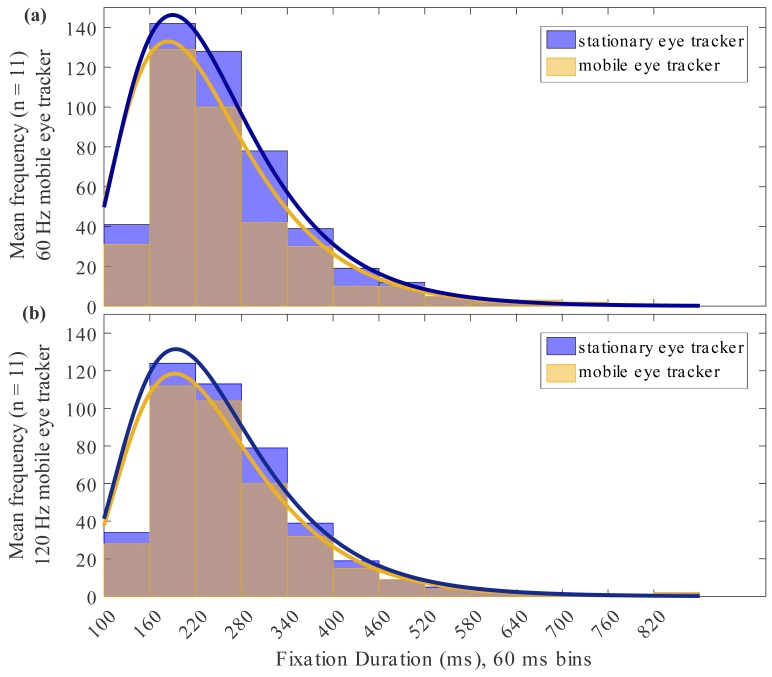

Figure 4 illustrates the frequency distribution of fixation durations for the 60 Hz (Figure 4a) and the 120 Hz device (Figure 4b), each compared to the stationary eye tracker. Fixation durations in the silent reading task ranged from 100 ms to 600 ms. In comparison to the stationary reference eye tracker, the distribution of fixations recorded with the 60 Hz mobile eye tracker showed a shift of the maximum towards shorter fixation durations (p = 0.01, Wilcoxon rank test), while the distribution of the 120 Hz mobile eye tracker revealed a trend towards a better assessment of fixation durations (p = 0.59, Wilcoxon rank test).

Figure 4.

Mean frequency distribution (n = 11) of fixation durations for the stationary and the (a) 60 Hz and (b) 120 Hz mobile eye tracker in milliseconds (ms).

Discussion

Previous studies have revealed that the use of stationary eye trackers with lower sampling rates results in significantly impoverished detection and measurement of saccadic eye movements, especially at the border of the stimulus screen ( 28 ). Generally, high-frequency stationary eye trackers should be preferred in investigations of saccades, and the use of eye trackers with lower sampling rates should be restricted to the examination of fixation behavior and pupil size ( 29 ). While the effects of sampling rate are known for stationary eye trackers, no such information is available for mobile eye trackers, which often place the cameras closer to the participants' eyes and use a different pattern of IR lighting and a different calibration method. In the current study, we compared the impact of the sampling rate of mobile eye trackers on the extraction rates of saccades and fixations in reading, a common task of daily life.

Mobile eye tracking and reading

The development of mobile eye trackers in recent years ( 30-32 ) has enabled researchers to examine eye movements during reading in a natural context ( 33 ). Mobile eye tracking of reading may enhance clinical diagnosis, for example by differentiating progressive supranuclear palsy from Parkinson's disease ( 34 ) or in mental or linguistic disorders ( 11, 12 ). The measurement of eye movements in such tasks has furthered the understanding of learning ( 4 ), e.g. in medical and health professions ( 35 ), and can be extended to e-learning applications ( 36 ). However, research on the reliability of mobile eye trackers in the detection of saccades and fixations, especially in reading, is sparse.

The current analysis of saccadic eye movements demonstrated the benefit of the higher sampling rate of the 120 Hz mobile eye tracker in the detection of saccades. During reading, the amplitude and number of both progressive and return (regression) saccades depend on various intrinsic and extrinsic factors ( 33 ). To evaluate these properties of saccades, it is therefore important to measure the parameters of saccadic eye movements accurately. First, external aspects such as visual information factors, e.g. the spaces or type of characters between words ( 37, 38 ) or the length and orthographic information of the words ( 39-41 ), impact saccadic amplitudes. Second, higher-level factors such as spatial coding ( 22 ) or the location of attention ( 42, 43 ) influence saccadic behavior. The main sequence of saccadic eye movements ( 25, 44 ) demonstrates a linear correlation between saccadic amplitude and duration. In reading, people often make small saccadic eye movements (e.g. refixations of the same word). To detect such small-amplitude saccades accurately, it is crucial to use high-frequency equipment that facilitates the recording of short saccade durations. Our results revealed that saccades are better detected with a 120 Hz sampling rate and that the distribution of saccade amplitudes is better measured with this higher sampling rate.

Event detection algorithms for eye movement data

In the analysis of saccadic eye movements, we used the standard approach based on the velocity profile of the gaze traces ( 18 ). Engbert and Kliegl ( 24 ) developed a velocity-based algorithm for the detection of microsaccades involving a noise-dependent detection threshold and a temporal overlap criterion for the binocular occurrence of saccades. The advantage of a noise-dependent algorithm is that it can be adapted easily to different eye tracking technologies and inter-individual differences ( 24 ). In future work, such noise-dependent algorithms could therefore improve the detection performance for eye tracking data with low sampling rates if the internal noise distribution differs between the eye trackers. A further approach for future work is to use data from both eyes in the analysis (the method by Engbert and Kliegl requires saccades to overlap in both eyes). In a real-world application, a saccade detection algorithm could account for binocularity and thereby increase the accuracy of saccade detection. One further extension is to use acceleration in addition to velocity to detect saccades ( 45 ) and to combine this with noise-dependent saccade thresholds ( 46 ). Future work can also examine the use of more complex algorithms, including continuous wavelet analysis and principal component analysis (PCA), or methods exploiting the fact that saccades can be identified as local singularities ( 47 ). Although the focus of the present study was not the comparison of algorithms for event detection in mobile eye tracking, advanced computations might improve the performance in event detection.
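A noise-dependent threshold of the kind discussed above could look roughly like the sketch below. It follows the general idea of Engbert and Kliegl ( 24 ) but is restricted to the horizontal component and omits the binocular overlap criterion; the five-sample velocity window and the multiplier `lam = 6.0` are assumptions here and should be checked against the original publication. The trace is synthetic and all names are hypothetical.

```python
import math
import random
from statistics import median
from typing import List


def smoothed_velocity(x: List[float], rate_hz: float) -> List[float]:
    """Five-sample moving-window velocity (deg/s) for samples 2 .. len(x) - 3."""
    dt = 1.0 / rate_hz
    return [(x[n + 2] + x[n + 1] - x[n - 1] - x[n - 2]) / (6.0 * dt)
            for n in range(2, len(x) - 2)]


def noise_threshold(velocity: List[float], lam: float = 6.0) -> float:
    """Velocity threshold as a multiple of a median-based spread estimate."""
    med_sq = median(v * v for v in velocity)
    med = median(velocity)
    # Guard against tiny negative values caused by floating-point rounding.
    sigma = math.sqrt(max(0.0, med_sq - med * med))
    return lam * sigma


# Synthetic 120 Hz trace (not study data): noisy fixation, 2-degree step, noisy fixation.
rng = random.Random(0)
trace = ([rng.gauss(0.0, 0.01) for _ in range(60)]
         + [0.7, 1.4]
         + [2.0 + rng.gauss(0.0, 0.01) for _ in range(60)])

vel = smoothed_velocity(trace, 120.0)
thr = noise_threshold(vel)
n_above = sum(abs(v) > thr for v in vel)
print(f"threshold: {thr:.2f} deg/s, samples above threshold: {n_above}")
```

Because the threshold scales with the measured noise level, the same code adapts to devices with different noise characteristics without a hand-tuned fixed threshold.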

Head-worn vs. head fixed eye tracking

The accuracy of eye trackers strongly depends on the conditions of the planned experiment ( 48 ) and furthermore on restrictions of head movements ( 49 ); hence it is important to consider application-oriented parameters as well. Generally, head-worn eye trackers are not restricted to a certain head position, but the eye movements show a more complex pattern compared to a head-fixed situation, as for instance the vestibulo-ocular reflex ( 50 ) or the optokinetic nystagmus ( 51 ) occur. In inter-device comparisons between mobile eye trackers, further studies will have to clarify whether event detection in mobile eye tracking depends on the type of tracking (e.g. pupil/glint tracking vs. 3D eye model), the number of tracked eyes, or the use of advanced calibration methods or algorithms, as discussed above. The current study reports results of laboratory work with a head-fixed measurement setup. Mobile eye trackers enable a head-free acquisition of eye movement data, and it was shown that head movements strongly contribute to the processing of the visual input, and thus to oculomotor behavior ( 52, 53 ). Furthermore, in head-free scenarios, displacement of the position or orientation of the eye tracker on the head was shown to have a significant influence on the accuracy of eye trackers ( 48 ). Given that, upcoming studies will have to investigate the sampling dependence of mobile eye trackers in head-free scenarios and real-world tasks.

Fixation statistics in reading

Analysis of the fixation statistics during a common reading task showed no difference in the number of detected fixations or the mean fixation duration between the two mobile eye trackers. The observed mean fixation duration of 220 ms to 240 ms is comparable to that of other studies in which silent reading tasks were performed ( 33, 54, 55 ). Yang et al. ( 38 ) reported a shorter fixation duration of 211 ms for a reading task. The higher sampling rate of the 120 Hz mobile eye tracker led to a closer mapping of the frequency distribution of fixation durations, which is also represented in the significantly smaller mean fixation duration compared to the 60 Hz eye tracker. In all cases, the frequency distribution of fixation durations showed the typical right-tailed shape ( 23, 38 ) with a maximum around 200 ms. The choice of a typical and realistic reading task, which represents a common daily visual duty, suggests that this estimation is also correct for the saccade amplitude distribution in reading and possibly in other tasks.

Future implications in virtual reality applications

Mobile eye tracking is becoming progressively more important with the introduction of head-mounted displays (HMD) and virtual reality (VR) glasses, which enable a more realistic interaction mediated by human-computer interfaces ( 56-61 ). Thus, there is an increasing need for fast, miniaturized eye trackers that combine usability for field studies with high accuracy and can be incorporated into HMD or VR systems ( 62, 63 ). Real-time gaze estimation based on precise and fast eye tracking with a higher sampling rate enables accurate and thus comfortable stereo image presentation in virtual reality simulations and highly interactive virtual reality scenarios. Specifically, the detection of fast eye movements such as saccades can enable gaze pointing to virtual objects. Juhola et al. ( 21 ) showed that a velocity-based algorithm for saccade analysis requires data sampled at a minimum of 70 Hz. DiScenna et al. ( 64 ) stated that video cameras with frame rates above 120 Hz are necessary for a reliable measurement of all kinds of eye movements. The results of the current study suggest that a 120 Hz mobile eye tracker also leads to more reliable measurements in a task-specific evaluation of saccade and fixation statistics in reading.

Conclusions

The study reports on a relative performance comparison between two mobile video-based eye trackers during reading. Eye tracking at a low sampling rate (60 Hz) leads to an underestimation of the number of saccades, while 120 Hz sampling results in a higher accuracy in the detection of fast eye movements and fixation durations. Reliable detection of short saccade durations, as they occur in reading, requires higher sampling rates of the eye trackers used. Reliable and robust detection of saccades by fast and accurate mobile eye trackers will lead to novel developments in gaze-contingent protocols, e.g. for virtual reality simulations. Furthermore, increased sampling rates in eye tracking technology might enable advancements in new fields, such as clinical applications for eye movement training in visually impaired patients or the analysis of clinical eye movement markers in the diagnosis of diseases.

Ethics and Conflict of Interest

The author(s) declare(s) that the contents of the article are in agreement with the ethics described in http://biblio.unibe.ch/portale/elibrary/BOP/jemr/ethics.html and that there is no conflict of interest regarding the publication of this paper.

Acknowledgements

This work was done in an industry-on-campus cooperation between the University Tuebingen and Carl Zeiss Vision International GmbH. The work was supported by third-party funding (ZUK 63). The beta version of the 120 Hz mobile eye tracker was developed and kindly provided by SensoMotoric Instruments GmbH, D-14513 Teltow, Germany.

References

- Oliveira D., Machín L., Deliza R., Rosenthal A., Walter E. H., Giménez A., & Ares G. (2016). Consum-ers’ attention to functional food labels: Insights from eye-tracking and change detection in a case study with probiotic milk. Lebensmittel-Wissenschaft + Technologie, 68, 160–167. 10.1016/j.lwt.2015.11.066 [DOI] [Google Scholar]

- Lahey J. N., & Oxley D. (2016). The Power of Eye Tracking in Economics Experiments. The American Economic Review, 106(5), 309–313. 10.1257/aer.p20161009 [DOI] [Google Scholar]

- Wedel M. (2013). Attention research in marketing: A review of eye tracking studies. Robert H. Smith School Research Paper No. RHS, 2460289. 10.2139/ssrn.2460289 [DOI]

- Rosch J. L., & Vogel-Walcutt J. J. (2013). A review of eye-tracking applications as tools for training. Cognition Technology and Work, 15(3), 313–327. 10.1007/s10111-012-0234-7 [DOI] [Google Scholar]

- Vickers S., Istance H., & Hyrskykari A. (2013). Performing locomotion tasks in immersive computer games with an adapted eye-tracking interface. [TACCESS] ACM Transactions on Accessible Computing, 5(1), 2 10.1145/2514856 [DOI] [Google Scholar]

- Isokoski P., Joos M., Spakov O., & Martin B. (2009). Gaze controlled games. Universal Access in the Information Society, 8(4), 323–337. 10.1007/s10209-009-0146-3 [DOI] [Google Scholar]

- Isokoski P., & Martin B. (2006). Eye tracker input in first person shooter games. Paper presented at the Proceedings of the 2nd Conference on Communication by Gaze Interaction: Communication by Gaze Interaction-COGAIN 2006: Gazing into the Future.

- Benson P. J., Beedie S. A., Shephard E., Giegling I., Rujescu D., & St Clair D. (2012). Simple viewing tests can detect eye movement abnormalities that distinguish schizophrenia cases from controls with exceptional accuracy. Biological Psychiatry, 72(9), 716–724. 10.1016/j.biopsych.2012.04.019 [DOI] [PubMed] [Google Scholar]

- Sereno A. B., & Holzman P. S. (1995). Antisaccades and smooth pursuit eye movements in schizophrenia. Biological Psychiatry, 37(6), 394–401. 10.1016/0006-3223(94)00127-O [DOI] [PubMed] [Google Scholar]

- Shulgovskiy V. V., Slavutskaya M. V., Lebedeva I. S., Karelin S. A., Moiseeva V. V., Kulaichev A. P., & Kaleda V. G. (2015). Saccadic responses to consecutive visual stimuli in healthy people and patients with schizophrenia. Human Physiology, 41(4), 372–377. 10.1134/s0362119715040143 [DOI] [PubMed] [Google Scholar]

- Fernández G., Sapognikoff M., Guinjoan S., Orozco D., & Agamennoni O. (2016). Word processing during reading sentences in patients with schizophrenia: Evidences from the eyetracking technique. Comprehensive Psychiatry, 68, 193–200. 10.1016/j.comppsych.2016.04.018 [DOI] [PubMed] [Google Scholar]

- Vidal M., Turner J., Bulling A., & Gellersen H. (2012). Wearable eye tracking for mental health monitoring. Computer Communications, 35(11), 1306–1311. 10.1016/j.comcom.2011.11.002 [DOI] [Google Scholar]

- Nilsson Benfatto M., Öqvist Seimyr G., Ygge J., Pansell T., Rydberg A., & Jacobson C. (2016). Screening for Dyslexia Using Eye Tracking during Reading. PLoS One, 11(12), e0165508. 10.1371/journal.pone.0165508 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Biscaldi M., Gezeck S., & Stuhr V. (1998). Poor saccadic control correlates with dyslexia. Neuropsychologia, 36(11), 1189–1202. 10.1016/S0028-3932(97)00170-X [DOI] [PubMed] [Google Scholar]

- Eden G. F., Stein J. F., Wood H. M., & Wood F. B. (1994). Differences in eye movements and reading problems in dyslexic and normal children. Vision Research, 34(10), 1345–1358. 10.1016/0042-6989(94)90209-7 [DOI] [PubMed] [Google Scholar]

- Rosenhall U., Johansson E., & Gillberg C. (1988). Oculomotor findings in autistic children. The Journal of Laryngology and Otology, 102(5), 435–439. 10.1017/S0022215100105286 [DOI] [PubMed] [Google Scholar]

- Kemner C., Verbaten M. N., Cuperus J. M., Camfferman G., & van Engeland H. (1998). Abnormal saccadic eye movements in autistic children. Journal of Autism and Developmental Disorders, 28(1), 61–67. 10.1023/A:1026015120128 [DOI] [PubMed] [Google Scholar]

- Salvucci D. D., & Goldberg J. H. (2000). Identifying fixations and saccades in eye-tracking protocols. Paper presented at the Proceedings of the 2000 symposium on Eye tracking research & applications, Palm Beach Gardens, Florida, USA: 10.1145/355017.355028 [DOI] [Google Scholar]

- Duchowski A. (2007). Eye Tracking Methodology: Theory and Practice: Springer; London. [Google Scholar]

- Nyström M., & Holmqvist K. (2010). An adaptive algorithm for fixation, saccade, and glissade detection in eyetracking data. Behavior Research Methods, 42(1), 188–204. 10.3758/brm.42.1.188 [DOI] [PubMed] [Google Scholar]

- Juhola M., & Pyykkö I. (1987). Effect of sampling frequencies on the velocity of slow and fast phases of nystagmus. International Journal of Bio-Medical Computing, 20(4), 253–263. Retrieved from http://www.ncbi.nlm.nih.gov/pubmed/3308713 10.1016/0020-7101(87)90036-5 [DOI] [PubMed] [Google Scholar]

- Liversedge S. P., & Findlay J. M. (2000). Saccadic eye movements and cognition. Trends in Cognitive Sciences, 4(1), 6–14. 10.1016/S1364-6613(99)01418-7 [DOI] [PubMed] [Google Scholar]

- Inhoff A. W., Seymour B. A., Schad D., & Greenberg S. (2010). The size and direction of saccadic curvatures during reading. Vision Research, 50(12), 1117–1130. 10.1016/j.visres.2010.03.025 [DOI] [PubMed] [Google Scholar]

- Engbert R., & Kliegl R. (2003). Microsaccades uncover the orientation of covert attention. Vision Research, 43(9), 1035–1045. 10.1016/S0042-6989(03)00084-1 [DOI] [PubMed] [Google Scholar]

- Bahill A. T., Clark M. R., & Stark L. (1975). The main sequence, a tool for studying human eye movements. Mathematical Biosciences, 24(3-4), 191–204. 10.1016/0025-5564(75)90075-9 [DOI] [Google Scholar]

- Rayner K., Pollatsek A., Ashby J., & Clifton C. Jr (2012). Psychology of reading. Psychology Press. [Google Scholar]

- van der Geest J. N., & Frens M. A. (2002). Recording eye movements with video-oculography and scleral search coils: A direct comparison of two methods. Journal of Neuroscience Methods, 114(2), 185–195. 10.1016/S0165-0270(01)00527-1 [DOI] [PubMed] [Google Scholar]

- Ooms K., Dupont L., Lapon L., & Popelka S. (2015). Accuracy and precision of fixation locations recorded with the low-cost Eye Tribe tracker in different experimental setups. Journal of Eye Movement Research, 8(1). [Google Scholar]

- Dalmaijer E. (2014). Is the low-cost EyeTribe eye tracker any good for research? PeerJ PrePrints, 2:e585v1. 10.7287/peerj.preprints.585v1 [DOI]

- Babcock J. S., & Pelz J. B. (2004). Building a light-weight eyetracking headgear. Proceedings of the 2004 symposium on Eye tracking research & applications, 109–114. 10.1145/968363.968386 [DOI]

- Li D., Babcock J., & Parkhurst D. J. (2006). openEyes: a low-cost head-mounted eye-tracking solution. Paper presented at the Proceedings of the 2006 symposium on Eye tracking research & applications, San Diego, California. [Google Scholar]

- Pfeiffer T., & Renner P. (2014). EyeSee3D: a low-cost approach for analyzing mobile 3D eye tracking data using computer vision and augmented reality technology. Paper presented at the Proceedings of the Symposium on Eye Tracking Research and Applications, Safety Harbor, Florida. 10.1145/2578153.2578183 [DOI] [Google Scholar]

- Rayner K. (1998). Eye movements in reading and information processing: 20 years of research. Psychological Bulletin, 124(3), 372–422. Retrieved from http://www.ncbi.nlm.nih.gov/pubmed/9849112 [DOI] [PubMed] [Google Scholar]

- Marx S., Respondek G., Stamelou M., Dowiasch S., Stoll J., Bremmer F., et al. Einhäuser W. (2012). Validation of mobile eye-tracking as novel and efficient means for differentiating progressive supranuclear palsy from Parkinson’s disease. Frontiers in Behavioral Neuroscience, 6(88), 88. 10.3389/fnbeh.2012.00088 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kok E. M., & Jarodzka H. (2017). Before your very eyes: The value and limitations of eye tracking in medical education. Medical Education, 51(1), 114–122. 10.1111/medu.13066 [DOI] [PubMed] [Google Scholar]

- Molina A. I., Redondo M. A., Lacave C., & Ortega M. (2014). Assessing the effectiveness of new devices for accessing learning materials: An empirical analysis based on eye tracking and learner subjective perception. Computers in Human Behavior, 31, 475–490. 10.1016/j.chb.2013.04.022 [DOI] [Google Scholar]

- Pollatsek A., & Rayner K. (1982). Eye movement control in reading: The role of word boundaries. Journal of Experimental Psychology. Human Perception and Performance, 8(6), 817–833. 10.1037/0096-1523.8.6.817 [DOI] [Google Scholar]

- Yang S. N., & McConkie G. W. (2001). Eye movements during reading: A theory of saccade initiation times. Vision Research, 41(25-26), 3567–3585. 10.1016/S0042-6989(01)00025-6 [DOI] [PubMed] [Google Scholar]

- Rayner K., & McConkie G. W. (1976). What guides a reader's eye movements? Vision Research, 16(8), 829–837. 10.1016/0042-6989(76)90143-7 [DOI] [PubMed] [Google Scholar]

- Joseph H. S., Liversedge S. P., Blythe H. I., White S. J., & Rayner K. (2009). Word length and landing position effects during reading in children and adults. Vision Research, 49(16), 2078–2086. 10.1016/j.visres.2009.05.015 [DOI] [PubMed] [Google Scholar]

- Vitu F., O’Regan J. K., Inhoff A. W., & Topolski R. (1995). Mindless reading: Eye-movement characteristics are similar in scanning letter strings and reading texts. Perception & Psychophysics, 57(3), 352–364. Retrieved from http://www.ncbi.nlm.nih.gov/pubmed/7770326 10.3758/BF03213060 [DOI] [PubMed] [Google Scholar]

- Rayner K. (2009). Eye movements and attention in reading, scene perception, and visual search. The Quarterly Journal of Experimental Psychology, 62(8), 1457–1506. 10.1080/17470210902816461 [DOI] [PubMed] [Google Scholar]

- Schneider W. X., & Deubel H. (1995). Visual Attention and Saccadic Eye Movements: Evidence for Obligatory and Selective Spatial Coupling. In J. M. Findlay, R. Walker, & R. W. Kentridge (Eds.), Studies in Visual Information Processing, 6, 317–324. North-Holland. [Google Scholar]

- Harris C. M., & Wolpert D. M. (2006). The main sequence of saccades optimizes speed-accuracy trade-off. Biological Cybernetics, 95(1), 21–29. 10.1007/s00422-006-0064-x [DOI] [PMC free article] [PubMed] [Google Scholar]

- Behrens F., & Weiss L. R. (1992). An algorithm separating saccadic from nonsaccadic eye movements automatically by use of the acceleration signal. Vision Research, 32(5), 889–893. Retrieved from http://www.ncbi.nlm.nih.gov/pubmed/1604857 10.1016/0042-6989(92)90031-D [DOI] [PubMed] [Google Scholar]

- Behrens F., Mackeben M., & Schröder-Preikschat W. (2010). An improved algorithm for automatic detection of saccades in eye movement data and for calculating saccade parameters. Behavior Research Methods, 42(3), 701–708. 10.3758/BRM.42.3.701 [DOI] [PubMed] [Google Scholar]

- Bettenbühl M., Paladini C., Mergenthaler K., Kliegl R., Engbert R., & Holschneider M. (2010). Microsaccade characterization using the continuous wavelet transform and principal component analysis. Journal of Eye Movement Research, 3(5). 10.16910/jemr.3.5.1 [DOI] [Google Scholar]

- Niehorster D. C., Cornelissen T. H. W., Holmqvist K., Hooge I. T. C., & Hessels R. S. (2017). What to expect from your remote eye-tracker when participants are unrestrained. Behavior Research Methods, 1–15. 10.3758/s13428-017-0863-0 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hessels R. S., Cornelissen T. H. W., Kemner C., & Hooge I. T. C. (2015). Qualitative tests of remote eyetracker recovery and performance during head rotation. Behavior Research Methods, 47(3), 848–859. 10.3758/s13428-014-0507-6 [DOI] [PubMed] [Google Scholar]

- Fetter M. (2007). Vestibuloocular reflex. Neuro-Ophthalmology, 40, 35–51. Karger Publishers. 10.1159/000100348 [DOI] [PubMed] [Google Scholar]

- Crawford J. D., Martinez-Trujillo J. C., & Klier E. M. (2003). Neural control of three-dimensional eye and head movements. Current Opinion in Neurobiology, 13(6), 655–662. 10.1016/j.conb.2003.10.009 [DOI] [PubMed] [Google Scholar]

- Rifai K., & Wahl S. (2016). Specific eye-head coordination enhances vision in progressive lens wearers. Journal of Vision (Charlottesville, Va.), 16(11), 5–5. 10.1167/16.11.5 [DOI] [PubMed] [Google Scholar]

- 't Hart B. M., Vockeroth J., Schumann F., Bartl K., Schneider E., König P., & Einhäuser W. (2009). Gaze allocation in natural stimuli: Comparing free exploration to head-fixed viewing conditions. Visual Cognition, 17(6-7), 1132–1158. 10.1080/13506280902812304 [DOI] [Google Scholar]

- Kliegl R., Nuthmann A., & Engbert R. (2006). Tracking the mind during reading: The influence of past, present, and future words on fixation durations. Journal of Experimental Psychology. General, 135(1), 12–35. 10.1037/0096-3445.135.1.12 [DOI] [PubMed] [Google Scholar]

- Vitu F., McConkie G. W., Kerr P., & O’Regan J. K. (2001). Fixation location effects on fixation durations during reading: An inverted optimal viewing position effect. Vision Research, 41(25-26), 3513–3533. 10.1016/S0042-6989(01)00166-3 [DOI] [PubMed] [Google Scholar]

- Quinlivan B., Butler J. S., Beiser I., Williams L., McGovern E., O’Riordan S., et al. Reilly R. B. (2016). Application of virtual reality head mounted display for investigation of movement: A novel effect of orientation of attention. Journal of Neural Engineering, 13(5), 056006. 10.1088/1741-2560/13/5/056006 [DOI] [PubMed] [Google Scholar]

- Pfeiffer T., & Memili C. (2016). Model-based real-time visualization of realistic three-dimensional heat maps for mobile eye tracking and eye tracking in virtual reality. Paper presented at the Proceedings of the Ninth Biennial ACM Symposium on Eye Tracking Research & Applications, Charleston, South Carolina. 10.1145/2857491.2857541 [DOI] [Google Scholar]

- Duchowski A. T., Shivashankaraiah V., Rawls T., Gramopadhye A. K., Melloy B. J., & Kanki B. (2000). Binocular eye tracking in virtual reality for inspection training. Paper presented at the Proceedings of the 2000 symposium on Eye tracking research & applications, Palm Beach Gardens, Florida, USA. [Google Scholar]

- Tanriverdi V., & Jacob R. J. K. (2000). Interacting with eye movements in virtual environments. Paper presented at the Proceedings of the SIGCHI conference on Human Factors in Computing Systems, The Hague, The Netherlands 10.1145/332040.332443 [DOI] [Google Scholar]

- Boukhalfi T., Joyal C., Bouchard S., Neveu S. M., & Renaud P. (2015). Tools and Techniques for Real-time Data Acquisition and Analysis in Brain Computer Interface studies using qEEG and Eye Tracking in Virtual Reality Environment. IFAC-PapersOnLine, 48(3), 46–51. 10.1016/j.ifacol.2015.06.056 [DOI] [Google Scholar]

- Krejtz K., Biele C., Chrzastowski D., Kopacz A., Niedzielska A., Toczyski P., & Duchowski A. (2014). Gaze-controlled gaming: immersive and difficult but not cognitively overloading. Paper presented at the Proceedings of the 2014 ACM International Joint Conference on Pervasive and Ubiquitous Computing: Adjunct Publication. 10.1145/2638728.2641690 [DOI]

- Bulling A., & Gellersen H. (2010). Toward mobile eye-based human-computer interaction. IEEE Pervasive Computing, 9(4), 8–12. 10.1109/MPRV.2010.86 [DOI] [Google Scholar]

- Kassner M., Patera W., & Bulling A. (2014). Pupil: an open source platform for pervasive eye tracking and mobile gaze-based interaction. Paper presented at the Proceedings of the 2014 ACM International Joint Conference on Pervasive and Ubiquitous Computing: Adjunct Publication. 10.1145/2638728.2641695 [DOI]

- DiScenna A. O., Das V., Zivotofsky A. Z., Seidman S. H., & Leigh R. J. (1995). Evaluation of a video tracking device for measurement of horizontal and vertical eye rotations during locomotion. Journal of Neuroscience Methods, 58(1-2), 89–94. 10.1016/0165-0270(94)00162-A [DOI] [PubMed] [Google Scholar]