Abstract

Background and Objectives

Limited information is currently available on the processing of speech stimuli at the subcortical level in cochlear implant (CI) recipients. Speech processing at the brainstem level can be measured using the speech-auditory brainstem response (S-ABR). The purpose of the present study was to measure the S-ABR components under sound-field presentation in CI recipients and to compare them with those of normal hearing (NH) children.

Subjects and Methods

In this descriptive-analytical study, participants were divided into two groups: children with CIs and NH children. The CI group consisted of 20 children with prelingual hearing impairment (mean age=8.90±0.79 years) and a unilateral CI (right side). The control group consisted of 20 healthy NH children with a comparable age and sex distribution. The S-ABR was evoked by a 40-ms synthesized /da/ syllable presented in the sound field.

Results

The sound-field S-ABR latencies were significantly longer in the CI recipients than in the NH group. In addition, the frequency following response peak amplitude was significantly higher in the CI recipients than in their NH counterparts (p<0.05). Finally, neural phase locking was significantly lower in the CI recipients (p<0.05).

Conclusions

The sound-field S-ABR findings demonstrated that CI recipients have neural encoding deficits in the temporal and spectral domains at the brainstem level; therefore, the sound-field S-ABR can be considered an efficient clinical procedure for assessing speech processing in CI recipients.

Keywords: Cochlear implants, Auditory brainstem evoked response, Speech perception

Introduction

The cochlear implant (CI) is one of the greatest advances in medical science and an appropriate treatment option for patients with severe to profound hearing loss [1]. Unlike conventional amplification devices such as hearing aids, a CI directly stimulates the auditory nerve fibers, bypassing the damaged sensory cells of the cochlea [2]. It is believed that the central auditory system of CI recipients differs from that of people with normal hearing (NH) because of factors such as auditory sensory deprivation, the lack of cochlear processing, and the different mode of stimulation in these individuals [3]. The main function of the central auditory system is the neural encoding of speech stimuli [4]. Within the central auditory pathways, the brainstem plays a significant role in speech comprehension, reading, and access to phonological information. Therefore, investigating the brainstem with real-life signals such as speech can help identify its function and relate its role to acoustic stimulus processing and speech understanding in CI recipients [5]. Auditory evoked potentials (AEPs) are used to evaluate neural encoding at different levels of the central auditory system. The speech-auditory brainstem response (S-ABR) is an AEP that reflects the processing of some important speech features at the brainstem level [6]. Speech stimuli have many acoustic parameters that vary over time. In addition, speech has been identified as the only stimulus that describes how the auditory system responds to speech. Therefore, investigating the processing of speech stimuli at the brainstem level is important. The S-ABR is an important modality for assessing brainstem behavior during speech processing, and it has been used to investigate learning disability, developmental plasticity, and language processing skills [7].

Many authors have reported that the S-ABR is a useful method for evaluating speech processing at the brainstem level in conditions such as unilateral hearing loss and stuttering, and in hearing aid users [8-10]. Rocha-Muniz, et al. [11] used the S-ABR to investigate children with central auditory processing disorder and language impairment. They reported that the S-ABR was useful in identifying auditory processing disorders and language deficits. Their results suggest that speech stimuli can be used clinically to assess central auditory function and to provide additional information for the diagnosis of language disorders and auditory processing deficits. Sinha and Basavaraj [7] reported that the S-ABR could be applied in the diagnosis and categorization of children with learning disabilities. Moreover, they suggested that the test could be applied in other groups, for example to study the effect of age on hearing and to monitor hearing aid and CI users. Elkabariti, et al. [12] investigated the speech-evoked ABR in children with epilepsy and in healthy NH children. Their findings reflected abnormal neural encoding of speech at the level of the brainstem. They reported that the younger the epileptic child, the more prolonged the wave A latency, with increased V/A inter-latency values. They concluded that the abnormal response in the epileptic group could be detected only with the speech stimulus, and not with the click stimulus [12].

Gabr and Hassaan [13] measured the S-ABR in 20 children with unilateral CIs. They concluded that the S-ABR is a new clinical tool that can identify the role of the brainstem in speech sound processing, which contributes to auditory cortical processing [13].

At present, there is inadequate information on the processing of speech stimuli at the subcortical level in CI recipients. Knowledge of brainstem processing can also be used to understand the effect of the CI on speech processing. We hypothesized that CI recipients have temporal and spectral auditory dysfunction in at least part of the auditory pathway; therefore, we aimed to measure speech processing at the brainstem level using the sound-field S-ABR in CI recipients and NH children.

Subjects and Methods

Participants

The present study was descriptive-analytical in nature. Study participants were divided into two groups. Group 1 (CI) consisted of 20 children with prelingual hearing impairment, aged 8 to 10 years [mean age=8.9 years; standard deviation (SD)=0.79 years]. All patients had profound bilateral hearing loss and were fitted with a unilateral CI in the right ear. Group 2 consisted of 20 NH children of comparable age (mean age=8.6 years; SD=0.8 years).

Testing was conducted in the afternoon over a 6-month period, from December 2017 to May 2018, at the School of Rehabilitation Sciences of Iran University of Medical Sciences. The study protocol was approved by the Ethics Committee of Iran University of Medical Sciences (IUMS; IR.IUMS.REC.1397.592), in accordance with the 1975 Declaration of Helsinki and its later amendments. The parents of all participants provided written informed consent.

The means and SDs of the pure tone thresholds averaged across 0.25, 0.5, 1, 2, and 4 kHz were 24.77±7.37 dB hearing level (HL) and 12.48±7.54 dB HL in CI users and NH children, respectively. The mean and SD of the audiometric thresholds (dB HL) at the five test frequencies in CI recipients and NH children are reported in Table 1.

Table 1.

Mean and standard deviation of the audiometric threshold (dB HL) in CI recipients and NH children

| Frequency (Hz) | CI Mean | CI SD | NH Mean | NH SD |
|---|---|---|---|---|
| 250 | 31.54 | 8.24 | 18.22 | 7.67 |
| 500 | 28.66 | 6.11 | 16.00 | 8.63 |
| 1,000 | 22.33 | 6.51 | 9.54 | 9.02 |
| 2,000 | 20.33 | 7.18 | 9.66 | 7.97 |
| 4,000 | 21.00 | 8.83 | 8.67 | 4.41 |

CI: cochlear implant, NH: normal hearing, SD: standard deviation

The demographics of the CI recipients, including chronological age, sex, age at implantation, duration of CI use, age at which the profound hearing loss was identified, implanted ear, implanted device, speech processor, and processing strategy, are presented in Table 2.

Table 2.

Demographic information of 20 CI recipients

| Case No. | Sex | Chronological age (mon) | Age at CI (mon) | Duration of CI use (mon) | Age at HL diagnosis (mon) | Ear implanted | Implanted device | Speech processor | Strategy |
|---|---|---|---|---|---|---|---|---|---|
| 1 | M | 118 | 36 | 82 | 12 | Right | CI 24RE | Freedom | ACE |
| 2 | M | 110 | 60 | 49 | 14 | Right | CI 24RE | Freedom | ACE |
| 3 | M | 97 | 42 | 55 | 18 | Right | CI 24RE | Freedom | ACE |
| 4 | F | 99 | 56 | 43 | 18 | Right | CI 24RE | Freedom | ACE |
| 5 | F | 100 | 43 | 57 | 12 | Right | CI 24RE | Freedom | ACE |
| 6 | M | 103 | 42 | 61 | 15 | Right | CI 24RE | Freedom | ACE |
| 7 | F | 104 | 39 | 65 | 9 | Right | CI 24RE | Freedom | ACE |
| 8 | M | 117 | 61 | 52 | 15 | Right | CI 24RE | Freedom | ACE |
| 9 | M | 98 | 44 | 52 | 16 | Right | CI 24RE | Freedom | ACE |
| 10 | F | 106 | 62 | 60 | 12 | Right | CI 24RE | Freedom | ACE |
| 11 | F | 99 | 46 | 53 | 14 | Right | CI 24RE | Freedom | ACE |
| 12 | F | 107 | 55 | 52 | 19 | Right | CI 24RE | Freedom | ACE |
| 13 | M | 100 | 58 | 42 | 16 | Right | CI 24RE | Freedom | ACE |
| 14 | F | 113 | 60 | 52 | 24 | Right | CI 24RE | Freedom | ACE |
| 15 | M | 118 | 62 | 55 | 26 | Right | CI 24RE | Freedom | ACE |
| 16 | F | 96 | 45 | 51 | 15 | Right | CI 24RE | Freedom | ACE |
| 17 | M | 114 | 64 | 50 | 15 | Right | CI 24RE | Freedom | ACE |
| 18 | M | 117 | 54 | 62 | 16 | Right | CI 24RE | Freedom | ACE |
| 19 | M | 98 | 37 | 61 | 9 | Right | CI 24RE | Freedom | ACE |
| 20 | M | 102 | 38 | 62 | 10 | Right | CI 24RE | Freedom | ACE |

CI: cochlear implant, HL: hearing loss, mon: months, F: female, M: male, ACE: Advanced Combination Encoder

The children in the NH group were selected from schools in Tehran province, Iran, based on the following inclusion criteria: Persian-monolingual, normal middle ear function, air conduction thresholds lower than 20 dB HL at octave frequencies from 250 to 4,000 Hz, right-handedness, and no history of hearing, learning, neurologic, speech, language, or cognitive problems.

CI recipients were randomly selected from the Amir-Alam Cochlear Implant Center in Tehran province, Iran. The inclusion criteria were as follows: Persian-monolingual, profound bilateral sensorineural hearing loss before implantation, prelingual hearing loss, use of hearing aids for at least 6 months before CI, auditory training before implantation with no improvement in speech comprehension, unilateral right-ear implantation with the Nucleus CI24RE system, Freedom speech processor, Advanced Combination Encoder (ACE) processing strategy, contour electrode array, and at least 4 years of CI experience. Exclusion criteria for all participants were general health problems, non-cooperation, and unwillingness to undergo the tests at any stage of the research.

Experimental procedures

After the medical history was taken, otoscopy and admittance measurements were performed to identify external and middle ear disorders; at this stage, any participant with an infection or any other conductive problem was excluded from the study.

Neural response telemetry and electrode impedance were measured, and the accuracy of the CI maps was verified. Prior to presentation of the S-ABR stimuli, the participants were asked to set their CI to their everyday program, with the best sensitivity and volume settings, which were checked by the experimenter. The pre-processing options were disabled during the test to control for possible confounding effects on the test signals. To ensure that the participants could hear the test stimuli, and to check the integrity, uniformity, and connectivity of the external hardware (ABR hardware and speech processor) with the internal hardware, participants were asked to repeat the stimulus /da/, presented in the sound field, before the S-ABR test was administered. The S-ABR stimulus /da/ was generated by the Biologic Navigator Pro device and delivered to participants through sound-field presentation. Only repeatable traces were included in the analysis for both groups.

Since all the experiments were performed in the sound field, the device and the loudspeaker were calibrated before the tests were conducted. A sound level meter (B&K model 2209) was used to calibrate the loudspeaker output. The tests were administered in an anechoic booth to reduce background noise and diffusion. The loudspeaker was placed at a 45-degree angle, 1 m from the participant's head, at ear level. In NH children, an earplug was inserted deeply into the left ear; together with the proximity of the loudspeaker to the right ear, this minimized the contribution of the non-test ear to the test results.

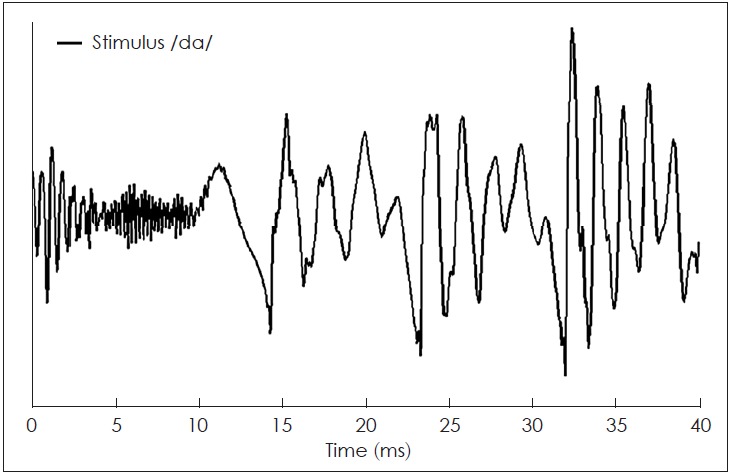

Sound-field S-ABR measures

The sound-field S-ABR test was performed for all participants. The responses were recorded using a Biologic Navigator Pro (Natus Medical Inc., San Carlos, CA, USA). The participants were asked to sit quietly on a chair facing a screen that displayed a silent animated movie, to keep them relaxed and to reduce physical movement. Electrodes were placed on the scalp to record the AEPs. The electrode sites were cleaned with a skin cleanser gel to reduce electrode impedance. Ag/AgCl electrodes were used, and throughout the test the electrode impedance was maintained at <5 kΩ, with an inter-electrode difference of <3 kΩ. For each participant, stimuli were presented through a loudspeaker at 50 dB sensation level (SL), with alternating polarity, at a rate of 9.1 per second. Responses were averaged over an epoch of 85.33 ms with a 15 ms pre-stimulus time, an online filter of 100-2,000 Hz, and 4,000 artifact-free sweeps. To control the artifact from the CI, the electrodes were placed as follows: vertex as non-inverting, contralateral earlobe as inverting (reference), and forehead (Fpz) as ground. The speech stimulus was a synthesized stop consonant /da/ with a duration of 40 ms, provided by the BioMARK module (Natus Medical Inc., San Carlos, CA, USA) (Fig. 1). This stimulus had three parts: first, the initial noise burst; second, a formant transition between the consonant and the vowel; and third, a steady-state vowel with a fundamental frequency (F0) that increased linearly from 103 to 125 Hz.
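The averaging step described above can be illustrated with a minimal sketch. It is not the algorithm of the recording device: the function name `average_sweeps`, the rejection threshold `reject_uv`, and the toy waveforms are assumptions made for illustration only. The sketch shows why averaging an equal number of sweeps of each stimulus polarity cancels components that follow the stimulus polarity (such as stimulus artifact) while preserving the polarity-independent neural response, and how the pre-stimulus interval can serve as a baseline.

```python
def average_sweeps(sweeps, n_prestim, reject_uv=25.0):
    """Average artifact-free sweeps and baseline-correct the result.

    sweeps    : list of equal-length lists of samples (microvolts); consecutive
                sweeps are assumed to alternate in stimulus polarity
    n_prestim : number of samples in the pre-stimulus interval
    reject_uv : hypothetical rejection threshold (absolute microvolts)
    Returns (averaged waveform, number of sweeps kept).
    """
    # Discard any sweep containing a sample beyond the rejection threshold.
    kept = [s for s in sweeps if max(abs(v) for v in s) <= reject_uv]
    if not kept:
        raise ValueError("all sweeps were rejected")
    n = len(kept[0])
    # Point-by-point mean across the kept sweeps.
    avg = [sum(s[i] for s in kept) / len(kept) for i in range(n)]
    # Subtract the mean of the pre-stimulus interval as the baseline.
    baseline = sum(avg[:n_prestim]) / n_prestim
    return [v - baseline for v in avg], len(kept)


# Toy data (made up): a polarity-independent response plus an artifact
# that flips sign with the stimulus polarity.
neural = [0.0, 0.0, 0.2, -0.3, 0.1]
artifact = [0.0, 0.0, 5.0, -5.0, 0.0]
sweeps = []
for k in range(8):
    sign = 1 if k % 2 == 0 else -1
    sweeps.append([n + sign * a for n, a in zip(neural, artifact)])
sweeps.append([100.0] * 5)  # simulated movement artifact, exceeds the threshold

avg, n_kept = average_sweeps(sweeps, n_prestim=2)
print(n_kept, [round(v, 3) for v in avg])
```

Note that the cancellation only holds when the kept sweeps are balanced across the two polarities, so a practical implementation would likely track rejections per polarity.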

Fig. 1.

Time-domain waveform of the synthesized stop consonant /da/ with a duration of 40 ms. This synthetic stimulus evokes seven peaks in the speech-auditory brainstem response, named V, A, C, D, E, F, and O.

In addition, this stimulus contained five formants. The first formant (F1) increased linearly from 220 to 720 Hz, while the second formant (F2) decreased from 1,700 to 1,240 Hz. The third formant (F3) decreased from 2,580 to 2,500 Hz, whereas the fourth (F4) and fifth (F5) formants remained constant at 3,600 and 4,500 Hz, respectively. The responses reflect neural events that are synchronously locked to acoustic elements of the speech stimulus. The sound-field S-ABR evoked by a consonant-vowel speech stimulus comprises seven peaks: the onset response (waves V and A), the consonant-to-vowel transition (wave C), the frequency following response (FFR; waves D, E, and F), and the offset response (wave O). Thus, seven waves can generally be detected, although some peaks may be obscured by noise. The FFR waves originate from the cumulative phase-locked activity of the brainstem, which follows the periodicity of the stimulus [14,15]. To analyze the sound-field S-ABR waveform, the latencies and amplitudes of all discrete peaks and the FFR components were documented. The FFR measures included the spectral amplitudes corresponding to the stimulus F0 and F1, and to the higher frequencies from the 7th to the 11th harmonics of F0 [high frequencies (HF)]. Several techniques were applied to analyze the spectral aspect of the responses (FFR), including root mean square amplitude, the amplitude of the spectral components corresponding to the stimulus F0, F1, and HF, and stimulus-to-response correlation.
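The three FFR measures named above (root mean square amplitude, spectral amplitude in the F0, F1, and HF ranges, and stimulus-to-response correlation) can be sketched as follows. This is an illustrative sketch in plain Python, not the MATLAB processing used in the study. The band limits are the ranges reported in the Results; the function names, the 1 Hz frequency step, the lag range, and the choice of analysis window are assumptions.

```python
import cmath
import math

# Analysis bands in Hz, taken from the ranges reported in the Results.
BANDS = {"F0": (80.0, 121.0), "F1": (454.0, 719.0), "HF": (721.0, 1155.0)}


def rms(x):
    """Root mean square amplitude of a waveform segment."""
    return math.sqrt(sum(v * v for v in x) / len(x))


def amplitude_at(x, fs, freq):
    """Single-sided spectral amplitude of x at one frequency (direct DFT sum)."""
    acc = sum(v * cmath.exp(-2j * math.pi * freq * k / fs) for k, v in enumerate(x))
    return 2.0 * abs(acc) / len(x)


def band_amplitude(x, fs, lo, hi, step=1.0):
    """Mean spectral amplitude between lo and hi Hz, sampled every `step` Hz."""
    n_steps = int((hi - lo) / step)
    freqs = [lo + i * step for i in range(n_steps + 1)]
    return sum(amplitude_at(x, fs, f) for f in freqs) / len(freqs)


def pearson(a, b):
    """Pearson correlation coefficient of two equal-length sequences."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    num = sum((p - ma) * (q - mb) for p, q in zip(a, b))
    den = math.sqrt(sum((p - ma) ** 2 for p in a) * sum((q - mb) ** 2 for q in b))
    return num / den


def stimulus_response_correlation(stim, resp, max_lag):
    """Largest Pearson r over response delays of 0..max_lag samples, and its lag.

    stim and resp are assumed to have the same length and sampling rate.
    """
    best_r, best_lag = -2.0, 0
    for lag in range(max_lag + 1):
        r = pearson(stim[: len(stim) - lag], resp[lag:])
        if r > best_r:
            best_r, best_lag = r, lag
    return best_r, best_lag
```

In use, the averaged response would first be cut to the FFR portion of the epoch, and `band_amplitude(segment, fs, *BANDS["F0"])` would then be computed for each band and each participant. The window limits and the sampling rate `fs` are not specified in the text and would have to be taken from the recording system.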

Statistical analysis

Data were analyzed using SPSS software version 18 (SPSS Inc., Chicago, USA). The level of significance was set at p<0.05. In addition, Matrix Laboratory (MATLAB) software version 2010 (The MathWorks, Inc., Natick, MA, USA) was used for sound-field S-ABR data processing. The mean and SD of the sound-field S-ABR measures were calculated for the two groups. The independent-samples t-test was applied to compare variables with a normal distribution, while the Kruskal-Wallis test was applied to variables with a non-normal distribution.
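As a rough illustration of the independent-samples comparison, the t statistic can be recomputed from summary statistics alone. The sketch below uses the wave V latency values reported in Table 3 and the group size of 20 per group. It assumes equal variances (pooled SD) and assumes that this variable was among those analyzed with the t-test; the function name and the tabulated critical value are introduced here for illustration and do not reproduce the SPSS output.

```python
import math


def pooled_t_from_summary(mean1, sd1, n1, mean2, sd2, n2):
    """Student's independent-samples t statistic from group means and SDs.

    Assumes equal variances (pooled SD).
    Returns (t, degrees of freedom, Cohen's d).
    """
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = math.sqrt(pooled_var * (1.0 / n1 + 1.0 / n2))
    t = (mean1 - mean2) / se
    d = (mean1 - mean2) / math.sqrt(pooled_var)
    return t, df, d


# Wave V latency (ms) from Table 3: CI 11.92 +/- 0.49, NH 9.51 +/- 0.30, n = 20 each.
t, df, d = pooled_t_from_summary(11.92, 0.49, 20, 9.51, 0.30, 20)

# Two-tailed critical value of t for df = 38 at alpha = 0.05 (tabulated, about 2.024).
T_CRIT_DF38 = 2.024
print(f"t({df}) = {t:.2f}, d = {d:.2f}, significant: {abs(t) > T_CRIT_DF38}")
```

For this row the statistic lies far beyond the critical value, which is consistent with the p<0.05 reported in Table 3.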

Results

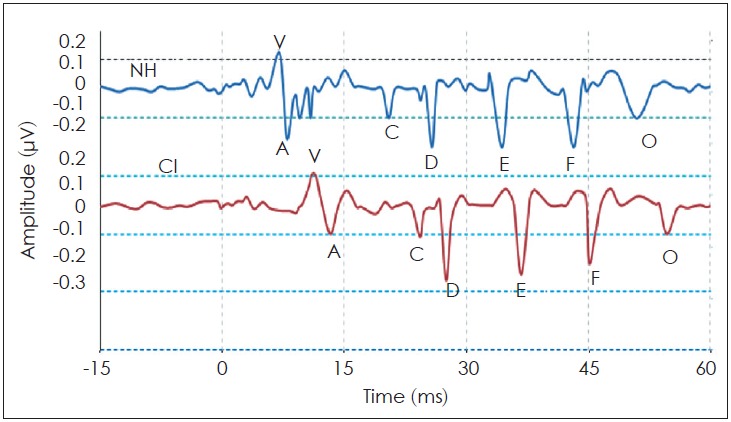

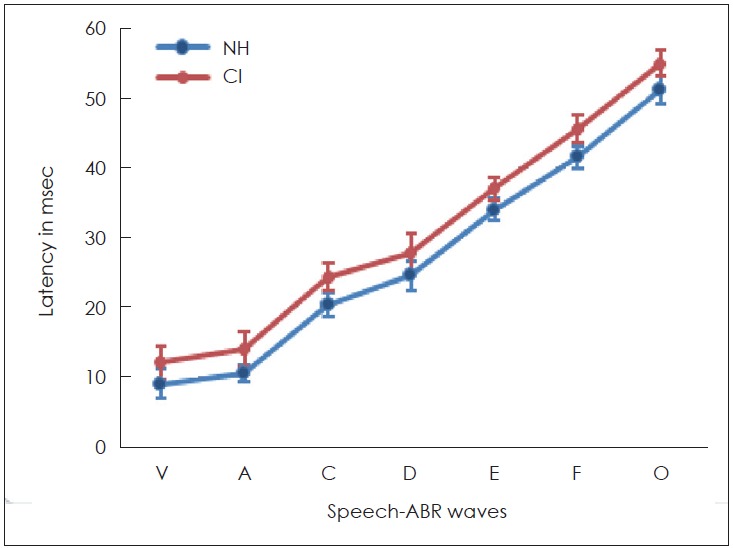

The sound-field S-ABR was recorded in both groups. Table 3 presents the mean, SD, and p-value of the latency, amplitude, and spectral measures of the sound-field S-ABR in all participants. The grand average sound-field S-ABR waveforms for both groups are presented in Fig. 2. CI recipients had significantly longer latencies for all peaks than NH children (p<0.05). These values are presented in Table 3 and Fig. 3.

Table 3.

The mean, SD, and p-values for latency, amplitude, and spectral measures in sound-field S-ABR in CI recipients and NH children

| Measure | NH Mean | NH SD | CI Mean | CI SD | p-value |
|---|---|---|---|---|---|
| Latency (ms) | | | | | |
| V | 9.51 | 0.30 | 11.92 | 0.49 | <0.05 |
| A | 10.91 | 0.31 | 13.43 | 0.32 | <0.05 |
| C | 20.42 | 0.98 | 24.32 | 0.41 | <0.05 |
| D | 25.27 | 1.26 | 27.71 | 0.58 | <0.05 |
| E | 34.54 | 0.91 | 36.52 | 0.87 | <0.05 |
| F | 42.93 | 0.95 | 45.64 | 1.03 | <0.05 |
| O | 51.15 | 0.62 | 54.16 | 0.48 | <0.05 |
| Amplitude (µV) | | | | | |
| V | 0.17 | 0.08 | 0.12 | 0.04 | <0.05 |
| A | -0.20 | 0.07 | -0.14 | 0.05 | <0.05 |
| C | -0.14 | 0.08 | -0.09 | 0.03 | <0.05 |
| D | -0.18 | 0.06 | -0.26 | 0.07 | <0.05 |
| E | -0.17 | 0.05 | -0.24 | 0.04 | <0.05 |
| F | -0.12 | 0.04 | -0.18 | 0.05 | <0.05 |
| O | -0.16 | 0.08 | -0.12 | 0.03 | <0.05 |
| Spectral magnitudes (µV) | | | | | |
| F0 | 9.89 | 4.03 | 7.04 | 2.44 | <0.05 |
| F1 | 4.58 | 1.44 | 2.25 | 1.01 | <0.05 |
| HF | 1.68 | 0.93 | 0.44 | 0.24 | <0.05 |

SD: standard deviation, S-ABR: speech-auditory brainstem response, NH: normal hearing, CI: cochlear implant

Fig. 2.

The grand average waveform of the sound-field speech-auditory brainstem response (S-ABR) in cochlear implant (CI) recipients and normal hearing (NH) children. The sound-field S-ABR waves in CI recipients are delayed compared with those in NH children.

Fig. 3.

Latencies of the sound-field speech-auditory brainstem response (S-ABR) in cochlear implant (CI) recipients and normal hearing (NH) children. The latencies of all sound-field S-ABR waves are longer in CI recipients than in NH children.

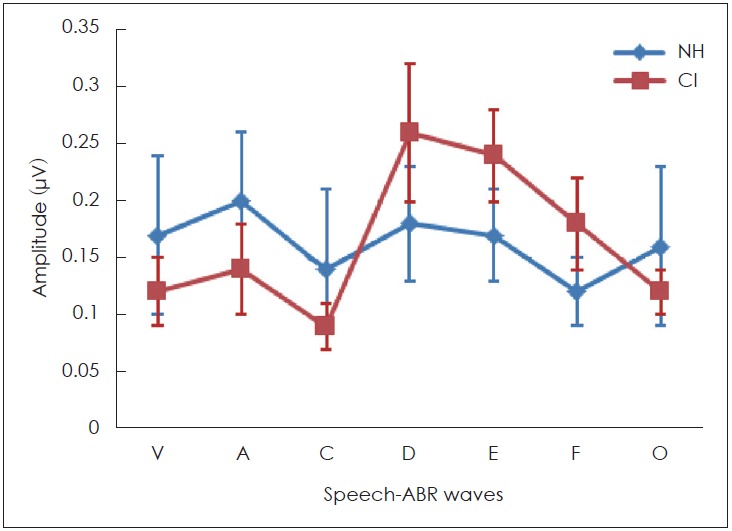

An important finding of this study was that the amplitude of the FFR waves (D, E, and F) of the sound-field S-ABR was significantly higher in the CI recipients than in the NH children (p<0.05). In contrast, the amplitudes of the onset (V and A) and offset (O) waves of the sound-field S-ABR were significantly lower in the CI group than in the NH group (p<0.05) (Fig. 4).

Fig. 4.

Amplitudes of the sound-field speech-auditory brainstem response (S-ABR) in cochlear implant (CI) recipients and normal hearing (NH) children. The amplitudes of the frequency following response waves (D, E, and F) are higher in CI recipients than in NH children.

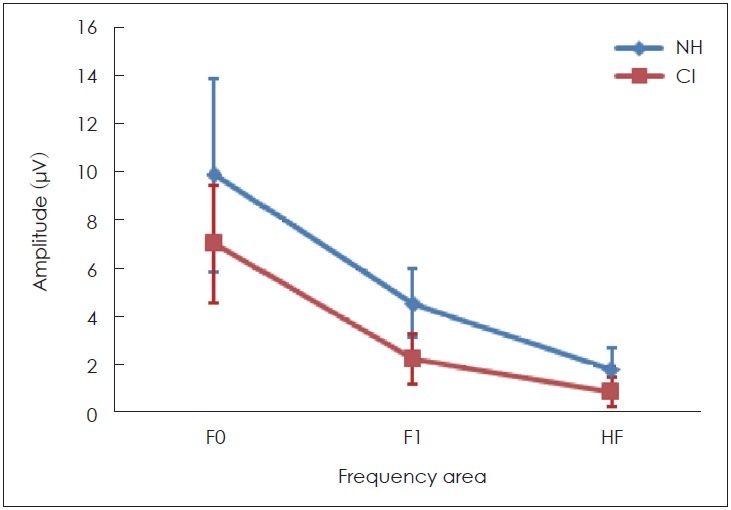

Fourier analysis was used to analyze the spectral amplitude at the fundamental frequency of the formant transition and at its harmonics, including F1 and HF. In the fast Fourier transform analysis of the sound-field S-ABR, the spectral amplitudes at F0 (80-121 Hz), F1 (454 to 719 Hz), and HF (721 to 1,155 Hz) differed significantly between the two groups. Table 3 and Fig. 5 present these measurements.

Fig. 5.

Spectral magnitudes of the sound-field speech-auditory brainstem response (S-ABR) in cochlear implant (CI) recipients and normal hearing (NH) children. The spectral amplitudes of the frequency following response are lower in CI recipients than in NH children. HF: high frequencies.

Discussion

This study investigated brainstem neural encoding using the S-ABR test in CI recipients and their NH counterparts under sound-field presentation. The main finding regarding the sound-field S-ABR was that the latencies of all waves were longer in the CI group than in the NH group. This finding is consistent with the study by Gabr and Hassaan [13], which demonstrated that the latencies of all waves were longer in children with CIs than in NH children. It is also in agreement with the study of Galbraith, et al. [16], whose results suggested that CI users may require a longer time to encode speech stimuli than non-CI users. Our sound-field S-ABR findings demonstrated statistically significant differences between the NH children and the CI recipients. Onset and offset response latencies in CI recipients were significantly longer than those in NH children. Previous studies indicated that a long time is needed for processing rapid acoustic stimuli, such as the transient component of the /da/ stimulus, in CI recipients [11,17,18]. This finding is consistent with Nada et al. [6], who reported a significant delay in the initial wave latencies of the sound-field S-ABR in sensorineural hearing loss. These findings could be explained by several factors, such as the time CI recipients require to process acoustic signals, a deficit in the synchrony of the brainstem response to speech stimuli, degraded sound perception, limited language ability, or a combination of these factors [19].

Interestingly, the results of this study demonstrated that the amplitudes of some sound-field S-ABR peaks were larger in the CI recipients than in the NH children. As mentioned earlier, the sound-field S-ABR includes two main portions: the aperiodic portion and the periodic portion, or FFR. The amplitude of the FFR component was higher in the CI group than in the NH group; however, the amplitude of the aperiodic component was lower in the CI group than in the NH group. These results are in agreement with a previous study, which reported that the amplitude of the aperiodic portion of the S-ABR in CI recipients was lower than that of the FFR; however, that study was conducted in two groups of CI recipients, and no comparison with a normal group was performed [13]. The amplitude values in the two studies were not similar. The factors that may account for this discrepancy are the background noise, the age of the participants, and the different CI devices used [13]. The amplitudes of the onset and offset responses of the sound-field S-ABR were higher in the NH group than in the CI group. These results indicate that the response of CI recipients to fast and short stimuli, such as the transient component of speech stimuli, was inadequate, resulting in reduced response amplitude. Many factors may explain the increased FFR amplitude in CI recipients; we consider the most likely to be that the electrical current directly excites the auditory nerve, so that the compressive function of the basilar membrane is bypassed. Moreover, electrical stimulation does not involve neurotransmitter release; consequently, the spike rate of the first-order auditory neurons may be increased, and the maximum rate evoked by electrical stimulation is greater than that evoked by acoustical stimulation [20,21].

Another important finding was obtained from the fast Fourier transform analysis in the spectral domain. This study indicated that not only the FFR component but also the aperiodic component of the S-ABR could be recorded under sound-field presentation. The presence of the sound-field S-ABR in CI recipients demonstrated that speech processing generally exists at the brainstem level, albeit with differences from normal individuals. This is consistent with the report that CI devices lead to improved neural conduction velocity and neural synchrony [13]. This finding is in agreement with the results of the study by Gama, et al. [22], which was conducted on 23 participants with NH to record the FFR under sound-field presentation. They demonstrated that FFR waveforms could be reliably elicited in the NH group and the CI group in the sound field, comparable with close-field presentation [22]. In this analysis, the sine-wave magnitude corresponds to the energy level at each frequency of the complex sound. Spectral analysis was used to measure phase locking at F0, F1, and HF. Analysis of the sound-field S-ABR in the spectral domain indicated that the total activity around F0, F1, and HF was greater in NH children than in CI recipients. The results indicated that the precision and magnitude of neural phase locking in the F0, F1, and HF ranges were lower in CI recipients. The F0 plays an essential role in perceiving the pitch of a person's voice and is a low-frequency component of the speech sound; consequently, recognition of the speaker and of the emotional tone of the voice depends on F0 encoding [23].

Furthermore, F1 and HF are series of discrete peaks in the formant structure that are responsible for vowel perception and provide information regarding the phonetics of the stimulus; therefore, they could play a significant role in the different spectral processing of speech signals at the brainstem level in CI recipients and normal individuals [24]. As mentioned earlier, speech perception in CI recipients is weaker than in their NH counterparts; in this study, we found that the F0, F1, and HF amplitudes at the subcortical level were lower in CI recipients than in NH children. Consequently, this study provides physiological evidence for weak speech perception abilities, such as identifying the speaker and the emotional tone of the voice, and for poor performance in perceiving vowel and phonetic information. These results are in agreement with the findings of Rocha-Muniz, et al. [11], who demonstrated that the spectral amplitude of the response to speech sound was decreased in auditory processing disorder and language impairment.

In summary, these findings demonstrate that the sound-field S-ABR latencies were delayed in CI recipients compared with NH children. The amplitude of the FFR component of the sound-field S-ABR was higher in the CI group than in the NH group, whereas this increase was not observed in the aperiodic component. Spectral analysis of the sound-field S-ABR indicated that CI recipients have neural encoding deficits for timing features relative to NH children. The decrease in the F0, F1, and HF spectral amplitudes may result in weak speech processing performance in CI recipients. The findings of this study add valuable information regarding brainstem auditory processing in children with CIs and their NH counterparts under sound-field presentation.

In the current study, the sound-field S-ABR findings demonstrated that CI recipients had neural encoding deficits in the temporal and spectral domains at the brainstem level relative to NH children. Therefore, the sound-field S-ABR, as an objective, non-invasive, and efficient clinical procedure, is useful for assessing speech processing at the brainstem level in CI recipients. Future research is warranted to measure the S-ABR in CI recipients with different speech recognition abilities.

Footnotes

Conflicts of interest

The authors have no financial conflicts of interest.

Authors’ contribution

Conceptualization: Farnoush Jarollahi, Ayub Valadbeigi, and Bahram Jalaei. Data curation: Ayub Valadbeigi, Bahram Jalaei, and Masoud Motasaddi Zarandy. Formal analysis: Ayub Valadbeigi, Bahram Jalaei, Zahra Shirzhiyan, and Hamid Haghani. Funding acquisition: Farnoush Jarollahi and Masoud Motasaddi Zarandy. Investigation: Ayub Valadbeigi, Farnoush Jarollahi, and Hamid Haghani. Methodology: Ayub Valadbeigi, Mohammad Maarefvand, and Bahram Jalaei. Project administration: Farnoush Jarollahi and Ayub Valadbeigi. Resources: Farnoush Jarollahi and Masoud Motasaddi Zarandy. Software: Bahram Jalaei, Mohammad Maarefvand, and Zahra Shirzhiyan. Supervision: Farnoush Jarollahi and Ayub Valadbeigi. Validation: Bahram Jalaei, Mohammad Maarefvand, Hamid Haghani, and Zahra Shirzhiyan. Visualization: Ayub Valadbeigi, Bahram Jalaei, Mohammad Maarefvand, and Hamid Haghani. Writing—original draft: Ayub Valadbeigi, Farnoush Jarollahi, and Bahram Jalaei. Writing—review & editing: Farnoush Jarollahi, Ayub Valadbeigi, and Mohammad Maarefvand.

REFERENCES

1. Taitelbaum-Swead R, Fostick L. Audio-visual speech perception in noise: implanted children and young adults versus normal hearing peers. Int J Pediatr Otorhinolaryngol. 2017;92:146–50. doi: 10.1016/j.ijporl.2016.11.022.

2. Harris MS, Kronenberger WG, Gao S, Hoen HM, Miyamoto RT, Pisoni DB. Verbal short-term memory development and spoken language outcomes in deaf children with cochlear implants. Ear Hear. 2013;34:179–92. doi: 10.1097/AUD.0b013e318269ce50.

3. Abdelsalam NMS, Afifi PO. Electric auditory brainstem response (E-ABR) in cochlear implant children: effect of age at implantation and duration of implant use. Egyptian Journal of Ear, Nose, Throat and Allied Sciences. 2015;16:145–50.

4. Russo N, Nicol T, Musacchia G, Kraus N. Brainstem responses to speech syllables. Clin Neurophysiol. 2004;115:2021–30. doi: 10.1016/j.clinph.2004.04.003.

5. Heman-Ackah SE, Roland JT, Jr, Haynes DS, Waltzman SB. Pediatric cochlear implantation: candidacy evaluation, medical and surgical considerations, and expanding criteria. Otolaryngol Clin North Am. 2012;45:41–67. doi: 10.1016/j.otc.2011.08.016.

6. Nada NM, Kolkaila EA, Gabr TA, El-Mahallawi TH. Speech auditory brainstem response audiometry in adults with sensorineural hearing loss. Egyptian Journal of Ear, Nose, Throat and Allied Sciences. 2016;17:87–94.

7. Sinha SK, Basavaraj V. Speech evoked auditory brainstem responses: a new tool to study brainstem encoding of speech sounds. Indian J Otolaryngol Head Neck Surg. 2010;62:395–9. doi: 10.1007/s12070-010-0100-y.

8. Bellier L, Veuillet E, Vesson JF, Bouchet P, Caclin A, Thai-Van H. Speech auditory brainstem response through hearing aid stimulation. Hear Res. 2015;325:49–54. doi: 10.1016/j.heares.2015.03.004.

9. Tahaei AA, Ashayeri H, Pourbakht A, Kamali M. Speech evoked auditory brainstem response in stuttering. Scientifica. 2014;2014:Article ID 328646. doi: 10.1155/2014/328646.

10. Carter ME. Speech-evoked auditory brainstem response in children with unilateral hearing loss. In: Washington University School of Medicine, editor. Independent Studies and Capstones. St. Louis: Washington University School of Medicine; 2013. p. 664.

11. Rocha-Muniz CN, Befi-Lopes DM, Schochat E. Investigation of auditory processing disorder and language impairment using the speech-evoked auditory brainstem response. Hear Res. 2012;294:143–52. doi: 10.1016/j.heares.2012.08.008.

12. Elkabariti RH, Khalil LH, Husein R, Talaat HS. Speech evoked auditory brainstem response findings in children with epilepsy. Int J Pediatr Otorhinolaryngol. 2014;78:1277–80. doi: 10.1016/j.ijporl.2014.05.010.

13. Gabr TA, Hassaan MR. Speech processing in children with cochlear implant. Int J Pediatr Otorhinolaryngol. 2015;79:2028–34. doi: 10.1016/j.ijporl.2015.09.002.

14. Akhoun I, Moulin A, Jeanvoine A, Ménard M, Buret F, Vollaire C, et al. Speech auditory brainstem response (speech ABR) characteristics depending on recording conditions, and hearing status: an experimental parametric study. J Neurosci Methods. 2008;175:196–205. doi: 10.1016/j.jneumeth.2008.07.026.

15. Hornickel J, Knowles E, Kraus N. Test-retest consistency of speech-evoked auditory brainstem responses in typically-developing children. Hear Res. 2012;284:52–8. doi: 10.1016/j.heares.2011.12.005.

16. Galbraith GC, Amaya EM, de Rivera JM, Donan NM, Duong MT, Hsu JN, et al. Brain stem evoked response to forward and reversed speech in humans. Neuroreport. 2004;15:2057–60. doi: 10.1097/00001756-200409150-00012.

17. Johnson KL, Nicol TG, Kraus N. Brain stem response to speech: a biological marker of auditory processing. Ear Hear. 2005;26:424–34. doi: 10.1097/01.aud.0000179687.71662.6e.

18. Song JH, Banai K, Russo NM, Kraus N. On the relationship between speech- and nonspeech-evoked auditory brainstem responses. Audiology and Neurotology. 2006;11:233–41. doi: 10.1159/000093058.

19. Kraus N, Banai K. Auditory-processing malleability: focus on language and music. Current Directions in Psychological Science. 2007;16:105–10.

20. Kiang NY, Moxon EC. Physiological considerations in artificial stimulation of the inner ear. Ann Otol Rhinol Laryngol. 1972;81:714–30. doi: 10.1177/000348947208100513.

21. Javel E, Viemeister NF. Stochastic properties of cat auditory nerve responses to electric and acoustic stimuli and application to intensity discrimination. J Acoust Soc Am. 2000;107:908–21. doi: 10.1121/1.428269.

22. Gama N, Peretz I, Lehmann A. Recording the human brainstem frequency-following-response in the free-field. J Neurosci Methods. 2017;280:47–53. doi: 10.1016/j.jneumeth.2017.01.016.

23. Ahadi M, Pourbakht A, Jafari AH, Shirjian Z, Jafarpisheh AS. Gender disparity in subcortical encoding of binaurally presented speech stimuli: an auditory evoked potentials study. Auris Nasus Larynx. 2014;41:239–43. doi: 10.1016/j.anl.2013.10.010.

24. Skoe E, Kraus N. Auditory brain stem response to complex sounds: a tutorial. Ear Hear. 2010;31:302–24. doi: 10.1097/AUD.0b013e3181cdb272.