Abstract

Determining biomarkers for autism spectrum disorder (ASD) is crucial to understanding its mechanisms. Recently, deep learning methods have achieved success in classifying ASD using fMRI data. However, due to the black-box nature of most deep learning models, it is hard to perform biomarker selection and interpret model decisions. The recently proposed invertible networks can accurately reconstruct the input from its output, and have the potential to unravel the black-box representation. Therefore, we propose a novel method to classify ASD and identify biomarkers for ASD using the connectivity matrix calculated from fMRI as the input. Specifically, with invertible networks, we explicitly determine the decision boundary and the projection of data points onto the boundary. As with linear classifiers, the difference between a point and its projection onto the decision boundary can be viewed as the explanation. We then define the importance as the explanation weighted by the gradient of the prediction with respect to the input, and identify biomarkers based on this importance measure. We perform a regression task to further validate our biomarker selection: compared to using all edges in the connectivity matrix, using the top 10% most important edges yields a lower regression error on 6 different severity scores. Our experiments show that the invertible network is both effective at ASD classification and interpretable, allowing for discovery of reliable biomarkers.

Keywords: invertible network, ASD, biomarker, regression

1. Introduction

Autism spectrum disorder (ASD) is a neurodevelopmental disorder that affects social interaction and communication, yet the causes of ASD are still unknown [16]. Functional MRI (fMRI) measures contrast that depends on blood oxygenation [15] and thereby reflects brain activity, so it has the potential to help in understanding ASD.

Recent research efforts have applied machine learning and deep learning methods to fMRI data to classify ASD versus control groups [1,11] and predict treatment outcomes [19,18]. However, deep learning models are typically hard to interpret, and thus are difficult to use for identifying biomarkers for ASD.

Various methods have been proposed to interpret a deep neural network. Bach et al. proposed to assign the decision of a neural network to its input with layer-wise relevance propagation [2]; Mahendran et al. proposed to approximately invert the network to explain its decision [13]; Sundararajan et al. proposed integrated gradients to explain a model’s decision [17]. However, all these methods only generate an approximation to the inversion of neural networks, and cannot unravel the black-box representation of deep learning models.

The recently proposed invertible network can accurately reconstruct the input from its output [12,3]. Based on this property, we propose a novel method to interpret ASD classification on fMRI data. As shown in Fig. 1, an invertible network first maps data from the input domain (e.g. the connectivity matrix calculated from fMRI data) to the feature domain, then applies a fully-connected layer to classify ASD versus control subjects. Since a fully-connected layer is equivalent to a linear classifier in the feature domain, we can determine the decision boundary as a high-dimensional plane, and calculate the projection of a point onto the boundary. Since our network is invertible, we can invert the decision boundary from the feature domain to the input domain. As shown in Fig. 3, the difference between the input and its projection onto the decision boundary can be viewed as the explanation for the model’s decision. We applied the proposed method to ASD classification on the ABIDE dataset, achieving 71% accuracy, and then identified biomarkers for ASD based on the proposed interpretation method. We further validated the selected biomarkers (edges in the connectivity matrix as in Fig. 6 and ROIs as in Fig. 7) in a regression task: compared with using all edges, the selected edges generate more accurate predictions for ASD severity (Table 2).

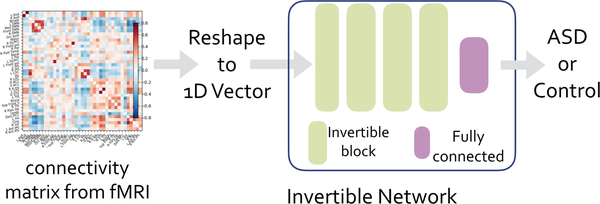

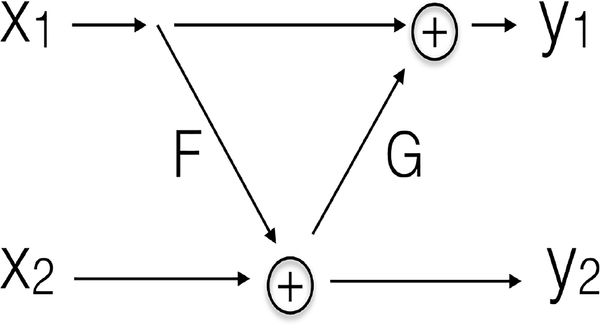

Fig. 1:

Classification pipeline for fMRI and structure of invertible network.

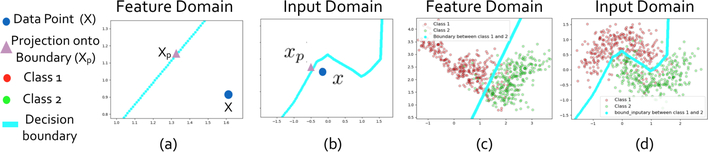

Fig. 3:

Illustration on a simulation dataset. Fig. (a): data point X and its projection Xp onto the decision boundary. In the feature domain the model is a linear classifier. Fig. (b) Corresponding points x and xp in the input domain, calculated as inversion of points from (a). Fig. (c) and (d): Points from two classes are sampled around two interleaving half circles. Decision boundary is a line in the feature domain (c), and is a curve in the input domain (d).

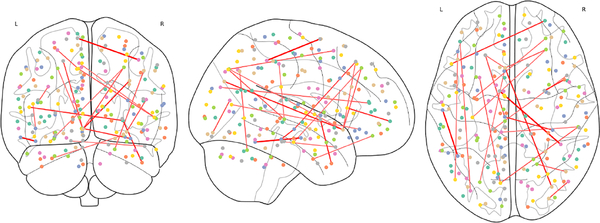

Fig. 6:

Top 20 connections selected by the proposed method.

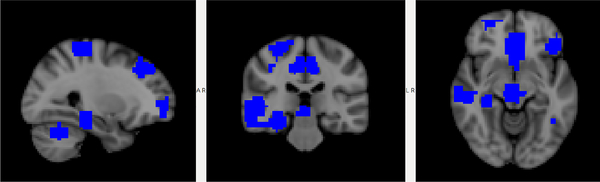

Fig. 7:

Top ROIs selected by the proposed method.

Table 2:

Regression results for ASD severity scores

| Metric | # Edges | Total | Awareness | Cognition | Comm | Motivation | Mannerism |
|---|---|---|---|---|---|---|---|
| MSE | 100% | 44.2 | 3.86 | 6.90 | 11.95 | 7.59 | 6.37 |
| MSE | RF(top 10%) | 71.8 | 8.2 | 12.6 | 22.1 | 12.7 | 11.8 |
| MSE | ours | 42.8 | 3.72 | 6.84 | 11.70 | 7.17 | 6.19 |
| Cor | 100% | 0.14 | 0.33 | 0.38 | 0.20 | −0.03 | 0.22 |
| Cor | RF(top 10%) | 0.09 | −0.19 | 0.01 | −0.01 | −0.12 | −0.08 |
| Cor | ours | 0.18 | 0.42 | 0.40 | 0.28 | 0.20 | 0.32 |

Our contributions can be summarized as:

- Based on the invertible network, we proposed a novel method to interpret model decisions and rank feature importance.

- We applied the proposed method to an ASD classification task, achieved a high classification accuracy (71% on the whole ABIDE dataset), and identified biomarkers.

- We demonstrated the effectiveness of the selected biomarkers in a regression task.

2. Methods

The classification pipeline is summarized in Fig. 1. In this section, we first discuss input data and pre-processing of fMRI, then introduce the structure of invertible networks as in Fig. 1. Next, we propose a novel method to determine the decision boundary and identify biomarkers for ASD.

2.1. Dataset and Inputs

The ABIDE dataset [9] consists of fMRI data for 530 subjects with ASD and 505 control subjects. We use data pre-processed with the Connectome Computation System (CCS) [20] pipeline, which includes registration, 4D global mean-based intensity normalization, nuisance correction and band-pass filtering. We extract the mean time series for each region of interest (ROI) defined by the CC200 atlas [8], which consists of 200 ROIs. We compute the Pearson correlation between the time series of every pair of ROIs, and reshape the connectivity matrix into a vector of length 200 × 199/2 = 19900. This vector is the input to the invertible network.
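The vectorization step can be illustrated with a short NumPy sketch. It is only an illustration under assumptions: the time series are synthetic random data, and the function name `connectivity_vector` and the choice of the strictly upper triangle (each ROI pair counted once) are ours, not taken from the CCS pipeline.

```python
import numpy as np

def connectivity_vector(ts: np.ndarray) -> np.ndarray:
    """ts has shape (n_rois, n_timepoints); returns one correlation per ROI pair."""
    corr = np.corrcoef(ts)                   # (n_rois, n_rois) Pearson correlation matrix
    iu = np.triu_indices(ts.shape[0], k=1)   # strictly upper triangle: each pair once
    return corr[iu]

rng = np.random.default_rng(0)
ts = rng.standard_normal((200, 150))  # synthetic stand-in for 200 ROI mean time series
vec = connectivity_vector(ts)
print(vec.shape)  # (19900,) since 200 * 199 / 2 = 19900
```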

2.2. Invertible Networks

The structure of the invertible network is shown in Fig. 1. An invertible network is composed of a stack of invertible blocks and a final fully-connected (FC) layer that performs classification. Note that inversion does not update parameters, and is therefore different from backpropagation; invertible networks can be trained with backpropagation in the same way as ordinary networks.

Invertible Blocks

An invertible block is shown in Fig. 2, where the input is split by channel into two parts x1 and x2, and the outputs are denoted as y1 and y2. Feature maps x1, x2, y1, y2 have the same shape. F and G are non-linear functions with parameters to learn: for 1D input, F and G can be a sequence of FC layers, 1D batch normalization layers and activation layers; for 2D input, F and G can be a sequence of convolutional layers, 2D batch normalization layers and activation layers. F and G are required to generate outputs of the same shape as input. The invertible block can accurately recover the input from its output, where the forward pass and inverse of an invertible block are:

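$$
\begin{cases} y_2 = x_2 + F(x_1) \\ y_1 = x_1 + G(y_2) \end{cases}
\qquad
\begin{cases} x_1 = y_1 - G(y_2) \\ x_2 = y_2 - F(x_1) \end{cases}
\tag{1}
$$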

Fig. 2:

Structure of invertible blocks.
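As a minimal sketch of how such a block can be inverted exactly, the NumPy snippet below assumes an additive coupling of the form y2 = x2 + F(x1), y1 = x1 + G(y2). The toy sizes, the fixed random weights and the helper name `make_fc_relu_fc_relu` are illustrative assumptions, not the trained model.

```python
import numpy as np

rng = np.random.default_rng(0)
d, h = 8, 4  # toy sizes for illustration only

def make_fc_relu_fc_relu(d, h, rng):
    """Return a toy FC-ReLU-FC-ReLU map from R^d to R^d with fixed random weights."""
    W1 = rng.standard_normal((h, d))
    W2 = rng.standard_normal((d, h))
    return lambda v: np.maximum(W2 @ np.maximum(W1 @ v, 0.0), 0.0)

F = make_fc_relu_fc_relu(d, h, rng)
G = make_fc_relu_fc_relu(d, h, rng)

def forward(x1, x2):
    y2 = x2 + F(x1)      # x1 passes through F and is added to x2
    y1 = x1 + G(y2)      # the new y2 passes through G and is added to x1
    return y1, y2

def inverse(y1, y2):
    x1 = y1 - G(y2)      # G(y2) can be recomputed from the output alone
    x2 = y2 - F(x1)      # once x1 is known, F(x1) can be subtracted
    return x1, x2

x1, x2 = rng.standard_normal(d), rng.standard_normal(d)
r1, r2 = inverse(*forward(x1, x2))
print(np.allclose(r1, x1), np.allclose(r2, x2))
```

Note that F and G themselves never need to be inverted: the inverse pass only re-evaluates them, which is why arbitrary non-linear layers can be used.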

Notations

The invertible network classifier can be viewed as a 2-stage model:

$$
f(x) = C \circ T(x) = C\big(T(x)\big) \tag{2}
$$

where T is the invertible transform and C is the final FC layer, which is a linear classifier. The input domain is the space of inputs x (e.g. the connectivity matrix from fMRI reshaped to a 1D vector), and the feature domain is the space of transformed points X = T(x). A data point is mapped from the input domain to the feature domain by the invertible transform T, then the linear classifier C is applied.

2.3. Model Decision Interpretation and Biomarker Selection

In this section we propose a novel method to interpret decisions of an invertible network classifier. We begin with a linear classifier, then generalize to non-linear classifiers such as neural networks. An example is shown in Fig. 3.

Interpret Decision of a Linear Classifier

A linear classifier is calculated as:

$$
f(x) = \langle w, x \rangle + b \tag{3}
$$

where w is the weight vector, and b is the bias. The decision boundary is a high dimensional plane, and can be denoted as {x : ⟨w, x⟩ + b = 0}.

For a data point x, we calculate its projection onto the decision boundary as

$$
x_p = x - \frac{\langle w, x \rangle + b}{\lVert w \rVert^2}\, w \tag{4}
$$

and define the explanation and importance as:

$$
\text{explanation} = x - x_p, \qquad \text{importance} = \lvert w \otimes (x - x_p) \rvert \tag{5}
$$

where ⊗ is element-wise product, the difference x−xp can be viewed as the explanation for the linear classifier, and the importance of each input dimension is defined as the absolute value of the explanation weighted by w. An example is shown in Fig. 3(a).
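A small worked example may help make the linear case concrete. The sketch below assumes the standard orthogonal projection onto the hyperplane ⟨w, x⟩ + b = 0 and uses a hypothetical 2D classifier whose boundary is the line y = −x; the function name `project_onto_boundary` is ours.

```python
import numpy as np

def project_onto_boundary(x, w, b):
    """Orthogonal projection of x onto the hyperplane <w, x> + b = 0."""
    return x - (np.dot(w, x) + b) / np.dot(w, w) * w

w = np.array([1.0, 1.0])   # boundary is the line y = -x
b = 0.0
x = np.array([2.0, 1.0])

x_p = project_onto_boundary(x, w, b)    # [0.5, -0.5]
explanation = x - x_p                   # [1.5, 1.5]
importance = np.abs(w * explanation)    # element-wise product, then absolute value

print(x_p, explanation, importance)
print(np.dot(w, x_p) + b)               # 0.0: the projection lies on the boundary
```

Here both coordinates receive the same importance, which matches the intuition that both axes matter equally when the classes are separated by y = −x.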

Determine Projection onto Decision Boundary of Invertible Networks

As in Equation 2, a point x in the input domain is mapped to the feature domain, denoted as X = T(x). Since the classifier is linear in the feature domain, we can calculate the projection of X onto the boundary as in Equation 4, denoted as Xp. Note that since T is invertible, we can map Xp back to the input domain, denoted as xp = T⁻¹(Xp), where T⁻¹ is the inverse operation of T as in Equation 1. Furthermore, we can invert the decision boundary from the feature domain to the input domain.

An example is shown in Fig. 3. Fig. 3(a) shows a data point X and its projection Xp in the feature domain, Fig. 3(b) inverts the projection back to the input domain. Fig. 3(c) and (d) show the decision boundary in the feature and input domain, respectively.
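The procedure of mapping to the feature domain, projecting, and inverting can be sketched on a toy 2D problem. Everything concrete here is an assumption made for illustration: T is a single additive coupling block with hand-picked non-linearities F and G, and the weights w and bias b of the linear classifier are arbitrary.

```python
import numpy as np

# Hypothetical fixed non-linearities standing in for learned layers.
F = lambda a: np.tanh(2.0 * a)
G = lambda a: 0.5 * a ** 2

def T(x):
    """Toy invertible transform: one additive coupling block on R^2."""
    x1, x2 = x
    y2 = x2 + F(x1)
    y1 = x1 + G(y2)
    return np.array([y1, y2])

def T_inv(X):
    """Exact inverse of T."""
    y1, y2 = X
    x1 = y1 - G(y2)
    x2 = y2 - F(x1)
    return np.array([x1, x2])

w, b = np.array([1.0, -1.0]), 0.2   # linear classifier C in the feature domain

def f(x):
    return np.dot(w, T(x)) + b      # f = C(T(x))

x = np.array([0.3, -1.2])
X = T(x)                                            # map to the feature domain
X_p = X - (np.dot(w, X) + b) / np.dot(w, w) * w     # project onto the linear boundary
x_p = T_inv(X_p)                                    # map the projection back to the input domain
explanation = x - x_p

print(f(x_p))        # approximately 0: x_p lies on the decision boundary in the input domain
print(explanation)
```

In this construction x_p is the pre-image of the feature-domain projection; it lies on the input-domain decision boundary, but it is not necessarily the closest boundary point to x in Euclidean distance.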

Feature Importance and Biomarker Selection

We expand the network f around current point x using Taylor expansion:

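$$
f(x + \Delta x) = f(x) + \langle \nabla f(x), \Delta x \rangle + O\big(\lVert \Delta x \rVert^2\big) \tag{6}
$$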

Similar to Equation 5, we approximate f locally as a linear classifier and define the explanation and importance as:

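$$
\text{explanation} = x - x_p, \qquad \text{importance} = \lvert \nabla f(x) \otimes (x - x_p) \rvert, \qquad x_p = T^{-1}(X_p) \tag{7}
$$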

Equation 7 defines individualized importance for each data point, and we calculate the mean importance across the entire dataset. We select edges with top importance values as the biomarkers for ASD.
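The aggregation and selection step can be sketched as follows, assuming the per-subject importance is the absolute value of the gradient multiplied element-wise by the difference between the input and its projection. The arrays are synthetic, and the function name `select_top_edges` and the `frac` parameter are hypothetical.

```python
import numpy as np

def select_top_edges(x, x_p, grad, frac=0.10):
    """x, x_p, grad have shape (n_subjects, n_edges); returns indices of the top edges."""
    importance = np.abs(grad * (x - x_p))    # per-subject importance
    mean_imp = importance.mean(axis=0)       # mean importance across the dataset
    k = max(1, int(round(frac * mean_imp.size)))
    top = np.argsort(mean_imp)[::-1][:k]     # indices of the k largest mean importances
    return top, mean_imp

rng = np.random.default_rng(0)
n, d = 20, 50
x = rng.standard_normal((n, d))
x_p = x.copy()
x_p[:, :5] -= 1.0            # synthetic case: only the first 5 edges differ from the projection
grad = np.ones((n, d))       # synthetic gradients, all equal

top, mean_imp = select_top_edges(x, x_p, grad)
print(sorted(top.tolist()))  # [0, 1, 2, 3, 4]
```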

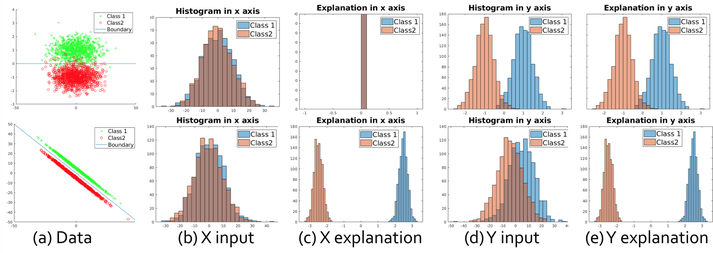

Note that two classes may be close in the input but separated in the explanation. We show two examples in Fig. 4. In the top row, the decision boundary is a horizontal line, thus y is useful to distinguish the two clusters while x is not. In this case, the distributions of the two clusters overlap along the x axis but are separated along the y axis for both the input and the explanation. In the bottom row, the two clusters are separated by the line y = −x: neither the x nor the y axis can distinguish the two clusters using the input (Fig. 4(b),(d)), but both axes have a large separation margin using the explanation (Fig. 4(c),(e)).

Fig. 4:

Explanation method on simulation datasets. Columns (a) to (e) represent data distribution, input value in x axis, explanation in x axis, input value in y axis and explanation in y axis. In the top row, both input and explanation fail to distinguish two classes in x axis, but succeed in y axis. In the bottom row, both x and y values are useful; two clusters are overlapped in distribution of x (y) input values, but separated in the explanation for x (y) axis.

3. Experiments

Classification Accuracy

We perform 10-fold cross-validation on the entire ABIDE dataset to classify ASD versus control subjects. We compare the invertible network classifier with SVM, random forest (RF) with 5,000 trees, a 1-layer multi-layer perceptron (MLP) and a 2-layer MLP. Our invertible network (InvNet) has 2 invertible blocks, and F and G in each invertible block are FC-ReLU-FC-ReLU, where the first FC layer maps a 19,900-dimensional vector (the edges of a connectivity matrix using the CC200 atlas with 200 ROIs [8]) to a 512-dimensional vector, and the second FC layer maps the 512-dimensional vector back to 19,900 dimensions. For each of the 1,035 subjects, we augment the data 50 times by bootstrapping voxels within each ROI and then calculating the connectome from the bootstrapped mean time series. This results in 51,750 examples (1,035 × 50). All deep learning models are trained with the SGD optimizer for 50 epochs, with a learning rate of 1e-5 and a cross-entropy loss.
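The augmentation step might look roughly like the sketch below. It assumes that "bootstrapping voxels" means resampling the voxels of each ROI with replacement before averaging; the data are synthetic, with 10 toy ROIs instead of 200, and the function name `bootstrap_roi_means` is hypothetical.

```python
import numpy as np

def bootstrap_roi_means(voxel_ts_by_roi, rng):
    """voxel_ts_by_roi: list with one (n_voxels, n_timepoints) array per ROI."""
    means = []
    for vox in voxel_ts_by_roi:
        # Resample voxel indices with replacement (assumed bootstrap scheme).
        idx = rng.integers(0, vox.shape[0], size=vox.shape[0])
        means.append(vox[idx].mean(axis=0))   # bootstrapped mean time series of this ROI
    return np.stack(means)                    # (n_rois, n_timepoints)

rng = np.random.default_rng(0)
n_rois = 10
rois = [rng.standard_normal((rng.integers(5, 15), 100)) for _ in range(n_rois)]

iu = np.triu_indices(n_rois, k=1)
augmented = [np.corrcoef(bootstrap_roi_means(rois, rng))[iu] for _ in range(50)]
print(len(augmented), augmented[0].shape)  # 50 (45,)
```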

Numerical results are summarized in Table 1. Compared with other methods, including the Deep Neural Network (DNN) model in [11], the proposed InvNet achieves the highest accuracy (0.71), recall (0.71) and F1 score (0.71).

Table 1:

Classification results

| Metric | SVM | RF | MLP(1) | MLP(2) | DNN | InvNet |
|---|---|---|---|---|---|---|
| Accuracy | 0.67 | 0.66 | 0.66 | 0.68 | 0.70 | 0.71 |
| Precision | 0.68 | 0.65 | 0.66 | 0.68 | 0.74 | 0.72 |
| Recall | 0.68 | 0.65 | 0.66 | 0.67 | 0.63 | 0.71 |
| F1 | 0.68 | 0.68 | 0.67 | 0.69 | - | 0.71 |

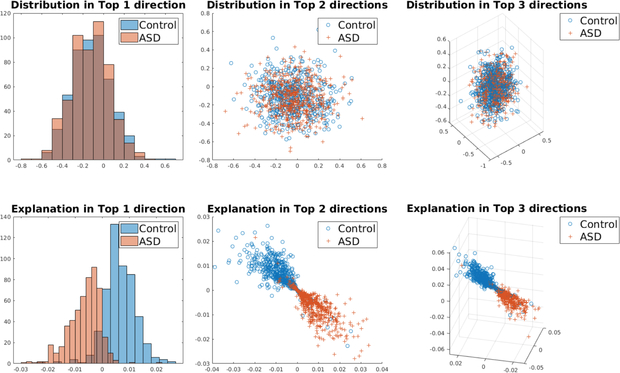

Explanation and Biomarker Selection

We plot the histogram of explanations for the edges with the top 3 importance values in Fig. 5. The histogram of edge values (top row) cannot distinguish ASD from the control group, while the distributions of explanations (bottom row) for the two groups are separated. The proposed explanation method can be viewed as a naive embedding method, mapping data to a low-dimensional space where the two classes are separated.

Fig. 5:

Top row: distribution of connectivity edge values for the two groups (ASD vs. control). Bottom row: distribution of explanations for the two groups. From left to right: results on edges with top 1, top 2 and top 3 importance as defined in Equation 7.

For each edge, we calculate the importance as defined in Equation 7 and select the top 20 connections, as shown in Fig. 6, and plot the corresponding ROIs in Fig. 7. The proposed method found many regions that have been shown in the literature to be closely related to autism, including the superior temporal gyrus [4], frontal cortex [7,5], precentral gyrus [14] and insular cortex [10]. The selected edges and ROIs can be viewed as biomarkers for ASD. Detailed results are in the appendix.

Validate Biomarker Selection in Regression

We validate our biomarker selection in a regression task on the ABIDE dataset. The input is the connectivity matrix reshaped to a vector, and the outputs are the scores of the social responsiveness scale (SRS) [6]. SRS provides a continuous measure of different aspects of social ability, including a total score and subscores for awareness, cognition, communication, motivation and mannerism. We use the top 10% of edges selected by the proposed method, and compare the performance with using 100% of edges. We perform 10-fold cross-validation with linear support vector regression (SVR) using an ℓ2 penalty; within each fold the penalty parameter is chosen by nested cross-validation (choices for the ℓ2 penalty strength are 0, 10⁻⁶, 10⁻⁵, …, 10⁻¹).

We validate our model by comparison with other feature selection methods. We first selected top 10% important features with RF based on out-of-bag prediction importance with default parameters in MATLAB, then refitted a RF using selected features. Results are marked as RF(top 10%) in Table 2.

We calculate the mean squared error (MSE) and cross correlation (Cor) between predictions and measurements. Results are summarized in Table 2. Compared with other methods, our selected biomarkers consistently generate a smaller MSE and a higher Cor, validating our biomarker selection method.
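For reference, the two evaluation metrics can be computed as in the sketch below. It assumes that Cor denotes the Pearson correlation coefficient between predictions and measurements; the example values are made up and are not taken from Table 2.

```python
import numpy as np

def mse(y_true, y_pred):
    """Mean squared error between measurements and predictions."""
    return float(np.mean((y_true - y_pred) ** 2))

def cor(y_true, y_pred):
    """Pearson correlation between measurements and predictions (assumed meaning of Cor)."""
    return float(np.corrcoef(y_true, y_pred)[0, 1])

y_true = np.array([1.0, 2.0, 3.0, 4.0])   # made-up severity scores
y_pred = np.array([1.5, 1.5, 3.5, 3.5])   # made-up predictions

print(mse(y_true, y_pred))   # 0.25
print(cor(y_true, y_pred))   # about 0.894
```

A lower MSE and a higher Cor both indicate better agreement, which is the sense in which the rows of Table 2 are compared.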

Generalization to Convolutional Invertible Networks

We generalize the proposed model decision interpretation method to convolutional networks, and validate it on an image classification task with the MNIST dataset. Results are summarized in the appendix. The proposed method generates more intuitive explanation results on 2D images.

4. Conclusions

We introduced a novel decision interpretation and feature importance ranking method based on invertible networks. We then applied the proposed method to classify ASD versus control subjects based on fMRI scans. We selected important connections and ROIs as biomarkers for ASD, and validated these biomarkers in a regression task on the ABIDE dataset. Our invertible network achieves a high classification accuracy, and our biomarkers consistently generate a smaller MSE and a higher Cor compared with using all edges for regression tasks on different severity measures. The proposed interpretation method is generic, and has potential in other areas of interpretable deep learning such as 2D image classification.

Acknowledgement

This research was funded by the National Institutes of Health (NINDS-R01NS035193).

References

- 1. Anderson JS, et al.: Functional connectivity magnetic resonance imaging classification of autism. Brain (2011)

- 2. Bach S, et al.: On pixel-wise explanations for non-linear classifier decisions by layer-wise relevance propagation. PLoS ONE (2015)

- 3. Behrmann J, Duvenaud D, Jacobsen JH: Invertible residual networks. arXiv preprint arXiv:1811.00995 (2018)

- 4. Bigler ED, et al.: Superior temporal gyrus, language function, and autism. Developmental Neuropsychology (2007)

- 5. Carper RA, Courchesne E: Localized enlargement of the frontal cortex in early autism. Biological Psychiatry 57(2), 126–133 (2005)

- 6. Constantino JN: Social responsiveness scale. Springer (2013)

- 7. Courchesne E, Pierce K: Why the frontal cortex in autism might be talking only to itself: local over-connectivity but long-distance disconnection. Current Opinion in Neurobiology 15(2), 225–230 (2005)

- 8. Craddock RC, et al.: A whole brain fMRI atlas generated via spatially constrained spectral clustering. Human Brain Mapping (2012)

- 9. Di Martino A, et al.: The autism brain imaging data exchange: towards a large-scale evaluation of the intrinsic brain architecture in autism. Mol. Psychiatry (2014)

- 10. Gogolla N, et al.: Sensory integration in mouse insular cortex reflects GABA circuit maturation. Neuron (2014)

- 11. Heinsfeld AS, Franco AR, Craddock RC, Buchweitz A, Meneguzzi F: Identification of autism spectrum disorder using deep learning and the ABIDE dataset. NeuroImage: Clinical 17, 16–23 (2018)

- 12. Jacobsen JH, Smeulders A, Oyallon E: i-RevNet: Deep invertible networks. arXiv preprint arXiv:1802.07088 (2018)

- 13. Mahendran A, Vedaldi A: Understanding deep image representations by inverting them. In: CVPR (2015)

- 14. Nebel MB, et al.: Precentral gyrus functional connectivity signatures of autism. Frontiers in Systems Neuroscience (2014)

- 15. Ogawa S, et al.: Brain magnetic resonance imaging with contrast dependent on blood oxygenation. Proceedings of the National Academy of Sciences (1990)

- 16. Speaks A: What is autism. Retrieved on November 17, 2011 (2011)

- 17. Sundararajan M, Taly A, Yan Q: Axiomatic attribution for deep networks. arXiv preprint arXiv:1703.01365 (2017)

- 18. Zhuang J, et al.: Prediction of pivotal response treatment outcome with task fMRI using random forest and variable selection. In: ISBI 2018 (2018)

- 19. Zhuang J, et al.: Prediction of severity and treatment outcome for ASD from fMRI. In: International Workshop on PRedictive Intelligence In MEdicine (2018)

- 20. Zuo XN, et al.: Toward reliable characterization of functional homogeneity in the human brain: preprocessing, scan duration, imaging resolution and computational space. NeuroImage 65 (2013)