Abstract

Background

Although the mini-clinical evaluation exercise (mini-CEX) has been adapted to a broad range of clinical situations, few studies have examined its use in postgraduate residency training in emergency medicine (EM).

Aim

The purpose of this study was to analyze the results of implementing the mini-CEX in the one-month postgraduate residency training in EM.

Materials and methods

This study is a retrospective review of mini-CEXs completed by emergency department (ED) faculty members from August 2009 to December 2010. All postgraduate year 1 (PGY-1) residents enrolled in this study rotated through the one-month EM training, which comprised one week of trauma training and three weeks of non-trauma training. The clinical competencies of each PGY-1 resident were evaluated with weekly mini-CEXs, rated by a trauma surgeon and three emergency physicians (EPs). Paper-based forms were used for the first 12 months, after which EPs switched to a computer-based format. We analyzed the validity of the weekly mini-CEX and the impact of the seniority and specialty training of ED faculty members on observation time, feedback time, and rating scores.

Results

Fifty-seven ED faculty members (42 EPs and 15 trauma surgeons) evaluated 183 PGY-1 residents over the 17-month study period. ED faculty members with different specialty training spent similar amounts of time on observation and feedback. Most competencies were rated significantly higher by trauma surgeons than by EPs. With the computer-based mini-CEX, no data were missing, and junior EPs rated all competencies significantly higher than senior EPs did. The evaluators and PGY-1 residents were generally satisfied with the computerized format. Compared with the first assessment, only two competencies (physical examination and organization/efficiency) were rated significantly higher, in the third week of the rotation.

Conclusion

The seniority and specialty training of ED faculty members affected mini-CEX ratings. The computer-based mini-CEX enabled complete data gathering, but its ratings differed between ED faculty members with different levels of seniority. Further studies of the reliability and validity of the mini-CEX for PGY-1 EM training are needed.

Keywords: Different specialty, Emergency department, Emergency medicine, Mini-CEX, Postgraduate residency training

1. Introduction

The mini-clinical evaluation exercise (mini-CEX) has been used to assess the clinical skills of residents in many specialty training programs. The tool serves both assessment and educational purposes for residents in training,1 and its validity has been established.2 The mini-CEX is also a feasible and reliable evaluation tool for internal medicine residency training.3 The number and breadth of the feedback comments it generates make it a useful assessment tool,4 and to some extent it may predict the future performance of medical students.5 The mini-CEX has been well received by both learners and supervisors;6, 7 however, Torre et al8 showed that evaluators' satisfaction, observation time, and feedback time differed according to the format of the mini-CEX, and that the personal digital assistant (PDA)-based mini-CEX was rated more highly by students and evaluators than the paper format.9 Alves de Lima et al10 and Kogan et al11 found that feasibility was the principal limitation of the mini-CEX. Although the mini-CEX has been adapted to a broad range of clinical situations,12, 13, 14 few studies have examined its use in postgraduate residency training in emergency medicine (EM).

In 2003, the severe acute respiratory syndrome (SARS) epidemic exposed deficiencies in Taiwan's system of medical education. To improve the competency of junior resident physicians, the Department of Health authorized the Taiwan Joint Commission to develop the Postgraduate Primary Care Medical Training Program. From 2003 to 2006, each postgraduate resident received 3 months of general medicine training during residency. The postgraduate year 1 (PGY-1) general medicine training course was extended to 6 months from 2006 to 2011 and then to 1 year in July 2011. Residents must complete this training within 1 year after being accepted for specialty training. One month of emergency medicine training has been part of this program since July 2009. Chang Gung Memorial Hospital is a 3800-bed tertiary hospital in Taoyuan, Taiwan. Its emergency department (ED) provides the PGY-1 residency training program and uses the mini-CEX as an assessment tool for clinical competence. Because little is known about how mini-CEX outcomes vary among the ED faculty members who rate them, this study analyzed the results of implementing the mini-CEX in the 1-month postgraduate residency training in EM. The purpose of this study was to determine the feasibility, validity, and factors influencing mini-CEX ratings in the ED setting.

2. Materials and methods

This study was a retrospective review of mini-CEXs completed by ED faculty members from August 2009 to December 2010. All PGY-1 residents enrolled in this study rotated through the 1-month EM training as part of the same 6-month Postgraduate Primary Care Medical Training Program. Each PGY-1 resident received 1 week of trauma training and 3 weeks of nontrauma training during the 1-month EM rotation. The clinical competency of each PGY-1 resident was evaluated with a weekly mini-CEX during the rotation: one by a trauma surgeon and three by emergency physicians (EPs). Fifty-seven faculty members (42 EPs and 15 trauma surgeons) evaluated 183 PGY-1 residents during the 17-month study period.

Most ED faculty members attended a brief video-based rater-training workshop during the preliminary stage of implementing the mini-CEX in PGY-1 EM training. The workshop provided raters with reference readings and assessment instructions on the mini-CEX; raters then scored videos and discussed and reconciled their scoring differences as training against rater errors. This training helped most evaluators in this study become competent to perform this workplace-based assessment.

In the mini-CEX, PGY-1 residents are rated on seven competencies (medical interviewing, physical examination, professionalism, procedural skills, clinical judgment, counseling skills, and organization) using a nine-point rating scale (1 = unsatisfactory, 9 = superior). Each PGY-1 resident was evaluated with a mini-CEX weekly. In the first 12 months, EPs and trauma surgeons completed paper-based mini-CEXs. In the following 5 months, the mini-CEXs completed by EPs were switched to a computer-based format, which also included a satisfaction survey (9-point scale) for evaluators and PGY-1 residents. We analyzed the validity of the weekly mini-CEX and the impact of the seniority and specialty training of ED faculty members on observation time, feedback time, and rating scores. We also explored the benefits of implementing the computer-based mini-CEX format. A value of p < 0.05 was considered statistically significant. This study was approved by the Hospital Ethics Committee on Human Research, and the study protocol was reviewed and qualified as exempt from the requirement to obtain informed consent.
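Each mini-CEX form therefore carries seven domain scores on a 1 to 9 scale plus observation and feedback times. The paper reports that no data were missing once EPs switched to the computer-based format, but it does not say how its system achieved this. One plausible mechanism, assumed here purely for illustration, is a form that refuses to save until every domain holds a valid score. In the minimal sketch below, the domain keys and the `validate_form` helper are hypothetical and are not taken from the hospital's electronic teaching system.

```python
# Hypothetical sketch: field names are illustrative, not from the hospital's system.
DOMAINS = (
    "medical_interviewing",
    "physical_examination",
    "professionalism",
    "procedural_skills",
    "clinical_judgment",
    "counseling_skills",
    "organization",
)
SCALE_MIN, SCALE_MAX = 1, 9  # 1 = unsatisfactory, 9 = superior


def validate_form(ratings: dict[str, int], observation_min: float, feedback_min: float) -> list[str]:
    """Return the problems found in one mini-CEX form; an empty list means it may be saved."""
    problems = []
    for domain in DOMAINS:
        score = ratings.get(domain)
        if score is None:
            problems.append(f"{domain}: missing")
        elif isinstance(score, bool) or not isinstance(score, int) or not SCALE_MIN <= score <= SCALE_MAX:
            problems.append(f"{domain}: {score!r} is not an integer from {SCALE_MIN} to {SCALE_MAX}")
    # Reject keys that are not one of the seven domains.
    for name in sorted(set(ratings) - set(DOMAINS)):
        problems.append(f"{name}: unknown domain")
    if observation_min < 0 or feedback_min < 0:
        problems.append("times must be non-negative")
    return problems


if __name__ == "__main__":
    complete = {d: 7 for d in DOMAINS}
    print(validate_form(complete, 15, 10))  # []
    partial = {d: 7 for d in DOMAINS if d != "procedural_skills"}
    print(validate_form(partial, 15, 10))  # ['procedural_skills: missing']
```

A paper form has no equivalent of this check, which is one way to read the difference in completeness reported below; the authors may have enforced completeness differently.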

3. Results

3.1. Overall

From August 2009 to December 2010, 475 paper-based mini-CEX ratings (122 PGY-1 residents, 47 examiners) were collected in the first 12 months, and 248 mini-CEX ratings (computer-based and paper-based; 61 PGY-1 residents, 42 examiners) were collected in the subsequent 5 months. In the first 12 months, 385 of the paper-based mini-CEXs were completed by EPs and 90 by trauma surgeons. The demographic characteristics of all PGY-1 residents are listed in Table 1. The most frequently evaluated competencies were medical interviewing (99.1%), clinical judgment (98.9%), and physical examination (98.3%), and the least frequently evaluated was clinical skill for intubation (55.2%). In the subsequent 5 months, 208 mini-CEXs were completed by EPs in the computerized format, owing to the preliminary implementation of an electronic teaching system, and the remaining 40, completed by trauma surgeons, were still paper based.

Table 1.

Demographic characteristics of PGY-1 residents (n = 183).

| Characteristic | Value |
|---|---|
| Sex, n (%) | |
| Male | 122 (66.7) |
| Female | 61 (33.3) |
| Age (y), mean ± SD | 28.0 ± 2.0 |
| Monthly seniority (mo), n (%) | |
| 1 | 16 (8.7) |
| 2 | 23 (12.6) |
| 3 | 24 (13.1) |
| 4 | 29 (15.8) |
| 5 | 26 (14.2) |
| 6 | 12 (6.6) |
| 7 | 14 (7.7) |
| 8 | 7 (3.8) |
| 9 | 9 (4.9) |
| 10 | 3 (1.6) |
| 11 | 15 (8.2) |
| 12 | 3 (1.6) |
| >12 | 2 (1.1) |
| Major residency training specialty, n (%) | |
| Internal Medicine | 49 (26.8) |
| Surgery | 29 (15.8) |
| Pediatrics | 17 (9.3) |
| Emergency Medicine | 14 (7.7) |
| Obstetrics and Gynecology | 11 (6.0) |
| Physical Medicine and Rehabilitation | 8 (4.4) |
| Orthopedics | 7 (3.8) |
| Anesthesiology | 7 (3.8) |
| Neurology | 6 (3.3) |
| Otolaryngology | 5 (2.7) |
| Medical Imaging and Intervention | 5 (2.7) |
| Neurosurgery | 5 (2.7) |
| Ophthalmology | 4 (2.2) |
| Pathology | 4 (2.2) |
| Psychiatry | 4 (2.2) |
| Family Medicine | 4 (2.2) |
| Urology | 3 (1.6) |
| Nuclear Medicine | 1 (0.5) |

Data presented as mean ± standard deviation or case numbers (%).
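Table 1 reports each category as a case number with its percentage of the 183 residents. As a quick consistency check (a sketch, not part of the authors' analysis), the counts below are transcribed from the table; the `percentages` helper, a name introduced here for illustration, confirms that each block sums to 183 and recomputes the percentages to one decimal place.

```python
# Counts transcribed from Table 1 (n = 183 PGY-1 residents).
TOTAL = 183

SEX = {"Male": 122, "Female": 61}

MONTHLY_SENIORITY = {
    "1": 16, "2": 23, "3": 24, "4": 29, "5": 26, "6": 12, "7": 14,
    "8": 7, "9": 9, "10": 3, "11": 15, "12": 3, ">12": 2,
}

SPECIALTY = {
    "Internal Medicine": 49, "Surgery": 29, "Pediatrics": 17,
    "Emergency Medicine": 14, "Obstetrics and Gynecology": 11,
    "Physical Medicine and Rehabilitation": 8, "Orthopedics": 7,
    "Anesthesiology": 7, "Neurology": 6, "Otolaryngology": 5,
    "Medical Imaging and Intervention": 5, "Neurosurgery": 5,
    "Ophthalmology": 4, "Pathology": 4, "Psychiatry": 4,
    "Family Medicine": 4, "Urology": 3, "Nuclear Medicine": 1,
}


def percentages(counts: dict[str, int], total: int = TOTAL) -> dict[str, float]:
    """Return each category as a percentage of the total, rounded to one decimal place."""
    observed = sum(counts.values())
    if observed != total:
        raise ValueError(f"counts sum to {observed}, expected {total}")
    return {name: round(100 * n / total, 1) for name, n in counts.items()}


if __name__ == "__main__":
    for block in (SEX, MONTHLY_SENIORITY, SPECIALTY):
        print(percentages(block))
```

Run on the transcribed counts, every block sums to 183 and every recomputed percentage matches the value printed in Table 1.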

3.2. Impact of seniority and specialty training of ED faculty on the mini-CEX

In the analysis of paper-based mini-CEXs from the first 12 months, ED faculty with different specialty training (EPs or trauma surgeons) spent similar observation and feedback times when completing mini-CEXs. Except for professionalism, all competencies (medical interviewing, physical examination, procedural skills, counseling skills, clinical judgment, and organization) were rated significantly higher by trauma surgeons than by EPs (Table 2). Among paper-based mini-CEXs completed by EPs, senior EPs (more than 10 years of clinical attending experience) spent similar observation and feedback times as junior EPs and rated PGY-1 residents similarly in all competencies (Table 3).

Table 2.

Overall mean ratings and mini-clinical evaluation exercise time by emergency physician and trauma surgeon raters for paper-based mini-clinical evaluation exercise clinical domains.

| Clinical domain | Emergency physicians (n = 385) | Trauma surgeons (n = 90) | p |
|---|---|---|---|
| Medical interviewing | 6.6 ± 1.0 | 7.0 ± 1.1 | 0.002 |
| Physical examination | 6.5 ± 1.0 | 7.0 ± 1.0 | <0.001 |
| Clinical skills | 6.5 ± 1.1 | 6.9 ± 1.0 | 0.006 |
| Counseling skills | 6.5 ± 1.1 | 7.0 ± 1.1 | <0.001 |
| Clinical judgment | 6.7 ± 1.1 | 7.0 ± 1.1 | 0.031 |
| Organization/efficiency | 6.5 ± 1.1 | 6.8 ± 1.1 | 0.029 |
| Professionalism | 6.8 ± 1.0 | 6.9 ± 1.1 | 0.234 |
| Observation time (min) | 14.8 ± 8.8 | 16.4 ± 10.2 | 0.162 |
| Feedback time (min) | 11.0 ± 6.7 | 12.7 ± 7.6 | 0.052 |

Data are presented as mean ± standard deviation.
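The paper reports p values for the rater-group comparisons in Tables 2 to 4 but does not name the statistical test. As an illustrative assumption only, the sketch below computes Welch's unequal-variance t-test from the tabulated summary statistics (mean, standard deviation, n) with the Python standard library; the two-sided p value comes from the Student t distribution via the regularized incomplete beta function, evaluated by the standard continued-fraction (modified Lentz) method. The helper names are hypothetical. Because the published means and SDs are rounded to one decimal place, results can only approximate the reported values.

```python
import math


def _betacf(a: float, b: float, x: float, max_iter: int = 500, eps: float = 1e-14) -> float:
    """Continued fraction for the regularized incomplete beta function (modified Lentz method)."""
    tiny = 1e-300
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c = 1.0
    d = 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        # Even term of the continued fraction.
        aa = m * (b - m) * x / ((qam + m2) * (a + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        h *= d * c
        # Odd term of the continued fraction.
        aa = -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        delta = d * c
        h *= delta
        if abs(delta - 1.0) < eps:
            break
    return h


def betainc_regularized(a: float, b: float, x: float) -> float:
    """I_x(a, b), the regularized incomplete beta function, for 0 <= x <= 1."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    log_front = (math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                 + a * math.log(x) + b * math.log1p(-x))
    front = math.exp(log_front)
    # Use the continued fraction where it converges quickly; otherwise use symmetry.
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _betacf(a, b, x) / a
    return 1.0 - front * _betacf(b, a, 1.0 - x) / b


def t_two_sided_p(t: float, df: float) -> float:
    """Two-sided p value for a Student t statistic with df degrees of freedom."""
    return betainc_regularized(df / 2.0, 0.5, df / (df + t * t))


def welch_from_summary(m1, s1, n1, m2, s2, n2):
    """Welch's t-test from group means, standard deviations, and sizes.

    Returns (t, Welch-Satterthwaite degrees of freedom, two-sided p value).
    """
    v1, v2 = s1 ** 2 / n1, s2 ** 2 / n2  # squared standard errors of each mean
    t = (m1 - m2) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df, t_two_sided_p(t, df)
```

For the medical interviewing row of Table 2, `welch_from_summary(6.6, 1.0, 385, 7.0, 1.1, 90)` gives t of about -3.16 on roughly 126 degrees of freedom, with p near 0.002. Other rows may agree less well, both because of rounding and because the authors may have used a different test. Where SciPy is available, `scipy.stats.ttest_ind_from_stats(..., equal_var=False)` performs the same Welch computation.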

Table 3.

Overall mean ratings and mini-clinical evaluation exercise time by junior and senior emergency physicians (EPs) for paper-based mini-clinical evaluation exercise clinical domains.

| Clinical domain | Junior EPs (n = 238) | Senior EPs (n = 147) | p |
|---|---|---|---|
| Medical interviewing | 6.6 ± 1.1 | 6.5 ± 0.8 | 0.49 |
| Physical examination | 6.5 ± 1.1 | 6.5 ± 0.9 | 0.436 |
| Clinical skills | 6.5 ± 1.2 | 6.5 ± 0.8 | 0.885 |
| Counseling skills | 6.5 ± 1.1 | 6.4 ± 1.0 | 0.464 |
| Clinical judgment | 6.7 ± 1.1 | 6.7 ± 1.0 | 0.959 |
| Organization/efficiency | 6.5 ± 1.2 | 6.6 ± 1.0 | 0.75 |
| Professionalism | 6.8 ± 1.1 | 6.7 ± 0.9 | 0.344 |
| Observation time (min) | 14.8 ± 9.9 | 14.8 ± 7.0 | 0.975 |
| Feedback time (min) | 11.1 ± 6.0 | 10.9 ± 7.5 | 0.816 |

Data are presented as mean ± standard deviation.

3.3. Impact of a computerized format on the mini-CEX

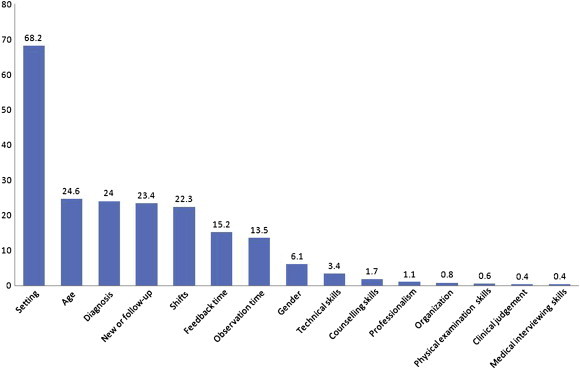

Among the 475 paper-based mini-CEXs from the first 12 months, data were missing for all seven domains of clinical competency and for other items as well (Fig. 1). No data were missing after the computer-based format was implemented over the next 5 months. The evaluators and PGY-1 residents were generally satisfied with the computerized mini-CEX format; their satisfaction ratings ranged from 5 to 9 (7.3 ± 0.8 and 7.9 ± 0.8, respectively). Junior faculty were more satisfied with the format than senior faculty (7.5 ± 0.8 vs. 6.9 ± 0.9, p < 0.001). On the computer-based mini-CEX, junior EPs rated all competencies of PGY-1 residents significantly higher than senior EPs did (Table 4). Compared with their paper-based ratings (Table 3), junior EPs' scores rose in every domain, whereas senior EPs' scores were slightly lower, so the change in each domain was larger for junior than for senior faculty. Junior faculty also tended to spend longer on observation and feedback than senior faculty, but these differences were not statistically significant.

Fig. 1.

The percentage of incomplete data on the paper-based mini-clinical evaluation exercise.
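Fig. 1 and the evaluation frequencies in Section 3.1 are per-domain percentages over all submitted forms. The minimal sketch below shows that computation on a few hypothetical form records: the domain keys, the helper names, and the data are invented for illustration, and a blank domain is represented as `None` or a missing key. The evaluation frequency reported for a domain is presumably 100 minus its missing percentage.

```python
from typing import Optional

# Hypothetical domain keys for the seven paper-based mini-CEX competencies.
DOMAINS = (
    "medical_interviewing",
    "physical_examination",
    "procedural_skills",
    "counseling_skills",
    "clinical_judgment",
    "organization_efficiency",
    "professionalism",
)


def missing_rates(forms: list[dict[str, Optional[int]]]) -> dict[str, float]:
    """Percentage of forms (rounded to 0.1) on which each domain was left blank."""
    if not forms:
        raise ValueError("at least one form is required")
    n = len(forms)
    return {d: round(100 * sum(f.get(d) is None for f in forms) / n, 1) for d in DOMAINS}


def evaluated_rates(forms: list[dict[str, Optional[int]]]) -> dict[str, float]:
    """Complement of missing_rates: percentage of forms on which each domain was scored."""
    return {d: round(100 - pct, 1) for d, pct in missing_rates(forms).items()}


if __name__ == "__main__":
    # Four invented forms; the last one leaves procedural skills blank.
    demo = [{d: 7 for d in DOMAINS} for _ in range(3)]
    demo.append({d: 6 for d in DOMAINS if d != "procedural_skills"})
    print(missing_rates(demo)["procedural_skills"])  # 25.0
    print(evaluated_rates(demo)["procedural_skills"])  # 75.0
```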

Table 4.

Overall mean ratings and mini-clinical evaluation exercise times by junior and senior emergency physicians (EPs) for computer-based mini-clinical evaluation exercise clinical domains.

| Clinical domain | Junior EPs (n = 151) | Senior EPs (n = 57) | p |
|---|---|---|---|
| Medical interviewing | 7.2 ± 1.0 | 6.4 ± 0.8 | <0.001 |
| Physical examination | 7.1 ± 1.1 | 6.2 ± 0.7 | <0.001 |
| Counseling skills | 7.0 ± 1.0 | 6.2 ± 0.9 | <0.001 |
| Clinical judgment | 7.2 ± 1.0 | 6.3 ± 1.0 | <0.001 |
| Organization/efficiency | 7.0 ± 1.2 | 6.3 ± 1.0 | <0.001 |
| Professionalism | 7.4 ± 1.0 | 6.4 ± 0.9 | <0.001 |
| Overall clinical competence | 7.2 ± 0.9 | 6.5 ± 0.9 | <0.001 |
| Observation time (min) | 15.1 ± 9.6 | 13.0 ± 4.0 | 0.099 |
| Feedback time (min) | 11.8 ± 6.0 | 10.4 ± 5.0 | 0.113 |

Data are presented as mean ± standard deviation.
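The comparison of junior and senior EPs across formats can be made concrete by subtracting the paper-based means in Table 3 from the computer-based means in Table 4. The sketch below does this for the six domains the two forms share (clinical skills is omitted because the computer form replaced it, and overall clinical competence exists only on the computer form). It is descriptive only: the two formats were used in different periods on different encounters, the inputs are rounded means, and the `format_shift` helper is a name introduced here for illustration.

```python
# (junior EP mean, senior EP mean) transcribed from Table 3 (paper) and Table 4 (computer).
PAPER = {
    "Medical interviewing": (6.6, 6.5),
    "Physical examination": (6.5, 6.5),
    "Counseling skills": (6.5, 6.4),
    "Clinical judgment": (6.7, 6.7),
    "Organization/efficiency": (6.5, 6.6),
    "Professionalism": (6.8, 6.7),
}
COMPUTER = {
    "Medical interviewing": (7.2, 6.4),
    "Physical examination": (7.1, 6.2),
    "Counseling skills": (7.0, 6.2),
    "Clinical judgment": (7.2, 6.3),
    "Organization/efficiency": (7.0, 6.3),
    "Professionalism": (7.4, 6.4),
}


def format_shift() -> dict[str, tuple[float, float]]:
    """Computer-minus-paper change in mean rating, as (junior, senior), for each shared domain."""
    shift = {}
    for domain, (junior_paper, senior_paper) in PAPER.items():
        junior_comp, senior_comp = COMPUTER[domain]
        shift[domain] = (round(junior_comp - junior_paper, 1), round(senior_comp - senior_paper, 1))
    return shift


if __name__ == "__main__":
    for domain, (junior, senior) in format_shift().items():
        print(f"{domain}: junior {junior:+.1f}, senior {senior:+.1f}")
```

On these transcribed means, every junior change is positive (+0.5 to +0.6) and every senior change is negative (-0.1 to -0.4), and in each domain the junior change is the larger in magnitude.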

3.4. Feasibility and validity of a weekly mini-CEX in ED training

The feasibility of using the mini-CEX in ED training for PGY-1 residents was high, as shown by the completion rates in the first 12 months (98.1%) and in the following 5 months (100%). In the analysis of the validity of the weekly mini-CEX, two competencies of PGY-1 residents (physical examination and organization/efficiency) were rated significantly higher in the third week of the 4-week rotation than at the first assessment. The other clinical competencies did not change significantly (Table 5).

Table 5.

Weekly overall mean ratings by EPs for each mini-CEX clinical domain in the paper-based format.

| Domain | Week 1 (n = 59) | Week 2 (n = 82) | Week 3 (n = 94) | Week 4 (n = 150) |
|---|---|---|---|---|
| Medical interviewing | 6.4 ± 1.1 | 6.6 ± 0.8 | 6.7 ± 1.1 | 6.6 ± 0.9 |
| Physical examination | 6.3 ± 1.0 | 6.4 ± 0.9 | 6.7 ± 1.1* | 6.5 ± 1.0 |
| Clinical skills | 6.5 ± 1.0 | 6.5 ± 0.9 | 6.8 ± 1.3 | 6.4 ± 1.1 |
| Counseling skills | 6.4 ± 1.1 | 6.4 ± 1.0 | 6.5 ± 1.2 | 6.5 ± 1.1 |
| Clinical judgment | 6.5 ± 1.0 | 6.7 ± 1.0 | 6.8 ± 1.2 | 6.7 ± 1.0 |
| Organization/efficiency | 6.3 ± 1.2 | 6.6 ± 1.1 | 6.7 ± 1.2* | 6.5 ± 1.0 |
| Professionalism | 6.5 ± 1.1 | 6.8 ± 1.0 | 6.9 ± 1.1 | 6.8 ± 1.0 |

Data are presented as mean ± standard deviation.

*p < 0.05 compared with baseline competence in Week 1.
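The week-by-week pattern in Table 5 can be summarized by each later week's change from the Week 1 baseline. The sketch below transcribes the EP means and computes those changes together with the week in which each domain peaks; the helper names are introduced here for illustration. It is descriptive only and does not reproduce the significance test, which the paper does not name: two domains can show the same mean gain while differing in significance because of their spreads and sample sizes.

```python
# Week 1 to Week 4 EP mean ratings transcribed from Table 5 (paper-based format).
WEEKLY_MEANS = {
    "Medical interviewing": (6.4, 6.6, 6.7, 6.6),
    "Physical examination": (6.3, 6.4, 6.7, 6.5),
    "Clinical skills": (6.5, 6.5, 6.8, 6.4),
    "Counseling skills": (6.4, 6.4, 6.5, 6.5),
    "Clinical judgment": (6.5, 6.7, 6.8, 6.7),
    "Organization/efficiency": (6.3, 6.6, 6.7, 6.5),
    "Professionalism": (6.5, 6.8, 6.9, 6.8),
}


def change_from_week1(means: tuple[float, ...]) -> tuple[float, ...]:
    """Difference of each later week's mean from the Week 1 baseline, rounded to 0.1."""
    baseline = means[0]
    return tuple(round(m - baseline, 1) for m in means[1:])


def peak_week(means: tuple[float, ...]) -> int:
    """1-based week with the highest mean; max() keeps the earliest week on ties."""
    return max(range(len(means)), key=lambda i: means[i]) + 1


if __name__ == "__main__":
    for domain, means in WEEKLY_MEANS.items():
        print(f"{domain}: change vs Week 1 {change_from_week1(means)}, peak in Week {peak_week(means)}")
```

On the tabulated means, every domain peaks in Week 3 (counseling skills ties with Week 4), with Week 3 gains over Week 1 of 0.1 to 0.4 points.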

4. Discussion

4.1. Mini-CEX in the ED setting

The mini-CEX is a method of clinical skills assessment developed by the American Board of Internal Medicine for graduate medical education. It is widely used to evaluate the clinical competence of medical students in various clerkships1, 4, 8, 9, 11 and of residents in specialty training programs such as internal medicine,11, 15 cardiology,10 anesthesia,16 neurology,17 and dermatology.18 It promotes educational interaction and improves the quality of training.19 We retrospectively analyzed the outcomes of implementing the mini-CEX for PGY-1 residency training in EM, which has not previously been documented in the literature.

4.2. Factors impacting the mini-CEX

The computer-based format of the mini-CEX in postgraduate emergency residency training effectively replicated the paper-based format for entering and gathering data on teaching and learning activities in the ED, and both residents and evaluators rated it highly. Donato et al20 noted that modifying the mini-CEX format may produce more recorded observations, increase inter-rater agreement, and improve overall rater accuracy. Norman et al21 noted the need to weigh the advantages and disadvantages for trainees carefully before adopting a PDA-based format of the mini-CEX. These findings are important considerations for improving the quality and effectiveness of a workplace-based assessment tool for EM residency training in a crowded ED environment.

On paper-based assessments, the mean rating scores for PGY-1 residents in each domain of clinical competence did not differ between ED faculty with different levels of seniority. After the computer-based format was implemented, the mean scores in each domain were significantly higher from junior faculty and lower from senior faculty. Torre et al9 documented that residents rated students significantly higher than faculty did in all mini-CEX clinical domains with the PDA-based format. Kogan et al22 noted that faculty members' own clinical skills may be associated with their ratings of trainees. Torre et al8 found that evaluators' satisfaction, observation time, and feedback time differed according to the format of the mini-CEX. These previous studies suggest that an electronic mini-CEX format may elicit different responses from raters with different levels of seniority.8, 9 In our analysis, junior faculty members were more satisfied than senior faculty with the new computerized format. Junior faculty members may have been more familiar than senior faculty with operating the computer interface during the assessment process. Alternatively, senior faculty members may have been better able to keep their evaluations of ED trainees consistent despite the change in mini-CEX format.

4.3. Rater training for the mini-CEX

In our review of the literature on mini-CEX studies, rater training was rarely described or did not occur at all because of limited resources and time. The necessity of rater training for the mini-CEX has been questioned in the literature.23 Norcini et al12 studied examiner differences in the mini-CEX and found no large differences among the ratings. Torre et al8, 9 showed that format and seniority were associated with mini-CEX rating outcomes. Because this assessment tool is widely used in various clinical settings and to certify residents' clinical competence, better and more standardized evaluation is crucial. Two randomized studies highlighted the value of rater training and its effect on scores;23, 24 however, training is likely to be ineffective if it is too brief.23, 24, 25

Most studies evaluating the mini-CEX focus on its educational impact as an assessment tool and on the perceptions of evaluators and trainees.1, 6, 16, 19 We found no published studies of the effect of this assessment tool on doctors' performance in the ED. In our analysis, mini-CEX ratings of ED trainees differed according to the seniority and specialty training of the ED faculty. Moreover, the format of the mini-CEX also affected ratings, and this effect differed between junior and senior ED faculty members. Rater training in the assessment process, together with awareness of how the computer-based format affects ratings, is suggested as one way to promote educational interaction and to improve the quality of assessment for ED trainees.

4.4. Feasibility and validity of the mini-CEX in the ED

Assessing trainees who handle a wide range of patient encounters in a crowded ED is challenging. The feasibility of a weekly mini-CEX was confirmed by the high completion rate, which met the requirements of our PGY-1 training program regardless of the mini-CEX format; however, these results could not demonstrate the validity of a weekly mini-CEX, so its necessity is open to question. It was difficult to show improvement by PGY-1 residents in all domains within a 1-month rotation. Improvement in trainees' competence may become evident only if the postgraduate EM training course is extended.

4.5. Limitations

1. Although most ED faculty members received a brief video-based rater-training course, inter-rater and intra-rater variations existed during the preliminary stage of implementing the mini-CEX in PGY-1 EM training.

2. Our study was retrospective, and the data were collected from computer and paper databases. Although we made every effort to remain objective, errors may have been introduced.

3. The computerized format was not used in the 1-week trauma-training curriculum, so the analysis of the impact of trauma surgeon raters on mini-CEX ratings was limited to the paper-based format.

4. The domains of the computer-based mini-CEX were modified from the paper-based format: the clinical skills domain was replaced with Direct Observation of Procedural Skills, and an overall domain was added. However, most core competencies were the same in both formats, which allowed the impact of the format change on rating outcomes to be analyzed.

5. The analysis of the impact of seniority on ratings was limited to EP faculty because too few mini-CEX ratings were completed by senior trauma surgeons (>10 years).

6. No satisfaction survey accompanied the paper-based mini-CEX, so differences in satisfaction between the paper- and computer-based formats could not be analyzed.

7. An observation time of around 15 minutes was hardly sufficient to assess all seven mini-CEX domains in ED cases of moderate to high complexity. However, most cases managed by PGY-1 residents under ED faculty supervision were simple, in line with the goal of the 1-month EM training curriculum.

5. Conclusion

The seniority and specialty training of the ED faculty affected mini-CEX ratings. The computer-based mini-CEX enabled complete data gathering, but its ratings differed between ED faculty members with different levels of seniority. Both evaluators and PGY-1 residents were generally satisfied with the computer-based format of the mini-CEX. Further studies of the reliability and validity of the mini-CEX for PGY-1 EM training are needed.

Conflicts of interest

There were no conflicts of interest related to this study.

References

1. Malhotra S., Hatala R., Courneya C.A. Internal medicine residents' perceptions of the Mini-Clinical Evaluation Exercise. Med Teach. 2008;30:414–419. doi: 10.1080/01421590801946962.

2. Kogan J.R., Holmboe E.S., Hauer K.E. Tools for direct observation and assessment of clinical skills of medical trainees: a systematic review. JAMA. 2009;302:1316–1326. doi: 10.1001/jama.2009.1365.

3. Durning S.J., Cation L.J., Jackson J.L. The reliability and validity of the American Board of Internal Medicine Monthly Evaluation Form. Acad Med. 2003;78:1175–1182. doi: 10.1097/00001888-200311000-00021.

4. Pernar L.I., Peyre S.E., Warren L.E. Mini-clinical evaluation exercise as a student assessment tool in a surgery clerkship: lessons learned from a 5-year experience. Surgery. 2011;150:272–277. doi: 10.1016/j.surg.2011.06.012.

5. Ney E.M., Shea J.A., Kogan J.R. Predictive validity of the mini-Clinical Evaluation Exercise (mCEX): do medical students' mCEX ratings correlate with future clinical exam performance? Acad Med. 2009;84(10 Suppl):S17–S20. doi: 10.1097/ACM.0b013e3181b37c94.

6. Nair B.R., Alexander H.G., McGrath B.P. The mini clinical evaluation exercise (mini-CEX) for assessing clinical performance of international medical graduates. Med J Aust. 2008;189:159–161. doi: 10.5694/j.1326-5377.2008.tb01951.x.

7. Sidhu R.S., Hatala R., Barron S., Broudo M., Pachev G., Page G. Reliability and acceptance of the mini-clinical evaluation exercise as a performance assessment of practicing physicians. Acad Med. 2009;84(10 Suppl):S113–S115. doi: 10.1097/ACM.0b013e3181b37f37.

8. Torre D.M., Treat R., Durning S., Elnicki D.M. Comparing PDA- and paper-based evaluation of the clinical skills of third-year students. WMJ. 2011;110:9–13.

9. Torre D.M., Simpson D.E., Elnicki D.M., Sebastian J.L., Holmboe E.S. Feasibility, reliability and user satisfaction with a PDA-based mini-CEX to evaluate the clinical skills of third-year medical students. Teach Learn Med. 2007;19:271–277. doi: 10.1080/10401330701366622.

10. Alves de Lima A., Barrero C., Baratta S. Validity, reliability, feasibility and satisfaction of the Mini-Clinical Evaluation Exercise (Mini-CEX) for cardiology residency training. Med Teach. 2007;29:785–790. doi: 10.1080/01421590701352261.

11. Kogan J.R., Bellini L.M., Shea J.A. Implementation of the mini-CEX to evaluate medical students' clinical skills. Acad Med. 2002;77:1156–1157. doi: 10.1097/00001888-200211000-00021.

12. Norcini J.J., Blank L.L., Arnold G.K., Kimball H.R. Examiner differences in the mini-CEX. Adv Health Sci Educ Theory Pract. 1997;2:27–33. doi: 10.1023/A:1009734723651.

13. Norcini J.J., Blank L.L., Arnold G.K., Kimball H.R. The mini-CEX (clinical evaluation exercise): a preliminary investigation. Ann Intern Med. 1995;123:795–799. doi: 10.7326/0003-4819-123-10-199511150-00008.

14. Norcini J.J., Blank L.L., Duffy F.D., Fortna G.S. The mini-CEX: a method for assessing clinical skills. Ann Intern Med. 2003;138:476–481. doi: 10.7326/0003-4819-138-6-200303180-00012.

15. Durning S.J., Cation L.J., Markert R.J., Pangaro L.N. Assessing the reliability and validity of the mini-clinical evaluation exercise for internal medicine residency training. Acad Med. 2002;77:900–904. doi: 10.1097/00001888-200209000-00020.

16. Weller J.M., Jolly B., Misur M.P. Mini-clinical evaluation exercise in anaesthesia training. Br J Anaesth. 2009;102:633–641. doi: 10.1093/bja/aep055.

17. Wiles C.M., Dawson K., Hughes T.A. Clinical skills evaluation of trainees in a neurology department. Clin Med. 2007;7:365–369. doi: 10.7861/clinmedicine.7-4-365.

18. Cohen S.N., Farrant P.B., Taibjee S.M. Assessing the assessments: U.K. dermatology trainees' views of the workplace assessment tools. Br J Dermatol. 2009;161:34–39. doi: 10.1111/j.1365-2133.2009.09097.x.

19. Weller J.M., Jones A., Merry A.F., Jolly B., Saunders D. Investigation of trainee and specialist reactions to the mini-Clinical Evaluation Exercise in anaesthesia: implications for implementation. Br J Anaesth. 2009;103:524–530. doi: 10.1093/bja/aep211.

20. Donato A.A., Pangaro L., Smith C. Evaluation of a novel assessment form for observing medical residents: a randomised, controlled trial. Med Educ. 2008;42:1234–1242. doi: 10.1111/j.1365-2923.2008.03230.x.

21. Norman G., Keane D., Oppenheimer L. Compliance of medical students with voluntary use of personal data assistants for clerkship assessments. Teach Learn Med. 2008;20:295–301. doi: 10.1080/10401330802199542.

22. Kogan J.R., Hess B.J., Conforti L.N., Holmboe E.S. What drives faculty ratings of residents' clinical skills? The impact of faculty's own clinical skills. Acad Med. 2010;85(10 Suppl):S25–S28. doi: 10.1097/ACM.0b013e3181ed1aa3.

23. Cook D.A., Dupras D.M., Beckman T.J., Thomas K.G., Pankratz V.S. Effect of rater training on reliability and accuracy of mini-CEX scores: a randomized, controlled trial. J Gen Intern Med. 2009;24:74–79. doi: 10.1007/s11606-008-0842-3.

24. Holmboe E.S., Hawkins R.E., Huot S.J. Effects of training in direct observation of medical residents' clinical competence: a randomized trial. Ann Intern Med. 2004;140:874–881. doi: 10.7326/0003-4819-140-11-200406010-00008.

25. Noel G.L., Herbers J.E., Jr., Caplow M.P., Cooper G.S., Pangaro L.N., Harvey J. How well do internal medicine faculty members evaluate the clinical skills of residents? Ann Intern Med. 1992;117:757–765. doi: 10.7326/0003-4819-117-9-757.