Abstract

Since its introduction some twenty years ago, named entity (NE) processing has become an essential component of virtually any text mining application and has undergone major changes. Two main trends have recently characterised its development: the adoption of deep learning architectures and the consideration of textual material originating from historical and cultural heritage collections. While the former opens up new opportunities, the latter introduces new challenges with heterogeneous, historical and noisy inputs. Although NE processing tools are increasingly used on historical documents, their performance is lower than on contemporary data and is hardly comparable across studies. In this context, this paper introduces the CLEF 2020 Evaluation Lab HIPE (Identifying Historical People, Places and other Entities) on named entity recognition and linking on diachronic historical newspaper material in French, German and English. Our objective is threefold: strengthening the robustness of existing approaches on non-standard inputs, enabling performance comparison of NE processing on historical texts, and, in the long run, fostering efficient semantic indexing of historical documents in order to support scholarship on digital cultural heritage collections.

Keywords: Named entity processing, Text understanding, Information extraction, Historical newspapers, Digital Humanities

Introduction

Recognition and identification of real-world entities is at the core of virtually any text mining application. As a matter of fact, referential units such as names of persons, locations and organizations underlie the semantics of texts and guide their interpretation. Since the seminal Message Understanding Conference (MUC) evaluation cycle in the 1990s [11], named entity-related tasks have evolved considerably, from entity recognition and classification to entity disambiguation and linking [21, 25]. Besides the general domain of well-written newswire data, named entity (NE) processing is also applied to specific domains, particularly bio-medical [10, 14], and to noisier inputs such as speech transcriptions [9] and tweets [26].

Recently, two main trends have characterised developments in NE processing. First, at the technical level, the adoption of deep learning architectures and the use of embedded language representations are greatly reshaping the field and opening up new research directions [1, 16, 17]. Second, with respect to application domain and language spectrum, NE processing has been called upon to contribute to the field of Digital Humanities (DH), where massive digitization of historical documents is producing huge amounts of text [30]. Thanks to large-scale digitization projects driven by cultural institutions, millions of images are being acquired and, when it comes to text, their content is transcribed, either manually via dedicated interfaces, or automatically via Optical Character Recognition (OCR). Beyond this great achievement in terms of document preservation and accessibility, the next crucial step is to adapt and develop appropriate language technologies to search and retrieve the contents of this ‘Big Data from the Past’ [13]. In this regard, information extraction techniques, and particularly NE recognition and linking, can certainly be regarded as among the first steps.

This paper introduces the CLEF 2020 Evaluation Lab1 HIPE (Identifying Historical People, Places and other Entities)2. With the aim of supporting the development and progress of NE systems on historical documents (Sect. 2), this lab proposes two tasks, namely named entity recognition and linking, on historical newspapers in French, German and English (Sect. 3). We additionally report first results on French historical newspapers (Sect. 4), which support the idea that such a lab can benefit both the NLP and DH communities.

Motivation and Objectives

NE processing tools are increasingly being used in the context of historical documents. Research activities in this domain target texts of different natures (e.g. museum records, state-related documents, genealogical data, historical newspapers) and different tasks (NE recognition and classification, entity linking, or both). Experiments involve different time periods, focus on different domains, and use different typologies. This great diversity demonstrates how many and varied the needs, and the challenges, are, but it also makes performance comparison difficult, if not impossible.

Furthermore, as with language technologies in general [29], the application of NE processing to historical texts poses new challenges [7, 23]. First, inputs can be extremely noisy, with errors which do not resemble tweet misspellings or speech transcription hesitations, for which adapted approaches have already been devised [5, 27]. Second, the language under study mostly belongs to earlier stage(s), which renders the usual external and internal evidence less effective (e.g., the usage of different naming conventions and the presence of historical spelling variations) [2, 3]. Further, besides historical VIPs, texts from the past contain rare entities which have undergone significant changes (esp. locations) or no longer exist, and for which adequate linguistic resources and knowledge bases are missing [12]. Finally, archives and texts from the past are not as anglophone as today’s information society, making multilingual resources and processing capacities even more essential [22].

Overall, and as demonstrated by Vilain et al. [31], the transfer of NE tools from one domain to another is not straightforward, and the performance of NE tools initially developed for homogeneous texts of the immediate past is affected when they are applied to historical material. This echoes the proposition of Plank [24], according to whom what is considered standard data (i.e. the contemporary news genre) is more a historical coincidence than a reality: in NLP, non-canonical, heterogeneous, biased and noisy data is the norm rather than the exception.

Even though many evaluation campaigns on NE were organized over the last decades3, only one considered French historical texts [8]. To the best of our knowledge, no NE evaluation campaign ever addressed multilingual, diachronic historical material. In the context of new needs and materials emerging from the humanities, we believe that an evaluation campaign on historical documents is timely and will be beneficial. In addition to the release of a multilingual, historical NE-annotated corpus, the objective of this shared task is threefold: strengthening the robustness of existing approaches on non-standard inputs; enabling performance comparison of NE processing on historical texts; and fostering efficient semantic indexing of historical documents.

Overview of the Evaluation Lab

Task Description

The HIPE shared task puts forward 2 NE processing tasks with sub-tasks of increasing level of difficulty. Participants can submit up to 3 runs per sub-task.

Task 1: Named Entity Recognition and Classification (NERC)

Subtask 1.1 - NERC Coarse-Grained: this task includes the recognition and classification of entity mentions according to high-level entity types (Person, Location, Organisation, Product and Date).

Subtask 1.2 - NERC Fine-Grained: this task includes the classification of mentions according to finer-grained entity types, nested entities (up to one level of depth) and the detection of entity mention components (e.g. function, title, name).

Task 2: Named Entity Linking (EL). This task requires the linking of named entity mentions to a unique referent in a knowledge base (a frozen dump of Wikidata) or to a NIL node if the mention does not have a referent.

Data Sets

Corpus. The HIPE corpus is composed of items from the digitized archives of several Swiss, Luxembourgish and American newspapers on a diachronic basis.4 For each language, articles of 4 different newspapers were sampled on a decade time-bucket basis, according to the time span of the newspaper (longest duration spans ca. 200 years). More precisely, articles were first randomly sampled from each year of the considered decades, with the constraints of having a title and more than 100 characters. Following this sampling, a manual triage was applied in order to keep journalistic content only and to remove undesirable items such as feuilletons, crosswords, weather tables, time-schedules, obituaries, and items that even a human could not read because of OCR noise.
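The automatic part of this sampling can be illustrated with a minimal sketch. It is not the organizers' script: the record keys (`year`, `title`, `text`), the number of articles drawn per year (`per_year`) and the fixed random seed are assumptions made for illustration, and the manual triage step is not modelled.

```python
import random
from collections import defaultdict


def sample_articles(articles, per_year=2, min_chars=100, seed=0):
    """Randomly sample eligible articles from each year, grouped by decade.

    `articles` is an iterable of dicts with the hypothetical keys
    'year', 'title' and 'text'.
    """
    rng = random.Random(seed)

    # Keep only articles that satisfy the two constraints stated in the text:
    # a non-empty title and more than `min_chars` characters.
    by_year = defaultdict(list)
    for art in articles:
        if art.get("title") and len(art.get("text", "")) > min_chars:
            by_year[art["year"]].append(art)

    # Draw from every year, then file the picks under their decade bucket.
    by_decade = defaultdict(list)
    for year in sorted(by_year):
        pool = by_year[year]
        picked = rng.sample(pool, min(per_year, len(pool)))
        by_decade[year // 10 * 10].extend(picked)
    return dict(by_decade)
```

Sampling per year before grouping by decade keeps every year of a decade represented, which matches the description above; the bucket key is simply the first year of the decade.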

Alongside each article, metadata (journal, date, title, page number, image region coordinates), the corresponding scan(s) and an OCR quality assessment score are provided. Different OCR versions of the same texts are not provided, and the OCR quality of the corpus therefore corresponds to a real-life setting, with variations according to digitization time and preservation state of the original documents.

For each task and language—with the exception of English—the corpus is divided into training, dev and test data sets, released in IOB format with hierarchical information. For English, only dev and test sets will be released.
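To make the IOB encoding concrete, the following sketch converts a flat sequence of IOB tags into entity spans. The tag names (`B-pers`, `I-loc`, ...) are hypothetical, and the sketch handles a single annotation layer only; the exact column layout and the hierarchical information of the released files are not specified here and are not modelled.

```python
def iob_to_spans(tags):
    """Convert a list of IOB tags into (start, end, label) spans, end exclusive."""
    spans = []
    start, label = None, None
    for i, tag in enumerate(tags):
        # Split "B-pers" into ("B", "pers"); "O" yields an empty label.
        prefix, _, tag_label = tag.partition("-")
        # An entity continues only with an I- tag carrying the same label.
        continues = prefix == "I" and label is not None and tag_label == label
        if not continues:
            if label is not None:
                spans.append((start, i, label))
                start, label = None, None
            # B- opens a new entity; a stray I- is treated leniently as B-.
            if prefix in ("B", "I") and tag_label:
                start, label = i, tag_label
    if label is not None:
        spans.append((start, len(tags), label))
    return spans


# Hypothetical example: "M. Jules Ferry à Paris"
tokens = ["M.", "Jules", "Ferry", "à", "Paris"]
tags = ["B-pers", "I-pers", "I-pers", "O", "B-loc"]
spans = iob_to_spans(tags)
```

Spans expressed as token offsets are convenient for evaluation, since boundary matching then reduces to comparing integer pairs.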

Annotation. HIPE annotation guidelines [6] are derived from the Quaero annotation guide5. Originally designed for the annotation of “extended” named entities (i.e. more than the 3 or 4 traditional entity classes) in French speech transcriptions, Quaero guidelines have furthermore been used on historic press corpora [28]. HIPE slightly recasts and simplifies them, considering only a subset of entity types and components, as well as of linguistic units eligible as named entities. HIPE guidelines were iteratively consolidated via the annotation of a “mini-reference” corpus, where annotation decisions were tested and difficult cases discussed. Despite these adaptations, HIPE annotated corpora will mostly remain compatible with Quaero guidelines.

The annotation campaign is carried out by the task organizers with the support of trilingual collaborators. We use INCEpTION as an annotation tool [15], with the visualisation of image segments alongside OCR transcriptions.6 Before starting to annotate, each annotator is first trained on a mini-reference corpus, where the inter-annotator agreement (IAA) with the gold reference is computed. For each language, a sub-sample of the corpus is annotated by 2 annotators and IAA is computed, before and after adjudication. Randomly selected articles will also be checked by the adjudicator. Finally, HIPE will provide complementary resources in the form of in-domain word-level and character-level embeddings acquired from historical newspaper corpora. In the same vein, participants will be encouraged to share any external resource they might use. The HIPE corpus and resources will be released under a CC-BY-SA-NC 4.0 license.

Evaluation

Named Entity Recognition and Classification (Task 1) will be evaluated in terms of macro and micro Precision, Recall, F-measure, and Slot Error Rate [20]. Two evaluation scenarios will be considered: strict (exact boundary matching) and relaxed (fuzzy boundary matching). Entity linking (Task 2) will be evaluated in terms of Precision, Recall and F-measure, taking into account literal and metonymic senses.
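The difference between the two scenarios can be sketched as follows. This is an illustration, not the official HIPE scorer: it computes micro-averaged scores only, leaves out Slot Error Rate, and assumes that "fuzzy" matching means the predicted and gold spans carry the same label and overlap by at least one token.

```python
def prf(gold, pred, relaxed=False):
    """Micro precision, recall and F1 over (start, end, label) spans."""

    def match(g, p):
        if g[2] != p[2]:
            return False
        if relaxed:
            # Assumption: "fuzzy" means the two spans share at least one token.
            return g[0] < p[1] and p[0] < g[1]
        # Strict scenario: both boundaries must be identical.
        return g[:2] == p[:2]

    unmatched = list(gold)
    tp = 0
    for p in pred:
        hit = next((g for g in unmatched if match(g, p)), None)
        if hit is not None:
            unmatched.remove(hit)  # each gold span is credited at most once
            tp += 1

    precision = tp / len(pred) if pred else 0.0
    recall = tp / len(gold) if gold else 0.0
    f1 = 2 * precision * recall / (precision + recall) if tp else 0.0
    return precision, recall, f1


gold = [(0, 2, "pers"), (4, 5, "loc")]
pred = [(1, 2, "pers"), (4, 5, "loc"), (6, 7, "org")]
strict_scores = prf(gold, pred)
relaxed_scores = prf(gold, pred, relaxed=True)
```

In this toy example the prediction `(1, 2, "pers")` misses the left boundary of the gold person mention, so it counts as an error in the strict scenario and as a hit in the relaxed one.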

Exploratory Experiments on NER for Historical French

We conducted an exploratory study in order to assess whether the massive improvements in neural NER [1, 17] on modern texts carry over to historical material with OCR noise. The data for our experiments is the Quaero Old Press (QOP) corpus, 295 OCRed7 newspaper documents dating from December 1890 annotated according to the Quaero guidelines [28], split by us into train (1.45 million tokens) and dev/test (0.2 million tokens each). We only consider the outermost entity level (no nested entities or components) and train on the fine-grained subcategories (e.g., loc.adm.town) of the 7 main classes.

Modeling NER as a sequence labeling problem and applying Bi-LSTM networks is state of the art [1, 4, 17, 19]. Our experiments follow [1] in using character-based contextual string embeddings as input word representations, which make it possible to “better handle rare and misspelled words as well as model subword structures such as prefixes and endings”. These contextualized word embeddings rely on neural forward and backward character-level language models that we trained on a large collection (500 million tokens) of late 19th and early 20th century Swiss-French newspapers. In line with the literature, a Bi-LSTM NER model with an on-top CRF layer (Bi-LSTM-CRF) works best for our data.

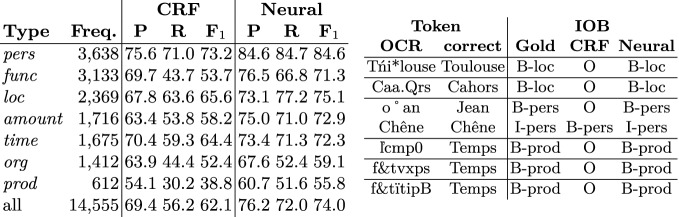

As a baseline system, which will also be provided for the shared task, we train a traditional CRF sequence classifier [18] using basic spelling features such as a token’s character prefix and suffix, the casing of the initial character and the presence of punctuation marks and digits. The baseline classifier shows fairly modest overall performance scores of 69.4% recall, 56.2% precision and an F1 of 62.1 (see Table 1).
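The kind of spelling features listed above can be sketched as a small function. The feature names and the affix length of 3 are assumptions made for illustration; the actual feature templates of the baseline are not given here.

```python
import string


def spelling_features(token, affix_len=3):
    """Basic spelling features for one token, as a flat dict.

    Feature names and `affix_len` are illustrative assumptions.
    """
    return {
        "prefix": token[:affix_len],
        "suffix": token[-affix_len:],
        # Casing of the initial character (False for an empty token).
        "init_upper": token[:1].isupper(),
        # Presence of ASCII punctuation marks and of digits anywhere in the token.
        "has_punct": any(ch in string.punctuation for ch in token),
        "has_digit": any(ch.isdigit() for ch in token),
    }


features = [spelling_features(tok) for tok in ["Genève,", "le", "12", "mars", "1890"]]
```

Because such features depend on the surface form only, an OCR error that alters a capital letter or inserts a spurious punctuation mark changes the feature vector directly, which is one plausible reason for the sensitivity of feature-based models to noise.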


Table 1. Results and examples from exploratory experiments on NER for French.

Trained and evaluated on the QOP data, the neural model relying on contextual string embeddings clearly outperforms the baseline classifier. As shown in Table 1, the Bi-LSTM-CRF model achieves a better F1 for all 7 entity types and surpasses the feature-based classifier by nearly 12 F1 points. Examples in Table 1 show that the CRF model frequently struggles with entities containing misrecognized special characters and/or punctuation marks. In many such cases, the Bi-LSTM-CRF classifier is capable of assigning the correct label. These results indicate that the new neural methods are ready to enable substantial progress in NER on noisy historical texts.

Conclusion

From the perspective of natural language processing (NLP), the HIPE evaluation lab provides the opportunity to test the robustness of existing NERC and EL approaches against challenging historical material and to gain new insights with respect to domain and language adaptation. From the perspective of digital humanities, the lab’s outcomes help DH practitioners in mapping state-of-the-art solutions for NE processing on historical texts, and in getting a better understanding of what is already possible as opposed to what is still challenging. Most importantly, digital scholars are in need of support to explore the large quantities of digitized text they currently have at hand, and NE processing is high on the agenda. Such processing can support research questions in various domains (e.g. history, political science, literature, historical linguistics), and knowing about its performance is crucial in order to make informed use of the processed data. Overall, HIPE will contribute to advancing the state of the art in semantic indexing of historical material, within the specific domain of historical newspaper processing, as in e.g. the “impresso - Media Monitoring of the Past” project8 and, more generally, within the domain of text understanding of historical material, as in the Time Machine Europe project9, which aims to apply AI technologies to cultural heritage data.

Acknowledgements

This CLEF evaluation lab is part of the research activities of the project “impresso – Media Monitoring of the Past”, for which the authors gratefully acknowledge the financial support of the Swiss National Science Foundation under grant number CR-SII5_173719. We would also like to thank C. Watter, G. Schneider and A. Flückiger for their invaluable help with the construction of the data sets, as well as R. Eckart de Castilho, C. Neudecker, S. Rosset and D. Smith for their support and guidance as part of the lab’s advisory board.

Footnotes

3 MUC, ACE, CoNLL, KBP, ESTER, HAREM, Quaero, GermEval, etc.

4 From the Swiss National Library, the Luxembourgish National Library, and the Library of Congress, respectively.

5 See the original Quaero guidelines: http://www.quaero.org/media/files/bibliographie/quaero-guide-annotation-2011.pdf.

6 HIPE is one of the official INCEpTION project use cases, see https://inception-project.github.io/use-case-gallery/impresso/.

7 [28] reports a character error rate of 5.09% and a word error rate of 36.59%.

Contributor Information

Joemon M. Jose, Email: joemon.jose@glasgow.ac.uk.

Emine Yilmaz, Email: emine.yilmaz@ucl.ac.uk.

João Magalhães, Email: jm.magalhaes@fct.unl.pt.

Pablo Castells, Email: pablo.castells@uam.es.

Nicola Ferro, Email: ferro@dei.unipd.it.

Mário J. Silva, Email: mjs@inesc-id.pt.

Flávio Martins, Email: flaviomartins@acm.org.

Maud Ehrmann, Email: maud.ehrmann@epfl.ch.

References

- 1.Akbik, A., Blythe, D., Vollgraf, R.: Contextual string embeddings for sequence labeling. In: Proceedings of the 27th International Conference on Computational Linguistics (COLING 2018), Santa Fe, New Mexico, USA, pp. 1638–1649. Association for Computational Linguistics (2018)

- 2.Bollmann, M.: A large-scale comparison of historical text normalization systems. In: Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), Minneapolis, Minnesota, pp. 3885–3898. Association for Computational Linguistics, June 2019

- 3.Borin, L., Kokkinakis, D., Olsson, L.: Naming the past: named entity and animacy recognition in 19th century Swedish literature. In: Proceedings of the Workshop on Language Technology for Cultural Heritage Data (LaTeCH 2007), pp. 1–8 (2007)

- 4.Chiu, J.P.C., Nichols, E.: Named entity recognition with bidirectional LSTM-CNNs. Trans. Assoc. Comput. Linguist. (TACL) 4, 357–370 (2016). doi: 10.1162/tacl_a_00104

- 5.Dinarelli, M., Rosset, S.: Tree-structured named entity recognition on OCR data: analysis, processing and results. In: Proceedings of the Eighth International Conference on Language Resources and Evaluation, Istanbul, Turkey, May 2012. European Language Resources Association (ELRA) (2012). ISBN 978-2-9517408-7-7

- 6.Ehrmann, M., Watter, C., Romanello, M., Clematide, S., Flückiger: Impresso Named Entity Annotation Guidelines, January 2020. doi: 10.5281/zenodo.3604227

- 7.Ehrmann, M., Colavizza, G., Rochat, Y., Kaplan, F.: Diachronic evaluation of NER systems on old newspapers. In: Proceedings of the 13th Conference on Natural Language Processing (KONVENS 2016), pp. 97–107. Bochumer Linguistische Arbeitsberichte (2016). https://infoscience.epfl.ch/record/221391?ln=en

- 8.Galibert, O., Rosset, S., Grouin, C., Zweigenbaum, P., Quintard, L.: Extended named entity annotation on OCRed documents : from corpus constitution to evaluation campaign. In: Proceedings of the Eighth Conference on International Language Resources and Evaluation, Istanbul, Turkey, pp. 3126–3131 (2012)

- 9.Galibert, O., Leixa, J., Adda, G., Choukri, K., Gravier, G.: The ETAPE speech processing evaluation. In: LREC, pp. 3995–3999. Citeseer (2014)

- 10.Goulart, R.R.V., de Lima, V.S., Xavier, C.C.: A systematic review of named entity recognition in biomedical texts. J. Braz. Comput. Soc. 17(2), 103–116 (2011). doi: 10.1007/s13173-011-0031-9

- 11.Grishman, R., Sundheim, B.: Design of the MUC-6 evaluation. In: Sixth Message Understanding Conference (MUC-6): Proceedings of a Conference Held in Columbia, Maryland (1995)

- 12.Van Hooland, S., De Wilde, M., Verborgh, R., Steiner, T., Van de Walle, R.: Exploring entity recognition and disambiguation for cultural heritage collections. Digit. Scholarsh. Humanit. 30(2), 262–279 (2015). doi: 10.1093/llc/fqt067

- 13.Kaplan, F., di Lenardo, I.: Big data of the past. Front. Digit. Humanit. 4 (2017). 10.3389/fdigh.2017.00012. https://www.frontiersin.org/articles/10.3389/fdigh.2017.00012/full. ISSN 2297–2668

- 14.Kim, J.-D., Ohta, T., Tateisi, Y., Tsujii, J.: Genia corpus-a semantically annotated corpus for bio-textmining. Bioinformatics 19(suppl 1), i180–i182 (2003). doi: 10.1093/bioinformatics/btg1023

- 15.Klie, J.-C., Bugert, M., Boullosa, B., de Castilho, R.E., Gurevych, I.: The inception platform: machine-assisted and knowledge-oriented interactive annotation. In: Proceedings of the 27th International Conference on Computational Linguistics: System Demonstrations, pp. 5–9 (2018)

- 16.Labusch, K., Neudecker, C., Zellhöfer, D.: BERT for named entity recognition in contemporary and historic German. In: Preliminary proceedings of the 15th Conference on Natural Language Processing (KONVENS 2019): Long Papers, Erlangen, Germany, pp. 1–9. German Society for Computational Linguistics & Language Technology (2019)

- 17.Lample, G., Ballesteros, M., Subramanian, S., Kawakami, K., Dyer, C.: Neural architectures for named entity recognition. In: Proceedings of the 2016 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, pp. 260–270. Association for Computational Linguistics, San Diego, June 2016

- 18.Lavergne, T., Cappé, O., Yvon, F.: Practical very large scale CRFs. In: Hajič, J., Carberry, S., Clark, S., Nivre, J. (eds.) Proceedings the 48th Annual Meeting of the Association for Computational Linguistics (ACL), Uppsala, Sweden, pp. 504–513. Association for Computational Linguistics, July 2010

- 19.Ma, X., Hovy, E.: End-to-end sequence labeling via bi-directional LSTM-CNNs-CRF. In: Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pp. 1064–1074. Association for Computational Linguistics, Berlin, August 2016

- 20.Makhoul, J., Kubala, F., Schwartz, R., Weischedel, R.: Performance measures for information extraction. In: Proceedings of DARPA Broadcast News Workshop, pp. 249–252 (1999)

- 21.Nadeau, D., Sekine, S.: A survey of named entity recognition and classification. Lingvisticae Investigationes 30(1), 3–26 (2007). doi: 10.1075/li.30.1.03nad

- 22.Neudecker, C., Antonacopoulos, A.: Making Europe’s historical newspapers searchable. In: 2016 12th IAPR Workshop on Document Analysis Systems (DAS), pp. 405–410. IEEE (2016)

- 23.Piotrowski, M.: Natural language processing for historical texts. Synth. Lect. Hum. Lang. Technol. 5(2), 1–157 (2012). doi: 10.2200/S00436ED1V01Y201207HLT017

- 24.Plank, B.: What to do about non-standard (or non-canonical) language in NLP. In: Proceedings of the 13th Conference on Natural Language Processing (KONVENS 2016). Bochumer Linguistische Arbeitsberichte (2016)

- 25.Rao, D., McNamee, P., Dredze, M.: Entity linking: finding extracted entities in a knowledge base. In: Poibeau, T., Saggion, H., Piskorski, J., Yangarber, R. (eds.) Multi-source, Multilingual Information Extraction and Summarization. Theory and Applications of Natural Language Processing. Springer, Heidelberg (2013)

- 26.Ritter, A., Clark, S., Etzioni, O., et al.: Named entity recognition in Tweets: an experimental study. In: Proceedings of the Conference on Empirical Methods in Natural Language Processing, pp. 1524–1534 (2011)

- 27.Rodriquez, K.J., Bryant, M., Blanke, T., Luszczynska, M.: Comparison of named entity recognition tools for raw OCR text. In: Jancsary, J. (ed.) Proceedings of KONVENS 2012, pp. 410–414. ÖGAI, September 20. http://www.oegai.at/konvens2012/proceedings/60_rodriquez12w/

- 28.Rosset, S., Grouin, C., Fort, K., Galibert, O., Kahn, J., Zweigenbaum, P.: Structured named entities in two distinct press corpora: contemporary broadcast news and old newspapers. In: Proceedings of the Sixth Linguistic Annotation Workshop The LAW VI, pp. 40–48. Association for Computational Linguistics, Jeju, July 2012

- 29.Sporleder, C.: Natural language processing for cultural heritage domains. Lang. Linguist. Compass 4(9), 750–768 (2010). doi: 10.1111/j.1749-818X.2010.00230.x

- 30.Terras, M.M.: The rise of digitization. In: Rikowski, R. (ed.) Digitisation Perspectives, pp. 3–20. SensePublishers, Rotterdam (2011)

- 31.Vilain, M., Su, J., Lubar, S.: Entity extraction is a boring solved problem: or is it? In: Human Language Technologies 2007: The Conference of the North American Chapter of the Association for Computational Linguistics; Companion Volume, Short Papers, NAACL-Short 2007, Rochester, New York, pp. 181–184. Association for Computational Linguistics (2007). http://dl.acm.org/citation.cfm?id=1614108.1614154