Abstract

Documentation burden has become an increasing concern as the prevalence of electronic health records (EHRs) has grown. The implementation of a new EHR is an opportunity to measure and improve documentation burden, as well as assess the role of the EHR in clinician workflow. Time-motion observation is the preferred method for evaluating workflow. In this study, we developed and tested the reliability of an interprofessional taxonomy for use in time-motion observation of nursing and physician workflow before and after a new EHR is implemented at a large academic medical center. Inter-observer reliability assessment sessions were conducted while observing both nurses and physicians. Four out of five observers achieved reliability in an average of 5.75 sessions. The taxonomy we developed was shown to be reliable for conducting workflow evaluation of both nurses and physicians, with a focus on time and tasks in the EHR.

Introduction

With the integral role that electronic health records (EHRs) play in healthcare today, the implementation of a new system promises to bring numerous changes to both the institution and the workflow of its clinical providers. Quality and clinical outcomes such as preventive screening rates and length of stay are commonly measured before and after an implementation of a new EHR1. In addition to potential fluctuations in quality and clinical outcomes, changes in clinician workflow are likely to occur and should be analyzed to address issues and realize potential gains in productivity and/or efficiency. As part of a larger study evaluating the implementation of a new commercial EHR at a large northeastern medical center, we are collecting time-motion data of both nurses and physicians before and after implementation to measure the impact on clinician workflow and documentation burden. Documentation burden can be understood as a combination of many factors, including time, low usability, low satisfaction, and high cognitive spending2,3. Time-motion observations can be used to understand the time component of documentation burden. Collecting time-motion data involves observing a person as they conduct tasks to measure how much time is spent on each task and the sequence of task performance that makes up a workflow4,5. Currently, most time-motion studies that have evaluated clinician workflow in the era of the EHR observed either physician or nursing workflow, but not both4,6–11. Because one of the overall aims of implementing a new EHR is to reduce documentation burden and increase efficiency for all clinician types, we developed an interprofessional task taxonomy to capture nursing and physician workflow. This taxonomy allows for accurate description of different workflows while obtaining a picture of pooled documentation burden among the patient care team.

While the prevalence of EHRs has grown, documentation burden among clinicians has become a challenge, with time spent on data entry of particular concern10,12–15. The Office of the National Coordinator for Health Information Technology (ONC) recently released a draft strategy for reducing clinician documentation burden, and its first overarching goal is to “reduce the effort and time required to record health information in EHRs for clinicians”16. EHR data entry requires that the clinician record clinical findings in the patient’s electronic record, while data viewing is the consumption of entered data. Evidence suggests that both nurses and physicians spend more time documenting after the implementation of an EHR15. Increased documentation times are associated with clinician burnout and low satisfaction3,17,18. In addition to time spent documenting, poor alignment of the EHR with optimal care delivery workflows is a known challenge3,16. The ONC names “better alignment of EHRs with clinical workflow” as the first strategy to improve EHR usability16. As we work to support healthcare’s Quadruple Aim – improving the care experience, improving population health, reducing cost, and improving the well-being of providers – it is important to understand the role that EHRs play in either hindering or aiding providers’ care delivery19,20.

Much research has been conducted to measure documentation burden and the role of EHRs in clinician workflow4,6–14. While many investigators are utilizing EHR log file data to measure documentation times, a limitation of this methodology is the lack of validation by direct observation14. For example, while the log files may indicate that a user spent thirty minutes of uninterrupted time viewing a note, it is possible that the user walked away while remaining logged in and delivered direct patient care, or was interviewing the patient while reading the note. Without direct observation, this patient-care time would be inaccurately reported as note-viewing time only. Time-motion observation serves as a method for corroborating findings from EHR log data and for incorporating tasks that occur outside of the EHR.

Much of the current literature surrounding EHR documentation burden is centered on physician documentation burden and has offered strategies involving redistribution of workload from physicians2,10,21,22, including adding order entry to the responsibility of “clinical staff”10. Such efforts should include consideration of existing documentation burden among other clinical roles, such as nursing. Studies have shown that nurses are also dissatisfied with the current state of EHRs and cite EHR inefficiencies as barriers to delivering best-practice patient care3,23. A potential unintended consequence of restricting EHR burden analysis to one clinical role is that low-satisfaction tasks like data entry will be passed from one role to another and improvement will not occur across the care team. By utilizing the same tasks while observing both nurses and physicians, we seek to understand the documentation burden of both clinical roles within the care team, as well as how they are both impacted after a new EHR is implemented.

Methods

In July and August of 2018, the primary author conducted a review of the literature on time-motion evaluations of clinician workflow, specifically surrounding the use of electronic health records (EHRs). We found that Ballerman and colleagues utilized a shared taxonomy to conduct time-motion observation of nurses, physicians, and respiratory therapists; however, their study was conducted before an EHR was implemented and thus did not have EHR-specific task names24. Therefore, we could not utilize their taxonomy for our purposes. Task names were extracted from relevant articles and brought to an interprofessional team of clinical researchers (RNs and MDs)4,6–9,25,26. Each task was evaluated for its relevance to nursing and physician workflow and then re-worded and re-defined in order to accurately describe the work executed by each profession. For example, in a previous study conducted by Yen and colleagues evaluating nursing multi-tasking on a medical surgical unit, a task named “Direct – Procedure” was defined as “RN performs treatment or procedure that cannot be delegated (top of license task)”4. We redefined this task so that procedures performed by both nurses and physicians could be captured under “Direct Care – Procedure”. Our task definition is, “Performs direct procedure (e.g. wound care, blood draw, CVC placement)”. Therefore, when a nurse performs wound care or phlebotomy, or a physician places a central venous catheter, our observers capture this action as a “Direct Care – Procedure” task. (Note: we are not observing clinicians in the operating room; therefore, operating procedures are out of scope.) With this approach, we can capture a pooled measurement of time spent performing procedures by both nurses and physicians before and after implementation, with respect to the differing types of procedures they are each licensed to carry out. EHR tasks such as data entry also often differ between nurses and physicians. Physicians typically enter their clinical findings into a note, while nurses may spend more time entering findings into flowsheets or care plans and synthesize them in shorter “end of shift” notes. Instead of employing multiple tasks, we capture each activity in our definition of “Data – Entering.” This allows for measurement of data entry across the two roles and increases the likelihood that observers will be able to reliably capture each of these tasks, because they have fewer tasks to choose from.

The task list evolved over a period of four months through team discussion, lab training, and clinical-setting training. After task alterations were made to accommodate nursing and physician workflow, any further alterations were made to reach a level of granularity that allowed us to reliably capture tasks in real time. The taxonomy was pared down from 43 to 38 final tasks, including “tasks” that capture the clinician’s location and communication activities (Table 1). Efforts were made to prioritize capture of data entry tasks, data consumption tasks, and patient care tasks, because much of the current sentiment around EHRs reflects frustration at their functional role as time-consuming data repositories, detracting from patient care, rather than as sources of clinical information delivery3,10,15,16. The taxonomy is organized into the three overarching categories – location (where the clinician is, physically), task (what the clinician is doing), and communication (conversations the clinician is having or listening to). These categories were developed and validated for time-motion evaluations by investigators at The Ohio State University Department of Biomedical Informatics in their creation of a web-based time-motion observation platform, TimeCat27. TimeCat allows the observer to document up to one active task in each category at any time during the observation so that the clinician’s location, what they are doing, and any conversations they may be having, simultaneously, are recorded. TimeCat version 3.9 was used in this study.

Table 1.

Final taxonomy

| Category | Name | Definition |
| --- | --- | --- |
| Task | Data – Entering | Writing any clinical note in EHR (e.g. progress note, procedure note, admission note, discharge note, result note in a lab test). Writing note or letter in EHR messaging system. Documenting in flowsheets, forms, admission/discharge navigators, patient education, plan of care, allergies, problem list, visit diagnoses, or other data capture functionality. |
| Task | Data – Viewing | Viewing information within notes, handoff/sign-out tab, flowsheets, problem lists, demographics, past medical/family history, results section, admission/discharge navigators, patient education, plan of care, reports, or other type of data display or visualization. |
| Task | Data – Viewing Patient Data Archive | Viewing information in patient data archive application window.* |
| Task | Direct Care – Physical Assessment/Exam | Performs direct physical assessment/exam. |
| Task | Direct Care – Procedure | Performs direct procedure (e.g. wound care, blood draw, CVC placement). |
| Task | Email (Desktop) | Sends email outside of EHR at desktop or laptop computer (not on a mobile phone). |
| Task | Handoff/Sign-out – Documenting | Adding notes and tasks to list of treatment/care goals for which the oncoming clinician will be responsible. Using EHR handoff or sign-out activity. |
| Task | Indirect Care | Activities associated with equipment search, arranging the unit, changing the patient to another bed within the same unit, “waiting time” to perform other tasks, or searching for patient, clinician, or other resource. |
| Task | Log into EHR | Logs in/signs in to the EHR system. |
| Task | Log out of EHR | Logs out/signs out of the EHR system. |
| Task | Med Administration | Barcode or non-barcode medication administration, medication infusion titration, scanning patient and medication/vaccine and completing documentation in EHR medication administration record (MAR) or immunization activity. |
| Task | Med Preparation | Obtaining medications from medication dispensing system, opening packages, priming IV tubing, flushing line. |
| Task | Med Reconciliation | Discontinuing and adding new medications in EHR medication reconciliation activity. |
| Task | Smartphone Clinical Messaging App | Non-phone-call use of designated clinical smartphone (including messaging, reference lookup, or EHR app). |
| Task | Mobile Phone | Non-phone-call use (e.g. texting or sending email), excluding designated smartphone clinical messaging app. |
| Task | Orders – Entering | Orders entered into EHR order entry activity. |
| Task | Pager – Sending/Viewing Msg. | Viewing or sending message, including through EHR paging or web-based paging. |
| Task | Paper Chart/Notes – Documenting | Writing on any paper components of the patient’s medical record (e.g. notes, letters, results, consents) or a printed list/schedule. |
| Task | Paper Chart/Notes – Viewing | Viewing any paper components of the patient’s medical record (e.g. notes, letters, results, consents) or a printed list/schedule. |
| Task | Patient List/Schedule – Viewing | Viewing EHR clinic schedule or patient lists of unit or service. |
| Task | Personal | Eating, personal computer use, using restroom. |
| Task | Reference Materials – Viewing | Using medical/clinical reference site (e.g. UpToDate28), including accessing info-button through EHR. |
| Task | Transcribing | Taking notes onto paper from EHR. |
| Task | Travel | Moving from one area to another. |
| Task | Use of Other CIS | Logging into another clinical information system (CIS) besides the EHR or viewing another system that does not require log in (e.g. telemetry). |
| Communication | Handoff/Sign-out | Discussing to-do list or plan of care with oncoming clinician, including events in previous shift and outstanding/upcoming goals. |
| Communication | Phone Talking | Conversation over phone, either in team area or on mobile device. |
| Communication | Rounding and Meetings | Planned uni- or interprofessional discussion of patient treatment and care goals. |
| Communication | CodeMDT | A code team / rapid response team (RRT) meeting has been initiated for a patient. |
| Communication | Verbal w/Patient/Family | Non-rounding discussion with patient/family (e.g. patient education, shared decision making, or live communication via phone interpreter). |
| Communication | Verbal w/Staff – Care-Related | Non-rounding discussion, not including family meetings. Includes non-rounding presentations to physicians. |
| Communication | Verbal w/Staff – Not Care-Related | Discussion that is not about patient or patient care. |
| Location | Hallway | Corridor in clinic or on unit between patient/exam rooms and team areas. |
| Location | Inaccessible Patient Room | Isolation room, patient under security, patient asked not to be observed. |
| Location | Patient Room | Inpatient room or outpatient exam room. |
| Location | Supply Room/Medication Administration Room | Designated room or area containing clinical supplies or where medications are administered (clinic). |
| Location | Team Area | Nurses station, designated huddle area, clinician workroom. |
| Location | Waiting Room | Open area where patients/family wait to be seen. |

*Viewing historical patient data will happen in this application after go-live of the new EHR.

After obtaining IRB approval, we evaluated the interprofessional taxonomy by conducting inter-observer reliability assessments (reliability sessions) on an acute care/step-down unit. The five observers in our study include two licensed and experienced registered nurses, two licensed and experienced medical doctors, and one medical student. Reliability sessions involved two observers following the same clinician, either a nurse or a physician, simultaneously documenting tasks conducted for one and a half to two hours. All activities, clinical and non-clinical, were observed throughout the session and observers entered patient rooms unless the patient was on isolation precautions or the patient or clinician requested otherwise. Each clinician consented to being observed before the session began. Observers did not converse with each other or the clinician during the observations and the data were compared for similarity afterward.

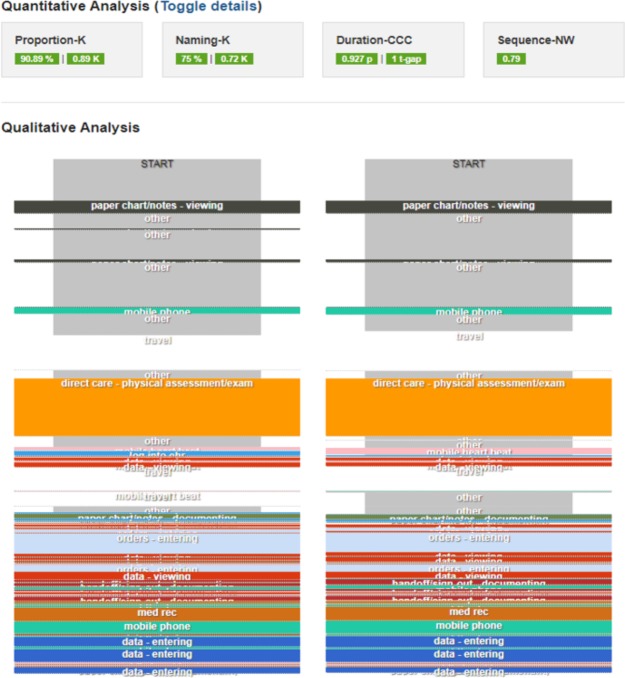

TimeCat includes a module for conducting reliability sessions that displays similarity scores in domains called Proportion, Naming, Duration, and Sequence4,29. It also displays a side-by-side visualization of the tasks captured by each observer throughout the observation (Figure 1). Each of the domains was developed and validated by Lopetegui and colleagues and is described in a publication pending review29. The Proportion domain assesses the proportion of time that the two observers document that a specific task is occurring29. For example, if in 10 seconds Observer 1 documents that the clinician is entering orders, and during 8 of those seconds Observer 2 documents that the clinician is entering orders, the Proportion agreement would be 0.8. These data are then used to calculate a Cohen’s kappa agreement score. The Naming domain evaluates each task and compares its name to the name of the task with which it has the most time overlap29. By measuring the proportion of tasks that share the most time overlap and also share the same name, this score reflects observer agreement on the task that is occurring at any given moment. This domain is also measured with a kappa agreement score. The Duration domain builds on the Naming domain by calculating a concordance correlation coefficient from tasks with the most time overlap, to reflect the agreement between observers on the length of the task29. The Sequence domain reflects agreement between observers on the sequence of tasks, regardless of their duration29. This is measured with a Needleman-Wunsch algorithm score; the algorithm was originally developed to align amino acid sequences29,30. Each domain and its score definition are outlined in Table 2, and an example of the output in TimeCat is shown in Figure 1. Domain scores are calculated for each of the three categories – Task, Location, and Communication.

Figure 1.

Example inter-observer reliability session, including scores in each reliability domain and side-by-side display of tasks captured by each observer (visualization generated by TimeCat)
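The Proportion example in the text can be reproduced with a short sketch. This is not TimeCat's implementation, which is not described here; it assumes each observer's record is a list of hypothetical `(start, end, task)` intervals in seconds, expands them to one label per second, and then computes the share of overlapping seconds for one task along with a standard Cohen's kappa across all seconds. The function names and the `"None"` idle label are illustrative assumptions.

```python
from collections import Counter


def per_second_labels(intervals, total_seconds, idle="None"):
    """Expand (start, end, task) intervals into one task label per second."""
    labels = [idle] * total_seconds
    for start, end, task in intervals:
        for t in range(start, min(end, total_seconds)):
            labels[t] = task
    return labels


def proportion_agreement(labels_a, labels_b, task):
    """Share of observer A's seconds on `task` that observer B also coded as `task`."""
    seconds_a = [i for i, label in enumerate(labels_a) if label == task]
    if not seconds_a:
        return 0.0
    shared = sum(1 for i in seconds_a if labels_b[i] == task)
    return shared / len(seconds_a)


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa: observed agreement corrected for chance agreement."""
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    count_a, count_b = Counter(labels_a), Counter(labels_b)
    expected = sum(count_a[k] * count_b[k] for k in count_a) / (n * n)
    if expected == 1.0:  # both observers used a single identical label throughout
        return 1.0
    return (observed - expected) / (1 - expected)


# The example from the text: Observer 1 codes 10 seconds of order entry,
# Observer 2 codes the same task for 8 of those seconds.
obs1 = per_second_labels([(0, 10, "Orders - Entering")], 10)
obs2 = per_second_labels(
    [(0, 8, "Orders - Entering"), (8, 10, "Data - Viewing")], 10
)
print(proportion_agreement(obs1, obs2, "Orders - Entering"))  # 0.8
```

Kappa is more informative than raw overlap when several tasks occur in a session, because it discounts the agreement that two observers would reach by chance given how often each of them uses each task label.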

Table 2.

Inter-observer reliability domains, measures, and definitions from Lopetegui et al29

| Domain | Measure | Definition |
| --- | --- | --- |
| Proportion | Kappa | Agreement that a specific task is occurring |
| Naming | Kappa | Agreement on the name of tasks that have the most time overlap |
| Duration | Concordance Correlation Coefficient | Agreement on the duration of tasks sharing the most time overlap and same name |
| Sequence | Needleman-Wunsch | Agreement on sequence of tasks |
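To make the Sequence measure concrete, the following is a minimal sketch of the textbook Needleman-Wunsch global alignment score applied to two observers' ordered task lists. The scoring values (match +1, mismatch -1, gap -1) and the normalization to a 0 to 1 range are assumptions chosen for illustration; the parameters and scaling that TimeCat uses are not stated in this paper.

```python
def needleman_wunsch_score(seq_a, seq_b, match=1, mismatch=-1, gap=-1):
    """Optimal global alignment score of two task sequences (dynamic programming)."""
    rows, cols = len(seq_a) + 1, len(seq_b) + 1
    score = [[0] * cols for _ in range(rows)]
    # Aligning a prefix against an empty sequence costs one gap per task.
    for i in range(1, rows):
        score[i][0] = i * gap
    for j in range(1, cols):
        score[0][j] = j * gap
    for i in range(1, rows):
        for j in range(1, cols):
            same = seq_a[i - 1] == seq_b[j - 1]
            diagonal = score[i - 1][j - 1] + (match if same else mismatch)
            score[i][j] = max(
                diagonal,                 # align the two tasks with each other
                score[i - 1][j] + gap,    # task only in observer A's sequence
                score[i][j - 1] + gap,    # task only in observer B's sequence
            )
    return score[-1][-1]


def normalized_sequence_agreement(seq_a, seq_b):
    """Hypothetical normalization: raw score over the best possible score, floored at 0."""
    longest = max(len(seq_a), len(seq_b))
    if longest == 0:
        return 1.0
    return max(0.0, needleman_wunsch_score(seq_a, seq_b) / longest)


# Observer B missed one task that Observer A recorded.
observer_a = ["Log into EHR", "Data - Viewing", "Orders - Entering", "Travel"]
observer_b = ["Log into EHR", "Data - Viewing", "Travel"]
print(needleman_wunsch_score(observer_a, observer_b))         # 2
print(normalized_sequence_agreement(observer_a, observer_b))  # 0.5
```

Because the alignment ignores how long each task lasted, two observers who record the same order of tasks with different durations still score perfectly in this domain, which matches the definition in Table 2.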

Our criteria for achieving reliability are that, within a minimum of three observations, the observer must obtain at least two with a score of at least 0.8 in the Proportion and Duration domains and a score of at least 0.7 in the Sequence domain, have a recent observation scoring over 0.65 in each domain, and demonstrate improvement over time. This definition is consistent with previous work delineating substantial reliability between observers31. Once an observer achieves reliability, they become a “gold standard” for training future observers and can begin conducting observations on their own. Because many time-motion studies measure and report inter-observer reliability inconsistently32, Lopetegui and colleagues outlined suggestions for setting inter-observer reliability goals according to the research question being asked, rather than aiming to achieve high scores in each domain29. Following these guidelines, we determined that the Proportion, Duration, and Sequence domains are most important for our reliability sessions. Our aims are twofold: first, to understand how much time nurses and physicians spend entering data, viewing data, and caring for patients, and second, to capture a picture of their workflows. The Proportion and Duration assessments test our ability to reliably meet the first aim by measuring agreement on task occurrence and duration. In addition to these assessments, the Sequence domain tests our ability to reliably meet the second aim by measuring agreement on the order of tasks. These scores, therefore, take priority over the Naming domain, which is of more importance for studies interested in answering the question, “What do clinicians do at any given time?”29, which is not a target of our study.
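The reliability criteria above can be expressed as a small check. This is a sketch under stated assumptions, not the procedure the study team used: it treats each session as a single set of Proportion, Duration, and Sequence scores (the paper does not say how the Task, Location, and Communication categories are combined), takes the "recent observation" to be the last session in the list, and interprets "improvement over time" as the last session's mean score exceeding the first session's. The class name, function names, and example scores are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SessionScores:
    """One reliability session's scores in the three prioritized domains."""
    proportion: float  # kappa
    duration: float    # concordance correlation coefficient
    sequence: float    # Needleman-Wunsch score


def meets_high_bar(session):
    """At least 0.8 in Proportion and Duration and at least 0.7 in Sequence."""
    return (
        session.proportion >= 0.8
        and session.duration >= 0.8
        and session.sequence >= 0.7
    )


def mean_score(session):
    return (session.proportion + session.duration + session.sequence) / 3


def meets_reliability_criteria(sessions):
    """Apply the criteria to sessions listed in chronological order."""
    if len(sessions) < 3:
        return False
    high_sessions = sum(meets_high_bar(s) for s in sessions)
    latest = sessions[-1]
    recent_ok = min(latest.proportion, latest.duration, latest.sequence) > 0.65
    # Assumption: improvement means the latest session beats the first on average.
    improving = mean_score(latest) > mean_score(sessions[0])
    return high_sessions >= 2 and recent_ok and improving


# Illustrative scores only; these are not values from the study.
example = [
    SessionScores(0.60, 0.70, 0.60),
    SessionScores(0.82, 0.85, 0.72),
    SessionScores(0.90, 0.88, 0.75),
]
print(meets_reliability_criteria(example))  # True
```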

Results

Twenty reliability sessions were completed. All sessions were conducted between 7am and 7pm, at varying times during this window. Three sessions were conducted on Monday, five on Tuesday, one on Wednesday, five on Thursday, and six on Friday. Four out of five of our observers achieved reliability in as few as four reliability sessions and as many as eight (average 5.75 sessions/observer). One of our sessions included perfect agreement on Communication Proportion, Communication Duration, and Communication Sequence. Three included perfect agreement on Location Duration and one included perfect agreement on Location Sequence.

Additionally, inter-observer reliability scores trended positively, regardless of whether the observer was following a nurse or a physician. For example, two of our observers achieved one reliable observation while observing a nurse, and then their second reliable observation while observing a physician. It might be hypothesized that the nurse observers would be biased toward a nursing interpretation of each task, and vice versa for the physicians and medical student. However, our taxonomy was shown to be reliably executable by nurses, physicians, and a medical student while observing both nurses and physicians on an acute care hospital unit. Table 3 displays the results of our reliability sessions.

Table 3.

Inter-observer reliability results by observer

| Observer | Reliability Sessions* | Communication PK | Communication CCC | Communication NW | Task PK | Task CCC | Task NW | Location PK | Location CCC | Location NW |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 1 (RN) | 6 | 0.43-1 | 0.155-1 | 0.62-1 | 0.67-0.95 | 0.727-0.998 | 0.58-0.94 | 0.71-0.98 | 0.719-1 | 0.72-1 |
| 2 (RN) | 5 | 0.4-0.81 | 0.367-0.988 | 0.71-0.82 | 0.58-0.85 | 0.753-0.989 | 0.66-0.79 | 0.85-0.97 | 0.719-1 | 0.74-0.86 |
| 3 (MD) | 8 | 0.6-1 | 0.682-1 | 0.68-1 | 0.58-0.95 | 0.816-0.998 | 0.67-998 | 0.63-0.98 | 0.789-1 | 0.7-1 |
| 4 (Medical Student) | 4 | 0.76-0.97 | 0.155-1 | 0.72-0.86 | 0.76-0.89 | 0.727-0.999 | 0.72-0.79 | 0.89-0.97 | 0.997-1 | 0.81-1 |
| 5 (MD) | Not yet achieved | 0.4-0.81 | 0.367-0.979 | 0.62-0.8 | 0.58-0.69 | 0.753-0.967 | 0.58-0.71 | 0.63-0.94 | 0.912-0.997 | 0.7-0.86 |

*Number of inter-observer reliability sessions the observer needed to conduct before meeting reliability criteria.

Each cell shows the range of scores across the observer's sessions. PK = Proportion Kappa, CCC = Duration Concordance Correlation Coefficient, NW = Sequence Needleman-Wunsch Score

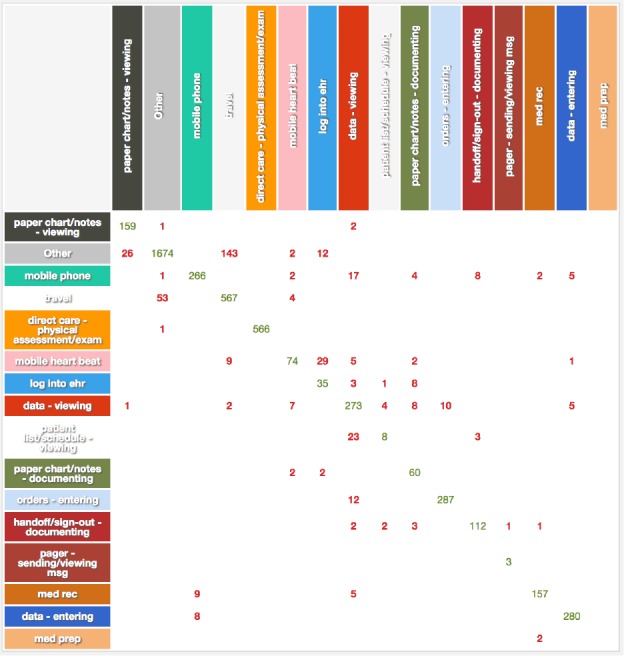

Figure 2 shows a task time agreement matrix for a reliable session. In the agreement matrix, tasks captured during the session are listed across the top of the grid and along the left of the grid. The number of seconds that both observers agreed on the task occurring is plotted across the grid in green. The numbers in red display the seconds that the observers disagreed on the occurring tasks and they are plotted at the intersection of the tasks documented by each observer.

Figure 2.

Example task time agreement matrix (generated by TimeCat)
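The agreement matrix in Figure 2 can be approximated from per-second task labels. The sketch below is illustrative and is not how TimeCat generates its figure: it assumes each observer's record has already been expanded to one label per second, counts how many seconds fall at each pair of (Observer 1 task, Observer 2 task), and then totals the seconds on and off the diagonal, which correspond to the green and red cells described above. Function names and the sample labels are hypothetical.

```python
from collections import defaultdict


def agreement_matrix(labels_a, labels_b):
    """Count seconds for each (observer A task, observer B task) pair."""
    matrix = defaultdict(int)
    for task_a, task_b in zip(labels_a, labels_b):
        matrix[(task_a, task_b)] += 1
    return dict(matrix)


def agreed_and_disagreed_seconds(matrix):
    """Total seconds on the diagonal (agreement) and off the diagonal (disagreement)."""
    agreed = sum(sec for (a, b), sec in matrix.items() if a == b)
    disagreed = sum(sec for (a, b), sec in matrix.items() if a != b)
    return agreed, disagreed


# Eight seconds of observation; the observers differ on a single second.
observer_1 = ["Data - Entering"] * 5 + ["Travel"] * 3
observer_2 = ["Data - Entering"] * 4 + ["Data - Viewing"] + ["Travel"] * 3

matrix = agreement_matrix(observer_1, observer_2)
for (task_1, task_2), seconds in sorted(matrix.items()):
    print(f"{task_1!r} vs {task_2!r}: {seconds} s")
print(agreed_and_disagreed_seconds(matrix))  # (7, 1)
```

Reading the off-diagonal cells shows which pairs of tasks observers tend to confuse, which is the kind of information a team could use when deciding whether two task definitions need to be merged or clarified.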

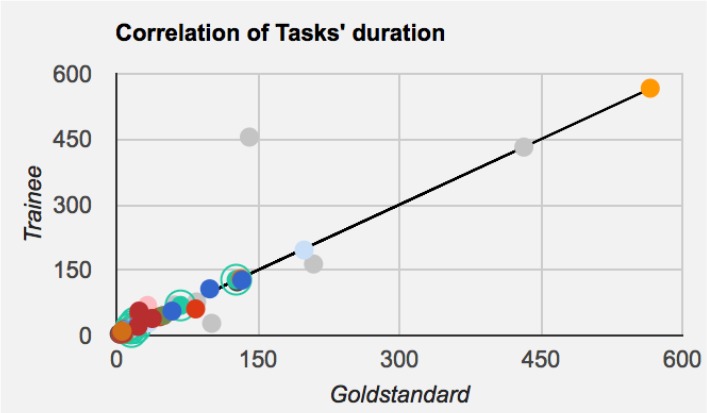

Figure 3 shows a plot of task duration correlation for a reliable session. In this plot, each task, designated by a colored dot, is plotted with an x-value denoting the number of seconds Observer 1 documented that the task occurred, and a y-value denoting the number of seconds Observer 2 documented that the task occurred. A 45-degree line is plotted as a reference point of perfect duration agreement. TimeCat generates these plots and labels observers as either “Gold standard” or “Trainee” to reflect the inter-observer reliability process of validating new observers.

Figure 3.

Example task duration correlation (generated by TimeCat)
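The 45-degree reference line in Figure 3 is what the concordance correlation coefficient measures against: unlike Pearson correlation, it penalizes any systematic shift away from perfect agreement. The sketch below uses Lin's standard formula, 2·cov(x, y) / (var(x) + var(y) + (mean(x) − mean(y))²), with population (divide by n) variances. Whether TimeCat uses this exact variant is an assumption, and the duration values are invented for illustration.

```python
def concordance_correlation(x, y):
    """Lin's concordance correlation coefficient for paired task durations."""
    if len(x) != len(y) or not x:
        raise ValueError("x and y must be non-empty and the same length")
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    var_x = sum((v - mean_x) ** 2 for v in x) / n
    var_y = sum((v - mean_y) ** 2 for v in y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y)) / n
    denominator = var_x + var_y + (mean_x - mean_y) ** 2
    if denominator == 0:  # every duration is identical for both observers
        return 1.0
    return 2 * cov / denominator


# Seconds each observer recorded for three matched tasks (illustrative values).
gold_standard = [10, 20, 30]
trainee_same = [10, 20, 30]
trainee_late = [20, 30, 40]  # every task recorded 10 seconds longer

print(concordance_correlation(gold_standard, trainee_same))  # 1.0
print(round(concordance_correlation(gold_standard, trainee_late), 3))  # 0.571
```

In the second case the two sets of durations are perfectly correlated in the Pearson sense, yet the coefficient drops to about 0.57 because every point sits above the 45-degree line.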

Discussion

The outcomes of our reliability sessions are significant because they demonstrate that, with careful consideration of task taxonomy and adequate training, observers from differing backgrounds can reliably capture tasks performed by a nurse using the same taxonomy as tasks performed by a physician, and vice versa. Additionally, our specific taxonomy has been shown to reliably encompass workflow among nurses and physicians using an EHR. Also, by conducting our inter-observer reliability sessions over varying times and days of the week, we can be confident that we tested reliability during the work hours in which we will collect real observation data. Future studies looking to evaluate shared documentation load and to capture a picture of clinician workflow may model this approach and utilize this taxonomy.

All care team members should be considered when looking to reduce EHR burden and improve alignment of the EHR with clinicians’ workflow. The interprofessional taxonomy developed here was shown to be reliable in our settings for conducting workflow observations of both nurses and physicians to answer research questions surrounding EHR tasks and their duration time. Further, our taxonomy proved to be reliably executable by observers with both nursing and medical backgrounds. Though one of our observers has not yet achieved reliability, that observer is receiving further training to help meet criteria.

The level of detail in our taxonomy allows us to reliably answer questions surrounding changes to time spent on patient care, data entry, and data viewing, as well as changes to general workflow. Using this data to accompany more granular detail of user behavior from EHR audit log data will help us identify areas where efficiency and/or workflow alignment is gained from the new EHR, as well as areas for improvement, for both nurses and physicians. We will be employing this taxonomy to conduct time-motion observations in both the hospital and ambulatory settings. Future work may expand the use of this taxonomy to study additional clinical roles such as social workers, care coordinators, and therapists. Incorporating even more members of the care team will further strengthen our understanding of shared documentation burden and the role of the EHR in clinicians’ workflow. Additionally, this time-motion and EHR audit log data could be used to quantify the care team documentation time required per patient.

Limitations

Though time-motion study of workflow is an ideal accompaniment to EHR audit log analysis, we acknowledge that it can be prohibitively resource intensive, and thus feasibility in all settings is limited. In consideration of resources and scope, we are only focused on day shift hours Monday through Friday, though we acknowledge that night shift and weekend workflow should be considered in future work. We also acknowledge that our task taxonomy might not be useful for answering questions such as, “How much time do clinicians spend multitasking during note documentation?” As stated previously, we found it prudent to sacrifice capturing this level of detail in our time-motion analysis in favor of reliably capturing the broader workflow. We plan to utilize the audit log data to analyze time and click navigation patterns surrounding EHR tasks such as note documentation. However, more granular time-motion studies may be conducted to focus on specific EHR tasks with taxonomy modification. Additionally, this study is primarily focused on evaluating the workflows of nurses and physicians, though, as mentioned previously, there are certainly other care team members integral to patient care who could benefit from a similar inclusive approach to workflow analysis using and extending this taxonomy, as needed.

Conclusion

In summary, we were able to validate an interprofessional taxonomy that is useful for reliable EHR-focused time-motion observation of nursing and physician workflow. Our work is significant because it demonstrates the potential to utilize one method to quantify burden and analyze workflow across different clinical roles, at a time when reducing EHR burden and improving EHR congruence with clinical workflow is a national priority16. If the lens is not widened to examine more than one member of the care team when looking to improve the role of the EHR in clinicians’ care, then myopic strategies may be implemented that do not ultimately improve care delivery, because some clinicians will continue to be inefficient and overburdened. By capturing the influence of EHRs among multiple care team members’ workflows and burden, we can focus on visionary re-design strategies of EHR tasks to promote care efficiencies that ultimately aid patient-centered care. This taxonomy can be used in future work looking to evaluate the role of the EHR in clinical workflow and documentation burden so that improvements can be made for all members of the care team.

Acknowledgements

Jessica Schwartz is a Pre-Doctoral Fellow funded by the Reducing Health Disparities through Informatics (RHeaDI) training grant funded by the National Institute for Nursing Research (T32NR007969). We would also like to thank the nurses and physicians who participated in this project.

References

- 1.Colicchio TK, Del Fiol G, Scammon DL, Facelli JC, Bowes WA, Narus SP. Comprehensive methodology to monitor longitudinal change patterns during EHR implementations: a case study at a large health care delivery network. J Biomed Inform. [Internet] 2018 Jul 1; doi: 10.1016/j.jbi.2018.05.018. [cited 2019 Feb 9];83:40–53. Available from: https://www.sciencedirect.com/science/article/pii/S1532046418301059MD010. [DOI] [PubMed] [Google Scholar]

- 2.Payne TH, Corley S, Cullen TA, Gandhi TK, Harrington L, Kuperman GJ, et al. Report of the AMIA EHR- 2020 Task Force on the status and future direction of EHRs. J Am Med Informatics Assoc. [Internet] 2015 Sep 1; doi: 10.1093/jamia/ocv066. [cited 2019 Mar 4];22(5):1102–10. Available from: https://academic.oup.com/jamia/article-lookup/doi/10.1093/jamia/ocv066. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Topaz M, Ronquillo C, Peltonen L-M, Pruinelli L, Sarmiento RF, Badger MK, et al. Nurse informaticians report low satisfaction and multi-level concerns with electronic health records: results from an international survey. AMIA Annu Symp Proc. [Internet] 2016;2016:2016–25. Available from: http://www.ncbi.nlm.nih.gov/pubmed/28269961. [PMC free article] [PubMed] [Google Scholar]

- 4.Yen P-Y, Kelley M, Lopetegui M, Rosado AL, Migliore EM, Chipps EM, et al. Understanding and visualizing multitasking and task switching activities: a Time- motion study to capture nursing workflow. AMIA Annu Symp Proc. [Internet] 2016;2016:1264–73. Available from: http://www.ncbi.nlm.nih.gov/pubmed/28269924. [PMC free article] [PubMed] [Google Scholar]

- 5.National Library of Medicine. Time and Motion Studies. [Internet] 1991 Available from: https://www.ncbi.nlm.nih.gov/mesh?term=Time+and+Motion+Studies. [Google Scholar]

- 6.Mamykina L, Vawdrey DK, Stetson PD, Zheng K, Hripcsak G. Clinical documentation: composition or synthesis? J Am Med Inform Assoc. [Internet] 2012;19(6):1025–31. doi: 10.1136/amiajnl-2012-000901. [cited 2019 Feb 2]; Available from: http://www.ncbi.nlm.nih.gov/pubmed/22813762. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Mamykina L, Vawdrey DK, Hripcsak G. How do residents spend their shift time? A time and motion study with a particular focus on the use of computers. Acad Med. [Internet] 2016 Jun;91(6):827–32. doi: 10.1097/ACM.0000000000001148. [cited 2019 Feb 2];. Available from: http://www.ncbi.nlm.nih.gov/pubmed/27028026. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8. Schenk E, Schleyer R, Jones CR, Fincham S, Daratha KB, Monsen KA. Impact of adoption of a comprehensive electronic health record on nursing work and caring efficacy. CIN Comput Informatics, Nurs. [Internet] 2018 Jul;36(7):331–9. doi: 10.1097/CIN.0000000000000441. [cited 2019 Feb 2]; Available from: http://www.ncbi.nlm.nih.gov/pubmed/29688905.

- 9. Schachner MB, Recondo FJ, Sommer JA, González ZA, García GM. Pre-implementation study of a nursing e-chart: how nurses use their time. In: MEDINFO 2015: eHealth-enabled Health. 2015.

- 10. Arndt BG, Beasley JW, Watkinson MD, Temte JL, Tuan W-J, Sinsky CA, et al. Tethered to the EHR: primary care physician workload assessment using EHR event log data and time-motion observations. Ann Fam Med. [Internet] 2017 Sep 11;15(5):419–26. doi: 10.1370/afm.2121. [cited 2019 Feb 7]; Available from: http://www.annfammed.org/lookup/doi/10.1370/afm.2121.

- 11. Read-Brown S, Sanders DS, Brown AS, Yackel TR, Choi D, Tu DC, et al. Time-motion analysis of clinical nursing documentation during implementation of an electronic operating room management system for ophthalmic surgery. AMIA Annu Symp Proc. [Internet] 2013;2013:1195–204. [cited 2019 Feb 9]; Available from: http://www.ncbi.nlm.nih.gov/pubmed/24551402.

- 12. Cox ML, Farjat AE, Risoli T, Peskoe S, Goldstein BA, Turner DA, et al. Documenting or operating: where is time spent in general surgery residency? J Surg Educ. [Internet] 2018;75(6):e97–106. doi: 10.1016/j.jsurg.2018.10.010. [cited 2019 Feb 9]; Available from: http://www.ncbi.nlm.nih.gov/pubmed/30522828.

- 13. Golob JF, Como JJ, Claridge JA. The painful truth. J Trauma Acute Care Surg. [Internet] 2016 May;80(5):742–7. doi: 10.1097/TA.0000000000000986. [cited 2019 Feb 9]; Available from: http://content.wkhealth.com/linkback/openurl?sid=WKPTLP:landingpage&an=01586154-201605000-00009.

- 14. Hripcsak G, Vawdrey DK, Fred MR, Bostwick SB. Use of electronic clinical documentation: time spent and team interactions. J Am Med Informatics Assoc. [Internet] 2011 Mar 1;18(2):112–7. doi: 10.1136/jamia.2010.008441. [cited 2019 Feb 9]; Available from: http://www.ncbi.nlm.nih.gov/pubmed/21292706.

- 15. Baumann LA, Baker J, Elshaug AG. The impact of electronic health record systems on clinical documentation times: A systematic review. Health Policy (New York). [Internet] 2018 Aug 1;122(8):827–36. doi: 10.1016/j.healthpol.2018.05.014. [cited 2019 Feb 16]; Available from: https://www.sciencedirect.com/science/article/pii/S0168851018301635?via%3Dihub.

- 16. Office of the National Coordinator for Health Information Technology. Strategy on reducing regulatory and administrative burden relating to the use of health IT and EHRs: draft for public comment. [Internet] 2018 [cited 2019 Mar 10]. Available from: https://www.healthit.gov/topic/usability-and-provider-burden/strategy-reducing-burden-relating-use-

- 17. Babbott S, Manwell LB, Brown R, Montague E, Williams E, Schwartz M, et al. Electronic medical records and physician stress in primary care: results from the MEMO Study. J Am Med Informatics Assoc. [Internet] 2014 Feb 1;21(e1):e100–6. doi: 10.1136/amiajnl-2013-001875. [cited 2019 Feb 16]; Available from: http://www.ncbi.nlm.nih.gov/pubmed/24005796.

- 18. Dyrbye LN, Shanafelt TD, Sinsky CA, Cipriano PF, Bhatt J, Ommaya A, et al. Burnout among health care professionals: a call to explore and address this underrecognized threat to safe, high-quality care. NAM Perspect. [Internet] 2017 Jul 5;7(7) [cited 2019 Feb 16]; Available from: https://nam.edu/burnout-among-health-care-professionals-a-call-to-explore-and-address-this-underrecognized-threat-to-safe-high-quality-care/

- 19. Bodenheimer T, Sinsky CA. From triple to quadruple aim: care of the patient requires care of the provider. Ann Fam Med. [Internet] 2014;12(6):573–6. doi: 10.1370/afm.1713. [cited 2019 Feb 16]; Available from: http://www.annfammed.org/content/12/6/573.full.pdf.

- 20. Berwick DM, Nolan TW, Whittington J. The triple aim: care, health, and cost. Health Aff. [Internet] 2008 May;27(3):759–69. doi: 10.1377/hlthaff.27.3.759. [cited 2019 Mar 7]; Available from: http://www.ncbi.nlm.nih.gov/pubmed/18474969.

- 21. Golob JF, Como JJ, Claridge JA. Trauma surgeons save lives-scribes save trauma surgeons! Am Surg. [Internet] 2018 Jan 1;84(1):144–8. [cited 2019 Feb 16]; Available from: http://www.ncbi.nlm.nih.gov/pubmed/29428043.

- 22. Warner JL, Smith J, Wright A. It’s time to wikify clinical documentation. Acad Med. [Internet] 2019 Jan 22; doi: 10.1097/ACM.0000000000002613. [cited 2019 Feb 18];1. Available from: http://www.ncbi.nlm.nih.gov/pubmed/30681451.

- 23. Roberts RJ, Alhammad AM, Crossley L, Anketell E, Wood L, Schumaker G, et al. A survey of critical care nurses’ practices and perceptions surrounding early intravenous antibiotic initiation during septic shock. Intensive Crit Care Nurs. [Internet] 2017 Aug;41:90–7. doi: 10.1016/j.iccn.2017.02.002. [cited 2019 Feb 16]; Available from: http://www.ncbi.nlm.nih.gov/pubmed/28363592.

- 24. Ballermann MA, Shaw NT, Mayes DC, Gibney RTN, Westbrook JI. Validation of the Work Observation Method By Activity Timing (WOMBAT) method of conducting time-motion observations in critical care settings: an observational study. BMC Med Inform Decis Mak. [Internet] 2011 May 17;11:32. doi: 10.1186/1472-6947-11-32. [cited 2019 Feb 21]; Available from: http://www.ncbi.nlm.nih.gov/pubmed/21586166.

- 25. Hakes B, Whittington J. Assessing the impact of an electronic medical record on nurse documentation time. Comput Inform Nurs. [Internet] 2008 Jul;26(4):234–41. doi: 10.1097/01.NCN.0000304801.00628.ab. [cited 2019 Mar 4]; Available from: https://insights.ovid.com/crossref?an=00024665-200807000-00012.

- 26. Yee T, Needleman J, Pearson M, Parkerton P, Parkerton M, Wolstein J. The influence of integrated electronic medical records and computerized nursing notes on nurses’ time spent in documentation. CIN Comput Informatics, Nurs. [Internet] 2012 Mar; doi: 10.1097/NXN.0b013e31824af835. [cited 2019 Mar 4];1. Available from: http://content.wkhealth.com/linkback/openurl?sid=WKPTLP:landingpage&an=00024665-931986770-99894.

- 27. Lopetegui M, Yen P-Y, Lai AM, Embi PJ, Payne PRO. Time Capture Tool (TimeCaT): development of a comprehensive application to support data capture for time-motion studies. AMIA Annu Symp Proc. [Internet] 2012 [cited 2019 Mar 4];2012:596–605. Available from: http://www.ncbi.nlm.nih.gov/pubmed/23304332.

- 28. 2019 UpToDate I. Evidence-Based Clinical Decision Support at the Point of Care | UpToDate. [Internet] [cited 2019 Mar 4]. Available from: https://www.uptodate.com/home.

- 29. Lopetegui M, Yen P-Y, Embi P, Payne P. Foundations for Studying Clinical Workflow: Development of a Composite Inter-Observer Reliability Assessment for Workflow Time Studies. Under Rev.

- 30. Needleman SB, Wunsch CD. A general method applicable to the search for similarities in the amino acid sequence of two proteins. J Mol Biol. [Internet] 1970 Mar 28;48(3):443–53. doi: 10.1016/0022-2836(70)90057-4. [cited 2019 Feb 28]; Available from: https://www.sciencedirect.com/science/article/pii/0022283670900574?via%3Dihub.

- 31. Viera A, Garrett J. Understanding Interobserver Agreement: The Kappa Statistic. 2005 [cited 2019 Feb 4]; Available from: http://www1.cs.columbia.edu/~julia/courses/CS6998/Interrater_agreement.Kappa_statistic.pdf.

- 32. Lopetegui MA, Bai S, Yen P-Y, Lai A, Embi P, Payne PRO. Inter-observer reliability assessments in time-motion studies: the foundation for meaningful clinical workflow analysis. AMIA Annu Symp Proc. [Internet] 2013;2013:889–96. [cited 2019 Feb 18]; Available from: http://www.ncbi.nlm.nih.gov/pubmed/24551381.