Abstract

Background

Current evaluation methods are mismatched with the speed of health care innovation and needs of health care delivery partners. We introduce a qualitative approach called the lightning report method and its specific product—the “Lightning Report.” We compare implementation evaluation results across four projects to explore report sensitivity and the potential depth and breadth of lightning report method findings.

Methods

The lightning report method was refined over 2.5 years across four projects: team‐based primary care, cancer center transformation, precision health in primary care, and a national life‐sustaining decisions initiative. The novelty of the lightning report method is the application of Plus/Delta/Insight debriefing to dynamic implementation evaluation. This analytic structure captures Plus (“what works”), Delta (“what needs to be changed”), and Insights (participant or evaluator insights, ideas, and recommendations). We used structured coding based on implementation science barriers and facilitators outlined in the Consolidated Framework for Implementation Research (CFIR) applied to 17 Lightning Reports from four projects.

Results

Health care partners reported that Lightning Reports were valuable and easy to understand, and their comments implied that the reports supported “corrective action” for implementations. Comparative analysis revealed cross‐project emphasis on the domains of Inner Setting and Intervention Characteristics, with themes of communication, resources/staffing, feedback/reflection, alignment with simultaneous interventions and traditional care, and team cohesion. In three of the four assessed projects, the largest proportion of coding was to the clinic‐level domain of Inner Setting, ranging from 39% for the cancer center project to a high of 56% for the life‐sustaining decisions project.

Conclusions

The lightning report method can fill a gap in rapid qualitative approaches and is generalizable with consistent but flexible core methods. Comparative analysis suggests it is a sensitive tool, capable of uncovering differences and insights in implementation across projects. The Lightning Report facilitates partnered evaluation and communication with stakeholders by providing real‐time, actionable insights in dynamic health care implementations.

Keywords: evaluation, implementation science, methods, patient centered, qualitative, rapid synthesis

List of abbreviations

- NIH: National Institutes of Health

- PDSA: Plan‐Do‐Study‐Act

- CBPR: community‐based participatory research

- CFIR: Consolidated Framework for Implementation Research

- PC 2.0: Primary Care 2.0

1. BACKGROUND

Improving health care delivery is an increasingly important issue.1 As such, health care delivery models, workflows, and payment systems are rapidly evolving and being tested in and across health care organizations. Current standard methods of research and evaluation, however, are mismatched with the speed of innovation and the needs of health care partners.2 Appropriate evaluation of such rapid evolution requires methods and tools that facilitate prompt communication with stakeholders while maintaining methodological rigor.

Some models for learning health systems (LHSs) address the need for timely feedback, although these approaches almost exclusively focus on quantitative patient data or process metrics. For instance, the original scope of an LHS was focused on real‐time use of patient data to support continuous improvement. Similarly, Plan‐Do‐Study‐Act (PDSA) cycles intentionally connect process metrics to planning and action in rapid iterations.3, 4

While continuous quality approaches such as PDSA cycles address whether an approach or intervention is working as intended or not, more timely feedback is still needed to inform how and why an effort is successful/unsuccessful. In worst‐case scenarios, tardiness of feedback in traditional qualitative methods can impair partnerships.5 Conversely, building trust and ensuring strong communication channels with health care partners can support a more embedded relationship for researchers.6 Such third party partnered evaluations add value to quality improvement efforts by providing a trusted external perspective. Researchers risk stunting potential important adaptations to interventions, however, unless they align timelines for feedback with their health care delivery partners' information needs.

In the context of dynamic systems, such as health care delivery, qualitative methods are best suited to inform implementation and intervention adaptations. Implementation science, which includes qualitative methods, focuses on understanding the underlying mechanisms of successful change in health care—insights that foundationally support the goals of LHSs and continuous quality improvement PDSA cycles. Outcomes such as acceptability, feasibility, and adaptation focus evaluation efforts on informing next steps related to spread, scaling, and/or adapting to better fit the context.7

Leveraging implementation science constructs and approaches, we developed a high‐quality qualitative rapid analytic method and reporting tool to address the need for timely and on‐going feedback to support adaptation and redirection for research/implementation partners. Here we introduce the lightning report method—including both the analytic approach, and the specific “Lightning Report” one‐page product—and describe variation across projects. First we delineate ideal steps of the method in one context (eg, Primary Care 2.0, a team‐based care clinic redesign8). We then assess stakeholders' initial perceptions of the value of the Lightning Report. Finally, we compare implementation evaluation results across four projects based on Lightning Report content, in order to explore method sensitivity and the potential depth and breadth of lightning report method findings. The purpose of the comparison is to demonstrate the range of applicability, identifying implementation science domains likely to be captured with the lightning report method in evaluation settings independent of the specific project, and highlighting domains that might be most project dependent. We hope that our shared learnings will inspire others to use and improve the method over time. We envision the lightning report method and resulting Lightning Report products facilitating future cross‐project comparisons, a core need as implementation science moves from describing the impact of setting variation in implementation to predicting and managing for that impact.

2. METHODS

2.1. The lightning report method

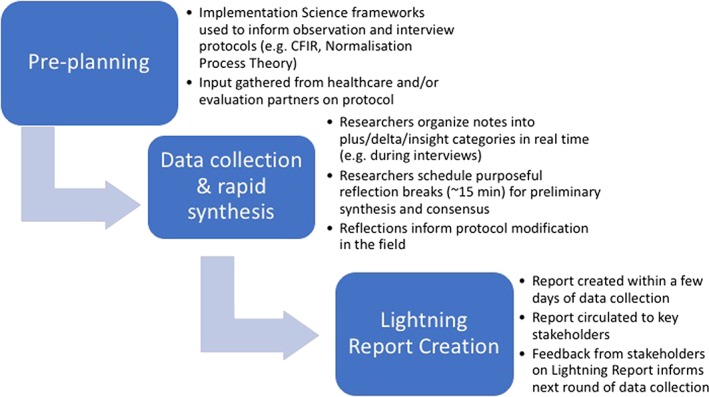

The lightning report method is a type of rapid assessment procedure (RAP),9 similar to rapid approaches that can be used in evaluation of health care implementation10 or embedded in pragmatic clinical trials.11 The lightning report method differs from other RAP approaches in that it includes a specific format for delivery of results, the Lightning Report. The lightning report method involves three basic stages: preplanning, data collection and synthesis, and report creation and communication. This process is illustrated in Figure 1. The following sections describe the ideal lightning report method based on our experience with Primary Care 2.0, where clinic observations and onsite semistructured interviews were primary sources of data.

Figure 1. Lightning report method for clinic observation with concurrent semistructured interviews

2.1.1. Step 1: Preplanning with embedded subject matter expert partners

We create draft data collection protocols (ie, for interview, focus group, and/or observation) based on implementation outcomes of interest for each project.12 For example, in the life‐sustaining decisions project, we used the Consolidated Framework for Implementation Research (CFIR) interview guide tool13 to identify interview questions related to the CFIR construct of Available Resources, which was perceived to be a potential barrier to successful intervention, and thus of interest to national partners. The CFIR is a “meta‐theoretical” framework based on a systematic review of reports of both theory and evidence, synthesized into an overarching typology of 37 implementation constructs.14 As such, it is a tool for identifying facilitators and barriers of implementation success with a multilevel framework that includes factors of the intervention, clinic, ecosystem (eg, health care system and national health policies), individuals involved, and process. To support its use, the CFIR website provides extensive documentation, including potential interview questions corresponding to constructs (http://www.cfirguide.org).

Once protocols are created within the evaluation team, they are circulated to subject matter experts and/or health care partners, generally followed by a brief conversation to approve, adapt, or tailor the protocol. This partnered approach is borrowed in part from best practices of community advisory boards in community‐based participatory research (CBPR). Best practices of CBPR include involving partners at all stages: problem identification, design, methods, analysis, and dissemination.15

2.1.2. Step 2: Iterative data collection and rapid synthesis

The novelty of the lightning report method in data collection and synthesis of findings is the application of Plus/Delta/Insight debriefing. This analytic structure captures Plus (“what works”), Delta (“what needs to be changed”), and Insights (participant or evaluator insights, ideas, and recommendations). The Plus/Delta component is used in both business management16 and educational pedagogy17 as a way to foster reflection by participants at all levels of an organization in order to support a continuous and iterative culture of improvement. A simple example of the Plus/Delta process in business would be asking a committee what they liked about any/all aspects of the meeting (the “Plus”) and what they did not like or would have changed about it (“Delta”).

The Insight component, which simply reflects any insights accumulated along the way, is based on experience in the context of CBPR piloted by Youth Research Evaluation and Planning.18

Trained qualitative researchers use Plus/Delta/Insight sections in their notes throughout their data collection (eg, interviews and/or observations of meetings or clinic flow) to capture researcher perceptions and stakeholder comments about what is working (Plus) and what is not working or needs to change (Delta). The additional “Insight” category includes novel ideas or potential solutions drawn from interviewees and observations, as well as independent qualitative researcher recommendations. These notes are captured on paper or directly in secure electronic sources (eg, an Excel file saved in a secure Box folder that is HIPAA‐ and PHI‐compliant), and structured around Plus/Delta/Insight to reveal themes, facilitators and barriers to implementation, and unexpected findings.

To increase the validity of data collection and analysis, ideally two researchers participate in data collection, discussing notes and memos in regular reflection breaks or post‐interview debriefing sessions so that material is synthesized continuously, in parallel with data collection. This on‐site analysis/synthesis echoes qualitative data analysis approaches of consensus coding and constant comparison. In line with consensus and constant comparison, we intentionally emphasize areas of disagreement in our reflection breaks to limit bias, an approach that has been shown to produce better consensus outcomes.19

For example, data collection for a primary care transformation project consisted of quarterly, single‐day, two‐person site visits to the implementation clinics. Interviews were captured in‐clinic using a convenience sampling approach that aimed to gather perspectives from each role within the clinic. The two evaluators used three short reflection breaks (10‐30 min) to collaboratively debrief, synthesize initial results, and enhance analysis validity. In the life‐sustaining decisions project, interviews were conducted by phone with two researchers who routinely debriefed using the Plus/Delta/Insight framework after interviews. These debrief notes were compiled after multiple interviews (ie, >4) in preparation for Lightning Report creation.

An alternative way to conceptualize the lightning report method would be to view Plus/Delta/Insight as an a priori coding framework, where ideally independent researchers “code” observations and interview utterances as Plus/Delta/Insight while those observations and interviews are happening, or else immediately afterwards. One minor but impactful innovation of the lightning report method is that instead of note‐taking with a temporal structure (noting what was observed first at the top of any page of research notes), this method “codes” notes immediately by placing any notes/observations/quotes into the Plus/Delta/Insight framework from the start, effectively merging note‐taking with a first coding phase. During debriefs these independently coded notes are compared, contrasted, discussed, and queried, echoing rigorous constant comparison and consensus analytic approaches. Some of the second level of analysis/synthesis is conducted in lightning report debriefs, but this culling and organizing of results occurs more fully through iterative drafts of the Lightning Report product as they are reviewed and discussed by larger groups with more diverse stakeholders.
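The idea of merging note‐taking with a first coding phase can be illustrated with a small data structure. The following is a minimal sketch, not the authors' tooling: the `Note` class, the `group_for_debrief` helper, the researcher labels, and the example note texts are all hypothetical and serve only to show how notes filed directly under Plus/Delta/Insight can be laid side by side for a two‐researcher debrief.

```python
from collections import defaultdict
from dataclasses import dataclass

# The three a priori categories named in the text.
CATEGORIES = ("Plus", "Delta", "Insight")


@dataclass(frozen=True)
class Note:
    """One field note, filed under a category at the moment it is written."""
    category: str    # one of CATEGORIES
    text: str        # observation, paraphrase, or quote
    researcher: str  # hypothetical label for who recorded the note

    def __post_init__(self):
        # Reject anything outside the Plus/Delta/Insight framework.
        if self.category not in CATEGORIES:
            raise ValueError(f"unknown category: {self.category!r}")


def group_for_debrief(notes):
    """Group notes by category, then by researcher, for side-by-side comparison."""
    grouped = {category: defaultdict(list) for category in CATEGORIES}
    for note in notes:
        grouped[note.category][note.researcher].append(note.text)
    return {category: dict(by_researcher) for category, by_researcher in grouped.items()}


# Hypothetical notes from two researchers (illustrative only, not study data).
notes = [
    Note("Plus", "Huddles keep the team aligned", "A"),
    Note("Delta", "Staffing gaps on Fridays", "A"),
    Note("Delta", "Unclear escalation path", "B"),
    Note("Insight", "Pilot a shared task board", "B"),
]
debrief = group_for_debrief(notes)
```

Grouping by category first and researcher second mirrors the debrief described above, where independently coded notes are compared and contrasted within each of the three headings.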

2.1.3. Step 3: Lightning Report creation

Initial drafts of the Lightning Report are ideally created within a few days of data collection, or generated on a regular basis (eg, bimonthly for the cancer center transformation initiative). This rapid turn‐around is facilitated by the Plus/Delta/Insight structure used for notes and team synthesis, which is mirrored in the Lightning Report. Report components include an executive summary, status of data collection (ie, interview counts and/or observation time), and key findings that reflect Plus/Delta/Insight synthesis: what is going well with implementation, improvement opportunities and what needs to change, and suggested actions (“Insights”) (see Figure 2).

Figure 2. Example Lightning Report

Prior to finalizing the report, we present it in draft form to our evaluation team, including partner stakeholders when appropriate, to ensure alignment in understanding and ideal communication of potentially sensitive issues before disseminating to a broader stakeholder audience. Refinements based on stakeholder input are typically made within a week of report circulation. Once the report is finalized, it is circulated more widely to our clinical and programmatic partners and stakeholders.

Theories of health literacy20 and design21 intentionally inform the language, look, and feel of the Lightning Report. To make the report accessible to our many stakeholders, including non‐researchers, clinic implementers, and staff, we intentionally resist jargon and choose straightforward, non‐technical language when possible. We also leverage graphic design elements to make the report attractive to stakeholders, with the intent of increasing reach and dissemination of our findings.

2.2. Setting and human subjects procedures

The lightning report method was piloted, adapted, and refined by our team over a period of 2.5 years, across four distinct projects that evaluated the implementation of health care–based innovations. Projects were wide‐ranging in topic and clinical setting, and included: (a) a primary care team‐based care initiative, Primary Care 2.0 (PC 2.0)8, 22, 23; (b) a cancer center transformation initiative24, 25; (c) a pilot precision health initiative in primary care, Humanwide26; and (d) a national Veterans Health Administration goals of care initiative, the Life‐Sustaining Treatment Decisions Initiative, led by the National Center for Ethics in Health Care.27 These four projects are a convenience sample of projects that the Stanford School of Medicine Evaluation Sciences Unit engaged with between 2016 and 2018. They represent a wide range of evaluations in terms of scope/scale (from 50‐patient pilot to nationwide rollout) and stage (preliminary intervention scoping to post‐sustainability spread).

In each setting, the lightning report method was embedded in a mixed‐methods implementation and outcomes evaluation. As quality improvement projects, these initiatives were reviewed by the Stanford and VA ethics review boards and exempted from human subjects oversight. Regardless of exemption, our team adhered to best practices in human subject protection including: communicating with interview or focus group participants that participation was optional; retaining physical and digital materials (notes, recordings, etc) in secure locations; and protecting the confidentiality of participants by not disclosing identifiable information in our conversations with stakeholders or through our Lightning Reports.

2.3. Assessment of stakeholders' perceptions of the value of the Lightning Report

We assessed stakeholder perceptions of the value of the Lightning Report with a confidential feedback survey. We used open‐ended questions to find out what stakeholders liked best about Lightning Reports and what they would recommend as suggestions or feedback to make the reports better. In addition, we used a 4‐point Likert scale (ie, disagree strongly, disagree, agree, agree strongly) to assess specific aspects of the Lightning Report: value to the stakeholder, ease of comprehension, desire to share with colleagues, ability to address important issues, ability to change work of stakeholder or their teams, and influence of the report on initiatives and implementation.

2.4. Comparative analysis of Lightning Reports across projects

Our objective in performing a comparative analysis across four projects was to document the pattern and variation of implementation science constructs reflected using the lightning report method and to explore context‐dependent factors impacting each project's implementation. In our analysis, we included all Lightning Reports (n = 17) created by our team in the past two and a half years since we developed the approach. We used structured coding based on the CFIR.13

Coding of Lightning Reports using CFIR was conducted to consensus by two researchers: a trained qualitative expert with a PhD in linguistics (CBJ) and a community‐ and clinic‐focused research associate with a background in public health (NS). Specifically, we independently double‐coded two of 17 Lightning Reports (12%). This independent coding was reviewed jointly and adjusted to consensus. The rest of the Lightning Reports (n = 15) were coded by one researcher and reviewed by the other; again, differences were resolved to consensus through conversation. We treated each subconstruct of CFIR, such as “compatibility” (a subconstruct of “Implementation Climate”), as an independent code.
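The joint review of independently coded reports can be pictured as listing every excerpt where the two coders' code sets differ, so that only those excerpts need discussion. The sketch below is an illustration under stated assumptions, not the procedure or software used in this study: the `disagreements` function, the excerpt identifiers, and the example code assignments are hypothetical, although the code labels are CFIR construct names that appear in Table 2.

```python
def disagreements(coder_a, coder_b):
    """Return excerpts whose code sets differ, with the codes unique to each coder.

    Both arguments map an excerpt identifier to a collection of code labels.
    An excerpt coded by only one coder is treated as having no codes from the other.
    """
    diffs = {}
    for excerpt_id in sorted(set(coder_a) | set(coder_b)):
        codes_a = set(coder_a.get(excerpt_id, ()))
        codes_b = set(coder_b.get(excerpt_id, ()))
        if codes_a != codes_b:
            diffs[excerpt_id] = {
                "only_a": sorted(codes_a - codes_b),
                "only_b": sorted(codes_b - codes_a),
            }
    return diffs


# Hypothetical double-coded excerpts (identifiers and assignments are illustrative).
coder_a = {
    "R1-01": {"Available Resources"},
    "R1-02": {"Culture", "Learning Climate"},
    "R1-03": {"Complexity"},
}
coder_b = {
    "R1-01": {"Available Resources"},
    "R1-02": {"Culture"},
    "R1-03": {"Relative Advantage"},
}
to_discuss = disagreements(coder_a, coder_b)
```

Here `to_discuss` holds only excerpts `R1-02` and `R1-03`; excerpt `R1-01` is omitted because both coders assigned the same code.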

We explored variation at the level of CFIR domains (ie, Inner Setting or clinic, Intervention Characteristics, Outer Setting or health care system, Process of implementation, Characteristics of Individuals) to assess whether coding emphasis varied by project.

3. FINDINGS

3.1. Description of data and stakeholders' assessments of the lightning report method and Lightning Report products

Table 1 provides an overview of the four projects and Lightning Reports with respect to source of data (interviews or observations), number of interviewees, and project stage at time of data collection. Two projects produced six reports each; the other two projects produced three and two Lightning Reports, respectively. Data analyzed and reported in a given Lightning Report ranged from a minimum of four phone interviews conducted across multiple weeks to 26 interviews plus 5 days of observation conducted over 3 months.

Table 1. Projects and topics for Lightning Reports

| Topic | Data source type | Participants | Project stage |
|---|---|---|---|
| Team‐based care (Primary Care 2.0 ‐ PC 2.0) | | | |
| Provider and staff perceptions | interview | 19 | implementation |
| Patient perceptions of TBC | interview | 6 | implementation |
| Adaptations and drift from original design | observation | n/a | sustainability |
| Spread readiness at future clinics | observation and interviews | 13 | pre‐implementation |
| Perceptions of PC 2.0 at 1.5 years | observation and interviews | 16 | maintenance |
| Perceptions of plan for system‐wide spread | observation and interviews | 26 | pre‐implementation |
| Cancer care transformation | | | |
| Care navigation ‐ care navigator perspective | focus group, qualitative survey data | 4 | early implementation |
| Care navigation ‐ clinical perspective and observations of work | interview and observation | 8 | early implementation |
| Implementation check on: care navigation, patient education, PathWell | interview and observation | 6 | implementation |
| Care navigation | observation | n/a | implementation |
| GI pilot (nurse navigation and eHealth) | observation and interviews | 7 | implementation |
| Patient interviews/care coordination | interview | 7 | implementation |
| National goals of care conversations initiative (Goals of care ‐ GoC) | | | |
| Pilot implementation and sustainability | interview | 7 | retrospective implementation |
| Perceptions and reflections on GoC pilot implementation and sustainability | interview | 7 | retrospective implementation |
| Launch and implementation of national GoC | interview | 4 | early implementation |
| Precision health in primary care pilot (PH) | | | |
| Patient perceptions of PH | focus group | 7 | pre‐implementation |
| Patient perceptions of PH | interview | 14 | early implementation |

Six of seven stakeholders/health care partners responded to our survey. Most stakeholders reported that Lightning Reports were valuable (five of six), easy to understand (five of six), shared with colleagues (five of five), addressing important issues (six of six), and influencing initiative implementation (four of six). Suggestions and areas for growth included wanting Lightning Reports that reflected more data (“larger number of completed interviews”), wanting a report format that could more easily highlight and compare findings across sites, and wanting validation of the lightning report method against systematic coding of full transcripts. Notably, even the project that used the most traditional method for data collection and analysis (ie, a single researcher conducting interviews and coding transcripts for themes, for the cancer center transformation initiative) received high praise for producing Lightning Reports: “these qualitative … reports were very important.” By contrast, this same project reported that before Lightning Reports, they “got so little information during the first 3 to 4 years that we were unable to take corrective action that would help improve the [project].”

3.2. Comparison of implementation barrier and facilitator foci across projects (CFIR coding)

Table 2 presents the number of excerpts coded to each of the CFIR constructs across all Lightning Reports, in total and by project. There were a total of 344 coded excerpts; the largest proportion, 44% (n = 150), related to the CFIR domain of Inner Setting (eg, the implementation clinic) and the next largest related to Intervention Characteristics (24%; n = 83). Within the domains, the largest numbers of excerpts were coded to the following constructs or subconstructs: “Patient Needs & Resources” (n = 44), “Networks & Communications” (n = 34, eg, themes of communication), “Available Resources” (n = 29, eg, staffing), “Learning Climate” (n = 19, eg, lack of feedback), “Complexity” (n = 16, eg, alignment with other simultaneous interventions), “Relative Advantage” (n = 15, eg, benefit compared with traditional care), and “Culture” (n = 15, eg, perceived positive impact of team cohesion).

Table 2. Implementation science construct frequencies in Lightning Reports across four projects: Primary Care 2.0 (PC 2.0), Goals of Care (GoC), cancer center transformation (Cancer), and Precision Health (PH), coded with the Consolidated Framework for Implementation Research (CFIR)

| | Total coded (n) | PC 2.0 (n) | GoC (n) | Cancer (n) | PH (n) |
|---|---|---|---|---|---|
| Total Lightning Reports | 17 | 6 | 3 | 6 | 2 |
| Total Constructs coded* | 344 | 139 | 82 | 87 | 36 |
| CFIR Domains and Constructs** | | | | | |
| INNER SETTING | 150 | 64 | 46 | 34 | 6 |
| Networks & Communications | 34 | 16 | 6 | 10 | 2 |
| Available Resources | 29 | 13 | 14 | 2 | 0 |
| Learning Climate | 19 | 9 | 8 | 2 | 0 |
| Culture | 15 | 7 | 8 | 0 | 0 |
| Compatibility | 14 | 8 | 2 | 4 | 0 |
| Access to Knowledge & Information | 14 | 1 | 0 | 10 | 3 |
| Leadership Engagement | 12 | 9 | 3 | 0 | 0 |
| Relative Priority | 7 | 1 | 4 | 2 | 0 |
| Goals and Feedback | 5 | 0 | 0 | 4 | 1 |
| INTERVENTION CHARACTERISTICS | 83 | 39 | 9 | 18 | 17 |
| Complexity | 16 | 3 | 1 | 8 | 4 |
| Relative Advantage | 15 | 9 | 2 | 2 | 2 |
| Evidence Strength & Quality | 13 | 7 | 1 | 2 | 3 |
| Design Quality & Packaging | 13 | 4 | 2 | 2 | 5 |
| Adaptability | 11 | 9 | 0 | 2 | 0 |
| Cost | 11 | 5 | 1 | 2 | 3 |
| OUTER SETTING | 49 | 11 | 6 | 23 | 9 |
| Patient Needs & Resources | 44 | 10 | 3 | 23 | 8 |
| PROCESS | 33 | 16 | 10 | 6 | 1 |
| Executing | 12 | 7 | 1 | 4 | 0 |
| Champions | 9 | 1 | 6 | 2 | 0 |
| Reflecting & Evaluating | 8 | 6 | 1 | 0 | 1 |
| CHARACTERISTICS OF INDIVIDUALS | 29 | 9 | 11 | 6 | 3 |
| Other Personal Attributes | 10 | 4 | 4 | 1 | 1 |
| Knowledge & Beliefs about the Intervention | 9 | 0 | 4 | 4 | 1 |
| Self‐efficacy | 5 | 2 | 2 | 1 | 0 |

*Totals may not match construct‐level data because constructs and subconstructs with fewer than 5 total coded excerpts are omitted from this table, including: Intervention Source, Trialability, Peer Pressure, Cosmopolitanism, External Policy & Incentives, Organizational Incentives & Rewards, Goals & Feedback, Readiness for Implementation, Structural Characteristics, Tension for Change, Implementation Climate, Individual Identification with Organization, Planning, Engaging, Opinion Leaders, Individual Stage of Change, Formally Appointed Internal Implementation Leaders, External Change Agents

**Construct counts may not sum to domain‐level totals (see note above)

Less than 10% of the excerpts mapped to each of the other two CFIR domains, Process and Characteristics of Individuals (n = 33 and n = 29, respectively). Constructs that had fewer than 5 excerpts coded to them are not shown in Table 2. Four constructs, across three different domains, did not have any excerpts coded to them: “External Policy & Incentives” (Outer Setting), “Structural Characteristics” and “Tension for Change” (Inner Setting), and “External Change Agents” (Process).

The largest proportion of excerpts was coded to the domain Inner Setting (ie, clinic attributes) in three of the four projects, ranging from 39% for the cancer center project to a high of 56% for the life‐sustaining decisions project (see Figure 3). The precision health implementation was an outlier, with the largest proportion of coding to the domain Intervention Characteristics (47%). While the most‐coded domain was largely consistent across projects, the least‐coded domain varied by project: Process accounted for 3% and 7% of excerpts for the precision health and cancer center projects, respectively; Characteristics of Individuals for 6% of the Primary Care 2.0 evaluation; and Outer Setting for 7% of excerpts for the life‐sustaining decisions project.

Figure 3. Comparison of Consolidated Framework for Implementation Research (CFIR) domains reflected in Lightning Reports by project

The variation in proportion of excerpts coded to each domain by project aligned with differences in implementation stage and project design. The two domain/project areas with the largest proportional difference were Outer Setting in the cancer center project and Intervention Characteristics in precision health. Outer Setting in the cancer center project reflected emphasis on patient experience; all Outer Setting codes were assigned to the construct Patient Needs & Resources. This corresponded with the design and focus of the initiative intervention, which was a patient‐facing lay oncology care navigation program.

Intervention Characteristics in precision health aligned with the timing/setting of the evaluation, which occurred during pre‐implementation and early‐implementation phases, when clarifying the components and characteristics of the intervention was a central focus. Accordingly, Lightning Reports from the precision health initiative emphasized Intervention Characteristics: 47% of all precision health codes fell in this domain, compared with 11% to 28% of codes for other projects, which were evaluated at later phases of implementation.
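The per‐project percentages reported in this section follow directly from the domain rows of Table 2. As a minimal sketch (the dictionary layout and the `domain_shares` function are illustrative and not part of the study's analysis code), each domain count is divided by that project's total coded excerpts and rounded to the nearest whole percent:

```python
# Domain-level counts by project, copied from the domain rows of Table 2.
DOMAIN_COUNTS = {
    "Inner Setting": {"PC 2.0": 64, "GoC": 46, "Cancer": 34, "PH": 6},
    "Intervention Characteristics": {"PC 2.0": 39, "GoC": 9, "Cancer": 18, "PH": 17},
    "Outer Setting": {"PC 2.0": 11, "GoC": 6, "Cancer": 23, "PH": 9},
    "Process": {"PC 2.0": 16, "GoC": 10, "Cancer": 6, "PH": 1},
    "Characteristics of Individuals": {"PC 2.0": 9, "GoC": 11, "Cancer": 6, "PH": 3},
}


def domain_shares(counts):
    """Return each domain's share of a project's coded excerpts, in whole percent."""
    projects = list(next(iter(counts.values())))
    # Total coded excerpts per project, summed over the five domains.
    totals = {p: sum(by_project[p] for by_project in counts.values()) for p in projects}
    return {
        domain: {p: round(100 * by_project[p] / totals[p]) for p in projects}
        for domain, by_project in counts.items()
    }


shares = domain_shares(DOMAIN_COUNTS)
top_domain = {
    project: max(DOMAIN_COUNTS, key=lambda d: DOMAIN_COUNTS[d][project])
    for project in ("PC 2.0", "GoC", "Cancer", "PH")
}
```

With these inputs, `shares["Inner Setting"]` gives 56 for GoC and 39 for Cancer, `shares["Intervention Characteristics"]["PH"]` gives 47, and `top_domain` names Inner Setting for every project except PH, matching the figures quoted above.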

4. DISCUSSION

In the pursuit of improving real‐time partnered evaluation and implementation, we created a method and a communication tool (the lightning report method and the Lightning Report, respectively) to facilitate prompt and actionable communication with our health care partners/stakeholders while maintaining methodological rigor. In over 2 years of experience, we have found that the lightning report method fills a gap in rapid qualitative approaches. Specifically, the lightning report method is generalizable with consistent but flexible core methods, and our findings suggest it is a sensitive tool capable of uncovering differences and insights in implementation across projects.

Bridging the chasm between real‐time data collection and too often time‐delayed analysis and reporting,2 the lightning report method and resulting Lightning Report products facilitated rich implementation insights and were effective in rapidly transforming qualitative data into an actionable deliverable. A recent white paper, Qualitative Methods in Implementation Science, identified rapid analysis as one of five key areas needed to improve qualitative methods.28 The white paper highlights the need for immediate, actionable insights in qualitative research, especially during the prospective evaluation of dynamic system implementation, when an intervention may need significant tailoring to be appropriately adapted for clinic settings.

We originally developed the lightning report method as an engagement tool for our health care implementation partners, enabling actionable feedback to stakeholders at multiple levels to address potential breaches of trust between research and health care.5 We used the tool to communicate interim results from observations and interviews before waiting for full thematic code analyses, which could be delayed due to issues with transcription, or competing priorities and other projects. Providing an interim deliverable such as the Lightning Report supported trust‐building with our immediate health care system partners, and allowed our team to validate that our findings aligned with stakeholders' areas of interest. We also quickly found the value and interest in these reports extended beyond our immediate team partners—Lightning Reports reached a wide range of health audiences, including clinic staff and providers, academic medicine faculty, collaborating research teams, hospital risk authority, steering committees and advisory boards, and national health care partners.

We propose that the value of the lightning report method and Lightning Reports to LHSs is threefold: (a) intentional expansion of LHS approaches beyond patient data into qualitative findings that can inform intervention adaptation, (b) specific methods that are replicable as well as flexible, and (c) emphasis on partnership at each stage. The lightning report method can enhance LHS culture, which ideally already includes PDSAs and structured quality improvement approaches, by introducing process and infrastructure to address not only whether an initiative worked or not, but how and why it might have been successful or not, in line with the objective of implementation science to inform uptake, spread, and sustainability of interventions. In the context of partnered research (ie, between evaluation scientists and health care system leadership), we developed the lightning report method and embedded Lightning Report, addressing the need for qualitative methods that “document team methods,” allow “rapid analysis,” may potentially facilitate “cross‐context comparison,”28 and represent rapid process evaluation specifically designed to help refine the intervention during the course of its testing.29

Previous approaches such as Learning Evaluations have specified principles for approaching partnered evaluation and research30; the lightning report method enhances such frameworks with specific instructions grounded in structured methods from other fields (ie, Plus/Delta/Insight) that facilitate rapid analysis and consumable feedback. The method borrows techniques from education and business management.16, 17 Specifically, the lightning report method applies a modified plus/delta debriefing strategy to traditional qualitative data such as focus groups, semistructured interviews, and site‐visit observations to produce rigorous reports that are immediately available to stakeholders.

Finally, the lightning report method provides value for LHSs by turning to stakeholders at each stage (preplanning, analysis, and dissemination) to enhance evaluation with principles of partnership derived from community‐based participatory research (CBPR) approaches. Lightning Reports facilitate communication, support the Learning Healthcare System, and iterate on CBPR principles of stakeholder engagement in all stages of research.31 Insights on implementation are made and communicated quickly so they can directly inform on‐the‐ground decisions made by the implementation team. This purposeful qualitative data collection and synthesis process enables rapid feedback of actionable results to implementation partners. One rigorous exploration of Community Advisory Board functions and best practices specified involvement of partners at the following stages: identifying the problem, designing the study, recruiting participants and data collection methods, data analysis and interpretation, dissemination, and evaluation and reflection.15 Partnered evaluation within the structure of the lightning report method can emphasize these stages of involvement, pushing evaluation and research teams to include stakeholders consistently in each stage of the research process. Furthermore, from a Learning Evaluation perspective, planning with partners is valuable since it allows for nimble course correction, as implementer priorities inevitably shift over the course of an intervention.30 Input from health care partners ensures that data collection aligns not only with predetermined outcomes but also addresses emergent areas of interest.

The root framework of the lightning report method (ie, preplanning with partners, structured data gathering punctuated with reflection, and attractively designed reporting that can be easily consumed) is quite flexible and could itself be adapted to other venues and tasks. Other use‐cases, for example, might include quick‐cycle quality improvement projects, user‐experience investigations of electronic medical record changes, or embedded process evaluation.29 Thinking big, a larger dataset of Lightning Reports representing more projects and created by diverse research groups could constitute a qualitative “Big Data” repository in implementation science. This Lightning Report dataset could underpin larger cross‐project, cross‐setting research to deepen our understanding of implementation science nationally and internationally. We are currently building a database online to allow collaborators to enter metadata about projects and settings (stage of implementation, academic vs community setting, etc) as well as bullet‐point excerpts and proposed CFIR coding for excerpts. Ideally, a lightning report “community of practice” could build a repository of findings from Lightning Reports that could be mined for a deeper understanding of implementation.
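
As a rough illustration of what an entry in such a repository might hold, the sketch below models project metadata, bullet‐point excerpts, and proposed CFIR coding in Python. The class names, field names, and example values are hypothetical and do not describe the database under construction. The helper computes the share of excerpts coded to each CFIR domain, the kind of summary used in the cross‐project comparison reported here.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Excerpt:
    """One bullet-point excerpt from a Lightning Report (hypothetical schema)."""
    text: str
    category: str     # "Plus", "Delta", or "Insight"
    cfir_domain: str  # proposed CFIR domain, e.g. "Inner Setting"


@dataclass
class LightningReportRecord:
    """Project-level metadata plus coded excerpts (hypothetical schema)."""
    project: str
    implementation_stage: str
    setting: str      # e.g. "academic" or "community"
    excerpts: List[Excerpt] = field(default_factory=list)


def domain_proportions(records: List[LightningReportRecord]) -> Dict[str, float]:
    """Return the share of excerpts coded to each CFIR domain across records."""
    counts = Counter(e.cfir_domain for r in records for e in r.excerpts)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {domain: n / total for domain, n in counts.items()}


# Placeholder entries invented for illustration only; not study data.
demo = LightningReportRecord(
    project="demo project",
    implementation_stage="early",
    setting="academic",
    excerpts=[
        Excerpt("placeholder excerpt A", "Plus", "Inner Setting"),
        Excerpt("placeholder excerpt B", "Delta", "Inner Setting"),
        Excerpt("placeholder excerpt C", "Insight", "Intervention Characteristics"),
        Excerpt("placeholder excerpt D", "Delta", "Outer Setting"),
    ],
)
print(domain_proportions([demo]))
```

Keeping excerpts as flat records with one proposed domain each makes cross‐project tallies simple; a real repository would likely need to allow multiple codes per excerpt and to record who proposed each code.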

Limitations to this method and comparative study revolve around (a) slight variations in the lightning report method across reported projects; and (b) the intentionally high‐level focus of the Lightning Report, particularly in terms of special cases such as combining data from multiple sources into one Lightning Report. Firstly, our sample of Lightning Reports was developed by two teams of researchers, with two main authors. These teams and main authors have hewn to the main steps of the lightning report method but have intentionally not followed strict replication procedures, to allow for innovation and iteration of the method appropriate to its nascent stage. Secondly, the Lightning Report is meant to be concise and easy to understand. It may therefore include only the most pressing/novel findings. The Lightning Reports may focus on interesting and broad topics to the detriment of covering topics in depth or reiterating basics. Also, we have yet to fully investigate difficult topics such as optimally integrating data from both observations and interviews into Lightning Report products.

To address these limitations as we move forward with this method, we see three urgent next steps for supporting the lightning report method as a rigorous approach. (a) We must take the time as research teams to communicate with each other about how we are applying the method. (b) We must query ourselves on the why and how of our own decision‐making as we move from method to Lightning Report products: what are the assumptions and biases, for instance, that allow us to condense findings from three pages of distilled technical notes to a polished Lightning Report ready for stakeholder consumption? (c) We need to document the specifics of our use of the method and any iterations in written and presented reports. The lightning report method is promising as a rapid synthesis method that can be easily embedded into more traditional mixed‐methods evaluations or used in a stand‐alone manner. It addresses important outstanding issues in the evolution of qualitative methods in implementation science, namely, rapid analysis and future cross‐context comparison.28 Next steps in validating and exploring this method include comparing Lightning Report results to traditional qualitative analysis that includes full coding of data.

5. CONCLUSIONS

Finally, we end with a word of caution. The lightning report method and Lightning Report product promise better communication and more engagement with health care partners. We therefore warn that if you embark on creating well‐designed rapid synthesis products, you may find, as we have, that the fruit of your labor creates an appetite for more and that health care partners will want you to continue creating Lightning Reports at a fast clip. Accordingly, we advise that you consider in advance the cadence of reporting that will best fit your resources and those of your partners. In summary, we propose that the lightning report method is a methodologically rigorous but flexible approach that facilitates partnered evaluation and communication with stakeholders by providing real‐time, actionable insights in dynamic health care implementations.

DECLARATIONS

Ethics approval and consent to participate: Stanford IRB Human Subject Research Exemption #43466 “Scoping Exercise to Inform a Pragmatic Design for Enhanced Team‐Based Primary Care” for Primary Care 2.0 and Humanwide; #44112 “Life Sustaining Treatment Decisions Initiative (LSTDI) Site Study.”

FUNDING

No public funding was used for activities reported in this manuscript. The evaluations reported in this manuscript were financially supported by Stanford Health Care. The article contents are solely the responsibility of the authors and do not necessarily represent the official views of Stanford Health Care, an entity affiliated with, but not a part of, the Stanford School of Medicine where the Evaluation Sciences Unit resides. Authors Brown‐Johnson, Safaeinili, Zionts, Holdsworth, Shaw, Asch, and Winget are affiliated with the Evaluation Sciences Unit. Dr. Mahoney is currently a practicing provider in Stanford Health Care's system.

CONFLICT OF INTEREST

The authors have no competing interests to declare.

AUTHOR CONTRIBUTIONS

CBJ was one of four researchers involved in data collection and analysis. She also was the primary author of the manuscript. NS was one of four researchers involved in data collection and analysis. She also was a major contributor to writing the manuscript. DZ was one of four researchers involved in data collection and analysis. She also contributed significant edits to the manuscript. LH was one of four researchers involved in data collection and analysis. She also contributed significant edits to the manuscript. JS contributed significant edits to the manuscript. SA contributed significant edits to the manuscript. MM contributed significant edits to the manuscript. MW was a major contributor to writing the manuscript and contributed significant edits. All authors read and approved the final manuscript.

AVAILABILITY OF DATA AND MATERIAL

The data generated and analyzed as part of this evaluation are not publicly available due to quality improvement restrictions but are available from the corresponding author on reasonable request.

Brown‐Johnson C, Safaeinili N, Zionts D, et al. The Stanford Lightning Report Method: A comparison of rapid qualitative synthesis results across four implementation evaluations. Learn Health Sys. 2020;4:e10210 10.1002/lrh2.10210

REFERENCES

- 1. Institute of Medicine. Crossing the Quality Chasm: A New Health System for the 21st Century. Vol. 6. Washington, DC; 2001.

- 2. Riley WT, Glasgow RE, Etheredge L, Abernethy AP. Rapid, responsive, relevant (R3) research: a call for a rapid learning health research enterprise. Clin Transl Med. 2013;2(1):1‐6, 10. 10.1186/2001-1326-2-10

- 3. Friedman CP, Allee NJ, Delaney BC, et al. The science of learning health systems: foundations for a new journal. Learn Heal Syst. 2017;1(1):1‐3, e10020 10.1002/lrh2.10020

- 4. Grumbach K, Lucey CR, Johnston SC. Transforming from centers of learning to learning health systems. JAMA. 2014;311(11):1109‐1110. 10.1001/jama.2014.705

- 5. Lieu TA, Madvig PR. Strategies for building delivery science in an integrated health care system. J Gen Intern Med. January 2019;34(6):1‐5. 10.1007/s11606-018-4797-8

- 6. Marshall M, Pagel C, French C, et al. Moving improvement research closer to practice: the researcher‐in‐residence model. BMJ Qual Saf. 2014;23(10):801‐805. 10.1136/bmjqs-2013-002779

- 7. Proctor E, Silmere H, Raghavan R, et al. Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda. Adm Policy Ment Health. 2011;38(2):65‐76. 10.1007/s10488-010-0319-7

- 8. Brown‐Johnson CG, Chan GK, Winget M, et al. Primary Care 2.0: Design of a transformational team‐based practice model to meet the quadruple aim. Am J Med Qual. November. 2018;1‐9, 106286061880236 10.1177/1062860618802365

- 9. Beebe J. Basic concepts and techniques of rapid appraisal. Hum Organ. 1995;54(1):42‐51. 10.17730/humo.54.1.k84tv883mr2756l3

- 10. Vindrola‐Padros C, Vindrola‐Padros B. Quick and dirty? A systematic review of the use of rapid ethnographies in healthcare organisation and delivery. BMJ Qual Saf. 2018;27(4):321‐330. 10.1136/bmjqs-2017-007226

- 11. Palinkas LA, Zatzick D. Rapid Assessment Procedure Informed Clinical Ethnography (RAPICE) in pragmatic clinical trials of mental health services implementation: methods and applied case study. Adm Policy Ment Heal Ment Heal Serv Res. 2019;46(2):255‐270. 10.1007/s10488-018-0909-3

- 12. Proctor E, Silmere H, Raghavan R, et al. Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda. Adm Policy Ment Health. 2011;38(2):65‐76. 10.1007/s10488-010-0319-7

- 13. Damschroder L, Hall C, Gillon L, et al. The Consolidated Framework for Implementation Research (CFIR): progress to date, tools and resources, and plans for the future. Implement Sci. 2015;10(S1):A12 10.1186/1748-5908-10-S1-A12

- 14. Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci. 2009;4(1):50‐65. 10.1186/1748-5908-4-50

- 15. Newman SD, Andrews JO, Magwood GS, Jenkins C, Cox MJ, Williamson DC. Community advisory boards in community‐based participatory research: a synthesis of best processes. Prev Chronic Dis. 2011;8(3):2‐12, A70. http://www.ncbi.nlm.nih.gov/pubmed/21477510

- 16. Forbes L, Ahmed S, Ahmed SM. Modern Construction. Vol 20102123. CRC Press; 2010. 10.1201/b10260

- 17. Phrampus PE, O'Donnell JM. Debriefing using a structured and supported approach In: The Comprehensive Textbook of Healthcare Simulation. New York, NY: Springer New York; 2013:73‐84. 10.1007/978-1-4614-5993-4_6

- 18. London JK, Zimmerman K, Erbstein N. 3 Youth‐Led Research and Evaluation: Tools for Youth, Organizational, and Community Development. https://dmlcommons.net/wp-content/uploads/2015/04/LondonZimmErb_NewDirections_2003.pdf.

- 19. Miller CE. The social psychological effects of group decision rules In: Paulus P, ed. Psychology of Group Influence. 2nd ed. Hillsdale, NJ: Erlbaum; 1989:327‐355.

- 20. Squiers L, Peinado S, Berkman N, Boudewyns V, McCormack L. The health literacy skills framework. J Health Commun. 2012;17(sup3):30‐54. 10.1080/10810730.2012.713442

- 21. Wilson EAH, Wolf MS. Working memory and the design of health materials: a cognitive factors perspective. Patient Educ Couns. 2009;74(3):318‐322. 10.1016/J.PEC.2008.11.005

- 22. Brown‐Johnson C, Shaw JG, Safaeinili N, et al. Role definition is key‐Rapid qualitative ethnography findings from a team‐based primary care transformation. Learn Heal Syst. February. 2019;1‐7, e10188 10.1002/lrh2.10188

- 23. Levine M, Vollrath K, Nwando Olayiwola J, Brown Johnson C, Winget M, Mahoney M. Transforming medical assistant to care coordinator to achieve the quadruple aim. 2018;(2):1‐2. 10.1370/afm.1475

- 24. Winget M, Veruttipong D, Holdsworth LM, Zionts D, Asch SM. Who are the cancer patients most likely to utilize lay navigation services and what types of issues do they ask for help? J Clin Oncol. 2018;36(30_suppl):229‐229. 10.1200/jco.2018.36.30_suppl.229

- 25. Holdsworth LM, Zionts D, Asch S, Winget M. Is there congruence between the types of triggers that cause delight or disgust in cancer care? J Clin Oncol. 2018;36(30_suppl):216‐216. 10.1200/jco.2018.36.30_suppl.216

- 26. Mahoney MR, Asch SM. Humanwide: a comprehensive data base for precision health in primary care. Ann Fam Med. 2019;17(3):273 10.1370/afm.2342

- 27. Foglia MB, Lowery J, Sharpe VA, Tompkins P, Fox E. A comprehensive approach to eliciting, documenting, and honoring patient wishes for care near the end of life: the veterans health administration's life‐sustaining treatment decisions initiative. Jt Comm J Qual Patient Saf. 2019;45(1):47‐56. 10.1016/j.jcjq.2018.04.007

- 28. NIH. Qualitative Methods In Implementation Science. 2018:1‐31. https://cancercontrol.cancer.gov/IS/docs/NCI-DCCPS-ImplementationScience-WhitePaper.pdf. Accessed March 15, 2019.

- 29. Moore GF, Audrey S, Barker M, et al. Process evaluation of complex interventions: Medical Research Council guidance. BMJ. 2015;350:1‐7, h1258. 10.1136/bmj.h1258

- 30. Balasubramanian BA, Cohen DJ, Davis MM, et al. Learning evaluation: blending quality improvement and implementation research methods to study healthcare innovations. Implement Sci. 2015;10(1):1‐11, 31. 10.1186/s13012-015-0219-z

- 31. Israel BA, Eng E, Schulz AJ, Parker EA. Methods in Community‐Based Participatory Research for Health: From Process to Outcomes. Jossey‐Bass; 2005.
