Abstract

Background

Individuals in stressful work environments often experience mental health issues, such as depression. Reducing depression rates is difficult because of persistently stressful work environments and inadequate time or resources to access traditional mental health care services. Mobile health (mHealth) interventions provide an opportunity to deliver real-time interventions in the real world. In addition, the delivery times of interventions can be based on real-time data collected with a mobile device. To date, data and analyses informing the timing of delivery of mHealth interventions are generally lacking.

Objective

This study aimed to investigate when to provide mHealth interventions to individuals in stressful work environments to improve their behavior and mental health. The mHealth interventions targeted 3 categories of behavior: mood, activity, and sleep. The interventions aimed to improve 3 different outcomes: weekly mood (assessed through a daily survey), weekly step count, and weekly sleep time. We explored when these interventions were most effective, based on previous mood, step, and sleep scores.

Methods

We conducted a 6-month micro-randomized trial on 1565 medical interns. Medical internship, during the first year of physician residency training, is highly stressful, resulting in depression rates several-fold higher than those of the general population. Every week, interns were randomly assigned to receive push notifications related to a particular category (mood, activity, sleep, or no notifications). Every day, we collected interns’ daily mood valence, sleep, and step data. We assessed causal effect moderation by the previous week’s mood, steps, and sleep. Specifically, we examined changes in the effect of notifications containing mood, activity, and sleep messages based on the previous week’s mood, step, and sleep scores. Moderation was assessed with a weighted and centered least-squares estimator.

Results

We found that the previous week’s mood negatively moderated the effect of notifications on the current week’s mood with an estimated moderation of −0.052 (P=.001). That is, notifications had a better impact on mood when the studied interns had a low mood in the previous week. Similarly, we found that the previous week’s step count negatively moderated the effect of activity notifications on the current week’s step count, with an estimated moderation of −0.039 (P=.01) and that the previous week’s sleep negatively moderated the effect of sleep notifications on the current week’s sleep with an estimated moderation of −0.075 (P<.001). For all three of these moderators, we estimated that the treatment effect was positive (beneficial) when the moderator was low, and negative (harmful) when the moderator was high.

Conclusions

These findings suggest that an individual’s current state meaningfully influences their receptivity to mHealth interventions for mental health. Timing interventions to match an individual’s state may be critical to maximizing the efficacy of interventions.

Trial Registration

ClinicalTrials.gov NCT03972293; http://clinicaltrials.gov/ct2/show/NCT03972293

Keywords: mobile health, digital health, smartphone, mobile phone, wearable devices, ecological momentary assessment, depression, mood, physical activity, sleep, moderator variables

Introduction

Background

According to the World Health Organization, depression is the leading cause of disease-associated disability in the world [1]. In the United States, the burden of depression, including suicide, has continued to grow [2]. In populations at high risk, prevention of depression may be an effective strategy. The US National Academy of Medicine has highlighted the need to develop, evaluate, and implement prevention interventions for depression and other mental, emotional, and behavioral disorders [3].

Prevention interventions for depression are critical for individuals in stressful work environments because these environments can lead to increased rates of depression [4]. However, individuals in these work environments may have inadequate time or resources to access traditional mental health care services. High stress can also make individuals less receptive to interventions and behavior change [5,6].

Unlike other recent advances, mobile technology has the potential to transform the delivery and timing of depression prevention interventions to meet the needs of highly stressed individuals. In contrast to more intensive treatments (such as therapeutic appointments), mobile health (mHealth) interventions (such as push notifications) can be delivered with low burden, which may be critical given the individuals’ high-stress workloads. Mobile devices can deliver just-in-time adaptive interventions (JITAIs) [7] to individuals during times when they are able to receive and respond to them. Mobile devices also collect objective measurements of an individual’s context and behavior with minimal burden (eg, step counts, sleep duration). These data may, in turn, be used to determine when to deliver interventions and to evaluate intervention efficacy without bothering the individuals.

When initially designing a JITAI, these states of opportunity [7]—times when individuals are receptive to positive behavior change—are not known. Timing is critical because poorly timed interventions can lead to loss of engagement with the intervention [8]. Timing interventions is also particularly important for individuals in stressful work environments because poorly timed interventions could cause disengagement and treatment fatigue [9].

Current behavioral theories lack the granularity and adaptivity necessary to inform the timing of the delivery of mHealth interventions [10,11]. Many theoretical models are nondynamic—they only consider treatment adaptation based on baseline characteristics, such as sex and depression history [12]. Timing and adapting treatments based on real-time variables is essential for developing high-quality JITAIs [7].

This study follows a data-driven approach to inform the dynamic timing of intervention delivery. Experimentation and data collection were used to provide empirical evidence for determining states of opportunity—the data illustrate when interventions cause positive behavior change in individuals and when they do not.

There have been other empirical studies showing the promise of JITAIs to improve mental health [13]. Those studies are either focused on feasibility and acceptability of the JITAI [14-17] or use a randomized controlled trial (RCT) to demonstrate the impact of the JITAI on a distal outcome [18-20]. They do not focus on the timing of intervention delivery. In two studies [21,22], the authors demonstrated the benefits of timing mHealth intervention delivery based on real-time variables. In that work, the timing of intervention delivery is specified before the study. In contrast, because we did not know a priori how to time our interventions, our work used empirical evidence to learn how to dynamically time intervention delivery.

In statistical terms, we formulated the task of empirically learning how to dynamically time interventions as discovering time-varying moderators of causal treatment effects [23]. Time-varying moderators are moderators because they change—or moderate—the efficacy of subsequent treatments and are time-varying because the moderators’ values vary throughout the study (such as daily mood). For example, if push notifications containing sleep messages cause a larger increase in sleep when individuals had little sleep in the previous night compared with when individuals had high sleep, then the previous night’s sleep moderates the effect of sleep notifications. Discovering time-varying moderators informs treatment timing because treatment delivery can now be based on the observed values of these moderators. In the example, it may be better to send sleep notifications only after individuals have insufficient sleep. Note that time-varying moderators should have meaningful variability to allow the possibility of sending different interventions at different times.

We assessed time-varying moderators of mHealth interventions targeting 3 categories: mood, activity, and sleep. Stressful work environments can lead to sleep deprivation and physical inactivity [24-26], two behaviors directly associated with depression [24,27,28]. To prevent depression among individuals experiencing high stress, it is critical to develop high-quality interventions that can help them maintain and improve their mood, either through targeting mood directly or by indirectly improving activity and sleep [24,28].

Our study population comprised medical interns. Medical internship, the first year of physician residency training, is highly stressful, causing the depression rates of interns to be several-fold higher than those of the general population [29]. Focusing on physician training, a rare situation in which a dramatic increase in stress can be anticipated, provides an ideal experimental model to develop interventions for maintaining mental wellness during life and work stressors.

Our study, the 2018 Intern Health Study (IHS) [30], was a 6-month-long mHealth cohort study that tracked medical interns using phones and wearables. During the internship year, we conducted a micro-randomized trial (MRT) [23]. Standard single–time point RCTs only inform moderation by baseline variables [31] and do not permit the discovery of time-varying moderators. The MRT was advantageous because it allowed us to discover time-varying moderators of causal treatment effects [23].

During each week in the 6-month study, an intern was randomized to 1 of 4 possible treatments: a week of mood notifications, activity notifications, sleep notifications, or no notifications. The outcomes were average daily self-reported mood valence (measured through a one-question survey), average daily steps (as a proxy for activity), and average daily sleep duration, where averages were 7-day averages of data collected during the week of treatment. The strongest moderators were hypothesized to be the previous week’s average daily mood, steps, and sleep, as these were the strongest predictors of the outcomes (based on previous years’ IHS data [30]). We were only interested in a subset of combinations of outcomes, treatments, and moderators.

Study Aims

Here, we have highlighted the primary and secondary aims of this paper. Below, the effect (for which we are assessing moderation) corresponds to how a week of a certain notification category causally changes an outcome compared with weeks of no notifications.

The moderator aims listed below were not the only aims of the 2018 IHS. Analyses of main effects were conducted before the analysis of moderator effects (see Additional Analyses in Multimedia Appendix 1). This paper focuses on the moderator analyses because they yielded the most interesting findings.

Primary Aim

Our primary aim focused on discovering how an intern’s previous mood moderates the effect of notifications in general. Specifically, we examined the following: Is the effect of a week of notifications (of any category) on the average daily mood moderated by the previous week’s mood? Here, Outcome=mood; Treatment=any (mood, activity, or sleep); and Moderator=mood.

Exploratory Subaim

If mood was found to moderate the effect of notifications in general, we planned to assess whether this moderation was consistent across all intervention categories. Specifically, we examined the following: Is the effect of each category of notification on the average daily mood moderated by the previous week’s mood? Here, Outcome=mood; Treatment=mood, activity, and sleep separately; and Moderator=mood.

Secondary Aim 1

Secondary aim 1 focused on discovering how an intern’s previous activity moderates the effect of notifications containing activity messages. Specifically, we examined the following: Is the effect of a week of activity notifications on the average daily step count moderated by the previous week’s step count? Here, Outcome=steps, Treatment=activity, and Moderator=steps.

Secondary Aim 2

Secondary aim 2 focused on discovering how an intern’s previous sleep moderates the effect of notifications containing sleep messages. Specifically, we examined the following: Is the effect of a week of sleep notifications on the average daily sleep moderated by the previous week’s sleep? Here, Outcome=sleep; Treatment=sleep; and Moderator=sleep.

Methods

The Study App

Study participants were provided a Fitbit Charge 2 [32] to collect sleep and activity data, and a mobile app was downloaded to the intern’s phone. The app can conduct ecological momentary assessments (EMAs) [33], aggregate and visualize data, and deliver push notifications. The app was designed for iOS using Apple ResearchKit [34].

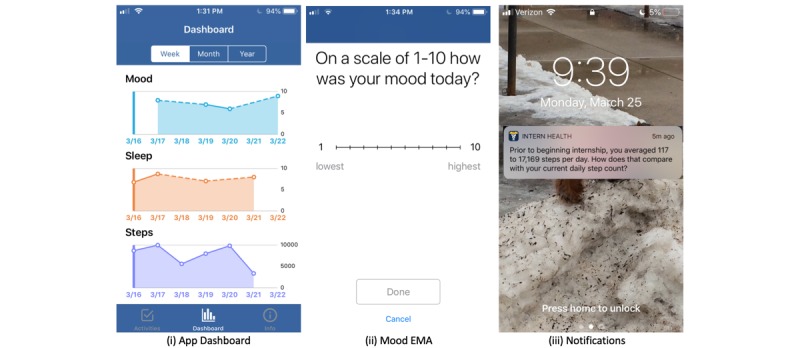

As the primary aim of the study was focused on understanding the effects of interventions on the mental health of interns, we employed a daily EMA to measure mood valence (see Figure 1, Mood EMA). Daily mood is one of the 2 cardinal symptoms of depression [35]. This daily mood EMA is widely used to track mood in patients with depression [36]. Other measures of mental health, such as the Patient Health Questionnaire-9 (PHQ-9) [37], are more widely used than mood valence. However, these questionnaires are more time-intensive, and administering them may cause higher nonresponse rates. Participants were prompted to enter their daily mood every day at a user-specified time between 5 PM and 10 PM.

Figure 1.

Screenshots of the app dashboard, mood ecological momentary assessment, and lock screen notifications.

In addition to collecting EMA data, the app aggregates and displays visual summaries of interns’ historical data, including step and sleep counts (collected through the Fitbit) and mood EMA data (Figure 1, App Dashboard). The data are integrated with the app using Fitbit’s publicly available application programming interface [38]. Displaying historical trends to the intern helps them self-monitor their mood, activity, and sleep trajectories and could potentially lead to positive reactive behavior change [39]. These displays are a type of pull intervention, that is, interventions that are available at all times but only accessed upon user request. The pull component was available to all participants, and assessing its effects was not the focus of this study.

The IHS app also delivers push interventions, that is, interventions delivered without user prompting. Evaluating and improving the delivery timing of the push notification intervention was the focus of this study.

Push Notification Intervention

Theory applied to mHealth holds that behavior change comprises an individual’s motivation and ability to change, combined with a trigger to elicit change [40]. Push notifications are such a trigger, potentially providing motivational messages for change (eg, to spark change), strategies for change (eg, to facilitate change), and/or reminders to engage with the app (eg, to signal change) [40]. Importantly, research supports the potential of push notifications for behavior change [41,42].

Push notifications are particularly advantageous for medical interns because they are delivered as needed with minimal burden to the user [42-44]. However, poorly timed push notifications can lead to loss of engagement and treatment fatigue [9,45], demonstrating the importance of evaluating and improving the delivery timing of the push notifications.

Push notifications were provided to the interns through the app, with the goal of improving healthy behavior in a target category of interest: mood, activity, or sleep (ie, mood notifications improve mood, activity notifications increase physical activity, and sleep notifications increase sleep duration). For all 3 categories, there were 2 types of notifications: tips and life insights. Consistent with theory [40] and motivational interviewing approaches [46-48], tips are non–data-based notifications that provide autonomy support (eg, motivation-focused messages on why to change) and tools (eg, ability-focused messages on how to change) to promote healthy mood, activity, or sleep. Next, consistent with theory [40,49,50] and research showing that interventions that enhance self-monitoring promote behavior change [51], life insight notifications summarize an individual’s data to provide reminders (eg, signals) and/or reduce the burden of accessing the app to view visualizations. Table 1 contains examples of different push notifications used in the study.

Table 1.

Examples of 6 different groups of notifications.

| Notification groups | Life insight | Tip |
| --- | --- | --- |
| Mood | Your mood has ranges from 7 to 9 over the past 2 weeks. The average intern’s daily mood goes down by 7.5% after intern year begins. | Treat yourself to your favorite meal. You’ve earned it! |
| Activity | Prior to beginning internship, you averaged 117 to 17,169 steps per day. How does that compare with your current daily step count? | Exercising releases endorphins which may improve mood. Staying fit and healthy can help increase your energy level. |
| Sleep | The average nightly sleep duration for an intern is 6 hours 42 minutes. Your average since starting internship is 7 hours 47 minutes. | Try to get 6 to 8 hours of sleep each night if possible. Notice how even small increases in sleep may help you to function at peak capacity & better manage the stresses of internship. |

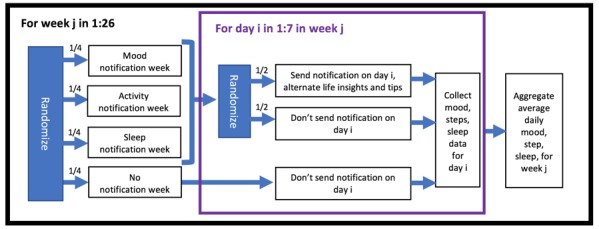

The Intern Health Study Micro-Randomized Trial Design

To discover time-varying moderators for informing the timing of notification delivery, we conducted an MRT. The MRT design is shown in Figure 2. The MRT design and protocol were approved by the University of Michigan Institutional Review Board (Protocol #HUM00033029).

Figure 2.

Randomization scheme of the Intern Health Study micro-randomized trial.

The main randomization was the weekly randomization to a specific notification category (mood, activity, sleep) or to no notification. Thus, we were able to compare how a week of a certain notification category changed intern behavior when compared with a week of no notifications.

The randomization—and the ensuing analysis of effects—occurred at the weekly level for two reasons. First, the notifications are not intended to change the interns’ behavior in the next few hours, but over the next few days. Randomizing and analyzing effects at the weekly level, as opposed to a daily or minute level, permitted the discovery of longer-term effects. Second, as interns are quite busy, their behavior may not change significantly after receiving a single notification. Instead, interns received several notifications related to the same category and had a consistent reminder about improving that category.

In a week when a user was randomized to receive notifications, they were further randomized each day (with 50% probability) to receive a notification on that day. Hence, for a mood notification week, the user received, on average, 3.5 mood notifications that week. The purpose of this randomization was to deliver enough notifications to be noticeable and cause behavior change, but not so many that they led to treatment fatigue [9]. Treatment fatigue is pervasive in mHealth [7] and for individuals with heavy workloads [9]. Additional Analyses in Multimedia Appendix 1 contains a summary of how many notifications users received in a given week.
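The two-level randomization described above can be sketched in Python as follows. This is an illustrative simulation only, not the study's delivery code: the equal 1-in-4 weekly allocation and the names `CATEGORIES`, `randomize_week`, and `mean_notifications_per_notification_week` are assumptions, because the text specifies only the 4 weekly arms and the 50% daily probability.

```python
import random

# Assumption: the four weekly arms are equally likely (allocation not stated in the text).
CATEGORIES = ["mood", "activity", "sleep", "none"]
DAILY_SEND_PROBABILITY = 0.5  # stated in the text


def randomize_week(rng):
    """Return (category, send_flags) for one intern-week.

    send_flags holds 7 booleans, one per day, marking whether a
    notification is sent on that day.
    """
    category = rng.choice(CATEGORIES)
    if category == "none":
        return category, [False] * 7
    send_flags = [rng.random() < DAILY_SEND_PROBABILITY for _ in range(7)]
    return category, send_flags


def mean_notifications_per_notification_week(n_weeks=20000, seed=0):
    """Average number of notifications over simulated notification weeks."""
    rng = random.Random(seed)
    counts = []
    for _ in range(n_weeks):
        category, send_flags = randomize_week(rng)
        if category != "none":
            counts.append(sum(send_flags))
    return sum(counts) / len(counts)
```

In expectation, 7 days × 0.5 gives 3.5 notifications per notification week, which the simulated mean should approach.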

Another way to prevent treatment fatigue is through increased variability in notifications and the order in which they are received [52]. For each notification category, the notifications alternated between life insights and tips. In addition, given a type and category, each notification was drawn randomly, without replacement, from a corresponding bucket of notifications. The bucket refilled once it was completely emptied. Alternating between life insights and tips increased the day-to-day variability of the notification framing. Drawing notifications without replacement ensured that users were not receiving repeats of the same notification. Under this scheme, on average, a user did not receive a repeat notification for 16 weeks. Weekly and daily notification randomization and notification delivery were implemented using the Firebase Cloud Messaging platform [53].
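The draw-without-replacement scheme with alternating notification types might look like the sketch below. The class and function names are hypothetical, the choice to start with a life insight is an assumption (the text does not say which type came first), and the actual study used Firebase Cloud Messaging rather than this code.

```python
import random


class NotificationBucket:
    """Draws messages without replacement; refills once completely emptied."""

    def __init__(self, messages, rng):
        self._messages = list(messages)
        self._rng = rng
        self._remaining = []

    def draw(self):
        if not self._remaining:
            # Refill and reshuffle only when every message has been used.
            self._remaining = self._messages[:]
            self._rng.shuffle(self._remaining)
        return self._remaining.pop()


def notification_stream(life_insights, tips, rng):
    """Yield notifications for one category, alternating insight and tip."""
    buckets = [NotificationBucket(life_insights, rng),
               NotificationBucket(tips, rng)]
    index = 0
    while True:
        # Assumption: the sequence starts with a life insight.
        yield buckets[index % 2].draw()
        index += 1
```

Because each bucket is emptied before it refills, a message cannot repeat until every other message of the same type and category has been sent.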

Participants

Medical doctors starting their year-long internship in the summer of 2018 were eligible to participate in the study. Interns were onboarded before the start of their internship (between April 2018 and June 2018). During onboarding, they were instructed to download the study app, were provided Fitbits, completed a baseline survey, and were able to begin entering mood scores. Baseline and follow-up surveys were administered through the app using Qualtrics survey software [54]. Data collection began when an intern enrolled in the study and continued until the end of the trial. Collecting data before the start of the internship provided baseline measurements of mood, step counts, and sleep, which are valuable control variables in the analysis. The weekly randomizations and notification delivery began on June 30, 2018, 1 day before the start of interns’ clinical duties. Interns were rerandomized every 7 days thereafter. During the study, notifications were sent at 3 PM, mood EMAs were collected daily between 5 PM and 10 PM, and sleep and step data were collected every minute. Data were transferred directly from the subjects’ phones to a secure, Health Insurance Portability and Accountability Act–compliant server managed by the University of Michigan Health Information and Technology Services. The interns received notifications for 6 months (26 weeks), and the trial ended on December 28, 2018.

Statistical Analysis

Overview

To analyze the primary and secondary aims, we performed a moderator analysis for each of the outcomes, treatments, and moderators specified in Study Aims. More details on the methods can be found in Further Details on the Statistical Methods in Multimedia Appendix 1.

In the analysis, there were 4 sets of variables:

1. The treatment outcome variable of interest, Yt.
2. The treatment indicator, Zt. For now, Zt is a binary indicator, where Zt=1 implies it is a notification week (of any category) and Zt=0 is a no-notification week. The case with multicategorical treatments (mood, activity, and sleep notifications) will be described under the secondary aims.
3. The moderator, Mt, corresponding to the causal effect moderator of interest.
4. The last set of variables, Xt, are the control variables. The control variables are variables measured before each weekly randomization (eg, baseline data and previous weeks’ data) and are included in the model to reduce variation in the outcome, Yt.

The outcomes, treatment, and moderators correspond exactly to the outcomes, treatments, and moderators described in Study Aims. As interns were randomized to different treatments each week, the outcomes, treatments, moderators, and control variables were aggregated at the weekly level and were indexed by time, t, corresponding to each week of the study (t=1,…,26).

To perform the moderator analysis, we used a linear model with an interaction term. The outcome of interest (such as average daily mood), Yt, was regressed on Xt, Mt, Zt and the interaction between Mt and Zt, ZtMt, giving the model the following form:

$$E(Y_t \mid X_t, M_t, Z_t) = a_0 X_t + a_1 M_t + b_0 Z_t + b_1 Z_t M_t$$

The moderation effect of interest is the coefficient b1 for the interaction of Zt and Mt. This coefficient is interpreted as the change in the treatment effect of Zt on Yt per unit change in Mt. A positive value for b1 indicates that the treatment works better after weeks when Mt is high, whereas a negative value indicates that the treatment works better after weeks when Mt is low.
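To make the roles of b0 and b1 explicit, the treatment effect implied by the linear model can be written as the difference between the expected outcomes under Zt=1 and Zt=0, holding Xt and Mt fixed. This is a short derivation from the stated model, not an additional result:

```latex
\begin{aligned}
E(Y_t \mid X_t, M_t, Z_t = 1) - E(Y_t \mid X_t, M_t, Z_t = 0)
  &= (a_0 X_t + a_1 M_t + b_0 + b_1 M_t) - (a_0 X_t + a_1 M_t) \\
  &= b_0 + b_1 M_t
\end{aligned}
```

The effect is therefore a line in Mt with intercept b0 and slope b1. When b1 is nonzero, the line crosses zero at Mt = −b0/b1, which is the moderator value at which the estimated effect changes sign.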

For the primary and secondary aims, to evaluate if the moderator effect is statistically significant, we performed a hypothesis test comparing the coefficient b1 to 0, with a 0.05 type I error rate. We reported the estimate of b1, the standard error, and P value of this test. Though estimating and testing the moderation effect is useful, it does not demonstrate whether the notifications had a positive or negative effect on the outcome. Hence, in addition to a hypothesis test, we also plotted the estimated treatment effect at various values of the moderator. We did this by using both the estimate of the slope, b1, and intercept, b0, of the moderation effect. The plots also included histograms of the moderator to illustrate the distribution of treatment effects.

Estimation Techniques

To estimate the coefficients, we used a multicategorical extension of the weighted and centered least-squares estimator described in Boruvka et al [55]. The estimation method provides asymptotically unbiased estimates of the causal effect moderation of interest. The method also protects against potential misspecification of terms not interacted with treatment (a0Xt + a1Mt). The method assesses the uncertainty of the coefficient estimates using robust standard error estimation—the sandwich estimator [56]—to account for correlation between outcomes over time. The method was implemented in R using the package geepack [57]. The code is available on the first author’s website.
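The centering idea can be illustrated with a small simulation. The sketch below is not the authors' R/geepack implementation: it uses simulated data and a binary treatment, and it assumes a constant randomization probability of 0.75 (equal allocation across 4 arms, which the text does not state), so that the weights can be taken as 1 and only the centering step is shown. The robust sandwich standard errors are omitted, and all names and true parameter values are hypothetical.

```python
import random

TRUE_B0, TRUE_B1 = 0.35, -0.05  # hypothetical values used only for simulation


def solve_linear_system(matrix, rhs):
    """Solve matrix @ x = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [row[:] + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(aug[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (aug[i][n] - tail) / aug[i][i]
    return x


def least_squares(rows, outcomes):
    """Ordinary least squares via the normal equations."""
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * y for r, y in zip(rows, outcomes)) for i in range(k)]
    return solve_linear_system(xtx, xty)


def fit_centered_moderation(n_weeks=20000, p_treat=0.75, seed=0):
    """Simulate intern-weeks and return estimates (b0_hat, b1_hat)."""
    rng = random.Random(seed)
    rows, outcomes = [], []
    for _ in range(n_weeks):
        m = rng.uniform(3, 9)                    # previous week's moderator
        z = 1 if rng.random() < p_treat else 0   # notification week or not
        y = 2.0 + 0.7 * m + z * (TRUE_B0 + TRUE_B1 * m) + rng.gauss(0, 1)
        zc = z - p_treat                         # centered treatment indicator
        rows.append([1.0, m, zc, zc * m])
        outcomes.append(y)
    coefficients = least_squares(rows, outcomes)
    return coefficients[2], coefficients[3]
```

Centering changes the coefficients on the intercept and on Mt but leaves the coefficients on the treatment terms equal to b0 and b1, which is why the fitted values should land near the simulated truth.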

Missing Data

Missing data occurred throughout the trial because of interns not completing the self-reported mood survey or not wearing Fitbits. Multiple imputation [58], a robust method for dealing with missing data, was used to impute missing data at the daily level. Due to the complexity of the trial design and data structure, our imputation method combines imputation methods for longitudinal data [59] and sequentially randomized trials [60]. Results were aggregated across 20 imputed datasets using Rubin’s rules [58,61]. We also assessed the sensitivity of the conclusions to the imputation method. See Missing Data and Sensitivity Analyses in Multimedia Appendix 1 for further details on the missingness and sensitivity analysis results.
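Pooling across imputed datasets with Rubin's rules can be sketched as follows. The function name `pool_rubin` is hypothetical, and the sketch covers only the pooled point estimate and standard error, not the degrees-of-freedom calculation or the imputation model itself.

```python
from math import sqrt
from statistics import mean, variance


def pool_rubin(estimates, standard_errors):
    """Combine per-imputation estimates using Rubin's rules.

    Returns (pooled_estimate, pooled_standard_error).
    """
    m = len(estimates)
    pooled_estimate = mean(estimates)
    # Within-imputation variance: average of the squared standard errors.
    within = mean([se ** 2 for se in standard_errors])
    # Between-imputation variance: sample variance of the estimates.
    between = variance(estimates)
    total = within + (1 + 1 / m) * between
    return pooled_estimate, sqrt(total)
```

In this study's setting, `estimates` and `standard_errors` would each hold 20 values, one per imputed dataset.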

Primary Aim

The primary aim assessed the previous week’s average daily self-reported mood valence as a moderator of the effect of notifications on the average daily self-reported mood valence. For this analysis, b1 was interpreted as the change in treatment effect (for delivering a week of notifications compared with a week of no notifications) on the average daily mood when the previous week’s average daily mood increased by 1.

Secondary Aim 1

The first secondary aim assessed the previous week’s average daily step count as a moderator of the effect of activity notifications on the average daily step count. For this aim, the treatment variable (Zt) was no longer binary because there were 4 possible treatment categories (including no notifications), so Zt and the corresponding coefficients (b0 and b1) became vectors. See Further Details on the Statistical Methods in Multimedia Appendix 1 for further details on the multicategorical treatment model. The focus of inference for secondary aim 1 was on the first dimension of the moderation effect, b11, which corresponds to the comparison between activity notification weeks and no-notification weeks. In addition, to reduce right skew and the influence of outliers, the outcome and moderator were the average daily square root step count. After the square root transformation, the average daily step counts more closely resembled a Gaussian distribution.

The coefficient b11 was interpreted as the change in treatment effect (for delivering a week of activity notifications compared with a week of no notifications) on the average daily square root step count when the previous week’s average daily square root step count increased by 1. Hypothesis testing was performed on b11, and plots were made using estimates of b01 and b11.

Secondary Aim 2

Secondary aim 2 assessed the previous week’s average daily sleep duration as a moderator of the effect of sleep notifications on the average daily sleep duration. As in secondary aim 1, the treatment was no longer binary, and we encoded the treatment vector in the same way. For this analysis, the focus of inference was on the second dimension, b12, which compared sleep notification weeks with no-notification weeks. Again, to reduce right skew and the influence of outliers, the outcome and moderator were the average daily square root sleep minutes.

The coefficient b12 was interpreted as the change in treatment effect (for delivering a week of sleep notifications compared with a week of no notifications) on average daily square root sleep minutes when the previous week’s average daily square root sleep minutes increased by 1. Hypothesis testing was performed on b12, and plots were made using estimates of b02 and b12.

Exploratory Subaim

The exploratory aim assessed the previous week’s mood as a moderator of the effect of each notification category on the average daily mood. For the exploratory aim, the outcome and moderator were the same as the primary aim, except that the treatment was separated into 4 treatment categories (as in the secondary aims). As this aim was only exploratory, we did not calculate P values. Instead, for each notification category, we plotted the estimated treatment effect at various values of the moderator. This required making 3 separate lines using each dimension, with estimates of b0i providing the intercept and estimates of b1i providing the slope.
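One way to write the multicategorical model used in the secondary and exploratory aims is sketched below. It assumes that the treatment is coded as three 0/1 indicators with no notifications as the reference; the indices 1 (activity) and 2 (sleep) follow the text, whereas assigning index 3 to mood is an assumption. The exact coding is given in Multimedia Appendix 1 and may differ.

```latex
E(Y_t \mid X_t, M_t, Z_t)
  = a_0 X_t + a_1 M_t + \sum_{i=1}^{3} \left( b_{0i} + b_{1i} M_t \right) Z_{ti},
\qquad
\text{effect of category } i \text{ vs no notifications} = b_{0i} + b_{1i} M_t
```

Under this coding, each notification category has its own line in Mt, with intercept b0i and slope b1i, which matches the 3 separate lines plotted for the exploratory aim.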

Results

Participants

Participants were recruited through emails, which were sent to future interns from 47 different recruitment institutions between April 1 and June 25, 2018. The recruitment institutions comprised both medical schools, where emails were sent to all graduates, and residency locations, where emails were sent to all incoming interns. A total of 5233 future interns received the initial email inviting them to participate in the study. In all, 40.78% (2134/5233) of interns downloaded the study app, completed the consent form, and filled out the baseline survey sometime before June 25, 2018. The study app and study participation were restricted to interns using an iPhone, the phone brand used by most interns. A total of 2134 interns received a Fitbit Charge 2. Of the 2134 interns, 1565 (73.34%) were randomly selected to participate in the MRT (see Additional Analyses in Multimedia Appendix 1 for an explanation of this initial randomization). These 1565 interns were randomized according to Figure 2. Interns were incentivized to participate in the study by receiving the Fitbit wearable and up to US $125, distributed 5 times throughout the year (US $25 each time) based on continued participation.

Of the 1565 interns in the MRT, 875 (55.91%) were female, and 774 (49.45%) had previously experienced an episode of depression. The interns represented 321 different residency locations and 42 specialties. The study interns’ baseline information closely resembled the known characteristics of the general medical intern population [29]. Throughout the trial, we measured intern mood valence, steps, and nightly sleep. Summaries of the weekly averages of those data can be found in Table 2.

Table 2.

Summary statistics of daily mood, activity, and sleep during the study, averaged over each week of the study. These are the primary outcomes and moderators used in the analyses of all study aims.

| Daily measure | First quartile | Median | Mean (SD) | Third quartile |
| --- | --- | --- | --- | --- |
| Average daily mood | 6.50 | 7.33 | 7.21 (1.43) | 8.00 |
| Average daily step count | 6193 | 7983 | 8274 (3285) | 10,050 |
| Average daily hours of sleep | 6.02 | 6.65 | 6.54 (1.25) | 7.25 |

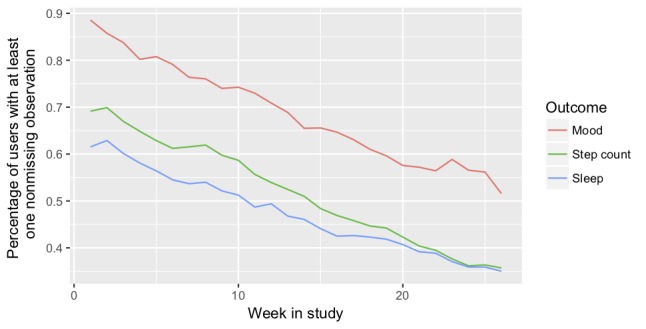

Missing data occurred throughout the study. Figure 3 displays the percentage of interns with at least one nonmissing sleep, step, or mood observation for each week in the study. See Missing Data and Sensitivity Analyses in Multimedia Appendix 1 for further details on the missingness and sensitivity analyses.

Figure 3.

Percentage of interns with at least one nonmissing sleep, step, or mood observation for each week in the study.
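As a minimal sketch of how the quantity plotted in Figure 3 could be computed, the following Python function counts, for each week, the interns with at least one nonmissing mood, step, or sleep value. The record layout, the function name `weekly_response_percentage`, and the use of `None` for a missing value are assumptions made for illustration; the study's actual data pipeline is not described here.

```python
from collections import defaultdict


def weekly_response_percentage(records, n_interns):
    """Percentage of interns with any nonmissing observation, per week.

    records: iterable of (intern_id, week, mood, steps, sleep) tuples,
             one per intern-day, with None marking a missing value
             (hypothetical layout, not the study's actual schema).
    n_interns: total number of interns in the trial (the denominator).
    """
    responders = defaultdict(set)
    for intern_id, week, mood, steps, sleep in records:
        # An intern counts for the week if any of the three measures is present.
        if any(value is not None for value in (mood, steps, sleep)):
            responders[week].add(intern_id)
    return {
        week: 100.0 * len(ids) / n_interns
        for week, ids in sorted(responders.items())
    }


# Toy data: intern "b" has no data in week 1 but reports mood in week 2.
example = [
    ("a", 1, 7.0, None, None),
    ("a", 1, None, None, None),
    ("b", 1, None, None, None),
    ("a", 2, None, 8000, 6.5),
    ("b", 2, 6.0, None, None),
]
percentages = weekly_response_percentage(example, n_interns=2)
print(percentages)
```

Weeks in which no intern contributed any observation do not appear in the returned dictionary; a caller who needs them would have to add them with a value of 0.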

Main Findings

Primary Aim

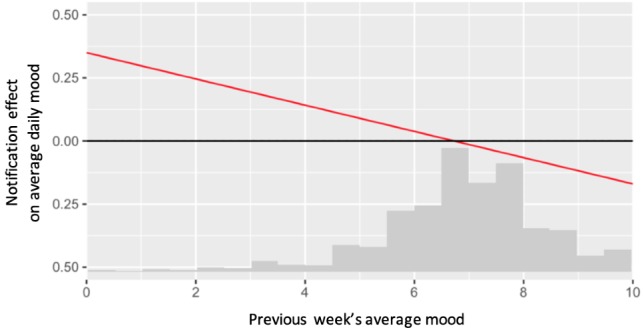

We conclude that the previous week’s average daily self-reported mood valence is a statistically significant negative moderator of the effect of notifications on the average daily self-reported mood valence. The estimate for the moderation is −0.052 (SE 0.014; 95% CI −0.081 to −0.023; P=.001).

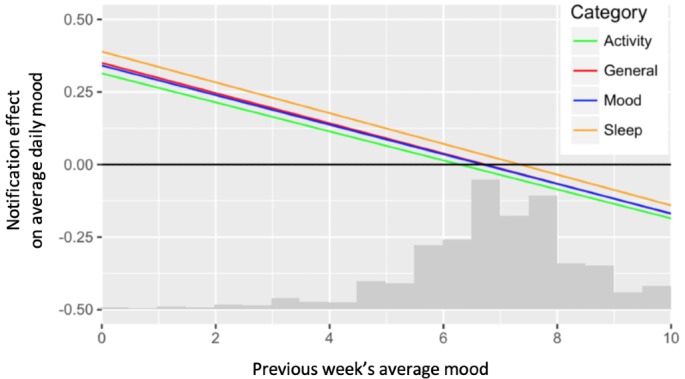

Figure 4 plots the estimated treatment effect at various values of the moderator. Figure 4 shows that the effect of notifications (compared with no notifications) was positive for weeks when the previous mood was low, but negative for weeks when the previous mood was high. For example, when the previous week’s average daily mood was 3, we estimated that a week of notifications increased an intern’s average daily mood by 0.19 (effect size=0.14). However, when the previous week’s average daily mood was 9, we estimated that a week of notifications decreased an intern’s average daily mood by 0.12 (effect size=−0.08).
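The two worked values above can be related to the reported moderation estimate with a short calculation. The sketch below assumes that the estimated treatment effect is a straight line in the previous week's mood, which is an interpretation of the linear moderation analysis rather than a reproduction of the fitted model; the inputs are the rounded values quoted in the text, and the function name `implied_effect` is hypothetical.

```python
# Two (previous-week mood, estimated effect) points quoted in the text.
low_mood, effect_at_low = 3.0, 0.19
high_mood, effect_at_high = 9.0, -0.12

# Slope of the straight line through the two points; this should be close
# to the reported moderation estimate if the effect is linear in the moderator.
slope = (effect_at_high - effect_at_low) / (high_mood - low_mood)

# Previous-week mood at which that line crosses zero (no estimated effect).
crossover = low_mood - effect_at_low / slope


def implied_effect(previous_mood: float) -> float:
    """Effect read off the line through the two quoted points (illustrative)."""
    return effect_at_low + slope * (previous_mood - low_mood)


print(round(slope, 3))      # -0.052
print(round(crossover, 1))  # 6.7
```

The implied slope agrees with the reported estimate of −0.052, and the implied crossover of roughly 6.7 lies near the mood cutoff of 7 discussed under Implications. Because the inputs are rounded, the crossover is approximate.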

Figure 4.

Estimated treatment effects (compared with no notifications) of notifications on average daily mood, at various values of previous week’s mood. The x-axis also contains a scaled histogram of previous week’s average mood.

Exploratory Subaim

For each notification category, we plotted the estimated treatment effect at various values of the moderator. Essentially, we broke apart the moderation effect in Figure 4 into 3 categories of notifications. The result is shown in Figure 5. We included the line for general notifications from Figure 4 for reference. Figure 5 demonstrates that the moderation by the previous week’s average daily mood was similar for all 3 notification categories.

Figure 5.

Estimated treatment effects (compared with no notifications) of different notification categories on average daily mood, at various values of previous week’s mood. The x-axis also contains a scaled histogram of previous week’s average mood.

When the previous week’s average daily mood was 3, we estimated that a week of mood, activity, and sleep notifications increased an intern’s average daily mood by 0.19, 0.16, and 0.23 (effect sizes=0.13, 0.11, and 0.16), respectively. When the previous week’s average daily mood was 9, we estimated that a week of mood, activity, and sleep notifications decreased an intern’s average daily mood by 0.12, 0.14, and 0.09 (effect sizes=−0.08, −0.10, and −0.06), respectively.

Secondary Aim 1

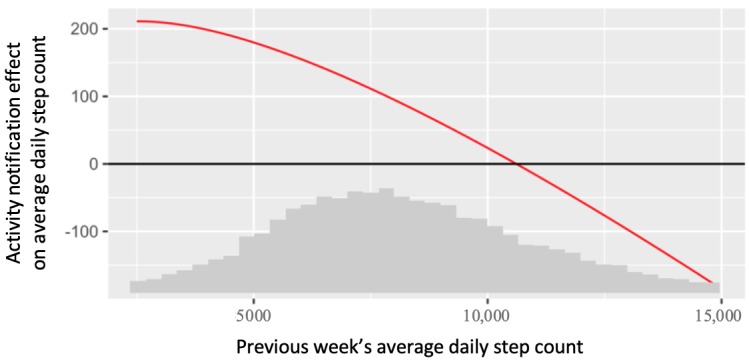

We conclude that the previous week’s average daily step count is a statistically significant negative moderator of the effect of activity notifications on average daily steps. The estimate for the moderation is −0.039 (SE 0.015; 95% CI −0.069 to −0.008; P=.01).

Figure 6 plots the estimated treatment effect at various values of the moderator. In Figure 6, for interpretability, we retransformed the moderation effect back from the analysis scale (square root) to the original scale. We see from Figure 6 that the effect of activity notifications (compared with no notifications) was positive for weeks when previous steps were low, but negative for weeks when previous steps were high. For example, when the previous week’s average daily step count was 5625, we estimated that a week of activity notifications increased an intern’s average daily step count by 165 steps (effect size=0.05). However, when the previous week’s average daily step count was 12,100, we estimated that a week of activity notifications decreased an intern’s average daily step count by 60 steps (effect size=−0.02).

Figure 6.

Estimated treatment effects (compared with no notifications) of activity notifications on average daily steps, at various values of previous week’s step counts. The x-axis also contains a scaled histogram of previous week’s average daily step count.

Secondary Aim 2

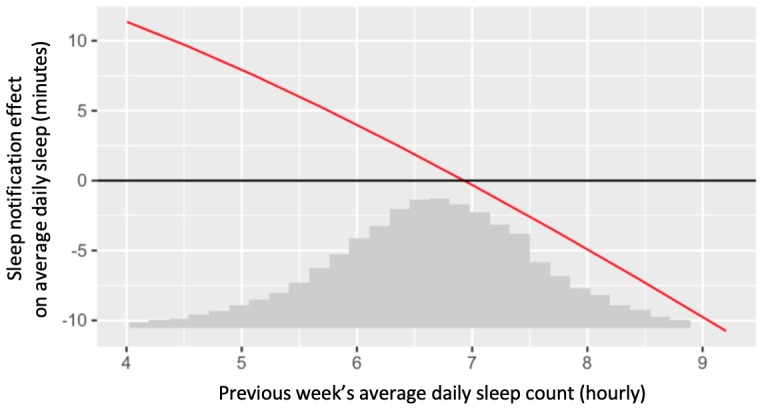

We conclude that the previous week’s average daily sleep is a statistically significant negative moderator of the effect of sleep notifications on average daily sleep. The estimate for the moderation is −0.075 (SE 0.018; 95% CI −0.111 to −0.038; P<.001).

Figure 7 plots the estimated treatment effect at various values of the moderator. Again, we retransformed the moderation effect back from the analysis scale (square root) to the original scale. In addition, for interpretability, the x-axis is on the hourly scale, whereas the y-axis is on the minute scale. We see from Figure 7 that the effect of sleep notifications (compared with no notifications) was positive for weeks when previous sleep was low, but negative for weeks when previous sleep was high. For example, when the previous week’s average daily sleep was 5 hours, we estimated that a week of sleep notifications increased an intern’s average daily sleep by 8 min (effect size=0.11). However, when the previous week’s average daily sleep was 8 hours, we estimated that a week of sleep notifications decreased an intern’s average daily sleep by 5 min (effect size=−0.07).

Figure 7.

Estimated treatment effects (compared with no notifications) of sleep notifications on average daily sleep (in minutes), at various values of the previous week’s average daily sleep (in hours). The x-axis also contains a scaled histogram of the previous week’s average daily sleep.

Additional Analyses

The Additional Analyses section of Multimedia Appendix 1 contains detailed results on other analyses, including an analysis of nonmoderated main effects, changes in effects over time, the effects of life insights and tips, the effects on long-term PHQ-9 scores, and an analysis of baseline moderators. There is evidence of a negative effect of (general) notifications on mood. There is also evidence of a positive effect of activity notifications on step count and a positive effect of sleep notifications on sleep duration. All of these effect sizes, however, are small. There is no strong evidence that these effects change over time. There is minor evidence that tips perform better than life insights in improving step count and sleep duration. We did not see any effects on long-term mental health outcomes. We saw some evidence of nonlinear moderation for the primary and secondary aims. The nonlinear moderator analysis suggested that when the moderators are high, the treatment effect on sleep hours and step count is close to 0 (as opposed to negative). Finally, we found that baseline variables, such as gender and depression history, were weak moderators of notification effects, demonstrating the value of personalizing intervention delivery on real-time data.

Discussion

Principal Findings

Through this MRT of an mHealth push notification intervention, we found that the effects of notifications were negatively moderated by the subject’s previous measurement of the outcome of interest. Specifically, we found that previous mood negatively moderated the effect of notifications on mood, previous step count negatively moderated the effect of activity notifications on step count, and previous sleep duration negatively moderated the effect of sleep notifications on sleep duration.

Comparison With Other Studies

A few previous studies explored using real-time variables to determine the timing of mHealth interventions for mental health. These studies postulated that messages would be most effective when self-reported mood was outside the typical range [21], or when self-reported stress or negative affect was high [22]. The studies found that such timing does improve efficacy. Our work differs from these studies because we did not assume, beforehand, that interventions would be most effective during a predetermined time. Instead, we used the MRT design to learn opportune times to send interventions, based on real-time objective and self-reported data.

Outside of mental health, there have been studies that have sought to learn opportune times to send interventions. Much of that work is focused on assessing in-the-moment interruptibility, namely times when a user is open to interruption and willing to engage with a notification. For example, in one study [62], the authors found that phone usage, time of day, and location were strong predictors of a user’s willingness to engage with content provided via a push notification. Another study [63] found that location, affect, current activity, time of day, day of week, and current stress are significant predictors of a user’s willingness to respond to an EMA prompt. Another study [64] used an MRT to causally demonstrate that notifications (which ask users to self-monitor) are more effective when sent mid-day and on weekends. Our study differs from this work. In our study, the outcome was not focused on short-term engagement with the notification but rather longer-term behavior change, such as improved weekly mood, activity, or sleep.

Most standardized effect sizes within this paper fell within the 0.05 to 0.15 range. According to the suggested definitions of small and large [65], the effect sizes for our interventions are small. However, these definitions of small and large may not directly apply to the causal effects assessed in MRTs [66]. As MRTs are a relatively new trial design, there are currently no accepted definitions of large and small [66]. For the 3 MRTs with published effect sizes, the effect sizes were 0.074 [67], 0.2 [66], and 0.1 [66]. The effect sizes within this paper are similar in magnitude to those reported in these other works.
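For readers who want to relate the raw effects to the standardized effect sizes, the following sketch divides each raw effect quoted in the Results by the SD of the corresponding weekly average in Table 2. That this is how the effect sizes were standardized is an assumption; the text here does not state the denominator used, so the calculation is only an approximate consistency check. The helper name `standardized` is hypothetical.

```python
# (raw effect, SD of the weekly average from Table 2), in matching units.
checks = {
    "mood, previous mood 3": (0.19, 1.43),
    "mood, previous mood 9": (-0.12, 1.43),
    "steps, previous steps 5625": (165, 3285),
    "steps, previous steps 12,100": (-60, 3285),
    "sleep, previous sleep 5 h": (8 / 60, 1.25),   # minutes converted to hours
    "sleep, previous sleep 8 h": (-5 / 60, 1.25),  # minutes converted to hours
}


def standardized(effect: float, sd: float) -> float:
    """Raw effect divided by an outcome SD (assumed standardization)."""
    return effect / sd


for label, (effect, sd) in checks.items():
    print(f"{label}: {standardized(effect, sd):+.2f}")
```

Under this assumption, the step and sleep values round to the reported effect sizes (0.05, −0.02, 0.11, and −0.07), and the mood values round to 0.13 and −0.08. The small difference from the reported 0.14 for general notifications at a previous mood of 3 may reflect rounding of the raw effect.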

Implications

Our principal findings demonstrate that the study interns’ current state meaningfully influences their receptivity to mHealth interventions for mental health. Effective mHealth interventions for individuals in stressful work environments must consider timing notification delivery based on recent real-time data. Delivering notifications when previous measurements of mood, sleep, and activity are low (that is, when improvement is needed) benefits mood and behavior. However, delivering notifications when those variables are high negatively impacts mood and behavior.

mHealth interventions aiming to increase mood, activity, and sleep can be improved based on these findings. An improved mHealth intervention for increasing mood would deliver notifications (of any type) only when the user’s previous week’s average daily mood is below 7 and send nothing when previous mood is at or above 7. Similarly, for activity, an improved intervention would deliver activity notifications only when the user’s previous week’s average daily step count is below 10,614 steps and deliver nothing otherwise. For sleep, an improved intervention would deliver sleep notifications only when the user’s previous week’s average daily sleep duration is below 6.9 hours. These improved interventions are based upon our single trial, with small effect sizes. There is potential for larger effects through further intervention optimization and using different intervention groups in conjunction with each other. Consistent with the multiphase optimization strategy (MOST) framework [68,69], these suggested interventions should be further refined and evaluated in additional studies and confirmatory trials before being used broadly.
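The delivery rules described above can be written as a simple threshold check. The following is a minimal sketch, not an implementation used in the study: the function name `should_send`, the target labels, and the constant names are hypothetical, and the cutoffs are the values quoted in this section, which come from a single trial and would need confirmation before use.

```python
# Cutoffs quoted in the text; each applies to the previous week's average.
MOOD_CUTOFF = 7.0           # average daily mood score
STEPS_CUTOFF = 10_614       # average daily step count
SLEEP_CUTOFF_HOURS = 6.9    # average daily sleep duration in hours

CUTOFFS = {
    "mood": MOOD_CUTOFF,          # any notification type, targeting mood
    "activity": STEPS_CUTOFF,     # activity notifications, targeting steps
    "sleep": SLEEP_CUTOFF_HOURS,  # sleep notifications, targeting sleep
}


def should_send(target: str, previous_week_average: float) -> bool:
    """Return True if a notification for this target should be delivered.

    Notifications are sent only when the previous week's average for the
    targeted outcome is strictly below its cutoff; otherwise nothing is sent.
    """
    if target not in CUTOFFS:
        raise ValueError(f"unknown target: {target!r}")
    return previous_week_average < CUTOFFS[target]


# Usage: a low-mood week triggers notifications, a high-step week does not.
print(should_send("mood", 5.5))         # True
print(should_send("activity", 12_000))  # False
```

How to handle a week with no usable previous measurement is not specified in the text, so the sketch leaves that case to the caller.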

Study Strengths

Through the MRT design and repeatedly randomizing interns throughout the trial, we were able to assess causal effect moderation by time-varying measurements. Our large sample size (1565 interns) allowed us to detect the moderators of interest. The relatively long duration of the study (6 months) demonstrated that our conclusions are valid beyond the first few weeks and months of the study (we analyzed how treatment effects vary over time in the Additional Analyses of Multimedia Appendix 1). Our study focused on medical interns, which provided a unique opportunity to assess the efficacy of mHealth interventions on wellness during life and work stressors. There were also advantages of our analytic approach. First, the use of the multicategorical extension of the weighted and centered least-squares estimator allowed an unbiased assessment of the causal effect moderation. Second, our imputation method allowed us to cope with missing data without requiring strong assumptions.

Limitations

The primary outcome for the study, mood valence, was self-reported. Self-reported outcomes may be less reliable and valid compared with objective measurements [70,71]. In addition, because of user nonresponse, missing data is a common issue with self-reported outcomes collected over an extended period [59]. In future studies, developing and using a passively collected objective measurement of depression could be beneficial for improving objectivity and reducing missing data.

The main findings of the IHS MRT are partially sensitive to the imputation method used for overcoming missing data (see Missing Data and Sensitivity Analyses in Multimedia Appendix 1). The conclusions of the primary aim and secondary aim 2 are not sensitive. The conclusion of secondary aim 1 (the negative moderation of the activity notification effect by previous step count), however, is sensitive to the imputation method.

The results of the IHS MRT may not extrapolate to other populations because medical interns are different from the general population in the average education level and socioeconomic status. Within the population of medical interns, sampling bias may still exist as the study’s interns self-selected into the study, as opposed to being randomly sampled. This self-selection bias may cause the study interns to be different from the general population of interns. For example, because they were motivated to participate in the study, they may also be motivated to change their behavior. Although the absence of self-selection bias is difficult to demonstrate, the bias may be mitigated because a large percentage of interns agreed to participate in the study (40.78%), and the study interns’ baseline information closely resembles that of the general medical intern population [29].

Daily work schedules were not reliably measured in this study. Previous studies [63,64] have found that mHealth message effectiveness varies between weekdays and weekends, suggesting that future studies should assess work schedule as a potential moderator.

Measurements of app engagement could provide further insights into how these notifications are promoting behavior change. For example, after receiving a notification, users may have an increased rate of opening the app and viewing their historical data displays. Unfortunately, the app does not currently collect data on app access and app clicks. It also does not measure a user’s interactions with the notification messages. Including these capabilities in future versions of the app would be useful.

We did not have message tailoring in this study. The message framing and wording were the same, no matter the intern’s current behavior. The messages (see Table 1) are framed toward improving mood, sleep, and activity. This framing may be frustrating to an intern who already has a high mood or sufficient sleep or activity. Tailoring the wording of the messages [72,73] could potentially eliminate the negative effect of messages when previous mood, sleep, or activity is high (eg, providing a reminder message as opposed to an unnecessary ability-focused message).

A couple of technical errors also occurred during the trial. There were 8 days (of the 182 total days) when, because of server issues, no notifications were sent to any subject. In addition, the weekly randomization to a notification category occurred without replacement, as opposed to with replacement as originally intended.

Future Iterations of the Intern Health Study

The IHS is an annual study that continues each year with a new cohort of interns [30]. Consistent with the MOST framework [68,69], this provides multiple trial phases to continually update, optimize, and test interventions and provides confirmation of findings from previous cohorts. Starting in the fall of 2019, we will run another study to test new hypotheses with improved interventions. Using the results and conclusions drawn from this study, in 2019, we plan to introduce messages tailored to an intern’s previous mood, activity, and sleep [72,73]. For people with high previous measurements, the messages will be framed toward maintenance of healthy behavior, not improvement. The cutoffs that define high and low scores will be based on data collected from the 2018 study. We also plan to improve the missing data protocol and incentive structure to reduce the frequency of missing data. We will collect work schedule information to compare message efficacy between work days and days off. Finally, in addition to providing notifications on the phone lock screen, we will also show the notifications within the app to give interns more opportunities to read them.

Conclusions

Overall, our study demonstrates the importance of real-time moderators for the development of high-quality mHealth interventions, especially for individuals in stressful work environments. There were times when the notifications were beneficial and times when the notifications were harmful to the study participants. Developers of mHealth interventions are encouraged to think deeply about the delivery of interventions and how real-time variables can be used to inform the timing of intervention delivery. The MRT design allowed us to discover real-time moderators and may be useful for other app developers also aiming to learn when to deliver notification messages.

In addition to the research aims for future iterations of the IHS, assessing the value of mHealth interventions and delivery timing in other highly stressed populations is beneficial for understanding the generality of these results. Future MRTs should also examine the efficacy of mHealth content (eg, content focused on motivation, ability, or triggers) incorporated into other app features for behavior change. In this regard, developing mHealth intervention features beyond push notifications (eg, integrating ability-focused mindfulness exercises) could provide a greater overall benefit.

Acknowledgments

The authors would like to thank Dr Inbal Nahum-Shani, Dr Daniel Almirall, Jessica Liu, Mengjie Shi, and Dr Stephanie Carpenter for valuable feedback on this work. ZW and SS acknowledge support by the National Institutes of Health (NIH) grant R01MH101459 and an investigator award from the Michigan Precision Health Initiative. ZW acknowledges the support of the NIH National Cancer Institute award P30CA046592 through the Cancer Center Support Grant Development Funds from the Rogel Cancer Center and start-up funds from Michigan Institute for Data Science. AT acknowledges the support of the National Science Foundation via CAREER grant IIS-1452099. SS acknowledges the support of the American Foundation for Suicide Prevention (LSRG-0-059-16).

Abbreviations

- EMA: ecological momentary assessment

- IHS: Intern Health Study

- JITAI: just-in-time adaptive intervention

- MRT: micro-randomized trial

- mHealth: mobile health

- MOST: multiphase optimization strategy

- NIH: National Institutes of Health

- PHQ-9: Patient Health Questionnaire-9

- RCT: randomized controlled trial

Appendix

Additional analyses and statistical details.

CONSORT-eHEALTH Checklist (V 1.6.1).

Footnotes

Conflicts of Interest: None declared.

References

- 1. World Health Organization. 2017. [2020-01-31]. Depression and Other Common Mental Disorders: Global Health Estimates https://apps.who.int/iris/bitstream/handle/10665/254610/WHO-MSD-MER-2017.2-eng.pdf.

- 2. Greenberg PE, Fournier A, Sisitsky T, Pike CT, Kessler RC. The economic burden of adults with major depressive disorder in the United States (2005 and 2010) J Clin Psychiatry. 2015 Mar;76(2):155–62. doi: 10.4088/JCP.14m09298. http://www.psychiatrist.com/jcp/article/pages/2015/v76n02/v76n0204.aspx.

- 3. Hawkins JD, Jenson JM, Catalano R, Fraser MW, Botvin GJ, Shapiro V, Brown CH, Beardslee W, Brent D, Leslie LK, Rotheram-Borus MJ, Shea P, Shih A, Anthony E, Haggerty KP, Bender K, Gorman-Smith D, Casey E, Stone S. Unleashing the power of prevention. NAM Perspectives. 2015;5(6):1343–4. doi: 10.31478/201506c.

- 4. Tennant C. Work-related stress and depressive disorders. J Psychosom Res. 2001 Dec;51(5):697–704. doi: 10.1016/s0022-3999(01)00255-0.

- 5. Baucom KJ, Queen TL, Wiebe DJ, Turner SL, Wolfe KL, Godbey EI, Fortenberry KT, Mansfield JH, Berg CA. Depressive symptoms, daily stress, and adherence in late adolescents with type 1 diabetes. Health Psychol. 2015 May;34(5):522–30. doi: 10.1037/hea0000219. http://europepmc.org/abstract/MED/25798545.

- 6. Everett KD, Brantley PJ, Sletten C, Jones GN, McKnight GT. The relation of stress and depression to interdialytic weight gain in hemodialysis patients. Behav Med. 1995;21(1):25–30. doi: 10.1080/08964289.1995.9933739.

- 7. Nahum-Shani I, Smith SN, Spring BJ, Collins LM, Witkiewitz K, Tewari A, Murphy SA. Just-in-time adaptive interventions (JITAIs) in mobile health: key components and design principles for ongoing health behavior support. Ann Behav Med. 2018 May 18;52(6):446–62. doi: 10.1007/s12160-016-9830-8. http://europepmc.org/abstract/MED/27663578.

- 8. Zhang S, Elhadad N. Factors contributing to dropping-out in an online health community: static and longitudinal analyses. AMIA Annu Symp Proc. 2016;2016:2090–9. http://europepmc.org/abstract/MED/28269969.

- 9. Heckman BW, Mathew AR, Carpenter MJ. Treatment burden and treatment fatigue as barriers to health. Curr Opin Psychol. 2015 Oct 1;5:31–6. doi: 10.1016/j.copsyc.2015.03.004. http://europepmc.org/abstract/MED/26086031.

- 10. Riley WT, Rivera DE, Atienza AA, Nilsen W, Allison SM, Mermelstein R. Health behavior models in the age of mobile interventions: are our theories up to the task? Transl Behav Med. 2011 Mar;1(1):53–71. doi: 10.1007/s13142-011-0021-7. http://europepmc.org/abstract/MED/21796270.

- 11. Spruijt-Metz D, Nilsen W. Dynamic models of behavior for just-in-time adaptive interventions. IEEE Pervasive Comput. 2014;13(3):13–7. doi: 10.1109/MPRV.2014.46.

- 12. Riley WT. Theoretical models to inform technology-based health behavior interventions. In: Marsch LA, Lord SE, Dallery J, editors. Behavioral Healthcare and Technology: Using Science-Based Innovations to Transform Practice. Oxford, United Kingdom: Oxford University Press; 2014. pp. 13–24.

- 13. Wang L, Miller LC. Just-in-the-moment adaptive interventions (JITAI): a meta-analytical review. Health Commun. 2019 Oct 5;:1–14. doi: 10.1080/10410236.2019.1652388.

- 14. Miner A, Kuhn E, Hoffman JE, Owen JE, Ruzek JI, Taylor CB. Feasibility, acceptability, and potential efficacy of the PTSD Coach app: a pilot randomized controlled trial with community trauma survivors. Psychol Trauma. 2016 May;8(3):384–92. doi: 10.1037/tra0000092.

- 15. Ahmedani BK, Crotty N, Abdulhak MM, Ondersma SJ. Pilot feasibility study of a brief, tailored mobile health intervention for depression among patients with chronic pain. Behav Med. 2015;41(1):25–32. doi: 10.1080/08964289.2013.867827.

- 16. Possemato K, Kuhn E, Johnson E, Hoffman JE, Owen JE, Kanuri N, de Stefano L, Brooks E. Using PTSD Coach in primary care with and without clinician support: a pilot randomized controlled trial. Gen Hosp Psychiatry. 2016;38:94–8. doi: 10.1016/j.genhosppsych.2015.09.005.

- 17. Rizvi SL, Dimeff LA, Skutch J, Carroll D, Linehan MM. A pilot study of the DBT coach: an interactive mobile phone application for individuals with borderline personality disorder and substance use disorder. Behav Ther. 2011 Dec;42(4):589–600. doi: 10.1016/j.beth.2011.01.003.

- 18. Kristjánsdóttir OB, Fors EA, Eide E, Finset A, Stensrud TL, van Dulmen S, Wigers SH, Eide H. A smartphone-based intervention with diaries and therapist-feedback to reduce catastrophizing and increase functioning in women with chronic widespread pain: randomized controlled trial. J Med Internet Res. 2013 Jan 7;15(1):e5. doi: 10.2196/jmir.2249. https://www.jmir.org/2013/1/e5/

- 19. Proudfoot J, Clarke J, Birch M, Whitton AE, Parker G, Manicavasagar V, Harrison V, Christensen H, Hadzi-Pavlovic D. Impact of a mobile phone and web program on symptom and functional outcomes for people with mild-to-moderate depression, anxiety and stress: a randomised controlled trial. BMC Psychiatry. 2013 Dec 18;13:312. doi: 10.1186/1471-244X-13-312. https://bmcpsychiatry.biomedcentral.com/articles/10.1186/1471-244X-13-312.

- 20. Newman MG, Przeworski A, Consoli AJ, Taylor CB. A randomized controlled trial of ecological momentary intervention plus brief group therapy for generalized anxiety disorder. Psychotherapy (Chic) 2014 Jul;51(2):198–206. doi: 10.1037/a0032519. http://europepmc.org/abstract/MED/24059730.

- 21. Burns MN, Begale M, Duffecy J, Gergle D, Karr CJ, Giangrande E, Mohr DC. Harnessing context sensing to develop a mobile intervention for depression. J Med Internet Res. 2011 Aug 12;13(3):e55. doi: 10.2196/jmir.1838. https://www.jmir.org/2011/3/e55/

- 22. Smyth JM, Heron KE. Is Providing Mobile Interventions 'Just-in-time' Helpful? An Experimental Proof of Concept Study of Just-in-time Intervention for Stress Management. Proceedings of the 2016 IEEE Wireless Health; WH'16; October 25-27, 2016; Bethesda, MD, USA. 2016. pp. 1–7.

- 23. Klasnja P, Hekler EB, Shiffman S, Boruvka A, Almirall D, Tewari A, Murphy SA. Microrandomized trials: an experimental design for developing just-in-time adaptive interventions. Health Psychol. 2015 Dec;34S:1220–8. doi: 10.1037/hea0000305. http://europepmc.org/abstract/MED/26651463.

- 24. Kalmbach DA, Fang Y, Arnedt JT, Cochran AL, Deldin PJ, Kaplin AI, Sen S. Effects of sleep, physical activity, and shift work on daily mood: a prospective mobile monitoring study of medical interns. J Gen Intern Med. 2018 Jun;33(6):914–20. doi: 10.1007/s11606-018-4373-2. http://europepmc.org/abstract/MED/29542006.

- 25. Akerstedt T. Psychosocial stress and impaired sleep. Scand J Work Environ Health. 2006 Dec;32(6):493–501. https://www.sjweh.fi/show_abstract.php?abstract_id=1054.

- 26. Lallukka T, Sarlio-Lähteenkorva S, Roos E, Laaksonen M, Rahkonen O, Lahelma E. Working conditions and health behaviours among employed women and men: the Helsinki Health Study. Prev Med. 2004 Jan;38(1):48–56. doi: 10.1016/j.ypmed.2003.09.027.

- 27. Baglioni C, Battagliese G, Feige B, Spiegelhalder K, Nissen C, Voderholzer U, Lombardo C, Riemann D. Insomnia as a predictor of depression: a meta-analytic evaluation of longitudinal epidemiological studies. J Affect Disord. 2011 Dec;135(1-3):10–9. doi: 10.1016/j.jad.2011.01.011.

- 28. Ströhle A. Physical activity, exercise, depression and anxiety disorders. J Neural Transm (Vienna) 2009 Jul;116(6):777–84. doi: 10.1007/s00702-008-0092-x.

- 29. Mata DA, Ramos MA, Bansal N, Khan R, Guille C, di Angelantonio E, Sen S. Prevalence of depression and depressive symptoms among resident physicians: a systematic review and meta-analysis. J Am Med Assoc. 2015 Dec 8;314(22):2373–83. doi: 10.1001/jama.2015.15845. http://europepmc.org/abstract/MED/26647259.

- 30. The Sen Lab. [2020-01-31]. Intern Health Study https://www.srijan-sen-lab.com/intern-health-study.

- 31. Kraemer HC, Wilson GT, Fairburn CG, Agras WS. Mediators and moderators of treatment effects in randomized clinical trials. Arch Gen Psychiatry. 2002 Oct;59(10):877–83. doi: 10.1001/archpsyc.59.10.877.

- 32. Fitbit. [2020-01-31]. Fitbit Charge 2 User Manual https://staticcs.fitbit.com/content/assets/help/manuals/manual_charge_2_en_US.pdf.

- 33. Shiffman S, Stone AA, Hufford MR. Ecological momentary assessment. Annu Rev Clin Psychol. 2008;4:1–32. doi: 10.1146/annurev.clinpsy.3.022806.091415.

- 34. Apple Developer. [2020-01-31]. ResearchKit https://developer.apple.com/researchkit/

- 35. Löwe B, Kroenke K, Gräfe K. Detecting and monitoring depression with a two-item questionnaire (PHQ-2) J Psychosom Res. 2005 Mar;58(2):163–71. doi: 10.1016/j.jpsychores.2004.09.006.

- 36. Foreman AC, Hall C, Bone K, Cheng J, Kaplin A. Just text me: using SMS technology for collaborative patient mood charting. J Particip Med. 2011;3:e45. https://participatorymedicine.org/journal/columns/innovations/2011/09/26/just-text-me-using-sms-technology-for-collaborative-patient-mood-charting/

- 37. Kroenke K, Spitzer RL, Williams JB. The PHQ-9: validity of a brief depression severity measure. J Gen Intern Med. 2001 Oct;16(9):606–13. doi: 10.1046/j.1525-1497.2001.016009606.x. https://onlinelibrary.wiley.com/resolve/openurl?genre=article&sid=nlm:pubmed&issn=0884-8734&date=2001&volume=16&issue=9&spage=606.

- 38. Fitbit. [2020-01-31]. Welcome. It's an API https://www.fitbit.com/dev.

- 39. Korotitsch WJ, Nelson-Gray RO. An overview of self-monitoring research in assessment and treatment. Psychol Assess. 1999;11(4):415–25. doi: 10.1037/1040-3590.11.4.415.

- 40. Fogg BJ. A Behavior Model for Persuasive Design. Proceedings of the 4th International Conference on Persuasive Technology; Persuasive'09; April 26 - 29, 2009; Claremont, California, USA. 2009. pp. 1–7.

- 41. de Leon E, Fuentes LW, Cohen JE. Characterizing periodic messaging interventions across health behaviors and media: systematic review. J Med Internet Res. 2014 Mar 25;16(3):e93. doi: 10.2196/jmir.2837. https://www.jmir.org/2014/3/e93/

- 42. Morrison LG, Hargood C, Pejovic V, Geraghty AW, Lloyd S, Goodman N, Michaelides DT, Weston A, Musolesi M, Weal MJ, Yardley L. The effect of timing and frequency of push notifications on usage of a smartphone-based stress management intervention: an exploratory trial. PLoS One. 2017;12(1):e0169162. doi: 10.1371/journal.pone.0169162. http://dx.plos.org/10.1371/journal.pone.0169162.

- 43. Ben-Zeev D, Schueller SM, Begale M, Duffecy J, Kane JM, Mohr DC. Strategies for mHealth research: lessons from 3 mobile intervention studies. Adm Policy Ment Health. 2015 Mar;42(2):157–67. doi: 10.1007/s10488-014-0556-2. http://europepmc.org/abstract/MED/24824311.

- 44. Danaher BG, Brendryen H, Seeley JR, Tyler MS, Woolley T. From black box to toolbox: outlining device functionality, engagement activities, and the pervasive information architecture of mHealth interventions. Internet Interv. 2015 Mar 1;2(1):91–101. doi: 10.1016/j.invent.2015.01.002. https://linkinghub.elsevier.com/retrieve/pii/S2214-7829(15)00003-2.

- 45. Suggs S, Blake H, Bardus M, Lloyd S. Effects of text messaging in addition to emails on physical activity among university and college employees in the UK. J Health Serv Res Policy. 2013 May;18(1 Suppl):56–64. doi: 10.1177/1355819613478001.

- 46. Miller WR, Rollnick S. Motivational Interviewing: Preparing People for Change. Second Edition. New York, US: The Guilford Press; 2002.

- 47. Miller WR, Rose GS. Toward a theory of motivational interviewing. Am Psychol. 2009 Oct;64(6):527–37. doi: 10.1037/a0016830. http://europepmc.org/abstract/MED/19739882.

- 48. Resnicow K, McMaster F. Motivational Interviewing: moving from why to how with autonomy support. Int J Behav Nutr Phys Act. 2012 Mar 2;9:19. doi: 10.1186/1479-5868-9-19. https://ijbnpa.biomedcentral.com/articles/10.1186/1479-5868-9-19.

- 49. Bandura A. Social Foundations of Thought and Action: A Social Cognitive Theory. Upper Saddle River, New Jersey: Prentice Hall; 1986.

- 50. DiClemente CC, Marinilli AS, Singh M, Bellino LE. The role of feedback in the process of health behavior change. Am J Health Behav. 2001;25(3):217–27. doi: 10.5993/ajhb.25.3.8.

- 51. Harkin B, Webb TL, Chang BP, Prestwich A, Conner M, Kellar I, Benn Y, Sheeran P. Does monitoring goal progress promote goal attainment? A meta-analysis of the experimental evidence. Psychol Bull. 2016 Mar;142(2):198–229. doi: 10.1037/bul0000025.

- 52. Hockey R. The Psychology of Fatigue: Work, Effort and Control. Cambridge, UK: Cambridge University Press; 2013.

- 53. Firebase - Google. [2020-01-31]. Firebase Cloud Messaging. https://firebase.google.com/docs/cloud-messaging.

- 54. Qualtrics XM // The Leading Experience Management Software. [2020-01-31]. https://www.qualtrics.com.

- 55. Boruvka A, Almirall D, Witkiewitz K, Murphy SA. Assessing time-varying causal effect moderation in mobile health. J Am Stat Assoc. 2018;113(523):1112–21. doi: 10.1080/01621459.2017.1305274. http://europepmc.org/abstract/MED/30467446.

- 56. Huber PJ. The behavior of maximum likelihood estimates under nonstandard conditions. Berkeley Symp Math Stat Probab. 1967;1:221–33. https://projecteuclid.org/euclid.bsmsp/1200512988.

- 57. Halekoh U, Højsgaard S, Yan J. The R package GEEPACK for generalized estimating equations. J Stat Soft. 2006;15(2):1–11. doi: 10.18637/jss.v015.i02.

- 58. Little RJ, Rubin DB. Statistical Analysis with Missing Data. Second Edition. Hoboken, New Jersey: Wiley-Interscience; 2002.

- 59. Bell ML, Fairclough DL. Practical and statistical issues in missing data for longitudinal patient-reported outcomes. Stat Methods Med Res. 2014 Oct;23(5):440–59. doi: 10.1177/0962280213476378.

- 60. Shortreed SM, Laber E, Scott Stroup T, Pineau J, Murphy SA. A multiple imputation strategy for sequential multiple assignment randomized trials. Stat Med. 2014 Oct 30;33(24):4202–14. doi: 10.1002/sim.6223. http://europepmc.org/abstract/MED/24919867.

- 61. Grund S, Robitzsch A, Luedtke O. mitml: Tools for Multiple Imputation in Multilevel Modeling. https://rdrr.io/cran/mitml/

- 62. Pielot M, Cardoso B, Katevas K, Serrà J, Matic A, Oliver N. Beyond interruptibility: predicting opportune moments to engage mobile phone users. Proc ACM Interact Mob Wearable Ubiquitous Technol. 2017;1(3):1–25. doi: 10.1145/3130956.

- 63. Sarker H, Sharmin M, Ali AA, Rahman MM, Bari R, Hossain SM, Kumar S. Assessing the availability of users to engage in just-in-time intervention in the natural environment. Proc ACM Int Conf Ubiquitous Comput. 2014;2014:909–20. doi: 10.1145/2632048.2636082. http://europepmc.org/abstract/MED/25798455.

- 64. Bidargaddi N, Almirall D, Murphy S, Nahum-Shani I, Kovalcik M, Pituch T, Maaieh H, Strecher V. To prompt or not to prompt? A microrandomized trial of time-varying push notifications to increase proximal engagement with a mobile health app. JMIR Mhealth Uhealth. 2018 Dec 29;6(11):e10123. doi: 10.2196/10123. https://mhealth.jmir.org/2018/11/e10123/

- 65. Cohen J. Statistical Power Analysis for the Behavioral Sciences. Second Edition. Mahwah, New Jersey, United States: Lawrence Erlbaum Associates; 1988.

- 66. Luers B, Klasnja P, Murphy S. Standardized effect sizes for preventive mobile health interventions in micro-randomized trials. Prev Sci. 2019 Jan;20(1):100–9. doi: 10.1007/s11121-017-0862-5. http://europepmc.org/abstract/MED/29318443.

- 67. Klasnja P, Smith S, Seewald NJ, Lee A, Hall K, Luers B, Hekler EB, Murphy SA. Efficacy of contextually tailored suggestions for physical activity: a micro-randomized optimization trial of HeartSteps. Ann Behav Med. 2019 May 3;53(6):573–82. doi: 10.1093/abm/kay067. http://europepmc.org/abstract/MED/30192907.

- 68. Collins LM, Murphy SA, Strecher V. The multiphase optimization strategy (MOST) and the sequential multiple assignment randomized trial (SMART): new methods for more potent eHealth interventions. Am J Prev Med. 2007 May;32(5 Suppl):S112–8. doi: 10.1016/j.amepre.2007.01.022. http://europepmc.org/abstract/MED/17466815.

- 69. The Methodology Center. [2020-01-31]. Overview of MOST. http://www.methodology.psu.edu/ra/most/research/

- 70. Prince SA, Adamo KB, Hamel ME, Hardt J, Gorber SC, Tremblay M. A comparison of direct versus self-report measures for assessing physical activity in adults: a systematic review. Int J Behav Nutr Phys Act. 2008 Dec 6;5:56. doi: 10.1186/1479-5868-5-56. https://ijbnpa.biomedcentral.com/articles/10.1186/1479-5868-5-56.

- 71. Frost MH, Reeve BB, Liepa AM, Stauffer JW, Hays RD, Mayo/FDA Patient-Reported Outcomes Consensus Meeting Group. What is sufficient evidence for the reliability and validity of patient-reported outcome measures? Value Health. 2007;10(Suppl 2):S94–105. doi: 10.1111/j.1524-4733.2007.00272.x. https://linkinghub.elsevier.com/retrieve/pii/VHE272.

- 72. Krebs P, Prochaska JO, Rossi JS. A meta-analysis of computer-tailored interventions for health behavior change. Prev Med. 2010;51(3-4):214–21. doi: 10.1016/j.ypmed.2010.06.004. http://europepmc.org/abstract/MED/20558196.

- 73. Hawkins RP, Kreuter M, Resnicow K, Fishbein M, Dijkstra A. Understanding tailoring in communicating about health. Health Educ Res. 2008 Jul;23(3):454–66. doi: 10.1093/her/cyn004. http://europepmc.org/abstract/MED/18349033.

Associated Data

Supplementary Materials

Additional analyses and statistical details.

CONSORT-eHEALTH Checklist (V 1.6.1).