Abstract

Background Failure to complete recommended diagnostic tests may increase the risk of diagnostic errors.

Objectives The aim of this study is to develop and evaluate an electronic monitoring tool that notifies the responsible clinician of incomplete imaging tests for their ambulatory patients.

Methods A results notification workflow engine was created at an academic medical center. It identified future appointments for imaging studies and notified the ordering physician of incomplete tests by secure email. To assess the impact of the intervention, the project team surveyed participating physicians and measured test completion rates within 90 days of the scheduled appointment. Analyses compared test completion rates among patients of intervention and usual care clinicians at baseline and follow-up. A multivariate logistic regression model was used to control for secular trends and differences between cohorts.

Results A total of 725 patients of 16 intervention physicians had 1,016 delayed imaging studies; 2,023 patients of 42 usual care clinicians had 2,697 delayed studies. In the first month, physicians indicated in 23/30 cases that they were unaware of the missed test prior to notification. The 90-day test completion rate was lower in the usual care than intervention group in the 6-month baseline period (18.8 vs. 22.1%, p = 0.135). During the 12-month follow-up period, there was a significant improvement favoring the intervention group (20.9 vs. 25.5%, p = 0.027). The change was driven by improved completion rates among patients referred for mammography (21.0 vs. 30.1%, p = 0.003). Multivariate analyses showed no significant impact of the intervention.

Conclusion There was a temporal association between email alerts to physicians about missed imaging tests and improved test completion at 90 days, although baseline differences in intervention and usual care groups limited the ability to draw definitive conclusions. Research is needed to understand the potential benefits and limitations of missed test notifications to reduce the risk of delayed diagnoses, particularly in vulnerable patient populations.

Keywords: diagnostic error, results management, patient safety, radiology, electronic alerts and reminders

Background and Significance

Diagnostic errors are potentially serious and preventable events, affecting as many as 15% of primary care patients in the United States. 1 2 They are a significant cause of malpractice claims and suits, and the fastest growing source of malpractice liability in primary care and emergency department settings according to a consortium of large US insurers. 3 4 5 6 Diagnostic error is an international problem, affecting both high- and low-income countries. 2 The World Health Organization recognized diagnostic error as a priority area, emphasizing the potential value of information technology interventions. 7

Diagnostic errors may result from faulty medical decision-making. Cognitive biases can lead to premature closure, anchoring (the tendency to rely disproportionately on an initial piece of information), and other heuristics that may preclude a complete or measured assessment of the data available to the clinician and the range of diagnostic possibilities. 8 9 10 Diagnostic errors also result from poorly configured clinical processes. Effective diagnosis results from a multistep process that includes a presenting complaint, clinical examination and testing, receipt, review, and interpretation of results, and communication of findings to the patient and the next provider of care. 11 Lapses in the diagnostic process can imperil the entire process, resulting in diagnostic delays and poor patient outcomes. 12

Progress in improving the diagnostic process has relied heavily on the electronic health record (EHR). Informatics specialists have used EHRs to create alerts and reminders, test result registries, “closed-loop” communication tools, and novel data-mining capabilities. 13 14 15 A limitation of most EHRs, however, is a dearth of tools to help busy clinicians to identify cases where the patient may have failed to complete a test or referral. Diagnostic errors may occur when a patient fails to complete an appointment with a surgeon to assess a breast lump, or for follow-up of a radiographic incidental finding, or for a repeat colonoscopy in the setting of rectal bleeding. Many EHRs lack tools to flag cases when the patient failed to complete a recommended test or procedure, or when the procedure was canceled and not rescheduled. We have observed that even among EHRs that can identify incomplete orders, follow-up of these cases may be inconsistent and dependent on the practice style of the “destination” clinician, the radiology department, or the ordering clinician's active surveillance of pending orders.

Although several groups have reported the development of “safety net” methods to ensure that failed follow-up tests and referrals are detected and reported to the responsible clinician, this approach is not widely available. 16 17 It requires data-mining capabilities and a significant human resource investment. As a result, many busy clinicians maintain personal tickler files, arranged by due date, to remind themselves of important pending results. These files may be difficult for other members of the care team to access.

Objectives

To reduce the risk of incomplete diagnostic tests in ambulatory care and to improve the likelihood of timely follow-up, we undertook a project to create a tool, leveraging embedded EHR tools, that would identify incomplete imaging tests and notify the ordering clinician automatically. 9 18 19 20 Specifically, we sought to alert the ordering clinician about intended radiology tests that were not completed. We hypothesized that the intervention would be acceptable to participating clinicians and would increase the rate of completed imaging tests within 90 days of the originally scheduled test. We acknowledge that missed and delayed tests do not necessarily denote that a medical error has occurred. However, these cases may increase the risk of such events. 21 22

Methods

Project Site and Subjects

This project was conducted in the adult primary care and pulmonary medicine clinics at Tufts Medical Center, a 415-bed academic medical center in downtown Boston, serving a diverse population of adult and pediatric patients. The clinics are located on three floors of an ambulatory clinical building onsite at the medical center and together provide over 80,000 patient visits annually. The medical center has multiple EHRs with various interfaces across ambulatory care, inpatient units, and specialty services.

Intervention

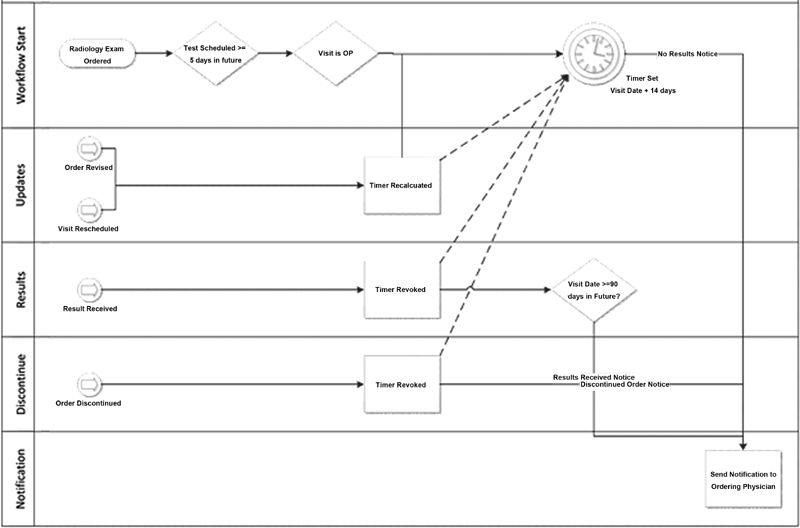

Clinical and information systems staff together designed and developed an Outpatient Results Notification Tool within the Soarian Workflow Engine (“Workflow Engine”), leveraging an embedded functionality in the hospital's primary inpatient EHR, Soarian Clinicals (Cerner, Kansas City, Missouri, United States) to identify and notify the responsible clinician about imaging tests that were scheduled but incomplete. The technical specifications of the tool are outlined in Appendix A . Briefly, the application used a timer that noted the date when an imaging test of any type from any ambulatory clinic was scheduled to be completed ( Fig. 1 ). We chose the appointment as the triggering event rather than the order for two important reasons. Clinicians at the medical center use multiple different electronic medical record systems with various interface capabilities, but a common scheduling system. The appointment date allows for implementation across multiple EHRs. In addition, the appointment date permits one to calculate the interval between the intended test date and the completion date. This information is not contained routinely in the clinician's order.

Fig. 1.

Workflow engine schematic.

The Workflow Engine tracked all newly scheduled appointments for imaging tests and procedures including mammograms, ultrasound, computed tomography (CT), nuclear imaging studies, magnetic resonance imaging (MRI), interventional radiology procedures, and general radiology performed at any on-site medical center testing location. Based on clinician input, tests scheduled less than 5 days in the future were not tracked as this included many same-day or same-week tests with high completion rates. Clinicians argued that they were unlikely to lose track of a high-value imaging test scheduled to be completed within a few days. Using a timer function, the tool waited for completed appointments with associated results to be reported into the main Soarian EHR.

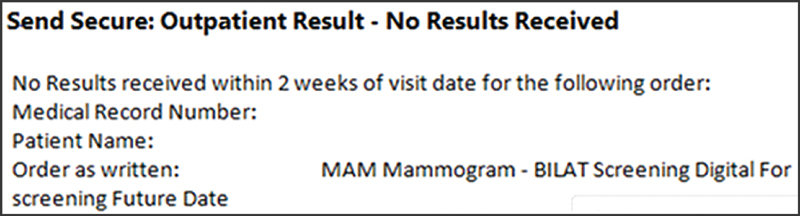

If the test was not completed within 2 weeks of the original appointment date, the tool generated a single email message to the ordering provider titled “Outpatient Result: No Result Received” ( Fig. 2 ). The message stated that no results were received for the order within 2 weeks of the visit date. We chose email to make the tool agnostic to EHR. The message listed the patient's name and medical record number, the ordered test, the ordering clinician, and the missed appointment date. All clinicians and their staff members had and used internal email addresses that were within the organization's firewall. The system also reported completed tests, although some clinicians felt that the messages were redundant with existing results management functionality inside the EHR and could promote alert fatigue. During the pilot phase of the project, the tool alerted clinicians to appointments that had been rescheduled to a later date. Based on clinician feedback, the final version suppressed alerts for rescheduled appointments. The timer reset when a test was rescheduled.

Fig. 2.

Incomplete imaging test email notification.
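The alert rules described above can be summarized in a short sketch. This is an illustration only, not the Soarian Workflow Engine configuration (see Appendix A for the actual specifications). The class, function, and constant names are hypothetical, and treating the thresholds as inclusive is an assumption.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional

MIN_LEAD_DAYS = 5      # tests scheduled fewer than 5 days ahead were not tracked
ALERT_DELAY_DAYS = 14  # alert if no result arrives within 2 weeks of the appointment


@dataclass
class ImagingAppointment:
    """Hypothetical record of one scheduled imaging test."""
    entered_on: date                       # date the appointment was entered
    appointment_date: date                 # current (possibly rescheduled) test date
    result_received: Optional[date] = None
    alert_sent: bool = False

    def reschedule(self, new_date: date) -> None:
        # Rescheduling resets the timer: the 2-week clock restarts
        # from the new appointment date.
        self.appointment_date = new_date


def is_tracked(appt: ImagingAppointment) -> bool:
    """Only tests booked at least MIN_LEAD_DAYS ahead are followed."""
    return (appt.appointment_date - appt.entered_on).days >= MIN_LEAD_DAYS


def should_alert(appt: ImagingAppointment, today: date) -> bool:
    """True when a single 'No Result Received' email is due."""
    if not is_tracked(appt) or appt.alert_sent:
        return False
    if appt.result_received is not None:
        return False
    return today >= appt.appointment_date + timedelta(days=ALERT_DELAY_DAYS)


appt = ImagingAppointment(entered_on=date(2018, 1, 2), appointment_date=date(2018, 2, 1))
print(should_alert(appt, date(2018, 2, 14)))  # still inside the 2-week window
print(should_alert(appt, date(2018, 2, 15)))  # window elapsed with no result
```

Keying the timer to the appointment date rather than the order is what lets a reschedule simply move the deadline instead of triggering an alert.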

We piloted the intervention with the rheumatology division over 1 year and then expanded the project into the adult primary care and pulmonary medicine clinics, initially engaging five clinicians beginning in January 2018 and a total of 16 in February. These included 10 of 35 primary care clinicians and 7 advanced practitioners, and 6 of 16 pulmonologists. We selected these clinics because they use separate EHRs: Centricity (General Electric, Boston, Massachusetts, United States) in adult primary care and eClinicalWorks (Westborough, Massachusetts, United States) in pulmonary medicine. Both clinics generate many future imaging studies. We invited an intentional sample of clinicians to participate, selecting physicians from each clinic to reflect variation within the clinic of physicians by seniority and experience, practice style (physician responsiveness and timely documentation), and composition of minority populations in the provider's panel. None declined.

We used rapid-cycle improvement techniques over the course of 3 months to improve and refine the application including interventions to reduce nuisance alerts for completed and rescheduled tests. The clinics adopted workflow improvements to integrate the alerts into clinicians' practices and to facilitate rescheduling of missed tests by nonprovider clinic staff by creating templated messages.

Analyses

To assess the impact of the project, we surveyed participating clinicians in February 2018 via email about their experience with the intervention and opportunities for improvement. We used an open-ended, free-text format that asked intervention physicians if they were aware of the incomplete test and if they took any action to ensure that the test was ultimately completed.

To assess whether the intervention improved rates of testing completed within 90 days of the originally scheduled test date, we abstracted data from the Soarian EHR that included information about each ambulatory patient in the adult primary care and pulmonary clinics who had been scheduled for a future imaging test in the 6 months prior to (July–December 2017) and the 12 months following (January–December 2018) implementation. Patient characteristics hypothesized to be associated with delayed imaging included age, gender, race/ethnicity, preferred language, and primary insurance. We abstracted the type of imaging test, the date when the test was initially entered in the scheduling system, the scheduled date, the date that the test was ultimately completed, the ordering provider, and his or her clinic. Unfortunately, we were unable to extract information about the indication for the test or presence of abnormal findings as these were uncoded, free-text fields. We also lacked information about patients' comorbidities or functional status, factors that might affect adherence.

Our primary outcome was the proportion of eligible tests completed between 14 days (the date the alert could first fire) and 90 days of the originally scheduled appointment date. We note that test completion rates are a process-of-care measure. The effectiveness of the intervention is ultimately determined by the number of completed tests that yield information that affects clinical care and patient outcomes. We chose the 90-day interval based on feedback from clinicians about what they would consider to be a substantial delay. We included only those tests that fell within the study period and whose 90-day completion would have fallen within the study period.

We compared the 90-day completion rate of intervention and usual care patients at baseline (6 months prior to the intervention) and at follow-up. To account for potential differences in the intervention and usual care groups that might affect the completion rate, we examined the difference-in-difference between baseline and follow-up completion rates of the two groups. To corroborate the results of the primary analysis, we also examined the elapsed time between the scheduled test date and the completion date. We censored incomplete tests at 90 days.
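The outcome definitions above can be expressed as a minimal sketch. The function names are hypothetical, and inclusive boundaries at day 14 and day 90 are an assumption; the text does not state how boundary days were handled.

```python
from datetime import date, timedelta
from typing import Optional

ALERT_DAY = 14    # first day the alert could fire
WINDOW_DAYS = 90  # outcome window after the originally scheduled date


def is_eligible(scheduled: date, study_start: date, study_end: date) -> bool:
    """The test and its full 90-day window must fall inside the study period."""
    return study_start <= scheduled and scheduled + timedelta(days=WINDOW_DAYS) <= study_end


def completed_in_window(scheduled: date, completed: Optional[date]) -> bool:
    """Primary outcome: completed between day 14 and day 90 (assumed inclusive)."""
    if completed is None:
        return False
    elapsed = (completed - scheduled).days
    return ALERT_DAY <= elapsed <= WINDOW_DAYS


def censored_days(scheduled: date, completed: Optional[date]) -> int:
    """Days to completion, censored at 90 for late or never-completed tests."""
    if completed is None:
        return WINDOW_DAYS
    return min((completed - scheduled).days, WINDOW_DAYS)


scheduled = date(2018, 1, 10)
print(completed_in_window(scheduled, date(2018, 2, 9)))  # completed 30 days later
print(censored_days(scheduled, None))                    # never completed
```

Because most missed tests in this cohort were never completed within the window, censoring at 90 days pulls the mean days to completion toward 90.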

To account for differences in the intervention and usual care groups and for secular trends, we performed an interrupted time-series analysis. We created a multivariable logistic regression model with 90-day completion as the binary outcome. Independent variables included patients' sociodemographic characteristics, primary insurance, clinic site, test modality, test date, as well as intervention status, intervention period (baseline or follow-up), and the interaction between intervention status and period. The model controlled for the number of days between the date when the test was entered and the appointment date, in case distant future tests were less likely to be completed than more proximate tests. To adjust for potential within-patient effects, analyses were clustered at the patient level. Analyses used the Chi-square statistic for nominal and ordinal variables and the Wilcoxon's rank-sum test for continuous variables, using two-tailed tests with p ≤ 0.05 considered statistically significant. Statistical analyses used Stata 9.0 (StataCorp, College Station, Texas, United States).
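One way to write the model described above is shown below. The notation is an assumption introduced for illustration and is not taken from the paper: i indexes imaging tests, j indexes patients, and the vector x collects the sociodemographic, insurance, clinic, and modality covariates.

```latex
\operatorname{logit}\,\Pr(Y_{ij} = 1)
  = \beta_0
  + \beta_1\,\mathrm{Intervention}_{ij}
  + \beta_2\,\mathrm{FollowUp}_{ij}
  + \beta_3\,\bigl(\mathrm{Intervention}_{ij} \times \mathrm{FollowUp}_{ij}\bigr)
  + \beta_4\,\mathrm{Month}_{ij}
  + \beta_5\,\mathrm{LeadDays}_{ij}
  + \boldsymbol{\gamma}^{\top}\mathbf{x}_{ij}
```

Here Y = 1 when the test was completed within 90 days, and LeadDays is the interval between the date the test was entered and the appointment date. Under this parameterization, exp(β3) is the adjusted odds ratio for the interaction between intervention status and period, the term that captures any effect of the alert beyond baseline group differences and the monthly trend.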

Results

Subject Characteristics

Table 1 displays the characteristics of the 2,023 patients who received usual care and the 725 patients cared for by intervention-group clinicians. Usual care patients were slightly older (57.1 vs. 54.9 years, p = 0.03) during the baseline period and included a higher percentage of men (15.8 vs. 8.8% at baseline [ p = 0.001], 19.2 vs. 12.7% at follow-up [ p = 0.004]) and Asian patients (19.7 vs. 8.8% at baseline [ p < 0.001], 21.5 vs. 8.6% at follow-up [ p < 0.001]). The usual care group had a smaller percentage of patients who preferred English (82.0 vs. 89.4% at baseline [ p = 0.001], 80.8 vs. 90.1% at follow-up [ p < 0.001]) or who had commercial insurance at baseline (51.9 vs. 60.3% [ p = 0.05]).

Table 1. Patients' sociodemographic characteristics at baseline and follow-up, by intervention status.

| Characteristic | Baseline ( n = 1,277): usual care ( n = 937), n (% a ) | Baseline: intervention ( n = 340), n (% a ) | Baseline p -Value b | Follow-up ( n = 1,471): usual care ( n = 1,086), n (% a ) | Follow-up: intervention ( n = 385), n (% a ) | Follow-up p -Value b |
|---|---|---|---|---|---|---|
| Age, mean (range), SD | 57.1 (22–89), 13.3 | 54.9 (22–86), 13.6 | 0.033 | 56.7 (19–94), 13.8 | 55.6 (23–98), 13.1 | 0.090 |
| Male gender | 148 (15.8) | 30 (8.8) | 0.001 | 208 (19.2) | 49 (12.7) | 0.004 |
| Race/ethnicity | | | <0.001 | | | <0.001 |
| White | 441 (47.1) | 202 (59.4) | | 482 (44.4) | 222 (57.7) | |
| Black | 214 (22.8) | 67 (19.7) | | 249 (22.9) | 80 (20.8) | |
| Asian | 184 (19.7) | 30 (8.8) | | 234 (21.5) | 33 (8.6) | |
| Hispanic | 69 (7.4) | 25 (7.4) | | 92 (8.5) | 37 (9.6) | |
| Other | 13 (1.4) | 7 (2.1) | | 12 (1.1) | 5 (1.3) | |
| Unknown | 16 (1.7) | 9 (2.7) | | 17 (1.6) | 8 (2.1) | |
| English preferred language | 768 (82.0) | 304 (89.4) | 0.001 | 877 (80.8) | 347 (90.1) | <0.001 |
| Insurance | | | 0.047 | | | 0.083 |
| Commercial | 486 (51.9) | 205 (60.3) | | 547 (50.4) | 207 (53.8) | |
| Medicare | 272 (29.0) | 83 (24.4) | | 313 (28.8) | 103 (26.8) | |
| Medicaid or self-pay | 173 (18.5) | 52 (15.3) | | 217 (20.0) | 66 (17.1) | |
| Other or unknown | 6 (0.6) | 0 | | 9 (0.8) | 9 (2.3) | |
| Clinic | | | 0.781 | | | 0.423 |
| Adult primary care | 875 (93.4) | 316 (92.9) | | 1,030 (94.8) | 361 (93.8) | |
| Pulmonary | 62 (6.6) | 24 (7.1) | | 56 (5.2) | 24 (6.2) | |
| Imaging tests per person, mean (range), SD | 1.3 (1–5), 0.6 | 1.2 (1–6), 0.6 | 0.007 | 1.2 (1–4), 0.4 | 1.2 (1–5), 0.6 | 0.343 |

Abbreviation: SD, standard deviation.

a Totals may not add to 100% due to rounding.

b Chi-square test for categorical variables, Wilcoxon's rank-sum test for continuous variables.

Provider Survey

We surveyed all 16 intervention clinicians after a month of the fully implemented program about the first 39 incomplete test notifications. Fifteen physicians (94%) responded to 30 (77%) of the 39 queries. In 23 (77%) of the 30 responses, the physician indicated that he or she was unaware of the missed test prior to the notification. The alert prompted the physician to follow up with the patient in 19 (63%) of the 30 cases. Follow-up included telephone outreach to the patient by the clinician or a staff member. On chart review, all 19 patients had completed the delayed imaging test within 90 days.

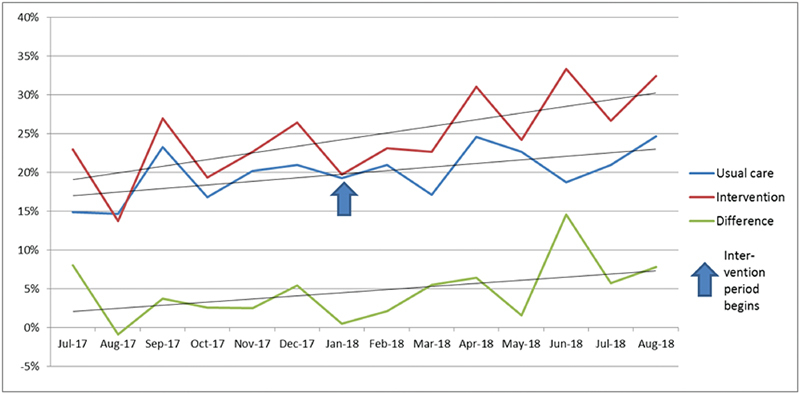

90-Day Test Completion Rates

After a full year, we assessed the impact of the alert by comparing 90-day test follow-up rates among intervention and usual care groups during the baseline and follow-up periods. Table 2 shows that the overall 90-day test completion rate was lower in the usual care than intervention group at baseline (18.8 vs. 22.1%), but this difference was not significant ( p = 0.135). During the follow-up period, there was a significant difference in the 90-day test completion rate favoring the intervention group (20.9 vs. 25.5%, p = 0.027). The difference in completion rates over time is shown graphically in Fig. 3 . The performance of the intervention group relative to usual care at follow-up was driven largely by improved completion rates among patients referred for mammography (21.0 vs. 30.1%, p = 0.003). The improved performance of the intervention group from baseline to follow-up was not significant ( p = 0.213), and no consistent pattern of improvement was observed across imaging modalities or comparing the difference in difference.

Table 2. Alert-eligible imaging tests completed within 90 days by intervention and usual care groups at baseline and follow-up.

| Imaging test | Baseline ( n = 1,671): usual care ( n = 1,213), n (% a ) | Baseline: intervention ( n = 458), n (% a ) | Baseline difference (%) | Baseline p -Value b | Follow-up ( n = 2,063): usual care ( n = 1,484), n (% a ) | Follow-up: intervention ( n = 558), n (% a ) | Follow-up difference (%) | Follow-up p -Value b | Difference in difference |
|---|---|---|---|---|---|---|---|---|---|
| Mammogram | 125/616 (20.3) | 50/243 (20.6) | 0.3 | 0.926 | 141/673 (21.0) | 83/276 (30.1) | 9.1 | **0.003** | 8.8 |
| Ultrasound | 31/227 (13.7) | 18/99 (18.2) | 4.5 | 0.293 | 62/335 (18.5) | 21/119 (17.7) | −0.8 | 0.835 | −5.3 |
| CT | 28/134 (20.9) | 15/50 (30.0) | 9.1 | 0.194 | 49/181 (27.1) | 20/82 (24.4) | −2.7 | 0.647 | −11.8 |
| Nuclear medicine | 27/160 (16.9) | 10/41 (24.4) | 7.5 | 0.268 | 52/231 (22.5) | 10/50 (20.0) | −2.5 | 0.698 | −10.0 |
| MRI | 12/51 (23.5) | 7/17 (41.2) | 17.7 | 0.160 | 2/30 (6.7) | 6/19 (31.6) | 24.9 | **0.022** | 7.2 |
| General radiology | 5/24 (20.8) | 1/6 (16.7) | −4.1 | 0.819 | 4/30 (13.3) | 2/12 (16.7) | 3.4 | 0.780 | 7.5 |
| Other | 0/2 (0) | 0/2 (0) | 0 | n/a | 0/4 (0) | 0/0 (0) | 0 | n/a | 0 |
| Total | 228/1,213 (18.8) | 101/458 (22.1) | 3.3 | 0.135 | 310/1,484 (20.9) | 142/558 (25.5) | 4.6 | **0.027** | 1.3 |

Abbreviations: CT, computed tomography; MRI, magnetic resonance imaging.

Note: Bold values denote statistically significant results.

a Totals may not add to 100% due to rounding.

b Chi-square statistic.
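The totals row of Table 2 can be re-derived from its counts. The sketch below is a reader's check, not the authors' Stata code. It assumes an uncorrected Pearson chi-square for a 2 × 2 table, since the paper says only that a chi-square statistic was used, and all names are hypothetical.

```python
import math

# Totals row of Table 2: (tests completed within 90 days, alert-eligible tests)
TABLE2_TOTALS = {
    ("baseline", "usual"): (228, 1213),
    ("baseline", "intervention"): (101, 458),
    ("follow_up", "usual"): (310, 1484),
    ("follow_up", "intervention"): (142, 558),
}


def pearson_chi2_p(done_a: int, n_a: int, done_b: int, n_b: int) -> float:
    """Two-sided p-value from an uncorrected Pearson chi-square on a 2x2 table."""
    observed = [[done_a, n_a - done_a], [done_b, n_b - done_b]]
    row_totals = [n_a, n_b]
    col_totals = [done_a + done_b, (n_a - done_a) + (n_b - done_b)]
    n = n_a + n_b
    chi2 = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed[i][j] - expected) ** 2 / expected
    # With 1 degree of freedom, the chi-square upper tail is erfc(sqrt(chi2 / 2)).
    return math.erfc(math.sqrt(chi2 / 2))


def rate(period: str, group: str) -> float:
    done, n = TABLE2_TOTALS[(period, group)]
    return done / n


def group_difference(period: str) -> float:
    """Intervention minus usual care, in percentage points."""
    return 100 * (rate(period, "intervention") - rate(period, "usual"))


baseline_diff = group_difference("baseline")
follow_up_diff = group_difference("follow_up")
diff_in_diff = follow_up_diff - baseline_diff

p_baseline = pearson_chi2_p(*TABLE2_TOTALS[("baseline", "usual")],
                            *TABLE2_TOTALS[("baseline", "intervention")])
p_follow_up = pearson_chi2_p(*TABLE2_TOTALS[("follow_up", "usual")],
                             *TABLE2_TOTALS[("follow_up", "intervention")])

print(f"baseline:  diff = {baseline_diff:.1f} points, p = {p_baseline:.3f}")
print(f"follow-up: diff = {follow_up_diff:.1f} points, p = {p_follow_up:.3f}")
print(f"difference in difference = {diff_in_diff:.1f} points")
```

Under that assumption the script gives differences of 3.3 and 4.6 percentage points, a difference in difference of 1.3, and p-values that round to the 0.135 and 0.027 shown in the table.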

Fig. 3.

Alert-eligible imaging tests completed within 90 days by intervention and usual care groups, by month.

We also examined the interval between the initial scheduled test completion date and the date when it was ultimately completed, censoring incomplete tests at 90 days. As shown in Table 3 , days to completion were slightly higher in the usual care than intervention group at baseline (81.5 vs. 79.4 days), but this was not a significant difference. The difference again favored the intervention group in the follow-up period (79.8 vs. 78.2), a significant result of uncertain clinical benefit ( p = 0.036). The improvement was driven by timely mammography (80.5 vs. 76.9, p = 0.004).

Table 3. Alert-eligible imaging tests, days to completion (censored at 90 days).

| Imaging test | Baseline ( n = 1,671): usual care ( n = 1,213), days (SD) | Baseline: intervention ( n = 458), days (SD) | Baseline p -Value a | Follow-up ( n = 2,063): usual care ( n = 1,484), days (SD) | Follow-up: intervention ( n = 558), days (SD) | Follow-up p -Value a |
|---|---|---|---|---|---|---|
| Mammogram | 81.4 (19.8) | 81.2 (20.0) | 0.845 | 80.5 (20.6) | 76.9 (23.1) | **0.004** |
| Ultrasound | 83.1 (18.8) | 81.3 (20.1) | 0.327 | 79.8 (22.8) | 81.9 (19.9) | 0.784 |
| CT | 81.1 (19.9) | 74.4 (26.2) | 0.134 | 76.3 (25.4) | 78.0 (23.5) | 0.610 |
| Nuclear medicine | 81.9 (19.4) | 76.9 (24.2) | 0.230 | 78.8 (22.9) | 80.4 (22.4) | 0.706 |
| MRI | 77.1 (24.8) | 61.8 (34.9) | 0.092 | 85.2 (18.1) | 68.9 (32.2) | **0.031** |
| General radiology | 76.7 (26.7) | 80.8 (22.5) | 0.739 | 84.4 (17.0) | 78.8 (26.3) | 0.714 |
| Other | 0 | 0 | n/a | 90 (0) | 0 | n/a |
| Total | 81.5 (19.9) | 79.4 (22.1) | 0.092 | 79.8 (22.0) | 78.2 (22.9) | **0.036** |

Abbreviations: CT, computed tomography; MRI, magnetic resonance imaging; SD, standard deviation.

Note: Bold values denote statistically significant results.

a Wilcoxon's rank-sum test.

Multivariate Analyses

In the interrupted time-series analysis ( Table 4 ) involving the entire cohort, the intervention had no discernible impact on the odds of completing the test within 90 days among patients who had missed a scheduled appointment date. The factors associated with completion of missed imaging tests were month, patient age, and care in the pulmonary medicine clinic. Each successive month of the project improved the odds of test completion (adjusted odds ratio [aOR]: 1.05, 95% confidence interval [CI]: 1.00–1.09), suggesting a secular trend in improved performance. Each year of age slightly increased the odds of test completion (aOR: 1.01, 95% CI: 1.01–1.02). Care in the pulmonary medicine clinic was associated with substantially higher odds of test completion (aOR: 1.80, 95% CI: 1.19–2.73).

Table 4. Multivariate logistic regression model examining factors associated with 90-day test completion, clustered by patient (interrupted time-series).

| Variable | Adjusted odds ratio | 95% confidence interval | p -Value |
|---|---|---|---|
| Intervention group (vs. usual care) | 1.22 | 0.91–1.64 | 0.183 |
| Follow-up period (vs. baseline) | 0.88 | 0.61–1.25 | 0.468 |
| Intervention-follow-up interaction | 1.04 | 0.71–1.52 | 0.842 |
| Month | 1.05 | 1.00–1.09 | **0.039** |
| Days from order to scheduled test date | 1.00 | 1.00–1.00 | 0.953 |
| Patient age | 1.01 | 1.01–1.02 | **0.001** |
| Male gender | 0.78 | 0.59–1.03 | 0.081 |
| White race | 0.93 | 0.77–1.14 | 0.497 |
| English primary language | 1.06 | 0.81–1.38 | 0.684 |
| Medicaid or self-insured | 0.90 | 0.71–1.15 | 0.414 |
| Pulmonary clinic (vs. adult primary care) | 1.80 | 1.19–2.73 | **0.005** |
| Imaging test type (vs. mammogram) | | | |
| Ultrasound | 0.81 | 0.65–1.01 | 0.652 |
| CT | 0.99 | 0.72–1.36 | 0.717 |
| Nuclear medicine | 0.86 | 0.67–1.10 | 0.674 |
| MRI | 1.22 | 0.74–2.00 | 0.745 |
| General radiology | 0.56 | 0.28–1.11 | 0.284 |

Abbreviations: CT, computed tomography; MRI, magnetic resonance imaging.

Note: Bold values denote statistically significant results.

Discussion

In this analysis of 3,734 imaging studies among 2,748 outpatients at an academic medical center, an email notification of incomplete tests was associated with a 5-percentage-point absolute and 22% relative improvement in the rate of completed tests within 90 days (21% among 1,484 usual care tests at follow-up compared with 26% among 558 tests in the intervention group). The effect was most pronounced for mammography, where the completion rate of missed tests was 30% in the intervention group compared with 21% in the usual care group during the follow-up period. However, the effect was inconsistent across imaging modalities, with significant improvement noted only in mammography and MRI. Analyzing days to completion of missed tests yielded similar results, with significant improvements for mammography and MRI only. Multivariate analyses, however, failed to demonstrate significant improvements attributable to the intervention itself rather than to secular trends and differences between the comparison groups in terms of age, gender, and clinic.
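The absolute and relative improvements quoted above follow from the follow-up totals in Table 2 (310/1,484 for usual care and 142/558 for the intervention group). The helper below is a hypothetical illustration of that arithmetic, not part of the study's analysis.

```python
def absolute_and_relative_improvement(control_rate: float, treated_rate: float):
    """Return (absolute difference in percentage points, relative difference)."""
    absolute_points = 100 * (treated_rate - control_rate)
    relative = (treated_rate - control_rate) / control_rate
    return absolute_points, relative


usual_care_rate = 310 / 1484    # follow-up, usual care
intervention_rate = 142 / 558   # follow-up, intervention

points, relative = absolute_and_relative_improvement(usual_care_rate, intervention_rate)
print(f"absolute: {points:.1f} percentage points; relative: {relative:.0%}")
```

The absolute difference is about 4.6 percentage points, which the text rounds to 5, and dividing by the usual care rate gives the relative improvement of about 22%.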

Missed and delayed imaging tests are common, affecting patients across settings and diagnoses. 23 24 25 A recent study of 2.9 million patient visits found no-show rates of up to 7%. 26 The reasons for missed tests are manifold, and missed tests are a common cause of medical errors. In a retrospective chart review study of 15 adult primary care practices from 2012 to 2016, Pace et al found that 7% of patients with breast lumps and 27% of those with rectal bleeding had not completed recommended imaging. 27 In a cancer center study of 102 patients with a delayed breast cancer diagnosis, patients' failure to complete diagnostic testing accounted for 13% of cases. 28 And in a prospective cohort study of over 400,000 Italian women, regular mammography was associated with a 28% reduced risk of presentation with advanced disease. 29

Studies have demonstrated the value of patient-oriented interventions including education, reminders, and patient navigation programs. 30 31 32 33 34 Clinician-oriented interventions include results management applications that help clinicians to close the loop on completed tests by notifying the patient or next provider of care about abnormal findings. 35 36 These decision support tools create fail-safe processes to secure potentially risky steps in the diagnostic process, and may ultimately reduce missed diagnoses and malpractice liability claims. While advanced EHRs create reports that indicate if ordered tests were completed, most systems offer only passive reporting that requires a meticulous clinician to search for incomplete studies among his or her panel. Electronic systems that perform active surveillance of missed follow-up offer a promising next-generation approach to reducing diagnostic delays. 37

The present project presents an active decision support model, alerting clinicians when patients fail to complete scheduled tests. The approach is likely scalable and reproducible, amenable to identifying other types of missed, future, high-risk laboratory tests, pathology results, and diagnostic procedures. It leveraged embedded tools in the EHR, relying on appointment scheduling—an approach that may be helpful in organizations without a unified technology platform. It is also suitable for tracking high-risk referrals to subspecialty clinicians. Although alert fatigue is a potential risk to both email and alerts embedded in the EHR, our clinicians found these alerts easy to find, use, and forward.

Our project, though successfully documenting improved 90-day completion rates of certain imaging tests, also found significant differences in completion rates across imaging modalities and practice settings. We believe that these differences may be accounted for in part by practice infrastructure and the impact of patient navigators. The primary care clinic's customized EMR and greater nursing and administrative support may have attenuated the impact of the intervention compared with the pulmonary clinic. We speculate that the hospital's use of breast health navigators for Chinese-speaking patients may have enhanced the intervention's value for follow-up mammography. Further research is necessary to examine differences in the process of test ordering and delivery in each clinic and within areas of radiology to understand the impact of variation on test adherence, particularly for minority populations.

While performance improved overall in comparisons between usual care and intervention groups from baseline to follow-up, the overall rate of completion remained surprisingly low. This raises several questions. First, was the alert presented at a time and in a way that allowed it to be easily integrated into the physician's workflow? The presentation and timing of an alert and ease of accessing the EMR are essential to the alert's adoption and effectiveness. 38 Second, is there an efficient mechanism to translate a missed test notification into an action that improves completion and adherence? Clinicians need a streamlined outreach mechanism to follow up with patients to investigate missed tests, reschedule those that are needed, and address the various barriers that patients may face. It may be helpful to incorporate into the follow-up protocol an assessment of barriers to care such as an intercurrent illness, transportation, family obligations, cost, miscommunication, and fear. Eliciting these obstacles may allow for tailored interventions to improve adherence including patient reminders, information packets, transportation assistance, physician outreach, and navigation programs. Overall, the results are encouraging even as additional work is needed to improve the post-alert “effector” arm capability of clinical practices to successfully reach out to patients to complete planned evaluations.

This project was subject to several limitations. Completed at a single institution, the findings require replication in other settings. The Workflow Engine relied on appointment scheduling rather than a clinician order to set the timer. This created a vulnerability for patients whose tests were ordered but never scheduled. A separate effort was undertaken to ensure that all imaging test orders were scheduled. We recruited an intentional sample of intervention clinicians to represent variation in practitioners, practice styles, and patient panels. A randomized, controlled design allocating clinicians to intervention or control arm would provide a more methodologically robust approach, but this model is problematic in settings where clinicians share tools and resources and where contamination occurs readily. A cluster randomized trial with a stepped-wedge design may offer a promising strategy. Another limitation is the difficulty of knowing whether higher test completion rates resulted in fewer cases of delayed diagnoses. Addressing this issue is methodologically challenging, as it requires a large, adequately powered sample, and an efficient method to ascertain misdiagnosis. Prospective studies are challenging to perform in that diagnostic clues such as anemia or rectal bleeding, for example, are more often associated with benign disease than malignancy. 20 Retrospective studies, in contrast, which allow for retrospective detection of diagnostic clues among cases with delays, are susceptible to hindsight bias and unmeasured confounders. 18 Finally, we have limited information about the urgency or indication for specific tests or about alerts that led to clinicians' subsequent actions and outreach. Qualitative feedback from participating clinicians supports the concept that delays were averted, but a large prospective chart review study would be required to examine the rate of prevented diagnostic errors.

Conclusion

In sum, email alerts to adult primary care and pulmonary medicine clinicians about missed imaging tests were associated with improved test completion at 90 days, although there was no significant difference in the multivariable analysis. Baseline differences in intervention and usual care groups limited our ability to draw definitive conclusions. Research is needed to understand the potential benefits and limitations of advanced decision support to reduce the risk of delayed diagnoses, particularly in vulnerable patient populations.

Clinical Relevance Statement

To reduce the risk of diagnostic errors, a notification tool was created at an academic medical center to alert clinicians about missed radiology appointments for adult primary care and pulmonary medicine patients. The alerts were associated with a 22% relative improvement in test completion at 90 days, although the impact of the intervention was not significant in multivariable analyses.
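The 22% figure appears to correspond to the relative difference between the 90-day completion rates reported for the follow-up period in the abstract (20.9% for usual care and 25.5% for intervention). This reading is an assumption rather than a calculation stated by the authors:

```latex
\[
\text{relative improvement} = \frac{25.5 - 20.9}{20.9} = \frac{4.6}{20.9} \approx 0.22
\]
```

The absolute difference under the same reading is 4.6 percentage points.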

Multiple Choice Questions

1. Which of the following is a well-recognized cause of diagnostic errors?

a. Central line-associated bloodstream infections

b. Slips and falls

c. Incomplete test result follow-up

d. Electronic bar coding for medication administration

Correct Answer: The correct answer is option c. Central line infections, slips and falls, and medication errors may all result in adverse events, but are not generally identified as a cause of diagnostic errors. Incomplete test result follow-up, in contrast, may result in diagnostic errors in cases when the responsible clinician or patient lacks critical clinical information.

2. The impact of a missed radiology test notification tool on the 90-day test completion rate was greatest for which of the following imaging modalities?

a. Mammography

b. Ultrasound

c. Computed tomography

d. General radiology

Correct Answer: The correct answer is option a. In the present study, the electronic notification tool did not result in significant improvements in timely completion of ultrasound, CT, and general radiology studies. Implementation of the tool was associated with improvements in timely completion of MRI and mammography studies.

Funding Statement

Funding This study received funding from the Gordon and Betty Moore Foundation and Wellforce Indemnity Company.

Conflict of Interest None declared.

Protection of Human and Animal Subjects

This project was reviewed in advance by the Tufts Health Sciences Institutional Review Board (IRB) and determined to be a quality improvement project exempt from IRB review.

Appendix A.

Architectural Overview: Soarian Workflow Engine

The Tufts Medical Center Outpatient Results Notification Workflow is built on a loosely coupled, event-driven architecture that ensures reliable, accountable delivery of clinical information to a variety of consumers both inside and outside the Medical Center. At its core is the TIBCO (Palo Alto, California, United States) workflow engine embedded inside the Cerner (Kansas City, Missouri, United States) Soarian Electronic Medical Record system, Soarian Clinicals.

Soarian workflow engine : An Event-Based Messaging architecture/platform, based on technology from TIBCO, embedded inside the Cerner Electronic Health Record (EHR), Soarian Clinicals. Tufts Medical Center uses Soarian Clinicals as its core inpatient EHR.

Messaging services : Messaging Services, based on Cerner's OPENLink Interface Engine, enables TIBCO to subscribe to events created via real-time HL7 interfaces and from user interactions in the Soarian Clinicals User Interface. An instance of OPENLink sits inside Soarian Clinicals and translates real-time interface engine messages into events that TIBCO can subscribe to. User activity, including placing orders and saving assessments, is translated into events fed from Messaging Services to TIBCO/Workflow Engine.

Workflows : Workflows are event-driven, state-monitoring software processes that run inside Soarian Workflow Engine (TIBCO). Workflows can subscribe to events that occur within Soarian Clinicals (Admit/Discharge/Transfer, orders placed, results received, assessments saved). Workflows can connect to internal processes in Soarian Clinicals and external systems and take action in these internal processes and external systems. Workflows can query Soarian Clinicals for additional information using Soarian Rules Engine.

Soarian rules engine : A rules-based data layer that sits between Soarian Workflow Engine and the Soarian Clinicals back-end structured query language (SQL) Server database. Rules Engine enables Workflow Engine to access the Soarian back-end database via a set of service-based application programming interface database calls. Rules are written in Soarian Rules Engine using the Arden Syntax language.

Active directory interoperability via ODBC data partner : Soarian Workflow Engine can interact with open database connectivity (ODBC) compliant data-sources over the wide area network between Cerner and Tufts Medical Center via a Rules Engine ODBC Data Partner over Port 1433 enabled for unidirectional communication from Cerner to Tufts. This ODBC connectivity enables Workflow Engine to query Active Directory for Physician email addresses for notifications.
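The subscription model described for Messaging Services and Workflows can be illustrated with a minimal in-process publish/subscribe sketch. This is not the TIBCO or Soarian API: the `EventBus` class, the event type strings, and the payload fields are all hypothetical names chosen for illustration.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, Dict, List

Event = Dict[str, str]            # hypothetical payload shape
Handler = Callable[[Event], None]


class EventBus:
    """Toy stand-in for an event-driven messaging layer (illustrative only)."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        """Register a workflow handler for one event type."""
        self._subscribers[event_type].append(handler)

    def publish(self, event_type: str, payload: Event) -> int:
        """Deliver the payload to every subscriber; return how many were called."""
        handlers = self._subscribers.get(event_type, [])
        for handler in handlers:
            handler(payload)
        return len(handlers)


# Usage: a workflow subscribes to "order_placed" events and records them.
received: List[Event] = []
bus = EventBus()
bus.subscribe("order_placed", received.append)
delivered = bus.publish("order_placed", {"order_id": "A1", "type": "radiology"})
```

The loose coupling lies in the fact that the publisher does not know which workflows, if any, are listening to a given event type.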

Functional Overview: Outpatient Results Notifications Workflow

The Outpatient Results Notification Workflow is designed to send notifications related to Outpatient Orders/Results to the physicians who place the orders. The workflow starts when a Radiology order is placed for a patient and the visit is scheduled. If the order is less than 5 days out from the time/date the order is placed and visit scheduled, or if the order is on an inpatient visit, then the Workflow will exit. Otherwise, the workflow sets a timer to expire 14 days from the time/date of the outpatient visit.
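The entry rule and timer described above can be sketched as follows. The function and constant names are hypothetical, and the sketch assumes that "less than 5 days out" means the visit falls fewer than 5 days after the order was placed; the source does not spell out this comparison.

```python
from datetime import datetime, timedelta
from typing import Optional

MIN_LEAD = timedelta(days=5)       # visits sooner than this are not tracked
TIMER_OFFSET = timedelta(days=14)  # timer expires this long after the visit


def timer_expiry(ordered_at: datetime, visit_at: datetime,
                 is_inpatient: bool) -> Optional[datetime]:
    """Return the timer expiry time, or None if the workflow should exit."""
    if is_inpatient:
        return None  # orders on inpatient visits are out of scope
    if visit_at - ordered_at < MIN_LEAD:
        return None  # visit is too close to the order date (assumed reading)
    return visit_at + TIMER_OFFSET


# Usage: an outpatient study ordered on January 1 for a February 1 visit.
expiry = timer_expiry(datetime(2019, 1, 1), datetime(2019, 2, 1), False)
```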

The Workflow will notify the ordering physician as follows:

Order status updated to complete : If the order is completed, which happens when a result is returned, then the Workflow will notify the physician if the order was for more than 90 days in the future at the time of ordering, and will exit.

Order status discontinued : If the order is discontinued, then the Workflow will notify the ordering physician via email, and exit.

Order details modified : If the details of the order are modified, the Workflow will recalculate the timer (in case the date has changed) and reset the timer.

Visit details modified : If the details of the visit are modified, the Workflow will recalculate the timer (in case the date has changed) and reset the timer.
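The four event responses listed above can be read as a small state handler. The sketch below is illustrative only: the `TrackedOrder` type, the event names, and the action labels are hypothetical, and it assumes that "more than 90 days in the future at the time of ordering" compares the visit date with the order date. Timer expiry itself is not modeled.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional

LONG_LEAD = timedelta(days=90)     # threshold for notifying on completion
TIMER_OFFSET = timedelta(days=14)  # timer runs this long past the visit


@dataclass
class TrackedOrder:
    ordered_at: datetime
    visit_at: datetime
    timer_expires_at: Optional[datetime] = None
    active: bool = True


def handle_event(order: TrackedOrder, event: str,
                 new_visit_at: Optional[datetime] = None) -> List[str]:
    """Apply one event to the order and return the actions taken."""
    actions: List[str] = []
    if event == "completed":
        # Notify only when the study was booked far in advance (assumed reading).
        if order.visit_at - order.ordered_at > LONG_LEAD:
            actions.append("notify_physician")
        order.active = False
        actions.append("exit")
    elif event == "discontinued":
        actions.append("notify_physician")
        order.active = False
        actions.append("exit")
    elif event in ("order_modified", "visit_modified"):
        # Recalculate in case the date changed, then reset the timer.
        if new_visit_at is not None:
            order.visit_at = new_visit_at
        order.timer_expires_at = order.visit_at + TIMER_OFFSET
        actions.append("reset_timer")
    else:
        raise ValueError(f"unknown event: {event}")
    return actions
```

For example, calling `handle_event(order, "visit_modified", new_date)` moves the timer to 14 days after `new_date` and leaves the order active.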

The Workflow checks Active Directory for the physician's email address, using the physician's short message service (SMS) number as a key for matching. If no match is found, or if the ordering physician is not present on the order in Soarian, then the Workflow will send to an exceptions mailbox, to be managed by end users.
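The recipient lookup with its fallback can be sketched as below. A plain dictionary stands in for the Active Directory query, and the function name, key format, and mailbox address are hypothetical placeholders rather than values from the study.

```python
from typing import Mapping, Optional

EXCEPTIONS_MAILBOX = "exceptions@example.org"  # hypothetical address


def resolve_recipient(physician_key: Optional[str],
                      directory: Mapping[str, str]) -> str:
    """Return the physician's email, or the exceptions mailbox as a fallback."""
    if not physician_key:
        # No ordering physician recorded on the order.
        return EXCEPTIONS_MAILBOX
    # A missing or empty directory entry also routes to the exceptions mailbox.
    return directory.get(physician_key) or EXCEPTIONS_MAILBOX


# Usage with a toy directory keyed by the matching number.
directory = {"12345": "dr.a@example.org"}
recipient = resolve_recipient("12345", directory)
```

Routing unmatched notifications to a monitored mailbox, rather than dropping them, keeps every alert accountable to someone.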

The Workflow logs its steps to a SQL table. Once the workflow has sent a notification and completed logging, the Workflow ends.
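A minimal sketch of step logging follows. It uses Python's built-in `sqlite3` with an in-memory database as a stand-in for the SQL table; the table name, columns, and step labels are assumptions, since the source does not describe the schema.

```python
import sqlite3
from datetime import datetime, timezone
from typing import List


def open_log(conn: sqlite3.Connection) -> None:
    """Create the (hypothetical) log table if it does not exist."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS workflow_log ("
        "id INTEGER PRIMARY KEY AUTOINCREMENT, "
        "order_id TEXT NOT NULL, "
        "step TEXT NOT NULL, "
        "logged_at TEXT NOT NULL)"
    )


def log_step(conn: sqlite3.Connection, order_id: str, step: str) -> None:
    """Append one workflow step with a UTC timestamp."""
    conn.execute(
        "INSERT INTO workflow_log (order_id, step, logged_at) VALUES (?, ?, ?)",
        (order_id, step, datetime.now(timezone.utc).isoformat()),
    )
    conn.commit()


def steps_for(conn: sqlite3.Connection, order_id: str) -> List[str]:
    """Return the logged steps for one order in insertion order."""
    rows = conn.execute(
        "SELECT step FROM workflow_log WHERE order_id = ? ORDER BY id",
        (order_id,),
    ).fetchall()
    return [row[0] for row in rows]


# Usage: log two steps for one order and read them back.
conn = sqlite3.connect(":memory:")
open_log(conn)
log_step(conn, "A1", "timer_set")
log_step(conn, "A1", "notification_sent")
logged = steps_for(conn, "A1")
```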

References

- 1. Graber M L, Franklin N, Gordon R. Diagnostic error in internal medicine. Arch Intern Med. 2005;165(13):1493–1499. doi: 10.1001/archinte.165.13.1493.

- 2. Singh H, Schiff G D, Graber M L, Onakpoya I, Thompson M J. The global burden of diagnostic errors in primary care. BMJ Qual Saf. 2017;26(06):484–494. doi: 10.1136/bmjqs-2016-005401.

- 3. CRICO Strategies. 2018 CBS Benchmarking Report, Medical Malpractice in America. Boston, MA; 2018.

- 4. CRICO Strategies. 2014 CBS Benchmarking Report, Malpractice Risks in the Diagnostic Process. Boston, MA; 2014.

- 5. Hanscom R, Small M, Lambrecht A. A dose of insight. Boston, MA: Coverys, March 2018. Available at: https://www.coverys.com/Knowledge-Center/A-Dose-of-Insight__Diagnostic-Accuracy. Accessed September 8, 2019.

- 6. Newman-Toker D E, Schaffer A C, Yu-Moe C W et al. Serious misdiagnosis-related harms in malpractice claims: The “Big Three” - vascular events, infections, and cancers. Diagnosis (Berl) 2019;6(03):227–240. doi: 10.1515/dx-2019-0019.

- 7. World Health Organization. Diagnostic Errors: Technical Series on Safer Primary Care. Geneva: World Health Organization; 2016. License: CC BY-NC-SA 3.0 IGO. Available at: https://apps.who.int/iris/bitstream/handle/10665/252410/9789241511636-eng.pdf;jsessionid=2B91A13CFDB3BE01B21DBEDEFAB810DB?sequence=1. Accessed December 21, 2019.

- 8. Croskerry P. The importance of cognitive errors in diagnosis and strategies to minimize them. Acad Med. 2003;78(08):775–780. doi: 10.1097/00001888-200308000-00003.

- 9. Groopman J. How Doctors Think. New York City, New York: Houghton Mifflin; 2007.

- 10. Kahneman D. Thinking, Fast and Slow. New York City, New York: Farrar, Straus and Giroux; 2011.

- 11. Gandhi T K, Kachalia A, Thomas E J et al. Missed and delayed diagnoses in the ambulatory setting: a study of closed malpractice claims. Ann Intern Med. 2006;145(07):488–496. doi: 10.7326/0003-4819-145-7-200610030-00006.

- 12. National Academies of Sciences, Engineering, and Medicine. Improving Diagnosis in Health Care. Washington, DC: The National Academies Press; 2015.

- 13. Bhise V, Sittig D F, Vaghani V, Wei L, Baldwin J, Singh H. An electronic trigger based on care escalation to identify preventable adverse events in hospitalised patients. BMJ Qual Saf. 2018;27(03):241–246. doi: 10.1136/bmjqs-2017-006975.

- 14. Schiff G, Ed. Getting Results: Reliably Communicating and Acting on Critical Test Results. Oakbrook Terrace, IL: Joint Commission Resources; 2006.

- 15. Murphy D R, Wu L, Thomas E J, Forjuoh S N, Meyer A N, Singh H. Electronic trigger-based intervention to reduce delays in diagnostic evaluation for cancer: a cluster randomized controlled trial. J Clin Oncol. 2015;33(31):3560–3567. doi: 10.1200/JCO.2015.61.1301.

- 16. Emani S, Sequist T D, Lacson R et al. Ambulatory safety nets to reduce missed and delayed diagnoses of cancer. Jt Comm J Qual Patient Saf. 2019;45(08):552–557. doi: 10.1016/j.jcjq.2019.05.010.

- 17. Danforth K N, Smith A E, Loo R K, Jacobsen S J, Mittman B S, Kanter M H. Electronic clinical surveillance to improve outpatient care: diverse applications within an integrated delivery system. EGEMS (Wash DC) 2014;2(01):1056. doi: 10.13063/2327-9214.1056.

- 18. Singh H, Hirani K, Kadiyala H et al. Characteristics and predictors of missed opportunities in lung cancer diagnosis: an electronic health record-based study. J Clin Oncol. 2010;28(20):3307–3315. doi: 10.1200/JCO.2009.25.6636.

- 19. Callen J L, Westbrook J I, Georgiou A, Li J. Failure to follow-up test results for ambulatory patients: a systematic review. J Gen Intern Med. 2012;27(10):1334–1348. doi: 10.1007/s11606-011-1949-5.

- 20. Weingart S N, Stoffel E M, Chung D C et al. Delayed workup of rectal bleeding in adult primary care: examining process-of-care failures. Jt Comm J Qual Patient Saf. 2017;43(01):32–40. doi: 10.1016/j.jcjq.2016.10.001.

- 21. Medical liability: missed follow-ups a potent trigger of lawsuits. amednews.com, July 15, 2013. Available at: https://amednews.com/article/20130715/profession/130719980/2/. Accessed December 21, 2019.

- 22. Singh H, Thomas E J, Mani S et al. Timely follow-up of abnormal diagnostic imaging test results in an outpatient setting: are electronic medical records achieving their potential? Arch Intern Med. 2009;169(17):1578–1586. doi: 10.1001/archinternmed.2009.263.

- 23. AlRowaili M O, Ahmed A E, Areabi H A. Factors associated with no-shows and rescheduling MRI appointments. BMC Health Serv Res. 2016;16(01):679.

- 24. Mander G TW, Reynolds L, Cook A, Kwan M M. Factors associated with appointment non-attendance at a medical imaging department in regional Australia: a retrospective cohort analysis. J Med Radiat Sci. 2018;65(03):192–199. doi: 10.1002/jmrs.284.

- 25. Harvey H B, Liu C, Ai J et al. Predicting no-shows in radiology using regression modeling of data available in the electronic medical record. J Am Coll Radiol. 2017;14(10):1303–1309. doi: 10.1016/j.jacr.2017.05.007.

- 26. Rosenbaum J I, Mieloszyk R J, Hall C S, Hippe D S, Gunn M L, Bhargava P. Understanding why patients no-show: observations of 2.9 million outpatient imaging visits over 16 years. J Am Coll Radiol. 2018;15(07):944–950. doi: 10.1016/j.jacr.2018.03.053.

- 27. Pace L E, Percac-Lima S, Nguyen K H et al. Comparing diagnostic evaluations for rectal bleeding and breast lumps in primary care: a retrospective cohort study. J Gen Intern Med. 2019;34(07):1146–1153. doi: 10.1007/s11606-019-05003-9.

- 28. Weingart S N, Saadeh M G, Simchowitz B et al. Process of care failures in breast cancer diagnosis. J Gen Intern Med. 2009;24(06):702–709. doi: 10.1007/s11606-009-0982-0.

- 29. Puliti D, Bucchi L, Mancini S et al. Advanced breast cancer rates in the epoch of service screening: The 400,000 women cohort study from Italy. Eur J Cancer. 2017;75:109–116. doi: 10.1016/j.ejca.2016.12.030.

- 30. Liu C, Harvey H B, Jaworsky C, Shore M T, Guerrier C E, Pianykh O. Text message reminders reduce outpatient radiology no-shows but do not improve arrival punctuality. J Am Coll Radiol. 2017;14(08):1049–1054. doi: 10.1016/j.jacr.2017.04.016.

- 31. Phillips L, Hendren S, Humiston S, Winters P, Fiscella K. Improving breast and colon cancer screening rates: a comparison of letters, automated phone calls, or both. J Am Board Fam Med. 2015;28(01):46–54. doi: 10.3122/jabfm.2015.01.140174.

- 32. Zapka J, Taplin S H, Price R A, Cranos C, Yabroff R. Factors in quality care--the case of follow-up to abnormal cancer screening tests--problems in the steps and interfaces of care. J Natl Cancer Inst Monogr. 2010;2010(40):58–71. doi: 10.1093/jncimonographs/lgq009.

- 33. DeFrank J T, Rimer B K, Gierisch J M, Bowling J M, Farrell D, Skinner C S. Impact of mailed and automated telephone reminders on receipt of repeat mammograms: a randomized controlled trial. Am J Prev Med. 2009;36(06):459–467. doi: 10.1016/j.amepre.2009.01.032.

- 34. Freund K M, Battaglia T A, Calhoun E et al. Impact of patient navigation on timely cancer care: the Patient Navigation Research Program. J Natl Cancer Inst. 2014;106(06):dju115. doi: 10.1093/jnci/dju115.

- 35. Poon E G, Wang S J, Gandhi T K, Bates D W, Kuperman G J. Design and implementation of a comprehensive outpatient Results Manager. J Biomed Inform. 2003;36(1-2):80–91.

- 36. Lacson R, O'Connor S D, Andriole K P, Prevedello L M, Khorasani R. Automated critical test result notification system: architecture, design, and assessment of provider satisfaction. AJR Am J Roentgenol. 2014;203(05):W491-6. doi: 10.2214/AJR.14.13063.

- 37. Ortel T L, Arnold K, Beckman M et al. Design and implementation of a comprehensive surveillance system for venous thromboembolism in a defined region using electronic and manual approaches. Appl Clin Inform. 2019;10(03):552–562. doi: 10.1055/s-0039-1693711.

- 38. Bates D W, Kuperman G J, Wang S et al. Ten commandments for effective clinical decision support: making the practice of evidence-based medicine a reality. J Am Med Inform Assoc. 2003;10(06):523–530. doi: 10.1197/jamia.M1370.