Introduction

If therapeutic decisions in healthcare are to be informed by the results of clinical research, patients and prescribers must be able to trust the research evidence presented to them. In recent decades, the credibility of much of the evidence base for some of the most popular therapeutic and preventive interventions has been undermined by the identification of sponsorship bias.

Sponsorship bias is the distortion of design and reporting of clinical experiments to favour the sponsor’s aims. By using the word ‘sponsor’, I am not implying here that the origins of bias are solely or principally commercial. Sponsors are the funders and stakeholders active within the design, setting-up, running and reporting of clinical trials, including the members of research teams.

Until recently, the distortions introduced by sponsorship bias were recognised as important but difficult to identify with certainty because of the secrecy surrounding pharmaceutical trials. Recent developments, such as the easing of restrictions on access to regulatory material,1 have led to relatively successful efforts to identify and describe sponsorship bias.

The problem of sponsorship bias was recognised a century ago. In 1917, Torald Sollmann warned of the effects of secrecy and closeness between those who manufacture drugs, those who test them and those who publicise them. As a member of the Council on Pharmacy and Chemistry of the American Medical Association (forerunner of the U.S. Food and Drug Administration), Sollmann was able to identify ‘poor quality’ and secrecy (publication bias) as major threats to the credibility of the reports submitted to the Council.

Some of the papers masquerade as “clinical reports,” sometimes with a splendid disregard for all details that could enable one to judge of their value and bearing, sometimes with the most tedious presentation of all sorts of routine observations that have no relation to the problem …

… when commercial firms claim to base their conclusions on clinical reports, the profession has a right to expect that these reports should be submitted to competent and independent review. When such reports are kept secret, it is impossible for anyone to decide what proportion of them are trustworthy, and what proportion thoughtless, incompetent or accommodating …

… Those who collaborate should realize frankly that under present conditions they are collaborating, not so much in determining the scientific value, but rather in establishing the commercial value of the article.2

In Sollmann’s simple observations lies the nub of the problem. When a clinical trial is designed to advance public understanding, its design and reporting are less likely to be distorted by bias. However, when trials are run for reasons other than advancing knowledge – such as licensing, profit or career enhancement – bias is likely to creep in, even if it was not present from the start.

It was not until six decades after Sollmann’s article that empirical investigations of sponsor bias began. In 1980, Elina Hemminki reported her pioneering study of 566 reports of clinical trials of psychotropic drugs submitted in support of licensing applications in Sweden and Finland over a period of four non-consecutive years in the 1960s and 1970s.3 Hemminki’s aims were threefold: to define the number of trials accompanying applications; to determine the quality and publication fate of these trials; and to assess whether data contained in the reports could be used to identify harms. Her research may have been the first use of regulatory documents to explore reporting bias and its association with the contents of reports. The list of problems she identified might well have been compiled 30 years later: secrecy; cherry picking of results; publication bias; ghost authorship; distorted design in favour of testing short-term effectiveness; and the inverse association between the reporting of harms and the likelihood of publication.4

To investigate the specific nature of sponsorship bias in this article, I review subsequent applications of Hemminki’s conceptually simple expedient of comparing the reports of clinical trials of medicinal products submitted to regulators with subsequently published reports of the same trials. Although the available evidence relates principally to clinical trials of commercial interest, this should not be taken to imply that academia has higher standards of reporting – available evidence suggests that it does not5 – but rather that sponsorship bias is more difficult to study in non-commercial trials.

One manifestation of sponsor bias is choosing comparators to give the impression that new drugs are more effective or safer than existing alternatives.6 Later in this essay, I present examples showing how design distortions can alter the conclusion of a clinical trial or mislead readers.

Sponsorship bias reflected in reporting biases

In the two decades that followed Hemminki’s observations, the problems of sponsorship bias and its most important consequences were investigated and defined by a handful of investigators who assessed projects submitted to regulators or research ethics committees. Easterbrook and colleagues found that only 48% of trials submitted to an ethics committee between 1984 and 1987 were published, and that publication was more likely if statistically significant differences had been found.7

In 1992, Dickersin and colleagues added another dimension. Using a similar sample, they documented publication bias associated with external sponsorship. Their questionnaire survey found that the investigators were reluctant to submit reports of disappointing research for publication, although NIH-funded studies had a higher publication rate than industry-backed studies.8

Melander and colleagues assessed 42 placebo-controlled trials of selective serotonin reuptake inhibitors submitted to the Swedish regulators between 1983 and 1999. They found that analysis methods set out in the protocols were ignored and instead the analyses most favourable to new products were presented. Multiple publications of the same ‘positive’ trials were also frequent.9

Chan and colleagues followed up a cohort of 274 trial protocols submitted to a Danish research ethics committee in the 1990s and compared their content with subsequent reports. This comparison revealed systematic differences in outcome reporting associated with the statistical significance of the comparisons. Higher rates of significant harms were associated with a lower likelihood that these would be reported publicly. The investigators denied suppressing data despite clear evidence to the contrary.10

An important innovation in methods used to study sponsorship bias was reported in two papers published in 2008.11,12 These compared information in publications with that in freely available Food and Drug Administration reviewers’ reports on the strengths and weaknesses of the whole population of clinical trials submitted by sponsors in support of licensing applications. This investigative innovation was important because these Food and Drug Administration reports are often very detailed and list all the trials relevant to the indications specified in the licensing applications. Although those investigating possible sponsorship bias did not see the actual submissions, they had access to the views of the regulatory reviewers, who in some instances had re-run the original analyses with the data provided by the sponsor with each submission. Both these assessments11,12 revealed major discrepancies between Food and Drug Administration assessments and the conclusions of subsequently published reports of the same trials.

In a further important analysis, Psaty and Kronmal compared internal sponsor documents and the mortality data presented to the Food and Drug Administration with the publications of two trials of the drug rofecoxib in Alzheimer’s disease and cognitive impairment. The sponsor concealed from the Food and Drug Administration its own internal analysis showing excess mortality in the intervention arms, while claiming no excess mortality by the simple expedient of ignoring the deaths in the two off-treatment weeks of follow-up. Neither publication contained any statistical analysis of mortality, and the reporting of the time between exposure and death was unclear.13

Understanding sponsorship bias

Thanks to the work of groups who accessed regulatory material by statutory means, litigation or media pressure, the decade beginning in 2009 witnessed major advances in understanding sponsorship bias and its effects. All three of the examples which follow have used Hemminki’s comparison method.

The group working in the German Institute for Quality and Efficiency in Health Care, empowered by legislation to access regulatory submissions, has produced cogent evidence of systematic selective reporting of data, both to regulators and in publications, with overemphasis on benefits and under-reporting or non-reporting of harms.14–17

By highlighting the discrepancies among different sources of data for the same trials, primarily publications (when available) and regulatory documents, other assessments have led to a fundamental reappraisal of the properties of drugs and biologics.18–30 More detail of each of these assessments is provided in Figure 1 (adapted from Jefferson et al.31).

Figure 1.

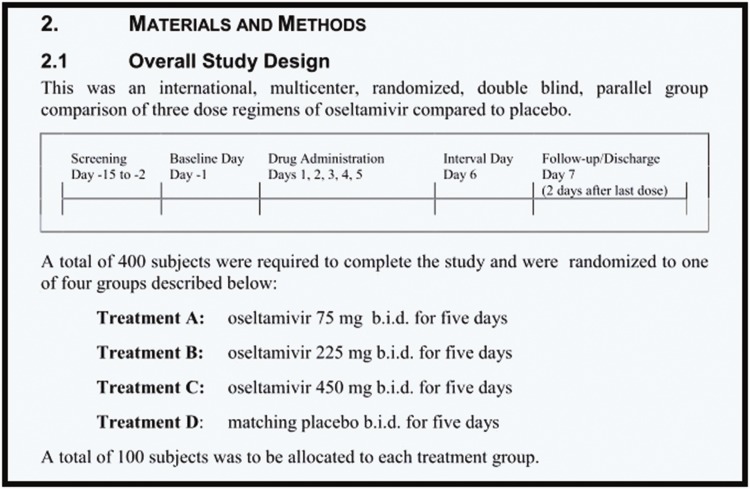

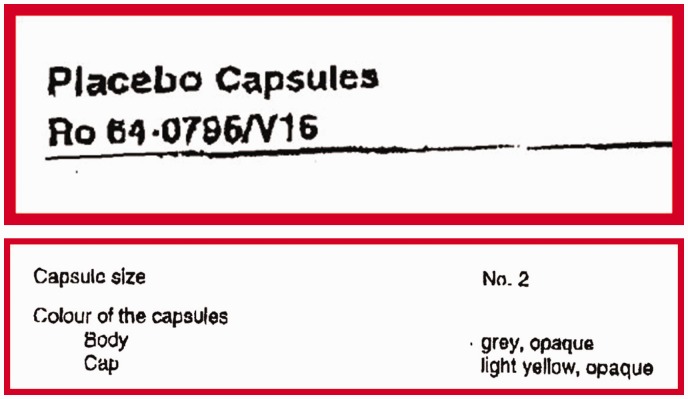

Synopsis extract of trial WP 16263 showing overall study design with matching placebo.


Two other reviews of comparison studies looked specifically at how harms were reported in publications and in their regulatory counterparts, and came to similar conclusions.32,33 At the time of writing, the assessment by Golder et al.33 is the more up-to-date and comprehensive of the two (it includes some of the studies already cited); it concluded that ‘the percentage of adverse events that would have been missed had each analysis relied only on the published versions ranged between 43% and 100%, with a median of 64%’.

In summary, this comprehensive body of evidence documented distortions by comparing published records in detail with information that is not usually publicly visible. It revealed the bias introduced by sponsors’ attempts at ‘establishing the commercial value of the article’ – to use Sollmann’s words written over a century ago.

Although evidence of sponsor bias is now undeniable, it does not show how distortions are generated, or how they are sometimes hidden or misunderstood. I shall illustrate this with three examples with which I am particularly familiar.

Tamiflu

The influenza antiviral Tamiflu (oseltamivir, Roche) is a drug that has been

extensively stockpiled as a precaution against a predicted influenza pandemic. In

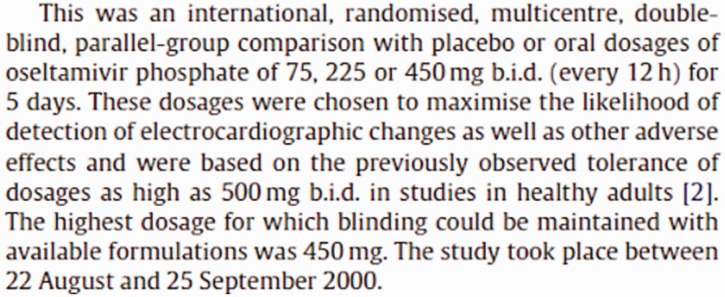

2010, Roche published a randomised, double-blind trial of Tamiflu34 in three different strengths, daily for 5 days, versus placebo, in 395 volunteers.35 The ‘Methods’ section of the publication reported that:

Figure 1 shows the synopsis of the matching 8544-page clinical study report.

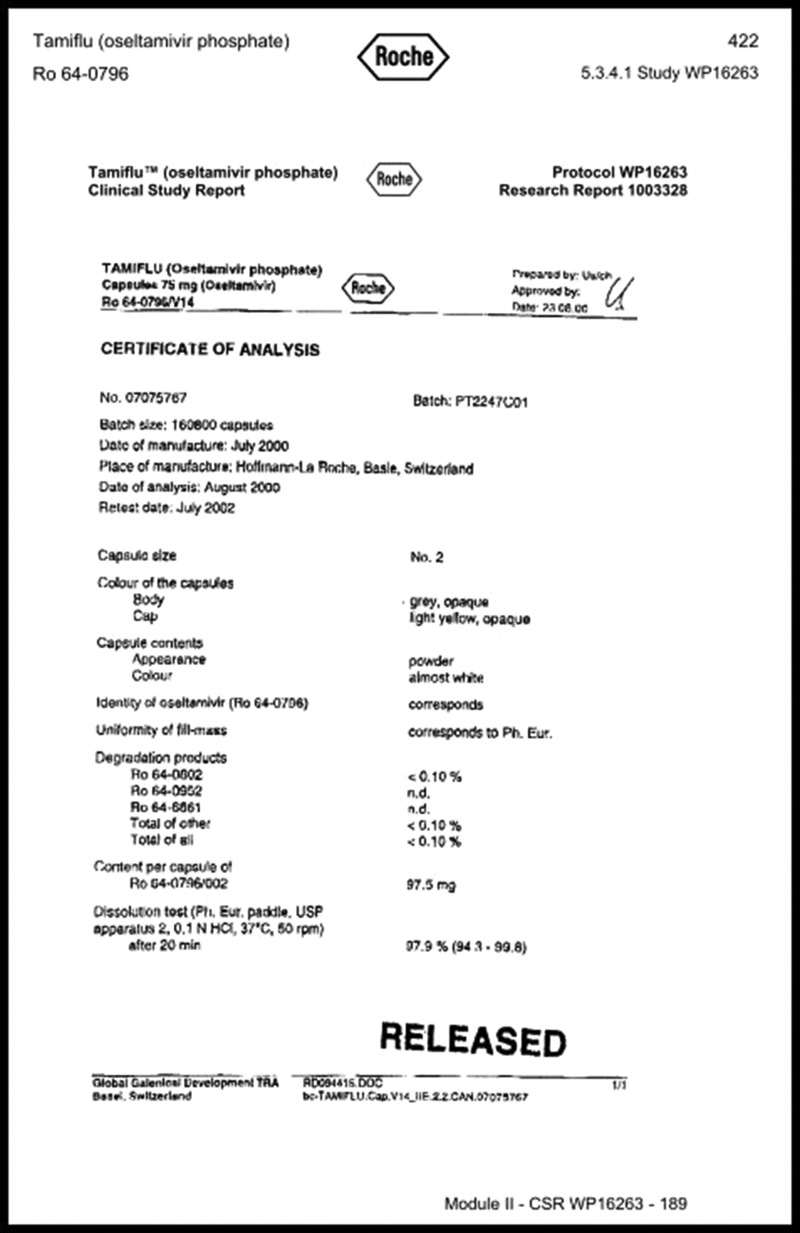

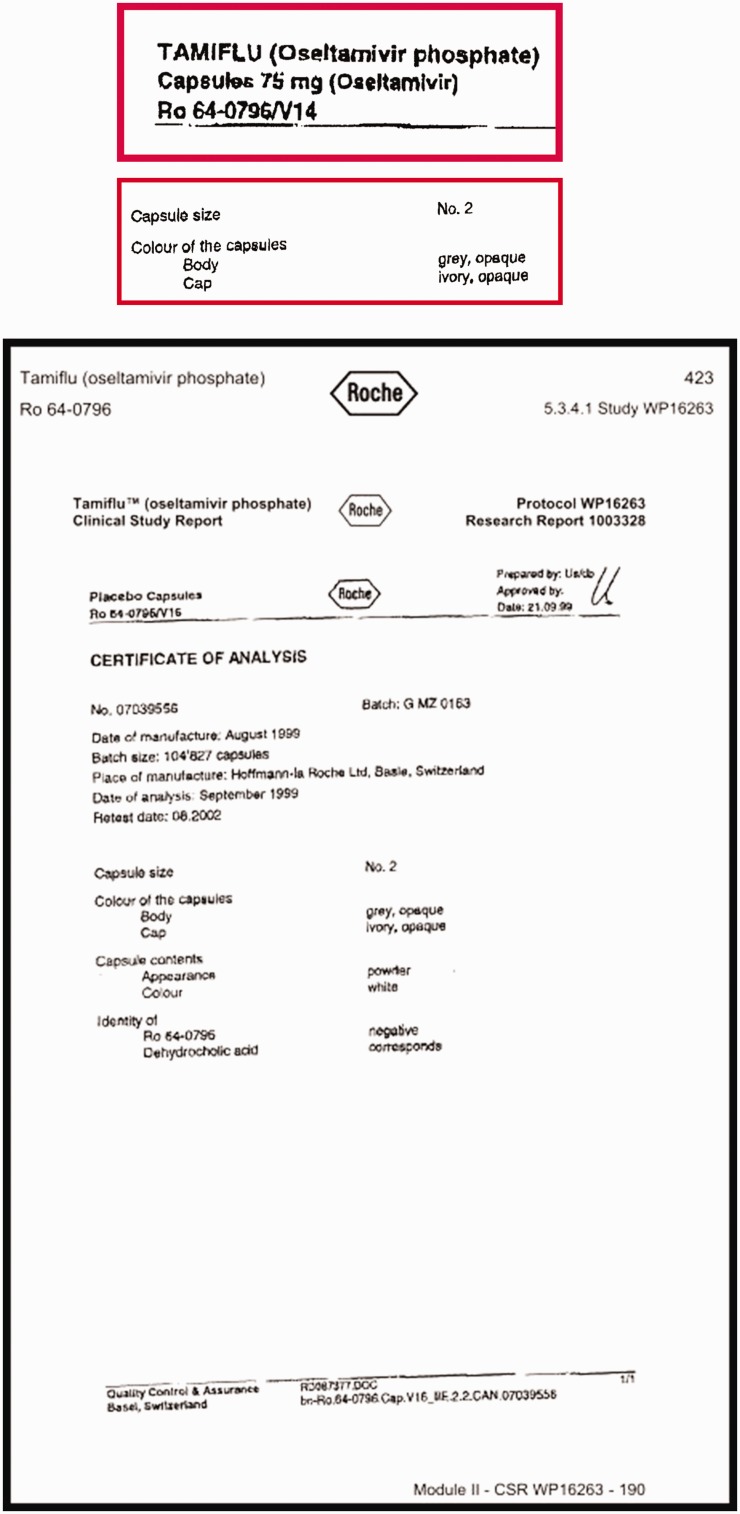

Synopses of clinical study reports are usually very useful, exhaustive and highly structured, and their content is usually consistent with the rest of the document. However, when we reviewed the certificates of analysis for oseltamivir, statutory regulatory documents in which manufacturers describe the active intervention and its comparator, we discovered that the capsules containing oseltamivir and its placebo, although of the same size, had different coloured caps. This meant that the trial could not have been double-blind, thus raising the likelihood of bias in assessing outcomes. It is difficult to know whether ‘mistakes’ of this kind are simple errors that went undetected and unremarked upon by regulators. Editors and peer reviewers, who do not normally see such documents, clearly cannot identify them either.

Figure 2 displays the certificates of analysis from Tamiflu trial WP 16263 describing the active capsule and its placebo. The relevant passages have been enlarged and bordered in red.

Figure 2.

Certificates of analysis from Tamiflu trial WP 16263 describing the active capsule and its placebo overall and with details framed and enlarged (in red frames).


Gardasil

The quadrivalent human papillomavirus vaccine Gardasil, made by Merck, was launched to provide protection against papillomavirus infection, a precursor of cancer of the cervix. The 2011 New England Journal of Medicine publication of pivotal trial V501-020 describes the comparator to the vaccine as ‘AAHS containing placebo’.36 Descriptions of the same comparator in other sources differ notably (Table 1). All contain the word ‘placebo’.

Table 1.

Reporting format for the control used in Gardasil trial V501-020.

| Trial | Clinical study report | Register | Publication |
|---|---|---|---|
| V501-020 | 225 mcg of aluminium as AAHS in normal saline | No ingredients listed; only states ‘placebo’ (NCT00090285) | AAHS containing placebo36 |

AAHS: amorphous aluminium hydroxyphosphate sulphate.

Although AAHS is described as a ‘placebo’ as many as 50 times in the publication, a placebo by definition has no active ingredient. Merck’s adjuvant, amorphous aluminium hydroxyphosphate sulphate (AAHS), is neither a placebo nor inactive: it is a very potent adjuvant contained in the vaccine, whose purpose is to stimulate immunity and maintain a high and prolonged immune response. Its use as a control may mask both harms and differences in effectiveness between arms, something that none of the four data sources reports. The manuscript does not explain any of this, nor does it warn readers of the presence of fragments of DNA, of possible recombinant origin, in the AAHS mesh.

The practical consequence of using the Gardasil adjuvant as a control is that the difference being estimated is that between Gardasil (vaccine antigens plus adjuvant) and adjuvant alone, so any effects of the adjuvant itself, including harms, occur in both arms and cannot appear as a difference between them. Mistakes and misreporting of this type seem unlikely to have occurred by chance, and leave readers wondering how regulators and journal editors could have missed the facts, considering that all the pivotal trials of Gardasil had the same comparator. What is needed is a trial comparing Gardasil (which contains AAHS adjuvant) with an inactive placebo control.

We have drawn attention to similar reporting bias in four other major human papillomavirus trials.37,38

Statins

The third and last example of sponsor bias comes from the JUPITER39 trial of the cholesterol-lowering drug rosuvastatin. JUPITER tested the effects of the statin in preventing primary cardiovascular events in asymptomatic people with elevated C-reactive protein.40 JUPITER is a significant trial because it was the first to test statin use for primary prevention in people with low levels of LDL cholesterol and elevated C-reactive protein. It is on the basis of the results of trials like JUPITER that the indications for statin use have been expanded to include primary prevention.

Although there is little debate about the benefits of statin therapy in those at higher risk of cardiovascular disease, attention has recently shifted to the trade-off between benefits and harms in those prescribed statins for primary prevention. Higher doses of statins are known to be associated with rhabdomyolysis, leading to renal and respiratory failure, but the debate now centres on uncertainty about the rates of less serious harms (especially myalgia and low-grade myopathy) in populations at lower risk of cardiovascular disease. Although these potential harms are not immediately life-threatening, their impact on quality of life and remaining mobility, especially in frail elderly people, makes them potentially very important as statins are extended to wider and older populations.41 The debate swings between the conclusions drawn by the influential Oxford-based Cholesterol Treatment Trialists’ Collaboration from its series of individual participant data meta-analyses42 on one side, and evidence from observational studies on the other.43,44 The Cholesterol Treatment Trialists’ director insists that the benefits of statin use in primary prevention of cardiovascular disease outweigh the harms (the incidence of which the Collaboration estimates at 1 in 10,000 users).45 These claims seem to be contradicted by numerous large surveys and observational studies, which report that users stop taking statins mainly because of harms.

The original Cholesterol Treatment Trialists’ protocol46 made no mention of harms, concentrating only on potential benefits (see Figure 2), whereas by 2016 the Cholesterol Treatment Trialists’ Collaboration was actively seeking harms data from its trial holdings, as it had based its analyses of individual participant data mostly on publications.47 No results from this proposed analysis were available at the time of writing.

Bias and distortion lie in originally ignoring harms and in analysing individual

data without reference to the source clinical study reports and especially in not

checking the definitions, severity scales and case report forms. This is where such

harms would be recorded. Examination of the relevant parts of the JUPITER protocol

and blank or model case report form would provide the answer. The JUPITER protocol

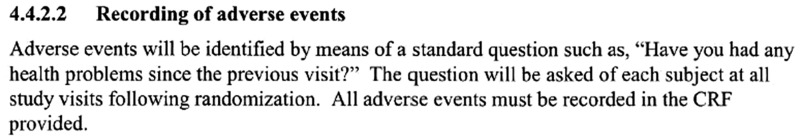

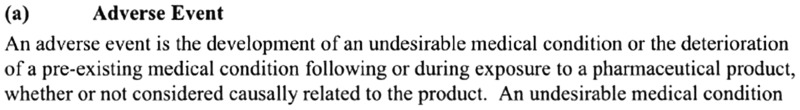

suggests recording adverse events as follows:

In addition, non-cardiovascular adverse events are defined as follows:

Extracts are taken from Astra Zeneca’s protocol for JUPITER.

The publication by Ridker et al.40 states that ‘there were no significant differences between the two study groups with regard to muscle weakness’, although this statement refers to serious muscular weakness. It is also of note that all specific definitions regarding possible harms are related to cardiovascular endpoints (i.e. benefits). The absence of common methods and definitions across trials is likely to raise questions about the validity of general statements such as those by Ridker et al.

These examples of distortions in the design and reporting of trials of three blockbuster interventions suggest deeply ingrained sponsorship bias in everyday clinical trial science. Whereas the first two examples could be ascribed to Sollmann’s ‘establishing the commercial value of the article’, the statin meta-studies were organised and run by well-respected academic centres in a network of trialists that is only partially pharma-funded. The dangers of not referring to clinical study reports, protocols and manuals of operations have not been highlighted here. It is important to recognise that undetected but plausible adverse effects of statins could be causing low-grade harms that make life more difficult for the hundreds of millions of people who are now being prescribed these drugs.

Tackling sponsorship bias

In any list of priorities for preventing sponsorship bias, disclosure, openness and the avoidance of Sollmann’s ‘secrecy’ rank as the first and most obvious measures. The examples and snapshots of regulatory documents presented in this paper did not fall out of the sky: they were the result of many years of work and effort by my colleagues and me. The degree of scrutiny involved in reviewing regulatory documents also points to the need for quick access (when the intervention has only just been licensed); a sufficient body of researchers willing and able to do this work; and the identification of priority topics (perhaps on the basis of cost and potential benefit) on which to concentrate scarce reviewing effort.

Legislation would probably be necessary to enable early access by accredited groups worldwide to regulatory submissions and to open up the editorial process completely to the use of regulatory material.

Most of these measures were proposed by Garattini and Chalmers more than a decade ago, with little political engagement.48 In 1968, at U.S. Congressional Hearings, the statistician Donald Mainland suggested that Congress ‘take the evaluation of drugs entirely out of the producer’s hands, after the completion of toxicological testing on animals’.49 Ultimately, separating those who are keen to establish the value of healthcare interventions for health from those who promote their commercial worth will be the only efficient way of protecting the interests of the public.

Acknowledgements

The author thanks the Dutch Medicines Evaluation Board for providing the JUPITER trial clinical study report.

Declarations

Competing Interests

TJ is funded by the Nordic Cochrane Centre and NIHR for his work on statins. Other disclosures are listed at https://restoringtrials.org/competing-interests-tom-jefferson/.

Funding

TJ received a fee from the James Lind Initiative for preparing this article.

Ethics approval

Not applicable.

Guarantor

TJ.

Contributorship

Sole authorship.

Provenance

Invited article from the James Lind Library.

References

- 1. Gøtzsche PC, Jørgensen AW. Opening up data at the European Medicines Agency. BMJ 2011; 342: d2686.

- 2. Sollmann T. The crucial test of therapeutic evidence. JAMA 1917; 69: 198–10.

- 3. Hemminki E. Study of information submitted by drug companies to licensing authorities. BMJ 1980; 280: 833–836.

- 4. Dickersin K, Chalmers I. Recognising, investigating and dealing with incomplete and biased reporting of clinical research: from Francis Bacon to the World Health Organisation. JLL Bulletin: Commentaries on the history of treatment evaluation. See https://www.jameslindlibrary.org/articles/recognising-investigating-and-dealing-with-incomplete-and-biased-reporting-of-clinical-research-from-francis-bacon-to-the-world-health-organisation/ (last checked 7 March 2020).

- 5. Goldacre B, DeVito NJ, Heneghan C, Irving F, Bacon S, Fleminger J, et al. Compliance with requirement to report results on the EU Clinical Trials Register: cohort study and web resource. BMJ 2018; 362: k3218. See https://www.bmj.com/content/bmj/362/bmj.k3218.full.pdf (last checked 7 March 2020).

- 6. Mann H, Djulbegovic B. Comparator bias: why comparisons must address genuine uncertainties. JLL Bulletin: Commentaries on the history of treatment evaluation. See https://www.jameslindlibrary.org/articles/comparator-bias-why-comparisons-must-address-genuine-uncertainties/ (last checked 7 March 2020).

- 7. Easterbrook PJ, Berlin JA, Gopalan R, Matthews DR. Publication bias in clinical research. Lancet 1991; 337: 867–872.

- 8. Dickersin K, Min YI, Meinert CL. Factors influencing publication of research results: follow-up of applications submitted to two institutional review boards. JAMA 1992; 267: 374–378.

- 9. Melander H, Ahlqvist-Rastad J, Meijer G, Beermann B. Evidence b(i)ased medicine – selective reporting from studies sponsored by pharmaceutical industry: review of studies in new drug applications. BMJ 2003; 326: 1171–1173. See https://www.bmj.com/content/326/7400/1171.full.pdf+html (last checked 7 March 2020).

- 10. Chan A-W, Hróbjartsson A, Haahr M, Gøtzsche PC, Altman DG. Empirical evidence for selective reporting of outcomes in randomized trials: comparison of protocols to publications. JAMA 2004; 291: 2457–2465.

- 11. Turner EH, Matthews AM, Linardatos E, Tell RA, Rosenthal R. Selective publication of antidepressant trials and its influence on apparent efficacy. N Engl J Med 2008; 358: 252–260.

- 12. Rising K, Bacchetti P, Bero L. Reporting bias in drug trials submitted to the Food and Drug Administration: review of publication and presentation. PLoS Med 2008; 5(11): e217.

- 13. Psaty BM, Kronmal RA. Reporting mortality findings in trials of rofecoxib for Alzheimer disease or cognitive impairment. JAMA 2008; 299: 1813–1817.

- 14. Eyding D, Lelgemann M, Grouven U, Harter M, Kromp M, Kaiser T, et al. Reboxetine for acute treatment of major depression: systematic review and meta-analysis of published and unpublished placebo and selective serotonin reuptake inhibitor controlled trials. BMJ 2010; 341: c4737.

- 15. Wieseler B, Kerekes MF, Vervoelgyi V, McGauran N, Kaiser T. Impact of document type on reporting quality of clinical drug trials: a comparison of registry reports, clinical study reports, and journal publications. BMJ 2012; 344: d8141.

- 16. Wieseler B, Wolfram N, McGauran N, Kerekes MF, Vervölgyi V, Kohlepp P, et al. Completeness of reporting of patient-relevant clinical trial outcomes: comparison of unpublished clinical study reports with publicly available data. PLoS Med 2013; 10: e1001526.

- 17. Köhler M, Haag S, Biester K, Brockhaus AC, McGauran N, Grouven U, et al. Information on new drugs at market entry: retrospective analysis of health technology assessment reports versus regulatory reports, journal publications, and registry reports. BMJ 2015; 350: h796. See https://www.bmj.com/content/bmj/350/bmj.h796.full.pdf (last checked 7 March 2020).

- 18. Coyne DW. The health-related quality of life was not improved by targeting higher hemoglobin in the Normal Hematocrit Trial. Kidney Int 2012; 82: 235–241.

- 19. Jefferson T, Jones MA, Doshi P, Del Mar CB, Heneghan CJ, Hama R, et al. Neuraminidase inhibitors for preventing and treating influenza in healthy adults and children. Cochrane Database Syst Rev 2012; 1: CD008965.

- 20. Rodgers MA, Brown JVE, Heirs MK, Higgins JPT, Mannion RJ, Simmonds MA, et al. Reporting of industry funded study outcome data: comparison of confidential and published data on the safety and effectiveness of rhBMP-2 for spinal fusion. BMJ 2013; 346: f3981.

- 21. Fu R, Selph S, McDonagh M, Peterson K, Tiwari A, Chou R, et al. Effectiveness and harms of recombinant human bone morphogenetic protein-2 in spine fusion: a systematic review and meta-analysis. Ann Intern Med 2013; 158: 890–902.

- 22. Jefferson T, Jones MA, Doshi P, Del Mar CB, Hama R, Thompson MJ, et al. Neuraminidase inhibitors for preventing and treating influenza in healthy adults and children. Cochrane Database Syst Rev 2014; 4: CD008965.

- 23. Vedula SS, Li T, Dickersin K. Differences in reporting of analyses in internal company documents versus published trial reports: comparisons in industry-sponsored trials in off-label uses of gabapentin. PLoS Med 2013; 10: e1001378.

- 24. Maund E, Tendal B, Hróbjartsson A, Jørgensen KJ, Lundh A, Schroll J, et al. Benefits and harms in clinical trials of duloxetine for treatment of major depressive disorder: comparison of clinical study reports, trial registries, and publications. BMJ 2014; 348: g3510.

- 25. Lawrence K, Beaumier J, Wright J, Perry T, Puil L, Turner E, et al. Olanzapine for schizophrenia: what do the unpublished clinical trials reveal? Presented at the 23rd Cochrane Colloquium. Vienna, October 2015 (presentation 03.1). See https://abstracts.cochrane.org/2015-vienna/olanzapine-schizophrenia-what-do-unpublished-clinical-trials-reveal (last checked 7 March 2020).

- 26. Cosgrove L, Vannoy S, Mintzes B, Shaughnessy AF. Under the influence: the interplay among industry, publishing, and drug regulation. Account Res 2016; 23: 257–279.

- 27. Hodkinson A, Gamble C, Smith CT. Reporting of harms outcomes: a comparison of journal publications with unpublished clinical study reports of orlistat trials. Trials 2016; 17: 207.

- 28. Jureidini J, Amsterdam J, McHenry L. The citalopram CIT-MD-18 pediatric depression trial: deconstruction of medical ghostwriting, data mischaracterisation and academic malfeasance. Int J Risk Safety Med 2016; 28: 33–43.

- 29. Schroll JB, Penninga EI, Gøtzsche PC. Assessment of adverse events in protocols, clinical study reports, and published papers of trials of orlistat: a document analysis. PLoS Med 2016; 13: e1002101.

- 30. Mayo-Wilson E, Li T, Fusco N, Bertizzolo L, Canner JK, Cowley T, et al. Cherry-picking by trialists and meta-analysts can drive conclusions about intervention efficacy. J Clin Epidemiol 2017; 91: 95–110.

- 31. Jefferson T, Doshi P, Boutron I, Golder S, Heneghan C, Hodkinson A, et al. When to include clinical study reports and regulatory documents in systematic reviews. BMJ Evid Based Med. See https://ebm.bmj.com/content/ebmed/23/6/210.full.pdf (last checked 7 March 2020).

- 32. Hughes S, Cohen D, Jaggi R. Differences in reporting serious adverse events in industry sponsored clinical trial registries and journal articles on antidepressant and antipsychotic drugs: a cross-sectional study. BMJ Open 2014; 4: e005535.

- 33. Golder S, Loke YK, Wright K, Norman G. Reporting of adverse events in published and unpublished studies of health care interventions: a systematic review. PLoS Med 2016; 13(9): e1002127.

- 34. WP16263. A randomized, double blind, parallel group, placebo-controlled study of the effect of oseltamivir on ECG intervals in healthy subjects. See https://datadryad.org/resource/doi:10.5061/dryad.77471 (last checked 7 March 2020).

- 35. Dutkowski R, Smith JR, Davies BE. Safety and pharmacokinetics of oseltamivir at standard and high dosages. Int J Antimicrob Agents 2010; 35: 461–467.

- 36. Giuliano AR, Palefsky JM, Goldstone S, Moreira ED Jr, Penny ME, Aranda C, et al. Efficacy of quadrivalent HPV vaccine against HPV infection and disease in males. N Engl J Med 2011; 364: 401–411.

- 37. Doshi P, Jefferson T. Clinical study reports of randomised controlled trials: an exploratory review of previously confidential industry reports. BMJ Open 2013; 3: e002496.

- 38. Doshi P, Bourgeois F, Hong K, Jones M, Lee H, Shamseer L, et al. Additional trials within scope: a follow-up to our “Call to action: RIAT restoration of a previously unpublished methodology in Gardasil vaccine trials”. See https://www.bmj.com/content/346/bmj.f2865/rr-9 (last checked 7 March 2020).

- 39. JUPITER (Justification for the Use of statins in Primary prevention: an Intervention Trial Evaluating Rosuvastatin). A randomized, double-blind, placebo controlled, multicenter, Phase III Study of rosuvastatin (CRESTOR) 20 mg in the primary prevention of cardiovascular events among subjects with low levels of LDL-cholesterol and elevated levels of C-Reactive Protein. JUPITER trial protocol (2003 and 2006 versions).

- 40. Ridker PM, Danielson E, Fonseca FAH, Genest J, Gotto AM Jr, Kastelein JJ, et al. Rosuvastatin to prevent vascular events in men and women with elevated C-reactive protein. N Engl J Med 2008; 359: 2195–2207.

- 41. Cholesterol Treatment Trialists’ (CTT) Collaboration. Efficacy and safety of statin therapy in older people: a meta-analysis of individual participant data from 28 randomised controlled trials. Lancet 2019; 393: 407–415.

- 42. Collins R, Reith C, Emberson J, Armitage J, Baigent C, Blackwell L, et al. Interpretation of the evidence for the efficacy and safety of statin therapy. Lancet 2016; 388: 2532–2561. See https://www.thelancet.com/journals/lancet/article/PIIS0140-6736(16)31357-5/fulltext (last checked 7 March 2020).

- 43. Abramson JD, Rosenberg HG, Jewell N, Wright JM. Should people at low risk of cardiovascular disease take a statin? BMJ 2013; 347: f6123.

- 44. Malhotra A. Saturated fat is not the major issue. BMJ 2013; 347: f6340.

- 45. Demasi M. Statin wars: have we been misled about the evidence? A narrative review. Br J Sports Med 2018; 52: 905–909.

- 46. Cholesterol Treatment Trialists’ (CTT) Collaboration. Protocol for a prospective collaborative overview of all current and planned randomized trials of cholesterol treatment regimens. Am J Cardiol 1995; 75: 1130–1134.

- 47. Cholesterol Treatment Trialists’ (CTT) Collaboration. Protocol for analyses of adverse event data from randomized controlled trials of statin therapy. Am Heart J 2016; 176: 63–69.

- 48. Garattini S, Chalmers I. Patients and the public deserve big changes in evaluation of drugs. BMJ 2009; 338: b1025.

- 49. Altman DG. Donald Mainland: anatomist, educator, thinker, medical statistician, trialist, rheumatologist. JLL Bulletin: Commentaries on the history of treatment evaluation. See https://www.jameslindlibrary.org/articles/donald-mainland-anatomist-educator-thinker-medical-statistician-trialist-rheumatologist/ (last checked 7 March 2020).