Abstract

Objectives

The objective was to assess the feasibility of using spaced multiple‐choice questions (MCQs) to teach residents during their pediatric emergency department (PED) rotation and determine whether this teaching improves knowledge retention about pediatric rashes.

Methods

Residents rotating in the PED at four sites were randomized to four groups: pretest and intervention, pretest and no intervention, no pretest and intervention, and no pretest and no intervention. Residents in the intervention groups were e‐mailed quizlets of two MCQs every other day over 4 weeks (20 questions total) via an automated e‐mail service, with answers e‐mailed 2 days later. Retention of knowledge was assessed 70 days after enrollment with a posttest of 20 unique, content‐matched questions.

Results

Between August 2015 and November 2016, a total of 234 residents were enrolled. The completion rate of individual quizlets ranged from 93% on the first to 76% on the 10th quizlet. Sixty‐six residents (55%) completed all 10 quizlets. One‐hundred seventy‐three residents (74%) completed the posttest. There was no difference in posttest scores between residents who received a pretest (61.0% ± 14.5%) and those who did not (64.6% ± 14.0%; mean difference = –3.7, 95% confidence interval [CI] = –8.0 to 0.6) nor between residents who received the intervention (64.5% ± 13.3%) and those who did not receive the intervention (61.2% ± 15.2%; mean difference = 3.2, 95% CI = –1.1 to 7.5). For those who received a pretest, scores improved from the pretest to the posttest (46.4% vs. 60.1%, respectively; 95% CI = 9.7 to 19.5).

Conclusion

Providing spaced MCQs every other day to residents rotating through the PED is a feasible teaching tool with a high participation rate. There was no difference in posttest scores regardless of pretest or intervention. Repeated exposure to the same MCQs and an increase in the number of questions sent to residents may increase the impact of this educational strategy.

Teaching postgraduate resident physicians (residents) in the pediatric emergency department (PED) is challenging due to variability in training program, training level, shift timing, and seasonality of pediatric patient illness. Some learners may not learn key topics due to a lack of exposure.1 Learners completing rotations in pediatric emergency medicine (PEM) work varied shift times and are often not available to participate in group learning activities. Learner preferences of millennials and digital natives are also shifting toward easy‐to‐use technology and flexible learning environments.2

Educators in the PED have started utilizing asynchronous e‐learning strategies using Web modules and more recently podcasts.3, 4, 5, 6, 7 These delivery modalities are frequently cited by learners as preferred but often show poor participation rates when integrated into the learner curriculum.5, 8

Retrieval practice is an effective learning strategy in which learners recall information they have previously seen using concept maps, flashcards, free‐recall, or multiple‐choice questions (MCQs).9, 10, 11, 12, 13 MCQs are commonly used for assessment purposes to determine how much knowledge each learner was able to absorb during a teaching encounter; however, using MCQs as practice items has been shown to improve retention of knowledge markedly more than passive educational activities (e.g., lectures, reading).14, 15, 16, 17 Utilizing MCQs as an effective and low‐cost teaching tool for resident physicians completing a rotation in PEM has been incompletely described.18 Although learning science has informed educators about best learning practices, application of these principles remains challenging, and there is potential to use technology to create innovative and low‐cost methods to deliver this curriculum.

We hypothesized that sending easily accessible MCQs at frequent, regular intervals during a PEM rotation to residents would be an educational intervention with high participation rates and that completion of these MCQs would improve knowledge on the diagnosis and treatment of pediatric rashes compared to residents who experience standard clinical learning as measured by a posttest 2 months after the completion of the rotation.

Methods

Study Design

This was a prospective, randomized controlled, multicenter educational trial using Solomon four‐group design to control for pretest sensitization.19 Institutional review board approvals were obtained at each of the four study sites.

Study Setting and Population

This study was performed at four PEM sites (Boston Children's Hospital, Children's Hospital Los Angeles, Children's National Medical Center, Denver Health Medical Center) across the United States. Inclusion criteria for study participants included residents from emergency medicine, pediatrics, combined internal medicine–pediatrics, and family medicine rotating for at least 4 consecutive weeks in a PED. Exclusion criterion was limited to residents who had previously enrolled in the study.

Study Protocol

The educational intervention was designed using a six‐step process from Kern.20 A needs assessment was conducted of Denver‐based emergency medicine, pediatrics, and family medicine residents, who ranked the usefulness of common teaching topics within PEM. These data suggested that teaching about pediatric rash diagnosis and treatment would be perceived as useful to residents across training years and programs due to low baseline comfort with rashes and the rarity of some rashes in the clinical environment. The goal of this intervention was to determine if residents would consistently engage in the learning intervention and also to determine if this would lead to an increase in resident knowledge of pediatric rash diagnosis and treatment. Principles utilized in developing the intervention were low cost, easy accessibility for participants, and automation of the intervention.

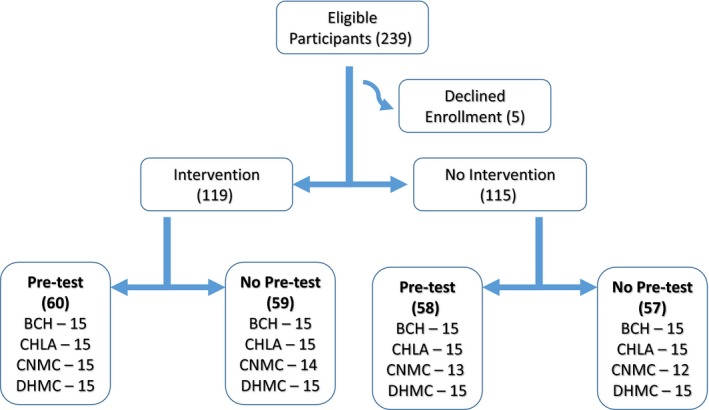

The administration of a pretest can have a significant positive effect on learning.20 To control for this effect, a Solomon four‐group design was used in which participants in both the control group and the intervention group are randomized to receive or not receive a pretest. Stratified block randomization at each site assigned 15 enrollment packets to each of the following four groups: 1) pretest and intervention, 2) pretest and no intervention, 3) no pretest and intervention, and 4) no pretest and no intervention (Figure 1). Study packets with randomization assignments were constructed by the research coordinator at the primary site and mailed to each of the other three sites. Site directors and enrollees were blinded to the randomization, and faculty at each site were not informed about the group of any individual resident.
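As an illustration of the allocation described above, the following Python sketch generates packet sequences with 15 packets per group at each site. The block size of four, the fixed seed, and the function names are assumptions made for this example; the source does not report the block size or how the sequence was actually generated.

```python
import random
from collections import Counter

ARMS = [
    "pretest + intervention",
    "pretest + no intervention",
    "no pretest + intervention",
    "no pretest + no intervention",
]
SITES = ["BCH", "CHLA", "CNMC", "DHMC"]


def packet_sequence(n_blocks, rng):
    """Return one site's packet order built from permuted blocks of the four arms."""
    sequence = []
    for _ in range(n_blocks):
        block = ARMS[:]       # each block holds every arm exactly once (assumed block size: 4)
        rng.shuffle(block)    # random order within the block
        sequence.extend(block)
    return sequence


def allocate(sites, n_blocks=15, seed=2015):
    """Build a separate sequence per site, so site acts as the stratification factor."""
    rng = random.Random(seed)  # fixed seed only to make the sketch reproducible
    return {site: packet_sequence(n_blocks, rng) for site in sites}


allocation = allocate(SITES)
for site, packets in allocation.items():
    print(site, len(packets), dict(Counter(packets)))
```

With 15 blocks of four, every site ends up with 60 packets and exactly 15 per group, which matches the packet counts stated in the text.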

Figure 1.

CONSORT diagram. BCH = Boston Children's Hospital; CHLA = Children's Hospital Los Angeles; CNMC = Children's National Medical Center; DHMC = Denver Health Medical Center.

Potential research participants were approached by a faculty representative at each site within the first 3 days of their rotation. After signing a consent form, the residents opened a sealed research packet that contained an enrollment survey and a pretest if randomized to a pretest group. Residents randomized to the pretest groups completed the 10‐question MCQ pretest on paper. The enrollment survey included questions on demographic information, training program and training year. Participants were asked to rank their comfort level diagnosing and treating pediatric rashes on a 5‐point Likert scale (1 = very uncomfortable and 5 = very comfortable) and how many weeks they had previously completed on a pediatric dermatology rotation. Each site director e‐mailed the research coordinator the research packet number, resident name, and resident e‐mail address within 24 hours of enrollment. Within 24 hours of receiving this information, the research coordinator e‐mailed enrollees informing them if they were randomized to the intervention group. The completed consent forms, enrollment surveys, and pretests were batched and mailed to the research coordinator at regular intervals. Residents who chose to enroll were given a study incentive of a printed study version of the 2013 Pediatric Emergency Medicine Question Book (PEMQBook 2013)21 in which all rash‐related questions were removed (78 total questions). These excluded rash questions were offered to residents at the completion of the study. Residents were told prior to enrollment they would need to return the PEMQBook (participation incentive) to their site director if they did not complete the posttest.

Residents who were randomized to the intervention groups were e‐mailed a quizlet of two MCQs every 2 days (20 questions total) starting on the day of enrollment and then were sent a 20‐question posttest 70 days after enrollment. Residents randomized to the “no intervention” group were sent an e‐mail confirming enrollment and then did not receive additional contact until the posttest that was sent 70 days from the time of enrollment.
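The delivery timeline can be sketched in a few lines of Python. The enrollment date below is hypothetical, the 2‐day answer lag is taken from the Abstract, and the function and parameter names are illustrative; the study ran this schedule through automated e‐mail campaigns rather than custom code.

```python
from datetime import date, timedelta


def build_schedule(enrolled, n_quizlets=10, spacing_days=2,
                   answer_lag_days=2, posttest_day=70):
    """Return a date-sorted list of (date, event) tuples for one resident."""
    events = []
    for i in range(n_quizlets):
        sent = enrolled + timedelta(days=i * spacing_days)
        events.append((sent, f"quizlet {i + 1} (2 MCQs)"))
        # Answers follow each quizlet after a fixed lag.
        events.append((sent + timedelta(days=answer_lag_days),
                       f"answers to quizlet {i + 1}"))
    # The posttest is anchored to enrollment, not to the last quizlet.
    events.append((enrolled + timedelta(days=posttest_day), "posttest (20 MCQs)"))
    return sorted(events)


# Hypothetical enrollment date, used only for illustration.
schedule = build_schedule(date(2015, 8, 3))
for when, what in schedule:
    print(when.isoformat(), what)
```

Under these assumptions the tenth quizlet goes out 18 days after enrollment, leaving roughly 7 weeks between the last teaching question and the posttest.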

The 10‐question pretest and 20‐question posttest were created using questions included in the PEMQBook 2013.21 These pretest and posttest questions were selected via an iterative process matching content, question quality, difficulty index, and rash category type. Pretest and posttest questions had similar average difficulty indexes (0.796 and 0.818, respectively) based on preexisting data from administration to PEM fellows and attendings who were using PEMQBook 2013 online to study for PEM board certification. The 20 unique MCQs delivered in the teaching intervention consisted of questions taken from a prior edition of this text: 2009 Pediatric Emergency Question Review Book (PEMQBook 2009).22 All intervention questions and the posttest were administered using the SurveyMonkey survey tool. Residents who had not completed the posttest were sent up to three reminders automatically. All e‐mails and e‐mail reminders were delivered using MailChimp (Rocket Science Group, LLC), an automated e‐mail delivery tool. E‐mail campaigns were created at the onset of enrollment and functioned autonomously after participant e‐mails were added to the campaign. No resident contacted the study coordinator for assistance accessing the intervention quizlets.

Data Analysis

Pretest and posttest scores were calculated by dividing the number of questions answered correctly by the number of questions on the test (10 for pretest and 20 for posttest). Mean pretest and posttest scores were presented with standard deviations (SD) as their distributions were found to be normal. Posttest scores were compared between those who received a pretest and those who did not to assess pretest sensitization. Mean differences with 95% confidence intervals (CIs) were reported for two‐group comparisons. Comfort level in diagnosing and treating pediatric rashes was dichotomized for analysis. Chi‐square and Fisher's exact tests were used to analyze categorical variables. The Student's t‐test or ANOVA was used to analyze continuous variables. The paired t‐test was used to compare the change from pretest to posttest for participants who received a pretest. ANCOVA was used to analyze the relationship between posttest scores and receipt of the intervention in order to control for potential confounders. Kuder‐Richardson 20 (KR20) was calculated for both the pretest and the posttest to measure the internal consistency of resident responses across questions. The number of residents enrolled was based on a prior study that calculated a sample size of 64 participants in two groups to detect a 10‐percentage‐point difference in posttest scores (i.e., two test questions out of 20) with 80% power and an alpha level of 0.05.18 This sample size was doubled to 240 to ensure sufficient numbers for cross‐group analysis with the Solomon four‐group design. Analysis was performed based on randomization group regardless of participation rate in the intervention group. A p‐value of ≤0.05 was considered statistically significant. Statistical analyses were performed with SPSS version 22. This project was supported by a University of Colorado Department of Pediatrics Education Grant.
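To make the cited power calculation concrete, the sketch below applies the standard normal‐approximation formula for comparing two means. The SD of 20 percentage points is an assumption chosen for illustration (it is not reported in the source), and the reading of "64 participants" as 64 per group is likewise an assumption; the function name is hypothetical.

```python
from math import ceil
from statistics import NormalDist


def n_per_group(delta, sd, alpha=0.05, power=0.80):
    """Normal-approximation sample size per group for a two-sided two-sample comparison."""
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)   # critical value for the two-sided test
    z_beta = z.inv_cdf(power)            # quantile corresponding to the desired power
    return ceil(2 * ((z_alpha + z_beta) * sd / delta) ** 2)


# Assumed SD of 20 points (hypothetical); delta of 10 points is from the text.
print(n_per_group(delta=10, sd=20))
```

Under these assumptions the approximation yields 63 per group, close to the 64 cited; a t‐based calculation would be expected to give a slightly larger number than the normal approximation.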

Results

Between August 2015 and November 2016, a total of 239 residents were approached and 234 residents were enrolled in the study (Figure 1). Five residents declined enrollment due to concern that the study would be too time‐consuming. There was no difference in group enrollment between the four sites (p = 0.99). There were significant differences among sites in sex distribution, training program, and training year of the residents (Table 1). Prior experience on a pediatric dermatology rotation differed between the sites, but comfort level in diagnosing pediatric rashes did not (Table 2).

Table 1.

Demographics of Study Participants by Study Site

| | Boston Children's Hospital (n = 60) | Children's Hospital Los Angeles (n = 60) | Children's National Medical Center (n = 54) | Denver Health Medical Center (n = 60) | p‐value |
|---|---|---|---|---|---|
| Age (years) | 30.5 (±3.3) | 28.9 (±3.1) | 30.1 (±3.9) | 29.1 (±2.8) | 0.04 |
| Male sex | 38 (64) | 20 (35) | 28 (56) | 17 (29) | <0.001 |
| Training program (a) | | | | | |
| Emergency medicine | 53 (90) | 5 (8) | 27 (54) | 8 (14) | <0.001 |
| Family medicine | 3 (5) | 6 (10) | 10 (20) | 16 (28) | |
| Pediatrics | 3 (5) | 47 (80) | 9 (18) | 34 (59) | |
| Other | | 1 (2) | 4 (8) | | |
| Training year (a) | | | | | |
| PGY‐1 | 21 (36) | 18 (31) | 17 (36) | 31 (54) | <0.001 |
| PGY‐2 | 7 (12) | 25 (43) | 14 (30) | 2 (3) | |
| PGY‐3 or ‐4 | 31 (52) | 15 (26) | 16 (34) | 25 (43) | |

Data are reported as mean (±SD) or n (%).

(a) Not all participants provided their type of training program or training year.

Table 2.

Pediatric Dermatology Comfort and Exposure of Study Participants by Study Site

| | Boston Children's Hospital (n = 60) | Children's Hospital Los Angeles (n = 60) | Children's National Medical Center (n = 54) | Denver Health Medical Center (n = 60) | p‐value |
|---|---|---|---|---|---|
| Very comfortable or comfortable diagnosing and treating pediatric rashes (a, b) | 7 (12) | 7 (12) | 5 (10) | 12 (21) | 0.35 |
| Prior pediatric dermatology rotation (a) | 13 (22) | 24 (41) | 7 (14) | 19 (33) | 0.01 |

Data are reported as n (%).

(a) Not all participants responded about comfort level with rashes and prior pediatric dermatology rotation.

(b) 1 = very uncomfortable, 2 = uncomfortable, 3 = neutral, 4 = comfortable, 5 = very comfortable.

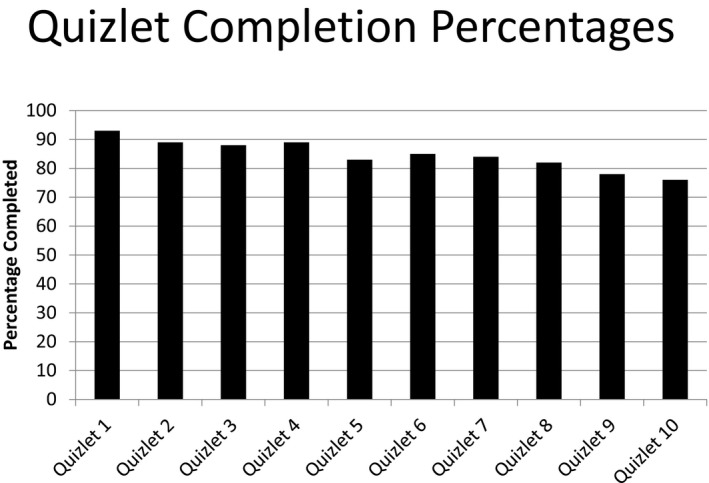

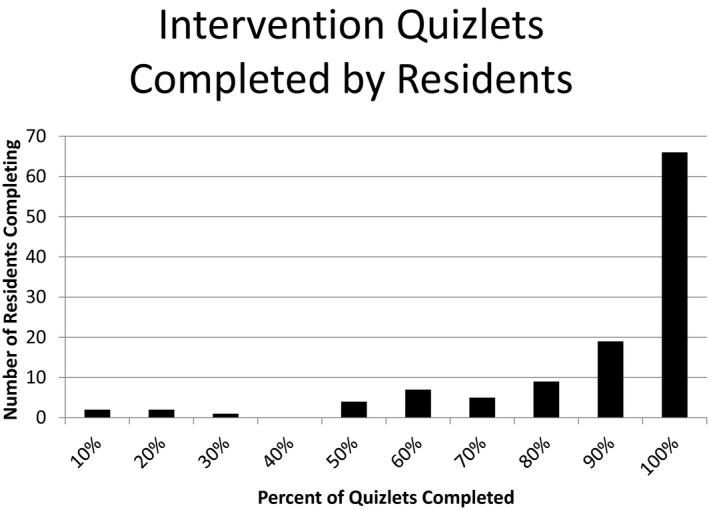

In the intervention group, the completion rate of individual quizlets ranged from 93% on the first quizlet to 76% on the 10th quizlet (Figure 2). Resident completion of the intervention was high, with 66 (55%) completing all 10 quizlets (Figure 3). Only four participants completed none. One‐hundred seventy‐three residents (74%) completed the posttest 2 months after their PED rotation.

Figure 2.

Quizlet completion percentages.

Figure 3.

Number of residents completing quizlets.

Mean pretest and posttest scores are presented in Table 3. There was no difference between the four randomized groups on posttest scores. There was no difference in posttest scores between the group that completed a pretest (61.0 ± 14.5) and those who did not (64.6 ± 14.0; mean difference = –3.7, 95% CI = –8.0 to 0.6). Since there was no evidence of pretest sensitization, posttest scores were compared between the residents in the group that received an intervention and those who did not regardless of the pretest. There was no difference in posttest scores between the group that received the intervention (64.5 ± 13.3) and those who did not receive the intervention (61.2 ± 15.2; mean difference = 3.2, 95% CI = –1.1 to 7.5). The mean difference between posttest (60.1) and pretest scores (46.4) for those who received a pretest was 14.6 (95% CI 9.7 to 19.5). The percentage of individual questions answered correctly ranged from 30% to 94%.
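The two‐group comparisons above can be approximated from the published summary statistics alone. The sketch below uses a pooled‐variance standard error with a normal critical value, which is an assumption of this example rather than the authors' SPSS procedure; group sizes (88 with a pretest, 85 without) are the posttest counts from Table 3, and the function name is hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def mean_difference_ci(m1, sd1, n1, m2, sd2, n2, level=0.95):
    """Difference in means (group 1 minus group 2) with an approximate CI."""
    diff = m1 - m2
    # Pooled variance, as in a Student's t-test with equal variances assumed.
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2)
    se = sqrt(pooled_var * (1 / n1 + 1 / n2))
    # Normal critical value used as an approximation to the t quantile.
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return diff, diff - z * se, diff + z * se


# Pretest vs. no pretest, posttest scores in percent correct.
diff, low, high = mean_difference_ci(61.0, 14.5, 88, 64.6, 14.0, 85)
print(f"difference = {diff:.1f}, 95% CI = {low:.1f} to {high:.1f}")
```

Because the inputs are rounded and the critical value is approximate, the result lands near, but not exactly on, the reported –3.7 (–8.0 to 0.6); in both cases the interval includes zero.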

Table 3.

Mean Pretest and Posttest Scores

| Score (% correct) | Pretest and Intervention (n = 59 pretest; n = 43 posttest) | Pretest and No Intervention (n = 58 pretest; n = 45 posttest) | No Pretest and Intervention (n = 40) | No Pretest and No Intervention (n = 45) | p‐value |
|---|---|---|---|---|---|
| Pretest (a) | 44.2 (±17.4) | 46.0 (±17.5) | NA | NA | 0.58 |
| Posttest (b) | 62.0 (±11.9) | 60.0 (±16.7) | 67.0 (±14.2) | 62.6 (±13.6) | 0.15 |

Data are reported as mean (±SD).

(a) Pretest with 10 questions.

(b) Posttest with 20 questions.

There was no difference in posttest scores by year of training (PGY‐1 = 64.2 ± 14.7, PGY‐2 = 61.1 ± 16.1, PGY‐3 or ‐4 = 62.6 ± 13.6; p = 0.60) nor by specialty (emergency medicine = 60.3 ± 15.0, family medicine = 66.1 ± 14.5, pediatrics = 63.8 ± 13.7; p = 0.28). Given the differences between sites, site was considered a potential confounder for posttest scores. However, after controlling for site, there was no difference in posttest scores between residents in the intervention group compared to those not exposed to the intervention (p = 0.14).

The 20‐question posttest had moderate internal consistency (KR20 = 0.57). The 10‐question pretest was less internally consistent (KR20 = 0.38). Item‐total correlation of the posttest showed no substantial improvements to internal consistency with the removal of any question.
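For readers unfamiliar with the statistic, KR20 for k dichotomously scored items is k/(k − 1) × (1 − Σ pᵢ(1 − pᵢ)/σ²), where pᵢ is the proportion answering item i correctly and σ² is the variance of total scores. The sketch below computes it on a small invented response matrix; the data, the function name, and the choice of population variance are assumptions of this example and do not reproduce the study data.

```python
from statistics import pvariance


def kr20(responses):
    """KR-20 for a list of examinees, each a list of 0/1 item scores."""
    n = len(responses)
    k = len(responses[0])
    totals = [sum(row) for row in responses]
    var_total = pvariance(totals)  # population variance, consistent with p * (1 - p)
    if var_total == 0:
        raise ValueError("KR-20 is undefined when all total scores are equal")
    sum_pq = 0.0
    for j in range(k):
        p = sum(row[j] for row in responses) / n  # proportion correct on item j
        sum_pq += p * (1 - p)
    return (k / (k - 1)) * (1 - sum_pq / var_total)


# Invented toy data: 5 examinees x 4 items (not study data).
toy = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
]
print(round(kr20(toy), 2))
```

Values closer to 1 indicate that items rank examinees consistently; shorter tests, such as the 10‐question pretest, tend to yield lower values for the same item quality.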

Discussion

We demonstrated that automated, asynchronous learning via regularly spaced “teaching MCQs” is a feasible teaching tool that could be utilized by PED educational directors. Residents enrolled in this study had high rates of completion for both the teaching intervention questions and the posttest, suggesting residents were engaged and willing to complete these electronic tasks. In addition, very few residents refused participation (2%).

Given residents’ busy schedules and requirements, finding a teaching modality that is both simple and convenient for the residents to use is paramount to the success of the teaching intervention. Our study's intervention of regularly scheduled e‐mailed MCQs with detailed answers was both easy and convenient for the residents to complete. Time spent communicating with the participants after enrollment was minimal and managed entirely through an automated e‐mail campaign. The teaching questions were delivered to each resident's e‐mail, and residents were able to directly click a link in the e‐mail to answer the questions via computer or mobile device. No residents contacted the study coordinator for assistance accessing the intervention quizlets, suggesting that this teaching modality was both convenient and simple for residents to use. In prior studies, feedback has been noted as a key component of teaching millennial learners and encouraging intrinsic motivation.2, 23 Residents were informed that they would only be able to see explanations to the questions after they completed them, and this may have motivated residents to complete the quizlets.

Our rates of posttest completion were markedly higher than in prior similar studies (44% dropout rate in Chang et al.5 and 48% completion of the posttest in House et al.18). The posttest in this study was delivered electronically 70 days after enrollment when residents were no longer in the PEM department. Three reminders were sent to participants who had not yet completed the posttest, and these multiple reminders likely improved completion rates. Residents may have perceived the posttest as a learning opportunity similar to the teaching questions, although there was no difference in posttest completion rates between those who received the intervention and those who did not. Studies in behavioral economics have noted that people are highly motivated to avoid losing something they have been given (loss aversion).24 We chose to give participants the study incentive at the time of enrollment, and the book needed to be returned if participants failed to complete the posttest. This may have motivated residents to complete the posttest rather than lose the book, although no site study investigator was able to collect a book from those who did not complete the posttest. In the prestudy needs assessment, pediatric rash was a topic area desired by all residents surveyed, which may also have contributed to higher participation rates.

Despite the high participation rate in completing the teaching intervention questions, there was no difference in posttest performance between the intervention and the control groups. Other studies using spaced repetition models where MCQs are delivered at regular intervals have seen moderate and persistent improvements in knowledge.25, 26, 27 Kerfoot et al.26 found gains in medical knowledge using a similar model but used the same questions on the pretest, intervention, and posttest and also repeated questions using a spaced repetition algorithm. Our pretest, intervention, and posttest were composed of unique questions to ensure that we were teaching and measuring content knowledge and to avoid improved test performance based on recollection of the questions. It is also possible that repetition of the intervention items is necessary for a larger effect size. In addition, many of the prior efforts using this educational strategy have spaced questions over longer periods of time,25, 26 with only one prior study using spacing over a 1‐month period.18 We chose this shorter duration intervention period to match the clinical experience on a given rotation for residents. Although there was no difference in posttests between the intervention and control groups, we did see a significant improvement in performance from the pretest to the posttest suggesting that learning did occur during the study period. This may have been related to clinical exposure or the Hawthorne effect. Participants may have focused their learning efforts on rashes during their PED rotation knowing they would be tested later.

In our study, there was moderate internal consistency of the posttest, which suggests that despite the variability in the types of rashes presented, participants had a moderately consistent performance across the questions. Questions used in the posttest were directly extracted from the PEM boards review book, which had been heavily edited and whose questions had been psychometrically analyzed prior to being published in the PEMQBook. The mean scores seen with resident administration of the questions were lower than those seen with the difficulty indexes derived from PEM attendings and fellows. This may be due to differences in clinical exposure or baseline knowledge for those earlier in their training or the perceived stakes of completion of the questions. There was one question about the management of Behçet's disease that no resident was able to answer correctly. The difficulty of the questions for participants at the resident level may have reduced the sensitivity of the examination to detect knowledge gains.

Despite other studies that have shown a sensitizing effect of a pretest, this was not seen in our cohort. This may be due to the relatively small number of pretest questions leading to an insignificant effect size. There was a 70‐day gap between the pre‐ and posttest, which may have further diminished the effect size. There was no pretest effect in the study by Chang et al.5 that also focused on medical trainees, and it is possible that complex medical knowledge is less affected by pretests than more simplistic recall‐based learning in other contexts.28

This study was accomplished using simple and inexpensive online tools, making it a feasible intervention to introduce into a PED or other clinical rotation. The MailChimp subscription was $120/year and the SurveyMonkey subscription was $250/year. The educational intervention could have been completed with the free version of SurveyMonkey, but this would not allow for exporting of data with unique identifiers needed for research analysis. The intervention had a small administrative time demand, with ~3 hours needed during the design phase, mostly related to copying questions into SurveyMonkey and designing the MailChimp campaign, and another 8 hours required of the study coordinator over the 18‐month duration of the study. This time was primarily spent adding the 240 residents to the MailChimp campaign. Using this intervention outside of a research study would be even less time‐intensive, and scaling this intervention to more residents would have a minimal impact on administrative time.

Educators working in EDs struggle to employ traditional teaching structures in which learners and teachers are present in the same location at the same time due to the variability in schedules of both faculty and learners. The majority of teaching that occurs on EM or PEM rotations is done in situ in the context of the patients that are being treated. Residents likely receive sufficient education using this model for common patient conditions; however, rare and variable illnesses like many pediatric rashes may need more deliberate and explicit teaching. This model of automatically delivered quizlets initiated at the start of a rotation proved to be an easy and low‐cost intervention that had a very high rate of participation by residents. Although there may be limits to the number of quizlets or questions that residents will complete using this model, it could provide an effective asynchronous learning tool to address rare topic areas. This teaching modality can be used with residents of varied specialty and training year as well as residents working in geographically separate sites.

Limitations

Enrollment by site differed in sex, training program, and training year. The effect of the intervention may have been greater in residents from a particular specialty or training year, which may have limited our ability to see a difference when all site enrollees were combined.

Despite the fact that there was a relatively high level of overall participation, it is possible that there was some selection bias of more motivated residents completing more of the intervention. Given the size of the cohorts, this effect is not likely to be very large.

The generalized learning impact of this intervention was not assessed in this study and so it is unknown whether residents in the intervention group chose to spend more time completing the training questions and less time studying rashes on their own. No study participant was blinded and therefore the educational topic covered in the intervention may also have been known to those in the control group. Also, there may have been contamination between the intervention and no intervention group if residents in the intervention group shared their quizlet questions with residents in the no intervention group as they were simultaneously rotating in the PED. The attendings were unaware of the randomization of individual residents, and as almost all residents approached for study enrollment agreed to participate in the study, there is no reason to believe there was a systematic bias in clinical teaching that would disproportionally affect intervention or control residents.

Another limitation that may have affected the ability to detect knowledge gain differences is the relatively small number of intervention questions and also the relatively small number of questions on posttest. Other studies with similar numbers of enrollees were able to see differences in performance with a similarly sized posttest.18 However, 20 questions may be insufficient to adequately assess knowledge from a variety of different rash topic areas.

Conclusions

Teaching foundational knowledge to residents rotating in the pediatric ED is challenging due to variability in resident schedules and rarity of certain illnesses. In‐person didactic teaching is also challenging as residents need to work varying shifts and are often unable to take time away from patients while on shift. Delivering short multiple‐choice question quizlets every 2 days to residents via an automated e‐mail service had high rates of resident participation although there was no difference in posttest scores regardless of pretest or intervention. The time and monetary costs of administration were very small, which makes this educational tool easily adoptable by other residency programs and could be extended to large numbers of residents from different programs with a minimal increase in time or costs. This intervention may be particularly helpful in the teaching of uncommon or seasonal diseases to ensure exposure to core content areas.

AEM Education and Training 2020;4:85–93

Presented at the Pediatric Academic Societies Annual Meeting, San Francisco, CA, May 2017.

Supported by a University of Colorado Pediatrics Department Educational Grant.

VW is the CEO of PEMQBOOK LLC, which produces a product relevant to the subject material and is the lead editor of both texts. The other authors have no potential conflicts to disclose.

Author contributions: study concept and design—MR, VW, PZ, GR; acquisition of the data—MR, VW, KD, JN, PJM, PZ, GR; analysis and interpretation of the data—MR, VW, GR; drafting of the manuscript—MR, GR; critical revision of the manuscript for important intellectual content—MR, VW, KD, JN, PJM, GR; statistical expertise—GR; and acquisition of funding—MR, GR.

References

- 1. Mittiga MR, Schwartz HP, Iyer SB, Gonzalez Del Rey JA. Pediatric emergency medicine residency experience: requirements versus reality. J Grad Med Educ 2010;2:571–6.

- 2. Toohey SL, Wray A, Wiechmann W, Lin M, Boysen‐Osborn M. Ten tips for engaging the millennial learner and moving an emergency medicine residency curriculum into the 21st Century. West J Emerg Med 2016;17:337–43.

- 3. Branzetti JB, Aldeen AZ, Foster AW, Courtney DM. A novel online didactic curriculum helps improve knowledge acquisition among non‐emergency medicine rotating residents. Acad Emerg Med 2011;18:53–9.

- 4. Burnette K, Ramundo M, Stevenson M, Beeson MS. Evaluation of a web‐based asynchronous pediatric emergency medicine learning tool for residents and medical students. Acad Emerg Med 2009;16(Suppl 2):S46–50.

- 5. Chang TP, Pham PK, Sobolewski B, et al. Pediatric emergency medicine asynchronous e‐learning: a multicenter randomized controlled Solomon four‐group study. Acad Emerg Med 2014;21:912–9.

- 6. Chartier LB, Helman A. Development, improvement and funding of the emergency medicine cases open‐access podcast. Int J Med Educ 2016;7:340–1.

- 7. Kornegay JG, Leone KA, Wallner C, Hansen M, Yarris LM. Development and implementation of an asynchronous emergency medicine residency curriculum using a web‐based platform. Intern Emerg Med 2016;11:1115–20.

- 8. Lew EK, Nordquist EK. Asynchronous learning: student utilization out of sync with their preference. Med Educ Online 2016;21:30587.

- 9. Friedlander MJ, Andrews L, Armstrong EG, et al. What can medical education learn from the neurobiology of learning? Acad Med 2011;86:415–20.

- 10. Karpicke J, Roediger H III. Repeated retrieval during learning is the key to long‐term retention. J Mem Lang 2007;57:151–62.

- 11. Karpicke JD, Roediger HL 3rd. The critical importance of retrieval for learning. Science 2008;319:966–8.

- 12. Kreiter CD, Green J, Lenoch S, Saiki T. The overall impact of testing on medical student learning: quantitative estimation of consequential validity. Adv Health Sci Educ Theory Pract 2013;18:835–44.

- 13. Rohrer D, Pashler H. Recent research on human learning challenges conventional instructional strategies. Educ Researcher 2016;39:406–12.

- 14. Bjork EL, Soderstrom NC, Little JL. Can multiple‐choice testing induce desirable difficulties? Evidence from the laboratory and the classroom. Am J Psychol 2015;128:229–39.

- 15. Little JL, Bjork EL. Optimizing multiple‐choice tests as tools for learning. Mem Cognit 2015;43:14–26.

- 16. McConnell MM, St‐Onge C, Young ME. The benefits of testing for learning on later performance. Adv Health Sci Educ Theory Pract 2015;20:305–20.

- 17. Deng F, Gluckstein JA, Larsen DP. Student‐directed retrieval practice is a predictor of medical licensing examination performance. Perspect Med Educ 2015;4:308–13.

- 18. House H, Monuteaux MC, Nagler J. A randomized educational interventional trial of spaced education during a pediatric rotation. AEM Educ Train 2017;1:151–7.

- 19. Braver MW, Braver SL. Statistical treatment of the Solomon four‐group design: a meta‐analytic approach. Psychol Bull 1988;104:150–4.

- 20. Kern DE. Curriculum Development for Medical Education: A Six Step Approach. Baltimore: Johns Hopkins University Press, 1998.

- 21. Wang VJ, Flood RG, Sharma S, Godambe SA. Pediatric Emergency Medicine Question Review Book 2013: PEMQBook, LLC; 2012.

- 22. Wang VJ, Sharma S, Flood RG. Pediatric Emergency Medicine Question Review Book 2009: PEMQBook, LLC; 2009.

- 23. Deci EL, Ryan RM. The “what” and “why” of goal pursuits: human needs and the self‐determination of behavior. Psychol Inquiry 2000;11:227–68.

- 24. Novemsky N, Kahneman D. The boundaries of loss aversion. J Market Res 2005;42:119–28.

- 25. Kerfoot BP. Learning benefits of on‐line spaced education persist for 2 years. J Urol 2009;181:2671–3.

- 26. Kerfoot BP, DeWolf WC, Masser BA, Church PA, Federman DD. Spaced education improves the retention of clinical knowledge by medical students: a randomised controlled trial. Med Educ 2007;41:23–31.

- 27. Shaw T, Long A, Chopra S, Kerfoot BP. Impact on clinical behavior of face‐to‐face continuing medical education blended with online spaced education: a randomized controlled trial. J Contin Educ Health Prof 2011;31:103–8.

- 28. Little JL, Bjork EL. Multiple‐choice pretesting potentiates learning of related information. Mem Cognit 2016;44:1085–101.