Abstract

Real-time functional magnetic resonance imaging (rt-fMRI) neurofeedback is a non-invasive, non-pharmacological therapeutic tool that may be useful for training behavior and alleviating clinical symptoms. Although previous work has used rt-fMRI to target brain activity in or functional connectivity between a small number of brain regions, there is growing evidence that symptoms and behavior emerge from interactions between a number of distinct brain areas. Here, we propose a new method for rt-fMRI, connectome-based neurofeedback, in which intermittent feedback is based on the strength of complex functional networks spanning hundreds of regions and thousands of functional connections. We first demonstrate the technical feasibility of calculating whole-brain functional connectivity in real-time and provide resources for implementing connectome-based neurofeedback. We next show that this approach can be used to provide accurate feedback about the strength of a previously defined connectome-based model of sustained attention, the saCPM, during task performance. Although, in our initial pilot sample, neurofeedback based on saCPM strength did not improve performance on out-of-scanner attention tasks, future work characterizing effects of network target, training duration, and amount of feedback on the efficacy of rt-fMRI can inform experimental or clinical trial design.

Keywords: real-time fMRI, neurofeedback, connectome-based predictive modeling, functional connectivity, attention

Introduction

Real-time functional magnetic resonance imaging (rt-fMRI) neurofeedback is a powerful tool for measuring both patterns of neural activity and individual abilities to modify said activity (Stoeckel et al., 2014). Rt-fMRI neurofeedback may be used to elucidate brain mechanisms related to cognitive processes or as a non-invasive, non-pharmacological therapeutic tool that enables an individual to learn to modulate their neural activity in order to effect behavioral change (Hawkinson et al., 2012).

While promising, rt-fMRI neurofeedback has not routinely targeted complex brain networks; activity from a single brain region remains the most common feedback signal (Sulzer et al., 2013). However, many processes or disorders cannot be localized to a single region, and the magnitude of focal signal changes is typically a weak predictor of out-of-scanner behavior (Gabrieli et al., 2015; Rosenberg et al.). Meta-analyses also suggest that many behaviors rely on the orchestrated activity of a distributed array of regions (Laird et al., 2005; Yarkoni et al., 2011). Thus, the best feedback signal may not rest in the magnitude of activity in a single area, but rather in the degree to which activity is coordinated across large-scale networks. Although feedback derived from activity in multiple voxels shows promising improvements over activity in a single region (Shibata et al., 2011), providing feedback based on functional connectivity (a measure of statistical dependence between two brain regions’ activity time courses) rather than activation may be more clinically relevant (Watanabe et al., 2017).

Accordingly, rt-fMRI feedback based on functional connectivity has become the focus of studies examining functional connections (edges) between pairs of regions (nodes) or networks (Koush et al., 2013; Monti et al., 2017; Yuan et al., 2014). Although functional connectivity feedback has been based on a limited set of edges, brain models that best predict behavior (as opposed to explanatory brain models; Gabrieli et al., 2015; Shmueli, 2010; Yarkoni and Westfall, 2017) utilize hundreds of regions and thousands of edges rather than a single edge or a priori functional network (Finn et al., 2015; Rosenberg et al., 2016a; Rosenberg et al., 2016b; Shen et al., 2017). The models are often built to predict outcomes and behavior from whole-brain functional connectomes (i.e., patterns characterizing the functional connections between all possible pairs of brain regions) using data-driven techniques. For rt-fMRI to realize its promise, methodologies need to be developed that provide feedback based on these complex, large-scale networks derived from predictive models.

Here, we propose and validate connectome-based neurofeedback, a novel translational pathway to directly target these predictive models. By vastly increasing the scale of prior rt-fMRI neurofeedback based on one or two brain regions to feedback based on more than a thousand individual edges connecting hundreds of brain regions, previously validated, predictive brain networks can be targeted in an individual to modify brain dynamics. As an initial pilot validation study, we used the sustained attention connectome-based predictive model (saCPM), in which patterns of functional connectivity predict the ability to maintain focus (Rosenberg et al., 2016a; Rosenberg et al., 2016b). The saCPM was internally validated using Connectome-based Predictive Modeling (CPM) (Rosenberg et al., 2016a; Shen et al., 2017) and externally validated as a generalizable model of attention by predicting ADHD symptoms (Rosenberg et al., 2016a), pharmacological enhancement of attention function from a dose of methylphenidate (Rosenberg et al., 2016b), and fluctuations in attention over time (Rosenberg et al., 2020) in independent samples and applications. We had two main hypotheses for this study: 1) that giving neurofeedback based on a large-scale predictive model would be technically feasible; and 2) that training the saCPM would lead to greater connectivity in this network. By using rt-fMRI neurofeedback and highly generalizable, predictive models of functional connectivity, connectome-based neurofeedback represents a novel translational framework in which complex networks that predict behavior are targeted with a non-invasive interventional tool.

Methods

Connectome-based Neurofeedback.

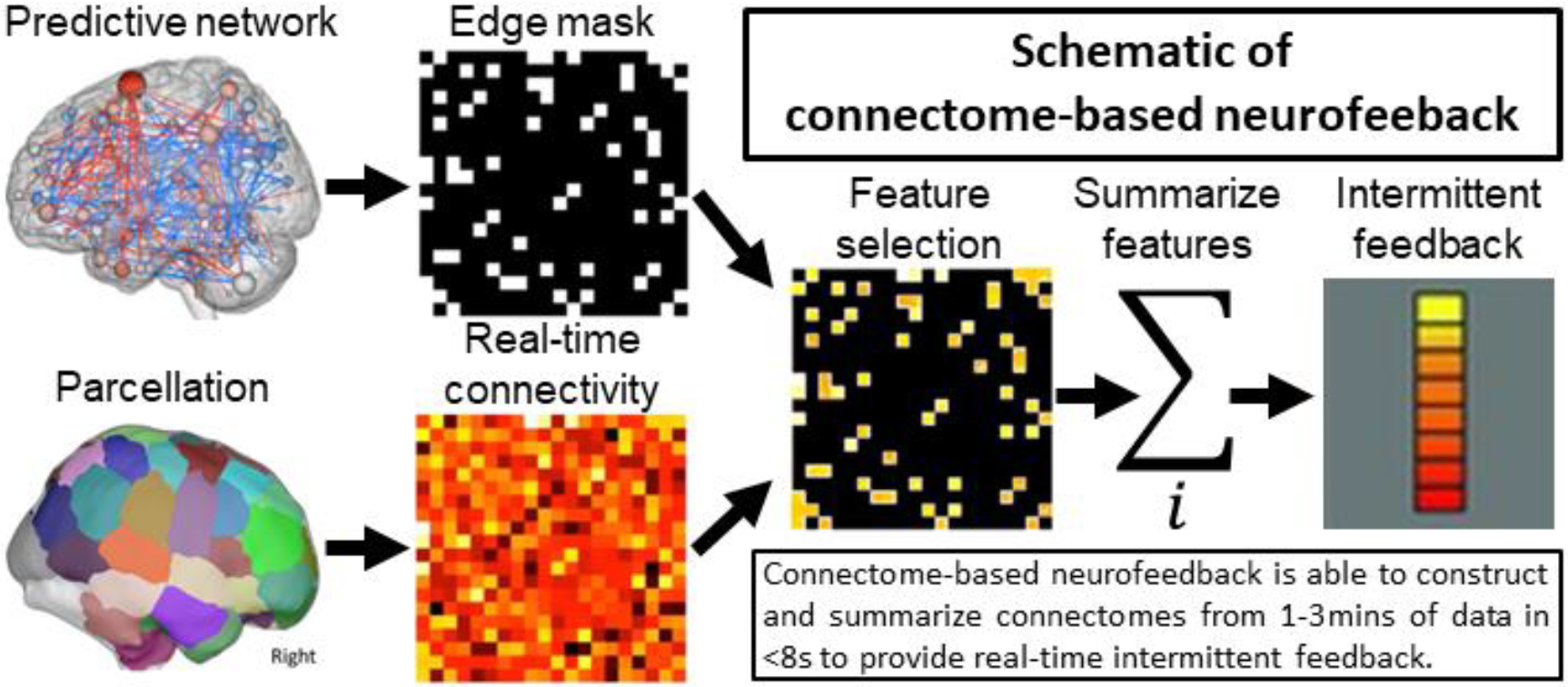

Connectome-based neurofeedback uses a similar approach to CPM (Shen et al., 2017) but summarizes connectivity (calculated in real-time) from a previously defined predictive network to construct a feedback signal. Figure 1 shows a schematic of connectome-based neurofeedback. This approach requires two inputs: a parcellation to calculate a connectivity matrix and networks (i.e., a collection of edges or elements in the connectivity matrix) to summarize connectivity. The parcellation and networks can be as simple as two ROIs and the single connection between them, or as complex as a ~300–400 node parcellation with multiple networks composed of hundreds of edges. When using inputs based on CPM, the parcellation typically contains ~300 nodes and the positive and negative components include ~500 edges each. Connectivity is calculated using blocks of fMRI data collected in real-time. Unlike other approaches using sliding window correlations (Monti et al., 2017; Yuan et al., 2014), the blocks of data are non-overlapping and connectome-based neurofeedback provides intermittent, rather than continuous, feedback (see Discussion for the advantages of intermittent feedback). After a matrix is computed, the edges are summarized, producing a single number reflecting the strength of connectivity in each predefined network. The strength of connectivity alone can be used as the feedback signal when a single edge is used, or the strength in multiple networks can be combined into a feedback signal, for example by taking the difference between the high- and low-attention networks of the saCPM (as described below).
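The block-wise summary described above can be sketched as follows. This is a minimal illustration, not the BioImage Suite implementation: the function names, the use of Pearson correlation with a Fisher z-transform, and the choice of the mean (rather than the sum) over network edges are all assumptions made for the example.

```python
import numpy as np

def connectivity_matrix(block_ts):
    """Node-by-node Pearson correlation, Fisher z-transformed (assumed here).

    block_ts: array of shape (n_volumes, n_nodes) for one block of data.
    """
    r = np.corrcoef(block_ts, rowvar=False)
    np.fill_diagonal(r, 0.0)  # ignore self-connections before arctanh
    return np.arctanh(r)

def network_strength(conn, edge_mask):
    """Mean connectivity over the edges flagged in a symmetric boolean mask."""
    upper = np.triu(edge_mask, k=1).astype(bool)  # count each edge once
    return conn[upper].mean()

def block_feedback(ts, block_len, high_mask, low_mask):
    """One difference score per non-overlapping block of block_len volumes."""
    n_blocks = ts.shape[0] // block_len
    scores = []
    for b in range(n_blocks):
        block = ts[b * block_len:(b + 1) * block_len]
        conn = connectivity_matrix(block)
        scores.append(network_strength(conn, high_mask)
                      - network_strength(conn, low_mask))
    return scores
```

Because the blocks do not overlap, each feedback value depends only on the volumes collected since the previous feedback event, which is what makes the feedback intermittent rather than continuous.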

Figure 1.

An exemplar brain parcellation used to calculate a connectivity matrix and one or more networks that predict behavior are the inputs to our approach. The predictive network(s) defines which edges are used to provide feedback. A connectivity matrix is calculated over a discrete block of data (typically, 1–3 minutes) in real-time. Once calculated, the predictive edges are selected from the matrix and summarized into a single network summary score per network. Intermittent feedback is then provided to the participant based on a combination of these network summary scores. The total time from collecting the last volume in the data block to displaying feedback to the participant was under 8 seconds for our initial pilot.

Implementation:

Connectome-based neurofeedback is implemented as part of the BioImage Suite project (http://bioimagesuite.org) using a combination of C++, Tool Command Language (Tcl), and Tk. For processing speed, all preprocessing steps are implemented in object-oriented C++, inheriting from base classes of the Visualization Toolkit (VTK). A Tcl script provides the main user interface and calls the processing objects via C++/Tcl wrapper functions.

Participants.

Twenty-five right-handed, neurologically healthy adults with normal or corrected-to-normal vision were recruited from Yale University and the surrounding community to perform the neurofeedback task and rest conditions during fMRI scanning and participate in pre-scan and post-scan behavioral testing sessions. As this was a pilot study with the main goal of technical feasibility, no formal power analysis was performed. The sample size was selected based on practical considerations including scanner time, personnel availability, and funding. Further, the number of participants scanned (n = 25 before exclusion) matched the sample size of the experiment used to generate the sustained attention model tested here (Rosenberg et al., 2016).

All individuals provided written informed consent and were paid for participating. Data from five individuals were excluded because of a failure to complete all three experimental sessions, technical failures during data collection, and/or excessive head motion during fMRI scanning, leaving data from 20 individuals for analysis (10 females, 18–29 years, mean = 21.5 years).

Behavioral tasks.

The day before their MRI session, participants completed an initial behavioral testing session. This session included four approximately 12-min tasks that assessed different aspects of attention and memory: two versions of the Sustained Attention to Response Task (SART; Robertson et al., 1997), a visual short-term memory task, and a multiple object tracking task. Behavioral tasks were administered on a 15-inch MacBook Pro laptop (screen resolution: 1440 × 900) with custom scripts written in Matlab (Mathworks) using Psychophysics Toolbox extensions (Kleiner et al., 2007).

The first task, the SART (Robertson et al., 1997), is a not-X continuous performance task that measures participants’ ability to sustain attention and inhibit prepotent responses. On each trial, a digit from 1 to 9 appeared in the center of the screen for 250 ms followed by a 900-ms mask (a circle with a diagonal cross in the middle). Participants were instructed to press a button in response to every number except “3” (1/9th of trials), and were encouraged to respond as quickly and accurately as possible. Stimuli were evenly divided between the nine numbers and were presented in the font Symbol in five font sizes (48, 72, 94, 100, and 120). Task instructions, timing, and stimulus appearance parameters replicated Robertson et al. (1997), except that here the task included 630 rather than 225 trials. Performance on the not-X version of the SART was assessed with sensitivity (d′), or a participant’s hit rate to non-target stimuli relative to their false alarm rate to target “3” trials.
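As a minimal sketch of the sensitivity measure, d′ can be computed as the difference between the z-transformed hit rate and false alarm rate. The function name and the log-linear correction (adding 0.5 to each count and 1 to each total so that rates of exactly 0 or 1 remain finite) are assumptions for illustration; the text does not state how extreme rates were handled.

```python
from statistics import NormalDist

def d_prime(hits, n_signal, false_alarms, n_noise):
    """Sensitivity d' = z(hit rate) - z(false alarm rate).

    A log-linear correction is applied to both rates (an assumption here)
    so that perfect or zero rates do not produce infinite z-scores.
    """
    hit_rate = (hits + 0.5) / (n_signal + 1)
    fa_rate = (false_alarms + 0.5) / (n_noise + 1)
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    return z(hit_rate) - z(fa_rate)

# Hypothetical not-X SART session: 560 non-"3" trials and 70 "3" trials.
example = d_prime(hits=540, n_signal=560, false_alarms=20, n_noise=70)
```

In the not-X task, a "hit" is a button press on a non-"3" trial and a "false alarm" is a button press on a "3" trial, so with 630 trials and one ninth of them showing "3" the totals are 560 and 70.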

The second task was a change detection task that measures visual short-term memory (Luck and Vogel, 1997). On each of 200 trials, an array of 2, 3, 4, 6, or 8 colored circles was presented on the screen. Circles were randomly positioned along the diameter of an invisible bounding circle (450-pixel diameter), and colors were sampled without replacement from the following list: white (RGB: 1, 1, 1), black (0, 0, 0), gray (.502, .502, .502), dark blue (0, 0, .8), light blue (0, 1, 1), orange (1, .502, 0), yellow (1, 1, 0), red (1, 0, 0), pink (1, .4, .698), dark green (0, .4, 0), and light green (0, 1, 0). After 100 ms, the stimulus array was replaced by a fixation square for 900 ms before reappearing. On half of trials, the array reappeared unchanged, and on the other half, one of the circles reappeared in a different color. Participants were instructed to press one button if there had been a color change and another if no change had occurred. After a response was recorded or 2000 ms elapsed, the fixation square was presented for 500 ms before the next trial began. Performance was assessed with a measure of working memory capacity, Pashler’s K (Pashler, 1988), or the average value of set size*(hit rate – false alarm rate)/(1 – false alarm rate) (Rouder et al., 2011).
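The capacity estimate can be written out directly from the formula above. This sketch assumes that hit and false alarm rates are computed separately for each set size and that K is then averaged across set sizes; the function names and example rates are hypothetical.

```python
def pashler_k(set_size, hit_rate, fa_rate):
    """Pashler's K for one set size: N * (H - FA) / (1 - FA)."""
    if fa_rate >= 1.0:
        raise ValueError("false alarm rate must be below 1")
    return set_size * (hit_rate - fa_rate) / (1.0 - fa_rate)

def mean_k(rates_by_set_size):
    """Average K over set sizes.

    rates_by_set_size maps set size -> (hit rate, false alarm rate).
    """
    ks = [pashler_k(n, h, f) for n, (h, f) in rates_by_set_size.items()]
    return sum(ks) / len(ks)

# Hypothetical rates for the five set sizes used in the task.
example = mean_k({2: (0.98, 0.05), 3: (0.95, 0.08), 4: (0.90, 0.10),
                  6: (0.75, 0.15), 8: (0.65, 0.20)})
```

Here the hit rate is the proportion of change trials on which a change was reported, and the false alarm rate is the proportion of no-change trials on which a change was reported.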

The third behavioral task, multiple-object tracking, measures attentional selection and tracking (Pylyshyn and Storm, 1988; code adapted from Liverence and Scholl, 2011). On each of 50 trials, 10 white circles appeared on the screen. Five of the circles blinked green for 1667 ms before all circles moved independently around the screen for 5000 ms (for details see Liverence and Scholl, 2011). Participants were instructed to keep track of the circles that had blinked — the targets. When the display stopped moving, participants used the computer mouse to indicate which circles were targets. After participants clicked five unique circles, feedback (the percent of correctly identified targets) was presented for 500 ms before the next trial began. Task performance was measured with mean accuracy across trials.

The fourth and final behavioral task was an A-X continuous performance task that assesses vigilance and response inhibition. On each trial of this task, a letter (“A”, “B”, “C”, “D”, or “E”) was presented in the center of the screen for 250 ms followed by a 900-ms mask (the same circle and diagonal cross used in the SART). Participants were instructed to respond via button press only to the letter “C” (70/630 trials) when it immediately followed the letter “A” (18 of these 70 trials). Stimuli were presented in the font Lucida Grande in five font sizes (48, 72, 94, 100, and 120). Performance on the A-X version of the SART was assessed with d′, or a participant’s hit rate to target stimuli (the letter “C” preceded by the letter “A”) relative to their false alarm rate on non-target trials.

Participants returned to the lab for a second behavioral testing session the day after the MRI scan. For each participant, the pre-scan and post-scan behavioral sessions were performed at the same time of day, such that session start times fell within an hour of each other. Behavioral task order remained consistent across participants and sessions. Experimenters administering the behavioral testing sessions were blind to participants’ experimental condition. Experimenters administering the MRI sessions were not blind to condition due to the technical demands of real-time neurofeedback.

Imaging parameters.

MRI data were collected with a 3T Siemens Trio TIM system and 32-channel head coil. MRI sessions began with a high-resolution MPRAGE, followed by three neurofeedback runs; a resting-state run was collected before and after the neurofeedback runs. Functional runs included 428 (neurofeedback) or 300 (rest) whole-brain volumes acquired using an EPI sequence with the following parameters: TR = 2000 ms, TE = 25 ms, flip angle = 90°, acquisition matrix = 64 × 64, in-plane resolution = 3.5 mm², 34 axial-oblique slices parallel to the AC-PC line, slice thickness = 4 mm. Parameters of the anatomical MPRAGE sequence were as follows: TR = 1900 ms, TE = 2.52 ms, flip angle = 9°, acquisition matrix = 256 × 256, in-plane resolution = 1.0 mm², slice thickness = 1.0 mm, 176 sagittal slices. A 2D T1-weighted image coplanar to the functional images was collected for registration.

Neurofeedback runs.

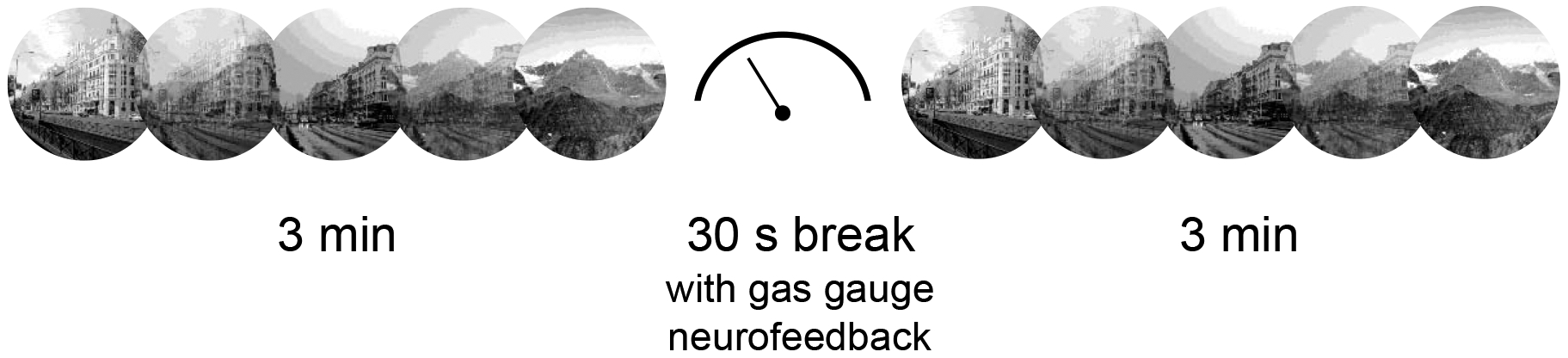

During the neurofeedback runs, participants performed a version of the gradual-onset continuous performance task (gradCPT; Esterman et al., 2013) with the same timing and stimulus parameters as described in Rosenberg et al. (2016a). Neurofeedback runs included four 3-min blocks of the gradCPT, each followed by a 30-s block of feedback, visualized as a gas gauge. Participants were told that a “full” gauge (line oriented to the far right) indicated optimal attention whereas an “empty” gauge (line oriented to the far left) indicated suboptimal focus, and were instructed to keep the gauge as close to “full” as possible (Figure 2).

Figure 2.

Schematic of gradual onset-continuous performance task and neurofeedback. Neurofeedback runs included four 3-min blocks of the gradual-onset continuous performance task (gradCPT) each followed by 30-s blocks of feedback, visualized as a gas gauge. Images are not to scale.

For participants in the neurofeedback group (n = 10), the position of the gauge reflected the strength of their high-attention network relative to their low-attention network during the preceding task block. (Previous work demonstrates that strength in the high-attention network is positively related to sustained attentional performance, whereas strength in the low-attention network is inversely related to sustained attention (Rosenberg et al., 2017). Together the high- and low-attention networks comprise the saCPM.) Specifically, we compared this difference score to the highest and lowest difference scores measured during task performance for the 25 participants in Rosenberg et al. (2016a). We used this comparison approach to roughly estimate the “best” and “worst” possible difference scores to be expected. Once the difference score for the current task block was calculated, the angle of the gas gauge was set based on the difference achieved, such that: angle = 180° × (difference score − estimated min)/(estimated max − estimated min), where estimated min returns the lowest difference score of the 25 participants in Rosenberg et al. (2016a) and estimated max returns the highest. Note we define a “full” gauge (line oriented to the far right) as 180° and an “empty” gauge (line oriented to the far left) as 0°.
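A minimal sketch of one way to turn a difference score into a gauge angle, assuming a linear rescaling between the estimated min and estimated max, with 0° as "empty" and 180° as "full". The function name is hypothetical, and clipping scores that fall outside the reference range is an assumption; the text does not describe how out-of-range scores were displayed.

```python
def gauge_angle(difference, estimated_min, estimated_max):
    """Map a difference score onto a gauge angle in degrees.

    0 degrees is an "empty" gauge and 180 degrees is a "full" gauge.
    Scores outside [estimated_min, estimated_max] are clipped to the
    nearest end of the gauge (an assumption for this sketch).
    """
    if estimated_max <= estimated_min:
        raise ValueError("estimated_max must exceed estimated_min")
    fraction = (difference - estimated_min) / (estimated_max - estimated_min)
    fraction = min(1.0, max(0.0, fraction))
    return 180.0 * fraction
```

Under this mapping, a block whose difference score sits halfway between the reference extremes would place the gauge line pointing straight up.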

Participants in the sham feedback group (n = 10) saw the feedback from a yoked neurofeedback-participant (deBettencourt et al., 2015). Participants were assigned to the neurofeedback and sham feedback conditions in alternating order whenever possible, with sham feedback participants seeing the exact task trial order and feedback as the preceding neurofeedback participant. However, due to data exclusion and scheduling and technical challenges, group assignment deviated from this pattern.

Finally, given that head motion can significantly impact functional connectivity values, feedback was not provided following blocks in which head motion exceeded a mean frame-to-frame displacement of .1 mm. Instead, neurofeedback participants saw the message, “Too much motion for feedback. Please try your best to stay perfectly still!” during the rest break. Participants in the yoked control condition saw this message following blocks in which their true neurofeedback counterpart had excessive head motion. Thus, both stimulus order and visual feedback were exactly matched for neurofeedback and yoked control pairs.

Online data processing.

Functional data from neurofeedback participants were analyzed during data collection using BioImage Suite (Joshi et al., 2011). Following the collection of high-resolution anatomical, 2D coplanar, and initial resting-state scans, the 268-node Shen atlas (Shen et al., 2013) was warped into single-participant space with a series of non-linear and linear transformations as previously described (Hampson et al., 2011). Transformations were visually inspected prior to neurofeedback runs.

FMRI data were motion corrected in real-time with our validated rt-fMRI motion correction algorithm (Scheinost et al., 2013). After each gradCPT block, images (90 per block) were preprocessed using linear and quadratic drift removal; regression of cerebrospinal fluid, white matter, and global signals; calculation and removal of a 24-parameter motion model (6 motion parameters, 6 temporal derivatives, and their squares) (Satterthwaite et al., 2012); and low-pass filtering via temporal smoothing with a Gaussian filter. Mean ROI time-series, based on the 268-node Shen atlas, were used to generate whole-brain functional connectivity matrices and to compute the high-attention and low-attention network strengths. Time from block offset to feedback presentation was <8 sec. The connectivity preprocessing methods were intentionally the same as those in our state-of-the-art offline functional connectivity pipeline, to minimize common physiological and motion artifacts found in connectivity data (Weiss et al., 2020).
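The 24-parameter motion model and its removal can be illustrated with a short NumPy sketch. This is not the BioImage Suite code: the function names are hypothetical, the temporal derivative is approximated by a backward difference with a zero first row, and the regression is ordinary least squares with an intercept, all of which are assumptions for the example.

```python
import numpy as np

def motion_24(params):
    """Expand 6 realignment parameters into a 24-column confound matrix.

    params: array of shape (n_volumes, 6).
    Columns: 6 parameters, 6 temporal derivatives, then the squares of
    those 12 columns.
    """
    first_row = np.zeros((1, params.shape[1]))
    deriv = np.vstack([first_row, np.diff(params, axis=0)])
    base = np.hstack([params, deriv])
    return np.hstack([base, base ** 2])

def regress_out(ts, confounds):
    """Return the residuals of ts after least-squares removal of confounds.

    ts: (n_volumes, n_nodes); confounds: (n_volumes, n_confounds).
    An intercept column is added so the residuals are also mean-centered.
    """
    design = np.column_stack([np.ones(len(confounds)), confounds])
    beta, *_ = np.linalg.lstsq(design, ts, rcond=None)
    return ts - design @ beta
```

With 90 volumes per block and 25 regressors from this step alone, the residual degrees of freedom are limited, which is one practical constraint on how short a feedback block can be.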

Offline data processing.

For data harmonization, imaging data collected before, during, and after neurofeedback were processed (off-line) in the same manner as the neurofeedback data using BioImage Suite and custom Matlab scripts. For each participant, preprocessing steps were applied to generate a functional connectivity matrix from each resting-state run separately, as well as an overall task connectivity matrix from data concatenated across task blocks. Task-block specific functional connectivity matrices were also generated from volumes collected during each neurofeedback block separately.

Data exclusion.

MRI data from task blocks were excluded from analysis if (a) the participant saw the “Too much motion for feedback” message rather than gas gauge feedback after the block or (b) head motion calculated offline exceeded 2 mm translation, 3° rotation, or .1 mm frame-to-frame displacement. These criteria resulted in the exclusion of 7 blocks from one control participant, 6 blocks from one neurofeedback and one control participant, 2 blocks from two control participants, and 1 block from one neurofeedback participant, meaning that 14/20 participants had all twelve task blocks.

All participants had full pre-task rest runs with acceptable levels of head motion (i.e., 300 TRs, <2 mm translation, <3° rotation, and <.15 mm frame-to-frame displacement). Fourteen participants had post-scan rest runs with acceptable motion. Of these, three had unique scan lengths due to scanner error or time constraints: 134 TRs (neurofeedback participant), 151 TRs (control participant) and 393 TRs (neurofeedback participant). One neurofeedback participant was missing the post-scan rest run altogether. Given our limited sample size, we manually excluded periods of high motion from the five remaining participants’ post-task rest runs (Rosenberg et al., 2016b). This resulted in scans with acceptable head motion of length 300 (one control participant who started off with a longer run), 150 (one neurofeedback and one control participant), and 100 (control participant). Manual volume removal did not successfully reduce motion to acceptable levels in one control participant. Scan length, maximum head translation, maximum head rotation, and mean frame-to-frame displacement during the pre- and post-task rest runs did not significantly differ between the neurofeedback and yoked-control groups (p values > .23).

Due to incomplete experimental sessions or failure to record data, behavioral data were missing from one neurofeedback participant’s post-scan SART session, one neurofeedback participant’s pre-scan AX-CPT session and one control participant’s post-scan AX-CPT session, and two control participants’ pre- and post-scan MOT sessions. One control participant’s pre-scan AX-CPT session in which performance did not fall within 3 standard deviations of the group mean was excluded from analysis.

Validation of real-time feedback.

Although previous work has provided real-time neurofeedback based on the strength of one or several functional connections, to date no study has calculated whole-brain functional connectivity matrices (which here include 35,778 correlation coefficients) during data collection. To confirm that real-time methods veridically captured functional network strength and thus served as the basis for accurate feedback, we compared network strength (high-attention – low-attention network strength) calculated offline after data collection to true neurofeedback (that is, network strength calculated online). As a second validation step, we confirmed that feedback was more closely related to network strength calculated offline in the neurofeedback group than in the yoked control group. A finding that this is not the case—that is, if changes in network strength over time are synchronized across individuals and thus feedback is equally accurate in both groups—would suggest that the yoked feedback approach is not an appropriate control for the current design.

Assessing control over the saCPM network.

It is an open question as to whether participants are able to differentially control the strength of two functional connectivity networks that span hundreds of overlapping nodes across the cortex, subcortex, and cerebellum. To test whether saCPM strength was under participants’ volitional control, we first compared neurofeedback and yoked control participants’ changes in saCPM strength from the first to last task blocks with an unpaired t-test. Two control participants with excessive head motion during the last three task blocks were excluded from this analysis.

We next investigated whether participants learned to control the saCPM by asking whether neurofeedback improved saCPM strength in a post-task resting-state run, during which connectivity was unconfounded by strategy or task performance. Specifically, we compared changes in saCPM strength ([post-feedback high-attention – low-attention network strength] – [pre-feedback high-attention – low-attention network strength]) in the neurofeedback and yoked control groups with an unpaired t-test after confirming that resting-state saCPM strength did not differ between the groups before training.
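The group comparison of change scores can be sketched with a pooled-variance (Student's) unpaired t-test. The function names are hypothetical, and the use of pooled rather than Welch variance is an assumption. The conversion d = 2|t|/√df is included because the effect sizes reported in the Results are numerically consistent with it, although the text does not state which formula was used.

```python
from math import sqrt
from statistics import mean, variance

def unpaired_t(a, b):
    """Student's two-sample t-test with pooled variance.

    Returns (t, df), where t has the sign of mean(a) - mean(b).
    """
    na, nb = len(a), len(b)
    df = na + nb - 2
    pooled = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / df
    t = (mean(a) - mean(b)) / sqrt(pooled * (1 / na + 1 / nb))
    return t, df

def cohens_d_from_t(t, df):
    """Effect size magnitude from a t statistic: d = 2|t| / sqrt(df)."""
    return 2 * abs(t) / sqrt(df)

def change_scores(pre, post):
    """Per-participant change in network strength (post minus pre)."""
    return [b - a for a, b in zip(pre, post)]
```

For the resting-state analysis, `change_scores` would be applied to each group's pre- and post-feedback difference scores, and the two lists of changes passed to `unpaired_t`.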

Behavioral effects of neurofeedback.

To examine whether neurofeedback improved attention task performance, we compared pre-scan to post-scan performance changes between the neurofeedback and yoked control groups with unpaired t-tests.

Code and data availability statement.

Connectome-based neurofeedback and associated software are open-source under GNU GPL v2 terms and available from bioimagesuite.org.

Results

Validation of real-time feedback.

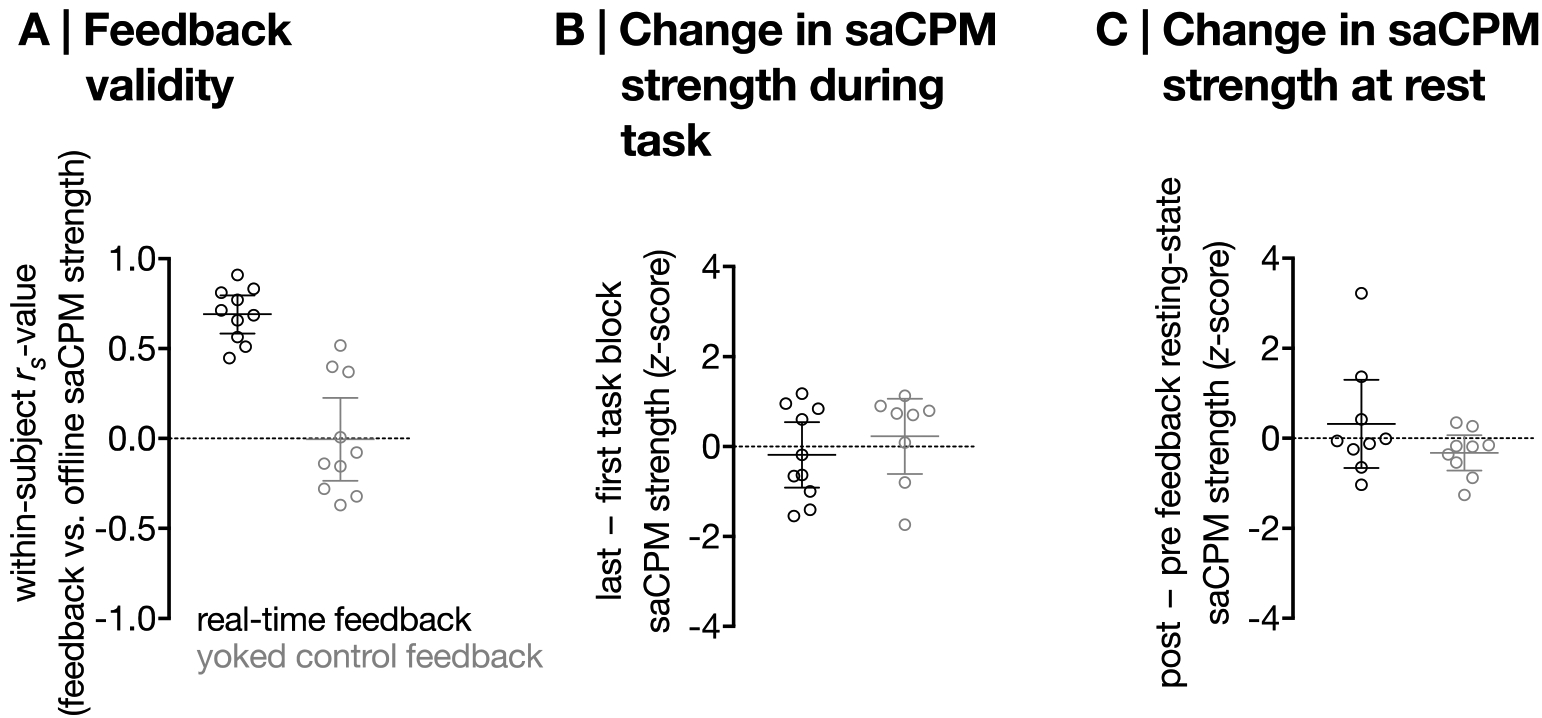

Feedback was positively correlated with network strength calculated offline in the neurofeedback group (mean within-subject rs = .72) but not the yoked control group (mean within-subject rs = .001; Figure 3A). Confirming both the accuracy of feedback and the efficacy of the yoked feedback control, this relationship was significantly greater in the neurofeedback than control group (t(18) = 6.20, p = 7.55 × 10⁻⁶, Cohen’s d = 2.92).

Figure 3. A.

Correlation between saCPM strength calculated offline and feedback (for neurofeedback participants, akin to saCPM strength calculated online; group difference: t(18) = 6.20, p = 7.55 × 10⁻⁶). Horizontal lines represent group means; error bars show 95% confidence intervals. B. Change in saCPM strength from the first to the last training task block in the neurofeedback and yoked control groups (group difference: t(16) = −.86, p = .40, Cohen’s d = .43). C. Change in resting-state saCPM strength from pre- to post-feedback in the neurofeedback and yoked control groups (group difference: t(16) = 1.41, p = .18, Cohen’s d = .71). Higher z-scores represent greater improvements in functional connectivity signatures of attention.

Control of the saCPM.

Sustained attention CPM strength decreased from the first to the last task block in the neurofeedback and yoked control groups, potentially reflecting vigilance decrements given that participants were performing the gradCPT (a challenging sustained attention task) during training (Figure 3B). This change did not significantly differ between groups (t(16) = −.86, p = .40, Cohen’s d = .43). There was, however, a numerical trend in the post-training resting state such that resting-state saCPM strength, a functional connectivity signature of stronger attention, improved more in the neurofeedback than the yoked control group following training (t(16) = 1.41, p = .18, Cohen’s d = .71, Figure 3C). Importantly, prior to training, resting-state connectivity in the high-attention and low-attention networks did not significantly differ between neurofeedback and yoked control groups (high-attention: t(16) = −.38, p = .71, Cohen’s d = .19; low-attention: t(16) = .17, p = .87, Cohen’s d = .08). Thus, initial evidence suggests that participants may be able to differentially control the strength of whole-brain functional connectivity networks supporting attention, and motivates future work incorporating additional training sessions.

Within-scanner behavioral effects of neurofeedback.

Overall, both groups showed reduced performance on the gradCPT over time (t(18) = −2.36, p = .03, Cohen’s d = 1.11). The interaction between groups was not significant (t(18) = −1.37, p = .18, Cohen’s d = .65).

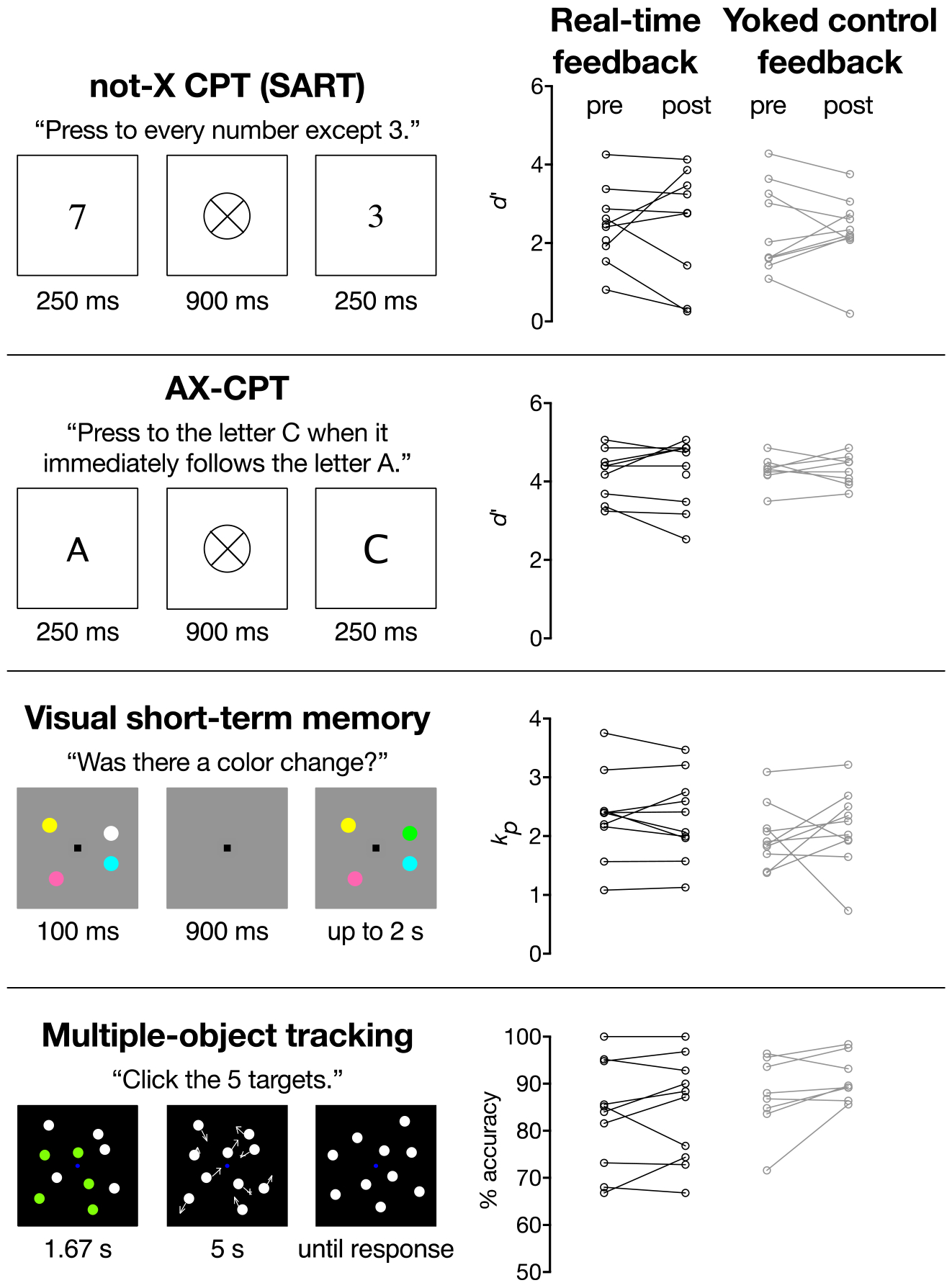

Out-of-scanner behavioral effects of neurofeedback.

Changes in behavioral performance from pre- to post-scanning did not significantly differ between the neurofeedback and yoked control groups (not-X CPT [SART]: t(17) = .063, p = .95, Cohen’s d = .03; AX-CPT: t(15) = .043, p = .97, Cohen’s d = .02; VSTM: t(18) = −.68, p = .51, Cohen’s d = .32; MOT: t(16) = −1.05, p = .31, Cohen’s d = .52; Figure 4).

Figure 4.

Pre- and post-scanning task performance for individuals in the real-time neurofeedback and yoked control groups. Performance did not change significantly from pre- to post-scanning for either group in any task (p values > .08).

Discussion

Functional connectivity-based predictive models of behavior and rt-fMRI neurofeedback are novel techniques at the cutting-edge of neuroimaging methodology. Yet, despite the promises of both approaches, there are no rt-fMRI methods to provide neurofeedback of functional connectivity from these complex, predictive brain networks. In this pilot study, we demonstrated the feasibility of connectome-based neurofeedback, a method designed to target predictive modeling results via rt-fMRI neurofeedback. Unlike other rt-fMRI neurofeedback approaches based on functional connectivity that are limited to a few functional connections, connectome-based neurofeedback provides feedback based on functional connectivity within complex networks spanning several hundred nodes and edges. We showed that functional connectivity from predictive models can be targeted by rt-fMRI to provide neurofeedback, offering preliminary evidence of this method’s potential as a tool in future studies of cognition and therapeutic clinical trials.

In contrast to other rt-fMRI neurofeedback approaches that rely on sliding window correlations (Monti et al., 2017; Yuan et al., 2014) to provide a continuous feedback signal, we calculated functional connectivity over discrete blocks of data using standard “offline” functional connectivity methods and provided intermittent feedback. This approach has several advantages over sliding window correlations and continuous feedback. First, sliding window correlations have recently been shown to reflect, at least in part, noise rather than actual dynamic connectivity (Laumann et al., 2016; Lindquist et al., 2014; Shakil et al., 2016). In addition, standard preprocessing methods commonly used in functional connectivity studies, such as global signal regression, are not performed for continuous feedback due to time constraints, leaving physiological artifacts in the signal (Weiss et al., 2020). This is a serious issue for potential treatments, as feedback based on noise may be interpreted as “real” brain dynamics, leading to an ineffective, or even harmful, intervention. Second, functional connectivity is a much slower signal (~0.01–0.1 Hz) than continuous feedback (~0.5–1 Hz), as connectivity is based on activity patterns collected over a period ranging from several seconds to minutes before the current activity. As such, it is unlikely that moment-to-moment changes in connectivity patterns measured at each fMRI volume provide an interpretable feedback signal to an individual. Finally, intermittent feedback is emerging as a superior alternative to continuous feedback (Johnson et al., 2012). The attentional and cognitive load of evaluating continuous feedback while simultaneously trying to control the signal is mentally taxing for participants. Too much feedback may distract an individual from the main task at hand: controlling their brain. Together, these advantages suggest that intermittent feedback is a preferable solution for real-time functional connectivity.

A persistent concern with machine learning and predictive modeling methods is how to translate complex, whole-brain patterns into clinically actionable targets for intervention (Scheinost et al., 2019). Here, we show that CPM networks can be targeted via neurofeedback, creating intriguing opportunities to test the efficacy of these models in rt-fMRI neurofeedback studies. More broadly, when combined with our previous results targeting the saCPM via pharmacological intervention (Rosenberg et al., 2016b; Rosenberg et al., 2020), these results suggest that predictive models identified via CPM may produce clinically actionable targets for intervention. Though these results are promising, further work is needed to validate how best to target predictive modeling results.

Yoked feedback was chosen as the control condition in our initial pilot of connectome-based neurofeedback. Several other possibilities for a control condition exist, including feedback based on a random network of the same size as the network of interest or other forms of randomly generated feedback (for a discussion of the pros and cons of each, see Sorger et al., 2019). One drawback of yoked feedback as implemented in this study is that control participants were told they were moving too much whenever their matched experimental participant had moved, even if the control participant had not been moving. Likewise, control participants received feedback regardless of how much they themselves were moving. Future studies using yoked feedback may need to account for these differences in motion.

Although we failed to show that participants could gain control over the saCPM, several study limitations could explain the negative results. First, participants received only one session of neurofeedback; multiple sessions are often needed for participants to gain control over patterns of brain activity (e.g., Yamashita et al., 2017). Given the complexity of the network pattern being trained, even more sessions may be needed. Second, functional connectivity was summarized over long blocks of data (3 minutes). This block length was chosen for harmonization with the gradCPT used to train the saCPM (Rosenberg et al., 2016a). The drawback is that participants received only 3 feedback events per run (9 total). Connectome-based neurofeedback with shorter block lengths could be used to increase the number of feedback events for a participant. Third, the study was likely underpowered. For example, the effect size of the between-group difference in saCPM strength from pre- to post-feedback (Cohen’s d = 0.71) would be considered moderate to large. Using a post-hoc power analysis with the same effect size, alpha = 0.05, and power (1 − beta) = 0.8, we estimate that a sample size of n = 66 is needed for the experiment to be sufficiently powered, which is more than triple the current sample size of n = 20. Fourth, there may be ceiling effects of attentional performance in our participants. Future work will include similar neurofeedback studies in individuals with attentional problems, such as attention deficit hyperactivity disorder (ADHD). Finally, we tested for changes in behavior only a day after the neurofeedback scan. Emerging research suggests that improvement in behavior or symptoms can continue for weeks to months after the final neurofeedback session (Goldway et al., 2019; Mehler et al., 2018; Rance et al., 2018). As such, longer follow-up than a single day is likely needed to detect behavioral changes.
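
The sample-size estimate above can be approximately reproduced with a short calculation. The Python sketch below is not the authors' analysis. It assumes a two-sided, two-sample t-test with equal group sizes, takes the reported value of 0.8 to denote statistical power, and uses the normal approximation with a common small-sample correction term rather than an exact noncentral t computation. The function name `n_per_group` is hypothetical.

```python
from math import ceil
from statistics import NormalDist


def n_per_group(d: float, alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate per-group sample size for a two-sided, two-sample t-test.

    Assumes equal group sizes and a standardized effect size d (Cohen's d).
    """
    std_normal = NormalDist()
    z_alpha = std_normal.inv_cdf(1 - alpha / 2)  # critical value for two-sided alpha
    z_power = std_normal.inv_cdf(power)          # quantile matching the target power
    n_normal = 2 * (z_alpha + z_power) ** 2 / d ** 2
    # Small-sample correction so the normal approximation better matches
    # the t-test (an assumption of this sketch, not taken from the paper).
    return ceil(n_normal + z_alpha ** 2 / 4)


per_group = n_per_group(0.71)
total_n = 2 * per_group
print(per_group, total_n)
```

Under these assumptions the sketch gives 33 participants per group, or 66 in total, in line with the estimate reported above.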

In summary, connectome-based neurofeedback is a new tool for rt-fMRI neurofeedback that can target both a priori functional networks of interest (e.g., the default mode network) and data-driven functional connectivity signatures of symptoms and behavior in individuals. To facilitate the implementation of connectome-based neurofeedback, we have made all associated software freely available for download at http://www.bioimagesuite.org. Moving forward, systematically assessing effects of variables including functional network targets, training duration, and amount and type of feedback on behavioral outcomes—and sharing these results across groups—can increase the efficacy of rt-fMRI and inform both basic research and translational applications.

Highlights

Previous rt-fMRI work has not targeted predictive models

We propose and validate connectome-based neurofeedback to target predictive models

We show that connectivity from predictive models can be targeted by rt-fMRI

We show preliminary evidence of this method’s potential for future studies

Funding Sources:

This work was supported by the Yale FAS MRI Program funded by the Office of the Provost and the Department of Psychology; a National Science Foundation Graduate Research Fellowship and American Psychological Association Dissertation Research Award to M.D.R.; National Science Foundation BCS1558497 to M.M.C.; and National Institutes of Health MH108591 to M.M.C.

Footnotes

Conflict of Interest: The authors declare no competing financial interests.

References

- deBettencourt MT, Cohen JD, Lee RF, Norman KA, Turk-Browne NB, 2015. Closed-loop training of attention with real-time brain imaging. Nat Neurosci 18, 470–475.

- Esterman M, Noonan SK, Rosenberg M, Degutis J, 2013. In the zone or zoning out? Tracking behavioral and neural fluctuations during sustained attention. Cerebral Cortex 23, 2712–2723.

- Finn ES, Shen X, Scheinost D, Rosenberg MD, Huang J, Chun MM, Papademetris X, Constable RT, 2015. Functional connectome fingerprinting: identifying individuals using patterns of brain connectivity. Nat Neurosci 18, 1664–1671.

- Gabrieli JD, Ghosh SS, Whitfield-Gabrieli S, 2015. Prediction as a humanitarian and pragmatic contribution from human cognitive neuroscience. Neuron 85, 11–26.

- Goldway N, Ablin J, Lubin O, Zamir Y, Keynan JN, Or-Borichev A, Cavazza M, Charles F, Intrator N, Brill S, Ben-Simon E, Sharon H, Hendler T, 2019. Volitional limbic neuromodulation exerts a beneficial clinical effect on Fibromyalgia. Neuroimage 186, 758–770.

- Hampson M, Scheinost D, Qiu M, Bhawnani J, Lacadie CM, Leckman JF, Constable RT, Papademetris X, 2011. Biofeedback of real-time functional magnetic resonance imaging data from the supplementary motor area reduces functional connectivity to subcortical regions. Brain connectivity 1, 91–98.

- Hawkinson JE, Ross AJ, Parthasarathy S, Scott DJ, Laramee EA, Posecion LJ, Rekshan WR, Sheau KE, Njaka ND, Bayley PJ, deCharms RC, 2012. Quantification of adverse events associated with functional MRI scanning and with real-time fMRI-based training. Int J Behav Med 19, 372–381.

- Johnson KA, Hartwell K, LeMatty T, Borckardt J, Morgan PS, Govindarajan K, Brady K, George MS, 2012. Intermittent “real-time” fMRI feedback is superior to continuous presentation for a motor imagery task: a pilot study. J Neuroimaging 22, 58–66.

- Joshi A, Scheinost D, Okuda H, Belhachemi D, Murphy I, Staib L, Papademetris X, 2011. Unified Framework for Development, Deployment and Robust Testing of Neuroimaging Algorithms. Neuroinformatics 9, 69–84. doi: 10.1007/s12021-010-9092-8

- Kleiner M, Brainard DH, Pelli DG, Broussard C, Wolf T, Niehorster D, 2007. What’s new in Psychtoolbox-3? Perception. doi: 10.1068/v070821

- Koush Y, Rosa MJ, Robineau F, Heinen K, W Rieger S, Weiskopf N, Vuilleumier P, Van De Ville D, Scharnowski F, 2013. Connectivity-based neurofeedback: dynamic causal modeling for real-time fMRI. Neuroimage 81, 422–430.

- Laird AR, Lancaster JL, Fox PT, 2005. BrainMap: the social evolution of a human brain mapping database. Neuroinformatics 3, 65–78.

- Laumann TO, Snyder AZ, Mitra A, Gordon EM, Gratton C, Adeyemo B, Gilmore AW, Nelson SM, Berg JJ, Greene DJ, McCarthy JE, Tagliazucchi E, Laufs H, Schlaggar BL, Dosenbach NU, Petersen SE, 2016. On the Stability of BOLD fMRI Correlations. Cereb Cortex.

- Lindquist MA, Xu Y, Nebel MB, Caffo BS, 2014. Evaluating dynamic bivariate correlations in resting-state fMRI: a comparison study and a new approach. Neuroimage 101, 531–546.

- Liverence BM, Scholl BJ, 2011. Selective Attention Warps Spatial Representation: Parallel but Opposing Effects on Attended Versus Inhibited Objects. Psychol. Sci 22, 1600–1608. doi: 10.1177/0956797611422543

- Luck SJ, Vogel EK, 1997. The capacity of visual working memory for features and conjunctions. Nature 390, 279–281. doi: 10.1038/36846

- Mehler DMA, Sokunbi MO, Habes I, Barawi K, Subramanian L, Range M, Evans J, Hood K, Lührs M, Keedwell P, Goebel R, Linden DEJ, 2018. Targeting the affective brain-a randomized controlled trial of real-time fMRI neurofeedback in patients with depression. Neuropsychopharmacology 43, 2578–2585.

- Monti RP, Lorenz R, Braga RM, Anagnostopoulos C, Leech R, Montana G, 2017. Real-time estimation of dynamic functional connectivity networks. Hum Brain Mapp 38, 202–220.

- Pashler H, 1988. Familiarity and visual change detection. Percept. Psychophys. doi: 10.3758/BF03210419

- Pylyshyn ZW, Storm RW, 1988. Tracking multiple independent targets: evidence for a parallel tracking mechanism. Spat. Vis 3, 179–197. doi: 10.1163/156856888X00122

- Rance M, Walsh C, Sukhodolsky DG, Pittman B, Qiu M, Kichuk SA, Wasylink S, Koller WN, Bloch M, Gruner P, Scheinost D, Pittenger C, Hampson M, 2018. Time course of clinical change following neurofeedback. Neuroimage 181, 807–813. doi: 10.1016/j.neuroimage.2018.05.001

- Robertson IH, Manly T, Andrade J, Baddeley BT, Yiend J, 1997. “Oops!”: Performance correlates of everyday attentional failures in traumatic brain injured and normal subjects. Neuropsychologia 35, 747–758. doi: 10.1016/S0028-3932(97)00015-8

- Rosenberg M, Noonan S, DeGutis J, Esterman M, 2013. Sustaining visual attention in the face of distraction: a novel gradual-onset continuous performance task. Atten. Percept. Psychophys 75, 426–439.

- Rosenberg MD, Finn ES, Scheinost D, Constable RT, Chun MM, 2018. Characterizing Attention with Predictive Network Models. Trends in Cognitive Sciences.

- Rosenberg MD, Finn ES, Scheinost D, Papademetris X, Shen X, Constable RT, Chun MM, 2016a. A neuromarker of sustained attention from whole-brain functional connectivity. Nat. Neurosci 19, 165–171. doi: 10.1038/nn.4179

- Rosenberg MD, Hsu W-T, Scheinost D, Constable RT, Chun MM, 2018. Connectome-based models predict separable components of attention in novel individuals. J. Cogn. Neurosci 30, 160–173.

- Rosenberg MD, Zhang S, Hsu WT, Scheinost D, Finn ES, Shen X, Constable RT, Li CS, Chun MM, 2016b. Methylphenidate Modulates Functional Network Connectivity to Enhance Attention. J Neurosci 36, 9547–9557.

- Rosenberg MD, Scheinost D, Greene AS, Avery EW, Kwon YH, Finn ES, Ramani R, Qiu M, Constable RT, Chun MM, 2020. Functional connectivity predicts changes in attention observed across minutes, days, and months. Proceedings of the National Academy of Sciences U.S.A.

- Rouder JN, Morey RD, Morey CC, Cowan N, 2011. How to measure working memory capacity in the change detection paradigm. Psychon. Bull. Rev. doi: 10.3758/s13423-011-0055-3

- Satterthwaite TD, Wolf DH, Loughead J, Ruparel K, Elliott MA, Hakonarson H, Gur RC, Gur RE, 2012. Impact of in-scanner head motion on multiple measures of functional connectivity: relevance for studies of neurodevelopment in youth. Neuroimage 60, 623–632.

- Scheinost D, Hampson M, Qiu M, Bhawnani J, Constable RT, Papademetris X, 2013. A Graphics Processing Unit Accelerated Motion Correction Algorithm and Modular System for Real-time fMRI. Neuroinformatics.

- Scheinost D, Noble S, Horien C, Greene AS, Lake EMR, Salehi M, Gao S, Shen X, O’Connor D, Barron DS, Yip SW, Rosenberg MD, Constable RT, 2019. Ten simple rules for predictive modeling of individual differences in neuroimaging. NeuroImage 193, 35–45.

- Shakil S, Lee CH, Keilholz SD, 2016. Evaluation of sliding window correlation performance for characterizing dynamic functional connectivity and brain states. Neuroimage 133, 111–128.

- Shen X, Finn ES, Scheinost D, Rosenberg MD, Chun MM, Papademetris X, Constable RT, 2017. Using connectome-based predictive modeling to predict individual behavior from brain connectivity. Nat. Protoc 12, 506–518.

- Shibata K, Watanabe T, Sasaki Y, Kawato M, 2011. Perceptual learning incepted by decoded fMRI neurofeedback without stimulus presentation. Science 334, 1413–1415.

- Shmueli G, 2010. To Explain or to Predict? Statistical Science 25, 289–310.

- Sorger B, Scharnowski F, Linden DEJ, Hampson M, Young KD, 2019. Control freaks: Towards optimal selection of control conditions for fMRI neurofeedback studies. Neuroimage 186, 256–265.

- Stoeckel LE, Garrison KA, Ghosh S, Wighton P, Hanlon CA, Gilman JM, Greer S, Turk-Browne NB, deBettencourt MT, Scheinost D, Craddock C, Thompson T, Calderon V, Bauer CC, George M, Breiter HC, Whitfield-Gabrieli S, Gabrieli JD, LaConte SM, Hirshberg L, Brewer JA, Hampson M, Van Der Kouwe A, Mackey S, Evins AE, 2014. Optimizing real time fMRI neurofeedback for therapeutic discovery and development. Neuroimage Clin 5, 245–255.

- Sulzer J, Haller S, Scharnowski F, Weiskopf N, Birbaumer N, Blefari ML, Bruehl AB, Cohen LG, DeCharms RC, Gassert R, Goebel R, Herwig U, LaConte S, Linden D, Luft A, Seifritz E, Sitaram R, 2013. Real-time fMRI neurofeedback: progress and challenges. Neuroimage 76, 386–399.

- Watanabe T, Sasaki Y, Shibata K, Kawato M, 2017. Advances in fMRI Real-Time Neurofeedback. Trends Cogn Sci 21, 997–1010.

- Weiss F, Zamoscik V, Schmidt SNL, Halli P, Kirsch P, Gerchen MF, 2020. Just a very expensive breathing training? Risk of respiratory artefacts in functional connectivity-based real-time fMRI neurofeedback. NeuroImage 210, 116580.

- Yarkoni T, Poldrack RA, Nichols TE, Van Essen DC, Wager TD, 2011. Large-scale automated synthesis of human functional neuroimaging data. Nat Methods 8, 665–670.

- Yarkoni T, Westfall J, 2017. Choosing Prediction Over Explanation in Psychology: Lessons From Machine Learning. Perspect Psychol Sci 12, 1100–1122.

- Yamashita A, Hayasaka S, Kawato M, Imamizu H, 2017. Connectivity Neurofeedback Training Can Differentially Change Functional Connectivity and Cognitive Performance. Cereb Cortex 27, 4960–4970. doi: 10.1093/cercor/bhx177

- Yuan H, Young KD, Phillips R, Zotev V, Misaki M, Bodurka J, 2014. Resting-state functional connectivity modulation and sustained changes after real-time functional magnetic resonance imaging neurofeedback training in depression. Brain Connect 4, 690–701.