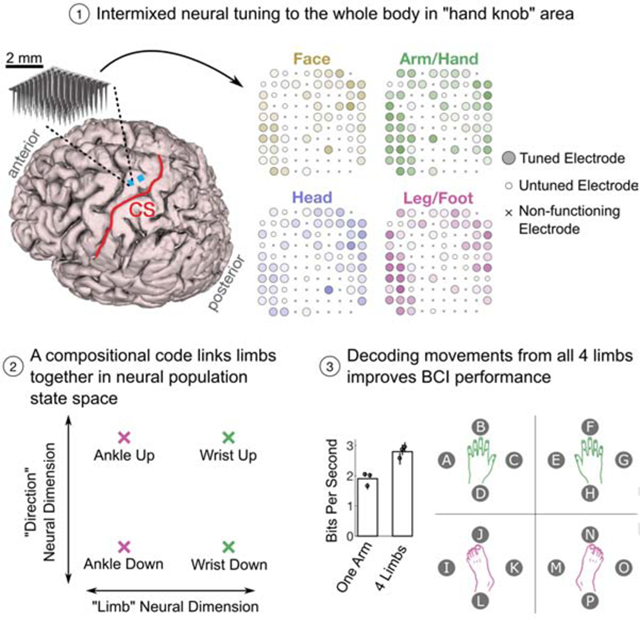

Summary

Decades after the motor homunculus was first proposed, it is still unknown how different body parts are intermixed and interrelated in human motor cortical areas at single-neuron resolution. Using multi-unit recordings, we studied how face, head, arm and leg movements are represented in hand knob area of premotor cortex (precentral gyrus) in people with tetraplegia. Contrary to traditional expectations, we found strong representation of all movements and a partially “compositional” neural code that linked together all four limbs. The code consisted of: (1) a limb-coding component representing the limb-to-be-moved, and (2) a movement-coding component where analogous movements from each limb (e.g. hand grasp and toe curl) were represented similarly. Compositional coding might facilitate skill transfer across limbs and provides a useful framework for thinking about how the motor system constructs movement. Finally, we leveraged these results to create a whole-body intracortical brain-computer interface that spreads targets across all limbs.

In Brief

Willett et al. show that the “hand knob” area of premotor cortex in people with tetraplegia is tuned to the entire body and contains a compositional code that links all four limbs together. This code may facilitate skill transfer across limbs, and it allows a discrete brain-computer interface to accurately decode movements of all four limbs.

Graphical Abstract

Introduction

Knowing how different body parts are topographically mapped onto motor cortex is central to understanding the motor cortical system. Neural activity in human motor cortex, including Brodmann area 4 (BA4) in central sulcus and adjacent Brodmann area 6 (BA6) on precentral gyrus, is traditionally thought to have macroscopic somatotopy. In this view, face, arm and leg movements are represented in distinct areas of cortex but individual muscles within any one body area may be mixed (e.g. wrist and finger movements may overlap within arm area) (Schieber, 2001). Classical stimulation studies in great apes and humans suggest an orderly arrangement of body parts along precentral gyrus, with little intermixing between face, arm and leg within any one individual (Leyton and Sherrington, 1917; Penfield and Boldrey, 1937; Penfield and Rasmussen, 1950). fMRI studies also support the idea of an orderly map with largely separate face, arm and leg areas along the precentral gyrus (caudal BA6) and the anterior bank of the central sulcus (BA4) (Lotze et al., 2000; Meier et al., 2008), as do electrocorticographic recordings in the high gamma band above precentral gyrus (albeit with occasional exceptions) (Crone et al., 1998a, 1998b; Ganguly et al., 2009; Miller et al., 2007; Ruescher et al., 2013). Nevertheless, the level of mixed representation within any one area of human precentral gyrus has not yet been quantified at single neuron resolution.

Here, we revisit motor somatotopy using microelectrode array recordings from hand knob area of precentral gyrus (Yousry et al., 1997) while two participants in the BrainGate2 pilot clinical trial made (or attempted) a variety of face, head, leg and arm movements. Surprisingly, we found strong neural tuning to all tested movements, contradicting traditional views on human motor cortical organization. In light of this new evidence of intermixed whole-body tuning, combined with prior anatomical evidence that places dorsal precentral gyrus in Brodmann area 6 and links it to macaque premotor area PMd [e.g. (White et al., 1997; Rizzolatti et al., 1998)], we refer to human precentral gyrus as premotor cortex in this manuscript (see Discussion for more details).

Our new finding of whole-body tuning in human premotor cortex presents a unique opportunity to investigate how each body part is neurally coded in relation to the others, which could shed light on its functional role. It also presents an opportunity to improve brain-computer interfaces (BCIs) by enabling whole-body decoding from microelectrode arrays in a single brain area. Along these lines, this manuscript is structured into three parts: (1) demonstrating tuning to the whole body in hand area of premotor cortex, (2) building on this finding to investigate the structure of the neural code for the whole body, and (3) translating this finding to BCIs to help people with paralysis.

Prior work in humans and macaques sets some expectations for how different body parts might be neurally coded in relation to each other. Previous fMRI and ECoG studies on human precentral gyrus found that matching movements of the ipsilateral and contralateral arms have similar (correlated) neural representations (Diedrichsen et al., 2013; Wiestler et al., 2014; Jin et al., 2016; Fujiwara et al., 2017; Bundy et al., 2018). Macaque studies also show a correlated representation of ipsilateral and contralateral reaching movements (Cisek et al., 2003) that changes during bimanual movements (Rokni et al., 2003). Here, we study not only how ipsilateral and contralateral arm movements are related, but also how leg movements are related to arm movements. Examining all four limbs together reveals a “compositional” neural code that links homologous movements of different limbs (e.g. links wrist movements to ankle movements, as well as ipsilateral wrist movements to contralateral ones).

The compositional code has a two-part structure where movements are coded in partial independence of the limb to be moved. One potential function of such a representation is to enable the transfer of motor skills between different limbs [as shown in (Kelso and Zanone, 2002; Christou and Rodriguez, 2008; Morris et al., 2009; Shea et al., 2011)]; motor skill could be learned in movement-coding components of the neural activity and then transferred to another limb by changing only the limb-coding component. We call the code “compositional” since the limb and movement components are “composed” (summed together) to specify the motor action. Recently, compositionality has also been suggested to underlie the representation of task rules in prefrontal areas (Reverberi et al., 2012; Yang et al., 2019).

In the last part of the manuscript, we demonstrate a discrete intracortical BCI that decodes movements across all four limbs from only a single brain area. We show that this whole-body BCI improves information throughput relative to a single-effector approach. This result substantially opens up the space of what intracortical BCIs can do and explore, as current systems are limited to placing microelectrodes in only a few brain areas [e.g. (Hochberg et al., 2012; Collinger et al., 2013; Aflalo et al., 2015; Bouton et al., 2016; Pandarinath et al., 2017; Ajiboye et al., 2017)].

Results

Intermixed Tuning to the Whole Body in Hand Premotor Cortex

Face, Head, and Contralateral Arm & Leg Movements

We used microelectrode array recordings from participants T5 and T7 to assess tuning to attempted movements of the face (mouth, tongue, facial muscles and speaking), head (head turning and tilting), contralateral arm (shoulder, elbow, wrist and fingers) and contralateral leg (hip, knee, ankle, and toes) in hand knob area of precentral gyrus. Participant T5 had a C4 spinal cord injury and was paralyzed from the neck down; he could move his face and head, but most attempted arm and leg movements resulted in little or no motion. Participant T7 had ALS and could move all joints tested, although some of his arm movements were limited due to weakness (see Table S2 for neurologic exam results).

In this experiment, T5 and T7 made (or attempted to make) movements in sync with visual cues displayed on a computer screen (Figure 1A). T5 completed an instructed delay version of the task where each trial randomly cued one of 32 possible movements spanning the face, head, arm and legs. For face and head movements, T5 was instructed to move normally; for arm and leg movements, T5 was instructed to attempt to move as if he were not paralyzed. T7, whose more limited data was collected earlier in a different study (and who is no longer enrolled), completed an alternating paired movement task with a block design. Each block tested a different movement pair, during which T7 alternated between making each of the paired movements every 3 seconds.

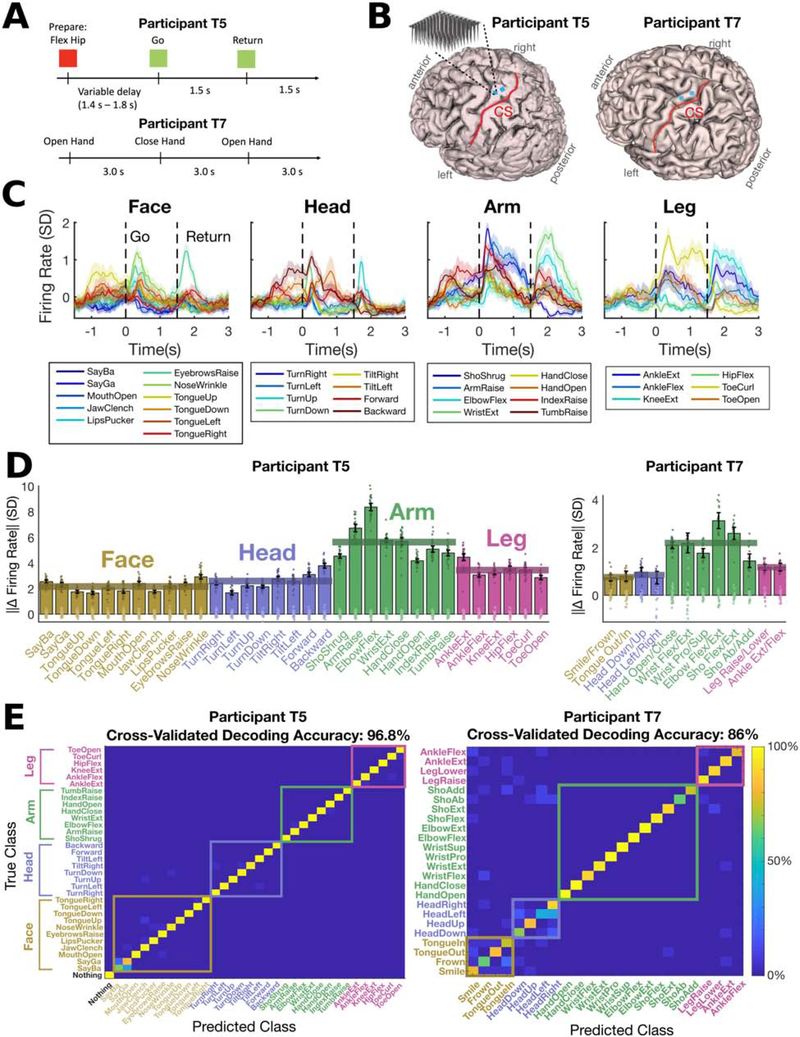

Figure 1. Separable and robust neural modulation for face, head, and contralateral arm & leg movements in hand knob area.

(A) Neural activity was recorded while participants T5 and T7 completed cued movement tasks that instructed them to make (or attempt to make) movements of the face, head, arm and leg in sync with text appearing on a computer monitor.

(B) Participants’ MRI-derived brain anatomy and microelectrode array locations. Microelectrode array locations were determined by co-registration of postoperative CT images with preoperative MRI images.

(C) The mean firing rate recorded for each cued movement is shown for an example electrode from participant T5. Shaded regions indicate 95% CIs. Neural activity was denoised by convolving with a Gaussian smoothing kernel (30 ms sd).

(D) The size of the neural modulation for each movement was quantified by comparing to a baseline “do nothing” condition (in participant T5) or by comparing to its paired movement (T7). Each bar indicates the size (Euclidean norm) of the mean change in firing rate across the neural population (with a 95% CI). Each dot corresponds to the cross-validated projection of a single trial’s firing rate vector onto the “difference” axis. For T5, light dots represent the “do nothing” condition and dark dots represent the given movement. For T7, dark and light dots represent different movements of the given pair. Statistically significant modulation was observed for all movements (no confidence intervals contain 0). Translucent horizontal bars indicate the mean bar height for each movement type.

(E) A Gaussian naive Bayes classifier was used to classify each trial’s movement using the firing rate in a window from 200 to 600 ms (T5) or 200 to 1600 ms (T7) after the go cue. Confusion matrices are shown from offline cross-validated performance results; each entry (i, j) in the matrix is colored according to the percentage of trials where movement j was decoded (out of all trials where movement i was cued).

Despite recording from microelectrode arrays placed in hand knob region of precentral gyrus (Figure 1B), we found strong neural tuning to all tested movements, including those of the face, head and leg. Figure 1C shows an example electrode from T5 that was tuned to all movement categories. Here, and in most other results, we analyzed binned threshold crossing rates (i.e. multi-unit “firing rates”) for each microelectrode (although Figure S3, mentioned below, reports single unit results). Analyzing multi-unit threshold crossings allowed us to leverage information from more electrodes, since many electrodes recorded activity from multiple neurons that could not be precisely distinguished. Recent results indicate that neural population structure can be accurately estimated from threshold crossing rates alone (Trautmann et al., 2019). Binned threshold crossing rates were z-scored by subtracting the mean firing rate and dividing by the bin-by-bin standard deviation of the firing rate (and are reported in units of standard deviation, SD).
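The z-scoring step described above can be illustrated with a minimal sketch. It assumes a hypothetical layout in which each electrode's binned threshold crossing rates are held in a plain list, one value per time bin; the function name and the example numbers are illustrative and not taken from the study.

```python
from statistics import mean, pstdev

def zscore_binned_rates(binned_rates):
    """Z-score one electrode's binned threshold crossing rates.

    binned_rates: one rate per time bin (hypothetical layout).
    Returns values in units of standard deviation (SD).
    """
    mu = mean(binned_rates)
    sd = pstdev(binned_rates)  # standard deviation computed across bins
    if sd == 0:
        # A silent or constant electrode carries no tuning information.
        return [0.0 for _ in binned_rates]
    return [(r - mu) / sd for r in binned_rates]

# Illustrative rates in Hz for a single electrode.
rates_hz = [10.0, 12.0, 8.0, 14.0, 6.0]
z = zscore_binned_rates(rates_hz)
```

After this transformation every electrode has zero mean and unit variance, so electrodes with high raw firing rates do not dominate population-level measures such as the Euclidean norm.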

In Figure 1D we summarize the population-level neural modulation observed for each individual movement (T5) or pair of movements (T7) across all microelectrodes. For participant T5, we quantified modulation size by computing the magnitude (Euclidean norm) of the difference between mean firing rates observed during a “do nothing” control condition and mean rates observed during the movement of interest. Modulation size was estimated in a cross-validated way to reduce bias (see Methods). For participant T7, we computed the difference between the mean rates observed for each pair of movements. Mean rates were computed for each trial in a time window 200 to 600 ms after the go cue (T5) or 200 to 1600 ms after the go cue (T7). Figure 1D shows robust modulation for each tested movement in both participants (the clusters of single trial firing rates are clearly separable and the confidence intervals are far from zero). Tuning to arm movement was the strongest in both participants (p<1e-3; see Methods), with tuning to non-arm movements 38% (face), 46% (head) and 61% (leg) as large as arm movement tuning in T5, and 38% (face), 34% (head) and 53% (leg) as large in T7.
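To see why cross-validation matters when estimating modulation size, consider the following sketch. It is one possible estimator, assumed here for illustration only (the procedure actually used is described in Methods): the difference vector is estimated separately on two disjoint halves of the trials, and the dot product of the two estimates replaces the squared norm, so that independent noise in the two halves averages out instead of inflating the estimate. All function names and the synthetic data are hypothetical.

```python
import math
import random

def mean_vector(trials):
    """Average a list of per-trial firing rate vectors."""
    return [sum(col) / len(col) for col in zip(*trials)]

def difference(move_trials, rest_trials):
    return [a - b for a, b in zip(mean_vector(move_trials), mean_vector(rest_trials))]

def naive_modulation(move_trials, rest_trials):
    """Plain Euclidean norm of the mean difference (biased upward by noise)."""
    return math.sqrt(sum(d * d for d in difference(move_trials, rest_trials)))

def cross_validated_modulation(move_trials, rest_trials):
    """Split-half estimate: dot product of two independent difference vectors."""
    hm, hr = len(move_trials) // 2, len(rest_trials) // 2
    d1 = difference(move_trials[:hm], rest_trials[:hr])
    d2 = difference(move_trials[hm:], rest_trials[hr:])
    squared = sum(a * b for a, b in zip(d1, d2))
    # Keep the sign so that pure-noise conditions scatter around zero.
    return math.copysign(math.sqrt(abs(squared)), squared)

# Synthetic data: 50 electrodes, 10 of them tuned (true norm is about 1.58).
rng = random.Random(0)
true_diff = [0.5 if e < 10 else 0.0 for e in range(50)]
rest = [[rng.gauss(0.0, 1.0) for _ in true_diff] for _ in range(200)]
move = [[rng.gauss(d, 1.0) for d in true_diff] for _ in range(200)]
cv_size = cross_validated_modulation(move, rest)
naive_size = naive_modulation(move, rest)
```

With equal-sized halves the naive norm can never fall below the split-half estimate, which is the upward bias that cross-validation is meant to remove.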

Next, we tested whether each movement was neurally distinguishable from other movements by using a cross-validated naive Bayes classifier to decode the movement on each trial. High classification accuracies (Figure 1E) confirm that tuning to each movement was unique and separable (as opposed to being caused by a generic signal that was the same for all movements). In Figure S1, we plot the neural activity in the top neural dimensions found using principal components analysis (PCA) to illustrate that activity is rich and multi-dimensional for all movement types.
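The decoding analysis can be sketched as follows. This is a minimal Gaussian naive Bayes classifier with leave-one-out cross-validation, written under several assumptions that are not stated in the text: equal class priors, a separate variance per class and electrode, and a leave-one-out scheme. The movement names and synthetic firing rates are purely illustrative.

```python
import math
import random

def fit_gnb(X, y):
    """Estimate a mean and variance per class and per electrode."""
    model = {}
    for label in sorted(set(y)):
        cols = list(zip(*[x for x, lab in zip(X, y) if lab == label]))
        means = [sum(c) / len(c) for c in cols]
        # A small floor keeps the variance strictly positive.
        variances = [sum((v - m) ** 2 for v in c) / len(c) + 1e-6
                     for c, m in zip(cols, means)]
        model[label] = (means, variances)
    return model

def predict_gnb(model, x):
    """Return the class with the highest Gaussian log-likelihood."""
    def log_likelihood(label):
        means, variances = model[label]
        return sum(-0.5 * math.log(2 * math.pi * var) - (xi - m) ** 2 / (2 * var)
                   for xi, m, var in zip(x, means, variances))
    return max(model, key=log_likelihood)

def leave_one_out_confusion(X, y):
    """confusion[i][j] counts trials cued as i and decoded as j."""
    labels = sorted(set(y))
    confusion = {i: {j: 0 for j in labels} for i in labels}
    for k in range(len(X)):
        model = fit_gnb(X[:k] + X[k + 1:], y[:k] + y[k + 1:])
        confusion[y[k]][predict_gnb(model, X[k])] += 1
    return confusion

# Synthetic trials: 3 movements, 8 electrodes, 20 trials per movement.
rng = random.Random(0)
movements = ["hand_close", "toe_curl", "head_turn"]
X, y = [], []
for c, name in enumerate(movements):
    for _ in range(20):
        X.append([rng.gauss(2.0 if e % 3 == c else 0.0, 0.5) for e in range(8)])
        y.append(name)
confusion = leave_one_out_confusion(X, y)
accuracy = sum(confusion[m][m] for m in movements) / len(y)
```

Dividing each row of `confusion` by its row total gives percentages of the kind shown in a confusion matrix.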

Finally, we searched for somatotopy across the microelectrode arrays and saw no clear patterns; tuning to all four movement types was highly intermixed and many electrodes were tuned to multiple movement types (Figure S2). We also analyzed well-isolated, spike-sorted single neurons and found that they were frequently tuned to multiple movement types as well (Figure S3). In Figures S2–S3, we also report firing rate statistics in raw units of Hz.

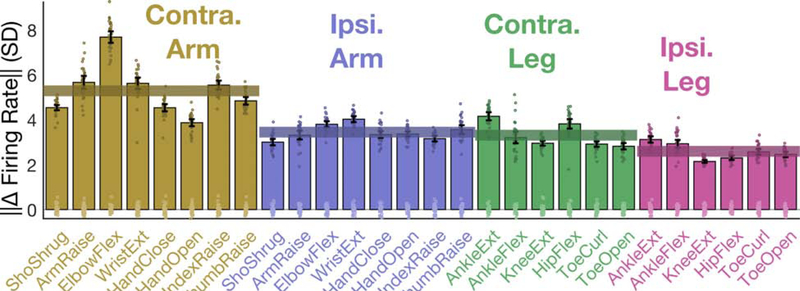

Ipsilateral Arm & Leg Movements

Next, we assessed tuning to ipsilateral attempted arm and leg movements in participant T5 (Figure 2). Our remaining results are based on data from T5, who is currently enrolled in the trial. Figure 2 shows that firing rates changed roughly two-thirds to three-quarters as much for ipsilateral movement as for contralateral movement (65% as much for the arm, 78% as much for the leg). Tuning to ipsilateral movements was separable and robust: a cross-validated naive Bayes classifier could decode movements with 97.4% accuracy (amongst all movements shown in Figure 2) using a 200 to 600 ms window after the go cue.

Figure 2. Modulation for ipsilateral vs. contralateral arm and leg movements.

The size of the neural modulation for each attempted movement was quantified by comparing to a baseline “do nothing” condition. Each bar indicates the size (Euclidean norm) of the mean change in neural population activity (with a 95% CI) and each dot corresponds to the cross-validated projection of a single trial’s neural activity onto the “difference” axis. Translucent horizontal bars indicate the mean bar height for each attempted movement type.

A Compositional Code for Arm & Leg Movements

Isolated Movements of Single Effectors

Next, we probed the population-level structure of the whole-body tuning shown above, starting first with the relationship between ipsilateral and contralateral movements. We were guided by prior single unit recordings in macaques (Cisek et al., 2003; Rokni et al., 2003) and fMRI and ECoG studies in people (Bundy et al., 2018; Diedrichsen et al., 2013; Jin et al., 2016) which found that ipsilateral and contralateral movements had correlated representations in motor cortex. If this is the case, we hypothesized that there should also exist laterality-related neural dimensions which code for the side of the body independently of the movement details. Laterality dimensions would help downstream areas distinguish between contralateral and ipsilateral movement. This kind of “compositional” neural code, defined by correlated movement-coding dimensions and separate effector-coding dimensions, is distinct from a muscle-like representation. In a muscle-like representation, contralateral and ipsilateral movements would exist in largely orthogonal neural dimensions and hence be uncorrelated [as recently found in macaque primary motor cortex (Ames and Churchland, 2019; Heming et al., 2019)], since the muscles are largely non-overlapping.

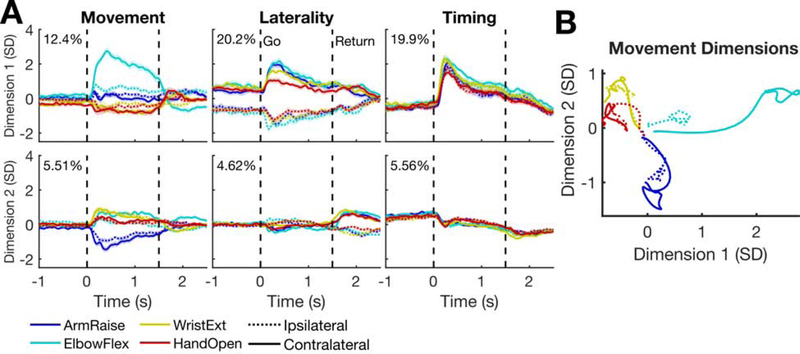

We used demixed PCA (dPCA) (Kobak et al., 2016) to visualize where the neural activity falls on the spectrum between muscle-like and compositional. dPCA decomposes neural data into a set of dimensions that each explain variance related to one marginalization of the data. We marginalized the data according to three factors: time, laterality, and movement. In the movement marginalization, we included the main effect of the movement condition as well as the interaction between movement and laterality. If the muscle-like hypothesis holds, there should be small laterality dimensions and large movement dimensions in which activity is dissimilar for matching movements on opposite sides of the body. On the other hand, for compositional coding, there should be a large laterality dimension (in addition to large movement dimensions), and activity in the movement dimensions should be similar for matching movements on opposite sides of the body.
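The marginalization step that precedes dPCA can be sketched for a single electrode. This is only the averaging decomposition, not the full dPCA fit of decoder and encoder axes, and the array layout `x[l][m][t]` (laterality, movement, time bin) and the grouping of interaction terms are assumptions made for illustration. As described above, the movement part absorbs the movement-by-laterality interaction.

```python
def marginalize(x):
    """Split trial-averaged rates x[l][m][t] into additive parts.

    Returns (grand, time_part, lat_part, mov_part) such that
    x[l][m][t] == grand + time_part[t] + lat_part[l][t] + mov_part[l][m][t].
    """
    n_lat, n_mov, n_time = len(x), len(x[0]), len(x[0][0])
    grand = sum(x[l][m][t] for l in range(n_lat) for m in range(n_mov)
                for t in range(n_time)) / (n_lat * n_mov * n_time)
    # Time: what is common to every condition at each time bin.
    time_part = [sum(x[l][m][t] for l in range(n_lat) for m in range(n_mov))
                 / (n_lat * n_mov) - grand for t in range(n_time)]
    # Laterality: per-side average over movements, minus the parts above.
    lat_mean = [[sum(x[l][m][t] for m in range(n_mov)) / n_mov
                 for t in range(n_time)] for l in range(n_lat)]
    lat_part = [[lat_mean[l][t] - grand - time_part[t] for t in range(n_time)]
                for l in range(n_lat)]
    # Movement: everything left, including movement-by-laterality interaction.
    mov_part = [[[x[l][m][t] - lat_mean[l][t] for t in range(n_time)]
                 for m in range(n_mov)] for l in range(n_lat)]
    return grand, time_part, lat_part, mov_part

# Illustrative rates: 2 sides x 2 movements x 3 time bins.
x = [
    [[0.0, 1.0, 2.0], [0.0, 3.0, 4.0]],  # contralateral
    [[0.0, 0.5, 1.0], [0.0, 1.5, 2.0]],  # ipsilateral
]
grand, time_part, lat_part, mov_part = marginalize(x)
```

dPCA then looks for neural dimensions that capture as much variance as possible within each of these parts; a large laterality part relative to the movement part is what a compositional code would predict.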

The dPCA results (Figure 3) appear more consistent with the compositional coding hypothesis. There is a large laterality dimension coding for body side independently of the movement itself (Figure 3A, middle column). Additionally, activity in the movement dimensions (Figure 3A, left column) is similar for any given movement (e.g. wrist extension) regardless of which side of the body it is on (Figure 3B).

Figure 3. The neural population code relating ipsilateral to contralateral movements.

(A) To reveal how the ipsilateral and contralateral movements are represented in relation to each other, demixed PCA was applied to a set of four example attempted arm movements (taken from the dataset shown in Figure 2). Each panel shows the mean activity for a single dimension and each column shows dimensions related to a particular factor (marginalization). Each trace shows the mean activity for a single condition and the shaded regions indicate 95% CIs. The percent variance explained by each dimension is indicated in the top left.

(B) The mean activity through time in the first two movement dimensions is plotted as a trajectory for each condition. The dashed lines lie mostly near the solid lines and travel in the same direction, indicating that activity for a given movement is similar across sides of the body.

The dPCA analysis is a helpful visualization of the overall neural structure, but is not definitive proof of a compositional code. Next, we test for compositionality using more comprehensive analyses. We also expand the scope beyond ipsilateral vs. contralateral movements to consider the possibility of a compositional code linking the arms to the legs.

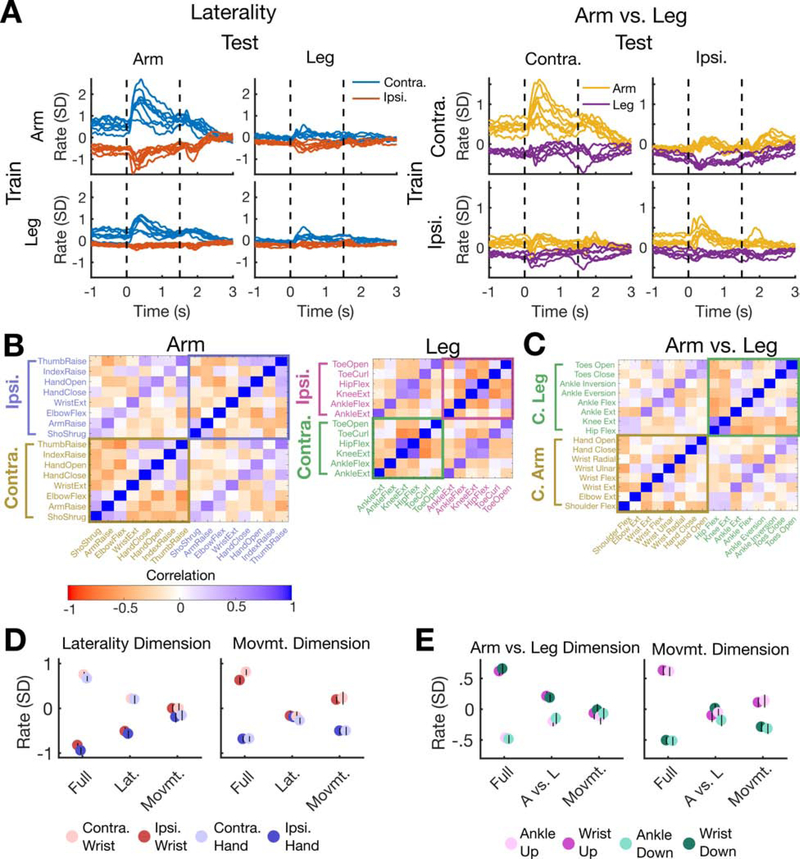

First, we used cross-validation to confirm that the laterality dimension revealed in Figure 3A was consistent for held-out movements that were not used to find it. Figure 4A shows that activity in the laterality dimension correctly separates attempted movements made on opposite sides of the body even for held-out movements (diagonal panels) and for held out effectors (arm or leg, off-diagonal panels). We also used cross-validated dPCA to search for a putative “arm vs. leg” dimension that codes for whether a movement was attempted with the arm or the leg (independently of the movement details or laterality). Results indicate the presence of a robust arm vs. leg dimension in addition to a laterality dimension.

Figure 4. A compositional code that links together all four limbs.

(A) A cross-validation analysis demonstrates the existence of neural dimensions that code for laterality (left vs. right side of the body) and effector (arm vs. leg). Each dimension was identified using only a subset of the data (rows) and then applied to all data (columns). In diagonal panels (e.g. train on arm and test on arm), each individual movement was held out (that is, each movement’s trace was made using dimensions found using other movements only).

(B) Each square (i,j) of the correlation matrices indicates the Pearson correlation between the mean firing rate vector of movement i and that of movement j. The off-diagonal banding shows that ipsilateral and contralateral movements were correlated with each other, for both the arm (left) and leg (right).

(C) Correlations between pairs of contralateral arm and leg movements were computed to reveal a surprising pattern: homologous movements of the arm and leg are correlated (e.g. hand opening and closing is uniquely correlated with toe opening and closing, as indicated by the off-diagonal band).

(D) Neural activity in laterality-coding and movement-coding dimensions is partially active during an instructed delay period where only the laterality or the movement is specified. Each panel corresponds to a different neural dimension. Each circle indicates the mean (and its black line indicates the 95% CI) of activity in each dimension in the case where the full movement is specified (Full), only the laterality is specified (Lat.), or only the movement is specified (Movmt.). A 300 ms time window preceding the go cue was used for analysis.

(E) Same as in D, showing results from a different experiment testing the partial preparation of arm vs. leg (wrist vs. ankle) and movement direction.

Next, we quantified the correlations between firing rate vectors observed for arm and leg movements on opposite sides of the body (Figure 4B). First, firing rates were averaged across trials in a 200 to 600 ms window after the go cue and concatenated across electrodes into a vector. Then, the effect of the laterality dimension was removed by subtracting the mean firing rate within each laterality (otherwise, all movements on opposite sides of the body would appear negatively correlated). Finally, the correlation (Pearson’s r) between the firing rate vectors for each movement pair was computed. We observed a consistently positive correlation between matching ipsilateral and contralateral movements for both the arms and legs (indicated by the off-diagonal bands in Figure 4B). Correlation values for matching movements, such as contralateral vs. ipsilateral wrist extension, were statistically significantly greater than those for non-matching movements, such as contralateral wrist extension vs. ipsilateral elbow flexion (mean r=0.52 vs. r=−0.07 for the arm, p<1e-9; r=0.73 vs. r=−0.14 for the leg, p<1e-8; significance assessed with a t-test). All correlations were computed with cross-validation to reduce bias (see Methods).
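The three steps of this correlation analysis can be illustrated with a small sketch. The firing rate vectors below are invented for illustration (four electrodes, three movements per side), the helper names are hypothetical, and the cross-validation used in the actual analysis is omitted for brevity.

```python
import math

def pearson_r(u, v):
    """Pearson correlation between two firing rate vectors."""
    mu, mv = sum(u) / len(u), sum(v) / len(v)
    du, dv = [a - mu for a in u], [b - mv for b in v]
    num = sum(a * b for a, b in zip(du, dv))
    return num / math.sqrt(sum(a * a for a in du) * sum(b * b for b in dv))

def remove_side_mean(vectors):
    """Subtract the mean vector across all movements of one body side."""
    centroid = [sum(col) / len(col) for col in zip(*vectors.values())]
    return {name: [a - c for a, c in zip(v, centroid)]
            for name, v in vectors.items()}

# Invented movement patterns plus an opposite offset for each body side.
patterns = {"wrist_ext": [1.0, -1.0, 0.5, 0.0],
            "elbow_flex": [-1.0, 1.0, 0.0, 0.5],
            "hand_grasp": [0.0, 0.5, -1.0, 1.0]}
contra_offset = [-2.0, -2.0, 2.0, 2.0]
ipsi_offset = [2.0, 2.0, -2.0, -2.0]
contra = {k: [a + o for a, o in zip(v, contra_offset)] for k, v in patterns.items()}
ipsi = {k: [a + o for a, o in zip(v, ipsi_offset)] for k, v in patterns.items()}

# Without removing the laterality effect, matching movements look anticorrelated.
r_raw = pearson_r(contra["wrist_ext"], ipsi["wrist_ext"])

contra_c, ipsi_c = remove_side_mean(contra), remove_side_mean(ipsi)
r_match = pearson_r(contra_c["wrist_ext"], ipsi_c["wrist_ext"])
r_nonmatch = pearson_r(contra_c["wrist_ext"], ipsi_c["elbow_flex"])
```

In this toy example the shared movement pattern only becomes visible once the side-specific mean is subtracted, which is the reason given above for removing the laterality effect first.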

Surprisingly, homologous movements of the arm and leg were also more correlated than non-homologous movements (e.g. hand grasp and toe curl are positively correlated, while hand close and ankle inversion are not, as shown in the off-diagonal band in Figure 4C). Correlations between homologous movements were statistically significantly greater than for non-homologous movements (mean r=0.48 vs. r=−0.06, p<1e-9). We also confirmed this result for a more limited set of four arm and leg movements in participant T7 (mean r=0.22 vs. r=−0.07, p=0.0036). These homologous correlations, along with the arm vs. leg dimension shown in Figure 4A, extend the compositional code to pairs of arm and leg movements.

In Figure S4, we examined the neural representation of directional movement (as opposed to single-joint movement) and found that it was also correlated across all four limbs. Interestingly, directional movements were correlated in an intrinsic coordinate frame (where mirrored joint movements were correlated) as opposed to an extrinsic frame (where movements in the same spatial direction would be correlated), placing precentral gyrus at an intermediate level of motor abstraction.

To further test that both aspects of compositionality (shared movement-coding and independent effector-coding) were non-trivial properties of the data and above chance, we performed additional shuffle controls (Figure S5), which confirmed their significance.

Finally, we examined compositionality through the lens of motor preparation. We reasoned that if compositionality was indeed the result of independently specifying the movement and the limb-to-be-moved, then neural activity in limb-coding or movement-coding dimensions should be able to be prepared by itself in the case of partial information (e.g. when only the limb, but not the movement, is cued in an instructed delay task). We tested this idea with a partial cue task. We found that when only the movement is specified during the delay period (i.e. “wrist extension” or “hand close” instead of “right wrist extension” or “left hand close”), activity in the movement dimension partially codes for the upcoming movement while activity in the laterality dimension doesn’t change (Figure 4D, right panel). Likewise, when only the laterality is specified (i.e. “left” or “right”), activity in the laterality dimension codes for the upcoming side of the body while activity in the movement dimension doesn’t change (Figure 4D, left panel). This result also holds for partial specification of arm vs. leg and movement direction (Figure 4E). By examining the single trial distributions of preparatory activity (Figure S6), we confirmed that the neural activity is consistent with partial preparation as opposed to a mixture of full preparation and no preparation.

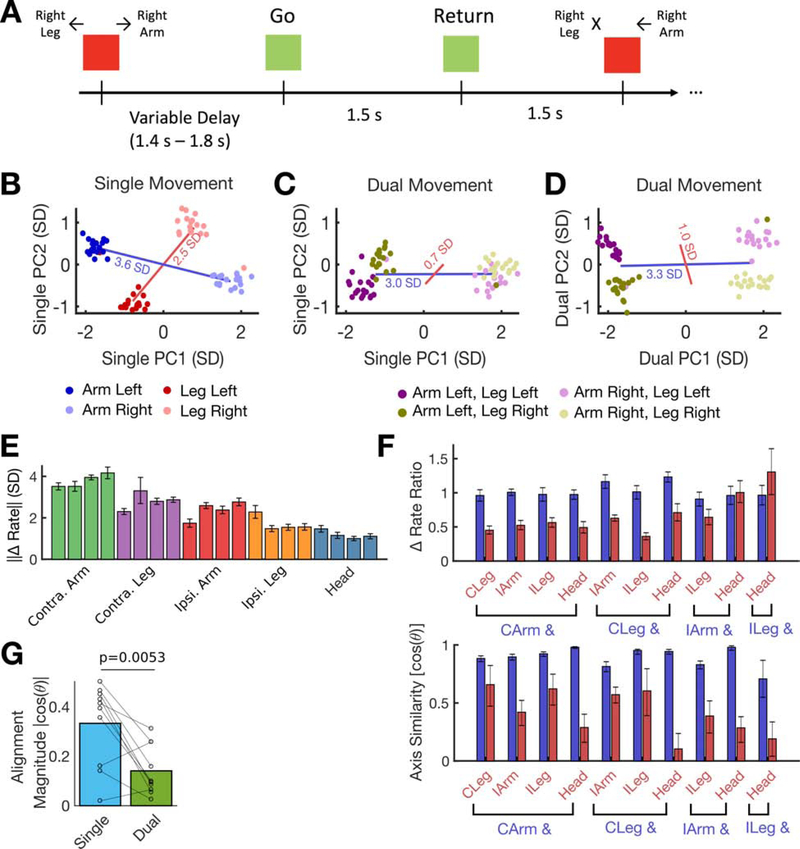

Simultaneous Movement of Two Effectors

We further tested the idea of compositionality by examining how neural activity changes when two effectors are moved simultaneously. Since a movement code that is shared across effectors cannot simultaneously specify two different movements, we hypothesized that the code should change during dual movement. Prior work on bimanual reaching in macaques showed a decorrelation and suppression of ipsilateral-related neural activity during bimanual reaches (Rokni et al., 2003). To investigate this possibility, we tested all pairings of the following five effectors: left arm, right arm, left leg, right leg, and head. For each effector pair, we used an instructed delay cued movement task (Figure 5A) to probe the neural representation of left and right directional movements made either in isolation or simultaneously. For all pairs, we observed separable neural tuning to each of the four simultaneous movement conditions. A cross-validated naive Bayes classifier was able to distinguish between these conditions with an accuracy ranging from 86.1% to 100% (mean=95% across all pairs) using firing rates within a 200 to 1000 ms window after the go cue.

Figure 5. Departure from compositionality during dual movement: suppression and decorrelation effects.

(A) An instructed delay task was used to probe the neural representation of dual (multi-effector) movement. In this example experiment, participant T5 attempted to move his contralateral arm and leg to the left or right either simultaneously (e.g. the first illustrated movement) or individually (e.g. the second illustrated movement).

(B) Example single trial firing rate vectors during the “go” period are plotted in the top two neural dimensions that show differences in firing rate due to movement direction. Red and blue lines connect their respective cluster means and their lengths are a measure of tuning strength.

(C) Firing rates observed during dual movement are plotted in the same dimensions used in B. Leg modulation is considerably weakened in this space (the red line is smaller than in B). Red and blue lines show the distance between the dark and light clusters (blue, arm movement) and the purple and gold clusters (red, leg movement).

(D) The data in C is plotted in the top two principal components that describe the dual movement data. The leg modulation appears larger, indicating that its axis of representation has changed relative to single movement.

(E) Each bar depicts, for a single experiment, the mean change (± 95% CI) of firing rate observed during single movement for each effector (e.g. the blue and red lines in B).

(F) The ratio of dual/single modulation (top) and the change in axis during dual movement (bottom) is shown for each individual experiment. Each pair of bars is from a separate experiment with two different body parts. The blue bar corresponds to whichever body part of the two tested that had the largest modulation in E. Results show that the “secondary” effector (red bar) almost always decreased its modulation more sharply during simultaneous movement (top) and changed its axis of representation more (bottom).

(G) The alignment between each pair of effectors is summarized for the single and dual movement contexts. Each circle shows data from a single pair and lines connect matching pairs. Bar heights indicate the mean across all pairs. A paired t-test reveals a statistically significant difference in alignment between the single and dual contexts, meaning that the effectors become less correlated during dual movement (p=0.0053; 0.33 vs. 0.14).

Figure 5B shows example data from one effector pairing (contralateral arm and leg). To visualize the neural activity, firing rate vectors were computed for each trial using a 200 ms to 1000 ms time window after the go cue. Then, two neural dimensions were found in which to visualize these vectors by using PCA to highlight tuning to movement direction (see Methods). The example data shows clear and separable firing rate clusters for each movement when the effectors are moved in isolation.

Next, we used these same two dimensions to visualize the neural activity observed during dual movement (Figure 5C). Interestingly, the neural representation of arm movement appears largely intact (blue line is similar) while the representation of leg movement shrinks considerably in this space (red line is smaller). This could be because the axis of representation has changed and no longer aligns with the single movement PCs. In Figure 5D we show the same activity but in neural dimensions that best explain dual movement (found again using PCA). Although the modulation for leg movement is still smaller than in Figure 5B, it is enlarged relative to Figure 5C, indicating both that the modulation size has decreased and that the axis of representation has shifted.

We then summarized the effects observed in Figure 5B–D across all effector pairings (Figure 5E–F). Figure 5E summarizes the change in firing rate when each effector is moved separately (e.g. the red and blue lines in Figure 5B). Contralateral arm movements cause the largest firing rate changes, followed by the contralateral leg, ipsilateral arm, ipsilateral leg and head. We then quantified how the modulation size changed during dual movement (Figure 5F, top panel). To measure how it changed, we divided the modulation size during dual movement (length of the lines in Figure 5D) by the modulation size during single movement (length of the lines in Figure 5B). Values less than 1 indicate that neural activity was attenuated. For most pairings, the neural representation of the “primary” effector (blue bars) stays relatively constant while the representation of the “secondary” effector (red bars) is attenuated.

We found the same pattern for the change in axis (Figure 5F, bottom panel); the primary effector mostly retains its axis of representation while the secondary effector’s axis changes more. The change in axis was defined by the cosine of the angle between the single movement axis and the dual movement axis. On average, this change caused the two effectors to become less correlated with each other during dual movement (Figure 5G). These suppression and decorrelation effects might facilitate independent dual-effector control by keeping the somatotopically dominant activity free from interference.
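The two summary measures used here, the dual/single modulation ratio and the change in axis, reduce to simple vector operations. The sketch below assumes that each effector's "axis" is the difference between its mean firing rate vectors for the two movement directions; the function names and the three-electrode example vectors are hypothetical, and any cross-validation applied in the actual analysis is left out.

```python
import math

def norm(v):
    return math.sqrt(sum(x * x for x in v))

def modulation_ratio(single_axis, dual_axis):
    """Values below 1 mean the effector's tuning is attenuated in dual movement."""
    return norm(dual_axis) / norm(single_axis)

def axis_cosine(single_axis, dual_axis):
    """Cosine of the angle between the single and dual movement axes.

    1 means the axis is unchanged; 0 means it has become orthogonal.
    """
    dot = sum(a * b for a, b in zip(single_axis, dual_axis))
    return dot / (norm(single_axis) * norm(dual_axis))

# Illustrative "secondary" effector: weaker and rotated during dual movement.
leg_single = [10.0, 0.0, 0.0]
leg_dual = [3.0, 4.0, 0.0]
ratio = modulation_ratio(leg_single, leg_dual)
cosine = axis_cosine(leg_single, leg_dual)
```

In this example the modulation is halved and the axis rotates away from its single-movement direction, which is the qualitative pattern reported for the secondary effector.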

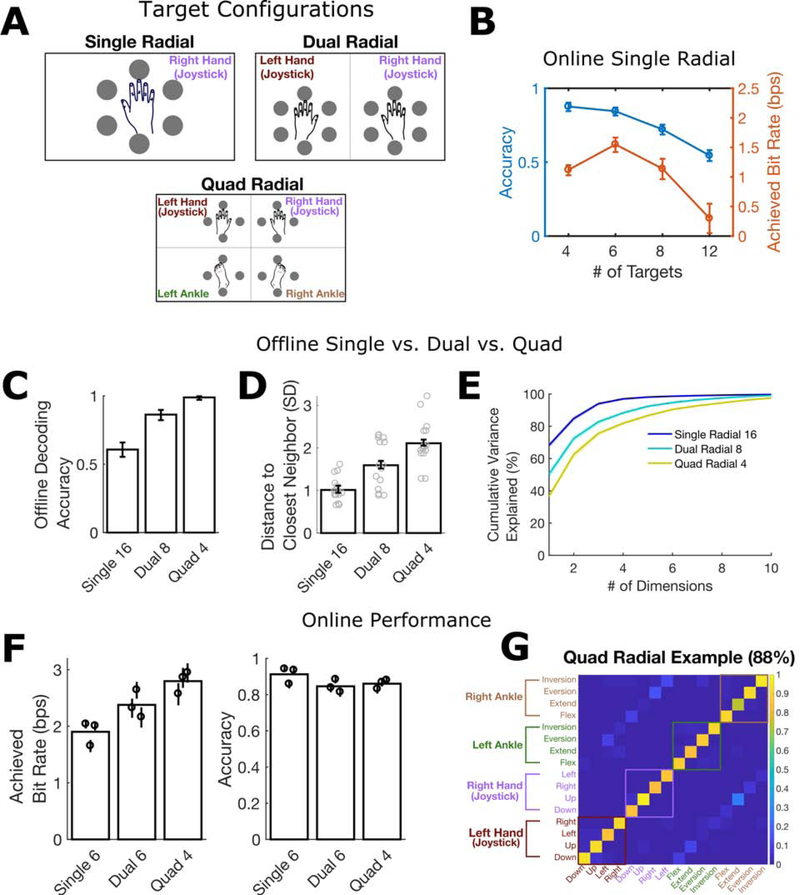

A Whole-Body Discrete Decoding BCI that Increases Information Throughput

Current intracortical BCIs (iBCIs) are limited to recording from a small number of locations [e.g. (Hochberg et al., 2012; Collinger et al., 2013; Aflalo et al., 2015; Bouton et al., 2016; Pandarinath et al., 2017; Ajiboye et al., 2017)], making it difficult to record from the arm and leg areas of precentral gyrus across both hemispheres. The results above, which show strong whole-body tuning in just one patch of cortex, make it possible for current iBCIs to decode movements from all four limbs. One context where this could be useful is to enable accurate selection from a large number of items by mapping movements from different limbs to different items. This could be better than using the contralateral arm alone, since neural activity patterns from other limbs might be more distinct from each other.

We tested this idea with a discrete iBCI that decoded, in closed-loop, which of a set of N movements was attempted by the user. A Gaussian naïve Bayes classifier was used to decode the movement (using a single time window of neural activity). We focused on directional movements made by the wrists (as if pointing a joystick) and ankles (pointing the foot), consistent with prior work on discrete iBCIs that decoded directional movements (Musallam et al., 2004; Santhanam et al., 2006). Figure 6A shows the three target configurations tested. For each trial, a target would illuminate and T5 had exactly one second to identify the target and perform the indicated movement. At the end of one second, the movement was decoded and a sound played indicating to T5 whether the decoder was successful. The next target then appeared immediately.

Figure 6. Spreading targets across multiple effectors increases the accuracy and throughput of a discrete BCI.

(A) Target configurations. Each target was associated with a specific attempted movement.

(B) Online discrete decoding performance for a single contralateral effector (right wrist) as a function of the number of radially spaced targets. Bit rate and accuracy decline if more than 6 targets are used.

(C) Offline decoding accuracy increases as targets are spread across more effectors (mean and 95% CI shown).

(D) As the number of effectors increases, the distance of each target to its closest neighbor in neural population state space increases (each gray dot corresponds to a single target and indicates the distance to its closest neighbor in neural population state space). Bars and error bars show the means ± 95% CIs.

(E) The dimensionality of the neural activity increases with the number of effectors. Cumulative variance explained is plotted (mean ± 95% CI) as a function of the number of PCA components used to explain the neural activity across all targets.

(F) Online decoder performance for the target layouts in A. The achieved bitrate increases with the number of effectors, even when the number of targets is optimized for each layout (see Methods). Each circle shows the mean performance (± 95% CI) during a single session; bar heights show the mean across all sessions.

(G) Example confusion matrix from the online Quad Radial task. Each entry (i, j) in the matrix is colored according to the fraction of trials where movement j was decoded (out of all trials where movement i was cued). Off-diagonal banding shows that most errors were made when classifying between matching hand and foot movements.

First, we motivate the need for using multiple effectors by showing how information throughput [measured with “achieved bit rate” (Nuyujukian et al., 2015)] and decoding accuracy vary as a function of the number of targets when only a single effector is used (Figure 6B). After just 6 targets, accuracy and bit rate start to decrease. Next, we tested offline whether spreading out 16 targets across multiple effectors could increase performance (Figure 6C–E). When spread across all four limbs (4 targets per limb), accuracy was near 100% (Figure 6C). The improvement in accuracy was a result of increasing the distance between each target in neural population state space (Figure 6D) and increasing the dimensionality of the neural population space spanned by the targets (Figure 6E). We then confirmed online that information throughput was higher when using multiple effectors. Even when using an optimized number of targets for each layout (see Methods), the four-limb layout performed substantially better (Figure 6F). Decoding errors mostly confused matching targets from the arm and leg on the same side of the body (Figure 6G), likely due to their correlated representation (as shown in Figure 4). Supplemental Video 1 shows example trials.

Finally, we explored spreading targets across more body parts than just the wrists and ankles (including the elbow, knee, hip, toes, and fingers). Supplemental Video 2 shows that high decoding accuracies (mean of 95%) can be achieved across 32 targets in this manner, as long as T5 is given more time to recognize the target and prepare the movement.

Discussion

Representation of the Whole Body in Hand Knob Area of Human Precentral Gyrus

How different body parts are topographically mapped onto motor cortical areas is fundamental to understanding the motor system. Although prior work has addressed this question in humans using electrical stimulation [e.g. (Penfield and Boldrey, 1937; Penfield and Rasmussen, 1950)] and low-resolution recording technologies [e.g. ECoG and fMRI (Crone et al., 1998a; Miller et al., 2007; Meier et al., 2008)], single neuron data has been lacking. Using microelectrode recordings, we found the surprising result that there was strong neural tuning to face, head, arm and leg movements intermixed in hand knob area of precentral gyrus. While it was previously recognized that nearby body parts within the arm, leg or face area of precentral gyrus may overlap (e.g. wrist and fingers may overlap), prior work in humans has largely supported the idea of separate areas for the arm, leg and face on precentral gyrus (Penfield and Boldrey, 1937; Miller et al., 2007; Meier et al., 2008). Our finding of intermixed whole-body tuning overturns this prior expectation and illustrates that single neurons may differ substantially from what electrical stimulation or low-resolution activity might predict.

In our view, anatomical studies convincingly argue that a great majority of the precentral gyrus belongs to the caudal portion of Brodmann area 6, a premotor area (as opposed to Brodmann area 4, primary motor cortex) (Rademacher et al., 1993; Geyer et al., 1996; White et al., 1997; Rademacher et al., 2001; Geyer, 2004). This modern perspective is somewhat at odds with Brodmann’s original illustrations, where area 4 was drawn as occupying a large amount of the surface of precentral gyrus (Brodmann, 1909). More recent studies show that area 4 only emerges from the central sulcus quite medially (in leg region) and is mostly confined to the area above the superior frontal sulcus in a wedge shape (Rademacher et al., 1993, 2001; White et al., 1997).

Given that our recordings are likely from premotor cortex, it is natural to ask: would the intermixed tuning we showed here also hold in primary motor cortex (in the central sulcus)? Prior work in macaques provides some guidance on this issue. Dorsal precentral gyrus, where our arrays were implanted, has been proposed to be homologous to macaque area F2 (PMd) (Genon et al., 2017, 2018; Geyer et al., 2000; Rizzolatti et al., 1998; Zilles et al., 1995). From this perspective, many of our results match previous results from macaque studies, including a large correlation in PMd activity between contralateral and ipsilateral reaching movements (but not in M1) (Cisek et al., 2003; Ames and Churchland, 2019; Heming et al., 2019), and mixed arm and leg representations in PMd (but not in M1) (Kurata, 1989). The close match between our results and macaque PMd provides compelling evidence of homology between human dorsal precentral gyrus and macaque PMd at single neuron and multi-unit resolution, and suggests that Brodmann area 4 in humans likely contains more somatotopically segregated (and less compositional) movement representations. However, only neural recordings from human central sulcus can provide a definitive answer.

Importantly, both participants had chronic tetraplegia (T7 had ALS and T5 had high level spinal cord injury), which raises the question of how whole-body tuning might generalize to people without tetraplegia. One would expect cortical remapping to occur after spinal cord injury; however, current models of remapping predict that the most adjacent intact body parts (in this case face and head) should become more dominant (Qi et al., 2000). Instead, we found in participant T5 that the arm and leg were more strongly represented than the face and head, suggesting that remapping may be limited in precentral gyrus. We also observed similar proportions of population-level tuning for face, head, arm and leg movements in participant T7, who had ALS, giving further confidence that intermixed tuning was not caused by remapping. Nevertheless, only neural recordings from able-bodied people can provide a definitive answer.

One additional consideration is the possibility that some of the whole-body tuning we observed was caused by small, inadvertent movements of the contralateral arm. However, we believe this is an unlikely explanation of our results. First, small generic movements of the contralateral arm induced by effort are unlikely to explain the rich, high-dimensional structure in the data (e.g. compositional coding). Second, BCI decoding performance increased when decoding additional effectors (due to better neural separability of the movements), which would not occur if tuning to the other effectors was caused by small, inadvertent movement of the contralateral arm.

A Compositional Neural Code for Movement

In one participant (T5), we characterized the structure of the neural code for movements across the whole body in one area of premotor cortex. We found that arm and leg movements were represented in a correlated way. Movements of the same body part on opposite sides of the body, and homologous movements of the arm and leg (e.g. hand grasp and toe curl), were significantly correlated in neural dimensions that coded for movement type. In other neural dimensions, the limb itself was represented largely independently of the movement. Together, activity in these neural dimensions forms a partially “compositional” code for movement that differs from a muscle-like representation.

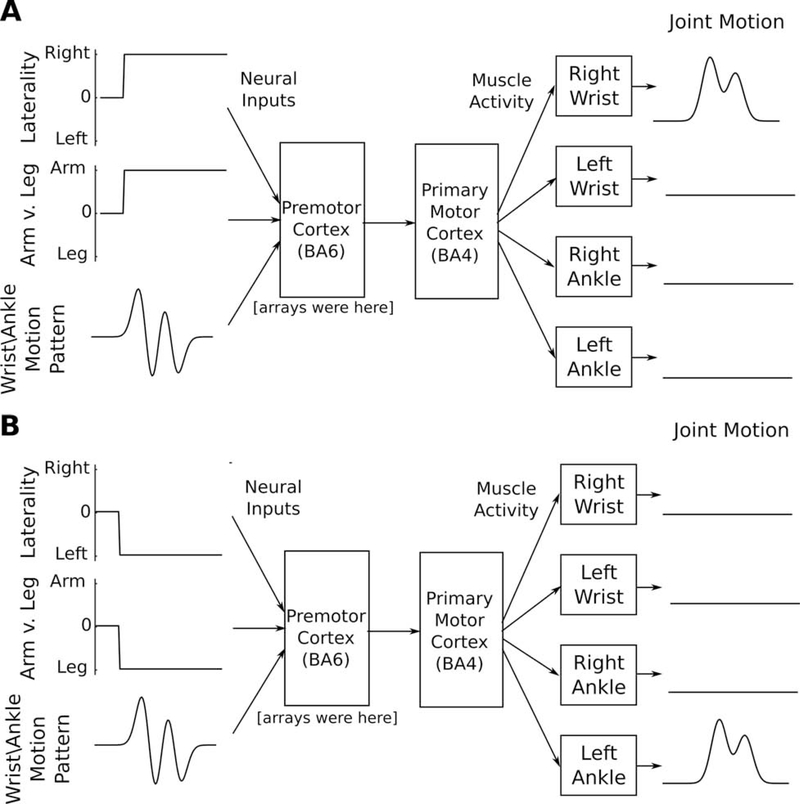

Compositionality provides a new conceptual framework for thinking about how the motor cortical system constructs movement. It can also explain why the entire body is represented in a single area of premotor cortex, since a whole-body compositional code might be useful for transferring motor skills to different limbs. Based on our findings, we propose a concrete neural mechanism for skill transfer that works as follows. Neural activity in movement-coding neural dimensions could be optimized through learning to produce the correct patterns to accomplish the task. Then, the skill could be transferred to another limb by changing only the activity in limb-coding dimensions, causing the movement activity to re-route to different muscles. We schematize this idea in Figure 7 and further concretize it with a neural network model (presented in Figure S7) that is capable of transferring motor skills across the arms using a compositional code. Behaviorally, motor skills such as sequences, rhythms and trajectories have been demonstrated to transfer from one arm to the other (Latash, 1999; Criscimagna-Hemminger et al., 2003; Shea et al., 2011), from one leg to the other (Morris et al., 2009), and from the arms to the legs (Christou and Rodriguez, 2008; Kelso and Zanone, 2002; Savin and Morton, 2008).

Figure 7. A compositional neural code may facilitate the transfer of motor skills across limbs.

(A) We speculate that premotor cortex (the site of our arrays) might receive input from upstream areas in the form of a “compositional” representation of movement where the limb is specified somewhat independently of the motion details. In this example, activity in the “laterality” and “arm vs. leg” input streams specify which of the four limbs should move, and activity in the “wrist/ankle motion pattern” input stream specifies the motion that should be performed (at a wrist or ankle joint). Premotor cortex and primary motor cortex would then convert this representation into limb-specific muscle patterns to achieve a desired joint motion.

(B) The same motion pattern could be transferred to any other wrist or ankle by changing activity only in the “laterality” and “arm vs. leg” dimensions, achieving immediate transfer without requiring relearning.

See Figure S7 for a concrete implementation of this hypothesis in a neural network model.

We hypothesize that precentral gyrus might be an intermediate node that helps transform a compositional representation of movement inherited from upstream areas into a more muscle-like representation required for motor control. Such an “in-between” role could explain why neural activity in precentral gyrus was not fully compositional, in the sense that correlation values were always less than one (they typically ranged from 0.5 to 0.7). Consistent with such a role, we also observed that the movement code shared across effectors was represented in an intrinsic coordinate frame (Figure S4). We expect that more rostral areas might encode motor actions in visuospatial coordinates or in more abstract codes that could enable skill transfer between non-homologous effectors.

Our results are consistent with previous fMRI and ECoG studies that found a correlated representation of ipsilateral and contralateral finger/arm movements on precentral gyrus (Bundy et al., 2018; Diedrichsen et al., 2013; Jin et al., 2016) and an intrinsic, effector-independent code for finger movements (Wiestler et al., 2014). A recent microelectrode study of human parietal cortex also found a correlated representation of ipsilateral and contralateral hand and shoulder movements (Zhang et al., 2017). Our study puts these results into a larger context of a two-part compositional coding scheme that includes all four limbs and contains a limb-coding component in addition to correlated movement-coding components. We also showed that neural activity in movement dimensions can be prepared independently of neural activity in limb-coding dimensions, lending more support to the idea of independent specification of the movement and the limb-to-be-moved.

We further tested the idea of compositionality by examining how neural activity changed when two effectors were moved simultaneously. Since a shared movement code cannot simultaneously specify two different movements, we predicted that the code would change during dual movement. Results showed that during dual movement, the neural representation for the secondary effector was suppressed and its tuning changed, becoming less correlated with the primary effector. Our results are consistent with prior behavioral work suggesting that bimanual movements are coded separately from unimanual movements (Nozaki et al., 2006; Yokoi et al., 2016), fMRI studies in people (Diedrichsen et al., 2013), and electrode recordings in macaques that also showed changes in neural tuning for bimanual arm movements (Ifft et al., 2013; Rokni et al., 2003). This study extends these findings to a broader set of effector pairings, revealing a rank ordering of effectors that determines which effector’s representation remains intact during simultaneous movement.

Our compositional coding results focused on the arms and legs. Future work is needed to test whether the representation of head and face movements also shares a common structure with arm and leg movements, and thus whether compositionality can explain the presence of head and face related activity in arm/hand area of premotor cortex. One could imagine that motor skills such as timing and intensity (e.g. finger-tapping vs. nose-wrinkling a certain rhythm) might indeed be able to be transferred between the face and the limbs, necessitating a movement code with shared representations between the limbs and the face. However, skills that are more tied to the morphology of the extremities would likely require a unique representation.

Motor skill transfer is an intriguing hypothesis that could explain why all four limbs are represented in one area of premotor cortex; however, it is not the only possible explanation. One alternative is that the observed activity is an epiphenomenon of “overflow” activity from connected networks that control other body parts. However, the idea of overflow by itself cannot explain why we observed compositional structure (wherein homologous body parts had linked representations, effector-related activity could be prepared independently of movement-related activity, etc.). It is also unclear why motor cortical activity would overflow to connected regions to such a large degree with no functional purpose. Nevertheless, future work is needed to test whether the compositional code is causally involved with movement construction as opposed to epiphenomenal.

Related to the idea of neural overflow is the behavioral finding of “motor overflow”: the phenomenon that arm or leg movements are sometimes accompanied by small, unintended mirror movements of the opposite arm or leg, even in healthy adults (Hoy et al., 2004). Compositional coding offers an explanation for this phenomenon. An incomplete transformation between a compositional code and muscle activity could cause the effector-independent movement command to leak to unintended effectors, resulting in homologous limbs making small mirror movements. One concrete prediction of our theory is that motor overflow should be observed from the arm to the leg, and vice versa, since these limbs are linked together in the compositional code. We are unaware of any studies which test this behavioral prediction in adults, although it has been observed in infants (Soska et al., 2012).

Whole-Body Intracortical Brain-Computer Interfaces

Our findings have important implications for intracortical brain-computer interfaces (iBCIs), since they open up the opportunity to decode movements across the entire body from just a small area of precentral gyrus. We showed that a discrete target selection iBCI could successfully decode targets across all four limbs accurately enough to improve performance relative to a single-effector approach. Using more limbs increased the neural separability of targets, enabling more targets to be presented (up to 32 targets at 95% accuracy, Supplemental Video 2). While promising, it is important to note that our iBCI system was intended only as a proof-of-concept to demonstrate the potential of whole-body decoding. It does not constitute a complete, clinically viable system that is robust enough to recalibrate rapidly as neural features change [e.g. (Jarosiewicz et al., 2015)], or to automatically switch off during task disengagement, which are important challenges to overcome for real-world deployment.

Here, we demonstrated one use case for whole-body decoding: discrete target selection. Other use cases could be to map multiple movements to different kinds of clicks for general purpose computer use (e.g. curl left toes for left click, curl right toes for right click, etc.), or even to restore continuous control of leg and arm movements across both sides of the body. While whole-body decoding could also be achieved by placing electrodes across the entire precentral gyrus of both hemispheres, this is a much more difficult surgical and technical proposition.

One potential limitation of decoding the whole body from a single area is the suppression of activity related to secondary effectors during simultaneous movement (see Figure 5). Nevertheless, simultaneous movements can still be decoded (we observed a mean offline decoding accuracy of 95% across all pairs of simultaneous movement). Moving forward, our results provide important design considerations for future iBCIs, which must consider the tradeoff between additional microelectrode placement and multi-effector decoding performance.

STAR Methods

Lead Contact and Materials Availability

Further information and requests for resources and reagents should be directed to and will be fulfilled by the Lead Contact, Frank Willett (fwillett@stanford.edu). This study did not generate new unique reagents.

Experimental Model and Subject Details

This study includes data from two participants (identified as T5 and T7), who gave informed consent and were enrolled in the BrainGate2 Neural Interface System clinical trial (ClinicalTrials.gov Identifier: NCT00912041, registered June 3, 2009). This pilot clinical trial was approved under an Investigational Device Exemption (IDE) by the US Food and Drug Administration (Investigational Device Exemption #G090003). Permission was also granted by the Institutional Review Boards of University Hospitals (protocol #04–12-17), Stanford University (protocol #20804), Partners Healthcare/Massachusetts General Hospital (2011P001036), Providence VA Medical Center (2011–009), and Brown University (0809992560). All research was performed in accordance with relevant guidelines/regulations.

Participant T7 was a right-handed man, 53 years old at the time of data collection, who was diagnosed with Amyotrophic Lateral Sclerosis (ALS) and had resultant motor impairment (functional rating scale ALSFRS-R of 17). In July 2013, T7 was implanted with two 96-channel intracortical microelectrode arrays (Blackrock Microsystems, Salt Lake City, UT; 1.5-mm electrode length) in the hand knob area of dominant precentral gyrus. Data are reported from post-implant day 24. At the time of data collection, T7 retained movement of the arm, leg, face and head. T7 was able to extend and flex the knee and ankle normally, and make all requested head and face movements normally, but had more limited range of motion in the arm and leg for some movements (Table S2 reports neurologic exam results). T7 is no longer enrolled in the trial and the data from T7 were collected before the present study was conceived.

Participant T5 is a right-handed man, 65 years old at the time of data collection, with a C4 ASIA C spinal cord injury that occurred approximately 9 years prior to study enrollment. In August 2016, participant T5 was implanted with two 96-channel intracortical microelectrode arrays (Blackrock Microsystems, Salt Lake City, UT; 1.5-mm electrode length) in the hand knob area of dominant precentral gyrus. Data are reported from post-implant days 579–961 (Table S1). T5 retained full movement of the head and face and the ability to shrug his shoulders. Below the injury, T5 retained some very limited voluntary motion of the arms and legs that was largely restricted to the left elbow. Some micromotions of the right and left hands were also visible, along with extremely small but reliable twitching of the feet and toes (Table S2 reports neurologic exam results).

The hand knob area in both participants was identified by pre-operative magnetic resonance imaging (MRI). Figure 1 shows array placement locations registered to MRI-derived brain anatomy.

Method Details

Session Structure and Cued Movement Tasks

Neural data were recorded in 3–5 hour “sessions” on scheduled days. During the sessions, participants sat upright in a wheelchair that supported their backs and legs. A computer monitor placed in front of the participants displayed text and/or colored shapes to indicate which movement to make and when. Data were collected in a series of 2–10 minute “blocks” consisting of an uninterrupted series of cued movements; in between these blocks, participants were encouraged to rest as needed. The software required for running the experimental tasks, recording data, and implementing the closed-loop decoding system was developed using MATLAB & Simulink (MathWorks).

The data from participant T7 were collected in a single session consisting of a series of 2-minute blocks containing 20 repetitions of one set of paired movements. These paired movements were designed to engage the same joint(s) but in different directions; for example: hand open/close, wrist flexion/extension, head left/right, etc. Every three seconds, the text on the screen alternated to instruct the other paired movement (e.g. as in Figure 1A) and T7 responded as soon as he was able.

The data from T5 were collected across 19 sessions (Table S1). All sessions followed a simple instructed delay paradigm (like that shown in Figure 1A) except for the closed-loop discrete decoding sessions (described in their own section below). For text-cued tasks (Figures 1–5), during the instructed delay period a red square and text appeared in the center of the screen indicating to T5 that he should prepare to make the specified movement. The instructed delay period lasted a random amount of time that was drawn from an exponential distribution; values that fell outside of a minimum/maximum range were re-drawn. Maximum and minimum delay times varied from session to session but were within 1.4 to 3 seconds. After the delay time, the square turned green and the text indicating the movement changed to “Go”, at which point T5 made the movement immediately. T5 was told to continue attempting to make the movement (for arm & leg movements) or to hold the posture of the completed movement (for face & head movements) until the text changed to “Return”, at which point T5 relaxed and returned to a neutral posture. The movement and return periods lasted 1.5 seconds each. The spatially cued movement task (Figure S4) followed the same structure, but used colored targets instead of text to specify which movement was supposed to be made (as shown in Figure S4A).
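The delay-period sampling described above (exponential draws, re-drawn when they fall outside a minimum/maximum range) can be sketched as below. This is an illustrative Python sketch rather than the MATLAB/Simulink task code; the mean of the exponential distribution is not stated in the text, so `mean_s=2.0` is a placeholder assumption, and `draw_delay` is a hypothetical name.

```python
import random

def draw_delay(mean_s, min_s, max_s, rng):
    """Draw an exponentially distributed delay (seconds), re-drawing
    until the value falls inside [min_s, max_s]."""
    while True:
        delay = rng.expovariate(1.0 / mean_s)  # rate = 1 / mean
        if min_s <= delay <= max_s:
            return delay

# Assumed values: the 1.4 to 3 s range comes from the text; the mean does not.
rng = random.Random(0)
delays = [draw_delay(mean_s=2.0, min_s=1.4, max_s=3.0, rng=rng) for _ in range(1000)]
```

Re-drawing out-of-range values yields a truncated exponential distribution, which keeps the go cue hard to anticipate while bounding the trial length.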

Neural Data Processing

Neural signals were recorded from the microelectrode arrays using the NeuroPort™ system (Blackrock Microsystems) [(Hochberg et al., 2006) describes the basic setup]. Neural signals were analog filtered from 0.3 Hz to 7.5 kHz and digitized at 30 kHz (250 nV resolution). Next, a common average reference filter was applied that subtracted the average signal across the array from every electrode in order to reduce common mode noise. Finally, a digital bandpass filter from 250 to 3000 Hz was applied to each electrode before spike detection.

For threshold crossing detection, we used a −4.5 × RMS threshold applied to each electrode, where RMS is the electrode-specific root mean square (standard deviation) of the voltage time series recorded on that electrode. For the analysis of waveform-sorted single neurons (which was only done for Figure S3), neurons were sorted manually using Plexon Offline Spike Sorter v3. Only waveform clusters that appeared in an unambiguous, highly separable cluster in principal component space were included.
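Threshold crossing detection of this kind can be sketched as follows. This is a simplified illustration, not the recording system's implementation: it assumes the filtered voltage trace is approximately zero-mean (so RMS equals the standard deviation), marks a crossing at the first sample that falls below the threshold, and uses a hypothetical function name and a synthetic trace.

```python
import math

def threshold_crossings(voltage, multiplier=-4.5):
    """Return sample indices where the voltage first drops below
    multiplier * RMS, with RMS computed from this electrode's own trace."""
    rms = math.sqrt(sum(v * v for v in voltage) / len(voltage))
    threshold = multiplier * rms
    return [
        i for i in range(1, len(voltage))
        if voltage[i] < threshold <= voltage[i - 1]  # downward crossing only
    ]

# Synthetic trace: unit-amplitude background with two large negative deflections.
trace = [1.0, -1.0] * 500
trace[100], trace[101] = -10.0, -8.0   # one event spanning two samples
trace[600] = -10.0                     # a second event
events = threshold_crossings(trace)
```

Because only the downward transition is counted, the two-sample deflection at indices 100 and 101 registers as a single event.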

Threshold crossing times (and single-unit spike times) were binned into 10 ms bins (for T5) or 20 ms bins (for T7) and z-scored to ensure that electrodes with high firing rates did not overly influence the population-level results. Different bin widths were used for T5 and T7 because the task data was collected with different computer systems at different sampling rates. Z-scoring was accomplished by first subtracting, in a block-wise manner, the mean count over all bins within each block. Subtracting the mean within each block helps to counteract slow drifts in the firing rate of electrodes and was especially important for the T7 data, since it was collected in a block-wise fashion. For this dataset, mean subtraction ensures that spurious drifts in firing rate did not artificially inflate the classification performance shown in Figure 1E (by helping to distinguish movements from different blocks). After mean-subtraction, the binned counts were divided by the sample standard deviation computed using all bins across all blocks.
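The block-wise z-scoring procedure can be sketched for a single electrode as below. This is a minimal illustration under one reading of the text: the standard deviation is computed on the mean-subtracted counts pooled over all blocks (the text does not say whether raw or mean-subtracted counts were used), and `zscore_blockwise` is a hypothetical name.

```python
import statistics

def zscore_blockwise(blocks):
    """blocks: list of blocks, each a list of binned counts for one electrode.
    Subtract each block's own mean, then divide every bin by the sample
    standard deviation computed over all bins from all blocks."""
    centered = []
    for block in blocks:
        block_mean = statistics.fmean(block)
        centered.append([count - block_mean for count in block])
    pooled_sd = statistics.stdev([c for block in centered for c in block])
    return [[c / pooled_sd for c in block] for block in centered]

# Two blocks with identical modulation but a slow drift in baseline rate.
z = zscore_blockwise([[0, 2, 4], [10, 12, 14]])
```

In this toy example the baseline drift between blocks is removed, so both blocks map to the same z-scored values and a classifier could not use the drift to tell them apart.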

Finally, electrodes with firing rates < 1 Hz over all time steps were excluded from further analysis in order to denoise population-level results. For some analyses (e.g. dPCA in Figure 3), the binned spike count time series were convolved with a Gaussian kernel (sd = 30 ms) to reduce high frequency noise.

Gaussian Naïve Bayes Classification

For both offline (e.g. Figure 1E) and online classification (Figure 6 F–G), we used a Gaussian naïve Bayes classifier to classify firing rate vectors. We chose to use a Gaussian naïve Bayes classifier because it is a simple and straightforward method that performed well enough to demonstrate our key points. It is possible that more advanced decoding architectures may improve performance even further.

Firing rate vectors were computed by counting the number of threshold crossings that occurred within a fixed window of time for each electrode and then dividing by the window width. To classify the firing rate vectors, we computed the log likelihood of observing that vector for each possible class and then chose the class with the largest log likelihood. We assumed that each element of the firing rate vector was normally distributed and was statistically independent from each other element. We also assumed that the variance of each Gaussian distribution was the same across all classes. Under these assumptions, the following quantity is proportional to the log likelihood of observing firing rate vector x under the class Ck (Bishop, 2006):

$$\log p(\mathbf{x} \mid C_k) \;\propto\; -\sum_{i=1}^{N} \frac{(x_i - u_{i,k})^2}{\sigma_i^2}$$

Here, $N$ is the number of electrodes, $x_i$ is the $i$th element of the firing rate vector $\mathbf{x}$ (corresponding to electrode $i$’s firing rate), $u_{i,k}$ is the mean for electrode $i$ under class $k$, and $\sigma_i$ is the standard deviation for electrode $i$ (under all classes). To classify, we computed this quantity for each class and chose the class with the largest value. The class-specific means were estimated using the sample mean across all available training data; likewise, the variances were estimated using the pooled variances across all classes.
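A classifier of this form can be sketched in a few lines. The sketch below is illustrative and is not the study's MATLAB implementation; it assumes equal class priors, a pooled variance with (number of trials minus number of classes) degrees of freedom, and nonzero variance on every electrode. The function names and toy data are hypothetical.

```python
def fit(X, y):
    """Estimate per-class means and a per-electrode variance pooled across classes."""
    classes = sorted(set(y))
    n_feat = len(X[0])
    means = {}
    for k in classes:
        rows = [x for x, label in zip(X, y) if label == k]
        means[k] = [sum(r[i] for r in rows) / len(rows) for i in range(n_feat)]
    # Pooled variance: squared residuals around each trial's own class mean.
    resid = [0.0] * n_feat
    for x, label in zip(X, y):
        for i in range(n_feat):
            resid[i] += (x[i] - means[label][i]) ** 2
    dof = len(X) - len(classes)
    return means, [r / dof for r in resid]

def score(x, mean_k, variances):
    """Quantity proportional to the log likelihood under a shared-variance Gaussian."""
    return -sum((xi - m) ** 2 / v for xi, m, v in zip(x, mean_k, variances))

def classify(x, means, variances):
    """Pick the class with the largest score."""
    return max(means, key=lambda k: score(x, means[k], variances))

# Toy data: two electrodes, two attempted movements.
X = [[1.0, 5.0], [2.0, 6.0], [8.0, 1.0], [9.0, 2.0]]
y = ["wrist_up", "wrist_up", "ankle_up", "ankle_up"]
means, variances = fit(X, y)
pred_a = classify([2.0, 5.0], means, variances)
pred_b = classify([8.0, 2.0], means, variances)
```

Because the variance is shared across classes, the normalization terms of the Gaussians cancel and classification reduces to choosing the nearest class mean in variance-scaled coordinates.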

Closed-Loop Discrete Decoding

All closed-loop discrete decoding experiments used a “time-locked” trial structure where each trial lasted for 1 second and no pauses occurred between trials. At the end of each trial, the closed-loop discrete decoding system classified the participant’s attempted movement. If the movement was correctly classified, a “success” sound played; otherwise, a “failure” sound played. After each trial ended, a new target appeared and the next trial began immediately (see Supplemental Videos 1 and 2 for example trials).

We used a Gaussian naïve Bayes classifier (defined above) to classify mean firing rate vectors computed using a fixed window of time for each trial. This window was optimized for each task and session as part of the process of calibrating the classifier; the window typically fell between 300 and 1000 ms. To optimize the window, we performed an offline grid search across all possible start and end times for the window in 50 ms increments from 0 ms to 1000 ms. Ten-fold cross-validation was used to estimate the achieved bit rate that would result from using each window. The window with the largest achieved bit rate was then chosen.
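The grid search over window start and end times can be sketched as follows. This is a schematic illustration only: `score_fn` stands in for the ten-fold cross-validated achieved bit rate, which is not implemented here, and `toy_score` is a made-up placeholder so the example runs on its own.

```python
def candidate_windows(step_ms=50, trial_ms=1000):
    """All (start, end) pairs on a 50 ms grid from 0 to 1000 ms with start < end."""
    edges = range(0, trial_ms + 1, step_ms)
    return [(start, end) for start in edges for end in edges if start < end]

def best_window(score_fn, windows):
    """Return the window with the largest score."""
    return max(windows, key=score_fn)

def toy_score(window):
    # Placeholder for a cross-validated bit rate; peaks at (300, 1000) by construction.
    start, end = window
    return -abs(start - 300) - abs(end - 1000)

windows = candidate_windows()
best = best_window(toy_score, windows)
```

With 21 grid points there are 210 candidate windows, so an exhaustive search with cross-validation remains cheap enough to run during classifier calibration.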

For each online discrete decoding session, one or two target configurations were tested. For each target configuration, one 5 minute open-loop block of data was first collected with which to calibrate an initial classifier. Then, we proceeded with a series of 5 minute closed-loop blocks to allow participant T5 to practice with that target configuration. After each closed-loop block, we re-calibrated the classifier using the preceding two blocks of data. We continued until we determined that T5’s performance had plateaued and he was comfortable with the task. After this decision was made, we collected a series of five, 3-minute “performance” blocks with the classifier held fixed; the average accuracy and achieved bit rate obtained during these blocks were reported in Figure 6. In addition to the sessions reported here, T5 benefitted from 6 additional sessions of practice wherein we developed/tested the BCI system and tried different target configurations.

Achieved bit rate is a conservative, clinically-motivated metric of information throughput (Nuyujukian et al., 2015) and was computed with the following formula:

$$B = \frac{\log_2(N-1)\,\max(S_c - S_i,\ 0)}{t}$$

where $N$ is the number of targets, $S_c$ is the number of correct selections, $S_i$ is the number of incorrect selections, and $t$ is the total time elapsed. Achieved bit rate assumes that every incorrect selection must be followed by the correct selection of a delete key to undo the error, thus heavily penalizing low accuracies that would be hard for participants to work with in practice.
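As a worked illustration, the metric can be computed as below, using the form given by Nuyujukian et al. (2015): log2(N − 1) bits per net correct selection, where net correct selections are the correct minus the incorrect selections (floored at zero), divided by elapsed time. The function name and the example numbers are hypothetical and are not results from this study.

```python
import math

def achieved_bit_rate(n_targets, n_correct, n_incorrect, elapsed_s):
    """Achieved bit rate in bits per second.
    One target is treated as a delete key, hence log2(n_targets - 1)."""
    net_selections = max(n_correct - n_incorrect, 0)
    return math.log2(n_targets - 1) * net_selections / elapsed_s

# Hypothetical block: 9 targets, 180 one-second trials, 170 correct and 10 incorrect.
rate = achieved_bit_rate(n_targets=9, n_correct=170, n_incorrect=10, elapsed_s=180.0)

# At or below 50% accuracy the metric is zero, reflecting the delete-key penalty.
rate_low = achieved_bit_rate(n_targets=9, n_correct=80, n_incorrect=100, elapsed_s=180.0)
```

In the first case the rate is 3 × 160 / 180, about 2.67 bits per second, even though raw accuracy is about 94%.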

For the online results in Figure 6 F–G, we used an offline simulation to find the optimal number of targets for each layout in order to maximize the achieved bit rate. To do so, we used an exploratory offline dataset with all four effectors (right & left hand, right & left foot) and with eight targets per effector. We then fit a simple linear encoding model of target direction to each effector, using only the data corresponding to that effector’s movements:

$$f = E_k \begin{bmatrix} 1 \\ a\cos\theta \\ a\sin\theta \end{bmatrix} + \varepsilon$$

Here, $f$ is the N × 1 firing rate vector for a single trial, $E_k$ is an N × 3 matrix of mean firing rates (first column) and directional tuning coefficients (second and third columns) for effector $k$, $\theta$ is the angle of the target, $\varepsilon$ is an N × 1 noise vector, and $a$ is a scalar gain factor. The noise vector $\varepsilon$ is normally distributed with covariance matrix $\Sigma_k$. $E_k$ was fit to the data using least squares regression for each effector independently, and $\Sigma_k$ was estimated using the sample error covariance of the linear model. To counteract overfitting in $E_k$ (which could overestimate the amount of tuning present), we searched for the gain factor $a$ which led to the best match in predicted accuracy for the number of targets present in the exploratory dataset (eight). These $a$ values were < 1 and down-weighted the tuning.
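The fitting and simulation steps can be sketched with NumPy as follows. This is an illustration under stated assumptions, not the authors' implementation: it assumes the model f = E_k [1, a cos θ, a sin θ]ᵀ + ε, with the gain a scaling only the two tuning columns, and all function names are hypothetical.

```python
import numpy as np


def design_matrix(theta, gain=1.0):
    """One row [1, a*cos(theta), a*sin(theta)] per trial."""
    theta = np.asarray(theta, dtype=float)
    return np.column_stack(
        [np.ones_like(theta), gain * np.cos(theta), gain * np.sin(theta)]
    )


def fit_encoding_model(rates, theta):
    """Least-squares fit for one effector.

    rates: (trials x channels) firing rates; theta: target angle per trial.
    Returns E (channels x 3) and the residual covariance (channels x channels).
    """
    X = design_matrix(theta)                          # trials x 3, gain fixed at 1
    coef, *_ = np.linalg.lstsq(X, rates, rcond=None)  # 3 x channels
    resid = rates - X @ coef
    sigma = np.cov(resid, rowvar=False)               # sample covariance of residuals
    return coef.T, sigma


def simulate_trials(E, sigma, theta, gain, rng):
    """Draw simulated firing rates from the fitted model with tuning gain `gain`."""
    mean = design_matrix(theta, gain) @ E.T           # trials x channels
    noise = rng.multivariate_normal(np.zeros(E.shape[0]), sigma, size=len(theta))
    return mean + noise
```

In this sketch, a gain below 1 passed to `simulate_trials` shrinks the directional tuning while leaving the baseline rates and the noise untouched, which is the role the text assigns to a.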

Once the model was fit, we varied the number of possible targets from 2 to 10. For each target layout, we simulated a new set of trials using the model and predicted decoder performance by applying a Gaussian naïve Bayes classifier to the simulated data using ten-fold cross-validation. Using this method, we predicted that the optimal number of targets was equal to 6 for a single effector (right hand only), 6 each for two effectors (right & left hand), and 4 each for four effectors (right & left hand, right & left foot) when the trial time was constrained to be one second. Encouragingly, the optimal number of targets predicted by this method for a single effector (6) matched online data (Figure 6B).

Quantification and Statistical Analysis

The quantitative methods used for data analysis are detailed below in this section, organized mainly by the Figure for which they were used. Statistical analyses are reported in the Results and also described in detail below in this section. In general, 95% confidence intervals and/or p-values < 0.05 were used to define statistical significance. We used t-tests to compare the means of two distributions. We used bootstrap resampling, jackknifing or parametric assumptions of normality to generate 95% confidence intervals of the mean. Quantitative approaches were not used to determine if the data met the assumptions of the t-tests or normality. The statistical units and N for each test are detailed below.

Cross-Validated Estimator of Neural Distance

Measuring the distance between two distributions of firing rate vectors is useful for quantifying the amount of neural modulation caused by a particular movement. However, measuring this in an unbiased way in the presence of noise is a nontrivial problem. Here, we motivate the problem and describe the methods we used to obtain conservative estimates of distance that substantially reduce bias by using cross-validation (see our code repository https://github.com/fwillett/cvVectorStats for an implementation and simulated tests that validate the approach).

To understand the problem, consider the measurements in Figure 1C. The bar heights reflect the distance between two multivariate distributions: a distribution of firing rate vectors corresponding to the “do nothing” condition (distribution 1), and a distribution of firing rate vectors corresponding to the movement in question (distribution 2). Let us denote the firing rate vector observed on trial $i$ for distribution 1 as $x_{i1}$ and for distribution 2 as $x_{i2}$. The sample means are

$$\hat{u}_1 = \frac{1}{N}\sum_{i=1}^{N} x_{i1}, \qquad \hat{u}_2 = \frac{1}{N}\sum_{i=1}^{N} x_{i2},$$

where $N$ is the number of trials observed from each distribution.

The sample means differ from the true means $u_1$ and $u_2$ by some sampling error. As a result, the difference in sample means also contains error: $\hat{u}_1 - \hat{u}_2 = u_1 - u_2 + \varepsilon$ for some error vector $\varepsilon$.

One simple method for estimating the distance between distributions 1 and 2 would be to take the Euclidean norm of the difference in sample means: $\lVert \hat{u}_1 - \hat{u}_2 \rVert$. However, the sampling error inherent in $\hat{u}_1 - \hat{u}_2$ causes this metric to be biased upwards. Expanding terms, we have: $\lVert \hat{u}_1 - \hat{u}_2 \rVert^2 = \lVert u_1 - u_2 \rVert^2 + 2\,(u_1 - u_2)\cdot\varepsilon + \varepsilon\cdot\varepsilon$, which is larger than $\lVert u_1 - u_2 \rVert^2$ on average since $\varepsilon\cdot\varepsilon > 0$ (the cross term averages to zero). One intuitive way to see this is to consider the case where distribution 1 and distribution 2 are identical. The sample means for each distribution will always differ from each other (due to sampling error), and hence there will always be some positive distance between them even though the true difference in means is zero.
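The upward bias is easy to reproduce in simulation. The sketch below is illustrative only (the trial counts, channel counts, and function names are arbitrary choices, not values from the study): it draws both distributions from the same unit-variance Gaussian, so the true distance is zero, and averages the naive distance over many repeats.

```python
import numpy as np


def naive_distance(x1, x2):
    """Euclidean norm of the difference in sample means (trials x channels inputs)."""
    return float(np.linalg.norm(x1.mean(axis=0) - x2.mean(axis=0)))


def mean_naive_distance_under_null(n_trials, n_channels, n_repeats, seed):
    """Average naive distance when both distributions are identical (true distance 0)."""
    rng = np.random.default_rng(seed)
    dists = [
        naive_distance(
            rng.standard_normal((n_trials, n_channels)),
            rng.standard_normal((n_trials, n_channels)),
        )
        for _ in range(n_repeats)
    ]
    return float(np.mean(dists))
```

With 20 trials and 100 channels, each channel's difference in sample means has variance 2/20, so the expected squared naive distance is 100 × 0.1 = 10 and the naive distance comes out near √10 ≈ 3.2 even though the true distance is zero.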

Fortunately, there is a straightforward way to achieve a practically bias-free estimate of distance. First, by following the approach laid out in (Allefeld and Haynes, 2014), we can estimate the squared distance by averaging together a series of unbiased estimates, each made by leaving one trial out. The following is an unbiased estimate of the squared distance:

$$\widehat{d^2} = \frac{1}{N}\sum_{i=1}^{N} \hat{\Delta}_i \cdot \hat{\Delta}_{\setminus i}$$

Here, $\hat{\Delta}_i = x_{i1} - x_{i2}$ is the sample estimate of the difference in means using only the pair of samples from trial $i$ and $\hat{\Delta}_{\setminus i} = \frac{1}{N-1}\sum_{j \neq i}(x_{j1} - x_{j2})$ is the sample estimate of the difference in means using all trials except $i$. Note that this cross-validation technique has also been used elsewhere as part of the “crossnobis” estimator (Diedrichsen and Kriegeskorte, 2017).

Since $\hat{\Delta}_i$ and $\hat{\Delta}_{\setminus i}$ contain no overlapping trials, they are statistically independent from each other. Statistical independence implies that the expectation of their product is equal to the product of their expectations. Taking the expectation, we can show that it is an unbiased measure:

$$E\big[\hat{\Delta}_i \cdot \hat{\Delta}_{\setminus i}\big] = E\big[\hat{\Delta}_i\big] \cdot E\big[\hat{\Delta}_{\setminus i}\big] = (u_1 - u_2)\cdot(u_1 - u_2) = \lVert u_1 - u_2 \rVert^2$$

Note that this cross-validation approach will work for computing the squared norm of any vector quantity in an unbiased way. For example, the vector in question could be a vector of coefficients from a linear tuning model or, more simply, the mean of a single distribution.
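A minimal NumPy sketch of the leave-one-out estimate follows. It is not the code from the cvVectorStats repository; it assumes equal trial counts with trials paired by index, and the function name is illustrative.

```python
import numpy as np


def cv_squared_distance(x1, x2):
    """Cross-validated estimate of the squared distance between two means.

    x1, x2: (trials x channels) arrays with the same number of trials N.
    Averages, over trials i, the dot product between the trial-i difference
    and the mean difference computed from all other trials.
    """
    diffs = x1 - x2                                  # per-trial difference, N x C
    n = diffs.shape[0]
    if n < 2:
        raise ValueError("need at least two trials per distribution")
    loo_mean = (diffs.sum(axis=0) - diffs) / (n - 1)  # mean difference excluding trial i
    return float(np.mean(np.sum(diffs * loo_mean, axis=1)))
```

Because each product pairs a held-out trial with an estimate built from the remaining trials, the noise terms are independent and cancel on average. Individual estimates can therefore be negative when the true distance is small.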

Although squared distance can be used as a measure of neural modulation, we find ordinary (Euclidean) distance to be a much more intuitive metric (e.g. when using Euclidean distance, if the neural distance is twice as large, then there is twice as much firing rate change). Unfortunately, taking the square root of the above quantity does not immediately yield an unbiased measure of Euclidean distance, since it can be negative. Nevertheless, it is possible to construct a conservative, largely unbiased estimator of Euclidean distance by taking the square root of the absolute value while preserving the sign: $\hat{d} = \operatorname{sign}\big(\widehat{d^2}\big)\sqrt{\big|\widehat{d^2}\big|}$, where $\widehat{d^2}$ is the cross-validated estimate of squared distance. This square root estimator substantially reduces (practically eliminates) bias and is far more robust to sampling error than a naïve approach of taking the Euclidean norm of the sample estimate. We found that except in cases of very high sampling error, the square root estimator’s expectation was equal to the true value. Importantly, in cases where some bias was present, the estimator was conservative (see https://github.com/fwillett/cvVectorStats for simulations); since it is conservative, significantly positive distances can be safely interpreted as meaningful differences in neural activity.
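The sign-preserving square root can be sketched as follows. The helper recomputes the leave-one-out squared distance so that the block is self-contained; the names are illustrative and the code is not taken from the cvVectorStats repository.

```python
import numpy as np


def signed_sqrt(value):
    """Square root of the absolute value, keeping the original sign."""
    return float(np.sign(value) * np.sqrt(np.abs(value)))


def cv_distance(x1, x2):
    """Cross-validated Euclidean distance between two means.

    x1, x2: (trials x channels) arrays with equal trial counts, paired by index.
    """
    diffs = x1 - x2
    n = diffs.shape[0]
    loo_mean = (diffs.sum(axis=0) - diffs) / (n - 1)  # leave-one-out mean difference
    d2 = np.mean(np.sum(diffs * loo_mean, axis=1))     # may be negative
    return signed_sqrt(d2)
```

Keeping the sign means that estimates from pure noise scatter around zero instead of piling up on the positive side, which is what makes a clearly positive value informative.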

Note that the above method assumes that there are an equal number of observations from each distribution (N observations from each), but the same technique can be applied for unequal numbers of observations by splitting the data into folds of varying sizes (https://github.com/fwillett/cvVectorStats).

Cross-Validated Angles and Correlations

In the above section, we described our approach for reducing bias when estimating the distance between two firing rate distributions. We also extended this approach to reduce the bias of correlation and angle measurements, which we describe here (see our code repository at https://github.com/fwillett/cvVectorStats for an implementation and simulated tests that validate the approach).

When computing the angle between mean firing rate vectors (in Figure 5F–G), the sample estimate is:

$$\cos\theta = \frac{\hat{u}_1 \cdot \hat{u}_2}{\lVert \hat{u}_1 \rVert\,\lVert \hat{u}_2 \rVert}$$

Here, $\hat{u}_1$ and $\hat{u}_2$ are the sample estimates of the mean firing rate vectors for distribution 1 and 2. The problem here is that $\lVert \hat{u}_1 \rVert$ and $\lVert \hat{u}_2 \rVert$ are biased upwards because they contain sampling error. This causes cos θ to appear smaller than it truly is, making the vectors appear more dissimilar. The larger the uncertainty (smaller sample sizes or smaller vectors), the greater this effect will be.

By replacing $\lVert \hat{u}_1 \rVert$ and $\lVert \hat{u}_2 \rVert$ with bias-reduced estimators of vector norm, this effect can be eliminated. For example, as shown in the above section, $\lVert u_1 \rVert^2$ can be estimated in an unbiased way by computing $\frac{1}{N}\sum_{i=1}^{N} \hat{u}_{1,i} \cdot \hat{u}_{1,\setminus i}$, where $\hat{u}_{1,i}$ is the sample estimate of $u_1$ using only trial $i$ and $\hat{u}_{1,\setminus i}$ is the sample estimate of $u_1$ using all trials except $i$. $\lVert u_1 \rVert$ can then be estimated by applying the sign-preserving square root transform.

The same technique of substituting bias-reduced estimators into the denominator can be applied to greatly reduce bias when estimating correlations between vector means (as in Figure 4) or vectors of tuning coefficients (as in Figure S4). To see this, note that the equation $\cos\theta = \hat{u}_1 \cdot \hat{u}_2 / (\lVert \hat{u}_1 \rVert\,\lVert \hat{u}_2 \rVert)$ estimates the correlation between two vectors $u_1$ and $u_2$ as long as $\hat{u}_1$ and $\hat{u}_2$ are taken to be the sample estimates of the centered vectors.
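A sketch of the bias-reduced cosine and correlation is given below. It assumes that the two sets of trials are independent (so the dot product of the sample means needs no correction) and that "centered" means subtracting each vector's mean across channels. Returning NaN when a cross-validated norm is not positive is a choice made for this illustration. None of this is taken from the cvVectorStats code.

```python
import numpy as np


def cv_norm(x):
    """Cross-validated norm of the mean of x (trials x channels)."""
    n = x.shape[0]
    loo_mean = (x.sum(axis=0) - x) / (n - 1)       # mean excluding trial i
    sq = np.mean(np.sum(x * loo_mean, axis=1))     # leave-one-out squared norm
    return float(np.sign(sq) * np.sqrt(np.abs(sq)))


def cv_cosine(x1, x2):
    """Cosine of the angle between two mean vectors, with bias-reduced norms."""
    n1, n2 = cv_norm(x1), cv_norm(x2)
    if n1 <= 0 or n2 <= 0:
        return float("nan")                        # norms too noisy to define an angle
    return float(x1.mean(axis=0) @ x2.mean(axis=0) / (n1 * n2))


def cv_correlation(x1, x2):
    """Correlation between two mean vectors: cosine of the centered vectors."""
    c1 = x1 - x1.mean(axis=1, keepdims=True)       # center each trial across channels
    c2 = x2 - x2.mean(axis=1, keepdims=True)
    return cv_cosine(c1, c2)
```

Centering every trial across channels is equivalent to centering the mean vector itself, because both operations are linear.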

Figure 1 Methods

The datasets presented in this figure (10.22.2018 and 08.23.2013) consisted of 32 trials per movement for T5 and 19 trials per movement for T7.

In Figure 1C, 95% confidence intervals of the mean were computed by assuming that the data are normally distributed.

In Figure 1D, we used the bias-reducing, cross-validated estimator of distance to compute the height of each bar. To compute the confidence intervals, we used a jackknife procedure as described in (Severiano et al., 2011). We found that the jackknife produced more accurate confidence intervals than bootstrapping (which we confirmed via simulation), since the cross-validated distance metric is biased upwards when resampling identical data points (because identical data points are more aligned with each other). This effect is similar to that noted in (Severiano et al., 2011), where the jackknife was also found to outperform the bootstrap.
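For orientation, a generic delete-one jackknife confidence interval can be sketched as follows. This is the textbook jackknife standard error combined with a normal approximation, which is an assumption of this sketch; the exact procedure of Severiano et al. (2011) may differ in its details. The `estimator` argument is a hypothetical callable that takes two (trials x channels) arrays and returns a scalar.

```python
import numpy as np
from statistics import NormalDist


def jackknife_ci(x1, x2, estimator, alpha=0.05):
    """Delete-one jackknife confidence interval for estimator(x1, x2).

    Trial i is removed from both arrays at once (paired trials assumed).
    Returns (lower, upper) using a normal approximation.
    """
    n = x1.shape[0]
    full = estimator(x1, x2)
    # Re-compute the statistic with each trial left out in turn.
    loo = np.array(
        [estimator(np.delete(x1, i, axis=0), np.delete(x2, i, axis=0)) for i in range(n)]
    )
    # Standard jackknife standard error.
    se = np.sqrt((n - 1) / n * np.sum((loo - loo.mean()) ** 2))
    z = NormalDist().inv_cdf(1 - alpha / 2)
    return float(full - z * se), float(full + z * se)
```

Unlike the bootstrap, the jackknife never duplicates a trial, so it avoids the situation described above in which a held-out trial is compared against an identical copy of itself.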

In Figure 1D, the small circles represent single trial firing rate vectors projected onto a “difference axis” consisting of the difference between two distributions of firing rate vectors. For T5, the two distributions correspond to the “do nothing” control condition and the movement condition of interest. The light circles are the single trial projections of the “do nothing” activity onto this axis and the dark circles are the single trial projections of the movement activity. For T7, the two distributions correspond to each of the paired movements for the pair of interest, and the light and dark circles each correspond to one of the movements in the pair. The small circles were jittered along the x-axis for ease of visualization.

We used cross-validation to project each trial’s firing rate vector onto a line connecting the two distributions in question. For each trial, we first estimated the line connecting the two distributions using all other trials by computing $v = (\hat{u}_2 - \hat{u}_1)/d$, where $\hat{u}_1$ is the sample mean for distribution 1, $\hat{u}_2$ is the sample mean for distribution 2, and $d$ is the scalar distance between the distributions found using the cross-validated estimator. We then projected the held-out trial’s firing rate vector onto $v$ by using the dot product.
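The projection can be sketched as below. Several details are assumptions of this sketch rather than statements from the text: the axis is taken as v = (û2 − û1)/d, so distribution 2 projects to larger values; trial i is held out from both distributions at once; and a non-positive cross-validated distance raises an error because the axis is then undefined.

```python
import numpy as np


def cv_distance(x1, x2):
    """Cross-validated distance between means (sign-preserving square root)."""
    diffs = x1 - x2
    n = diffs.shape[0]
    loo_mean = (diffs.sum(axis=0) - diffs) / (n - 1)
    d2 = np.mean(np.sum(diffs * loo_mean, axis=1))
    return float(np.sign(d2) * np.sqrt(np.abs(d2)))


def cv_projections(x1, x2):
    """Project each held-out trial onto an axis estimated from all other trials.

    x1, x2: (trials x channels) arrays with equal trial counts.
    Returns two arrays with one projection per trial.
    """
    n = x1.shape[0]
    proj1, proj2 = np.empty(n), np.empty(n)
    for i in range(n):
        keep = np.arange(n) != i                   # all trials except i
        tr1, tr2 = x1[keep], x2[keep]
        d = cv_distance(tr1, tr2)
        if d <= 0:
            raise ValueError("cross-validated distance is not positive")
        v = (tr2.mean(axis=0) - tr1.mean(axis=0)) / d
        proj1[i] = x1[i] @ v
        proj2[i] = x2[i] @ v
    return proj1, proj2
```

Dividing by d rather than by the norm of the difference in means makes the average separation between the two sets of projections approximately equal to the cross-validated distance, since the held-out dot product averages to the squared distance.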

Using the data in Figure 1D, we performed t-tests to determine whether the changes in firing rate evoked by arm movements were statistically significantly larger than those evoked by non-arm movements. For T5, enough movements were tested so that we could perform independent, two-sample t-tests for each movement category (using the mean Δ firing rate magnitude for each movement as the statistical unit, i.e. the bar height for each bar in Figure 1D). The arm modulation (N=8 arm data points) was statistically significantly larger than the face (p<1e-06, N=11 face data points), head (p<1e-04, N=8 head data points), and leg (p=0.003, N=6 leg data points) modulation. For T7, only two movement pairs each were tested for the face, head, and leg categories, making statistical comparison underpowered on a per-category basis. However, lumping together all non-arm movements (for a total of 6 arm data points and 6 non-arm data points) showed a statistically significant difference from arm movements when using a two-sample t-test (p<1e-3).

For Figure 1E (offline classification), we used the firing rates in a window between 200–600 ms (T5) or 200–1600 ms (T7) after the go cue as input into the classifier. For T7, we found that since there was temporal variation in activity throughout the movement, classification performance could be improved by including multiple time windows of firing rates for each electrode in the feature vector. We split the 200 to 1600 ms time window into two smaller windows (200 to 800 ms and 800 to 1600 ms) and included the activity in each of these windows for each electrode as input to the classifier (effectively doubling the number of inputs). This was not necessary for the T5 dataset as performance was already saturated with a single window.

Note that, due to the block design of T7’s data, we pre-processed the neural data to remove differences in firing rate means from block to block that could spuriously increase classification performance (see above section “Neural Data Processing”).

Figure 2 Methods

Figure 2 was created in the same way as Figure 1D, detailed above. Figure 2’s dataset (12.05.2018) consisted of 30 trials per movement.

Figure 3 Methods

Figure 3 used the same dataset as the one shown in Figure 2 (12.05.2018; 30 trials per movement).

We used the most recent version of demixed principal components analysis (Kobak et al., 2016) [https://github.com/machenslab/dPCA] with the default parameters, including using an optimization procedure to find the best regularization factor. Demixed principal components analysis (dPCA) is an optimization technique that decomposes neural data into a sum of neural dimensions that express variance related only to certain variables in the experiment. This decomposition gives an interpretable and comprehensive overview of the structure in the neural data as it relates to the experimentally manipulated variables. Here, we briefly summarize dPCA as it was applied to our data [refer to (Kobak et al., 2016) for a complete description].