Abstract

Background:

The Centers for Medicare and Medicaid Services provide nationwide hospital ratings that may influence reimbursement. These ratings do not account for the social risk of communities and may inadvertently penalize hospitals that serve disadvantaged neighborhoods.

Objective:

This study examines the relationship between neighborhood social risk factors (SRFs) and hospital ratings in Medicare’s Hospital Compare Program.

Research Design:

Ratings from the 2017 Medicare Hospital Compare program were linked with block group data from the 2015 American Community Survey to assess hospital ratings as a function of neighborhood SRFs.

Subjects:

A total of 3608 Medicare-certified hospitals in 50 US states.

Measures:

Hospital summary scores and 7 quality group scores (percentile scale), including effectiveness of care, efficiency of care, hospital readmission, mortality, patient experience, safety of care, and timeliness of care.

Results:

Lower hospital summary scores were associated with serving neighborhoods with higher social risk. Per 10% of neighborhood residents, summary scores differed by −3.3 percentile points (95% CI, −4.7, −2.0) for dual eligibility for Medicare/Medicaid, −0.8 (95% CI, −1.5, −0.1) for no high-school diploma, −1.2 (95% CI, −1.9, −0.4) for unemployment, −1.2 (95% CI, −1.7, −0.8) for black race, and −2.5 (95% CI, −3.3, −1.6) for high travel times to work. Associations between neighborhood SRFs and hospital ratings were largest in the timeliness of care, patient experience, and hospital readmission groups and smallest in the safety, efficiency, and effectiveness of care groups.

Conclusions:

Hospitals serving communities with higher social risk may have lower ratings because of neighborhood factors. Failing to account for neighborhood social risk in hospital rating systems may reinforce hidden disincentives to care for medically underserved areas in the U.S.

Keywords: Social risk adjustment, Neighborhood disadvantage, Hospital ratings, Hospital quality, Medicare ratings

INTRODUCTION

Hospital ratings are a cornerstone of modern healthcare, especially in an era of rapid information flow and growing public transparency. From the patient perspective, hospital ratings can help in deciding where to get care. From the hospital perspective, ratings can influence payments from value-based care programs and are used in payer-provider contract negotiations. The Centers for Medicare and Medicaid Services (CMS) and others use quality metrics (e.g., readmission rates, surgical site infection rates) and survey data to create scores used for hospital ratings.1,2 However, a hospital’s measured quality can be heavily influenced by the risk of its patient population. For instance, a medically complex population with fewer resources can be more challenging to care for than a healthier population with many resources. As such, hospital rating programs have begun to implement risk adjustment strategies, which attempt to account for differences in risk when calculating a score or rating.3 While these risk adjustment strategies have historically accounted for medical complexity, they have only recently begun to address social complexity.

Social risk factors (SRFs) have been shown to influence numerous quality metrics used in value-based payment programs, such as the Merit-based Incentive Payment System and Hospital Readmissions Reduction Program.4–7 However, there is little agreement about the specific SRFs that most significantly influence quality of care. While some SRFs, such as race and socioeconomic status, have been more consistently associated with quality metrics (e.g., hospital readmission), the influence of other SRFs, such as education or marital status, remains less clear.3 In a 2016 report to Congress evaluating nine Medicare-sponsored value-based programs, dual-eligibility, used as a surrogate marker for high SRFs, was strongly associated with worse patient outcomes.8 Consequently, value-based programs have largely focused on this single surrogate marker in risk adjustment strategies. Similarly, many of these value-based programs focus on a single quality outcome or objective (e.g., reducing readmission). In comparison, hospital rating systems are more complex and may be more susceptible to the influence of SRFs, because they include a broader array of quality metrics. Quality metrics in rating systems provide a common reference point for comparing hospitals, but the impact of SRFs may call into question the fairness of hospital rating systems for hospitals serving disadvantaged communities.9,10

Much of the work to date has examined the role of SRFs at the patient level, with an emphasis on Medicare-Medicaid dual-eligibility because of its availability in CMS datasets; however, quality metrics may also be affected by SRFs at the neighborhood level. In recent years, the National Prevention Strategy by the U.S. Surgeon General has called for greater emphasis on neighborhood disadvantage as a critical lever of health outcomes.11 Living in a disadvantaged community can influence health directly through substandard housing conditions, inadequate access to food or transportation, and high levels of stress due to safety concerns12,13—regardless of a hospital’s performance. In one landmark study, residents were randomized to receive housing vouchers to move from neighborhoods with high levels of poverty to those with low levels of poverty,14 and those who received these vouchers were significantly less likely to develop severe or morbid obesity. As Kind and colleagues explain, “policies that don’t account for neighborhood disadvantage may be ineffective or provide only limited benefit.”13 However, implementation of risk adjustment based on neighborhood SRFs has remained understudied.

To our knowledge, no studies have examined a broad set of neighborhood characteristics at the block group level and how they influence specific quality metrics used in a major hospital rating program. The purpose of this study was to examine associations between neighborhood social risk and hospital quality ratings in the Medicare Hospital Compare Program. As a secondary objective, we examined the effect of risk adjustment on quality ratings.

METHODS

Data

The 2017 Medicare Hospital Compare dataset groups 57 quality metrics into 7 quality group scores that are used to create an overall summary score. A categorical 1 to 5 star rating is calculated from the summary scores using k-means clustering.15 Of the 3,654 hospitals included in this dataset, 46 were excluded from analysis because of missing quality group scores. Hospital rating data were paired with block group-level sociodemographic data from the 2015 American Community Survey (ACS) 5-year estimates16 for each hospital’s local geographic catchment area. We observed very little missing data for ACS variables: 6.6% of block groups had missing data for median home value and 3.1% for median family income. To calculate a local geographic catchment area for each hospital, we scaled each hospital’s target population to its number of staffed hospital beds by multiplying the number of beds by 416.7 (1,000/2.4), which corresponds to 2.4 hospital beds per 1,000 people.17 For instance, a hospital with 200 beds would have a neighborhood population of 83,333 people. The local geographic catchment area was then defined as the collection of population-weighted block group centroids that fall within a circle centered at the hospital’s latitude and longitude that captures the hospital’s target population (Supplemental Figure 1).18
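The catchment rule above can be sketched in Python as follows. This is a minimal illustration, not the authors' code: it assumes each block group is represented by a hypothetical `BlockGroup` record holding a population-weighted centroid and an ACS population count, it uses the haversine great-circle distance (an assumption, since the text does not say how distance was measured), and it grows the radius one centroid at a time until the cumulative population reaches beds × 1,000 / 2.4.

```python
import math
from dataclasses import dataclass

BEDS_PER_1000 = 2.4       # staffed beds per 1,000 people (from the text)
EARTH_RADIUS_MI = 3958.8  # mean Earth radius in miles


@dataclass
class BlockGroup:
    # Hypothetical record type for illustration only.
    geoid: str
    lat: float        # population-weighted centroid latitude (degrees)
    lon: float        # population-weighted centroid longitude (degrees)
    population: int


def haversine_mi(lat1, lon1, lat2, lon2):
    """Great-circle distance in miles between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_MI * math.asin(math.sqrt(a))


def target_population(beds):
    """Population served by a hospital of this size (beds * 1000 / 2.4)."""
    return beds * 1000 / BEDS_PER_1000


def catchment(hosp_lat, hosp_lon, beds, block_groups):
    """Add block groups nearest-first until their combined population reaches
    the target. Returns (radius_mi, members); if the target is never reached,
    every block group is returned."""
    target = target_population(beds)
    # Sort on distance only, so BlockGroup objects are never compared directly.
    ranked = sorted(
        ((haversine_mi(hosp_lat, hosp_lon, bg.lat, bg.lon), bg) for bg in block_groups),
        key=lambda pair: pair[0],
    )
    members, total, radius = [], 0, 0.0
    for dist, bg in ranked:
        members.append(bg)
        total += bg.population
        radius = dist
        if total >= target:
            break
    return radius, members
```

For the 200-bed example in the text, `target_population(200)` is about 83,333; block groups are added nearest first until that total is met, and the returned radius is the distance to the last centroid included.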

While the Dartmouth Atlas Group and others have used marginal methods to define hospital catchment (e.g., hospital service areas),19 these methods may overestimate the catchment region by including more distant geographies that access highly specialized services (e.g., cancer care) but not emergency room services, laboratory testing, or other routine health services. Moreover, these hospital service areas (HSAs) have relatively vast boundaries in an urban setting. For example, a single HSA in Chicago (HSA #14023) includes 10 unique hospitals in drastically disparate regions of the city (Supplemental Figure 1),19 which lacks “on the ground” validity regarding the variation in populations served by Chicago-area hospitals. Using the HSA approach, Advocate Illinois Masonic and University of Chicago Medical Centers have the same local geographic catchment, but in reality, serve drastically different patient populations and neighborhoods (Supplemental Figure 1). Thus, in the absence of an established method to provide a more micro-level assessment, we calculated geographic catchment based on each hospital’s location and size to estimate a local, place-based measure of geography.

Measures

The primary dependent variables were the summary and 7 quality group scores from the Medicare Hospital Compare dataset. The 7 quality groups are meant to capture themes of hospital quality and include efficiency of care (healthcare delivered per health benefit achieved), effectiveness of care (use of evidence-based treatments), 30-day hospital readmission rates, 30-day post-discharge mortality rates, patient experience (meeting patient preferences), safety of care (safety and complication events), and timeliness of care (emergency room crowding and wait times).1 A full summary of all quality groups is available on the Medicare Hospital Compare website.20 To compare the effect of neighborhood SRFs across quality groups, we converted standard scores to percentiles ranging from 1 (lowest performing) to 100 (highest performing). Hospitals were also divided into performance quartiles (low, moderate, high, or very high performing) for their summary and quality group scores.
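One way to implement the percentile conversion and quartile assignment is sketched below. It assumes that "standard score" means a z-score mapped through the standard normal cumulative distribution (an empirical percentile rank would be an equally plausible reading), and that quartiles are formed by rank so that each holds one quarter of hospitals, as the 902 hospitals per quartile in Table 3A suggest. Function names are illustrative.

```python
import math

QUARTILE_LABELS = ["low", "moderate", "high", "very high"]


def z_to_percentile(z):
    """Map a standard (z) score to a 1-100 percentile via the standard normal
    CDF, clamping to the 1-100 range used in the text."""
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    return min(100, max(1, round(cdf * 100)))


def performance_quartiles(scores):
    """Assign equal-sized performance quartiles by rank (ties keep input order)."""
    n = len(scores)
    order = sorted(range(n), key=lambda i: scores[i])
    labels = [None] * n
    for rank, i in enumerate(order):
        labels[i] = QUARTILE_LABELS[rank * 4 // n]
    return labels
```

With 3,608 hospitals, rank-based assignment places exactly 902 hospitals in each quartile.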

The primary independent variables were neighborhood SRFs associated with each block group within the hospital’s local catchment area, using data from the ACS. The initial list of neighborhood SRFs was selected based on the Area Deprivation Index (ADI) used by Singh and colleagues.13,21 In addition to the variables included in the ADI, we considered several other theoretical covariates based on empirical literature, including dual eligibility for Medicare-Medicaid, race/ethnicity, languages spoken other than English, and high travel time to work.8,22 ‘High travel time to work’ was used to capture John Kain’s spatial mismatch hypothesis and economic theory,23,24 which describes the discrepancy between the location of low-income neighborhoods and that of viable employment opportunities, particularly for neighborhoods of color. To address multicollinearity, we calculated the variance inflation factor (VIF) and performed stepwise removal of collinear variables within each risk group until all variables had a VIF less than 5.
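The VIF screening step can be sketched with NumPy as follows. Dropping the single variable with the largest VIF at each step is an assumption, since the text specifies only stepwise removal until all VIFs fall below 5; because removal was done within each risk group (e.g., poverty, insurance, housing), the function would be called once per group.

```python
import numpy as np


def vif(X):
    """Variance inflation factor for each column of X (n_rows, p).
    VIF_j = 1 / (1 - R^2_j), where R^2_j comes from regressing column j on
    the remaining columns plus an intercept."""
    X = np.asarray(X, dtype=float)
    n, p = X.shape
    out = np.empty(p)
    for j in range(p):
        y = X[:, j]
        A = np.column_stack([np.ones(n), np.delete(X, j, axis=1)])
        coef, *_ = np.linalg.lstsq(A, y, rcond=None)
        resid = y - A @ coef
        centered = y - y.mean()
        r2 = 1.0 - (resid @ resid) / (centered @ centered)
        out[j] = np.inf if r2 >= 1.0 else 1.0 / (1.0 - r2)
    return out


def drop_collinear(X, names, threshold=5.0):
    """Backward stepwise removal: drop the variable with the largest VIF
    until every remaining VIF is below the threshold."""
    X = np.asarray(X, dtype=float)
    names = list(names)
    while X.shape[1] > 1:
        v = vif(X)
        worst = int(np.argmax(v))
        if v[worst] < threshold:
            break
        X = np.delete(X, worst, axis=1)
        names.pop(worst)
    return X, names
```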

The final model included median family income, percent single-parent households, percent dual-eligible for Medicare-Medicaid, percent uninsured, median home value, mean household size (persons), percent with less than high school graduation, percent unemployed, percent black, percent speaking a language other than English, and percent who travel more than 45 minutes to work (Table 1).

Table 1.

Neighborhood characteristics by overall hospital performance quartile

| Neighborhood Social Risk Factor (N=3608) | Quartile 1 (Lowest Performing), % or mean (SD) | Quartile 2, % or mean (SD) | Quartile 3, % or mean (SD) | Quartile 4 (Highest Performing), % or mean (SD) | P value^a |
|---|---|---|---|---|---|
| Poverty^b | | | | | |
| Median family income, mean $ | 52,239 (18,035) | 52,888 (18,709) | 54,111 (17,987) | 59,259 (20,601) | <0.001 |
| Single-parent households, % | 20.4 (6.5) | 18.1 (5.4) | 16.5 (4.7) | 15.5 (4.9) | <0.001 |
| Insurance | | | | | |
| Dual-eligible Medicaid/Medicare, % | 2.8 (1.4) | 2.5 (1.3) | 2.3 (1.3) | 2.0 (1.1) | <0.001 |
| Uninsured, % | 13.2 (5.2) | 12.1 (5.2) | 11.1 (4.7) | 10.5 (5.0) | <0.001 |
| Housing | | | | | |
| Median home value, mean $ | 202,825 (158,570) | 177,572 (138,374) | 192,568 (160,061) | 217,961 (173,326) | <0.001 |
| Household size, mean no. | 2.6 (0.4) | 2.6 (0.3) | 2.5 (0.3) | 2.5 (0.3) | <0.001 |
| Education^c | | | | | |
| Less than high school graduation, % | 15.8 (7.6) | 14.2 (7.4) | 12.8 (6.5) | 10.9 (5.8) | <0.001 |
| Unemployment, % | 8.9 (3.3) | 7.8 (3.0) | 7.1 (2.7) | 6.4 (2.5) | <0.001 |
| Black race, % | 18.0 (19.1) | 11.6 (14.8) | 8.7 (13.1) | 7.7 (11.4) | <0.001 |
| Language other than English, % | 22.1 (20.4) | 16.3 (17.2) | 14.0 (14.6) | 14.4 (13.0) | <0.001 |
| Travel to work > 45 minutes, % | 15.6 (9.9) | 14.1 (7.6) | 13.7 (7.6) | 12.4 (7.0) | <0.001 |
| Geographic catchment area^d | | | | | |
| Total population, mean no. | 114,835 (97,441) | 75,534 (86,396) | 60,628 (70,866) | 76,062 (92,346) | <0.001 |
| Catchment radius, mean mi | 7.4 (7.8) | 8.9 (9.5) | 8.4 (8.1) | 7.8 (9.3) | <0.001 |

^a P values based on one-way analysis of variance (ANOVA).

^b Median family income below federal poverty level was removed due to collinearity.

^c Calculated for adults aged ≥25 years.

^d A geographic catchment area for each hospital was defined based on the hospital’s size and total population in the immediately surrounding geographic region.

We chose to examine individual SRFs rather than the ADI composite score for several reasons. First, we were interested in examining the role of specific SRFs and potential differences in the strength of association between variables. Second, the ADI is largely a measure of socioeconomic disadvantage, and key social risk factors, such as race/ethnicity, were not included in the composite measure. However, we conducted sensitivity analyses examining the ADI composite score as a secondary independent variable of interest.13,21

Statistical Analysis

Descriptive statistics were calculated for the geographic catchment regions of 3,608 Medicare-certified hospitals and for each performance quartile. To examine the strength of association between each summary and quality group score with neighborhood SRFs, we used generalized linear models (identity link function and Gaussian distribution) disaggregated to the block group level and clustered by hospital using a robust variance estimator. In effect, individual block groups were treated as cluster-correlated data and not aggregate measures. Hospital rating percentiles were modeled as a function of all block group-level SRFs and fixed effects for catchment population, catchment radius, and state.
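Because a Gaussian GLM with an identity link is equivalent to ordinary least squares, the model described above can be sketched as OLS with a cluster-robust (sandwich) variance that clusters block group rows by hospital. This NumPy sketch is illustrative only: the study does not name its software, and the CR1 finite-sample correction used here is a common default rather than a documented choice. Catchment population and radius would enter as ordinary columns of the design matrix, and state fixed effects as indicator columns.

```python
import numpy as np


def ols_cluster_robust(X, y, clusters):
    """Gaussian GLM with identity link (numerically identical to OLS) plus a
    cluster-robust sandwich variance.

    X        : (n_rows, k) design matrix, already including an intercept and any
               fixed-effect columns (e.g., state indicators).
    y        : (n_rows,) outcome; each row is a hospital-block group pair, so a
               hospital's percentile score repeats across its block groups.
    clusters : (n_rows,) hospital identifier for each row.
    Returns (beta, robust_se).
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    clusters = np.asarray(clusters)
    n, k = X.shape

    bread = np.linalg.inv(X.T @ X)
    beta = bread @ (X.T @ y)
    resid = y - X @ beta

    # "Meat": sum over hospitals of the outer product of within-cluster score
    # sums, allowing residuals to be correlated within a hospital's catchment.
    meat = np.zeros((k, k))
    groups = np.unique(clusters)
    for g in groups:
        idx = clusters == g
        score = X[idx].T @ resid[idx]
        meat += np.outer(score, score)

    G = len(groups)
    correction = (G / (G - 1)) * ((n - 1) / (n - k))  # CR1 finite-sample factor
    cov = correction * bread @ meat @ bread
    return beta, np.sqrt(np.diag(cov))
```

Approximate 95% confidence intervals then follow as `beta ± 1.96 * se`.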

Of note, we examined hospital-level summary and quality group scores and block group-level SRFs. Hospitals were therefore conceptualized as having a corresponding collection of block groups, whereas hospital ratings were available only at the hospital level. This contrasts with evaluations using claims data, in which dependent variables (i.e., performance measures) are typically available at the patient level. Methods used by previous investigators to estimate expected hospital performance based on patient-level characteristics and outcomes3,6 could therefore not be meaningfully implemented in this study.

Risk Adjustment for SRFs in Hospital Comparisons

To attenuate the effect of SRFs on hospital comparisons, we used risk adjustment to take underlying SRFs into account during statistical model development.6 In risk adjustment models, we used the full set of neighborhood SRFs (Table 1) to adjust performance scores and star ratings. Risk-adjusted quality group scores were calculated by removing the variance attributable to the neighborhood SRFs for each quality group. First, we used stepwise regression to build individual models of each quality group score as a function of the SRF variables. The adjusted R-squared from each model was used as a global metric for how well SRF variables predicted the summary and quality group scores. Each hospital’s individual quality group score was then adjusted according to the following:

$$
\text{Adjusted Group Score}_{i,j} = \text{Group Score}_{i,j} - \sum_{k=1}^{n} \beta_{j,k}\left(\text{SRF}_{k,i} - \overline{\text{SRF}}_{k}\right)
$$

where $i$ indexes hospitals, $j$ indexes quality groups, $\text{Group Score}_{i,j}$ is the score for hospital $i$ in quality group $j$, $n$ is the number of SRFs, $\beta_{j,k}$ is the model coefficient of $\text{SRF}_k$ for quality group $j$, $\overline{\text{SRF}}_{k}$ is the mean value of $\text{SRF}_k$, and $\text{SRF}_{k,i}$ is the value of $\text{SRF}_k$ for hospital $i$. Each hospital’s adjusted summary score was then defined as:

$$
\text{Adjusted Summary Score}_{i} = \sum_{j=1}^{m} W_{j}\,\text{Adjusted Group Score}_{i,j}
$$

where $i$ indexes hospitals, $m$ is the number of quality groups, $\text{Adjusted Group Score}_{i,j}$ is the adjusted quality group score for hospital $i$ and group $j$, and $W_j$ is the weight for group $j$ (Supplemental Table 1). The SRF-adjusted star rating was determined by applying the Medicare Hospital Compare k-means clustering methodology to the SRF-adjusted summary scores.25
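The adjustment and re-rating steps can be sketched in NumPy as below, assuming hospital-level SRF values (for instance, catchment averages) and coefficients beta_jk already estimated from each quality group's model. Each adjusted score subtracts the sum over SRFs of beta_jk × (SRF_ki − mean SRF_k) from the observed score, so a hospital at the mean of every SRF is unchanged. Normalizing the group weights to sum to 1 is an assumption, and the 1-D k-means is a plain Lloyd's algorithm with quantile initialization: it stands in for, and does not reproduce, the CMS star-rating clustering procedure.

```python
import numpy as np


def adjust_group_scores(scores, srf, betas):
    """Remove the SRF-explained component from each quality group score.

    scores : (hospitals, groups) observed group scores
    srf    : (hospitals, n_srf) hospital-level SRF values
    betas  : (groups, n_srf) coefficients from each group's SRF model
    Adjusted[i, j] = scores[i, j] - sum_k betas[j, k] * (srf[i, k] - mean_k)
    """
    centered = srf - srf.mean(axis=0)   # each hospital's deviation from the SRF means
    return scores - centered @ betas.T  # (hospitals, n_srf) @ (n_srf, groups)


def summary_scores(group_scores, weights):
    """Weighted combination of group scores; weights are normalized to sum to 1."""
    w = np.asarray(weights, dtype=float)
    return group_scores @ (w / w.sum())


def kmeans_1d(x, k=5, iters=100):
    """Plain Lloyd's k-means on a 1-D array with quantile initialization.
    Returns labels 1..k ordered from the lowest to the highest cluster mean."""
    x = np.asarray(x, dtype=float)
    centers = np.quantile(x, np.linspace(0, 1, k + 2)[1:-1])
    for _ in range(iters):
        labels = np.argmin(np.abs(x[:, None] - centers[None, :]), axis=1)
        new_centers = np.array([
            x[labels == c].mean() if np.any(labels == c) else centers[c]
            for c in range(k)
        ])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    labels = np.argmin(np.abs(x[:, None] - centers[None, :]), axis=1)
    rank = np.argsort(np.argsort(centers))  # cluster index -> position by mean
    return rank[labels] + 1
```

In use, the adjusted group scores feed `summary_scores`, and the resulting summary scores feed `kmeans_1d` to obtain adjusted 1 to 5 star labels.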

RESULTS

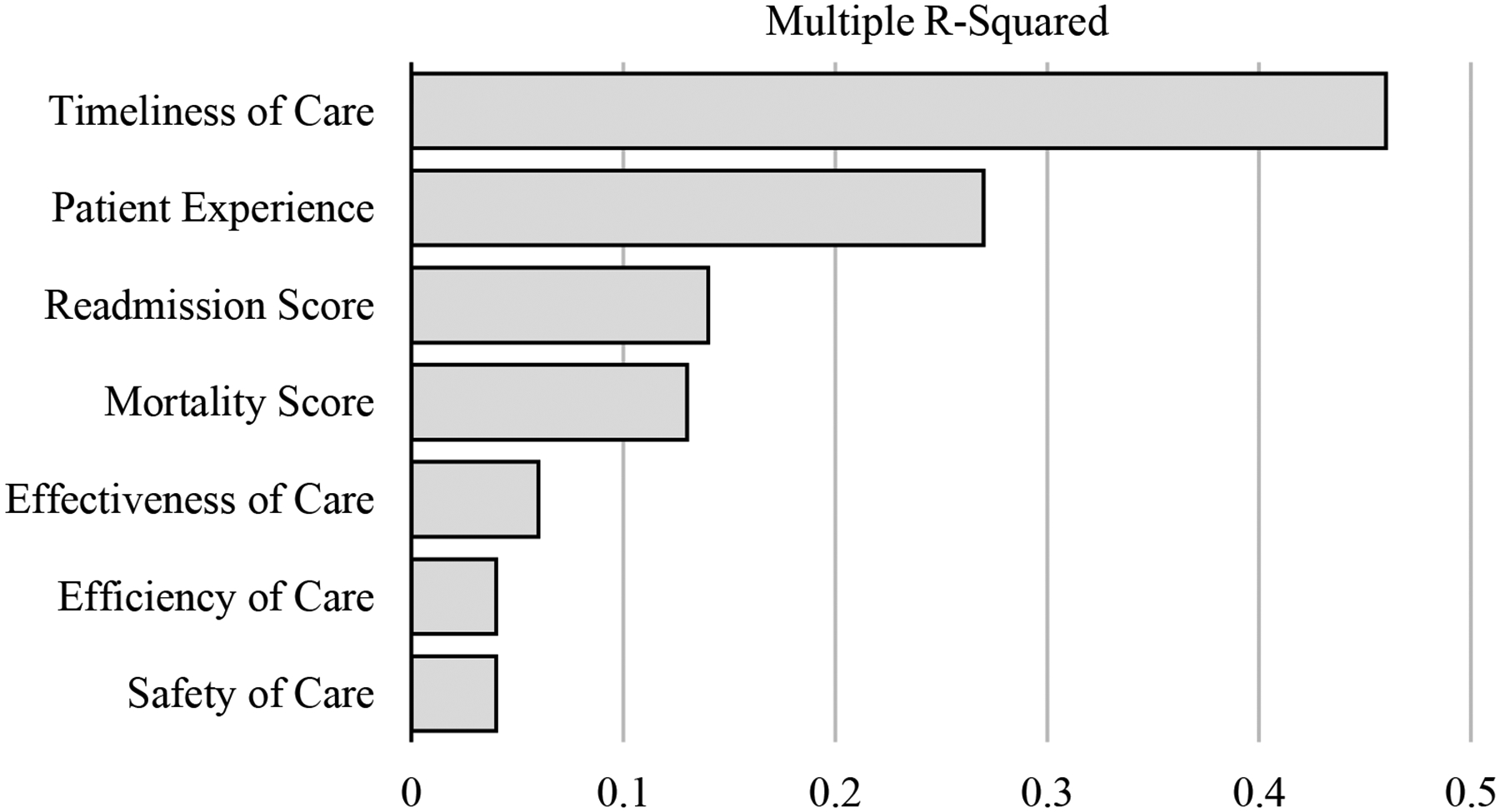

Table 1 summarizes descriptive statistics for neighborhood characteristics by performance quartile. Table 2 summarizes associations between hospital rating scores and neighborhood SRFs. The median number of block groups per hospital was 38 (interquartile range 16–78). Lower hospital summary scores were associated with caring for neighborhoods with a higher percentage of dual-eligible residents (−3.3 percentile per 10%; 95% CI, −4.7, −2.0), lower median home values (−1.1 percentile per $100,000 less; 95% CI, −1.8, −0.4), residents without a high-school diploma (−0.8 percentile per 10%; 95% CI, −1.5, −0.1), unemployed residents (−1.2 percentile per 10%; 95% CI, −1.9, −0.4), black residents (−1.2 percentile per 10%; 95% CI, −1.7, −0.8), residents speaking languages other than English (−0.9 percentile per 10%; 95% CI, −1.5, −0.3), and residents with high travel times to work (−2.5 percentile per 10%; 95% CI, −3.3, −1.6). Overall summary scores were not associated with median family income, percent single-parent households, percent uninsured, or household size. Dual eligibility, a proposed surrogate marker for social risk in many risk adjustment strategies, was significant in only 3 of 7 quality groups; median family income was significant in only 2 of 7 quality groups. The association between neighborhood SRFs and quality group scores varied greatly by group (Figure 1); the coefficient of multiple determination (R²) was highest in the timeliness of care group (R²=0.46) and lowest in the efficiency of care group (R²=0.04).

Table 2.

Association of Medicare Hospital Compare summary and quality group scores with neighborhood social risk factors

Difference in percentile rating for overall summary and quality group scores^a, shown as adjusted percentile difference (95% CI)^b; N=3608.

| Neighborhood Social Risk Factor | Summary Score | Mortality Score | Readmission Score | Patient Experience | Timeliness of Care | Safety of Care | Efficiency of Care | Effectiveness of Care |
|---|---|---|---|---|---|---|---|---|
| Median family income (per $10,000 less) | −0.09 (−0.45, 0.28) | −0.83*** (−1.13, −0.53) | 0.19 (−0.12, 0.50) | −0.01 (−0.28, 0.26) | 0.45*** (0.23, 0.66) | 0.09 (−0.27, 0.46) | −0.05 (−0.41, 0.32) | −0.25 (−0.59, 0.09) |
| Percent single-parent households (per 10%) | −0.20 (−0.59, 0.19) | 0.59** (0.18, 0.99) | −0.02 (−0.40, 0.35) | −0.70*** (−1.01, −0.38) | −0.97*** (−1.25, −0.70) | −0.05 (−0.49, 0.40) | 0.19 (−0.19, 0.57) | −0.13 (−0.50, 0.24) |
| Percent dual-eligible (per 10%) | −3.31*** (−4.65, −1.97) | −1.55 (−3.50, 0.40) | −3.15*** (−4.43, −1.87) | −1.95*** (−2.94, −0.96) | 0.17 (−0.80, 1.14) | −1.69* (−3.29, −0.10) | 0.54 (−0.99, 2.06) | −0.50 (−1.87, 0.86) |
| Percent uninsured (per 10%) | −0.12 (−1.11, 0.88) | −2.42*** (−3.40, −1.44) | 1.98*** (1.10, 2.86) | −0.15 (−0.92, 0.62) | 1.37*** (0.76, 1.98) | −0.72 (−1.82, 0.38) | −1.66** (−2.63, −0.69) | −0.17 (−1.04, 0.70) |
| Median home value (per $10,000 less) | −0.11** (−0.18, −0.04) | −0.10*** (−0.15, −0.05) | −0.03 (−0.09, 0.03) | −0.01 (−0.06, 0.05) | 0.10*** (0.06, 0.14) | −0.08* (−0.15, −0.01) | 0.03 (−0.04, 0.11) | 0.04 (−0.02, 0.10) |
| Household size (per person) | −0.73 (−2.15, 0.68) | −4.06*** (−5.39, −2.73) | 1.27 (−0.02, 2.57) | 0.89 (−0.28, 2.06) | 1.81** (0.74, 2.87) | 0.17 (−1.39, 1.74) | 1.71* (0.33, 3.10) | 0.07 (−1.26, 1.40) |
| Percent no high school diploma (per 10%) | −0.80* (−1.52, −0.08) | −0.63 (−1.41, 0.15) | −0.73* (−1.42, −0.03) | 0.26 (−0.30, 0.82) | 0.40 (−0.15, 0.95) | −0.36 (−1.19, 0.47) | −1.65*** (−2.41, −0.88) | −0.70 (−1.44, 0.05) |
| Percent unemployed (per 10%) | −1.16** (−1.93, −0.39) | 1.09** (0.41, 1.77) | −1.73*** (−2.33, −1.13) | −1.41*** (−2.02, −0.80) | −1.87*** (−2.33, −1.41) | −0.01 (−0.85, 0.84) | 0.74* (0.09, 1.29) | 0.09 (−0.56, 0.73) |
| Percent black (per 10%) | −1.24*** (−1.72, −0.76) | 1.13*** (0.65, 0.16) | −1.74*** (−2.20, −1.29) | −0.83*** (−1.23, −0.42) | −1.61*** (−1.97, −1.25) | −0.74** (−1.29, −0.19) | −0.39 (−0.88, 0.10) | −1.11*** (−1.59, −0.63) |
| Percent language other than English (per 10%) | −0.89** (−1.51, −0.27) | 2.51*** (1.83, 3.18) | −1.10** (−1.73, −0.47) | −2.44*** (−2.95, −1.94) | −2.61*** (−3.08, −2.14) | −0.19 (−0.91, 0.52) | 0.59 (−0.07, 1.25) | −0.68 (−1.36, 0.00) |
| Percent travel to work > 45 minutes (per 10%) | −2.46*** (−3.27, −1.64) | 3.42*** (2.59, 4.25) | −4.30*** (−4.99, −3.61) | −2.29*** (−2.92, −1.66) | −1.67*** (−2.16, −1.19) | −1.60** (−2.52, −0.67) | 0.28 (−0.53, 1.08) | −1.16** (−1.99, −0.32) |

^a All scores are on a 0 to 100 percentile scale.

^b Generalized linear models adjusted for all neighborhood social risk factors at the block group level and clustered by hospital; additional fixed effects were included for catchment population, catchment radius, and state.

* P<0.05; ** P<0.01; *** P<0.001.

Figure 1. Variance explained by neighborhood social risk factors by hospital rating quality group.

The quality group score variance explained by social risk factors was examined by assessing the multiple R-squared of aggregate models.

In sensitivity analyses examining associations between hospital rating scores and the ADI, each 10-point increase in the ADI (100-point scale) was associated with a 1.2 percentile reduction in the overall summary score. In analyses comparing the ADI with SRFs not included in the ADI composite score (Supplemental Figure 2), overall trends appeared similar across variables: the summary score declined with higher social risk, particularly in the highest decile of social risk.

Direct Risk-Adjustment for Social Risk Factors

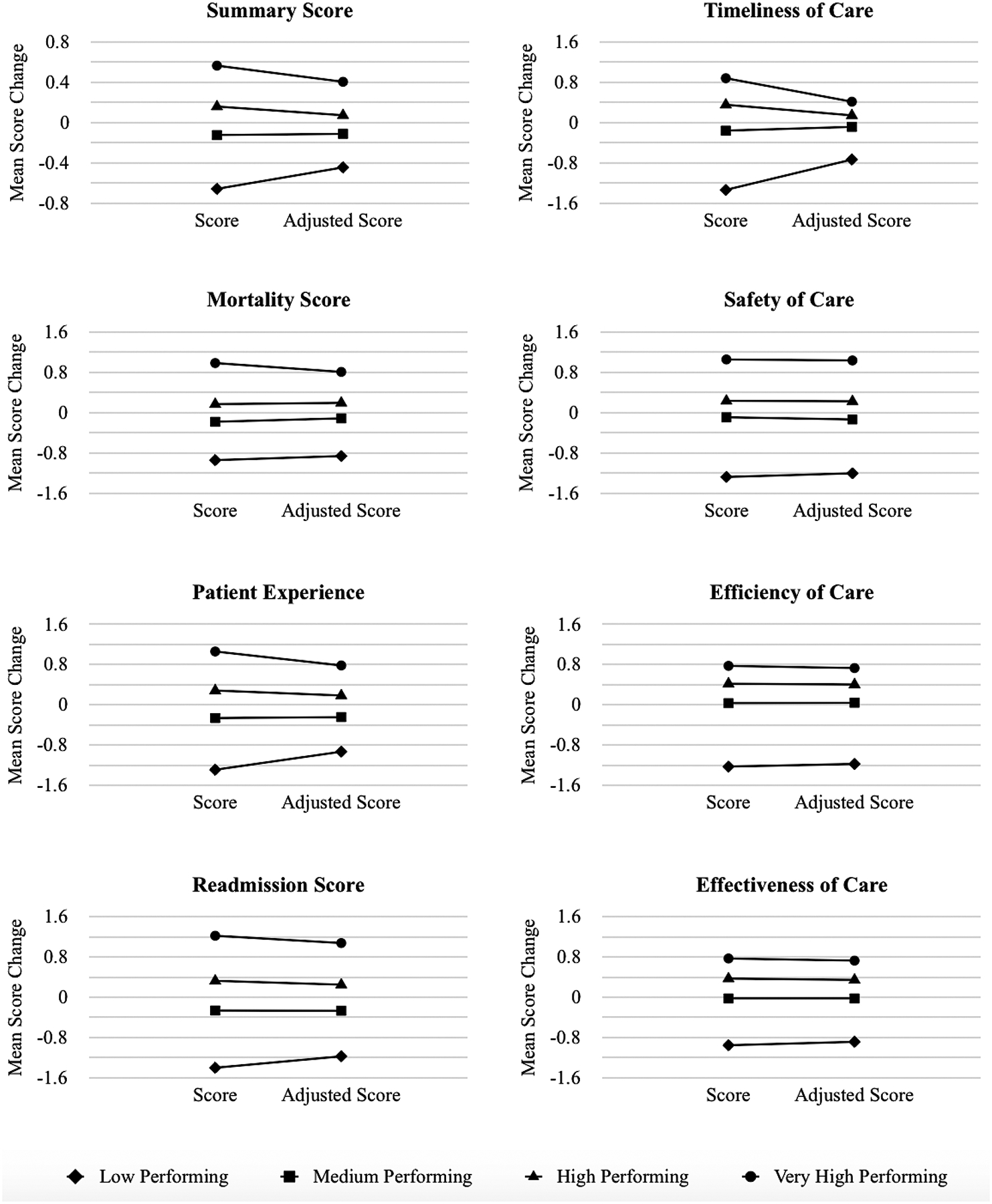

Table 3 summarizes the change in performance quartile and star rating for each hospital after risk adjustment for social risk factors. The majority (66%) of hospitals had no change in performance quartile, and nearly one-third (31%) changed by 1 performance quartile (Table 3A). We observed similar results for star rating (Table 3B): 67% of hospitals had no change in star rating and 32% changed by 1 star. In analyses examining overall patterns of change in the summary and quality group scores by performance quartile (Figure 2), risk adjustment did not change the overall ordering of performance quartiles but narrowed the range between quartiles. Risk adjustment had the largest effect on the timeliness of care group and virtually no effect on the safety, efficiency, and effectiveness of care groups.

Table 3.

Change in performance quartile and star rating before and after risk adjustment for neighborhood social risk factors

A. Performance quartile

| Observed Performance Quartile | Adjusted: Low, n (%) | Adjusted: Moderate, n (%) | Adjusted: High, n (%) | Adjusted: Very high, n (%) | Total N |
|---|---|---|---|---|---|
| Low | 719 (79.7) | 143 (15.9) | 31 (3.4) | 9 (1.0) | 902 |
| Moderate | 177 (19.6) | 503 (55.8) | 177 (19.6) | 45 (5.0) | 902 |
| High | 6 (0.7) | 244 (27.1) | 482 (53.4) | 170 (18.9) | 902 |
| Very high | 0 | 12 (1.3) | 212 (23.5) | 678 (75.2) | 902 |
| Total | 902 (25) | 902 (25) | 902 (25) | 902 (25) | 3608 |

B. Star rating

| Observed Star Rating | Adjusted: 1 star, n (%) | Adjusted: 2 stars, n (%) | Adjusted: 3 stars, n (%) | Adjusted: 4 stars, n (%) | Adjusted: 5 stars, n (%) | Total N |
|---|---|---|---|---|---|---|
| 1 star | 200 (78.1) | 50 (19.5) | 5 (2.0) | 1 (0.4) | 0 | 256 |
| 2 stars | 88 (12.1) | 483 (66.3) | 134 (18.4) | 23 (3.2) | 1 (0.1) | 729 |
| 3 stars | 0 | 237 (20.4) | 739 (63.5) | 174 (14.9) | 14 (1.2) | 1164 |
| 4 stars | 0 | 0 | 296 (26.2) | 738 (65.3) | 96 (8.5) | 1130 |
| 5 stars | 0 | 0 | 1 (0.3) | 82 (24.9) | 246 (74.8) | 329 |
| Total | 288 (8.0) | 770 (21.3) | 1175 (32.6) | 1018 (28.2) | 357 (9.9) | 3608 |

Note: Hospital summary scores were adjusted for social risk factors, and the resulting change in performance quartile and star rating was determined. Shown are the adjusted performance quartile (A) and star rating (B) (table columns) for each original performance quartile (A) or star rating (B) group (table rows). For instance, Table 3B shows that after SRF risk adjustment, of the hospitals with an original 5-star rating, a single hospital (0.3%) became a 3-star hospital, 82 (24.9%) became 4-star hospitals, and 246 (74.8%) remained 5-star hospitals. Diagonal cells (light gray) denote hospitals with no change after risk adjustment; cells adjacent to the diagonal (darker gray) denote hospitals that changed by 1 category after risk adjustment.
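The shares of hospitals that kept or changed category can be recomputed directly from the cross-tabulated counts. The short sketch below tallies hospitals by the absolute number of categories they moved, using the Table 3A counts; the function name is illustrative.

```python
# Table 3A counts: rows = observed quartile, columns = adjusted quartile
# (low, moderate, high, very high).
TABLE_3A = [
    [719, 143, 31, 9],
    [177, 503, 177, 45],
    [6, 244, 482, 170],
    [0, 12, 212, 678],
]


def shift_distribution(matrix):
    """Share of hospitals by absolute category change |adjusted - observed|.
    Index 0 = unchanged, index 1 = moved one category, and so on."""
    total = sum(sum(row) for row in matrix)
    counts = [0] * len(matrix)
    for i, row in enumerate(matrix):
        for j, n in enumerate(row):
            counts[abs(i - j)] += n
    return [c / total for c in counts]


shares = shift_distribution(TABLE_3A)
```

For Table 3A this gives about 66% unchanged and about 31% shifted by one quartile; the same function accepts the 5 × 5 star-rating cross-tabulation.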

Figure 2. Change in overall and quality group scores by performance quartile before and after risk adjustment for neighborhood social risk factors.

Hospitals were grouped into quartiles using summary or individual quality group scores, and the mean score for each group was calculated before and after SRF risk adjustment. On average, SRF risk adjustment did not change the ordering of performance quartiles, but the range in scores between quartiles narrowed, consistent with removal of the variance attributable to SRFs. In effect, risk adjustment reduced differences in score between the highest and lowest performing hospitals without substantially altering their overall ordering.

DISCUSSION

Neighborhood social risk was consistently associated with hospital ratings, but the strength of association varied substantially across quality groups. Understanding why certain quality groups are more strongly associated with SRFs than others is not straightforward. The quality groups least associated with neighborhood SRFs (efficiency of care, effectiveness of care, and safety of care) generally reflect outcomes that occur within hospital walls, such as Clostridium difficile transmission or utilization of medical imaging. In contrast, the quality groups most strongly associated with neighborhood SRFs reflect outcomes that are less specific to the hospital setting, such as hospital access and readmission.5,26 Few studies, if any, have examined a broad set of quality metrics, teasing apart their associations with block group-level neighborhood risk. Importantly, differences in the strength of association between SRFs and each quality group may provide pragmatic insight into the metrics that hospitals can control (“within four walls”) and those that require broader strategies to bridge clinical and community-based solutions.27

Organizations such as the National Academy of Medicine, the Department of Health and Human Services, and the National Quality Forum have made controversial recommendations for more complete adjustment for SRFs, combined with other techniques to identify quality problems that can be addressed through quality improvement and payment mechanisms.3,28,29 Some patient advocates argue that risk adjustment may reward poor-performing hospitals serving higher-risk patients despite legitimate deficiencies in quality. In effect, risk adjustment could lower standards for hospitals located in disadvantaged regions. Interestingly, we identified a strong association between patient experience and neighborhood SRFs, which may support some of these patient advocacy concerns. For instance, black race and unemployment were associated with lower patient experience scores, suggesting the potential for both interpersonal and structural forms of discrimination to detract from healthcare encounters. In a recent study by Dyrbye and colleagues,30 physician burnout, which can sometimes result from challenging social circumstances that disrupt patient care, was associated with higher levels of racial bias.

However, this debate on risk adjustment also reflects an ontological conundrum: hospitals caring for higher-risk patients may already face greater challenges (e.g., burnout, lack of control) to providing high-quality care. Deficiencies in quality may partly reflect insufficient resources to bridge quality gaps in the first place. By rewarding hospitals with more resources and penalizing hospitals with fewer, hospital rating systems risk creating a compounding debt scenario, in which a negative balance in quality is carried over to each new round of contract negotiations, making it impossible to reclaim quality deficits. Thus, while concerns about risk adjustment are reasonable and appropriate, more complete risk adjustment, combined with other delivery system reforms, may be necessary to address foundational challenges to advancing health equity.3,28,29,31–33 Moreover, our risk adjustment did not change the average ordering of hospital ratings by quartile (Figure 2). Instead, it narrowed the range in performance scores, similar to prior studies.6

Importantly, our study suggests that a hospital’s quality rating may be tied to its geographic location, that is, to its place. In a previous study, Herrin et al. found that 58% of the total variation in readmission rates was attributable to the county where a hospital was located.34 One major concern with the current hospital rating system is that it may penalize hospitals located in disadvantaged, medically underserved regions and create the illusion of high-quality care in socioeconomically advantaged regions. This could unwittingly enable a form of coverage discrimination, particularly if payers forgo contract negotiations with certain hospitals and justify their decisions based on poor value or quality. Lower hospital ratings may thereby exacerbate financial challenges for hospitals attempting to locate in and sustain services in these regions. Prior studies have documented widespread closure of hospitals in disadvantaged regions of the United States, with 504 hospital closures in rural and inner-city urban areas between 1990 and 2000.35 This hidden disincentive to locate in a medically underserved region can widen already-extensive disparities in access to care. We found that neighborhood SRFs were most strongly associated with the ‘timeliness of care’ group, which captures wait times for needed testing and emergency services. This finding is particularly noteworthy because providers who take on the challenge of addressing access gaps in medically underserved regions will, almost by definition, take on resource scarcity and unmet need, which drives up wait times. In effect, the ‘timeliness of care’ metric, which is definitionally tied to access, may penalize hospitals that reduce access disparities.

Our study has several pragmatic implications for improving fairness and equity in hospital rating systems. First, the 3 of 7 quality groups least affected by neighborhood social risk together comprise only 30% of the overall rating. Exploring the utility of weighting these quality groups more equally may reduce bias against hospitals serving higher-risk patients. Second, for quality groups most affected by neighborhood social risk, using neighborhood characteristics in risk adjustment strategies may enable the inclusion of SRFs that are frequently missing from traditional patient data sources. For instance, we found that the most frequently significant SRFs were unemployment, black racial composition, languages spoken other than English, and high travel times to work. While the ADI may be a useful metric for capturing socioeconomic disadvantage, these additional SRFs are seldom included in risk adjustment strategies, largely because they are not available in claims databases.6

Alternatively, some scholars have advocated for the explicit inclusion of equity performance measures in value-based programs.33 For instance, hospital rating systems could consider how hospitals address health disparities or reduce discrimination in healthcare. As a case example, a metric for ‘reducing access disparities’ could counterbalance poorer ‘timeliness of care’ ratings. In addition, for outcomes heavily influenced by SRFs, meaningful solutions will necessarily involve community-based strategies to address unmet social needs.27 Capturing these types of measures would reward hospitals for addressing disparities without obscuring poor quality through risk adjustment.

This study has several limitations. First, we analyzed SRFs at the census block group level rather than at the individual level, using “neighborhood mix” as a multidimensional proxy for patient mix. Analyzing social risk at this geographic level bypasses the measurement limitations of administrative databases, which are often restricted to a small set of relatively crude patient characteristics such as dual-eligibility status. However, the lack of patient-level data limits our ability to isolate hospital effects from population and neighborhood effects. Second, we were unable to analyze the financial implications of hospital ratings or the subsequent impact of risk adjustment on hospital reimbursement, because payer contracts are not transparent or readily available. Third, in the absence of clinical data, we were unable to include patient health characteristics, which are closely tied to patient outcomes, in the risk adjustment calculation. However, CMS metrics include some risk adjustment for severity of illness,36 which partly mitigates this concern. Finally, we estimated each hospital’s local geographic catchment based on its location and size. This approach enables a more targeted examination of the population and neighborhood immediately surrounding each hospital. However, additional research is needed to establish uniform methods for examining more granular, place-based measures of hospital location.

CONCLUSIONS

Hospitals caring for neighborhoods with high levels of disadvantage may have lower hospital ratings, not because of the quality of care provided within hospital settings, but because of SRFs experienced in neighborhood settings. Implementation of hospital rating policies should explicitly consider the impact of neighborhood SRFs on specific quality measures to ensure sufficient funding for health equity in vulnerable communities.

Supplementary Material

REFERENCES

- 1. U.S. Centers for Medicare & Medicaid Services. Hospital Compare. 2018; https://www.medicare.gov/hospitalcompare/search.html. Accessed August 14, 2018.

- 2. U.S. News & World Report. Best Hospitals. 2018; https://health.usnews.com/best-hospitals/rankings. Accessed August 14, 2018.

- 3. National Academies of Sciences, Engineering, and Medicine. Accounting for Social Risk Factors in Medicare Payment: Identifying Social Risk Factors. Washington, DC: The National Academies Press; 2016.

- 4. Calvillo-King L, Arnold D, Eubank KJ, et al. Impact of social factors on risk of readmission or mortality in pneumonia and heart failure: systematic review. Journal of general internal medicine. 2013;28(2):269–282.

- 5. Gu Q, Koenig L, Faerberg J, Steinberg CR, Vaz C, Wheatley MP. The Medicare Hospital Readmissions Reduction Program: potential unintended consequences for hospitals serving vulnerable populations. Health services research. 2014;49(3):818–837.

- 6. Roberts ET, Zaslavsky AM, McWilliams JM. The Value-Based Payment Modifier: Program Outcomes and Implications for Disparities. Annals of internal medicine. 2018;168(4):255–265.

- 7. Shakir M, Armstrong K, Wasfy JH. Could Pay-for-Performance Worsen Health Disparities? Journal of general internal medicine. 2018;33(4):567–569.

- 8. Joynt KE, De Lew N, Sheingold SH, Conway PH, Goodrich K, Epstein AM. Should Medicare Value-Based Purchasing Take Social Risk into Account? The New England journal of medicine. 2017;376(6):510–513.

- 9. Hu J, Nerenz D. Relationship Between Stress Rankings and the Overall Hospital Star Ratings: An Analysis of 150 Cities in the United States. JAMA internal medicine. 2017;177(1):136–137.

- 10. Durfey SNM, Kind AJH, Gutman R, et al. Impact Of Risk Adjustment For Socioeconomic Status On Medicare Advantage Plan Quality Rankings. Health affairs. 2018;37(7):1065–1072.

- 11. U.S. Surgeon General. National Prevention Strategy: Elimination of Health Disparities. National Prevention Council; 2014. www.surgeongeneral.gov/nationalpreventionstrategy.

- 12. Tung EL, Cagney KA, Peek ME, Chin MH. Spatial Context and Health Inequity: Reconfiguring Race, Place, and Poverty. Journal of urban health: bulletin of the New York Academy of Medicine. 2017;94(6):757–763.

- 13. Kind AJH, Buckingham WR. Making Neighborhood-Disadvantage Metrics Accessible - The Neighborhood Atlas. The New England journal of medicine. 2018;378(26):2456–2458.

- 14. Ludwig J, Sanbonmatsu L, Gennetian L, et al. Neighborhoods, obesity, and diabetes--a randomized social experiment. The New England journal of medicine. 2011;365(16):1509–1519.

- 15. Yale New Haven Health Services Corporation/Center for Outcomes Research & Evaluation (YNHHSC/CORE). Overall Hospital Quality Star Rating on Hospital Compare Methodology Report. 2017; https://www.qualitynet.org/dcs/ContentServer?c=Page&pagename=QnetPublic%2FPage%2FQnetTier2&cid=1228775183434.

- 16. U.S. Census Bureau. American Community Survey 5-Year Estimates. 2015; http://factfinder.census.gov.

- 17. Kaiser Family Foundation. Hospital Beds per 1,000 Population by Ownership Type. 2016; https://www.kff.org/other/state-indicator/beds-by-ownership/.

- 18. U.S. Census Bureau. Centers of Population. 2015; https://www.census.gov/geo/reference/centersofpop.html. Accessed August 14, 2018.

- 19. The Dartmouth Atlas of Health Care. Research Methods Compendium. 2019; 11. Available at: http://archive.dartmouthatlas.org/downloads/methods/research_methods.pdf. Accessed May 15, 2019.

- 20. Medicare. Hospital Compare: Measures included by categories. 2018; https://www.medicare.gov/hospitalcompare/Data/Measure-groups.html. Accessed June 23, 2018.

- 21. Singh GK. Area deprivation and widening inequalities in US mortality, 1969–1998. American journal of public health. 2003;93(7):1137–1143.

- 22. Joynt KE, Orav EJ, Jha AK. Thirty-day readmission rates for Medicare beneficiaries by race and site of care. JAMA: the journal of the American Medical Association. 2011;305(7):675–681.

- 23. Casciano R, Massey DS. Neighborhoods, employment, and welfare use: assessing the influence of neighborhood socioeconomic composition. Social science research. 2008;37(2):544–558.

- 24. Fernandez RM. Race, spatial mismatch, and job accessibility: evidence from a plant relocation. Social science research. 2008;37(3):953–975.

- 25. Yale New Haven Health Services Corporation/Center for Outcomes Research & Evaluation (YNHHSC/CORE). Overall Hospital Quality Star Rating on Hospital Compare Methodology Report. 2017; https://www.qualitynet.org/dcs/ContentServer?c=Page&pagename=QnetPublic%2FPage%2FQnetTier2&cid=1228775183434. Accessed March 5, 2019.

- 26. Krumholz HM, Wang K, Normand SLT. Hospital-Readmission Risk - Isolating Hospital Effects. The New England journal of medicine. 2017;377(25):2505.

- 27. Alley DE, Asomugha CN, Conway PH, Sanghavi DM. Accountable Health Communities--Addressing Social Needs through Medicare and Medicaid. The New England journal of medicine. 2016;374(1):8–11.

- 28. National Quality Forum. Risk Adjustment for Socioeconomic Status or Other Sociodemographic Factors. Washington, DC: National Quality Forum; 2014.

- 29. U.S. Department of Health and Human Services, Office of the Assistant Secretary for Planning and Evaluation. Social Risk Factors and Performance Under Medicare’s Value-Based Purchasing Programs. Washington, DC: U.S. Department of Health and Human Services; 2016.

- 30. Dyrbye L, Herrin J, West CP, et al. Association of Racial Bias With Burnout Among Resident Physicians. JAMA network open. 2019;2(7):e197457.

- 31. Chin MH, Clarke AR, Nocon RS, et al. A roadmap and best practices for organizations to reduce racial and ethnic disparities in health care. Journal of general internal medicine. 2012;27(8):992–1000.

- 32. DeMeester RH, Xu LJ, Nocon RS, Cook SC, Ducas AM, Chin MH. Solving disparities through payment and delivery system reform: a RWJF program to achieve health equity. Health affairs. 2017.

- 33. Anderson AC, O’Rourke E, Chin MH, Ponce NA, Bernheim SM, Burstin H. Promoting Health Equity And Eliminating Disparities Through Performance Measurement And Payment. Health affairs. 2018;37(3):371–377.

- 34. Herrin J, St Andre J, Kenward K, Joshi MS, Audet AM, Hines SC. Community factors and hospital readmission rates. Health services research. 2015;50(1):20–39.

- 35. Rehnquist J. Trends in Urban Hospital Closure 1990–2000. Department of Health and Human Services; 2003.

- 36. QualityNet. Measure Methodology Reports. 2018; https://www.qualitynet.org/dcs/ContentServer?cid=%201219069855841&pagename=QnetPublic%2FPage%2FQnetTier3&c=Page. Accessed March 22, 2019.
