Abstract

The development of compressed sensing methods for magnetic resonance (MR) image reconstruction led to an explosion of research on models and optimization algorithms for MR imaging (MRI). Roughly 10 years after such methods first appeared in the MRI literature, the U.S. Food and Drug Administration (FDA) approved certain compressed sensing methods for commercial use, making compressed sensing a clinical success story for MRI. This review paper summarizes several key models and optimization algorithms for MR image reconstruction, including both the types of methods that have FDA approval for clinical use and more recent methods being considered in the research community that use data-adaptive regularizers. Many algorithms have been devised that exploit the structure of the system model and regularizers used in MRI; this paper strives to collect such algorithms in a single survey.

I. Introduction

A. Scope

Although the paper title begins with “optimization methods,” in practice one first defines a model and cost function, and then applies an optimization algorithm. There are several ways to partition the space of models, cost functions and optimization methods for MRI reconstruction, such as: smooth vs non-smooth cost functions, static vs dynamic problems, single-coil vs multiple-coil data. This paper focuses on the static reconstruction problem because the dynamic case is rich enough to merit its own survey paper. This paper emphasizes algorithms for multiple-coil data (parallel MRI) because modern systems all have multiple channels and advanced reconstruction methods with under-sampling are most likely to be used for parallel MRI scans. Main families of parallel MRI methods include “SENSE” methods that model the coil sensitivities in the image domain [1], “GRAPPA” methods that model the effect of coil sensitivity in k-space [2], and “calibrationless” methods that use low-rank or joint sparsity properties [3]. This paper considers all three approaches, emphasizing SENSE methods for simplicity (see footnote 1).

B. Measurement model

The signals recorded by the sensors (receive coils) in MR scanners are linear functions of the object’s transverse magnetization. That magnetization is a complicated and highly nonlinear function of the RF pulses, gradient waveforms, and tissue properties, governed by the physics of the Bloch equation [4]. Quantifying tissue properties using nonlinear models is a rich topic of its own [5], but we focus here on the problem of reconstructing images of the transverse magnetization from MR measurements.

Ignoring noise, a vector $s \in \mathbb{C}^M$ of signal samples recorded by an MR receive coil is related (typically) to a discretized version $x \in \mathbb{C}^N$ of the transverse magnetization via a linear Fourier relationship:

$$ s = Fx, \qquad F_{ij} = e^{-\imath 2\pi \vec{\nu}_i \cdot \vec{r}_j}, \quad i = 1, \ldots, M, \quad j = 1, \ldots, N, \tag{1} $$

where $\vec{\nu}_i$ denotes the k-space sample location of the ith sample (units cycles/cm) and $\vec{r}_j$ denotes the spatial coordinates of the center of the jth pixel (units cm). In the usual case where the pixel coordinates and k-space sample locations are both on appropriate Cartesian grids, matrix F is square and corresponds to the (2D or 3D) discrete Fourier transform (DFT). In this case $F^{-1} = \frac{1}{N} F'$, so reconstructing x from s is simply an inverse fast Fourier transform (FFT), and that approach is used in many clinical MR scans.
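As a small illustration of the fully sampled Cartesian case, the following sketch builds an explicit 1D DFT matrix and checks that multiplying by F′/N agrees with an inverse FFT. The 1D setting, the size `n = 8`, and the helper name `dft_matrix` are assumptions made only for this example.

```python
import numpy as np

def dft_matrix(n):
    """Explicit n x n 1D DFT matrix with entries exp(-2j*pi*i*j/n)."""
    idx = np.arange(n)
    return np.exp(-2j * np.pi * np.outer(idx, idx) / n)

n = 8  # hypothetical (tiny) number of pixels and k-space samples
rng = np.random.default_rng(0)
x_true = rng.standard_normal(n) + 1j * rng.standard_normal(n)

F = dft_matrix(n)
s = F @ x_true                     # noiseless fully sampled data, s = F x

x_matrix = (F.conj().T @ s) / n    # apply F^{-1} = F'/N explicitly
x_fft = np.fft.ifft(s)             # the same operation via the inverse FFT
```

The explicit matrix costs O(N²) storage and is shown only to make the relationship concrete; practical code would use the FFT alone.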

The reconstruction problem becomes more interesting when the k-space sample locations are on a non-Cartesian grid [6], when the scan is “accelerated” by recording M < N samples, when non-Fourier effects like magnetic field inhomogeneity are considered [7] and/or when there are multiple receive coils. In parallel MRI, let $s_l$ denote the samples recorded by the lth of L receive coils. Then one replaces the model (1) with

$$ s_l = F C_l x, \quad l = 1, \ldots, L, \tag{2} $$

where $C_l$ is an N × N diagonal matrix containing the coil sensitivity pattern of the lth coil on its diagonal. Note that F does not depend on l; all coils see the same k-space sampling pattern. Stacking up the measurements from all coils and accounting for noise yields the following basic forward model in MRI:

$$ y = Ax + \varepsilon, \qquad A = (I_L \otimes F)\, C, \qquad C = \begin{bmatrix} C_1 \\ \vdots \\ C_L \end{bmatrix}, \tag{3} $$

where $A \in \mathbb{C}^{ML \times N}$ denotes the system matrix, $y \in \mathbb{C}^{ML}$ denotes the measured k-space data, $x \in \mathbb{C}^N$ denotes the latent image, and $\varepsilon$ denotes the noise. The noise in k-space is well modeled as complex white Gaussian noise. For extensions that consider other physics effects like relaxation and field inhomogeneity, see [8].
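The stacked parallel MRI model can be applied without ever forming the system matrix. The sketch below assumes 2D Cartesian sampling described by a boolean `mask`, random (not physically realistic) sensitivity maps `smaps`, and hypothetical function names `forward` and `adjoint`; it multiplies by each coil map, applies an FFT, and keeps the sampled locations, with the adjoint reversing those steps.

```python
import numpy as np

def forward(x, smaps, mask):
    """A x: multiply by each coil map, apply the 2D DFT, keep sampled locations."""
    coil_images = smaps * x[None, :, :]                 # C_l x for every coil l
    kspace = np.fft.fft2(coil_images, axes=(-2, -1))    # same F for every coil
    return kspace[:, mask]                              # shape (L, M)

def adjoint(y, smaps, mask):
    """A' y: zero-fill, apply F' (unnormalized inverse DFT), sum conj(C_l) over coils."""
    ncoil, ny, nx = smaps.shape
    kspace = np.zeros((ncoil, ny, nx), dtype=complex)
    kspace[:, mask] = y
    coil_images = np.fft.ifft2(kspace, axes=(-2, -1)) * (ny * nx)   # F' = N F^{-1}
    return np.sum(np.conj(smaps) * coil_images, axis=0)

rng = np.random.default_rng(0)
ncoil, ny, nx = 4, 8, 8                                 # hypothetical tiny sizes
smaps = rng.standard_normal((ncoil, ny, nx)) + 1j * rng.standard_normal((ncoil, ny, nx))
mask = rng.random((ny, nx)) < 0.5                       # random under-sampling pattern
nsamp = int(mask.sum())
x_true = rng.standard_normal((ny, nx)) + 1j * rng.standard_normal((ny, nx))
noise = 0.01 * (rng.standard_normal((ncoil, nsamp)) + 1j * rng.standard_normal((ncoil, nsamp)))
y = forward(x_true, smaps, mask) + noise                # y = A x + noise
```

Checking that the inner products ⟨Ax, y⟩ and ⟨x, A′y⟩ agree is a common sanity test before handing such operator pairs to an iterative algorithm.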

The goal in MR image reconstruction is to recover x from y using the model (3). All MR image reconstruction problems are under-determined because the magnetization of the underlying object being scanned is a space-limited continuous-space function on $\mathbb{R}^3$, yet only a finite number of samples are recorded. Nevertheless, the convention in MRI is to treat the object as a finite-dimensional vector for which a set of M ≥ N appropriate Cartesian k-space samples is considered “fully sampled” and any M < N is considered “accelerated.” Sampling pattern design is a topic of ongoing interest, with renewed interest in data-driven methods [9].

The matrix F in (3) is known prior to the scan, because the k-space sample locations are controlled by the pulse sequence designer. In contrast, the coil sensitivity maps {Cl} depend on the exact configuration of the receive coils for each patient. To use the model (3), one must determine the sensitivity maps from some patient-specific calibration data, e.g., by joint estimation [10], regularization [11], or subspace methods [12].

II. Cost functions and algorithms

A. Quadratic problems

When ML ≥ N, i.e., when the total number of k-space samples acquired across all coils is at least the number of unknown image pixel values, the linear model (3) is over-determined. If additionally A is well conditioned, which depends on the sampling pattern and coil sensitivity maps, then it is reasonable to consider an ordinary least-squares estimator

$$ \hat{x} = \arg\min_x \|Ax - y\|_2^2 = (A'A)^{-1} A'y. \tag{4} $$

In particular, for fully sampled Cartesian k-space data where $F^{-1} = \frac{1}{N} F'$, this least-squares solution simplifies to $\hat{x} = (C'C)^{-1} C' (I_L \otimes F^{-1})\, y$, which is trivial to implement because each Cl is diagonal. This is known as the optimal coil combination approach [13]. For regularly under-sampled Cartesian data, where only every nth row of k-space is collected, the matrix F′F has a simple block structure with n × n blocks that facilitates non-iterative block-wise computation known as SENSE reconstruction [1]. This form of least-squares estimation is used widely in clinical MR systems.
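For fully sampled Cartesian data, the least-squares estimate reduces to an inverse FFT per coil followed by a pixel-wise weighted combination, because C′C is diagonal. A minimal sketch, assuming random sensitivity maps and the hypothetical function name `coil_combine`:

```python
import numpy as np

def coil_combine(kspace, smaps):
    """Least-squares coil combination for fully sampled Cartesian k-space.

    kspace, smaps: arrays of shape (L, ny, nx).
    """
    coil_images = np.fft.ifft2(kspace, axes=(-2, -1))      # F^{-1} y_l per coil
    numer = np.sum(np.conj(smaps) * coil_images, axis=0)   # C' applied pixel-wise
    denom = np.sum(np.abs(smaps) ** 2, axis=0)             # diagonal of C'C
    return numer / denom

rng = np.random.default_rng(0)
ncoil, ny, nx = 4, 16, 16                                  # hypothetical sizes
smaps = rng.standard_normal((ncoil, ny, nx)) + 1j * rng.standard_normal((ncoil, ny, nx))
x_true = rng.standard_normal((ny, nx)) + 1j * rng.standard_normal((ny, nx))
kspace = np.fft.fft2(smaps * x_true[None, :, :], axes=(-2, -1))   # noiseless data

x_hat = coil_combine(kspace, smaps)
```

With noiseless data the estimate matches the true image to numerical precision; with noise it is the least-squares fit under the white-noise model.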

B. Regularized least-squares

For under-sampled problems (ML < N) the LS solution (4) is not unique. Furthermore, even when ML ≥ N often A is poorly conditioned, particularly for non-Cartesian sampling. Some form of regularization is needed in such cases. Some early MRI reconstruction work used quadratically regularized cost functions leading to optimization problems of the form:

$$ \hat{x} = \arg\min_x \frac{1}{2}\|Ax - y\|_2^2 + \frac{\beta}{2}\|Tx\|_2^2, \tag{5} $$

where β > 0 denotes a regularization parameter and T denotes a K × N matrix transform such as finite differences. The conjugate gradient (CG) algorithm is well-suited to such quadratic cost functions [6], [7]. The Hessian matrix A′A + βT′T often is approximately Toeplitz [14], so CG with circulant preconditioning is particularly effective. Although the quadratically regularized least-squares cost function (5) is passé in the compressed sensing era, CG is often an inner step for optimizing more complicated cost functions [15].
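To make the role of CG concrete, here is a minimal unpreconditioned CG sketch for the normal equations (A′A + βT′T)x = A′y of a quadratically regularized problem. The dense random `A`, the 1D finite-difference `T`, the problem sizes, and the stopping rule are all assumptions for illustration; a practical MRI code would apply A through FFTs and add a circulant preconditioner.

```python
import numpy as np

def conjugate_gradient(apply_h, b, x0, max_iter=500, tol=1e-10):
    """Solve H x = b for Hermitian positive definite H, given only x -> H x."""
    x = x0.copy()
    r = b - apply_h(x)                  # residual
    p = r.copy()                        # search direction
    rs_old = np.vdot(r, r).real
    b_norm = np.linalg.norm(b)
    for _ in range(max_iter):
        if np.sqrt(rs_old) <= tol * b_norm:
            break
        hp = apply_h(p)
        alpha = rs_old / np.vdot(p, hp).real
        x = x + alpha * p
        r = r - alpha * hp
        rs_new = np.vdot(r, r).real
        p = r + (rs_new / rs_old) * p
        rs_old = rs_new
    return x

rng = np.random.default_rng(0)
m, n, beta = 24, 32, 1.0                                   # under-determined toy problem
A = (rng.standard_normal((m, n)) + 1j * rng.standard_normal((m, n))) / np.sqrt(m)
T = np.diff(np.eye(n), axis=0)                             # (n-1) x n finite differences
x_true = np.repeat([1.0, 3.0, 2.0, 0.5], n // 4).astype(complex)
y = A @ x_true + 0.01 * (rng.standard_normal(m) + 1j * rng.standard_normal(m))

def apply_hessian(x):
    """Multiply by A'A + beta T'T without forming the matrix."""
    return A.conj().T @ (A @ x) + beta * (T.T @ (T @ x))

x_cg = conjugate_gradient(apply_hessian, A.conj().T @ y, np.zeros(n, dtype=complex))
```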

C. Edge-preserving regularization

The drawback of the quadratically regularized cost function (5) with T as finite differences is that it blurs image edges. To avoid this blur, one can replace the quadratic regularizer with a nonquadratic function ψ(Tx), where typically ψ is convex and smooth, such as the Huber function, a hyperbola, or the Fair potential function ψ(z) = δ² (|z/δ| − log(1 + |z/δ|)), among others. The resulting optimization problem is:

$$ \hat{x} = \arg\min_x \Psi(x), \qquad \Psi(x) = \frac{1}{2}\|Ax - y\|_2^2 + \beta \sum_{k=1}^{K} \psi([Tx]_k). \tag{6} $$
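The Fair potential is simple to evaluate, and its derivative has a closed form, which is what gradient-based methods need. The sketch below uses hypothetical function names and real-valued arguments for the derivative; it illustrates that the potential behaves like z²/2 near zero and like δ|z| for |z| ≫ δ.

```python
import numpy as np

def fair_potential(z, delta):
    """Fair potential: delta^2 * (|z/delta| - log(1 + |z/delta|))."""
    a = np.abs(z) / delta
    return delta ** 2 * (a - np.log1p(a))

def fair_derivative(z, delta):
    """Derivative of the Fair potential for real z: z / (1 + |z|/delta)."""
    return z / (1 + np.abs(z) / delta)

delta = 0.1
small = fair_potential(1e-3, delta)    # close to z^2 / 2 = 5e-7 (quadratic regime)
large = fair_potential(100.0, delta)   # close to delta * |z| = 10 (linear regime)
```

The bounded derivative for large |z| is what limits the penalty on edges compared with the quadratic regularizer.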

Such methods have their roots in Bayesian methods based on Markov random fields. The nonlinear CG algorithm is an effective optimization method for cost functions with such smooth edge-preserving regularizers. Another appropriate optimization algorithm is the optimized gradient method (OGM), a first-order method having optimal worst-case performance among all first-order algorithms for convex cost functions with Lipschitz continuous gradients [16]. OGM has a worst-case convergence bound that is a factor of two better than that of Nesterov’s fast gradient method [17].

Fig. 1 compares two of these methods for the case where T is finite differences and ψ is the Fair potential with δ = 0.1, which approximates TV fairly closely while being smooth.

Fig. 1.

Comparison of CG and OGM convergence for single-coil MRI reconstruction with edge-preserving regularization (akin to anisotropic TV with corner rounding). From left to right: Top row: k-space sampling pattern where only 34% of the phase-encodes are collected, true image, initial image from zero-filled k-space data, minimizer $\hat{x}$ of (6). (Both CG and OGM converge to the same limit $\hat{x}$.) Bottom row: cost function $\Psi(x_k)$ in (6) and normalized root mean squared error (NRMSE) $\|x_k - x\|_2 / \|x\|_2$ versus iteration k.

D. Sparsity models: synthesis form

Scan time in MRI is proportional to the number of k-space samples recorded. Reducing scan time in MRI can reduce cost, improve patient comfort, and reduce motion artifacts. Reducing the number of k-space samples ML to well below N necessitates stronger modeling assumptions about x, and sparsity models are prevalent [18], [19]. Two main categories of sparsity models are the synthesis approach and the analysis approach. In a synthesis model, one assumes x = Bz for some N × K matrix B, where the coefficient vector $z \in \mathbb{C}^K$ should be sparse. In an analysis model, one assumes Tx is sparse, for some K × N transformation matrix T.

A typical cost function for a synthesis model is

$$ \hat{x} = B\hat{z}, \qquad \hat{z} = \arg\min_z \frac{1}{2}\|ABz - y\|_2^2 + \beta\|z\|_1, \tag{7} $$

where the 1-norm is a convex relaxation of the ℓ0 counting measure that encourages z to be sparse. Typically B is a wide matrix (often called an over-complete dictionary) so that one can represent x well using only a fraction of the columns of B. The classical approach for (7) is the iterative soft thresholding algorithm (ISTA) [20], also known as the proximal gradient method (PGM) [21] and proximal forward-backward splitting [22], having the simple form

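$$ [z_{k+1}]_j = \mathrm{soft}\Big( \big[ z_k - D^{-1} B'A'(ABz_k - y) \big]_j,\ \beta / d_j \Big), \quad j = 1, \ldots, K, \tag{8} $$

where the soft thresholding function is defined by soft(z, c) = sign(z) max(|z| − c, 0) and D = diag{d} is any positive definite diagonal matrix such that D − B′A′AB is positive semidefinite [23].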
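A minimal ISTA sketch for the synthesis problem follows, under several assumptions: the product AB is a small dense random matrix named `AB`, the majorizer is the scalar choice D = dI with d equal to the squared spectral norm of AB, and the names `soft` and `ista` are hypothetical. Each iteration is a gradient step on the data-fit term followed by complex soft thresholding.

```python
import numpy as np

def soft(z, c):
    """Complex soft thresholding: shrink magnitudes by c > 0, keep the phase."""
    mag = np.abs(z)
    return z * np.maximum(mag - c, 0) / np.maximum(mag, c)

def ista(AB, y, beta, niter):
    """ISTA for 0.5*||AB z - y||^2 + beta*||z||_1 with D = d I."""
    d = np.linalg.norm(AB, 2) ** 2                 # squared spectral norm of AB
    z = np.zeros(AB.shape[1], dtype=complex)
    costs = []
    for _ in range(niter):
        grad = AB.conj().T @ (AB @ z - y)          # gradient of the data-fit term
        z = soft(z - grad / d, beta / d)           # proximal (thresholding) step
        costs.append(0.5 * np.linalg.norm(AB @ z - y) ** 2 + beta * np.sum(np.abs(z)))
    return z, np.array(costs)

rng = np.random.default_rng(0)
m, k = 20, 40                                      # wide (over-complete) toy problem
AB = (rng.standard_normal((m, k)) + 1j * rng.standard_normal((m, k))) / np.sqrt(m)
z_true = np.zeros(k, dtype=complex)
z_true[[3, 17, 30]] = [2.0, -1.5j, 1.0 + 1.0j]     # sparse coefficient vector
y = AB @ z_true + 0.01 * (rng.standard_normal(m) + 1j * rng.standard_normal(m))

z_hat, costs = ista(AB, y, beta=0.05, niter=300)
```

With this step size the cost sequence is nonincreasing, which makes ISTA a convenient baseline even though faster methods exist.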

The ISTA update (8) applies to the 1-norm in (7). If we replace that 1-norm with some other function ψ(z) then one replaces (8) with the more general PGM update of the form

$$ z_{k+1} = \mathrm{prox}_{(\beta/d)\psi}\Big( z_k - \tfrac{1}{d} B'A'(ABz_k - y) \Big), $$

written here for the scalar case D = dI, where the proximal operator of a function f is defined by

$$ \mathrm{prox}_f(v) \triangleq \arg\min_x \frac{1}{2}\|v - x\|_2^2 + f(x). \tag{9} $$

Traditionally $D = \left|\!\left|\!\left| B'A'AB \right|\!\right|\!\right|_2 I$, but computing that spectral norm (via the power iteration) requires considerable computation for parallel MRI problems in general. However, for Cartesian sampling, F′F ≼ NI so it suffices to have NB′C′CB ≼ D. Often the sensitivity maps are normalized such that C′C = I in which case NB′B ≼ D suffices. If in addition B′ is a Parseval tight frame, then B′B ≼ I so using D = NI is appropriate. For non-Cartesian sampling, or non-normalized sensitivity maps, or general choices of B, finding D is more complicated [23].

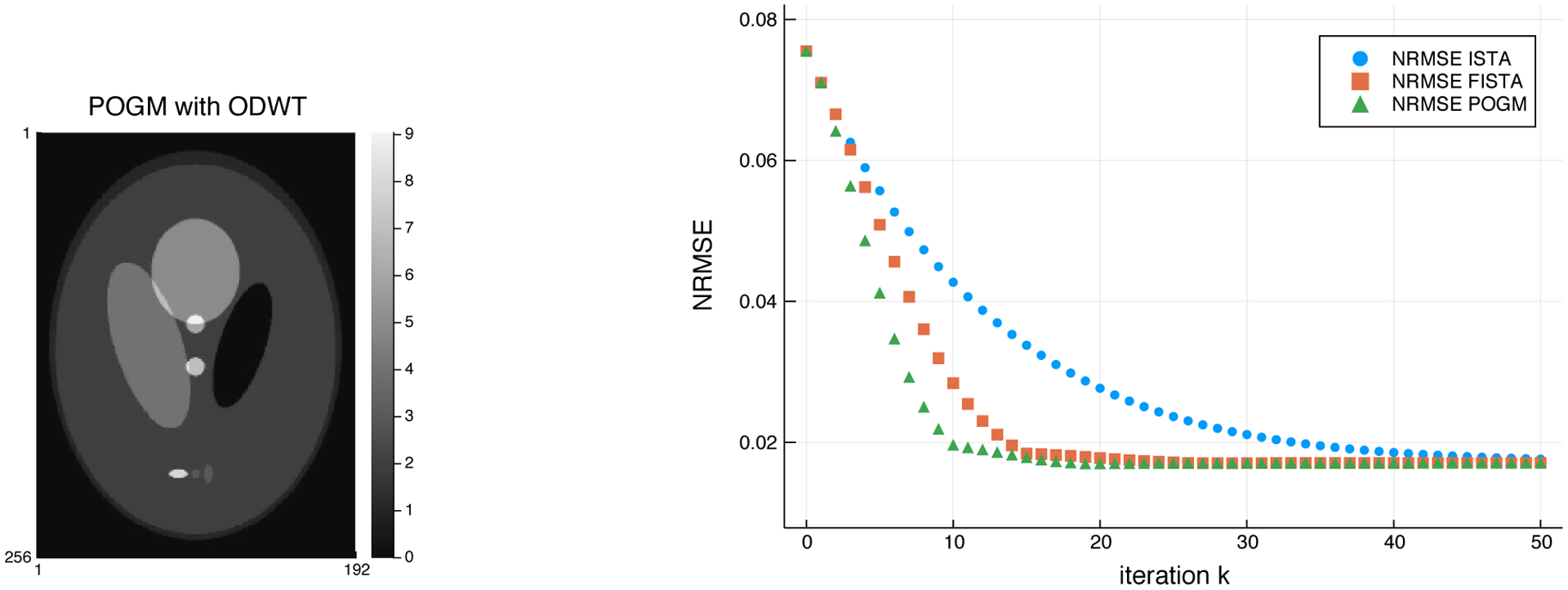

Although ISTA is simple, it has an undesirably slow O(1/k) convergence bound, where k denotes the number of iterations. This limitation was first overcome by the fast iterative soft thresholding algorithm (FISTA) [24], also known as the fast proximal gradient method (FPGM), which has an O(1/k²) convergence bound. A recent extension is the proximal optimized gradient method (POGM), whose worst-case convergence bound is about a factor of two better than that of FISTA/FPGM [25]. Both FISTA and POGM are essentially as simple to implement as (8). Recent MRI studies have shown POGM converging faster than FISTA, as one would expect based on the convergence bounds [26], particularly when combined with adaptive restart [25]. So POGM (with restart) is a recommended method for optimization problems having the form (7). Figure 3 provides POGM pseudo-code for solving composite optimization problems like the MRI synthesis reconstruction model (7).
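The following is a sketch of POGM without restart, written from the commonly published form of the algorithm rather than from the pseudo-code figure of this paper, so the variable names (`w`, `z`, `theta`, `gamma`) and the test problem are assumptions. It minimizes f(x) + g(x) given the gradient of f, a Lipschitz constant `lip` of that gradient, and a function `prox_g(v, step)` that evaluates the proximal operator of `step * g`.

```python
import numpy as np

def soft(z, c):
    """Complex soft thresholding: shrink magnitudes by c > 0, keep the phase."""
    mag = np.abs(z)
    return z * np.maximum(mag - c, 0) / np.maximum(mag, c)

def pogm(grad_f, prox_g, lip, x0, niter):
    """Proximal optimized gradient method (no restart) for min f(x) + g(x)."""
    x = x0.copy()
    w_old = x0.copy()
    z = x0.copy()
    theta_old, gamma_old = 1.0, 1.0
    for k in range(1, niter + 1):
        factor = 8.0 if k == niter else 4.0        # last iteration uses a larger factor
        theta = 0.5 * (1 + np.sqrt(factor * theta_old ** 2 + 1))
        gamma = (2 * theta_old + theta - 1) / (lip * theta)
        w = x - grad_f(x) / lip                    # ordinary gradient step
        z = (w
             + (theta_old - 1) / theta * (w - w_old)          # Nesterov-type momentum
             + theta_old / theta * (w - x)                    # OGM-type momentum
             + (theta_old - 1) / (lip * gamma_old * theta) * (z - x))  # POGM term
        x = prox_g(z, gamma)                       # proximal step with step size gamma
        w_old, theta_old, gamma_old = w, theta, gamma
    return x

rng = np.random.default_rng(0)
m, k, beta = 20, 40, 0.05                          # toy synthesis-type problem
AB = (rng.standard_normal((m, k)) + 1j * rng.standard_normal((m, k))) / np.sqrt(m)
z_true = np.zeros(k, dtype=complex)
z_true[[3, 17, 30]] = [2.0, -1.5j, 1.0 + 1.0j]
y = AB @ z_true + 0.01 * (rng.standard_normal(m) + 1j * rng.standard_normal(m))

lip = np.linalg.norm(AB, 2) ** 2
grad_f = lambda z: AB.conj().T @ (AB @ z - y)
prox_g = lambda v, step: soft(v, step * beta)
cost = lambda z: 0.5 * np.linalg.norm(AB @ z - y) ** 2 + beta * np.sum(np.abs(z))

z0 = np.zeros(k, dtype=complex)
z_pogm = pogm(grad_f, prox_g, lip, z0, niter=100)
```

When g is zero the proximal step is the identity and the recursion reduces to OGM. The total iteration count must be chosen in advance because the final iteration uses a different momentum factor; see [25] for the restart variant.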

Fig. 2.

Comparison of ISTA/PGM, FISTA/FPGM and POGM for single-coil MRI reconstruction with orthogonal discrete wavelet transform sparsity regularizer using the 1-norm. Minimizer of (7); NRMSE versus iteration k. FISTA requires about 40% more iterations to converge than POGM, consistent with the 2× better worst-case bound of POGM.

Fig. 2 shows that POGM converges faster than FISTA and ISTA for minimizing (7).

E. Sparsity models: analysis form

A potential drawback of the synthesis formulation (7) is that x ≈ Bz may be a more realistic assumption than the strict equality x = Bz when z is sparse. The analysis approach avoids constraining x to lie in any such subspace (or union of subspaces when B is wide). For an analysis form sparsity model, a typical optimization problem involves a composite cost function consisting of the sum of a smooth term and a non-smooth term:

$$ \hat{x} = \arg\min_x \frac{1}{2}\|Ax - y\|_2^2 + \beta\|Tx\|_1, \tag{10} $$

where T is a sparsifying operator such as a wavelet transform, or finite differences, or both [18]. The expression (10) is general enough to handle combinations of multiple regularizers, such as wavelets and finite differences [19], by stacking the operators in T and possibly allowing a weighted 1-norm. When T is finite differences, the regularizer is called total variation (TV) [11], and combinations of TV and wavelet transforms are useful [19]. Although the details are proprietary, the FDA-approved method for compressed sensing MRI for at least one manufacturer is related to (10) [27].

When T is invertible, such as an orthogonal wavelet transform, one rewrites the optimization problem (10) as

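$$ \hat{x} = T^{-1}\hat{z}, \qquad \hat{z} = \arg\min_z \frac{1}{2}\|AT^{-1}z - y\|_2^2 + \beta\|z\|_1, $$

which is simply a special case of (7) with $B = T^{-1}$. Typically B is wide and T is tall, so this simplification is not possible in general.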

In the general case (10) where T is not invertible, the optimization problem is much harder than (7) due to the non-differentiability of the 1-norm with the matrix T. The non-invertible case (with redundant Haar wavelets) is used clinically [27]. The PGM for (10) is

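$$ x_{k+1} = \mathrm{prox}_{(\beta/L_f)\|T\cdot\|_1}(\tilde{x}_k) = \arg\min_x \frac{1}{2}\|x - \tilde{x}_k\|_2^2 + \frac{\beta}{L_f}\|Tx\|_1, \tag{11} $$

where $\tilde{x}_k \triangleq x_k - \frac{1}{L_f} A'(Ax_k - y)$ denotes the usual gradient update and the Lipschitz constant is $L_f = \left|\!\left|\!\left| A'A \right|\!\right|\!\right|_2$ (subscripted here to distinguish it from the number of coils L). Unfortunately there is no simple solution for computing the proximal operator (9) in (11) in general, so inner iterative methods are required, typically involving dual formulations [24]. This challenge makes PGM, FPGM, and POGM less attractive for (10) and has led to a vast literature on algorithms for problems like (10), with no consensus on what is best. The difficulty of (11) is the main drawback of analysis regularization, whereas a possible drawback of the synthesis regularization in (7) is that often K ≫ N for overcomplete B.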

1). Approximate methods:

One popular “work around” option is to “round the corner” of the 1-norm, making smooth approximations like $|z| \approx \sqrt{|z|^2 + \epsilon}$ for some small ε > 0. This approximation is simply the hyperbola function that has a long history in the edge-preserving regularization literature. All of the gradient-based algorithms mentioned for edge-preserving regularization above are suitable candidates when a smooth function replaces the 1-norm. Smooth functions can shrink values towards zero, but their proximal operators never have a thresholding effect that induces sparsity by setting many values exactly to zero. Whether a thresholding effect is truly essential is an open question. Hereafter we focus on methods that tackle the 1-norm directly without any such approximations.
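A quick numerical look at corner rounding, assuming the hyperbola form sqrt(|z|² + ε) with a hypothetical value ε = 1e-4: the approximation error is largest at z = 0, where it equals sqrt(ε), and it vanishes as |z| grows.

```python
import numpy as np

def hyperbola(z, eps):
    """Smooth approximation to |z| obtained by rounding the corner at zero."""
    return np.sqrt(np.abs(z) ** 2 + eps)

eps = 1e-4
z = np.linspace(-2, 2, 401)
gap = hyperbola(z, eps) - np.abs(z)    # approximation error, always positive
```

The parameter ε therefore trades accuracy of the approximation against smoothness (and hence conditioning) of the resulting cost function.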

2). Variable splitting methods:

Variable splitting methods replace (10) with an exactly equivalent constrained minimization problem involving an auxiliary variable such as z = Tx, e.g.,

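$$ \arg\min_{x,\,z} \frac{1}{2}\|Ax - y\|_2^2 + \beta\|z\|_1 \quad \text{s.t.} \quad z = Tx. \tag{12} $$

This approach underlies the split Bregman algorithm [28], various augmented Lagrangian methods [29], and the alternating direction method of multipliers (ADMM) [30]. The augmented Lagrangian for (12) is

$$ \mathcal{L}(x, z; \gamma, \mu) = \frac{1}{2}\|Ax - y\|_2^2 + \beta\|z\|_1 + \mathrm{real}\{\langle \gamma,\, Tx - z \rangle\} + \frac{\mu}{2}\|Tx - z\|_2^2, $$

where $\gamma \in \mathbb{C}^K$ denotes the vector of Lagrange multipliers and μ > 0 is an AL penalty parameter that affects the convergence rate but not the final image. Defining the scaled dual variable $\eta \triangleq \frac{1}{\mu}\gamma$ and completing the square leads to the following scaled augmented Lagrangian:

$$ \mathcal{L}(x, z; \eta, \mu) = \frac{1}{2}\|Ax - y\|_2^2 + \beta\|z\|_1 + \frac{\mu}{2}\Big( \|Tx - z + \eta\|_2^2 - \|\eta\|_2^2 \Big). $$

An augmented Lagrangian approach alternates between descent updates of the primal variables x, z and an ascent update of the scaled dual variable η. The z update is simply soft thresholding:

$$ z_{k+1} = \mathrm{soft}(Tx_k + \eta_k,\ \beta/\mu). $$

The x update minimizes a quadratic function:

$$ x_{k+1} = \arg\min_x \frac{1}{2}\|Ax - y\|_2^2 + \frac{\mu}{2}\|Tx - z_{k+1} + \eta_k\|_2^2 = (A'A + \mu T'T)^{-1}\big( A'y + \mu T'(z_{k+1} - \eta_k) \big). $$

A few CG iterations are a natural choice for approximating the x update. Finally the η update is

$$ \eta_{k+1} = \eta_k + (Tx_{k+1} - z_{k+1}). $$

The unit step size here ensures dual feasibility [31]. A drawback of variable splitting methods is the need to select the parameter μ. Adaptive methods have been proposed to help with this tuning [31].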
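The three updates described above (soft thresholding for z, a quadratic solve for x, and a unit-step ascent for the scaled dual variable η) can be sketched as follows. This is an illustration under stated assumptions: a small dense random `A`, a 1D finite-difference `T`, a hand-picked penalty `mu = 1`, and an exact linear solve standing in for the few CG iterations one would use at realistic sizes.

```python
import numpy as np

def soft(z, c):
    """Complex soft thresholding: shrink magnitudes by c > 0, keep the phase."""
    mag = np.abs(z)
    return z * np.maximum(mag - c, 0) / np.maximum(mag, c)

def admm_analysis(A, T, y, beta, mu, niter):
    """ADMM sketch for 0.5*||A x - y||^2 + beta*||z||_1 subject to z = T x."""
    n = A.shape[1]
    x = np.zeros(n, dtype=complex)
    eta = np.zeros(T.shape[0], dtype=complex)      # scaled dual variable
    H = A.conj().T @ A + mu * (T.conj().T @ T)     # matrix of the quadratic x update
    Ahy = A.conj().T @ y
    for _ in range(niter):
        z = soft(T @ x + eta, beta / mu)           # z update: soft thresholding
        x = np.linalg.solve(H, Ahy + mu * (T.conj().T @ (z - eta)))  # x update
        eta = eta + (T @ x - z)                    # dual ascent with unit step
    return x, z

rng = np.random.default_rng(0)
m, n, beta, mu = 24, 32, 0.1, 1.0
A = (rng.standard_normal((m, n)) + 1j * rng.standard_normal((m, n))) / np.sqrt(m)
T = np.diff(np.eye(n), axis=0)                     # (n-1) x n finite differences
x_true = np.repeat([1.0, 3.0, 2.0, 0.5], n // 4).astype(complex)
y = A @ x_true + 0.01 * (rng.standard_normal(m) + 1j * rng.standard_normal(m))

def cost(x):
    """Analysis-form cost: data fit plus beta times the 1-norm of T x."""
    return 0.5 * np.linalg.norm(A @ x - y) ** 2 + beta * np.sum(np.abs(T @ x))

x_admm, z_admm = admm_analysis(A, T, y, beta, mu, niter=2000)
```

Changing `mu` alters how quickly the constraint residual ‖Tx − z‖ shrinks, which is the tuning burden noted above.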

One could apply ADMM to the synthesis regularized problem (7), though again it would require parameter tuning that is unnecessary with POGM.

The conventional variable split in (12) ignores the specific structure of the MRI system matrix A in (3). Important properties of A include the fact that F′F is circulant (for Cartesian sampling) or Toeplitz (for non-Cartesian sampling) and that each coil sensitivity matrix Cl is diagonal. In contrast, the Gram matrix A′A for parallel MRI is harder to precondition, though possible [32]. An alternative splitting that simplifies the updates is [29]:

$$ \arg\min_{x,\,u,\,v,\,z} \frac{1}{2}\|F_L u - y\|_2^2 + \beta\|z\|_1 \quad \text{s.t.} \quad u = Cx, \quad z = Tv, \quad v = x, \tag{13} $$

where $F_L \triangleq I_L \otimes F$. With this splitting, the z update again is simply soft thresholding, and the x update involves the diagonal matrix C′C which is trivial. The v update involves the matrix T′T that is circulant for periodic boundary conditions or is very well suited to a circulant preconditioner otherwise, using simple FFT operations. The u update involves the matrix F′F that is circulant or Toeplitz. This approach exploits the structure of A to simplify the updates; the primary drawback is that it requires selecting even more AL penalty parameters; condition number criteria can be helpful [29]. Another splitting with fewer auxiliary variables leads to an inner update step that requires solving denoising problems similar to (11).

3). Primal-dual methods:

A key idea behind duality-based methods is the fact:

$$ \|v\|_1 = \max_{z \,:\, \|z\|_\infty \le 1} \mathrm{real}\{z'v\}. $$

Thus the (nonsmooth) analysis regularized problem (10) is equivalent to this constrained problem:

$$ \arg\min_x \max_{z \in \mathcal{Z}} \frac{1}{2}\|Ax - y\|_2^2 + \beta\, \mathrm{real}\{z'Tx\}, \tag{14} $$

where $\mathcal{Z} \triangleq \{z \in \mathbb{C}^K : \|z\|_\infty \le 1\}$. The primal-dual methods typically alternate between updating the primal variable x and the dual variable z, using more convenient alternatives to (14) that involve separate multiplication by A and by A′ without requiring inner CG iterations. These methods provide convergence guarantees and acceleration techniques that lead to O(1/k²) rates [33]. A drawback of such methods is they typically require power iterations to find a Lipschitz constant, and, like AL methods, have tuning parameters that affect the practical convergence rates. Finding a simple, convergent, and tuning-free method for the analysis regularized problem (10) remains an important open problem.
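The duality fact underlying these methods, namely that the 1-norm of v equals the maximum of real{z′v} over vectors z whose entries have magnitude at most one, is easy to check numerically. In this sketch (random test vectors, hypothetical variable names) the maximizer is the vector of unit-modulus phases of v, and any other feasible z gives a value that is no larger.

```python
import numpy as np

rng = np.random.default_rng(0)
v = rng.standard_normal(16) + 1j * rng.standard_normal(16)

z_best = np.exp(1j * np.angle(v))             # unit-modulus "sign" of each entry of v
dual_best = np.vdot(z_best, v).real           # real{z'v} at the maximizer

z_other = rng.standard_normal(16) + 1j * rng.standard_normal(16)
z_other /= np.max(np.abs(z_other))            # scale so the infinity norm equals 1
dual_other = np.vdot(z_other, v).real         # value at some other feasible point
```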

F. Patch-based sparsity models

Using (10) with a finite-difference regularizer is essentially equivalent to using patches of size 2×1. It is plausible that one can regularize better by considering larger patches that provide more context for distinguishing signal from noise. There are two primary modes of patch-based regularization: synthesis models and analysis methods.

A typical synthesis approach attempts to represent each patch using a sparse linear combination of atoms from some signal patch dictionary. Let Pp denote the d × N matrix that extracts the pth of P patches (having d pixels) when multiplied by an image vector x. Then the synthesis model is that Ppx ≈ Dzp where D is a d × J dictionary, such as the discrete cosine transform (DCT) [34], and $z_p \in \mathbb{C}^J$ is a sparse coefficient vector for the pth patch. Under this model, a natural regularizer, with sparsity parameter α > 0, is

$$ R(x) = \min_{\{z_p\}} \sum_{p=1}^{P} \left( \frac{1}{2}\|P_p x - D z_p\|_2^2 + \alpha\|z_p\|_1 \right). \tag{15} $$

See [34] for an extension to the case of multiple images. The regularizer has an inner minimization over the sparse coefficients {zp}, so the overall problem involves both optimizing the image x and those coefficients. This structure lends itself to alternating minimization algorithms. The work in [34] used ISTA for updating zp; the results in Fig. 2 suggest that POGM may be beneficial.

A typical analysis approach for patches assumes there is a sparsifying transform Ω such that ΩPpx tend to be sparse. For example, [35] uses a directional wavelet transform for each patch. Under this model, a natural regularizer is

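$$ R(x) = \min_{\{z_p\}} \sum_{p=1}^{P} \left( \frac{1}{2}\|\Omega P_p x - z_p\|_2^2 + \alpha\|z_p\|_1 \right), \tag{16} $$

where α > 0 controls the sparsity of the transform coefficients. Again a double minimization over the image x and the transform coefficients {zp} is needed, so alternating minimization algorithms are natural. For alternating minimization (block coordinate descent), the update of each zp is simply soft thresholding, and the update of x is a quadratic problem involving $A'A + \beta \sum_{p=1}^{P} P_p' \Omega' \Omega P_p$. When the transform Ω is unitary and the patches are selected with periodic boundary conditions and a stride of one pixel, each pixel belongs to exactly d patches, so this simplifies to A′A + βdI (or to A′A + βI if the patch operators are scaled by 1/√d). A few inner iterations of the (preconditioned) CG algorithm are useful for the x update. Under these assumptions, and using just a single gradient descent update for x, an alternating minimization algorithm for least-squares with regularizer (16) simply alternates between a denoising step and a gradient step:

$$ x_{k+1} = x_k - \frac{1}{L_f} \left( A'(Ax_k - y) + \beta \Big( d\, x_k - \sum_{p=1}^{P} P_p' \Omega'\, \mathrm{soft}(\Omega P_p x_k,\ \alpha) \Big) \right), \tag{17} $$

where $L_f = \left|\!\left|\!\left| A'A \right|\!\right|\!\right|_2 + \beta d$ is a Lipschitz constant for the gradient of the quadratic terms.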

For this algorithm the cost function is monotonically nonincreasing.
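The alternation between patch-wise soft thresholding and a single gradient step can be sketched for a 1D signal as follows. The setup is an assumption made for illustration: periodic stride-one patches of length `d`, a unitary DFT as the patch transform `omega`, unscaled 0/1 patch extraction (so that summing the extracted patches back gives d times the signal), a dense random `A`, and hypothetical function names throughout.

```python
import numpy as np

def soft(z, c):
    """Complex soft thresholding: shrink magnitudes by c > 0, keep the phase."""
    mag = np.abs(z)
    return z * np.maximum(mag - c, 0) / np.maximum(mag, c)

def patch_indices(n, d):
    """Index array whose row p lists the d (periodic) samples of patch p."""
    return (np.arange(n)[:, None] + np.arange(d)[None, :]) % n

def extract_patches(x, d):
    """Stack P_p x for all N periodic stride-one patches; shape (N, d)."""
    return x[patch_indices(x.size, d)]

def patches_adjoint(patches):
    """Compute sum_p P_p' (patch p) by adding each patch back at its location."""
    n, d = patches.shape
    out = np.zeros(n, dtype=complex)
    np.add.at(out, patch_indices(n, d), patches)
    return out

def patch_transform_recon(A, y, omega, beta, alpha, niter):
    """Alternate patch-wise soft thresholding with one gradient step on x."""
    n, d = A.shape[1], omega.shape[0]
    lip = np.linalg.norm(A, 2) ** 2 + beta * d          # Lipschitz constant
    x = np.zeros(n, dtype=complex)
    costs = []
    for _ in range(niter):
        z = soft(extract_patches(x, d) @ omega.T, alpha)        # denoising step
        denoised_sum = patches_adjoint(z @ omega.conj())         # sum_p P_p' Omega' z_p
        grad = A.conj().T @ (A @ x - y) + beta * (d * x - denoised_sum)
        x = x - grad / lip                                       # gradient step
        resid = extract_patches(x, d) @ omega.T - z
        costs.append(0.5 * np.linalg.norm(A @ x - y) ** 2
                     + beta * (0.5 * np.linalg.norm(resid) ** 2 + alpha * np.sum(np.abs(z))))
    return x, np.array(costs)

rng = np.random.default_rng(0)
m, n, d = 24, 32, 4
A = (rng.standard_normal((m, n)) + 1j * rng.standard_normal((m, n))) / np.sqrt(m)
omega = np.fft.fft(np.eye(d)) / np.sqrt(d)              # unitary d x d transform
x_true = np.repeat([1.0, 3.0, 2.0, 0.5], n // 4).astype(complex)
y = A @ x_true + 0.01 * (rng.standard_normal(m) + 1j * rng.standard_normal(m))

x_hat, costs = patch_transform_recon(A, y, omega, beta=0.5, alpha=0.1, niter=50)
```

The recorded joint cost over the image and the patch coefficients does not increase from one iteration to the next, because the thresholding step minimizes exactly over the coefficients and the gradient step uses a step size of one over the Lipschitz constant.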

G. Adaptive regularization

The patch dictionary D in (15) or the sparsifying transform Ω in (16) can be chosen based on mathematical models like the DCT, or they can be learned from a population of preexisting training data and then used in (15) or (16) for subsequent patients. A third possibility is to adapt D or Ω to each specific patient [36]. The “dictionary learning MRI” (DLMRI) approach [36] uses a non-convex regularizer of the following form:

$$ R(x) = \min_{D \in \mathcal{D}}\ \min_{\{z_p\}} \sum_{p=1}^{P} \left( \frac{1}{2}\|P_p x - D z_p\|_2^2 + \alpha\|z_p\|_1 \right), \tag{18} $$

where $\mathcal{D}$ is the feasible set of dictionaries (typically constrained so that each atom has unit norm) and α > 0 is a sparsity parameter. Now there are three sets of variables to optimize: x, {zp}, D, so alternating minimization methods are well suited. The update of the image x is a quadratic optimization subproblem, the zp update is soft thresholding, and the D update is simple when considering one atom at a time.

The “transform learning MRI” (TLMRI) approach uses a regularizer of this form:

$$ R(x) = \min_{\Omega}\ \min_{\{z_p\}} \sum_{p=1}^{P} \left( \frac{1}{2}\|\Omega P_p x - z_p\|_2^2 + \alpha\|z_p\|_1 \right) + r(\Omega), $$

where r(Ω) enforces or encourages properties of the sparsifying transform such as orthogonality. Again, alternating minimization methods are well suited; the Ω update involves (small) SVD operations.

H. Convolutional regularizers

An alternative to patch-based regularization is to use convolutional sparsity models [37]. A convolutional synthesis regularizer replaces (15) with

$$ R(x) = \min_{\{z_k\}} \frac{1}{2}\Big\| x - \sum_{k=1}^{K} h_k * z_k \Big\|_2^2 + \alpha \sum_{k=1}^{K} \|z_k\|_1, $$

where {hk} is a set of filters learned from training images [37], ∗ denotes convolution, and α > 0 is a sparsity parameter. Again, alternating minimization algorithms are a natural choice because the x update is quadratic and the zk update is a sparse coding problem for which proximal methods like POGM are well-suited.

A convolutional analysis regularizer replaces (16) with

$$ R(x) = \min_{\{z_k\}} \sum_{k=1}^{K} \left( \frac{1}{2}\|h_k * x - z_k\|_2^2 + \alpha\|z_k\|_1 \right). $$

Again, alternating minimization algorithms are effective, where the zk update is soft thresholding. One can either learn the filters {hk} from good quality (e.g., fully sampled) training data, or adapt the filters for each patient by jointly optimizing x, {hk} and {zk} using alternating minimization.

I. Other methods

The summation in (17) is a particular type of patch-based denoising of the current image estimate xk. There are many other denoising methods, some of which have variational formulations well-suited to inverse problems, but many of which do not, such as nonlocal means (NLM) [38] and block-matching 3D (BM3D) [39]. One way to adapt most such denoising methods for image reconstruction is to use a plug-and-play ADMM approach [40] that replaces a denoising step like (17) with a general denoising procedure.

J. Non-SENSE methods

The measurement model (2) and (3) has a single latent image x, viewed by each receive coil. An alternate formulation is to define a latent image for each coil xl ≜ Clx and write the measurement model as yl = Fxl + εl. For such formulations, the problem becomes to reconstruct the L images X = [x1 … xL] from the measurements, while considering relationships between those images. Because multiplication by the smooth sensitivity map Cl in the image domain corresponds to convolution with a small kernel in the frequency domain, any point in k-space can be approximated by a linear combination of its neighbors in all coil data [2]. This “GRAPPA modeling” leads to an approximate consistency condition vec(X) ≈ G vec(X) where G is a matrix involving small k-space kernels that are learned from calibration data [2]. This relationship leads to “SPIRiT” optimization problems like:

$$ \arg\min_X \frac{1}{2}\|F_L\, \mathrm{vec}(X) - y\|_2^2 + \frac{\beta_1}{2}\|(G - I)\, \mathrm{vec}(X)\|_2^2 + \beta_2 R(X), $$

where $F_L = I_L \otimes F$ and R(X) is a regularizer that encourages joint sparsity because all of the images {xl} have edges in the same locations. No sensitivity maps C are needed for this approach. When β2 = 0 the problem is quadratic and CG is well suited. Otherwise, ADMM is convenient for splitting this optimization problem into parts with easier updates. The ESPIRiT approach uses the redundancy in k-space data from multiple coils to estimate sensitivity maps from the eigenvectors of a certain block-Hankel matrix [12]; this approach helps bridge the SENSE and GRAPPA approaches while building on related signal processing tools like subspace estimation and multichannel blind deconvolution.

III. Summary

Although the title of this paper is “optimization methods for…” before selecting an optimization algorithm it is far more important (for under-sampled problems) to first select an appropriate cost function that captures useful prior information about the latent object x. The literature is replete with numerous candidate models, each of which often leads to different optimization methods. Nevertheless, common ingredients arise in most formulations, such as alternating minimization (block coordinate descent) at the outer level, preconditioned CG for inner iterations related to quadratic terms, and soft thresholding or other proximal operators for nonsmooth terms that promote sparsity.

This survey has focused on 1-norm regularizers for simplicity, but (nonconvex) ℓp “norms” with 0 ≤ p < 1 have also been investigated and appear to be beneficial, particularly for very undersampled measurements. This survey considers a single image x, but many MRI scan protocols involve several images with different contrast, and it may be useful to reconstruct them jointly, e.g., by considering common sparsity or subspace models.

There are many open problems in optimization that are relevant to MRI. The analysis form regularized problem (10) remains challenging, and further investigation of analysis vs synthesis approaches is needed. There has been considerable recent progress on finding optimal worst-case methods [16], but these optimality results are for very broad classes of cost functions, whereas the cost functions in MRI reconstruction have particular structure. Finding algorithms with optimal complexity (fastest possible convergence) for MRI-type cost functions would be valuable both for clinical practice and for facilitating research.

Finally, the current trend is to use convolutional neural network (CNN) methods to process under-sampled images, or for direct reconstruction, or as denoising operators. The stochastic gradient descent method currently is the universal optimization tool for training CNN models. Many “deep learning” methods for MRI are based on network architectures that are “unrolled” versions of iterative optimization methods like PGM. Thus, familiarity with “classical” optimization methods for MR image reconstruction is important even in the machine learning era.

Fig. 3.

POGM method for minimizing f(x) + g(x) where f is convex with L-Lipschitz smooth gradient and g is convex. See [25] for adaptive restart version.

IV. Acknowledgement

The author thanks many students and postdocs for all they have taught him, Doug Noll for numerous MRI insights, and the reviewers for their detailed comments that improved the paper.

Research supported in part by NIH Grants R01 EB023618, U01 EB026977, and R21 AG061839.

Footnotes

1. Jupyter notebooks with code in the open source language Julia that reproduce the figures in this paper are available in the Michigan Image Reconstruction Toolbox (MIRT) at http://github.com/JeffFessler/MIRT.jl

References

- [1].Pruessmann KP, Weiger M, Scheidegger MB, and Boesiger P, “SENSE: sensitivity encoding for fast MRI,” Mag. Res. Med, vol. 42, no. 5, pp. 952–62, November 1999. [PubMed] [Google Scholar]

- [2].Griswold MA, Jakob PM, Heidemann RM, Nittka M, Jellus V, Wang J, Kiefer B, and Haase A, “Generalized autocalibrating partially parallel acquisitions (GRAPPA),” Mag. Res. Med, vol. 47, no. 6, pp. 1202–10, June 2002. [DOI] [PubMed] [Google Scholar]

- [3].Shin PJ, Larson PEZ, Ohliger MA, Elad M, Pauly JM, Vigneron DB, and Lustig M, “Calibrationless parallel imaging reconstruction based on structured low-rank matrix completion,” Mag. Res. Med, vol. 72, no. 4, pp. 959–70, October 2014. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [4].Wright GA, “Magnetic resonance imaging,” IEEE Sig. Proc. Mag, vol. 14, no. 1, pp. 56–66, January 1997. [Google Scholar]

- [5].Ma D, Gulani V, Seiberlich N, Liu K, Sunshine JL, Duerk JL, and Griswold MA, “Magnetic resonance fingerprinting,” Nature, vol. 495, pp. 187–93, March 2013. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [6].Pruessmann KP, Weiger M, Boernert P, and Boesiger P, “Advances in sensitivity encoding with arbitrary k-space trajectories,” Mag. Res. Med, vol. 46, no. 4, pp. 638–51, October 2001. [DOI] [PubMed] [Google Scholar]

- [7].Sutton BP, Noll DC, and Fessler JA, “Fast, iterative image reconstruction for MRI in the presence of field inhomogeneities,” IEEE Trans. Med. Imag, vol. 22, no. 2, pp. 178–88, February 2003. [DOI] [PubMed] [Google Scholar]

- [8].Fessler JA, “Model-based image reconstruction for MRI,” IEEE Sig. Proc. Mag, vol. 27, no. 4, pp. 81–9, July 2010, invited submission to special issue on medical imaging. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [9].Baldassarre L, Li Y-H, Scarlett J, Gozcu B, Bogunovic I, and Cevher V, “Learning-based compressive subsampling,” IEEE J. Sel. Top. Sig. Proc, vol. 10, no. 4, pp. 809–22, June 2016. [Google Scholar]

- [10].Ying L and Sheng J, “Joint image reconstruction and sensitivity estimation in SENSE (JSENSE),” Mag. Res. Med, vol. 57, no. 6, pp. 1196–1202, June 2007. [DOI] [PubMed] [Google Scholar]

- [11].Block KT, Uecker M, and Frahm J, “Undersampled radial MRI with multiple coils. Iterative image reconstruction using a total variation constraint,” Mag. Res. Med, vol. 57, no. 6, pp. 1086–98, June 2007. [DOI] [PubMed] [Google Scholar]

- [12].Uecker M, Lai P, Murphy MJ, Virtue P, Elad M, Pauly JM, Vasanawala SS, and Lustig M, “ESPIRiT-an eigenvalue approach to autocalibrating parallel MRI: Where SENSE meets GRAPPA,” Mag. Res. Med, vol. 71, no. 3, pp. 990–1001, March 2014. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [13].Roemer PB, Edelstein WA, Hayes CE, Souza SP, and Mueller OM, “The NMR phased array,” Mag. Res. Med, vol. 16, no. 2, pp. 192–225, November 1990. [DOI] [PubMed] [Google Scholar]

- [14].Wajer F and Pruessmann KP, “Major speedup of reconstruction for sensitivity encoding with arbitrary trajectories,” in Proc. Intl. Soc. Mag. Res. Med, 2001, p. 767 [Online]. Available: http://cds.ismrm.org/ismrm-2001/PDF3/0767.pdf [Google Scholar]

- [15].Aelterman J, Luong HQ, Goossens B, Pizurica A, and Philips W, “Augmented Lagrangian based reconstruction of non-uniformly sub-Nyquist sampled MRI data,” Signal Processing, vol. 91, no. 12, pp. 2731–42, January 2011. [Google Scholar]

- [16].Drori Y, “The exact information-based complexity of smooth convex minimization,” J. Complexity, vol. 39, pp. 1–16, April 2017. [Google Scholar]

- [17].Nesterov Y, “A method of solving a convex programming problem with convergence rate O(1/k2),” Soviet Math. Dokl, vol. 27, no. 2, pp. 372–76, 1983. [Online]. Available: http://www.core.ucl.ac.be/ñesterov/Research/Papers/DAN83.pdf [Google Scholar]

- [18].Lustig M, Donoho D, and Pauly JM, “Sparse MRI: The application of compressed sensing for rapid MR imaging,” Mag. Res. Med, vol. 58, no. 6, pp. 1182–95, December 2007.

- [19].Lustig M, Donoho DL, Santos JM, and Pauly JM, “Compressed sensing MRI,” IEEE Sig. Proc. Mag, vol. 25, no. 2, pp. 72–82, March 2008.

- [20].Daubechies I, Defrise M, and De Mol C, “An iterative thresholding algorithm for linear inverse problems with a sparsity constraint,” Comm. Pure Appl. Math, vol. 57, no. 11, pp. 1413–57, November 2004.

- [21].Combettes PL and Pesquet J-C, “Proximal splitting methods in signal processing,” in Fixed-Point Algorithms for Inverse Problems in Science and Engineering, Springer Optimization and Its Applications, 2011, pp. 185–212.

- [22].Combettes P and Wajs V, “Signal recovery by proximal forward-backward splitting,” SIAM J. Multi. Mod. Sim, vol. 4, no. 4, pp. 1168–200, 2005.

- [23].Muckley MJ, Noll DC, and Fessler JA, “Fast parallel MR image reconstruction via B1-based, adaptive restart, iterative soft thresholding algorithms (BARISTA),” IEEE Trans. Med. Imag, vol. 34, no. 2, pp. 578–88, February 2015.

- [24].Beck A and Teboulle M, “Fast gradient-based algorithms for constrained total variation image denoising and deblurring problems,” IEEE Trans. Im. Proc, vol. 18, no. 11, pp. 2419–34, November 2009.

- [25].Kim D and Fessler JA, “Adaptive restart of the optimized gradient method for convex optimization,” J. Optim. Theory Appl, vol. 178, no. 1, pp. 240–63, July 2018.

- [26].El Gueddari L, Lazarus C, Carrie H, Vignaud A, and Ciuciu P, “Self-calibrating nonlinear reconstruction algorithms for variable density sampling and parallel reception MRI,” in Proc. IEEE SAM, 2018, pp. 415–9.

- [27].Wetzl J, Forman C, Wintersperger BJ, D’Errico L, Schmidt M, Mailhe B, Maier A, and Stalder AF, “High-resolution dynamic CE-MRA of the thorax enabled by iterative TWIST reconstruction,” Mag. Res. Med, vol. 77, no. 2, pp. 833–40, February 2017.

- [28].Goldstein T and Osher S, “The split Bregman method for L1-regularized problems,” SIAM J. Imaging Sci, vol. 2, no. 2, pp. 323–43, 2009.

- [29].Ramani S and Fessler JA, “Parallel MR image reconstruction using augmented Lagrangian methods,” IEEE Trans. Med. Imag, vol. 30, no. 3, pp. 694–706, March 2011.

- [30].Eckstein J and Bertsekas DP, “On the Douglas-Rachford splitting method and the proximal point algorithm for maximal monotone operators,” Mathematical Programming, vol. 55, no. 1–3, pp. 293–318, April 1992.

- [31].Boyd S, Parikh N, Chu E, Peleato B, and Eckstein J, “Distributed optimization and statistical learning via the alternating direction method of multipliers,” Found. & Trends in Machine Learning, vol. 3, no. 1, pp. 1–122, 2010.

- [32].Koolstra K, van Gemert J, Boernert P, Webb A, and Remis R, “Accelerating compressed sensing in parallel imaging reconstructions using an efficient circulant preconditioner for Cartesian trajectories,” Mag. Res. Med, vol. 81, no. 1, pp. 670–85, January 2019.

- [33].Chambolle A and Pock T, “A first-order primal-dual algorithm for convex problems with applications to imaging,” J. Math. Im. Vision, vol. 40, no. 1, pp. 120–145, 2011.

- [34].Wang S, Tan S, Gao Y, Liu Q, Ying L, Xiao T, Liu Y, Liu X, Zheng H, and Liang D, “Learning joint-sparse codes for calibration-free parallel MR imaging (LINDBERG),” IEEE Trans. Med. Imag, vol. 37, no. 1, pp. 251–61, January 2018.

- [35].Qu X, Guo D, Ning B, Hou Y, Lin Y, Cai S, and Chen Z, “Undersampled MRI reconstruction with patch-based directional wavelets,” Mag. Res. Im, vol. 30, no. 7, pp. 964–77, September 2012.

- [36].Ravishankar S and Bresler Y, “MR image reconstruction from highly undersampled k-space data by dictionary learning,” IEEE Trans. Med. Imag, vol. 30, no. 5, pp. 1028–41, May 2011.

- [37].Wohlberg B, “Efficient algorithms for convolutional sparse representations,” IEEE Trans. Im. Proc, vol. 25, no. 1, pp. 301–15, January 2016.

- [38].Buades A, Coll B, and Morel J, “Image denoising methods. A new nonlocal principle,” SIAM Review, vol. 52, no. 1, pp. 113–47, 2010.

- [39].Dabov K, Foi A, Katkovnik V, and Egiazarian K, “Image denoising by sparse 3-D transform-domain collaborative filtering,” IEEE Trans. Im. Proc, vol. 16, no. 8, pp. 2080–95, August 2007.

- [40].Chan SH, Wang X, and Elgendy OA, “Plug-and-play ADMM for image restoration: fixed-point convergence and applications,” IEEE Trans. Computational Imaging, vol. 3, no. 1, pp. 84–98, March 2017.