Abstract

At any moment in time, streams of information reach the brain through the different senses. Given this wealth of noisy information, it is essential that we select information of relevance (a function fulfilled by attention) and infer its causal structure to eventually take advantage of redundancies across the senses. Yet, the role of selective attention during causal inference in cross-modal perception is unknown. We tested experimentally whether the distribution of attention across vision and touch enhances cross-modal spatial integration (visual-tactile ventriloquism effect, Expt. 1) and recalibration (visual-tactile ventriloquism aftereffect, Expt. 2) compared to modality-specific attention, and then used causal-inference modeling to isolate the mechanisms behind the attentional modulation. In both experiments, we found stronger effects of vision on touch under distributed than under modality-specific attention. Model comparison confirmed that participants used Bayes-optimal causal inference to localize visual and tactile stimuli presented as part of a visual-tactile stimulus pair, whereas simultaneously collected unity judgments (indicating whether the visual-tactile pair was perceived as spatially aligned) relied on a sub-optimal heuristic. The best-fitting model revealed that attention modulated sensory and cognitive components of causal inference. First, distributed attention led to an increase of sensory noise compared to selective attention toward one modality. Second, attending to both modalities strengthened the stimulus-independent expectation that the two signals belong together, i.e., the prior probability of a common source for vision and touch. Yet, only the increase in the expectation that vision and touch share a common source was able to explain the observed enhancement of visual-tactile integration and recalibration effects with distributed attention.
In contrast, the change in sensory noise explained only a fraction of the observed enhancements, as its consequences vary with the overall level of noise and stimulus congruency. Increased sensory noise leads to enhanced integration effects for visual-tactile pairs with a large spatial discrepancy, but reduced integration effects for stimuli with a small or no cross-modal discrepancy. In sum, our study indicates a weak a priori association between visual and tactile spatial signals that can be strengthened by distributing attention across both modalities.

Keywords: Visual-tactile, Multisensory integration, Recalibration, Causal inference, Attention, Ventriloquism

1. Introduction

On their own, the senses provide only noisy and sometimes systematically distorted information. Yet, the different senses can support each other. Integration of information from different senses can improve the precision of sensory estimates, and consistent discrepancies between the sensory signals can trigger recalibration of one sense by the other. To measure cross-modal integration and recalibration of spatial information, experimenters often induce an artificial discrepancy between the senses, for example, using an optical prism to shift the apparent visual location of a hand-held object (von Helmholtz, 1909). Cross-modal spatial integration leads to shifts in the perceived location of stimuli that are part of a cross-modal, spatially discrepant stimulus pair. Spatial recalibration induces perceptual shifts of stimuli presented via a single modality after exposure to cross-modal stimulus pairs with a constant spatial discrepancy. These shifts are often called the ventriloquism effect and aftereffect, respectively, in analogy to the perceptual illusion that a puppeteer’s speech sounds appear to originate from the puppet’s mouth (Howard & Templeton, 1966; Pick, Warren, & Hay, 1969; Jack & Thurlow, 1973; Thurlow & Jack, 1973; Bertelson & Aschersleben, 1998; Lewald & Guski, 2003; reviewed in Bertelson & De Gelder, 2004; Chen & Vroomen, 2013). Ventriloquism-like shifts in cross-modal localization are not limited to pairings of visual and auditory stimuli: the perceived locations of auditory stimuli are shifted toward concurrently presented spatially discrepant tactile stimuli (Caclin, Soto-Faraco, Kingstone, & Spence, 2002; Bruns & Röder, 2010a, 2010b; Renzi et al., 2013) and the perceived locations of tactile stimuli are shifted toward concurrently presented visual stimuli (Pavani, Spence, & Driver, 2000; Spence & Driver, 2004; Samad & Shams, 2016).
Ventriloquism aftereffects, extensively documented for visual-auditory stimulus pairs (Radeau & Bertelson, 1974, 1977, 1978; Bermant & Welch, 1976; Bertelson & Radeau, 1981; Recanzone, 1998; Lewald, 2002; Frissen, Vroomen, de Gelder, & Bertelson, 2005; Bertelson, Frissen, Vroomen, & de Gelder, 2006; Kopco, Lin, Shinn-Cunningham, & Groh, 2009; Bruns, Liebnau, & Röder, 2011; Frissen, Vroomen, & de Gelder, 2012; Bruns & Röder, 2015; Zierul, Röder, Tempelmann, Bruns, & Noesselt, 2017; Bosen, Fleming, Allen, O’Neill, & Paige, 2017), are likewise not limited to the visual-auditory domain. Artificial shifts of visually perceived limb locations lead to subsequent changes of proprioceptive and motor space (von Helmholtz, 1909; Mather & Lackner, 1981; Kornheiser, 1976; Hay & Pick, 1966; Welch & Warren, 1980; van Beers, Wolpert, & Haggard, 2002; Redding & Wallace, 1996; Redding, Rossetti, & Wallace, 2005; Cressman & Henriques, 2009, 2010; Henriques & Cressman, 2012; Welch, 2013). Moreover, the spatial perception of tactile stimuli shifts after exposure to pairings with synchronous but spatially discrepant visual stimuli (Samad & Shams, 2018), whereas exposure to spatially discrepant audio-tactile stimulus pairs recalibrates auditory space (Bruns, Spence, & Röder, 2011).

During a ventriloquist’s performance, the perceived sound location will be fully shifted toward the puppet. The far higher spatial precision of vision as compared to audition results in complete capture of the auditory by the visual signal. Yet, when the spatial reliability of the visual stimulus is artificially degraded, the degree of influence each modality has on the perceived location depends on the relative reliability of the two sensory signals (Battaglia, Jacobs, & Aslin, 2003; Hairston et al., 2003; Alais & Burr, 2004; Charbonneau, Véronneau, Boudrias-Fournier, Lepore, & Collignon, 2013) as predicted by optimal cue integration (Landy, Maloney, Johnston, & Young, 1995; Yuille & Bülthoff, 1996; Trommershäuser, Körding, & Landy, 2011). In turn, the apparent dominance of vision over audition, proprioception, and touch in spatial recalibration matches the typically greater spatial reliability of vision (Welch & Warren, 1980) and task-dependent variations in visual and proprioceptive reliability are reflected in the direction of visual-proprioceptive spatial recalibration (van Beers et al., 2002).
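Optimal cue integration, as referred to above, is commonly formalized (assuming independent Gaussian noise on each cue) as an inverse-variance weighted average of the single-cue estimates. The following minimal Python sketch illustrates that weighting for a visual and a tactile location cue; the function name and the example noise levels are hypothetical and are not taken from this study.

```python
def integrate(x_v, sigma_v, x_t, sigma_t):
    """Reliability-weighted (inverse-variance) fusion of two location cues.

    x_v, x_t: single-cue location estimates (e.g., cm along the arm).
    sigma_v, sigma_t: standard deviations of the cue noise (assumed Gaussian).
    Returns the fused estimate and its variance.
    """
    r_v, r_t = 1.0 / sigma_v**2, 1.0 / sigma_t**2  # reliabilities
    w_v = r_v / (r_v + r_t)                          # weight given to vision
    estimate = w_v * x_v + (1.0 - w_v) * x_t
    variance = 1.0 / (r_v + r_t)  # smaller than either single-cue variance
    return estimate, variance

# Hypothetical example: precise vision (SD 0.5 cm) and noisy touch (SD 2 cm)
# 3 cm apart; the fused estimate lies close to the visual cue.
est, var = integrate(x_v=3.0, sigma_v=0.5, x_t=0.0, sigma_t=2.0)
print(round(est, 3), round(var, 3))  # → 2.824 0.235
```

Degrading the visual cue (increasing `sigma_v`) shifts the weight toward touch, which is the reliability dependence described in the paragraph above.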

Reliability-weighted cross-modal integration and recalibration leads to more precise sensory estimates, but only if the sensory signals provide corresponding information. If the signals do not correspond – usually because they stem from different sources – the sensory signals should be kept separate and should not prompt recalibration. Thus, the brain should perform causal inference to establish whether sensory signals from different modalities originate from the same source (Körding et al., 2007), and that inference should modulate integration and recalibration.

Causal inference takes two types of information into account: sensory data and prior knowledge (Körding et al., 2007; Sato, Toyoizumi, & Aihara, 2007; Wei & Körding, 2009; Wozny, Beierholm, & Shams, 2010; Rohe & Noppeney, 2015a; Gau & Noppeney, 2016). First, cross-modal signals with a large spatial (Gepshtein, Burge, Ernst, & Banks, 2005) or temporal discrepancy (Holmes & Spence, 2005; Parise & Ernst, 2016) are not integrated, because they are unlikely to share a common source (reviews in Alais, Newell, & Mamassian, 2010; Shams & Beierholm, 2010; Chen & Spence, 2017). Second, a priori knowledge about the origin of the sensory signals can impact causal inference. For example, knowledge that visual and haptic signals have a common source can promote integration even in the absence of spatial alignment between the signals (Helbig & Ernst, 2007), and knowledge about random discrepancies between two signals can reduce the strength of multisensory integration (Debats & Heuer, 2018). However, in most situations, explicit knowledge about the correspondence of the two current signals is unavailable and the brain must rely on expectations based on previous experience.
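To make the interplay of sensory data and prior knowledge concrete, here is a minimal sketch of Bayesian causal inference in the form given by Körding et al. (2007), rewritten for vision and touch. It assumes Gaussian sensory noise, a Gaussian spatial prior (mean `mu_p`, SD `s_p`), and a prior probability of a common cause `p_common`; the tactile estimate is read out by model averaging, which is only one possible decision strategy. All names and parameter values are illustrative, and this is not the fitted model of the present study.

```python
import math

def likelihood_common(x_v, x_t, s_v, s_t, s_p, mu_p):
    """p(x_v, x_t | C=1): both measurements stem from one source location."""
    var_v, var_t, var_p = s_v**2, s_t**2, s_p**2
    denom = var_v * var_t + var_v * var_p + var_t * var_p
    quad = ((x_v - x_t) ** 2 * var_p + (x_v - mu_p) ** 2 * var_t
            + (x_t - mu_p) ** 2 * var_v) / denom
    return math.exp(-0.5 * quad) / (2 * math.pi * math.sqrt(denom))

def likelihood_separate(x_v, x_t, s_v, s_t, s_p, mu_p):
    """p(x_v, x_t | C=2): each measurement has its own independent source."""
    a, b = s_v**2 + s_p**2, s_t**2 + s_p**2
    quad = (x_v - mu_p) ** 2 / a + (x_t - mu_p) ** 2 / b
    return math.exp(-0.5 * quad) / (2 * math.pi * math.sqrt(a * b))

def posterior_common(x_v, x_t, s_v, s_t, s_p, mu_p, p_common):
    """Posterior probability that vision and touch share a common source."""
    l1 = likelihood_common(x_v, x_t, s_v, s_t, s_p, mu_p) * p_common
    l2 = likelihood_separate(x_v, x_t, s_v, s_t, s_p, mu_p) * (1 - p_common)
    return l1 / (l1 + l2)  # may underflow for extreme discrepancies

def tactile_estimate(x_v, x_t, s_v, s_t, s_p, mu_p, p_common):
    """Model-averaged tactile location estimate."""
    r_v, r_t, r_p = 1 / s_v**2, 1 / s_t**2, 1 / s_p**2
    fused = (x_v * r_v + x_t * r_t + mu_p * r_p) / (r_v + r_t + r_p)
    separate = (x_t * r_t + mu_p * r_p) / (r_t + r_p)
    post = posterior_common(x_v, x_t, s_v, s_t, s_p, mu_p, p_common)
    return post * fused + (1 - post) * separate

# Hypothetical parameters (cm): the common-cause posterior drops as the
# visual-tactile discrepancy grows, so integration weakens.
params = dict(s_v=0.5, s_t=2.0, s_p=10.0, mu_p=0.0)
for d in (0.0, 1.5, 6.0):
    p = posterior_common(x_v=d, x_t=0.0, p_common=0.5, **params)
    print(d, round(p, 3), round(tactile_estimate(d, 0.0, p_common=0.5, **params), 3))
```

In this formulation, a larger `p_common` (the prior expectation of a common source) raises the posterior for every discrepancy, whereas larger sensory noise changes the posterior differently for small and large discrepancies.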

Another means to improve perception in the face of competing sensory signals is selective attention, the “differential processing of simultaneous sources of external information” (Johnston & Dark, 1986). Within one modality, selective attention to a spatial location, object, or perceptual feature improves perception in the focus of attention (Shinn-Cunningham, 2008; Carrasco, 2011), likely because it amplifies the neural responses to that signal and suppresses irrelevant responses (Desimone & Duncan, 1995; Kastner & Ungerleider, 2001; Gazzaley, Cooney, McEvoy, Knight, & D’Esposito, 2005). Yet, selective attention to a modality does not necessarily improve perception, because modality-specific attention counteracts cross-modal integration, which itself can be beneficial for perception. Evidence indicating that the distribution of attention across modalities enhances cross-modal integration comes from behavioral and neuroscientific studies (reviews in Talsma, Senkowski, Soto-Faraco, & Woldorff, 2010; Tang, Wu, & Shen, 2016). Visual-tactile integration, measured by the tap-flash illusion, is reduced when the task emphasizes only one of the modalities (Werkhoven, van Erp, & Philippi, 2009) and cross-modal congruency effects in visual-tactile pattern matching are weaker when participants attend to only one modality (Göschl, Engel, & Friese, 2014). Integration of visual-auditory stimulus pairs, measured either by means of multisensory enhancement effects in color discrimination (Mozolic, Hugenschmidt, Peiffer, & Laurienti, 2008) or by a change in event-related scalp potentials (Talsma, Doty, & Woldorff, 2007), even depended on distributed attention: cross-modal enhancements were only present when both modalities were attended. Thus, current evidence suggests that cross-modal integration is attenuated or even eliminated when participants attend to only one of the stimulus modalities. However, the mechanism behind these attentional modulations remains speculative. 
The focus of attention could affect either of the two types of information that govern causal inference – the sensory signals themselves and prior expectations about the shared source of the signals – or even change the way in which cross-modal information is processed.

In this paper, we present the results of two experiments involving visual and tactile cues for the estimation of spatial location. In both experiments, we manipulate the focus of attention on each modality and measure the impact of distributed versus modality-specific attention on integration (Expt. 1) and recalibration (Expt. 2). We find that the distribution of attention across vision and touch is required to maximize both integration and recalibration. Subsequent modeling reveals that attention affects both the participants’ expectation that the two signals share a common source (and consequently provide corresponding information) and the reliability of the individual stimuli. However, model simulations also reveal that only the attention-induced change in the common-cause prior can explain the observed enhancements of visual-tactile integration and recalibration effects.

2. Expt. 1: Attention effects on the integration of vision and touch

In Expt. 1, we investigated whether visual-tactile spatial integration is sensitive to the attentional context. Participants were presented with visual-tactile stimulus pairs of variable spatial discrepancy. Visual-tactile ventriloquism, the indicator for the strength of integration effects, can be measured in two ways, either by means of localization shifts or by means of binary judgments about the unity or spatial alignment of the two stimuli. In general, unity judgments show a similar pattern to localization shifts (Wallace et al., 2004; Hairston et al., 2003; Rohe & Noppeney, 2015b). However, shifts in the perceived locations of two spatially discrepant stimuli do not always result in the observer misperceiving the two stimuli as spatially aligned. There can be substantial but incomplete shifts of perceived auditory location toward a concurrently presented visual stimulus even though the stimuli are perceived as spatially misaligned (Bertelson & Radeau, 1981; Körding et al., 2007; Wozny et al., 2010). Therefore, we measured visual-tactile ventriloquism based on both localization estimates and unity judgments.

We hypothesized that the integration of vision and touch would be affected by the focus of attention with larger effects when attention was distributed across both modalities. In every trial, participants indicated the location of one modality, either the felt or the viewed stimulus. The response-relevant modality was either cued before the stimulus pair was presented, allowing participants to focus attention on one modality (modality-specific attention), or after the stimulus pair had been presented, encouraging participants to attend to both modalities (distributed attention).

2.1. Methods

2.1.1. Participants

A group of 12 individuals participated in Expt. 1 (19–30 years old, mean age: 21 years; 7 female; 11 right-handed). All participants reported having no sensory or motor impairments and normal or corrected-to-normal vision. Participants were rewarded with course credit or $10 per hour for participation. The study was conducted in accordance with the guidelines laid down in the declaration of Helsinki and approved by the New York University institutional review board. All participants gave written informed consent prior to the start of the experiment.

2.1.2. Apparatus and stimuli

Participants sat in front of a table with the head supported by a chin rest. The non-dominant arm was positioned parallel to the front of the body, palm-down, and lay on an arm-length pillow that both provided comfort and leveled the arm (Fig. 1A, B). A custom-made sleeve was attached to the non-dominant arm using Velcro straps. The sleeve held 7 LEDs and 7 tactile stimulators (eccentric rotating mass motors, ERMs; Precision Microdrives, UK) spaced 15 mm apart along the top of the forearm (Fig. 1C). LEDs and tactile stimulators were aligned so that one LED and one tactile stimulator were located at each of the 7 positions; the LEDs faced upward and the tactile stimulators were in contact with the arm. The sleeve was made of foam and rubber, ensuring that the vibration of an activated tactile stimulator was not transmitted to the LEDs or neighboring tactile stimulators. A translucent cover was placed above the arm to obstruct vision of the arm but allow activated LEDs to be seen. An LCD projector (Hitachi CPX3010, Tokyo, Japan), mounted so that it projected onto the translucent cover (Fig. 1A), was used to display task instructions, a fixation cross (aligned with the center LED at the beginning of each block), a cursor (vertical line, Fig. 1B), and a color cue. For localization responses, a joystick (Fig. 1A, B) enabled participants to move the cursor along the horizontal axis; to confirm a chosen location, the joystick was pressed down. For categorical responses, participants used a custom-made button box (not shown). All custom-made peripherals (stimulators, joystick, and button box) were controlled by microcontrollers (Arduino R3, Somerville, MA) that interfaced with the experimental computer via serial connections. The experimental program was written in Matlab (The Mathworks, Inc., Natick, MA) and used the Psychophysics Toolbox (Brainard, 1997; Kleiner, Brainard, & Pelli, 2007).

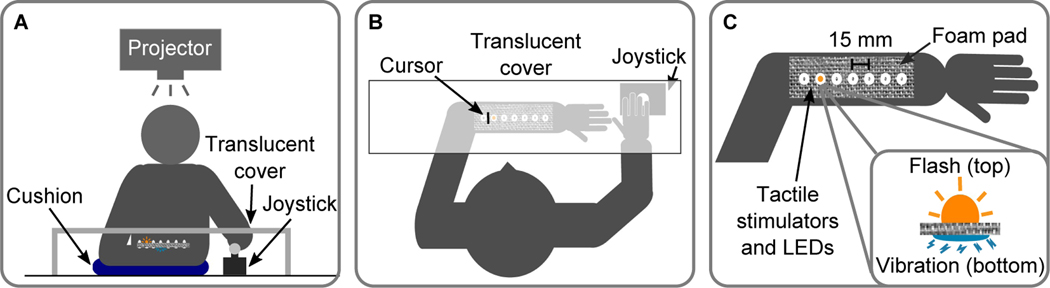

Fig. 1.

Experimental setup. (A) Front view. The non-dominant arm was placed under a translucent cover and supported with a cushion. The dominant hand held a joystick. A projector mounted overhead was used to display task instructions, a fixation cross, a cursor, and colored response cues on the translucent cover. (B) Top view. During the response phase, a cursor controlled by the joystick was used to indicate the perceived stimulus location. (C) Close-up. Seven LEDs and seven tactile stimulators were placed along the top of the forearm (15 mm spacing) using a custom-made sleeve. The LEDs faced upward and the tactile stimulators were in contact with the arm. When activated, the LEDs would shine through the translucent cover and the tactile stimulators would vibrate.

Prior to the main experiment, each participant’s tactile detection threshold was determined as a function of stimulus duration using an adaptive procedure (accelerated stochastic approximation; Robbins & Monro, 1951; Kesten, 1958). In each trial of the thresholding procedure, a tactile stimulus was presented in one of two successive time intervals, delimited by auditory beeps. The participant indicated via button press whether the stimulus was presented in the first or the second interval. The algorithm was set to converge to 75% correct responses, and the procedure was considered to have converged once the participant’s accuracy, averaged over the last 15 trials, lay within the range of 70–80%. The procedure was repeated three times; each run yielded a duration estimate (the average stimulus duration over its last 10 trials), and the three estimates were averaged to obtain the final threshold. In the main experiment, tactile stimulus duration was jittered (sampled from a Gaussian with a standard deviation of 2 ms) around 2.5 times the participant’s tactile detection threshold (mean duration: 57 ms, SD across participants: 16 ms). In visual-tactile trials, the visual stimulus had the same duration as the tactile stimulus; in visual-only trials, visual stimulus duration was jittered in the same way as tactile stimulus duration.
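The thresholding procedure described above can be sketched in Python as follows. This is an illustration under stated assumptions, not the study’s Matlab code: the update follows the common form of Kesten’s accelerated stochastic approximation (step size c/n for the first two trials, then c/(2 + number of response reversals)), and the starting duration `x0`, step constant `c`, trial cap `max_trials`, and the Weibull observer used for simulation are all hypothetical choices.

```python
import math
import random

TARGET = 0.75  # convergence target: 75% correct (from the text)

def asa_update(x, correct, trial, n_reversals, c):
    """One update of Kesten's accelerated stochastic approximation.

    x: current stimulus duration (ms); trial: 1-based trial index;
    n_reversals: number of correct/incorrect alternations so far;
    c: initial step size (hypothetical value).
    """
    # Step size decays as c/trial on the first two trials, afterwards only
    # when the response sequence reverses (Kesten's acceleration).
    step = c / trial if trial <= 2 else c / (2 + n_reversals)
    z = 1.0 if correct else 0.0
    # A correct response shortens the stimulus by 0.25*step, an error
    # lengthens it by 0.75*step; the expected change is zero at 75% correct.
    return x - step * (z - TARGET)

def weibull_2ifc(x, alpha=25.0, beta=3.0):
    """Hypothetical simulated observer: 2IFC proportion correct at duration x."""
    return 0.5 + 0.5 * (1.0 - math.exp(-((x / alpha) ** beta)))

def run_threshold(p_correct, x0=60.0, c=40.0, window=15, max_trials=300, rng=None):
    """One thresholding run; returns (mean duration of last 10 trials, durations)."""
    rng = rng or random.Random()
    x, durations, responses, n_reversals = x0, [], [], 0
    for trial in range(1, max_trials + 1):
        correct = rng.random() < p_correct(x)
        durations.append(x)
        responses.append(correct)
        if len(responses) > 1 and responses[-1] != responses[-2]:
            n_reversals += 1
        x = max(1.0, asa_update(x, correct, trial, n_reversals, c))  # keep durations positive
        recent = responses[-window:]
        # Convergence rule from the text: accuracy over the last 15 trials in 70-80%.
        if len(recent) == window and 0.70 <= sum(recent) / window <= 0.80:
            break
    return sum(durations[-10:]) / 10, durations

def sample_duration(threshold, rng):
    """Main-experiment duration: Gaussian (SD 2 ms) around 2.5 x threshold."""
    return rng.gauss(2.5 * threshold, 2.0)

rng = random.Random(1)
estimates = [run_threshold(weibull_2ifc, rng=rng)[0] for _ in range(3)]  # three runs
threshold = sum(estimates) / len(estimates)
print(round(threshold, 1), round(sample_duration(threshold, rng), 1))
```

The asymmetric step (0.25 versus 0.75 of the current step size) is what makes 75% correct the equilibrium point; the acceleration only shrinks the step when responses alternate, which speeds convergence compared with plain Robbins–Monro updating.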

2.1.3. Task and procedure

Participants localized visual and tactile stimuli presented either alone or as part of a visual-tactile stimulus pair with random inter-modal spatial discrepancy. Visual and tactile stimuli presented alone and visual-tactile stimulus pairs were randomly interleaved. A color cue (color change of the projected background image) signaled the to-be-localized modality (blue: tactile, red: visual). Participants indicated the perceived location of the cued stimulus by moving a visually presented cursor to that location. After confirming the localization response, participants reported whether they had perceived the visual and the tactile stimulus as spatially aligned or misaligned (or whether they only perceived one stimulus modality) by choosing the respective option from a drop-down menu using the joystick.

The time point at which the relevant modality was cued varied across groups (Fig. 2): one group of participants received the cue before the stimulus was presented (modality-specific-attention group; n = 5), the other group afterward (distributed-attention group; n = 7). Otherwise, trial timelines were as similar across groups as possible. A trial began with the display of a fixation cross for 1 s. An auditory start cue was presented 0.5 s after the removal of the fixation cross. In the modality-specific-attention group, this auditory cue was paired with the visual color cue indicating the response-relevant modality. The stimulus was presented 1 s later. In the distributed-attention group, the color cue to the response-relevant modality was presented 1 s after stimulus onset. The cursor appeared 200 ms after the stimulus or, if one was presented, after the post-cue. The drop-down menu for the spatial-alignment response appeared 300 ms after the localization response had been confirmed. Response times were not limited, and the next trial started immediately after the response was given.
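The trial timeline above can be summarized as a schedule of nominal event onsets. The following Python sketch is only an illustration: the function name and group labels are hypothetical, and because the text does not specify whether the 200 ms cursor delay is measured from stimulus onset or offset (or from post-cue onset or offset), the sketch assumes stimulus offset and post-cue onset, respectively. Events after the cursor appears depend on unlimited response times and are therefore not scheduled.

```python
def trial_schedule(group, stim_duration_ms):
    """Nominal event onsets (ms from fixation onset) for one Expt. 1 trial."""
    onsets = {"fixation_on": 0, "fixation_off": 1000}
    onsets["start_cue"] = onsets["fixation_off"] + 500    # auditory start cue
    onsets["stimulus_on"] = onsets["start_cue"] + 1000
    stimulus_off = onsets["stimulus_on"] + stim_duration_ms
    if group == "modality-specific":
        onsets["color_cue"] = onsets["start_cue"]          # pre-cue, paired with start cue
        # ASSUMPTION: "200 ms after the stimulus" read as after stimulus offset.
        onsets["cursor_on"] = stimulus_off + 200
    elif group == "distributed":
        onsets["color_cue"] = onsets["stimulus_on"] + 1000  # post-cue
        # ASSUMPTION: cursor delay measured from post-cue onset.
        onsets["cursor_on"] = onsets["color_cue"] + 200
    else:
        raise ValueError(f"unknown group: {group!r}")
    return onsets

# 57 ms is the mean stimulus duration reported in the text.
for group in ("modality-specific", "distributed"):
    print(group, trial_schedule(group, stim_duration_ms=57))
```

Laid out this way, the only scheduled difference between groups is where the color cue falls relative to the stimulus (and hence when the cursor appears).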

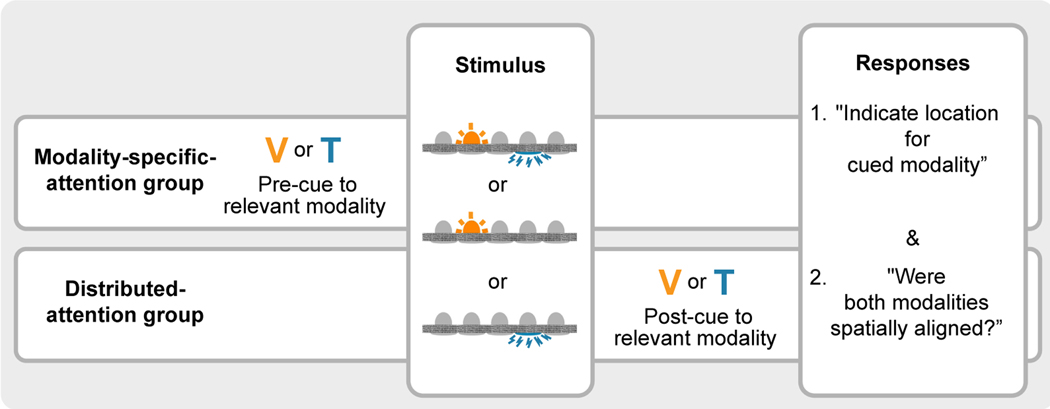

Fig. 2.

Experimental design of Expt. 1. Visual-tactile stimulus pairs with a random inter-modal spatial discrepancy, as well as visual and tactile stimuli presented alone, were interleaved across trials. Participants localized either a visual or a tactile stimulus and indicated afterward whether they perceived the stimulus of the other modality as spatially aligned or misaligned. Alternatively, they could indicate that they had only perceived one stimulus. The cue indicating the response-relevant modality was either given before (modality-specific-attention group) or after (distributed-attention group) the stimulus.

Visual and tactile stimuli were presented with equal probability at each of 5 possible locations (the inner 5 of the 7 possible ones). For visual-tactile stimulus pairs, the location of each modality was chosen independently. Thus, all 25 spatial combinations were presented with equal probability; as a consequence, the 9 resulting visual-tactile spatial discrepancies were presented with unequal probabilities (p(0 cm) = 0.2, p(±1.5 cm) = 0.32, p(±3 cm) = 0.24, p(±4.5 cm) = 0.16, and p(±6 cm) = 0.08). Stimulus locations were pseudo-randomized across trials such that one full set of locations was completed before the next set began. Within each set, visual and tactile stimuli presented alone and visual-tactile stimulus pairs were presented twice at all possible locations, i.e., a set comprised 10 visual and 10 tactile stimuli presented alone and 50 visual-tactile stimulus pairs. For visual-tactile stimulus pairs, the visual stimulus was response-relevant in one repetition and the tactile stimulus in the other. Every participant completed at least 13 blocks with 50 trials in each block, for a total of at least 650 trials per participant; most participants completed 28 blocks or more. The experiment was divided into multiple sessions of self-determined length.
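The discrepancy probabilities and the composition of one stimulus set follow directly from the design described above. The Python sketch below reproduces them; indexing the five locations as 0–4 and representing trials as `(visual_loc, tactile_loc, cued)` tuples are hypothetical conventions chosen for illustration, not the study’s data format.

```python
import random
from collections import Counter
from fractions import Fraction

SPACING_CM = 1.5      # distance between neighboring positions (from the text)
LOCATIONS = range(5)  # inner 5 of the 7 positions, indexed 0..4 (hypothetical indexing)

# Visual and tactile locations are drawn independently: 25 equiprobable pairs.
PAIRS = [(v, t) for v in LOCATIONS for t in LOCATIONS]

# Absolute discrepancy in cm; the text pools the two signs (e.g., ±1.5 cm).
counts = Counter(abs(v - t) * SPACING_CM for v, t in PAIRS)
DISCREPANCY_P = {d: Fraction(n, len(PAIRS)) for d, n in sorted(counts.items())}

def make_set(rng):
    """One pseudo-randomized set of 70 trials as (visual_loc, tactile_loc, cued)."""
    trials = [(v, None, "V") for v in LOCATIONS for _ in range(2)]   # 10 visual-only
    trials += [(None, t, "T") for t in LOCATIONS for _ in range(2)]  # 10 tactile-only
    # Each of the 25 pairs twice: once with vision cued, once with touch cued.
    trials += [(v, t, cue) for v, t in PAIRS for cue in ("V", "T")]
    rng.shuffle(trials)
    return trials

for d, p in DISCREPANCY_P.items():
    print(f"{d:.1f} cm: {float(p):.2f}")
# 0.0 cm: 0.20, 1.5 cm: 0.32, 3.0 cm: 0.24, 4.5 cm: 0.16, 6.0 cm: 0.08
```

The unequal discrepancy probabilities arise purely from counting: there are five pairs with zero discrepancy but only two with the maximal ±6 cm discrepancy.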

2.1.4. Data analysis

Trials in which the alignment response indicated that the participant had misperceived the number of stimuli were excluded from all analyses (6.6% of trials; raw data are available at https://osf.io/epcqv/?view_only=ab4d5bc816b54548918b3548bb7eed7d; Badde et al., 2019). First, we compared the size of the ventriloquism effect, measured via localization responses, between groups. We subtracted participants’ average localization response in trials in which the stimulus had been presented alone from their average localization response for the same stimulus in trials in which a visual-tactile stimulus pair had been presented. This difference was calculated for each location of the task-relevant stimulus and each inter-modal discrepancy, and then averaged across all locations of the task-relevant stimulus. We regressed the resulting difference values against the inter-modal discrepancy (with negative values representing stimulus pairs in which the visual stimulus was closer to the elbow than the tactile stimulus). The estimated slope indicates the size of the ventriloquism effect relative to the inter-modal discrepancy, with a slope of 0 indicating no ventriloquism effect and a slope of ±1 indicating a full shift toward the non-cued modality (+1 for tactile and −1 for visual localization, given this sign convention). For this analysis, we split localization responses by task-relevant modality and by whether the unity judgment indicated that the two modalities were perceived as spatially aligned.
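The slope measure described above can be sketched in Python with NumPy. The input layout (dictionaries keyed by target location and discrepancy) is a hypothetical convention for illustration; in the analysis described in the text, the computation would be run separately for each participant, task-relevant modality, and unity-judgment category.

```python
import numpy as np

def ventriloquism_slope(bimodal, unimodal):
    """Slope and intercept of localization shift vs. visual-tactile discrepancy.

    bimodal: dict mapping (target_location, discrepancy_cm) -> list of
        localization responses (cm) for the task-relevant stimulus in V-T trials.
    unimodal: dict mapping target_location -> list of responses (cm) in trials
        in which that stimulus was presented alone.
    """
    shifts = {}
    for (loc, disc), responses in bimodal.items():
        # Shift = mean bimodal response minus mean unimodal response at that location.
        shifts.setdefault(disc, []).append(np.mean(responses) - np.mean(unimodal[loc]))
    discs = np.array(sorted(shifts))
    # Average the shifts across target locations for each discrepancy.
    mean_shift = np.array([np.mean(shifts[d]) for d in discs])
    slope, intercept = np.polyfit(discs, mean_shift, 1)  # linear fit, highest power first
    return slope, intercept

# Synthetic tactile data with full visual capture (discrepancy = visual - tactile):
uni, bim = {}, {}
for t in range(5):
    loc = t * 1.5
    uni[loc] = [loc]
    for v in range(5):
        bim[(loc, (v - t) * 1.5)] = [v * 1.5]  # response lands on the visual stimulus
print(ventriloquism_slope(bim, uni))  # slope close to 1, intercept close to 0
```

With this sign convention, complete capture of touch by vision gives a slope of +1, whereas complete capture of vision by touch would give −1.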

To compare these relative localization shifts across groups, an analysis of variance was computed on the estimated regression slopes, with one within-participants factor (perceived spatial alignment) and one between-participants factor (group: modality-specific vs. distributed attention). Significant interaction effects were followed up by t-tests comparing the size of the ventriloquism effect between groups. Once all interactions were resolved, one-sample t-tests against zero were conducted to test the significance of the (relative) ventriloquism effect. Because the dependent variable (the regression slopes) might not be normally distributed, we used permutation tests to determine the significance of the F- and t-statistics. For the analysis of variance, we permuted labels across conditions and participants (Anderson, 2001); for group effects, we permuted labels across participants; and for condition effects, we permuted labels within participants. The pattern of statistical results was nearly identical to that obtained with the parametric F- and t-distributions.
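The two simplest permutation schemes mentioned above can be sketched in Python with NumPy: shuffling group labels across participants for an unpaired t-test, and, for a one-sample test against zero, randomly flipping the sign of each participant’s value (the within-participant counterpart). This is a Monte Carlo sketch with hypothetical function names and example values; it does not reproduce the permutation ANOVA of Anderson (2001), and with very small samples an exhaustive enumeration of all relabelings would be an alternative.

```python
import numpy as np

def t_unpaired(a, b):
    """Student's t for two independent groups (pooled variance)."""
    a, b = np.asarray(a, float), np.asarray(b, float)
    na, nb = len(a), len(b)
    sp2 = ((na - 1) * a.var(ddof=1) + (nb - 1) * b.var(ddof=1)) / (na + nb - 2)
    return (a.mean() - b.mean()) / np.sqrt(sp2 * (1 / na + 1 / nb))

def perm_test_groups(a, b, n_perm=10000, rng=None):
    """Two-sided p-value from shuffling group labels across participants."""
    rng = rng or np.random.default_rng()
    pooled = np.concatenate([a, b]).astype(float)
    observed = abs(t_unpaired(a, b))
    hits = 0
    for _ in range(n_perm):
        perm = rng.permutation(pooled)
        if abs(t_unpaired(perm[:len(a)], perm[len(a):])) >= observed:
            hits += 1
    return (hits + 1) / (n_perm + 1)  # count the observed labeling as one permutation

def perm_test_zero(x, n_perm=10000, rng=None):
    """Two-sided one-sample test against zero via random sign flips."""
    rng = rng or np.random.default_rng()
    x = np.asarray(x, float)
    t_obs = abs(x.mean() / (x.std(ddof=1) / np.sqrt(len(x))))
    hits = 0
    for _ in range(n_perm):
        y = x * rng.choice([-1.0, 1.0], size=len(x))
        if abs(y.mean() / (y.std(ddof=1) / np.sqrt(len(y)))) >= t_obs:
            hits += 1
    return (hits + 1) / (n_perm + 1)

# Hypothetical regression slopes for 5 vs. 7 participants.
rng = np.random.default_rng(0)
specific = [0.10, 0.05, 0.18, 0.12, 0.11]
distributed = [0.35, 0.41, 0.28, 0.47, 0.39, 0.33, 0.44]
print(perm_test_groups(specific, distributed, n_perm=2000, rng=rng))
print(perm_test_zero(distributed, n_perm=2000, rng=rng))
```

Because the null distribution is built from the data themselves, these p-values do not rely on the slopes being normally distributed, which is the motivation given in the text.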

We further compared the ventriloquism effect between groups as indicated by the proportion of spatial-alignment responses. We calculated the overall proportion of visual-tactile trials in which the visual and the tactile stimulus were perceived as spatially aligned and compared the resulting proportions across groups using unpaired t-tests. For this analysis, the data were split by task-relevant modality. We again used permutation tests to derive robust p-values.

We adjusted for multiple comparisons according to Holm (1979) and assumed significance at a Type I error rate of 5%.
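The Holm (1979) step-down procedure can be expressed as adjusted p-values that are then compared against the 5% level. The following Python sketch is a plain implementation written for illustration (the function name is hypothetical); statsmodels offers the same correction through `statsmodels.stats.multitest.multipletests` with `method="holm"`.

```python
def holm_adjust(pvals):
    """Holm (1979) step-down adjusted p-values, returned in input order."""
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i])  # indices, smallest p first
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, i in enumerate(order):
        # The k-th smallest p-value (k = rank + 1) is multiplied by m - k + 1 ...
        adj = min(1.0, (m - rank) * pvals[i])
        # ... and adjusted values are forced to be non-decreasing.
        running_max = max(running_max, adj)
        adjusted[i] = running_max
    return adjusted

print(holm_adjust([0.01, 0.04, 0.03]))  # ≈ [0.03, 0.06, 0.06]
```

An effect counts as significant when its adjusted p-value is below 0.05; this is equivalent to comparing the k-th smallest raw p-value with 0.05/(m − k + 1) and stopping at the first non-significant test.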

2.2. Results

We first analyzed localization performance. We compared the size of the ventriloquism effect – in the form of relative localization shifts – between the two attention groups. We did so separately for each to-be-localized modality and separated trials in which the visual and the tactile stimuli were perceived as spatially aligned from trials in which they were perceived as misaligned (Fig. 3). The slope of a regression line through the data provides an estimate of the degree of the relative ventriloquism effect, i.e., the relative amount of shift of perceived location toward the location of the other modality’s stimulus.

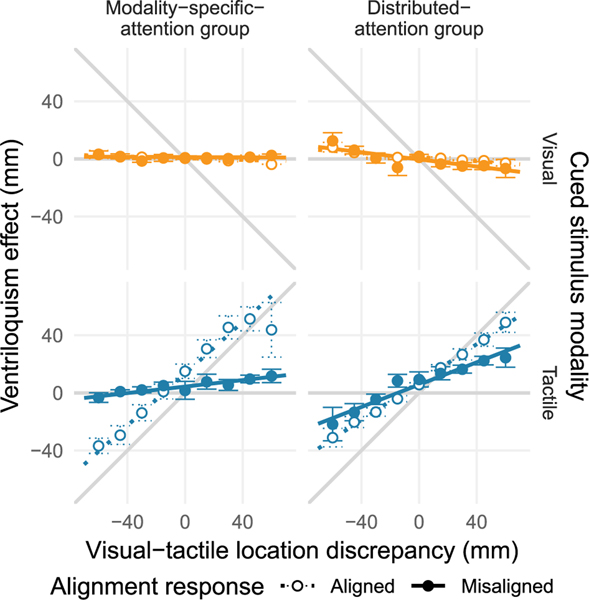

Fig. 3.

Expt. 1: Ventriloquism effect — localization shifts. The ventriloquism effect was measured by subtracting localization responses in trials in which only one stimulus was presented from localization responses for stimuli of the same modality and location but in trials in which a visual-tactile stimulus pair was presented. The averaged differences in perceived location are shown as a function of the spatial discrepancy within the visual-tactile stimulus pair (five possible locations for each modality resulting in nine visual-tactile discrepancies; negative numbers indicate pairs with the visual stimulus located closer to the elbow than the tactile stimulus). The data are separated into trials in which the visual and tactile stimuli were perceived as spatially aligned (open circles and dotted lines) or misaligned (solid circles and lines; see Fig. S1 for undivided data). Visual (top row, orange) and tactile (bottom row, blue) stimuli were response-relevant with equal probability. The modality-specific-attention group (left column) was informed about the response-relevant modality before the stimulation; the distributed-attention group (right column) was informed about the relevant modality after the stimulation. Regression lines are based on group averages of individual participants’ intercept and slope parameters. The grey diagonal line indicates the maximal possible localization shift, the horizontal line indicates the absence of a localization shift, and error bars are standard errors of the mean. (For interpretation of the references to color in this figure legend, the reader is referred to the web version of this article.)

An analysis of variance on the visual localization data (the estimated slopes) revealed solely a main effect of group, F(1,10) = 6.18, p = 0.041, η² = 0.259. Follow-up one-sample t-tests against zero revealed a marginally significant relative shift (M = −0.013, SE = 0.005) in the modality-specific-attention group, t(4) = 3.65, p = 0.059, d = 1.35, and a significant relative shift (M = −0.097, SE = 0.027) in the distributed-attention group, t(6) = 3.65, p < 0.001, d = 1.49, both indicative of a ventriloquism effect in visual localization independent of the perceived spatial alignment of the stimulus pair.

An analysis of variance on tactile localization data revealed a main effect of perceived visual-tactile alignment, F(1,10) = 28.35, p < 0.001, η² = 0.600, which differed across groups, F(1,10) = 7.75, p = 0.018, η² = 0.291. To follow up on the interaction, we conducted unpaired t-tests between groups separately for trials with and without perceived visual-tactile alignment. For trials in which the visual and the tactile stimulus were perceived as spatially aligned, no significant difference between groups emerged, t(10) = 1.53, p = 0.160, d = 0.683. A one-sample t-test against zero conducted across groups confirmed a ventriloquism effect on tactile localization in these trials, M = 0.759, SE = 0.082, t(11) = 9.25, p < 0.001, d = 2.79. For trials in which the stimuli had been perceived as misaligned, a significant difference between groups was present, t(10) = 3.14, p = 0.026, d = 1.403. Follow-up t-tests indicated a significant ventriloquism effect both in the modality-specific-attention group, M = 0.113, SE = 0.045, t(4) = 2.51, p < 0.001, d = 1.25, and in the distributed-attention group, M = 0.388, SE = 0.066, t(6) = 5.86, p = 0.001, d = 2.39, even though the stimuli were perceived as misaligned. In sum, both groups showed a nearly complete shift of perceived tactile locations toward the locations of concurrently presented visual stimuli in trials in which they indicated perceptual alignment of the stimuli. However, the distributed-attention group showed a stronger ventriloquism effect than the modality-specific-attention group in trials in which the visual and the tactile stimuli had been perceived as spatially misaligned.

We further compared the proportion of trials in which visual and tactile stimuli were perceived as spatially aligned across groups, separately for each cued modality (Fig. 4). Unpaired t-tests indicated a significantly lower proportion of perceived spatial alignment responses in the modality-specific compared to the distributed-attention group for trials in which the visual stimulus had to be localized, t(10) = 3.47, p = 0.012, d = 1.55, and for trials in which the tactile stimulus had to be localized, t(10) = 3.19, p = 0.012, d = 1.43.

Fig. 4.

Expt. 1: Ventriloquism effect — perceived spatial alignment. Group mean proportion of responses indicating that the stimuli of a visual-tactile stimulus pair were perceived as spatially aligned. Data from trials in which the visual (orange) or tactile (blue) stimulus had to be localized are shown separately for the modality-specific-attention group (left side), which was informed about the response-relevant modality before the stimulation, and the distributed-attention group (right side), which was informed about the relevant modality after the stimulation. Error bars show standard errors of the mean. (For interpretation of the references to color in this figure legend, the reader is referred to the web version of this article.)

2.3. Summary

The first experiment tested whether the integration of visual and tactile spatial information is influenced by the necessity to attend to the locations of both stimuli. Participants localized visual or tactile stimuli presented either alone or with random spatial discrepancies between the two modalities. To manipulate attention, we varied the time point at which the response-relevant modality was cued: either before the stimulus pair was presented (modality-specific attention) or afterward (distributed attention). A small shift of visual localization toward a concurrently presented tactile stimulus emerged in both groups (only marginally significant in the modality-specific-attention group), but the effect was stronger in the distributed-attention group. The perceived location of tactile stimuli shifted considerably toward the location of a concurrently presented visual stimulus. When participants perceived visual-tactile stimulus pairs as spatially aligned, the relative tactile ventriloquism effect was maximal, that is, the perceived tactile stimulus location was shifted nearly completely toward the physical location of the visual stimulus in both groups. However, in trials in which the visual-tactile stimulus pairs were perceived as misaligned, the distributed-attention group showed a larger tactile ventriloquism effect than the modality-specific-attention group. Moreover, participants in the distributed-attention group perceived the stimuli more often as spatially aligned than participants in the modality-specific-attention group. In sum, the results are in agreement with our hypothesis that the integration of vision and touch can be enhanced by attending to both modalities at the same time.

3. Expt. 2: Attention effects on visual recalibration of touch

The second experiment tested the sensitivity of cross-modal recalibration to the attentional context. Previous studies indicate that the effect of modality-specific attention on cross-modal recalibration depends on the pair of sensory modalities involved. Auditory spatial perception is recalibrated independently of which modality is attended during the adaptation phase (Canon, 1970); proprioceptive space is only recalibrated when attention during the adaptation phase is directed toward vision (Kelso, Cook, Olson, & Epstein, 1975). Yet, whether the distribution of attention across both modalities strengthens cross-modal recalibration in the same way as cross-modal integration has, to our knowledge, not been tested for any combination of modalities.

Moreover, neither the spatial recalibration of touch by vision (Cardini & Longo, 2016; Samad & Shams, 2018) nor the recalibration of visual bumpiness (Ho, Serwe, Trommershäuser, Maloney, & Landy, 2009), slant (Ernst, Banks, & Bülthoff, 2000; van Beers, van Mierlo, Smeets, & Brenner, 2011), size (Atkins, Jacobs, & Knill, 2003; Gori, Mazzilli, Sandini, & Burr, 2011), gloss (Wismeijer, Gegenfurtner, & Drewing, 2012), motion (Atkins, Fiser, & Jacobs, 2001), and texture (Atkins et al., 2001) by haptics (active touch, Gibson, 1962) has been probed with any attentional manipulation. Based on our results on visual-tactile integration, we hypothesized that the spatial recalibration of touch by vision would be sensitive to the attentional context. We probed this hypothesis by varying the task participants performed during the adaptation phase in which they were exposed to spatially discrepant visual-tactile stimulus pairs in the same way as in Expt. 1. Additionally, we introduced a task that did not require participants to attend to the location of the stimuli. Participants either monitored the stimulus pairs for stimuli with a longer duration in both modalities (non-spatial-attention task) or they indicated the location of either the felt or the viewed stimulus (spatial task). Crucially, in the spatial task the response-relevant modality was again either cued before the stimulus pair was presented, allowing participants to attend to one modality (the modality-specific-attention task), or after the stimulus pair had been presented, encouraging participants to attend to both modalities (the distributed-attention task). We hypothesized that the need to attend to the locations of both signals at the same time would result in larger recalibration effects.

3.1. Methods

3.1.1. Participants

Thirty-six newly recruited persons (18–45 years old, mean age: 22.5 years; 17 male; 5 left-handed) participated in Expt. 2. Data of 17 additional participants were excluded or only partially collected, either due to problems following the task instructions (3 participants) or problems localizing the tactile stimuli (14 participants, indicated by localization performance indistinguishable from chance level). Note that difficulty localizing the tactile stimuli was independent of the participant’s age, sex, and task during the adaptation phase. High exclusion rates are common in studies requiring tactile localization (e.g., Azañón, Mihaljevic, & Longo, 2016; Schubert, Badde, Röder, & Heed, 2017).

3.1.2. Apparatus and stimuli

Apparatus and stimuli were the same as in Expt. 1. As in Expt. 1, the duration of visual and tactile stimuli was set based on individual tactile detection thresholds.

3.1.3. Tasks and procedure

Expt. 2 consisted of three phases (Fig. 5).

Fig. 5.

Design of Expt. 2: Visual recalibration of touch. The experiment had three phases: pre-adaptation, adaptation, and post-adaptation. Pre- and post-adaptation phases: participants localized visual and tactile stimuli presented alone. Adaptation phase: visual-tactile stimulus pairs were presented with a fixed spatial discrepancy. The visual stimulus was always located 30 mm closer to the elbow than the tactile stimulus. The task during the adaptation phase differed between groups. Non-spatial attention group: participants detected occasional stimulus pairs with a longer duration. Spatial attention groups: participants localized either the visual or the tactile stimulus in the visual-tactile stimulus pair. The localization tasks differed with respect to the time point at which the response-relevant modality was cued: the cue was presented either before (modality-specific attention) or after (distributed attention) the stimulus.

In the pre-adaptation phase, participants localized visual and tactile stimuli presented alone at one of the inner 5 of the 7 possible locations. Visual and tactile stimuli were randomly interleaved. The pre-adaptation phase comprised 5 blocks of 40 trials for a total of 200 trials. Each block consisted of 4 replications of each combination of location and tested modality.
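The block structure of the pre-adaptation phase can be checked with a short Python sketch. It assumes that the five inner locations are coded as integers from -2 to 2, because the spacing in mm is not specified here. The helper names (`make_localization_block`, `make_localization_phase`) are hypothetical. The sketch illustrates the stated trial counts and is not the authors' experiment code.

```python
import random

# Hypothetical integer coding of the 5 inner stimulus locations
# (0 = centre, negative = closer to the elbow); the true spacing is not given.
INNER_LOCATIONS = [-2, -1, 0, 1, 2]
MODALITIES = ["visual", "tactile"]
REPLICATIONS = 4  # per location x modality combination and block


def make_localization_block(rng: random.Random) -> list:
    """One shuffled block of unimodal localization trials (5 x 2 x 4 = 40)."""
    trials = [
        (loc, mod)
        for loc in INNER_LOCATIONS
        for mod in MODALITIES
        for _ in range(REPLICATIONS)
    ]
    rng.shuffle(trials)  # visual and tactile trials randomly interleaved
    return trials


def make_localization_phase(n_blocks: int = 5, seed: int = 0) -> list:
    """A pre- or post-adaptation phase: n_blocks blocks of 40 trials."""
    rng = random.Random(seed)
    return [make_localization_block(rng) for _ in range(n_blocks)]


phase = make_localization_phase()
print(len(phase), sum(len(block) for block in phase))  # 5 200
```

The same generator would also serve for the post-adaptation phase, which repeats this task.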

In the adaptation phase, visual-tactile stimulus pairs were presented with the visual stimulus located 30 mm closer to the elbow than the tactile stimulus. The stimulus pair was presented in one of 5 different locations, randomized across trials. The task during the adaptation phase differed between groups (Fig. 5, center panel). In the non-spatial task, participants indicated whether the current stimulus had a longer duration than the majority of the stimuli. These deviant stimulus pairs were presented in 10% of the trials and were 30 ms longer in duration than standard stimulus pairs. In the spatial, modality-specific-attention task, participants localized either the visual or the tactile stimulus of the stimulus pair. The cue indicating the to-be-localized modality was displayed prior to stimulus presentation. In the spatial, distributed-attention task, participants again localized either the visual or the tactile stimulus, but the cue – indicating the response-relevant modality – was displayed after stimulus presentation. Participants were pseudo-randomly assigned to one of the three tasks, resulting in 12 participants per group. The non-spatial adaptation task comprised 12 blocks of 50 trials for a total of 600 trials. The two spatial adaptation tasks comprised 7 blocks of 50 trials for a total of 350 trials. Responses in the spatial adaptation tasks (joystick-controlled positioning of a cursor) took longer to complete than those in the non-spatial adaptation task (button presses). The number of blocks was chosen so that the duration of the adaptation phase was similar across tasks. Within each task, trials were pseudo-randomized such that all possible stimulus pairs and, for the spatial tasks, cued modalities were presented equally often per block.
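The counterbalancing of a spatial adaptation block can be sketched in the same way. The sketch assumes hypothetical indices for the five pair locations. It encodes the fixed 30 mm offset using the document's sign convention (negative = toward the elbow). Scheduling of the 10% deviant trials in the non-spatial task is not modeled, because the text does not say how deviants were distributed across locations.

```python
import random

PAIR_LOCATIONS = [-2, -1, 0, 1, 2]  # hypothetical indices of the 5 pair locations
VISUAL_OFFSET_MM = -30              # visual 30 mm closer to the elbow (negative = elbow)
CUED_MODALITIES = ["visual", "tactile"]
TRIALS_PER_BLOCK = 50


def make_spatial_adaptation_block(cue_timing: str, rng: random.Random) -> list:
    """One block of a spatial adaptation task.

    cue_timing: "before" the pair (modality-specific attention) or
    "after" the pair (distributed attention).
    """
    if cue_timing not in ("before", "after"):
        raise ValueError("cue_timing must be 'before' or 'after'")
    combos = [(loc, mod) for loc in PAIR_LOCATIONS for mod in CUED_MODALITIES]
    reps = TRIALS_PER_BLOCK // len(combos)  # 50 // 10 = 5 per combination
    trials = [
        {
            "pair_location": loc,
            "cued_modality": mod,
            "cue_timing": cue_timing,
            "visual_offset_mm": VISUAL_OFFSET_MM,
        }
        for loc, mod in combos
        for _ in range(reps)
    ]
    rng.shuffle(trials)
    return trials


# Phase totals stated in the text (blocks x trials per block).
TOTAL_TRIALS = {"non-spatial": 12 * TRIALS_PER_BLOCK, "spatial": 7 * TRIALS_PER_BLOCK}

block = make_spatial_adaptation_block("after", random.Random(1))
print(len(block), TOTAL_TRIALS)  # 50 {'non-spatial': 600, 'spatial': 350}
```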

In the post-adaptation phase, participants performed 5 blocks of 40 trials of the same task as in the pre-adaptation phase, i.e., they localized visual and tactile stimuli presented alone.

Trial timing was identical to Expt. 1. In the non-spatial attention task, a response cue rather than a cursor was presented 200 ms after the stimulus. Participants could rest briefly after each phase of the experiment. On average, participants completed the experiment within 120 min; the pre- and post-adaptation phases took around 25 min each, and the adaptation phase took 40 min.

3.1.4. Data analysis

To measure the recalibration effect, we compared localization performance for visual and tactile stimuli presented alone during the pre- and post-adaptation phases. For each participant, testing phase, and stimulus modality, we regressed reported stimulus locations against true stimulus locations. To this end, the center of the possible stimulus locations was coded as location zero, and locations closer to the elbow were coded as negative locations (that is, leftward for right-handed participants tested using their non-dominant left arm and rightward for left-handed participants). A ventriloquism aftereffect shifts perceived location and hence was predicted to affect the regression intercept. For each stimulus modality, an analysis of variance was conducted on the regression intercepts with two factors: testing phase (pre- and post-adaptation) and group (non-spatial, spatial with modality-specific attention, and spatial attention distributed across modalities). Significant interaction effects were followed up with paired t-tests comparing the intercept between pre- and post-adaptation phases separately for each adaptation task group. We were equally interested in testing for the presence and for the absence of differences between pre- and post-adaptation phases. Therefore, non-significant comparisons were further followed up by a t-test for equivalence (Wellek, 2010), which enabled us to formulate the absence of a difference as an alternative hypothesis and consequently rendered it testable within the null-hypothesis significance-testing framework. For this test, we used a liberal region of similarity, comparable to 0.5 SD. We again used permutation tests to derive distribution-independent p-values, adjusted for multiple comparisons according to Holm (1979), and assumed significance at a Type I error rate of 5%.

3.2. Results

We compared the localization of visual and tactile stimuli presented alone between the pre- and post-adaptation phases (Figs. 6 and 7). If visual-tactile recalibration is enhanced by distributing attention across both modalities, this predicts a larger shift from pre- to post-adaptation for the distributed-attention than for the modality-specific-attention group.

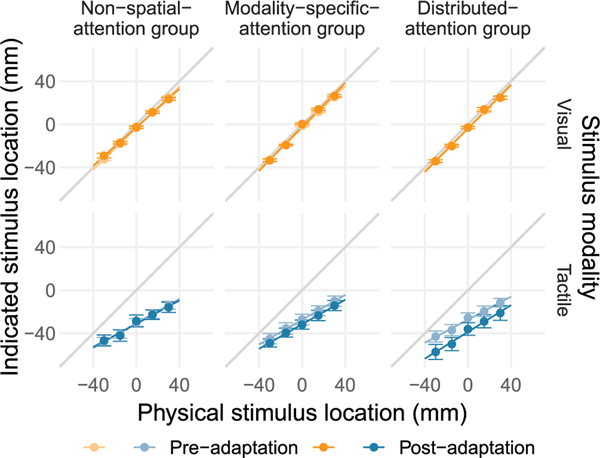

Fig. 6.

Expt. 2: Visual and tactile localization performance in the pre- and post-adaptation phases. Group mean localization responses are shown as a function of the physical stimulus location for visual (top row, orange hues) and tactile stimuli (bottom row, blue hues). Negative numbers indicate locations closer to the elbow. Localization responses are shown for the pre- (light shades) and post-adaptation (dark shades) phases. Lines are based on group averages of individual participants’ regression intercept and slope parameters. The unity line indicates perfect localization. Error bars show standard errors of the mean. (For interpretation of the references to color in this figure legend, the reader is referred to the web version of this article.)

Fig. 7.

Expt. 2: Ventriloquism aftereffect. Group mean adaptation-induced shifts in the perceived location of visual (orange) and tactile (blue) stimuli. The shifts are calculated by subtracting the pre-adaptation from the post-adaptation regression intercepts from Fig. 6. Negative numbers indicate a shift of localization responses toward the elbow. Error bars show standard errors of the mean. (For interpretation of the references to color in this figure legend, the reader is referred to the web version of this article.)

For the localization of visual stimuli, neither significant main effects nor an interaction between testing phase and adaptation task group emerged.

For the localization of tactile stimuli, analysis of variance revealed a main effect of testing phase, F(1,33) = 12.05, p = 0.003, η² = 0.028. This effect was modulated by the task during the adaptation phase, as indicated by a significant interaction between testing phase and adaptation task group, F(2,33) = 4.45, p = 0.020, η² = 0.021. We followed up on this significant interaction by comparing tactile localization between the pre- and post-adaptation phases separately for each group. No significant difference between testing phases was found for the non-spatial attention group, t(11) = 0.18, p = 0.882, d = 0.052, or for the spatial, modality-specific-attention group, t(11) = 1.29, p = 0.480, d = 0.372. Follow-up t-tests for equivalence confirmed equivalent tactile localization performance in pre- and post-adaptation trials for the non-spatial attention group, T = 0.18, p<0.05, but not for the modality-specific-attention group, T = 1.29, p>0.05. A significant difference in tactile localization performance between testing phases was found for the distributed-attention group, t(11) = 4.31, p = 0.006, d = 1.243. In this group, a ventriloquism aftereffect emerged: after adaptation, tactile localization was shifted toward the elbow, that is, in the direction of the location of the visual stimulus during the adaptation phase.

3.3. Summary

Expt. 2 tested whether visual-tactile spatial recalibration is sensitive to the focus of attention during the adaptation phase. To this end, we varied the task-relevance of spatial and modality-specific information during the adaptation phase across groups. A significant tactile ventriloquism aftereffect – indicated by a shift in the localization of tactile stimuli presented alone after the adaptation phase – emerged only when participants were required to attend to the location of both visual and tactile stimuli during the adaptation phase. A smaller, non-significant effect emerged when participants could focus on spatial information from one modality alone, and equivalent tactile localization in pre- and post-adaptation phases was found when the task during the adaptation phase required no spatial encoding. We found no significant visual ventriloquism aftereffects. One possible explanation for this is that any visual shift would have shifted both the stimulus and the cursor display used to report the perceived location. Alternatively, recalibration might have been restricted to the tactile modality due to the far higher visual than tactile reliability. Our results indicate that it is necessary to attend to spatial information from both modalities to elicit reliable visual-tactile recalibration.

4. Model: causal inference and attention effects on visual-tactile integration and recalibration

Both experiments demonstrated an increase of visual-tactile interaction effects with distributed compared to modality-specific attention. Yet, distributing attention across both modalities might have enhanced visual-tactile interactions in different ways: (1) bottom-up, by affecting the sensory data, and (2) top-down, by changing participants’ prior expectation of a shared source for vision and touch, i.e., the stimulus-independent expectation that the signals correspond and should be integrated.

First, our manipulation of attention might have influenced visual-tactile interactions by affecting the reliability of the sensory signals. If attention toward both modalities uses a common, limited resource (Lavie, 1995; Driver & Spence, 1998; Spence & Driver, 2004; Alsius, Navarra, & Soto-Faraco, 2007), the need to attend to both modalities could reduce the resources allocated to each modality. In contrast, under modality-specific attention most resources can be allocated to the response-relevant modality. Hence, the reliability of the response-relevant modality might be higher in the modality-specific than in the distributed-attention condition (Mishra & Gazzaley, 2012), similar to the reduction of sensory noise that is achieved by allocating selective attention within one modality (Carrasco, 2011).

Second, distributed attention might have affected participants’ a priori expectation of whether the two signals originate from the same source and thus belong together. When attending to only one modality, humans might neither expect nor even check whether signals from other modalities come from the same source and should be integrated with the attended signal. Consistent with this idea, frontal cortical areas that have been associated with causal inference (Cao, Summerfield, Park, Giordano, & Kayser, 2019) are active under distributed but not under modality-specific attention (Johnson & Zatorre, 2006; Degerman et al., 2007; Salo, Salmela, Salmi, Numminen, & Alho, 2017).

Consistent with an attenuating effect of distributed attention on sensory reliability, activity in the sensory cortices is reduced when attention is distributed across modalities compared to modality-specific attention (Mishra & Gazzaley, 2012). In turn, modality-specific attention seems to improve the reliability of sensory signals: the weighting of auditory stimuli during auditory-tactile integration increases when attention is directed toward audition (Vercillo & Gori, 2015) and the direction of modality-specific attention influences the attended signal’s neural weight (Rohe & Noppeney, 2018). However, a change in modality weighting only provides conclusive evidence of an impact of attention on reliability if participants could be sure that the stimuli came from the same source and, thus, integrated both signals in every trial of the experiment. In causal-inference studies like ours, participants cannot assume that in every trial both stimuli originated from the same source. Thus, a change in modality weighting could result from changes in reliability as well as from changes in the expectation of a shared source for both signals. Hence, the effect of attention on both variables needs to be estimated. A previous visual-auditory ventriloquism study that estimated both parameters found that focusing attention on one rather than both modalities changed only the sensory reliability of the visual stimulus (Odegaard, Wozny, & Shams, 2016). However, the model used to estimate these parameters assumed the same visual and auditory reliability whether modality-specific attention was directed toward the visual or toward the auditory stimulus. The analysis thus compared the average of modality-specific, focused and averted attention with distributed attention, which renders the results difficult to interpret.
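The identifiability problem is easiest to see under forced fusion, where each signal's weight is its share of the summed reliabilities (reliability = 1/σ²). The hypothetical helpers below map noise SDs to a visual weight and back again. The inversion is valid only if both signals are integrated on every trial. That assumption does not hold in causal-inference designs, where the same empirical weight can also reflect a change in the common-cause prior.

```python
import math


def visual_weight(sigma_v: float, sigma_t: float) -> float:
    """Forced-fusion weight of vision: its share of the summed reliabilities (1/sigma^2)."""
    r_v, r_t = 1.0 / sigma_v**2, 1.0 / sigma_t**2
    return r_v / (r_v + r_t)


def noise_ratio_from_weight(w_v: float) -> float:
    """sigma_t / sigma_v implied by a visual weight, valid ONLY under forced fusion.

    From w_v = sigma_t^2 / (sigma_v^2 + sigma_t^2) it follows that
    w_v / (1 - w_v) = sigma_t^2 / sigma_v^2.
    """
    if not 0.0 < w_v < 1.0:
        raise ValueError("w_v must lie strictly between 0 and 1")
    return math.sqrt(w_v / (1.0 - w_v))


w = visual_weight(1.0, 2.0)          # vision dominates (weight 0.8)
ratio = noise_ratio_from_weight(w)   # recovers sigma_t / sigma_v of about 2
same_w = visual_weight(2.0, 4.0)     # scaling both SDs leaves the weight unchanged
```

The weight depends only on the ratio of the two SDs. Under forced fusion, therefore, a weight change reveals a change in relative reliability, but it cannot reveal the absolute noise level of either modality.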

We modeled the data from Expt. 1 on visual-tactile integration to determine whether the need to attend to both modalities affected participants’ causal-inference strategy, each modality’s sensory noise, participants’ expectation of a common source for visual and tactile signals, or all of these. We first compared a large set of models to determine participants’ causal-inference strategy. We then evaluated the parameters of the best-fitting model, to test whether our attentional manipulation affected visual and tactile sensory noise and the prior expectation of a common cause for both sensory signals. Finally, we used simulations with the best-fitting model and a corresponding model of cross-modal recalibration to characterize the influence of changes in both sensory reliability and the expected probability of a common source on visual-tactile integration and recalibration effects.

4.1. Model comparison

In Appendix A, we describe twenty models for the data of Expt. 1. We begin with the ideal-observer causal-inference model of cue integration (Körding et al., 2007; Sato et al., 2007), but also include multiple variants of the model (for an overview of the model elements and variants, see Fig. 12). Data from both groups were fitted best by the same model, which suggests that the distribution of attention across modalities had no effect on participants’ causal inference strategy. The best-fitting model indicated that localization data in both groups were in accordance with optimal Bayesian causal inference (Körding et al., 2007). Yet, both groups based their spatial-alignment responses on a heuristic: they reported the stimuli as being spatially aligned when the two optimal location estimates were sufficiently close.
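The core computations of the ideal-observer model can be sketched in Python. The sketch follows the standard formulation of Körding et al. (2007): Gaussian sensory noise, a Gaussian spatial prior, model averaging for the location estimates, and a fixed distance criterion for the unity judgment. The prior parameters (`mu_p`, `sigma_p`) and the criterion value are assumptions, and names such as `CIParams` and `judged_aligned` are hypothetical. This is not the fitted model from Appendix A, which contains further elements (Fig. 12).

```python
import math
from dataclasses import dataclass


@dataclass
class CIParams:
    sigma_v: float          # visual sensory noise SD
    sigma_t: float          # tactile sensory noise SD
    p_common: float         # prior probability of a common cause (pCC)
    mu_p: float = 0.0       # mean of the Gaussian spatial prior (assumed)
    sigma_p: float = 20.0   # SD of the Gaussian spatial prior (assumed)


def posterior_common(x_v: float, x_t: float, p: CIParams) -> float:
    """p(C=1 | x_v, x_t) for Gaussian noise and a Gaussian spatial prior."""
    a, b, c = p.sigma_v**2, p.sigma_t**2, p.sigma_p**2
    d = a * b + a * c + b * c
    like_c1 = math.exp(-0.5 * ((x_v - x_t) ** 2 * c + (x_v - p.mu_p) ** 2 * b
                               + (x_t - p.mu_p) ** 2 * a) / d) / (2 * math.pi * math.sqrt(d))
    like_c2 = math.exp(-0.5 * ((x_v - p.mu_p) ** 2 / (a + c) + (x_t - p.mu_p) ** 2 / (b + c))) \
        / (2 * math.pi * math.sqrt((a + c) * (b + c)))
    num = like_c1 * p.p_common
    return num / (num + like_c2 * (1 - p.p_common))


def estimates(x_v: float, x_t: float, p: CIParams):
    """Model-averaged visual and tactile location estimates plus p(C=1 | x)."""
    a, b, c = p.sigma_v**2, p.sigma_t**2, p.sigma_p**2
    fused = (x_v / a + x_t / b + p.mu_p / c) / (1 / a + 1 / b + 1 / c)  # one source
    seg_v = (x_v / a + p.mu_p / c) / (1 / a + 1 / c)                      # two sources
    seg_t = (x_t / b + p.mu_p / c) / (1 / b + 1 / c)
    post = posterior_common(x_v, x_t, p)
    return post * fused + (1 - post) * seg_v, post * fused + (1 - post) * seg_t, post


def judged_aligned(x_v: float, x_t: float, p: CIParams, criterion: float) -> bool:
    """Heuristic unity judgment: 'aligned' if the two estimates are close enough."""
    s_v, s_t, _ = estimates(x_v, x_t, p)
    return abs(s_v - s_t) < criterion


params = CIParams(sigma_v=1.0, sigma_t=5.0, p_common=0.5)
s_v, s_t, post = estimates(-5.0, 5.0, params)  # visual 10 units closer to the elbow
print(round(post, 2), round(s_t, 2), judged_aligned(-5.0, 5.0, params, criterion=2.0))
```

The unity response reads out the distance between the two optimal estimates rather than thresholding the posterior p(C=1 | x). This is the heuristic described above.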

4.2. Model parameters

The parameters of the best-fitting model revealed that our manipulation of modality-specific attention affected both the sensory noise and the common-cause prior, participants’ expectation of a shared source for visual and tactile signals (Fig. 8). Participants who were forced to attend to both stimuli showed higher tactile sensory uncertainty and a stronger expectation of the two signals sharing the same source (see Appendix A for statistical results). Prior studies demonstrated that changes in sensory noise do not induce changes in the probability a priori assigned to a common source (Beierholm, Quartz, & Shams, 2009), suggesting that modality-specific attention influenced sensory reliabilities and the expectation of a shared source independent of each other.

Fig. 8.

Group means of parameter estimates for the best-fitting model. (A) σv,VT,att+ and σv,VT, indicative of the reliability of attended visual stimuli that were presented with a tactile stimulus. (B) σt,VT,att+ and σt,VT, indicative of the reliability of attended tactile stimuli that were presented in the context of visual-tactile stimulation. (C) pCC: participants’ a priori probability that both stimuli share a common source. Error bars show standard errors of the mean. See Tables S1 and S2 for single-participant parameter estimates.

4.3. Model simulations — visual-tactile integration

Our simulations using the best-fitting model revealed that even though both reliabilities and common cause priors were affected by our attentional manipulation, only an increase in the prior probability of vision and touch sharing a source predicted increases across all conditions for both measures of visual-tactile ventriloquism (Figs. 9 and 10). In contrast, changes in sensory reliability affected both measures of visual-tactile integration differently, dependent on the stimulus condition, and mostly at sensory reliabilities higher than the ones identified for touch in our experiments (see Appendix A, Figs. 9 and 10). In sum, the simulation results indicate that the influence of attention on visual-tactile integration effects in Expt. 1 is driven mostly by an attention-induced modulation of participants’ prior expectation that visual and tactile signals originate from the same source.
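Simulations of the kind shown in Figs. 9 and 10 can be approximated by Monte Carlo sampling of noisy measurements. The sketch reimplements the Körding et al. (2007) computations so that it runs on its own. It varies only pCC. All settings are assumptions in arbitrary units: a spatial prior with mean 0 and SD 20, a discrepancy of -20, σv = 1, and σt = 5. The sketch reports the mean posterior probability of a common cause, whereas the best-fitting model derived alignment responses from a distance heuristic.

```python
import math
import random


def ci_tactile(x_v, x_t, s_v, s_t, p_cc, mu_p=0.0, s_p=20.0):
    """Model-averaged tactile estimate and posterior of a common cause for one trial.

    Assumes Gaussian sensory noise, a Gaussian spatial prior (mu_p, s_p) and 0 <= p_cc < 1.
    """
    a, b, c = s_v**2, s_t**2, s_p**2
    d = a * b + a * c + b * c
    # Likelihoods under one (l1) or two (l2) sources; the shared 1/(2*pi) cancels.
    l1 = math.exp(-0.5 * ((x_v - x_t) ** 2 * c + (x_v - mu_p) ** 2 * b
                          + (x_t - mu_p) ** 2 * a) / d) / math.sqrt(d)
    l2 = math.exp(-0.5 * ((x_v - mu_p) ** 2 / (a + c) + (x_t - mu_p) ** 2 / (b + c))) \
        / math.sqrt((a + c) * (b + c))
    post = l1 * p_cc / (l1 * p_cc + l2 * (1 - p_cc))
    fused = (x_v / a + x_t / b + mu_p / c) / (1 / a + 1 / b + 1 / c)
    seg_t = (x_t / b + mu_p / c) / (1 / b + 1 / c)
    return post * fused + (1 - post) * seg_t, post


def simulate(discrepancy, s_v, s_t, p_cc, n=20_000, seed=0):
    """Mean tactile shift and mean posterior of a common cause.

    discrepancy = visual minus tactile location (negative = visual closer to the
    elbow); the shift is the estimate minus the true tactile location.
    """
    rng = random.Random(seed)  # same seed -> same measurements for every p_cc
    true_v, true_t = discrepancy / 2, -discrepancy / 2
    shift_sum = post_sum = 0.0
    for _ in range(n):
        x_v = rng.gauss(true_v, s_v)
        x_t = rng.gauss(true_t, s_t)
        est_t, post = ci_tactile(x_v, x_t, s_v, s_t, p_cc)
        shift_sum += est_t - true_t
        post_sum += post
    return shift_sum / n, post_sum / n


for p_cc in (0.2, 0.5, 0.8):
    print(p_cc, simulate(-20.0, 1.0, 5.0, p_cc))
```

Part of each simulated shift reflects shrinkage toward the prior mean. Differences across pCC values are therefore more informative than the absolute shifts. Looping over `s_v` and `s_t` as well would reproduce the layout of the simulation grids.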

Fig. 9.

Simulations of the influence of sensory reliabilities and common-cause priors on the ventriloquism effect — localization shifts. Simulated shifts in the perceived locations of (A) visual and (B) tactile stimuli presented as part of a visual-tactile stimulus pair with varying degree of cross-modal spatial discrepancy (columns; negative numbers indicate pairs with the visual stimulus located closer to the elbow than the tactile stimulus). The intensity and direction of the perceptual shifts (color scale; negative numbers indicate a shift toward the elbow) are shown as a function of the standard deviation of visual (σv) and tactile (σt) sensory noise and the prior probability of visual and tactile signals sharing a source (pCC, rows). (For interpretation of the references to color in this figure legend, the reader is referred to the web version of this article.)

Fig. 10.

Simulations of the influence of sensory reliabilities and common-cause priors on the tactile ventriloquism effect — perceived spatial alignment. Simulated probabilities of perceiving visual-tactile stimulus pairs with varying degree of cross-modal spatial discrepancy (columns; negative numbers indicate pairs with the visual stimulus located closer to the elbow than the tactile stimulus) as originating from a common source. Probabilities (color scale) are shown as a function of the standard deviation of visual (σv) and tactile (σt) sensory noise and the prior probability of visual and tactile signals sharing a source (pCC, rows). (For interpretation of the references to color in this figure legend, the reader is referred to the web version of this article.)

4.4. Model simulations — visual-tactile recalibration

Simulations using a recalibration version of the best-fitting model (Appendix A) with three different learning rules all predicted higher recalibration of touch with an increasing expectation of the two signals sharing a source and with increasing learning rate (Fig. 11). In contrast, the observed decrease in the reliability of the to-be-localized modality predicted an increase in recalibration effects for sensory signals with a comparatively high reliability, but not for sensory signals with a low reliability as was the case for touch in our study (see Appendix A for a discussion of the rationale behind the effect). Consequently, the simulation results indicate that the influence of attention on visual-tactile recalibration that we observed in Expt. 2 was driven solely by an increase in participants’ prior expectation that the visual and tactile signals share a source.
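A recalibration simulation can be sketched as a trial-by-trial update of a tactile bias. The learning rule below is a hypothetical delta rule that resembles the third variant in Fig. 11, in which the learning rate is scaled by the posterior probability of a common cause. It is neither the Sato et al. (2007) model nor the Appendix A implementation. The stimulus locations in mm, the noise SDs, the spatial prior, and the learning rates are all assumptions. Under these settings, the final bias should become more negative (a larger shift toward the elbow) as pCC or the learning rate α increases.

```python
import math
import random


def posterior_and_fused(x_v, x_t, s_v, s_t, p_cc, mu_p=0.0, s_p=100.0):
    """Posterior of a common cause and the fused (single-source) location estimate."""
    a, b, c = s_v**2, s_t**2, s_p**2
    d = a * b + a * c + b * c
    l1 = math.exp(-0.5 * ((x_v - x_t) ** 2 * c + (x_v - mu_p) ** 2 * b
                          + (x_t - mu_p) ** 2 * a) / d) / math.sqrt(d)
    l2 = math.exp(-0.5 * ((x_v - mu_p) ** 2 / (a + c) + (x_t - mu_p) ** 2 / (b + c))) \
        / math.sqrt((a + c) * (b + c))
    post = l1 * p_cc / (l1 * p_cc + l2 * (1 - p_cc))
    fused = (x_v / a + x_t / b + mu_p / c) / (1 / a + 1 / b + 1 / c)
    return post, fused


def tactile_aftereffect(p_cc, alpha, s_v=2.0, s_t=10.0, n_trials=350, seed=0):
    """Tactile bias (mm) after adaptation under a hypothetical delta rule.

    On each trial the bias moves toward the fused estimate, scaled by the
    learning rate alpha and by the posterior probability of a common cause.
    Negative values indicate a shift toward the elbow.
    """
    rng = random.Random(seed)
    tactile_locs = [-40.0, -20.0, 0.0, 20.0, 40.0]  # assumed locations in mm
    bias = 0.0
    for i in range(n_trials):
        s_tac = tactile_locs[i % len(tactile_locs)]
        s_vis = s_tac - 30.0                 # visual stimulus 30 mm closer to the elbow
        x_v = rng.gauss(s_vis, s_v)
        x_t = rng.gauss(s_tac + bias, s_t)   # biased, noisy tactile measurement
        post, fused = posterior_and_fused(x_v, x_t, s_v, s_t, p_cc)
        bias += alpha * post * (fused - x_t)
    return bias


for p_cc in (0.2, 0.5, 0.8):
    print(p_cc, round(tactile_aftereffect(p_cc, alpha=0.005), 2))
```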

Fig. 11.

Simulated tactile ventriloquism aftereffect. Estimated shifts in the localization of tactile stimuli after recalibration (color scale) are shown as a function of participants’ estimates of the probability of visual and tactile signals sharing a source (pCC) and the standard deviation of the (attended) tactile stimulus (σt,VT for the distributed-attention group, σt,VT,att+ for the modality-specific-attention group) in visual-tactile trials during the adaptation phase. We additionally varied the decrease in tactile reliability (i.e., the increase in tactile standard deviation, σt,att− − σt,att+) associated with attention toward the visual stimulus and the learning rate α. (A,B) Results from the optimal Bayesian causal-inference model of adaptation (Sato et al., 2007), for (A) the distributed- and (B) the modality-specific-attention group. (C, D) Results from a model variant in which recalibration only occurs in trials in which the two sensory signals were judged as sharing a source. (E, F) Results from a model variant in which the learning rate is modulated by the posterior probability of a common cause. The red markers indicate the parameter estimates derived for visual-tactile integration (squares: distributed-attention group, circles: modality-specific-attention group). (For interpretation of the references to color in this figure legend, the reader is referred to the web version of this article.)

5. General discussion

The current study demonstrated enhanced visual-tactile integration and recalibration effects under distributed attention across modalities compared to selective attention toward one modality. Causal-inference modeling revealed that the enhancement of both effects under distributed attention was due to an increase in participants’ common-cause prior, the stimulus-independent estimate of the probability of a shared source for visual and tactile signals. Distributed attention additionally led to a decrease in sensory reliability of the attended modality compared to modality-specific attention. However, our simulations indicate that this reduced reliability cannot account for the enhanced integration and recalibration effects we observed with distributed attention.

5.1. Prior expectations of a shared source for vision and touch

Cross-modal integration and recalibration should occur only if both signals share the same source; sensory signals from different sources should be kept separate (Welch & Warren, 1980; Körding et al., 2007; Sato et al., 2007, reviewed in Shams & Beierholm, 2010; Deroy, Spence, & Noppeney, 2016; Chen & Spence, 2017). Thus, the brain should base cross-modal integration and recalibration on causal inference about the source of the two signals, which for localization responses was confirmed by our modeling efforts. Causal inference is influenced top-down by the prior probability of a common cause, the signal-independent expectation that the two signals belong together because they originate from the same event or object. Here, the need to attend to both vision and touch strengthened the common-cause prior for vision and touch compared to modality-specific attention.

Priors allow observers to use their knowledge about the structure of the world to achieve the best interpretation of the sensory signals they receive. Thus, priors are typically characterized as long-term statistics of the environment (Girshick, Landy, & Simoncelli, 2011). From this viewpoint, the observed effect matches an environment in which we encounter visual-tactile signals from the same source more often when we attend to both modalities than when we switch attention between vision and touch. Such a statistical relation is impossible to verify but appears reasonable. Touch is tied to the body, but our gaze is usually directed toward objects in the world. Thus, most of the time concurrently arriving visual and tactile signals will have different sources. Moreover, most of the time attention will likely be directed only toward one of these modalities, as vision and touch draw from shared attentional resources (Wahn & König, 2017). To perceive visual-tactile signals from the same source, gaze has to be shifted toward the body. Thus, visual-tactile spatial information from the same source is rarely perceived coincidentally but rather requires an action that would imply some degree of attention to both modalities.

Our results reveal that observers do not use a single, fixed prior probability of vision and touch sharing a source, but adapt their expectation to the situation. Consistently, previous studies showed that common-source priors vary with the external context. The expectation of a common source for cross-modal signals is higher in situations that participants learned to associate with corresponding cross-modal signals (Gau & Noppeney, 2016). In turn, our results suggest that distributed attention across vision and touch forms an internal context associated with visual and tactile signals from the same source.

Our finding of a relatively weak link between visual and tactile locations in the case of modality-specific attention stands in marked contrast to findings indicating that visual and tactile texture (Lunghi, Binda, & Morrone, 2010) and motion (Hense, Badde, & Röder, 2019) information is integrated even in the absence of awareness. This contrast suggests that prior assumptions about the shared origin of visual and tactile signals depend on the feature in question, again pointing toward flexible rather than rigid priors about the relation between visual and tactile signals.

5.2. Sensory reliability

Distributed attention across vision and touch was associated with a decrease in sensory reliability for the attended, response-relevant sensory modality. However, our model simulations revealed that a reduction in reliability does not predict a universal enhancement of visual-tactile integration and recalibration effects. Localization shifts resulting from visual-tactile integration and recalibration increased initially with a reduction in tactile reliability, but declined with greater reductions. Thus, there is a ‘sweet spot’ in which the effects of cross-modal integration and recalibration are maximal. This non-linearity clearly distinguishes cases of optimal cue integration (Landy et al., 1995; Yuille & Bülthoff, 1996; Ernst & Banks, 2002; Trommershäuser et al., 2011) from causal-inference-based cue integration (Körding et al., 2007; Sato et al., 2007) as optimal cue integration predicts a monotonic increase in effects with a decrease in the reliability of the response-relevant modality. Hence, apparent mismatches between changes in sensory reliability and the size of cross-modal integration effects (Helbig & Ernst, 2008) might indicate that participants performed causal inference and did not fully rely on the integrated estimate as is the case for optimal cue integration.

Moreover, in our simulation a decrease in sensory reliability led to an increase in the perceived alignment between the two modalities for stimuli with large cross-modal discrepancies but had the opposite effect on stimuli with small cross-modal discrepancies. Thus, the effects of changes in reliability on integration and recalibration depend crucially on the size of the cross-modal conflict. Attention increases cue reliability and thus, for congruent stimuli, will decrease integration effects, whereas for incongruent stimuli, attention will enhance integration effects.
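How the effect of reliability depends on cross-modal conflict can be checked by evaluating the posterior probability of a common cause at noise-free measurements. The settings are assumptions in arbitrary units: σv = 1, pCC = 0.5, and a Gaussian spatial prior with mean 0 and SD 20. With these settings, raising the tactile SD lowers the posterior for a congruent pair but raises it for a pair with a discrepancy of 20 units. The helper name `p_common` and all parameter values are illustrative. Evaluating at the true locations, instead of averaging over noisy samples, is a simplification.

```python
import math


def p_common(x_v, x_t, s_v, s_t, p_cc=0.5, mu_p=0.0, s_p=20.0):
    """Posterior probability of a common cause for one pair of measurements."""
    a, b, c = s_v**2, s_t**2, s_p**2
    d = a * b + a * c + b * c
    l1 = math.exp(-0.5 * ((x_v - x_t) ** 2 * c + (x_v - mu_p) ** 2 * b
                          + (x_t - mu_p) ** 2 * a) / d) / math.sqrt(d)
    l2 = math.exp(-0.5 * ((x_v - mu_p) ** 2 / (a + c) + (x_t - mu_p) ** 2 / (b + c))) \
        / math.sqrt((a + c) * (b + c))
    return l1 * p_cc / (l1 * p_cc + l2 * (1 - p_cc))


TACTILE_SDS = (2.0, 4.0, 6.0)  # decreasing tactile reliability
results = {}
for discrepancy in (0.0, 20.0):
    # Noise-free measurements at the true locations; visual closer to the elbow.
    x_v, x_t = -discrepancy / 2, discrepancy / 2
    results[discrepancy] = [p_common(x_v, x_t, 1.0, s_t) for s_t in TACTILE_SDS]

print({k: [round(p, 3) for p in v] for k, v in results.items()})
```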

5.3. Attentional resources

Our study reveals that visual-tactile interactions vary with the modality-focus of selective attention. Yet, only the distribution but not the amount of attentional resources should have been affected by our manipulation. The influence of a withdrawal of attentional resources on cross-modal interactions has been studied repeatedly to investigate whether cross-modal integration and recalibration functionally depend on attentional resources (for reviews on the interplay between attention and multisensory integration in general, see Talsma et al., 2010; Koelewijn, Bronkhorst, & Theeuwes, 2010; Tang et al., 2016; Macaluso et al., 2016; Wahn & König, 2017).

One of the best-known arguments that audio-visual integration depends on attentional resources is the breakdown of the McGurk effect, an indicator of the integration of visual and auditory speech information, when attentional resources are withdrawn from both modalities by an additional task (Alsius, Navarra, Campbell, & Soto-Faraco, 2005; Alsius et al., 2007). Yet this breakdown can be explained by the decrease in visual and auditory reliability that accompanies the withdrawal of attention (Carrasco, 2011; Shinn-Cunningham, 2008). Our simulations show that for cross-modal stimuli with little conflict between the sensory signals, such as the stimuli in a McGurk task, a reduction in reliability will lower the inferred probability that the two signals originated from the same source. As a consequence, binary effects in which the two sensory signals are either fused or kept separate, such as the McGurk effect, will be reduced or eliminated under increased attentional load.

In contrast, our simulations predict hardly any effect of a withdrawal of attention from both modalities, i.e., a decrease in both sensory reliabilities, on cross-modal interaction effects that reflect the relative weighting of the two sensory signals. Consistent with this prediction, ventriloquism-like shifts in the localization of auditory stimuli were unaffected by the direction of attention required by an additional attention-demanding task (Bertelson, Vroomen, de Gelder, & Driver, 2000), and vision recalibrates auditory spatial perception even when attentional resources are reduced by an additional attention-diverting task (Eramudugolla, Kamke, Soto-Faraco, & Mattingley, 2011) or are absent altogether, as in the non-attended hemifield of hemineglect patients (Passamonti, Frissen, & Làdavas, 2009). Thus, the absence of an attentional modulation of cross-modal integration or recalibration effects by no means implies that attention had no effect on the sensory signals.
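
A simplified worked equation shows why weighting-based effects are insensitive to a joint loss of reliability. Under forced fusion, the weight given to vision depends only on the ratio of the two noise levels; if withdrawing attention scaled both noise standard deviations by the same hypothetical factor k > 1 (an assumption for illustration, ignoring the spatial prior), the relative weighting would be unchanged:

```latex
w_v = \frac{1/\sigma_v^2}{1/\sigma_v^2 + 1/\sigma_t^2}
    = \frac{\sigma_t^2}{\sigma_v^2 + \sigma_t^2}
    = \frac{(k\sigma_t)^2}{(k\sigma_v)^2 + (k\sigma_t)^2}
```

In the full causal-inference model, the posterior probability of a common cause still changes with k, so this equation captures only the weighting component of the prediction.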

Moreover, our study reveals that the focus of attention influences the prior expectation of a common source for vision and touch. A withdrawal of attention might well be associated with even lower prior expectations that vision and touch, or vision and other body-related senses, share a common cause, simply because observers might be less likely to evaluate whether signals that are not currently relevant originate from the same source. This potential reduction in the observer’s common-cause prior, coupled with the low reliability of proprioception, might explain why visual-proprioceptive recalibration declines substantially when attentional resources are diverted by a mental-arithmetic task (Redding, Clark, & Wallace, 1985; Redding & Wallace, 1985; but see Redding, Rader, & Lucas, 1992, for a conflicting result).

In sum, our modeling results demonstrate that it is not necessary to assume a functional role of attention in cross-modal integration and recalibration: both our results and previous findings can be explained by the influence of attention on sensory reliabilities and common-cause priors.

5.4. Relationship between recalibration and integration

Our manipulations of the ventriloquism aftereffect, as well as the model we employed to simulate this effect, assume a close connection between cross-modal recalibration and cross-modal integration. The results support this assumption: both integration and recalibration effects were strengthened by distributing attention across both modalities, and both effects were reproduced by a causal-inference model (Körding et al., 2007; Sato et al., 2007). Indeed, not only Bayesian models but also neural-network models can predict both recalibration and integration effects (Magosso, Cuppini, & Ursino, 2012; Ursino, Cuppini, & Magosso, 2014). However, in apparent contrast to our results, recalibration and integration of visual-proprioceptive (Welch, Widawski, Harrington, & Warren, 1979) and visual-auditory (Radeau & Bertelson, 1977) spatial information show diverging sensitivity to task manipulations. Moreover, the size of visual-auditory recalibration effects cannot be predicted from the size of the integration effects (Bosen et al., 2017), and in visual-motor tasks, the presence of sensory integration does not necessarily lead to recalibration (Smeets, van den Dobbelsteen, de Grave, van Beers, & Brenner, 2006). Finally, whereas some studies find that the direction and degree of cross-modal recalibration depend on the relative reliability of the two sensory signals (the core principle of cross-modal integration; van Beers et al., 2002; Burge, Girshick, & Banks, 2010), other studies suggest that cue accuracy rather than cue reliability determines the direction of cross-modal recalibration (Zaidel, Turner, & Angelaki, 2011). However, as discussed above, our simulations indicate that changes in sensory reliability can affect integration and recalibration differently, depending on the overall reliability levels and the degree of conflict between the modalities. Thus, recalibration can be based on integration even if the two effects are not correlated within persons or across tasks.

5.5. Spatial-alignment responses

Model comparison revealed that participants in both the modality-specific- and distributed-attention groups based their localization responses on ideal-observer-like causal inference, but based their spatial-alignment judgments on a heuristic decision strategy. Thus, these two measures, localization shifts and alignment judgments, provided related but distinct information. Consistent with this, previous studies that collected both responses and modeled them separately found that parameter estimates diverged depending on which measure was fitted (Bosen et al., 2016; Acerbi, Dokka, Angelaki, & Ma, 2018), even though the two measures largely agree qualitatively (Wallace et al., 2004; Hairston et al., 2003; Rohe & Noppeney, 2015b). In the best-fitting model, spatial-alignment responses were based on a comparison of the optimal location estimates of the two sensory signals (a decision rule not included in previous models). This suggests a greater cognitive influence on spatial-alignment (or common-source) judgments than on localization responses. Indeed, such binary judgments of ventriloquism vary with the wording of the instructions (Lewald & Guski, 2003), indicating that these measures are cognitively penetrable. In sum, by collecting localization and spatial-alignment judgments within the same trial, as we have done here for the first time, we showed that spatial-alignment responses reflect a cognitive heuristic based on the optimal location estimates that characterize localization responses.
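
The two decision rules can be contrasted in a minimal sketch. Here the ideal-observer rule is assumed to report "aligned" when p(C = 1 | x_v, x_t) > 0.5, and the heuristic rule reports "aligned" when the model-averaged visual and tactile location estimates differ by less than a criterion. The criterion of 1.0, the wide Gaussian prior, p_common = 0.5, the noise-free measurements, and all function names are hypothetical choices for illustration, not the fitted decision model.

```python
import math


def causal_inference(x_v, x_t, sigma_v, sigma_t, p_common=0.5, mu_p=0.0, sigma_p=100.0):
    """Return p(C = 1 | x) and the model-averaged visual and tactile estimates."""
    var_v, var_t, var_p = sigma_v ** 2, sigma_t ** 2, sigma_p ** 2
    denom = var_v * var_t + var_v * var_p + var_t * var_p
    quad = ((x_v - x_t) ** 2 * var_p + (x_v - mu_p) ** 2 * var_t
            + (x_t - mu_p) ** 2 * var_v)
    l1 = math.exp(-0.5 * quad / denom) / math.sqrt(denom)
    a, b = var_v + var_p, var_t + var_p
    l2 = math.exp(-0.5 * ((x_v - mu_p) ** 2 / a + (x_t - mu_p) ** 2 / b)) / math.sqrt(a * b)
    # The shared 1 / (2 pi) factor of both likelihoods cancels in the posterior.
    post = p_common * l1 / (p_common * l1 + (1 - p_common) * l2)
    fused = (x_v / var_v + x_t / var_t + mu_p / var_p) / (1 / var_v + 1 / var_t + 1 / var_p)
    seg_v = (x_v / var_v + mu_p / var_p) / (1 / var_v + 1 / var_p)
    seg_t = (x_t / var_t + mu_p / var_p) / (1 / var_t + 1 / var_p)
    est_v = post * fused + (1 - post) * seg_v
    est_t = post * fused + (1 - post) * seg_t
    return post, est_v, est_t


def ideal_alignment(x_v, x_t, sigma_v, sigma_t):
    """Hypothetical ideal rule: report 'aligned' if a common source is more probable."""
    post, _, _ = causal_inference(x_v, x_t, sigma_v, sigma_t)
    return post > 0.5


def heuristic_alignment(x_v, x_t, sigma_v, sigma_t, criterion=1.0):
    """Hypothetical heuristic: compare the two optimal location estimates."""
    _, est_v, est_t = causal_inference(x_v, x_t, sigma_v, sigma_t)
    return abs(est_v - est_t) < criterion


print(ideal_alignment(10.0, 0.0, 1.0, 10.0), heuristic_alignment(10.0, 0.0, 1.0, 10.0))
```

For this discrepant pair with a noisy tactile signal, the ideal rule favours a common source, whereas the residual gap between the two model-averaged location estimates exceeds the criterion, so the heuristic reports misalignment; the two rules can therefore disagree on the same trial.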

5.6. Tactile biases

Participants exhibited strong biases in tactile localization. Biases toward anatomical landmarks are a known feature of tactile localization (de Vignemont, Majid, Jola, & Haggard, 2009). Model fitting attributed these biases to modality-specific priors, meaning that participants expected a touch to occur with greater probability at the elbow or the wrist than along the length of the lower arm. These tactile biases influenced not only localization responses but also the perceived spatial alignment of visual-tactile stimulus pairs (Fig. 14). Thus, our study indicates that priors about the location of events and objects in the world depend on the sense through which we perceive them, which is consistent with previous reports of discrepancies between visual and auditory spatial priors (Odegaard, Wozny, & Shams, 2015; Bosen et al., 2016). The modality specificity of spatial priors is consistent with the variability of the common-cause prior revealed by our study; both results suggest that priors are situation-dependent.
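
A landmark-based tactile prior can be sketched as a mixture of two Gaussians centred on the elbow and the wrist. This is a hypothetical illustration, not the fitted prior: arm positions are given in centimetres from the elbow, the wrist is placed at 25 cm, and the prior widths, mixture weights and tactile noise are invented values. Because each Gaussian component is conjugate to a Gaussian likelihood, the posterior mean can be computed in closed form.

```python
import math


def normal_pdf(x, mean, var):
    return math.exp(-0.5 * (x - mean) ** 2 / var) / math.sqrt(2 * math.pi * var)


def tactile_posterior_mean(x_t, sigma_t=3.0, landmarks=(0.0, 25.0),
                           sigma_prior=5.0, weights=(0.5, 0.5)):
    """Posterior mean of the touch location under a hypothetical landmark prior."""
    var_t, var_p = sigma_t ** 2, sigma_prior ** 2
    numer, denom = 0.0, 0.0
    for mu, w in zip(landmarks, weights):
        # Evidence for this component: marginal likelihood of the measurement.
        evidence = w * normal_pdf(x_t, mu, var_t + var_p)
        # Component posterior mean: reliability-weighted average of measurement and landmark.
        comp_mean = (x_t / var_t + mu / var_p) / (1 / var_t + 1 / var_p)
        numer += evidence * comp_mean
        denom += evidence
    return numer / denom


for x in (5.0, 12.5, 20.0):
    print(f"measured at {x:4.1f} cm -> estimated at {tactile_posterior_mean(x):5.2f} cm")
```

Under these assumptions, touches measured near either end of the arm are pulled toward the nearer landmark, while a touch measured midway is left in place by the symmetric prior.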

5.7. Working-memory demands

Our manipulation of modality-specific attention also affected the working-memory demands of the task. In the modality-specific-attention condition, the localization response was given immediately after stimulus presentation, whereas in the distributed-attention condition, participants had to memorize both locations for one second until the relevant modality was cued. Thus, task difficulty might have been higher for participants who had to distribute their attention across modalities than for the modality-specific-attention group. Yet, whereas varying task difficulty can explain differences in overall performance, the specific effects on integration and recalibration found here are difficult to link to task difficulty. However, retaining both sensory signals in working memory might influence integration and recalibration independently of task difficulty. Working memory for visual and for tactile stimuli is governed by the same brain network (Ricciardi et al., 2006), and cross-modal information tends to be stored as one integrated object (Lehnert & Zimmer, 2006). Thus, holding both signals in working memory might foster the association between them while at the same time decreasing the reliability of the sensory signals. Yet, given that the one-second retention interval used in our study is comparatively short, we assume that concurrent attention toward both modalities is the major driving force behind our effects.

6. Conclusion

Our study shows that visual-tactile integration and recalibration effects are enhanced under distributed compared to modality-specific attention. Using causal-inference models, we showed that the need to attend concurrently to both vision and touch increased the expectation that visual and tactile signals share a source, and thus belong together, while reducing their sensory reliability. The gain in the prior expectation about the origin of visual-tactile signals explained the observed enhancement of integration and recalibration effects. The decrease in sensory reliability, however, predicted reduced recalibration effects, and it predicted enhanced integration effects only for pairs with a large spatial discrepancy. In sum, our study indicates a weak default link between spatial vision and touch that can be strengthened by distributing attention across both modalities.

Supplementary Material

Acknowledgements

This work was supported by the National Institutes of Health, grant NIH EY08266 to MSL, and the German Research Foundation, grant BA 5600 1/1 to SB. We thank the Landylab for valuable comments on a previous version of the manuscript.

Appendix A

A.1. Causal-inference models of visual-tactile integration

The ideal-observer causal-inference model (Körding et al., 2007; Sato et al., 2007) is a Bayesian inference model. Thus, it is based on a generative model (Fig. 12) that describes the situations in the world that the observer expects to encounter. Here, the generative model consists of the following elements. First, visual and tactile stimuli arise either from a single source ($C = 1$) or from two sources ($C = 2$), with $p(C = 1) = p_{CC}$ being the a priori probability of a common cause, i.e., the probability of a single, shared source for visual and tactile stimuli. The locations of the visual ($s_v$) and tactile ($s_t$) sources vary randomly from trial to trial. In the original version of the model, visual and tactile source locations are sampled from the same Gaussian prior distribution (e.g., $s_v, s_t \sim \mathcal{N}(\mu_P, \sigma_P^2)$). In other words, a visual and a tactile stimulus have the same probability of originating from any given location. We will consider alternative priors below. If the visual and the tactile stimulus arise from the same source ($C = 1$), both stimuli originate at a single random location ($s_v = s_t = s_{vt}$); if they arise from separate sources ($C = 2$), two independent samples from the Gaussian distribution determine the source locations ($s_v$, $s_t$). Finally, the observer receives noisy measurements, usually centered on these world locations (e.g., $x_v \sim \mathcal{N}(s_v, \sigma_v^2)$ and $x_t \sim \mathcal{N}(s_t, \sigma_t^2)$), although we tested alternatives, too. The ideal-observer model assumes that the observer has full knowledge of the generative model and performs Bayesian inference; that is, the observer determines the posterior probability of each possible location given the noisy measurements by inverting the generative model.
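
The generative model described above can be sketched as a sampling procedure. This is an illustrative sketch that assumes the Gaussian prior and Gaussian measurement noise of the original model; the parameter names and values (p_cc, mu_p, sigma_p, sigma_v, sigma_t) are hypothetical and are not our fitted estimates.

```python
import random
from dataclasses import dataclass


@dataclass
class Trial:
    c: int      # 1 = common source, 2 = separate sources
    s_v: float  # visual source location
    s_t: float  # tactile source location
    x_v: float  # noisy visual measurement
    x_t: float  # noisy tactile measurement


def sample_trial(rng, p_cc=0.5, mu_p=0.0, sigma_p=10.0, sigma_v=1.0, sigma_t=3.0):
    """Draw one trial from the (hypothetically parameterized) generative model."""
    if rng.random() < p_cc:
        c = 1
        s_v = s_t = rng.gauss(mu_p, sigma_p)  # one shared location s_vt
    else:
        c = 2
        s_v = rng.gauss(mu_p, sigma_p)  # two independent draws from the same prior
        s_t = rng.gauss(mu_p, sigma_p)
    # Measurements are centred on the true source locations.
    x_v = rng.gauss(s_v, sigma_v)
    x_t = rng.gauss(s_t, sigma_t)
    return Trial(c, s_v, s_t, x_v, x_t)


rng = random.Random(1)
trials = [sample_trial(rng) for _ in range(10_000)]
share_common = sum(t.c == 1 for t in trials) / len(trials)
print(f"fraction of common-source trials: {share_common:.3f}")
```

The ideal observer inverts this process: given only the measurements x_v and x_t, it infers the causal structure C and the source locations.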

Fig. 12.