Abstract

Imaging mass spectrometry (IMS) is a rapidly advancing molecular imaging modality that can map the spatial distribution of molecules with high chemical specificity. IMS does not require prior tagging of molecular targets and is able to measure a large number of ions concurrently in a single experiment. While this makes it particularly suited for exploratory analysis, the large amount and high‐dimensional nature of data generated by IMS techniques make automated computational analysis indispensable. Research into computational methods for IMS data has touched upon different aspects, including spectral preprocessing, data formats, dimensionality reduction, spatial registration, sample classification, differential analysis between IMS experiments, and data‐driven fusion methods to extract patterns corroborated by both IMS and other imaging modalities. In this work, we review unsupervised machine learning methods for exploratory analysis of IMS data, with particular focus on (a) factorization, (b) clustering, and (c) manifold learning. To provide a view across the various IMS modalities, we have attempted to include examples from a range of approaches including matrix assisted laser desorption/ionization, desorption electrospray ionization, and secondary ion mass spectrometry‐based IMS. This review aims to be an entry point for both (i) analytical chemists and mass spectrometry experts who want to explore computational techniques; and (ii) computer scientists and data mining specialists who want to enter the IMS field. © 2019 The Authors. Mass Spectrometry Reviews published by Wiley Periodicals, Inc. Mass SpecRev 00:1–47, 2019.

Keywords: unsupervised, machine learning, data analysis, imaging mass spectrometry, MALDI, SIMS, DESI, LAESI, LAICP, matrix factorization, clustering, manifold learning

I. INTRODUCTION

In the area of molecular imaging, imaging mass spectrometry (IMS) (Caprioli et al., 1997; Pacholski & Winograd, 1999; Stoeckli et al., 2001; Vickerman & Briggs, 2001; McDonnell & Heeren, 2007; Vickerman, 2011; Spengler, 2014) is advancing rapidly as a means of mapping the spatial distribution of molecules throughout a sample. Since IMS does not require prior tagging of the molecular target of interest and can measure multiple ions concurrently in a single experiment, it has proven to be particularly suited for exploratory analysis. Consequently, IMS is currently finding application in an expansive set of domains, ranging from the biomedical exploration of organic tissue (Boxer, Kraft, & Weber, 2009; Schwamborn & Caprioli, 2010; Hanrieder et al., 2013; Schöne, Höfler, & Walch, 2013; Wu et al., 2013; Cassat et al., 2018) and the forensic analysis of fingerprints (Wolstenholme et al., 2009; Elsner & Abel, 2014), to the chemical examination of geological samples (Orphan & House, 2009; Senoner & Unger, 2012) and material science‐related studies (McPhail, 2006; Clark et al., 2016). Furthermore, IMS entails many different instrument platforms, ionization techniques, and mass analyzers. This has led to a variety of different IMS modalities, including matrix‐assisted laser desorption/ionization (MALDI), desorption electrospray ionization (DESI), laser ablation electrospray ionization (LAESI), laser ablation inductively coupled plasma (LAICP), and secondary ion mass spectrometry (SIMS)‐based IMS, each with their own advantages and disadvantages.

Traditionally, there has been a lot of focus on solving sample preparation and instrumental challenges. However, as these are being addressed, some of the complexity in IMS has shifted toward the computational analysis of its data and to the extraction of information from the often‐massive amounts of measurements that an IMS experiment can yield. Computational IMS research tends to be heterogeneous and the type of challenges being addressed currently runs the gamut from the spectrum level (e.g., preprocessing (Deininger et al., 2011; Jones et al., 2012a), peak picking (Du, Kibbe, & Lin, 2006; Alexandrov et al., 2010; McDonnell et al., 2010)) to the intraexperiment level (e.g., clustering/segmentation (Alexandrov & Kobarg, 2011), and from the interexperiment level (e.g., differential analysis between IMS experiments (Piantadosi & Smart, 2002; Le Faouder et al., 2011; Verbeeck et al., 2014a; Carreira et al., 2015) to the intertechnology level (e.g., data‐driven fusion between IMS and microscopy (Van de Plas et al., 2015).

Even within the data mining of IMS experiments, there are clear distinctions between supervised and unsupervised machine learning approaches (Bishop, 2006). Supervised methods for IMS analysis will seek to model a specific recognition task. For example, classification approaches applied to MALDI IMS in a digital pathology context can predict tissue classes and tumor labels after having been shown representative example measurements annotated by a pathologist (Lazova et al., 2012; Meding et al., 2012; Hanselmann et al., 2013; Casadonte et al., 2014; Veselkov et al., 2014). This supervised branch also includes any regression approaches, such as data‐driven multimodal image fusion (Van de Plas et al., 2015), which seeks to model ion distributions in terms of variables measured by another imaging technology. Unsupervised approaches to IMS analysis, on the other hand, are not focused on a particular recognition task, but instead seek to discover the underlying structure within an IMS dataset, uncovering trends, correlations, and associations along the spatial and spectral domains. These methods are generally applied to provide a more open‐ended exploratory perspective on the data, without particular spatial areas or ions of interest in mind. The structure they find in the data can be employed for aiding human interpretation, but can also serve to reduce the dimensionality and computational load for subsequent computational analyses. Unsupervised methods include, for example, factorization methods such as principal component analysis (PCA) and nonnegative matrix factorization (NMF) (Lee & Seung, 1999; Jolliffe, 2002; Van de Plas et al., 2007b), but also clustering approaches seeking to delineate underlying groups of spectra or pixels with similar chemical expression (McCombie et al., 2005; Rokach & Maimon, 2005; Alexandrov et al., 2010).

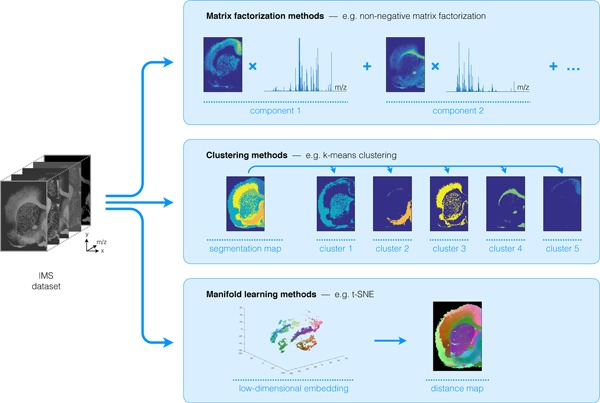

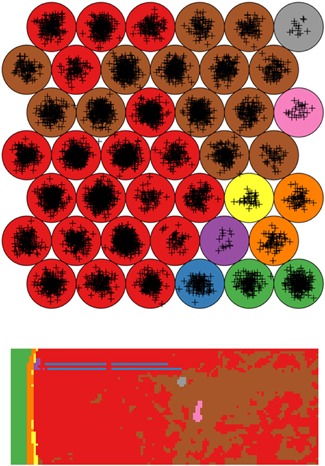

Providing a comprehensive overview of computational methods in IMS has grown beyond the scope of a single review paper, making any review article necessarily focused on a particular branch of computational analysis. Since we aim to provide a resource for those starting out in IMS data analysis, and since one of the advantages of most forms of IMS is its exploratory potential (due to its multiplexed nature and not requiring prior chemical tagging), this paper will specifically review computational methodology for the exploratory analysis of IMS data. More precisely, this review attempts to collect representative examples of unsupervised machine learning algorithms and their applications in an IMS context. This means that work related to preprocessing (e.g., normalization (Deininger et al., 2011; Fonville et al., 2012; Källback et al., 2012), baseline correction (Coombes et al., 2005; Källback et al., 2012), peak picking and feature detection (McDonnell et al., 2010; Alexandrov et al., 2010; Bedia, Tauler, & Jaumot, 2016; Du, Kibbe, & Lin, 2006), data formats (Schramm et al., 2012; Rübel et al., 2013; Verbeeck et al., 2014a; Verbeeck, 2014b; Verbeeck et al., 2017), spatial registration (Schaaff, McMahon, & Todd, 2002; Abdelmoula et al., 2014a; Anderson et al., 2016; Patterson et al., 2018a, 2018b), and supervised methods such as classification (Luts et al., 2010) and regression (Van de Plas et al., 2015) do not fall within the scope of this review, unless there is a substantial contribution to their analysis pipeline by an unsupervised machine learning algorithm. Our focus will lie on three particular subbranches within unsupervised methods, namely (i) factorization methods, (ii) clustering methods, (iii) manifold learning methods, and any hybrid methods that feature a strong relationship to these approaches. Figure 1 gives an overview of these unsupervised methods, with an application to a MALDI Fourier transform ion cyclotron resonance (FTICR) IMS dataset acquired from rat brain (Verbeeck et al., 2017).

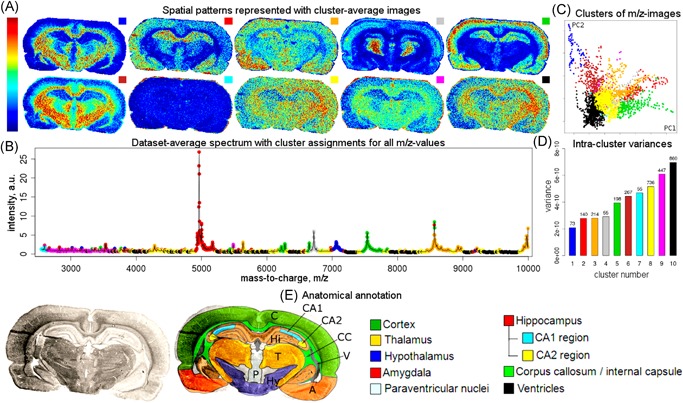

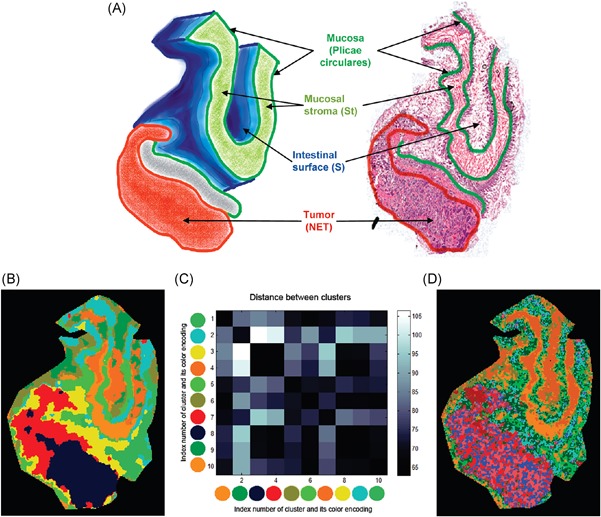

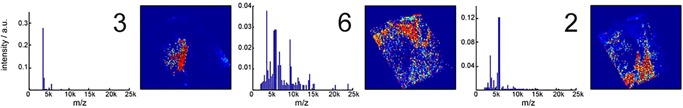

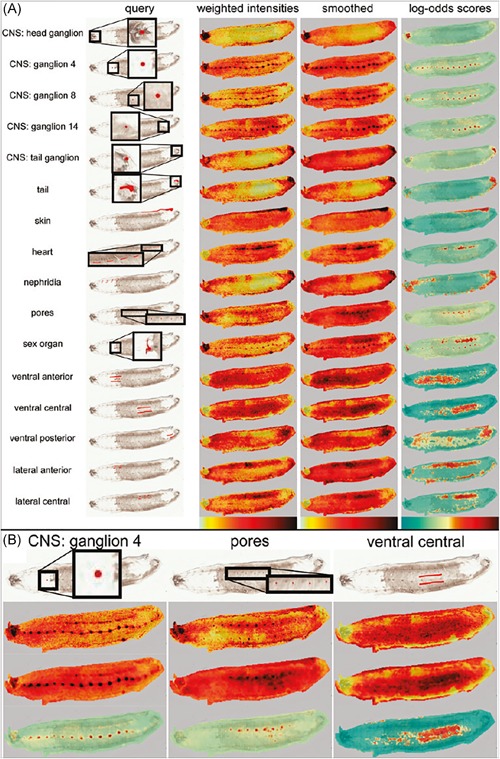

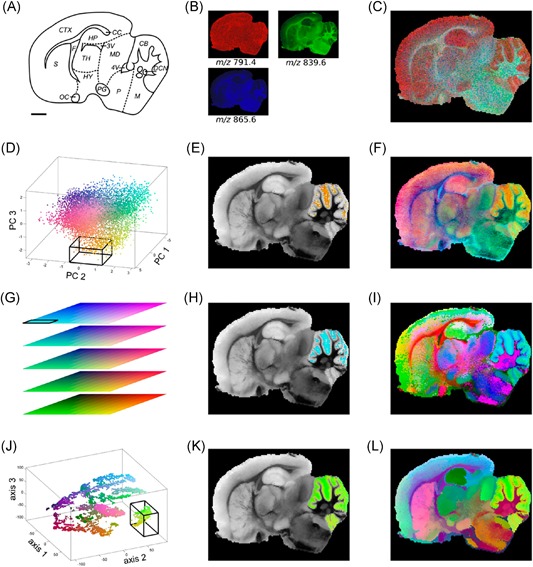

Figure 1.

Unsupervised machine learning methods for exploratory data analysis in IMS. An overview of three reviewed method branches, with application to a MALDI FTICR IMS dataset acquired from rat brain (Verbeeck et al., 2017). (Top) Matrix factorization, with nonnegative matrix factorization as a representative example. (Middle) Clustering analysis, with standard k‐means clustering as a representative example. (Bottom) Manifold learning, with t‐SNE as a representative example. IMS, imaging mass spectrometry; FTICR, Fourier transform ion cyclotron resonance; MALDI, matrix‐assisted laser desorption/ionization; t‐SNE, t‐distributed stochastic neighborhood embedding. [Color figure can be viewed at wileyonlinelibrary.com]

Furthermore, to provide a view across the various instrumental approaches within IMS, we have attempted to include, for each algorithm type, examples from different varieties of IMS. Some analysis methods will show broad application with examples in, for example, MALDI, SIMS, and DESI‐based IMS, while the development of other methods in an IMS context seems to have been confined to a particular instrumental branch. The latter highlights potential for further bridging of computational approaches between the various IMS modalities.

Overall, we have tried to be as encompassing as practically possible and we have attempted to include representative papers from allied areas. Due to the broad scope of IMS and its wide variety of applications, by no means do we imply to give a complete overview of exploratory/unsupervised IMS analysis. However, we do hope that this review can serve as a context‐rich stepping stone or entry point for (i) analytical chemists and mass spectrometry experts who want to explore computational techniques; and (ii) computer scientists and data mining specialists who want to enter the IMS field. To guide the reader, we provide an overview of the discussed methods in Table 1, with a reference to the relevant section and a brief description of the method's demonstrated application area within the field of IMS.

Table 1.

Method Index. Alphabetic index of methods treated in this review and the IMS application areas in which they have been demonstrated

| Methods | Section | Demonstrated applications in IMS |

|---|---|---|

| Adaptive edge‐preserving denoising | Section III.E | Image segmentation incorporating spatial information |

| AMASS | Section III.F | Soft image segmentation, probability‐based model, built‐in feature selection |

| Artificial neural networks | Section IV | Nonlinear dimensionality reduction, image segmentation, visualization of high‐dimensional IMS data |

| Autoscaling | Section II.5 | Data preprocessing |

| Autoencoders | Section IV | Nonlinear dimensionality reduction, image segmentation, visualization of high‐dimensional IMS data |

| Bisecting k‐means | Section III.C | Image segmentation, interactive exploration of clustering tree |

| Compressive sensing | Section II.B | Dimensionality reduction, increase spatial resolution |

| CX/CUR matrix decomposition | Section II.4 | Non‐negative pattern extraction and unmixing (in context of IMS), data size and dimensionality reduction |

| DWT | Section II.B | Dimensionality reduction, feature extraction |

| FCM | Section III.F | Soft image segmentation |

| Filter scaling | Section II.5 | Data preprocessing |

| GMM clustering | Section III.D | Image segmentation (hard and soft) |

| GSOM | Section IV.B | Nonlinear dimensionality reduction with built‐in dimensionality selection, image segmentation, visualization of high‐dimensional IMS data |

| HC | Section III.B | Image segmentation, interactive exploration of clustering tree |

| HDDC | Section III.D | Image segmentation (hard and soft), built‐in dimensionality reduction |

| ICA | Section II.C | Pattern extraction and unmixing, dimensionality reduction |

| k‐means clustering | Section III.C | Image segmentation, grouping of similar ion images |

| Kohonen map | Section IV.B | Nonlinear dimensionality reduction, image segmentation, visualization of high‐dimensional IMS data |

| Latent Dirichlet allocation | Section III.F | Soft image segmentation, probability‐based and generative model |

| MAF | Section II.D | Pattern extraction and unmixing incorporating spatial information, dimensionality reduction |

| MCR | Section II.1 | Non‐negative pattern extraction and unmixing, dimensionality reduction |

| MCR‐ALS | Section II.1 | Non‐negative pattern extraction and unmixing, dimensionality reduction |

| MNF transform | Section II.D | Pattern extraction and unmixing incorporating spatial information, dimensionality reduction |

| MOLDL | Section II.5 | Non‐negative pattern extraction and unmixing (in context of IMS) using prior information, dimensionality reduction |

| MRF | Section III.E | Image segmentation incorporating spatial information |

| NMF | Section II.2 | Non‐negative pattern extraction and unmixing, dimensionality reduction |

| NN‐PARAFAC | Section II.E.3 | Non‐negative pattern extraction and unmixing, pattern extraction, dimensionality reduction |

| PCA | Section II.A | Pattern extraction and unmixing, data size and dimensionality reduction |

| pLSA | Section II.3 | Non‐negative pattern extraction and unmixing, dimensionality reduction, generative and statistical mixture model |

| PMF | Section II.2 | Non‐negative pattern extraction and unmixing, dimensionality reduction |

| Poisson scaling | Section II.5 | Data preprocessing |

| Random projections | Section II.B | Dimensionality reduction |

| Shift‐variance scaling | Section II.5 | Data preprocessing |

| SMCR | Section II.5 | Non‐negative pattern extraction and unmixing, dimensionality reduction |

| SOM | Section IV.B | Nonlinear dimensionality reduction, image segmentation, visualization of high‐dimensional IMS data, image registration |

| Spatial shrunken centroids | Section III.F | Image segmentation (hard and soft), built‐in feature selection |

| Spatially aware clustering | Section III.E | Image segmentation incorporating spatial information |

| SVD | Section II.A | Pattern extraction and unmixing, dimensionality reduction |

| t‐SNE | Section IV.A | Nonlinear dimensionality reduction, image segmentation, visualization of high‐dimensional IMS data |

| Varimax | Section II.A.6 | Improve interpretability of matrix decomposition |

AMASS, algorithm for MSI analysis by semisupervised segmentation; DWT, discrete wavelet transform; FCM, fuzzy c‐means clustering; GMM, Gaussian mixture model; GSOM, growing self‐organizing map; HC, hierarchical clustering; HDDC, high dimensional data clustering; ICA, independent component analysis; IMS, imaging mass spectrometry; MAF, maximum autocorrelation factorization; MCR, multivariate curve resolution; MCR‐ALS, multivariate curve resolution by alternating least squares; MNF, minimum noise fraction; MOLDL, MOLecular Dictionary Learning; MRF, Markov random fields; NMF, non‐negative matrix factorization; NN‐PARAFAC, non‐negativity constrained parallel factor analysis; PCA, principal component analysis; pLSA, probabilistic latent semantic analysis; PMF, positive matrix factorization; SMCR, self modeling curve resolution; SOM, self‐organizing map; SVD, singular value decomposition; t‐SNE, t‐distributed stochastic neighborhood embedding.

II. FACTORIZATION

Matrix factorization techniques are an important class of methods used in unsupervised IMS data analysis. These methods take a large and often high‐dimensional dataset acquired by an IMS experiment, and decompose it into a (typically reduced) number of trends that underlie the observed data. This reduced representation enables the analyst to gain visual insight into the underlying structure of the IMS data, and it often exposes the spatial and molecular signals that tend to colocalize and correlate (usually under the assumption of linear mixing). Furthermore, as these techniques can provide a lower‐dimensional and lower‐complexity representation of the original data, they regularly serve as a starting point for follow‐up computational analysis as well.

A. Principal Component Analysis

Principal Component Analysis (PCA) (Jolliffe, 2002) is a widespread data analysis method, with applications ranging from finance (Brockett et al., 2002), ecology (Wiegleb, 1980), and psychology (Russell, 2002), to genetics (Wall, Rechtsteiner, & Rocha, 2003), image processing (Liu et al., 2010), and facial recognition (Turk & Pentland, 1991). It has been widely employed in IMS research and is the most commonly used multivariate analysis technique in SIMS‐based IMS (Graham & Castner, 2012). Early proponents of PCA as a data processing tool for SIMS include Gouti et al. (1999), Biesinger et al., (2002), and Pachuta (2004). PCA was also one of the first multivariate analysis techniques to be applied to MALDI IMS data (McCombie et al., 2005; Gerhard et al., 2007; Muir et al., 2007; Van de Plas et al., 2007b; Trim et al., 2008). Given the ubiquitous use of PCA in IMS analysis, we devote extra attention to the underlying principle of this technique. Throughout this review, we have tried to organize principles of methods and their particular interpretations and applications in an IMS context into separate subsections. This should allow the reader to read subsections relevant to their interests.

1. Principle

The goal of PCA is to reduce the dimensionality of a dataset, that is, describe the dataset with a lower number of variables, while still retaining as much of the original variation as possible (Jolliffe, 2002). These new variables, called the principal components (PCs), are linear combinations of the original variables and each of them is uncorrelated to the others. This means that the PCs are constructed such that they give the orthonormal directions of maximum variation in the dataset. The first PC is defined such that it explains the largest possible amount of variance in the data. Each subsequent PC is orthogonal to the previous PCs and describes the largest possible variance that remains in the data after removal of the preceding PCs. This formulation ensures that most of the variance in the dataset is captured by the earlier PCs, while later PCs report consistently decreasing variance and thus often reduced impact on the data. In data that describes genuine instrumental measurements, it is often possible to account for most of the observed dataset variance with a number of PCs that is substantially smaller than the original number of variables, in which that dataset was described. By attempting to represent data using a smaller number of variables than the number of variables it was initially recorded with, PCA can help to uncover the underlying, or latent, structure of the data, which is often difficult to observe natively in high‐dimensional datasets such as IMS measurements. In current IMS datasets, the largest trends in the data are often captured by the first 10–20 PCs, making those PCs particularly useful for human exploration. These PCs essentially provide by means of 10–20 images (and their corresponding spectral signatures) a summarized view into the major underlying spatial and spectral patterns present in the data, side‐stepping the need to exhaustively examine hundreds to thousands of ion images individually.

PCA can be written in the form of a matrix decomposition. Let us take the data matrix of size , where is the number of pixels and is the number of spectral bins or bins, that is, each row of represents the mass spectrum of a pixel in the sample. PCA then decomposes as

where represents matrix transposition, is an matrix with orthogonal columns, often called the score matrix, and is an matrix with orthonormal columns, traditionally called the loading matrix. The number of columns in and is the number of PCs, and . Since in many IMS experiments the number of spectral bins exceeds the number of pixels, in those cases is smaller than and thus the total number of PCs is limited to the number of pixels. In cases where the number of pixels is larger than the number of variables per pixel , which for example sometimes occurs in peak‐picked IMS data, the number of PCs is limited to . The columns in and are ranked from high to low variance. If we wish to explore the most important trends in the data (from a variance perspective) or wish to approximate the original dataset as close as possible using only variables instead of or variables, we need to retain only the first PCs, that is, retain and store only the first columns of and . By representing the data as a product of a score matrix and a loading matrix, and having variance directly accessible in terms of the columns of and , PCA can provide the best approximation of the data (with respect to the mean square error, and assuming linear mixing) using only components, with often being a user‐specified number. More formally, PCA provides the best rank‐ approximation of a dataset with respect to the L2‐norm (Jolliffe, 2002).

The PCA matrix decomposition of dataset can be obtained by performing an eigenvalue decomposition of , a matrix that (if is zero mean or column‐centered) is proportional to the covariance matrix of the measurements up to a scaling factor with as the number of samples. The PCA decomposition of (and its underlying eigenvalue decomposition of ) are generally, however, calculated via another closely related matrix decomposition called the singular value decomposition (SVD) (Golub & Van Loan, 1996), which can provide some additional insights into the meaning of and (Keenan & Kotula, 2004a). SVD decomposes as

| (1) |

where is an matrix, which only contains nonzero elements on its diagonal (called the singular values), is a orthogonal matrix containing the left singular vectors of and is an orthogonal matrix containing the right singular vectors of . By convention, the singular values are sorted from high to low, determining the order of the singular vectors. The number of nonzero singular values is determined by the rank of the matrix, and Matrices and have specific meanings in case of a matrix D representing the IMS data (with each row of representing the mass spectrum at a particular pixel). The left singular vectors in form an orthonormal basis for the IMS experiment's pixel space. The right singular vectors in form an orthonormal basis for the mass spectral space (Wall, Rechtsteiner, & Rocha, 2003). By using SVD (Equation 1), the covariance matrix of (omitting the scaling factor) can now also be written as:

| (2) |

It can be shown that the matrix now actually contains the eigenvectors of the covariance matrix, which also form the PCA loadings . When is column‐centered, that is, the mean is subtracted for each column of , the PCA loading matrix can be directly derived from the SVD results (and Equation 2) as:

and the score matrix as:

When PCA is applied as a dimensionality reduction technique, only the first components (and columns) are retained, and these relationships become:

The PC loadings are equivalent to the right singular vectors spanning the spectral space, and the PC scores are the left singular vectors spanning the pixel space, multiplied by the singular values . From this comparison, it also becomes clear that performing PCA on the transpose of ,

simply switches the positions of and around, as only contains scalar values on the diagonal. Due to the existence of efficient SVD algorithms and its superior numerical stability over eigenvalue decomposition of the covariance matrix (Wilamowski & Irwin, 2011), PCA is generally calculated using the SVD decomposition. Furthermore, given that only the first singular values are nonzero, only the first PCs will be nonzero, and it therefore suffices to calculate only the first PCs.

2. Interpretation

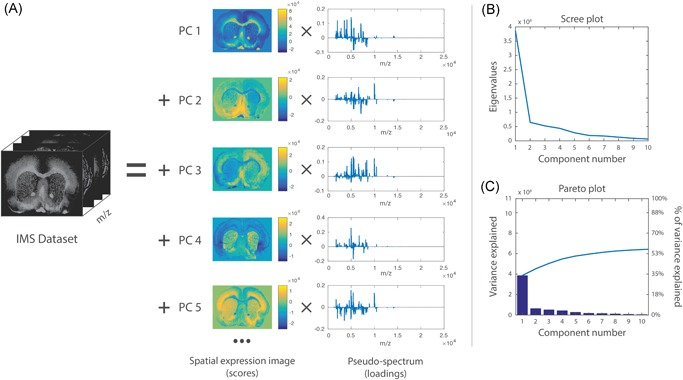

The interpretation of the loadings and scores provided by PCA is not always straightforward (Wall, Rechtsteiner, & Rocha, 2003). An example of PCA applied to a MALDI IMS dataset acquired from a rat brain section of a Parkinson disease model (Verbeeck et al., 2017) is shown in Figure 2. The loading of each PC can be seen as a pseudospectrum, a spectral signature that explains a part of the variance of the dataset. The magnitude of the total explained variance for a component is codetermined by its scaling factor in the score matrix. Generally, it is not advisable to tie biological meaning directly to this pseudospectrum (Wall, Rechtsteiner, & Rocha, 2003). These pseudospectra constitute linear combinations of bins, optimized to explain as much of the data variance as possible, but that is not necessarily the same as correctly modeling the underlying biology or sample content. One example of this is that PCA‐provided pseudospectra often contain negative peaks. These can be difficult to interpret from a mass spectrometry perspective since the original IMS data only contains positive values, namely ion counts. Regardless of how the sign of the intensity signal for a bin in these pseudospectra is interpreted, it is clear that if its absolute signal intensity is relatively high, the bin plays a role in explaining the overall variations and patterns observed in the data. Furthermore, the higher the absolute peak height for a particular bin is within a PC's pseudospectrum, the larger its contribution to the variance accounted for by that component, and thus the larger its importance within the data trend captured by that component (Wall, Rechtsteiner, & Rocha, 2003). As such, without over‐interpreting the PCs, PCA can serve to highlight among thousands of measured bins those bins that seem to play a more prominent role in the patterns that underlie the data.

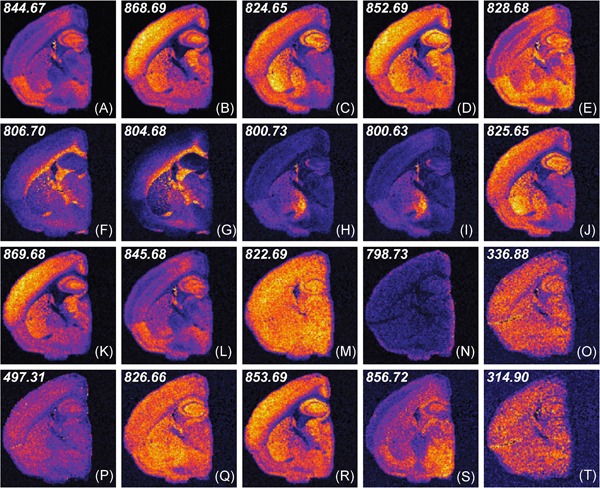

Figure 2.

Example of PCA. PCA applied to a MALDI IMS dataset acquired from a coronal rat brain section of a Parkinson's disease model. Details of the dataset are available in Verbeeck et al., 2017. (a) PCA decomposes the original IMS dataset into a sum of principal components (PCs), where each component is characterized by a spatial expression image (score) times a pseudospectrum (loading). The PCs are ranked by the amount of variance they account for in the original dataset. The first five PCs are displayed, showing extraction of molecular patterns specific to various anatomical structures and with some exhibiting clear differences between the left and right hemispheres (as expected in this disease model). To estimate the number of relevant principal components for a dataset, a scree plot (b) or Pareto plot (c) can be used. These visualize the variance explained per PC, and can thus suggest a cut‐off threshold. IMS, imaging mass spectrometry; MALDI, matrix‐assisted laser desorption/ionization; PCA, principal component analysis. [Color figure can be viewed at wileyonlinelibrary.com]

Each loading vector has an accompanying score vector, which constitutes the spatial expression tied to the PC. These images obtained from the score matrix (and its equivalent in other factorization methods) can be considered spatial expression images or spatial mappings. The scores can be seen as the strength with which a loading is expressed at a particular location in the tissue or sample. These, again, consist of linear combinations of the pixels in the dataset, and thus negative values are generally included. Nevertheless, the spatial expression images of the most important PCs often give a good overview of the most prominent spatial patterns that make up the majority of measured variation in the IMS dataset, and can thus be very useful in gaining initial insight into the data. One helpful way of using these spatial expression images (scores) and their accompanying spectral signatures (loadings) is to employ them as guides to find correlating and anticorrelating ion peaks and ion images. Effectively, PCA can be used as a multivariate way of finding among thousands of pixels those that show similar spectral content, or among thousands of ions those that exhibit a similar spatial distribution. A less powerful, univariate version of this task can also be explored using correlation, which will be discussed in greater detail in Section III.G.

3. Number of Components

Determining the correct number of components to retain after a PCA analysis is an important but difficult challenge to tackle, and several papers have been dedicated to the subject (Peres‐Neto et al., 2005). As mentioned by Jolliffe (2002), there is no straightforward solution to the problem available. One of the most commonly used and easiest methods to determine the number of components is by means of a scree plot (Cattell, 1966) (Fig. 2b), which consists of plotting the eigenvalues of the PCs in descending order, and selecting a cut‐off at the point where there is no longer a significant change in the value of two consecutive eigenvalues. Alternatively, a Pareto plot (Fig. 2c) can be used, which plots the cumulative percentage of variance explained by each consecutive PC. Here, one can either use a predefined threshold of what percentage of variance must be maintained, or, similar to the scree plot method, select a cut‐off point when there is no longer a significant change in percentage explained. Determining the correct number of components is a recurring issue for various pattern extraction techniques in IMS, and various methods exist that can be readily applied, including the minimum description length (Rissanen, 1978; Verbeeck, 2014b), the Bayesian information criterion (BIC) (Schwarz, 1978; Hanselmann et al., 2008), the Akaike information criterion (AIC) (Akaike, 1973), and the Laplace method (Minka, 2000; Verbeeck, 2014b).

4. Application to IMS

Graham & Castner (2012) lists applications of PCA in SIMS imaging, along with other multivariate analysis methods. Recent work by Bluestein et al. (2016) shows the use of PCA in lipid‐focused TOF‐SIMS data, acquired from breast cancer tissue, using PCA as a means of selecting regions of interest (ROIs). Fletcher et al. (2011) used PCA for data reduction and visualization of 3D TOF‐SIMS measurements collected from HeLa‐M cells, allowing for visualization of different cellular components such as the membrane and nucleus. Additionally, PCA provided a method to adjust and register the cellular signal along the z axis.

PCA has also been extensively used in DESI‐based imaging. Dill et al. (2009) used PCA to distinguish tumor regions and associated lipid profiles in DESI IMS data, acquired from canine bladder cancer samples. In follow‐up work (Dill et al., 2011), PCA was applied to DESI imaging data of a relatively large set of 20 human bladder carcinomas, to investigate the variation within and between both healthy and diseased tissues. The first PC showed a high expression in the tumor tissue and a low expression in the healthy tissue, indicating a strong difference in molecular expression between the two tissue types. However, as PCA does not provide explicit classification rules, a follow‐up with a supervised approach, namely orthogonal projection to latent structures (O‐PLS), was used to create a classification model for these samples. Pirro et al. (2012) have used PCA to provide an interactive way to explore DESI IMS data, while Calligaris et al. (2014) employed PCA in the characterization of biomarkers in DESI imaging data collected from breast cancer samples.

PCA has also found application in the analysis of rapid evaporative ionization mass spectrometry (REIMS) imaging data of human liver samples and bacterial cultures, where it enabled differentiation between healthy and cancerous tissue and between three bacterial strains (Golf et al., 2015).

In MALDI IMS, there has been extensive use of PCA both for aiding human interpretation of IMS data, as well as for reducing the dimensionality and size of the data to enable subsequent computational analysis. Examples include McCombie et al. (2005), who used PCA to extract spatial trends from MALDI IMS measurements acquired from the mouse brain, employing the technique primarily as a dimensionality reduction step preceding further data analysis. Gerhard et al. (2007) used PCA in the analysis of MALDI IMS data acquired as part of a clinical breast cancer study, with spatial expression images correlating well with the histology and showing a clear separation of the different tissue parts. Deininger et al. (2010, 2008) used PCA in the analysis of MALDI IMS of gastric cancer tissue sections, where the first PCs reflected the histology quite well. However, they noted that the PC loadings and scores were difficult to interpret, particularly when compared with hierarchical clustering (HC) (see Section III.B), which can summarize the data in a single image rather than spread out over multiple components. Siy et al. (2008) applied PCA in the analysis of MALDI IMS data from the mouse cerebellum, and compared the results to those of independent component analysis (ICA) and non‐negative matrix factorization (NMF), which we discuss in Sections II.C and II.E, respectively. While PCA allowed identification of the major spatial patterns, both the loadings and scores were noisier than those of NMF and ICA. Hanselmann et al. (2008) used PCA in a comparison with pLSA, ICA, and NN‐PARAFAC on SIMS as well as on MALDI IMS datasets. In this setting, PCA was found to be the least successful of the compared techniques for extracting spatial patterns from the dataset. Furthermore, Hanselmann et al. note that the pseudospectra are difficult to interpret due to the negative values they contain. Gut et al. (2015) included PCA as one of several matrix decomposition methods to analyze MALDI IMS data acquired from pharmaceutical tablets. In this study, PCA allowed for good retrieval of sources of variation in the data, but provided limited spectral information compared to the other matrix decomposition methods used.

Due to the ubiquity of PCA in IMS research, subsequent subsections focus primarily on papers that made changes to the standard PCA workflow, adapting it specifically toward application in an IMS setting.

5. Preprocessing—Scaling

While PCA offers a robust method for the extraction of underlying components, statistical preprocessing of the data can have a large impact on the decomposition results. This topic has been studied in depth in the SIMS imaging community (Keenan & Kotula, 2004a; Tyler, Rayal, & Castner, 2007). There are several reasons for this. First, preprocessing will influence the impact or weight that a single variable has in the overall PCA analysis. PCA pushes variables toward more important PCs on the basis of how much variance the variables represent in the data. In the case of IMS data, high‐intensity peaks that vary will tend to represent a much larger part of the overall variance than low‐intensity peaks that vary in a similar way. As a result, tall peaks can exert a greater influence on the retrieved PCs than short peaks. On the one hand, this is a desirable effect: large differences in high intensity peaks are often very informative, and should therefore be reported prominently. On the other hand, these peaks can be so dominant that changes in relatively small, yet biologically relevant, peaks can be lost in the overall PCA decomposition of a dataset, as these small peaks are not essential to explaining the majority of variance in the data. One way of countering this phenomenon is by scaling the variables prior to performing PCA analysis.

5.1.

Autoscaling

One popular method is autoscaling (Jackson, 1991; Keenan & Kotula, 2004a; Tyler, Rayal, & Castner, 2007), where each variable has its mean subtracted and is divided by its standard deviation. This scaling is equivalent to performing the PCA analysis on the correlation (coefficient) matrix instead of the covariance matrix. Autoscaling makes each bin exhibit unit variance, thus giving high and low intensity peaks equal influence in the PCA analysis (Tyler, Rayal, & Castner, 2007; Deininger et al., 2010). It is important to note though that this type of scaling can allow noisy low‐signal bins to have an increased (and sometimes disproportionate) impact on the PCA analysis results, potentially delivering noisy uninformative PCs in the process.

Poisson Scaling

Besides the weight that a variable (pixel or bin) has in an overall PCA decomposition, a more fundamental reason to apply statistical preprocessing or scaling prior to PCA lies in the underlying assumptions behind PCA. Due to the fact that PCA finds relationships between features based on their variance, and a Gaussian distribution is fully determined by its variance and mean, PCA works optimally on data with a Gaussian distribution. If the data are not Gaussian‐distributed, however, there are usually higher‐order statistics beyond variance present that are not being taken into account by PCA. While PCA captures components that are uncorrelated, these components are not necessarily statistically independent for general distributions, a topic we will discuss in greater detail in Section II.C. For example, the spectra in TOF‐based mass spectrometry are typically formed by counting the number of ions that hit a detector. This means that both the noise and variability of the signal are likely to be governed by Poisson statistics (Keenan & Kotula, 2004a) and will not necessarily approximate a Gaussian distribution. One of the further consequences of a Poisson distribution of ion counts is that the variance, and thus uncertainty, of an ion intensity measurement is directly proportional to the magnitude of the measurement (the mean and variance of a Poisson distribution are identical to each other). Thus, high peaks will tend to have higher noise intensities than low peaks, which will again influence their importance in the PCA decomposition.

This aspect also plays an important role in the selection of the correct number of PCs: the graph of eigenvalues or scree plot that is commonly used to select the correct number of PCs should be near‐zero for components that only describe (Gaussian) noise, and should take substantial values for components that describe systematic and structural information (Malinowski, 1987). However, this is not the case for variables that report a non‐Gaussian noise distribution, as is illustrated in Keenan & Kotula (2004a). Consequently, noisy but high‐intensity components can rank very high in the order of PCs, whereas small but relevant peaks can end up in components with a substantially lower rank, and might go unrecognized as a result. For this reason, a popular scaling method, especially in SIMS research, is the Poisson scaling described in Keenan & Kotula, (2004a). This is essentially a form of weighted PCA that aims to mitigate the effects of the Poisson distributed noise, by transforming the variables to a space better suited for PCA analysis, prior to performing the actual PCA analysis. This form of scaling involves dividing each row of the matrix by the square root of the mean spectrum row vector, and dividing each column of the data matrix by the square root of the mean pixel (or image) column vector. In a related setting, Wentzell et al. (1997) applied a maximum likelihood PCA (MLPCA) approach to compensate for deviating noise structure in the PCA of near infrared spectra. Keenan (2005) compared Poisson‐scaled PCA to MLPCA on simulated SIMS data with known amounts of Poisson noise, and showed that, while MLPCA performed the best in extracting the original components, Poisson‐scaled PCA performed nearly as well, at a much lower computational cost. In another comparison of scaling methods, Keenan & Kotula (2004b) show that Poisson scaling outperforms autoscaling as a statistical preprocessing method.

Filter Scaling and Shift Variance Scaling

Several other scaling methods exist, including filter scaling (Tyler, Rayal, & Castner, 2007), which consists of dividing each peak by the standard deviation of a set of pixels near that peak, in order to account for local intensity variation and noise, and shift variance scaling (Tyler, Rayal, & Castner, 2007), which is based on the same concept as maximum autocorrelation factorization (MAF), a matrix factorization method akin to PCA that we will discuss in Section II.D. Briefly, shift variance scaling entails dividing each peak by its standard deviation in the shift matrix, a data matrix obtained by subtracting from the original data matrix a copy that has been spatially shifted by a pixel horizontally and/or vertically. In a comparison of PCA using autoscaling, Poisson scaling, filter scaling, shift variance scaling, and MAF, Tyler et al. (2007) demonstrated that MAF performed best, albeit at the cost of greater computation time, while Poisson‐scaling and shift variance scaling provided results similar to MAF at a lower computational cost.

Intensity Scaling to Incorporate Prior Knowledge

Besides its use for noise correction, weighted PCA by means of scaling also provides the tools for directly incorporating domain‐specific knowledge into the analysis. Scaling the absolute intensity values of specific bins on the basis of prior knowledge provides a mechanism for giving known ions of interest a greater weight, and thus pushing a normally unsupervised PCA algorithm to identify, in a more supervised way, ion species that correlate and anticorrelate with the specific ions of interest. In a similar fashion, the weight of known noise peaks or ion species that are not relevant to the biological mechanism at hand, can be diminished in the overall decomposition, essentially clearing up PCA bandwidth for patterns of interest. By downscaling undesirable peaks, their variance is reduced and consequently their corresponding components move into a less prominent position in the PC ranking. This essentially allows for prior knowledge to be straightforwardly incorporated into PCA, without a fundamental change to the underlying algorithm, and provides a means for dynamically testing the influence of ion species on the final PCA result (by testing the response to scaled versions). With these uses in mind, we demonstrated on MALDI IMS data the influence of weighted PCA (Van de Plas et al., 2007a). The scaling was done using digital image enhancement techniques, specifically gray level transformations on the basis of histograms, to enhance the contrast of individual ion images and eliminate noise patterns, while concurrently leading to a reduction in the resulting PCA expression images.

Although the issue of scaling is treated here within the context of PCA, it is clear that all unsupervised data mining methods can be influenced by it. This can serve different purposes, including accounting for underlying algorithm assumptions, noise reduction, as well as contrast enhancement and incorporation of prior knowledge.

6. Postprocessing—Varimax

PCA, and several other factorization methods, suffer from what is known as “rotational ambiguity.” This means that there are an infinite number of orientations of the factors that account for the data equally well (Russell, 2002). More precisely, given a matrix factorization

and any invertible transformation matrix , the matrix can also be written as

| (3) |

In other words, there exist an infinite number of factor pairs and that will fit the data equally well (Keenan, 2009). PCA aims to find the solution to this factorization where the matrices have mutually orthogonal vectors, and the components serially represent maximal variance in the original data. As stated, PCA provides the best rank‐ approximation of the data (in a least squares sense). This means that PCA aims to represent as much information as possible, using as few components as possible. This setup makes sense when PCA is used as a dimensionality and data reduction tool; however, it also leads to very “dense” components, components with many nonzero weights, which makes them difficult to interpret by humans. It is therefore often useful to apply a rotation of the resulting PCA loading vectors in order to simplify the factor model for human examination (Russell, 2002). Similar to Equation 3, this rotation does not affect the goodness of fit of the solution, or more formally: the subspace defined by the PCs remains the same. However, we are selecting a different solution among all the equally valid solutions of rank (Paatero & Tapper, 1994). A rotation of the loadings will, however, relax the orthogonality constraints on the scores when projected on the new loadings, that is, the scores will no longer be uncorrelated (Jolliffe, 2002; Keenan, 2009).

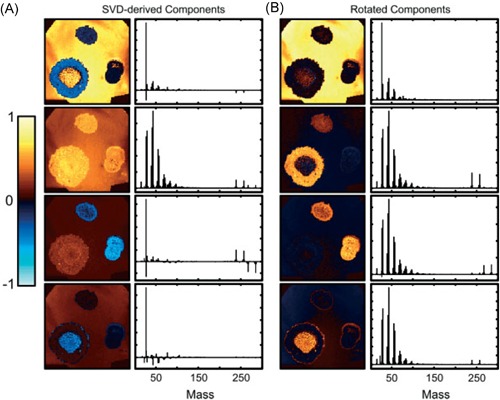

One of the most frequently used criteria for rotation of PCA components is the Varimax rotation proposed by Kaiser (1958), which performs an orthogonal rotation that maximizes the variance of the squared loadings. This approach results in loading vectors that are more sparse, that is, have many elements that are zero, while having a lower number of nonzero loading coefficients with higher absolute values. In the case where the loadings are the spectral features, this means fewer bins that are nonzero, which greatly improves the interpretability of the loading components. Klerk et al. (Broersen, Van Liere, & Heeren, 2005; Klerk et al., 2007) have applied Varimax rotation to PCA components in LDI and SIMS imaging datasets. Performing the Varimax rotation on the chemical loadings increased calculation times only slightly, but led to a high increase in contrast in the spatial expression images, while simultaneously leading to sparser chemical component spectra with higher peaks. When comparing the results to those obtained through non‐negativity constrained parallel factor analysis (NN‐PARAFAC; see Section II.E), PCA + Varimax offered the best trade‐off between calculation time and result quality. On the other hand, NN‐PARAFAC offered the advantage that loadings were positive or zero, more closely approximating the non‐negative ion counts naturally encountered in MS. Due to its negligible calculation time, the authors recommended the use of Varimax as a default postprocessing step after PCA for improved interpretability. Fornai et al. (2012) used PCA + Varimax in the investigation of a 3D SIMS dataset of rat heart, consisting of over 49 billion spectra collected from 40 tissue sections. Keenan (2009) has applied Varimax rotation in SIMS data on the spatial‐domain components rather than the spectral components, which resulted in a higher contrast for the expression images, as shown in Figure 3. Furthermore, due to the fact that this was a relatively uncomplicated sample with only a few components present in each spatial location, the spectral components are relatively simple, making them similar to those obtained through MCR‐ALS, a NMF method discussed in Section II.E.

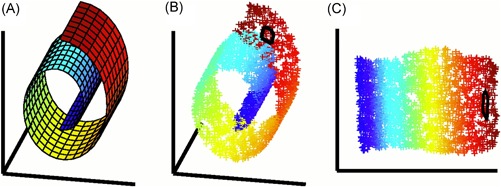

Figure 3.

Example of Varimax rotation. Original caption: Comparison between (a) the abstract factors obtained by SVD with (b) the same factors after Varimax rotation to maximize spatial‐domain simplicity for the palmitic/stearic acid sample. Source: Keenan, 2009, Figure 7, reproduced with permission from John Wiley & Sons. SVD, singular value decomposition. [Color figure can be viewed at wileyonlinelibrary.com]

Despite the many strengths of PCA, that is, availability of an analytical solution, ease of use, existence of optimized algorithms (see Intermezzo below), and availability in many software packages, several disadvantages remain. One disadvantage is the presence of negative peaks in the pseudospectra and negative values in the spatial expression images, which can lead to difficulties in interpreting PCA results (Deininger et al., 2010). Another is that the assumptions underlying PCA do not necessarily agree with the characteristics of IMS data. Fortunately, the method has shown remarkable robustness to such violations of its assumptions, and continues to provide useful insight into IMS data regardless. An issue that we have not yet discussed is that PCA treats each pixel as an independent sample. This is technically not true in imaging data if detectable levels of spatial autocorrelation are present in the measurements (Van de Plas et al., 2015; Cassese et al., 2016), and it merits further investigation. Furthermore, standard PCA does not take the available spatial information into account in its decomposition, an aspect that could be used to improve the analysis further. Some of these disadvantages have been addressed by alternative matrix factorization approaches, which will be discussed in the next sections.

B. Intermezzo: Dimensionality Reduction and Computational Resources

Current state‐of‐the‐art IMS datasets can reach massive sizes, with raw data ranging in the GBs and TBs for a single experiment, typically containing – bins per pixel, and – or more pixels, depending on the instrumentation used. When applying factorization or clustering techniques to these data, their sheer size can easily lead to computer memory shortages and very long calculation times on standard desktop computers. A common method to deal with these issues is to perform a feature selection step such as peak‐picking, which precedes the data analysis (McDonnell et al., 2010; Jones et al., 2012a; Alexandrov et al., 2010; Du, Kibbe, & Lin, 2006). This greatly reduces the number of variables, and consequently the size of the data, by eliminating from the analysis the spectral bins that do not contain peak centers. Peak picking is, however, a rather drastic form of feature selection that discards a large amount of information from the original data (e.g., peak shape), while also holding the risk of discarding peaks that go unrecognized by the peak‐picking algorithm. This makes the quality of the subsequent analysis dependent on the quality of the preceding peak‐picking or feature selection method, which may not always be desirable (Palmer, Bunch, & Styles, 2013, 2015). Furthermore, IMS datasets with large numbers of pixels may still be too large to be analyzed even after peak‐picking (Halko, Martinsson, & Tropp, 2011; Race et al., 2013). Other methods that are sometimes used to reduce the size of the data are spectral binning (Broersen et al., 2008a) and spatial binning (Henderson, Fletcher, & Vickerman, 2009), which average (or sum) ion intensities over multiple bins and pixels, respectively. While these methods sometimes increase the signal‐to‐noise‐ratio, they inherently lead to loss of spatial or mass resolution, which is usually undesirable.

As a result, several groups have investigated efficient algorithms and techniques capable of handling these large amounts of data without the need for peak picking and with minimal loss of information.

1. Memory‐Efficient PCA

A number of studies have focused specifically on the development of memory‐efficient PCA algorithms, which allow PCA analysis to be performed despite large data sizes. Race et al. (2013) have applied a memory‐efficient PCA algorithm that allows sequential reading of the pixel data, and thus does not require keeping the full dataset in computer memory. This allows for processing of very large datasets, albeit that the full covariance matrix must still be constructed and therefore extremely high‐dimensional datasets may still prove to be problematic. This method allowed for processing of a 50 GB 3D MALDI IMS dataset that was previously too large to analyze. A MATLAB (The Mathworks Inc., Natick, MA) toolbox by Race et al. (2016) carries an implementation of this method, and allows application of this and other IMS data mining algorithms without loading the full data into memory. Klerk et al. (2007) used the PCA algorithms in MATLAB together with its sparse data format to exploit the sparse nature of IMS data, that is, the fact that many of the values measured in IMS are near‐zero. Rather than loading the full data matrix, this MATLAB format only stores nonzero values, which allows for much larger datasets to be loaded into memory. They used the native MATLAB algorithms to solve the eigenvector problem for sparse matrices, using restarted Arnoldi iteration (Sorensen, 1992). Cumpson et al. (2015) have compared four different SVD algorithms for PCA in SIMS imaging data. The “random vectors” SVD method proposed by Halko et al. (2011) was selected. Similar to the method of Race et al., it did not require loading the full dataset into memory. This method was used to analyze a 134 GB TOF‐SIMS imaging dataset in around 6 hr on a standard desktop PC. In recent work, Van Nuffel et al. (2016) achieved good decomposition results using a random subsampling approach to perform PCA in a 3D TOF‐SIMS dataset from an embryonic rat cortical cell culture. The loadings were calculated using a training set consisting of only 6.11% of the total number of pixels, and the IMS data was then projected on the loading vectors to create the (score) images. Cumpson et al. (2016) have similarly used a subsampling approach for the PCA analysis of large size 3D SIMS datasets, although here quasirandom Sobol sampling was used to obtain a more even spatial sampling throughout the sample. Graphical processing units (GPUs) were used to speed up the calculation of the PCs, as has also previously been demonstrated by Jones et al. (2012b) for PCA, pLSA, and NMF (see Section II.E). The quasirandom subsampling strategy used by Cumpson et al. has also been used by Trindade et al. (2017) in the calculation of the NMF decomposition of 3D TOF‐SIMS IMS data with very high spatial resolution.

Broersen et al. (2008a) have alleviated some of the memory and calculation constraints of PCA by using a multiscale approach, in which PCA is first calculated on a rebinned version of the data, grouping together multiple bins (e.g., through averaging). It reduces the number of variables and the size of the data, while increasing the signal‐to‐noise ratio. In an interactive approach, the user can then select features of interest, which can be zoomed in on, and the PCA can be recomputed (without rebinning) for that smaller area or reduced set of features. In other work, Broersen et al., 2008a showed how the denoising effect and dimensionality reduction provided by PCA can be used in the alignment and combination of multiple SIMS datasets, imaging different areas of a large sample.

2. Non‐PCA Dimensionality Reduction

Besides peak‐picking and PCA, many other data reduction and feature extraction/selection techniques exist and allow for high levels of data compression while retaining much of the original information. These are often less memory‐intensive than PCA or can be serially applied on individual spectra, avoiding the need to load the full IMS dataset into memory. For example, we have demonstrated the application of the Discrete Wavelet Transform (DWT) for the dimensionality reduction of IMS data (Van de Plas, De Moor, & Waelkens, 2008; Van de Plas, 2010; Verbeeck, 2014b). DWT is a popular data transformation that allows compact representation of the original mass spectra in a much lower‐dimensional wavelet coefficient space, with arbitrarily little loss of signal or information. DWT‐based compression greatly reduced the size of MALDI IMS datasets, and resulted in a 63‐fold reduction in memory requirements when performing PCA on a MALDI TOF IMS measurement set, while obtaining comparable PCs to those calculated without prior dimensionality reduction. Furthermore, we used this technique (Van de Plas, 2010) to reduce the dimensionality of a 19.6 GB MALDI FTICR IMS dataset by a factor of 128, after which the reduced data could be successfully analyzed through ‐means clustering (see Section III.C), showing clear overlap with biologically significant histological features.

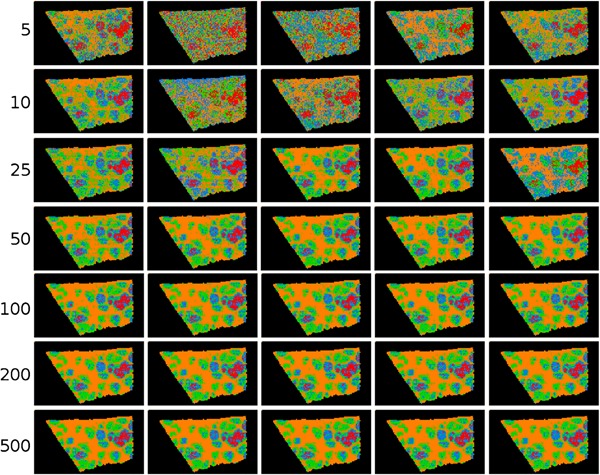

In other work, Palmer et al. (2013) have investigated the use of random projections for dimensionality reduction of hyperspectral imaging datasets, such as MALDI IMS and Raman spectroscopy data. The full original IMS data are projected onto a set of randomly chosen vectors, rather than a calculated set of basis vectors as is the case in, for example, PCA. By projecting the data onto randomized vectors, a large part of the data redundancy that is often present in IMS data due to colocalization and correlation is removed, which reduces the data size, while preserving distances between points and angles between vectors, a property that is desirable in many machine‐learning approaches. Using this approach allowed impressive 100‐fold reductions in dimensionality and data size, while still allowing reconstruction of the original data within noise limits. In follow‐up work, Palmer et al. (2015) demonstrated consistent clustering results that followed histologic changes, using less than one percent of the original data, as is demonstrated in Figure 4.

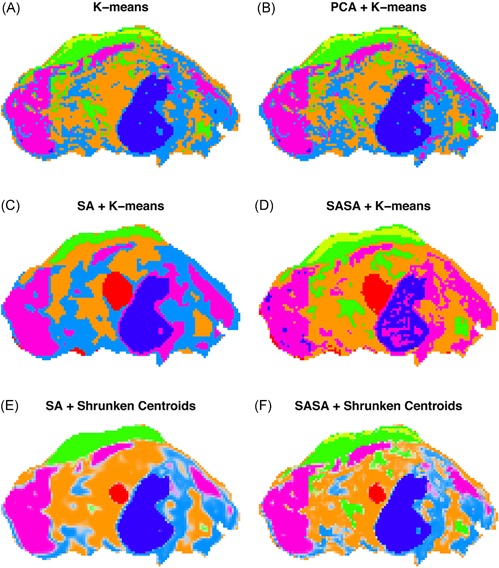

Figure 4.

Example of ‐means clustering performed on random projections. Original caption: Top‐to‐bottom: increasing the number of projections up to around 100 increases the segmentation reproducibility; after this point, the segmentation result completely stabilizes and the same tissue patterns are produced. Left‐to‐right: each column is the result of a different set of random vectors. At low numbers of projections, the exact choice of projection vectors affects the results of the segmentation, whereas for higher numbers of projections, the segmentation is stable and reproducible against a different choice of projection vectors. Source: Reproduced from Palmer et al. (2015), Figure 4, under Creative Commons Attribution License. [Color figure can be viewed at wileyonlinelibrary.com]

3. Compressive Sensing

A method for reducing the measurement dimensionality of IMS, and thus also keeping computational and instrumental requirements to a minimum, is the use of a compressive (or compressed) sensing framework. Compressed sensing builds on the famous Whittaker‐Nyquist‐Shannon‐Kotelnikov sampling theorem, which establishes the minimum sampling frequency required to fully reconstruct a signal. Candès, Tao, and Donoho (Candès & Tao, 2006; Donoho, 2006; Candès & Wakin, 2008) have posed that, given knowledge on the signal's sparsity, the original signal may still be reconstructed with fewer samples than the sampling theorem technically requires. Compressed sensing has been applied in various domains such as image reconstruction (Mairal, Elad, & Sapiro, 2008), hyperspectral imaging (Golbabaee, Arberet & Vandergheynst, 2010), and clustering (Guillermo, Sprechmann, & Sapiro, 2010). In the case of IMS, this would enable a reduction in the number of samples (pixels) collected in tissue, and enable the computational processing of smaller datasets. Gao et al. (2014) have demonstrated the use of compressed sensing in DESI imaging data, where it was used to reconstruct ion images using only half the sampling points in the original data. Only out of approximately values were identified as important to allow this reconstruction. Bartels et al. (2013) used compressed sensing with the assumption of sparse image gradients and compressible spectra, to reconstruct smoothed ion images using different fractions of samples of the original data (20–100). Tang et al. (2015) have used compressed sensing to increase the spatial resolution in AFAI‐IMS data. To demonstrate this approach, the spatial resolution of the original imaging data was lowered by merging pixels. The compressed sensing approach was then used to reconstruct the image at the original resolution. Results were compared to bicubic interpolation, with compressed sensing reconstruction showing improved peak signal‐to‐noise‐ratio over the bicubic interpolation approach. In a similar application, Milillo et al. (2006) have used geospatial statistics, namely Kriging and inverse distance squared weighted methods, to reconstruct ion images from SIMS data, achieving reconstruction with recognizable images using only 10 of the original pixels in the image.

To conclude this intermezzo, it is worth noting that in recent years there has been increased use of high‐performance computing (HPC) resources in the processing of IMS data. For example, Smith et al. (2015) used HPC in the exploration of large FTICR imaging datasets. Further support comes from the emergence of HPC‐capable data processing environments such as openMSI (Rübel et al., 2013; Fischer, Ruebel, & Bowen, 2016). It is to be expected that this trend toward HPC will continue and even accelerate over the coming years, as IMS datasets grow ever larger due to advances in mass spectrometry instrumentation.

C. Independent Component Analysis

ICA is a matrix decomposition technique that originated from the area of blind source separation, and that aims to find statistically independent components that underlie the observed data (Jutten & Herault, 1991; Comon, 1994). ICA has been used extensively in a wide range of applications, including facial recognition (Bartlett, Movellan, & Sejnowski, 2002), functional magnetic resonance imaging (fMRI; Calhoun, Liu, & Adalı, 2009), and remote sensing (Du, Kopriva, & Szu, 2005; Wang & Chang, 2006). In IMS research, ICA has been used in the analysis of MALDI IMS measurements (Hanselmann et al., 2008; Siy et al., 2008; Verbeeck, 2014b; Gut et al., 2015), but, to our knowledge, not yet in other IMS varieties.

1. Principle

While PCA and ICA are similar in premise, ICA requires its components to be statistically independent of each other, where PCA requires its components only to be uncorrelated. The requirement by ICA is stronger than that imposed by PCA: if two variables and are statistically independent, they are also uncorrelated, however, uncorrelated variables are not necessarily independent. Another distinction between PCA and ICA is that ICA assumes the underlying sources to follow a non‐Gaussian distribution, and in fact exploits this non‐Gaussianity for their recovery (Hyvärinen, 2013). While the assumption of non‐Gaussianity may seem limiting at first, many real‐life signals are in fact non‐Gaussian when considering the probability distribution of the signal (De Lathauwer, De Moor, & Vandewalle, 2000). Siy et al. (2008) note that the probability distribution of IMS data are highly non‐Gaussian given the large number of near‐zero values in a typical mass spectrum, and as we have previously noted (see Section II.A), the peaks in mass spectrometry signals tend to be Poisson‐distributed for most varieties of mass spectrometry. Furthermore, biological or other sample‐specific processes, underlying the observed mass spectra, could give rise to non‐normally distributed signals (Hebenstreit & Teichmann, 2011; Dobrzyński et al., 2014). In ICA, non‐Gaussianity of signals is an integral part of the analysis and is even utilized to accomplish its task. In PCA, most use cases will deal with non‐Gaussianity prior to analysis, and apply some form of statistical preprocessing (e.g., Poisson scaling) to make the data and noise more Gaussian‐like before doing PCA analysis.

Rather than a single technique, ICA constitutes a class of methods that use assumptions of independence and non‐Gaussianity to find underlying components. A number of different strategies exist and the ICA approaches can be roughly divided into two categories, although many ICA algorithms combine both: (1) approaches primarily rooted in information theory, which are aimed at minimizing mutual information between the components (or maximizing entropy exhibited by the components), such as the popular Infomax algorithm (Linsker, 1988); and (2) approaches aimed at finding maximally non‐Gaussian signals using higher‐order statistics (De Lathauwer, De Moor, & Vandewalle, 2000) such as kurtosis (skew or tailedness of the probability distribution) and negentropy (deviation from Gaussianity), for example, JADE (Cardoso & Souloumiac, 1993) and fastICA (Hyvärinen & Oja, 1997).

ICA is generally performed as a two‐step process. First, the data are whitened, and then the whitened data are used to retrieve the independent components. Whitening entails that the data are transformed to a new set of variables, such that its covariance matrix is the identity matrix, meaning that the new variables are decorrelated and all have unit variance. This is often done by performing PCA and then normalizing the PCs to unit length, but other approaches exist and it is important to note that this whitening step is not unique. Whereas for Gaussian variables, decorrelation by whitening implies independence, this is not necessarily the case for non‐Gaussian variables. Since the independent components ICA is looking for need to be at least decorrelated, that is, orthogonal to each other, PCA can however deliver a good starting point. As we discussed in Section II.A, the decorrelated variables provided by PCA will, however, suffer from rotational ambiguity, i.e there are still an infinite number of possible rotations of this decorrelated set of vectors possible, and PCA thus offers a “space” of possible solutions rather than a unique solution. The stronger requirements of ICA resolve the issue of rotational ambiguity, and the additional information provided by the higher order statistics can be used to retrieve the true independent sources (De Lathauwer, De Moor, & Vandewalle, 2000; Hyvärinen, 2013), that is, find a unique solution/rotation.

However, some considerations should be taken into account. Statistical independence is a strong property with potentially infinite degrees of freedom (Hyvärinen, 2013) (as it requires checking all possible combinations of all linear and nonlinear functions). Whitening the data reduces the degrees of freedom by restricting the search to orthogonal matrices, but the search space is still very large. To find a unique solution, most ICA algorithms operate in an iterative way using local optimization methods, and aim to increase an objective function. While a global optimum for the ICA problem exists, there is a chance that the algorithm gets stuck in a local minimum. For this reason, ICA often requires multiple runs with random initializations and a consequent search for consensus over these runs (e.g., using ICASSO (Himberg & Hyvärinen, 2003)). Additionally, most ICA algorithms cannot identify the actual number of source signals, and therefore require the user to specify the number of expected components beforehand (which further restricts the search space). Finally, unlike PCA, ICA does not provide an inherent ordering of the source signals or components it found.

2. Application to IMS

Siy et al. (2008) applied the fastICA algorithm to MALDI IMS data of mouse cerebellum and compared the results to those of PCA and NMF. Overall, the components retrieved by ICA showed less noise than those obtained by PCA, both in the component images as in the pseudospectra. Contrary to PCA, much of the noise, including the baseline, was separated by ICA into a single component. The pseudospectra contained fewer negative values than those in PCA, and spectra and expression images were comparable to those of NMF (see also Section II.E). Hanselmann et al. (2008) also applied the fastICA algorithm to MALDI IMS measurements and compared its results to PCA, NN‐PARAFAC (which can be considered a type of NMF), and a newly introduced method, pLSA. The approaches were tested in both simulated and real IMS data. In the simulated data, ICA extracted fewer components than NN‐PARAFAC and pLSA, while in the real IMS data ICA returned component images with lower contrast than those of NN‐PARAFAC and pLSA. One critique was that its spectra contained a relatively high number of negative peaks, which are difficult to interpret. These components did not clearly delineate the underlying tissue or cell types, an aspect which was more readily apparent from NN‐PARAFAC and pLSA, both of which only give non‐negative components. In recent work, Gut et al. (2015) have compared pharmaceutical tablets of known composition, using PCA, ICA, NMF, and MCR‐ALS. Similar to previous studies, Gut et al. noted that the components provided by PCA are difficult to interpret. ICA provided the most accurate method to extract appropriate contributions of chemical compounds. In contrast, NMF and MCR‐ALS were better at distinguishing semiquantitative information in heterogeneous tablets due to their non‐negativity constraints. In our own research, we have used a variant of ICA, namely group ICA (GICA), to discover differences between different MALDI IMS experiments (Verbeeck, 2014b). GICA was originally developed for the differential analysis of fMRI data (Calhoun, Liu, & Adalı, 2009), and entails two pattern extraction and dimensionality reduction steps with PCA, one at the individual experiment level, and one at the level of the full dataset, after which ICA is performed. This two‐step pattern extraction improves retrieval of signals at the single experiment level, while reducing the size of the data at the level of the full dataset, making the analysis faster and less memory intensive. Similar to Siy et al. (2008), the resulting pseudospectra contained fewer peaks and contained fewer negative values than those obtained with PCA, while obtaining spatial expression images with very little noise. In an artificially generated dataset, GICA allowed near‐perfect retrieval of the original signals.

D. Maximum Autocorrelation Factorization

Maximum Autocorrelation Factorization (MAF) was originally proposed by Switzer and Green (1984) as an alternative method to PCA to analyze satellite and other multivariate spatial data. MAF has been used as a multivariate analysis technique in SIMS on several occasions (Tyler, Rayal, & Castner, 2007; Henderson, Fletcher, & Vickerman, 2009; Park et al., 2009; Hanrieder et al., 2014), but has been used only sparsely in MALDI IMS, primarily in combination with other data analysis techniques (Jones et al., 2011; Balluff et al., 2015). Stone et al. (2012) used the minimum noise fraction (MNF) transform, a related technique.

1. Principle

Similar to PCA and ICA, MAF aims to decompose the original data as

where and are the scores and loadings, respectively. MAF aims to find the transformation that maximizes the autocorrelation between neighboring observations, that is, neighboring pixels in images. This is under the assumption that signals of interest will exhibit a high autocorrelation, whereas most noise sources will exhibit much lower autocorrelation (Larsen, 2002), and will consequently have a lower ranking in the extracted components. The autocorrelation is generally calculated by taking the correlation between an image and that same image offset by a number of pixels, for example, one pixel diagonally (Henderson, Fletcher, & Vickerman, 2009). Switzer and Green (1984) originally proposed a horizontal offset of one and a vertical offset of one, followed by a pooling of the two variance‐covariance matrices resulting from each individual offset. The resulting covariance matrix is then used as the input for a PCA analysis, and the extracted PCs are the MAF factors, which are ranked from high autocorrelation to low autocorrelation. Due to the way it is formulated, MAF has the advantage that it can incorporate both spatial information (through the autocorrelation) and spectral information (correlation between mass channels) into a single analysis, contrary to other factorizations that consider these separately. As the technique uses PCA, the number of components does not need to be selected prior to the analysis. Larsen (2002) have shown that MAF analysis is equivalent to the Molgedey‐Schuster ICA algorithm (Molgedey and Schuster, 1994).

When applied to remotely sensed spectrometer data with 62 spectral channels, MAF performed much better than PCA in separating interesting signal from noise (Larsen, 2002). Furthermore, components retrieved by MAF tend to have a more intuitive ordering (components of interest ranked higher, noise ranked lower) compared with those retrieved by PCA (components of interest interspersed with noisy components). PCA's suboptimal result is most probably due to some of the interesting components having high autocorrelation (=high ranking in MAF), but having lower variance (=low ranking in PCA) than some of the noisy components. By incorporating additional structural information into the model, Larsen (2002) pose that MAF achieves a better ordering and compression of the data.

2. Application to IMS

Tyler et al. (2007) compared MAF to PCA in analyses that used different types of scaling on SIMS data, acquired from several types of samples. Unlike PCA, MAF is scaling independent, as the correlation calculated between an image and an offset of that same image will cancel out any (linear) scaling applied to the imaging data (Tyler, Rayal, & Castner, 2007; Larsen, 2002). Overall, MAF produced the best result, achieving better dimensionality reduction, offering the highest image contrast, and recovering important spectral features. Furthermore, MAF was able to retrieve subtle features that were lost with PCA, and could not be visualized in individual ion images. Park et al. (2009) reported comparable findings. Although MAF outperforms PCA with different scalings, Tyler et al. (2007) did report that MAF was computationally more intensive, taking two to five times longer to compute for their data. Furthermore, MAF typically requires more computer memory as the autocorrelation needs to be computed on the full image, resulting in a matrix of size , where is the number of pixels in the image. With increasing sizes of IMS images, this requirement can become prohibitively large.

However, Nielsen (2011) proposed the use of chunking (splitting up the image in multiple sections) to counter this issue. Furthermore, Tyler et al. note that PCA using root mean scaling and shift variance scaling (which was inspired by MAF) offers comparable results, and is less computation and memory intensive. In contrast, Kono et al. (2008) did not retrieve any meaningful results using MAF, due to the fact that the algorithm did not converge.

Henderson et al. (2009) found that MAF outperforms PCA specifically in data with a low signal to noise ratio: the autocorrelation allows MAF to better filter out noise and extract genuine signal, as is demonstrated in Figure 5. They also investigated the effects of spatial resolution on MAF results by summing up neighboring pixels, which can be done to improve signal intensity in low intensity datasets. From this comparison, they concluded that MAF should not be used in situations where spatial resolution is important, such as where the size of the sample features of interest is approaching the spatial resolution of the measurement, as in such cases there will remain little autocorrelation to exploit. This observation holds true for most techniques that rely on the local pixel neighborhood to filter noise and improve analysis results (see e.g., Section III.E on the incorporation of spatial information into the clustering of IMS data). In such resolution‐strained cases, the spatial context is less informative since there are fewer local measurements available to describe the same underlying biological pattern. In that case, techniques that do not use neighborhood information have the advantage (by exclusively looking at the chemical information to make their assessment), while local neighborhood‐based techniques run the risk of filtering out such signals as noise (due to insufficient local representation of a genuine biological or sample‐born pattern). Ideally, a combination of both types of analysis would be used.

Figure 5.

A comparison of PCA and MAF results. Original caption: Scaled, smoothed images of HeLa cells showing (a) the total ion image; (b) PC 6; and (c) MAF 4. MAF captures the distinction between inner and outer cellular regions more clearly than PCA. Comparison with the total ion image shows the additional information available following multivariate analysis. Source: Henderson et al. (2009), Figure 3, reproduced with permission from John Wiley & Sons. MAF, maximum autocorrelation factorization; PCA, principal component analysis. [Color figure can be viewed at wileyonlinelibrary.com]

Stone et al. (2012) have used the minimum noise fraction (MNF) transform (Green et al., 1988) in the analysis of coronal murine midbrain sections. MNF has ties to MAF, and aims to incorporate spatial noise estimation into hyperspectral data analysis. In the original work on MNF, Green et al. (1988) used the shift difference MAF approach as a method to estimate the spatial variance in the data. However, more complex spatial relationships can be plugged into the analysis as well if desired. Stone et al. showed extraction of spatially coherent components from murine tissue data using MNF with a relatively simple local linear fit, outperforming what could be achieved with PCA. Furthermore, when using the extracted MNF components to perform HC (see Section III.B), the results demonstrated good segmentation of the image into clusters with high spatial coherence.

E. Non‐negativity Constrained Matrix Factorizations

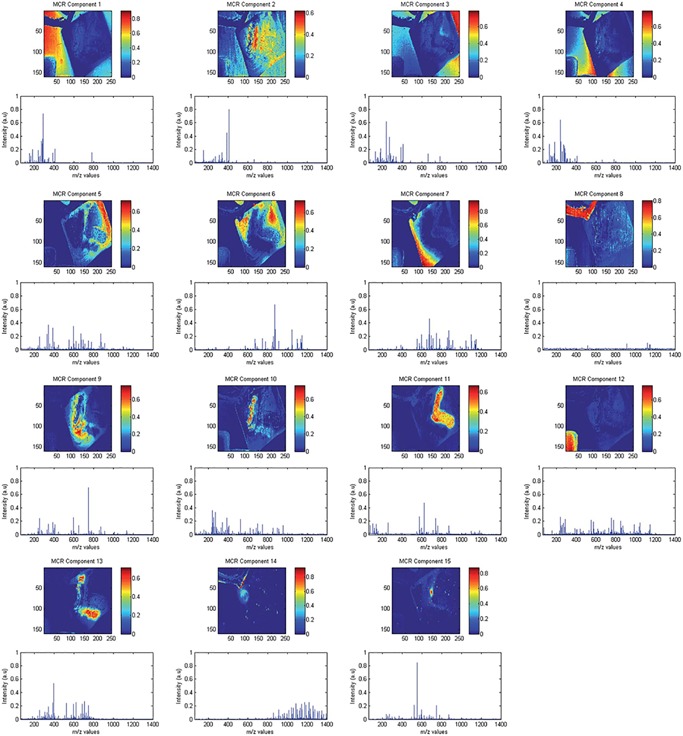

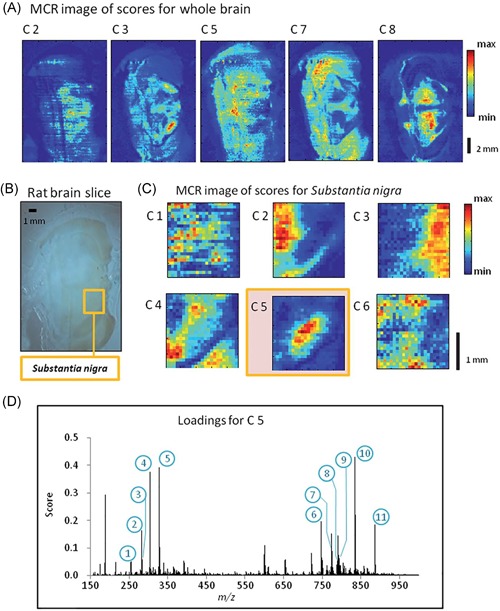

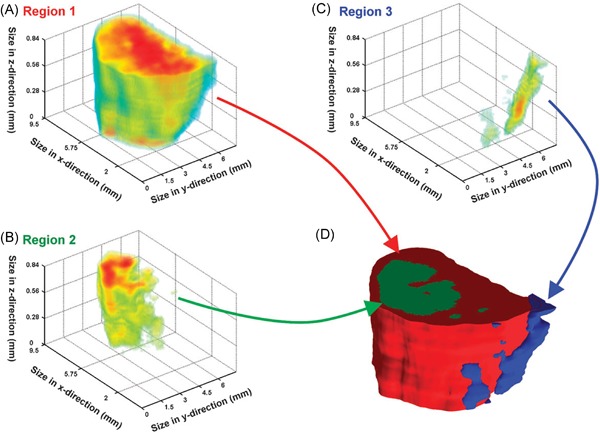

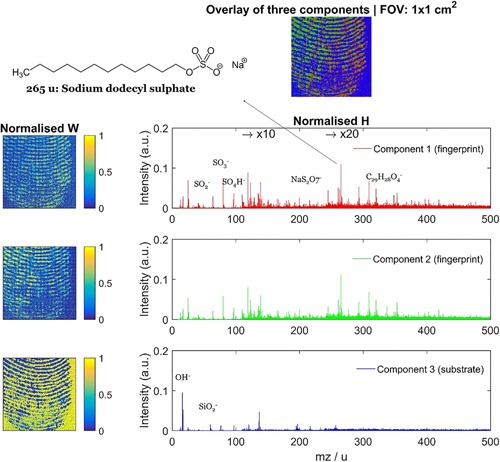

A disadvantage of the previously discussed factorization methods is that they will often have negative peaks in the pseudospectra or negative values in their spatial expression images. Such negative peaks are often difficult to interpret, since there is no straightforward physical meaning to negative ion intensity counts. Nevertheless, these negative values can be relevant, for example, they can signify a downregulation of the peak compared to the mean or compared to other components. However, they can also be the result of mathematical constraints that do not necessarily line up with the physical constraints of the underlying measurement process or sample reality. For example, PCA of IMS data tries to capture as much variation as possible in each component. Although this objective is useful for data compression, it does not limit the results to follow the physical reality of mass spectrometry (i.e., allowing only zero or positive ion counts). As a result, negative values are not uncommon (since the data are often mean‐centered) and interpretation from a mass spectrometry viewpoint becomes more difficult.