Abstract

Continuous quality improvement initiatives (CQII) in home visiting programs have traditionally occurred within a local implementing agency (LIA), parent organization, or funding provision. In Missouri, certain LIAs participate in the Missouri Maternal, Infant, and Early Childhood Home Visiting program (MIECHV). Their CQII activities and the coordination of CQI efforts across agencies are limited to newsletters and quarterly meetings to discuss barriers to service delivery. Their designed CQI process includes neither an evaluation or assessment of program fidelity nor supports to assist with identifying and prioritizing areas where improvement is needed. As a result, the lessons from LIA CQII are often lost to external agencies facing similar challenges. We developed a virtual environment, the Missouri MIECHV Gateway, for CQII activities. The Gateway promotes and supports quality improvement for LIAs while aligning stakeholders from seven home visiting LIAs. Development of the Gateway environment aims to complement the existing MIECHV CQI framework by: 1) adding CQI elements that are missing or ineffective, 2) adding elements for CQI identification and program evaluation, and 3) offering LIAs a network to share CQI experiences and collaborate at a distance. This web-based environment allows LIA personnel to identify program activities in need of quality improvement, and guides the planning, implementation, and evaluation of CQII. In addition, the Gateway standardizes quality improvement training, collates overlapping resources, and supports knowledge translation, with the aim of improving capacity for measurable change in organizational initiatives. This interactive web-based portal provides the infrastructure to virtually connect and engage LIAs in CQI and stimulate sharing of ideas and best practices.
This article describes the characteristics, development, build, and launch of this quality improvement practice exchange virtual environment and presents results of three usability pilot tests and the site launch. Briefly, prior to deployment to 58 users, usability pilot testing of the site occurred in three stages, with three defined groups. Pilot testing results were overall positive, desirable, and vital to improving the site prior to the full launch. The majority of reviewers stated they would access and use the learning materials (87%), would use the site for completing CQII (80%), and reported that the site would benefit their work teams in addressing agency challenges (66%). The majority of reviewers also approved of the developed fidelity assessment, rating it as easy to use (79%), having a clear purpose (86%), providing value in self-identification of CQII (75%), and offering appropriate recommendations (79%). The System Usability Scale (SUS) score increased (10%) between pilot groups 2 and 3, with a mean SUS score of 71.6, above the U.S. average of 68. By the end of the contract period, 60 users were registered, and the majority (67%) adopted and used the site. Site stability was remarkable (6 total minutes of downtime). The site averaged 29 page views per day.

Keywords: continuous quality improvement, online, public health, home visiting programs, training, information exchange, capacity building

Introduction & Implications for Policy and Practice

Early childhood home visiting programs date back to the 1880s and deliver a vital public service of providing and connecting families with health, educational, and economic resources to support optimal development [1]. The home visitor service delivery model provides intervention and mediation techniques to families with young children [1]. Presently, seven local implementing agencies (LIAs) in Missouri are participating in the Missouri Department of Health and Senior Services (MoDHSS) Maternal, Infant, and Early Childhood Home Visiting program (MIECHV). The LIAs coordinate continuous quality improvement initiatives (CQII) as part of a MoDHSS contract deliverable and, potentially, as required by their accrediting model. LIAs adhere to a designed continuous quality improvement (CQI) process focused on broad programmatic strategies. The MIECHV program evaluation found the direction and coordination of CQI efforts across agencies were limited to quarterly meetings and newsletters. The evaluation also found a lack of past or present documented CQII. In addition, the MIECHV CQI process did not include an evaluation of program fidelity, an identification and prioritization of problems with program implementation, the development and execution of corrective action plans to address shortfalls, or an avenue to disseminate CQI experiences. Without dissemination of CQI experiences, the lessons from CQII are often lost to external agencies facing similar challenges. Overall, the program evaluation suggested the need to provide more structured CQI supports to enable LIAs to self-administer and direct their improvement activities, independently of the broader MoDHSS-driven MIECHV CQII activities. In addition, the evaluation suggested the need to enhance participating LIAs’ communication networks to foster connecting, sharing, collaborating, and learning across LIAs.
In response to these program challenges, we developed a quality improvement information exchange web-based environment, the Missouri MIECHV Gateway. The Gateway aims to enhance CQII by providing an infrastructure to self-assess local program activities in need of quality improvement, and to guide the planning, implementation and evaluation of CQII. In addition, the Gateway virtually connects and engages LIAs in CQII by serving as a portal to share CQII, identify best practices, generate new learning, and network across agencies to virtually align stakeholders from the seven home visiting LIAs. This web-based environment aims to support current LIA CQII activities, while at the same time, adding missing elements that will strengthen it. We describe the characteristics, development, build, and launch of this quality improvement practice exchange virtual environment and present results of three usability pilot tests and the site launch.

Materials and Methods

Concept

Quality improvement consists of systematic and continuous actions that lead to measurable improvement in services for targeted groups [2]. A simple web search of “quality improvement education” returns over 114 million results. The abundant search results demonstrate that web-based CQI resources both exist and are publicly shared. However, the quality, applicability, and validity of these web-based resources must be evaluated on an individual basis. Sifting through those results requires a time commitment many public health agencies cannot afford and is likely overwhelming to the average user seeking basic CQI knowledge.

A search of existing literature and web resources uncovered an additional gap in the implementation of online quality improvement sites. The literature, however limited, provided insight on characteristics of other project sites. CQI resources, historically used in business and industry environments (e.g., Juran 1951; Ishikawa 1985; Deming 1986), were translated to learning materials and tools applicable for the home visiting LIAs. A National Association of County and City Health Officials project reported the most valuable web-based resources as: public health related CQI resources, training, tools, networking, and one-on-one consultation [3]. In a web-based site designed for home visiting programs in Ohio and Kentucky, the integration of user access to quality indicator performance reports increased user downloads by 297%, and a centralized data reporting system improved the program’s ability to meet performance indicators and standardize treatment across multiple sites [4].

To enhance CQII and promote sharing across LIAs, we designed and built a web-based portal to provide LIAs the infrastructure to self-assess local program activities in need of quality improvement, and to guide the planning, implementation and evaluation of CQII. In addition, the Missouri MIECHV Gateway virtually connects and engages LIAs in CQII by serving as a portal for LIAs to undertake and share CQII, identify best practices, generate new learning, and network across agencies. Knowledge from the literature, paired with expert consultation, substantiated both the usefulness and uniqueness of building and developing the Missouri MIECHV Gateway, a proof-of-concept website which represents a passage into a quality improvement network.

Platform

Planning, designing, building, and developing a website is a significant undertaking. Weekly planning sessions occurred for several months before an online environment existed. The website was developed and hosted in the Amazon Web Services (AWS) cloud environment on a standard Windows 2012 platform. AWS was chosen for several reasons: 1) initial low-volume use of a single virtual server in AWS is free for development, allowing for economical and fast initial set-up; 2) as with most cloud hosting services, AWS allows for rapid expansion of capacity in response to demand, and given the novelty of the site and the uncertainty about the volume of traffic it will ultimately generate, this flexibility is key to meeting future needs; 3) AWS is the largest and most popular cloud hosting system in the U.S., so it should be familiar to the widest range of future developers and maintainers who might be involved in the project; and 4) the cloud nature of AWS makes it easy to transfer ownership and administrative duties as necessary throughout the indefinite life of the project. The website itself is built within the WordPress content management system (CMS) for several reasons: 1) WordPress is free, open source software; 2) WordPress offers a large library of plugin extensions and one of the largest third-party developer communities in the industry, making it functionally extensible; 3) WordPress is one of the most widely deployed CMS platforms in the world, so it should be familiar to the widest range of future developers and maintainers who might be involved in the project; and 4) WordPress has modest and easily met language and database requirements (in our case, PHP and MySQL, respectively), so it can run on most server operating systems and the site remains highly portable. Once the site was established on the server, a domain name was registered and secured with HTTP over SSL (HTTPS).

Information Exchange Infrastructure and Development

Krug’s (2014) book Don’t Make Me Think, Revisited has provided valuable insight on website design and usability. Krug encourages adopting expected conventions for web pages including where things are located on a page, how things work, how things look and how primary, secondary, and tertiary menus should be added and arranged [5].

We designed and built the site infrastructure with five main content pages: Home; CQI Process; Discussions; Education & Training; and Resources. The Home page includes the following secondary pages: About; Getting Started; Feedback; and Technical Support. The CQI Process menu contains the secondary pages CQI Process Overview, Current CQI Project Tracker, Stage 1: Plan, Stage 2: Do, Stage 3: Study, and Stage 4: Act. The Discussion Forum, Groups, and Members secondary pages are housed under the Discussions primary menu. Events, Glossary, MIECHV, CQI Storyboard Library, Gateway Webinars, and Training are located under Education and Training. The Resources primary menu includes the secondary pages External Resources and Organization Directory.

Site Features

To limit site access exclusively to LIA and MoDHSS staff, a plugin was enabled to require a username and password to log into the site. This encourages idea sharing and collaboration between the LIAs, without input from the general public. Gateway Administrators established initial accounts with a system-generated strong password. Once users enter the site, they are directed to set a unique password that meets or exceeds strong password standards.

User profile management affords users an identity beyond their username and promotes social networking. A registered user can upload a profile photo, a cover photo, and update information on their public profile such as their job role, professional interests, and other demographic information viewable by all registered users.

To draw users into and around the site, a plan for user engagement was established. This plan incorporated the use of gamification methods. Gamification is the application of incentives typically found in gaming applications to a non-gaming environment. The first gamification method incorporated was the integration of a badge system. When a user completes defined activities within the site, a medal shaped icon, referred to as a badge, is awarded to the user and displayed on the user’s profile indefinitely (Figure 1).

Figure 1, Badges

The second incorporated gamification method was the inclusion of a user activity point system. Users who complete activities across the site (e.g., daily login, joining a group, participating in a discussion forum, uploading a storyboard, downloading a tutorial, etc.) receive points based on a defined point system ranging from 1-5 points per activity. There is no maximum number of points a user can accumulate, and users can never lose points. A user’s point sum is highlighted on their profile page and in the running footer of the website in a ranked order.
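The point system described above can be illustrated with a minimal sketch. The activity names and per-activity values below are hypothetical (the article states only that each activity earns between 1 and 5 points), and the `PointLedger` class is an illustration, not the plugin the Gateway actually uses. It shows the three stated properties: points only accumulate, there is no cap, and users are displayed in ranked order.

```python
from collections import defaultdict

# Hypothetical point values; the article states only that each activity
# earns between 1 and 5 points.
ACTIVITY_POINTS = {
    "daily_login": 1,
    "join_group": 2,
    "download_tutorial": 2,
    "forum_post": 3,
    "upload_storyboard": 5,
}


class PointLedger:
    """Accumulates activity points per user; totals never decrease."""

    def __init__(self):
        self._totals = defaultdict(int)

    def record(self, user, activity):
        """Add the points for one completed activity and return the new total."""
        points = ACTIVITY_POINTS[activity]
        if not 1 <= points <= 5:
            raise ValueError("each activity must be worth 1-5 points")
        self._totals[user] += points  # no maximum, and no way to subtract
        return self._totals[user]

    def leaderboard(self):
        """Return (user, total) pairs, highest total first, ties by name."""
        return sorted(self._totals.items(), key=lambda item: (-item[1], item[0]))


ledger = PointLedger()
ledger.record("alice", "daily_login")
ledger.record("alice", "upload_storyboard")
ledger.record("bob", "forum_post")
ranking = ledger.leaderboard()
```

With these assumed values, `ranking` lists "alice" ahead of "bob", mirroring the ranked display in the site footer.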

The Home page content includes a quick link meta slider with scrolling images and text of site pages, Getting Started links, a weekly poll, a weekly quote, and a listing of upcoming events. Of note, the weekly poll is designed as a simple yes/no or multiple choice question to inform site development, site satisfaction, and user engagement. Site features under the Home menu are organized in an expected arrangement for the average web user. A user guide and Frequently Asked Questions page exist, along with web forms designed for both submitting feedback and seeking technical assistance.

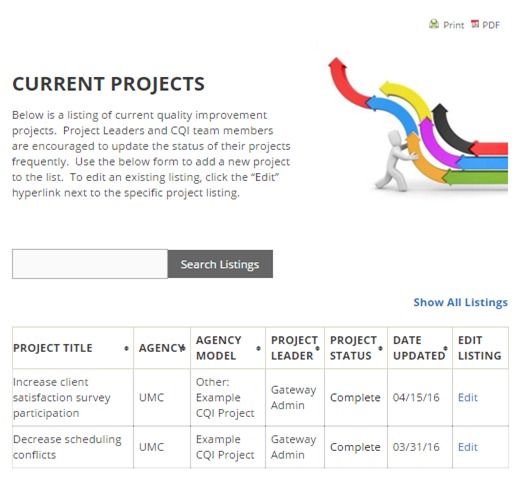

The CQI Process tab houses the process designed to enable LIA users to complete a self-assessment, design and implement a local quality improvement project, evaluate the project, and finalize the project in the format of a CQI storyboard. W. Edwards Deming’s Plan-Do-Study-Act (PDSA) cycle was integrated as the preferred model to drive continuous small-scale CQI improvements [6]. The storyboard captures all stages of the PDSA CQI project (Stages 1-4) and shares lessons learned and future directions. These storyboards are stored in a searchable directory under the Education and Training tab. Within each stage of the CQI process, guided questions and training tutorials are available to the user.

Specifically, within Stage 1: Plan, users begin the CQI process by completing a program fidelity and CQI assessment, a structured survey in which the user self-reports the extent to which theoretical model program activities are implemented within their LIA and within the MIECHV program implementation. This assessment provides a fidelity score, a recommendation, and a document suggesting areas for related potential quality improvement activities. Points are assigned to user responses as follows: ‘always implemented’ (4 points), ‘sometimes implemented’ (3), ‘seldom implemented’ (2), ‘never implemented’ (1), and ‘unable to evaluate’ (0). These points are summed and divided by the number of questions, excluding those for which ‘unable to evaluate’ is selected. Scores are then paired with the scoring key. Scores of 75% and above are considered as operating with a high level of fidelity to the model activities; users whose assessments fall within this bracket are encouraged to continue ongoing review of program assessment and quality improvement work, as needed, to maintain fidelity. For scores within 30-74%, the assessment strongly recommends that the user begin quality improvement projects to improve fidelity, and for scores within the 1-29% range, it recommends that users take immediate action in the form of program assessment and quality improvement work to reach a higher level of fidelity. The assessment also captures additional information, including problems with implementation, barriers to implementation, and history of CQI review. Following completion of the assessment, users are guided back to Stage 1, where they begin a CQI project and navigate through to Stage 4. Finally, the CQI Process tab hosts the Current CQI Project Tracker page, which includes an interactive table listing current CQI projects in process (Figure 2). Users add their projects to the public table, update the project status as they progress, and search for current CQI projects of interest.
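The fidelity scoring described above can be sketched in a few lines of Python. The article does not state how the mean point value is converted to the percentage used by the scoring key; the sketch assumes the mean is expressed as a share of the 4-point maximum. The function names and the abbreviated recommendation strings are illustrative only.

```python
# Point values per response, as described in the text.
POINTS = {
    "always implemented": 4,
    "sometimes implemented": 3,
    "seldom implemented": 2,
    "never implemented": 1,
    "unable to evaluate": 0,
}


def fidelity_percent(responses):
    """Return the fidelity score as a percentage, or None if nothing was scored.

    Questions answered 'unable to evaluate' are excluded from both the sum
    and the question count.
    """
    scored = [POINTS[r] for r in responses if r != "unable to evaluate"]
    if not scored:
        return None
    mean_points = sum(scored) / len(scored)
    # Assumption: the percentage is the mean as a share of the 4-point maximum.
    return 100 * mean_points / 4


def recommendation(percent):
    """Map a percentage onto the three brackets of the scoring key."""
    if percent is None:
        return "no score: every item was marked unable to evaluate"
    if percent >= 75:
        return "high fidelity: continue ongoing review"
    if percent >= 30:
        return "begin quality improvement projects"
    return "take immediate action"


example = [
    "always implemented",
    "sometimes implemented",
    "seldom implemented",
    "unable to evaluate",
]
score = fidelity_percent(example)
advice = recommendation(score)
```

Here the three scored items average 3 of 4 points, so the example lands exactly on the 75% threshold. Under the assumed conversion, a survey answered entirely with ‘never implemented’ would score 25%, which is the only part of the lowest bracket such a conversion can reach.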

Figure 2, Current CQI Project Tracker screenshot

The Discussions tab houses three pages: Discussion Forum, Groups, and Members. These pages are built on BuddyPress, a popular social networking plugin that integrates open discussion forums, group forums (private and public), and member connections. Within the discussion and group forums, users post and respond to threaded discussions with the ability to upload documents and insert URL hyperlinks. The Discussions sub-page sidebars include activity streams to easily guide users to active discussions and group forums.

The Education and Training menu tab includes the following sub-pages: Events, Glossary, MIECHV, CQI Storyboard Library, Gateway Webinars, and Training. Events includes a menu of offerings, from conferences to awareness weeks with each event tagged to applicable categories. These tags are populated in a word cloud-type format that appears in the footer of all site pages. Any tagged category can be clicked by the user which filters the full site for content with the selected tag, affording a quick and accurate search. The Glossary page includes a comprehensive and alphabetical listing of key CQI terms, definitions, resources, and site training tutorials of frequently used CQI terminology and concepts, designed as a quick reference for user retrieval. The MIECHV page includes program specific documents such as CQI meeting minutes, CQI newsletters, forms, and reports. The CQI Storyboard Library, as previously discussed under the CQI Process menu, includes a search-enabled directory of completed CQI project storyboards. The Gateway Webinars page houses recorded webinar videos, their accompanying slide decks, and announcements for upcoming webinars. Lastly, the Training page hosts meta slider tutorials on common CQI tools and methodologies (Figure 3).

Figure 3, Training page screenshot

The Resources primary menu includes the secondary pages External Resources and Organization Directory. External Resources consists of a listing of CQI literature and articles, CQI resources from external sites, and resources surrounding specific MIECHV home visiting program constructs. To support the exchange of resources among LIAs, the Organization Directory page hosts a searchable directory of client resources. Users may add agency listings to the directory, edit existing listings, and/or search for listings by one or more of the following criteria: agency name, county, state, service type, and population served.

The ability to receive and respond to technical inquiries is vital to the success of the site. Along with a technical support web-based form, live chat has been integrated to encourage feedback and inquiry from users. Users may select the live chat-expanding box (located on all site pages) to connect with a Gateway Administrator. Messages received during off-peak hours receive an auto-reply, followed up with a response the next business day.

The site has been developed using a theme optimized for use with mobile devices, tablets, and desktops. Site pages can be saved in PDF format and are printer friendly. The site menu header and footer are both static across all pages while the sidebars vary by page to optimize content navigation. Finally, site functionality is continually reviewed, modified, and upgraded as needed to maintain a stable platform.

User Engagement & Social Media

Employee engagement is defined as the extent to which employees are committed to a cause or to a person in their organization, how hard they work, and how long they stay as a result of that commitment [7]. Employees hold the key to organizational success in today’s competitive marketplace. However, this competitive edge will not be gained until employees are properly engaged. Engagement begins from the time of recruitment and continues throughout the time that the employee commits to the organization. The issue today is not just employing and retaining talented people but in maintaining their attention at each stage of their work lives by engaging them [7]. We are now turning our sights to technology, social media specifically, for the purpose of engaging employees, and specifically LIA personnel with the web-based portal.

Social media sites like Twitter, Facebook, and LinkedIn and web 2.0 applications like RSS newsfeeds and blogs have all been utilized with various degrees of success in an effort to increase engagement among employees. Even though social media undoubtedly has the potential to elicit employee engagement, organizational culture and leadership buy-in are major factors that determine whether social media will be implemented for the purposes of employee engagement [8]. Social media has the potential to be distracting as well as addictive. Organizations may be able to control employees’ excessive use of social media through management tactics [9]. Thus, it is key to reach an optimal balance between utilizing social media as a tool for employee engagement and letting it distract employees to the point that organizational productivity diminishes.

Little published literature is available on the subject of engaging employees using social media. What literature exists has largely emanated from case studies. These case studies focus on how certain organizations utilized social media for employee engagement. However, their findings are not readily generalizable, as culture and leadership differ from organization to organization. In adopting the capabilities of social media for employee engagement, a number of assumptions are usually made. One major assumption is that employees are all social media savvy; a lack of capability could hinder its use. Conversely, excessive use of social media has the potential to take away from organizational productivity. When balanced and well aligned with organizational culture, social media has the potential to add to employee engagement.

The MIECHV Gateway site established social media accounts on Facebook, LinkedIn, and Twitter and added quick access buttons on the Gateway. To limit the audience strictly to Gateway users, the Facebook and LinkedIn pages required requesting membership to the group while the Twitter page was available for viewing/following by the general public. Feed management was optimized through the use of the network management site, Hootsuite. CQI-focused articles and latest news were fed to all three sites and Gateway webinar and site-specific announcements were fed to the Facebook and LinkedIn pages.

Monitoring

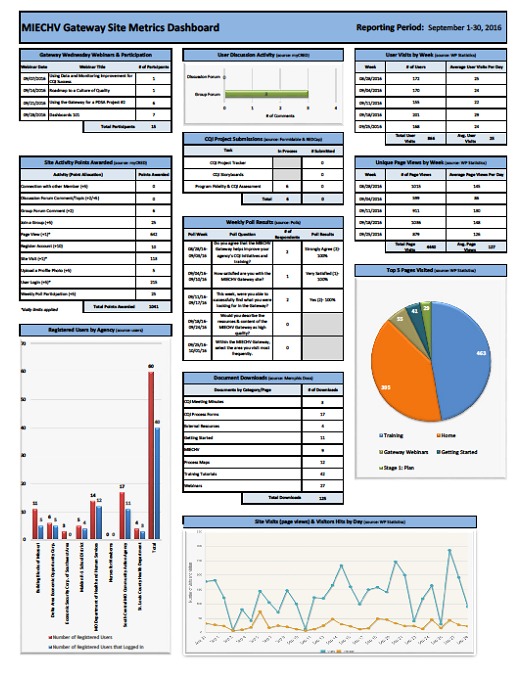

Plugin software capabilities have been adopted and integrated to monitor site activity (site visits, discussion posts, points, badges, document downloads/uploads, etc.) and capture analytics on site use. A data analytics reporting dashboard has been created to document these analytics, on a monthly and quarterly basis, and provide insight on social marketing and engagement activity needs.

To communicate and standardize software specifications across site administrators, a back-end user manual was created. This manual receives regular review and updates to document all technical specifications, theme consistencies, plugin integration, and a comprehensive listing of activities and timelines to perform site maintenance and updates.

Pilot Testing

User testing is widely recognized as the most reliable method for understanding human-computer interaction with a site [10]. Prior to site deployment to the LIAs, pilot user testing was necessary to assess the usability of the website and provide an opportunity to make identified changes prior to full-scale deployment. To garner both expert and stakeholder feedback, pilot testing occurred in three stages, with three defined groups. The three pilot testing groups consisted of: 1) faculty and staff from the university department; 2) administrators within the state department of health; and 3) supervisors and data managers within the local implementing agencies.

Results

Pilot Testing Results

Pilot testing occurred in December 2015-January 2016 for group 1, February-March 2016 for group 2, and April 2016 for group 3. To conduct the pilot testing, group reviewers were provided access to the website, the online survey, and instructions on the review purpose and process. The survey for group 1 consisted of 13 questions; the survey for groups 2 and 3 consisted of 19 questions. Three case-based scenarios were developed, describing educational and professional work experiences of potential users. The survey instructed reviewers to read each case-based scenario and identify the site content pages most useful to the potential user. Based on responses, the survey prompted reviewers for narrative responses on their site experience and content suggestions. Additional survey questions evaluated how frequently reviewers would visit specific site pages, the amount of content on each page, and potential site impact on coordination, collaboration, and learning of CQI education and initiatives (Table 1).

Table 1, Pilot Usability Testing Results.

| Survey Question | N | Point Value (Minimum attainable points: 0; Maximum: 60) | Mean Score (Scale 0-4; 0 Strongly Disagree… 4 Strongly Agree) |
|---|---|---|---|
| This site improves coordination of quality improvement education and initiatives. | 15 | 46 | 3.06 |
| This site encourages collaboration of quality improvement education and initiatives. | 15 | 49 | 3.27 |
| This site fosters the learning of quality improvement education and initiatives. | 15 | 48 | 3.20 |

Last, reviewers within pilot testing groups 2 and 3 were asked to evaluate their overall site experience and respond to 10 statements to measure site effectiveness, efficiency, and satisfaction as defined within a modified version of the System Usability Scale (SUS) [11] (Table 2). The University of Missouri Institutional Review Board has reviewed these three pilot testing studies.

Table 2, Modified System Usability Scale Survey.

| Question Number | Question |
|---|---|
| 1 | I would use this CQI project process frequently. |
| 2 | I found this CQI project process unnecessarily complex. |
| 3 | I thought this CQI project process was easy to use. |
| 4 | I think that I would need the support of a technical person to be able to use this CQI project process. |
| 5 | I found the various functions (features) in this CQI project process were well integrated. |
| 6 | I thought there was too much inconsistency (ex. information, navigation) in this CQI project process. |
| 7 | I would imagine that most people would learn to use this CQI project process very quickly. |
| 8 | I found this CQI project process very cumbersome to use. |
| 9 | I felt very comfortable using this CQI project process. |
| 10 | I needed to learn a lot of things before I could begin using this CQI project process. |

Results of the three pilot testing groups were overall positive, desirable, and vital to improving the site for full-launch implementation. The majority (87%) of reviewers reported they would access/use the learning materials (e.g. CQI project process, training tutorials, resources, etc.), stated they would use the site for completing quality improvement projects (80%), and reported the site would help their work teams address internal quality improvement challenges (66%).

Reviewers were asked to submit feedback for expansion, modification, or further development of the site content through survey prompts allowing for narrative responses. Reviewers submitted a total of 98 narrative responses, with 19 from pilot group 1 (average 2.7 per user), 50 from pilot group 2 (average of 6.3 per user), and 29 from pilot group 3 (average of 4.1 per user).

Reviewers reported they would “frequently/regularly” (64%) or “occasionally” (30%) visit the primary pages (including Home Page, CQI Storyboard Library, Discussion Forum, Current CQI Project Tracker, and CQI Process pages). Reviewers reported they would “frequently/regularly” (32%) or “occasionally” (54%) visit learning and resource pages (including External Resources, MIECHV, Glossary, Organization Directory, Training, Events, and Groups). In evaluating the amount of content on 20 individual site pages, 75% reported the site pages included the right amount of content, 15% reported certain site pages were in need of improvement, and 10% reported too much content on certain site pages. The majority of reviewers reported feeling “comfortable” or “highly comfortable” in sharing experiences, practices, and/or concerns in the following site areas: open discussion forums (88%); closed groups (100%); private messaging (88%); and feedback submission forms (100%).

The majority of pilot reviewers approved of the “Program Fidelity & CQI Assessment” site assessment tool. Most reported the assessment tool was easy to use (79%), and the purpose of the assessment was clear (86%). Reviewers were able to use the assessment to self-identify areas where quality improvement work would be beneficial (75%). The majority of reviewers stated the assessment recommendations were appropriate (79%). The clarity of the next step in the CQI process after completing the assessment was the lowest rated survey item (72%). The overall satisfaction with and usability of the assessment was calculated as 3.10 out of a maximum of 4 (78%) (Table 3).

Table 3, Program Fidelity & CQI Assessment Evaluation Survey Results.

| Survey Question | Group 2 N | Group 2 Mean Score | Group 3 N* | Group 3 Mean Score | Total N | Point Value (Minimum attainable points: 0; Maximum: 60) | Mean Score (Scale 0-4; 0 Strongly Disagree… 4 Strongly Agree) |
|---|---|---|---|---|---|---|---|
| The assessment is easy to use. | 8 | 3.13 | 6 | 3.17 | 14 | 44 | 3.14 |
| The purpose of the assessment is clearly stated. | 8 | 3.50 | 6 | 3.33 | 14 | 48 | 3.43 |
| The assessment allows users to self-identify areas where quality improvement work may be beneficial. | 8 | 2.88 | 6 | 3.17 | 14 | 42 | 3.00 |
| The assessment recommendations appear on target. | 8 | 3.13 | 6 | 3.17 | 14 | 44 | 3.14 |
| At the conclusion of the assessment, it was clear what my next step in the CQI process was. | 8 | 2.75 | 6 | 3.00 | 14 | 40 | 2.86 |
| The assessment is useful in measuring fidelity to a program model. | 8 | 3.00 | 6 | 3.00 | 14 | 42 | 3.00 |
| Overall usability of the Program Fidelity & CQI Assessment (Measured by individual evaluation of the following six questions: ease of use, clearly stated, recommendations appear on target, allows users to self-identify areas where quality improvement work may be beneficial, clarity of next step, and useful in measuring fidelity to a program model) | 8 | 3.06 | 6 | 3.14 | 14 | 43 | 3.10 |

* Group 3 had seven reviewers complete the survey; one reviewer did not respond to the series of questions presented in Table 3.

Site improvements made between pilots 2 and 3 were found to benefit overall site usability and increased the site’s SUS score by 10% (6.9 points). The modified SUS score was 68.4 for pilot testing group 2 and 75.4 for group 3, and the weighted mean score of the two pilot groups was 71.6, ranking above the U.S. average of 68 [12].
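For readers unfamiliar with how SUS scores are derived, the following is a minimal sketch of the standard SUS scoring rule together with a group-size-weighted mean. It assumes responses on the standard 1-5 agreement scale; the modified instrument used here may have been coded differently. The group sizes of 8 and 7 are taken from the reviewer counts reported for Table 3 and are an assumption about how many reviewers completed the SUS items.

```python
def sus_score(responses):
    """Score one 10-item SUS questionnaire answered on a 1-5 agreement scale.

    Odd-numbered items are positively worded and contribute (response - 1);
    even-numbered items are negatively worded and contribute (5 - response).
    The summed contributions (0-40) are multiplied by 2.5 to give 0-100.
    """
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 responses")
    if any(not 1 <= r <= 5 for r in responses):
        raise ValueError("responses must be on a 1-5 scale")
    total = 0
    for item_number, response in enumerate(responses, start=1):
        if item_number % 2 == 1:
            total += response - 1
        else:
            total += 5 - response
    return total * 2.5


def weighted_mean(scores_and_sizes):
    """Combine group mean scores, weighting each by its number of reviewers."""
    total_n = sum(n for _, n in scores_and_sizes)
    return sum(score * n for score, n in scores_and_sizes) / total_n


best_case = sus_score([5, 1, 5, 1, 5, 1, 5, 1, 5, 1])
neutral = sus_score([3] * 10)
# Assumed group sizes: 8 reviewers in group 2 and 7 in group 3.
combined = weighted_mean([(68.4, 8), (75.4, 7)])
```

With the assumed group sizes, `combined` comes out at roughly 71.7, close to the reported 71.6; the small difference would be consistent with the group scores having been rounded before publication.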

Site Launch & Results

The site launched to 58 users (47 LIAs; 11 MoDHSS). Prior to launch, LIAs and MoDHSS managers provided user registration data to establish unique user accounts with appropriate roles for Gateway users. New users received an automated email with the site web address, unique username, temporary password, instructions to change their password, and general information on utilizing the site. A 10-part live-stream weekly webinar series was offered to users. Webinars were recorded and posted, with the accompanying slide deck, on the Gateway Webinars page. A total of 44 participants, across five agencies, joined the live webinars.

Site performance and activity were measured through integrated software capabilities. Site stability and performance were exceptional: throughout the 12 weeks the site was open to users, it experienced six minutes of total downtime, all of it planned for plugin updates. The integrated software monitored site activity (e.g., visits, visitors, posts, downloads, activity points, and technical support inquiries) and captured analytics on site use. Site activity metrics were gathered and reported to MoDHSS administrators in a monthly “MIECHV Gateway Site Metrics Dashboard” (Figure 4).

Figure 4, MIECHV Gateway Site Metrics Dashboard, September 2016

At the conclusion of the contract period (09/30/2016), a total of 60 users (46 LIAs; 14 MoDHSS) were registered, of whom 40 were active users (66.7% adoption rate). The site averaged 29 page views per day, awarded 3,178 site activity points, and had 540 document downloads. The Training page was the page most frequently visited by users. With regard to social media engagement, at the end of the contract period none of the three social media sites had members or followers other than Gateway administrators. Further surveying of LIA users is necessary to determine whether users utilize social network sites, can access social media sites in the workplace, have internet access and internet-accessible devices at both work and home, and what opinions they hold on using social media for business or employment purposes.

Limitations

The development, build, and launch of this quality improvement practice exchange virtual environment achieved its overarching aim of developing a widely accepted web-based environment that balances CQI training and practice and increases the capacity for organizational change. Still, unavoidable limitations exist. First, significant run time is essential for the adoption and utility of any new technology. The short run (12 weeks) did not allow adequate time for a pilot test from which generalizable impact on larger user populations could be assured. Further, lengthier pilots are necessary to gather and analyze key trends over time, such as fidelity score measurements, average site utilization by user, PDSA submission rates, participation by agency, and benchmark and construct performance improvements. Second, a lack of prior studies on comparable web-based tools makes it difficult to set baseline measurements for judging whether the web-based CQI intervention achieved meaningful success. Lastly, every stage of CQI, from conceptualizing a problem for improvement, to measuring current- to future-state change, to monitoring and maintaining the change, relies heavily on certifiable and accessible program performance data. Due to data reporting system barriers external to and independent of the site, shared performance data could not be integrated as a site resource. Program reports remained accessible to users via their designated LIA supervisor, but the convenience of accessing data reports directly from within the site could not be achieved during the study.

Discussion & Conclusion

Advances in web-based collaborative workplace environments offer tremendous potential to improve dissemination of information, access to standardized educational materials, distance collaborations, and overall quality of program delivery and performance. To our knowledge, a virtual environment designed to create a culture of quality improvement and foster CQII for home visiting program LIAs has not been previously reported. The Missouri MIECHV Gateway site hosts key characteristics advocated by experts in CQI, website development, and online learning environments. Development of the Gateway environment aimed to complement the existing MIECHV CQI framework. We met these aims by: 1) adding CQI elements that were missing or ineffective, such as standardized training tutorials, webinars, and structured CQI project forms, 2) adding elements for CQI identification and program evaluation, such as the “Program Fidelity and CQI Assessment”, and 3) offering LIAs a network to share CQI experiences and collaborate at a distance, through avenues such as the discussion forums and the CQI Storyboard Library. We built a stable site that achieved an above-average usability score (71.6), delivered an acceptable fidelity self-assessment tool for prioritizing CQI activities (78% overall satisfaction), and concluded with a site adoption rate of 67%, averaging 29 page views per day.

Throughout the process of developing and launching the Missouri MIECHV Gateway, many lessons were learned. First, the site design is fluid and appears to provide the required flexibility, creativity, and adaptability [13]. The integration of features within the site is not limited: given the widespread availability of third-party plugins, one does not typically need a robust programming background to implement new features. Second, encouraging open communication, stakeholder buy-in, and ongoing feedback was necessary for garnering a shared vision and ownership of the site [14]. Stakeholder feedback remains a vital part of the site design and development; frequent meetings with administrators of the state health department and with LIAs continued throughout the contract period. Additionally, further pilots are necessary to understand how individuals are motivated to use the site. Finally, the systematic approach to CQI of examining performance relative to targets requires the integration of real-time data, dashboards, and reports powered by information technology and informatics frameworks [15,16].

The site adds value to quality improvement beyond the scope of work presented here. It virtually connects users and embeds them within an environment that balances CQI training and practice [17]. Given its focus on CQI priorities, the site offers many opportunities for expansion, from additional training tutorials, to further integration of digital tools, to the measurement of fidelity and outcomes from CQII. Longitudinal assessments will be needed to measure and evaluate the Gateway site's impact on programs and agencies beyond the population for which it was built.

Acknowledgments

We are grateful to the Missouri Department of Health and Senior Services’ MIECHV program leadership and project personnel for their diligent efforts in implementing this innovative program.

Footnotes

Funding Acknowledgement: This project is supported by the Health Resources and Services Administration (HRSA) of the U.S. Department of Health and Human Services (HHS) under grant number D89MC2791501-Affordable Care Act (ACA) Maternal, Infant, and Early Childhood Home Visiting Program in the amount of $886,521 with 0% financed with nongovernmental sources. This information or content and conclusions are those of the authors and should not be construed as the official position or policy of, nor should any endorsements be inferred by HRSA, HHS or the U.S. Government.


References

- 1. Sweet M, Appelbaum M. 2004. Is home visiting an effective strategy? A meta‐analytic review of home visiting programs for families with young children. Child Dev. 75(5), 1435-56. doi:10.1111/j.1467-8624.2004.00750.x

- 2. U.S. Department of Health and Human Services Health Resources and Services Administration. Quality Improvement. n.d. Available from: http://www.hrsa.gov/quality/toolbox/methodology/qualityimprovement/.

- 3. Davis P, Solomon J, Gorenflo G. 2010. Driving quality improvement in local public health practice. J Public Health Manag Pract. 16(1), 67-71. doi:10.1097/PHH.0b013e3181c2c7f7

- 4. Ammerman R, Putnam F, Kopke J, Gannon T, Short J, et al. 2007. Development and implementation of a quality assurance infrastructure in a multisite home visitation program in Ohio and Kentucky. J Prev Intervent Community. 34(1-2), 89-107. doi:10.1300/J005v34n01_05

- 5. Krug S. Don't Make Me Think, Revisited: A Common Sense Approach to Web Usability: New Riders; 2014.

- 6. Deming W. Out of the Crisis. Cambridge, MA: Massachusetts Institute of Technology Centre for Advanced Engineering Study xiii; 1983.

- 7. Lockwood NR. 2007. Leveraging employee engagement for competitive advantage. Society for Human Resource Management Research Quarterly. 1, 1-12.

- 8. Parry E, Solidoro A. 2013. Social media as a mechanism for engagement. Advanced Series in Management. 12, 121-41. doi:10.1108/S1877-6361(2013)0000012010

- 9. Herlle M, Astray-Caneda V. The Impact of Social Media in the Workplace. 2013:67-73.

- 10. Woolrych A, Cockton G, eds. Why and when five test users aren't enough. IHM-HCI 2001 conference; 2001; Toulouse, FR.

- 11. Brooke J. SUS: a "quick and dirty" usability scale. Usability Evaluation in Industry. 1996.

- 12. Sauro J, Kindlund E, eds. A method to standardize usability metrics into a single score. Proceedings of the SIGCHI conference on Human factors in computing systems; 2005: ACM.

- 13. Radaideh MDA. Architecture of Reliable Web Applications Software: IGI Global; 2006.

- 14. Alexander M. Lead or Lag: Linking Strategic Project Management & Thought Leadership: Lead-Her-Ship Group; 2016.

- 15. Ghazisaeidi M, Safdari R, Torabi M, Mirzaee M. 2015. Development of performance dashboards in healthcare sector: key practical issues. Acta Inform Med. 23(5), 317. doi:10.5455/aim.2015.23.317-321

- 16. Institute of Medicine. Crossing the quality chasm: a new health system for the 21st century. Washington, D.C.: National Academy Press; 2001.

- 17. Anderson T. The theory and practice of online learning: Athabasca University Press; 2008.