Abstract

OBJECTIVE

Cochlear implants (CIs) are considered a safe and effective intervention for more severe degrees of hearing loss in adults of all ages. Although older CI users ≥65 years of age can obtain large benefits in speech understanding from a CI, there is a growing body of literature suggesting that older CI users may not perform as well as younger CI users. One reason for this potential age-related limitation could be that default CI stimulation settings are not optimal for older CI users. The goal of this study was to determine if improvements in speech understanding were possible when CI users were programmed with non-default stimulation rates, and to determine whether lower-than-default stimulation rates improved older CI users’ speech understanding.

DESIGN

Sentence recognition was measured acutely using different stimulation rates in 37 CI users ranging in age from 22 to 87 years. Maps were created using rates of 500, 720, 900, and 1200 pps for each subject. An additional map using a rate higher than 1200 pps was also created for individuals who used a higher rate in their clinical processors. Thus, each subject’s clinical rate was also tested, including non-default rates above 1200 pps for Cochlear users and higher rates consistent with the manufacturer defaults for subjects implanted with Advanced Bionics and Med-El devices. Speech understanding performance was evaluated at each stimulation rate using AzBio and PRESTO sentence materials tested in quiet and in noise.

RESULTS

For Cochlear-brand users, speech understanding performance using non-default rates was slightly poorer than with the default rate (900 pps). However, this effect was partially offset by age: older subjects maintained performance with a 500 pps map comparable to that with the default-rate map when listening to the more difficult PRESTO sentence material. Advanced Bionics and Med-El users showed modest improvements in overall performance using 720 pps compared with the default rate (>1200 pps). At the individual-subject level, 10 subjects (11 ears) showed a significant effect of stimulation rate, with eight of those ears performing best with a lower-than-default rate.

CONCLUSION

Results suggest that default stimulation rates are likely sufficient for many CI users, but some CI users at any age can benefit from a lower-than-default rate. Future work that provides experience with novel rates in everyday life has the potential to identify more individuals whose performance could be improved with changes to stimulation rate.

INTRODUCTION

There is considerable variability in cochlear-implant (CI) users’ speech understanding ability; some users obtain excellent open-set sentence recognition while others only benefit from improved sound awareness (Gifford et al. 2008; Holden et al. 2013). Biological factors that affect speech understanding include age at implantation, etiology, age at onset of hearing loss, and duration of deafness (Blamey et al. 2013). CI users who have early-onset hearing loss and prolonged durations of deafness tend to fall into the lower range of performance levels on speech understanding tasks. Another emerging factor that may affect speech understanding ability following implantation is advancing age (Chatelin et al. 2004; Friedland et al. 2010; Sladen and Zappler 2015). While these biological variables may account for much of the variance observed in CI outcomes, the methods that are used to program clinical sound processors may be an additional factor contributing to variable outcomes.

Advanced age, especially in the presence of severe-to-profound hearing loss, causes many peripheral, central, and cortical changes to the auditory system that could impact an individual’s ability to understand speech via a CI. Prolonged periods of auditory deprivation, which commonly occur in older CI candidates, are associated with the neural degeneration of spiral ganglion cells (Leake et al. 1999). Aging alone, even in the absence of hearing loss, is also associated with widespread spiral ganglion loss (Kujawa and Liberman 2015; Sergeyenko et al. 2013). The rate of spiral ganglion loss in ears with no evidence of pathology is estimated to be about 2,000 cells per decade (Otte et al. 1978). The status of a CI user’s peripheral auditory system has important implications for the quality of the electrode-to-neuron interface required for successful delivery of the electrical signal. Aging also impacts the central auditory system. The presence of age-related central auditory temporal processing deficits could interfere with the delivery of the temporal envelope that acts as the primary cue for understanding speech signals processed by a CI (Anderson et al. 2012; Parthasarathy and Bartlett 2011; Presacco et al. 2016). Finally, changes in cognition have been proposed as a potential factor influencing performance in older CI users (Amichetti et al. 2018; Moberly et al. 2017; Schvartz et al. 2008). Changes in working memory ability, in particular, could impact a person’s ability to understand highly degraded, CI-processed speech signals (e.g., Ronnberg et al. 2013). Each level of the aging auditory system has the potential to limit an older CI user’s ability to understand speech signals compared to younger CI users.

Even when controlling for factors that appear to have a larger impact on CI outcomes than age (e.g., duration of deafness), age alone contributes to the variance in CI outcomes. Blamey et al. (2013) found that individuals implanted after the age of 70 years had poorer speech recognition outcomes than individuals implanted before age 70. Smaller-scale studies that evaluated the effect of age on CI outcomes compared groups of younger and older CI users matched for duration of deafness and found that older CI users performed more poorly on most, if not all, speech and word recognition measures (Friedland et al. 2010; Lazard et al. 2012; Lin et al. 2011; Roberts et al. 2013; Sladen and Zappler 2015). However, the evidence on the effect of advancing age on CI outcomes is conflicting: many studies that compared speech understanding scores from younger and older CI users found no significant differences between age groups (Haensel et al. 2005; Labadie et al. 2000; Leung et al. 2005; Noble et al. 2009; E. Park et al. 2011; Pasanisi et al. 2003; Poissant et al. 2008). In many of these studies, the age range of the “younger” group extended to approximately 45–60 years, or even up to 64 years of age. Since age-related changes in many forms of auditory perception can be observed as early as 40–55 years of age (Grose et al. 2006; Snell and Frisina 2000), the lack of an age effect in some prior studies may be associated with recruitment of middle-aged adults into the “younger” group. Another possible reason for the lack of age effects is that these studies often used relatively simple speech materials (e.g., highly redundant sentences) presented in quiet. Relatively simple test materials presented in quiet listening conditions may not reveal age-related differences in perception (Dubno et al. 1984).

One issue that may contribute to relatively poor CI outcomes in older listeners is that the stimulation rate used in the sound processor program may not be optimal for this age group. Due to a lack of guidance for optimizing device settings for older CI users, clinicians typically rely on the manufacturers’ default settings. However, some CI audiologists report anecdotally that older users’ speech understanding can be improved by adjusting device settings to use a lower-than-default electrical stimulation rate. Stimulation rate refers to the number of electrical pulses per second (pps) that are delivered to a single electrode. These pulses convey amplitude modulations that are derived from the acoustic input signal. Selecting a stimulation rate lower than 1000 pps is explicitly recommended in CI-programming textbooks for certain patients, including those with long durations of deafness, those with auditory nerve dysfunction, and older CI users (Wolfe and Schafer 2014). Such a recommendation, however, currently appears to lack supporting evidence, and the mechanisms by which slower stimulation rates would improve speech understanding are unknown. The notion that lower-than-default stimulation rates can greatly improve performance in some patients also seems contrary to the current guidance from CI manufacturers. Default stimulation rates for each company are as follows: Advanced Bionics, 1800 or 2900 pps¹ (per single electrode) depending on the speech processing strategy; Cochlear, 900 pps; and Med-El, between 1500 and 2000 pps on average (Med-El default stimulation rates are set to the fastest possible rate within voltage compliance).

The trend toward higher CI stimulation rates began with the introduction of non-simultaneous stimulation strategies, in which only one electrode is stimulated at a time; these strategies reduce channel interaction and employ a relatively high rate of stimulation on each electrode. In theory, high stimulation rates should sample the temporal envelope of the signal more accurately and thus facilitate neural representation of the rapid modulations that carry short-duration speech segments (e.g., stop consonant bursts). From a digital signal processing standpoint, stimulation rate is essentially analogous to sampling rate, for which a higher rate should produce a higher-fidelity signal representation. However, the idea that relatively higher stimulation rates are desirable because they yield a more precise neural representation of the temporal envelope is not consistently supported. Several studies show an improvement in speech understanding with rates >1000 pps (Frijns et al. 2003; Loizou et al. 2000), while others show no consistent effect of rate (Friesen et al. 2005; Weber et al. 2007) or even a decrease in performance with relatively higher rates (Buechner et al. 2010). Other studies show that some subjects benefitted from relatively higher rates while others benefitted from lower rates (Balkany et al. 2007; Holden et al. 2002; Skinner, Arndt, et al. 2002; Vandali et al. 2000). For example, Skinner, Arndt, et al. (2002) found that 23% of their subjects preferred, and achieved their best speech understanding with, the lowest rate of 250 pps. If lower rates do indeed produce better speech understanding in some CI users, it may be that their auditory systems cannot cope with higher stimulation rates, possibly because of some biological factor.
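The sampling analogy can be made concrete. If each pulse is treated as one sample of the envelope, the idealized Nyquist limit says that a pulse train at R pps can represent envelope modulations only below R/2 Hz; faster modulations alias to lower frequencies. The sketch below illustrates that idealization only. It ignores the envelope smoothing applied inside real sound processors and the limits of neural temporal coding, which are exactly the factors on which the studies above disagree. The function names are hypothetical.

```python
import math


def nyquist_limit_hz(rate_pps: float) -> float:
    """Highest envelope modulation frequency a pulse train at rate_pps can
    represent without aliasing, under the idealized sampling analogy."""
    return rate_pps / 2.0


def apparent_modulation_hz(mod_hz: float, rate_pps: float) -> float:
    """Frequency at which a sinusoidal envelope modulation appears after being
    sampled once per pulse (standard aliasing formula)."""
    return abs(mod_hz - rate_pps * round(mod_hz / rate_pps))


def sample_envelope(mod_hz: float, rate_pps: float, n_pulses: int) -> list[float]:
    """Value of a cosine envelope modulation at the time of each pulse."""
    return [math.cos(2 * math.pi * mod_hz * n / rate_pps) for n in range(n_pulses)]


if __name__ == "__main__":
    for rate in (500, 900, 1200):
        print(rate, nyquist_limit_hz(rate), apparent_modulation_hz(300, rate))
```

Under this idealization, a 300 Hz modulation sampled at 500 pps produces exactly the same pulse-by-pulse amplitudes as a 200 Hz modulation, so the two cannot be distinguished after sampling; at 900 or 1200 pps the 300 Hz modulation is preserved.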

Even studies that describe an overall group benefit from high stimulation rates report substantial individual variability, noting that some subjects “benefitted more than others” from high rates (Loizou et al. 2000). The largest study to date that investigated the effect of stimulation rate measured speech understanding and subjective preference in 55 CI users (Balkany et al. 2007). Two ACE processing strategies were tested, one using stimulation rates of 500, 900, and 1200 pps (standard “ACE”) and the second using rates of 1800, 2400, and 3500 pps (higher rate “ACE RE”). Subjects were given six weeks to use each strategy and to experience its three rates, spending the final two weeks with their preferred stimulation rate within that processing strategy. Higher rates of stimulation did not improve speech understanding scores on average and, in fact, 67% of the subjects preferred the slower rates within the first ACE strategy. Nearly every study that observes substantial individual variability recommends some form of “individual rate optimization” on a case-by-case basis. Wolfe and Schafer (2014) recommend that CI patients be provided with maps using different stimulation rates within the first months following activation so that an individualized optimal rate can be selected. With clinical audiologists’ time already at a premium, however, establishing each patient’s optimal stimulation rate is becoming increasingly difficult.

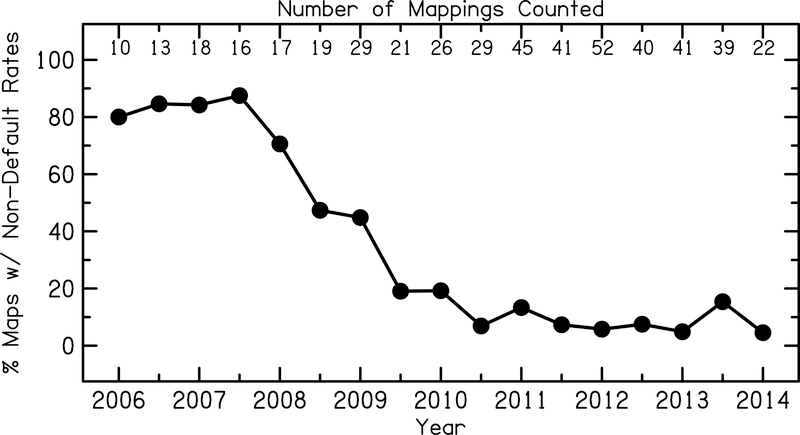

Individual optimization of CI map settings has the potential to improve outcomes for many CI users within this diverse and highly variable patient population. However, at some clinics the choice of stimulation rate rarely deviates from the manufacturer default. Figure 1 shows the proportion of CI patients with Cochlear Ltd. devices mapped at non-default stimulation rates as a function of year from one CI mapping and surgical center in the greater Baltimore, Maryland – Washington, DC metropolitan area. During the years in which stimulation rate data were collected (2006–2014), this center employed three mapping audiologists simultaneously. These retrospective data show a strong trend toward using the default stimulation rate (900 pps) after 2008. From 2010 on, fewer than approximately 10% of CI patients were mapped at a non-default stimulation rate. This trend likely reflects recommendations that audiologists maintain default manufacturer settings because these settings result in the best speech understanding performance for CI users at the group level. Given the large variability in biological factors and post-implantation speech understanding scores, mapping nearly every CI user at the default stimulation rate may not maximize outcomes at the individual level.

Figure 1:

Proportion of adult CI patients implanted with Cochlear Ltd. devices mapped at non-default stimulation rates at a CI mapping and surgical center in the Baltimore, MD – Washington, DC metropolitan area. Retrospective data were analyzed in six-month intervals from 2006 to 2014. The analysis includes only actual mapping instances that occurred in each six-month interval; for example, patients who were mapped in 2012 but were not seen again until 2014 were included only in the 2012 and 2014 analyses and were not carried through into the 2013 analysis. The number of mappings in each six-month interval ranged from 10 to 52 and is listed at the top of the figure.
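The interval-based counting described in the caption can be sketched as follows. Each mapping instance is assigned to the six-month interval in which it occurred and is counted only there, so a patient seen in 2012 and again in 2014 contributes nothing to 2013. The record format, the `MappingVisit` type, and the toy visits below are hypothetical; only the 900 pps default comes from the text.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date

DEFAULT_RATE_PPS = 900  # Cochlear default stimulation rate


@dataclass(frozen=True)
class MappingVisit:  # hypothetical record of one mapping instance
    patient_id: str
    visit_date: date
    rate_pps: int


def half_year(d: date) -> tuple[int, int]:
    """Six-month interval label: (year, 1) for Jan-Jun, (year, 2) for Jul-Dec."""
    return (d.year, 1 if d.month <= 6 else 2)


def non_default_proportion(visits: list[MappingVisit]) -> dict[tuple[int, int], tuple[int, float]]:
    """Per interval: (number of mappings, proportion at a non-default rate).
    Only intervals containing at least one actual mapping appear."""
    counts: dict[tuple[int, int], list[int]] = defaultdict(lambda: [0, 0])
    for v in visits:
        bucket = counts[half_year(v.visit_date)]
        bucket[0] += 1
        if v.rate_pps != DEFAULT_RATE_PPS:
            bucket[1] += 1
    return {k: (n, nd / n) for k, (n, nd) in sorted(counts.items())}


# Toy, hypothetical visits: patient "A" is seen in 2012 and again in 2014 only.
visits = [
    MappingVisit("A", date(2012, 3, 1), 900),
    MappingVisit("B", date(2012, 5, 9), 500),
    MappingVisit("A", date(2014, 8, 2), 900),
]
result = non_default_proportion(visits)
print(result)
```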

Universal application of default, relatively high stimulation rates does not appear to benefit all CI users and may be detrimental to some. However, because the underlying mechanism that limits one’s ability to neurally encode temporal envelope modulations at high stimulation rates is unknown, there are no a priori strategies for patient-specific rate optimization. Previous studies evaluating the effects of stimulation rate on speech understanding did not consider individual subject variables, such as age or age at onset of hearing loss. Moreover, the subject groups selected for those studies were primarily composed of high-performing CI users with late onsets of hearing loss and short durations of deafness. If the effect of stimulation rate does depend on such biological factors, then a subject’s age, age at onset of hearing loss, and duration of deafness should be considered.

The goal of this study was to determine if improvements in speech understanding were possible when CI users were programmed with non-default stimulation rates, and to determine whether lower-than-default stimulation rates improved older CI users’ speech understanding. If age can be identified as a predictor of a potential benefit from lower-than-default stimulation rates, this factor could provide evidence-based guidance for best clinical practice to audiologists. An understanding of the predictive value of age would allow for improved individual rate optimization and could decrease the time needed for a clinical CI-programming appointment.

MATERIALS AND METHODS

Subjects

Thirty-seven adult CI users were recruited to participate in this study. Ages ranged from 22 to 87 years (mean = 58.8 years ± 14.5 years). For three bilaterally implanted subjects, both ears were tested separately. This resulted in 40 separate ears that were included in the study. Subjects were required to have at least one year of experience with their CI. All subjects were required to pass the Modified Mini-Mental State (3MS) Examination, which is a brief screening test for dementia (Teng and Chui 1987). Subject details are listed in Table 1. Thirty-two subjects were implanted with Cochlear Ltd. devices (35 ears), three subjects with Advanced Bionics devices, and two subjects with Med-El devices. For analysis purposes, subjects were divided into two groups based on their device manufacturer. Thirty-two subjects were included in the Cochlear model with ages ranging from 22 to 87 (mean = 60.6 years ± 14.7 years). Five subjects were included in the Advanced Bionics/Med-El group with ages ranging from 39 to 67 (mean = 52.5 years ± 8.5 years).

Table 1.

Subject demographics.

| Subject | Age | Age at HL Onset | Duration of Deafness | Internal Device | Processing Strategy | Clinical Stimulation Rate (pps) |
|---|---|---|---|---|---|---|
| CAR | 22 | 4 | 14 | CI24RE(CA) | ACE | 900 |
| CAT | 26 | 10 | 8 | CI24RE(CA) | ACE | 900 |
| CBP | 34 | 5 | 15 | CI24M | ACE | 900 |
| CBU | 39 | 0 | 35 | HR90K-Advantage | HiRes-S | 3228 |
| CBW | 41 | 26 | 5 | CI24R(CS) | ACE | 900 |
| CAP | 46 | 38 | 1 | CI24RE(CA) | ACE | 2400 |
| CBM | 49 | 32 | 14 | HR90K-HiFocus 1J | HiRes-S w/ Fidelity 120 | 3712 |
| CBN | 49 | 0 | 39 | CI24R(CS) | ACE | 900 |
| CAS | 50 | 41 | 3 | CI24RE(CA) | ACE | 900 |
| CAW - L | 51 | 0 | 47 | CI24RE(CA) | ACE | 900 |
| CAW - R | 51 | 0 | 50 | CI24RE(CA) | ACE | 500 |
| CBJ | 51 | 17 | 33 | CONCERT-FLEX28 | FSP | 1899 |
| CCF | 51 | 48 | 2 | CI422 | ACE | 900 |
| CAX | 52 | 49 | 0 | CI512 | ACE | 900 |
| CBA | 53 | 0 | 52 | CI24RE(CA) | ACE | 900 |
| CAL | 54 | 13 | 30 | CI24R(CS) | ACE | 900 |
| CBI | 55 | 52 | 3 | CONCERT-FLEX28 | FSP | 1813 |
| CBK | 55 | 20 | 31 | CI24RE(CA) | ACE | 900 |
| CBF | 56 | 5 | 47 | CI24RE(CA) | ACE | 900 |
| CBG | 60 | 4 | 53 | CI512 | ACE | 900 |
| CBH | 60 | 52 | 6 | CI24RE(CA) | ACE | 900 |
| CBV | 61 | 7 | 52 | CI512 | ACE | 900 |
| CAJ | 62 | 0 | 47 | CI24M | ACE | 720 |
| CAG | 63 | 20 | 32 | CI24R(CA) | ACE | 1800 |
| CAZ | 67 | 45 | 20 | CI512 | ACE | 900 |
| CBY | 67 | 5 | 50 | CII-HiFocus 1J | HiRes Optima-P | 3712 |
| CAB | 68 | 13 | 45 | CI24R(CS) | ACE | 1200 |
| CAK - R | 68 | 57 | 2 | CI24R(CS) | ACE | 720 |
| CAK - L | 68 | 34 | 33 | CI422 | ACE | 720 |
| CAM | 69 | 40 | 24 | CI24RE(CA) | ACE | 900 |
| CAO | 69 | 3 | 63 | CI512 | ACE | 900 |
| CAI | 70 | 60 | 3 | CI24RE(CA) | ACE | 1800 |
| CBT | 72 | 50 | 20 | CI24RE(CA) | ACE | 900 |
| CAD | 74 | 55 | 9 | CI24R(CS) | ACE | 900 |
| CCA | 74 | 70 | 1 | CI512 | ACE | 900 |
| CBC - L | 76 | 70 | 0 | CI24RE(CA) | ACE | 2400 |
| CBC - R | 76 | 35 | 41 | CI24RE(CA) | ACE | 900 |
| CBD | 78 | 74 | 0 | CI24RE(CA) | ACE | 500 |
| CBB | 80 | 77 | 2 | CI24RE(CA) | ACE | 900 |
| CBE | 87 | 12 | 64 | CI24R(CS) | ACE | 900 |

Age, age at HL onset, and duration of deafness are given in years. Age values represent the age at participation in the study. HL, hearing loss.

Mapping

Each subject was mapped by a single research audiologist using four or five stimulation rates to create experimental maps. Comfort (C or M) levels were measured for all subjects. Thresholds (T levels) were measured for Cochlear-brand devices only, according to the manufacturers’ mapping guidelines. For Cochlear Ltd. and Med-El devices, clinical mapping software (Custom Sound and MAESTRO, respectively) was used to create experimental maps. For Advanced Bionics devices, BEPS+ research mapping software was used, which allowed for access to the desired stimulation rates. Map levels were measured for the following target rates: 500, 720, 900, 1200 pps, and occasionally >1200 pps if the subject’s clinical processor was mapped at a rate higher than 1200 pps. The actual stimulation rates varied slightly by ±5 pps for Advanced Bionics devices due to software limitations. In one instance, an experimental map was created using 250 pps in order to evaluate a rate lower than the subject’s clinical rate of 500 pps (subject CAW). In general, these rates were chosen to represent the range of rates that are most commonly used in current clinical practice and to evaluate if changes in stimulation rate within this typical range could affect speech understanding scores. Current levels for C/M-levels and/or T-levels were carefully measured at each rate. For Advanced Bionics and Med-El devices, M-levels were obtained using the ascending loudness technique on individual channels to a level of “loud, but comfortable.” T-levels were automatically set by the programming software to 10% of the M-level. For Cochlear devices, C-levels were obtained using the ascending loudness technique on every fifth electrode (interpolation was used for electrodes falling between every fifth electrode) to a level of “loud, but comfortable.” T-levels were also measured on every fifth electrode and were set at the softest level that resulted in detection 100% of the time. 
Loudness balancing between adjacent electrodes was not performed. After initial measurement, further adjustments for loudness/comfort and sound quality were limited to global increases or decreases in T and/or C or M levels and to directional tilting, either clockwise (increasing low-frequency and decreasing high-frequency channels) or counterclockwise (increasing high-frequency and decreasing low-frequency channels). Default input processing was not altered; however, noise reduction algorithms were not activated in any maps. For this reason, experimental maps could have been either quite similar to or quite different from subjects’ clinical maps, because subjects’ clinical audiologists likely had different approaches to mapping and different strategies for sound-quality adjustments, including channel-specific modifications. Because this study examined the effect of stimulation rate only, this mapping approach was applied consistently across rates. In addition, all other map parameters (e.g., maxima, deactivated electrodes) remained consistent with each subject’s clinical map. The processor model used for experimental testing matched the processor used by the subject in order to eliminate extraneous variables due to subtle differences between devices. For example, experimental maps were written to a research Freedom processor for subjects who currently used a Freedom processor.
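The level-setting steps described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the manufacturers’ software: it assumes linear interpolation between measured electrodes (with levels held flat beyond the outermost measured electrode), applies the 10% T-level rule to M-levels, and models a tilt as a linear ramp that pivots at the middle channel with channels ordered from lowest to highest frequency. The function names, the ramp shape, and the example levels (in arbitrary level units) are all hypothetical.

```python
def interpolate_levels(measured: dict[int, float], electrodes: range) -> dict[int, float]:
    """Linearly interpolate levels between measured electrodes (e.g., every fifth
    electrode); electrodes outside the measured span take the nearest measured value."""
    anchors = sorted(measured)
    levels: dict[int, float] = {}
    for e in electrodes:
        if e <= anchors[0]:
            levels[e] = measured[anchors[0]]
        elif e >= anchors[-1]:
            levels[e] = measured[anchors[-1]]
        else:
            lo = max(a for a in anchors if a <= e)
            hi = min(a for a in anchors if a >= e)
            frac = 0.0 if hi == lo else (e - lo) / (hi - lo)
            levels[e] = measured[lo] + frac * (measured[hi] - measured[lo])
    return levels


def t_from_m(m_level: float) -> float:
    """T-level set to 10% of the M-level (Advanced Bionics / Med-El maps here)."""
    return 0.10 * m_level


def global_shift(levels: list[float], delta: float) -> list[float]:
    """Raise (delta > 0) or lower (delta < 0) every channel by the same amount."""
    return [x + delta for x in levels]


def tilt(levels: list[float], amount: float) -> list[float]:
    """Linear tilt pivoting at the middle channel (channels ordered low to high
    frequency). amount > 0 is 'clockwise': low channels up, high channels down."""
    n = len(levels)
    if n < 2:
        return list(levels)
    return [x + amount * (1 - 2 * i / (n - 1)) for i, x in enumerate(levels)]


# Hypothetical example: C-levels measured on every fifth electrode of a 22-electrode array.
c_levels = interpolate_levels({1: 180, 6: 185, 11: 190, 16: 195, 21: 200}, range(1, 23))
t_levels = [t_from_m(m) for m in (200.0, 210.0, 220.0)]
adjusted = tilt(global_shift([100.0, 100.0, 100.0], 2.0), 6.0)
print(c_levels[3], c_levels[22], t_levels, adjusted)
```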

Stimuli and Procedure

Testing was conducted in a double-walled sound-attenuating booth with a single loudspeaker (Grason-Stadler, GSI-Audera) located 1 m directly in front of the subject. Before each set of trials with an experimental map, subjects completed a five-minute listening period in which they listened to an unabridged audiobook while following along with the written text. Sentence recognition was tested at 60 dB SPL in quiet and in noise (10-talker babble) at a +10-dB signal-to-noise ratio using both the AzBio (Spahr et al. 2012) and PRESTO (Perceptually Robust English Sentence Test Open-set) (H. Park et al. 2010) sentence materials. AzBio sentences are low-context sentences spoken by two male and two female talkers, all with a standard American dialect. Each AzBio sentence list is composed of 20 sentences spoken in a conversational style rather than the “clear speech” style used in more traditional sentence materials. PRESTO is considered a high-variability corpus because it includes both talker and dialect variability. Each PRESTO list is composed of 18 low-context sentences that vary in length, each spoken by a different talker. Talkers include males and females with various United States dialects (e.g., New England, Northern, Southern, New York City). No talker is repeated within a single list, and each list includes at least five different dialects. AzBio and PRESTO materials were selected to reflect subjects’ “real world” speech recognition performance with a conversational speaking style and rate. Two sentence materials were used to avoid floor and ceiling effects across a wide range of subject performance levels.

Subjects were instructed to listen to each sentence and repeat as much of the sentence as possible. Responses were scored as percent correct in real time by two experimenters. The experimenters were informed of the stimulation rate assigned to each program. Each experimental map was evaluated using four sentence lists per block: AzBio in quiet, AzBio in noise, PRESTO in quiet, and PRESTO in noise. Each map was tested in two separate blocks, for a total of eight sentence lists and 152 sentences per map. The order of experimental maps and the order of sentence materials were randomly assigned for each subject prior to testing.
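The block structure above can be sketched as a per-subject test schedule. The arithmetic is direct: each block contains 20 + 20 + 18 + 18 = 76 sentences, so two blocks give 152 sentences per map. How the randomization was actually implemented is not stated; this sketch assumes a seeded shuffle of the map order and an independent shuffle of the sentence-material order within each block, and it places both blocks of a map consecutively, which is also an assumption. The function names are hypothetical.

```python
import random

# Sentences per list: AzBio lists have 20 sentences, PRESTO lists have 18.
CONDITIONS = {
    "AzBio quiet": 20,
    "AzBio noise": 20,
    "PRESTO quiet": 18,
    "PRESTO noise": 18,
}
BLOCKS_PER_MAP = 2


def build_schedule(maps: list[str], seed: int) -> list[tuple[str, int, str]]:
    """Return (map, block number, condition) triples in testing order.
    Assumes the map order is shuffled once per subject and the condition
    order is shuffled independently within every block."""
    rng = random.Random(seed)
    map_order = maps[:]
    rng.shuffle(map_order)
    schedule = []
    for m in map_order:
        for block in range(1, BLOCKS_PER_MAP + 1):
            conditions = list(CONDITIONS)
            rng.shuffle(conditions)
            schedule.extend((m, block, c) for c in conditions)
    return schedule


def sentences_per_map() -> int:
    """Total sentences presented with one experimental map."""
    return BLOCKS_PER_MAP * sum(CONDITIONS.values())


schedule = build_schedule(["500 pps", "720 pps", "900 pps", "1200 pps"], seed=7)
print(sentences_per_map())  # 152
```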

Analysis

Two linear mixed-effects (LME) models were used to examine the effects of age and stimulation rate on speech understanding scores in quiet and in noise, and to understand how other biological variables such as age at onset of hearing loss interacted with different stimulation rates. Subjects were divided between two models based on their device manufacturer (one model for those implanted with Cochlear devices, and a second model for those implanted with Advanced Bionics and Med-El devices) to compare speech understanding performance at various stimulation rates with performance at each manufacturer’s default rate. The Cochlear model used 900 pps as the reference rate (the default rate), while the Advanced Bionics/Med-El model used the highest rate (>1200 pps) as the reference rate. As described earlier, Advanced Bionics currently defaults to either 1800 or 2900 pps per electrode, and Med-El defaults to the fastest possible rate per electrode within voltage compliance. Subjects’ individual percent correct scores for each sentence in each test condition were modeled using the lme4 package (Bates et al. 2014) in RStudio software.

Fixed Effects

For both models, the model building approach described by Hox et al. (2017) was used. First, intercept-only models were constructed as a benchmark. Then, the main effects and interactions of level-1 predictors were added as fixed variables. In the Advanced Bionics/Med-El model, the level-1 predictors were stimulation rate [five levels: −4 = 500 pps, −3 = 720 pps, −2 = 900 pps, −1 = 1200 pps, 0 = >1200 pps (reference level)], noise condition [two levels: 0 = quiet (reference level), 1 = noise], and corpus [0 = AzBio (reference level), 1 = PRESTO]. In the Cochlear model, the level-1 predictors were the same as in the Advanced Bionics/Med-El model except that stimulation rate had only four levels [−2 = 500 pps, −1 = 720 pps, 0 = 900 pps (reference level), 1 = 1200 pps], and there was one additional predictor variable of “clinical rate.” This additional variable was necessary because the Cochlear model included eight subjects (nine ears) that used a non-default rate in their clinical processors. In order to evaluate if speech understanding performance in response to changing the rate was different in these eight subjects, a “clinical rate” variable was added to this model [0 = clinical rate was the default rate, 1 = clinical rate was a non-default rate]. The fixed effects for each model were then reduced by removing non-significant predictors. The improvement in model fit with the addition of the fixed effects compared to the intercept-only model was tested with a chi-square significance test (alpha level = 0.05). Next, main effects and interactions for the level-2 predictors (chronological age and age at onset of hearing loss) were added to the fixed effects structure. Values for level-2 predictors were transformed into standardized values (z-scores) before being entered in the model. Age at onset of hearing loss was chosen as a predictor variable instead of duration of deafness because it was less correlated with chronological age. 
Age at onset of hearing loss and duration of deafness were not entered into the model together because they were also highly correlated with each other. Non-significant level-2 predictors were then removed to create the most parsimonious fixed effects structure.
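The predictor coding above can be sketched for a single observation. Because each non-reference rate receives its own coefficient (Table 2 lists separate terms for 500, 720, and 1200 pps), the level codes act as labels for treatment (dummy) coding against the reference rate rather than as a single numeric slope. Level-2 predictors are converted to z-scores using the sample standard deviation, which is what R’s `scale()` uses by default. The helper names are hypothetical, and the five ages are simply the first five Cochlear ears in Table 1, used for illustration only.

```python
import statistics

# Cochlear model: level codes from the text; 0 marks the reference (default) rate.
CODES_COCHLEAR = {500: -2, 720: -1, 900: 0, 1200: 1}


def rate_dummies(rate_pps: int, codes: dict[int, int] = CODES_COCHLEAR) -> dict[str, int]:
    """Treatment coding: one 0/1 indicator per non-reference rate."""
    if rate_pps not in codes:
        raise ValueError(f"unknown rate {rate_pps}")
    return {f"rate_{r}": int(r == rate_pps) for r, code in codes.items() if code != 0}


def zscores(values: list[float]) -> list[float]:
    """Standardize with the sample SD (n - 1 denominator)."""
    mean = statistics.mean(values)
    sd = statistics.stdev(values)
    return [(v - mean) / sd for v in values]


ages = [22, 26, 34, 41, 46]  # illustration only, not the modeled sample
age_z = zscores(ages)
print(rate_dummies(900))  # {'rate_500': 0, 'rate_720': 0, 'rate_1200': 0}
print(rate_dummies(720))  # {'rate_500': 0, 'rate_720': 1, 'rate_1200': 0}
```

With this coding, the reference rate is the all-zeros row, so every rate coefficient is read as a difference from the default rate.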

Random Effects

Random slope variation for all level-1 predictors was tested on a variable-by-variable basis to avoid an overparameterized model. Non-significant level-1 predictor variables that were previously removed from the fixed effects structure were reintroduced into the random effects during this step. All predictors that had significant variance across subjects were then added to the random effects structure. A chi-square significance test was used to evaluate the improvement in model fit when including random effects compared to a model without random effects.
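Both model-comparison steps rely on the likelihood-ratio (chi-square) test: twice the difference in log-likelihood between the larger and the smaller nested model is referred to a chi-square distribution whose degrees of freedom equal the number of added parameters. In R this comparison is typically run with `anova()` on two fitted lme4 models; the standalone sketch below reproduces only the arithmetic, using closed-form chi-square tail probabilities for integer degrees of freedom. The log-likelihood values in the example are hypothetical. When the tested parameter is a random-effect variance, its null value lies on the boundary of the parameter space, so this plain chi-square reference tends to be conservative.

```python
import math


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail probability P(X > x) for a chi-square variable with integer df."""
    if df < 1:
        raise ValueError("df must be a positive integer")
    if x <= 0:
        return 1.0
    half = x / 2.0
    if df % 2 == 0:
        # Even df: exp(-x/2) * sum_{i=0}^{df/2 - 1} (x/2)^i / i!
        term, total = 1.0, 1.0
        for i in range(1, df // 2):
            term *= half / i
            total += term
        return math.exp(-half) * total
    # Odd df: erfc(sqrt(x/2)) plus a finite correction series for df >= 3
    total = math.erfc(math.sqrt(half))
    if df > 1:
        term = math.sqrt(2.0 * x / math.pi) * math.exp(-half)
        acc = term
        for r in range(2, (df - 1) // 2 + 1):
            term *= x / (2 * r - 1)
            acc += term
        total += acc
    return total


def likelihood_ratio_test(ll_reduced: float, ll_full: float, extra_params: int) -> tuple[float, float]:
    """Return (chi-square statistic, p-value) for two nested models."""
    stat = 2.0 * (ll_full - ll_reduced)
    return stat, chi2_sf(stat, extra_params)


# Hypothetical log-likelihoods for a reduced and a full model differing by 3 parameters.
stat, p = likelihood_ratio_test(ll_reduced=-1520.4, ll_full=-1510.2, extra_params=3)
print(f"chi2 = {stat:.2f}, p = {p:.2g}")
```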

Cross-level Interactions

Finally, cross-level interactions (interactions between fixed level-1 and level-2 predictors) were added to the fixed effects structure. Note that only the level-1 predictors that had significant random variance across subjects were included in this step (Hox et al. 2017). In order to appropriately interpret these interactions, both the main effect and any lower-level interaction term remained in the model regardless of significance.

RESULTS

Effect of age and stimulation rate – group results

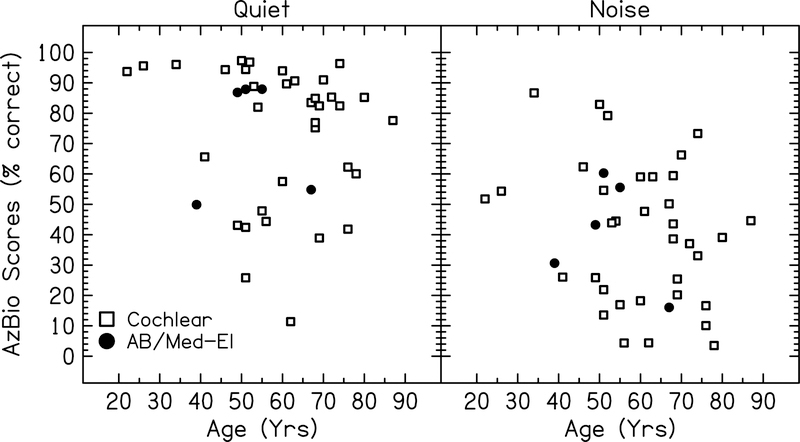

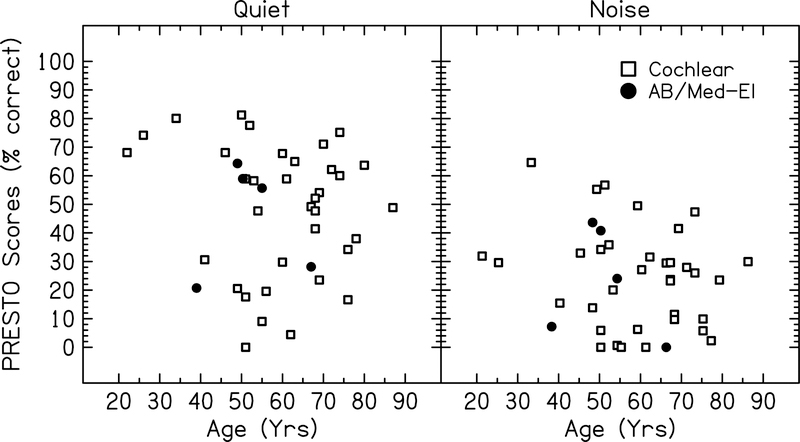

Speech understanding scores for all subjects are plotted as a function of chronological age for AzBio and PRESTO sentence materials in Figures 2 and 3, respectively. Each point represents the speech understanding score obtained by the subject in that condition, averaged across all stimulation rates.

Figure 2:

Speech understanding scores for each subject for AzBio sentences in quiet and noise plotted as a function of chronological age. Each point represents the average speech understanding score across all stimulation rates tested for each subject. Open symbols represent the 35 ears with Cochlear devices included in the first model. Filled symbols represent the five ears with either Advanced Bionics (AB) or Med-El devices included in the second model.

Figure 3:

Speech understanding scores for each subject for PRESTO sentences in quiet and noise plotted as a function of chronological age. Each point represents the average speech understanding score across all stimulation rates tested for each subject. Open symbols represent the 35 ears with Cochlear devices included in the first model. Filled symbols represent the five ears with either Advanced Bionics (AB) or Med-El devices included in the second model.

Cochlear Subjects (Model 1)

The final LME model for subjects implanted with Cochlear devices is shown in Table 2. The fixed main effect of sentence corpus was significant (p<0.001), indicating that, in quiet at the default rate and for a subject of mean age, speech understanding scores were an average of 22.4 percentage points lower for PRESTO sentence material than for AzBio sentences. The main effect of noise condition was also significant (p<0.001), indicating that scores for AzBio sentences decreased by 32.0 percentage points, on average, with the addition of background noise compared to scores obtained in quiet. The main effect of chronological age did not reach significance (p=0.07)². Additionally, the clinical-rate variable was not significant at any step of the model building approach, indicating that the nine ears that were mapped at a non-default rate in their clinical processors did not respond to rate changes any differently compared to subjects that were mapped at the default rate.

Table 2.

Final LME model for the Cochlear subjects (N = 35 ears). Bolded rows indicate significant fixed-effects terms at the 0.05 level.

| Fixed effects | Coefficient | SE | t | p |
|---|---|---|---|---|
| **Intercept** | **71.97** | **3.98** | **18.07** | **<0.001** |
| Rate (0 = 900 pps reference): | | | | |
| (−2 = 500 pps) | 0.98 | 1.08 | 0.90 | 0.37 |
| (−1 = 720 pps) | 1.85 | 1.02 | 1.82 | 0.07 |
| (1 = 1200 pps) | 0.99 | 1.03 | 0.97 | 0.34 |
| **Corpus (0 = AzBio, 1 = PRESTO)** | **−22.43** | **1.26** | **−17.83** | **<0.001** |
| **Noise (0 = Quiet, 1 = Noise)** | **−31.97** | **1.84** | **−17.38** | **<0.001** |
| Age (standardized) | −6.35 | 3.46 | −1.84 | 0.07 |
| Interactions | | | | |
| **Rate 500 pps × PRESTO** | **−3.17** | **1.19** | **−2.67** | **0.007** |
| **Rate 720 pps × PRESTO** | **−2.86** | **1.19** | **−2.40** | **0.016** |
| **Rate 1200 pps × PRESTO** | **−3.07** | **1.19** | **−2.59** | **0.009** |
| **PRESTO × Noise** | **8.57** | **8.59** | **1.86** | **<0.001** |
| PRESTO × Age | 1.95 | 1.17 | 1.67 | 0.09 |
| Rate 500 pps × Age | 1.48 | 1.05 | 1.41 | 0.16 |
| Rate 720 pps × Age | −0.08 | 1.03 | −0.08 | 0.94 |
| Rate 1200 pps × Age | 0.64 | 1.05 | 0.61 | 0.55 |
| **Rate 500 pps × PRESTO × Age** | **2.38** | **1.16** | **2.04** | **0.04** |
| Rate 720 pps × PRESTO × Age | 2.26 | 1.18 | 1.92 | 0.06 |
| Rate 1200 pps × PRESTO × Age | 0.31 | 1.16 | 0.27 | 0.79 |
| Random effects | Variance | SD | | |
| Subject (intercept) | 541.72 | 23.28 | | |
| Subject - Rate: | | | | |
| (−2 = 500 pps) | 19.43 | 4.41 | | |
| (−1 = 720 pps) | 14.09 | 3.75 | | |
| (1 = 1200 pps) | 15.58 | 3.95 | | |
| Subject - Corpus | 24.16 | 4.92 | | |
| Subject - Noise | 107.52 | 10.37 | | |
| Residual | 852.35 | 29.19 | | |

Significant two-way interactions of stimulation rate and PRESTO material were identified for each rate condition (p≤0.016 for all rate interactions), indicating small declines of 2–3 percentage points with non-default rates relative to the default rate of 900 pps for PRESTO sentences only. There was also a significant two-way interaction of sentence corpus and noise condition (p<0.001). While average speech understanding scores for AzBio sentences declined 32 percentage points with the introduction of background noise, scores for PRESTO sentences declined by only 23 percentage points in noise. This interaction is likely the result of a floor effect with PRESTO sentences; average scores for PRESTO sentences were 49% correct in quiet and 26% in noise.
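Because the model codes corpus and noise as 0/1 variables with AzBio and quiet as the reference levels, the PRESTO × Noise coefficient is exactly the difference between the two noise-related declines. A minimal sketch, assuming only the Table 2 fixed effects with rate held at 900 pps and standardized age held at 0 (both illustrative choices), reconstructs the four corpus-by-noise cell predictions:

```python
# Fixed-effect coefficients copied from Table 2 (Cochlear model).
# Reference cell: AzBio, quiet, 900 pps, mean age (standardized age = 0).
INTERCEPT = 71.97
PRESTO = -22.43          # corpus: PRESTO vs AzBio
NOISE = -31.97           # noise vs quiet
PRESTO_X_NOISE = 8.57    # corpus x noise interaction


def predicted_score(presto: int, noise: int) -> float:
    """Predicted % correct at 900 pps and mean age; presto and noise are 0/1 codes."""
    return INTERCEPT + PRESTO * presto + NOISE * noise + PRESTO_X_NOISE * presto * noise


cells = {
    (corpus, condition): round(predicted_score(p, n), 2)
    for p, corpus in enumerate(["AzBio", "PRESTO"])
    for n, condition in enumerate(["quiet", "noise"])
}
azbio_drop = cells[("AzBio", "quiet")] - cells[("AzBio", "noise")]
presto_drop = cells[("PRESTO", "quiet")] - cells[("PRESTO", "noise")]

print(cells)
print(f"AzBio drop in noise: {azbio_drop:.2f}, PRESTO drop in noise: {presto_drop:.2f}")
```

The predicted PRESTO cells (about 49.5% in quiet and 26.1% in noise) are close to the observed PRESTO averages, and the smaller PRESTO decline (about 23.4 versus 32.0 points) is the interaction term itself.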

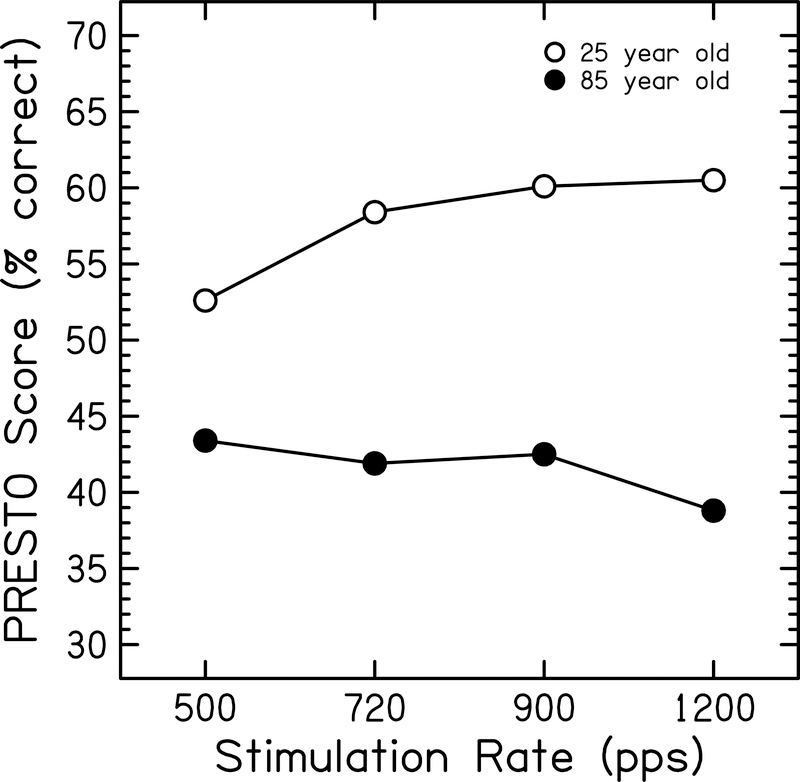

There was a significant three-way interaction of 500 pps × PRESTO × Age (p=0.04). To investigate the nature of this interaction, predicted performance functions for PRESTO sentences in quiet were calculated for one younger (25-year-old) and one older (85-year-old) subject (Figure 4). These ages were chosen to approximate the youngest and oldest subjects who participated in this study. Predicted performance for the younger subject decreases when the rate is reduced from the default rate of 900 pps to the lowest rate of 500 pps, whereas predicted performance for the older subject does not show this decline. Thus, the model predicts that speech understanding scores for a 25-year-old would decrease by 7.5 percentage points when mapped at 500 pps compared to 900 pps in this condition, while scores for an 85-year-old would increase slightly, by 2.3 percentage points.

Figure 4:

Predicted performance functions using PRESTO sentences plotted as a function of stimulation rate for two Cochlear subjects. Open circles represent a 25-year-old subject. Filled circles represent an 85-year-old subject.
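The age-dependent rate effect shown in Figure 4 follows from summing every Table 2 fixed-effect term that applies to PRESTO sentences in quiet. The sketch below is illustrative rather than the authors' code: it uses fixed effects only, and it works in standardized age units because the mean and standard deviation used to standardize age are not reported in this section, so the z values in the loop are hypothetical stand-ins for younger and older listeners.

```python
# Fixed effects from Table 2 that apply to PRESTO sentences in quiet (noise = 0).
INTERCEPT, PRESTO, AGE, PRESTO_X_AGE = 71.97, -22.43, -6.35, 1.95

# Per-rate terms relative to the 900 pps reference:
# (rate main effect, rate x PRESTO, rate x age, rate x PRESTO x age)
RATE_TERMS = {
    500: (0.98, -3.17, 1.48, 2.38),
    720: (1.85, -2.86, -0.08, 2.26),
    900: (0.0, 0.0, 0.0, 0.0),
    1200: (0.99, -3.07, 0.64, 0.31),
}


def predicted_presto_quiet(rate: int, age_z: float) -> float:
    """Predicted % correct for PRESTO in quiet at a given rate and standardized age."""
    r, r_presto, r_age, r_presto_age = RATE_TERMS[rate]
    return (INTERCEPT + PRESTO + (AGE + PRESTO_X_AGE) * age_z
            + r + r_presto + (r_age + r_presto_age) * age_z)


def rate_effect_500(age_z: float) -> float:
    """Predicted change in % correct when moving from 900 pps to 500 pps."""
    return predicted_presto_quiet(500, age_z) - predicted_presto_quiet(900, age_z)


for z in (-1.5, 0.0, 1.5):  # hypothetical standardized ages
    print(z, round(rate_effect_500(z), 2))
```

Under this coding the 500 versus 900 pps difference is linear in standardized age, −2.19 + 3.86 × z, so the predicted 500 pps penalty shrinks with age and reverses above roughly z = 0.57.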

Advanced Bionics/Med-El Subjects (Model 2)

The final LME model for subjects implanted with either Advanced Bionics or Med-El devices is shown in Table 3. There was a significant main effect of sentence corpus (p<0.001), demonstrating an average decrease in speech understanding scores of 27.4 percentage points with PRESTO sentences compared to AzBio sentences. There was also a significant main effect of noise condition (p<0.001), with an average decrease of 32.2 percentage points with the introduction of background noise. The main effect of stimulation rate was significant for the 720 pps condition (p=0.02), indicating an average increase in speech understanding scores of 3.8 percentage points when using a 720 pps map compared to a default-rate map of >1200 pps. A similar benefit was observed for the 900 pps map relative to the faster default, but this rate effect did not reach statistical significance (p=0.055). There was also a significant main effect of age at onset of hearing loss (p=0.038), indicating that with every 1 standard deviation increase in the age at which hearing loss was acquired, there was a 13.8 percentage point improvement in speech understanding scores at the default rate.

Table 3.

Final LME model for the Advanced Bionics and Med-El subjects (N=5). Bolded rows indicate significant fixed effects terms at the 0.05 level.

| Fixed effects | Coefficient | SE | t | p |
|---|---|---|---|---|
| **Intercept** | **73.53** | **5.09** | **14.45** | **<0.001** |
| Rate (0 = >1200 pps reference): | | | | |
| (−4 = 500 pps) | −2.67 | 1.65 | −1.62 | 0.11 |
| **(−3 = 720 pps)** | **3.83** | **1.65** | **2.32** | **0.02** |
| (−2 = 900 pps) | 3.17 | 1.65 | 1.92 | 0.055 |
| (−1 = 1200 pps) | 0.80 | 1.65 | 0.49 | 0.62 |
| **Corpus (0 = AzBio, 1 = PRESTO)** | **−27.42** | **1.42** | **−19.38** | **<0.001** |
| **Noise (0 = Quiet, 1 = Noise)** | **−32.19** | **3.19** | **−10.10** | **<0.001** |
| **Age at HL onset (standardized)** | **13.84** | **4.93** | **2.81** | **0.038** |
| Interactions | | | | |
| **PRESTO × Noise** | **10.74** | **2.17** | **4.94** | **<0.001** |
| Random effects | Variance | SD | | |
| Subject (intercept) | 118.59 | 10.90 | | |
| Subject - Noise | 41.58 | 6.45 | | |
| Residual | 937.96 | 30.63 | | |

The interaction between PRESTO sentences and noise condition was also significant (p<0.001). Speech understanding scores decreased by an average of 32.2 percentage points when listening to AzBio sentences in noise compared to quiet. When listening to PRESTO sentences, scores decreased by only 21.4 percentage points when background noise was introduced. This result is consistent with the PRESTO × noise interaction identified in the Cochlear model and is likely due to a floor effect for PRESTO sentences. Average scores for the PRESTO sentences in this model were 46.1% correct in quiet and 24.7% correct in noise.
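The same cell arithmetic applies to the Advanced Bionics/Med-El model, with one simplification: the final model in Table 3 contains no rate interactions, so each rate shifts all four sentence conditions by the same amount. A minimal sketch, assuming only the Table 3 fixed effects and a mean age at onset (standardized value 0) unless stated otherwise:

```python
# Fixed-effect coefficients copied from Table 3 (Advanced Bionics/Med-El model).
# Reference cell: AzBio, quiet, default (>1200 pps) rate, mean age at onset (z = 0).
INTERCEPT = 73.53
PRESTO = -27.42
NOISE = -32.19
PRESTO_X_NOISE = 10.74
ONSET_AGE = 13.84  # per 1 SD later age at onset of hearing loss
RATE = {"500": -2.67, "720": 3.83, "900": 3.17, "1200": 0.80, "default": 0.0}


def predicted_score(rate: str, presto: int, noise: int, onset_z: float = 0.0) -> float:
    """Predicted % correct; with no rate interactions in the final model,
    each rate adds the same constant to every sentence condition."""
    return (INTERCEPT + RATE[rate] + PRESTO * presto + NOISE * noise
            + PRESTO_X_NOISE * presto * noise + ONSET_AGE * onset_z)


presto_quiet = predicted_score("default", 1, 0)
presto_noise = predicted_score("default", 1, 1)
gain_720 = predicted_score("720", 1, 1) - predicted_score("default", 1, 1)
print(f"PRESTO quiet {presto_quiet:.2f}, PRESTO noise {presto_noise:.2f}, 720 pps gain {gain_720:.2f}")
```

The predicted PRESTO cells (46.11% and 24.66%) match the averages reported above, and the model's PRESTO decline in noise, 32.19 − 10.74 = 21.45 points, corresponds to the reported 21.4-point decline.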

Effect of stimulation rate – individual subject results

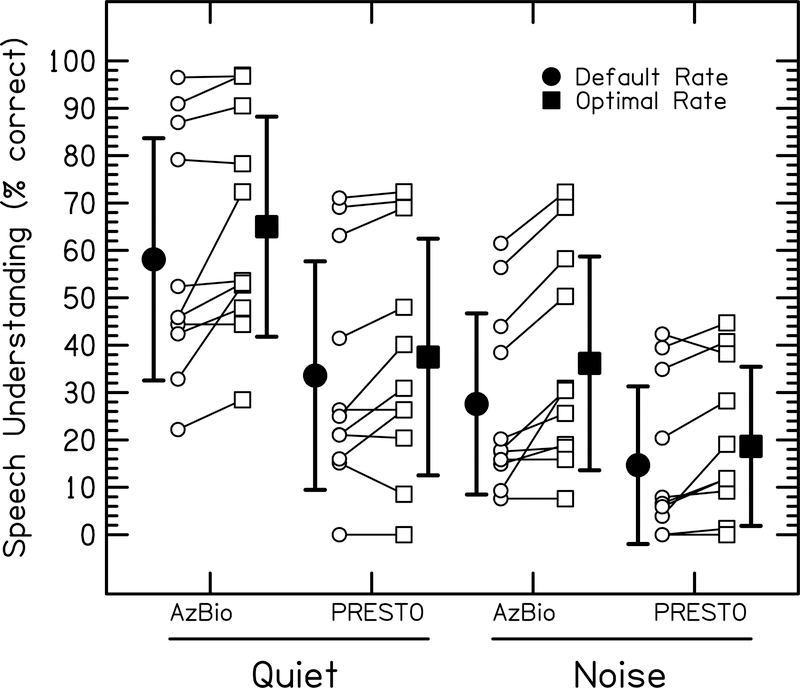

On a group level, the effect of stimulation rate was significant only in the three-way interaction of 500 pps × PRESTO sentences × Age in the Cochlear model and as a main effect of 720 pps in the Advanced Bionics/Med-El model. Although statistically significant, these rate effects were negligible in a clinical sense. However, when speech understanding scores at each rate were plotted for individual subjects, it was clear that some subjects benefited substantially from non-default rates. To identify individual subjects who showed large differences between rates, a difference of 10 percentage points or more between any two stimulation rates was chosen as the criterion for an effect of rate. Two composite scores for each rate were used for this comparison: the average of the AzBio and PRESTO scores in quiet, and the corresponding average in noise. Eleven of the 40 ears tested showed an effect of stimulation rate based on this criterion, and their results are shown in Figure 5. These 11 ears came from 10 subjects, nine of whom were implanted with Cochlear devices, which use a default rate of 900 pps. The stars plotted along the x-axes represent the “optimal” stimulation rate for each subject. Optimal rate was defined as the rate that resulted in the highest speech understanding score when all sentence scores (including scores collected in quiet and in noise) were averaged together for each rate condition. Some subjects performed best with one rate in noise and a different rate in quiet, but in these cases the “best” rate was only marginally better than any other rate. For the individuals identified as showing rate effects (Figure 5), performance with their optimal rate was generally consistent across quiet and noise conditions.
The optimal rate was therefore the rate that produced the best overall speech understanding score for each subject. This approach carries an admitted statistical bias toward finding that a non-default stimulation rate outperforms the default: because several non-default rates were compared against a single default, measurement variability alone makes it likely that one of the non-default rates yields the highest score. Nevertheless, the trends observed within individuals and the magnitude of the potential gains are striking.
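The selection bias can be made concrete with a small simulation. As a deliberately simplified assumption, suppose every rate yields the same true score and measured scores differ only by independent Gaussian noise. Then the highest-scoring rate is a non-default rate with probability (k − 1)/k for k tested rates: 0.75 with four maps and 0.80 with five, roughly matching the four or five maps tested per subject here. The noise SD of 5 points is an arbitrary illustrative value; the (k − 1)/k result does not depend on it.

```python
import random


def prob_best_is_nondefault(n_rates: int, trials: int = 50_000,
                            noise_sd: float = 5.0, seed: int = 1) -> float:
    """Monte Carlo estimate of P(best-scoring rate is not the default).

    Assumes every rate has the same true score, so measured scores differ only by
    independent Gaussian noise (noise_sd is an arbitrary illustrative value).
    Index 0 stands in for the default rate.
    """
    rng = random.Random(seed)
    nondefault_wins = 0
    for _ in range(trials):
        scores = [rng.gauss(0.0, noise_sd) for _ in range(n_rates)]
        best = max(range(n_rates), key=lambda i: scores[i])
        if best != 0:
            nondefault_wins += 1
    return nondefault_wins / trials


for k in (4, 5):
    print(f"{k} rates: simulated {prob_best_is_nondefault(k):.3f}, expected {(k - 1) / k:.3f}")
```

Real rate effects are not zero for every listener, so this is only a baseline showing why a non-default “optimal” rate is expected often even when no rate is truly better.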

Figure 5:

Speech understanding scores for the 11 ears that showed an effect of stimulation rate as determined by a 10 percentage point or greater change in speech understanding scores between any two rates. Open circles represent AzBio sentences in quiet. Filled circles represent AzBio sentences in noise. Open triangles represent PRESTO sentences in quiet. Filled triangles represent PRESTO sentences in noise. Star symbols represent the subject’s optimal stimulation rate. The manufacturer default stimulation rate is 900 pps for all subjects displayed, except for subject CBU who was an Advanced Bionics user whose clinical rate was set to the default rate of 1614 pps per electrode (3228 pps per channel). Note that the y-axes ranges differ across subjects.
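The two numbers given for subject CBU differ only by the convention described in the footnote on Advanced Bionics rates: for a current-steered strategy with two simultaneous pulses per channel, the displayed per-channel rate is twice the per-electrode rate. A trivial sketch of that conversion, written as a hypothetical helper (not part of any manufacturer software):

```python
def per_electrode_rate(displayed_channel_rate_pps: float, pulses_per_channel: int = 2) -> float:
    """Hypothetical helper: convert a displayed per-channel rate to a per-electrode rate.

    Assumes a current-steered strategy in which each channel is presented as
    `pulses_per_channel` simultaneous pulses, as described in the footnote.
    """
    if pulses_per_channel < 1:
        raise ValueError("pulses_per_channel must be at least 1")
    return displayed_channel_rate_pps / pulses_per_channel


print(per_electrode_rate(3228))  # subject CBU's clinical map: 1614.0 pps per electrode
```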

Of the 11 ears that showed an effect of rate, the optimal rate differed from the default rate in 10 ears, and eight of those 10 ears had an optimal rate lower than the default. The average improvement with the optimal rate relative to the manufacturers’ default rate varied between subjects. Some subjects showed minimal improvement once performance was averaged across multiple sentence conditions, while others achieved >15 percentage point improvements in speech understanding scores with the optimal rate compared to the default. Subjects CAO and CBG are two examples of subjects who showed large improvements with optimal rates: CAO gained 14.8 and 18.4 percentage points in quiet and noise conditions, respectively, and CBG gained 20.8 percentage points in quiet when mapped at the optimal rate rather than the default. On average, the 10 subjects that showed an effect of rate improved by approximately 7 percentage points for AzBio in quiet, 4 points for PRESTO in quiet, 9 points for AzBio in noise, and 4 points for PRESTO in noise.
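The per-ear procedure described in this section (the 10-point criterion, the optimal rate as the best average across sentence conditions, and the per-condition gain over the default) can be sketched as follows. The scores are invented for illustration and are not data from this study; reading the criterion as a comparison of quiet and noise composites, and weighting the four conditions equally when choosing the optimal rate, are assumptions based on the description above.

```python
from statistics import mean

CONDITIONS = ("AzBio quiet", "PRESTO quiet", "AzBio noise", "PRESTO noise")

# Hypothetical % correct for one ear (invented for illustration, not study data),
# keyed by stimulation rate in pps; tuple order follows CONDITIONS.
scores = {
    500: (88.0, 60.0, 55.0, 30.0),
    720: (90.0, 62.0, 58.0, 31.0),
    900: (80.0, 52.0, 45.0, 24.0),
    1200: (78.0, 50.0, 44.0, 22.0),
}


def shows_rate_effect(scores: dict, criterion: float = 10.0) -> bool:
    """Assumed reading of the criterion: the quiet composite (mean of AzBio and
    PRESTO in quiet) or the noise composite differs by >= criterion points
    between any two rates, i.e., between the best and worst rate."""
    quiet = [mean(s[0:2]) for s in scores.values()]
    noise = [mean(s[2:4]) for s in scores.values()]
    return max(quiet) - min(quiet) >= criterion or max(noise) - min(noise) >= criterion


def optimal_rate(scores: dict) -> int:
    """Rate with the highest mean across all four sentence conditions."""
    return max(scores, key=lambda rate: mean(scores[rate]))


def gain_over_default(scores: dict, default: int = 900) -> dict:
    """Per-condition change in % correct at the optimal rate relative to the default."""
    best = optimal_rate(scores)
    return {c: scores[best][i] - scores[default][i] for i, c in enumerate(CONDITIONS)}


if shows_rate_effect(scores):
    print(optimal_rate(scores), gain_over_default(scores))
```

Because the optimal rate maximizes the four-condition average, its gain can be small or even negative in an individual condition, which is why per-condition improvements are reported separately.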

Of these 10 subjects who showed an effect of rate, four were mapped clinically at a non-default stimulation rate. Two of these four subjects had an optimal rate that was the same as their clinical rate (CAW-R and CAG), while the other two had an optimal rate that was lower than their clinical rate (CAP and CAK-L). The “clinical rate” variable in the group analysis was not significant, indicating that subjects who were mapped at non-default rates in their everyday processors did not show a different pattern of results from subjects mapped at the default. However, it was unclear whether those subjects had a slight advantage when using their individual non-default clinical rate compared to the other rates, because the clinical rates varied across these subjects. To examine this, scores obtained at the clinical rate for the eight subjects (nine ears) who were mapped at a non-default rate were compared to the average scores obtained at the other rates tested. On average, speech understanding scores obtained at the clinical rate were approximately 1 percentage point lower than those obtained at other rates, both in quiet and in noise. The subjects who had the largest differences in performance with their clinical rates were CAP (6 and 16 percentage points lower with the clinical rate in quiet and noise conditions, respectively) and CAG (2 and 12 percentage points higher with the clinical rate in quiet and noise, respectively). Thus, subjects mapped at non-default rates did not show consistent improvements on average when using their clinical map, with which they had extensive experience.

DISCUSSION

There are few specialized recommendations for programming CIs for older users ≥65 years of age. As demonstrated in Figure 1, the default stimulation rate is most often used to program CIs, which may not maximize some patients’ speech understanding. This experiment demonstrated that the use of lower-than-default stimulation rates may improve speech understanding for some CI users. Additionally, the results on an individual subject level suggest that rate optimization could maximize outcomes for some users.

Tables 2 and 3 display the results of the final LME model for Cochlear users and Advanced Bionics/Med-El users, respectively. The effects of sentence corpus (AzBio vs PRESTO) and noise condition (quiet vs noise) were consistent across both models. The results show a decrease in performance of 22–27 percentage points using the PRESTO sentence corpus compared to AzBio sentences across both models. Additionally, performance drops approximately 32 percentage points on average with the introduction of background noise when listening to AzBio sentences. For PRESTO sentences, performance only drops between 21–23 percentage points in noise. This interaction between noise condition and sentence corpus is likely the result of a floor effect for performance on PRESTO sentences, as the average performance dropped to approximately 25% correct in noise in both models.

Chronological age did not significantly predict speech understanding performance in this group of CI users when the subjects’ data were analyzed in two separate models. Results for the Cochlear model showed that the default rate resulted in the best speech understanding scores on average. However, a three-way interaction between 500 pps × PRESTO × Age suggested that this negative effect of using non-default rates was offset with increasing age, because older subjects were able to obtain comparable scores when using 500 pps compared to the default (Figure 4). The interaction between age and stimulation rate was significant for PRESTO sentences, but not AzBio sentences. This may be a result of ceiling effects for the AzBio sentence conditions, as many subjects achieved scores >90% correct at all stimulation rates for AzBio sentences in quiet. In comparison, PRESTO sentences are more difficult test materials and are not as susceptible to ceiling effects (see Figures 2 and 3).

For the Advanced Bionics/Med-El model, neither the main effect of age nor any interaction involving age was significant, which is not unexpected given that this analysis included only five subjects. However, the effect of age at onset of hearing loss was significant, suggesting that subjects with later onset of hearing loss performed better on the speech understanding tasks used in this study. It is well established that a later age at onset of hearing loss is associated with a better CI prognosis (e.g., Blamey et al. 2013). The main effect of stimulation rate was significant specifically for 720 pps in this model, suggesting that performance would improve overall by approximately 4 percentage points with this lower-than-default rate compared to the default rates above 1200 pps. Although statistically significant, this improvement is not clinically meaningful and does not provide strong support for a departure from current clinical practice. However, it is clear that age is not the only factor in predicting optimal stimulation rate. On an individual level, some subjects showed substantial improvements with their optimal rates compared to the default (Figure 5). More research is needed to identify the factors that may predict an individual’s optimal rate.

Eleven of the 40 ears tested (27.5% of ears) were identified as showing an effect of rate, as determined by a difference of 10 percentage points or more in speech understanding scores between any two stimulation rates. Individual speech understanding results for these 11 ears are shown in Figure 5. The “optimal” rate, defined as the rate that resulted in the best speech understanding scores across all sentence conditions, was identified for each of these 11 ears. For all but one ear, the optimal rate differed from the default rate, and eight of those 10 ears had a lower-than-default optimal rate. The magnitude of the change in speech understanding scores between the default and optimal rate varied across individual subjects (see Figure 6). Most subjects showed modest improvements of <10 percentage points with their optimal rate, while some subjects (e.g., CAO and CBG) showed large improvements of 10–20 percentage points or more. For subjects who do show an effect of stimulation rate, individual rate optimization by their audiologist may result in measurable improvements in speech understanding.

Figure 6:

Comparison of speech understanding scores using the default rate vs the optimal rate in each sentence condition for each of the 11 ears that showed an effect of stimulation rate. Optimal stimulation rate was defined as the rate that results in the highest average speech understanding scores for all sentence conditions. Thus, the optimal rate resulted in better performance overall, but could result in the same or slightly poorer performance for any single sentence condition. Open circles represent individual default-rate scores. Open squares represent individual optimal-rate scores. Filled circles represent group average scores with the default rates. Filled squares represent group average scores with the optimal rates. Error bars represent ±1 standard deviation.

The results for individual subjects are consistent with the large across-subject variability seen in previous studies as it relates to individuals’ sensitivity to changing stimulation rate. Skinner, Holden, et al. (2002) evaluated phoneme, monosyllabic word, and sentence recognition, as well as subjective preference, using multiple stimulation strategies with different rates in newly implanted CI users. When the 12 subjects were provided with the ACE processing strategy, individual rate optimization demonstrated that two subjects preferred 500 pps, five preferred 900 pps, three preferred 1200 pps, and two preferred 1800 pps. However, when given the choice as to which processing strategy subjects preferred for everyday use, three of the 12 subjects (25% of subjects) preferred and performed best with the SPEAK strategy, which is limited to a 250 pps stimulation rate. Holden et al. (2002) compared speech recognition performance for words in quiet and sentences in noise for stimulation rates of 720 and 1800 pps. Two of the eight subjects (25% of subjects) performed significantly better using a rate of 720 pps, while two other subjects performed significantly better using a rate of 1800 pps. These studies underscore the point that although changing stimulation rate may not result in group-level differences in speech recognition (e.g., Loizou et al. 2000), individual subjects may show a significant benefit from a particular stimulation rate.

Future research should consider other potential underlying mechanisms that may limit the ability of an individual’s auditory system to encode electrical signals at high stimulation rates. Advancing age is generally associated with many changes to the auditory system, including a loss of peripheral hearing sensitivity; degenerative processes in response to prolonged hearing loss, such as demyelination and a reduction in the soma area of primary auditory neurons (Elverland and Mair 1980; Shepherd and Hardie 2001; Spoendlin and Schrott 1989); and prolonged refractory properties (Shepherd et al. 2004). Such age-related changes could theoretically reduce older CI users’ ability to encode rapid temporal changes in electrical stimulation (Zhou et al. 1995). Following these degenerative changes, the eventual loss of spiral ganglion neurons with advancing age would leave a limited number of neurons available to encode electrical signals. This “stochastic undersampling” of the temporal envelope would theoretically result in poor envelope (amplitude-modulation) encoding (Lopez-Poveda 2014). However, age is not the only factor, as evidenced by the fact that not all older subjects showed a significant lower-rate advantage. Additionally, the absence of a control group in this experiment did not permit a thorough assessment of this issue. It has also been shown that loudness discrimination is poorer and more variable at higher stimulation rates than at lower rates (Azadpour et al. 2018). Poorer loudness discrimination reduces the number of discriminable intensity steps, which could impair the perception of speech features that rely on intensity information to cue phoneme identity. Genetic factors, which have been shown to influence CI outcomes (Shearer et al. 2017), are another consideration and may also help to stratify which patients would benefit from lower vs. higher stimulation rates.
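The intuition behind stochastic undersampling can be illustrated with a deliberately simplified toy model, in the spirit of that idea rather than the published model of Lopez-Poveda (2014) or a model of electrical stimulation. Assume each surviving neuron fires independently in each time bin with probability proportional to the stimulus envelope, and that the envelope is read out as the pooled spike count across neurons. The 4 Hz envelope, the 1000 bins, and the maximum firing probability of 0.2 are arbitrary illustrative choices.

```python
import math
import random


def envelope(t: float, mod_hz: float = 4.0) -> float:
    """Toy amplitude envelope in [0, 1]; the 4 Hz modulation is an arbitrary choice."""
    return 0.5 * (1.0 + math.sin(2.0 * math.pi * mod_hz * t))


def pearson(x, y) -> float:
    """Pearson correlation between two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def encoding_fidelity(n_neurons: int, p_max: float = 0.2, n_bins: int = 1000,
                      seed: int = 0) -> float:
    """Correlation between the envelope and the pooled spike count when each of
    n_neurons fires independently in each bin with probability p_max * envelope."""
    rng = random.Random(seed)
    env = [envelope(i / n_bins) for i in range(n_bins)]
    pooled = [sum(rng.random() < p_max * e for _ in range(n_neurons)) for e in env]
    return pearson(env, pooled)


for n in (2, 20, 200):
    print(n, round(encoding_fidelity(n), 2))
```

In this toy setting, fewer neurons yield a noisier pooled readout and therefore a lower correlation with the true envelope; it says nothing about which stimulation rate a given listener would prefer.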

One limitation of this study is that speech understanding with each stimulation rate was tested acutely, with only a five-minute listening period beforehand, which did not allow for any acclimatization to the novel rates. However, the relatively large improvements observed for some subjects, even without an acclimatization period, suggest that the benefits of optimal stimulation rates could increase over time. Based on the results of the current study, default stimulation rates are likely sufficient for many patients, but some patients may benefit from stimulation rate optimization during clinical mapping appointments. Future work investigating optimal stimulation rates after more experience with novel rates in everyday life is needed. It is possible that more subjects would have shown optimal performance with a non-default rate if given an extended acclimatization period. Future research should also focus on identifying which approach constitutes the best clinical practice for older CI patients.

ACKNOWLEDGMENTS

We would like to thank Advanced Bionics, Cochlear Ltd., and Med-El for testing equipment and technical support. Leo Litvak from Advanced Bionics provided helpful feedback on a previous version of this report. This work was supported by NIH Grants R01-AG051603 (M.J.G.), R01-AG09191 (S.G.S.), F32-DC016478 (M.J.S.), and T32-DC000046E (M.J.S.). The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health. We would like to thank the University of Maryland’s College of Behavioral & Social Sciences (BSOS) Dean’s Office for their support. We thank Brittany N. Jaekel for help with implementing and interpreting the multilevel analysis. We thank Allison Heuber, Sasha Pletnikova, Casey R. Gaskins, Kelly Miller, Lauren Wilson, and Calli M. Yancey for their help in data collection and analysis. Author contributions: N.N. conceived the idea for the experiment. N.N., D.J.E., and R.H. assisted in recruiting subjects. M.J.S., N.N., S.G.S., and M.J.G. designed the methods. M.C. analyzed the retrospective data on stimulation rates presented in Figure 1. M.J.S. collected and analyzed the data, and drafted the manuscript. M.J.S. prepared the figures. S.A., D.J.E., and R.H. provided input on study design and interpretation. M.J.G. and S.G.S. supervised the project. M.J.S., S.G.S., and M.J.G. interpreted results of the experiments. All authors edited and revised the manuscript, and approved the final version.

This work was supported by NIH Grant R01 AG051603 (M.J.G.), NIH Grant R37 AG09191 (S.G.S.), NIH Grant F32 DC016478 (M.J.S.), NIH Institutional Research Grant T32 DC000046E (S.G.S. - Co-PI with Catherine Carr), and a seed grant from the University of Maryland-College Park College of Behavioral and Social Sciences. We have no other conflicts of interest. Finally, this work met all requirements for ethical research put forth by the IRB of the University of Maryland.

Footnotes

1. The stimulation rate displayed in Advanced Bionics clinical software represents pulses per second per “channel.” For a current-steered strategy in which there are two pulses per channel presented simultaneously, the displayed channel rate is double the stimulation rate per single electrode. The term “stimulation rate” throughout this paper refers to the rate per single electrode.

2. However, the main effect of age was significant when all 37 subjects were included in one analysis, which increased the statistical power for identifying such an effect. Results for the combined model showed that increased age was associated with significant declines in speech understanding overall, suggesting a 0.4 percentage point decrease in performance for every one-year increase in age (p<0.01).

References

- Amichetti NM, Atagi E, Kong Y-Y, et al. (2018). Linguistic context versus semantic competition in word recognition by younger and older adults with cochlear implants. Ear Hear, 39, 101–109. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Anderson S, Parbery-Clark A, White-Schwoch T, et al. (2012). Aging affects neural precision of speech encoding. J Neurosci, 32, 14156–14164. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Azadpour M, McKay CM, Svirsky MA (2018). Effect of pulse rate on loudness discrimination in cochlear implant users. J Assoc Res Otolaryngol, 19, 287–299. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Balkany T, Hodges A, Menapace C, et al. (2007). Nucleus freedom North American clinical trial. Otolaryngol Head Neck Surg, 136, 757–762. [DOI] [PubMed] [Google Scholar]

- Bates D, Maechler M, Bolker B, et al. (2014). lme4: Linear mixed-effects models using Eigen and S4. R package version, 1, 1–23. [Google Scholar]

- Blamey P, Artieres F, Baskent D, et al. (2013). Factors affecting auditory performance of postlinguistically deaf adults using cochlear implants: An update with 2251 patients. Audiol Neurootol, 18, 36–47. [DOI] [PubMed] [Google Scholar]

- Buechner A, Frohne-Buchner C, Gaertner L, et al. (2010). The Advanced Bionics high resolution mode: Stimulation rates up to 5000 pps. Acta Otolaryngol, 130, 114–123. [DOI] [PubMed] [Google Scholar]

- Chatelin V, Kim EJ, Driscoll C, et al. (2004). Cochlear implant outcomes in the elderly. Otol Neurotol, 25, 298–301. [DOI] [PubMed] [Google Scholar]

- Dubno JR, Dirks DD, Morgan DE (1984). Effects of age and mild hearing loss on speech recognition in noise. J Acoust Soc Am, 76, 87–96. [DOI] [PubMed] [Google Scholar]

- Elverland H, Mair I (1980). Hereditary deafness in the cat: An electron microscopic study of the spiral ganglion. Acta Otolaryngol, 90, 360–369. [DOI] [PubMed] [Google Scholar]

- Friedland DR, Runge-Samuelson C, Baig H, et al. (2010). Case-control analysis of cochlear implant performance in elderly patients. Otolaryngol Head Neck Surg, 136, 432–438. [DOI] [PubMed] [Google Scholar]

- Friesen LM, Shannon RV, Cruz RJ (2005). Effects of stimulation rate on speech recognition with cochlear implants. Audiol Neurootol, 10, 169–184. [DOI] [PubMed] [Google Scholar]

- Frijns JHM, Klop WMC, Bonnet RM, et al. (2003). Optimizing the number of electrodes with high-rate stimulation of the Clarion CII cochlear implant. Acta Oto-laryngol, 123, 138–142. [DOI] [PubMed] [Google Scholar]

- Gifford RH, Shallop JK, Peterson AM (2008). Speech recognition materials and ceiling effects: Considerations for cochlear implant programs. Audiol Neurootol, 13, 193–205. [DOI] [PubMed] [Google Scholar]

- Grose JH, Hall JW 3rd, Buss E (2006). Temporal processing deficits in the pre-senescent auditory system. J Acoust Soc Am, 119, 2305–2315. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Haensel J, Ilgner J, Chen Y-S, et al. (2005). Speech perception in elderly patients following cochlear implantation. Acta Oto-laryngol, 125, 1272–1276. [DOI] [PubMed] [Google Scholar]

- Holden LK, Finley CC, Firszt JB, et al. (2013). Factors affecting open-set word recognition in adults with cochlear implants. Ear Hear, 34, 342. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Holden LK, Skinner MW, Holden TA, et al. (2002). Effects of stimulation rate with the Nucleus 24 ACE speech coding strategy. Ear Hear, 23, 463–476. [DOI] [PubMed] [Google Scholar]

- Hox JJ, Moerbeek M, Van de Schoot R (2017). Multilevel analysis: Techniques and applications. Routledge. [Google Scholar]

- Kujawa SG, Liberman MC (2015). Synaptopathy in the noise-exposed and aging cochlea: Primary neural degeneration in acquired sensorineural hearing loss. Hear Res, 330, 191–199. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Labadie RF, Carrasco VN, Gilmer CH, et al. (2000). Cochlear implant performance in senior citizens. Otolaryngol Head Neck Surg, 123, 419–424. [DOI] [PubMed] [Google Scholar]

- Lazard DS, Vincent C, Venail F, et al. (2012). Pre-, per-and postoperative factors affecting performance of postlinguistically deaf adults using cochlear implants: a new conceptual model over time. PLoS One, 7, e48739. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Leake PA, Hradek GT, Snyder RL (1999). Chronic electrical stimulation by a cochlear implant promotes survival of spiral ganglion neurons after neonatal deafness. J Comp Neurol, 412, 543–562. [DOI] [PubMed] [Google Scholar]

- Leung J, Wang NY, Yeagle JD, et al. (2005). Predictive models for cochlear implantation in elderly candidates. Arch Otolaryngol Head Neck Surg, 131, 1049–1054. [DOI] [PubMed] [Google Scholar]

- Lin FR, Thorpe R, Gordon-Salant S, et al. (2011). Hearing loss prevalence and risk factors among older adults in the United States. J Gerontol A Biol Sci Med Sci, 66, 582–590. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Loizou PC, Poroy O, Dorman M (2000). The effect of parametric variations of cochlear implant processors on speech understanding. J Acoust Soc Am, 108, 790–802. [DOI] [PubMed] [Google Scholar]

- Lopez-Poveda EA (2014). Why do I hear but not understand? Stochastic undersampling as a model of degraded neural encoding of speech. Front Neurosci, 8, 348. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Moberly AC, Harris MS, Boyce L, et al. (2017). Speech Recognition in Adults With Cochlear Implants: The Effects of Working Memory, Phonological Sensitivity, and Aging. J Speech Lang Hear Res, 60, 1046–1061. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Noble W, Tyler RS, Dunn CC, et al. (2009). Younger-and older-age adults with unilateral and bilateral cochlear implants: Speech and spatial hearing self-ratings and performance. Otol Neurotol, 30, 921. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Otte J, Schuknecht HF, Kerr AG (1978). Ganglion cell populations in normal and pathological human cochleae. Implications for cochlear implantation. Laryngoscope, 88, 1231–1246. [DOI] [PubMed] [Google Scholar]

- Park E, Shipp DB, Chen JM, et al. (2011). Postlingually deaf adults of all ages derive equal benefits from unilateral multichannel cochlear implant. J Am Acad Audiol, 22, 637–643. [DOI] [PubMed] [Google Scholar]

- Park H, Felty R, Lormore K, et al. (2010). PRESTO: Perceptually robust English sentence test: Open‐set—Design, philosophy, and preliminary findings. J Acoust Soc Am, 127, 1958–1958. [Google Scholar]

- Parthasarathy A, Bartlett EL (2011). Age-related auditory deficits in temporal processing in F-344 rats. Neurosci, 192, 619–630. [DOI] [PubMed] [Google Scholar]

- Pasanisi E, Bacciu A, Vincenti V, et al. (2003). Speech recognition in elderly cochlear implant recipients. Clin Otolaryngol Allied Sci, 28, 154–157. [DOI] [PubMed] [Google Scholar]

- Poissant SF, Beaudoin F, Huang J, et al. (2008). Impact of cochlear implantation on speech understanding, depression, and loneliness in the elderly. Otolaryngol Head Neck Surg, 37, 488–494. [PubMed] [Google Scholar]

- Presacco A, Simon JZ, Anderson S (2016). Evidence of degraded representation of speech in noise, in the aging midbrain and cortex. J Neurophysiol, 116, 2346–2355.

- Roberts DS, Lin HW, Herrmann BS, et al. (2013). Differential cochlear implant outcomes in older adults. Laryngoscope, 123, 1952–1956.

- Rönnberg J, Lunner T, Zekveld A, et al. (2013). The Ease of Language Understanding (ELU) model: Theoretical, empirical, and clinical advances. Front Syst Neurosci, 7, 1–17.

- Schvartz KC, Chatterjee M, Gordon-Salant S (2008). Recognition of spectrally degraded phonemes by younger, middle-aged, and older normal-hearing listeners. J Acoust Soc Am, 124, 3972–3988.

- Sergeyenko Y, Lall K, Liberman MC, et al. (2013). Age-related cochlear synaptopathy: an early-onset contributor to auditory functional decline. J Neurosci, 33, 13686–13694.

- Shearer AE, Eppsteiner RW, Frees K, et al. (2017). Genetic variants in the peripheral auditory system significantly affect adult cochlear implant performance. Hear Res, 348, 138–142.

- Shepherd RK, Hardie NA (2001). Deafness-induced changes in the auditory pathway: implications for cochlear implants. Audiol Neurootol, 6, 305–318.

- Shepherd RK, Roberts LA, Paolini AG (2004). Long-term sensorineural hearing loss induces functional changes in the rat auditory nerve. Eur J Neurosci, 20, 3131–3140.

- Skinner MW, Arndt PL, Staller SJ (2002). Nucleus 24 advanced encoder conversion study: Performance versus preference. Ear Hear, 23, 2S–17S.

- Skinner MW, Holden LK, Whitford LA, et al. (2002). Speech recognition with the Nucleus 24 SPEAK, ACE, and CIS speech coding strategies in newly implanted adults. Ear Hear, 23, 207–223.

- Sladen DP, Zappler A (2015). Older and younger adult cochlear implant users: Speech recognition in quiet and noise, quality of life, and music perception. Am J Audiol, 24, 31–39.

- Snell KB, Frisina DR (2000). Relationships among age-related differences in gap detection and word recognition. J Acoust Soc Am, 107, 1615–1626.

- Spahr AJ, Dorman MF, Litvak LM, et al. (2012). Development and validation of the AzBio sentence lists. Ear Hear, 33, 112–117.

- Spoendlin H, Schrott A (1989). Analysis of the human auditory nerve. Hear Res, 43, 25–38.

- Teng EL, Chui HC (1987). The Modified Mini-Mental State (3MS) examination. J Clin Psychiatry, 48, 314–318.

- Vandali AE, Whitford LA, Plant KL, et al. (2000). Speech perception as a function of electrical stimulation rate: Using the Nucleus 24 cochlear implant system. Ear Hear, 21, 608–624.

- Weber BP, Lai WK, Dillier N, et al. (2007). Performance and preference for ACE stimulation rates obtained with Nucleus RP 8 and Freedom system. Ear Hear, 28, 46S–48S.

- Wolfe J, Schafer E (2014). Programming cochlear implants. Plural Publishing.

- Zhou R, Abbas PJ, Assouline JG (1995). Electrically evoked auditory brainstem response in peripherally myelin-deficient mice. Hear Res, 88, 98–106.