Abstract

Pattern recognition and feature extraction have always been important for improving the performance of image classification and Content-Based Image Retrieval (CBIR). Recently, Machine Learning and Deep Learning algorithms have been widely used to achieve these goals. In this research, an efficient image description method based on Machine Learning and Deep Learning algorithms is proposed. The method combines an improved AlexNet Convolutional Neural Network (CNN) with the Histogram of Oriented Gradients (HOG) and Local Binary Pattern (LBP) descriptors, and uses Principal Component Analysis (PCA) for dimension reduction. Experiments on the Corel-1000, OT and FP datasets show that the proposed method outperforms existing methods, improving accuracy and mean Average Precision (mAP) while reducing computational complexity.

Keywords: Pattern recognition, Image descriptor, Machine learning, Convolutional neural network (CNN), Image classification, Content-based image retrieval (CBIR)

1. Introduction

In recent years, because of the widespread use of the Internet and the massive use of audio-visual information in digital format for communication, designing systems that describe the content of multimedia information so that it can be searched and classified has become very important. In computer vision, image descriptors describe elementary visual features of images such as shape, color, texture or motion [1,2].

Designing new image descriptors has been an active research area and helps improve the performance of many computer vision tasks. Several descriptors, such as HOG, LBP, SURF and SIFT, have been proposed so far [[3], [4], [5], [6], [7]]. These descriptors are commonly used in Machine Learning for pattern recognition and feature extraction [[8], [9], [10], [11]]. Each of them has disadvantages, such as a large feature vector or the consideration of only certain features such as texture. To overcome these problems, an efficient image descriptor is presented in this research. It combines HOG, LBP and an improved AlexNet CNN, and applies PCA for dimension reduction. When used for image classification and CBIR, the proposed descriptor offers the following benefits:

- Higher accuracy and mAP compared with other works;

- Overcoming the problem of high-dimensional descriptors such as HOG;

- Sensitivity to intra-class as well as inter-class variety;

- High performance on imbalanced databases.

The rest of this paper is organized as follows.

Related work is reviewed in Section 2. The proposed method is described in Section 3. Section 4 reports the experimental results and compares them with existing results. Finally, Section 5 presents the conclusion and future work.

2. Related works

In recent years, image descriptors have been used for many tasks such as image classification and CBIR [12,13]. Some researchers have combined descriptors to propose new ones that increase efficiency or suit special cases [14]. Some of these works are described as follows:

[15] proposed a descriptor combining HOG and LBP. Their results showed that it is an efficient descriptor for object detection, but its main problem is the unequal dimensions of its components, so the combined descriptor is dominated by HOG. For example, for an input image of 227 × 227 × 3, the HOG feature vector has dimension 1 × 26244, whereas the LBP feature vector has dimension 1 × 59, so the combined descriptor is affected much more by the larger descriptor. The larger this difference becomes, the more the smaller descriptor loses its effect [16,17].
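The two vector lengths quoted above can be reproduced with a short calculation. The Python sketch below assumes MATLAB-style default parameters (8 × 8-pixel cells, 2 × 2-cell blocks overlapping by one cell and 9 orientation bins for HOG; a single 8-neighbour uniform LBP histogram over the whole image). The text does not state these settings, and the function names are hypothetical.

```python
def hog_length(height, width, cell=8, block=2, overlap=1, bins=9):
    """HOG vector length, assuming square cells of `cell` pixels, blocks of
    `block` x `block` cells overlapping by `overlap` cells, and `bins`
    orientation bins per cell (MATLAB-style defaults, assumed)."""
    cells_y, cells_x = height // cell, width // cell
    step = block - overlap                      # block stride, in cells
    blocks_y = (cells_y - block) // step + 1
    blocks_x = (cells_x - block) // step + 1
    return blocks_y * blocks_x * block * block * bins


def uniform_lbp_bins(neighbors=8):
    """Length of a uniform LBP histogram: neighbors*(neighbors-1) patterns
    with two 0/1 transitions, 2 patterns with none, and 1 shared bin for
    all non-uniform patterns."""
    return neighbors * (neighbors - 1) + 3


hog = hog_length(227, 227)
lbp = uniform_lbp_bins(8)
print(hog, lbp)                         # 26244 59
print(round(hog / (hog + lbp), 3))      # 0.998
```

Under these assumptions HOG supplies about 99.8% of the entries of a plain HOG-LBP concatenation, which is the imbalance described above.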

Similar to [15], [18] combined the HOG and LBP feature vectors (1 × 181,535) and reduced them to 1 × 2824 using variance and an improved PSO algorithm, in which the LBP descriptor has more influence. In our proposed method, the problems mentioned above are addressed by reducing the dimension with the PCA algorithm.

Convolutional Neural Networks can be used for feature extraction, and the extracted features can be used for tasks such as classification, retrieval or detection. [19] proposed a method that fuses the features extracted by two networks for face detection. Some works combine Convolutional Neural Networks with descriptors to create a new image descriptor. For instance, [20] extracted image features with the HOG and SURF descriptors, passed the features of each descriptor to convolutional layers, and obtained feature vectors of dimension 1 × 2016 and 1 × 1024. These features were then combined, their dimension was reduced by Fully Connected (FC) layers, and the result was used for classification. As in [15,18], because the HOG and SURF feature vectors have unequal dimensions, their impacts on the classification outcome differ.

Considering the weaknesses of the existing methods mentioned above, this study proposes a new descriptor that overcomes these problems.

3. Proposed method

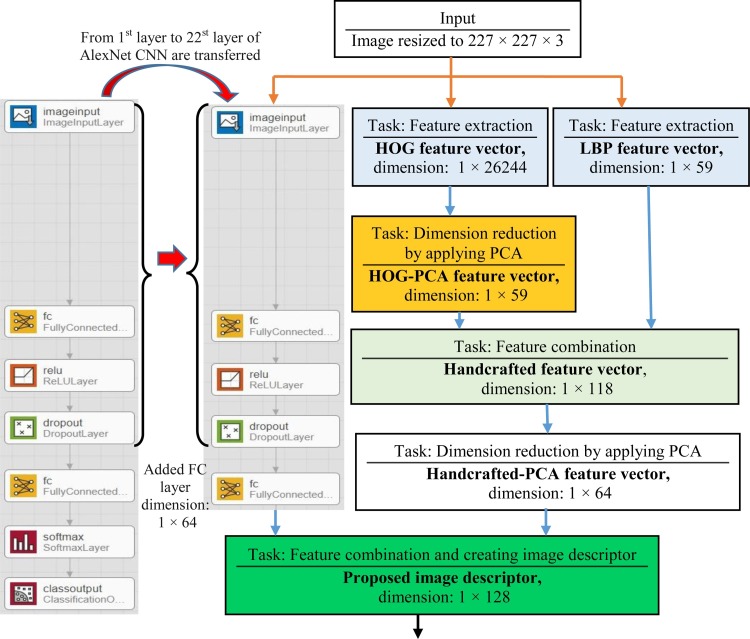

Fig. 1 shows the schematic of the proposed method. In the first step, the images are read and resized to 227 × 227 × 3. Each image is then sent simultaneously to the deep feature extractor (an improved AlexNet CNN) and to the handcrafted descriptors, HOG and LBP. On one side, the improved AlexNet CNN processes the image, recognizes its patterns and produces a feature vector of dimension 1 × 64 [21]. On the other side, the HOG and LBP descriptors extract handcrafted features. The PCA algorithm is then used to reduce the dimension of the features produced by the HOG descriptor.

Fig. 1.

Proposed method.

To give the HOG and LBP descriptors equal effects on the final descriptor, the dimension of the HOG descriptor is reduced to 1 × 59 by the PCA algorithm. The HOG-PCA and LBP feature vectors are then concatenated into a new handcrafted feature vector of dimension 1 × 118. To match the dimension of the handcrafted feature vector with that of the deep feature vector, PCA is applied to the handcrafted feature vector and its first 64 principal components are retained, giving a Handcrafted-PCA feature vector of dimension 1 × 64. Finally, the deep feature vector and the Handcrafted-PCA feature vector are concatenated into an efficient image descriptor of dimension 1 × 128.
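The dimension bookkeeping of this fusion step can be illustrated with a minimal NumPy sketch. It uses random placeholder matrices in place of the real HOG, LBP and improved-AlexNet features and a plain SVD-based PCA; the helper name `pca_fit_transform` and the sample count are hypothetical, and no feature scaling is applied because the text does not specify any.

```python
import numpy as np


def pca_fit_transform(X, k):
    """Centre X and project its rows onto the first k principal components."""
    Xc = X - X.mean(axis=0)                      # centre each feature column
    # Rows of Vt are the principal directions, ordered by singular value.
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    return Xc @ Vt[:k].T                         # shape (n_samples, k)


rng = np.random.default_rng(0)
n = 100                                          # hypothetical number of images
hog = rng.random((n, 26244))                     # placeholder HOG vectors
lbp = rng.random((n, 59))                        # placeholder LBP histograms
deep = rng.random((n, 64))                       # placeholder improved-AlexNet features

hog_pca = pca_fit_transform(hog, 59)                   # 26244 -> 59
handcrafted = np.hstack([hog_pca, lbp])                # 59 + 59 = 118
handcrafted_pca = pca_fit_transform(handcrafted, 64)   # 118 -> 64
descriptor = np.hstack([deep, handcrafted_pca])        # 64 + 64 = 128
print(descriptor.shape)                                # (100, 128)
```

In a cross-validation setting the PCA projections would normally be fitted on the training fold only and then applied to the test fold; the sketch fits on all rows for brevity. PCA also yields at most as many informative components as there are training images, so the reduction to 59 components needs more training images than that.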

The AlexNet CNN, HOG, LBP and PCA were chosen for the following reasons:

1) Reducing the feature dimension, here with the PCA algorithm, lowers the computational load and training time and simplifies the models [22].

2) The LBP descriptor is a strong feature for texture classification, and combining LBP with the HOG descriptor considerably improves detection performance on some datasets [15,23].

3) The HOG descriptor is computed on a dense grid of uniformly spaced cells and uses overlapping local contrast normalization to improve accuracy [62]. Because it operates on local cells, it is invariant to geometric and photometric transformations, except for object orientation [4,24].

4) The improved AlexNet CNN learns important, high-level features automatically, without human supervision [21].

4. Simulation and comparison results

The proposed method was implemented in MATLAB R2018b on a computer with 6 GB of RAM, an NVIDIA GeForce 920M graphics processing unit (GPU) and an Intel® Core™ i5-7200U @ 2.50 GHz central processing unit (CPU).

To evaluate the proposed method for classification and CBIR, the accuracy, mean average precision (mAP) and recall were measured [[25], [26], [27], [28]], and 3-fold cross-validation was applied in all experiments (Westerhuis et al 2008). In addition, the Adam algorithm was used to train the AlexNet CNN [29], and Random forest, SVM and KNN classifiers were used for classification [[30], [31], [32], [33], [34], [35], [36]]. Euclidean distance was used to measure similarity [37,38]. The datasets, experiments and comparison results are described in detail below.
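The 3-fold protocol can be sketched as follows. The text does not say whether the folds were stratified; this NumPy sketch assumes they were, which keeps class proportions similar across folds on imbalanced sets such as OT and FP. `stratified_kfold`, `cross_validate` and the `fit_and_score` callback are hypothetical names, and `np.std` computes the population standard deviation, which may differ from the statistic used in the tables.

```python
import numpy as np


def stratified_kfold(labels, k=3, seed=0):
    """Split sample indices into k folds, dealing each class out round-robin
    so every fold keeps roughly the class proportions of the full dataset."""
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    folds = [[] for _ in range(k)]
    for cls in np.unique(labels):
        idx = rng.permutation(np.flatnonzero(labels == cls))
        for j, i in enumerate(idx):
            folds[j % k].append(int(i))
    return [np.array(sorted(f)) for f in folds]


def cross_validate(labels, fit_and_score, k=3):
    """Run k-fold CV and return (mean, std) of the per-fold scores.
    fit_and_score(train_idx, test_idx) trains a model and returns accuracy."""
    folds = stratified_kfold(labels, k)
    scores = []
    for j in range(k):
        test_idx = folds[j]
        train_idx = np.concatenate([folds[i] for i in range(k) if i != j])
        scores.append(fit_and_score(train_idx, test_idx))
    return float(np.mean(scores)), float(np.std(scores))
```

With the FP class sizes (128, 64, 66, 85 and 37 images), this split produces folds of 129, 126 and 125 images.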

4.1. Used datasets

In this study, the Corel-1000 (Wang), OT and FP datasets were used to evaluate the proposed method. Table 1 lists the number of categories and the total number of images in each dataset. The datasets are described in detail below:

Table 1.

Number of categories and images in each dataset.

| Dataset | Number of categories | Number of images |
|---|---|---|
| Corel-1000 | 10 | 1000 |
| OT | 8 | 2688 |
| FP (Caltech-101) | 5 | 380 |

4.1.1. Corel-1000 dataset

The Corel-1000 dataset contains 10 categories: Africa, Beaches, Building, Bus, Dinosaur, Elephant, Flower, Horses, Mountain and Food. Each category includes 100 images of size 256 × 384 or 384 × 256 pixels [39].

4.1.2. Oliva and Torralba (OT) dataset

The OT dataset includes 8 categories and 2688 images: 260 highway, 292 street, 360 coast, 328 forest, 308 inside-city, 374 mountain, 410 open-country and 356 tall-building images, where the forest category covers all forest and river scenes. Because almost all images contain sky, there is no specific sky scene. Most scenes show large inter-class variability; these annotations produce even higher inter-class variability in addition to large intra-class variability. In addition, this dataset is imbalanced [40].

4.1.3. FP (Caltech-101) dataset

The FP dataset consists of 5 categories of the Caltech-101 dataset, Bonsai, Joshua tree, Lotus, Sunflower and Water Lilly, which contain 128, 64, 66, 85 and 37 images, respectively. Like OT, it is therefore an imbalanced dataset [[41], [42], [43]].

4.2. Simulations and evaluation of proposed method for image classification

In this section, the proposed method is evaluated on the Corel-1000, OT and FP databases and compared with AlexNet CNN for image classification [61]. For a fair comparison, all experiments below were performed under the same conditions.

4.2.1. Simulation and evaluation of proposed method on Corel-1000 dataset for image classification

Table 2 shows the results of AlexNet CNN on the Corel-1000 dataset for image classification. AlexNet CNN reaches 97.80% accuracy on training data and 90.10% on test data, with standard deviations across the three folds of 0.46 and 1.80, respectively. Table 3 shows that the proposed method achieves higher accuracy than AlexNet CNN in both the training and test phases. Random forest, SVM and KNN classifiers were evaluated with the proposed descriptor; the Random forest classifier gives the highest test accuracy (96%), about 6 percentage points more than AlexNet CNN.

Table 2.

Evaluation of AlexNet CNN on Corel-1000 dataset for Image Classification.

| Method | Train accuracy (%) ± standard deviation | Test accuracy (%) ± standard deviation |
|---|---|---|
| AlexNet CNN | 97.80 ± 0.46 | 90.10 ± 1.80 |

Table 3.

Evaluation of proposed method on Corel-1000 dataset for Image Classification.

| Method | Train, Random forest | Train, SVM | Train, KNN | Test, Random forest | Test, SVM | Test, KNN |
|---|---|---|---|---|---|---|
| Proposed method | 100 | 100 | 100 | 96 ± 0.62 | 94.70 ± 1.08 | 95.20 ± 1.04 |

Values are accuracy (%) ± standard deviation.

4.2.2. Simulation and evaluation of proposed method on OT dataset for image classification

Table 4 shows the results of AlexNet CNN on the OT dataset for image classification. AlexNet CNN reaches 89.17% accuracy on test data, with a standard deviation across the three folds of 0.48. Table 5 shows that the proposed method reaches 93.86% accuracy on test data, about 4.7 percentage points more than AlexNet CNN.

Table 4.

Evaluation of AlexNet CNN on the OT dataset for image classification.

| Method | Train accuracy (%) ± standard deviation | Test accuracy (%) ± standard deviation |
|---|---|---|
| AlexNet CNN | 95.67 ± 0.61 | 89.17 ± 0.48 |

Table 5.

Evaluation of the proposed method on the OT dataset for image classification.

| Method | Train, Random forest | Train, SVM | Train, KNN | Test, Random forest | Test, SVM | Test, KNN |
|---|---|---|---|---|---|---|
| Proposed method | 100 | 99.67 ± 0.03 | 100 | 93.86 ± 0.17 | 93.86 ± 0.21 | 93.19 ± 0.09 |

Values are accuracy (%) ± standard deviation.

4.2.3. Simulation and evaluation of proposed method on FP dataset for image classification

Table 6 shows that AlexNet CNN reaches 88.95% accuracy on the FP test data. Table 7 shows the results of the proposed method: its test accuracy is 89.74%, higher than that of AlexNet CNN. Across all three datasets, the Random forest classifier gives the highest test accuracy (tied with SVM on OT and with KNN on FP).

Table 6.

Evaluation of AlexNet CNN on the FP dataset for image classification.

| Method | Train accuracy (%) ± standard deviation | Test accuracy (%) ± standard deviation |
|---|---|---|
| AlexNet CNN | 98.16 ± 0.88 | 88.95 ± 1.55 |

Table 7.

Evaluation of the proposed method on the FP dataset for image classification.

| Method | Train, Random forest | Train, SVM | Train, KNN | Test, Random forest | Test, SVM | Test, KNN |
|---|---|---|---|---|---|---|
| Proposed method | 100 | 99.87 ± 0.10 | 100 | 89.74 ± 0.88 | 88.16 ± 0.82 | 89.74 ± 0.52 |

Values are accuracy (%) ± standard deviation.

4.3. Simulations and evaluation of proposed method for Content-Based Image Retrieval (CBIR)

In this section, the proposed method is evaluated on the Corel-1000, OT and FP databases and compared with AlexNet CNN for CBIR. All experiments below were performed under the same conditions.

4.3.1. Simulation and evaluation of proposed method on Corel-1000 dataset for CBIR

Table 8 shows the results of AlexNet CNN and the proposed method on the Corel-1000 dataset for CBIR. The proposed method has a higher mAP than AlexNet CNN for top-5, top-10 and all-relevant-images retrieval, and therefore performs better than this baseline. In addition, the proposed feature vector has dimension 1 × 128, whereas the AlexNet CNN feature vector used for CBIR has dimension 1 × 4096 [44].
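Retrieval with Euclidean distance and the top-k scores can be sketched as below. The text does not define its mAP precisely; this NumPy sketch assumes the top-k values are precision@k averaged over all queries and that, for "all relevant images", k equals the number of database images in the query's class. Queries are assumed to be held out of the database (for example, test-fold images queried against the training folds), and all function names are hypothetical.

```python
import numpy as np


def retrieve(query, database, k):
    """Indices of the k database vectors closest to `query` (Euclidean)."""
    dists = np.linalg.norm(database - query, axis=1)
    return np.argsort(dists, kind="stable")[:k]


def precision_recall_at_k(query, query_label, database, db_labels, k):
    """Precision@k and recall@k for a single query."""
    top = retrieve(query, database, k)
    hits = int(np.sum(db_labels[top] == query_label))
    relevant = int(np.sum(db_labels == query_label))
    return hits / k, hits / relevant


def mean_precision_recall(queries, q_labels, database, db_labels, k):
    """Precision@k and recall@k averaged over all queries."""
    pr = [precision_recall_at_k(q, l, database, db_labels, k)
          for q, l in zip(queries, q_labels)]
    p, r = zip(*pr)
    return float(np.mean(p)), float(np.mean(r))
```

Because recall@k is bounded by k divided by the number of relevant images, top-5 and top-10 recall stay small on large classes even when precision is high, consistent with the mean recall values reported in Section 4.4.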

Table 8.

Evaluation of the proposed method and comparison with AlexNet CNN on the Corel-1000 dataset for CBIR.

| Method | Top-5 mAP (%) | Top-10 mAP (%) | All relevant images mAP (%) |
|---|---|---|---|
| AlexNet CNN | 93.14 | 91.87 | 75.48 |
| Proposed method | 96.02 ± 1.94 | 95.80 ± 1.82 | 91.65 ± 1.22 |

Values are mAP (%) ± standard deviation where reported.

4.3.2. Simulation and evaluation of proposed method on OT dataset for CBIR

According to Table 9, the proposed method is more efficient than AlexNet CNN on the OT dataset for CBIR and achieves a higher mAP.

Table 9.

Evaluation of the proposed method on the OT dataset for CBIR.

| Method | Top-5 mAP (%) | Top-10 mAP (%) | All relevant images mAP (%) |
|---|---|---|---|
| AlexNet CNN | 93 ± 0.48 | 92.30 ± 0.65 | 71.26 ± 1.58 |
| Proposed method | 94.22 ± 0.36 | 93.91 ± 0.37 | 85.64 ± 0.55 |

Values are mAP (%) ± standard deviation.

4.3.3. Simulation and evaluation of proposed method on FP dataset for CBIR

As Table 10 shows, the proposed method has a higher mAP than AlexNet CNN on the FP dataset for top-5, top-10 and all-relevant-images retrieval, which indicates better performance.

Table 10.

Evaluation of the proposed method on the FP dataset for CBIR.

| Method | Top-5 mAP (%) | Top-10 mAP (%) | All relevant images mAP (%) |
|---|---|---|---|
| AlexNet CNN | 83.78 ± 2.34 | 81.80 ± 2.22 | 71.23 ± 2.36 |
| Proposed method | 87.58 ± 1.44 | 86.86 ± 1.20 | 83 ± 0.50 |

Values are mAP (%) ± standard deviation.

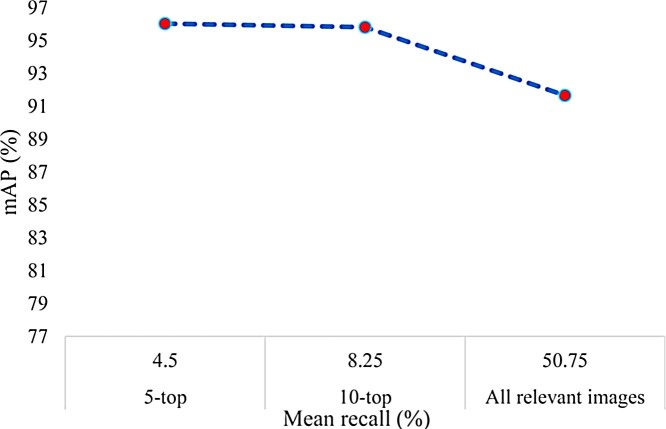

4.4. The proposed method’s mAP-Mean recall plots

This section presents the mAP-mean recall plots of the proposed method on the Corel-1000, OT and FP databases for CBIR. Plot 1 shows its performance on the Corel-1000 dataset: the mAP and mean recall are 96.02% and 4.5% for top-5 retrieval, 95.80% and 8.25% for top-10 retrieval, and 91.65% and 50.75% for all relevant images. These results indicate that:

- The proposed method retrieves many relevant images with high precision.

Plot 1.

The mAP-Mean recall plot of the proposed method for Corel-1000 dataset.

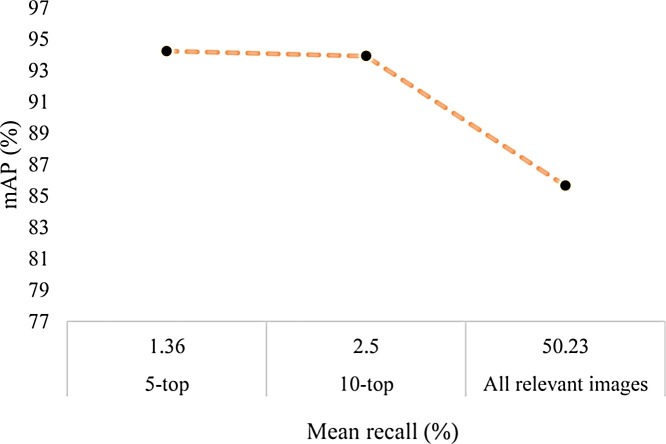

Plot 2 shows the performance of the proposed method on the OT dataset: the mAP and mean recall are 94.22% and 1.36% for top-5 retrieval, 93.91% and 2.5% for top-10 retrieval, and 85.64% and 50.23% for all relevant images. Hence, on this dataset the proposed technique also retrieves many relevant images.

Plot 2.

The mAP-Mean recall plot of the proposed method for OT dataset.

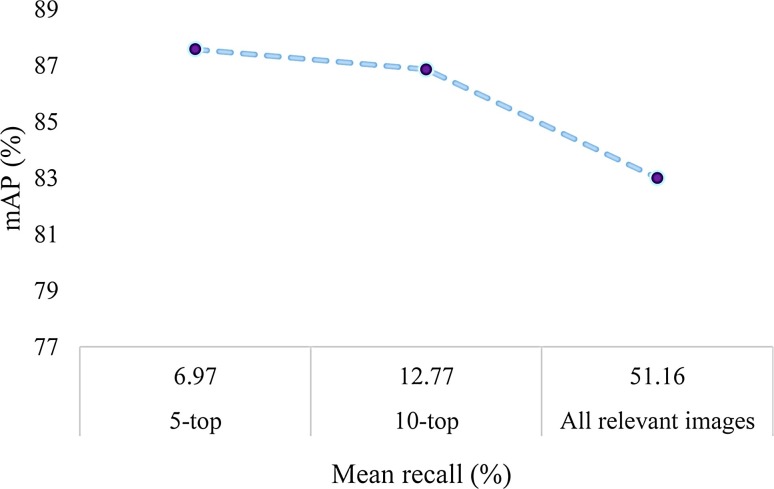

Plot 3 shows the performance of the proposed method on the FP dataset: the mAP and mean recall are 87.58% and 6.97% for top-5 retrieval, 86.86% and 12.77% for top-10 retrieval, and 83% and 51.16% for all relevant images. The method is therefore successful in retrieving relevant images.

Plot 3.

The mAP-Mean recall plot of the proposed method for FP dataset.

4.5. Visual representation of proposed method's retrieval performance

This section gives a visual representation of the CBIR performance of the proposed technique. For each category of each dataset, a query image is selected at random, content-based image retrieval is performed, and the top-5 retrieved images are shown.

4.5.1. Visual representation of proposed method's retrieval for Corel-1000 dataset

Table 11 shows the visual results of the proposed method for CBIR on the Corel-1000 dataset. For each category, one query image is picked at random, and after similarity measurement the 5 images most similar to the query are retrieved and shown.

Table 11.

The visual results of proposed method for CBIR on Corel-1000 dataset.


According to Table 11, in most cases the images most similar to the query are retrieved and ranked first, which is what is expected from a good CBIR system. For example, in the Africa category the query image shows an African native, and the retrieved images show African natives that closely resemble it. In the Beaches category, the retrieved images contain beach, sea, sky and people, like the query image. In other categories such as Dinosaur, the retrieved dinosaurs are the most similar to the query image and most of them belong to the same species. This suggests that:

- The proposed method is sensitive to inter-class as well as intra-class variety and can find and retrieve the most similar images despite the diversity within a class [45,46];

- As the Horses and Flower categories show, the proposed method takes into account color and shape features in addition to texture features [[47], [48], [49]].

4.5.2. Visual representation of the proposed method's retrieval for OT dataset

Table 12 shows the visual results of the proposed method for CBIR on the OT dataset. According to this table:

- Although this dataset is imbalanced, which can cause problems, the proposed method is able to retrieve similar images [50,51].

Table 12.

The visual results of proposed method for CBIR on OT dataset.


4.5.3. Visual representation of proposed method's retrieval for FP dataset

Table 13 shows the visual results of the proposed method for CBIR on the FP dataset. Although some categories of this dataset contain images with similar content (such as Lotus and Water Lilly), the proposed method retrieves relevant images well [43,52].

Table 13.

The visual results of proposed method for CBIR on FP dataset.


4.6. Comparison results

Table 14 compares the classification results of the proposed method with other available methods. The proposed method achieves higher accuracy than the other methods on all three datasets, so the new image descriptor is effective for image classification.

Table 14.

Comparison of the proposed method with other methods for image classification.

| Dataset | Method | Accuracy (%) | Training rate %, Test rate % |
|---|---|---|---|
| Corel-1000 | Fuzzy Topological [53] | 62.20 | 33, 67 |
| | Color Histogram + Fuzzy Neural Network [54] | 73.40 | – |
| | Proposed method | 96 | 3-fold cross-validation |
| OT | Fusion features [55] | 63.89 | – |
| | Co-occurrence matrix + Bayesian classifier [43] | 89 | 10-fold cross-validation |
| | Hybrid generative + Dense SIFT [56] | 91.08 | 50, 50 |
| | Proposed method | 93.86 | 3-fold cross-validation |
| FP | Co-occurrence matrix + KNN [43] | 87.20 | 10-fold cross-validation |
| | Proposed method | 89.74 | 3-fold cross-validation |

Table 15 compares the retrieval results of the proposed method with other methods. The proposed method also performs well in CBIR: it achieves a higher mean average precision (mAP) than the other methods, while its feature dimension (1 × 128) is the same as that of [[65], [66], [67]].

Table 15.

Comparison of the proposed method with other methods for CBIR.

| Dataset | Method | mAP (%) | Training rate %, Test rate % | Feature dimension |
|---|---|---|---|---|
| Corel-1000 | CCM + DBPSP [63] | 76.10 | 90, 10 | 1 × 21 |
| | Block Truncation Coding [57] | 77.90 | – | 1 × 96 |
| | HOG + SURF [64] | 80.61 | 70, 30 | – |
| | Dense SIFT [65] | 84.20 | 50, 50 | 1 × 128 |
| | SURF + FREAK [66] | 86 | 70, 30 | 1 × 128 |
| | SURF + MSER [67] | 88 | 70, 30 | 1 × 128 |
| | Fusion features [55] | 83.50 | – | – |
| | AlexNet CNN [44] | 93.80 | – | 1 × 4096 |
| | Proposed method | 95.80 | 3-fold cross-validation | 1 × 128 |
| OT | Co-occurrence matrix [43] | 76.39 | 10-fold cross-validation | 1 × 9 |
| | Color moment + Angular Radial Transform + Edge histogram [58] | 50.59 | 85, 15 | – |
| | Relevance Feedback [59] | 79 | – | – |
| | Proposed method | 93.91 | 3-fold cross-validation | 1 × 128 |
| FP | Co-occurrence matrix [43] | 78.83 | 10-fold cross-validation | 1 × 9 |
| | Proposed method | 86.86 | 3-fold cross-validation | 1 × 128 |

The feature vector of each image in the proposed method has dimension 1 × 128. Assuming each element is stored in a single byte, each vector requires only 128 bytes of memory, and larger feature vectors require proportionally more. For example, using the FC7 features in [44] requires 4096 bytes of memory per image for similarity measurement. The proposed descriptor is therefore more memory-efficient than these studies [18,60].
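The same comparison can be scaled to a whole image database with a short calculation. The sketch keeps the one-byte-per-element assumption stated above and uses the 1000 images of Corel-1000 for illustration; the function name is hypothetical.

```python
def index_size_bytes(num_images, dim, bytes_per_element=1):
    """Bytes needed to store one dim-length feature vector per image."""
    return num_images * dim * bytes_per_element


# One byte per element, as assumed in the text, for a 1000-image database.
proposed = index_size_bytes(1000, 128)      # 128,000 bytes
fc7 = index_size_bytes(1000, 4096)          # 4,096,000 bytes
print(fc7 // proposed)                      # 32
# With 4-byte float32 elements instead, every figure grows fourfold.
print(index_size_bytes(1000, 128, 4))       # 512000
```

Whatever the element size, the ratio stays 4096 / 128 = 32, and the cost of each Euclidean distance computation grows with the vector length in the same proportion.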

5. Conclusion and future works

In this research, an efficient image description method has been proposed. The experimental results and the comparison with existing works indicate that the proposed method is an efficient way of describing images and is therefore suitable for image classification and CBIR.

One merit of the proposed method is its sensitivity to intra-class and inter-class variety when used for CBIR; in other words, the most similar related images are retrieved first. Another advantage is its high performance on imbalanced databases. In addition, the proposed method reduces the high dimension of descriptors such as HOG using PCA while increasing accuracy and mAP.

Some current research focuses on the design and development of Computer-Aided Diagnosis (CAD) systems, in which data descriptors play a vital role. Given the COVID-19 pandemic and its dangers, a descriptor that can extract key features from patients' medical images (such as CT scans) could be used to design an efficient CAD system for early diagnosis of the disease. Extending the proposed method to CAD systems is therefore a target for future research. Furthermore, replacing AlexNet CNN with newer, more powerful convolutional neural networks such as EfficientNet and MobileNetV3 may be valuable in future studies. The authors are continuing their studies in this direction.

Declaration of Competing Interest

No conflict of interest.

Contributor Information

Ashkan Shakarami, Email: ashkan.shakarami.ai@gmail.com.

Hadis Tarrah, Email: hs.tarrah.88@gmail.com.

References

- 1.Kumar R.M., Sreekumar K. A survey on image feature descriptors. Int J Comput Sci Inf Technol. 2014;5:7668–7673. [Google Scholar]

- 2.Nair A.S., Jacob R. 2017. A Survey on Feature Descriptors for Texture Image Classification. [Google Scholar]

- 3.Lowe D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004;60(2):91–110. [Google Scholar]

- 4.Dalal N., Triggs B. Histograms of oriented gradients for human detection. IEEE; 2005. pp. 886–893. (June). [Google Scholar]

- 5.Ahonen T., Hadid A., Pietikainen M. Face description with local binary patterns: application to face recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2006;28(12):2037–2041. doi: 10.1109/TPAMI.2006.244. [DOI] [PubMed] [Google Scholar]

- 6.Bay H., Tuytelaars T., Van Gool L. Springer; Berlin, Heidelberg: 2006. Surf: Speeded up Robust Features. In European Conference on Computer Vision; pp. 404–417. (May) [Google Scholar]

- 7.Veerashetty S., Patil N.B. Novel LBP based texture descriptor for rotation, illumination and scale invariance for image texture analysis and classification using multi-kernel SVM. Multimed. Tools Appl. 2019:1–21. [Google Scholar]

- 8.Dhar P. A new flower classification system using LBP and SURF features. Int. J. Image Graph. Signal Process. 2019;11(5):13. [Google Scholar]

- 9.Gonzalez-Arias C., Viáfara C.C., Coronado J.J., Martinez F. Automatic classification of severe and mild wear in worn surface images using histograms of oriented gradients as descriptor. Wear. 2019;426:1702–1711. [Google Scholar]

- 10.Shinde A., Rahulkar A., Patil C. Content based medical image retrieval based on new efficient local neighborhood wavelet feature descriptor. Biomed. Eng. Lett. 2019;9(3):387–394. doi: 10.1007/s13534-019-00112-0. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Fekri-Ershad S. 2020. Developing a Gender Classification Approach in Human Face Images Using Modified Local Binary Patterns and Tani-moto Based Nearest Neighbor Algorithm. arXiv preprint arXiv:2001.10966. [Google Scholar]

- 12.Sepas-Moghaddam A., Correia P.L., Pereira F. Light field local binary patterns description for face recognition. 2017 IEEE International Conference on Image Processing (ICIP); IEEE; 2017. pp. 3815–3819. (September) [Google Scholar]

- 13.Pan Z., Wu X., Li Z. Central pixel selection strategy based on local gray-value distribution by using gradient information to enhance LBP for texture classification. Expert Syst. Appl. 2019;120:319–334. [Google Scholar]

- 14.Huang M., Shu H., Ma Y., Gong Q. Content-based image retrieval technology using multi-feature fusion. Opt. – Int. J. Light Electron. Opt. 2015;126(19):2144–2148. [Google Scholar]

- 15.Wang X., Han T.X., Yan S. An HOG-LBP human detector with partial occlusion handling. 2009 IEEE 12th International Conference on Computer Vision; IEEE; 2009. pp. 32–39. (September). [Google Scholar]

- 16.Yang J., Yang J.Y., Zhang D., Lu J.F. Feature fusion: parallel strategy vs. Serial strategy. Pattern Recognit. 2003;36(6):1369–1381. [Google Scholar]

- 17.Epp N., Funk R., Cappo C., Lorenzo–Paraguay S. Anomaly-based web application firewall using HTTP-specific features and one-class SVM. In Workshop Regional de Segurança da Informação e de Sistemas Computacionais. 2017 [Google Scholar]

- 18.Jani K.K., Srivastava S., Srivastava R. Computer aided diagnosis system for ulcer detection in capsule endoscopy using optimized feature set .J.Intelli.Fuzzy Syst. (Preprint) 2019:1–8. [Google Scholar]

- 19.Lu X., Duan X., Mao X., Li Y., Zhang X. Feature extraction and fusion using deep convolutional neural networks for face detection. Math. Probl. Eng. 2017;2017 [Google Scholar]

- 20.Madan R., Agrawal D., Kowshik S., Maheshwari H., Agarwal S., Chakravarty D. 2019. Traffic Sign Classification Using Hybrid HOG-SURF Features and Convolutional Neural Networks. [Google Scholar]

- 21.Shakarami A., Tarrah H., Mahdavi-Hormat A. A CAD system for diagnosing Alzheimer’s disease using 2D slices and an improved AlexNet-SVM method. Optik. 2020 [Google Scholar]

- 22.Arefnezhad S., Samiee S., Eichberger A., Nahvi A. Driver drowsiness detection based on steering wheel data applying adaptive neuro-fuzzy feature selection. Sensors. 2019;19(4):943. doi: 10.3390/s19040943. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Alhakeem Z., Jang S.I. 2019. A Convolution-Free LBP-HOG Descriptor For Mammogram Classification. arXiv preprint arXiv:1904.00187. [Google Scholar]

- 24.Katti V.S., Sushitha S., Dhareshwar S., Sowmya K. Information and Communication Technology for Sustainable Development. Springer; Singapore: 2020. Implementation of dalal and triggs algorithm to detect and track human and Non-human classifications by using histogram-oriented gradient approach; pp. 759–770. [Google Scholar]

- 25.Swets J.A. Measuring the accuracy of diagnostic systems. Science. 1988;240(4857):1285–1293. doi: 10.1126/science.3287615. [DOI] [PubMed] [Google Scholar]

- 26.Davis J., Goadrich M. The relationship between precision-recall and ROC curves. Proceedings of the 23rd International Conference on Machine Learning. 2006:233–240. (June) [Google Scholar]

- 27.Powers D.M. 2011. Evaluation: From Precision, Recall and F-measure to ROC, Informedness, Markedness and Correlation. [Google Scholar]

- 28.Alsmadi M.K. An efficient similarity measure for content based image retrieval using memetic algorithm. Egypt. J. Basic Appl. Sci. 2017;4(2):112–122. [Google Scholar]

- 29.Kingma D.P., Ba J. 2014. Adam: a Method for Stochastic Optimization. arXiv preprint arXiv:1412.6980. [Google Scholar]

- 30.Breiman L. Bagging predictors. Mach. Learn. 1996;24(2):123–140. [Google Scholar]

- 31.Suykens J.A., Vandewalle J. Least squares support vector machine classifiers. Neural Process. Lett. 1999;9(3):293–300. [Google Scholar]

- 32.Gislason P.O., Benediktsson J.A., Sveinsson J.R. Random forests for land cover classification. Pattern Recognit. Lett. 2006;27(4):294–300. [Google Scholar]

- 33.Das R., De S., Bhattacharyya S., Platos J., Snasel V., Hassanien A.E. Data augmentation and feature fusion for melanoma detection with content based image classification. International Conference on Advanced Machine Learning Technologies and Applications; Springer, Cham; 2019. pp. 712–721. (March) [Google Scholar]

- 34.Katuwal R., Suganthan P.N., Zhang L. Heterogeneous oblique random forest. Pattern Recognit. 2020;99.

- 35.Land W.H., Schaffer J.D. The Support Vector Machine. In: The Art and Science of Machine Intelligence. Springer; Cham: 2020. pp. 45–76.

- 36.Zheng S., Ding C. A Group Lasso Based Sparse KNN Classifier. Pattern Recognit. Lett. 2020.

- 37.Wang L., Zhang Y., Feng J. On the Euclidean distance of images. IEEE Trans. Pattern Anal. Mach. Intell. 2005;27(8):1334–1339. doi: 10.1109/TPAMI.2005.165.

- 38.Tabaghi P., Dokmanić I., Vetterli M. Kinetic Euclidean distance matrices. IEEE Trans. Signal Process. 2019;68:452–465.

- 39.Wang J.Z., Li J., Wiederhold G. SIMPLIcity: semantics-sensitive integrated matching for picture libraries. IEEE Trans. Pattern Anal. Mach. Intell. 2001;23(9):947–963.

- 40.Oliva A., Torralba A. Modeling the shape of the scene: a holistic representation of the spatial envelope. Int. J. Comput. Vis. 2001;42(3):145–175.

- 41.Fei-Fei L., Fergus R., Perona P. Learning generative visual models from few training examples: an incremental Bayesian approach tested on 101 object categories. 2004 Conference on Computer Vision and Pattern Recognition Workshop; IEEE; 2004. p. 178. (June)

- 42.Griffin G., Holub A., Perona P. 2007. Caltech-256 Object Category Dataset.

- 43.Serrano-Talamantes J.F., Aviles-Cruz C., Villegas-Cortez J., Sossa-Azuela J.H. Self organizing natural scene image retrieval. Expert Syst. Appl. 2013;40(7):2398–2409.

- 44.Shah A., Naseem R., Iqbal S., Shah M.A. Improving CBIR accuracy using convolutional neural network for feature extraction. 2017 13th International Conference on Emerging Technologies (ICET); IEEE; 2017. pp. 1–5. (December)

- 45.Nazir S., Yousaf M.H., Velastin S.A. 2017. Inter and Intra Class Correlation Analysis (IICCA) for Human Action Recognition in Realistic Scenarios.

- 46.Fraschini M., Pani S.M., Didaci L., Marcialis G.L. Robustness of functional connectivity metrics for EEG-based personal identification over task-induced intra-class and inter-class variations. Pattern Recognit. Lett. 2019;125:49–54.

- 47.Zhao Z., Tian Q., Sun H., Jin X., Guo J. Content based image retrieval scheme using color, texture and shape features. Int. J. Signal Process. Image Process. Pattern Recognit. 2016;9(1):203–212.

- 48.Yuvaraj D., Sivaram M., Karthikeyan B., Abdulazeez J. Shape, color and texture based CBIR system using fuzzy logic classifier. CMC Comput. Mater. Continua. 2019;59(3):729–739.

- 49.Singh C., Singh J. Geometrically invariant color, shape and texture features for object recognition using multiple kernel learning classification approach. Inf. Sci. 2019;484:135–152.

- 50.Japkowicz N., Stephen S. The class imbalance problem: a systematic study. Intell. Data Anal. 2002;6(5):429–449.

- 51.Zhang J., Bloedorn E., Rosen L., Venese D. Learning rules from highly unbalanced data sets. Fourth IEEE International Conference on Data Mining (ICDM’04); IEEE; 2004. pp. 571–574. (November)

- 52.Huang K. Content-based image retrieval using generated textual meta-data. Proceedings of the 2nd International Conference on Advances in Artificial Intelligence. 2018:16–19. (October)

- 53.Fashandi H., Peters J.F. A fuzzy topological framework for classifying image databases. Int. J. Intell. Syst. 2011;26(7):621–635.

- 54.Kaur M., Dhingra S. Comparative analysis of image classification techniques using statistical features in CBIR systems. 2017 International Conference on I-SMAC (IoT in Social, Mobile, Analytics and Cloud) (I-SMAC); IEEE; 2017. pp. 265–270. (February)

- 55.Ahmed K.T., Ummesafi S., Iqbal A. Content based image retrieval using image features information fusion. Inf. Fusion. 2019;51:76–99.

- 56.Bosch A., Zisserman A., Muñoz X. Scene classification using a hybrid generative/discriminative approach. IEEE Trans. Pattern Anal. Mach. Intell. 2008;30(4):712–727. doi: 10.1109/TPAMI.2007.70716.

- 57.Guo Z., Zhang L., Zhang D. A completed modeling of local binary pattern operator for texture classification. IEEE Trans. Image Process. 2010;19(6):1657–1663. doi: 10.1109/TIP.2010.2044957.

- 58.Walia E., Vesal S., Pal A. An effective and fast hybrid framework for color image retrieval. Sens. Imaging. 2014;15(1):93.

- 59.Nanayakkara Wasam Uluwitige D.C., Chappell T., Geva S., Chandran V. Improving retrieval quality using pseudo relevance feedback in content-based image retrieval. Proceedings of the 39th International ACM SIGIR Conference on Research and Development in Information Retrieval. 2016:873–876. (July)

- 60.Damodaran N., Sowmya V., Govind D., Soman K.P. Single-plane scene classification using deep convolution features. In: Soft Computing and Signal Processing. Springer; Singapore: 2019. pp. 743–752.

- 61.Krizhevsky A., Sutskever I., Hinton G.E. ImageNet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012:1097–1105.

- 62.Liu Y., Ge Y., Wang F., Liu Q., Lei Y., Zhang D., Lu G. A rotation invariant HOG descriptor for Tire pattern image classification. ICASSP 2019-2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP); IEEE; 2019. pp. 2412–2416. (May)

- 63.ElAlami M.E. A new matching strategy for content based image retrieval system. Appl. Soft Comput. 2014;14:407–418.

- 64.Mehmood Z., Abbas F., Mahmood T., Javid M.A., Rehmen A., Nawaz T. Content-based image retrieval based on visual words fusion versus features fusion of local and global features. Arab. J. Sci. Eng. 2018;12:7265–7284.

- 65.Mehmood M., Anwar S.M., Ali N., Habib H.A., Rashid M. A novel image retrieval based on a combination of local and global histograms of visual words. Math. Probl. Eng. 2016.

- 66.Jabeen S., Mehmood Z., Mahmood T., Saba T., Rehman A., Mahmood M.T. An effective content-based image retrieval technique for image visuals representation based on the bag-of-visual-words model. PLoS One. 2018;13(4). doi: 10.1371/journal.pone.0194526.

- 67.Elnemr H.A. Combining SURF and MSER along with Color Features for Image Retrieval System Based on Bag of Visual Words. JCS. 2016:213–222.