Abstract

Purpose

This study examined the impact of home use of remote microphone systems (RMSs) on caregiver communication and child vocalizations in families of children with hearing loss.

Method

We drew on data from a prior study in which Language ENvironmental Analysis recorders were used with 9 families during 2 consecutive weekends, 1 with an RMS and 1 without. Audio samples from Language ENvironmental Analysis recorders were (a) manually coded to quantify the frequency of verbal repetitions and alerting phrases caregivers used when communicating with children with hearing loss and (b) automatically analyzed to quantify children's vocalization rate, duration, complexity, and reciprocity when using and not using an RMS.

Results

When using an RMS at home, caregivers did not repeat or clarify their statements as often as when not using an RMS while communicating with their children with hearing loss. However, no between-condition differences were observed in children's vocal characteristics.

Conclusions

Results provide further support for home RMS use for children with hearing loss. Specifically, findings lend empirical support to prior parental reports suggesting that RMS use eases caregiver communication in the home setting. Studies exploring RMS use over a longer duration of time might provide further insight into potential long-term effects on children's vocal production.

Adult–child interactions are recognized as playing an integral role in early language acquisition (e.g., Chapman, 2000). It has been proposed that language and communication development occurs in the context of dyadic exchanges or “transactions” between a caregiver and child (see Sameroff, 2010, and Snyder-McLean, 1990, for reviews). Studies conducted with a number of different populations (including typically developing children and children with autism spectrum disorder or other intellectual and developmental disabilities) lend empirical support to this transactional theory of language development (Fusaroli, Weed, Fein, & Naigles, 2019; McDuffie & Yoder, 2010; Woynaroski, Yoder, Fey, & Warren, 2014; Zimmerman et al., 2009). In other words, within this cross-generational framework, behaviors and traits of both adults and children can influence the course of development during reciprocal dialogues.

Having access to caregiver talk is thus considered requisite for typical vocal and verbal development of young children (Hart & Risley, 1995; Hoff & Naigles, 2002; Masur, Flynn, & Eichorst, 2005; Werker & Yeung, 2005). Providing optimal access to caregiver talk via hearing technologies is considered to be similarly important for phonological and linguistic development in young listeners with hearing loss (e.g., Eisenberg, Shannon, Martinez, Wygonski, & Boothroyd, 2000). Valuable caregiver–child transactions could be disrupted by a reduction in access to clear caregiver speech for children with hearing loss. In fact, national guidelines (American National Standards Institute, 2010) have recommended a signal-to-noise ratio (SNR) of at least +15 dB for optimal speech perception by children with hearing loss.

Remote microphone systems (RMSs) are designed to improve the SNR for listeners, thus increasing the likelihood that children with hearing loss have access to their caregivers' verbal communication (e.g., Schafer & Thibodeau, 2006). Therefore, RMS use has been widely endorsed for children with hearing loss in school settings, which frequently offer poor acoustic conditions (e.g., Anderson & Goldstein, 2004). However, few studies have examined the impact of RMS use in the home environment, where background noise and distance can also prove detrimental for listening (Flynn, Flynn, & Gregory, 2005; Moeller, Donaghy, Beauchaine, Lewis, & Stelmachowicz, 1996; Mulla & McCracken, 2014; Pearsons, Bennett, & Fidell, 1977; Wu et al., 2018) and where young children spend about 60% of their time (Hofferth & Sandberg, 2001).
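To make the link between talker distance and SNR concrete, the following Python sketch applies the free-field inverse-square law (speech level drops about 6 dB per doubling of distance) to hypothetical levels: speech at 60 dB SPL measured 1 m from the talker and a uniform 50 dB SPL noise floor. These levels, the 0.15 m microphone position, and the helper names are illustrative assumptions rather than values from the studies cited, and real rooms add reverberation that this simplified model ignores.

```python
import math


def level_at_distance(level_ref_db: float, ref_distance_m: float, distance_m: float) -> float:
    """Free-field inverse-square law: level drops by 20*log10(d / d_ref) dB."""
    return level_ref_db - 20.0 * math.log10(distance_m / ref_distance_m)


def snr_db(speech_db: float, noise_db: float) -> float:
    """Signal-to-noise ratio in dB is the speech level minus the noise level."""
    return speech_db - noise_db


# Hypothetical values for illustration only (not measurements from any study).
SPEECH_AT_1M_DB = 60.0  # speech level 1 m from the talker
NOISE_DB = 50.0         # assumed uniform background noise level
RECOMMENDED_SNR_DB = 15.0

# 0.15 m approximates a body-worn microphone near the talker's mouth (assumed).
for distance in (0.15, 1.0, 2.0, 4.0):
    snr = snr_db(level_at_distance(SPEECH_AT_1M_DB, 1.0, distance), NOISE_DB)
    verdict = "meets" if snr >= RECOMMENDED_SNR_DB else "falls short of"
    print(f"{distance:4.2f} m: SNR {snr:+5.1f} dB ({verdict} +15 dB)")
```

Under these assumptions the SNR falls from about +10 dB at 1 m to about −2 dB at 4 m, whereas a microphone about 15 cm from the talker's mouth picks up speech at roughly +26 dB SNR, which is the advantage an RMS is designed to deliver to the listener.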

Recently, RMS technology used in homes was demonstrated to increase children's access to significantly more caregiver talk originating from a distance (Benítez-Barrera, Angley, & Tharpe, 2018). However, the study did not examine the quality of the adult linguistic input that became accessible via an RMS. That is, it was not determined if the caregiver talk was child directed (one characteristic of quality of caregiver speech) or “overheard” conversations of no particular relevance to the children. Although overhearing is known to be beneficial to building vocabulary in children (Akhtar, 2005; Akhtar, Jipson, & Callanan, 2001; Bloom, 2000; Floor & Akhtar, 2006), child-directed speech is generally considered to promote emergent lexical processing skills and foster language development, especially early in development (Newman, Rowe, & Ratner, 2016; Rowe, 2008; Rowe, 2012; Shneidman, Arroyo, Levine, & Goldin-Meadow, 2013; Weisleder & Fernald, 2013). A recent follow-up study revealed that the proportion of caregiver talk that is directed to a child with hearing loss via an RMS is similar to the caregiver talk that is directed to a child with hearing loss when not using an RMS (Benítez-Barrera, Thompson, Angley, Woynaroski, & Tharpe, 2019). Thus, research to date has shown that RMS use is likely to provide children with hearing loss with increased access to caregiver talk, much of which is directed to the children and, thus, likely to facilitate their processing and language development.

Also of interest to the current study, qualitative data have indicated that families who use RMSs at home observe enhanced child responsiveness, among other advantages. Generally, families have shared positive perceptions of RMS use at home, reporting improvements in their children's speech access, listening effort, attention, and language comprehension (Moeller et al., 1996). Some caregivers have additionally noted increases in their children's imitation of words and sentences (i.e., vocal reciprocity) with RMS use (Mulla, 2011). Furthermore, in another RMS home study, 33% of families reported gains in the intelligibility and complexity of their children's speech during home interactions, a particularly surprising observation given that the children had worn the RMS for only 2 days (Benítez-Barrera et al., 2018). To date, few quantitative data corroborate these perceived benefits of RMS use at home.

Therefore, the current study extended our prior work to investigate several additional aspects of caregiver–child communication that theory and anecdotal accounts suggest might be impacted by RMS use in homes of children with hearing loss. Specifically, this study evaluated the effects of RMS use on several aspects of caregiver communication, including the caregiver's production of repetitions or other talk intended to alert or secure the attention of a child with hearing loss. Additionally, we explored the effects of RMS use on several characteristics of vocalizations produced by children with hearing loss, including vocal rate, duration, complexity, and reciprocity. The research questions for this study included the following:

Are caregiver repetitions or clarifications and/or use of alerting phrases directed to their children with hearing loss reduced when using an RMS relative to when not using an RMS?

Do children with hearing loss show increased vocal rate, duration, complexity, or reciprocity when using an RMS relative to when not using an RMS?

We hypothesized that caregivers would need to produce fewer repetitions or clarifications, and fewer alerting statements to secure their children's attention, when using the RMS than when not using the RMS in the home setting. Furthermore, we predicted that having increased access to clear caregiver talk via the RMS technology would result in an increased rate, duration, complexity, and/or reciprocity of child vocalizations.

Method

This study draws upon extant data from a prior study on RMS use in young children with hearing loss. Detailed methodological information regarding the data collection is available in an earlier report by Benítez-Barrera et al. (2018). All study procedures were approved by the Vanderbilt University Institutional Review Board. An overview of methods relevant to this study is provided below.

Data Source and Previous Procedures

The source data were collected from nine families whose children were enrolled in a preschool for children with hearing loss in the Department of Hearing and Speech Sciences. All children had bilateral, permanent hearing loss and were full-time users of some type of hearing amplification (hearing aids, cochlear implants, and/or bone-anchored device [soft band]) for at least 1 year and were not previous users of an RMS at home or at school. Additional details regarding participant characteristics are delineated in Table 1.

Table 1.

Summary of participating family characteristics and child auditory profiles.

| Family number | Key caregiver | Language spoken at home | Sex of key child | Age of key child (years;months) | Type and degree of hearing loss | BEPTA (unaided) | Age of amplification (years;months) | Hearing device currently used |
|---|---|---|---|---|---|---|---|---|
| 1 | Father | English | Female | 4;0 | RE: Moderately severe rising to moderate CHL; LE: Severe rising to mild SNHL | 62 dB HL | 2;4 | RE: BAI (soft band) |
| 2 | Mother | Spanish | Female | 2;1 | BIL: Mild sloping to severe rising to mild SNHL | 63 dB HL | 0;6 | BIL: HA |
| 3 | Mother | English | Male | 6;4 | RE: Mild sloping to profound SNHL; LE: Minimal sloping to profound SNHL | 43 dB HL | 3;6 | RE: CI; LE: HA |
| 4 | Mother | Spanish/ASL/English | Male | 4;4 | BIL: Profound SNHL | > 90 dB HL | HA 0;3, CI 2;0 | LE: CI |
| 5 | Mother | English/German | Female | 5;3 | BIL: Profound SNHL | > 90 dB HL | HA 0;8, CIs 1;0 and 1;6 | BIL: CI |
| 6 | Mother | English | Male | 3;11 | BIL: Profound SNHL | > 90 dB HL | HA 0;2, CI 1;0 | BIL: CI |
| 7 | Father | English | Male | 3;2 | BIL: Minimal sloping to moderately severe SNHL | 25 dB HL | 1;9 | BIL: HA |
| 8 | Grandmother | English/ASL | Male | 4;7 | BIL: Profound SNHL | > 90 dB HL | 0;10 | BIL: CI |
| 9 | Father | Spanish/Hindi | Male | 2;6 | BIL: Profound SNHL | > 90 dB HL | HA 0;6, CI 1;6 | BIL: CI |

Note. BEPTA = better ear pure-tone average; RE = right ear; LE = left ear; BIL = bilateral; CHL = conductive hearing loss; SNHL = sensorineural hearing loss; CI = cochlear implant; HA = hearing aid; BAI = bone-anchored implant; ASL = American Sign Language.

The child participant with hearing loss in each family was designated as the “key child” throughout this investigation. Although more than one caregiver could have been present in the key child's home during this study, a single caregiver was designated as the “key caregiver.” In the study from which these data were drawn, families participated on 2 days (i.e., Saturday and Sunday) of each of two consecutive weekends.¹ On one of the two weekends, families used an RMS (Phonak Roger™). The order of RMS and No-RMS weekends was counterbalanced across families. Caregivers were provided with a transmitter and receiver and received written and oral instructions for the use and care of the RMS device.

During the course of the RMS weekend, the key caregiver and the key child were instructed to wear the transmitter and receiver, respectively, for the entire weekend. However, the key caregiver was allowed to mute the transmitter at times when speech or sound was considered inappropriate for the child to hear (e.g., going to the bathroom). Caregivers recorded their daily activities in a log provided by the research team, which served to aid in the process of selecting segments of audio-recorded samples for coding and analysis. More specifically, the key caregiver kept track of activities occurring at home, visitors, outdoor activities, and RMS use in this daily log. Periods of time when families reported outdoor activities, visitors at home, and no RMS use were excluded from coding and analysis.

Language ENvironmental Analysis (LENA) technology (Xu, Yapanel, & Gray, 2009) was used to record and analyze key caregiver talk and key child vocalizations within participants' homes. LENA is a system that allows for collection and analysis of large (i.e., up to 16 hr in duration) audio-recorded files collected in naturalistic settings (Oller et al., 2010). Briefly, a digital language processor (DLP), in conjunction with the LENA Pro software, captures and classifies (a) the vocal productions of a key child, who wears a DLP device in the pocket of a specially designed T-shirt; (b) the adult talk produced within a distance of approximately 8–10 ft of the DLP; and (c) a variety of other features of the ambient environment not relevant to the current study (e.g., productions of other children, media noise, silence, overlapping sound sources, and/or unclear acoustic events). The LENA software also parses out child vocalizations that are speech related from those that are not speech related (crying, vegetative sounds, or fixed signals) for further analysis.

Data captured by DLPs can subsequently be exported as audio (e.g., .wav) files for conventional coding and/or further analyzed by commercially available LENA software and other software programs that are in development and/or are available as freeware or via the LENA Research Foundation for research purposes. The aforementioned software programs collectively allow one to quantify several aspects of speech-related child vocalizations automatically, including vocal rate, duration, complexity, and/or reciprocity. The reliability and validity of the automated measures of child vocalizations utilized for this study have been reported elsewhere (Harbison et al., 2018; Woynaroski et al., 2017; Xu, Richards, & Gilkerson, 2014; Xu et al., 2009).

A complementary methodological approach applied in this study allowed for the capture of caregiver talk produced near (< 8–10 ft) and far from (> 8–10 ft) the key child (i.e., caregiver talk that would be accessible without an RMS and when wearing an RMS), in addition to the vocalizations produced by the child. That is, LENA DLP devices were worn by both the key caregiver and key child for each study condition and recording day. Both DLP devices recorded an average of 7 hr of data across families (range: 6–10 hr) each day for a mean total of 14 hr of sampling per family per weekend.

Data Reduction and Variable Derivation

Measuring Key Child Vocalizations

Vocal characteristics exhibited by children with hearing loss, with and without the use of an RMS, were derived from audio recordings collected by the key children's DLPs. For the purposes of this project, daylong audio recordings from the key children's DLPs for each study condition (RMS and No-RMS weekends) were uploaded to a computer with the LENA Pro software, which segmented the acoustic stream, labeled each time-stamped acoustic segment as being produced by the key child or one of several other predefined sources (see above), and separated key child productions that were speech related from those that were not. After segmentation and standard labeling by the LENA software, speech-related vocalizations produced by the key child were analyzed using three different software programs to derive indices of child vocal rate, duration, complexity, and reciprocity.

The LENA Advanced Data Extractor was used to automatically obtain rate and duration information for speech-related vocalizations labeled as being produced by the key child during each recording day. The metrics used to quantify key child vocal rate and vocal duration in analyses were the mean frequency count per minute and the mean vocalization duration, each averaged across the two audio recordings collected on each weekend (RMS and No-RMS).
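As a sketch of how these rate and duration metrics can be computed, assume each recording day yields a list of (onset, offset) times in seconds for the key child's speech-related vocalizations, plus the total recording length in minutes. This input format and the function names are hypothetical; the actual Advanced Data Extractor output is not reproduced here. Following the text, per-day values are averaged across the two recordings of a weekend.

```python
from statistics import mean


def vocal_rate_per_min(segments, recording_minutes):
    """Number of speech-related key-child vocalizations per minute of recording."""
    return len(segments) / recording_minutes


def mean_vocal_duration_s(segments):
    """Mean vocalization duration in seconds; segments are (onset_s, offset_s) pairs."""
    return mean(offset - onset for onset, offset in segments)


def weekend_metrics(days):
    """Average the per-day rate and mean duration across a weekend's recordings.

    days: list of (segments, recording_minutes), one entry per recording day.
    """
    rate = mean(vocal_rate_per_min(segs, minutes) for segs, minutes in days)
    duration = mean(mean_vocal_duration_s(segs) for segs, _ in days)
    return rate, duration


# Toy example with made-up timestamps (two very short "recording days").
saturday = ([(0.0, 1.0), (10.0, 10.5), (20.0, 21.0)], 2.0)
sunday = ([(5.0, 5.6), (30.0, 31.0)], 1.0)
rate, duration = weekend_metrics([saturday, sunday])
print(f"rate = {rate:.2f}/min, mean duration = {duration:.2f} s")
```

Averaging per-day values, rather than pooling all vocalizations from both days, weights each recording day equally regardless of how long it ran.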

A custom-made software program (i.e., contingencies from LENA data; Yoder, Wade, Tapp, Warlaumont, & Harbison, 2016) was used to quantify children's vocal reciprocity. In brief, this software program uses the LENA files, which are time-stamped and labeled for easy identification of vocalizations produced by the key child and adults, to derive an index of vocal reciprocity called the reciprocal vocal contingency (RVC) score. The RVC score is a risk difference score that quantifies the likelihood that a key child will vocalize following a previous adult vocal response, based on three-event sequences (child vocalization ➔ adult vocalization ➔ child vocalization) observed within audio-recorded files and controlling for the chance probability of such sequences occurring.
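The exact RVC computation is documented by Harbison et al. (2018) and implemented in the contingency software cited above; the following Python function is only a simplified, hypothetical illustration of a risk-difference contingency over three-event sequences. It assumes the recording has already been reduced to a time-ordered list of labels ("C" for a key-child speech-related vocalization, "A" for adult talk) and compares how often a child vocalization follows a child-then-adult pair with how often it follows any other pair, the latter standing in for the chance baseline.

```python
def rvc_risk_difference(events):
    """Simplified risk-difference contingency (hypothetical illustration).

    events: time-ordered labels, "C" = key-child vocalization, "A" = adult talk.
    Returns P(C | preceding pair is C->A) - P(C | preceding pair is anything else).
    """
    hits_ante = n_ante = hits_other = n_other = 0
    # Slide a window of three consecutive events over the sequence.
    for first, second, third in zip(events, events[1:], events[2:]):
        if (first, second) == ("C", "A"):
            n_ante += 1
            hits_ante += third == "C"
        else:
            n_other += 1
            hits_other += third == "C"
    if n_ante == 0 or n_other == 0:
        raise ValueError("need windows both with and without the C->A antecedent")
    return hits_ante / n_ante - hits_other / n_other


print(rvc_risk_difference(list("CACACA")))
```

In this toy sequence the child always vocalizes after a child-then-adult pair and never otherwise, giving the maximum score of 1.0; a score near 0 would indicate no contingency beyond the baseline rate.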

Quantifying vocal reciprocity in the current study posed a unique methodological challenge, because we aimed to distinguish vocal reciprocity when a child has access only to adult talk produced nearby from vocal reciprocity when the child also has access to adult talk produced from a farther distance by virtue of RMS technology. Vocal reciprocity during the No-RMS condition, wherein children with hearing loss likely had access only to speech produced in relatively close proximity, could be measured directly from the key child and adult talk captured on the key children's DLPs. However, vocal reciprocity for the RMS condition, wherein children with hearing loss had access to adult talk produced both nearby and at a greater distance, had to be quantified by merging data from the key children's DLPs with data from the key caregivers' DLPs (which captured any adult talk that would have been accessible to the child via the RMS). The metric of key child vocal reciprocity used in analyses was, thus, the RVC score as averaged across the two audio recordings collected on RMS weekends (from each key child's and key caregiver's DLPs) and No-RMS weekends (from each key child's DLP). See Harbison et al. (2018) for further information about derivation and validation of the RVC score.
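The merging step for the RMS condition can be sketched in Python as follows, under several assumptions that are ours rather than the study's: the two recorders' clocks are synchronized, events are (onset_s, offset_s, label) tuples with labels reduced to "C" and "A", child labels are taken only from the child's recorder, and an adult segment on the caregiver's recorder that overlaps an adult segment already on the child's recorder is treated as the same utterance and dropped to avoid double counting. The function names are hypothetical.

```python
def overlaps(a, b):
    """True if two (onset_s, offset_s, label) events overlap in time."""
    return a[0] < b[1] and b[0] < a[1]


def merge_streams(child_dlp, caregiver_dlp):
    """Combine both recorders' events into one time-ordered stream.

    Keeps every event from the child's recorder and adds adult events from the
    caregiver's recorder that do not overlap adult talk the child's recorder
    already captured (assumed to be the same utterance).
    """
    child_adult = [e for e in child_dlp if e[2] == "A"]
    distant_adult = [
        e for e in caregiver_dlp
        if e[2] == "A" and not any(overlaps(e, c) for c in child_adult)
    ]
    return sorted(child_dlp + distant_adult, key=lambda e: e[0])


child_dlp = [(0.0, 1.0, "C"), (2.0, 3.0, "A"), (10.0, 11.0, "C")]
caregiver_dlp = [(2.1, 2.9, "A"), (5.0, 6.0, "A"), (12.0, 13.0, "C")]
merged = merge_streams(child_dlp, caregiver_dlp)
print([label for _, _, label in merged])
```

The resulting time-ordered label sequence is what a reciprocity index would then be computed on; the overlap rule is the main design choice, and a stricter or looser rule would change how much distant talk is counted.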

Open-source Sphinx speech recognition software was used to quantify child vocal complexity. Briefly, the Sphinx software estimates counts of 39 different phones (acoustic matches to 24 consonants such as “p” and 15 vowels such as “a”) produced in speech-related utterances identified by the LENA software as being produced by the key child. From these counts, we derived the average count per utterance (ACPU) of consonants (C) plus vowels (V). The metric of key child vocal complexity used in analyses was this ACPU − C + V score as averaged across the two audio recordings collected on RMS weekends and No-RMS weekends from each key child's DLP. See Xu et al. (2014) and Woynaroski et al. (2017) for further information about derivation and validation of the ACPU − C + V score.
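To illustrate the ACPU − C + V computation, the following Python sketch assumes the recognizer output for each key-child utterance is a list of phone labels. It assumes the 39-phone ARPAbet inventory used by the CMU Pronouncing Dictionary (15 vowels and 24 consonants, matching the counts given in the text) and treats any other label, such as a silence marker, as neither a consonant nor a vowel; whether the study's configuration used exactly these labels is an assumption, and the function names are hypothetical.

```python
from statistics import mean

# Assumed phone inventory (ARPAbet, as in the CMU Pronouncing Dictionary).
VOWELS = {"AA", "AE", "AH", "AO", "AW", "AY", "EH", "ER",
          "EY", "IH", "IY", "OW", "OY", "UH", "UW"}
CONSONANTS = {"B", "CH", "D", "DH", "F", "G", "HH", "JH", "K", "L", "M", "N",
              "NG", "P", "R", "S", "SH", "T", "TH", "V", "W", "Y", "Z", "ZH"}


def acpu_c_plus_v(utterances):
    """Average count per utterance of consonants plus vowels.

    utterances: one list of recognized phone labels per key-child utterance.
    """
    counts = [sum(1 for p in utt if p in CONSONANTS or p in VOWELS) for utt in utterances]
    return mean(counts)


def weekend_score(days):
    """Average the daily ACPU - C + V scores across a weekend's recordings."""
    return mean(acpu_c_plus_v(utts) for utts in days)


# Made-up recognizer output for two recording days.
day1 = [["SIL", "B", "AA", "SIL"], ["M", "AA", "M", "AA"], ["AH"]]
day2 = [["D", "AE", "D", "IY"], ["N", "OW"]]
print(round(weekend_score([day1, day2]), 3))
```

Because the consonant and vowel sets together cover the whole assumed inventory, the score reduces to the average number of recognized non-silence phones per utterance.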

Measuring Key Caregiver Communication

Characteristics of key caregiver talk were measured from audio files exported from the key caregivers' DLPs. This study focuses exclusively on previously selected segments of key caregiver talk (i.e., from Benítez-Barrera et al., 2018) that were labeled as being produced at a “far distance” from the key child, as captured by the key caregiver's DLP. The rationale behind this approach to audio segment selection was twofold. First, the characteristics of interest (repetitions of key caregiver talk and use of alerting statements) would more likely occur when a key caregiver and key child are separated by a greater physical distance than when the dyad interacts in close proximity. Second, the caregiver's DLP captures all caregiver vocalizations for analysis purposes, including adult speech from a distance (> 8–10 ft away from the child) that might be missed by relying on recordings from the child's DLP alone.

Fourteen different 5-min “far distance” segments were selected per family from the two recording days of the two study weekends (i.e., seven segments each from the RMS and No-RMS conditions, distributed evenly across study days).² These 5-min audio segments had been extracted from the caregivers' DLPs according to selection criteria delineated by Benítez-Barrera et al. (2019). In that study, eligible segments were analyzed for both conditions (RMS and No-RMS) over two distance categories (close distance and far distance) to represent key caregiver talk produced relative to the position of the key child.

Coding system. The 14 selected “far distance” audio segments per family were coded for key caregiver productions of repetitions and alerting phrases directed toward the key child using a timed event coding system, wherein coders listened to each audio-recorded segment and inserted “point codes” to mark the timing of the onset of each caregiver behavior of interest—caregiver repetitions and caregiver use of alerting phrases. Coders were able to pause each audio recording as needed and documented the occurrence of repetitions or alerting phrases that occurred within each segment in a securely stored electronic spreadsheet.

Key caregiver repetitions were classified into two categories. Casual repetitions were defined as instances wherein a key caregiver informally repeated at least part of an immediately preceding utterance directed to the key child (e.g., “Yes, yes, yes…good job” followed by the utterance, “Yes, that's correct…great job”). Intentional repetitions were defined as instances wherein a key caregiver was judged to repeat at least part of an immediately preceding utterance directed to the key child in a more deliberate or purposeful way, either for clarification or emphasis or to otherwise enhance the child's processing or comprehension (e.g., “Time to get your red shoes” followed immediately by the utterance, “no, not your jacket, your red shoes”). Coders additionally recorded the number of instances wherein the key caregiver used alerting phrases or the child's name (e.g., “Come here! Hey, Julie!”) to refocus or engage the child during an audio segment. In particular, coders were instructed to listen for specific acoustic features (e.g., increased vocal volume) to aid in identifying these alerting phrases.

Prior to coding each segment, coders reviewed vocal samples from the target speakers (i.e., key child and key caregiver) for each individual family, as extracted from a separate 5-min segment that contained a conversation between the dyad of interest. Coders also listened to the 3 min immediately preceding the segment to obtain some context regarding the talkers' communication environment. Coders were additionally provided with the daily activities log described earlier to provide contextual information for reference throughout coding. To facilitate efficiency and accuracy of the human coding, a written transcript was generated for each segment from the six English-speaking families, using outside vendor services (Rev.com transcription team). Foreign language transcription services were not available for the remaining audio recordings from the three Spanish-speaking families. Therefore, Spanish-speaking coders were instructed to use the aforementioned daily activities log to supplement those audio recordings. The metrics for caregiver communication used in analyses were frequency counts of key caregiver-produced repetitions (casual and intentional) and alerting phrases, as aggregated across the 2 days of each weekend (RMS and No-RMS conditions).

Coders and coder training. Selected segments were coded by four Vanderbilt University graduate student volunteers (two native English speakers and two bilingual Spanish speakers) who (a) were blind to study condition and thus unaware of whether a recording represented an RMS or No-RMS weekend and (b) completed a comprehensive training protocol. Primary coders for both English and Spanish coding teams coded all segments per family, whereas reliability coders were responsible for coding a randomly selected 30% of the total set of audio files per family. Training procedures involved a review of the coding manual and multiple discussions with study personnel to ensure coder reliability and address any questions raised throughout the training process. All coders were trained using 5-min practice segments (excluded from analyses) until the desired level of coding reliability (> .8 small-over-large agreement for the percentage of intervals containing key caregiver repetitions and alerting phrases) was attained for two consecutive files.
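The small-over-large training criterion can be sketched in Python as below. Small-over-large agreement divides the smaller of two coders' counts by the larger; the per-code dictionary input, the rule that two zero counts count as full agreement, and the function names are illustrative assumptions, not details reported by the study.

```python
def small_over_large(count_a, count_b):
    """Smaller of two coders' counts divided by the larger."""
    if count_a == 0 and count_b == 0:
        return 1.0  # assumption: both coders recording no events is full agreement
    return min(count_a, count_b) / max(count_a, count_b)


def file_passes(counts, threshold=0.8):
    """counts maps each code (e.g., 'intentional') to the two coders' counts."""
    return all(small_over_large(a, b) > threshold for a, b in counts.values())


def reached_criterion(practice_files, threshold=0.8, consecutive=2):
    """True once `consecutive` practice files in a row pass on every code."""
    streak = 0
    for counts in practice_files:
        streak = streak + 1 if file_passes(counts, threshold) else 0
        if streak >= consecutive:
            return True
    return False


# Made-up counts from a trainee and a reference coder on three practice files.
practice = [
    {"casual": (3, 4), "intentional": (9, 10), "alerting": (4, 4)},
    {"casual": (5, 6), "intentional": (10, 11), "alerting": (2, 2)},
    {"casual": (4, 4), "intentional": (8, 9), "alerting": (3, 3)},
]
print(reached_criterion(practice))
```

Here the first file fails on casual repetitions (3/4 = .75), and the criterion is met only after the second and third files both exceed .8 on every code.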

Quantifying interobserver reliability. Interobserver agreement was monitored using MOOSES, a computer software program designed for this purpose (see Tapp, 2015). Throughout the coding process, interobserver agreement for each segment remained above the a priori threshold for acceptable agreement (80%) for all dependent variables of interest. Following completion of all coding for this study, interrater reliability across the segments randomly selected for reliability coding was quantified with intraclass correlation coefficients.
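The study does not state which intraclass correlation model was used. As one common choice, the following Python sketch computes the two-way random-effects, absolute-agreement, single-rater coefficient often labeled ICC(2,1) from a segments-by-coders table of counts; the function name and example counts are hypothetical, and a different ICC form would use a different formula.

```python
from statistics import mean


def icc_2_1(ratings):
    """ICC(2,1) for a table with one row per segment and one column per coder."""
    n, k = len(ratings), len(ratings[0])
    grand = mean(x for row in ratings for x in row)
    row_means = [mean(row) for row in ratings]
    col_means = [mean(row[j] for row in ratings) for j in range(k)]

    # Two-way ANOVA sums of squares: segments (rows), coders (columns), residual.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )


# Made-up counts of one coded behavior from two coders on five segments.
ratings = [[4, 5], [2, 2], [7, 6], [0, 1], [3, 3]]
print(round(icc_2_1(ratings), 3))
```

Because this form penalizes systematic differences between coders (the column term), it is stricter than a consistency-only ICC when one coder tends to record more events than the other.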

Analytic Plan

All dependent variables were checked for normality (i.e., |skew| < 1 and |kurtosis| < 3) before analyses were run. Paired-sample t tests (Singer & Willett, 2003) were then used to examine between-condition (RMS vs. No-RMS) differences on each variable of interest.
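A minimal Python sketch of this analytic plan follows, using only the standard library. It assumes moment-based skewness and excess kurtosis without small-sample correction (statistical packages often apply a bias adjustment), differences computed as RMS minus No-RMS so that reductions yield negative t values, and the within-subject effect size d_z (mean difference divided by the SD of the differences); none of these choices is specified in the study, and the function names are hypothetical. A p value could be obtained from a library routine such as SciPy's `scipy.stats.ttest_rel`.

```python
import math
from statistics import mean, stdev


def skew_and_excess_kurtosis(xs):
    """Moment-based skewness g1 and excess kurtosis g2 (no small-sample correction)."""
    n = len(xs)
    m = mean(xs)
    m2 = sum((x - m) ** 2 for x in xs) / n
    if m2 == 0:
        raise ValueError("variable has zero variance")
    m3 = sum((x - m) ** 3 for x in xs) / n
    m4 = sum((x - m) ** 4 for x in xs) / n
    return m3 / m2 ** 1.5, m4 / m2 ** 2 - 3.0


def within_normality_limits(xs, max_abs_skew=1.0, max_abs_kurtosis=3.0):
    """Screen used before the t tests: |skew| < 1 and |kurtosis| < 3."""
    g1, g2 = skew_and_excess_kurtosis(xs)
    return abs(g1) < max_abs_skew and abs(g2) < max_abs_kurtosis


def paired_t(no_rms, rms):
    """Paired t on RMS minus No-RMS differences; returns (t, df, d_z)."""
    if len(no_rms) != len(rms):
        raise ValueError("conditions must contain the same families")
    diffs = [b - a for a, b in zip(no_rms, rms)]
    n = len(diffs)
    mean_diff, sd_diff = mean(diffs), stdev(diffs)
    t = mean_diff / (sd_diff / math.sqrt(n))
    return t, n - 1, mean_diff / sd_diff


# Made-up per-family values for one variable under each condition.
no_rms = [1, 2, 3, 4]
rms = [2, 4, 5, 7]
print(within_normality_limits(no_rms), paired_t(no_rms, rms))
```

Note that d_z equals t / √n, so with nine families each d_z is the t statistic divided by 3.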

Results

Preliminary Analyses

All variables to be included in analyses were within acceptable limits for skew and kurtosis. Intraclass correlation coefficients for coded variables indexing key caregiver communication ranged from .924 to .953 across the RMS and No-RMS conditions. These values reflect excellent interrater reliability.

Primary Analyses for Key Caregiver Communication

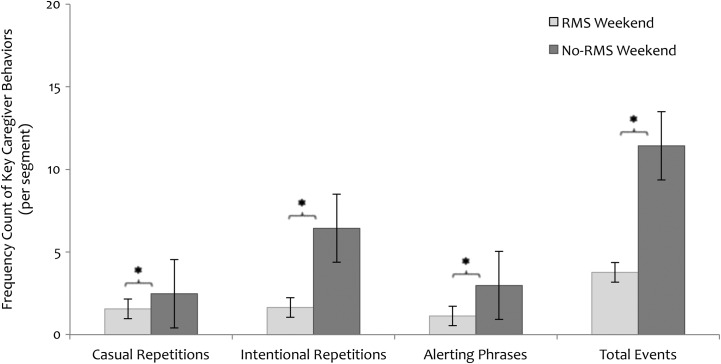

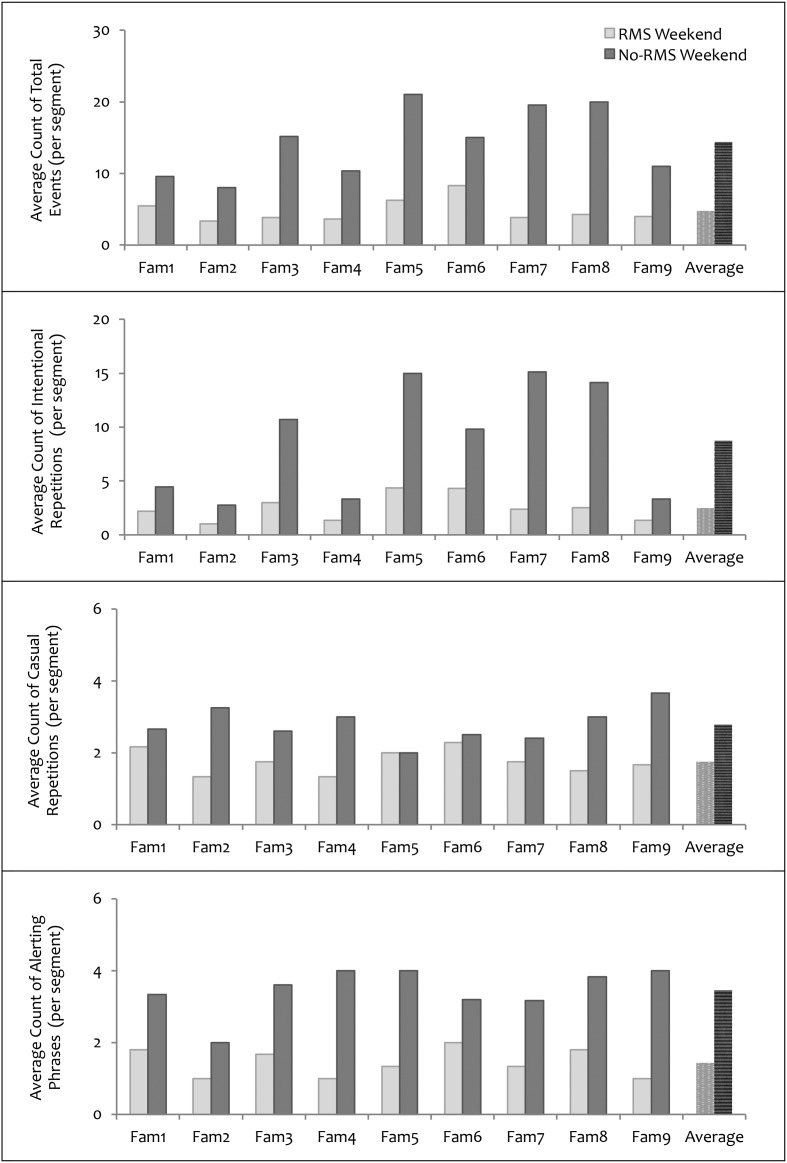

Significant between-condition differences were observed for all aspects of key caregiver communication of interest to this study. The total number of events (casual repetitions, intentional repetitions, and alerting phrases combined) produced by key caregivers differed significantly between the No-RMS (M = 14.40, SD = 4.94) and RMS (M = 4.78, SD = 1.62) conditions, t(8) = −6.04, p < .005 (d = −2.01). On average, when using the RMS, key caregivers used nine (67%) fewer verbal “clarifying” behaviors per sample than when not using the RMS at home. The production of casual repetitions by key caregivers was significantly different during the No-RMS (M = 2.79, SD = 0.50) versus RMS (M = 1.75, SD = 0.34) weekends, t(8) = −4.12, p < .005 (d = −1.37). On average, key caregivers uttered approximately one (37%) fewer casual repetition per sample while using the RMS than when not using it at home. The production of intentional repetitions by key caregivers also differed between No-RMS (M = 8.74, SD = 5.33) and RMS (M = 2.49, SD = 1.22) conditions, t(8) = −4.12, p < .005 (d = −1.37). That is, on average, key caregivers produced approximately six (72%) fewer intentional repetitions per sample when talking to their children while using the RMS at home than when not using the RMS. The average number of alerting phrases produced by key caregivers during the No-RMS (M = 3.46, SD = 0.65) and RMS (M = 1.44, SD = 0.39) conditions was also significantly different, t(8) = −8.19, p < .005 (d = −2.73). On average, key caregivers used approximately two (58%) fewer alerting phrases per sample when using the RMS at home than when not using RMS technology. Figures 1 and 2 illustrate the findings for key caregiver production of repetitions and alerting phrases during No-RMS and RMS weekends. Effect sizes for between-condition differences for metrics of key caregiver communication were all large in magnitude.
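The percentage reductions quoted in this paragraph follow from the group means as (No-RMS − RMS) / No-RMS. The short Python check below recomputes them using only the means reported here; the function name is illustrative.

```python
# Group means per sample as reported in the Results: (No-RMS, RMS).
REPORTED_MEANS = {
    "total events": (14.40, 4.78),
    "casual repetitions": (2.79, 1.75),
    "intentional repetitions": (8.74, 2.49),
    "alerting phrases": (3.46, 1.44),
}


def percent_reduction(no_rms, rms):
    """Reduction from No-RMS to RMS, as a percentage of the No-RMS mean."""
    return 100.0 * (no_rms - rms) / no_rms


for measure, (no_rms, rms) in REPORTED_MEANS.items():
    print(f"{measure}: {no_rms - rms:.2f} fewer per sample "
          f"({percent_reduction(no_rms, rms):.0f}% reduction)")
```

Rounded to whole percentages, these reproduce the 67%, 37%, 72%, and 58% figures given in the text.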

Figure 1.

Group-level data on average frequency count of each key caregiver-produced variable of interest, including casual repetitions, intentional repetitions, alerting phrases, and total events (composite of all three categories) per segment in the RMS condition (light gray bars) and the No-RMS condition (dark gray bars). Average data collapsed across the nine families for each variable of interest are displayed. Error bars represent standard error of the mean. RMS = remote microphone system. *p < .05.

Figure 2.

Family-level data on average frequency count of the three key caregiver-produced and human-coded variables of interest per segment in the RMS condition (light gray bars) and the No-RMS condition (dark gray bars). The composite of all three variables (referred to as total events) is represented in the top panel. Each distinct variable (intentional repetitions, casual repetitions, and alerting phrases, respectively) is represented in the bottom three panels. Individual data for each of the nine families are displayed. RMS = remote microphone system; Fam = family.

Primary Analyses for Key Child Vocalizations

In contrast, no significant between-condition differences were found for any indices of child vocalizations. The mean rate of child vocalizations did not significantly differ during the No-RMS (M = 2.75, SD = 0.69) and RMS (M = 2.56, SD = 0.63) weekends, t(8) = −0.84, p = .426 (d = −0.28). The average duration of vocalizations (in seconds) produced by key children was also not different between No-RMS (M = 0.83, SD = 0.08) and RMS (M = 0.84, SD = 0.08) conditions, t(8) = 0.75, p = .475 (d = 0.25). Vocal reciprocity between child–caregiver dyads additionally did not significantly differ during the No-RMS (M = 0.21, SD = 0.07) versus RMS (M = 0.22, SD = 0.05) weekends, t(8) = 1.00, p = .347 (d = 0.33). Finally, vocal complexity as indexed by ACPU − C + V scores did not differ according to No-RMS (M = 4.40, SD = 0.93) versus RMS (M = 4.36, SD = 0.97) conditions, t(8) = −0.27, p = .792 (d = −0.09). Thus, the vocalizations of children with hearing loss did not differ according to whether or not the family was using an RMS. Effect sizes for between-condition differences on key child vocal metrics were all negligible or small in magnitude.
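Across both sets of analyses, the reported effect sizes appear to match the within-family standardized difference d_z, which for a paired design equals t / √n. The Python check below divides each reported t by √9 (nine families, df = 8); this reading of how d was computed is an inference from the reported numbers, not a formula stated in the study.

```python
import math

N_FAMILIES = 9  # each paired test has df = n - 1 = 8

# (measure, reported t, reported d) taken from the Results text.
REPORTED_TESTS = [
    ("total caregiver events", -6.04, -2.01),
    ("casual repetitions", -4.12, -1.37),
    ("intentional repetitions", -4.12, -1.37),
    ("alerting phrases", -8.19, -2.73),
    ("child vocal rate", -0.84, -0.28),
    ("child vocal duration", 0.75, 0.25),
    ("vocal reciprocity (RVC)", 1.00, 0.33),
    ("vocal complexity (ACPU - C + V)", -0.27, -0.09),
]

for measure, t, d in REPORTED_TESTS:
    d_z = t / math.sqrt(N_FAMILIES)
    print(f"{measure}: t / sqrt(n) = {d_z:+.2f}, reported d = {d:+.2f}")
```

Every reported d agrees with t / 3 to two decimal places, which is consistent with d_z.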

Discussion

Previous studies of RMS use have suggested a number of potential benefits related to the use of this technology in the homes of children with hearing loss, including children's enhanced responsiveness, attention, and receptive language skills, as well as general improvements in speech access (Benítez-Barrera et al., 2018; Curran, Walker, Roush, & Spratford, 2019; Moeller et al., 1996). This study extended prior work by exploring the effects of RMS use on several aspects of caregiver communication and characteristics of child vocalizations. Findings from this investigation provide increased empirical support for RMS use in the home setting, specifically providing evidence to corroborate prior parent reports that RMS use eases caregiver communication in the home environment.

Effects of RMS Use on Caregiver Communication

We expected that, by improving the SNR through the use of an RMS in the home environment, caregivers' verbal communication would be more effectively conveyed to children with hearing loss, necessitating fewer repetitions of caregiver talk and reduced use of caregiver phrases intended to alert or secure attention from their children. Findings were consistent with this hypothesis. There was a significant reduction in caregivers' use of repetitive verbal behaviors intended to clarify their speech or refocus the attention of their children when using an RMS versus when not using an RMS, with large effects. This finding supports prior parental reports suggesting that RMS use in the home results in children appearing more alert, engaged, and attentive in daily activities and conversations.

Of note, the observed reduction in caregivers' use of casual repetitions in this study was somewhat unexpected. This category of human-coded repetitions was presumed to represent caregivers' natural use of repetition in everyday conversation, whereas intentional repetitions captured caregivers' purposeful efforts to clarify their previous utterances for the benefit of their children with hearing loss. One potential explanation is that caregivers had developed a habit of repeating, or had been instructed by professionals to repeat, even seemingly unimportant speech around their children with hearing loss. By that logic, the observed decrease in casual repetitions might further reflect caregivers' awareness that frequent clarifying behaviors are not necessary while using the RMS.

Lack of Effects for Key Child Vocalizations

We also hypothesized that access to more clearly conveyed caregiver communication might translate into increased vocal rate, duration, reciprocity, and/or complexity for children with hearing loss. This hypothesis was not borne out. Rather, the results suggest that RMS use had no immediate, short-term effects on child vocalization rate, vocalization duration, vocal reciprocity (RVC), or vocal complexity metrics. The small effect sizes for these analyses suggest that the null results were not simply a matter of limited statistical power. More extended use of the RMS, and thus greater exposure to clear caregiver talk, may be necessary to affect children's vocalizations and broader language development.

Implications for Practice

The current findings have potential implications for clinical practice. A growing literature suggests that RMS use can facilitate caregiver–child communication. However, there is little evidence that such technology is commonly recommended for home use. Given the collective literature documenting the benefits of RMS use in homes, including this study, audiologists might consider extending their RMS recommendations to the homes of children with hearing loss.

Results from this investigation point to several potential advantages of RMS use during caregiver–child communication at home. First, the reduction in caregivers' verbal repetitions and alerting phrases with RMS use might explain a finding of Benítez-Barrera et al. (2018). In that study, caregivers talked more from a distance with their children who had hearing loss when using RMS technology. Perhaps caregivers using an RMS no longer needed to repeat themselves as often when speaking at some distance from their child, which encouraged them to talk more from a distance. Furthermore, caregivers made fewer efforts to secure their children's attention with the RMS. This suggests that conversations might be easier with the RMS than without it, which could contribute to more natural family interactions. Finally, by minimizing the need to repeat or clarify speech, the RMS might reduce caregivers' frustration or impatience while interacting with their children with hearing loss.

Future research should examine the possible disadvantages of caregiver RMS use in the home. For example, talking to children more often from a distance could be less beneficial, or even detrimental, for some children. These include children who rely on visual cues to support speech processing and/or language comprehension. They also include children who rely on contextual cues and caregiver scaffolding to identify the referent(s) relevant to a communicative exchange. In addition, access to caregiver talk that is not child directed might be distracting when the child is trying to attend to another speaker (e.g., a sibling or friend). Overhearing can benefit a child's language development (Akhtar, 2005; Akhtar et al., 2001; Floor & Akhtar, 2006). However, for children with normal hearing, overheard speech typically arrives at a lower intensity than voices that are closer and directed at them. Overheard speech received via an RMS could be equal to or even louder than the speech the child is trying to hear. It might therefore be more likely to interfere with the child's ability to engage effectively with more proximal communication partners.

Limitations

This study had several limitations. First, the participant sample was relatively small, which limits the extent to which results might generalize to the larger population of children with hearing loss. Second, the short-term nature of this study likely limited our ability to detect effects on more distal child language skills. Distal skills are those that are broader than, or developmentally downstream from, the most direct, proximal target of treatment, which in this case was clear access to caregiver talk (Gersten et al., 2005; Yoder, Bottema-Beutel, Woynaroski, Chandrasekhar, & Sandbank, 2013). Finally, the environmental constraints imposed on participating families (i.e., no other adult relatives/visitors or older siblings at home, minimal outdoor activities permitted) limited our ability to observe a broader range of natural caregiver–child communication dynamics during the study weekends.

Conclusion and Future Directions

In summary, this study provides additional support for the use of RMSs in the homes of children with hearing loss. Findings from this investigation suggest that RMS technology eases caregiver communication directed to children with hearing loss at home. Examination of RMS use over a longer duration of time (beyond the two weekends observed in this study) and with a larger number of families could provide further insight into possible long-term effects of RMS use on children's vocal and verbal development.

Acknowledgments

This work was supported by Phonak LLC and Phonak AG (PI: A. M. Tharpe) and by Vanderbilt Clinical and Translational Science Award UL1 TR002243 (awarded to E. Thompson) from the National Center for Advancing Translational Sciences. Additional support came from the Nashville Scottish Rite Foundation, Inc. (Department of Hearing & Speech Sciences) and, in part, from National Institutes of Health grant U54 HD083211 (PI: Neul). The contents of this article are solely the responsibility of the authors and do not necessarily represent the official views of the funding agencies. We thank Rene Gifford and Paul Yoder for their guidance and collaboration on this project. We also thank our volunteer coders (Sarah Alfieri, Sarah Chu, Nichole Dwyer, and Aimee Grisham) for their assistance. Finally, we are grateful to Kim Coulter from the LENA Foundation for her input in the design phase of this study.


Footnotes

Benítez-Barrera et al. (2018) encountered technological limitations in using LENA as a measurement system. Because of these limitations, eligibility criteria for participating families included having caregivers of opposite biological sex (male and female) and having no other adults (i.e., individuals older than 12 years of age) living at home. Families also had to agree to avoid visitors at home during recording weekends. LENA software analysis does not distinguish between adults of the same sex. Consequently, any additional adult talkers in the home (aside from the caregivers) could have erroneously contributed to the LENA-generated total adult word count for the key caregiver. Refer to Benítez-Barrera et al. (2018) for additional detail.

Because of technical problems with the DLP for Families 5 and 6, only one and five segments, respectively, met the coding criteria for the No-RMS far-distance condition.

References

- Akhtar N. (2005). The robustness of learning through overhearing. Developmental Science, 8(2), 199–209.

- Akhtar N., Jipson J., & Callanan M. A. (2001). Learning words through overhearing. Child Development, 72(2), 416–430.

- American National Standards Institute. (2010). Acoustical performance criteria, design requirements, and guidelines for schools, Parts 1 and 2 (ANSI S12.60-2010). New York, NY: Acoustical Society of America.

- Anderson K. L., & Goldstein H. (2004). Speech perception benefits of FM and infrared devices to children with hearing aids in a typical classroom. Language, Speech, and Hearing Services in Schools, 35(2), 169–184.

- Benítez-Barrera C., Angley G., & Tharpe A. M. (2018). Remote microphone use in the homes of children with hearing loss: Impact on parent and child language production. Journal of Speech, Language, and Hearing Research, 61(2), 399–409.

- Benítez-Barrera C., Thompson E., Angley G., Woynaroski T., & Tharpe A. M. (2019). Remote microphone use at home: Impact on child-directed speech. Journal of Speech, Language, and Hearing Research, 62(6), 2002–2008.

- Bloom P. (2000). How children learn the meanings of words. Cambridge, MA: MIT Press.

- Chapman R. S. (2000). Children's language learning: An interactionist perspective. The Journal of Child Psychology and Psychiatry and Allied Disciplines, 41(1), 33–54.

- Curran M., Walker E., Roush P., & Spratford M. (2019). Using propensity-score matching to address clinical questions: The impact of remote-microphone systems on language outcomes in children who are hard of hearing. Journal of Speech, Language, and Hearing Research, 62(3), 564–576.

- Eisenberg L. S., Shannon R. V., Martinez A. S., Wygonski J., & Boothroyd A. (2000). Speech recognition with reduced spectral cues as a function of age. The Journal of the Acoustical Society of America, 107(5), 2704–2710.

- Floor P., & Akhtar N. (2006). Can 18-month-old infants learn words by listening in on conversations? Infancy, 9(3), 327–339.

- Flynn T. S., Flynn M. C., & Gregory M. (2005). The FM advantage in the real classroom. Journal of Educational Audiology, 12, 37–44.

- Fusaroli R., Weed E., Fein D., & Naigles L. (2019). Hearing me hearing you: Reciprocal effects between child and parent language in autism and typical development. Cognition, 183, 1–18.

- Gersten R., Fuchs L., Compton D., Coyne M., Greenwood C., & Innocenti M. (2005). Quality indicators for group experimental and quasi-experimental research in special education. Exceptional Children, 71, 149–164.

- Harbison A. L., Woynaroski T. G., Tapp J., Wade J. W., Warlaumont A. S., & Yoder P. J. (2018). A new measure of child vocal reciprocity in children with autism spectrum disorder. Autism Research, 11(6), 903–915.

- Hart B., & Risley T. R. (1995). Meaningful differences in the everyday experience of young American children. Baltimore, MD: Brookes.

- Hoff E., & Naigles L. (2002). How children use input to acquire a lexicon. Child Development, 73(2), 418–433.

- Hofferth S. L., & Sandberg J. F. (2001). How American children spend their time. Journal of Marriage and Family, 63(2), 295–308.

- Masur E. F., Flynn V., & Eichorst D. L. (2005). Maternal responsive and directive behaviours and utterances as predictors of children's lexical development. Journal of Child Language, 32(1), 63–91.

- McDuffie A., & Yoder P. (2010). Types of parent verbal responsiveness that predict language in young children with autism spectrum disorder. Journal of Speech, Language, and Hearing Research, 53(4), 1026–1039.

- Moeller M. P., Donaghy K. F., Beauchaine K. L., Lewis D. E., & Stelmachowicz P. G. (1996). Longitudinal study of FM system use in nonacademic settings: Effects on language development. Ear and Hearing, 17(1), 28–41.

- Mulla I. (2011). Preschool use of RMS amplification technology (Unpublished doctoral dissertation). University of Manchester, England.

- Mulla I., & McCracken W. (2014). Frequency modulation for preschoolers with hearing loss. Seminars in Hearing, 35(3), 206–216.

- Newman R., Rowe M., & Ratner N. B. (2016). Input and uptake at 7 months predicts toddler vocabulary: The role of child-directed speech and infant processing skills in language development. Journal of Child Language, 43(5), 1158–1173.

- Oller D. K., Niyogi P., Gray S., Richards J. A., Gilkerson J., Xu D., … Warren S. F. (2010). Automated vocal analysis of naturalistic recordings from children with autism, language delay, and typical development. Proceedings of the National Academy of Sciences, 107(30), 13354–13359.

- Pearsons K. S., Bennett R. L., & Fidell S. (1977). Speech levels in various noise environments (Report No. EPA-600/1-77-025). Washington, DC: U.S. Environmental Protection Agency.

- Rowe M. L. (2008). Child-directed speech: Relation to socioeconomic status, knowledge of child development and child vocabulary skill. Journal of Child Language, 35, 185–205.

- Rowe M. L. (2012). A longitudinal investigation of the role of quantity and quality of child-directed speech in vocabulary development. Child Development, 83(5), 1762–1774.

- Sameroff A. (2010). A unified theory of development: A dialectic integration of nature and nurture. Child Development, 81(1), 6–22.

- Schafer E. C., & Thibodeau L. M. (2006). Speech recognition in noise in children with cochlear implants while listening in bilateral, bimodal, and FM-system arrangements. American Journal of Audiology, 15(2), 114–126.

- Shneidman L. A., Arroyo M. E., Levine S., & Goldin-Meadow S. (2013). What counts as effective input for word learning? Journal of Child Language, 40, 672–686.

- Singer J. D., & Willett J. B. (2003). Applied longitudinal data analysis: Modeling change and event occurrence. Oxford, United Kingdom: Oxford University Press.

- Snyder-McLean L. (1990). Communication development in the first two years: A transactional process. Zero to Three, 11, 13–20.

- Tapp J. (2015). MOOSES for Windows (Version 4.8.8.0). Retrieved from http://mooses.vueinnovations.com

- Weisleder A., & Fernald A. (2013). Talking to children matters: Early language experience strengthens processing and builds vocabulary. Psychological Science, 24(11), 2143–2152.

- Werker J. F., & Yeung H. H. (2005). Infant speech perception bootstraps word learning. Trends in Cognitive Sciences, 9(11), 519–527.

- Woynaroski T., Oller D. K., Keceli-Kaysili B., Xu D., Richards J. A., Gilkerson J., … Yoder P. J. (2017). The stability and validity of automated vocal analysis in minimally verbal preschoolers with autism spectrum disorder. Autism Research, 10, 508–519.

- Woynaroski T., Yoder P. J., Fey M. E., & Warren S. F. (2014). A transactional model of spoken vocabulary variation in toddlers with intellectual disabilities. Journal of Speech, Language, and Hearing Research, 57(5), 1754–1763.

- Wu Y. H., Stangl E., Chipara O., Hasan S. S., Welhaven A., & Oleson J. (2018). Characteristics of real-world signal to noise ratios and speech listening situations of older adults with mild to moderate hearing loss. Ear and Hearing, 39(2), 293–304.

- Xu D., Richards J., & Gilkerson J. (2014). Automated analysis of child phonetic production using naturalistic recordings. Journal of Speech, Language, and Hearing Research, 57, 1638–1650.

- Xu D., Yapanel U., & Gray S. (2009). Reliability of the LENA™ language environment analysis system in young children's natural home environment. Retrieved from http://www.lenafoundation.org/TechReport.aspx/Reliability/LTR-05-2

- Yoder P. J., Bottema-Beutel K., Woynaroski T., Chandrasekhar R., & Sandbank M. (2013). Social communication intervention effects vary by dependent variable type in preschoolers with autism spectrum disorders. Evidence-based Communication Assessment and Intervention, 7(4), 150–174.

- Yoder P., Wade J., Tapp J., Warlaumont A., & Harbison A. L. (2016). Contingencies from LENA data. Retrieved from https://github.com/HomeBankCode/LENA_contingencies

- Zimmerman F. J., Gilkerson J., Richards J. A., Christakis D. A., Xu D., Gray S., & Yapanel U. (2009). Teaching by listening: The importance of adult-child conversations to language development. Pediatrics, 124(1), 342–349.