Abstract

Despite a growing body of research devoted to the study of how humans encode environmental patterns, there is still no clear consensus about the nature of the neurocognitive mechanisms underpinning statistical learning, nor about what factors constrain or promote its emergence across individuals, species, and learning situations. Based on a review of research examining the roles of input modality and domain, input structure and complexity, attention, neuroanatomical bases, ontogeny, and phylogeny, ten core principles are proposed. Specifically, there exist two sets of neurocognitive mechanisms underlying statistical learning. First, a “suite” of associative-based, automatic, modality-specific learning mechanisms is mediated by the general principle of cortical plasticity, which results in improved processing and perceptual facilitation of encountered stimuli. Second, an attention-dependent system, mediated by the prefrontal cortex and related attentional and working memory networks, can modulate or gate learning and is necessary for learning nonadjacent dependencies and for integrating global patterns across time. This theoretical framework helps clarify conflicting research findings and provides the basis for future empirical and theoretical endeavors.

Keywords: Statistical learning, Implicit learning, Sequential learning, Artificial grammar learning

1. Introduction

Many events in our daily existence occur not completely randomly or haphazardly, but with a certain amount of structure, regularity, and predictability. Because of the ubiquitous presence of structured patterns in human action, perception, and cognition, the ability to process and represent these patterns is of paramount importance. This type of structured pattern learning – which is likely a crucial foundational ability of all higher-level organisms, and possibly of many lower-level ones as well – has been studied under a variety of terms that arguably tap into aspects of the same underlying construct, including “implicit learning” (A.S. Reber, 1967), “sequence learning” (Nissen and Bullemer, 1987), “sequential learning” (Conway and Christiansen, 2001), and “statistical learning” (Saffran et al., 1996).

Despite gains made in understanding how humans and other organisms learn patterned input, we are still far from an understanding of the neurocognitive mechanisms underlying learning and what factors constrain its emergence across individuals, species, and learning situations. What is needed is an integration of research findings across six key areas that have generally been treated in isolation:

Input modality and domain. How does learning proceed for inputs across different perceptual modalities (e.g., vision vs. audition) or domains (e.g., language vs. music)? Does the learning of patterns in one modality or domain involve the same neurocognitive mechanisms as learning in a different modality or domain?

Input structure and complexity. What mechanisms underpin the learning of different types of input patterns, ranging from associations between adjacent or co-occurring elements to more complex “global” patterns that require integration of information over longer time-scales?

Role of attention. To what extent are attention and related cognitive processes necessary for statistical learning to occur? In turn, does the outcome of learning modulate attention?

Neural bases. What is the underlying neuroanatomy of statistical learning? Is there a single, common learning and processing network? Or are there different sets of regions or networks that are used for different types of learning situations?

Ontogenetic constraints. How does statistical learning emerge and change across the lifespan? Do different aspects of learning have different developmental trajectories?

Phylogenetic constraints. Which aspects of statistical learning are shared versus unique across different animal species? What drives variation or differences across species?

Although a number of theoretical perspectives exist (e.g., Arciuli, 2017; Aslin and Newport, 2012; Daltrozzo and Conway, 2014; Forkstam and Petersson, 2005; Frost et al., 2015; Janacsek and Nemeth, 2012; Keele et al., 2003; Perruchet and Pacton, 2006; Pothos, 2007; P.J. Reber, 2013; A.S. Reber, 2003; Savalia et al., 2016; Seger, 1994; Thiessen and Erickson, 2013), none of them currently addresses all of these questions sufficiently. In this paper, we begin by defining in more detail what is meant by “statistical learning” and how it relates to other similarly used terms. A lack of clarity and consensus regarding terminology has proven to be a barrier to integrating findings across different research areas; furthermore, the use of certain terms carries premature assumptions about the mechanisms that characterize the construct of interest. Following this discussion, we provide a selective review and synthesis of research related to the six areas described above. Then, based on this review, we outline ten core principles that arise from an integration of the reviewed research and provide the beginnings of what could be construed as a unified theory of statistical learning. To preview, we propose there exist two primary sets of neurocognitive mechanisms – one based on the general principle of cortical plasticity and the other a specialized neural system that can provide top-down modulation of learning – with each affected and constrained by different factors in different ways. Only by taking into account the operation of these two mechanisms will we understand the neurocognitive bases of statistical learning and how they are constrained by factors such as input modality, complexity, ontogeny, and phylogeny.

2. Preliminary considerations

Statistical learning research began in earnest with the seminal study by Saffran et al. (1996), who showed that 8-month-old infants were sensitive to the statistical structure inherent in a short (2-minute) auditory nonword speech stream. In this study, statistical structure was operationalized as the strength of transitional probabilities between adjacent syllables (i.e., the likelihood of a given syllable occurring next based on the current syllable).
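As a minimal sketch of this operationalization, the Python snippet below computes forward transitional probabilities, TP(Y | X) = frequency(XY) / frequency(X), over a syllable stream. The three trisyllabic “words”, their order, and the function name are hypothetical illustrations, not the stimuli or analysis code of Saffran et al. (1996).

```python
from collections import Counter

def transitional_probabilities(stream):
    """Forward TP(next | current) = count(current, next) / count(current as a predecessor)."""
    pair_counts = Counter(zip(stream, stream[1:]))
    # Count a syllable only when something follows it, so TPs out of each syllable sum to 1.
    first_counts = Counter(stream[:-1])
    return {(a, b): n / first_counts[a] for (a, b), n in pair_counts.items()}

# Hypothetical trisyllabic "words" concatenated with no pauses between them.
words = {"A": ["tu", "pi", "ro"], "B": ["go", "la", "bu"], "C": ["bi", "da", "ku"]}
order = ["A", "B", "C", "A", "C", "B", "A", "B", "C", "B", "A", "C"]
stream = [syl for w in order for syl in words[w]]

tps = transitional_probabilities(stream)
print(tps[("tu", "pi")])  # within-word transition: 1.0
print(tps[("ro", "go")])  # across a word boundary: 0.5
```

Within-word transitions are perfectly predictable here, whereas transitions that span a word boundary are not; this contrast is the statistical cue that the segmentation paradigm manipulates.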

Subsequent research examined the generality of this phenomenon, demonstrating learning not only in human infants (Kirkham et al., 2002) but also in adults, and not only with speech-like input but also with non-linguistic sound sequences (Saffran et al., 1999) and visual scenes (Fiser and Aslin, 2001). Thus, statistical learning was quickly recognized as a general-purpose mechanism, robust across tasks, situations, and perhaps even species (Conway and Christiansen, 2001). It should be noted that the term “statistical learning” is limited in that it would seem to imply that learning and processing of input patterns consists of making statistical computations. Although the input in learning tasks can often be described in terms of statistical regularities (e.g., transitional probabilities between stimuli), it is as yet an open question whether the brain in fact learns and represents statistical regularities per se, or whether what is learned is something different, such as memory for frequently occurring clusters of items or “chunks” (Orban et al., 2008; Perruchet and Pacton, 2006; Slone and Johnson, 2018). This point will be returned to in section 3.2.

For decades prior to these initial studies, another area of research had been focused on a similar phenomenon, known as “implicit learning” (A.S. Reber, 1967, 1989). Implicit learning is generally defined as “learning without awareness” (Cleeremans et al., 1998), but it has been argued that statistical learning and implicit learning both refer to the same general learning phenomenon (Batterink et al., 2019; Christiansen, 2018; Perruchet and Pacton, 2006). Indeed, both reflect a type of incidental pattern learning (i.e., learning occurring without intention or instruction). For this reason, we regard the similarities between statistical learning and implicit learning research as an indication that there may be core processes that contribute to both, and as such, we look for insights that may be gained by considering research findings from both areas (and other areas of research as well).

Of course, even the early research on implicit learning did not occur in a vacuum. Behaviorist approaches to associative learning and conditioning provided an important historical context for the implicit learning work (e.g., see Pearce and Bouton, 2001; Rescorla and Wagner, 1972). Gureckis and Love (2007) in fact argued that much of what the field has been studying under the label of statistical learning is embodied by behaviorist principles of conditioning and associative learning (cf. Goddard, 2018). Additional neurophysiological antecedents of statistical learning include Hebb’s principles of learning and plasticity (i.e., the “Hebbian learning rule”; see Cooper, 2005; Hebb, 1949) and the demonstration that the development of primary visual cortex depends on environmental experience (e.g., Blakemore and Cooper, 1970). While acknowledging these important precursors, this review focuses primarily on the findings from the implicit learning and statistical learning literatures per se.

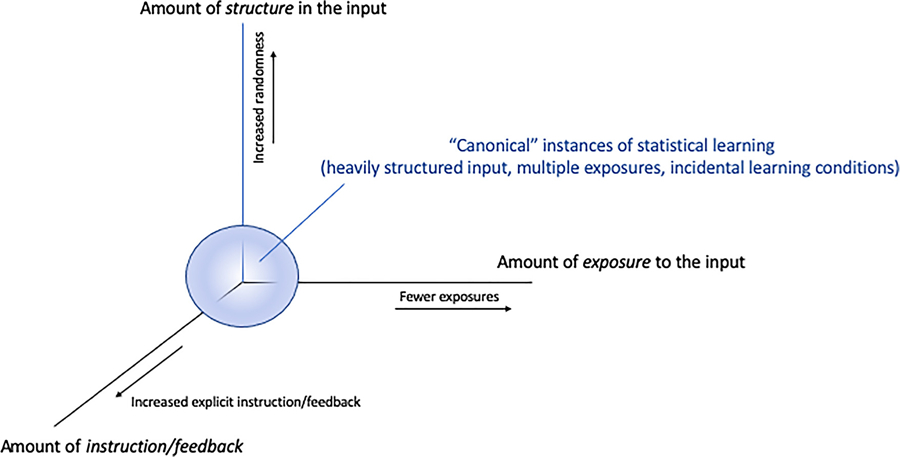

For simplicity, the term “statistical learning” is used in the remainder of this paper to refer to incidental learning of structured patterns encountered in the environment. To constrain and operationalize the definition of statistical learning and provide added focus to this review, we delineate the task or situational characteristics of interest. Specifically, we propose three orthogonal dimensions that can help clarify the construct of statistical learning. These dimensions include: the level of structure present in input (i.e., random versus heavily structured sequences); the amount of exposure that is involved (i.e., a single exposure versus multiple instances); and the extent to which task situations provide explicit instruction or overt feedback (i.e., incidental versus intentional learning situations). These three dimensions are depicted graphically in Fig. 1. They create a “task space” containing a continuum of distributed points in which tasks (or situations) that are closer to the zero-point (0,0,0) can be thought of as being more characteristic of statistical learning compared to tasks at the periphery (note, though, that technically speaking there is no actual zero-point, as there could always be a situation with more exposures, more structure, etc.; in that case the zero-point might be more appropriately regarded as a mathematical asymptote or singularity). Thus, the situations that we consider to be “canonical” for statistical learning have the following characteristics: structured input patterns presented over multiple exposures under incidental conditions in which there is no instruction to learn or attend to the patterns per se. Note that, from this perspective, statistical learning is not a categorical phenomenon but a graded, continuous one in which certain tasks or situations might elicit such learning more so than others.

Fig. 1.

Three orthogonal dimensions outlining a proposed task space for statistical learning.

Likewise, we can consider the types of tasks that have been used to investigate statistical learning and related learning phenomena. The primary tasks include the artificial grammar learning (AGL) task (A.S. Reber, 1967), the serial reaction time (SRT) task (Nissen and Bullemer, 1987), and the word segmentation task and its variants (Fiser and Aslin, 2001; Saffran et al., 1996). Table 1 differentiates these three tasks in terms of the measure of learning, the input structure and perceptual modality of the stimuli, and whether or not the task requires generalization to new, previously unencountered items. Despite some differences, what is common across tasks is that participants receive repeated exposure to structured patterns, usually under incidental learning conditions and without overt feedback. The general finding is that under such conditions, participants show facilitation of or sensitivity to the underlying structure, and this often – though not always – is accompanied by an inability to verbalize one’s knowledge of what has been learned.

Table 1.

Summary of three common paradigms for studying statistical learning.

| | AGL | SRT | Segmentation |
|---|---|---|---|
| Measure of learning | Explicit judgment of grammaticality (usually) | Reaction times (though response accuracy can also be used) | Explicit judgment of familiarity (usually) |
| Input structure | Defined by artificial grammar | Repeating sequences (usually) | Defined by transitional probabilities |
| Modality | Perceptual (any) | Visual-motor (mostly, though auditory stimuli can also be used) | Perceptual (any) |
| Generalization at test? | Yes | No | No |

3. Six key questions

3.1. How is learning affected by input modality and domain?

For some time now, it has been known that statistical learning is not tied to a single perceptual modality or cognitive domain. Indeed, even a cursory review of findings from the three canonical tasks (Table 1) shows that learning can occur with auditory language-like material (Saffran et al., 1996), strings of letters (A.S. Reber, 1967), non-language auditory input such as pure tones (Saffran et al., 1999) or sequences of musical timbres (Tillmann and McAdams, 2004), visual scenes and shapes (Fiser and Aslin, 2001), visual-motor patterns (Nissen and Bullemer, 1987), and tactile input (Conway and Christiansen, 2005). The demonstration of learning across such a widespread set of domains and input types immediately prompted suggestions that statistical learning should be thought of as a unitary, domain-general learning phenomenon that applies across a wide range of situations (Kirkham et al., 2002). That is, it is logically possible that statistical learning is governed by a single mechanism or neurocognitive principle that applies across a wide range of input types.

On the other hand, a series of studies showed that although structured patterns can be learned across various perceptual domains, learning proceeded differently in different modalities, suggesting the involvement of multiple modality-specific learning mechanisms (Conway and Christiansen, 2005, 2006, 2009; Emberson et al., 2011). For instance, adult participants showed higher levels of learning for auditory serial patterns than for visual serial patterns, despite the patterns across perceptual modalities being equated in terms of low-level perceptual factors (Conway and Christiansen, 2005). In addition, the rate of presentation of serial input patterns had opposite effects on auditory and visual learning, with auditory and visual learning excelling at fast and slow presentation rates, respectively (Emberson et al., 2011). Moreover, different patterns presented in multiple streams of stimuli could be learned simultaneously and independently of each other, as long as the input streams were instantiated in different perceptual modalities (visual versus auditory) or perceptual categories (shapes versus colors; tones versus nonwords) (Conway and Christiansen, 2006). Given such findings, Conway (2005) and Conway and Christiansen (2005, 2006) proposed that aspects of statistical learning might share similarities with perceptual priming or perceptual learning (Conway et al., 2007), in which networks of neurons in modality-specific brain regions show decreased activity and improved facilitation for items that are similar to those previously experienced (P.J. Reber et al., 1998; Schacter and Badgaiyan, 2001).

Furthermore, Conway (2005) suggested that although learning is implemented by a set of common computational principles or algorithms that exist across perceptual domains, there are processing differences within each perceptual modality that affect learning; for example, audition and vision are differentially adept at picking up information distributed in time and space, respectively. Note, too, that from this perspective, it is not only the sensory modality that is important (e.g., auditory) but also the type of domain or category (e.g., verbal material vs. nonlinguistic tones; Conway and Christiansen, 2006).

However, under a purely domain-specific viewpoint, learning in one perceptual modality or domain would have no bearing on, or relation to, learning and processing in another perceptual modality or domain. This does not appear to be the case. For instance, a number of studies have demonstrated that input presented in one perceptual modality can affect pattern learning in a second, concurrently presented modality (Cunillera et al., 2010; Mitchel and Weiss, 2011; Mitchel et al., 2014; Seitz et al., 2007; Thiessen, 2010). This implies an ability for learners to integrate information across different modalities or domains, a challenge for modality-specific processing accounts. Note, though, that in all of these demonstrations of cross-modal learning effects, the stimuli in the two perceptual domains were presented simultaneously. A recent study showed that when cross-modal dependencies are created between sequentially presented input (e.g., a visual stimulus that is followed by an auditory stimulus with a certain statistical regularity), cross-modal learning does not occur (Walk and Conway, 2016); only sequential dependencies within the same perceptual modality were learnable by adult participants. One possibility, therefore, is that learning such sequential cross-modal patterns might require additional cognitive resources, such as attention or working memory, to focus on the dependencies in question and link them together across time.

It is also important to note that the motor modality can contribute to learning. For instance, a number of studies have investigated the role of perceptual versus motor learning using the SRT task (e.g., Nemeth et al., 2009; Song et al., 2008). The general finding is that motor learning can make independent contributions to learning over and above that which occurs perceptually (Goschke, 1998). In addition, motor-response learning, but not visual perceptual learning, is unaffected by sensory manipulations of the stimuli (e.g., changes to stimulus colors; Song et al., 2008). Furthermore, motor learning and perceptual sequence learning appear to follow different time-courses of consolidation (Hallgató et al., 2013), further suggesting that the motor and perceptual modalities should be thought of as independent learning systems.

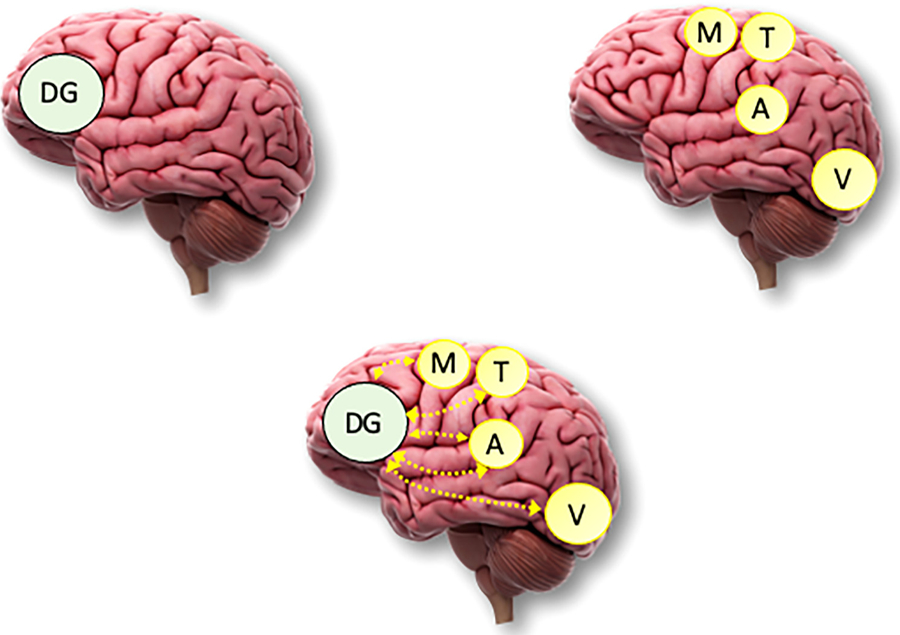

In sum, that input modality can affect statistical learning is no longer questioned (e.g., Frost et al., 2015). However, exactly how these at least partially separable and independent modality-specific learning mechanisms (e.g., visual, auditory, tactile, motor) operate in a multimodal environment is still not completely understood. Learning likely involves a combination of modality-specific and domain-general processes that work together (e.g., Conway, 2005; Batterink et al., 2019; Keele et al., 2003). Fig. 2 illustrates three candidate architectures corresponding to domain-general, modality/domain-specific, and combined general/specific accounts. As an example of how a combined domain-general and domain-specific account might be instantiated in the brain, Conway and Pisoni (2008) reviewed evidence that statistical learning is associated both with modality-specific perceptual/motor brain regions (such as occipital regions for learning visual input patterns, auditory processing regions for learning auditory input, and motor and premotor cortex for motor learning) and with areas such as the prefrontal cortex (PFC), which is involved in processing input across a variety of perceptual modalities and domains. Likewise, Tecumseh and Martins (2014) proposed that for the processing of sequential patterns, the PFC, and specifically Broca’s area, mediates domain-general predictive processing mechanisms that interact with posterior brain networks that mediate modality-specific input processing. Finally, Frost et al. (2015) proposed a similar interaction between domain-specific and domain-general processing, though they emphasized the hippocampus, basal ganglia, and thalamus, rather than the PFC, as contributing to multimodal and domain-general processing.

Fig. 2.

Three candidate architectures for how input modality and domain interact with statistical learning. (Top left): A domain-general (DG) account posits a single, unitary mechanism that implements statistical learning for all input modalities and domains. Here, the frontal lobe of the brain (prefrontal cortex) is offered as one potential domain-general brain region, though other areas are also likely candidates. (Top right): A modality/domain-specific account posits multiple, relatively independent mechanisms, each handling a specific type of input such as auditory (A), visual (V), tactile (T), and motor (M) patterns. For simplicity, only these four modality/domain-specific regions are illustrated, though others would be posited to exist as well. (Bottom): Finally, perhaps the most viable account is one that combines domain-general and domain-specific architectures, here shown with connections between modality-specific brain regions and the prefrontal cortex.

More work is needed to specify to what extent different processing modes or mechanisms reflect a combination of modality-specific and domain-general learning under different situations. It is likely that certain cognitive processing resources such as selective attention and cognitive control may modulate or gate learning (e.g., Turk-Browne et al., 2005), and may be necessary for learning multimodal patterns across a temporal sequence. The role of attention as well as the neural bases of statistical pattern learning will be addressed further in subsequent sections (i.e., 3.3 and 3.4); but first, we turn to the question of input structure and complexity.

3.2. How is learning affected by the type of input structure?

Related to, though independent of, the question of input modality is the question of input structure and complexity: what types of regularities and patterns can be learned, and what learning mechanisms are used to learn different types of structures? This question was central to much of early implicit learning research. Cleeremans et al. (1998) summarized the varying approaches emphasizing different aspects of learning, including distributional or statistical approaches (based on associative learning mechanisms as embodied, for instance, by neural network models; Cleeremans and McClelland, 1991), exemplar-based approaches (in which newly encountered exemplars are compared for similarity to previously memorized whole items; Vokey and Brooks, 1992), fragment-based or chunking approaches (in which newly encountered exemplars are evaluated according to the extent to which they contain short chunks that were observed in previous exemplars; Perruchet and Pacteau, 1990), and abstractionist approaches (in which the structure of the relationships among stimuli is represented independently of the stimuli’s surface features, perhaps taking the form of IF-THEN statements or algebraic rules; Marcus et al., 1999; A.S. Reber, 1989). Artificial grammar learning research using “balanced chunk strength designs”, in which chunk/fragment information was varied independently of the rules of the artificial grammar, showed that the learning of fragment or chunk information can be at least partly dissociated from the learning of grammatical rules (Knowlton and Squire, 1996). That is, the two types of patterns can be learned independently of each other and are subserved by apparently distinct neural and cognitive mechanisms (Lieberman et al., 2004).
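One common way of quantifying the chunk information that balanced designs control for is associative chunk strength: the average number of times a test string’s bigrams and trigrams appeared across the training strings. The sketch below follows that general idea only; the training and test strings are hypothetical, and published studies differ in details (for example, whether chunks at the beginning and end of a string are scored separately).

```python
from collections import Counter

def ngrams(s, n):
    """All contiguous substrings of length n."""
    return [s[i:i + n] for i in range(len(s) - n + 1)]

def associative_chunk_strength(test_item, training_items):
    """Mean training frequency of the test item's bigrams and trigrams."""
    freq = Counter()
    for item in training_items:
        for n in (2, 3):
            freq.update(ngrams(item, n))
    chunks = ngrams(test_item, 2) + ngrams(test_item, 3)
    return sum(freq[c] for c in chunks) / len(chunks)

training = ["MTV", "MTTV", "VXVR", "MTVR"]  # hypothetical training strings
print(associative_chunk_strength("MTVX", training))  # 1.8
print(associative_chunk_strength("RVXM", training))  # 0.2
```

Neither test string occurred during training, yet one shares many familiar fragments with the training set and the other almost none; a balanced design equates this score across grammatical and ungrammatical test items so that rule knowledge and chunk knowledge can be separated.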

What exactly constitutes a grammatical “rule” has been the subject of some debate (Altmann and Dienes, 1999; Marcus et al., 1999). One possibility is that what is regarded as a rule is actually a type of “perceptual primitive” (Endress et al., 2009). Perceptual primitives include repetition-based structures (e.g., “ga-ti-ti” and “li-na-na” follow the same ABB repetition pattern), which are highly salient to learners, as well as edge-based positional regularities, where items occurring at the beginning and end of sequences tend to be learned more effectively than items in the middle. These perceptual primitives are so named because they appear to be a type of regularity that is detected and learned on the basis of low-level perceptual mechanisms, common across both ontogeny and phylogeny (though this does not rule out the possibility that other non-perceptual memory systems might also contribute to their learning). From a slightly different perspective, following from the research on balanced chunk-strength designs (Knowlton and Squire, 1996), what is referred to as rule-based information might include positional constraints (such as which stimuli are allowable in initial, middle, or final positions of a sequence), which are not fully captured by an analysis of local chunk information alone. Thus, the umbrella term “rule” likely refers to more than one type of pattern (perceptual primitives and positional information being two likely candidates).
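To make the repetition-based example concrete, the hypothetical helper below maps a syllable sequence onto an abstract identity pattern by labeling each new syllable with the next letter. It is only a descriptive encoding of the input, assumed here for illustration; it is not a model of the perceptual mechanism proposed by Endress et al. (2009).

```python
def repetition_pattern(syllables):
    """Map a syllable sequence to an abstract identity pattern, e.g. ga-ti-ti -> 'ABB'."""
    labels = {}
    pattern = []
    for syl in syllables:
        if syl not in labels:
            # Each previously unseen syllable gets the next letter: A, B, C, ...
            labels[syl] = chr(ord("A") + len(labels))
        pattern.append(labels[syl])
    return "".join(pattern)

print(repetition_pattern(["ga", "ti", "ti"]))  # ABB
print(repetition_pattern(["li", "na", "na"]))  # ABB
print(repetition_pattern(["li", "na", "li"]))  # ABA
```

The first two items share no syllables, yet they receive the same description, which is why a repetition-based regularity can generalize to novel items without any shared surface chunks.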

Apart from rule-based information, one important question raised by Perruchet and Pacton (2006; cf. Christiansen, 2018) is whether chunk-based learning and statistical learning are the same or independent processes. Research on implicit learning has generally stressed chunk-based approaches, whereas research on statistical learning has stressed statistical computations. Chunking models such as PARSER assume that attention to frequently co-occurring units results in an improved memory trace for those items, resulting in the formation of a chunk (Perruchet and Vinter, 1998). In a chunk-based view, chunking mechanisms are the primary way that learning proceeds; sensitivity to statistical relations is not learned per se but rather emerges as a byproduct of the chunking process. On the other hand, it is possible that “chunks” are formed through the detection of transitional probabilities; in such a view, a chunk is the outgrowth of statistical learning processes, being the learned association between two items connected by high transitional probabilities. Several studies have attempted to clarify which mechanism governs pattern learning, with most of the evidence to date favoring chunk-based mechanisms (Giroux and Rey, 2009; Fiser and Aslin, 2005; Perruchet and Poulin-Charronnat, 2012; Orban et al., 2008), though at least one study appears to support a statistical learning approach (Endress and Mehler, 2009). It is possible that both chunk-based and statistical computations are available to learners and that which process is used depends on the learning conditions, such as the availability of temporal cues, which might promote chunking (Franco and Destrebecqz, 2012). It is also possible that forming a chunk among items separated in time (i.e., as part of a temporal sequence) has different cognitive requirements than forming a chunk of spatially arranged, simultaneously presented stimuli.
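The second view, in which chunks fall out of transitional probabilities, can be sketched with a simple heuristic: place a boundary wherever the forward TP is lower than the TPs on either side of it. This is an illustrative simplification assumed here, not PARSER (which is chunk-based) and not a specific published model; the stream and helper name are hypothetical.

```python
from collections import Counter

def segment_at_tp_dips(stream):
    """Split a syllable stream wherever the forward TP is a local minimum."""
    pairs = list(zip(stream, stream[1:]))
    pair_counts = Counter(pairs)
    first_counts = Counter(stream[:-1])
    tps = [pair_counts[p] / first_counts[p[0]] for p in pairs]

    chunks, current = [], [stream[0]]
    for i, tp in enumerate(tps):
        left = tps[i - 1] if i > 0 else float("inf")
        right = tps[i + 1] if i + 1 < len(tps) else float("inf")
        if tp < left and tp < right:  # TP dip: close the current chunk
            chunks.append("-".join(current))
            current = []
        current.append(stream[i + 1])
    chunks.append("-".join(current))
    return chunks

# Hypothetical stream built from three trisyllabic "words" with no pauses.
words = {"A": ["tu", "pi", "ro"], "B": ["go", "la", "bu"], "C": ["bi", "da", "ku"]}
order = ["A", "B", "C", "A", "C", "B", "A", "B", "C", "B", "A", "C"]
stream = [syl for w in order for syl in words[w]]

print(segment_at_tp_dips(stream)[:4])  # ['tu-pi-ro', 'go-la-bu', 'bi-da-ku', 'tu-pi-ro']
```

Because a strict local-minimum rule ignores ties and absolute TP values, it can miss boundaries in noisier input; it is meant only to show how chunk-like units can emerge from TP computations alone.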

In addition to the distinctions among chunks, statistical associations, and rules, pattern structure can be quantified in other ways. For instance, patterns can differ in relation to how many preceding items are needed to predict the subsequent item in a sequence: for a 1st order dependency, only one preceding item is needed to determine the next item, whereas for a 2nd order dependency, two preceding items are required, etc. (Gómez, 1997). Likewise, in the statistical learning literature, complexity can be manipulated in terms of the strength of the transitional probabilities between items, the size of the “words” or chunks in word segmentation tasks (e.g., pairs of items or triplets), and the hierarchical arrangement of chunks in visual learning tasks. Similarly, for the serial reaction time task, complexity can be manipulated in terms of the type of sequence pattern (fixed versus probabilistic; first-order conditional versus second-order conditional; Remillard, 2008) and the length of the sequence.
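The notion of dependency order can be checked mechanically for deterministic sequences. The hypothetical helper below finds the smallest number of preceding items k such that every k-item context is always followed by the same item; the toy sequences are illustrative assumptions, not materials from Gómez (1997) or Remillard (2008), and probabilistic sequences would need a graded measure instead.

```python
from collections import defaultdict

def minimal_order(seq, max_order=4):
    """Smallest k such that every k-item context in seq is always followed by the same item.

    Returns None if no k up to max_order makes the sequence deterministic.
    """
    for k in range(1, max_order + 1):
        followers = defaultdict(set)
        for i in range(k, len(seq)):
            followers[tuple(seq[i - k:i])].add(seq[i])
        if all(len(nexts) == 1 for nexts in followers.values()):
            return k
    return None

first_order = [1, 2, 3, 4] * 5   # each item alone predicts the next one
second_order = [1, 2, 1, 3] * 5  # 1 is followed by 2 or 3; (2, 1) vs. (3, 1) decides which
print(minimal_order(first_order), minimal_order(second_order))  # 1 2
```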

Within the artificial grammar learning literature, there have been attempts to quantify the level of complexity of input patterns (e.g., Pothos, 2010; Schiff and Katan, 2014; van den Bos and Poletiek, 2008). For instance, Wilson et al. (2013) used a metric that quantifies the complexity of a finite-state grammar by dividing the number of different stimulus elements in the grammar by the number of unique transitions between stimulus elements. This gives a measure of the grammar’s linear predictability or determinism, where a value of 1.0 denotes a perfectly deterministic grammar (i.e., a linear chain) and a lower value denotes a certain level of unpredictability (i.e., branching within the grammar). Wilson et al. (2013) used this metric to examine grammar learning of varying levels of complexity in humans and nonhumans, a point that will be returned to in section 3.6 (see also Heimbauer et al., 2018). Pothos (2010) proposed an entropy model for quantifying complexity in AGL, borrowing concepts from information theory. Essentially, Shannon entropy increases with the number of possible outcomes and with how evenly probability is spread across them (for n equally likely outcomes, it equals log2 n); the greater the entropy, the greater the uncertainty. Pothos (2010) found that this measure of entropy correlated with artificial grammar learning performance (greater levels of entropy were associated with lower levels of learning); entropy was also correlated with most other standard measures of complexity and regularity, such as associative chunk strength. Likewise, Schiff and Katan (2014) used a measure of topological entropy to assess 56 previously published AGL studies incorporating a total of 10 different artificial grammars. They found that their measure of entropy was significantly correlated with learning performance, despite the fact that the studies were carried out under different conditions and using different types of stimuli.
In sum, it is clear that, regardless of the specific measure used, increased pattern complexity is associated with decreased learning performance on AGL tasks.
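The two kinds of measure described above can be sketched as follows. The counting convention for the element-to-transition ratio is an assumption made here (the transition out of a START state is counted, but START is not an element) so that a linear chain scores exactly 1.0, as the text describes; the entropy function is plain Shannon entropy, not the specific model of Pothos (2010) or the topological entropy of Schiff and Katan (2014). The two toy grammars are hypothetical.

```python
import math

def linearity(transitions):
    """Distinct stimulus elements divided by distinct transitions.

    Assumption: transitions out of a special "START" state are counted, but START
    itself is not an element, so a linear chain scores exactly 1.0.
    """
    unique = set(transitions)
    elements = {b for _, b in unique} | {a for a, _ in unique if a != "START"}
    return len(elements) / len(unique)

def shannon_entropy(probs):
    """Shannon entropy in bits: sum of p * log2(1/p) over nonzero probabilities."""
    return sum(p * math.log2(1 / p) for p in probs if p > 0)

chain = [("START", "A"), ("A", "B"), ("B", "C"), ("C", "D")]
branching = [("START", "A"), ("A", "B"), ("A", "C"), ("B", "D"), ("C", "D")]
print(linearity(chain), linearity(branching))  # 1.0 0.8
print(shannon_entropy([1.0]), shannon_entropy([0.5, 0.5]), shannon_entropy([0.25] * 4))  # 0.0 1.0 2.0
```

Adding a single branch lowers the ratio below 1.0, and a choice point with two equiprobable continuations carries 1 bit of uncertainty where a deterministic one carries none, matching the direction of the complexity effects summarized above.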

For patterns occurring in input sequences, it may also be possible to differentiate the types of structures in terms of three primary types of patterns: fixed sequences, where items in the sequence occur in an arbitrary, inflexible order (e.g., a phone number); statistical-based patterns, where the sequence consists of frequently co-occurring elements such as pairs or triplets defined by transitional probabilities; and hierarchical-based sequences, in which primitive units are combined to create more complex units, as is the case in natural language and other complex domains (Conway and Christiansen, 2001). Supporting this proposal, recent empirical work using the SRT task suggests that the learning of fixed sequences and the learning of statistical-based patterns show partially different characteristics, at both the behavioral and neural levels (e.g., Kóbor et al., 2018; Simor et al., 2019). For instance, statistical learning appears to occur relatively rapidly and plateaus quickly, whereas sequence learning shows a slower, gradual improvement across learning episodes (Simor et al., 2019). Furthermore, the two types of learning are reflected by different ERP components (Kóbor et al., 2018). These are interesting findings because, from a certain perspective, the learning of both a fixed sequence and a statistical-based one could be construed as involving the learning of transitional probabilities inherent in the sequences, with a fixed sequence having transitional probabilities of 1.0 and statistical-based sequences containing transitional probability values less than one. However, the evidence suggests that at least partially separate mechanisms underlie the learning of these two types of patterns.

One way to distinguish fixed sequences and statistical-based patterns from hierarchical patterns is by considering the difference between adjacent and nonadjacent dependencies (Gómez, 2002; Remillard, 2008). Adjacent dependencies consist of regularities between two items that immediately follow each other (e.g., A–B), whereas nonadjacent dependencies consist of regularities between two items separated by one or more intervening elements (e.g., A-x-B). The distinction between adjacent and nonadjacent dependencies is similar to the distinction made in formal linguistics between finite-state grammars, which generally include adjacent-item dependencies and are thought to be inadequate to describe natural language, and phrase structure grammars, which incorporate nonadjacent dependencies, can have a recursive or hierarchical structure, and are computationally more powerful and arguably better able to characterize natural language (Fitch and Friederici, 2012; Tecumseh and Martins, 2014; Jager and Rogers, 2012).
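The adjacent/nonadjacent contrast can be illustrated by estimating transitional probabilities at different lags. In the hypothetical A-x-B stream below (the frames, fillers, and ordering are assumptions made for illustration), the item immediately after A is unpredictable, while the item two positions later is fully determined.

```python
from collections import Counter


def tp_at_lag(seq, lag):
    """P(item at t + lag | item at t), estimated from counts in seq."""
    pair_counts = Counter(zip(seq, seq[lag:]))
    first_counts = Counter(seq[:-lag])
    return {(a, b): n / first_counts[a] for (a, b), n in pair_counts.items()}


# Hypothetical A-x-B frames: A predicts B and C predicts D, with a varying middle element.
frames = [("A", "B"), ("C", "D")]
fillers = ["x", "y", "z"]
stream = []
for i in range(6):
    first, last = frames[i % 2]
    stream += [first, fillers[(i // 2) % len(fillers)], last]

adjacent = tp_at_lag(stream, 1)
nonadjacent = tp_at_lag(stream, 2)
print(round(adjacent[("A", "x")], 3))  # 0.333: the next item after A is unpredictable
print(nonadjacent[("A", "B")])         # 1.0: the item two steps after A is always B
```

With n equally frequent fillers, the lag-1 probability after A in this design is 1/n while the lag-2 probability stays at 1.0, so the dependency is only visible to a learner that can bridge the intervening element.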

Because nonadjacent dependency learning is thought to be a hallmark of human language and possibly other aspects of cognition (Christiansen and Chater, 2015), it is not surprising that there has been much recent interest in this type of learning (e.g., Creel et al., 2004; Deocampo et al., 2019; Frost and Monaghan, 2016; Gómez, 2002; Lany and Gómez, 2008; Pacton and Perruchet, 2008; Romberg and Saffran, 2013; Vuong et al., 2016). The learning of nonadjacent dependencies is often difficult to demonstrate in the lab and appears generally to require that the nonadjacent structure be highlighted – such as by manipulation of the transitional probabilities or through perceptual cues – or that endogenous attention be properly oriented to the dependencies in question (de Diego-Balaguer et al., 2016; Gómez, 2002; Newport and Aslin, 2004). More specifically, de Diego-Balaguer et al. (2016) suggested that the learning of nonadjacent dependencies is likely possible only later in development, when endogenous attentional mechanisms become available to the learner. Research also suggests that the learning of nonadjacent dependencies recruits neural networks that are separate from those involved in the learning of adjacent dependencies (more on the neural bases of nonadjacent dependency learning in section 3.5).

Taken together, it may therefore be possible to think about different types of input structures that vary in complexity. Table 2 presents a rough taxonomy of different types of patterns, with purportedly “simpler” patterns (i.e., easier to learn) at the top and “more complex” patterns toward the bottom. Pattern complexity can thus be thought of as lying along a continuum from more serial, linear, and adjacent-item associations to dependencies that are more variable, nonadjacent, and/or contain recursive or hierarchical structure (cf. Dehaene et al., 2015; Petkov and Wilson, 2012). Additional research is needed to specify the cognitive, computational, and neural prerequisites for learning patterns of varying structure and complexity, as few studies have systematically investigated these factors.

Table 2.

A rough taxonomy of input structures learnable through statistical learning.

| Input structure (ordered from simpler to more complex) |
|---|
| Perceptual primitives (repetitions, etc.) |
| Serial transitions |
| Chunks |
| Finite state grammars (of varying complexity) |
| Nonadjacent dependencies |
| Recursive / hierarchical / phrase structure |

3.3. What is the role of attention in learning?

Although statistical learning generally occurs under “incidental” conditions (i.e., without direct instruction or feedback during the learning process), this does not necessarily imply that attention plays no role. Before examining the role of attention in statistical learning, it is necessary to briefly define and discuss the construct of attention as well as related concepts such as automaticity, working memory, and conscious awareness.

An important distinction can be made between exogenous and endogenous attention (e.g., Chica et al., 2013). Exogenous attention is a bottom-up process in which cognitive resources are captured by salient stimuli in the environment; endogenous attention is a top-down process that provides a way to select which stimuli to process and which to ignore. Related to attention is the notion of automaticity. A cognitive process can be considered automatic if it occurs with little effort and requires few attentional resources (Hasher and Zacks, 1979). More specifically, it has been suggested that automatic behaviors or cognitive processes usually have the following four characteristics (Bargh, 1994): there is a general lack of awareness of the cognitive process that is occurring; there is no intentional initiation of the cognitive process in question; the cognitive process is difficult to stop or alter once it has been initiated; and the cognitive process has a low mental load. Thus, in regard to the role of attention in statistical learning, one question is whether statistical learning can be considered an automatic process (i.e., whether it proceeds without awareness, is initiated without intention, cannot be controlled once it has started, and has a low mental load or cost). A separate question is what roles, if any, endogenous and exogenous attention play in learning.

It is important to point out that (endogenous) attention is closely linked to the construct of working memory (Awh et al., 2006). For instance, one common definition of working memory is that it refers to processes that “hold a limited amount of information temporarily in a heightened state of availability for use in ongoing information processing” (e.g., Cowan, 1988, 2017). Thus, by this definition, working memory and (endogenous) attention are closely intertwined as the items that are in a heightened state of availability are necessarily within the focus of attention.

Finally, a question related to attention and working memory is the extent to which statistical learning results in knowledge that is accessible to conscious awareness. Attention and awareness are related – e.g., the involvement of attention is more likely to lead to conscious awareness – but they are not synonymous (Lamme, 2003; Norman et al., 2013). Awareness can emerge when the activation strength or quality of the representations reaches a sufficient level (Cleeremans, 2011), regardless of how much attention was originally deployed during the learning task. The extent to which learning proceeds intentionally versus incidentally can be manipulated by task instructions, which in turn can influence the extent to which the learned knowledge is accessible to conscious awareness (Bertels et al., 2015). Pattern awareness can also emerge naturally during the learning process, even when no instructions are given to promote explicit strategies or conscious awareness (Singh et al., 2017). Decades of research on implicit learning have demonstrated that some aspects of learning can occur without the involvement of explicit strategies or conscious awareness (e.g., Song et al., 2007; Turk-Browne et al., 2009). To keep the remaining discussion focused, we concentrate primarily on the roles of attention and working memory in relation to statistical learning.

Understanding the role of attention and working memory during statistical learning is not straightforward and is in fact a matter of some debate (e.g., Janacsek and Nemeth, 2013, 2015; Martini et al., 2015). On the one hand, it seems plausible that a larger working memory capacity provides a bigger “window” in which to encode and bind stimuli together across a temporal sequence, which could in turn improve learning of the contained regularities (Janacsek and Nemeth, 2013). However, the empirical findings do not consistently demonstrate a functional relationship between working memory capacity and sequence learning ability as measured by the SRT task (Janacsek and Nemeth, 2013). One possible reason is that, if one takes a multi-component view of statistical learning (e.g., Arciuli, 2017; Daltrozzo and Conway, 2014), each separate component in the system may depend upon attention or working memory to different degrees. For instance, the evidence suggests that working memory may be more closely related to explicit than to implicit forms of sequence learning, as argued by Janacsek and Nemeth (2013). Likewise, the construct of working memory is multi-faceted, so different aspects of working memory may be more or less important for statistical learning. For instance, visual-spatial working memory may be closely tied to performance on statistical learning tasks that require visual-spatial encoding but less so to tasks involving the learning of verbal patterns (Janacsek and Nemeth, 2013). In addition, for any given learning task, different participants may represent and conceptualize the task differently in terms of how they rely upon verbal, visual, or other types of representations (Martini et al., 2013). This variability in how participants represent the learning tasks could therefore help explain the lack of strong correlations observed between working memory capacity and performance on statistical learning tasks.

Further illustrating the complex relationships among these constructs is a study by Hendricks et al. (2013) that attempted to examine the role of working memory in statistical learning. Hendricks et al. (2013) used a concurrent load task in conjunction with an artificial grammar learning paradigm to examine whether the learning of grammatical rules versus chunk-based information was automatic (i.e., whether it required working memory resources). The concurrent load task involved participants viewing six random numbers on the screen, maintaining the numbers in memory while they subsequently viewed a letter string generated from an artificial grammar, and then typing the six numbers from memory. This concurrent load task was given to participants during the exposure phase of the AGL task, the test phase, or both. Performance was compared to that of a control group that did the AGL task without the concurrent load task. The results showed that the learning of chunk- or fragment-based information could proceed with minimal cognitive requirements (that is, the concurrent load task did not impair performance), suggesting that this form of learning can occur relatively automatically and under incidental learning conditions. On the other hand, the expression of rule-based knowledge (at test following learning) required a certain amount of cognitive resources (that is, the concurrent load task given during the test phase interfered with test performance for rule-based knowledge). These findings were interpreted as suggesting that the learning of fragment or chunk information is mediated by a form of implicit “perceptual fluency” in which perception of items is facilitated via experience (e.g., Chang and Knowlton, 2004), whereas the learning and expression of rule-based regularities is an explicit process involving something akin to “hypothesis generation” (e.g., Dulany et al., 1984).

Note that this conjecture appears to contradict earlier work suggesting that chunk learning and rule learning occur via declarative and procedural memory, respectively (Lieberman et al., 2004). Briefly, declarative memory refers to the recall and recognition of facts and events (Squire, 2004), whereas procedural memory is a nondeclarative and largely implicit form of learning (Ullman, 2004). The relationship between statistical learning and these two forms of memory will be taken up in section 3.5. For now, it is important to point out that the findings of Hendricks et al. (2013) and Lieberman et al. (2004) do not necessarily contradict one another, as it is possible that chunk learning can proceed via multiple routes, using either perceptual-based or declarative memory-based forms of encoding. Likewise, rule-based learning may rely on either procedural memory or hypothesis generation depending on the particular task, learning context, or individual. As mentioned earlier, “rules” in the present case refer to any information in the stimulus sequences that denotes grammaticality apart from bigram and trigram information, such as positional regularities (e.g., which stimuli are allowed in different positions of a sequence) or possibly even nonadjacent regularities as dictated by the grammar.
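The bigram and trigram information referred to above is often summarized as associative chunk strength. The Python sketch below uses one common operationalization, assumed here rather than taken from the studies discussed: the mean frequency, across the training strings, of a test string’s bigrams and trigrams. The training strings are hypothetical.

```python
from collections import Counter


def fragments(item, n):
    """All contiguous n-letter fragments (bigrams for n=2, trigrams for n=3)."""
    return [item[i:i + n] for i in range(len(item) - n + 1)]


def associative_chunk_strength(test_item, training_items):
    """Mean training frequency of the test item's bigrams and trigrams."""
    counts = Counter()
    for item in training_items:
        for n in (2, 3):
            counts.update(fragments(item, n))
    test_fragments = fragments(test_item, 2) + fragments(test_item, 3)
    return sum(counts[f] for f in test_fragments) / len(test_fragments)


training = ["ABCD", "ABCE", "BCDE"]  # hypothetical training strings
print(associative_chunk_strength("ABCD", training))  # 2.2: familiar fragments
print(associative_chunk_strength("DCBA", training))  # 0.0: no familiar fragments
```

Anything that separates grammatical from ungrammatical test strings beyond a score of this kind would count as “rule” information in the sense used above.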

Interestingly, the concurrent load task also interfered with performance in a transfer condition in which the underlying rules were consistent but the stimulus set was changed (Hendricks et al., 2013). That is, knowledge of the underlying grammatical regularities could be transferred to a non-trained letter set but only if there were sufficient cognitive resources available during test (i.e., only if there was no concurrent load task). It appears, then, that some aspects of statistical learning require attention / working memory (e.g., using hypothesis-generation strategies, expressing rule-based knowledge at test, and transferring knowledge to novel stimulus domains), whereas others appear to be automatic (e.g., perceptual fluency of chunk-based information). However, it should be noted that it is not certain that the Hendricks et al. (2013) concurrent load task completely eliminated attentional resources; some amount of attention may still have been available during learning.

Another way to manipulate attention is by capitalizing on its selective nature. Turk-Browne et al. (2005) did so by creating two interleaved streams of differently colored visual regularities and then instructing participants to detect repetitions in one stream but not the other. Across several experiments, Turk-Browne et al. (2005) determined that learning of the statistical regularities only occurred for the attended stream, not for the unattended stream. They concluded that visual learning of sequential regularities both is and is not automatic: it requires attention in the sense that the regularities are only learned if the stimuli are selectively attended; but learning is automatic in the sense that it can occur incidentally (i.e., in the face of a cover task that provided no information about the presence of regularities) and does not necessarily result in conscious awareness of what was learned. Selective attention therefore may act as a “gate” for statistical learning, at least for certain learning situations and task paradigms (e.g., Baker et al., 2004; Emberson et al., 2011; Toro et al., 2005; Turk-Browne et al., 2005).
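A small Python sketch can show why selecting one stream changes the statistics available to a learner. Two colour streams of hypothetical triplets are interleaved at random; in the merged stream, items of one colour are often separated by items of the other, whereas the red-only subsequence preserves the within-triplet transitions. The 50/50 interleaving, the triplet structure, and treating attention as a simple filter are all assumptions for illustration, not the design of Turk-Browne et al. (2005) or a model of attention.

```python
import random
from collections import Counter


def transitional_probabilities(seq):
    """Forward TP: P(b | a) = count of a followed by b / count of a with a successor."""
    pair_counts = Counter(zip(seq, seq[1:]))
    first_counts = Counter(seq[:-1])
    return {(a, b): n / first_counts[a] for (a, b), n in pair_counts.items()}


def triplet_stream(triplets, n_blocks, rng):
    """Concatenate shuffled copies of the triplets, so items within a triplet always co-occur."""
    stream = []
    for _ in range(n_blocks):
        order = list(triplets)
        rng.shuffle(order)
        for triplet in order:
            stream.extend(triplet)
    return stream


rng = random.Random(0)
red = triplet_stream([("r1", "r2", "r3"), ("r4", "r5", "r6")], 20, rng)
green = triplet_stream([("g1", "g2", "g3"), ("g4", "g5", "g6")], 20, rng)

# Interleave the two colour streams at random while preserving each stream's own order.
merged, i, j = [], 0, 0
while i < len(red) or j < len(green):
    if j == len(green) or (i < len(red) and rng.random() < 0.5):
        merged.append(red[i])
        i += 1
    else:
        merged.append(green[j])
        j += 1

# "Attending" to red is modelled here simply as ignoring the green items.
attended = [item for item in merged if item.startswith("r")]
print(transitional_probabilities(attended)[("r1", "r2")])         # 1.0
print(transitional_probabilities(merged).get(("r1", "r2"), 0.0))  # typically well below 1.0
```

The filtered subsequence recovers the triplet regularity exactly, whereas in the unfiltered stream the same regularity is spread across intervening items of the other colour.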

Interestingly, there appears to be a reciprocal relationship, in which learning itself can modulate attention (Alamia and Zénon, 2016; Hard et al., 2018; Zhao et al., 2013). That is, attention affects learning by facilitating encoding of particular aspects of the input; and yet, learning itself can affect attention, for instance by creating a “pop-out” effect, drawing (exogenous) attention to input that violates the expectations that have been generated based on previous experience (Kristjansson et al., 2007). In support of this idea, Sengupta et al. (2018) recently found that functional connectivity between brain networks supporting attention and working memory processes changed following exposure to the statistical regularities presented in an artificial language.

Attention also plays a crucial role in the framework of de Diego-Balaguer et al. (2016), in which top-down control of attention (i.e., endogenous attention) is a prerequisite for learning nonadjacent but not adjacent sequential dependencies. Consistent with such a dissociation are findings from Romberg and Saffran (2013). They constructed artificial languages in which the first and third items of three-word phrases had deterministic nonadjacent relationships, while the intervening elements had probabilistic adjacent relationships with the two surrounding items. Although adults were able to demonstrate learning of both the adjacent and nonadjacent dependencies, higher confidence ratings on nonadjacent trials were associated with higher accuracy, whereas greater confidence on adjacent trials was not associated with greater accuracy. This may suggest that the nonadjacent dependencies were learned explicitly while the adjacent dependencies were learned by more implicit means. Interestingly, Turk-Browne et al. (2005) pointed out that, because their design involved two interleaved streams of stimuli, learning in many cases occurred over intervening items, thus requiring learning of nonadjacent dependencies. Together these findings are consistent with the idea that selective attention is perhaps most needed for learning nonadjacent dependencies across a temporal stream (de Diego-Balaguer et al., 2016).

A different perspective, however, stresses not only the necessity of attention for pattern learning, but also its sufficiency (Pacton and Perruchet, 2008; Perruchet and Vinter, 1998). Under this view, the learning of patterns in input is a natural consequence of attentional processing due to the laws of memory and associative learning. From this perspective, attention may be necessary not only for learning nonadjacent but also adjacent dependencies (Pacton and Perruchet, 2008). However, as reviewed previously, it appears that adjacent-item chunks can also be learned without the availability of attentional resources (Hendricks et al., 2013).

Other studies are consistent with the notion that while some aspects of learning require attention or an intention to learn, other aspects can indeed proceed automatically and under incidental conditions. For instance, Bekinschtein et al. (2009) (see also Wacongne et al., 2011) used a local-global paradigm in which auditory sequences of pure tones containing either local (within-sequence) or global (across-sequence) violations were presented. In “XXXXXY”, the “Y” is a local violation because it violates the expected pattern of “X”s. However, after repeated exposures to “XXXXXY”, if the sequence “XXXXXX” is then encountered, the final “X” in the sequence becomes a global violation because a “Y” is expected after exposure to the “XXXXXY” sequences. Bekinschtein et al. (2009) found that the local deviants were processed automatically and non-consciously; responses to these violations were immune to attentional manipulations and were even elicited in coma patients as measured by event-related potentials (ERPs). On the other hand, the global deviants required explicit or controlled processing: they were accompanied by conscious awareness in healthy participants, and in coma patients the ERPs related to these deviants were not detected.
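The logic of the local-global design can be expressed as a pair of classification rules. In the Python sketch below, a trial is a local deviant if its final tone differs from the tone before it, and a global deviant if it differs from the most frequent sequence in its block. The 8:2 block proportions and the “most frequent sequence” rule are assumptions made for illustration; the sketch captures only the logical dissociation, not the ERP measures.

```python
from collections import Counter


def classify(trial, block):
    """Return (local_deviant, global_deviant) for one tone sequence within a block."""
    # Local: the final tone differs from the tone just before it.
    local_deviant = trial[-1] != trial[-2]
    # Global (assumed rule): the sequence differs from the block's most frequent sequence.
    habitual = Counter(block).most_common(1)[0][0]
    global_deviant = trial != habitual
    return local_deviant, global_deviant


block = ["XXXXXY"] * 8 + ["XXXXXX"] * 2  # hypothetical block proportions
print(classify("XXXXXY", block))  # (True, False): local deviant, global standard
print(classify("XXXXXX", block))  # (False, True): local standard, global deviant
```

Detecting the local deviant requires only the last two tones, whereas detecting the global deviant requires comparing the whole sequence with regularities accumulated across trials.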

Thus, it appears likely that both “implicit” (i.e., attention-independent / automatic) and “explicit” (i.e., attention-dependent) learning processes operate alongside each other. Such “dual-theory” approaches are common in the literature. For instance, Dale et al. (2012) proposed that during a learning episode, implicit associative or reactive learning occurs initially, which leads to the formulation of predictive “wagers” that steadily become more accurate and that in turn lead to explicit awareness of the learned patterns. This perspective is also consistent with research using a predictor-target paradigm in which visual “target” stimuli are predicted to varying degrees by “high” or “low” predictor stimuli; although learning is incidental, over the course of the experiment adults and children display the emergence of a P300-like ERP component elicited by the high predictor stimulus (Jost et al., 2015). This ERP component is strongly related to participants’ conscious awareness of the predictor-target contingency (Singh et al., 2017). This attention-based ERP component is distinct from participants’ learning as assessed through reaction times, which appeared to index learning of the contingencies occurring outside attention and awareness (Singh et al., 2018).

Similarly, Batterink et al. (2015) proposed that implicit and explicit learning systems operate in parallel, with the implicit system more or less always engaged but the explicit system optional. They suggested that in the standard familiarity task often used in statistical learning research, the familiarity judgement reflects explicit knowledge, but that implicit learning can also be displayed and measured indirectly using reaction times or possibly ERPs. Another dual-system approach is that of Keele et al. (2003), who proposed a theoretical perspective based on a review of findings from the SRT task. In their view, a dorsal neural system mediates implicit learning of unimodal or unidimensional stimuli, whereas a ventral system mediates the learning of cross-modal or cross-dimensional input, which can involve both implicit and explicit learning mechanisms. This last tenet is consistent with Walk and Conway (2016), who proposed that implicit learning is sufficient for learning unimodal sequential regularities (i.e., sequential dependencies between items in the same perceptual modality) but that additional cognitive resources such as selective attention or working memory may be required to learn cross-modal sequential patterns. Daltrozzo and Conway (2014) likewise proposed a two-system view of pattern learning: a bottom-up implicit-perceptual learning system that develops early in life and encodes the surface structure of input; and a second system that is dependent on attention, develops later in life, and relies to a greater extent on top-down information to encode and represent more complex patterns.

Finally, insight can also be gained from a related though somewhat distinct research literature on category learning. Smith and colleagues (Smith and Grossman, 2008; Smith et al., 1998) proposed that there are multiple types of category-learning systems: rule-based and similarity-based. They proposed that rule-based category learning involves selective attention and working memory processes to enable a decision to be made about whether an item belongs to a particular category. On the other hand, similarity-based categorization processes can be mediated by the involvement of both explicit and implicit learning processes. The implicit learning system involves processes such as perceptual fluency and perceptual priming; that is, one decides whether an item belongs to the category in question in terms of the ease with which the perceptual features of the item can be processed (Smith and Grossman, 2008).

Taken together, we believe the evidence supports the idea that statistical learning reflects both implicit / automatic and attention-dependent / explicit aspects of processing. The attention-dependent learning system shares similarities with Baars’s (1988, 2005) global workspace theory of consciousness, in which consciousness is construed as a limited-capacity attentional spotlight that “enables access between brain functions that are otherwise separate” (Baars, 2005, p. 46). The mode of learning (explicit vs. implicit) likely depends at least in part on the type of input to be learned; some types of structures appear to require attention to adequately process and encode the patterns, such as nonadjacent dependencies (de Diego-Balaguer et al., 2016), global patterns (Bekinschtein et al., 2009), cross-modal dependencies (Keele et al., 2003), and rule-based processing (Hendricks et al., 2013; Smith et al., 1998). Other factors that may affect the involvement of automatic versus attention-dependent mechanisms include whether learning is assessed through direct/explicit judgments versus indirect measures such as reaction times (Batterink et al., 2015) and whether learning requires generalization or transfer to new stimulus sets (Hendricks et al., 2013). We furthermore propose that the automatic learning system is “obligatory” in the sense that it is always active, whereas the attention-dependent system is optional and is engaged only when selective attention and working memory are brought to bear on the learning task (Batterink et al., 2015) via endogenous or exogenous attentional mechanisms. It is also possible that the involvement of one or both systems is not an “either-or” phenomenon but is graded; as learning proceeds, exogenous attention can be increasingly drawn to the regularities in question (Alamia and Zénon, 2016), which would gradually engage the attention-dependent learning system. Thus, the relative involvement of automatic versus attention-dependent learning mechanisms likely changes across a learning episode or across multiple episodes.

3.4. An interim summary

Based on the preceding three sections, it is clear that statistical learning 1) consists of both modality-specific and domain-general learning mechanisms; 2) can be used to learn patterns along a continuum of complexity from relatively simple to more complex structures; and 3) involves both implicit / automatic and explicit / attention-dependent modes of learning. We furthermore propose that these three factors “line up”, so to speak, suggesting the involvement of two primary modes of learning (see Fig. 3). Specifically, statistical learning is mediated by at least two distinct processing mechanisms. The first is the classic “implicit” learning system, which proceeds automatically and with minimal attentional requirements, is likely a perceptual-based process, and can mediate the learning of local, unimodal, and associative-based patterns. The second is an explicit, attention-dependent system that is necessary for learning nonadjacent, global, and crossmodal dependencies; it is also needed when transferring learning to new stimulus sets or contexts. These two systems likely operate in parallel (Batterink et al., 2015), and each can be more or less engaged depending on the learning requirements and situation (Daltrozzo and Conway, 2014).

Fig. 3.

Depiction of two distinct learning mechanisms, “implicit” and “explicit”, in relation to the factors of input modality, input complexity, and attention.

This dual-system approach has similarities to the distinction between “model-based” and “model-free” reinforcement learning (e.g., Savalia et al., 2016; Kurdi et al., 2019), which contrasts learning that is goal-directed, flexible, and reliant upon long-term knowledge (i.e., model-based learning) with learning that is data-driven, automatic, and relatively inflexible (i.e., model-free learning). Together, these two types of learning provide complementary ways to learn about and interact with the environment.

However, it should be pointed out that the two systems may operate competitively, rather than independently or cooperatively, as has been suggested up to this point. For instance, some studies have shown that executive control processes may have an antagonistic relationship with implicit pattern learning (Ambrus et al., 2019; Filoteo et al., 2010; Nemeth et al., 2013; Tóth et al., 2017; Virag et al., 2015). Virag et al. (2015) observed a negative correlation between executive functions and implicit learning as measured by a variant of the SRT task, and Nemeth et al. (2013) used hypnosis to reduce explicit attentional processes in their subjects, which resulted in improved learning on the SRT task. At present it is not clear under what conditions these two systems act synergistically versus antagonistically, but one possibility is that it depends on the task requirements. Most of the studies cited above that observed a competitive relationship used the SRT task, which differs in a number of important respects from other statistical learning tasks such as the segmentation task or AGL task. One important difference is that the SRT task requires a motor response on each trial, whereas the other two tasks have a passive exposure phase that involves perceptual learning or memory-based encoding without a motor response. It is possible that top-down attentional control interferes with the type of trial-by-trial stimulus-response learning that the SRT task elicits; it is currently an open question whether this holds true for learning during other types of statistical learning tasks that do not involve the same type of stimulus-response learning.

In the next section (3.5), we review the neuroanatomical bases of statistical learning through the lens of the operation of these two proposed learning systems. Then, we examine how each type of learning system might change across human development and may differ across phylogeny (sections 3.6 and 3.7) before concluding with a summary of ten core principles that flesh out the neurocognitive mechanisms underlying statistical learning (section 4).

3.5. What are the neuroanatomical bases of statistical learning?

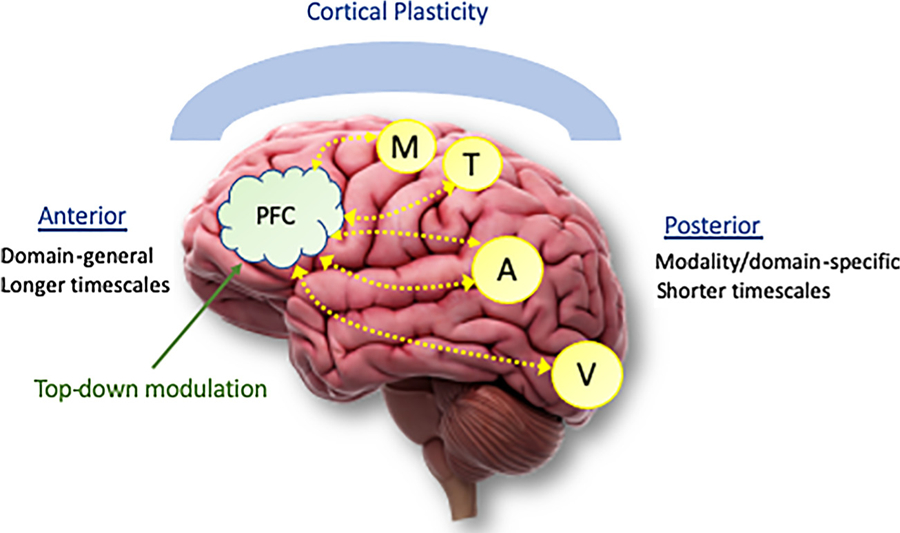

Brain areas that have shown significant activation during different types of statistical learning and implicit learning tasks include practically the entire brain, including: perceptual regions (e.g., Turk-Browne et al., 2010), parietal cortex (e.g., Forkstam et al., 2006), prefrontal cortex and Broca’s area specifically (e.g., Abla and Okanoya, 2008), as well as subcortical regions such as the hippocampus (and medial temporal lobe, MTL) (e.g., Schapiro et al., 2014), and basal ganglia (e.g., Karuza et al., 2013). Rather than reviewing all of the available evidence in detail, we instead focus on theoretical perspectives that can help explain why certain brain regions may or may not be active depending on the task or situation. For added focus, we mainly discuss the neocortical bases of statistical learning, while still acknowledging the important role played by subcortical structures such as the hippocampus, cerebellum and basal ganglia (see Batterink et al., 2019). At the end of this section we then discuss how the neocortical systems proposed here interact with “classic” learning and memory systems (i.e., declarative and procedural memory), thought to be mediated largely by subcortical structures.

One perspective that is consistent with the neural findings showing multiple brain regions involved in statistical learning is P.J. Reber’s (2013) proposal that implicit learning reflects a general principle of plasticity of neural networks that results in improved processing. That is, learning is an emergent property of neural plasticity that is pervasive and universal, not localized to a particular brain region nor confined to any specific task, and it contributes to cognition and behavior very broadly. Under this view, implicit learning cannot be defined exclusively by whether it involves, for instance, the MTL or conscious awareness; instead, it reflects the gradual tuning of neural networks and synapses to the statistical structure encountered in the environment. Such neural plasticity and tuning are generally associated with a reduction in neural activity that reflects increased processing efficiency (P.J. Reber, 2013).
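As a loose illustration of “learning as improved processing”, the Python sketch below trains a toy associative learner (a delta rule over next-item predictions) on a repeating sequence and records its mean prediction error on each pass. Treating prediction error as a stand-in for processing demand is an assumption of this sketch, as are the sequence and learning rate; it is not a model proposed by P.J. Reber (2013).

```python
def delta_rule_errors(sequence, n_passes, learning_rate=0.2):
    """Train next-item predictions with a delta rule; return mean absolute error per pass."""
    items = sorted(set(sequence))
    # Start with uniform expectations about which item follows each item.
    weights = {a: {b: 1.0 / len(items) for b in items} for a in items}
    errors = []
    for _ in range(n_passes):
        total = 0.0
        for current, nxt in zip(sequence, sequence[1:]):
            for candidate in items:
                target = 1.0 if candidate == nxt else 0.0
                error = target - weights[current][candidate]  # error before updating
                weights[current][candidate] += learning_rate * error
                total += abs(error)
        errors.append(total / (len(sequence) - 1))
    return errors


errors = delta_rule_errors(list("ABCD" * 3), n_passes=5)
print([round(e, 3) for e in errors])  # error shrinks on every pass
```

Because every item in this sequence is always followed by the same successor, each weight moves a fixed fraction closer to its target on every encounter, so the per-pass error declines steadily with exposure.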

Under this “plasticity of processing” perspective of statistical learning, the areas of the brain that reflect learning are the same areas involved in processing the input in question. Thus, it is perhaps not surprising that perceptual regions of the brain are implicated in statistical learning (Turk-Browne et al., 2010), as perceptual processing is necessary in order to encode the stimuli in the first place. Note that perceptual areas have shown activity reflecting not just perception of the individual stimuli but learning of the patterns themselves. But what about studies showing activity in frontal, parietal, subcortical, and other areas? It is likely that general constraints on processing in different neural networks determine which brain areas will reflect learning. There appear to be two primary sets of cortical regions involved in implicit learning of sequential structure (Conway and Pisoni, 2008): sensory/perceptual regions, as already discussed, and frontal regions such as the prefrontal cortex (PFC), which have connective loops with subcortical networks including the basal ganglia and cerebellum. For tasks involving the processing of sequential (i.e., temporal) structure in particular, working memory and selective attention are likely necessary, and these in turn rely on the PFC and associated brain networks. Thus, these two systems – perceptual and frontal – together constitute a dynamic and adaptive cortical network that is used to perceive, encode, and adapt to most types of input patterns encountered in the world.

The distinction between frontal “executive” cortical regions and posterior “perceptual” regions is nicely summarized by Fuster and Bressler (2012), who argued that this dichotomy reflects a general characteristic of neural functioning: frontal areas are needed for action (e.g., behavior, language) and higher-level planning, whereas posterior regions support sensory, perceptual, and memory operations. Under this view, all aspects of cognition involve the operation of large-scale cortical networks rather than modular regions, and in particular the interaction of the posterior and frontal systems. Lateral PFC specifically is argued to be crucial for the temporal organization of behavior (Fuster, 2001). Furthermore, neuroimaging studies of working memory generally show lateral PFC involvement in conjunction with posterior areas that vary with the sensory modality of the input: “If the memorandum is visual, that posterior region includes inferotemporal and parastriate cortex … if it is auditory, superior temporal cortex; if it is spatial, posterior parietal cortex” (Fuster and Bressler, 2012, p. 215). Integrating Fuster and Bressler’s (2012) view with P.J. Reber’s (2013) leads to the following conclusion: items encountered in a temporal sequence necessarily recruit the PFC as well as sensory/posterior regions (the exact sensory region depending on the input modality) in order to process the sequence; if that type of sequence is encountered repeatedly and contains structural regularities, then the networks involved in processing it (i.e., PFC and perceptual regions) will show plasticity and tuning, resulting in learning of the underlying structure.

In a similar vein, Hasson et al. (2015) noted that virtually all cortical circuits can accumulate information (i.e., learn) over time, but that timescales vary hierarchically in the brain: lower sensory areas can only process information on the order of tens to hundreds of milliseconds, whereas higher-order areas can process information over much longer timescales (many seconds or minutes) (see also Farbood et al., 2015; Kiebel et al., 2008). This appears to be due to the hierarchical arrangement of neural systems. That is, lower-order sensory areas respond to relatively simple features (such as single tones or lines of particular orientations), whereas higher-order areas integrate this information to represent increasingly complex stimuli (such as speech or faces). The same general “rostro-caudal” framework appears to apply to temporal dynamics as well: timescales of representation generally increase from lower sensory areas to higher-level frontal areas (Kiebel et al., 2008).
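The consequence of differing temporal receptive windows can be illustrated with a toy leaky integrator, a deliberately simplified stand-in and not the model used by Hasson et al. (2015) or Kiebel et al. (2008). The function name `leaky_integrate` and the two time constants below are hypothetical values chosen only to contrast a short ("sensory"-like) window with a long ("higher-order"-like) one: a single stimulus is presented, and we ask how much of its trace survives one second later.

```python
import math

def leaky_integrate(inputs, tau_ms, dt_ms=10.0):
    """Toy leaky integrator: at each time step the stored trace decays by
    exp(-dt/tau) and the current input is added to it."""
    decay = math.exp(-dt_ms / tau_ms)
    x, trace = 0.0, []
    for u in inputs:
        x = decay * x + u
        trace.append(x)
    return trace

# A single stimulus at t = 0, followed by 1 s (100 steps of 10 ms) of silence.
pulse = [1.0] + [0.0] * 100

# Hypothetical time constants, chosen only to contrast short vs. long windows.
short_window = leaky_integrate(pulse, tau_ms=100.0)   # "sensory"-like unit
long_window = leaky_integrate(pulse, tau_ms=5000.0)   # "higher-order"-like unit

print(f"trace after 1 s, short window: {short_window[-1]:.5f}")
print(f"trace after 1 s, long window:  {long_window[-1]:.5f}")
```

After one second the short-window trace has fallen to about e^-10 (well under 0.0001) of its initial value, whereas the long-window trace retains about e^-0.2 (roughly 0.82). In this sketch, only the long-window unit could still link that stimulus to one arriving a second later.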

Hasson et al. (2015) furthermore argued against a distinction between memory and processing; in their view, prior information continuously shapes processing in the present moment, much like P.J. Reber’s (2013) view of implicit learning as the cortical tuning of processing networks. In addition, Hasson et al. (2015) argued for the existence of modulatory circuits: “attentional control processes supported by fronto-parietal circuits” (related to traditional working memory operations), “and binding and consolidation processes supported by [medial temporal lobe] circuits (related to episodic memory)”. Thus, the general principle of cortical plasticity is constrained by differences in the processing characteristics of different brain areas (e.g., short vs. longer timescales) but is also modulated by attentional control, working memory, and consolidation processes. We therefore suggest that there are at least two primary neurocognitive (primarily cortical) mechanisms that embody statistical learning: 1) gradual tuning of cortical networks based on experience (i.e., cortical plasticity); and 2) top-down modulatory control mechanisms that guide selective attention and working memory, which are especially needed for learning patterns that require integrating information across time (i.e., statistical patterns in temporal sequences).

As reviewed in section 3.1, statistical learning appears to be partly, and perhaps largely, based on perceptual processing mechanisms (Conway and Christiansen, 2005; Frost et al., 2015). However, the review in this section points to at least two reasons why sensory/perceptual regions cannot mediate all aspects of statistical learning. First, because of the size of their temporal receptive windows, sensory areas cannot process information spanning more than tens to hundreds of milliseconds. Thus, learning patterns over a temporal sequence, especially long-distance or nonadjacent dependencies, cannot occur in these perceptual processing regions but must rely on downstream networks, including frontoparietal networks and perhaps the PFC specifically. Second, the PFC and related frontoparietal networks appear to modulate learning in any given situation, even if these frontal regions do not themselves show cortical tuning and plasticity. For example, frontoparietal networks may direct attention to particular stimuli, leading perceptual processing regions to engage with those inputs; over the course of repeated experience, those perceptual regions then exhibit neural plasticity and learning. It is also likely that the frontoparietal networks themselves show neural tuning and plasticity with exposure, allowing learning itself to modulate attention, as reviewed in section 3.3.

This framework, in which learning (perhaps of sequential input specifically) involves a combination of higher-level frontal areas and lower-level perceptual regions, is consistent with other relevant theoretical perspectives. For instance, Uhrig et al. (2014) provided evidence consistent with the idea that sequence learning occurs at hierarchical levels in the brain: lower, modality-specific areas are independent of attention and can mediate “local” processing operations, whereas higher levels are attention-dependent and are needed for more “global” processing. Thothathiri and Rattinger (2015) further argued that frontal areas and controlled processing are necessary for sequence processing specifically. Their argument, based on a review of both neuroimaging and neuropsychological studies and focusing on sequence production, is that sequencing involves cognitive control (the ability to order items, reject incorrect items, resolve interference, and choose the correct item to produce). Therefore, the frontal lobe (specifically, left ventrolateral PFC) is needed for sequencing because cognitive control is necessary for selecting the correct item among various alternatives in a sequence.

Cognitive control and selective attention are likely important not just for sequence production but also for sequence learning, especially of long-distance or nonadjacent dependencies. As mentioned earlier, de Diego-Balaguer et al. (2016d) proposed that learning a sequential nonadjacent dependency requires cognitive control to inhibit processing of the intervening items that occur between the dependent elements and to focus on the long-distance dependency itself. Indeed, areas of the PFC such as the left inferior frontal gyrus (LIFG, or Broca’s region) have often been implicated in sequence learning involving long-distance regularities (e.g., Bahlmann et al., 2008; Friederici et al., 2006). In fact, LIFG has been proposed to be a “supramodal hierarchical processor” (Tettamanti and Weniger, 2006). One possible reason LIFG appears necessary for hierarchical operations is that hierarchical dependencies necessarily require the processing (and learning) of long-distance dependencies, which relies on PFC involvement.
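Why intervening items must be "skipped" can be made concrete with transitional probabilities computed at different lags. The sketch below is an illustration of the computational point only, not the procedure of de Diego-Balaguer et al. or any cited study; the function `transitional_probs`, the frame tokens, and the filler set are all hypothetical. It builds a toy stream of a-X-b strings in which the first and last elements form a fixed nonadjacent frame while X varies freely.

```python
import random
from collections import Counter

def transitional_probs(seq, lag=1):
    """Estimate P(item at t+lag = b | item at t = a) from a token stream."""
    pair_counts = Counter(zip(seq, seq[lag:]))
    first_counts = Counter(seq[:-lag])  # items that have a successor at this lag
    return {(a, b): n / first_counts[a] for (a, b), n in pair_counts.items()}

# Hypothetical toy language: each string is a-X-b, where (a, b) is a fixed
# nonadjacent frame and X is drawn freely from a set of fillers.
FRAMES = [("pel", "rud"), ("vot", "jic"), ("dak", "tood")]
FILLERS = [f"x{i}" for i in range(12)]

rng = random.Random(0)  # fixed seed so the toy stream is reproducible
stream = []
for _ in range(300):
    a, b = rng.choice(FRAMES)
    stream += [a, rng.choice(FILLERS), b]

adjacent = transitional_probs(stream, lag=1)
nonadjacent = transitional_probs(stream, lag=2)

# Adjacent statistics: "pel" is followed by one of 12 fillers, so no single
# transition from "pel" is strongly predictive.
print(max(p for (a, _), p in adjacent.items() if a == "pel"))
# Nonadjacent statistics: skipping the filler, "pel" always predicts "rud".
print(nonadjacent[("pel", "rud")])
```

A learner that tracks only adjacent transitions sees weak, diffuse statistics here, while the perfectly reliable dependency appears only at lag 2. In the terms of this section, the sketch shows why suppressing the intervening item, a function attributed to cognitive control, would be needed to pick up the frame.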

Finally, a crucial aspect of statistical learning appears to be prediction and expectation (Dale et al., 2012), which is mediated by both sensory areas and downstream areas such as the PFC (though it is possible that the PFC generates the predictions and subsequently modulates sensory areas; Bubic, 2010). Temporal sequencing in particular appears to be a domain where prediction is most important, given the prominent role of time and uncertainty (Bubic, 2010). An important part of predicting upcoming events is inhibiting the representation of events or stimuli that are not predicted, which likely involves the PFC (Bar, 2009). However, predictive processing appears to be inherent to all levels of the hierarchically organized nervous system (Friston, 2005) and thus goes hand in hand with a “plasticity of processing” approach. Furthermore, Huettig (2015) suggested a dual-system account of predictive processing, similar to our proposal that statistical learning consists of an implicit, automatic processing system and an explicit, attention-dependent one.

To summarize, there are a number of considerations that can illuminate why certain brain regions show consistent activation in studies of statistical learning. Under a “plasticity of processing” approach (P.J. Reber, 2013), whatever neural substrate is involved in processing the input in question, through repeated exposure and experience, becomes tuned through general principles of neural plasticity to become more efficient at processing that type of stimulus, resulting in lower levels of neural activation. This explains why it is common to observe a variety of distributed neural regions active for different kinds of tasks and input types, such as auditory and visual processing regions during auditory and visual statistical learning tasks, respectively. In addition, the brain is organized hierarchically, with upstream brain regions showing relatively short temporal receptive windows and downstream areas (such as PFC) showing the largest temporal receptive windows. This acts as a further constraint on processing: for sequences and especially long-distance or global dependencies, only brain regions with temporal receptive windows that are large enough to process the stimuli across longer periods of time will reflect learning of such dependencies. Finally, in addition to the general mechanism of cortical plasticity, the involvement of PFC and frontoparietal networks can act as a modulatory mechanism on learning, providing top-down attentional and cognitive control, which can affect and direct learning, especially for more complex patterns such as hierarchical or long-distance dependencies. This distributed versus specialized dichotomy appears to map loosely onto the “implicit / automatic” and “explicit / attention-dependent” distinction outlined in section 3.4 and Fig. 3, with cortical plasticity instantiated in lower perceptual regions reflecting attention-independent, automatic implicit learning mechanisms, and downstream brain regions reflecting attention-dependent specialized functions needed for processing and learning certain aspects of structural regularities, especially those that require integration over longer periods of time.

However, as reviewed earlier in section 3.4, some research suggests that attention-independent and attention-dependent systems might operate antagonistically rather than synergistically. That is, frontally based executive and cognitive control functions might compete with more implicit forms of learning (e.g., Nemeth et al., 2013). For instance, Tóth et al. (2017) used EEG to measure functional connectivity during implicit sequence learning in the SRT task. They found that learning performance was negatively correlated with functional connectivity at anterior sites, which they interpreted as evidence that top-down attentional control interferes with automatic, implicit learning of visual-motor sequences. More recently, Ambrus et al. (2019) investigated the relationship between these two systems by applying inhibitory transcranial magnetic stimulation (TMS) to the dorsolateral prefrontal cortex (DLPFC) while participants performed the SRT task. Disrupting this frontal area resulted in better learning of nonadjacent dependencies. These findings thus suggest that, at least for the SRT task, frontally mediated executive and attentional functions act antagonistically to implicit learning, with the latter improving when the former are weak or disrupted.