Significance

Tree-like structures are abundant in the empirical sciences as they can summarize high-dimensional data and show latent structure among many samples in a single framework. Prominent examples include phylogenetic trees or hierarchical clustering derived from genetic data. Currently, users employ ad hoc methods to test for association between a given tree and a response variable, which reduces reproducibility and robustness. In this paper, we introduce treeSeg, a simple-to-use and widely applicable methodology with high power for testing for association between the response and all levels of hierarchy of a given tree while accounting for the overall false positive rate. Our method allows for precise uncertainty quantification and therefore increases the interpretability and reproducibility of such studies across many fields of science.

Keywords: subgroup detection, hypothesis testing, tree structures, change-point detection

Abstract

Tree structures, showing hierarchical relationships and the latent structures between samples, are ubiquitous in genomic and biomedical sciences. A common question in many studies is whether there is an association between a response variable measured on each sample and the latent group structure represented by some given tree. Currently, this is addressed on an ad hoc basis, usually requiring the user to decide on an appropriate number of clusters to prune out of the tree to be tested against the response variable. Here, we present a statistical method with statistical guarantees that tests for association between the response variable and a fixed tree structure across all levels of the tree hierarchy with high power while accounting for the overall false positive error rate. This enhances the robustness and reproducibility of such findings.

In the era of big data where quantifying the relationship between samples is difficult, tree structures are commonly used to summarize and visualize the relationship between samples and to capture latent structure. The hierarchical nature of trees allows the relationships between all samples to be viewed in a single framework, and this has led to their widespread usage in genomics and biomedical science. Examples are phylogenetic trees built from genetic data, hierarchical clustering based on distance measures of features of interest (for example, gene expression data with thousands of markers measured in each sample), evolution of human languages, and more broadly, in machine learning where clustering and unsupervised learning are fundamental tasks (1–7).

Often, samples have additional response measurements (e.g., phenotypes), and a common question is whether there is a relation between the samples' latent group structure captured by the tree and the outcome of interest (i.e., whether the distribution of the response $Y_i$ of sample $i$ depends on its relative location among the leaves of the tree $T$). Testing all possible combinations of groupings on the tree is practically impossible, as their number grows exponentially with sample size. Currently, users typically decide on the number of clusters on an ad hoc basis (e.g., after plotting the response measurement on the leaves of the tree and deciding visually which clusters to choose), and these clusters are then tested for association with the outcome of interest. This lack of rigorous statistical methodology has limited the translational application and reproducibility of these methods.

Here, we present a statistical method and accompanying R package, treeSeg, that, given a significance level $\alpha$, tests for dependence of the response measurement distribution on all levels of hierarchy in a given tree while accounting for multiple testing. It returns the most likely segmentation of the tree such that each segment has a distinct response distribution while controlling the overall false positive error rate. This is achieved by embedding the tree segmentation problem into a change-point detection setting (8–13).

treeSeg does not require any assumptions on the generation process of the tree $T$. It treats $T$ as given and fixed, testing the response of interest against the given tree structure. Every tree $T$, independent of how it was generated, induces some latent ordering of the samples. treeSeg tests whether, for this particular ordering, the distribution of the independent observations $Y_i$ depends on their locations on the tree.

treeSeg is applicable to a wide range of problems across many scientific disciplines, such as phylogenetic studies, molecular epidemiology, metagenomics, gene expression studies, etc. (3, 5, 14–16), where the association between a tree structure and a response variable is under investigation. The only inputs needed are the tree structure and the outcome of interest for the leaves of the tree. We demonstrate the sensitivity and specificity of treeSeg using simulated data and its application to a cancer gene expression study (1).

Results

For ease of presentation, we restrict to discrete binary response measurements $Y_i \in \{0, 1\}$. However, the procedure is equally applicable to continuous and other observation types (Methods). If there is no association between the tree $T$ and the response measurements, then the observed responses would be randomly distributed on the leaves of the tree, independent of the tree structure. However, if the distribution of responses is associated with the tree structure, we may observe clades in the tree with distinct response distributions. The power to detect segments with distinct distributions depends on the size of the clade and the size of the change in response probability, which means that one can only make statistical statements on the minimum number of clades with distinct distributions on the tree and not the maximum.

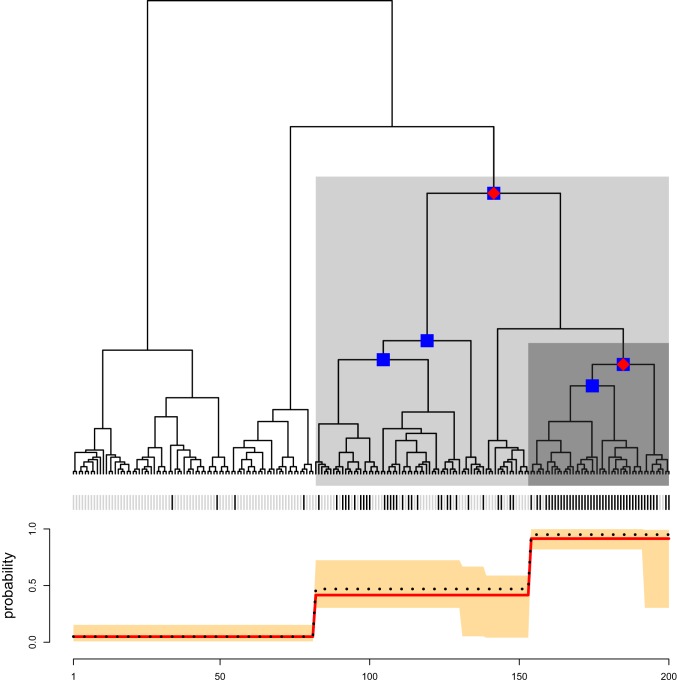

Fig. 1 illustrates our method and its output for a simulated dataset. The responses are displayed on the leaves of the tree as black and gray lines in Fig. 1. The tree is made of three segments with distinct distributions over the responses indicated by dark gray, light gray, and white backgrounds in Fig. 1. Given a confidence level $1 - \alpha$ (e.g., $\alpha = 0.1$), the treeSeg procedure estimates the most likely segmentation of the tree into regions of common response distributions such that the true number of segments is at least as high as the estimated number of segments with probability at least $1 - \alpha$.

Fig. 1.

Illustration of the treeSeg method. Binary tree with 200 leaves and three segments with distinct response distributions indicated by dark gray, light gray, and white backgrounds. Outcomes for each sample are shown on the leaves of the tree as gray or black vertical lines. Leaf responses were simulated such that the probability of a black line differs among the dark gray, light gray, and white background sections, with probability 0.05 in the white section. Using $\alpha = 0.1$, treeSeg has estimated three segments on the tree with distinct response distributions indicated by the red diamonds on the nodes of the tree. Blue squares constitute a 90% confidence set for the nodes of the tree associated with the change in response distribution. The lower panel shows the simulation response probabilities (black dotted line), the treeSeg estimate (red line), and its 90% confidence bands (orange).

Our method employs many likelihood ratio (LR) statistics simultaneously to test for changes in the response distribution on all levels of tree hierarchy and estimates at what level, if any, there is a change. The multiple testing procedure of treeSeg is based on a multiscale change-point methodology (11) tailored to the tree structure. The significance levels of the individual tests are chosen in such a way that the overall significance level is the prespecified $\alpha$. In addition to the maximum likelihood estimate, our method provides confidence sets (at the $1 - \alpha$ level) for the nodes of the tree associated with the change in response distribution and a confidence band for the response probabilities over the segments (Methods and SI Appendix have theoretical proofs). In the example of Fig. 1, using $\alpha = 0.1$, treeSeg estimates three segments in the tree, indicated by red diamonds on the nodes of the tree, recovering the true simulated changes in response distributions. In Fig. 1, blue squares on the tree indicate the 90% confidence set for the nodes on the tree associated with the change in responses. The red line in the lower panel of Fig. 1 shows the maximum likelihood estimate of the response probabilities for each segment, which accurately recovers the true simulated probabilities shown as the black dotted line. The orange band in the lower panel of Fig. 1 shows the 90% confidence band of the response probabilities.

The treeSeg method can handle missing data and make response predictions on new samples. Computationally, treeSeg scales well with sample size: for example, a test simulation for a tree with 100,000 samples (number of leaves in the tree) and no response association took around 110 min to run on a standard laptop. Details on treeSeg’s implementation are in SI Appendix.

Simulation Study.

We confirmed the statistical calibration and robustness of treeSeg using simulation studies. We found that, for reasonable minimal clade sizes and changes in response distribution, treeSeg is able to detect association between response and tree structure reliably (SI Appendix, Figs. S11–S16). More importantly, treeSeg almost never detects segments that are not present (it can be mathematically proven that the inclusion of a false positive segment only happens with probability at most $\alpha$) (Methods), and the nominal guarantee of $1 - \alpha$ is exceeded in most cases. For instance, in a simulation study of 1,000 randomly generated trees (200 leaves) with no changes in response distribution, treeSeg (using ) correctly detected no association in of the runs.

The treeSeg algorithm uses a fixed ordering of the leaf nodes according to the tree structure $T$. In principle, any such ordering is equally valid as long as it is chosen independently of the response variables. In our implementation in SI Appendix, we apply a standardized ordering of the nodes so that treeSeg’s output is independent of any user-specified ordering. Furthermore, we provide simulation results showing that treeSeg is robust to random changes in node ordering and consistently infers the correct number of segments on the tree (SI Appendix, section 2.B and Figs. S17–S25). In SI Appendix, section 2.B.1, we provide some discussion on signal-dependent branch orderings that may yield a higher detection power compared with others. We also present a procedure for aggregating results across random orderings to ensure that the output is independent of any specific tip ordering (SI Appendix, section 1.E). SI Appendix has full details on simulation studies.

The treeSeg procedure is conditioned on the input tree and is thus independent of the particular way that the tree is generated. In real applications, the input tree structure is usually just a noisy version of some true neighborhood structure of interest. Therefore, whenever the noise in the input tree is reasonably small such that the input tree and the true structure essentially describe the same neighborhood structure, treeSeg’s output is robust to this noise (SI Appendix, section 1.F has further illustration).

Application to Cancer Data Example.

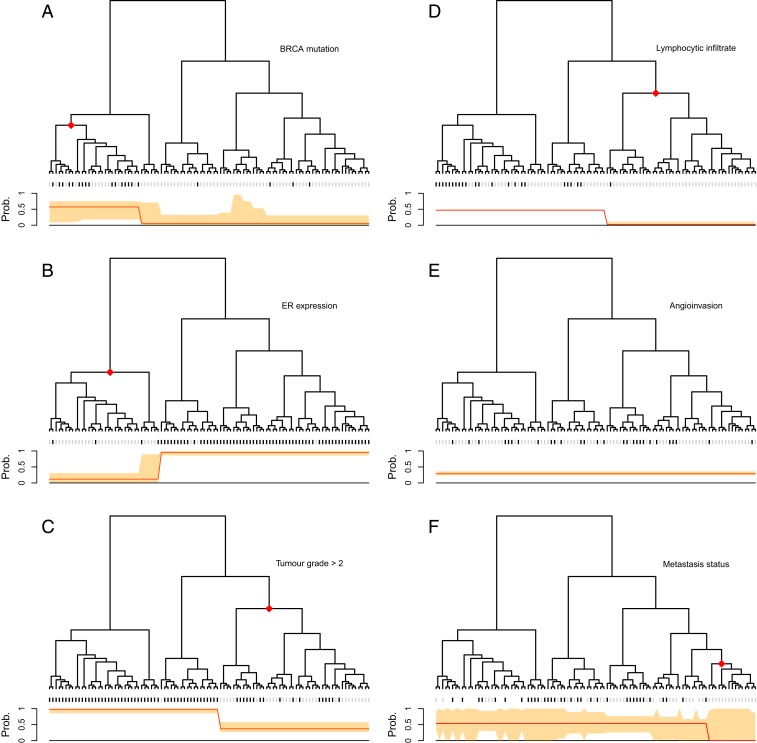

We illustrate the application of the method on a breast cancer gene expression study (1) where data are publicly available. Following the original study, we used correlation of gene expression data as a distance measure between samples to build a hierarchical clustering tree. In the original study, based on visual inspection, the authors divided the samples into two clusters, observing differences in the distributions of various clinical responses between the two clusters.

In contrast, treeSeg only requires a significance level $\alpha$ as input and searches for associations between responses and the tree on all levels of hierarchy while accounting for multiple testing. Our results are shown in Fig. 2. Using $\alpha = 0.05$, for one of the responses treeSeg delineated the tree into two clusters with distinct response distributions, as in the original study. However, treeSeg reports different patterns of association between the tree and the other five responses, including one that has no association with tree structure.

Fig. 2.

Application of treeSeg to a cancer gene expression study (1). Gene expressions for 98 breast cancer samples were clustered based on correlation between samples. Six clinical responses were collected for the samples: (A) BRCA mutation, (B) estrogen receptor (ER) expression, (C) histological grade, (D) lymphocytic infiltration, (E) angioinvasion, and (F) development of distant metastasis within 5 y. In each panel, the treeSeg estimates (at $\alpha = 0.05$) of the clades with distinct response distributions and of their response probabilities are indicated by the red diamonds on the tree and the red lines below the tree, respectively. The orange band shows the 95% confidence band for the response probabilities for the estimated segments. In E, there is no association between the tree and the response (angioinvasion), and in F, some of the samples have missing observations for the response (distant metastasis within 5 y).

The treeSeg algorithm can be applied to any tree structure and is not restricted to trees generated using hierarchical clustering. An application to a maximum likelihood phylogenetic tree generated from pathogen sequence data is in SI Appendix, section 3.

Discussion

The only tuning parameter for the treeSeg method is a significance level $\alpha$. Depending on the application, the user can decide which value of $\alpha$ is appropriate or screen through several values of $\alpha$. A small $\alpha$ gives a higher confidence that all detected associations are, indeed, present in the data (with probability of at least $1 - \alpha$). A larger $\alpha$ allows us to detect more clusters but increases the risk of including false positive clusters.

The confidence statements for detected clades and response probabilities that accompany treeSeg’s segmentation account for multiple testing at the level of $\alpha$. This allows for precise uncertainty quantification when detecting associations between tree structure and the responses. We highlighted treeSeg’s potential with an example from a gene expression study but note its broad applicability in various settings and its potential to be used across many fields of science. Our method treeSeg is implemented as an R package available on GitHub (https://github.com/merlebehr/treeSeg) and accompanied by a detailed Jupyter notebook with reproductions of all figures in this text.

Methods

Model Assumptions.

For illustration purposes, we focus on binary traits $Y_i \in \{0, 1\}$. SI Appendix, section 1.B shows how treeSeg generalizes to arbitrary continuous and discrete data. We assume a fixed given rooted tree $T$ with $n$ leaves that captures some neighborhood structure of interest. For the $n$ samples (the leaves of the tree), independent binary traits $Y_i$, $i = 1, \ldots, n$, with success probabilities $p_i$, are observed: that is,

$$Y_i \sim \mathrm{B}(p_i) \tag{1}$$

independently for $i = 1, \ldots, n$, where $\mathrm{B}(p)$ denotes a Bernoulli distribution with success probability $p$. The aim is to estimate the underlying success probabilities $p_1, \ldots, p_n$ from the observations $Y_1, \ldots, Y_n$. Without any additional structural information on the success probabilities, we cannot do better than estimating each $p_i$ by the single observation $Y_i$. However, taking into account the tree structure, we can assume that the success probabilities are associated with the tree such that samples on the same clade of the tree may have the same success probabilities. Our methodology is based on a testing problem, where the null model assumes that all samples have the same success probability and the alternative model assumes that some of the clades on the tree have different success probabilities. In the following, we denote an internal node (which demarcates a clade on the tree) with a distinct success probability as an active node.
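As a small illustration of the Bernoulli model above, the following Python sketch draws independent binary responses for leaves listed in tree order, where consecutive runs of leaves share one success probability. The function name, segment sizes, and probabilities are hypothetical choices for illustration and are not taken from the treeSeg package or from the simulations in this paper; treating each segment as one contiguous run of leaves is also a simplifying assumption.

```python
import random


def simulate_leaf_responses(segment_sizes, segment_probs, seed=0):
    """Draw independent Bernoulli responses for leaves listed in tree order.

    segment_sizes[k] consecutive leaves share success probability
    segment_probs[k]. Returns (probs, responses) with one entry per leaf.
    """
    rng = random.Random(seed)
    probs = []
    for size, p in zip(segment_sizes, segment_probs):
        probs.extend([p] * size)
    # Each leaf is an independent Bernoulli draw with its own probability.
    responses = [1 if rng.random() < p else 0 for p in probs]
    return probs, responses


# Hypothetical example: 200 leaves in three runs (illustrative values only).
probs, y = simulate_leaf_responses([80, 70, 50], [0.9, 0.5, 0.05], seed=1)
```

Fixing the seed makes the draw reproducible, which is convenient when comparing segmentations of the same simulated responses.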

For simplicity, we will assume in the following that the tree is binary. Extensions to arbitrary trees are straight forward. We use the following notation. For a binary, rooted tree , we assume vertices and edges . The leaves are labeled , the inner nodes are labeled , and the root is labeled . For a node , its set of offspring leaves in is denoted as . For a node , the subtree of with root is denoted as . An illustrative example for this notation is shown in SI Appendix, Fig. S28. Moreover, for an inner node with offspring leaves and for some , we denote the left -leaf neighborhood of as and analog, the right -leaf neighborhood of as .
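To make the link between clades and leaf orderings concrete, the following Python sketch computes the offspring leaves of every node of a small binary tree. It assumes a hypothetical representation in which an inner node is a pair of subtrees and a leaf is any other object; this representation and the function name are illustrative and are not the data structures used by the treeSeg package. Because leaves are numbered from left to right, the offspring leaves of each node form a contiguous range, which is what allows the tree problem to be related to a change-point problem on an ordered sequence.

```python
def offspring_leaf_ranges(tree):
    """Return one (first_leaf, last_leaf) index pair per node of the tree.

    An inner node is a 2-tuple (left, right); anything else is a leaf.
    Leaves are numbered 0, 1, 2, ... from left to right, so the offspring
    leaves of every node form a contiguous range of indices.
    """
    ranges = []
    next_leaf = 0

    def visit(node):
        nonlocal next_leaf
        if not isinstance(node, tuple):  # leaf: its range is a single index
            idx = next_leaf
            next_leaf += 1
            ranges.append((idx, idx))
            return idx, idx
        left, right = node
        first, _ = visit(left)
        _, last = visit(right)
        ranges.append((first, last))  # inner node: spans both children
        return first, last

    visit(tree)
    return ranges


# Toy tree with five leaves; the root is appended last.
toy_tree = ((("a", "b"), "c"), ("d", "e"))
ranges = offspring_leaf_ranges(toy_tree)
```

For a binary tree with five leaves, the result has nine entries, one per node, and the last entry covers all leaves.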

We consider the following statistical model.

Model 1.

For a given binary, rooted tree as above, assume that one observes for each of the leaves independent Bernoulli random variables , , where the vector of success probabilities is an element of .

For an element , we denote the set of nodes as a set of active nodes and as the number of active nodes. To ensure identifiability of active nodes, we further assume that, for each active node , , there exists at least one offspring leaf that has the same success probability as . This just excludes the trivial case where the influence of one active node (or the root) is completely masked by other active nodes. Equivalently, this means that we assume that . We provide a simple example in SI Appendix, Fig. S30.

We stress that the set is not necessarily unique (SI Appendix, Fig. S29 has an example). That is, for a given vector , there may exist two (or more) sets of active nodes and such that . To overcome this ambiguity, we will implicitly associate with each a set of active nodes of size . When a specific vector has more than one possible sets of active nodes , we assume several copies of in , one associated with each of the sets . In the following, we explore the tree structure to estimate the underlying success probabilities and hence, their segmentation into groups of leaves where observations within a group have the same success probability and observations between different groups have different success probabilities.

Multiscale Segmentation.

The procedure that we propose extends (11) from totally ordered structures to trees and is a hybrid method of estimating and testing. A fundamental observation is that one can never rule out an additional active node. This is because a node could be active but change the success probability of its offspring nodes only by an arbitrarily small amount. On the other hand, if in a subtree, successes are much more common than in the remaining tree, it is possible to significantly reject the hypothesis that all leaves in the tree have the same success probability.

For a given candidate vector of success probabilities, our procedure employs, on each subtree where the candidate is constant, an LR test for the hypothesis that the corresponding observations all have this same success probability. The levels of the individual tests are chosen in such a way that the overall level of the multiple test is $\alpha$ for a given prespecified $\alpha \in (0, 1)$. A statistical hypothesis test can always be inverted into a confidence statement and vice versa. Therefore, we can derive from the above procedure a confidence set for the vector of success probabilities. We require our final estimate to lie in this confidence set. That is, we require that, whenever the estimate has constant success probability on a subtree, the respective LR test accepts. Among all vectors that lie in this confidence set, we consider those that come from a minimum number of active nodes, and within this set, we choose the maximum likelihood solution. Thereby, our procedure provides not only an estimate but also a confidence statement for all quantities (11). More precisely, the following asymptotic confidence statements hold true.

1) With probability at least $1 - \alpha$, the true underlying signal originates from at least as many active nodes as the treeSeg estimate (Theorem 1).

2) treeSeg yields a set of nodes that contains the active nodes of the true underlying signal with probability at least $1 - \alpha$ (Corollary).

3) treeSeg yields a confidence band for the underlying signal, consisting of a lower and an upper bound, such that with probability at least $1 - \alpha$ the true success probabilities lie between these bounds simultaneously for all leaves (Theorem 3).

Moreover, the coverage probability of the confidence sets allows us to derive (up to log factors) optimal convergence rates of the treeSeg estimator as the sample size increases (11). In particular, we show the following.

4) For a fixed overestimation bound $\alpha$, the probability that treeSeg underestimates the number of active nodes vanishes exponentially as the sample size $n$ increases (Theorem 2).

5) The localization of the estimated active nodes is optimal up to a leaf node set whose order is specified in Theorem 4.

In the following, we will give details of the method and of the statements 1 to 5. The proofs of Theorems 1 to 4 and Corollary are similar to the totally ordered setting (11). Necessary modifications are outlined in SI Appendix.

For an arbitrary given test vector (which may depend on ), we define the multiscale statistic (11, 17–19)

| [2] |

where and . Here, is the local log LR test statistic (20) for the testing problem

The calibration term serves as a balancing of the different scales in a way that the maximum in Eq. 2 is equally likely attained on all scales (11, 17) and guarantees certain optimality properties of the statistic [2] (17). Assuming a minimal segment scale of the underlying success probability vector : that is,

| [3] |

it can be shown that converges in distribution to a functional of the Brownian motion (11), which is stochastically bounded by

| [4] |
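The idea of combining local LR statistics across scales can be sketched in Python for the simplest case of a single constant candidate probability on ordered leaves. The scale penalty used here, $\sqrt{2 \log(e n / m)}$ for an interval of $m$ leaves, follows the general form used in the multiscale change-point literature (11) and is an assumption; it is not a transcription of Eq. 2, and the function names are hypothetical. The sketch scans all intervals in quadratic time and is meant for illustration only.

```python
import math


def bernoulli_log_lr(successes, m, p0):
    """Log LR of the unrestricted Bernoulli MLE against H0: probability p0."""
    def term(count, prob):
        # count * log((count / m) / prob), with the convention 0 * log(0) = 0
        return 0.0 if count == 0 else count * math.log(count / (m * prob))
    return term(successes, p0) + term(m - successes, 1.0 - p0)


def multiscale_statistic(y, p0):
    """Maximum over all intervals of sqrt(2 * LR) minus a scale penalty.

    Assumes 0 < p0 < 1 and that y is a list of 0/1 responses in leaf order.
    """
    n = len(y)
    prefix = [0]
    for value in y:
        prefix.append(prefix[-1] + value)  # prefix sums give interval counts
    best = -math.inf
    for i in range(n):
        for j in range(i, n):
            m = j - i + 1
            lr = bernoulli_log_lr(prefix[j + 1] - prefix[i], m, p0)
            penalty = math.sqrt(2.0 * math.log(math.e * n / m))
            best = max(best, math.sqrt(2.0 * max(lr, 0.0)) - penalty)
    return best


# A block of successes yields a larger statistic than alternating responses.
stat_alternating = multiscale_statistic([0, 1] * 20, 0.5)
stat_block = multiscale_statistic([0] * 20 + [1] * 20, 0.5)
```

The penalty is largest for short intervals, so a few extreme observations on a small scale do not dominate the maximum.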

Thereby, the minimal scale may depend on such that as (11). As the distribution of does not depend on the true underlying signal , its quantiles can be obtained by Monte Carlo simulations and are in the following denoted as : that is,

| [5] |
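Quantiles of this kind can be approximated by simulation. The Python sketch below is a hedged illustration: it replaces the limiting Brownian motion functional by a discrete analog computed from standard normal draws, with the same assumed penalty $\sqrt{2 \log(e n / m)}$, and returns an empirical quantile. The function names, the number of repetitions, and the discretization are hypothetical choices and do not reproduce the implementation in the treeSeg package.

```python
import math
import random


def gaussian_multiscale_statistic(z):
    """Maximum over intervals of |sum| / sqrt(length) minus a scale penalty."""
    n = len(z)
    prefix = [0.0]
    for value in z:
        prefix.append(prefix[-1] + value)
    best = -math.inf
    for i in range(n):
        for j in range(i, n):
            m = j - i + 1
            local = abs(prefix[j + 1] - prefix[i]) / math.sqrt(m)
            best = max(best, local - math.sqrt(2.0 * math.log(math.e * n / m)))
    return best


def monte_carlo_quantile(n, alpha, repetitions=200, seed=0):
    """Empirical (1 - alpha) quantile of the statistic under pure noise."""
    rng = random.Random(seed)
    draws = sorted(
        gaussian_multiscale_statistic([rng.gauss(0.0, 1.0) for _ in range(n)])
        for _ in range(repetitions)
    )
    # Index of the smallest draw with at least a (1 - alpha) share at or below it.
    index = math.ceil((1.0 - alpha) * repetitions) - 1
    index = min(repetitions - 1, max(0, index))
    return draws[index]


q_strict = monte_carlo_quantile(30, 0.05, repetitions=100, seed=1)
q_loose = monte_carlo_quantile(30, 0.5, repetitions=100, seed=1)
```

Because the simulated distribution does not depend on the data, such quantiles can be computed once for a given sample size and reused.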

For a given confidence level or equivalently, a threshold value in Eq. 5, we first define an estimator for the number of active nodes in Model 1 via

| [6] |

After the number of active nodes is estimated, we estimate as the constrained maximum likelihood estimator

| [7] |

where is the log likelihood function of the binomial distribution and

| [8] |

Note that the maximum likelihood solution in Eq. 7 is not necessarily unique. On the one hand, this is due to the nonuniqueness of the active nodes. On the other hand, this might happen with positive probability by the discreteness of the Bernoulli observations . In that case, treeSeg just reports the first available solution, with all other equivalent solutions listed in the confidence set. Clearly, if we choose as in Eq. 5 for some given confidence level , the estimator asymptotically controls the probability to overestimate the number of active nodes as summarized in the following theorem.

Theorem 1.

For fixed minimal scale and significance level , let be as in Eq. 3, be as in Eq. 5, and be treeSeg’s estimated number of active nodes in Eq. 6. Then, it holds that

| [9] |

We stress that, in Theorem 1, it is possible to let go to zero as increases (11); recall the paragraph after Eq. 4. In particular, from the construction of in Eq. 6, it follows that implies , and thus, for the set in Eq. 8, one obtains that

| [10] |

By Eq. 5, it follows that the first term on the right-hand side is asymptotically lower bounded by $1 - \alpha$. Moreover, as we show in Theorem 2, the underestimation error vanishes exponentially fast as sample size increases. From this, it follows that the set in Eq. 8 constitutes an asymptotically honest confidence set (11) for the whole vector of success probabilities, from which confidence sets for the active nodes and confidence bands for the success probabilities as in statements 2 and 3 follow (Theorem 3 and Corollary).

Any bound on the underestimation error necessarily must depend on the minimal segment scale in Eq. 3 as well as a minimal pairwise difference of success probabilities in different active segments. That is, for , we assume and let

| [11] |

With this, one obtains that the underestimation probability decreases exponentially (11) in [for fixed and significance level ] as the following theorem shows.

Theorem 2.

For fixed minimal scale , minimal probability difference , and significance level , let be as in Eq. 11, be as in Eq. 5, and be treeSeg’s estimated number of active nodes in Eq. 6. Then, it holds that

| [12] |

where and are positive constants, which only depend on .

Again, it is possible to let the minimal scale and the minimal probability difference go to zero as the sample size increases (11). The proof of Theorem 2 is similar to that for totally ordered structures (11). We outline necessary modifications in SI Appendix. From Theorem 2 and [10], we directly obtain that the set in Eq. 8, indeed, constitutes an asymptotic $1 - \alpha$ confidence set for the segmentation.

Theorem 3.

For fixed minimal scale , minimal probability difference , and significance level , let be as in Eq. 11, be as in Eq. 5, and be as in Eq. 8; then,

As a corollary, we also obtain a confidence set of the active nodes.

Corollary.

For fixed minimal scale , minimal probability difference , and significance level , let be as in Eq. 11, be as in Eq. 5, and be as in Eq. 8; then,

Theorems 1 and 2 reveal treeSeg’s ability to accurately estimate the number of active nodes in Model 1. For any (arbitrarily small) , we can control the overestimation probability by (Theorem 1). Simultaneously, as the sample size increases, the underestimation error probability vanishes exponentially fast (Theorem 2). The next theorem shows that treeSeg does not just estimate the number of active nodes correctly with high probability but that it also estimates the location of those active nodes with high accuracy. To this end, note that, for any active node and any , the leaf nodes of its left -leaf neighborhood have nonconstant success probability. The same is true for the right -leaf neighborhood . Now assume that treeSeg estimates the number of active nodes correctly , which is the case with high probability by Theorems 1 and 2. Then, being nonconstant on both and for any true active nodes , implies a perfect segmentation. Thus, the following theorem shows that treeSeg, indeed, yields such a perfect segmentation up to a leaf node set of size . That is, conditioned on the correct model dimension , treeSeg’s segmentation is perfect up to at most an order of misclassified leaf nodes.

Theorem 4.

For fixed minimal scale , minimal probability difference , and significance level , for any it holds true that

are not constant, for all , with probability at least , where are positive constants that only depend on .

Theorem 4 follows directly by translating change-point location estimation accuracy results for the totally ordered case (11) to the tree setting.

A natural question is whether the localization rate in Theorem 4 is optimal. In particular, one can compare this result with the totally ordered setting, where the minimax optimal change-point estimation rate is known to be of the same order [possibly up to factors]. One would expect that the additional tree structure leads to a strictly better segmentation rate. It turns out, however, that without making further assumptions on the tree the rate in Theorem 4 cannot be improved in general (SI Appendix, Theorem 6). More precisely, for an arbitrary number of observations , there always exist trees that do not contain any additional information other than the ordering of the tips. In that case, the tree structured setting is essentially equivalent to a regular change-point setting, and thus, treeSeg cannot yield any better performance.

On the other hand, when one imposes additional structural assumptions on the tree, it can be shown that treeSeg yields a perfect segmentation with high probability (SI Appendix, Theorem 7). Essentially, when a tree structure is such that the segmentations from different sets of active nodes are either the same or differ by some nonvanishing fraction (more precisely, this is captured by the spreading property defined in SI Appendix, Definition 1), then treeSeg will recover those active nodes exactly with high probability. Simple examples of trees that provide such additional structure are perfect trees, where all tip nodes have the same depth. In summary, treeSeg efficiently leverages the tree structure to overcome the minimax lower bound from a simple change-point estimation problem whenever the tree allows this. We provide more details in SI Appendix, section 1.D.


Acknowledgments

M.B. was supported by Deutsche Forschungsgemeinschaft (DFG; German Research Foundation) Postdoctoral Fellowship BE 6805/1-1. Moreover, M.B. acknowledges funding of DFG Grant GRK 2088. M.B. and A.M. acknowledge support from DFG Grant SFB 803 Z02. A.M. was funded by the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation) under Germany’s Excellence Strategy EXC 2067/1-390729940. C.H. was supported by The Alan Turing Institute, Health Data Research UK, the Medical Research Council UK, the Engineering and Physical Sciences Research Council (EPSRC) through the Bayes4Health programme Grant EP/R018561/1, and AI for Science and Government UK Research and Innovation (UKRI). We thank Laura Jula Vanegas for help with parts of the implementation. Helpful comments of Bin Yu and Susan Holmes are gratefully acknowledged. We are also grateful to two referees and one Editor for their constructive comments that led to an improved version of this paper.

Footnotes

The authors declare no competing interest.

This article is a PNAS Direct Submission. S.C.K. is a guest editor invited by the Editorial Board.

This article contains supporting information online at https://www.pnas.org/lookup/suppl/doi:10.1073/pnas.1912957117/-/DCSupplemental.

References

1. van ’t Veer L. J., et al., Gene expression profiling predicts clinical outcome of breast cancer. Nature 415, 530–536 (2002).

2. Gray R. D., Atkinson Q. D., Language-tree divergence times support the Anatolian theory of Indo-European origin. Nature 426, 435–439 (2003).

3. Eckburg P. B., Diversity of the human intestinal microbial flora. Science 308, 1635–1638 (2005).

4. Hastie T., Tibshirani R., Friedman J. H., The Elements of Statistical Learning (Springer Series in Statistics, Springer, New York, NY, 2009).

5. Faria N. R., et al., The early spread and epidemic ignition of HIV-1 in human populations. Science 346, 56–61 (2014).

6. Ansari M. A., Didelot X., Bayesian inference of the evolution of a phenotype distribution on a phylogenetic tree. Genetics 204, 89–98 (2016).

7. Fukuyama J., et al., Multidomain analyses of a longitudinal human microbiome intestinal cleanout perturbation experiment. PLoS Comput. Biol. 13, e1005706 (2017).

8. Zhang N. R., Siegmund D. O., A modified Bayes information criterion with applications to the analysis of comparative genomic hybridization data. Biometrics 63, 22–32 (2007).

9. Killick R., Fearnhead P., Eckley I. A., Optimal detection of changepoints with a linear computational cost. J. Am. Stat. Assoc. 107, 1590–1598 (2012).

10. Sharpnack J., Singh A., Rinaldo A., “Changepoint detection over graphs with the spectral scan statistic” in Artificial Intelligence and Statistics, Carvalho C. M., Ravikumar P., Eds. (Proceedings of Machine Learning Research, Scottsdale, AZ, 2013), pp. 545–553.

11. Frick K., Munk A., Sieling H., Multiscale change point inference. J. Roy. Stat. Soc. B 76, 495–580 (2014).

12. Chen H., Zhang N., Graph-based change-point detection. Ann. Stat. 43, 139–176 (2015).

13. Du C., Kao C. L. M., Kou S. C., Stepwise signal extraction via marginal likelihood. J. Am. Stat. Assoc. 111, 314–330 (2015).

14. Ansari M. A., et al., Genome-to-genome analysis highlights the effect of the human innate and adaptive immune systems on the hepatitis C virus. Nat. Genet. 49, 666–673 (2017).

15. Lu J., et al., MicroRNA expression profiles classify human cancers. Nature 435, 834–838 (2005).

16. Earle S. G., et al., Identifying lineage effects when controlling for population structure improves power in bacterial association studies. Nature Microbiology 1, 16041 (2016).

17. Dümbgen L., Spokoiny V., Multiscale testing of qualitative hypotheses. Ann. Stat. 29, 124–152 (2001).

18. Dümbgen L., Walther G., Multiscale inference about a density. Ann. Stat. 36, 1758–1785 (2008).

19. Behr M., Holmes C., Munk A., Multiscale blind source separation. Ann. Stat. 46, 711–744 (2018).

20. Siegmund D., Yakir B., Tail probabilities for the null distribution of scanning statistics. Bernoulli 6, 191–213 (2000).
