Abstract

Wide adoption of electronic health records (EHRs) has raised the expectation that data obtained during routine clinical care, termed “real-world” data, will be accumulated across health care systems and analyzed on a large scale to produce improvements in patient outcomes and the use of health care resources. To facilitate a learning health system, EHRs must contain clinically meaningful structured data elements that can be readily exchanged, and the data must be of adequate quality to draw valid inferences. At the present time, the majority of EHR content is unstructured and locked into proprietary systems that pose significant challenges to conducting accurate analyses of many clinical outcomes. This article details the current state of data obtained at the point of care and describes the changes necessary to use the EHR to build a learning health system.

CURRENT STATE OF DATA SUPPORTING CLINICAL CARE

For the past 50 years, evidence-based medicine has been promoted as a way to improve health care by informing clinical decision making and facilitating provider education. This focus can be traced to Clinical Judgment, a 1967 book in which the epidemiologist Alvan Feinstein1 examined the nature of clinical reasoning and described many important biases that can influence decision making. What followed was a sustained multidisciplinary effort by statisticians, epidemiologists, and health policy experts to educate clinicians about the reliability of the data used to generate clinical recommendations and to call for more research that rigorously assesses treatment outcomes. The term “evidence-based medicine” was first used in a 1990 article in the Journal of the American Medical Association by David M. Eddy, MD,2 a physician, mathematician, and health policy analyst, who outlined strategies for using clinical practice guidelines to improve decision making. These strategies included requiring guidelines to describe clearly the nature and extent of the evidence underlying treatment recommendations, estimating the expected outcomes, and explicitly addressing patient preferences.

Over the past three decades, clinical care has become dramatically more complex. In response, clinical guidelines, clinical pathways, and other decision support tools have become increasingly important components of medical practice. Beyond informing decisions for individual patients, these tools can improve care at the health system and population levels by providing a benchmark against which adherence to clinical pathways can be measured. Evidence-based clinical guidelines and pathways are therefore an essential component of a learning health system, which Friedman et al3 defined in a 2010 commentary as “an integrated health system which harnesses the power of data and analytics to learn from every patient and feed the knowledge of what works best back to clinicians, health professionals, patients and other stakeholders to create cycles of continuous improvement.”
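As a rough illustration of how a health system might measure adherence to a clinical pathway, the Python sketch below computes the share of encounters whose treatment falls within a pathway's accepted options. The pathway table, diagnosis labels, and regimen names are hypothetical placeholders, not recommendations from any guideline.

```python
# Hypothetical pathway table: for each (diagnosis, stage) pair, the set of
# treatments that count as "on pathway". Labels are placeholders only.
PATHWAY = {
    ("cancer_X", "IV"): {"regimen_A", "regimen_B"},
    ("cancer_X", "I"): {"surgery"},
}


def adherence_rate(encounters):
    """Return the fraction of assessable encounters whose treatment is on pathway.

    Each encounter is a (diagnosis, stage, treatment) tuple. Encounters with no
    pathway defined for their diagnosis and stage are left out of the
    denominator, because adherence cannot be judged for them. Returns None if
    no encounter is assessable.
    """
    assessable = 0
    on_pathway = 0
    for diagnosis, stage, treatment in encounters:
        allowed = PATHWAY.get((diagnosis, stage))
        if allowed is None:
            continue
        assessable += 1
        if treatment in allowed:
            on_pathway += 1
    return on_pathway / assessable if assessable else None


encounters = [
    ("cancer_X", "IV", "regimen_A"),
    ("cancer_X", "IV", "regimen_C"),  # off pathway
    ("cancer_X", "I", "surgery"),
    ("cancer_Y", "II", "regimen_A"),  # no pathway defined: not assessable
]
print(adherence_rate(encounters))  # 2 of 3 assessable encounters
```

Excluding encounters without a defined pathway keeps the rate from being diluted by care the pathway was never meant to cover; a real program would also need to handle documented reasons for deviation.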

At present, one of the major impediments to improving patient outcomes is a lack of high-quality evidence to inform clinical decisions. The data underlying the National Comprehensive Cancer Network (NCCN) guidelines provide an informative example from oncology. NCCN guidelines are developed by multidisciplinary expert panels on the basis of a review of the best available evidence, and they are one of five compendia used to justify coverage for cancer care by private insurers and by the Centers for Medicare & Medicaid Services.4 The extent to which NCCN guidelines rest on level I evidence from prospective randomized controlled trials (RCTs) was detailed in a 2011 publication by Poonacha and Go.5 They examined the guidelines for the 10 most common cancers, covering staging, initial and salvage therapy, and surveillance. Of 1,023 recommendations, only 62 (6%) were based on level I evidence. A more recent analysis by Wagner et al6 examined NCCN guidelines for 47 new oncology drugs approved by the US Food and Drug Administration (FDA) between 2011 and 2015, focusing on recommendations for use beyond that defined by the FDA label, such as expanded inclusion criteria, use of the agent in a different malignancy, or removal of a previous treatment from the recommendations because a new drug had become available. The NCCN recommended these 47 drugs for 113 indications, and for 26 drugs (55%), the recommendations extended beyond the FDA-approved use. Of the 44 such recommendations, 10 (23%) were based on evidence from RCTs, and only seven (16%) were based on evidence from phase III RCTs.6
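The percentages quoted above follow directly from the reported counts. This short Python check uses only the numerators and denominators stated in the text and rounds to whole percentages, as the text does.

```python
def pct(numerator: int, denominator: int) -> int:
    """Percentage rounded to the nearest whole number."""
    return round(100 * numerator / denominator)


# (numerator, denominator) pairs exactly as reported in the text (refs 5 and 6).
reported = {
    "NCCN recommendations based on level I evidence": (62, 1023),
    "New drugs with NCCN recommendations beyond the FDA label": (26, 47),
    "Beyond-label recommendations based on RCT evidence": (10, 44),
    "Beyond-label recommendations based on phase III RCT evidence": (7, 44),
}
for label, (n, d) in reported.items():
    print(f"{label}: {n}/{d} = {pct(n, d)}%")
```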

Even when evidence from an RCT exists, the results may not generalize to the heterogeneous patient populations that actually receive the treatment during routine care. Compared with these populations, clinical trial cohorts tend to be younger and healthier, with less comorbid disease, higher socioeconomic status, and better performance status. For example, a recent study of treatment for metastatic renal cell carcinoma compared patients participating in RCTs with patients treated with the same agents by providers in a large health system.7 The authors found that 39% of patients receiving the recommended drugs would not have met the eligibility criteria of the trials that supported these recommendations, largely because they were older and had comorbid conditions. Particularly for treatments with significant toxicity risk, the lack of data for balancing treatment risk against potential benefit means that clinical decisions are less likely to deliver the outcome expected on the basis of RCT data for an individual patient.8 In addition, there is a system-wide bias in the types of treatments supported by RCT data. This bias exists because RCTs are very expensive to conduct, and public funding for clinical trials is modest and insufficient to meet the demands of the clinical research community. As a result, RCT data tend to come from industry-sponsored research and to support only those treatments with the potential for commercial success. For example, RCTs rarely compare different treatment modalities, such as surgery and radiation therapy, in the same patient population, and they rarely include an observation-only arm. Other essential questions that are often not rigorously addressed include the optimal dosing regimens for patients with comorbidities and the best treatments for patients with rare diseases.
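To make the eligibility gap concrete, the sketch below applies trial-style exclusion criteria to a small real-world cohort and reports the fraction of patients who would have been ineligible. The thresholds, patient fields, and example patients are hypothetical illustrations; they are not the criteria of the trials examined by Mitchell et al.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Patient:
    age: int
    ecog: int                    # ECOG performance status, 0 (fully active) to 5 (dead)
    creatinine_clearance: float  # mL/min
    prior_malignancy: bool


def is_trial_eligible(p: Patient) -> bool:
    """Hypothetical trial-style criteria, not taken from any specific RCT."""
    return (
        18 <= p.age <= 75
        and p.ecog <= 1
        and p.creatinine_clearance >= 40.0
        and not p.prior_malignancy
    )


def fraction_ineligible(cohort):
    """Share of a real-world cohort that the criteria above would exclude."""
    if not cohort:
        raise ValueError("cohort is empty")
    return sum(not is_trial_eligible(p) for p in cohort) / len(cohort)


cohort = [
    Patient(age=62, ecog=0, creatinine_clearance=85.0, prior_malignancy=False),
    Patient(age=79, ecog=1, creatinine_clearance=55.0, prior_malignancy=False),  # too old
    Patient(age=58, ecog=2, creatinine_clearance=70.0, prior_malignancy=False),  # ECOG 2
    Patient(age=66, ecog=1, creatinine_clearance=32.0, prior_malignancy=True),   # renal, prior cancer
]
print(f"{fraction_ineligible(cohort):.0%} of this cohort would be ineligible")
```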

HARNESSING THE EHR TO IMPROVE DATA COMPLETENESS AND QUALITY

Advances in information technology are changing the way health data are collected, particularly at the point of care. In the past decade, the practice of medicine in the United States has been transformed by the wide adoption of electronic health records (EHRs). EHR data can be transferred to outside parties through an application programming interface (API), subject to the rules of the Health Insurance Portability and Accountability Act (HIPAA). Early research efforts using EHR-based real-world data showed that it is possible to aggregate medical records from multiple institutions into data sets that include millions of individual patients. Numerous contract research organizations provide this service to the pharmaceutical industry, and professional organizations, governmental agencies, and private enterprises have also established real-world data registries. Examples include the Oncology Care Model registry from the Medicare Innovation Center; the AQUA registry from the American Urological Association; the IRIS registry from the American Academy of Ophthalmology; CancerLinQ, a health technology platform developed by ASCO to monitor and improve cancer care quality; and Flatiron Health, a company supporting an oncology EHR. Emerging studies show that EHR-derived data may augment RCTs by providing data that more accurately reflect the target patient population. One practical example comes from a cohort of patients, drawn from the Flatiron Health EHR, who received programmed death ligand 1–directed immunotherapy for non–small-cell lung cancer.9 The cohort included 5,257 patients, of whom 1,582 (30%) were age 75 years or older at the time of treatment and 166 had some degree of renal or hepatic failure; black or African American patients made up 9.3% of the cohort. In contrast, RCTs typically enroll patients who are a decade younger, exclude patients with organ dysfunction, and accrue patients from minority populations at lower rates.10

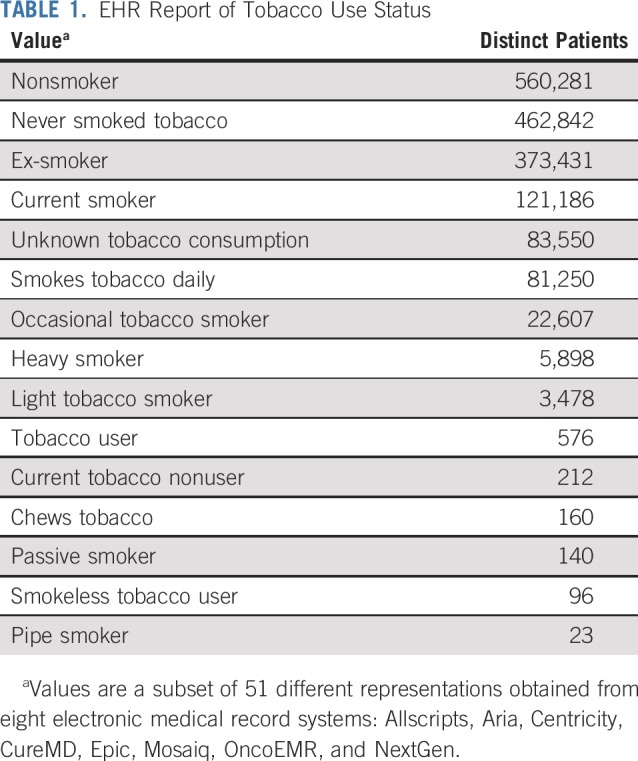

Unfortunately, currently available multi-institutional point-of-care data cannot adequately support a learning health system at scale, because today’s EHR ecosystem cannot exchange medical data in an interoperable format that meets the standards required for clinical decision support and research. Because most EHR systems were created to document care for billing purposes rather than to manage clinical and research data, their outputs are little more than nonstandardized electronic paper. This is especially true of the unstructured free text of physician notes and diagnostic reports, which in oncology often contain the most valuable information, such as disease status (eg, progressing v responding to treatment), treatment rationale, and many other factors involved in the clinical judgment underlying treatment decisions. As a result, assembling usable data sets is logistically challenging, even for variables that seem relatively straightforward. Table 1 provides an example from CancerLinQ, showing a subset of the many different ways in which a patient’s tobacco use status was reported in data obtained across 55 clinical sites and eight different EHRs. To compensate, EHR-based data are typically curated by trained medical record abstractors, who manually review the data according to standardized procedures with the assistance of tools such as natural language processing. In addition to being time-consuming and prohibitively expensive, this retrospective data curation risks introducing errors and biases into the data.
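The tobacco use example shows why harmonizing even a simple variable is hard. The Python sketch below maps free-text entries to four categories with ordered regular-expression rules. The example strings and category names are assumptions (the actual values in Table 1 are not reproduced here), and this rule-based approach is a deliberately crude stand-in for the curation and natural language processing described above.

```python
import re

# Ordered rules: the first pattern that matches wins, so the "never" and
# "former" patterns must be tried before the generic "smoker" pattern.
RULES = [
    (re.compile(r"\b(never|non[- ]?smoker|denies)\b", re.IGNORECASE), "never"),
    (re.compile(r"\b(former|ex[- ]?smoker|quit)\b", re.IGNORECASE), "former"),
    (re.compile(r"\b(current|smokes|every day|some days|smoker)\b", re.IGNORECASE), "current"),
]


def normalize_tobacco_status(raw):
    """Map a free-text tobacco use entry to never/former/current/unknown."""
    if raw is None or not raw.strip():
        return "unknown"
    for pattern, category in RULES:
        if pattern.search(raw):
            return category
    return "unknown"


# Hypothetical entries of the kind that differ across sites and EHRs.
entries = ["Never smoker", "Ex-smoker", "Current every day smoker", "Unknown if ever smoked"]
print([normalize_tobacco_status(e) for e in entries])
```

Rules like these break easily: an entry such as "Smoker: No" matches the generic "smoker" pattern and is misclassified as current, which is one reason curated data still require human review.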

TABLE 1.

EHR Report of Tobacco Use Status

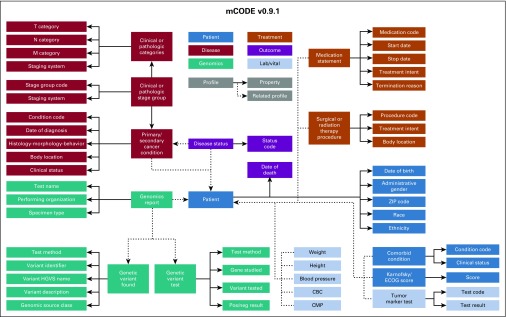

The most effective approach to improving data quality is to collect data in the EHR at the point of care, using a widely adopted standard format that is relevant to clinical research. With this approach, the data will be interoperable and will contain the elements needed to address research questions. One aspect of the interoperability challenge was recently addressed when the US Office of the National Coordinator for Health Information Technology announced in a proposed rule that the Health Level Seven International Fast Healthcare Interoperability Resources (HL7 FHIR) standard would be used for exchanging EHR data.11,12 With this standard, data from different institutions and from EHRs of different vendors can be shared and analyzed more accurately and efficiently. The first version of a new FHIR-based data standard to promote cancer data interoperability, mCODE, was released on June 1, 2019, for unrestricted use by the oncology clinical care and research community. mCODE, which stands for Minimal Common Oncology Data Elements, was developed through a collaboration among an oncology professional organization (ASCO), a government regulatory agency (FDA), a clinical trials research consortium (Alliance for Clinical Trials in Oncology Foundation), and a not-for-profit company that operates federally funded research and development centers (MITRE Corporation). The first mCODE version (mCODE v0.9.1) includes 73 data elements covering variables common to all patients with a cancer diagnosis (Fig 1).13 A growing community of clinicians, clinical researchers, health care institutions, and health care companies is currently testing mCODE and expanding its scope. An mCODE pilot for clinical care, coordinated by ASCO and MITRE, is underway at Intermountain Healthcare, which is headquartered in Salt Lake City, Utah.
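FHIR exposes EHR data as typed resources (for example, Patient, Condition, and Observation) that can be retrieved through a RESTful search of the form [base]/[type]?parameter=value. The minimal Python sketch below only builds such search URLs. The server address is hypothetical, and a real client would also need authorization (for example, through SMART on FHIR) and would send each request as an HTTP GET with an `Accept: application/fhir+json` header.

```python
from urllib.parse import urlencode

# Hypothetical FHIR R4 endpoint; not a real server.
FHIR_BASE = "https://fhir.example.org/r4"


def fhir_search_url(resource_type: str, **params) -> str:
    """Build a FHIR RESTful search URL of the form [base]/[type]?name=value&...

    Parameters are sorted so the same query always yields the same URL.
    """
    query = urlencode(sorted(params.items()))
    return f"{FHIR_BASE}/{resource_type}?{query}"


# All Condition resources (for example, cancer diagnoses) for one patient.
print(fhir_search_url("Condition", patient="123"))
# Laboratory Observations for the same patient, 50 results per page.
print(fhir_search_url("Observation", patient="123", category="laboratory", _count=50))
```

Because every conforming server accepts the same resource types and search syntax, the same query can in principle be sent to EHRs from different vendors, which is the practical payoff of a shared standard.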
For this pilot, an open-source SMART-on-FHIR app known as Compass will use an index patient’s mCODE elements from the Intermountain Center EHR to identify a cohort of similar, de-identified cancer cases in the CancerLinQ Discovery database. The pilot is intended to show the feasibility of capturing mCODE data elements from a commercial EHR system, and Compass may serve as a practical tool for identifying real-world treatment outcomes that support shared decision making between clinicians and patients.14
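The matching logic used by Compass is not described here. As a hypothetical illustration of the general idea of retrieving similar cases for an index patient, the Python sketch below filters a de-identified cohort on a few mCODE-like elements (cancer type, stage, performance status, and age) and ranks the matches. The field names, tolerances, and ranking rule are all assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CaseSummary:
    """A few mCODE-like elements for one de-identified case; fields are hypothetical."""
    case_id: str
    cancer_type: str
    stage: str
    ecog: int  # ECOG performance status
    age: int


def similar_cases(index, cohort, max_age_gap=5, limit=10):
    """Return cohort cases matching the index patient's cancer type and stage,
    within `max_age_gap` years of age, closest ECOG status first, then closest age."""
    matches = [
        c for c in cohort
        if c.cancer_type == index.cancer_type
        and c.stage == index.stage
        and abs(c.age - index.age) <= max_age_gap
    ]
    matches.sort(key=lambda c: (abs(c.ecog - index.ecog), abs(c.age - index.age)))
    return matches[:limit]


index = CaseSummary("index", "breast", "II", ecog=1, age=60)
cohort = [
    CaseSummary("a", "breast", "II", ecog=1, age=62),
    CaseSummary("b", "breast", "II", ecog=0, age=60),
    CaseSummary("c", "breast", "III", ecog=1, age=60),  # different stage
    CaseSummary("d", "breast", "II", ecog=1, age=70),   # age gap too large
]
print([c.case_id for c in similar_cases(index, cohort)])  # ['a', 'b']
```

Exact matching on type and stage keeps the comparison clinically meaningful, while the ranking on performance status and age is only one of many possible similarity choices.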

FIG 1.

Conceptual model diagram of mCODE (Minimal Common Oncology Data Elements) version 0.9.1 currently available as an open-source data specification to promote oncology electronic health record data interoperability. CBC, complete blood count; CMP, comprehensive metabolic panel; ECOG, Eastern Cooperative Oncology Group; HGVS, Human Genome Variation Society.

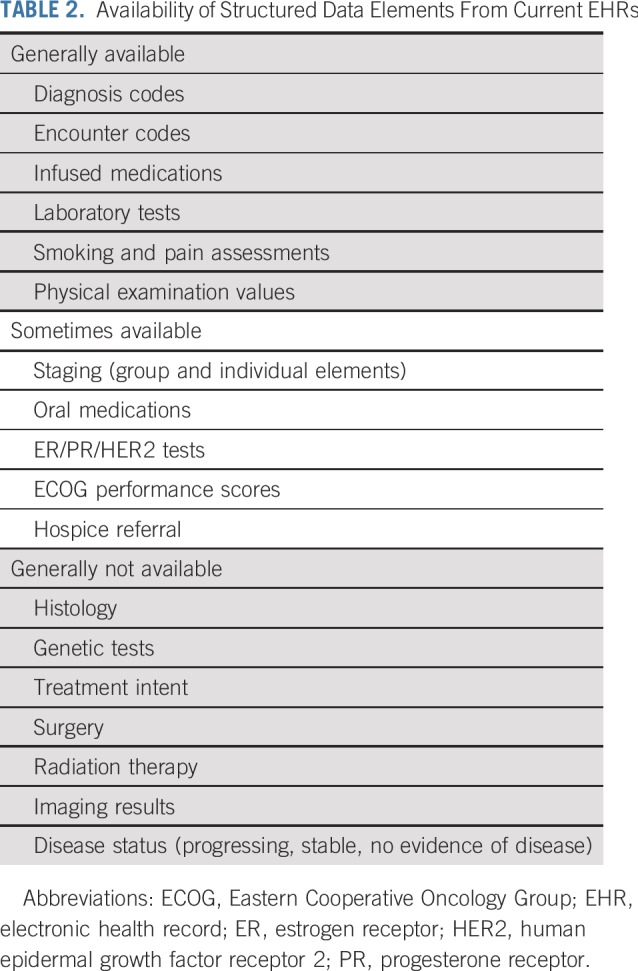

It is hoped that wide adoption of the initial mCODE data standards will significantly enhance data quality and dramatically reduce the need for post hoc curation. However, this will not fully meet some important requirements for oncology clinical research using the EHR. For example, in oncology RCTs, the treatment response of advanced solid tumors is measured by comparing pre- and post-treatment radiographic images according to standard criteria such as the Response Evaluation Criteria in Solid Tumors (RECIST),15 an approach that is labor intensive and is not used in routine clinical care. During routine clinical care, information about whether a patient’s tumor is responding to treatment is instead captured sporadically in free-text clinical notes. Experience to date has shown that, even after manual curation of clinical notes assisted by state-of-the-art natural language processing, descriptions of the response of solid tumors to treatments such as chemotherapy cannot be reliably converted into well-defined, computable data elements.16 Although image-based assessments such as RECIST may soon be replaced by machine learning algorithms, assessing the benefits and risks of treatment still requires complex variables, such as elements that define physical examination findings, symptoms, or adverse events. These important end points, which are also relevant to research beyond oncology, cannot be accurately extracted from current EHR-based data sets. Table 2 indicates the relative availability of different data elements in current noncurated EHR data. This example shows that we need to develop and validate new methods to reliably capture some of the outcomes that matter most to patients and clinicians. In addition, the process of entering data into the EHR needs to be optimized. The current approach to collecting point-of-care data places the burden of formatting on clinicians, and this approach has failed to foster a learning health system.
Going forward, unstructured and missing data must be avoided by prompting clinicians to enter relevant outcomes and by making it easy for them to do so. Even more important, clinicians must be motivated to provide essential data because they derive value from their interaction with the EHR.
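For readers unfamiliar with RECIST, the Python sketch below shows the core arithmetic of RECIST 1.1 target-lesion assessment: complete response when all target lesions disappear, partial response for a decrease of at least 30% in the sum of diameters from baseline, and progressive disease for an increase of at least 20% (and at least 5 mm) over the smallest sum recorded on study. It is a simplification, assuming integer millimeter sums: it ignores nontarget lesions, new lesions, and the lymph node short-axis rule, and the function name is hypothetical.

```python
def recist_target_response(baseline_sum_mm, nadir_sum_mm, current_sum_mm):
    """Simplified RECIST 1.1 target-lesion response category.

    baseline_sum_mm: sum of target lesion diameters at baseline
    nadir_sum_mm: smallest sum recorded on study (including baseline)
    current_sum_mm: sum at the current assessment
    Ignores nontarget lesions, new lesions, and the lymph node short-axis rule.
    """
    if current_sum_mm == 0:
        return "CR"  # all target lesions have disappeared
    increase = current_sum_mm - nadir_sum_mm
    # PD: >= 20% increase over the nadir AND an absolute increase >= 5 mm.
    # Checked before PR because progression takes precedence.
    # Integer cross-multiplication avoids floating-point edge cases.
    if increase >= 5 and increase * 100 >= nadir_sum_mm * 20:
        return "PD"
    # PR: >= 30% decrease from baseline, i.e. current <= 70% of baseline.
    if current_sum_mm * 100 <= baseline_sum_mm * 70:
        return "PR"
    return "SD"


print(recist_target_response(100, 60, 72))  # PD: 12 mm (20%) above the nadir
```

Each input is a number that must be measured on images by a trained reader, which is why this assessment is rarely available in routine care.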

TABLE 2.

Availability of Structured Data Elements From Current EHRs

After mCODE v0.9.1 was released to the oncology community, several efforts were launched to test and expand this new data standard. One example is a project to develop and validate data elements for EHR-based capture of cancer treatment response and toxicity data. This effort, a collaboration among the National Clinical Trials Network (funded by the National Cancer Institute), the FDA, and MITRE, is known as the Integrating Clinical Trials and Real World Endpoints data (ICAREdata) initiative. Both the ICAREdata initiative and the Compass implementation pilot will address the need to better understand clinician decision making and to gather data defining the factors that determine which treatments clinicians recommend and which treatments patients agree to accept. Importantly, because the EHR as currently configured contributes substantially to clinician burnout, an essential goal of these projects is to make the clinician’s interaction with the EHR more efficient and effective. Finally, patients’ wishes must be acknowledged and used to guide how data are used. Both the ICAREdata initiative and the Compass pilot are considering methods to allow patient participation, such as submitting patient-reported outcomes data and requesting specific consent for data use.
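As a hypothetical sketch of what structured point-of-care capture of response and toxicity data could look like, the Python code below defines an assessment record with constrained values and a helper that lists the fields an EHR form would prompt the clinician to complete. The response categories and field names are assumptions, not the ICAREdata data elements; only the 1-to-5 range of Common Terminology Criteria for Adverse Events (CTCAE) grades reflects an established standard.

```python
from dataclasses import dataclass
from datetime import date
from enum import Enum


class ResponseCategory(Enum):
    """Hypothetical clinician-assessed response categories (not the ICAREdata value set)."""
    IMPROVED = "improved"
    STABLE = "stable"
    WORSENED = "worsened"
    NOT_EVALUABLE = "not evaluable"


@dataclass(frozen=True)
class ToxicityEntry:
    term: str   # adverse event term, e.g. a CTCAE term such as "Nausea"
    grade: int  # CTCAE grade: 1 (mild) through 5 (death related to the adverse event)

    def __post_init__(self):
        if not 1 <= self.grade <= 5:
            raise ValueError(f"CTCAE grade must be between 1 and 5, got {self.grade}")


@dataclass(frozen=True)
class EncounterAssessment:
    patient_id: str
    assessed_on: date
    response: ResponseCategory
    toxicities: tuple = ()  # tuple of ToxicityEntry; immutable default


REQUIRED_FIELDS = ("patient_id", "assessed_on", "response")


def missing_fields(form: dict) -> list:
    """Fields an EHR form would prompt the clinician to complete before saving."""
    return [name for name in REQUIRED_FIELDS if not form.get(name)]


def assessment_from_form(form: dict) -> EncounterAssessment:
    """Build a validated record from a completed form, or raise if fields are missing."""
    missing = missing_fields(form)
    if missing:
        raise ValueError(f"missing required fields: {', '.join(missing)}")
    return EncounterAssessment(
        patient_id=form["patient_id"],
        assessed_on=form["assessed_on"],
        response=ResponseCategory(form["response"]),
        toxicities=tuple(ToxicityEntry(t, g) for t, g in form.get("toxicities", ())),
    )
```

Constraining values at entry time, rather than curating free text afterward, is the design choice the text argues for; the prompt list is where an EHR could make that entry quick for the clinician.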

DELIVERING A LEARNING HEALTH SYSTEM

Perhaps the strongest driver toward a learning health system is the urgent need to manage the use of health care resources by directly linking the cost of care to the results it produces for the patient. Currently available value frameworks, such as those developed by ASCO and the European Society for Medical Oncology, attempt to support decision making by presenting treatment options together with the cost of care.17 These models, however, cannot be implemented without supporting data, which will require wider availability of data addressing what is most meaningful to patients and clinicians: not just survival duration but also quality of life and the impact of treatment on activities of daily living. This extent of data sharing is new to medicine, and serving the best interests of the individual patient must be a core guiding principle of the process. Ideally, the learning health system will also yield tools that help patients engage more effectively in their own care and in clinical research. Potential risks, such as loss of patient confidentiality, can be mitigated by techniques such as data de-identification and by adherence to the highest standards of data security.
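De-identification can be illustrated with a few of the HIPAA Safe Harbor rules: removing direct identifiers, reducing dates to the year, aggregating ages over 89, and truncating ZIP codes to three digits. The Python sketch below is a simplified, hypothetical example (the field names are assumptions) and is not a complete Safe Harbor implementation, which covers 18 identifier types and adds conditions such as a population threshold for three-digit ZIP codes.

```python
from datetime import date

# A subset of the direct identifiers removed under the HIPAA Safe Harbor
# method; these field names are hypothetical.
DIRECT_IDENTIFIERS = {"name", "mrn", "ssn", "phone", "email", "street_address"}


def deidentify(record: dict) -> dict:
    """Return a copy of `record` with simplified Safe Harbor-style transformations."""
    age = record.get("age")
    over_89 = isinstance(age, int) and age > 89
    out = {}
    for key, value in record.items():
        if key in DIRECT_IDENTIFIERS:
            continue  # drop direct identifiers entirely
        if key == "birth_date" and over_89:
            continue  # even the birth year would reveal an age over 89
        if isinstance(value, date):
            out[key] = value.year  # keep only the year of any date
        elif key == "age" and over_89:
            out[key] = "90+"  # aggregate ages over 89 into one category
        elif key == "zip":
            out[key] = str(value)[:3]  # three-digit ZIP (population check omitted)
        else:
            out[key] = value
    return out


print(deidentify({"name": "Jane Doe", "age": 92, "zip": "84101", "diagnosis_date": date(2019, 3, 4)}))
```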

What does the ideal learning environment look like? At each clinical encounter, decision support within the EHR recalls the data that are currently available, provides context so that the clinician can assess the relevance of these data to the specific patient, and prospectively captures data from every encounter in a manner that allows valid research conclusions to be drawn using either an observational or an RCT approach. To achieve this, we must define and structure the data elements that are essential to knowledge generation, configure the EHR to prompt and incentivize clinicians and patients to provide these data in a standardized format, and share data widely across clinical environments spanning the broad spectrum of health care. Medicine must also pivot from an environment in which access to clinical data is restricted to one in which competition instead focuses on developing new uses for the data. Health systems must be willing to share their data safely and securely, clinicians and patients must be willing to provide data in a structured format, and EHR vendors must enable this goal by working with clinicians and researchers to redesign the EHR into the tool needed to drive a learning health system.

ACKNOWLEDGMENT

At the time of S.K.’s involvement with this manuscript, he was an employee of the US Food and Drug Administration. This manuscript does not necessarily reflect the views and policies of the US government or the Food and Drug Administration.

Footnotes

The views expressed in this article are those of the authors and do not necessarily represent the views and policies of the US government or the US Food and Drug Administration.

AUTHOR CONTRIBUTIONS

Conception and design: Monica M. Bertagnolli, Brian Anderson, Steven Piantadosi, Andre Quina, Richard L. Schilsky, Sean Khozin

Administrative support: Monica M. Bertagnolli, Brian Anderson

Collection and assembly of data: Brian Anderson, Andre Quina, Robert S. Miller

Data analysis and interpretation: Monica M. Bertagnolli, Brian Anderson, Kelly Norsworthy, Robert S. Miller

Manuscript writing: All authors

Final approval of manuscript: All authors

Accountable for all aspects of the work: All authors

AUTHORS' DISCLOSURES OF POTENTIAL CONFLICTS OF INTEREST

Status Update on Data Required to Build a Learning Health System

The following represents disclosure information provided by authors of this manuscript. All relationships are considered compensated unless otherwise noted. Relationships are self-held unless noted. I = Immediate Family Member, Inst = My Institution. Relationships may not relate to the subject matter of this manuscript. For more information about ASCO's conflict of interest policy, please refer to www.asco.org/rwc or ascopubs.org/journal/jco/site/ifc.

Open Payments is a public database containing information reported by companies about payments made to US-licensed physicians (Open Payments).

Monica M. Bertagnolli

Research Funding: AbbVie (Inst), Agenus (Inst), Astellas Pharma (Inst), AstraZeneca (Inst), Breast Cancer Research Foundation (Inst), Bristol-Myers Squibb (Inst), Celgene (Inst), Complion (Inst), Exelixis (Inst), Genentech (Inst), Gilead Sciences (Inst), GlaxoSmithKline (Inst), Incyte (Inst), Jazz Pharmaceuticals (Inst), Eli Lilly (Inst), Mayo Clinic (Inst), Millennium Pharmaceuticals (Inst), Novartis (Inst), Patient-Centered Outcomes Research Institute (Inst), Pfizer (Inst), Robert Wood Johnson Foundation (Inst), SageRock Advisors (Inst), Taiho Pharmaceutical (Inst), Bayer HealthCare Pharmaceuticals (Inst), Eisai (Inst), Leidos Biomedical Research (Inst), Lexicon Pharmaceuticals (Inst), Merck (Inst), Pharmacyclics (Inst), Takeda Pharmaceuticals (Inst), TESARO (Inst), Baxalta (Inst), Sanofi (Inst), TEVA Pharmaceuticals Industries (Inst), Janssen Oncology (Inst), Merck (Inst)

Uncompensated Relationships: Leap Therapeutics, Syntimmune, Syntalogic

Open Payments Link: https://openpaymentsdata.cms.gov/physician/114497/summary

Brian Anderson

Stock and Other Ownership Interests: Athenahealth (I)

Consulting or Advisory Role: Athenahealth (I)

Travel, Accommodations, Expenses: Clarify Health Solutions

Steven Piantadosi

Consulting or Advisory Role: Omnitura

Richard L. Schilsky

Research Funding: AstraZeneca (Inst), Bayer HealthCare Pharmaceuticals (Inst), Bristol-Myers Squibb (Inst), Genentech (Inst), Eli Lilly (Inst), Merck (Inst), Pfizer (Inst), Boehringer Ingelheim (Inst)

Travel, Accommodations, Expenses: Varian Medical Systems

Open Payments Link: https://openpaymentsdata.cms.gov/physician/1138818/summary

No other potential conflicts of interest were reported.

REFERENCES

1. Feinstein AR. Clinical Judgment. Baltimore, MD: Williams & Wilkins; 1967.

2. Eddy DM. Clinical decision making: From theory to practice. Guidelines for policy statements: The explicit approach. JAMA. 1990;263:2239–2243. doi: 10.1001/jama.263.16.2239.

3. Friedman CP, Wong AK, Blumenthal D. Achieving a nationwide learning health system. Sci Transl Med. 2010;2:57cm29. doi: 10.1126/scitranslmed.3001456.

4. Centers for Medicare & Medicaid Services. Medicare coverage indexes. 2019. https://www.cms.gov/medicare-coverage-database/indexes/medicare-coverage-documents-index.aspx

5. Poonacha TK, Go RS. Level of scientific evidence underlying recommendations arising from the National Comprehensive Cancer Network clinical practice guidelines. J Clin Oncol. 2011;29:186–191. doi: 10.1200/JCO.2010.31.6414.

6. Wagner J, Marquart J, Ruby J, et al. Frequency and level of evidence used in recommendations by the National Comprehensive Cancer Network guidelines beyond approvals of the US Food and Drug Administration: Retrospective observational study. BMJ. 2018;360:k668. doi: 10.1136/bmj.k668.

7. Mitchell AP, Harrison MR, Walker MS, et al. Clinical trial participants with metastatic renal cell carcinoma differ from patients treated in real-world practice. J Oncol Pract. 2015;11:491–497. doi: 10.1200/JOP.2015.004929.

8. Khozin S, Blumenthal GM, Pazdur R. Real-world data for clinical evidence generation in oncology. J Natl Cancer Inst. 2017;109(11). doi: 10.1093/jnci/djx187.

9. Khozin S, Miksad RA, Adami J, et al. Real-world progression, treatment, and survival outcomes during rapid adoption of immunotherapy for advanced non-small cell lung cancer. Cancer. 2019;125:4019–4032. doi: 10.1002/cncr.32383.

10. Nazha B, Mishra M, Pentz R, et al. Enrollment of racial minorities in clinical trials: Old problem assumes new urgency in the age of immunotherapy. Am Soc Clin Oncol Educ Book. 2019;39:3–10. doi: 10.1200/EDBK_100021.

11. HL7. FHIR [Fast Healthcare Interoperability Resource], release 4: Home page. 2019. https://www.hl7.org/fhir/

12. HealthITBuzz. ONC’s proposed rule will connect people to their care. 2019. https://www.healthit.gov/buzz-blog/interoperability/oncs-proposed-rule-will-connect-people-to-their-care

13. mCODE. Minimal common oncology data elements: The initiative to create a core cancer model and foundational EHR data elements. 2019. mCODEinitiative.org

14. MITRE. MITRE announces Compass, a new open-source application to collect common oncology data. 2019. https://www.mitre.org/news/press-releases/mitre-announces-compass-a-new-open-source-application-to-collect-common

15. Eisenhauer EA, Therasse P, Bogaerts J, et al. New response evaluation criteria in solid tumours: Revised RECIST guideline (version 1.1). Eur J Cancer. 2009;45:228–247. doi: 10.1016/j.ejca.2008.10.026.

16. Griffith SD, Tucker M, Bowser B, et al. Generating real-world tumor burden endpoints from electronic health record data: Comparison of RECIST, radiology-anchored, and clinician-anchored approaches for abstracting real-world progression in non-small cell lung cancer. Adv Ther. 2019;36:2122–2136. doi: 10.1007/s12325-019-00970-1.

17. Cherny NI, de Vries EGE, Dafni U, et al. Comparative assessment of clinical benefit using the ESMO-Magnitude of Clinical Benefit Scale Version 1.1 and the ASCO Value Framework Net Health Benefit Score. J Clin Oncol. 2019;37:336–349. doi: 10.1200/JCO.18.00729.