Abstract

PURPOSE

Rapid increases in technology and data motivate the application of artificial intelligence (AI) to primary care, but no comprehensive review exists to guide these efforts. Our objective was to assess the nature and extent of the body of research on AI for primary care.

METHODS

We performed a scoping review, searching 11 published or gray literature databases with terms pertaining to AI (eg, machine learning, bayes* network) and primary care (eg, general pract*, nurse). We performed title and abstract screening and then full-text screening using Covidence. Studies had to involve research, include both AI and primary care, and be published in English. We extracted data and summarized studies by 7 attributes: purpose(s); author appointment(s); primary care function(s); intended end user(s); health condition(s); geographic location of data source; and AI subfield(s).

RESULTS

Of 5,515 unique documents, 405 met eligibility criteria. The body of research focused on developing or modifying AI methods (66.7%) to support physician diagnostic or treatment recommendations (36.5% and 13.8%, respectively), for chronic conditions, using data from higher-income countries. Few studies (14.1%) had even a single author with a primary care appointment. The predominant AI subfields were supervised machine learning (40.0%) and expert systems (22.2%).

CONCLUSIONS

Research on AI for primary care is at an early stage of maturity. For the field to progress, more interdisciplinary research teams with end-user engagement and evaluation studies are needed.

Key words: artificial intelligence, health information technology, health informatics, electronic health records, big data, data mining, primary care, family medicine, decision support, diagnosis, treatment, scoping review

Artificial intelligence (AI) research began in the 1950s, and public, professional, and commercial recognition of its potential for adoption in health care settings is growing.1-7 This potential extends to primary care,8-10 defined by Barbara Starfield as “The level of a health service system that provides entry into the system for all new needs and problems, provides person-focused (as opposed to disease-oriented) care over time, provides care for all but very uncommon or unusual conditions, and coordinates or integrates care provided elsewhere or by others.”11(pp8-9)

Given the recent surge in uptake of electronic health records (EHRs) and thus availability of data,12,13 there is potential for AI to benefit both primary care practice and research, especially in light of the breadth of practice and rapidly increasing amounts of information that humans cannot meaningfully condense and comprehend.1,2,4-11,14-20

AI’s immediate usefulness is not guaranteed, however: EHRs were predicted to transform primary care for the better, but led to unanticipated outcomes and encountered barriers to adoption.12,21-23 AI could also harm, for example, by exaggerating racial, class, or sex biases if models are built with biased data or used with new populations for whom performance may be poor. Liability, trust, and disrupted workflow are further concerns.5

AI initially focused on how computers might achieve humanlike intelligence and how we might recognize it.24,25 Two approaches emerged: rule centric and data centric. Rule-centric methods capture intelligence by explicitly writing down rules that govern intelligent decision making, whereas data-centric methods learn specific tasks from previously collected data.24 Examples of health applications are presented below.

MYCIN was the first rule-based AI system for health care, developed in the 1970s to diagnose blood infections using more than 450 rules derived from experts, textbooks, and case reports.24,26 Although met with initial enthusiasm, rule-centric methods faltered when faced with increasing complexity. As availability of EHRs increased, AI shifted toward data-centric, machine learning methods designed to automatically capture complex relationships within health data. Machine learning methods are now used in health research to predict diabetes and cancer from health records,16,27-29 and together with computer vision have been applied to skin cancer diagnosis based on skin lesion images.30,31 Machine learning and natural language processing methods extract structured information from unstructured text data,15 which could potentially remove some of the EHR-associated burden from clinicians.6,32,33
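To make the rule-centric versus data-centric distinction concrete, the sketch below contrasts a hand-written rule with a cutoff learned from labeled examples (a one-feature decision stump, about the simplest supervised learner). It is illustrative only: the features, the fever and heart-rate thresholds, and the training data are hypothetical and are not drawn from MYCIN or from any study discussed here.

```python
from typing import List, Tuple


def rule_based_flag(temp_c: float, heart_rate: int) -> bool:
    """Rule-centric: an expert writes the decision logic down explicitly."""
    # Hypothetical rule: flag when fever AND raised heart rate are both present
    return temp_c >= 38.0 and heart_rate > 90


def learn_threshold(examples: List[Tuple[float, bool]]) -> float:
    """Data-centric: choose the temperature cutoff that best fits labeled data.

    This is a one-feature decision stump: predict True when value >= cutoff.
    """
    candidates = sorted({value for value, _ in examples})
    best_cutoff, best_correct = candidates[0], -1
    for cutoff in candidates:
        correct = sum((value >= cutoff) == label for value, label in examples)
        if correct > best_correct:  # ties keep the lower cutoff
            best_cutoff, best_correct = cutoff, correct
    return best_cutoff


# Hypothetical labeled examples: (temperature in degrees C, infection confirmed?)
training = [(36.8, False), (37.2, False), (37.9, False), (38.3, True), (38.9, True), (39.4, True)]

print(rule_based_flag(38.5, 102))  # True
print(learn_threshold(training))   # 38.3
```

In the rule-centric version a person must anticipate every case in advance; in the data-centric version the cutoff is re-estimated whenever the training data change, which is what lets such methods capture relationships nobody wrote down.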

These examples predominantly come from referral care settings, not from primary care, where the spectrum of illness is wider, and clinicians have fewer diagnostic instruments or tests available. Despite optimism for using AI to benefit primary care, there is no comprehensive review of what contribution AI has made so far, and thus little guidance on how best to proceed with research. To address this gap, our objective was to identify and assess the nature and extent of the body of research involving AI and primary care.

METHODS

We performed a scoping review according to published guidelines whereby a systematic search strategy identifies literature on a topic, data are extracted from relevant documents, and findings are synthesized.34-36

We followed the Preferred Reporting Items for Systematic Reviews and Meta-Analyses for Scoping Reviews (PRISMA-ScR) Checklist (Supplemental Appendix 1, http://www.AnnFamMed.org/content/18/3/250/suppl/DC1),37 and registered our protocol with the Open Science Framework (osf.io/w3n2b).

Search Strategy

We developed our search strategies (Supplemental Appendix 2, http://www.AnnFamMed.org/content/18/3/250/suppl/DC1) for health sciences, computer science, and interdisciplinary databases iteratively and in collaboration with a medical sciences librarian. Strategies included key words and, where possible, subject headings around the concepts of AI and primary care. Terms were identified through searches of the National Library of Medicine MeSH Tree Structures and by discipline experts on our review team. Supplemental Appendix 2 contains an overview of the search strategy development process (Figure 1S) and final strategies for the 11 published or gray literature databases: Medline-OVID, EMBASE, CINAHL, Cochrane Library, Web of Science, Scopus, Institute of Electrical and Electronics Engineers Xplore, Association for Computing Machinery Digital Library, MathSciNet, Association for the Advancement of Artificial Intelligence, and arXiv. Where possible, English-language limits were set; to estimate the amount of literature missed, searches were rerun for a subset of the databases (Medline-OVID, CINAHL, Web of Science) with language limits reset to accept all non-English languages. Each of these reruns retrieved fewer than 10 documents. Retrieved references were uploaded into Covidence,38 which we used to remove duplicate results and facilitate the screening process.
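As a rough illustration of how concept blocks like these are usually combined, the sketch below ORs synonyms within each concept and ANDs the concepts together. It uses only the example terms named in the Abstract; the function names are hypothetical, real strategies also map subject headings, and truncation and phrase syntax differ between databases, so this is a generic sketch rather than the strategies in Supplemental Appendix 2.

```python
from typing import List


def or_block(terms: List[str]) -> str:
    """Join synonyms for one concept with OR; quote multiword phrases."""
    # The truncation symbol (*) is passed through unchanged; its exact
    # behavior inside quoted phrases varies by database.
    quoted = [f'"{t}"' if " " in t else t for t in terms]
    return "(" + " OR ".join(quoted) + ")"


def build_query(concepts: List[List[str]]) -> str:
    """Require at least one term from every concept block (AND)."""
    return " AND ".join(or_block(block) for block in concepts)


ai_terms = ["machine learning", "bayes* network"]
primary_care_terms = ["general pract*", "nurse"]
print(build_query([ai_terms, primary_care_terms]))
# ("machine learning" OR "bayes* network") AND ("general pract*" OR nurse)
```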

Study Selection

Title and Abstract Screening

For preliminary screening, 2 reviewers (J.K.K., D.J.L.) independently rated document titles and abstracts against our eligibility criteria: (1) reported on research, (2) mentioned or alluded to AI, and (3) mentioned a primary care data source, setting, or personnel. We pilot-tested the first 25 and next 100 documents, discussing disagreements to ensure mutual understanding of the eligibility criteria and capture of relevant literature. A third reviewer (A.L.T.) resolved remaining initial disagreements. If 2 reviewers rated a document as meeting the above criteria, the document progressed to full-text screening. We excluded 37 documents on computerized cognitive behavioral therapy because their underlying methods were often unclear and reviews of these systems already exist.39-43
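The title and abstract decision rule can be expressed as a small function. This is a minimal sketch under the assumption that each rating is a simple include/exclude judgment; the function names are hypothetical and the pilot-testing discussions are not modeled.

```python
from typing import Optional


def meets_title_abstract_criteria(is_research: bool, mentions_ai: bool, mentions_primary_care: bool) -> bool:
    """All 3 title/abstract eligibility criteria must be met."""
    return is_research and mentions_ai and mentions_primary_care


def advances_to_full_text(rating_1: bool, rating_2: bool, rating_3: Optional[bool] = None) -> bool:
    """Return True if 2 reviewers rated the document as eligible.

    rating_1 and rating_2 come from the independent reviewers; rating_3 is the
    third reviewer's rating, needed only when the first 2 disagree.
    """
    if rating_1 == rating_2:
        return rating_1
    if rating_3 is None:
        raise ValueError("Reviewers disagree: a third reviewer's rating is required")
    # With a split vote, the third rating decides which side has 2 of 3
    return rating_3


print(advances_to_full_text(True, True))          # True
print(advances_to_full_text(True, False, False))  # False
```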

Full-Text Screening

For full-text screening, 2 reviewers (J.K.K., D.J.L.) independently reviewed the full text of each document against the following eligibility criteria: (1) was a research study; (2) developed or used AI (Table 1S, Supplemental Appendix 3, http://www.AnnFamMed.org/content/18/3/250/suppl/DC1, contains subfield definitions); and (3) used primary care data, was conducted in a primary care setting, and/or explicitly mentioned applicability to primary care. Documents were excluded if they were narratives or editorials, did not apply to primary care, or were not available as English-language full text. As with title and abstract screening, we performed pilot-testing and refined the eligibility criteria. Disagreements were resolved by discussion until consensus was reached.

A notable challenge arose from authors’ use of terminology that overlaps with AI to describe methods that are not considered AI (for example, 1 study referred to simple string matching as natural language processing44); we excluded these studies. We also excluded 34 studies because there was insufficient information to determine whether AI was involved, even after consulting references cited in their methods.
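To show why simple string matching does not amount to natural language processing, the sketch below flags any note containing a keyword. The keyword and notes are hypothetical, and this is not a reproduction of the cited study’s algorithm. The second call shows the typical failure: a negated mention still matches, because nothing in the method models language.

```python
import re


def keyword_match(note: str, keyword: str = "cirrhosis") -> bool:
    """Case-insensitive whole-word search; no learning, parsing, or context."""
    pattern = rf"\b{re.escape(keyword)}\b"
    return re.search(pattern, note, flags=re.IGNORECASE) is not None


print(keyword_match("History of alcoholic Cirrhosis."))  # True
print(keyword_match("No evidence of cirrhosis."))        # True: the negation is ignored
```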

Data Extraction and Synthesis

We developed the data extraction sheet iteratively to ensure relevant and consistent information capture, performing pilot-testing and revisions on 3 and then 5 randomly selected articles.31,45-51 The remaining documents were split alphabetically and extracted independently (100 by A.L.T., 50 by D.J.L., 250 by J.K.K.). We extracted the following information: publication details, study purpose(s), author appointment(s), primary care function(s), author-intended target end user(s), target health condition(s), location of data source(s) (if any), AI subfield(s), the reviewer who performed extraction, and any reviewer notes. We agreed on definitions for each data extraction field (Table 1S, Supplemental Appendix 3). For all fields except publication details, author appointments, and additional notes, we predefined categories based on the pilot-testing and on content knowledge; studies could belong to multiple categories. An “other” category captured specifics of studies that did not fit into a predefined category, and an “unknown” category was used if not enough information was provided for category selection. We summarized results as categorical variables for 7 data extraction fields and performed selected cross-tabulations.
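Because studies could belong to multiple categories, each category’s percentage is computed against all studies, and the percentages within a field can sum to more than 100%. The sketch below shows that tallying and a pairwise cross-tabulation on hypothetical records; the field values and function names are illustrative assumptions, not the review’s extraction sheet.

```python
from collections import Counter
from typing import Dict, List, Set


def category_percentages(studies: List[Set[str]]) -> Dict[str, float]:
    """Percentage of all studies assigned to each category (multi-label)."""
    n = len(studies)
    counts = Counter(cat for cats in studies for cat in cats)
    return {cat: round(100 * k / n, 1) for cat, k in counts.items()}


def cross_tabulate(field_a: List[Set[str]], field_b: List[Set[str]]) -> Counter:
    """Count studies for every (category in field A, category in field B) pair."""
    return Counter((a, b) for cats_a, cats_b in zip(field_a, field_b) for a in cats_a for b in cats_b)


# Hypothetical extraction records, one set of categories per study
functions = [{"diagnosis"}, {"diagnosis", "treatment"}, {"information extraction"}, {"unknown"}]
purposes = [{"methods development"}, {"methods development"}, {"data analysis"}, {"evaluation"}]

pcts = category_percentages(functions)
print(pcts)  # diagnosis -> 50.0; each other category -> 25.0
print(sum(pcts.values()))  # 125.0
print(cross_tabulate(functions, purposes)[("diagnosis", "methods development")])  # 2
```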

RESULTS

Searches

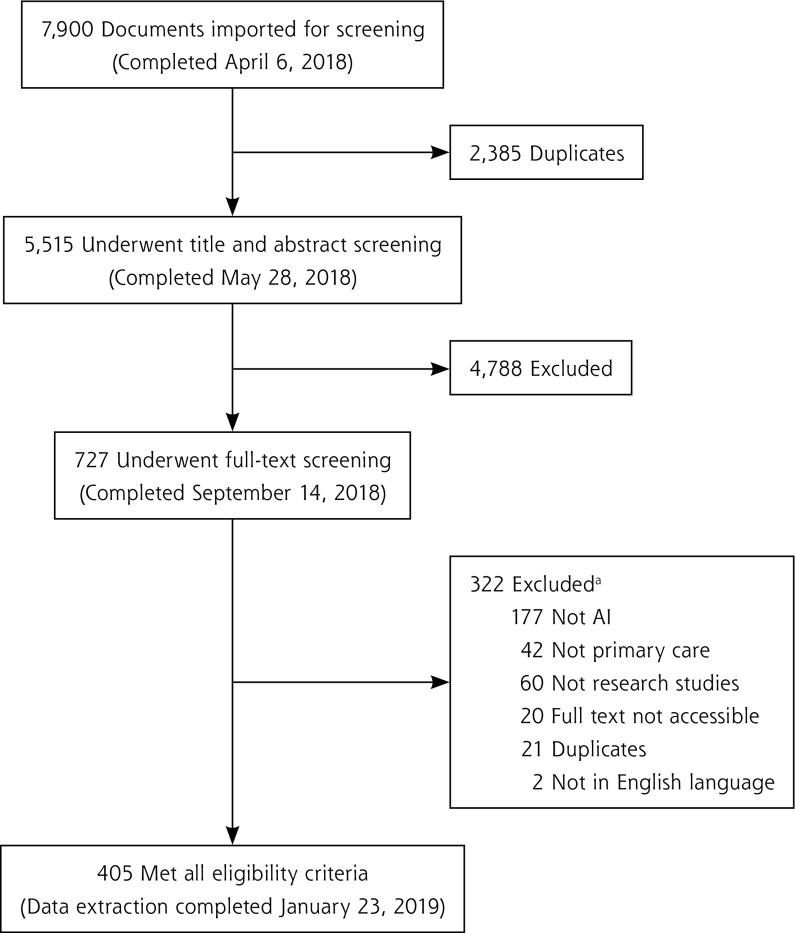

We retrieved 5,515 nonduplicate documents for title and abstract screening; 727 met the eligibility criteria for full-text screening, and 405 met the final criteria (Figure 1). (Supplemental Appendix 4, available at http://www.AnnFamMed.org/content/18/3/250/suppl/DC1, contains a list of the 405 references.) The earliest included study, published in 1986, developed a supervised machine learning method to support abdominal pain diagnosis.52 Studies are summarized below according to the 7 key data extraction categories described above.

Figure 1.

PRISMA flow diagram.

AI = artificial intelligence; PRISMA = Preferred Reporting Items for Systematic Reviews and Meta-Analyses.

ᵃ“Not primary care” was used as the exclusion criterion when multiple criteria applied.

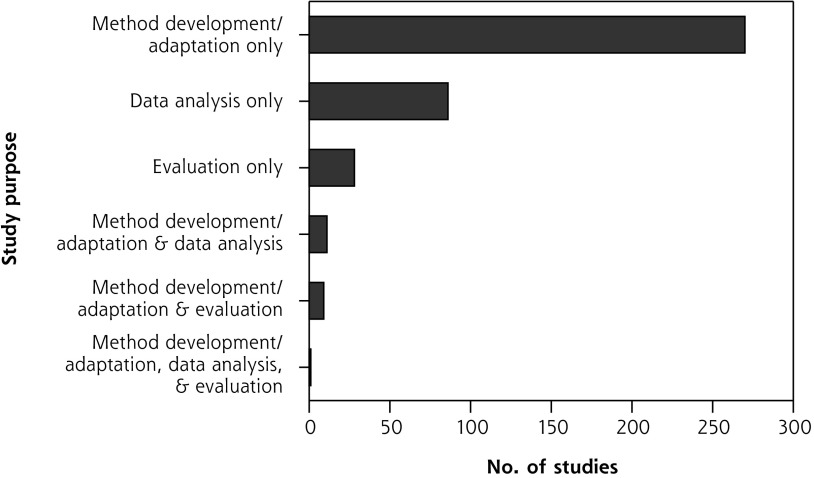

Study Purpose

The majority of studies (270 studies, 66.7%) developed new or adapted existing AI methods using secondary data. The second most common study purpose (86 studies, 21.2%) was analyzing data using AI techniques, such as eliciting patterns from health data to facilitate research. Few (28 studies, 6.9%) evaluated AI application in a real-world setting.

Some series of studies reported on multiple stages of a project, from AI development to pilot-testing; these projects included intended end users located in a primary care setting.53-60 A small minority of studies (21 studies, 5.2%) had multiple purposes. Figure 2 presents all combinations.

Figure 2.

Overall purpose of studies.

Author Appointment

We categorized author appointments into 4 categories: (1) technology, engineering, and math (TEM) discipline, meaning an author appointed in a department of mathematics, engineering, computer science, informatics, and/or statistics; (2) primary care discipline, meaning an author appointed in a department of family medicine, primary care, community health, and/or an analogous department; (3) nursing discipline; and (4) other. Authors were predominantly from TEM disciplines, with 214 studies (52.8%) having at least 1 author with a TEM appointment compared with just 57 studies (14.1%) having at least 1 author with a primary care appointment. Twenty-three studies (5.7%) had a primary care–appointed author listed first, and 27 (6.7%) had one listed last. These patterns remained when unspecified or general medical appointments (ie, nonspecialist) were counted as primary care appointments. Four studies had authors with nursing appointments. Cross-tabulations between study purpose and author appointment categories did not suggest that author appointment types differed by study purpose. Table 1 presents a summary of the body of literature broken into primary care and TEM author disciplines; Table 2S (Supplemental Appendix 3) breaks down author appointments into 16 categories.

Table 1.

Appointments of Study Authors

| Author Appointment Category | Studies, No. (%) (N = 405) |
|---|---|
| Primary care and TEM | 27 (6.7) |
| Primary care and no TEM | 30 (7.4) |
| TEM and no primary care | 187 (46.2) |
| Neither TEM nor primary care | 161 (39.8) |

TEM = technology, engineering, and math.

Note: To be included in a row count, a study must have had at least 1 author with an appointment in the category or categories indicated.
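The marginal totals reported in the text (57 studies, 14.1%, with a primary care author; 214 studies, 52.8%, with a TEM author) follow directly from Table 1, because its 4 rows are mutually exclusive. The check below uses only the counts in the table; the variable names are illustrative.

```python
N = 405  # included studies

# Table 1 rows: (has primary care author, has TEM author) -> number of studies
rows = {(True, True): 27, (True, False): 30, (False, True): 187, (False, False): 161}


def pct(k: int) -> float:
    """Percentage of all included studies, to 1 decimal place as in Table 1."""
    return round(100 * k / N, 1)


assert sum(rows.values()) == N  # the 4 rows partition all included studies

any_primary_care = sum(k for (pc, _tem), k in rows.items() if pc)
any_tem = sum(k for (_pc, tem), k in rows.items() if tem)

print([pct(k) for k in rows.values()])          # [6.7, 7.4, 46.2, 39.8]
print(any_primary_care, pct(any_primary_care))  # 57 14.1
print(any_tem, pct(any_tem))                    # 214 52.8
```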

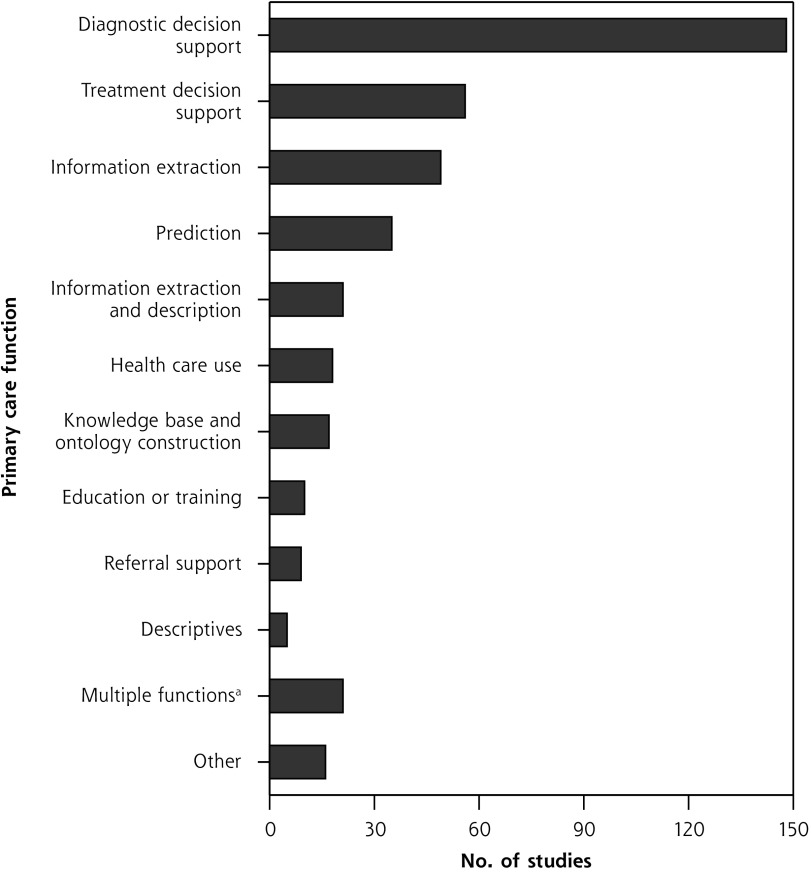

Primary Care Function

Diagnostic decision support was the most common primary care function addressed in studies (148 studies, 36.5%), followed by treatment decision support (56 studies, 13.8%), and then using AI for extracting information from data sources such as EHRs (49 studies, 12.1%). The most frequent combination of functions was information extraction and description (21 studies, 5.2%). Figure 3 summarizes primary care function counts; Figure 2S (Supplemental Appendix 3) presents more detail.

Figure 3.

Primary care functions to be supported with artificial intelligence.

ᵃCombinations of functions evaluated by fewer than 5 studies (combinations evaluated by 5 or more studies are shown individually above).

Reported Target End User

The majority of studies reported physicians as a target end user, either alone or in combination with other target end users (243 studies, 60%). There appeared to be no positive association between having physicians as a target end user and having at least 1 author with a medical appointment: the percentage of studies with at least 1 author with any kind of medical appointment was similar between studies with physician and exclusively nonphysician target end users (51.9% and 46.3%, respectively). Twenty-six studies (6.4%) stated that their research was intended for patients, 25 (6.2%) for administrative use, and 9 (2.2%) for nurses or nurse practitioners, either alone or in combination with other end users. Figure 3S-A (Supplemental Appendix 3) shows the number of studies that included each of the target end user categories; Figure 3S-B (Supplemental Appendix 3) presents all combinations on a per-study basis.

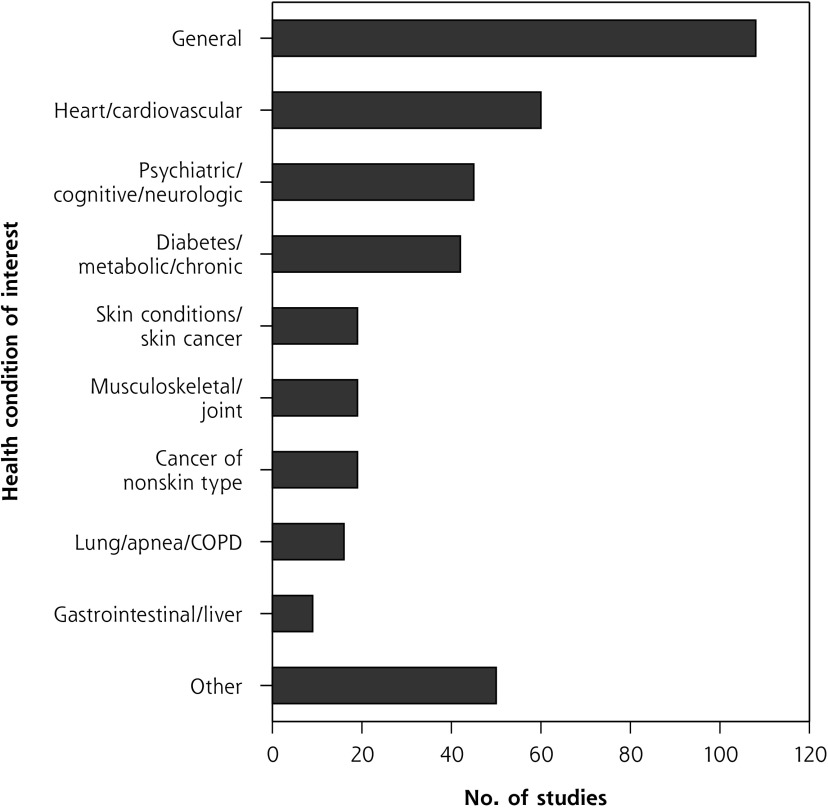

Health Condition

About one-quarter of studies (108 studies, 26.7%) focused on developing, using, or analyzing AI so that it would be relevant for most health conditions seen in primary care settings. Of studies that targeted a particular condition, chronic physical conditions were more frequent than acute or psychiatric conditions. We condensed target health conditions into 10 categories, with study distribution shown in Figure 4; Figure 4S (Supplemental Appendix 3) expands them into 27 categories.

Figure 4.

Health conditions studied.

COPD = chronic obstructive pulmonary disease.

Note: Includes only the 387 studies for which target condition(s) could be identified.

Geographic Location

Most data sources used in studies, and most intended locations of AI implementation, were in higher-income countries belonging to the Organisation for Economic Co-operation and Development. Low- and middle-income countries were poorly represented. Most studies used data from a single country, with the United States being the most common source (79 studies, 19.5%). Figure 5S (Supplemental Appendix 3) summarizes location counts and per capita rates; Table 3S (Supplemental Appendix 3) contains a more detailed breakdown.

AI Subfield

Most studies (363 studies, 89.6%) used methods within a single subfield of AI, and of these, supervised machine learning was the most common (162 studies, 40.0%), followed by expert systems (90 studies, 22.2%), and then natural language processing (35 studies, 8.6%). There were no articles on robotics. Expert systems had the earliest median year of publication (2007); data mining had the most recent (2015). Figure 6S-A (Supplemental Appendix 3) presents frequencies and median year of publication for 10 subfields of AI used by studies captured in our literature review; all AI subfield combinations are presented in Figure 6S-B (Supplemental Appendix 3).

DISCUSSION

Key Findings

We identified and summarized 405 research studies involving AI and primary care, and discerned 3 predominant trends. First, regarding authorship, the vast majority of studies had no author with a primary care appointment. Second, in terms of methods, there was a shift over time from expert systems to supervised machine learning. And third, regarding applications, studies most often developed AI to support diagnostic or treatment decisions, for chronic conditions, in higher-income countries. Overall, these findings show that AI for primary care is at an early stage of maturity for practice applications,61,62 meaning more research is needed to assess its real-world impacts on primary care. The dominance of TEM-appointed authors and AI methods development research is congruent with the early stage of this field. An AI-driven technology needs to work well before real-world testing and implementation, and good performance is achieved through methods development research. This emphasis is further reflected by most studies specifying researchers as an intended end user alongside clinicians; more work is required before such AI can be implemented in a practice setting. On the other hand, research that uses AI to analyze health data is distinct and at a later stage of maturity. These AI applications are not intended for everyday clinical practice, so although their methodologic performance is important, longer-term health or workflow outcomes may not need to be assessed before real-world use.

The dominant subfields of AI identified by our review mirror trends in AI advances and align with other characteristics of the included studies. Expert systems comprise a substantial portion of the literature but are now less common (median publication year 2007 vs 2014 for supervised machine learning), reflecting a general shift in AI research from expert systems and rule-centric AI methods to machine learning and data-centric AI methods.63 The latter are amenable to providing diagnostic and treatment recommendations as well as predicting future health, which supports primary care activities such as primary prevention and screening. This trend also aligns with the focus on physicians as eventual target end users.

Underlying drivers of AI research, and by extension its maturation, are data availability and quality, particularly after the shift toward data-driven machine learning methods. The United States is the single dominant country in the field, which is unsurprising given its population, wealth, and research resources and output.64-67 The high standing of the United Kingdom and Netherlands despite their smaller populations may be attributable to primary care data availability,68,69 facilitated by high EHR adoption rates,70 and to strong academic and industrial information technology sectors.71,72 Investments in data generation, quality, and access will increase future possibilities for AI to be used to strengthen primary care in the corresponding region.

Strengths and Limitations

Strengths of our review include a comprehensive search strategy, without date restriction, with use of inclusive eligibility criteria and conducted by an interdisciplinary team. Limitations include multiple reviewers extracting data without double coding, English language restriction, and the lack of single widely accepted definitions for primary care or AI to guide screening. Proprietary research would not be captured by our review, nor would research completed after our search date.

Future Research

Our next steps include further assessing the quality of the included studies and summarizing exemplary research projects. We additionally recommend a review on AI for the broader primary health care system that includes clinicians beyond physicians and nurses (eg, social workers, physiotherapists).

For the field to mature, future research studies should have interdisciplinary teams with primary care end-user engagement. Value must be placed both on developing rigorous methods and on identifying potential impacts of the developed AI on care delivery and longer-term health outcomes. Inclusion of nurses, patients, and administrators needs to increase; identifying relevant nonphysician end-user activities that could be augmented by AI is an outstanding research endeavor in its own right.

We expect future AI methods development to shift toward a middle ground between rule-centric and data-centric methods because interpretable models better support decisions and trust in the health care setting. For example, explainable AI is a paradigm whereby one can understand what a model is doing or why it arrives at a particular output.73-75 Interpretability of models is additionally important from an equity lens to be able to identify and then avoid AI reproduction of biases in data, which is a present concern with data-driven methods.76 It is also important to remember that AI is not always a superior solution: a recent review found no benefit overall of machine learning compared with logistic regression analysis for clinical prediction rules.77
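As a minimal sketch of the kind of transparency meant here (and not one of the explainable AI methods cited), a logistic model’s output can be decomposed into additive per-feature contributions on the log-odds scale, so a clinician can see which inputs drove a prediction. The features, weights, and intercept below are hypothetical and not derived from any included study.

```python
import math
from typing import Dict, Tuple

# Hypothetical model: weights and intercept are illustrative, not fitted to real data
WEIGHTS: Dict[str, float] = {"age_over_65": 0.8, "smoker": 1.1, "diabetes": 0.6}
INTERCEPT = -3.0


def predict_with_explanation(features: Dict[str, float]) -> Tuple[float, Dict[str, float]]:
    """Return predicted probability and each feature's contribution to the log-odds."""
    # Missing features are treated as 0 (absent)
    contributions = {name: w * features.get(name, 0.0) for name, w in WEIGHTS.items()}
    log_odds = INTERCEPT + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-log_odds))  # logistic (sigmoid) link
    return probability, contributions


prob, why = predict_with_explanation({"age_over_65": 1, "smoker": 1})
top_driver = max(why, key=why.get)  # feature that raised the log-odds most
print(f"risk={prob:.2f}, largest contributor={top_driver}")  # risk=0.25, largest contributor=smoker
```

This breakdown is exact only because the model is linear in its inputs; post hoc explanation techniques for more complex models aim to approximate a comparable per-feature view.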

Conclusions

Ours is the first comprehensive, interdisciplinary summary of research on AI and primary care. Two fundamental aims in the body of research emerged: providing support for clinician decisions and extracting meaningful information from primary care data. Overall, AI for primary care is an innovation that is in early stages of maturity, with few tools ready for widespread implementation. Interdisciplinary research teams including frontline clinicians and evaluation studies in primary care settings will be crucial for advancement and success of this field.

Footnotes

Conflict of interest: authors report none.

To read or post commentaries in response to this article, see it online at http://www.AnnFamMed.org/content/18/3/250.

Funding support: The Canadian Institutes of Health Research and Innovations Strengthening Primary Health Care through Research supported this research through funding for J. Kueper’s doctoral studies (CGS-D and TUTOR-PHC Fellowship).

Disclaimer: The views expressed are solely those of the authors and do not necessarily represent official views of the authors’ affiliated institutions or funders.

Prior presentations: North American Primary Care Research Group (NAPCRG), November 19, 2019, Toronto, Ontario, oral presentation; Artificial INTELLIGENce for efficient community based primary health CARE (INTELLIGENT-CARE) Workshop, September 20, 2019, Quebec City, oral workshop; Trillium Primary Health Care Research Day, June 5, 2019, Toronto, Ontario, poster; Canadian Student Health Research Forum, June 12-14, 2018, Winnipeg, Manitoba, poster; Trillium Primary Health Care Research Day, June 6, 2018, Toronto, Ontario, poster; Fallona Family Research Showcase, April 12, 2018, London, Ontario, poster.

Supplemental materials: Available at http://www.AnnFamMed.org/content/18/3/250/suppl/DC1/.

References

- 1. Jiang F, Jiang Y, Zhi H, et al. Artificial intelligence in healthcare: past, present and future. Stroke Vasc Neurol. 2017; 2(4): 230-243.

- 2. Beam AL, Kohane IS. Big data and machine learning in health care. JAMA. 2018; 319(13): 1317.

- 3. Gray R. The A-Z of how artificial intelligence is changing the world. http://www.bbc.com/future/gallery/20181115-a-guide-to-how-artificial-intelligence-is-changing-the-world. Published Nov 2018. Accessed Mar 18, 2019.

- 4. Davenport TH, Ronanki R. Artificial Intelligence for the Real World. Harvard Business Review; 2018. https://hbr.org/2018/01/artificial-intelligence-for-the-real-world. Accessed Mar 18, 2019.

- 5. Rajkomar A, Dean J, Kohane I. Machine learning in medicine. N Engl J Med. 2019; 380(14): 1347-1358.

- 6. Topol E. The Topol Review. Preparing the Healthcare Workforce to Deliver the Digital Future. London, UK: NHS Health Education England; 2019.

- 7. U.S. Agency for International Development. Artificial Intelligence in Global Health: Defining a Collective Path Moving Forward. USAID; The Rockefeller Foundation; Bill & Melinda Gates Foundation; 2019. https://www.usaid.gov/cii/ai-in-global-health. Accessed Jun 17, 2019.

- 8. Hitching R. Primary Care 2.0. https://becominghuman.ai/the-changing-face-of-healthcare-artificial-intelligence-primary-care-an-empowered-patients-8ba638ffacd6. Published Feb 2019. Accessed Jul 8, 2019.

- 9. Pratt MK. Artificial intelligence in primary care. Medical Economics. https://www.medicaleconomics.com/business/artificial-intelligence-primary-care. Published 2018. Accessed Jul 8, 2019.

- 10. Moore SF, Hamilton W, Llewellyn DJ. Harnessing the power of intelligent machines to enhance primary care. Br J Gen Pract. 2018; 68(666): 6-7.

- 11. Starfield B. Primary care and its relationship to health. In: Primary Care. Balancing Health Needs, Services, and Technology. New York, NY: Oxford University Press, Inc; 1998.

- 12. Huang M, Gibson C, Terry A. Measuring electronic health record use in primary care: a scoping review. Appl Clin Inform. 2018; 9(1): 15-33.

- 13. Terry AL, Stewart M. How does Canada stack up? A bibliometric analysis of the primary healthcare electronic medical record literature. Inform Prim Care. 2012; 20: 233-240.

- 14. Kringos DS, Boerma WG, Hutchinson A, van der Zee J, Groenewegen PP. The breadth of primary care: a systematic literature review of its core dimensions. BMC Health Serv Res. 2010; 10: 65.

- 15. Friedman C, Elhadad N. Natural language processing in health care and biomedicine. In: Shortliffe EH, Cimino JJ, eds. Biomedical Informatics. London, England: Springer London; 2014: 255-284.

- 16. Yu K-H, Beam AL, Kohane IS. Artificial intelligence in healthcare. Nat Biomed Eng. 2018; 2(10): 719-731.

- 17. Hinton G. Deep learning – A technology with the potential to transform health care. JAMA. 2018; 320(11): 1101-1102.

- 18. Lin SY, Mahoney MR, Sinsky CA. Ten ways artificial intelligence will transform primary care. J Gen Intern Med. 2019; 34(8): 1626-1630.

- 19. Talukder AK. Big data analytics advances in health intelligence, public health, and evidence-based precision medicine. In: Reddy PK, Sureka A, Chakravarthy S, Bhalla S, eds. Big Data Analytics. Vol 10721. Cham, Switzerland: Springer International Publishing; 2017: 243-253.

- 20. Wiens J, Shenoy ES. Machine learning for healthcare: on the verge of a major shift in healthcare epidemiology. Clin Infect Dis. 2018; 66(1): 149-153.

- 21. Greenhalgh T, Potts HWW, Wong G, Bark P, Swinglehurst D. Tensions and paradoxes in electronic patient record research: a systematic literature review using the meta-narrative method: electronic patient record research. Milbank Q. 2009; 87(4): 729-788.

- 22. Greenhalgh T, Hinder S, Stramer K, Bratan T, Russell J. Adoption, non-adoption, and abandonment of a personal electronic health record: case study of HealthSpace. BMJ. 2010; 341: c5814.

- 23. Terry AL, Ryan BL, McKay S, et al. Towards optimal electronic medical record use: perspectives of advanced users. Fam Pract. 2018; 35(5): 607-611.

- 24. Russell S, Norvig P. Chapter 1: Introduction. In: Artificial Intelligence: A Modern Approach. 3rd ed. Cedar Rapids, IA: Pearson Education Inc; 2010: 1-33.

- 25. Turing A. Computing machinery and intelligence. Mind. 1950; 59(236): 433-460.

- 26.Shortliffe EH, Axline SG, Buchanan BG, Green CC, Cohen SN. Computer-based consultations in clinical therapeutics: explanation and rule acquisition capabilities of the MYCIN System. Comput Biomed Res. 1975; 8: 303-320. [DOI] [PubMed] [Google Scholar]

- 27.Alanazi HO, Abdullah AH, Qureshi KN. A critical review for developing accurate and dynamic predictive models using machine learning methods in medicine and health care. J Med Syst. 2017; 41(4): 69. [DOI] [PubMed] [Google Scholar]

- 28.Miotto R, Wang F, Wang S, Jiang X, Dudley JT. Deep learning for healthcare: review, opportunities and challenges. Brief Bioinform. 2018; 19(6): 1236-1246. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Miotto R, Li L, Kidd BA, Dudley JT. Deep patient: an unsupervised representation to predict the future of patients from the electronic health records. Sci Rep. 2016; 6: 26904. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Esteva A, Kuprel B, Novoa RA, et al. Dermatologist-level classification of skin cancer with deep neural networks. Nature. 2017; 542(7639): 115-118. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.Stanganelli I, Brucale A, Calori L, et al. Computer-aided diagnosis of melanocytic lesions. Anticancer Res. 2005; 25(6C): 4577-4582. [PubMed] [Google Scholar]

- 32.Collier R. Electronic health records contributing to physician burnout. CMAJ. 2017; 189(45): E1405-E1406. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Arndt BG, Beasley JW, Watkinson MD, et al. Tethered to the EHR: primary care physician workload assessment using EHR event log data and time-motion observations. Ann Fam Med. 2017; 15(5): 419-426. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Tricco AC, Lillie E, Zarin W, et al. A scoping review on the conduct and reporting of scoping reviews. BMC Med Res Methodol. 2016; 16: 15. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Arksey H, O’Malley L. Scoping studies: towards a methodological framework. Int J Soc Res Methodol. 2005; 8(1): 19-32. [Google Scholar]

- 36.Levac D, Colquhoun H, O’Brien KK. Scoping studies: advancing the methodology. Implement Sci. 2010; 5: 69. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Tricco AC, Lillie E, Zarin W, et al. PRISMA extension for scoping reviews (PRISMA-ScR): checklist and explanation. Ann Intern Med. 2018; 169(7): 467-473. [DOI] [PubMed] [Google Scholar]

- 38.Covidence Systematic Review Software. Melbourne, Australia: Veritas Health Innovation; http://www.covidence.org Accessed Jul 8, 2019. [Google Scholar]

- 39. Craske MG, Rose RD, Lang A, et al. Computer-assisted delivery of cognitive behavioral therapy for anxiety disorders in primary-care settings. Depress Anxiety. 2009; 26(3): 235-242.

- 40. Cucciare MA, Curran GM, Craske MG, et al. Assessing fidelity of cognitive behavioral therapy in rural VA clinics: design of a randomized implementation effectiveness (hybrid type III) trial. Implement Sci. 2016; 11: 65.

- 41. de Graaf LE, Gerhards SAH, Arntz A, et al. Clinical effectiveness of online computerised cognitive–behavioural therapy without support for depression in primary care: randomised trial. Br J Psychiatry. 2009; 195(1): 73-80.

- 42. de Graaf LE, Hollon SD, Huibers MJH. Predicting outcome in computerized cognitive behavioral therapy for depression in primary care: A randomized trial. J Consult Clin Psychol. 2010; 78(2): 184-189.

- 43. de Graaf LE, Gerhards SAH, Arntz A, et al. One-year follow-up results of unsupported online computerized cognitive behavioural therapy for depression in primary care: A randomized trial. J Behav Ther Exp Psychiatry. 2011; 42(1): 89-95.

- 44. Chang EK, Yu CY, Clarke R, et al. Defining a patient population with cirrhosis: an automated algorithm with natural language processing. J Clin Gastroenterol. 2016; 50(10): 889-894.

- 45. Widmer G, Horn W, Nagele B. Automatic knowledge base refinement: Learning from examples and deep knowledge in rheumatology. Artif Intell Med. 1993; 5(3): 225-243.

- 46. Michalowski M, Michalowski W, Wilk S, O’Sullivan D, Carrier M. AFGuide system to support personalized management of atrial fibrillation. In: Joint Workshop on Health Intelligence. https://aaai.org/ocs/index.php/WS/AAAIW17/paper/view/15215. Published Mar 21, 2017. Accessed Jul 8, 2019.

- 47. Glasspool DW, Fox J, Coulson AS, Emery J. Risk assessment in genetics: a semi-quantitative approach. Stud Health Technol Inform. 2001; 84(Pt 1): 459-463.

- 48. Flores CD, Fonseca JM, Bez MR, Respício A, Coelho H. Method for building a medical training simulator with Bayesian networks: SimDeCS. In: 2nd KES International Conference on Innovation in Medicine and Healthcare. Vol 207. San Sebastian, Spain: Studies in Health Technology and Informatics; 2014: 102-114.

- 49. Astilean A, Avram C, Folea S, Silvasan I, Petreus D. Fuzzy Petri nets based decision support system for ambulatory treatment of non-severe acute diseases. In: 2010 IEEE International Conference on Automation, Quality and Testing, Robotics (AQTR). Cluj-Napoca, Romania: IEEE; 2010: 1-6.

- 50. Dominguez-Morales JP, Jimenez-Fernandez AF, Dominguez-Morales MJ, Jimenez-Moreno G. Deep neural networks for the recognition and classification of heart murmurs using neuromorphic auditory sensors. IEEE Trans Biomed Circuits Syst. 2018; 12(1): 24-34.

- 51. Zamora M, Baradad M, Amado E, et al. Characterizing chronic disease and polymedication prescription patterns from electronic health records. In: 2015 IEEE International Conference on Data Science and Advanced Analytics (DSAA). Campus des Cordeliers, Paris, France: IEEE; 2015: 1-9.

- 52. Orient JM. Evaluation of abdominal pain: clinicians’ performance compared with three protocols. South Med J. 1986; 79(7): 793-799.

- 53. Sumner W II, Truszczynski M, Marek VW. Simulating patients with parallel health state networks. Proc AMIA Symp. 1998: 438-442.

- 54. Sumner W II, Xu JZ, Roussel G, Hagen MD. Modeling relief. AMIA Annu Symp Proc. 2007: 706-710.

- 55. Sumner W II, Xu JZ. Modeling fatigue. Proc AMIA Symp. 2002: 747-751.

- 56. Sumner W, Hagen MD, Rovinelli R. The item generation methodology of an empiric simulation project. Adv Health Sci Educ Theory Pract. 1999; 4(1): 49-66.

- 57. Zhuang ZY, Churilov L, Sikaris K. Uncovering the patterns in pathology ordering by Australian general practitioners: a data mining perspective. In: Proceedings of the 39th Annual Hawaii International Conference on System Sciences (HICSS ’06). Kauai, HI: IEEE; 2006: 5(92c): 1-10.

- 58. Zhuang ZY, Amarasiri R, Churilov L, Alahakoon D, Sikaris K. Exploring the clinical notes of pathology ordering by Australian general practitioners: a text mining perspective. In: Proceedings of the 40th Annual Hawaii International Conference on System Sciences (HICSS ’07). Waikoloa, HI: IEEE; 2007: 1(136a): 1-10.

- 59. Zhuang ZY, Churilov L, Burstein F, Sikaris K. Combining data mining and case-based reasoning for intelligent decision support for pathology ordering by general practitioners. Eur J Oper Res. 2009; 195(3): 662-675.

- 60. Zhuang ZY, Wilkin CL, Ceglowski A. A framework for an intelligent decision support system: A case in pathology test ordering. Decis Support Syst. 2013; 55(2): 476-487.

- 61. Voracek D. NASA innovation framework and center innovation fund. Presented at: Edwards Technical Symposium; September 10, 2018; Edwards, California.

- 62. Gupta A, Thorpe C, Bhattacharyya O, Zwarenstein M. Promoting development and uptake of health innovations: the nose to tail tool. F1000Res. 2016; 5: 361.

- 63. Hao K. We analyzed 16,625 papers to figure out where AI is headed next. MIT Technology Review; 2019. https://www.technologyreview.com/s/612768/we-analyzed-16625-papers-to-figure-out-where-ai-is-headed-next/. Accessed Mar 15, 2019.

- 64. SJR: SCImago Journal & Country Rank. https://www.scimagojr.com/countryrank.php. Accessed May 31, 2019.

- 65. Conte ML, Liu J, Schnell S, Omary MB. Globalization and changing trends of biomedical research output. JCI Insight. 2017; 2(12): e95206.

- 66. Hajjar F, Saint-Lary O, Cadwallader J-S, et al. Development of primary care research in North America, Europe, and Australia from 1974 to 2017. Ann Fam Med. 2019; 17(1): 49-51.

- 67. 41Studio. 15 countries with highest technology in the world. https://www.41studio.com/blog/2018/15-countries-with-higest-technology-in-the-world/. Published 2019. Accessed May 31, 2019.

- 68. Sturgiss E, van Boven K. Datasets collected in general practice: an international comparison using the example of obesity. Aust Health Rev. 2018; 42(5): 563-567.

- 69. Smeets HM, Kortekaas MF, Rutten FH, et al. Routine primary care data for scientific research, quality of care programs and educational purposes: the Julius General Practitioners’ Network (JGPN). BMC Health Serv Res. 2018; 18: 875.

- 70. WHO Global Observatory for eHealth, World Health Organization, eds. Atlas of eHealth Country Profiles: The Use of eHealth in Support of Universal Health Coverage: Based on the Findings of the Third Global Survey on eHealth, 2015. Geneva, Switzerland: World Health Organization; 2016.

- 71. Boyrikova A. Why the Netherlands is the new Silicon Valley. Innovation Origins. https://innovationorigins.com/why-netherlands-is-the-new-silicon-valley/. Published 2017. Accessed Jun 14, 2019.

- 72. Europe’s hub for R & D innovation. Invest in Holland. https://investinholland.com/business-operations/research-and-development/. Published 2019. Accessed Jun 14, 2019.

- 73. Doshi-Velez F, Kim B. Towards a rigorous science of interpretable machine learning. arXiv:1702.08608 [cs, stat]. http://arxiv.org/abs/1702.08608. Published Feb 2017. Accessed May 31, 2019.

- 74. Holzinger A, Biemann C, Pattichis CS, Kell DB. What do we need to build explainable AI systems for the medical domain? arXiv:1712.09923 [cs, stat]. http://arxiv.org/abs/1712.09923. Published Dec 2017. Accessed May 31, 2019.

- 75. Miller T. Explanation in artificial intelligence: insights from the social sciences. arXiv:1706.07269 [cs]. http://arxiv.org/abs/1706.07269. Published Jun 2017. Accessed May 31, 2019.

- 76. Verghese A, Shah NH, Harrington RA. What this computer needs is a physician: humanism and artificial intelligence. JAMA. 2018; 319(1): 19-20.

- 77. Christodoulou E, Ma J, Collins GS, Steyerberg EW, Verbakel JY, Van Calster B. A systematic review shows no performance benefit of machine learning over logistic regression for clinical prediction models. J Clin Epidemiol. 2019; 110: 12-22.