Abstract

We study a stochastic model of infection spreading on a network. At each time step a node is chosen at random, along with one of its neighbors. If the node is infected and the neighbor is susceptible, the neighbor becomes infected. How many time steps does it take to completely infect a network of $N$ nodes, starting from a single infected node? An analogy to the classic “coupon collector” problem of probability theory reveals that the takeover time is dominated by extremal behavior, either when there are only a few infected nodes near the start of the process or a few susceptible nodes near the end. We show that for $N \gg 1$, the takeover time $T_N$ is distributed as a Gumbel distribution for the star graph, as the convolution of two Gumbel distributions for a complete graph and an Erdős-Rényi random graph, as a normal distribution for a one-dimensional ring and a two-dimensional lattice, and as a family of intermediate skewed distributions for $d$-dimensional lattices with $d \ge 3$ (these distributions approach the convolution of two Gumbel distributions as $d$ approaches infinity). Connections to evolutionary dynamics, cancer, incubation periods of infectious diseases, first-passage percolation, and other spreading phenomena in biology and physics are discussed.

I. INTRODUCTION

Contagion is a topic of broad interdisciplinary interest. Originally studied in the context of infectious diseases [1–4], contagion has now been used as a metaphor for diverse processes that spread by contact between neighbors. Examples include the spread of fads and fashions [5,6], scientific ideas [7], bank failures [8–12], computer viruses [13], gossip [14], rumors [15,16], and yawning [17]. Closely related phenomena arise in probability theory and statistical physics in the setting of first-passage percolation [18,19], and in evolutionary dynamics in connection with the spread of mutations through a resident population [20–24]. We use the language of contagion throughout, but bear in mind that everything could be reformulated in the language of the other fields mentioned above.

In the simplest mathematical model of contagion, the members of the population can be in one of two states: susceptible or permanently infected. When a susceptible individual meets an infected one, the susceptible immediately becomes infected. Even in this idealized setting, interesting theoretical questions remain, whose answers could have significant real-world implications, as we argue below.

For example, consider the following model, motivated by cancer biology. Imagine a two-dimensional lattice of cells in a tissue, where each cell is either normal or mutated. At each time step a random cell is chosen, along with one of its neighbors, also chosen uniformly at random. If the first cell is mutated and its neighbor is normal, the mutated cell (which is assumed to reproduce much faster than its normal neighbor) makes a copy of itself that replaces the normal cell. In effect, the mutation has spread; it behaves as if it were an infection. This deliberately simplified model was introduced in 1972 to shed light on the growth and geometry of cancerous tumors [25].

Here, we study this model on a variety of networks. Our question is, given a single infected node in a network of size $N$, how long does it take for the entire network to become infected? We call this the takeover time $T_N$. It is conceptually related to the fixation time in population genetics, defined as the time for a fitter mutant to sweep through a resident population. It is also reminiscent of the incubation period of an infectious disease, defined as the time lag between exposure to the pathogen and the appearance of symptoms; this lag presumably reflects the time needed for infection to sweep through a large fraction of the resident healthy cells.

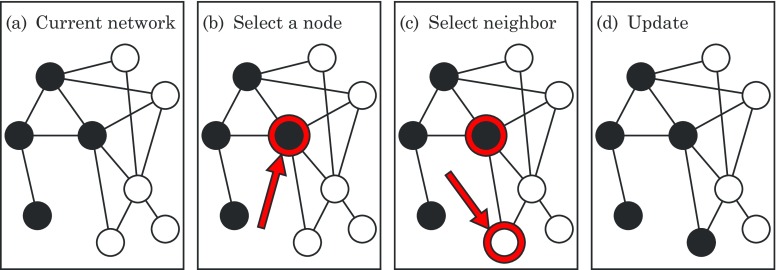

For the model studied here, the calculation of the network takeover time is inherently statistical because the dynamics are random. At each time step, we choose a random node in the network, along with one of its neighbors, also at random. If neither of the nodes is infected, nothing happens and the time step is wasted. Likewise, if both are infected, the state of the network again does not change and the time step is wasted. Only if the first node is infected and its neighbor is susceptible does the infection progress, as shown in Fig. 1.

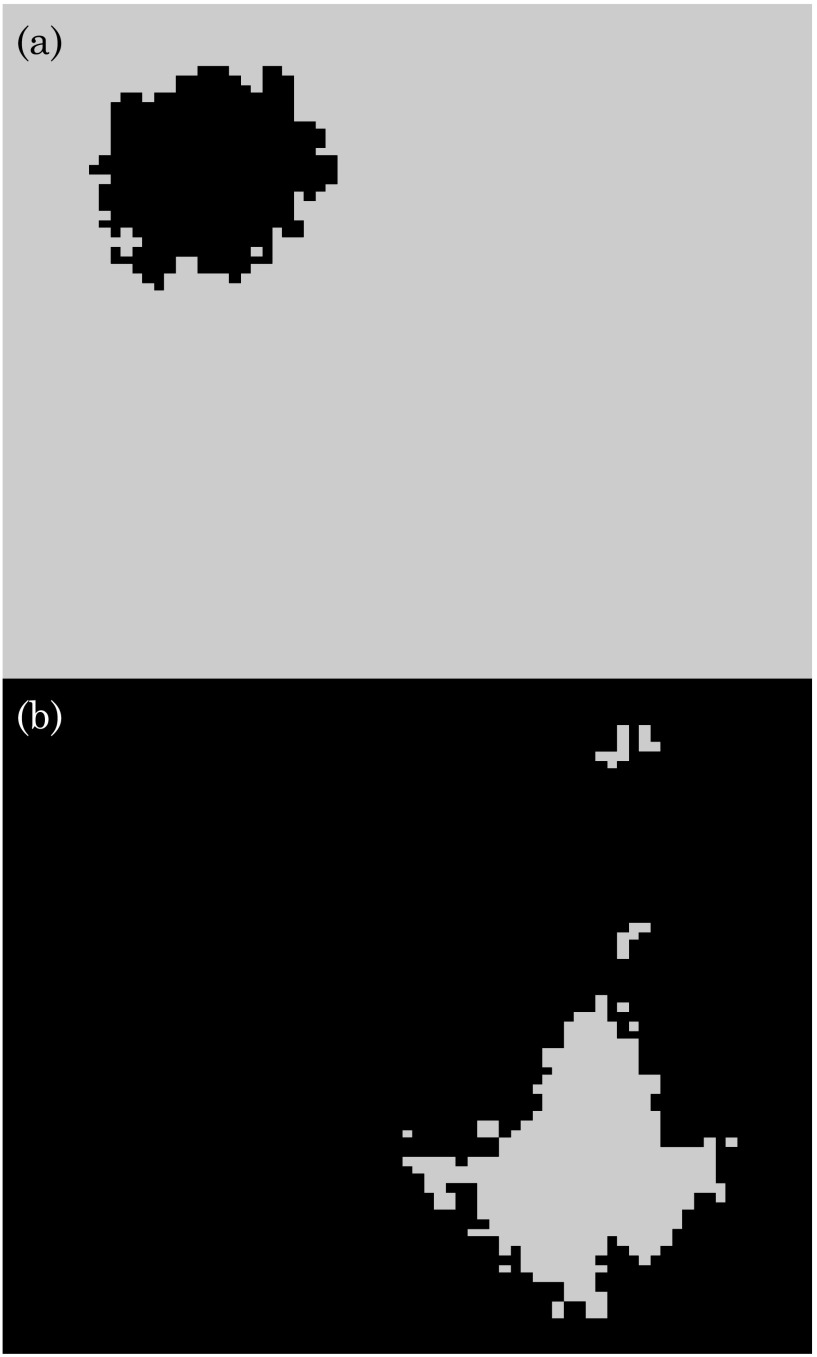

FIG. 1.

Simple model of infection spreading on a network. (a) A typical current state of the network. Solid dots represent infected nodes, and open dots represent susceptible nodes. Links represent potential interactions. (b) At each time step, a random node is selected. (c) One of the node's neighbors is also selected at random. The infection spreads only if the node chosen in (b) happens to be infected and its neighbor chosen in (c) happens to be susceptible, as is the case here; then the state of the network is updated accordingly as in (d). Otherwise, if the node is not infected or the neighbor is not susceptible, nothing happens and the state of the network remains unchanged. In that case the time step is wasted.
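To make the update rule concrete, here is a minimal Python sketch of the process in Fig. 1, assuming the network is stored as adjacency lists (entry `i` lists the neighbors of node `i`) and is connected. The helper names `takeover_time` and `ring` are hypothetical; this is an illustration, not the code used for the simulations reported here.

```python
import random


def takeover_time(adj, seed_node, rng):
    """Simulate the update rule of Fig. 1 until every node is infected.

    adj[i] lists the neighbors of node i; the graph is assumed connected.
    Returns the number of time steps, wasted steps included.
    """
    n = len(adj)
    infected = [False] * n
    infected[seed_node] = True
    num_infected, steps = 1, 0
    while num_infected < n:
        steps += 1
        i = rng.randrange(n)              # (b) pick a node uniformly at random
        j = rng.choice(adj[i])            # (c) pick one of its neighbors uniformly
        if infected[i] and not infected[j]:
            infected[j] = True            # (d) the infection spreads
            num_infected += 1
        # any other combination leaves the state unchanged: a wasted step
    return steps


def ring(n):
    """Adjacency lists of a 1D lattice with periodic boundary conditions."""
    return [[(i - 1) % n, (i + 1) % n] for i in range(n)]


rng = random.Random(0)
runs = [takeover_time(ring(10), 0, rng) for _ in range(2000)]
print(sum(runs) / len(runs))   # sample mean of T_N for a ring with N = 10
```

Every pass through the loop counts as one time step, including the wasted ones, so the returned value is a sample of the takeover time $T_N$.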

The time course of the infection is interesting to contemplate. Intuitively, when the network is large, it seems that the dynamics should be very stochastic at first and take a long time to get rolling, because it is exceedingly unlikely that we will randomly pick the one infected node, given that there are so many other nodes to choose from. Similarly we expect a dramatic slowing down and enhancement of fluctuations in the endgame. When a big network is almost fully infected, it becomes increasingly difficult to find the last few susceptible individuals to infect.

These intuitions led us to suspect that the problem of calculating the distribution of takeover times might be amenable to the techniques used to study the classic “coupon collector” problem in probability theory [26,27]. If you want to collect $N$ distinct coupons, and at each time step you are given one coupon at random (with replacement), what is the distribution of the time required to collect all the coupons? Like the endgame of the infection process, the coupon collection process slows down and suffers large fluctuations when almost all the coupons are in hand and one is waiting in exasperation for that last coupon. Erdős and Rényi proved that for large $N$, the distribution of waiting times for the coupon collection problem approaches a Gumbel distribution [28]. This type of distribution is right skewed and is one of the three universal extreme value distributions [29,30].

In what follows, we show that for $N \gg 1$, the takeover time $T_N$ is distributed as a Gumbel distribution for the star graph, and as the convolution of two Gumbel distributions for a complete graph and an Erdős-Rényi random graph. For $d$-dimensional cubic lattices, the dependence on $d$ is intriguing: we find that $T_N$ is normally distributed for $d = 1$ and $d = 2$, then becomes skewed for $d \ge 3$ and approaches the convolution of two Gumbel distributions as $d$ approaches infinity. We conclude by discussing the many simplifications in our model, with the aim of showing how the model relates to more realistic models. We also discuss the possible relevance of our results to fixation times in evolutionary dynamics, population genetics, and cancer biology, and to the longstanding (yet theoretically unexplained) clinical observation that incubation periods for infectious diseases frequently have right-skewed distributions.

II. ONE-DIMENSIONAL LATTICE

We start with a one-dimensional (1D) lattice. In this paper, we always take lattices to have periodic boundary conditions, so imagine $N$ nodes arranged into a ring.

Suppose that $m$ nodes are currently infected. Let $p_m$ denote the probability that a susceptible node gets infected in the next time step. Notice that for a more complicated graph, $p_m$ might not be a well-defined concept, because it could depend on more than $m$ alone: the probability of infecting a new node could depend on the positions of the currently infected nodes, as well as on the susceptible node being considered. In such cases, we would need to know the entire current state of the network, not just the value of $m$, to calculate the probability that the infection will spread.

The 1D lattice, however, is especially tractable. Assuming that only one node is infected initially, at later times the infected nodes are guaranteed to form a contiguous chain. So for this simple case the state of the graph is indeed determined by $m$ alone. The only places where the infection can spread are the two ends of the infected chain. (Even on more complicated networks, the dynamics of our model imply that the infected nodes always form a single connected set, but few such sets are as simple as this.)

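The spread of infection involves two events. First, the node chosen at random must be an infected node on the boundary of the infected cluster. Then, one of its neighbors that happens to be susceptible must be picked. So

$$p_m = \Pr(\text{first node is an infected boundary node}) \times \Pr(\text{chosen neighbor is susceptible} \mid \text{boundary node}). \tag{1}$$

Hence, for the ring, the probability that the infection spreads on the next time step reduces to $p_m = (2/N)(1/2) = 1/N$ for all $1 \le m \le N-1$. (When $m = 1$, the lone infected node is chosen with probability $1/N$ and both of its neighbors are susceptible, so again $p_1 = 1/N$.)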

Next, define the random variable $t_m$ as the number of time steps during which the network has exactly $m$ infected nodes. The probability that this state lasts for $k$ time steps is then given by

$$\Pr(t_m = k) = (1 - p_m)^{k-1}\, p_m$$

for $k = 1, 2, 3, \dots$, where $1 \le m \le N-1$. To see this, note that $(1 - p_m)^{k-1} p_m$ is the probability that no new infection occurs on the first $k-1$ steps, times the probability that infection does occur on step $k$.

Thus, for any network where $p_m$ is well defined, the time spent with $m$ infected nodes is a geometric random variable, with mean $1/p_m$ and variance $(1 - p_m)/p_m^2$. In particular, since the ring has $p_m = 1/N$ for all $m$, we find that $t_m$ has mean $N$ and variance $N(N-1)$ in this case.
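Both ingredients, Eq. (1) evaluated on a given state and the geometric holding times, can be checked exactly with rational arithmetic. The sketch below assumes Eq. (1) in its averaged form, $p_m = (1/N)\sum_i s_i/k_i$, summed over infected nodes $i$ with $s_i$ susceptible neighbors and degree $k_i$; the function names are hypothetical.

```python
from fractions import Fraction


def spread_probability(adj, infected):
    """Eq. (1) for one network state: chance that the next step infects a new node.

    Sums (1/N) * (susceptible neighbors of i) / deg(i) over infected nodes i.
    """
    n = len(adj)
    total = Fraction(0)
    for i in infected:
        susceptible = sum(1 for j in adj[i] if j not in infected)
        total += Fraction(1, n) * Fraction(susceptible, len(adj[i]))
    return total


def takeover_moments(ps):
    """Exact mean and variance of T_N, a sum of independent geometric t_m."""
    mean = sum(1 / p for p in ps)
    var = sum((1 - p) / p**2 for p in ps)
    return mean, var


n = 10
ring_adj = [[(i - 1) % n, (i + 1) % n] for i in range(n)]
# on the ring, the m infected nodes form a contiguous chain {0, ..., m - 1}
ps = [spread_probability(ring_adj, set(range(m))) for m in range(1, n)]
print(set(ps))               # a single value, 1/N
print(takeover_moments(ps))  # N(N - 1) and N(N - 1)^2 for N = 10
```

The same `spread_probability` helper can be pointed at any small graph and any infected set, which is a convenient way to confirm the per-state probabilities used in the following sections.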

The takeover time for any network is

$$T_N = \sum_{m=1}^{N-1} t_m,$$

the sum of all the individual times required to go from $m$ to $m+1$ infected nodes, for $1 \le m \le N-1$. (Equality, in this case, means equality in distribution, as it does for all the other random variables considered throughout this paper.)

In the case of the 1D lattice, all the $t_m$ are independent and identically distributed. However, their common mean and variance depend on $N$, which prevents us from invoking the usual central limit theorem to deduce the limiting distribution of $T_N$. Instead, we can invoke a generalization of it known as the Lindeberg-Feller theorem; see Appendix A for details.

After normalizing $T_N$ by its mean, $N(N-1)$, and its standard deviation, $(N-1)\sqrt{N}$, we find

$$\frac{T_N - N(N-1)}{(N-1)\sqrt{N}} \Rightarrow \mathcal{N}(0, 1), \tag{2}$$

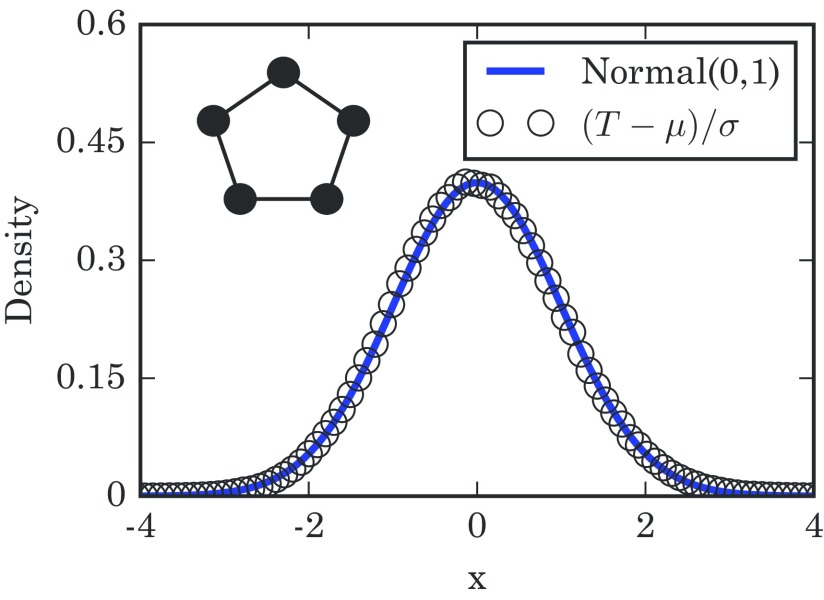

where the symbol $\Rightarrow$ means convergence in distribution as $N$ gets large. Figure 2 confirms that the takeover times are normally distributed in the limit of large rings.
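As a quick numerical check of Eq. (2), one can sample $T_N$ directly as a sum of $N-1$ independent geometric variables with $p_m = 1/N$, skipping the node-level simulation. This is a sketch assuming NumPy; `ring_takeover_samples` is a hypothetical name. The sample skewness should be small and shrink like $2/\sqrt{N-1}$, the skewness of a sum of $N-1$ i.i.d. geometric variables with small $p$.

```python
import numpy as np


def ring_takeover_samples(n, runs, rng):
    """Sample T_N for the ring as a sum of N - 1 i.i.d. geometric t_m, p_m = 1/N."""
    return rng.geometric(1.0 / n, size=(runs, n - 1)).sum(axis=1)


rng = np.random.default_rng(0)
n = 200
t = ring_takeover_samples(n, 10_000, rng)
z = (t - n * (n - 1)) / ((n - 1) * np.sqrt(n))   # normalization of Eq. (2)
skew = np.mean((z - z.mean()) ** 3) / z.std() ** 3
print(z.mean(), z.std(), skew)   # near 0, near 1, and roughly 2/sqrt(N - 1)
```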

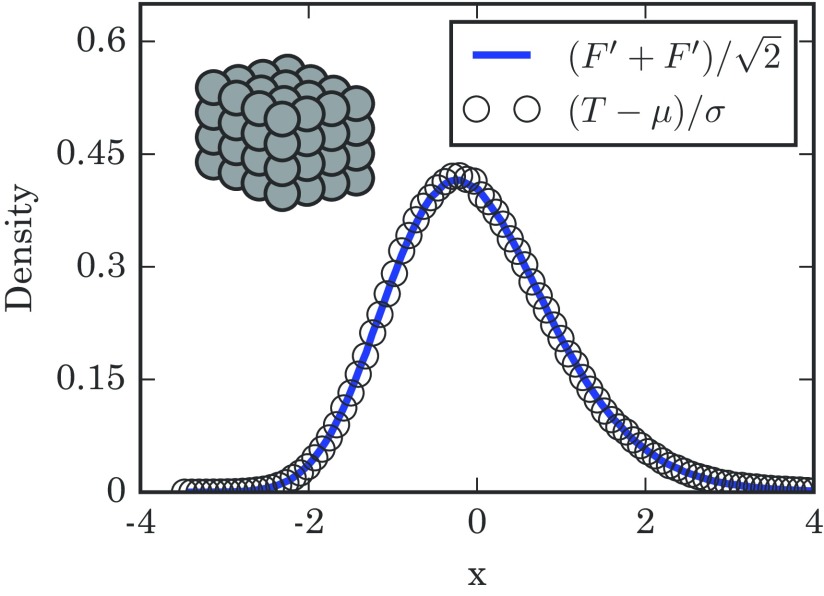

FIG. 2.

Distribution of takeover times for 1D lattices with $N$ nodes, obtained from simulations. The mean takeover time is $N(N-1)$ and its variance is $N(N-1)^2$, both found analytically. The simulation results are well approximated by a normal distribution, as expected. The diagram in the upper left schematically shows a 1D lattice.

III. STAR GRAPH

A star graph is another common example for infection models. Here, $N$ separate “spoke” nodes all connect to a single “hub” node and to no others, as illustrated in the upper left of Fig. 3. We assume the initial infection starts at the hub, since starting it in a spoke node would require only a trivial adjustment to the calculations below.

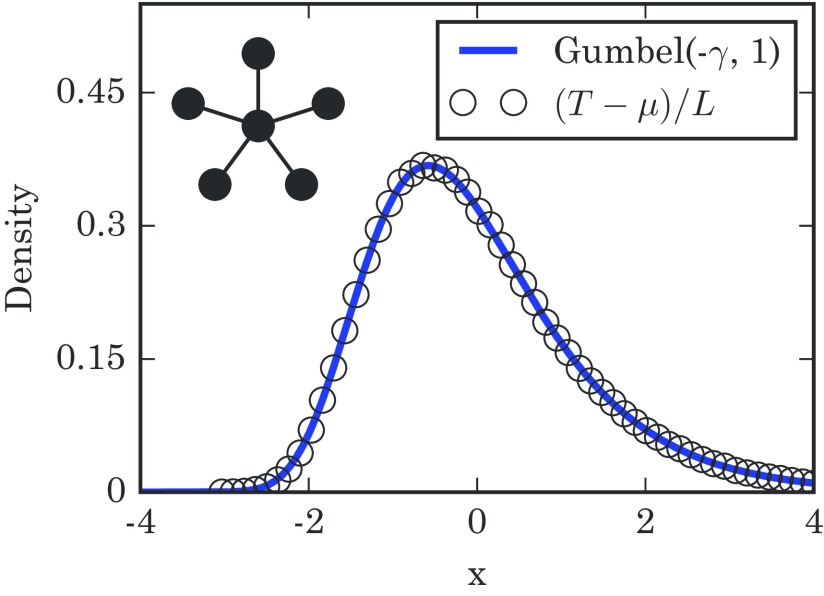

FIG. 3.

Distribution of takeover times for a star graph with $N$ spoke nodes, obtained from simulation runs. The mean and characteristic width are given by $N(N+1)H_N$ and $N(N+1)$, respectively, where $H_N$ is the $N$th harmonic number. The numerically generated histogram of takeover times closely follows the predicted Gumbel distribution, even for the small $N$ used here. The schematic diagram in the upper left shows a star network.

Let $m$ be the number of spoke nodes that are currently infected. As in the ring case, $p_m$ (the probability to go from $m$ to $m+1$ in the next time step) is a well-defined quantity that depends on $m$ alone, and not on any other details of the network state. Using the logic of Eq. (1), we get

$$p_m = \frac{1}{N+1} \cdot \frac{N-m}{N} \tag{3}$$

for $0 \le m \le N-1$. Here, $1/(N+1)$ is the probability of choosing the infected hub as the first node, and $(N-m)/N$ is the probability of selecting one of the $N-m$ currently susceptible spoke nodes, out of the $N$ spoke nodes in total, as its neighbor.

Now that $p_m$ is in hand for the star graph, we can define the random variable $t_m$ and the takeover time $T_N$ just as we did for the one-dimensional ring. The only difference is that the $m$ dependence of $p_m$ is now controlled entirely by the factor $(N-m)/N$.

That same factor turns up in a classic probability puzzle called the coupon collector's problem [26,27]. At the time of this writing, millions of children are experiencing it firsthand as they desperately try to complete their collection of pocket monsters in the Pokémon video game series.

To see the connection, suppose you are trying to collect $N$ distinct items, and you have $m$ of them so far. If you are given one of the items at random (with replacement), the probability it is new to your collection is $(N-m)/N$, the same factor we saw above, and precisely analogous to the probability of adding a new node to the infected set. Likewise, the waiting time to collect all $N$ items is precisely analogous to the time needed to take over the whole star graph. The only difference is the constant factor $1/(N+1)$ in Eq. (3).

The limiting distribution of the waiting time for the coupon collector's problem is well known. Although it resembles a lognormal distribution [31], in fact it is a Gumbel distribution in the limit of large $N$, given the right scaling [27,28,32,33]. We now show that the same is true for our problem.

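The first move is to approximate the geometric random variables $t_m$ by exponential random variables $\tau_m$, with density

$$f_{\tau_m}(t) = p_m e^{-p_m t}, \qquad t \ge 0.$$

From here we define the random variable $S_N = \sum_{m=0}^{N-1} \tau_m$, which has mean $\mathbb{E}[S_N] = \sum_{m=0}^{N-1} 1/p_m = \mathbb{E}[T_N]$.

It can be shown (see Appendix B) for a large class of $p_m$ and normalizing factors $s_N$ that

$$\frac{T_N - \mathbb{E}[T_N]}{s_N} \sim \frac{S_N - \mathbb{E}[S_N]}{s_N}, \tag{4}$$

where the symbol “$\sim$” means the ratio of characteristic functions goes to 1 as $N$ gets large. That is, the random variables on both sides converge to each other in distribution as $N$ gets large.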
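Why is the swap from geometric to exponential variables harmless? The means agree exactly, $\mathbb{E}[t_m] = \mathbb{E}[\tau_m] = 1/p_m$, and the variances differ only by $1/p_m$ per term, since $(1-p_m)/p_m^2 = 1/p_m^2 - 1/p_m$. The plain-Python sketch below (hypothetical helper names) compares the two total variances for the star graph using Eq. (3). It only illustrates the idea and is not the argument of Appendix B.

```python
def star_probs(n):
    """Eq. (3): p_m = [1/(N + 1)] * (N - m)/N for m = 0, ..., N - 1."""
    return [(n - m) / (n * (n + 1)) for m in range(n)]


def variance_ratio(n):
    """Var(sum of geometric t_m) / Var(sum of exponential tau_m) for the star graph."""
    ps = star_probs(n)
    var_geometric = sum((1 - p) / p**2 for p in ps)   # (1 - p)/p^2 per term
    var_exponential = sum(1 / p**2 for p in ps)       # 1/p^2 per term
    return var_geometric / var_exponential


for n in (10, 100, 1000):
    print(n, variance_ratio(n))   # creeps up toward 1 as N grows
```

The relative discrepancy is $H_N / [N(N+1)\sum_{j \le N} j^{-2}]$, which vanishes as $N$ grows.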

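In the traditional coupon collector's problem we would take $p_m = (N-m)/N$; but because of that $1/(N+1)$ factor, what we want is $p_m = (N-m)/[N(N+1)]$. Thanks to the fact we are now using exponential variables, we now know

$$\frac{S_N}{N(N+1)} \overset{d}{=} \sum_{j=1}^{N} E_j, \qquad E_j \text{ exponential with rate } j$$

(using the fact that $c\,\tau$ is exponential with rate $\lambda/c$ whenever $\tau$ is exponential with rate $\lambda$). A nice closed form for the probability density function of the sum of a collection of independent exponential variables with distinct rates is known [24,33], but for the sake of convenience we rederive it in Appendix C. For our choice of rates $\lambda_j = j$, with $j = 1, \dots, N$, the density is given by

$$f(x) = \sum_{j=1}^{N} (-1)^{j-1} \binom{N}{j}\, j\, e^{-jx},$$

which can be manipulated into

$$f(x) = N e^{-x} \left(1 - e^{-x}\right)^{N-1}$$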

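for $x \ge 0$. From here, we can find the distribution of $S_N/[N(N+1)] - H_N$, where $H_N = \sum_{j=1}^{N} 1/j$ is the $N$th harmonic number, and hence that of $(T_N - \mathbb{E}[T_N])/[N(N+1)]$ by extension, since $\mathbb{E}[T_N] = N(N+1)H_N$. Therefore, taking the limit of large $N$ and using the standard approximation $H_N \approx \ln N + \gamma$ for the harmonic sum gives us

$$f(x) = \exp\left[-(x + \gamma) - e^{-(x+\gamma)}\right] \tag{5}$$

as the density, where $\gamma \approx 0.5772$ is the Euler-Mascheroni constant. The density in Eq. (5) is a special case of the Gumbel distribution, denoted $\mathcal{G}(\mu, \beta)$ and defined to have the density

$$f(x) = \frac{1}{\beta} \exp\left[-\frac{x - \mu}{\beta} - e^{-(x - \mu)/\beta}\right]. \tag{6}$$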

Specifically, we find

$$\frac{T_N - N(N+1)H_N}{N(N+1)} \Rightarrow G, \tag{7}$$

where $G$ is a Gumbel random variable distributed according to $\mathcal{G}(-\gamma, 1)$.
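The chain of steps leading to Eq. (7) can be spot-checked numerically. This sketch, assuming NumPy, verifies two things: that the alternating-sum density equals $N e^{-x}(1-e^{-x})^{N-1}$, and that a Monte Carlo sample of $S_N$ built from the star-graph rates of Eq. (3), normalized as in Eq. (7), has roughly the mean 0, the variance $\pi^2/6$, and the CDF value at zero, $\exp(-e^{-\gamma}) \approx 0.570$, of $\mathcal{G}(-\gamma, 1)$. The function names, $N$, and sample size are illustrative choices.

```python
import math

import numpy as np


def density_alternating(x, n):
    """Density of E_1 + ... + E_n with E_j ~ Exp(rate j), distinct-rates formula."""
    return sum((-1) ** (j - 1) * math.comb(n, j) * j * math.exp(-j * x)
               for j in range(1, n + 1))


def density_closed(x, n):
    """Simplified form N e^{-x} (1 - e^{-x})^{N - 1}."""
    return n * math.exp(-x) * (1 - math.exp(-x)) ** (n - 1)


def gumbel_cdf(x, mu=-np.euler_gamma, beta=1.0):
    """CDF of G(mu, beta), obtained by integrating the density of Eq. (6)."""
    return np.exp(-np.exp(-(x - mu) / beta))


# Monte Carlo of Eq. (7) using the exponential approximation of Eq. (4)
n, runs = 200, 10_000
rng = np.random.default_rng(1)
m = np.arange(n)
p = (n - m) / (n * (n + 1))                            # Eq. (3)
s = rng.exponential(1 / p, size=(runs, n)).sum(axis=1) # samples of S_N
h_n = np.sum(1.0 / np.arange(1, n + 1))
x = (s - n * (n + 1) * h_n) / (n * (n + 1))            # left side of Eq. (7)
print(x.mean(), x.var(), np.mean(x <= 0), gumbel_cdf(0.0))
```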

This distribution can be tested against simulation, and it works nicely as seen in Fig. 3. Gumbel distributions have arisen previously in infection and birth-death models [24,34,35] and are well known in extreme-value theory [26,29,30], but the fact that they show up here as a result of a network topology is unexpected.

IV. COMPLETE GRAPH

The complete graph on $N$ nodes corresponds to a “well-mixed population” and is one of the most common topologies in infection models. This network consists of $N$ mutually connected nodes, so the location of the initial infection does not matter.

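Given $m$ infected nodes, we once again have a well-defined $p_m$. Using the concept behind Eq. (1), we find

$$p_m = \frac{m}{N} \cdot \frac{N-m}{N-1} \tag{8}$$

for $1 \le m \le N-1$. For the sake of convenience, we collect these probabilities into a vector $\vec{p} = (p_1, \dots, p_{N-1})$. As in the case of the star graph, we can approximate the takeover time by summing exponential random variables instead of geometric ones. So

$$\frac{T_N - \mathbb{E}[T_N]}{N} \sim X(\vec{p}), \qquad X(\vec{v}) \equiv \frac{1}{N} \sum_{m} \left(\tau_m - \frac{1}{v_m}\right). \tag{9}$$

To compress notation, we defined $X(\vec{v})$ to be the normalized sum of independent exponential random variables $\tau_m$, with means $1/v_m$, across the entries $v_m$ of the vector $\vec{v}$.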

The specific $\vec{p}$ in Eq. (8) has some helpful symmetry. Notice that if $1 \le m \le N-1$, then

$$p_m = p_{N-m}.$$

This symmetry means that the second half of the takeover looks just like the first half played backwards. If we set $\vec{p}_1$ to be the front half of the vector $\vec{p}$ and $\vec{p}_2$ to be the back half, then we know

$$X(\vec{p}) = X(\vec{p}_1) + X(\vec{p}_2). \tag{10}$$

Because of this symmetry, and because the order in which we add the individual exponential variables does not matter, the random variables $X(\vec{p}_1)$ and $X(\vec{p}_2)$ should be equal in distribution. Although odd and even $N$ might seem to require separate treatment, we find that the distinction does not matter.

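The basic concept here is to compare $\vec{p}_1$ and $\vec{p}_2$ to $\vec{q} = (q_1, \dots, q_N)$, where $q_m = m/N$. The sequence of $q_m$ represents the probabilities corresponding to a coupon collector's problem with $N$ coupons. It is therefore known that

$$X(\vec{q}) \Rightarrow G, \tag{11}$$

where $G$ is distributed as $\mathcal{G}(-\gamma, 1)$, as described in Sec. III.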

On the complete graph, we can rewrite $p_m$ (the probability of going from $m$ infected nodes to $m+1$ on the next time step) as $p_m = q_m(1 - \epsilon_m)$, where $\epsilon_m = (m-1)/(N-1)$. So for $m \ll N$, the $p_m$'s resemble the $q_m$'s quite closely. Therefore, both the front tail and the back tail of $\vec{p}$ look suspiciously like coupon collector's processes. By this logic, we expect the total time to take over the complete graph to be just the sum of two coupon collector's times. That is, we suspect that $T_N$, suitably normalized, is the sum of two Gumbel random variables.
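The algebra in this paragraph is easy to verify exactly. The following standard-library sketch (hypothetical names) checks that Eq. (8) factors as $q_m(1-\epsilon_m)$, that it has the symmetry $p_m = p_{N-m}$, and that the exact mean takeover time is $\mathbb{E}[T_N] = \sum_m 1/p_m = 2(N-1)H_{N-1}$, which follows from $1/p_m = (N-1)[1/m + 1/(N-m)]$.

```python
from fractions import Fraction


def complete_graph_probs(n):
    """Eq. (8): p_m = (m/N) * (N - m)/(N - 1) for m = 1, ..., N - 1."""
    return [Fraction(m, n) * Fraction(n - m, n - 1) for m in range(1, n)]


def check_complete_graph(n):
    ps = complete_graph_probs(n)                        # ps[m - 1] holds p_m
    for m, p in enumerate(ps, start=1):
        q = Fraction(m, n)                              # coupon-collector q_m
        eps = Fraction(m - 1, n - 1)                    # perturbation epsilon_m
        assert p == q * (1 - eps)
        assert p == ps[n - m - 1]                       # symmetry p_m = p_{N-m}
    mean = sum(1 / p for p in ps)                       # exact E[T_N]
    harmonic = sum(Fraction(1, k) for k in range(1, n)) # H_{N-1}
    assert mean == 2 * (n - 1) * harmonic
    return mean


print(check_complete_graph(10))   # E[T_10] = 18 * H_9, printed as 7129/140
```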

There are a few hang-ups with this intuitive argument:

(1) $\vec{p}_1$ and $\vec{p}_2$ are only about half the length of $\vec{q}$.

(2) $\vec{p}_1$ does not exactly equal $\vec{q}$, even at small $m$.

Addressing the first hang-up involves, once again, the front tails of these vectors. Each $\tau_m$ has a standard deviation of $1/p_m$, which tells us that the smallest values of $p_m$ are the strongest drivers of the final distribution.

The fact that the events at low populations (of either infected or susceptible types) strongly determine most of the random fluctuations is something that has shown up in other evolutionary models, especially with selective sweeps [36]. So if we were to just truncate both $\vec{p}_1$ and $\vec{q}$ at some point, we should expect the limiting distributions of $X(\vec{p}_1)$ or $X(\vec{q})$ not to change substantially. We formalize this idea in Appendix D, and find that it works out nicely.

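We have a lot of options about where to truncate, but a useful truncation point $K$ is one that grows with $N$ yet more slowly than $N$, such as $K = \lfloor \sqrt{N} \rfloor$. The expression $\lfloor x \rfloor$ simply means we round $x$ down to the nearest integer. If we define $\vec{p}_1' = (p_1, \dots, p_K)$ and $\vec{q}\,' = (q_1, \dots, q_K)$, then we have

$$X(\vec{p}_1) - X(\vec{p}_1') \Rightarrow 0 \tag{12}$$

and

$$X(\vec{q}) - X(\vec{q}\,') \Rightarrow 0. \tag{13}$$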

Addressing the second hang-up mostly involves formalizing the idea that $\epsilon_m$ is a rather small number for $m \le K$. The details are outlined in Appendix E, where we find that

$$X(\vec{p}_1') - X(\vec{q}\,') \Rightarrow 0. \tag{14}$$

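From here we can daisy-chain the previous numbered equations in this section together and find that

$$\frac{T_N - \mathbb{E}[T_N]}{N} \Rightarrow G + G', \tag{15}$$

where $G$ and $G'$ are independent and each is distributed as $\mathcal{G}(-\gamma, 1)$.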

This means that we successfully built on the result for star graphs to find that the resulting takeover time for the complete graph is just a sum of two Gumbel random variables. The sum of two Gumbel random variables has appeared previously in mathematically analogous places [18,37]. However, our use of the coupon collector's problem makes for a quick conceptual justification.
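A rough numerical comparison of the two sides of Eq. (15), assuming NumPy: sample $X(\vec{p})$ from Eq. (9) with the complete-graph probabilities of Eq. (8), and compare it with a sum of two independent $\mathcal{G}(-\gamma, 1)$ variables, whose variance is $2 \cdot \pi^2/6 = \pi^2/3$. The system size, seed, and sample count are arbitrary choices for illustration.

```python
import numpy as np

rng = np.random.default_rng(2)
n, runs = 400, 10_000
m = np.arange(1, n)
p = (m / n) * (n - m) / (n - 1)                    # Eq. (8)
tau = rng.exponential(1 / p, size=(runs, n - 1))   # exponential stand-ins for t_m
x = (tau - 1 / p).sum(axis=1) / n                  # X(p) as defined in Eq. (9)

# G + G' with G, G' independent G(-gamma, 1), the right side of Eq. (15)
g = rng.gumbel(-np.euler_gamma, 1.0, size=(runs, 2)).sum(axis=1)

for name, sample in (("X(p)", x), ("G + G'", g)):
    print(name, sample.mean(), sample.var(), np.mean(sample <= 0))
```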

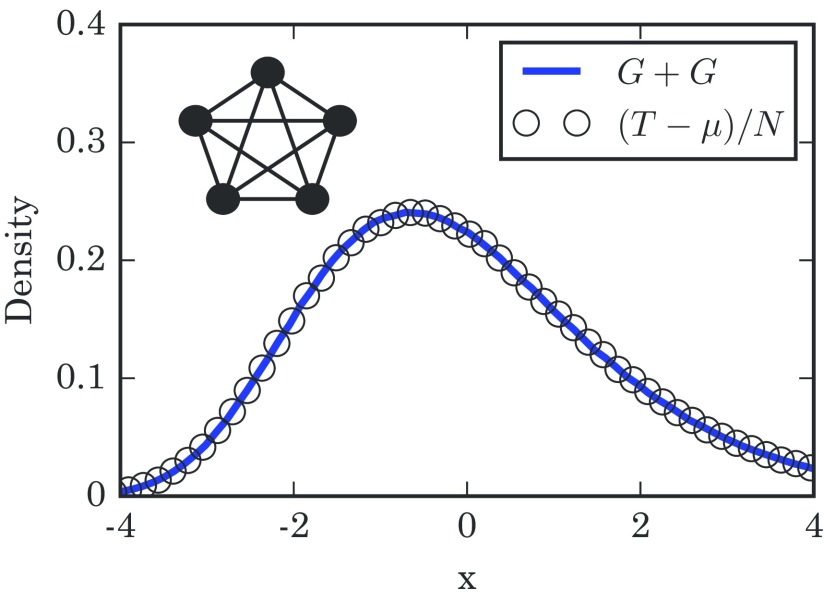

Figure 4 compares the takeover time distribution seen in simulations against the predicted distribution $\mathcal{G}(-\gamma, 1) * \mathcal{G}(-\gamma, 1)$, where $*$ denotes convolution, and we see that this double-coupon logic works out well.

FIG. 4.

Distribution of takeover times for a complete graph with $N$ nodes. The histogram is based on simulation runs. The mean takeover time is $\mathbb{E}[T_N] = 2(N-1)H_{N-1}$ exactly. Here, the numerically generated distribution fits closely to the convolution of two Gumbel distributions, estimated from random samples. The schematic diagram in the upper left shows a complete graph.

V. $d$-DIMENSIONAL LATTICE

As we did with the one-dimensional lattice, in our analysis of $d$-dimensional lattices we assume periodic boundary conditions. The side length of the $d$-dimensional cube of nodes is denoted by $L$, so the lattice has $N = L^d$ nodes. We also take $2 \le d < \infty$, since we have already covered the 1D lattice and the infinite-dimensional lattice is a somewhat special case.

Unlike every previous case we have examined, we cannot consistently define $p_m$. The probability of infecting a new node will almost always depend on the specific locations of all currently infected nodes. This means that none of our previous approaches applies directly here. However, this does not bar us from making guesses based on reasonable approximations.

Although we could potentially get all kinds of weirdly shaped clusters of infected nodes, that should not happen in expectation. Think back to the definition of our infection dynamics and Eq. (1). New infectees are added when a node on the boundary of the infected cluster gets randomly selected, and then one of its susceptible neighbors gets randomly selected and catches the infection.

Intuitively, it sounds like we have an expanding blob of infected nodes, with the expansion happening uniformly outward on every unit of surface area. This is a recipe for making spherelike blobs in $d$ dimensions, at least at the start of the dynamics. As seen from the top half of Fig. 5, this looks plausible in two dimensions.

FIG. 5.

Snapshots of our infection dynamics on a two-dimensional (2D) periodic cubic lattice. Black pixels show infected nodes, and grey pixels show susceptible nodes. (a) Snapshot near the beginning of the dynamics and (b) snapshot near the end. Notice how the blob of infected nodes in the top panel has a fairly simple shape, and most of the susceptible nodes lie in a single cluster in the bottom panel.

The exact nature of this shape is actually a notoriously difficult unsolved question. As we pointed out, there is a link between our infection model and first-passage percolation on a lattice [19]. In that context, there is a rich literature surrounding questions about the nature of this cluster, but formal proofs of many of its properties have turned out to be difficult. However, convexity appears to be typical in the large size limit, and surface fluctuations should be relatively small [19]. Moreover, there is good reason to believe that on the two-dimensional (2D) lattice, the boundary of the expanding cluster is a one-dimensional curve, which comes in handy later [38].

In any case, since the lattice is periodic, this infected cluster will keep expanding. This means that at the end of the dynamics we should expect the majority of susceptible nodes to also be in a single cluster, with insignificant enclaves elsewhere. This is borne out in simulations, as shown in the bottom half of Fig. 5. If we focus on this majority susceptible cluster, we see that the end of the dynamics looks like a uniformly shrinking cluster of susceptible nodes, which is approximately the reverse of the uniformly growing infected cluster at the start. So, the beginning and end of the dynamics look similar once again, as they did for the complete graph.

More importantly, since this is a $d$-dimensional lattice, we can guess the surface area of these blobs. For a shape with a length scale of $\ell$, we typically expect volume to scale as $\ell^d$ and surface area to go as $\ell^{d-1}$. So given an infected cluster of $m$ nodes, we expect it to have a surface area proportional to $m^{(d-1)/d}$. Assuming some uniformity, we should get that the typical probability of infecting a new node should be proportional to $m^{\alpha}$ at the start of the dynamics, where the exponent $\alpha$ is given by

$$\alpha = \frac{d-1}{d}. \tag{16}$$

And just as in the case of the complete graph, this process at the start gets repeated backwards at the end.

This heuristic argument suggests that the total time to takeover should look like the sum of geometric variables $t_m$, where

$$p_m \propto \left[\min(m, N-m)\right]^{\alpha}. \tag{17}$$

The fact that we only got a grip on $p_m$ up to a proportionality constant should not worry us. After all, that did not stop us when we worked through the star graph case earlier; back then we argued that such a proportionality constant would simply show up in the scaling factor in the denominator. If we treat this as a numerical problem, we do not need to find the scaling factor explicitly. Instead, we can examine $(T_N - \mu_N)/\sigma_N$, where $\mu_N$ and $\sigma_N$ are empirically obtained values for the average and standard deviation of $T_N$, respectively. Then any proportionality constants just get absorbed by the empirical $\sigma_N$.

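This reasoning further suggests that, for $N$ sufficiently large,

$$\frac{T_N - \mu_N}{\sigma_N} \sim \frac{1}{s_N} \sum_{m=1}^{N-1} \left(\tau_m - \frac{1}{p_m}\right), \qquad s_N^2 = \sum_{m=1}^{N-1} \frac{1}{p_m^2}, \tag{18}$$

where the $\tau_m$ are independent exponential random variables with means $1/p_m$, and $s_N^2$ is, to leading order, just the variance of the sum of geometric variables. But we already know how to approximate sums of geometric random variables. We can follow a similar procedure of truncation and perturbation as in the case of the complete graph. Assuming Eq. (18) is correct, we get

$$\frac{T_N - \mu_N}{\sigma_N} \sim \frac{Y_K + Y_K'}{\sqrt{2}}, \tag{19}$$

where $Y_K'$ is an independent copy of $Y_K$, and we define

$$Y_K = \frac{1}{\sqrt{V_K}} \sum_{m=1}^{K} \left(\tau_m - m^{-\alpha}\right), \tag{20}$$

with each $\tau_m$ now exponential with mean $m^{-\alpha}$, and

$$V_K = \sum_{m=1}^{K} m^{-2\alpha} \tag{21}$$

for the sum of variances.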
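Eqs. (19) through (21) are straightforward to sample, which is one way to produce a reference curve like the solid line in Fig. 6. The sketch below assumes NumPy, takes $p_m = m^{\alpha}$ with the unknown proportionality constant dropped (it cancels after normalization), and leaves the truncation point $K$ as a free parameter; `sample_y` and `lattice_prediction` are hypothetical names.

```python
import numpy as np


def sample_y(alpha, k, size, rng):
    """Eq. (20): normalized sum of exponentials with means m**(-alpha), m = 1..K."""
    m = np.arange(1, k + 1)
    means = m ** (-alpha)                       # 1/p_m with p_m = m**alpha
    v_k = np.sum(means ** 2)                    # Eq. (21)
    tau = rng.exponential(means, size=(size, k))
    return (tau - means).sum(axis=1) / np.sqrt(v_k)


def lattice_prediction(d, k, size, rng):
    """(Y_K + Y_K') / sqrt(2): front and back halves of the takeover, Eq. (19)."""
    alpha = (d - 1) / d                         # Eq. (16)
    front = sample_y(alpha, k, size, rng)
    back = sample_y(alpha, k, size, rng)        # independent copy
    return (front + back) / np.sqrt(2)


rng = np.random.default_rng(3)
z = lattice_prediction(d=3, k=1000, size=4000, rng=rng)
print(z.mean(), z.var())   # close to 0 and 1 by construction
```

A histogram of `z` can then be overlaid on the standardized simulation data, and $K$ tuned by eye for finite $N$.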

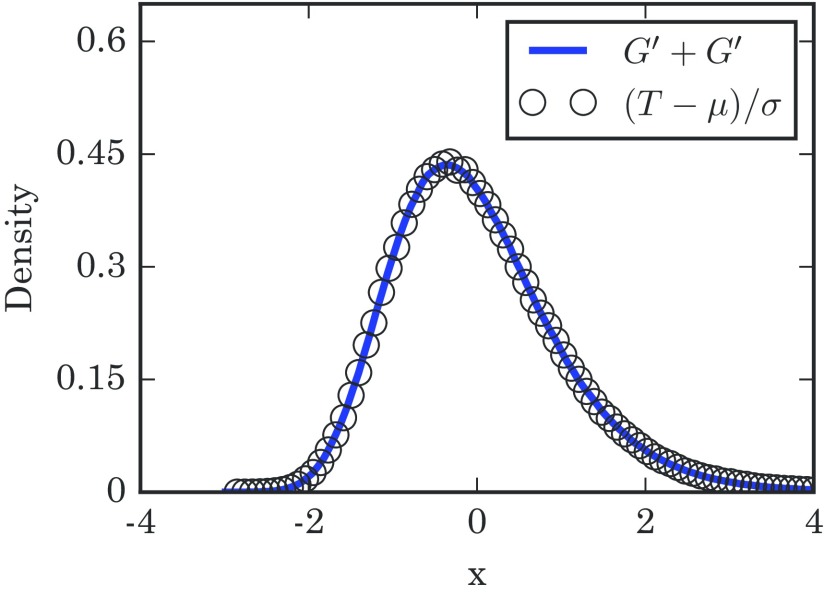

The truncation point $K$ is some increasing function of $N$, and a simple choice normally suffices. In the limit of large $N$, the distinction does not really matter. However, it often seems possible to tune $K$ to get a good fit in finite-$N$ cases, as the simulations of three-dimensional (3D) lattices in Fig. 6 suggest.

FIG. 6.

Distribution of takeover times for a 3D lattice with side length $L$. The numerically generated distribution is based on simulation runs. The solid line shows the distribution of $(Y_K + Y_K')/\sqrt{2}$, with the sums in Eq. (20) running up to the truncation point $K$, estimated from repeated samples. The empirical quantities $\mu_N$ and $\sigma_N^2$ are the numerically calculated mean and variance of $T_N$. The schematic diagram in the upper left shows a 3D lattice.

In principle, we could try to use Eq. (C1) to get a finite-$N$ estimate for this distribution. However, we do not expect any of these distributions to have a large-$N$ limit as simple as in the case of the star graph, nor for any of these distributions to have a name. For practical purposes, we can just simulate the right-hand side of Eq. (20) directly, since generating and adding a large number of exponential variables is rather fast.

The critical dimension

Naively, we might expect the limiting distribution of $(T_N - \mu_N)/\sigma_N$ always to be something between a Gumbel and a normal distribution. After all, $d = 1$ implies $\alpha = 0$, which returns us to identical variables and the 1D ring, giving us the standard normal. Meanwhile, $d \to \infty$ implies $\alpha \to 1$, which returns us to the coupon collector's problem and the star graph, giving us the Gumbel distribution. Incidentally, this argument suggests that, under these dynamics, the infinite-dimensional lattice behaves like the complete graph. In between these extreme cases, we might expect the intermediate values of $\alpha$ to correspond to a family of intermediate distributions.

While this is generally true, there is a surprising caveat to be made about the case of $d = 2$. Even though all the summands in Eq. (20) are distinct, they start to resemble each other once $K$ gets sufficiently large.

For $d = 2$, Eq. (16) gives $\alpha = 1/2$, which means that $V_K$ in Eq. (21) is the harmonic series. This diverges with $K$, giving each summand a large denominator $\sqrt{V_K}$, and thus a small variance about a mean of zero. So, even though the summands are not identical random variables, they will become rather similar as we take $K$ to be large, suggesting that an improved version of the central limit theorem may apply. This intuition is confirmed by a careful analysis in Appendix F, showing that the Lindeberg-Feller theorem applies in this case.

Thus we predict a normal limiting distribution of $T_N$ in the specific case of the 2D lattice: as $N \to \infty$,

$$\frac{T_N - \mu_N}{\sigma_N} \Rightarrow \mathcal{N}(0, 1) \tag{22}$$

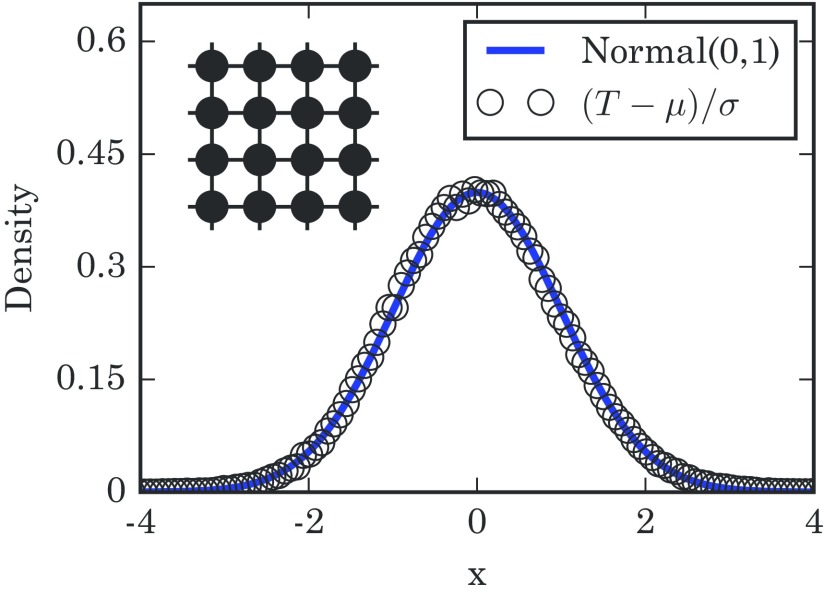

for $d = 2$. This prediction is borne out in simulation, as shown in Fig. 7.

FIG. 7.

Distribution of takeover times for a 2D lattice with side length $L$. The numerical results are obtained from simulations. The solid line is the standard normal distribution. The empirical quantities $\mu_N$ and $\sigma_N^2$ are the numerically calculated mean and variance of $T_N$. The schematic diagram in the upper left shows a 2D lattice.

However, no dimension higher than $d = 2$ can yield normally distributed takeover times. For each $d \ge 3$, the distribution of $(T_N - \mu_N)/\sigma_N$ will converge to a distinct limiting distribution between a normal and a Gumbel distribution, as we initially suspected. The important distinction between $d = 2$ and $d \ge 3$ is that, in the latter, $2\alpha > 1$, so $V_K$ always converges to a finite number as $K \to \infty$. Because of that, $Y_K$ will always have a nonzero third moment, preventing it from converging to a standard normal. For more details, see Appendix G.
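The role of the critical dimension shows up directly in the skewness of $Y_K$. Since an exponential variable with mean $\mu$ has third cumulant $2\mu^3$, Eq. (20) gives $\operatorname{skew}(Y_K) = 2\sum_{m=1}^{K} m^{-3\alpha} / V_K^{3/2}$. The short NumPy sketch below (hypothetical helper name) evaluates this for several values of $\alpha$: for $d = 2$ it decays slowly toward zero as $K$ grows, for $d = 3$ it levels off at a nonzero value, and for $\alpha = 1$ it approaches the Gumbel skewness $12\sqrt{6}\,\zeta(3)/\pi^3 \approx 1.1395$. The symmetric combination in Eq. (19) has this skewness divided by $\sqrt{2}$.

```python
import numpy as np


def skewness_y(alpha, k):
    """Exact skewness of Y_K in Eq. (20).

    An exponential with mean mu has third cumulant 2 mu**3, so
    skew(Y_K) = sum_m 2 m**(-3 alpha) / V_K**1.5, with V_K from Eq. (21).
    """
    m = np.arange(1, k + 1, dtype=float)
    v_k = np.sum(m ** (-2 * alpha))
    return 2 * np.sum(m ** (-3 * alpha)) / v_k ** 1.5


for d, alpha in ((2, 1 / 2), (3, 2 / 3), (10, 9 / 10), ("inf", 1.0)):
    print(d, [round(skewness_y(alpha, k), 3) for k in (10**3, 10**5, 10**6)])
```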

VI. ERDŐS-RÉNYI RANDOM GRAPH

Unlike all the previous graphs we have seen, an Erdős-Rényi graph is randomly constructed. We start off with $N$ nodes, and add an edge between any two with some probability $p$. In this section, we condition on the graph being connected, so that complete takeover is always possible.

There is a good history of using generating functions to analyze desired properties on a random graph, including for various infection models [39–41]. But since we just finished analyzing the general lattice case, we can take another road.

Recall the central observation that let us recast $T_N$ as a sum of geometric random variables. That train of logic only really involved the graph having a well-defined dimension $d$. If we could define the dimension for other kinds of graphs, then all our observations from the previous section would simply carry over.

Imagine taking a cluster of $m$ nodes on an Erdős-Rényi graph. What is the surface area of that cluster? In expectation, the $m$ nodes are externally connected to $(N-m)[1-(1-p)^m]$ nodes, which is approximately $(N-m)mp$ when $mp \ll 1$ and approximately $N-m$ when $(1-p)^m \ll 1$. So, as $N$ gets large, the number of external neighbors of any cluster gets large as well, for both very small and very large $m$. This is suggestive of an infinite-dimensional topology.
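The surface-area estimate can be checked by brute force. This NumPy sketch (hypothetical names) fixes a cluster of $m$ nodes, draws each of the $m(N-m)$ potential edges between the cluster and the outside independently with probability $p$, and counts outside nodes with at least one edge into the cluster. It ignores the conditioning on connectivity used in the text, which changes little when the graph is very likely to be connected anyway.

```python
import numpy as np


def expected_boundary(n, p, m):
    """Expected number of outside nodes linked to a fixed m-node cluster."""
    return (n - m) * (1 - (1 - p) ** m)


def simulated_boundary(n, p, m, trials, rng):
    """Monte Carlo estimate: each outside node has m independent potential edges inward."""
    edges = rng.random((trials, n - m, m)) < p
    return edges.any(axis=2).sum(axis=1).mean()


rng = np.random.default_rng(4)
n, p = 200, 0.05
for m in (1, 5, 100, 195, 199):
    print(m, expected_boundary(n, p, m), simulated_boundary(n, p, m, 2000, rng))
```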

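So, by collecting results from Eqs. (15) and (19), we can guess the limiting distribution of the takeover times $T_N$. Defining $\mu_N$ and $\sigma_N$ to be the empirical mean and standard deviation of $T_N$, we find

$$\frac{T_N - \mu_N}{\sigma_N} \Rightarrow G + G', \tag{23}$$

where $G$ and $G'$ are independent Gumbel random variables, each with a mean of zero and a variance of 1/2. One can check that the corresponding distribution for each is $\mathcal{G}(-\gamma\sqrt{3}/\pi,\ \sqrt{3}/\pi)$.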
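The Gumbel parameters quoted above follow from the standard facts that $\mathcal{G}(\mu, \beta)$ has mean $\mu + \gamma\beta$ and variance $\pi^2\beta^2/6$. A short NumPy check, with an arbitrary seed and sample size:

```python
import numpy as np

beta = np.sqrt(3) / np.pi        # variance pi^2 beta^2 / 6 equals 1/2
mu = -np.euler_gamma * beta      # mean mu + gamma * beta equals 0
print(mu, beta)

rng = np.random.default_rng(5)
g = rng.gumbel(mu, beta, size=(200_000, 2))
total = g.sum(axis=1)            # samples of G + G' in Eq. (23)
print(g.mean(), g[:, 0].var(), total.var())   # about 0, 0.5, and 1
```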

We experimentally tested Eq. (23) by fixing a randomly generated Erdős-Rényi random graph, along with a seed at which the infection always started. Then we ran simulations of the stochastic infection process and compiled the observed distribution of takeover times. (The reason we fixed the graph beforehand was to avoid sampling multiple different values of $\mu_N$ and $\sigma_N$ over different realizations of the random graph.) The results of the experiment were consistent with our prediction, as shown in Fig. 8.

FIG. 8.

Distribution of takeover times for an Erdős-Rényi random graph on $N$ nodes with an edge probability of $p$. Simulation results were compiled from repeated runs, all using the same realization of the random graph and all with the initial infection starting at the same node. The solid line was generated numerically by adding pairs of $\mathcal{G}(-\gamma\sqrt{3}/\pi, \sqrt{3}/\pi)$ random variables together. Similarly, $\mu_N$ and $\sigma_N^2$ are the numerically calculated mean and variance of $T_N$.

VII. DISCUSSION

A. Relation to other models

1. Infection models

The model studied in this paper is intentionally simplified in several ways, compared to the most commonly studied models of infection. The purpose of the simplifications is to highlight how one aspect of the infection process—its network topology—affects the distribution of takeover times. However, the update rule also plays an important role. The assumptions we have made about it therefore deserve further comment.

Assumption 1

The infection is infinitely transmissible. When an infected node interacts with a susceptible node, the infection spreads with probability 1. In a more realistic model, infection would be transmitted with a probability less than 1.

Assumption 2

The infection lasts forever. Once infected, a node never goes back to being susceptible, or converts to an immune state, or gets removed from the network by dying. The dynamics of these more complicated models, known as susceptible-infected-susceptible (SIS) or susceptible-infected-recovered (SIR) models, have been studied on lattices and networks by many authors; for reviews, see Refs. [3,4].

Assumption 3

The update rule is asynchronous. In other words, only one link is considered at a time. By contrast, in a model with synchronous updating, every link is considered simultaneously.

If the infection is further assumed to be infinitely transmissible, then at each time step every infected node passes the infection to every one of its susceptible neighbors. Such an infection, akin to the spreading of a flood or a wildfire, would behave even more simply than the process studied here. In fact, it would be too simple. The calculation of the network takeover time would reduce to a breadth-first search and its value would be bounded above by the network's diameter. Note, however, that if the infection has a probability less than 1 of being transmitted to susceptible neighbors (such as in the original 1-type Richardson model [42]), the system becomes nontrivial to analyze [19,42].

Interestingly, the asynchronous assumption may not have as much impact as it first appears. We may build a continuous-time model from our discrete-time model by interpreting the discrete time as counting the number of events, and assigning random variables to measure the “true” time between consecutive events. If these intermediate times have finite moments, then they become negligible compared to the takeover time as the system size gets large. In fact, using cumulant generating functions, it is easy to show that the skewness of the resulting continuous-time distribution converges exactly to the skewness of the discrete-time distribution.
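The cumulant argument can be checked numerically. The Python sketch below assumes, for illustration, the complete graph (where with $k$ infected nodes a step infects someone with probability $p_k = k(N-k)/[N(N-1)]$) and i.i.d. Exp(1) waiting times between events. The cumulant generating function of the continuous time $C$ is $K_C(s) = K_T(K_\tau(s))$, and differentiating it three times gives the first three cumulants. All function names are hypothetical.

```python
def geometric_cumulants(p):
    """First three cumulants of a geometric variable on {1, 2, ...}."""
    q = 1.0 - p
    return 1.0 / p, q / p**2, q * (2.0 - p) / p**3


def complete_graph_cumulants(n):
    """Cumulants of the step count T for complete takeover of K_n."""
    k1 = k2 = k3 = 0.0
    for k in range(1, n):
        c1, c2, c3 = geometric_cumulants(k * (n - k) / (n * (n - 1)))
        k1, k2, k3 = k1 + c1, k2 + c2, k3 + c3
    return k1, k2, k3


def compound_cumulants(t_cum, tau_cum):
    """Cumulants of C = tau_1 + ... + tau_T (tau_i i.i.d., independent of T),
    obtained from K_C(s) = K_T(K_tau(s)) by the chain rule."""
    t1, t2, t3 = t_cum
    m, v, c3 = tau_cum
    return (t1 * m,
            t2 * m**2 + t1 * v,
            t3 * m**3 + 3 * t2 * m * v + t1 * c3)


def skewness(cum):
    return cum[2] / cum[1] ** 1.5


EXP1 = (1.0, 1.0, 2.0)  # cumulants of an Exp(1) waiting time (assumed)

for n in (100, 1000, 10000):
    t = complete_graph_cumulants(n)
    print(n, skewness(t), skewness(compound_cumulants(t, EXP1)))
```

The gap between the two skewness values shrinks as $N$ grows, because the waiting times add only $O(N \log N)$ to a variance that is already of order $N^2$.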

2. Models of evolutionary dynamics

About a decade ago, the field of evolutionary dynamics [43] was extended to networks, and the field of evolutionary graph theory was born [20]. Results in this field generally rely on modeling the spread of a mutant population with the Moran process [43,44]. (Our model can be viewed as a limiting variation of the Moran birth-death process, in the limit as the mutant fitness tends to infinity.) Studies of Moran dynamics have produced a number of important and interesting results, including network topologies that act as amplifiers of selection by increasing the probability of takeover [45], and topologies that shift the takeover times we are considering [46].

For example, working in the framework of evolutionary graph theory, Ashcroft, Traulsen, and Galla recently explored how network structure affects the distribution of “fixation times” for a population of individuals evolving by birth-death dynamics [24]. The fixation time is defined as the time required for a fitter mutant (think of a precancerous cell in a tissue) to sweep through a population of less fit wild-type individuals (normal cells). Initially, a single mutant is introduced at a random node of the network. At each time step, one individual is chosen to reproduce with probability proportional to its fitness. It gives birth to one offspring, and one of its network neighbors is chosen at random to die and be replaced by that offspring. The natural questions are as follows: What is the probability that the lineage of the mutant will eventually take over the whole network? And if it does, how long does it take for this fixation to occur?
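For comparison with our infection model, here is a minimal Python sketch of one run of the birth-death process just described. It assumes mutants have fitness `r` and wild types fitness 1; the function name and the `max_steps` guard are hypothetical choices for this illustration, not the implementation used in Ref. [24].

```python
import random


def moran_birth_death(adj, r, start, rng, max_steps=10**7):
    """One run of Moran birth-death dynamics on a graph.

    Each step, a parent is chosen with probability proportional to fitness
    (r for mutants, 1 for wild types); its offspring replaces a uniformly
    random neighbor. Returns (mutant_fixed, steps_taken)."""
    nodes = list(adj)
    n = len(nodes)
    mutant = {v: False for v in nodes}
    mutant[start] = True
    m = 1  # current number of mutants
    for step in range(1, max_steps + 1):
        # Some mutant reproduces with total probability r*m / (r*m + n - m);
        # within each type the parent is uniform.
        pick_mutant = rng.random() < r * m / (r * m + n - m)
        parent = rng.choice([v for v in nodes if mutant[v] == pick_mutant])
        child = rng.choice(adj[parent])
        if mutant[child] != mutant[parent]:
            mutant[child] = mutant[parent]
            m += 1 if mutant[parent] else -1
        if m == 0 or m == n:
            return m == n, step
    raise RuntimeError("no fixation within max_steps")
```

Unlike our model, a run can end with the mutant extinct (`mutant_fixed` is `False`). As `r` grows, the parent is almost always a mutant, so the mutant population can only grow, as in our infection model.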

The calculations are difficult because mutant fixation is not guaranteed (in contrast to our model, where the network is certain to become completely infected eventually). In the birth-death model, a normal individual will sometimes be chosen by chance to give birth, and its offspring will replace a neighboring mutant. If this happens often enough, the mutant population can go extinct and wild-type fixation will occur. Using Markov chains, Hindersin and colleagues provided exact calculations of the fixation probability and average fixation times for a wide family of graphs, as well as an investigation of the dependence on microscopic dynamics [22,23,47,48]. A challenge for this approach is that the state space quickly becomes intractable: even with sparse matrix methods, its size grows exponentially with the number of nodes, like $2^N$ [47]. For networks of size , their computations showed that the distributions of mutant fixation times were skewed to the right, much like the Gumbel distributions, convolutions of Gumbel distributions, and intermediate distributions found analytically and discussed here in Secs. III–VI.
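To see where the $2^N$ growth comes from, the following Python sketch computes the exact mean takeover time of our own infection model (not the Moran process) by solving the Markov chain over all subsets of infected nodes. Because infection is irreversible, each infected set only moves to a superset, so the expected remaining time of a set $S$ satisfies $E[S] = \left(1 + \sum_j q_j E[S \cup \{j\}]\right) / \sum_j q_j$, where $q_j$ is the per-step probability that susceptible node $j$ is infected. The function name is hypothetical, and nodes are assumed to be labeled $0, \dots, n-1$.

```python
from fractions import Fraction


def expected_takeover_exact(adj, source):
    """Exact mean number of steps to infect every node, starting from
    `source`, for the asynchronous model (random node, random neighbor).
    States are bitmasks of infected nodes; there are 2**n of them."""
    n = len(adj)
    full = (1 << n) - 1
    expected = {full: Fraction(0)}
    # Infecting node j turns mask into mask | (1 << j) > mask, so processing
    # masks in decreasing order guarantees every successor is already solved.
    for mask in range(full - 1, 0, -1):
        q = {}
        for i in range(n):
            if mask >> i & 1:
                for j in adj[i]:
                    if not mask >> j & 1:
                        q[j] = q.get(j, 0) + Fraction(1, n * len(adj[i]))
        total = sum(q.values())
        if total == 0:
            raise ValueError("graph must be connected")
        expected[mask] = (1 + sum(qj * expected[mask | (1 << j)]
                                  for j, qj in q.items())) / total
    return expected[1 << source]
```

For the complete graph on 4 nodes this returns 11, matching $\sum_{k=1}^{3} 12/[k(4-k)]$, and for a ring of 4 nodes it returns 12. Doubling the number of nodes squares the number of states, which is why exact methods of this kind are limited to small networks.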

3. First-passage percolation

Our infection model is also closely related to first-passage percolation [18,19]. The premise behind this family of models can be described as follows. Given a network, assign a random weight to each edge. By interpreting that weight as the time for an infection to be transmitted across that edge, and by choosing properly tuned geometric (or, more commonly, exponential) random variables as the edge weights, we can recreate our infection model.
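As a sketch of this correspondence, the Python code below draws exponential edge weights and computes infection times as shortest-path distances with Dijkstra's algorithm. The tuning is our assumption: if events occur at unit rate, then in our model the ordered pair $(u, v)$ is selected at rate $1/(N \deg u)$, so we give the directed edge $u \to v$ an exponential weight with that rate. Function names are hypothetical.

```python
import heapq
import random


def fpp_infection_times(adj, source, rng):
    """Infection times of all nodes in a continuous-time version of the
    model, computed as first-passage percolation distances from `source`.
    Directed edge u -> v gets an Exp(rate = 1 / (n * deg(u))) weight."""
    n = len(adj)
    weight = {(u, v): rng.expovariate(1.0 / (n * len(adj[u])))
              for u in adj for v in adj[u]}
    dist = {source: 0.0}
    heap = [(0.0, source)]
    settled = set()
    while heap:
        d, u = heapq.heappop(heap)
        if u in settled:
            continue  # stale heap entry
        settled.add(u)
        for v in adj[u]:
            nd = d + weight[(u, v)]
            if nd < dist.get(v, float("inf")):
                dist[v] = nd
                heapq.heappush(heap, (nd, v))
    return dist


def flooding_time(adj, source, rng):
    """Time at which the last node is reached (the FPP flooding time)."""
    return max(fpp_infection_times(adj, source, rng).values())
```

On the complete graph, the average of `flooding_time` over many runs should approach the mean discrete takeover time $\sum_{k=1}^{N-1} N(N-1)/[k(N-k)]$, since each step of the discrete model then takes unit mean time.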

Notice that first-passage percolation defines a random metric on the network, meaning that internode distances change from one realization to another. This leads to a number of natural questions. The most extensively studied is the “typical distance,” quantified by the total weight and number of edges on the shortest path between a pair of random nodes [49–51]. It is also possible to analyze the “flooding time” [37,52], defined as the time to reach the last node from a given source node chosen at random. This quantity is the closest analog, within first-passage percolation, of our takeover time. Indeed, a counterpart of our result on the convolution of two Gumbel distributions for the Erdős-Rényi random graph was obtained previously using these techniques [37]. However, we are unaware of flooding-time counterparts of our results about the takeover times for $d$-dimensional lattices.

Another natural question in first-passage percolation concerns the limiting shape of the infected cluster at long times on large lattices. More precisely, given a fixed origin node, we can identify all nodes that can be reached from the origin with a total path weight of $t$ or less. This amounts to finding all the nodes that have been infected by the origin within time $t$, a problem that percolation theorists have typically studied on $d$-dimensional lattices. We saw an instance of such an expanding cluster in Sec. V. In a general number of dimensions, this shape can be complicated and hard to characterize rigorously; the fluctuations of its boundary are thought to be governed by the Kardar-Parisi-Zhang (KPZ) equation [19,53,54]. The limiting shape is not typically a Euclidean ball, but it has been proven to be convex; see Refs. [18,19] for an introductory discussion of these issues. In Fig. 5, the nature of this cluster's complement in a large torus was of concern to us, but that issue has not yet attracted mathematical attention, as far as we know.

B. Applications to medicine: Epidemic and disease incubation times and cancer mortality

For more than a hundred years, there have been intriguing empirical observations of “right-skewed” distributions in a remarkably wide range of phenomena related to disease [55–59]. Examples include within-patient incubation periods for infectious diseases like typhoid fever [55,56], polio [58], measles [60], and acute respiratory viruses [61]; exposure-based outbreaks like anthrax [62] (see Refs. [61,63] for more recent reviews); rates of cancer incidence after exposure to carcinogens [64]; and times from diagnosis to death for patients with various cancers [65] or leukemias [66].

The relationship between these phenomena and our model is intuitive: most of these processes depend on some sort of agent (a mutant cell, a virus, or a bacterium) invading and taking over a population, something which typically proceeds one “interaction” at a time. And as we have seen, our simple infection model automatically generates right-skewed distributions like Gumbel distributions, convolutions of Gumbel distributions, and intermediate distributions via a coupon-collection mechanism, for many kinds of population structures. Although the model studied here does not quite emulate real-world disease incubation (because of its assumptions of asynchronous update, zero latency periods, etc.), this is still a striking comparison. So could it be that the right-skewed distributions so often seen clinically are, at bottom, a reflection of this same mathematical mechanism—a manifestation of an invasive, pathogenic agent spreading through a network of cells or people?

To test the plausibility of this idea, we need to amend our model slightly. Until now we have focused exclusively on the time to total takeover of a network. But in most scenarios related to disease, total takeover is not the relevant consideration. Sufficient takeover is what matters. For example, a patient need not have every single one of their bone marrow stem cells replaced by leukemic cells before they die from leukemia. Death presumably occurs as soon as some critical threshold is crossed—which is probably the case for diseases with infectious etiologies as well. So let us now check whether changing the criterion from total takeover to partial takeover changes our results, or not.

Times to partial takeover: Truncation

Define to be the time for out of members to be infected, with the interesting range of 's being . For the sake of example, consider the complete graph as our network topology, so we have .

As in the analysis for the complete takeover times, we can split into a front and back part and , with the front covering up to about and the back covering the remainder. Then

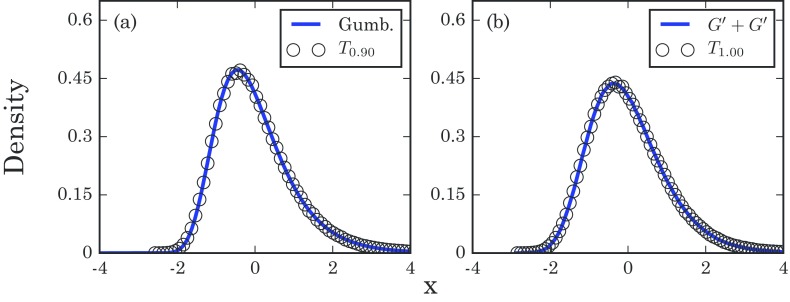

where is the mean of , and and are the mean and standard deviation of . However, it is easy to show that converges to zero as gets large, regardless of . So we expect the distribution of in this case to asymptotically approach a Gumbel distribution. As seen in Fig. 9, similar results hold for Erdős-Rényi random graphs, even for .
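For the complete graph, the effect of truncation can be seen directly in the cumulants. The Python sketch below assumes the partial takeover time is the sum of independent geometric waiting times with success probabilities $p_k = k(N-k)/[N(N-1)]$ for $k = 1, \dots, M-1$ (the function name is hypothetical) and returns its exact skewness. For reference, a normalized Gumbel distribution has skewness $12\sqrt{6}\,\zeta(3)/\pi^3 \approx 1.14$, and the convolution of two identical Gumbel distributions has that value divided by $\sqrt{2}$, about $0.81$.

```python
def takeover_skewness(n, m):
    """Exact skewness of the number of steps needed for m of the n nodes of
    the complete graph to be infected, starting from one infected node."""
    k2 = k3 = 0.0
    for k in range(1, m):
        p = k * (n - k) / (n * (n - 1))
        q = 1.0 - p
        k2 += q / p**2               # variance of a geometric variable
        k3 += q * (2.0 - p) / p**3   # its third cumulant
    return k3 / k2**1.5


n = 1000
print("complete takeover:", takeover_skewness(n, n))
print("90% takeover:", takeover_skewness(n, 9 * n // 10))
```

Dropping the slow final steps removes the second Gumbel-like contribution, so the skewness moves from near the two-Gumbel value toward the single-Gumbel value, consistent with the argument above.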

FIG. 9.

Normalized distribution of takeover times for an Erdős-Rényi random graph on nodes with an edge probability of , obtained from simulation runs. For the sake of convenience, each is rescaled to have a mean of zero and a variance of 1. (a) Normalized times required to infect 90% of the population, and (b) times for complete takeover. Here, the convolution of two Gumbel distributions plotted on the right was generated using samples.

Thus, for complete graphs and Erdős-Rényi random graphs, the right-skewed distributions for complete takeover persist when we relax the criterion to partial takeover. In that respect our results seem to be robust.
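Simulations like those in Fig. 9 need only a few lines. The Python sketch below implements the update rule directly (pick a uniformly random node and one of its neighbors; an infected node infects a susceptible neighbor) and records the step at which the number of infected nodes first reaches each requested threshold. The Erdős-Rényi generator, the connectivity check, and all names are hypothetical helpers; we resample until the graph is connected, since otherwise complete takeover never happens.

```python
import random


def erdos_renyi(n, p, rng):
    """G(n, p): each of the n(n-1)/2 possible edges is present with prob. p."""
    adj = {i: [] for i in range(n)}
    for i in range(n):
        for j in range(i + 1, n):
            if rng.random() < p:
                adj[i].append(j)
                adj[j].append(i)
    return adj


def is_connected(adj):
    seen, stack = {0}, [0]
    while stack:
        for v in adj[stack.pop()]:
            if v not in seen:
                seen.add(v)
                stack.append(v)
    return len(seen) == len(adj)


def steps_to_thresholds(adj, source, thresholds, rng):
    """Step counts at which the infected count first reaches each threshold."""
    n = len(adj)
    infected = [False] * n
    infected[source] = True
    count, step, hits = 1, 0, {}
    for target in sorted(thresholds):
        while count < target:
            step += 1
            u = rng.randrange(n)        # random node
            v = rng.choice(adj[u])      # random neighbor of that node
            if infected[u] and not infected[v]:
                infected[v] = True
                count += 1
        hits[target] = step
    return hits


rng = random.Random(2017)
g = erdos_renyi(200, 0.05, rng)
while not is_connected(g):
    g = erdos_renyi(200, 0.05, rng)
print(steps_to_thresholds(g, 0, [180, 200], rng))
```

Repeating the last call many times and standardizing each list of times to mean zero and variance one gives histograms of the kind shown in Fig. 9.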

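The resilience of the Gumbel distribution is important to appreciate. As pointed out by Read [31], a Gumbel distribution can be impersonated by a properly tuned three-parameter lognormal distribution; see Appendix H for further details. A three-parameter lognormal distribution with shift $\gamma$, log-mean $\mu$, and log-standard deviation $\sigma > 0$ has a density function

$$f(x) = \frac{1}{(x-\gamma)\,\sigma\sqrt{2\pi}} \exp\left[-\frac{\left(\ln(x-\gamma)-\mu\right)^2}{2\sigma^2}\right],$$

provided $x > \gamma$ (and $f(x) = 0$ otherwise).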

It is this three-parameter lognormal distribution that has been frequently noted in empirical studies of disease incubation times. Originally proposed and elaborated by Sartwell [57–59] as a curve-fitting model, its seeming generality has led to it being called “Sartwell's law.” But it has always lacked a theoretical underpinning. Even recent reviews consider the origin of lognormal incubation times to be unresolved [63]. In contrast, Gumbel and related distributions arise very naturally from the model studied here and from other infection models [34,35] and may provide a more suitable theoretical foundation than lognormals in that sense.

C. Future directions

In conclusion, we have presented distributions for takeover times of a simple infection model across many different networks, including complete graphs, stars, $d$-dimensional lattices, and Erdős-Rényi random graphs. While heterogeneous networks are outside the scope of this work, our initial results suggest that their takeover times are also right-skewed and resemble a Gumbel distribution (see Appendix I). In the future, we hope to link our model to more concrete real-world phenomena and experimental data, connecting our abstract “step-based” takeover times with physically meaningful times on time scales appropriate for the situation in question.

ACKNOWLEDGMENTS

Thanks to David Aldous, Rick Durrett, Remco van der Hofstad, Lionel Levine, and Piet Van Mieghem for helpful conversations. This research was supported by a Sloan Fellowship and NSF Graduate Research Fellowship Grant No. DGE-1650441 to B.O.-L. in the Center for Applied Mathematics at Cornell, and by NSF Grants No. DMS-1513179 and No. CCF-1522054 to S.S. J.S. acknowledges the NIH for their generous loan repayment grant.

APPENDIX A: THE LINDEBERG CONDITION (ONE DIMENSION)

When we were analyzing the 1D lattice, we could not directly apply the classical central limit theorem, since our variables have a dependence on , being . Fortunately, the Lindeberg-Feller variant of the central limit theorem, which applies to such triangular arrays of independent variables, gives the desired normal convergence (see Ref. [67], p. 98, for more details). However, we must first verify its hypotheses.

For the sake of convenience, let us define

So , and , which satisfies two of the three conditions. However, there is still the matter of the titular Lindeberg condition on the restricted second moments, which says, for any fixed ,

To verify this, first notice that means that we need either or . However, the minus case will not come up in the limit; as gets large it would require to be negative, which is not possible.

Letting , we get

And so,

Here, the first limit looks like

Meanwhile, the second limit can be bounded above (with some constant) by

So the total sum of conditional expectations converges to zero as gets large, and so the Lindeberg condition is satisfied. This allows us to cite the theorem, and confirms that in the limit we get
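The Lindeberg quantity can also be evaluated numerically. The Python sketch below assumes (our reading of the ring dynamics) that the takeover time is a sum of $N-1$ independent geometric variables with success probability $1/N$: on a ring, each step infects a new node with probability $1/N$. Because the summands in each row are identically distributed, the Lindeberg sum reduces to a single truncated second moment divided by the variance, which we compute exactly by subtracting the central part from the variance. The function name and the choice $\varepsilon = 0.1$ are illustrative.

```python
import math


def lindeberg_ring(n_nodes, eps):
    """Lindeberg sum for T = X_1 + ... + X_{N-1}, X_k i.i.d. Geom(1/N) on
    {1, 2, ...}. With s_N^2 = (N - 1) Var(X), the sum
        (1 / s_N^2) * sum_k E[(X_k - mu)^2 ; |X_k - mu| > eps * s_N]
    reduces to E[(X - mu)^2 ; |X - mu| > eps * s_N] / Var(X)."""
    p = 1.0 / n_nodes
    mu = 1.0 / p
    var = (1.0 - p) / p**2
    s_n = math.sqrt((n_nodes - 1) * var)
    lo = max(1, math.ceil(mu - eps * s_n))
    hi = math.floor(mu + eps * s_n)
    # Second moment carried by the "central" values |x - mu| <= eps * s_N.
    central = sum((x - mu) ** 2 * (1.0 - p) ** (x - 1) * p
                  for x in range(lo, hi + 1))
    return (var - central) / var


for n in (100, 1000, 10000):
    print(n, lindeberg_ring(n, 0.1))
```

The printed values decrease toward zero as $N$ grows, as the Lindeberg condition requires.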

| (A1) |

APPENDIX B: GEOMETRIC VARIABLES CONVERGING TO EXPONENTIAL VARIABLES

Proposition. Say we have a positive sequence , and some function such that and

Then if , and , we have

| (B1) |

Proof. We compute the characteristic functions of both sides and show that the ratio of these functions goes to 1 in the limit. Since characteristic functions have modulus at most 1, this forces their difference to vanish, and by Lévy's continuity theorem pointwise convergence of characteristic functions implies convergence in distribution. This is therefore enough to establish Eq. (B1).

Let us define

If we split into the sum of geometric random variables and rearrange, we eventually get

| (B2) |

Similarly, if we set

then after we proceed through some more algebra, we find that

| (B3) |

Let us fix so that we can pointwise consider the ratio of the characteristic functions. After some manipulation, we find

We assumed that gets large, so there is some function that has vanishing magnitude with large such that

So then we have

Notice that . In addition, we already know the sum of goes to zero, so it must be that each individual gets large for all . This ensures the second term is small, and therefore it can be rewritten exactly as an appropriate exponential.

So

where the final limit comes from our assumption on . The ratio therefore converges to 1, which establishes the proposition.
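The mechanism behind Eq. (B1) can be illustrated numerically in a simplified setting (not the full proposition). This Python sketch, with hypothetical names, compares the characteristic function of a sum of scaled geometric variables $\mathrm{Geom}(\lambda_k/n)/n$ with that of a sum of independent $\mathrm{Exp}(\lambda_k)$ variables, and reports the largest deviation of their ratio from 1 over a few values of $t$.

```python
import cmath


def cf_scaled_geometric(t, p, scale):
    """Characteristic function of X / scale with X ~ Geom(p) on {1, 2, ...}."""
    z = cmath.exp(1j * t / scale)
    return p * z / (1 - (1 - p) * z)


def cf_exponential(t, rate):
    """Characteristic function of an Exp(rate) variable."""
    return rate / (rate - 1j * t)


def max_ratio_error(n, rates, ts):
    """max over t in ts of |phi_geom_sum(t) / phi_exp_sum(t) - 1|, where the
    geometric sum uses Geom(rate / n) / n for each rate (requires rate < n)."""
    worst = 0.0
    for t in ts:
        ratio = 1 + 0j
        for lam in rates:
            ratio *= cf_scaled_geometric(t, lam / n, n) / cf_exponential(t, lam)
        worst = max(worst, abs(ratio - 1))
    return worst


rates, ts = [1.0, 2.0, 3.0], [0.5, 1.0, 2.0, 5.0]
for n in (10, 100, 10000):
    print(n, max_ratio_error(n, rates, ts))
```

For fixed $t$ the deviation shrinks roughly in proportion to $1/n$, which is the pointwise convergence the proof relies on.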

APPENDIX C: SUM OF EXPONENTIALS

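Proposition. If $X_i \sim \mathrm{Exp}(\lambda_i)$ for $i = 1, \dots, n$ are independent exponential random variables, with the rates $\lambda_i$ distinct, then $S_n = \sum_{i=1}^{n} X_i$ is distributed according to the density

$$f_n(x) = \sum_{i=1}^{n} \lambda_i e^{-\lambda_i x} \prod_{j \neq i} \frac{\lambda_j}{\lambda_j - \lambda_i} \tag{C1}$$

on $x \ge 0$.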

Proof. This is mostly a straightforward induction. The base case of a single exponential variable is checked directly. For the inductive step, we convolve the previous step with a new exponential distribution, so

After some calculation, we find

The first term is in the desired form, but the second term requires some work. After some further manipulation, we can get

where we define

We can interpret as a polynomial of at most degree in (a Lagrange polynomial, to be specific).

But notice that for . This means that is a polynomial with distinct roots, which is more than what its maximum degree should normally allow. The only way that is possible is if is a constant zero, so . Plugging this in and simplifying gives

which is the desired result.
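The closed form in Eq. (C1) is easy to sanity-check numerically. The Python sketch below (function names hypothetical) evaluates the density of a sum of independent exponentials with distinct rates, using the standard form $f(x) = \sum_i \lambda_i e^{-\lambda_i x}\prod_{j\ne i} \lambda_j/(\lambda_j-\lambda_i)$, and checks by trapezoidal integration that it integrates to 1 with mean $\sum_i 1/\lambda_i$. At $x = 0$ the density vanishes whenever there are two or more rates, as it must for a sum of two or more independent exponentials.

```python
import math


def hypoexponential_pdf(x, rates):
    """Density of a sum of independent Exp(rate) variables with distinct
    rates. Numerically delicate if two rates are nearly equal."""
    if x < 0:
        return 0.0
    total = 0.0
    for i, li in enumerate(rates):
        coef = li
        for j, lj in enumerate(rates):
            if j != i:
                coef *= lj / (lj - li)
        total += coef * math.exp(-li * x)
    return total


def trapezoid(f, a, b, steps=40000):
    """Composite trapezoidal rule on [a, b]."""
    h = (b - a) / steps
    return h * (0.5 * f(a) + 0.5 * f(b)
                + sum(f(a + k * h) for k in range(1, steps)))


rates = [1.0, 2.0, 3.0]
print(trapezoid(lambda x: hypoexponential_pdf(x, rates), 0.0, 40.0))
print(trapezoid(lambda x: x * hypoexponential_pdf(x, rates), 0.0, 40.0))
```

With rates 1, 2, 3 the density is $3e^{-x} - 6e^{-2x} + 3e^{-3x}$, and the two printed values should be close to 1 and $1 + 1/2 + 1/3$.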

APPENDIX D: TRUNCATION OF SEQUENCES

Proposition. Let with . Further say that is integer valued with . Given a positive sequence , assume

and given that . Then

| (D1) |

Proof. As before, the proof involves showing the ratio of characteristic functions converges to 1. The full series on the right has the function

and the truncated series on the left has

So naturally, we fix a and get the ratio

Because of our last condition, we know that is small for all in this range. So we can do a Taylor expansion and make a function which is small in magnitude so that

Again, and is large, so we can again shift to an exponential to get

where is small in magnitude again. But notice that this is based on the tail of a convergent sum. So

And so .
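A standard example shows why truncation is harmless when the dropped terms have large rates. If $E_k \sim \mathrm{Exp}(k)$ are independent, then $\sum_{k \ge 1} (E_k - 1/k)$ has the law of a standard Gumbel variable minus the Euler-Mascheroni constant. The Python sketch below (our illustration, not the paper's Eq. (D1); names hypothetical) measures how much the factors with $k > m$ change the characteristic function at a fixed $t$; the deviation shrinks roughly like $t^2/(2m)$.

```python
import cmath


def centered_exp_cf(t, k):
    """Characteristic function of E_k - 1/k, where E_k ~ Exp(k)."""
    return k / (k - 1j * t) * cmath.exp(-1j * t / k)


def tail_cf_deviation(t, m, upper):
    """|prod_{k=m+1}^{upper} phi_k(t) - 1|: the effect on the characteristic
    function of dropping the terms beyond the truncation point m."""
    prod = 1 + 0j
    for k in range(m + 1, upper + 1):
        prod *= centered_exp_cf(t, k)
    return abs(prod - 1)


for m in (10, 100, 1000):
    print(m, tail_cf_deviation(1.0, m, 100000))
```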

APPENDIX E: EDGE PERTURBATIONS

In principle we could show a more general statement here, but we are only going to directly calculate the effect of a perturbation once in this paper. So, for the sake of readability, we are just going to do this specific example.

Recall that for the complete graph with . Also recall that and are the truncated sequences up to . Let be the characteristic function associated with the normalized sum , and is the characteristic function associated with . Then

Notice in the range of 's in the product and is therefore small. We go through some Taylor expansions and cancellations and, using to represent functions of small magnitude, we find

The first term can once again be turned into an exponential (thanks to the smallness of these terms), and so we get

Therefore, we get convergence to 1 if the sum converges to zero as gets large. But this sum is easy to bound from above. That is,

This means we get that , implying that the truncated sum for the complete graph converges to the truncated distribution for the coupon collector's problem.

APPENDIX F: THE LINDEBERG CONDITION (TWO DIMENSIONS)

Much like in the 1D lattice case, we cannot directly use the classical central limit theorem, because the variables are not identically distributed and have a dependence on . But once again, we can apply the Lindeberg-Feller theorem. We focus on the 2D case, so we have . Let

Because we are only looking at the special 2D case, .

Notice that for any higher dimension we would get , which quickly converges to a finite number as gets large, whereas with , we have that gets large for large . This distinction is what lets us apply the theorem to the 2D case, but not the rest.

Anyway, it is easy to check that and . So then

So in order to apply the theorem, we only need to check if, for any fixed , we have

| (F1) |

If this final condition holds, then we can cite the theorem and conclude that is distributed as a normal as gets large.

We need not care about the case, because this is equivalent to asking for . However, scales as in the limit of large whereas , so this quantity will always eventually become negative, whereas exponential variables are always positive.

Therefore, let us focus on the positive half. Letting and integrating, we get

Substituting in gives us

That last term will be the dominant term as gets large, so we can choose some positive constant (which may depend on the fixed threshold in Eq. (F1)) such that

So, we have

We can bound the harmonic sum from below with a constant times , so there is some positive such that

We can approximate this sum from above by interpreting it as a Riemann sum. By taking the appropriate integral, we get

Second moments are always non-negative, which means

So Eq. (F1) is confirmed. As a consequence, we can cite the Lindeberg-Feller theorem and conclude that

| (F2) |

APPENDIX G: NON-NORMALITY OF

There are many possible ways to show that a distribution does not converge to a normal in a limit. But to show that the distribution of for a $d$-dimensional lattice [as defined in Eq. (20)] is not normal, it suffices to consider the moments. We already know that has a mean of zero and a variance of 1; so if were normally distributed in the limit, then we should expect that by symmetry.

We can reuse Eq. (B3) to find the characteristic function of by plugging in and . Because , then . By the definition of the characteristic function, we know that if we expand in powers of , then

So we can get the third moment by just reading off the coefficient of the term.

Returning to Eqs. (20) and (B3), let . Using the standard expansions for and , we find

If we do not care about high order terms in , then this is an easy product to take. In fact, if we collect terms and plug in for , we get

This means, for any finite , the third moment of is simply

Although is a function that depends on , this quantity will never get large. In fact, since , then we know in the limit of large that

| (G1) |

where is the Riemann zeta function. In the range of 's presented, neither diverges nor hits zero, so the above will never be zero. In fact, the right hand side of Eq. (G1) is monotone, so each distinct will produce a distinct third moment and therefore a distinct distribution. As a side note, if we take , we get , which is the correct value for the third moment of a normalized Gumbel distribution, as expected. However, since the rest are distinct, that means we only transition to an exact Gumbel distribution in the extreme limit.
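The Gumbel reference value for the third moment can be reproduced from cumulants. For a sum of independent exponentials with rates $\lambda_k$, the second and third cumulants are $\sum_k \lambda_k^{-2}$ and $2\sum_k \lambda_k^{-3}$, so rates $\lambda_k = k$ give skewness $2\zeta(3)/\zeta(2)^{3/2} = 12\sqrt{6}\,\zeta(3)/\pi^3 \approx 1.14$. The Python sketch below (names hypothetical) checks this with a long partial sum; it illustrates only the Gumbel reference value and does not reproduce the $d$-dependent Eq. (G1).

```python
import math

ZETA3 = 1.2020569031595942  # Apery's constant, zeta(3)
GUMBEL_SKEW = 12 * math.sqrt(6) * ZETA3 / math.pi**3


def exp_sum_skewness(rates):
    """Skewness of a sum of independent Exp(rate) variables."""
    k2 = sum(1.0 / r**2 for r in rates)
    k3 = sum(2.0 / r**3 for r in rates)
    return k3 / k2**1.5


one_sided = exp_sum_skewness(range(1, 200001))
# Each rate appearing twice (as for the two ends of takeover on the complete
# graph) gives the skewness of a convolution of two identical Gumbels.
two_sided = exp_sum_skewness([k for k in range(1, 200001) for _ in range(2)])
print(GUMBEL_SKEW, one_sided, two_sided, GUMBEL_SKEW / math.sqrt(2))
```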

In summary: given , we never expect to have a zero third moment in the limit of large , and so can never converge to a normal distribution. Moreover, because their third moments depend on , we expect to converge to a different distribution for each . Hence we expect there is no upper critical dimension.

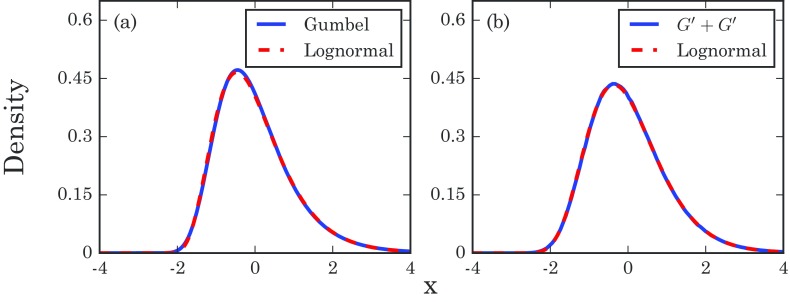

APPENDIX H: LOGNORMAL DISTRIBUTIONS CAN MASQUERADE AS GUMBEL DISTRIBUTIONS

In Sec. VII B we noted that the distribution of disease incubation periods and other times of medical interest have often been fit by a lognormal. However, given the noise in real data, it is entirely possible that the true distribution should have been a Gumbel distribution (or a convolution of two Gumbel distributions) and was impersonated by a similar-looking lognormal.

Moreover, since most studies used three-parameter lognormals, it would always be possible to match the first three moments of the data. We do so in Fig. 10, producing a very close fit to both a Gumbel distribution and a convolution of two Gumbel distributions. We can compare these densities using the Kolmogorov metric, given by the maximum absolute difference between their cumulative distribution functions. Using this, we find that the normalized Gumbel distribution is away from its corresponding lognormal in this metric. For the convolution of two Gumbel distributions, we can numerically estimate that its corresponding lognormal is away in the Kolmogorov metric.
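The moment matching for panel (a) is straightforward to sketch. The Python code below (names hypothetical) matches a three-parameter lognormal to mean 0, variance 1, and the normalized Gumbel skewness $12\sqrt{6}\,\zeta(3)/\pi^3$, using the lognormal skewness $(w+2)\sqrt{w-1}$ with $w = e^{\sigma^2}$, and then estimates the Kolmogorov distance on a grid. A grid maximum only approximates the supremum, and the two-Gumbel case of panel (b) would also need a numerical CDF for the convolution, which this sketch omits.

```python
import math

EULER_GAMMA = 0.5772156649015329
ZETA3 = 1.2020569031595942
GUMBEL_SKEW = 12 * math.sqrt(6) * ZETA3 / math.pi**3


def lognormal_skew(sigma):
    w = math.exp(sigma**2)
    return (w + 2.0) * math.sqrt(w - 1.0)


def match_lognormal(skew, mean=0.0, var=1.0):
    """(shift, mu, sigma) of a three-parameter lognormal with the given mean,
    variance, and positive skewness. Skewness depends only on sigma and
    increases with it, so sigma is found by bisection."""
    lo, hi = 1e-8, 3.0
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if lognormal_skew(mid) < skew:
            lo = mid
        else:
            hi = mid
    sigma = 0.5 * (lo + hi)
    w = math.exp(sigma**2)
    mu = 0.5 * math.log(var / ((w - 1.0) * w))     # Var = (w - 1) w e^{2 mu}
    shift = mean - math.exp(mu + sigma**2 / 2.0)   # E = shift + e^{mu + sigma^2/2}
    return shift, mu, sigma


def lognormal_cdf(x, shift, mu, sigma):
    if x <= shift:
        return 0.0
    z = (math.log(x - shift) - mu) / sigma
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def normalized_gumbel_cdf(x):
    """CDF of (G - EULER_GAMMA) / (pi / sqrt(6)), G a standard Gumbel."""
    return math.exp(-math.exp(-(x * math.pi / math.sqrt(6) + EULER_GAMMA)))


def kolmogorov_distance(f, g, lo=-6.0, hi=12.0, steps=20000):
    """Grid estimate of sup_x |F(x) - G(x)|."""
    grid = (lo + (hi - lo) * i / steps for i in range(steps + 1))
    return max(abs(f(x) - g(x)) for x in grid)


params = match_lognormal(GUMBEL_SKEW)
print(params)
print(kolmogorov_distance(normalized_gumbel_cdf,
                          lambda x: lognormal_cdf(x, *params)))
```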

FIG. 10.

A properly chosen three-parameter lognormal distribution can closely approximate (a) a Gumbel distribution or (b) a convolution of two Gumbel distributions. The parameters in these lognormals were chosen to fit the first three moments of the Gumbel or Gumbel+Gumbel distributions.

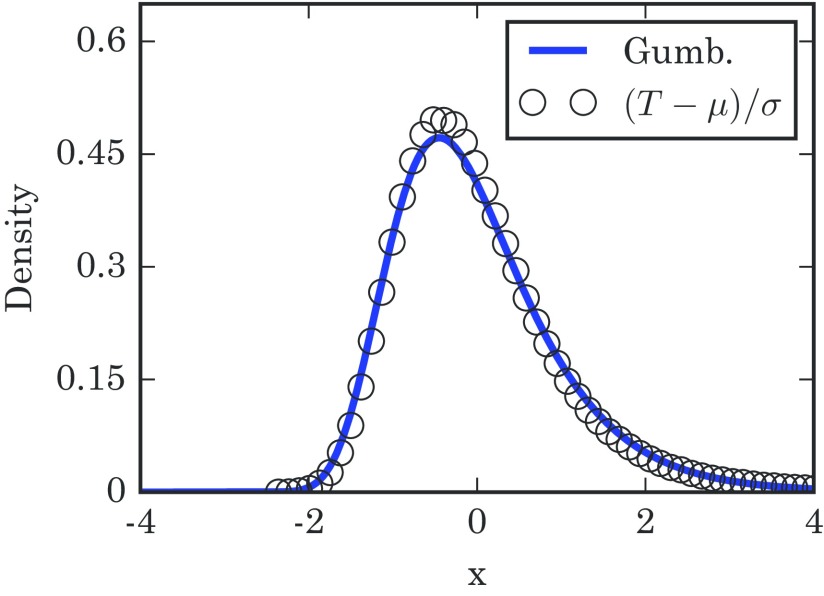

APPENDIX I: NETWORK RESILIENCE OF RIGHT SKEWS

Our theory thus far has primarily addressed homogeneous networks. However, our results show some numerical robustness. For example, when takeover times are measured for certain more complicated heterogeneous networks (e.g., a Barabási-Albert scale-free network; see Fig. 11), we still obtain nearly Gumbel distributions. However, lacking a theory for these networks, we cannot claim that this convergence occurs in the limit of large . Rigorous understanding of these heterogeneous networks remains an open question.

FIG. 11.

Distribution of takeover times for a Barabási-Albert scale-free network with a minimum degree of 3 and nodes. Simulation results were compiled from runs, all using the same realization of the random graph and all with the initial infection starting at the same node. The solid line is the distribution for reference. and are the numerically calculated mean and variance of .

REFERENCES

- [1] R. M. Anderson and R. M. May, Infectious Diseases of Humans: Dynamics and Control (Oxford University Press, Oxford, UK, 1991).

- [2] M. J. Keeling and K. T. Eames, J. R. Soc. Interface 2, 295 (2005). 10.1098/rsif.2005.0051

- [3] O. Diekmann, H. Heesterbeek, and T. Britton, Mathematical Tools for Understanding Infectious Disease Dynamics (Princeton University Press, Princeton, NJ, 2012).

- [4] R. Pastor-Satorras, C. Castellano, P. Van Mieghem, and A. Vespignani, Rev. Mod. Phys. 87, 925 (2015). 10.1103/RevModPhys.87.925

- [5] S. Bikhchandani, D. Hirshleifer, and I. Welch, J. Political Econ. 100, 992 (1992). 10.1086/261849

- [6] D. J. Watts, Proc. Natl. Acad. Sci. USA 99, 5766 (2002). 10.1073/pnas.082090499

- [7] L. M. Bettencourt, A. Cintrón-Arias, D. I. Kaiser, and C. Castillo-Chávez, Physica A 364, 513 (2006). 10.1016/j.physa.2005.08.083

- [8] J. Aharony and I. Swary, J. Bus. 56, 305 (1983). 10.1086/296203

- [9] F. Allen and D. Gale, J. Political Econ. 108, 1 (2000). 10.1086/262109

- [10] R. M. May, S. A. Levin, and G. Sugihara, Nature (London) 451, 893 (2008). 10.1038/451893a

- [11] R. M. May and N. Arinaminpathy, J. R. Soc. Interface 7, 823 (2010). 10.1098/rsif.2009.0359

- [12] A. G. Haldane and R. M. May, Nature (London) 469, 351 (2011). 10.1038/nature09659

- [13] J. O. Kephart and S. R. White, in Proceedings of the IEEE Computer Society Symposium on Research in Security and Privacy (IEEE, Piscataway, NJ, 1991), pp. 343–359.

- [14] Z. J. Haas, J. Y. Halpern, and L. Li, IEEE/ACM Trans. Netw. 14, 479 (2006). 10.1109/TNET.2006.876186

- [15] D. J. Daley and D. G. Kendall, IMA J. Appl. Math. 1, 42 (1965). 10.1093/imamat/1.1.42

- [16] M. Draief and L. Massoulié, Epidemics and Rumours in Complex Networks (Cambridge University Press, Cambridge, UK, 2010).

- [17] R. R. Provine, Am. Sci. 93, 532 (2005). 10.1511/2005.56.980

- [18] D. Aldous, Bernoulli 19, 1122 (2013). 10.3150/12-BEJSP04

- [19] A. Auffinger, M. Damron, and J. Hanson, arXiv:1511.03262.

- [20] E. Lieberman, C. Hauert, and M. A. Nowak, Nature (London) 433, 312 (2005). 10.1038/nature03204

- [21] T. Antal, S. Redner, and V. Sood, Phys. Rev. Lett. 96, 188104 (2006). 10.1103/PhysRevLett.96.188104

- [22] L. Hindersin and A. Traulsen, J. R. Soc. Interface 11, 20140606 (2014). 10.1098/rsif.2014.0606

- [23] L. Hindersin and A. Traulsen, PLoS Comput. Biol. 11, e1004437 (2015). 10.1371/journal.pcbi.1004437

- [24] P. Ashcroft, A. Traulsen, and T. Galla, Phys. Rev. E 92, 042154 (2015). 10.1103/PhysRevE.92.042154

- [25] T. Williams and R. Bjerknes, Nature (London) 236, 19 (1972). 10.1038/236019a0

- [26] W. Feller, An Introduction to Probability Theory and Its Applications: Volume I (Wiley, New York, 1968).

- [27] A. Pósfai, arXiv:1006.3531.

- [28] P. Erdős and A. Rényi, Publ. Math. Inst. Hung. Acad. Sci. 6, 215 (1961).

- [29] R. A. Fisher and L. H. C. Tippett, Math. Proc. Cambridge Philos. Soc. 24, 180 (1928). 10.1017/S0305004100015681

- [30] S. Kotz and S. Nadarajah, Extreme Value Distributions: Theory and Applications (World Scientific, Singapore, 2000).

- [31] K. Read, Am. Stat. 52, 175 (1998). 10.2307/2685477

- [32] H. Rubin and J. Zidek, DTIC Technical Report, 1965.

- [33] L. E. Baum and P. Billingsley, Ann. Math. Stat. 36, 1835 (1965). 10.1214/aoms/1177699813

- [34] A. Gautreau, A. Barrat, and M. Barthélemy, J. Stat. Mech. (2007) L09001. 10.1088/1742-5468/2007/09/L09001

- [35] T. Williams, J. R. Stat. Soc. B 27, 338 (1965).

- [36] R. Durrett and J. Schweinsberg, Theor. Popul. Biol. 66, 129 (2004). 10.1016/j.tpb.2004.04.002

- [37] R. van der Hofstad, G. Hooghiemstra, and P. Van Mieghem, Extremes 5, 111 (2002). 10.1023/A:1022175620150

- [38] M. Bramson and D. Griffeath, Math. Proc. Cambridge Philos. Soc. 88, 339 (1980). 10.1017/S0305004100057650

- [39] M. E. J. Newman, S. H. Strogatz, and D. J. Watts, Phys. Rev. E 64, 026118 (2001). 10.1103/PhysRevE.64.026118

- [40] M. E. J. Newman, Phys. Rev. E 66, 016128 (2002). 10.1103/PhysRevE.66.016128

- [41] M. E. J. Newman and D. J. Watts, Phys. Rev. E 60, 7332 (1999). 10.1103/PhysRevE.60.7332

- [42] D. Richardson, Math. Proc. Cambridge Philos. Soc. 74, 515 (1973). 10.1017/S0305004100077288

- [43] M. A. Nowak, Evolutionary Dynamics: Exploring the Equations of Life (Harvard University Press, Cambridge, MA, 2006).

- [44] P. A. P. Moran, Proc. Cambridge Philos. Soc. 54, 463 (1958). 10.1017/S0305004100003017

- [45] B. Adlam, K. Chatterjee, and M. Nowak, Proc. R. Soc. A 471, 20150114 (2015). 10.1098/rspa.2015.0114

- [46] M. Frean, P. B. Rainey, and A. Traulsen, Proc. R. Soc. B 280, 20130211 (2013). 10.1098/rspb.2013.0211

- [47] L. Hindersin, M. Möller, A. Traulsen, and B. Bauer, Biosystems 150, 87 (2016). 10.1016/j.biosystems.2016.08.010

- [48] L. Hindersin, B. Werner, D. Dingli, and A. Traulsen, Biol. Direct 11, 41 (2016). 10.1186/s13062-016-0140-7

- [49] S. Bhamidi, R. van der Hofstad, and G. Hooghiemstra, Bernoulli 19, 363 (2013). 10.3150/11-BEJ402

- [50] M. Eckhoff, J. Goodman, R. van der Hofstad, and F. R. Nardi, arXiv:1512.06152.

- [51] M. Eckhoff, J. Goodman, R. van der Hofstad, and F. R. Nardi, arXiv:1512.06145.

- [52] S. Janson, Combinatorics Probab. Comput. 8, 347 (1999). 10.1017/S0963548399003892

- [53] M. Kardar, G. Parisi, and Y.-C. Zhang, Phys. Rev. Lett. 56, 889 (1986). 10.1103/PhysRevLett.56.889

- [54] M. Cieplak, A. Maritan, and J. R. Banavar, Phys. Rev. Lett. 76, 3754 (1996). 10.1103/PhysRevLett.76.3754

- [55] W. A. Sawyer, J. Am. Med. Assoc. 63, 1537 (1914). 10.1001/jama.1914.02570180023005

- [56] J. R. Miner, J. Infect. Dis. 31, 296 (1922). 10.1093/infdis/31.3.296

- [57] P. E. Sartwell, Am. J. Hyg. 51, 310 (1950).

- [58] P. E. Sartwell, Am. J. Pub. Health Nat. Health 42, 1403 (1952). 10.2105/AJPH.42.11.1403

- [59] P. E. Sartwell, Am. J. Epidemiol. 83, 204 (1966). 10.1093/oxfordjournals.aje.a120576

- [60] E. W. Goodall, Br. Med. J. 1, 73 (1931). 10.1136/bmj.1.3653.73-a

- [61] J. Lessler, N. G. Reich, R. Brookmeyer, T. M. Perl, K. E. Nelson, and D. A. Cummings, Lancet Infect. Dis. 9, 291 (2009). 10.1016/S1473-3099(09)70069-6

- [62] R. Brookmeyer, E. Johnson, and S. Barry, Stat. Med. 24, 531 (2005). 10.1002/sim.2033

- [63] H. Nishiura, Emerging Themes Epidemiol. 4, 2 (2007). 10.1186/1742-7622-4-2

- [64] H. K. Armenian and A. M. Lilienfeld, Am. J. Epidemiol. 99, 92 (1974). 10.1093/oxfordjournals.aje.a121599

- [65] J. W. Boag, J. R. Stat. Soc. B 11, 15 (1949).

- [66] M. Feinleib and B. MacMahon, Blood 15, 332 (1960).

- [67] R. Durrett, Probability: Theory and Examples (Brooks-Cole, Belmont, MA, 1991).