Abstract

Background:

The provision of health-care services depends on the effective and efficient functioning of the various components of a health-care system. It is therefore important to evaluate how these components function. The aim of this study was to review studies on health-care facility efficiency in sub-Saharan Africa (SSA) with respect to the methodologies used, the outcomes, and the factors influencing efficiency.

Methods:

The review was conducted through a comprehensive search of electronic databases, which included PubMed, Web of Science, Academic Search Complete via EBSCOhost, ScienceDirect, and Google Scholar. A search was also conducted by examining citations in the reference lists of selected articles and through gray literature. Studies were screened by examining their titles, abstracts, and full texts based on stated inclusion and exclusion criteria. Screening and data extraction were conducted concurrently by the two authors.

Results:

A total of 40 studies were shortlisted for the review. The majority (90.0%) of the studies employed the data envelopment analysis technique for their efficiency measurements. The input and output variables used by most of the studies were predominantly human resources and health-related services, respectively. The outcomes from the majority of the studies showed that less than 40% of the studied facilities were efficient. The leading influencing factors reported by the studies were catchment population, facility ownership, and location.

Conclusions:

The review showed that there was a marked degree of inefficiency across the health-care facilities. Consequently, due to severe resource constraints facing SSA, there is a need to determine how to use the available resources optimally to improve health systems performance.

Keywords: health facilities, efficiency, systematic review, sub-Saharan Africa

Background

A health-care system is a structure of production units consisting of sections focused on improving the health status of the population. Primary health care and its associated facilities are considered the gateway to higher levels of care.1 The examination of health system performance in most countries is usually based on the analysis of the system components that deliver care.2 Such an analysis allows for an understanding of how effectively and efficiently the service delivery components function. Health system functioning can be measured through cost-effectiveness analysis and through technical and allocative efficiency.

Efficiency indicates how well an organization has used its resources to produce the best outcome over a period.3 There are two main components of efficiency: allocative and technical efficiency.3 Technical efficiency refers to achieving the maximum possible output from a given set of inputs or, equivalently, producing a given output with the minimum possible inputs. With regard to health-care services, it refers to the physical relationship between the resources consumed, such as capital, labor, and equipment, and the related health outcomes.4 These outcomes may be defined either in terms of intermediate outputs, such as the number of patients treated and waiting time, or in terms of final outcomes, such as lower mortality rates and improved life expectancy.4 Allocative efficiency, on the other hand, refers to the ability of an organization to use different input resources in optimal proportions to produce a mix of different outputs, given input prices and the production technology.4,5 Overall, total efficiency is determined through the combined effect of both technical and allocative efficiency.4,5
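The combined effect described above is commonly written as a product, following the standard Farrell-type decomposition. The notation below (OE for overall or total efficiency, TE for technical efficiency, AE for allocative efficiency, and the facility index i) is introduced here for illustration and is not taken from the reviewed studies.

```latex
\mathrm{OE}_i = \mathrm{TE}_i \times \mathrm{AE}_i,
\qquad 0 < \mathrm{TE}_i \le 1, \quad 0 < \mathrm{AE}_i \le 1
```

As a hypothetical illustration, a facility with a technical efficiency of 0.80 and an allocative efficiency of 0.90 would have an overall efficiency of 0.80 × 0.90 = 0.72.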

In the face of a severe global disease burden, health-care investments are becoming a high public health priority globally.6 Sub-Saharan Africa (SSA) accounts for about 11% of the world’s population but bears 24% of the global disease burden.7 The region spends on average 6.1% of its total gross domestic product (GDP) on health,8 a value greater than the average total health expenditure as a share of GDP of most of the Next Eleven (Next-11) nations9 but less than that of most developed countries, such as the United States, which spends about 15% of its GDP on health.10 Health facilities are known to consume the highest proportion of total health expenditure in most SSA countries, estimated at 45% to 81% of government health expenditure.11,12 As health facilities consume an increasing share of health-care resources, there is a need to determine whether the increase in input is accompanied by an increase in service provision. While health facilities such as hospitals and health centers constitute an important component of health systems in SSA, few studies have assessed health facility efficiency over the past three decades.13

In contrast to SSA, efficiency and health system performance studies are routinely conducted in developed countries, where they have become the norm. A wide range of instruments has been deployed, ranging from simple ratio analysis and unit costing to more advanced methodologies such as data envelopment analysis (DEA) and stochastic frontier analysis (SFA).14 Promoting efficiency measurement in SSA countries is essential to ensuring optimal resource utilization targeted at equity in health-care delivery. This review examines studies of health-care facility efficiency in SSA with respect to the methodologies used, the outcomes, and the factors influencing efficiency.

The majority of previous efficiency reviews were conducted in developed countries of Europe and Asia, and most are more than a decade old.15-20 Although a recent study reviewed efficiency measurement in low- and middle-income countries (LMICs),13 our study focused exclusively on efficiency measuring techniques in SSA as well as on the outcomes and factors influencing the efficiency of health facilities in the region. Findings from this study reveal the coverage and nature of research on the efficiency of health facilities in SSA over the last two decades. Thus, the review contributes to the tools available for the future conduct of efficiency studies.

Methods

This review was guided by the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) statement, which is a standard guideline for systematic reviews.21

Eligibility of Studies for the Review

The SPIDER (Sample, Phenomenon of Interest, Design, Evaluation, Research type) framework22 adapted from PICOs (Population, Intervention, Comparison, Outcomes, and Study setting) tool was used to determine the eligibility of studies for the review process (Table 1).

Table 1.

SPIDER Framework to Determine the Eligibility of Studies for the Review Process.

| SPIDER element | Eligibility criteria |
|---|---|
| Sample | Health-care facilities (including primary, secondary, and tertiary health facilities). Studies conducted in SSA countries |
| Phenomenon of Interest | Efficiency of health-care facilities |
| Designs | Cross-sectional study, survey, interview, focus group, case reports, and observational study |
| Evaluation | |
| Research type | Qualitative, quantitative, and mixed methods |

Strategy for Identifying Relevant Studies

We searched electronic databases from the year 2000 to October 2018. These databases included PubMed, Web of Science, Academic Search Complete via EBSCOhost, ScienceDirect, and Google Scholar. Articles were also retrieved from citations in the reference lists of the included articles. A gray literature search through the university library was conducted to source additional articles. The keywords used to search for relevant studies included “efficiency,” “inefficiency,” “hospital,” “health facilities,” “Sub-Saharan Africa,” “productivity,” “performance,” and “health centre.” To identify factors associated with efficiency measurements, the additional search terms “factor,” “determinant,” and “influence” were added. These keywords were combined in different ways to form search strings during the database search to generate potentially relevant studies. In some instances, the keywords were also truncated (eg, efficien*) so as to retrieve relevant studies. For full articles that could not be obtained online, the University of KwaZulu-Natal library service was consulted for assistance. Some authors were also contacted for full articles and other relevant studies. Studies were comprehensively screened by examining their titles and abstracts against the stated inclusion and exclusion criteria. Screening and data extraction were conducted concurrently by two reviewers.
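The way keywords can be combined into a Boolean search string is sketched below. The exact strings used in the review are not reported, so the grouping of terms into concept, facility, setting, and factor blocks, and the helper names `or_block` and `build_search_string`, are assumptions made purely for illustration.

```python
# Keyword groups drawn from the search terms listed above. The grouping into
# blocks is an assumption of this sketch, not the authors' reported strategy.
CONCEPT_TERMS = ["efficien*", "inefficiency", "productivity", "performance"]
FACILITY_TERMS = ["hospital", "health facilities", "health centre"]
SETTING_TERMS = ["Sub-Saharan Africa"]
FACTOR_TERMS = ["factor", "determinant", "influence"]


def or_block(terms):
    """Join synonyms with OR, quoting multi-word phrases."""
    quoted = [f'"{t}"' if " " in t else t for t in terms]
    return "(" + " OR ".join(quoted) + ")"


def build_search_string(*groups):
    """Combine keyword groups with AND, using one OR-block per group."""
    return " AND ".join(or_block(g) for g in groups)


# Main query, and a narrower query that adds the influencing-factor terms.
main_query = build_search_string(CONCEPT_TERMS, FACILITY_TERMS, SETTING_TERMS)
factor_query = build_search_string(
    CONCEPT_TERMS, FACILITY_TERMS, SETTING_TERMS, FACTOR_TERMS
)
print(main_query)
print(factor_query)
```

Individual databases differ in their truncation and phrase syntax, so a string built this way would typically need adjusting for each database.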

Study Selection Criteria

Studies were included in this systematic review if they fulfilled the following criteria:

– Used health facilities as the study population

– Studies on efficiency measurements

– Studies conducted in SSA

– Technical efficiency studies (studies including allocative efficiency were also considered)

– Studies published in English.

Studies were excluded based on the following characteristics:

– Efficiency studies that did not include health facilities

– Studies published in languages other than English

– Studies carried out on health facilities outside Sub-Saharan Africa
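The inclusion and exclusion criteria above can be expressed as a simple screening predicate. This is a minimal sketch: the `Study` record, its field names, and the two example records are hypothetical and do not correspond to any study screened in the review.

```python
from dataclasses import dataclass


@dataclass
class Study:
    """Hypothetical screening record; field names are illustrative only."""
    title: str
    uses_health_facilities: bool   # health facilities as the study population
    measures_efficiency: bool      # technical (and optionally allocative) efficiency
    conducted_in_ssa: bool
    language: str


def is_eligible(study):
    """Return True only if every inclusion criterion listed above is met."""
    return (
        study.uses_health_facilities
        and study.measures_efficiency
        and study.conducted_in_ssa
        and study.language == "English"
    )


candidates = [
    Study("Hypothetical hospital efficiency study", True, True, True, "English"),
    Study("Hypothetical district-level study", False, True, True, "English"),
]
included = [s for s in candidates if is_eligible(s)]
print(len(included))
```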

Study Validity Check

The methodological validity of included studies was assessed using a validated tool adapted from previously published review studies on resource utilization in health care.13,23-28 This tool contains 13 items arranged in 4 categories to assess the design, sample, indicators, and statistical methods of each study. Each checklist item was graded 1 point if the response was “Yes” and 0 if it was “No,” giving a total score ranging from 0 to 13. Studies with a total score of less than 6 points were considered to have low validity, those scoring 6 to 8 medium validity, and those scoring 9 or above high validity.13,23-28
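The scoring rule can be sketched as follows. The function name `validity_band` is hypothetical, and the sketch treats the three bands as exhaustive, so that a score of exactly 9 counts as high validity; that boundary handling is an assumption.

```python
def validity_band(item_responses):
    """Score a study from its 13 yes/no checklist responses.

    Each "Yes" (True) earns 1 point and each "No" (False) earns 0,
    so the total ranges from 0 to 13.
    """
    if len(item_responses) != 13:
        raise ValueError("the checklist described above has 13 items")
    score = sum(1 for response in item_responses if response)
    if score < 6:
        band = "low"
    elif score <= 8:
        band = "medium"
    else:
        # Assumption: scores of 9 and above are treated as high validity.
        band = "high"
    return score, band


# Hypothetical study answering "Yes" to 10 of the 13 items.
print(validity_band([True] * 10 + [False] * 3))
```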

Data Extraction

All eligible studies and articles were exported to and stored in EndNote version 9.0 reference management software. We created a Google template form based on the study aim and objectives to assist with the extraction of data. This form was used to extract background data (details of the author, year of publication, and study location), methodologies used (type of study, efficiency measurement approach, input variables, output variables, and statistical technique used), study outcome, influencing factors, and other relevant information. The data extraction form was first piloted and then continually updated during the data extraction process. The extracted information was exported into an Excel spreadsheet for data cleaning, analysis, and presentation. We imported the spreadsheet into NVivo version 11 for data grouping and thematic analysis.

Results

Description of Selected studies

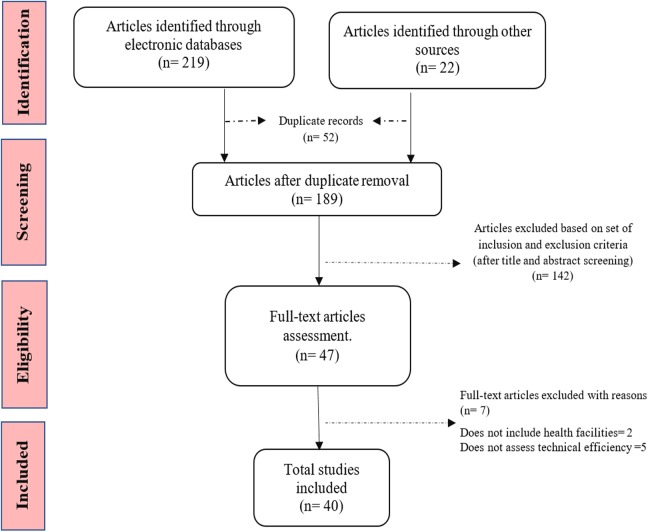

A total of 241 potentially relevant studies were identified during the literature search. Of these, 219 were retrieved from five electronic databases (PubMed, Web of Science, Academic Search Complete via EBSCOhost, ScienceDirect, and Google Scholar), while 22 were identified through manual search (gray literature). The retrieved studies were screened for duplicate records, and 52 duplicates were identified and removed using EndNote. Subsequently, the screened articles were assessed against the inclusion and exclusion criteria, and a total of 149 studies that did not fulfill the inclusion criteria were excluded. Seven studies were excluded after the second screening and full abstract assessment. Finally, 40 eligible studies were included in the systematic review. The study selection and screening procedure is shown in Figure 1.

Figure 1.

Preferred Reporting Items for Systematic Review and Meta-Analysis (PRISMA) Flowchart showing study selection procedure.

The study validity check showed that 37 (92.5%) of the included studies had high validity scores and 3 (7.5%) had medium scores. Studies with high validity had a defined statistical approach, used several inputs and outputs, and clearly defined the study period, while those with medium validity mostly had a sample size that was not clearly justified and input and output variables that were limited and not clearly defined.

Description of Included studies

Approximately 55% of the studies were published between 2010 and 2018, while 45% were published between 2000 and 2009. The majority of the studies were carried out in three regions of SSA (Eastern Africa, Southern Africa, and Western Africa), while two of the studies were intercountry. The Western African region had the highest number of efficiency studies (Table 2).

Table 2.

Region and Country by Year of Publication.

| Region | Country | Published 2000-2009, n (%) | Published 2010-2018, n (%) | Total, n |
|---|---|---|---|---|
| Eastern Africa | Eritrea | 0 (0.0) | 1 (100.0) | 1 |
| | Ethiopia | 0 (0.0) | 3 (100.0) | 3 |
| | Kenya | 2 (66.7) | 1 (33.3) | 3 |
| | Seychelles | 1 (100.0) | 0 (0.0) | 1 |
| | Tanzania | 0 (0.0) | 1 (100.0) | 1 |
| | Uganda | 1 (33.3) | 2 (66.7) | 3 |
| | Total | 4 (33.3) | 8 (66.7) | 12 |
| Southern Africa | Angola | 1 (100.0) | 0 (0.0) | 1 |
| | Botswana | 1 (50.0) | 1 (50.0) | 2 |
| | Namibia | 1 (100.0) | 0 (0.0) | 1 |
| | South Africa | 4 (100.0) | 0 (0.0) | 4 |
| | Zambia | 2 (100.0) | 0 (0.0) | 2 |
| | Total | 9 (90.0) | 1 (10.0) | 10 |
| Western Africa | Burkina Faso | 1 (50.0) | 1 (50.0) | 2 |
| | Gambia | 0 (0.0) | 1 (100.0) | 1 |
| | Ghana | 3 (50.0) | 3 (50.0) | 6 |
| | Nigeria | 0 (0.0) | 5 (100.0) | 5 |
| | Sierra Leone | 1 (50.0) | 1 (50.0) | 2 |
| | Total | 5 (31.3) | 11 (68.7) | 16 |
| Multiple Countries | Kenya and Swaziland | 0 (0.0) | 1 (100.0) | 1 |
| | Kenya, Uganda, Zambia | 0 (0.0) | 1 (100.0) | 1 |
| | Total | 0 (0.0) | 2 (100.0) | 2 |
| Overall Total | | 18 (45.0) | 22 (55.0) | 40 |

Eight of the 12 studies conducted in Eastern Africa were published between 2010 and 2018. Eleven of the 16 Western Africa studies were published between 2010 and 2018, while the majority (90.0%) of the Southern Africa studies were published more than 10 years ago.

The health facility types studied were grouped into four major categories: primary health-care centers; secondary or district hospitals; tertiary, specialist, and teaching hospitals; and others (mainly health posts and voluntary medical male circumcision facilities). Most studies were conducted in primary health-care facilities only (37.5%) or in secondary/district health facilities only (25.0%). Seven of the studies were conducted in more than one facility type: four covered both primary and secondary health facilities, one covered secondary and tertiary facilities, and two covered all three types. Concerning facility ownership, the majority (65.0%) of the studies were conducted in government-owned facilities. Some (17.5%) of the studies were conducted in both government-owned and privately owned facilities. Four studies assessed a combination of government-, private-, mission-, and NGO-owned facilities.

Most of the studies relied on secondary data sources, while a few used both primary and secondary sources. Secondary data collection involved retrieving relevant data from health information databases, while primary data collection was carried out by trained research assistants who collected data directly from the health facilities. The data collection period ranged from 1 to 9 years, with a mean of 2.31 ± 2.04 years (Table 3).

Table 3.

Studies Description.

| Description | Number of Studies (n = 40) | Percentage (%) |

|---|---|---|

| Facility type used | ||

| Primary | 15 | 37.5 |

| Secondary/district | 10 | 25.0 |

| Tertiary/teaching/specialist | 5 | 12.5 |

| Primary and secondary | 4 | 10.0 |

| Secondary and tertiary | 1 | 2.5 |

| Primary, secondary, and tertiary | 2 | 5.0 |

| Others | 3 | 7.5 |

| Ownership | ||

| Public | 26 | 65.0 |

| Private | 1 | 2.5 |

| Public and private | 7 | 17.5 |

| Public and mission | 1 | 2.5 |

| Public, private, mission, and NGO | 4 | 10.0 |

| Not specified | 1 | 2.5 |

| Data source | ||

| Primary | 12 | 30.0 |

| Secondary | 25 | 62.5 |

| Primary and secondary | 3 | 7.5 |

| Data collection years | ||

| Single year | 26 | 65.0 |

| Multiple years | 14 | 35.0 |

| Mean (±SD), years | 2.31 ± 2.04 | |

| Maximum data collection year frame | 9 | |

| Data collection period | ||

| Before 2000 | 5 | 12.5 |

| 2000-2009 | 22 | 55.0 |

| 2010-2018 | 13 | 32.5 |

Efficiency Measuring Techniques

In general, there are two main techniques for measuring technical efficiency: parametric and nonparametric.29,30 A parametric approach involves the stochastic frontier production function based on a set of explanatory variables.30 On the other hand, the nonparametric approach uses linear programming to measure the relative efficiency of some decision-making units (DMUs; organizations) through identification of an optimal mix of inputs and outputs based on the best-performing unit within the set.30 The most common type of parametric technique is SFA while that of nonparametric is DEA.
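The linear program behind the nonparametric approach can be written in its standard input-oriented, constant-returns-to-scale envelopment form. The notation is introduced here for illustration and is not taken from the reviewed studies: there are n DMUs, each using m inputs x and producing s outputs y, and the DMU under evaluation is indexed by o.

```latex
\begin{aligned}
\min_{\theta,\,\lambda}\quad & \theta \\
\text{s.t.}\quad & \sum_{j=1}^{n} \lambda_j x_{ij} \le \theta\, x_{io}, && i = 1,\dots,m \\
& \sum_{j=1}^{n} \lambda_j y_{rj} \ge y_{ro}, && r = 1,\dots,s \\
& \lambda_j \ge 0, && j = 1,\dots,n
\end{aligned}
```

The optimal value of θ is the efficiency score of DMU o: a score of 1 places the unit on the frontier formed by the best-performing units in the sample, while a score below 1 gives the proportion to which its inputs could be scaled down without reducing its outputs. Adding the convexity constraint that the weights λ sum to 1 gives the usual variable-returns-to-scale variant.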

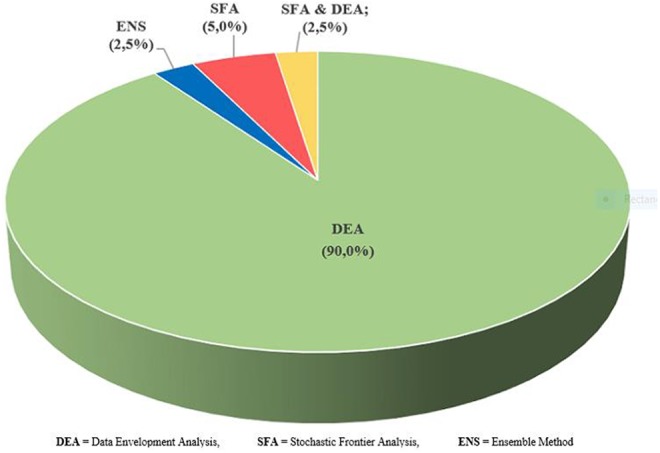

Three different efficiency measuring techniques were identified among the studies: DEA, SFA, and Ensemble method (ENS). The majority (90.0%) of the studies used DEA while 2 studies (5.0%) used both DEA and SFA. Though not a common technique, the ENS is a combination of restricted versions of data envelopment analysis (rDEA) and stochastic distance function (rSDF) which was used by one of the studies (Figure 2).31

Figure 2.

Efficiency measuring techniques.

Studies that employed DEA used it either alone or together with a second-stage analysis involving the Malmquist total factor productivity index, Tobit regression analysis, and correlation efficiency.

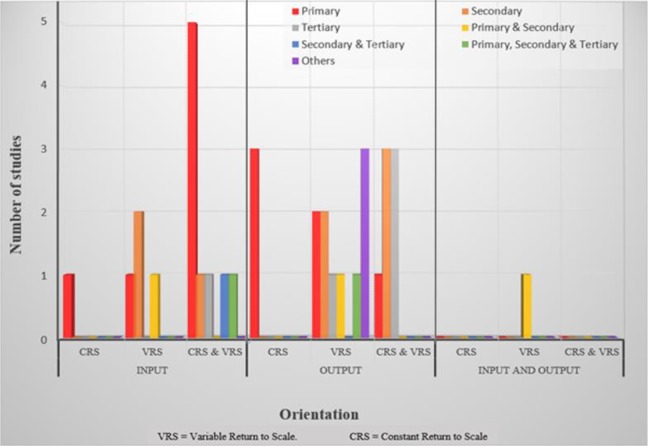

Orientation

Choosing an orientation is one of the analysis steps in the DEA technique. The DEA studies adopted either an input or an output orientation. Of the 36 studies that employed DEA, 20 (55.6%) used an output orientation and 14 (38.9%) used an input orientation. One study used both input and output orientations, while another did not specify its orientation. The majority (64.3%) of the input-oriented studies measured efficiency under both the constant returns to scale (CRS) and variable returns to scale (VRS) models. Half (50.0%) of the output-oriented studies determined efficiency under the VRS model, and the only study that used both orientations (a primary and secondary health facility-based study) also used the VRS model. The efficiency of the majority of primary health facilities was assessed through an input orientation using both CRS and VRS models, while most of the studies involving tertiary and other facilities were output-oriented (Figure 3).

Figure 3.

Data Envelopment Analysis (DEA) Orientation by facility types.
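The difference between the two orientations can be illustrated with the simplest possible case: one input, one output, and constant returns to scale. In that special case the DEA score reduces to each facility's output-to-input ratio divided by the best ratio in the sample, so no linear program is needed. The function name `crs_scores` and the three facilities below are hypothetical; the multi-input, multi-output and VRS cases used by the reviewed studies require solving a linear program per facility and are not shown.

```python
def crs_scores(units):
    """Return {name: (theta, phi)} for single-input, single-output units under CRS.

    units maps a facility name to (input_quantity, output_quantity).
    theta is the input-oriented score: the fraction to which inputs could be
    scaled down while keeping output. phi is the output-oriented expansion
    factor: how far output could be scaled up with inputs held fixed.
    """
    for name, (inp, out) in units.items():
        if inp <= 0 or out <= 0:
            raise ValueError(f"{name}: input and output must be positive")
    productivity = {name: out / inp for name, (inp, out) in units.items()}
    best = max(productivity.values())  # frontier set by the best observed unit
    return {
        name: (ratio / best, best / ratio)
        for name, ratio in productivity.items()
    }


# Hypothetical facilities: (number of staff, outpatient visits).
facilities = {"A": (10, 200), "B": (8, 200), "C": (12, 150)}
scores = crs_scores(facilities)
for name, (theta, phi) in scores.items():
    print(name, round(theta, 3), round(phi, 3))
```

Under CRS the two measures are reciprocals of each other, so the choice of orientation does not change which facilities are efficient. Under VRS the input- and output-oriented scores of an inefficient facility generally differ, which is why the orientation has to be reported.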

Input and output variables

The studies deployed a wide range of input and output variables. The input variables were categorized into physical inputs, which include human resources (clinical and nonclinical staff) and proxies for capital cost (eg, size of facility, number of beds, and number of wards), and financial inputs, which include recurrent expenditure and expenditure on drugs and supplies (Table 4). The output variables were classified into those covering health services alone, such as consultation visits and maternal and child health services, and those that included both health services and health outcomes (Table 5).

Table 4.

Description of Input Variables by Type of Health Facility.a

| Input Variable | a, n (%) | b, n (%) | c, n (%) | d, n (%) | e, n (%) | f, n (%) | g, n (%) | Total, n (%) |

|---|---|---|---|---|---|---|---|---|

| Human Resources | ||||||||

| Clinical staff | ||||||||

| Doctor/dentists | 7 (10.6) | 6 (13.0) | 2 (10.5) | 4 (19.0) | 1 (25.0) | 1 (12.5) | 1 (11.1) | 22 (12.7) |

| Nurse/midwife | 10 (15.2) | 8 (17.4) | 2 (10.5) | 4 (19.0) | 1 (25.0) | 1 (12.5) | 2 (22.2) | 28 (16.2) |

| Pharm./pharm. tech. | 1 (1.5) | 2 (4.3) | 1 (5.3) | 1 (4.8) | – | 1 (12.5) | – | 6 (3.5) |

| Physiotherapist | 1 (1.5) | – | – | – | – | – | – | 1 (0.6) |

| Public health/CHO | 1 (1.5) | 1 (2.2) | – | – | – | – | 3 (33.3) | 5 (2.9) |

| Radiographer | – | – | – | 1 (4.8) | – | – | – | 1 (0.6) |

| Technician/paramedics | 5 (7.6) | 2 (4.3) | 1 (5.3) | 3 (14.3) | – | 1 (12.5) | – | 12 (6.9) |

| Other (non-specified clinical staff) | 3 (4.5) | 3 (6.5) | 3 (15.8) | 1 (4.8) | – | – | – | 10 (5.8) |

| Nonclinical staff | ||||||||

| Administrative staff | 4 (6.1) | 1 (2.2) | 1 (5.3) | 1 (4.8) | – | 1 (12.5) | – | 8 (4.6) |

| Counsellor and educator | – | – | – | – | – | 1 (12.5) | – | 1 (0.6) |

| Health attendants | – | 2 (4.3) | – | – | – | – | – | 2 (1.2) |

| Other support staff | 7 (10.6) | 7 (15.2) | 3 (15.8) | 2 (9.5) | 1 (25.0) | – | 1 (11.1) | 21 (12.1) |

| Financial | ||||||||

| Recurrent expenditure | 7 (10.6) | 3 (6.5) | – | – | – | 1 (12.5) | – | 11 (6.3) |

| Expenditure on drugs and supplies | 5 (7.6) | 1 (2.2) | – | 1 (4.8) | – | – | – | 7 (4.0) |

| Structure | ||||||||

| No of consulting rooms | 1 (1.5) | – | – | – | – | – | – | 1 (0.6) |

| No of wards | 1 (1.5) | – | – | – | – | – | – | 1 (0.6) |

| Size of facility | 2 (3.0) | – | – | – | – | – | – | 2 (1.2) |

| Bed | 8 (12.1) | 10 (21.7) | 5 (26.3) | 3 (14.3) | 1 (25.0) | 1 (12.5) | 1 (11.1) | 29 (16.8) |

| Others | ||||||||

| Drug supplies | 1 (1.5) | – | 1 (5.3) | – | – | – | – | 2 (1.2) |

| Equipment | 1 (1.5) | – | – | – | – | – | – | 1 (0.6) |

| Power/energy supply | 1 (1.5) | – | – | – | – | – | – | 1 (0.6) |

| Total operating time | – | – | – | – | – | – | 1 (11.1) | 1 (0.6) |

| Total | 66 (100.0) | 46 (100.0) | 19 (100.0) | 21 (100.0) | 4 (100.0) | 8 (100.0) | 9 (100.0) | 173 (100.0) |

a “a” = studies that assessed primary health facilities alone; “b” = studies that assessed secondary health facilities alone; “c” = studies that assessed tertiary health facilities alone; “d” = studies that assessed both primary and secondary health facilities; “e” = studies that assessed primary, secondary, and tertiary health facilities; “g” = studies that assessed other health facilities.

Table 5.

Description of Output Variables by Type of Health Facility.a

| Output Variable | a, n (%) | b, n (%) | c, n (%) | d, n (%) | e, n (%) | f, n (%) | g, n (%) | Total, n (%) |

|---|---|---|---|---|---|---|---|---|

| Consultation visits | ||||||||

| Outpatient visits | 13 (17.8) | 10 (27.8) | 5 (33.3) | 3 (15.0) | 1 (25.0) | 1 (11.1) | 2 (11.8) | 35 (20.1) |

| Inpatient visits | 3 (4.1) | 13 (36.1) | 5 (33.3) | 4 (20.0) | 1 (25.0) | 1 (11.1) | 1 (5.9) | 28 (16.1) |

| Dental care visits | 1 (1.4) | 1 (2.8) | – | – | – | – | – | 2 (1.1) |

| Emergency cases | – | – | 1 (6.7) | 1 (5.0) | – | – | – | 2 (1.1) |

| Special care visit | 6 (8.2) | 1 (2.8) | – | 1 (5.0) | – | 2 (22.2) | 2 (11.8) | 12 (6.9) |

| Maternal and child health services | ||||||||

| Antenatal care visits | 8 (11.0) | 1 (2.8) | – | 2 (10.0) | – | – | 1 (5.9) | 12 (6.9) |

| Delivery | 9 (12.3) | 4 (11.1) | 1 (6.7) | 1 (5.0) | 1 (25.0) | – | 1 (5.9) | 17 (9.8) |

| Immunization visits | 8 (11.0) | – | – | 2 (10.0) | – | – | 1 (5.9) | 11 (6.3) |

| Postnatal visits | 8 (11.0) | 1 (2.8) | – | – | – | 1 (11.1) | 2 (11.8) | 12 (6.9) |

| Family planning visits | 5 (6.8) | – | 1 (6.7) | 1 (5.0) | – | 1 (11.1) | 1 (5.9) | 9 (5.2) |

| Other MCH visit | 3 (4.1) | – | – | 1 (5.0) | – | 1 (11.1) | – | 5 (2.9) |

| Others | ||||||||

| Procedure/surgery | 1 (1.4) | 2 (5.6) | 2 (13.3) | – | 1 (25.0) | – | 1 (5.9) | 7 (4.0) |

| Tests and observation | 3 (4.1) | 2 (5.6) | – | 1 (5.0) | – | 1 (11.1) | 1 (5.9) | 8 (4.6) |

| Patient death | – | 1 (2.8) | – | – | – | – | – | 1 (0.6) |

| Health educ. Sessions | 4 (5.5) | – | – | – | – | 1 (11.1) | 3 (17.6) | 8 (4.6) |

| Average facility service quality index score | – | – | – | – | – | – | 1 (5.9) | 1 (0.6) |

| New births discharged alive | – | – | – | 1 (5.0) | – | – | – | 1 (0.6) |

| Inpatients discharged alive | – | – | – | 1 (5.0) | – | – | – | 1 (0.6) |

| Patients days | – | – | – | 1 (5.0) | – | – | – | 1 (0.6) |

| Domiciliary cases treated | 1 (1.4) | – | – | – | – | – | – | 1 (0.6) |

| Total | 73 (100.0) | 36 (100.0) | 15 (100.0) | 20 (100.0) | 4 (100.0) | 9 (100.0) | 17 (100.0) | 174 (100.0) |

a “a” = studies that assessed primary health facilities alone; “b” = studies that assessed secondary health facilities alone; “c” = studies that assessed tertiary health facilities alone; “d” = studies that assessed both primary and secondary health facilities; “e” = studies that assessed primary, secondary, and tertiary health facilities; “g” = studies that assessed other health facilities.

Most (67.5%) of the studies used physical inputs only, while 32.5% used both physical and financial inputs. Across all the facility types assessed, doctors and nurses were the major clinical staff inputs. Other support staff, such as cleaners and security personnel, constituted the majority of the nonclinical staff. The financial inputs used were recurrent expenditure (including personnel and administrative costs) and expenditure on drugs and supplies. The number of beds, number of wards, number of consulting rooms, and facility size were used as proxies for capital costs. Other inputs reported in the studies were hospital equipment, power or energy supplied, and total operating time (total elapsed client–surgeon contact time during circumcision).

Output variables can be the quantity of health services rendered or a combination of the quantity and quality of the health-care service. The majority (92.5%) of the studies used the quantity of health services as output variables, while three (7.5%) studies included both quantity and health outcomes. The health service outputs most often used were outpatient visits and inpatient visits. Maternal and child health services were measured by antenatal care, delivery, immunization, postnatal, and family planning visits. Health service quality or health outcomes were measured using records of the average facility service quality index score, domiciliary cases treated, new births discharged alive, and inpatients discharged alive. Other outputs used by the studies include procedures or surgery, tests or observation, and health education sessions (Table 5).

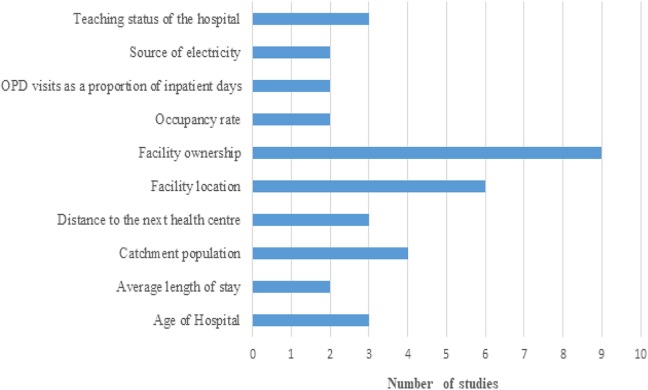

Factors Influencing Hospital Efficiency

Figure 4 shows the range of contextual factors influencing productivity and efficiency reported by 13 of the reviewed studies. The most common factor identified was “facility ownership” (reported in 9 of the 13 studies), followed by “facility location” and “catchment population,” reported by 6 and 4 studies, respectively.

Figure 4.

The most common factors influencing efficiency.

Study outcomes

Four efficiency outcomes were reported, which include technical, allocative, scale, and cost efficiencies. All the studies reported technical efficiency outcomes, two studies reported scale-efficiency outcomes, two studies gave allocative efficiency outcome, and one study included cost or economic efficiency in its outcome report.

In Eastern Africa, 58.3% of the studies reported that “41% to 69%” of the facilities were technically efficient, while 16.7% of the studies reported that “70% and above” of the facilities were technically efficient. Five of the seven studies with scale-efficiency outcomes indicated that “41% to 69%” of the facilities were scale-efficient (Table 6).

Table 6.

Study Outcomes by Region.

| Region | Outcome | 0%-40% efficient facilities, n (%) | 41%-69% efficient facilities, n (%) | 70% and above efficient facilities, n (%) | Total, n |
|---|---|---|---|---|---|
| Eastern Africa (n = 12) | Scale efficiency | 2 (28.6) | 5 (71.4) | 0 (0.0) | 7 |
| | Technical efficiency | 3 (25.0) | 7 (58.3) | 2 (16.7) | 12 |
| Southern Africa (n = 10) | Allocative efficiency | 1 (100.0) | 0 (0.0) | 0 (0.0) | 1 |
| | Cost efficiency | 1 (100.0) | 0 (0.0) | 0 (0.0) | 1 |
| | Scale efficiency | 3 (75.0) | 0 (0.0) | 1 (25.0) | 4 |
| | Technical efficiency | 6 (60.0) | 2 (20.0) | 2 (20.0) | 10 |
| Western Africa (n = 16) | Allocative efficiency | 1 (100.0) | 0 (0.0) | 0 (0.0) | 1 |
| | Scale efficiency | 6 (75.0) | 1 (12.5) | 1 (12.5) | 8 |
| | Technical efficiency | 9 (56.3) | 5 (31.3) | 2 (12.4) | 16 |
| Multiple Countries (n = 2) | Technical efficiency | 2 (100.0) | 0 (0.0) | 0 (0.0) | 2 |

All four efficiency outcomes were reported among the studies carried out in Southern Africa. The only study that reported both allocative and cost efficiencies of health facilities indicated that few (33.0%) of the facilities were both allocatively and cost-efficient. The majority (75.0%) of the studies that reported scale efficiency showed that “less than 40%” of the facilities were scale-efficient. Similarly, most (60.0%) of the Southern African studies indicated that “less than 40%” of the facilities were technically efficient (Table 6).

In the Western region, the majority of the studies indicated that “less than 40%” of the health facilities were scale-efficient and technically efficient. The only study that reported allocative efficiency showed that the facilities studied were mostly inefficient. Finally, the two intercountry studies included in this review each reported that “less than 40%” of the facilities were technically efficient (Table 6).

Study Limitations

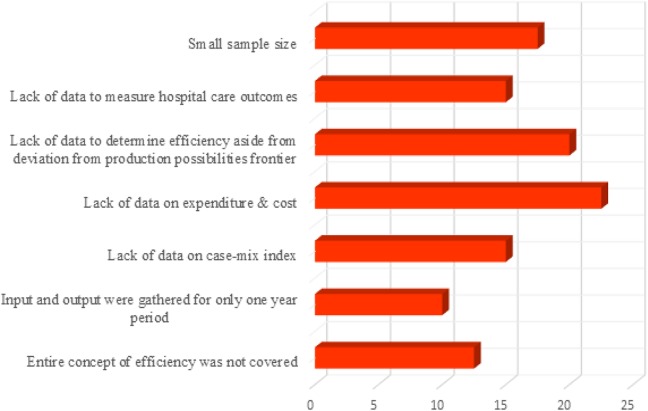

Limitations were mainly methodological- and data-related. The most-reported limitations were “small sample size,” “lack of data to measure hospital care outcomes,” “lack of data to determine efficiency aside from deviation from production possibilities frontier,” “lack of data on expenditure and cost,” “lack of data on case-mix index,” “data were gathered for only 1-year period,” and insufficient coverage of the entire concepts of efficiency (Figure 5).

Figure 5.

Most reported study limitations.

Discussion

This review identified various approaches, inputs, and outputs that have been used to assess the efficiency of health facilities in SSA countries. The proportion of efficient facilities and some factors influencing the efficiency of these facilities were also analyzed. The efficiency measuring techniques that have been used in SSA were DEA, SFA, and ENS. These techniques are based on a best-performance frontier constructed from the observed production units; deviation from this frontier is taken to constitute relative technical inefficiency.

The vast majority of the studies used the DEA technique, the use of which has increased over the last two decades. Data envelopment analysis was developed as an alternative approach to stochastic frontier models,32 with the aim of estimating technical efficiency while accommodating multiple inputs and multiple outputs.32-34 This is an advantage over SFA, which requires a specific functional form relating inputs and outputs.35 Data envelopment analysis assists in identifying both the level and the sources of inefficiency.34 Also, DEA is not affected by the challenges of model misspecification, multicollinearity, and heteroscedasticity, which can lead to incorrect estimation of standard errors and subsequently to erroneous conclusions.32,33 Data envelopment analysis estimation is based on the best-performing DMUs, such as organizations or facilities, among a set of homogeneous units.33 There is no theoretical maximum against which the facilities are compared. Hence, facilities that appear efficient within the set might actually be inefficient in absolute terms.33 The use of a large sample size has been shown to minimize this error.32

The Malmquist productivity index (MPI) was used in some of the studies to assess changes in efficiency and productivity over a given period. The MPI allows productivity change to be broken down into technical efficiency change and technological change.36 Technological change measures the shift in the health facility production technology, while technical efficiency change reflects how the gap between observed production and maximum feasible production changes over time.37 This productivity index does not require assumptions about cost minimization or profit maximization, nor does it require input and output prices.36 MPI scores greater than one signify growth in productivity, while values less than one indicate productivity deterioration. Also, a second-stage DEA analysis involving the use of regression techniques was deployed to explain the efficiency scores.35,36 This was aimed at explaining the impact on efficiency of factors (such as environmental and non-discretionary factors) that are beyond the control of facility managers.38-40 Some of the most common factors cited as influencing health facility efficiency include catchment population, bed occupancy rate, average length of stay, ownership, location, payment source, the ratio of outpatient visits to inpatient days, quality of care, and distance.
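
As a hedged illustration of the MPI breakdown, the sketch below applies the commonly used output-oriented decomposition, assuming that the four output distance-function values have already been estimated (for example, by DEA). The function name, argument names, and numerical values are hypothetical; the reviewed studies may have used a different orientation or decomposition.

```python
import math


def malmquist(d_t_t, d_t_t1, d_t1_t, d_t1_t1):
    """Decompose the output-oriented Malmquist productivity index.

    Arguments are output distance-function values:
      d_t_t   : period-t data measured against the period-t frontier
      d_t_t1  : period-(t+1) data measured against the period-t frontier
      d_t1_t  : period-t data measured against the period-(t+1) frontier
      d_t1_t1 : period-(t+1) data measured against the period-(t+1) frontier
    Returns (mpi, efficiency_change, technological_change).
    """
    efficiency_change = d_t1_t1 / d_t_t
    technological_change = math.sqrt((d_t_t1 / d_t1_t1) * (d_t_t / d_t1_t))
    mpi = math.sqrt((d_t_t1 / d_t_t) * (d_t1_t1 / d_t1_t))
    return mpi, efficiency_change, technological_change


# Hypothetical distance values for one facility (illustrative only).
mpi, effch, techch = malmquist(d_t_t=0.8, d_t_t1=1.1, d_t1_t=0.7, d_t1_t1=0.9)
print(f"MPI={mpi:.3f}  efficiency change={effch:.3f}  technological change={techch:.3f}")
```

In this illustrative case the index exceeds one, so the facility would be read as having improved its productivity, and the index equals the product of its two components.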

Although concerns have been expressed in the literature about the appropriateness of assumption selection and the robustness of results from SFA,19,41 it remains one of the techniques often used in efficiency studies in both LMICs and HICs. One of the studies included in this review utilized SFA, while another used it in conjunction with DEA. The SFA efficiency analysis is carried out by fitting a functional form to the input and output data. It assumes that deviation from the frontier can be due to either random error or inefficiency.41 It therefore accounts for the effect of measurement error (such as outliers), which is beyond the facility’s control.42 This can be said to be an advantage over DEA, which attributes deviation from the frontier entirely to inefficiency.
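
The split between random error and inefficiency can be written as a composed-error model. The following is a sketch of a standard stochastic production frontier specification, not necessarily the exact form used in the included studies; the symbols ($y_i$ for output, $x_i$ for inputs, $\beta$ for parameters, $v_i$ for noise, $u_i$ for inefficiency) are notation assumed here for illustration.

```latex
\ln y_i = \ln f(x_i;\beta) + v_i - u_i,
\qquad v_i \sim N(0,\sigma_v^2), \quad u_i \ge 0,
\qquad TE_i = \exp(-u_i)
```

Here $v_i$ absorbs measurement error and other random shocks, while the one-sided term $u_i$ captures the shortfall from the frontier, so that technical efficiency $TE_i$ lies between 0 and 1.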

An ensemble modeling approach that combines rDEA and rSDF was used in one of the studies.31 The approach was designed as a technical efficiency estimation strategy for low-resource settings.31 Its main advantage is that it provides a robust estimate of technical efficiency, especially when the underlying production function of facilities is unclear.31 It also helps to address some of the major challenges of DEA, such as overestimation of efficiency among homogeneous DMUs. Although DEA seems to be the preferred efficiency assessment technique in SSA (as demonstrated by the majority of the studies), there is no specific agreement or evidence to show which technique is best. Each has its merits and demerits, and they are better used to complement one another.

Most of the studies looked into maximizing outputs given the amount of input used by facilities. This is shown by their choice of orientation, which was mostly output oriented. The DEA studies mostly assumed a combination of CRS and VRS. The CRS model was adopted on the assumption that an increase in inputs leads to the same proportionate increase in output.36 The VRS model was adopted with two notions. First, an increase in inputs may lead to a larger proportionate increase in outputs; this is referred to as increasing returns to scale or economies of scale. Second, an increase in inputs can lead to a smaller proportionate increase in output, which is referred to as decreasing returns to scale or diseconomies of scale.34,36 The different model assumptions were used to determine output response to variation in health facility inputs. This was done because health managers or policymakers have more control over health facility inputs than outputs.
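
The three returns-to-scale cases described above can be expressed as a simple comparison of proportional changes. The sketch below is illustrative only: the function name and the scaling factors are hypothetical, and DEA software identifies returns to scale from the estimated frontier rather than from a single observed pair of factors.

```python
import math


def returns_to_scale(input_factor, output_factor):
    """Classify returns to scale from proportional changes.

    input_factor  : factor by which all inputs are scaled up (must exceed 1)
    output_factor : resulting factor by which output changes
    """
    if input_factor <= 1:
        raise ValueError("input_factor must be greater than 1")
    if math.isclose(output_factor, input_factor):
        return "constant"
    return "increasing" if output_factor > input_factor else "decreasing"


# Hypothetical example: inputs are doubled in each case.
labels = [returns_to_scale(2.0, f) for f in (2.0, 2.5, 1.5)]
print(labels)
```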

Findings from this review showed that the input variables used were predominantly human resources and structure (proxied by the number of beds). The majority of the studies used clinical staff and the number of beds as the major input variables. Only a few used other input variables, such as finance-related and other structural inputs. These findings are similar to those of reviews conducted on HICs and LMICs, which reported similar input utilization.13,43 The low utilization of finance-related inputs was mainly attributed to nonavailability of data. South Africa has recently increased its efforts toward availability of and access to medicines and other medical supplies in the public health system.44 Going forward, this could improve the availability of input data related to expenditure on drugs and supplies for future efficiency analysis.

Output variables that have been used in SSA were mostly related to health-care service consultations. These consultation visits were mainly outpatient and inpatient visits. Some studies included total procedures/surgeries and tests/observations as outputs,34,41,45-50 while very few studies considered a service quality index score, domiciliary cases treated, new births discharged alive, and inpatients discharged alive as proxies for health-care quality.51-53 Because of the difficulty in accessing data on the quality of care delivered in health facilities, the reported output variables could not incorporate many quality indicators. Alternatively, quality can be captured through outputs such as morbidity and mortality rates and through indicators such as average length of stay and adverse outcomes, which have previously been used in developed countries.54,55 It was generally observed that input and output variables were selected based on previous efficiency studies and data availability.

Policy Implication

The study outcomes show that the majority of the health facilities in different regions of SSA were performing well below the 100% efficiency benchmark set by their peers. Although the majority of the studies from eastern Africa show that “41 to 69%” of their health facilities were both technically and scale efficient, the majority of studies from other regions indicated that few facilities (less than 40%) were technically, allocatively, scale, or cost efficient. To improve efficiency analysis in the region, health planners or policymakers need to consider some interventions. These can include:

– tackling challenges associated with data availability through organizing training in health management information systems to enhance the availability of timely, adequate, and reliable data;

– strengthening health management information systems to regularly capture input, input price, and output data, which could be used for allocative and economic efficiency analysis36;

– increasing formal health care-seeking behavior of the populace through the provision of incentives so as to make maximal use of health-care input resources.38 Similarly, health workers’ performance could be improved through the same means5; and

– developing a planning model to enhance the geographical distribution of health facilities.

Study Limitations

The review only included studies published in the English language; non-English studies were excluded due to interpretation challenges. Also, studies published before the year 2000 were not included, as the objective of the review was to present recent, relevant information on health facility efficiency measurement. The included studies in each region may not be representative of the entire region; thus, the distribution of efficient health facilities in each region of SSA may not reflect the actual situation. As this study focused only on SSA, future reviews of other parts of Africa would be complementary.

Conclusions

A major aim of policymakers and health managers is efficiency in the delivery of health care. Data envelopment analysis has been the predominant efficiency measurement technique in SSA over the last 2 decades, and widespread inefficiency has been recorded across health facilities. Given the severe resource constraints facing the region, there is a need to determine how to use the available resources optimally to enhance the performance of health systems through improved service delivery at health-care facilities.

Supplemental Material

Supplemental Material, PRISMA_2009_checklist for Assessing the Efficiency of Health-care Facilities in Sub-Saharan Africa: A Systematic Review by Tesleem K. Babalola and Indres Moodley in Health Services Research and Managerial Epidemiology

Supplemental Material, Standardized_validity_evaluation_tool for Assessing the Efficiency of Health-care Facilities in Sub-Saharan Africa: A Systematic Review by Tesleem K. Babalola and Indres Moodley in Health Services Research and Managerial Epidemiology

Acknowledgments

The authors acknowledge the support of the Department of Public Health Medicine, College of Health Sciences, University of KwaZulu-Natal, and National Research Foundation (NRF), South Africa.

Author Biographies

Tesleem K. Babalola is a doctoral candidate at the department of public health, University of KwaZulu-Natal, Durban, South Africa.

Indres Moodley is a professor at the health outcomes research unit, department of public health, University of KwaZulu-Natal, Durban, South Africa.

Authors’ Note: TKB conceptualized the study design, retrieved relevant articles, conducted screening and data extraction, analyzed and interpreted the results, and drafted the manuscript. IM contributed to the research aim and objectives, screening and data extraction, analysis and interpretation of data, and critical revision of the drafted manuscript. Both authors approved the submission of the manuscript for publication. Data generated and analyzed during this study are included in this publication as a supplementary information file. As the study did not involve individual participants, there was no requirement for anonymity or permission for consent. The systematic review protocol was registered in PROSPERO (CRD42017072961), and ethical approval was received from the ethics committee of the University of KwaZulu-Natal (HSS/0805/017D). The authors were independent in the design of the study, data collection and analysis, interpretation of results, and writing and publishing of the manuscript.

Declaration of Conflicting Interests: The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Funding: The author(s) disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: This study was funded by a grant provided by the South African National Research Foundation through the NRF-TWAS African Renaissance doctoral fellowship (Grant no: 110911).

ORCID iD: Tesleem K. Babalola https://orcid.org/0000-0003-1568-3134

Supplemental Material: Supplemental material for this article is available online.

References

- 1. Murray CJ, Frenk JA. WHO Framework for Health System Performance Assessment. Evidence and Information for Policy. Geneva, Switzerland: World Health Organization; 1999.

- 2. Olafsdottir AE, Reidpath DD, Pokhrel S, Allotey P. Health systems performance in sub-Saharan Africa: governance, outcome and equity. BMC Public Health. 2011;11(1):237.

- 3. Czypionka T, Kraus M, Mayer S, Rohrling G. Efficiency, ownership, and financing of hospitals: the case of Austria. Health Care Manag Sci. 2014;17(4):331–47.

- 4. Moshiri H, Aljunid SM, Amin RM. Hospital efficiency: concept, measurement techniques and review of hospital efficiency studies. Malaysian J Public Health Med. 2010;10(2):35–43.

- 5. Akazili J, Adjuik M, Chatio S, et al. What are the technical and allocative efficiencies of public health centres in Ghana? Ghana Med J. 2008;42(4):149.

- 6. Jakovljevic M, Timofeyev Y, Ekkert N, et al. The impact of health expenditures on public health in BRICS nations. J Sport Health Sci. 2019;8(6):516.

- 7. Funahashi J. The Business of Health in Africa: Partnering with the Private Sector to Improve People’s Lives. Washington, DC: International Finance Corporation; 2016.

- 8. World Health Organization. WHO Statistical Information System; 2008. http://www.who.int/whosis/data/search.jsp. Accessed November 23, 2018.

- 9. Rancic N, Jakovljevic MM. Long term health spending alongside population aging in N-11 emerging nations. East Eur Bus Econ J. 2016;2(1):2–26.

- 10. Jakovljevic M, Getzen TE. Growth of global health spending share in low and middle-income countries. Front Pharm. 2016;7:21.

- 11. Zere E, Mbeeli T, Shangula K, et al. Technical efficiency of district hospitals: evidence from Namibia using data envelopment analysis. Cost Eff Resour Alloc. 2006;4:5.

- 12. Republic of South Africa budget review 2017. In: Treasury N, ed. South Africa: 2017.

- 13. Hafidz F, Ensor T, Tubeuf S. Efficiency measurement in health facilities: a systematic review in low-and middle-income countries. Appli Health Econom Health Poli. 2018;16(4):465–480.

- 14. Cook WD, Seiford LM. Data envelopment analysis (DEA)–Thirty years on. Euro J Operat Res. 2009;192(1):1–17.

- 15. Afzali HH, Moss JR, Mahmood MA. A conceptual framework for selecting the most appropriate variables for measuring hospital efficiency with a focus on Iranian public hospitals. Health Serv Manag Res. 2009;22(2):81–91.

- 16. Emrouznejad A, Parker BR, Tavares G. Evaluation of research in efficiency and productivity: a survey and analysis of the first 30 years of scholarly literature in DEA. Socio Econom Plann Sci. 2008;42(3):151–157.

- 17. Kiadaliri AA, Jafari M, Gerdtham UG. Frontier-based techniques in measuring hospital efficiency in Iran: a systematic review and meta-regression analysis. BMC Health Serv Res. 2013;13(1):312.

- 18. O’Neill L, Rauner M, Heidenberger K, Kraus M. A cross-national comparison and taxonomy of DEA-based hospital efficiency studies. Socio Econom Plann Sci. 2008;42(3):158–189.

- 19. Rosko MD, Mutter RL. Stochastic frontier analysis of hospital inefficiency: a review of empirical issues and an assessment of robustness. Med Care Res Rev. 2008;65(2):131–166.

- 20. Rosko MD. Measuring technical efficiency in health care organizations. J Med Syst. 1990;14(5):307–322.

- 21. Moher D, Shamseer L, Clarke M, et al. Preferred reporting items for systematic review and meta-analysis protocols (PRISMA-P) 2015 statement. System Rev. 2015;4(1):1.

- 22. Cooke A, Smith D, Booth A. Beyond PICO: the SPIDER tool for qualitative evidence synthesis. Qualitat Health Rese. 2012;(10):1435–1443.

- 23. Hadji B, Meyer R, Melikeche S, Escalon S, Degoulet PJ. Assessing the relationships between hospital resources and activities: a systematic review. J Med Syst. 2014;38(10):127.

- 24. Cummings G, Estabrooks CA. The effects of hospital restructuring that included layoffs on individual nurses who remained employed: a systematic review of impact. Int J Sociol Soci Policy. 2003;23(8/9):8–53.

- 25. Estabrooks C, Goel V, Thiel E, Pinfold P, Sawka C, Williams I. Decision aids: are they worth it? a systematic review. J Health Servi Res Policy. 2001;6(3):170–182.

- 26. Estabrooks CA, Floyd JA, Scott Findlay S, O’Leary KA, Gushta M. Individual determinants of research utilization: a systematic review. J Advanc Nurs. 2003;43(5):506–520.

- 27. Meijers JM, Janssen MA, Cummings GG, Wallin L, Estabrooks CA, Halfens R. Assessing the relationships between contextual factors and research utilization in nursing: systematic literature review. J Advanc Nurs. 2006;55(5):622–635.

- 28. Thungjaroenkul P, Cummings GG, Embleton A. The impact of nurse staffing on hospital costs and patient length of stay: a systematic review. Nurs Econom. 2007;25(5):255–267.

- 29. Kuosmanen T, Johnson A, Saastamoinen A. Stochastic Nonparametric Approach to Efficiency Analysis: A Unified Framework. Data Envelopment Analysis. New York, NY: Springer; 2015:191–244.

- 30. Erkie A, Begashaw A. Review on parametric and nonparametric methods of efficiency analysis. Open Access Biostat Bioinform. 2018;2(1):1–7.

- 31. Di Giorgio L, Moses MW, Fullman N, et al. The potential to expand antiretroviral therapy by improving health facility efficiency: evidence from Kenya, Uganda, and Zambia. BMC Med. 2016;14(1):108–23.

- 32. Akazili J, Adjuik M, Jehu-Appiah C, Zere E. Using data envelopment analysis to measure the extent of technical efficiency of public health centres in Ghana. BMC Int Health Hum Right. 2008;8(1):11–23.

- 33. Alhassan RK, Nketiah-Amponsah E, Akazili J, Spieker N, Arhinful DK, de Wit TF. Efficiency of private and public primary health facilities accredited by the national health insurance authority in Ghana. Cost Eff Resour Alloc. 2015;13(1):23–37.

- 34. Jehu-Appiah C, Sekidde S, Adjuik M, et al. Ownership and technical efficiency of hospitals: evidence from Ghana using data envelopment analysis. Cost Eff Resour Alloc. 2014;12(1):9–22.

- 35. Novignon J, Nonvignon J. Improving primary health care facility performance in Ghana: efficiency analysis and fiscal space implications. BMC Health Serv Res. 2017;17(1):399–407.

- 36. Kirigia J, Emrouznejad A, Cassoma B, Asbu E, Barry S. A performance assessment method for hospitals: the case of municipal hospitals in Angola. J Med Syst. 2008;32(6):509–519.

- 37. Mujasi P, Kirigia JM. Productivity and Efficiency Changes in Referral Hospitals in Uganda: An Application of Malmquist Total Productivity Index; 2016.

- 38. Marschall P, Flessa S. Efficiency of primary care in rural Burkina Faso. A two-stage DEA analysis. Health Econom Rev. 2011;1(1):5.

- 39. Mujasi PN, Asbu EZ, Puig-Junoy J. How efficient are referral hospitals in Uganda? a data envelopment analysis and Tobit regression approach. BMC Health Serv Res. 2016;16:230.

- 40. San Sebastian M, Lemma H. Efficiency of the health extension programme in Tigray, Ethiopia: a data envelopment analysis. BMC Int Health Hum Right. 2010;10(1):16.

- 41. Hyacinth E, Ichoku WF, Obinna O, Kirigia J. Modelling the technical efficiency of hospitals in south-eastern Nigeria using stochastic frontier analysis. African J Health Econom. 2014;2:1–18.

- 42. Cullinane K, Wang TF, Song DW, Ji P. The technical efficiency of container ports: comparing data envelopment analysis and stochastic frontier analysis. Trans Res part A Poli Pract. 2006;40(4):354–374.

- 43. Hussey PS, De Vries H, Romley J, et al. A systematic review of health care efficiency measures. Health Serv Res. 2009;44(3):784–805.

- 44. Meyer JC, Schellack N, Stokes J, et al. Ongoing initiatives to improve the quality and efficiency of medicine use within the public healthcare system in South Africa; a preliminary study. Front Pharm. 2017;8:751.

- 45. Ichoku HE, Fonta WM, Onwujekwe OE, Kirigia JM. Evaluating the technical efficiency of hospitals in southeastern Nigeria. Eur J Bus Manag. 2011;3(2):24–37.

- 46. Kibambe JN, Koch SF. DEA Applied to a Gauteng sample of public hospitals. South African J Econom. 2007;75(2):351–68.

- 47. Kirigia JM, Lambo E, Sambo L. Are public hospitals in Kwazulu-Natal province of South Africa technically efficient? African J Health Sci. 2000;7(3-4):25–33.

- 48. Masiye F. Investigating health system performance: an application of data envelopment analysis to Zambian hospitals. BMC Health Serv Res. 2007;7(1):58.

- 49. Sede PI, Ohemeng W. An empirical assessment of the technical efficiency in some selected hospitals in Nigeria. J Busines Res. 2012;6(1-2):14–43.

- 50. Yawe B, Kavuma S. Technical efficiency in the presence of desirable and undesirable outputs: a case study of selected district referral hospitals in Uganda. Health Policy Develop. 2008;6(1):37–53.

- 51. Omondi Aduda DS, Ouma C, Onyango R, Onyango M, Bertrand J. Voluntary medical male circumcision scale-up in Nyanza, Kenya: evaluating technical efficiency and productivity of service delivery. PLoS One. 2015;10(2):e0118152.

- 52. Ramanathan TV, Chandra KS, Thupeng WM. A comparison of the technical efficiencies of health districts and hospitals in Botswana. Develop South Africa. 2003;20(2):307–320.

- 53. Kirigia JM, Emrouznejad A, Gama Vaz R, Bastiene H, Padayachy J. A comparative assessment of performance and productivity of health centres in Seychelles. Int J Product Perform Manage. 2007;57(1):72–92.

- 54. Matranga D, Sapienza F. Congestion analysis to evaluate the efficiency and appropriateness of hospitals in Sicily. Health Policy. 2015;119(3):324–332.

- 55. Ding DX. The effect of experience, ownership and focus on productive efficiency: a longitudinal study of US hospitals. J Operat Manag. 2014;32(1-2):1–14.
