Abstract

Medical image fusion techniques fuse medical images of different modalities to make medical diagnosis more reliable and accurate, and they play an increasingly important role in many clinical applications. To obtain a fused image with high visual quality and clear structural details, this paper proposes a convolutional neural network (CNN) based medical image fusion algorithm. The proposed algorithm uses a trained Siamese convolutional network to fuse the pixel activity information of the source images and generate a weight map. Meanwhile, a contrast pyramid is used to decompose the source images. The source images are then integrated across different spatial frequency bands with a weighted fusion operator. The results of comparative experiments show that the proposed fusion algorithm effectively preserves the detailed structural information of the source images and achieves good human visual effects.

Keywords: medical image fusion, convolutional neural network, image pyramid, multi-scale decomposition

1. Introduction

In modern clinical diagnosis, various types of medical images play an indispensable role and provide great help for the diagnosis of diseases. To obtain sufficient information for accurate diagnosis, doctors generally need to combine multiple types of medical images of the same position to assess the patient’s condition, which is often inconvenient. If multiple types of medical images are analyzed only through a doctor’s spatial imagination and speculation, the accuracy of the analysis is affected by subjectivity, and parts of the image information may even be neglected. Image fusion techniques provide an effective way to solve these issues [1]. As the variety of medical imaging devices increases, the medical images obtained from different modalities contain complementary as well as redundant information. Medical image fusion techniques can fuse multi-modality medical images for more reliable and accurate medical diagnosis [2,3].

This paper proposes a CNN-based medical image fusion method. First, a CNN-based model generates a weight map for source images of any size. Then, Gaussian pyramid decomposition is performed on the generated weight map, and contrast pyramid decomposition is applied to the source images to obtain the corresponding multi-scale sub-resolution images. Next, a weighted fusion operator based on the measurement of regional characteristics is used, with different thresholds for the top layer and the remaining layers of the sub-decomposed images, to obtain the fused sub-decomposed images. Finally, the fused image is obtained by contrast pyramid reconstruction. This paper has three main contributions:

(1) In the training process of the CNN, source images are mapped directly to the weight map. Thus, the measurement of activity level and the weight distribution are achieved jointly in an optimal way by learning the network parameters, which overcomes the difficulty of designing them manually.

(2) The human visual system is sensitive to changes in image contrast. Thus, this paper proposes an image fusion solution based on multi-scale contrast pyramid decomposition, which can selectively highlight the contrast information of the fused image to achieve better human visual effects.

(3) The proposed solution uses a weighted fusion operator based on the measurement of regional characteristics. In the same decomposition layer, the fusion operators applied to different local regions may be different. Thus, the complementary and redundant information of the source images can be fully explored to achieve a better fusion effect and highlight important detailed features.

The remainder of this paper is organized as follows. Section 2 discusses the related works of medical image fusion. Section 3 demonstrates the proposed CNN-based medical image fusion solution in detail. Section 4 presents the comparative experiments and compares corresponding results. Section 5 concludes this paper.

2. Related Works

Researchers have proposed many medical image fusion methods in recent years [4,5]. Mainstream medical image fusion methods include decomposition-based and learning-based methods [6,7]. Multi-scale transform (MST), a commonly used decomposition-based approach, generally has three steps in the fusion process: decomposition, fusion, and reconstruction. Pyramid-based, wavelet-based, and multi-scale geometric analysis (MGA) based methods are commonly used in MST [8]. Among MGA-based methods, those based on the nonsubsampled contourlet transform (NSCT) [9,10] and the nonsubsampled shearlet transform (NSST) [11] represent images efficiently. In addition to the image transformation, the analysis of high- and low-frequency coefficients is also a key issue in MST-based fusion methods. Traditionally, the activity level of a high-frequency coefficient is based on its absolute value. It is calculated in a pixel- or window-based way, and a simple fusion rule, such as choosing the maximum or taking a weighted average, is then used to obtain the fused coefficient. Averaging the coefficients of the different source images was the most popular low-frequency fusion strategy in early research. In recent years, more advanced image transformations and more complex fusion strategies have been developed [12,13,14,15,16,17]. Liu proposed an integrated sparse representation (SR)- and MST-based medical image fusion framework [18]. Zhu proposed an NSCT-based multi-modality decomposition method for medical images, which uses phase consistency and local Laplacian energy to fuse the high- and low-pass sub-bands, respectively [9]. Yin proposed a multi-modality medical image fusion method in the NSST domain, which introduced a pulse coupled neural network (PCNN) for image fusion [19]. To improve the fusion quality of multi-modality images, Li proposed a novel multi-sensor image fusion framework based on NSST and PCNN [20].

In the past decade, learning-based methods have been widely used in medical image fusion, especially SR- and deep learning-based fusion methods [21,22]. Early SR-based fusion methods used a standard sparse coding model based on a single image component and local image blocks [23,24,25]. In the original spatial domain, source images were segmented into a set of overlapping image blocks for sparse coding. Most existing SR-based fusion methods attempt to improve their performance in the following ways: adding detailed constraints [5], designing more efficient dictionary learning strategies [26], using multiple sub-dictionaries in the representation [27,28], etc. Among SR-based models, Kim proposed a dictionary learning method based on joint image block clustering for multi-modality image fusion [26]. Zhu proposed a medical image fusion method based on cartoon-texture decomposition (CTD), and used an SR-based fusion strategy to fuse the decomposed coefficients [29]. Liu proposed an adaptive sparse representation (ASR) model for simultaneous image fusion and denoising [28]. All the above-mentioned methods propose complex fusion rules or different SR-based models. However, these specific rules are not applicable to every type of medical image fusion [27].

With the rapid development of artificial intelligence, deep learning-based image fusion methods have become a hot research topic [30,31,32,33]. As a main representative of artificial intelligence, deep learning is developed on the basis of traditional artificial neural networks. It can learn data characteristics autonomously, establish a human-like learning mechanism by simulating the neural network of human brain, and then analyze and learn the related data, such as images and texts [34,35]. CNN as a classical deep learning model can achieve the encoding of direct mapping from source images to weight map during the training process [29,36]. Thus, both activity-level measurements and weight distribution can be achieved together in an optimal way by learning network parameters. In addition, CNN’s local connection and weight sharing feature can further improve the performance of image fusion algorithms, while reducing the complexity of entire network and the number of weights. At present, CNN plays an increasingly important role in medical image fusion. Xia integrated multi-scale transform and CNN into a multi-modality medical image fusion framework, which uses the deep stacked neural network to divide source images into high- and low-frequency components to do corresponding image fusion [37]. Liu proposed a CNN-based multi-modality medical image fusion algorithm, which applies image pyramids to the medical image fusion process in a multi-scale manner [38].

The calculation of weight map, which fuses the pixel activity information from different sources, is one of the most critical issues in existing deep learning based image fusion [38]. Most existing fusion methods use a two-step solution that contains activity-level measurement and weight assignment. In traditional transform-domain fusion methods, the absolute value of decomposition coefficient is used to measure its activity first. Then, the fusion rule, such as “choose-max” or “weighted-average”, is used to select the maximum or weighted average [39]. According to the obtained measurements, the corresponding weights are finally assigned to different sources. To improve the fusion performance, many complicated decomposition methods and detailed weight assignment strategies have been proposed in recent years [28,40,41,42,43,44,45]. However, it is not easy to design an ideal activity level measurement or weight assignment strategy, which can consider all key issues [37].

3. The Proposed Medical Image Fusion Solution

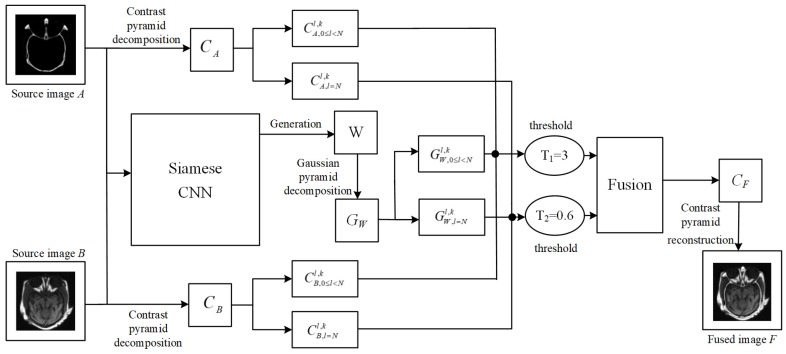

As shown in Figure 1, the proposed medical image fusion framework has three main steps. First, a Siamese network model generates a weight map W of the same size as the source images A and B, which may be of any size. Then, Gaussian pyramid decomposition is applied to W to obtain its multi-scale sub-decomposed images, which determine the fusion operator in the coefficient fusion process, and the contrast pyramid is applied to decompose source images A and B into their own multi-scale sub-decomposed images for the subsequent coefficient fusion. In each decomposition, the top layer is distinguished from the remaining layers. Finally, different thresholds are set for the top layer and the remaining layers, and a weighted fusion operator based on the measurement of regional characteristics fuses the different regions within the same decomposition layer to obtain the fused sub-decomposed images. The final fused image F is obtained by contrast pyramid reconstruction.

Figure 1. The proposed medical image fusion framework.

3.1. Generation of CNN-Based Weight Map

3.1.1. Network Construction

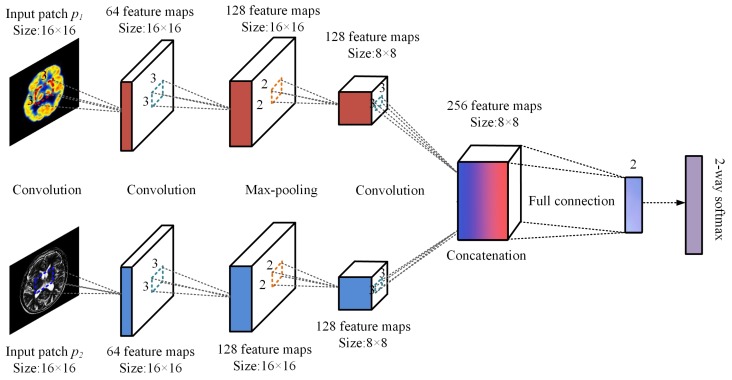

To obtain a weight map that integrates the pixel activity information of multiple source images, the proposed method uses a CNN to measure the pixel activity level and assign weights jointly. A Siamese network is used to improve the efficiency of CNN training. The Siamese network has two branches, and each branch contains three convolutional layers and one max-pooling layer. The first two layers are convolutional: the first layer extracts simple features from the input image, and the second layer, which has more feature maps, extracts features from the output of the first. The third layer is a max-pooling layer, which discards unimportant samples from the feature maps to further reduce the number of parameters. The fourth layer is a convolutional layer that extracts more complex features from the output of the pooling layer. A lightweight network structure is used to reduce memory consumption and training complexity. Specifically, the final feature maps of the two branches are concatenated first. Then, the concatenated feature maps are connected directly to a two-dimensional vector by a fully connected layer. To predict the probability distribution over the two classes, this two-dimensional vector is fed to a two-way softmax layer and classified by probability value. The Siamese network training architecture is shown in Figure 2.

Figure 2. Siamese network training architecture.

To perform classification, this paper uses a softmax classifier, which gives the classification probability by Equation (1).

$$p_i = \frac{e^{x_i}}{\sum_{j} e^{x_j}} \tag{1}$$

where $x_i$ is the $i$th component of the input vector and $p_i$ is the corresponding probability. If one $x_i$ is larger than all the others, its mapped component $p_i$ is close to 1 and the others are close to 0, so the softmax normalizes every input vector. The batch size is set to 128, thus the softmax loss function is obtained as Equation (2).

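$$L = -\frac{1}{128}\sum_{k=1}^{128} \log p^{(k)}_{y_k} \tag{2}$$

where $p^{(k)}_{y_k}$ is the softmax probability assigned to the true label $y_k$ of the $k$th sample in the batch.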
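The softmax mapping and the batch-averaged softmax loss can be illustrated with a few lines of plain Python. This is a minimal sketch rather than the authors' implementation: the function names, the toy scores, and the use of the average negative log-probability as the loss are assumptions made for illustration.

```python
import math


def softmax(scores):
    """Map raw scores to probabilities that sum to 1 (numerically stable)."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def softmax_loss(batch_scores, labels):
    """Average negative log-probability of the true class over a batch."""
    losses = []
    for scores, label in zip(batch_scores, labels):
        probs = softmax(scores)
        losses.append(-math.log(probs[label]))
    return sum(losses) / len(losses)


# Toy batch of two-way outputs (hypothetical scores); label 1 = positive, 0 = negative.
batch = [[0.2, 2.5], [3.0, -1.0], [0.1, 0.3]]
labels = [1, 0, 1]
print(softmax(batch[0]))
print(softmax_loss(batch, labels))
```

Subtracting the largest score before exponentiating does not change the result and avoids overflow for large scores.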

Taking the softmax loss function as the optimization goal, stochastic gradient descent is used to minimize the loss function. The momentum and weight decay are initially set to 0.9 and 0.0005, respectively. Thus, Equations (3) and (4) are used to update the weights.

$$v_{i+1} = 0.9\,v_i - 0.0005\,\alpha\,w_i - \alpha\,\frac{\partial L}{\partial w_i} \tag{3}$$

$$w_{i+1} = w_i + v_{i+1} \tag{4}$$

where $v$ is the dynamic variable, $w_i$ is the weight after the $i$th iteration, $\alpha$ is the learning rate, $L$ represents the loss function, and $\partial L / \partial w_i$ is the derivative of the loss with respect to the weight $w_i$.
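The update with momentum 0.9 and weight decay 0.0005 can be sketched for a single scalar weight as follows. The function name, the toy quadratic loss, and the learning rate of 0.1 are illustrative assumptions, not values from the paper; only the momentum and weight decay constants come from the text.

```python
def sgd_momentum_step(weight, velocity, grad, lr, momentum=0.9, weight_decay=0.0005):
    """One update: decayed velocity minus the weight-decay and gradient terms."""
    new_velocity = momentum * velocity - weight_decay * lr * weight - lr * grad
    new_weight = weight + new_velocity
    return new_weight, new_velocity


# Minimise the toy loss L(w) = 0.5 * (w - 3)^2, whose gradient is (w - 3).
w, v = 0.0, 0.0
for _ in range(200):
    w, v = sgd_momentum_step(w, v, grad=w - 3.0, lr=0.1)
print(w)
```

Because of the weight-decay term, the weight settles slightly below the minimiser of the toy loss, which is the intended regularising effect.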

3.1.2. Network Training

A high-quality multi-modality medical image set from http://www.med.harvard.edu/aanlib/home.html is selected as the source of training samples. A Gaussian filter is applied to each image to obtain five blurred versions with different blur levels. Specifically, a Gaussian filter with a standard deviation of 2 and a fixed cutoff size is used. The filter first blurs the original image to obtain the first blurred image. In each subsequent filtering step, the previous output image is used as the input; for instance, the output of the first Gaussian filtering is the input of the second. Then, from each blurred image and the corresponding clear image, 20 pairs of image blocks are randomly sampled. Each pair consists of a clear block and a blurred block. When the clear block is the input of the first branch and the blurred block is the input of the second branch, the pair is defined as a positive example (marked as 1). Conversely, when the blurred block is the input of the first branch and the clear block is the input of the second branch, the pair is defined as a negative example (marked as 0). The training set is therefore composed of positive and negative examples. After the samples are generated, the weights of each convolutional layer are initialized with the Xavier algorithm, which adaptively determines the initialization scale from the number of input and output neurons. The bias of each layer is initialized to 0. The learning rates of all layers are equal, with an initial value of 0.0001. When the loss reaches a steady state, the learning rate is manually reduced to 10% of its previous value. The network training completes after about ten iterations.
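The sample generation described above can be sketched in plain Python. This is an illustration under stated assumptions: the kernel radius of 3, the 4 x 4 block size, the edge clamping, and the choice to use every sampled location once in each branch order are hypothetical, and all function names are invented for the example.

```python
import math
import random


def gaussian_kernel(sigma=2.0, radius=3):
    """Normalised 1-D Gaussian taps; the radius (cutoff) is an assumed value."""
    taps = [math.exp(-(k * k) / (2.0 * sigma * sigma)) for k in range(-radius, radius + 1)]
    total = sum(taps)
    return [t / total for t in taps]


def blur(image, kernel):
    """Separable Gaussian blur of a list-of-rows image with edge clamping."""
    radius = len(kernel) // 2
    height, width = len(image), len(image[0])
    offsets = range(-radius, radius + 1)

    def clamp(value, size):
        return min(max(value, 0), size - 1)

    horizontal = [[sum(kernel[k + radius] * row[clamp(x + k, width)] for k in offsets)
                   for x in range(width)] for row in image]
    return [[sum(kernel[k + radius] * horizontal[clamp(y + k, height)][x] for k in offsets)
             for x in range(width)] for y in range(height)]


def blur_cascade(image, levels=5):
    """Each level blurs the previous output, giving progressively blurrier copies."""
    kernel, outputs, current = gaussian_kernel(), [], image
    for _ in range(levels):
        current = blur(current, kernel)
        outputs.append(current)
    return outputs


def crop(image, top, left, size):
    """Cut a size x size block whose top-left corner is (top, left)."""
    return [row[left:left + size] for row in image[top:top + size]]


def sample_pairs(clear, blurred, rng, size=4, count=20):
    """Return (first_branch, second_branch, label) triples from aligned blocks."""
    samples = []
    for _ in range(count):
        top = rng.randrange(len(clear) - size + 1)
        left = rng.randrange(len(clear[0]) - size + 1)
        p_clear, p_blur = crop(clear, top, left, size), crop(blurred, top, left, size)
        samples.append((p_clear, p_blur, 1))  # clear block on the first branch
        samples.append((p_blur, p_clear, 0))  # same blocks in reversed order
    return samples


# Synthetic 12 x 12 image standing in for a medical image.
image = [[float((x * y) % 7) for x in range(12)] for y in range(12)]
rng = random.Random(0)
dataset = [s for level in blur_cascade(image) for s in sample_pairs(image, level, rng)]
print(len(dataset))
```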

3.1.3. The Generation of Weight Map W

In the testing and fusion process, to handle source images of any size, the fully connected layer is converted into two equivalent convolutional layers of equal kernel size. After the conversion, images A and B of any size can be processed as a whole to generate a dense prediction map S. Every prediction is a two-dimensional vector, and the value of each dimension is between 0 and 1. If one dimension is larger than the other, it is normalized to 1 and the other is set to 0, so the weight of the corresponding image block reduces to the output dimension whose value is 1. For two adjacent predictions in S, the corresponding image blocks overlap. In overlapping areas, the weights of the overlapping image blocks are averaged. In this way, images A and B of any size can be input into the network to generate a weight map W of the same size.
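The step from the dense prediction map to a pixel-level weight map can be sketched as follows. This is a hedged illustration: the block size, the stride, the function name, and the convention that the first score belongs to image A are assumptions, since the text does not state them.

```python
def weight_map_from_scores(scores, patch, stride, height, width):
    """Spread each block decision over its pixels and average the overlaps."""
    total = [[0.0] * width for _ in range(height)]
    count = [[0] * width for _ in range(height)]
    for r, score_row in enumerate(scores):
        for c, (score_a, score_b) in enumerate(score_row):
            decision = 1.0 if score_a > score_b else 0.0  # larger dimension becomes 1
            top, left = r * stride, c * stride
            for y in range(top, min(top + patch, height)):
                for x in range(left, min(left + patch, width)):
                    total[y][x] += decision
                    count[y][x] += 1
    return [[total[y][x] / count[y][x] if count[y][x] else 0.0
             for x in range(width)] for y in range(height)]


# A 2 x 2 grid of hypothetical two-way predictions for 4 x 4 blocks with stride 2.
scores = [[(0.9, 0.1), (0.2, 0.8)],
          [(0.7, 0.3), (0.4, 0.6)]]
weights = weight_map_from_scores(scores, patch=4, stride=2, height=6, width=6)
for row in weights:
    print(row)
```

Pixels covered by a single block keep that block's binary decision, while pixels shared by several blocks receive the mean of their decisions, which yields intermediate weights along block boundaries.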

3.2. Pyramid Decomposition

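This paper uses both the contrast pyramid and the Gaussian pyramid to decompose images. The contrast pyramid is built from the Gaussian pyramid. When the Gaussian pyramid is established, the source image $G_0$ is the zeroth layer (bottom layer), and the $l$th layer $G_l$ is constructed in the following manner. As shown in Equation (5), $G_{l-1}$ is first convolved with a window function with low-pass characteristics, and the result is then downsampled by keeping every other row and column.

$$G_l(i,j)=\sum_{m=-2}^{2}\sum_{n=-2}^{2} w(m,n)\,G_{l-1}(2i+m,\,2j+n),\quad 0<l\le N,\ 0\le i<C_l,\ 0\le j<R_l \tag{5}$$

where $w(m,n)$ is the window function, $C_l$ and $R_l$ are the number of columns and the number of rows in the $l$th-layer sub-image of the Gaussian pyramid, respectively, and $N$ is the total number of pyramid layers. The window function satisfies the following constraints:

(1) Separability: $w(m,n)=w(m)\,w(n)$, with $m,n\in[-2,2]$

(2) Normalization: $\sum_{m=-2}^{2} w(m)=1$

(3) Symmetry: $w(1)=w(-1)$ and $w(2)=w(-2)$

(4) Equal contribution of odd and even terms: $w(-2)+w(0)+w(2)=w(-1)+w(1)$

According to the above constraints, one can construct $w(0)=3/8$, $w(1)=w(-1)=1/4$, and $w(2)=w(-2)=1/16$. Then, according to Constraint 1, the window function is obtained by calculation, as shown in Equation (6).

$$w=\frac{1}{256}\begin{bmatrix}1&4&6&4&1\\4&16&24&16&4\\6&24&36&24&6\\4&16&24&16&4\\1&4&6&4&1\end{bmatrix} \tag{6}$$

At this point, the image Gaussian pyramid is constructed by $G_0, G_1, \ldots, G_N$.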
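The four constraints fix the one-dimensional weights once the centre weight is chosen. The sketch below assumes a centre weight of 3/8, a common choice taken here as an assumption, and uses exact fractions so that each constraint can be checked without rounding; the function names are illustrative.

```python
from fractions import Fraction


def generating_kernel(a=Fraction(3, 8)):
    """1-D weights w(-2..2) implied by the constraints once w(0) = a is chosen."""
    b = Fraction(1, 4)          # w(1) = w(-1), from normalisation and equal contribution
    c = Fraction(1, 4) - a / 2  # w(2) = w(-2), the remaining mass split evenly
    return [c, b, a, b, c]


def window_function(w):
    """Separability: the 2-D window is the outer product w(m) * w(n)."""
    return [[wm * wn for wn in w] for wm in w]


w = generating_kernel()
window = window_function(w)
assert sum(w) == 1                         # normalisation
assert w == w[::-1]                        # symmetry
assert w[0] + w[2] + w[4] == w[1] + w[3]   # equal contribution of even and odd terms
print([str(value * 256) for value in window[2]])  # centre row scaled by 256
```

Normalisation and the equal-contribution constraint together force the two inner off-centre weights to 1/4, so the centre weight is the only free parameter of the family.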

After the Gaussian pyramid is constructed by halving the size of each layer in turn, interpolation is used to expand each layer. The expanded $l$th-layer image $G_l^*$ has the same size as the $(l-1)$th-layer image $G_{l-1}$, and the operation is shown as follows:

$$G_l^*(i,j)=4\sum_{m=-2}^{2}\sum_{n=-2}^{2} w(m,n)\,G_l'\!\left(\frac{i+m}{2},\frac{j+n}{2}\right),\quad 0<l\le N,\ 0\le i<C_{l-1},\ 0\le j<R_{l-1} \tag{7}$$

$$G_l'\!\left(\frac{i+m}{2},\frac{j+n}{2}\right)=\begin{cases}G_l\!\left(\dfrac{i+m}{2},\dfrac{j+n}{2}\right), & \text{if } \dfrac{i+m}{2} \text{ and } \dfrac{j+n}{2} \text{ are integers}\\[2mm] 0, & \text{otherwise}\end{cases} \tag{8}$$

where $G_l^*$ is the expanded version of the Gaussian pyramid layer $G_l$. According to the above formulas, an expansion sequence is obtained by interpolating and expanding each layer of the Gaussian pyramid. The decomposition of image contrast is shown as Equation (9).

$$\begin{cases}CP_l=\dfrac{G_l}{G_{l+1}^*}-I, & 0\le l<N\\[2mm] CP_N=G_N, & l=N\end{cases} \tag{9}$$

where $CP_l$ is the $l$th layer of the contrast pyramid, $G_l$ is the $l$th layer of the Gaussian pyramid, the division is element-wise, $I$ is the all-ones matrix of the same size as the layer, and $l$ is the decomposition level. The layers $CP_0, CP_1, \ldots, CP_N$ compose the contrast pyramid of the source image.

Source image A and B are decomposed into corresponding sub-images by contrast pyramid, respectively. For the weight map generated in CNN network, it is decomposed into sub-images by Gaussian pyramid. Different thresholds are set for the top layer and the remaining layers of the obtained sub-images respectively in the fusion processing.

3.3. Fusion Rules

In the fusion process, to obtain better visual characteristics, richer details, and outstanding fusion effects, this paper adopts new fusion rules and weighted average fusion operators based on regional characteristics. The fusion rules and operators are as follows:

(1) After the contrast pyramid decomposition, calculate the energy $E_l^A(x,y)$ and $E_l^B(x,y)$ of the corresponding local regions in each decomposition level $l$ of source images A and B, respectively.

$$E_l(x,y)=\sum_{m}\sum_{n}\left[CP_l(x+m,\,y+n)\right]^2 \tag{10}$$

where $E_l(x,y)$ represents the local area energy centered at $(x,y)$ on the $l$th layer of the contrast pyramid, $CP_l$ is the $l$th-layer image of the contrast pyramid, and the ranges of $m$ and $n$ represent the size of the local area.

(2) Calculate the similarity of the corresponding local regions in the two source images.

$$M_l^{AB}(x,y)=\frac{2\sum_{m}\sum_{n}CP_l^A(x+m,\,y+n)\,CP_l^B(x+m,\,y+n)}{E_l^A(x,y)+E_l^B(x,y)} \tag{11}$$

where $E_l^A$ and $E_l^B$ are calculated by Equation (10). The range of similarity is [–1,1], and a value close to 1 indicates high similarity.

(3) Determine the fusion operators. Define a similarity threshold T, which is set separately for the top layer and for the remaining layers (see Section 4.3). When $M_l^{AB}(x,y)<T$, it obtains:

$$CP_l^F(x,y)=\begin{cases}CP_l^A(x,y), & E_l^A(x,y)\ge E_l^B(x,y)\\ CP_l^B(x,y), & E_l^A(x,y)<E_l^B(x,y)\end{cases} \tag{12}$$

When $M_l^{AB}(x,y)\ge T$, the weighted mean model based on the weight map W is:

| (13) |

| (14) |

where $CP_l^F$ is the $l$th layer of the sub-image after fusion. Finally, the integration strategy can be summarized as a whole by Equation (15).

| (15) |

According to the above algorithm, when the similarity between the corresponding local regions of source image A and B is less than threshold T, it means that the “energy” difference of two local regions is large. At this time, the central pixel of the region with a larger “energy” is selected as the central pixel of corresponding region in the fused image. Conversely, when the similarity is greater than or equal to threshold T, it means that the “energy” of the region is similar in two source images. At this time, the weighted fusion operator is used to determine the contrast or gray value of the central pixel of the region in the fused image.

Although a central pixel with large local energy represents a distinct feature of the source image, local image features generally do not depend on a single pixel. Therefore, the weighted fusion operator based on region characteristics is more reasonable than determining the fused pixel by simple selection or by the weight of an independent pixel.
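The region-based rule described above can be sketched for one decomposition layer. The code is a hedged illustration, not the authors' operator: the 3 x 3 window, the clipping at the borders, the small constant that guards the division, and in particular the weighted mean written as weight times A plus (1 - weight) times B are assumptions, and all names are hypothetical.

```python
def _window(layer, y, x, radius):
    """Coordinates of the local area centred at (y, x), clipped to the layer."""
    height, width = len(layer), len(layer[0])
    return [(yy, xx)
            for yy in range(max(0, y - radius), min(height, y + radius + 1))
            for xx in range(max(0, x - radius), min(width, x + radius + 1))]


def local_energy(layer, y, x, radius=1):
    """Sum of squared coefficients in the local area."""
    return sum(layer[yy][xx] ** 2 for yy, xx in _window(layer, y, x, radius))


def similarity(layer_a, layer_b, y, x, radius=1, eps=1e-12):
    """Match measure in [-1, 1]; values near 1 mean the two areas are alike."""
    cross = sum(layer_a[yy][xx] * layer_b[yy][xx] for yy, xx in _window(layer_a, y, x, radius))
    energy = local_energy(layer_a, y, x, radius) + local_energy(layer_b, y, x, radius)
    return 2.0 * cross / (energy + eps)


def fuse_layer(layer_a, layer_b, weight, threshold, radius=1):
    """Select by energy where the areas differ, blend by weight where they agree."""
    fused = []
    for y in range(len(layer_a)):
        row = []
        for x in range(len(layer_a[0])):
            a, b = layer_a[y][x], layer_b[y][x]
            if similarity(layer_a, layer_b, y, x, radius) < threshold:
                energy_a = local_energy(layer_a, y, x, radius)
                energy_b = local_energy(layer_b, y, x, radius)
                row.append(a if energy_a >= energy_b else b)
            else:
                # Assumed form of the weighted mean driven by the weight map.
                row.append(weight[y][x] * a + (1.0 - weight[y][x]) * b)
        fused.append(row)
    return fused


layer_a = [[4.0] * 3 for _ in range(3)]
layer_b = [[3.0] * 3 for _ in range(3)]
weight = [[0.5] * 3 for _ in range(3)]
print(fuse_layer(layer_a, layer_b, weight, threshold=0.6))
```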

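Finally, the fused sub-images are inversely transformed by the contrast pyramid, which is also called image reconstruction. According to Equation (16), the image can be reconstructed exactly from the contrast pyramid.

$$\begin{cases}G_N=CP_N, & l=N\\ G_l=\left(CP_l+I\right)\odot G_{l+1}^*, & 0\le l<N\end{cases} \tag{16}$$

where $CP_l$ and $G_l$ are the $l$th layers of the contrast pyramid and the Gaussian pyramid, $G_{l+1}^*$ is the expanded version of $G_{l+1}$, $I$ is the all-ones matrix, and ⊙ denotes the Hadamard product (also known as element-wise multiplication).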
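The decomposition and its inverse can be checked with a small round trip in plain Python. This sketch makes several assumptions that the text does not fix: the 5-tap weights 1/16, 1/4, 3/8, 1/4, 1/16, clamping at the image borders, a strictly positive input image, a tiny constant that guards the division, and an all-ones matrix in the ratio formula. All function names are illustrative.

```python
KERNEL = [1 / 16, 1 / 4, 3 / 8, 1 / 4, 1 / 16]


def _clamp(value, size):
    return min(max(value, 0), size - 1)


def reduce_layer(img):
    """Low-pass filter with the 5 x 5 window, then keep every other row and column."""
    h, w = len(img), len(img[0])
    return [[sum(KERNEL[m + 2] * KERNEL[n + 2] * img[_clamp(2 * i + m, h)][_clamp(2 * j + n, w)]
                 for m in range(-2, 3) for n in range(-2, 3))
             for j in range((w + 1) // 2)] for i in range((h + 1) // 2)]


def expand_layer(img, h, w):
    """Interpolate a layer up to h x w; only integer source positions contribute."""
    sh, sw = len(img), len(img[0])
    out = []
    for i in range(h):
        row = []
        for j in range(w):
            acc = 0.0
            for m in range(-2, 3):
                for n in range(-2, 3):
                    if (i + m) % 2 == 0 and (j + n) % 2 == 0:
                        src = img[_clamp((i + m) // 2, sh)][_clamp((j + n) // 2, sw)]
                        acc += KERNEL[m + 2] * KERNEL[n + 2] * src
            row.append(4.0 * acc)
        out.append(row)
    return out


def contrast_pyramid(img, levels, eps=1e-12):
    """Return the ratio layers minus one, followed by the top Gaussian layer."""
    gauss = [img]
    for _ in range(levels):
        gauss.append(reduce_layer(gauss[-1]))
    pyramid = []
    for level in range(levels):
        up = expand_layer(gauss[level + 1], len(gauss[level]), len(gauss[level][0]))
        pyramid.append([[g / (u + eps) - 1.0 for g, u in zip(g_row, u_row)]
                        for g_row, u_row in zip(gauss[level], up)])
    pyramid.append(gauss[-1])
    return pyramid


def reconstruct(pyramid, eps=1e-12):
    """Invert the decomposition from the top layer downwards."""
    current = pyramid[-1]
    for layer in reversed(pyramid[:-1]):
        up = expand_layer(current, len(layer), len(layer[0]))
        current = [[(c + 1.0) * (u + eps) for c, u in zip(c_row, u_row)]
                   for c_row, u_row in zip(layer, up)]
    return current


image = [[1.0 + (3 * x + 5 * y) % 11 for x in range(8)] for y in range(8)]
pyramid = contrast_pyramid(image, levels=2)
restored = reconstruct(pyramid)
error = max(abs(a - b) for row_a, row_b in zip(image, restored) for a, b in zip(row_a, row_b))
print(error)
```

Because reconstruction multiplies each layer by the same expanded image that divided it during decomposition, the round trip is exact up to floating-point rounding.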

The fused image F can be obtained by calculating the above-mentioned image reconstruction formula. Algorithm 1 shows the main steps of the proposed medical image fusion solution.

| Algorithm 1 Proposed CNN-based medical image fusion framework. |
|---|
| Input: source images A and B |
| Parameters: pyramid decomposition level l, the number of pyramid levels N, similarity threshold T |
| Output: the fused image F |

4. Comparative Experiments and Analysis

4.1. Experiment Results and Analysis

The following comparative experiments were used to prove that the proposed CNN-based algorithm has good performance in medical image fusion. Eight different image fusion methods were used to fuse MR-CT, MR-T1-MR-T2, MR-PET, and MR-SPECT images, respectively. These eight methods are MST-SR [18], NSCT-PC [9], NSST-PCNN [19], ASR [28], CT [29], KIM [26], CNN-LIU [38], and the proposed solution.

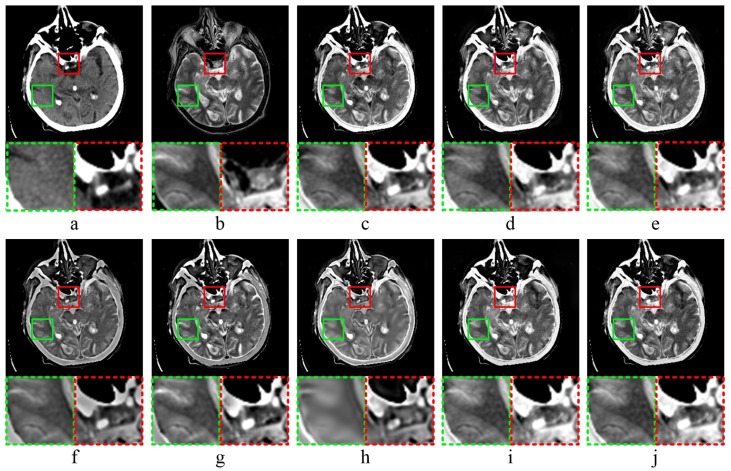

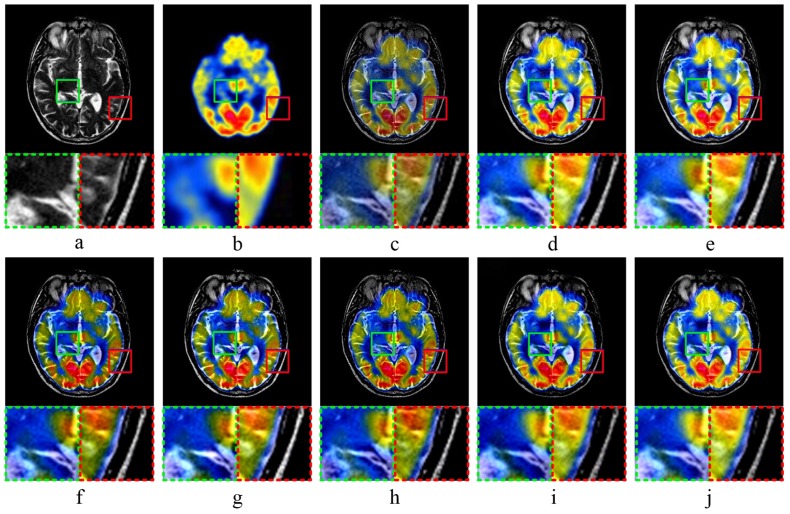

Figure 3 shows the results of the MR-CT image fusion experiments. In Figure 3c, the fused image obtained by the MST-SR method has average visual quality, and the partially enlarged image shows that its contrast is high. As shown in Figure 3d,e, the fused images obtained by NSCT-PC and NSST-PCNN have high brightness. According to the partial enlargements marked by the green and red dashed frames, both methods perform poorly in preserving image details. In Figure 3f,g, the fused images obtained by ASR and CT have low brightness, and the detail analysis shows that the edge information is not distinct, which hinders observation by the human eye. As shown in Figure 3h, the fused image obtained by KIM has low sharpness and a poor visual effect. Comparing Figure 3i,j, as well as the partially magnified images, it is difficult to distinguish visually between the quality of the fused images obtained by CNN-LIU and the proposed method.

Figure 3. MR-CT image fusion experiments: (a,b) source images; and (c–j) the fused images obtained by MST-SR, NSCT-PC, NSST-PCNN, ASR, CT, KIM, CNN-LIU, and the proposed method, respectively. The two partially enlarged images marked by green and red dashed frames correspond to the regions surrounded by green and red frames in the fused image.

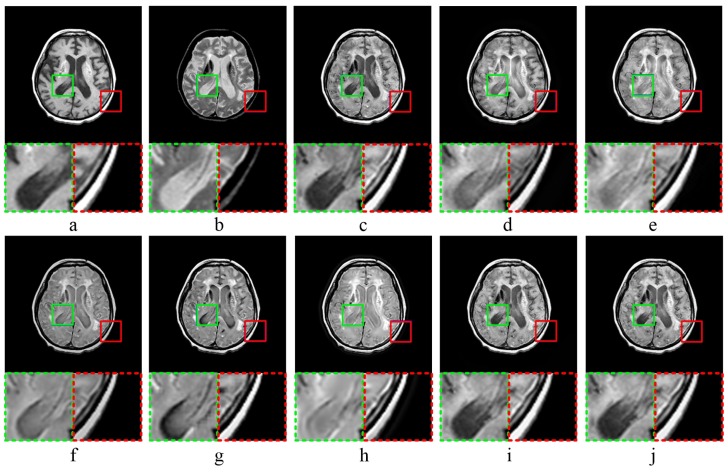

The results of the MR-T1-MR-T2 image fusion experiments are shown in Figure 4. Comparing the fused result (Figure 4c) with the source images (Figure 4a,b), the fused image obtained by the MST-SR method has low similarity to the source image in Figure 4a and does not retain its detailed structure information well. As shown in Figure 4d, the fused image obtained by the NSCT-PC method is too smooth in some areas, and the detailed image texture is not sufficiently distinct. In Figure 4e, the fused image obtained by the NSST-PCNN method has high brightness and does not preserve the detailed features of the source images well. The ASR method produces a fused image with low contrast and considerable noise, as shown in Figure 4f. The fused image in Figure 4g, obtained by the CT method, has high edge brightness, which weakens the detailed texture information of the image edges. According to Figure 4h, the fused image obtained by the KIM method has low sharpness and is blurred. As shown in Figure 4i,j, CNN-LIU and the proposed method achieve almost the same visual quality.

Figure 4. MR-T1-MR-T2 image fusion experiments: (a,b) source images; and (c–j) the fused images obtained by MST-SR, NSCT-PC, NSST-PCNN, ASR, CT, KIM, CNN-LIU, and the proposed method, respectively. The two partially enlarged images marked by green and red dashed frames correspond to the regions surrounded by green and red frames in the fused image.

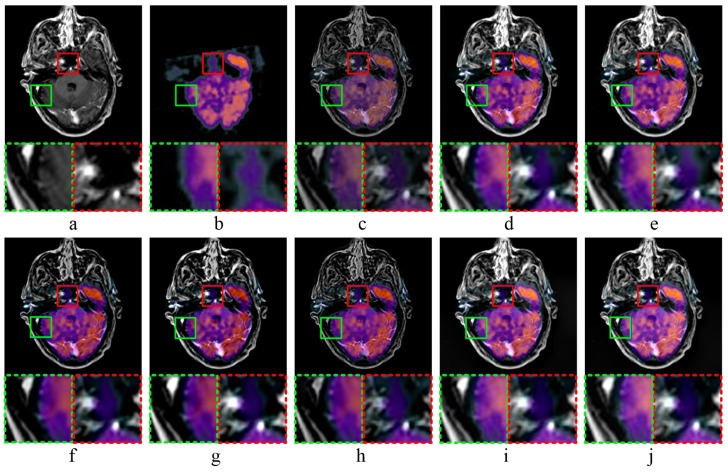

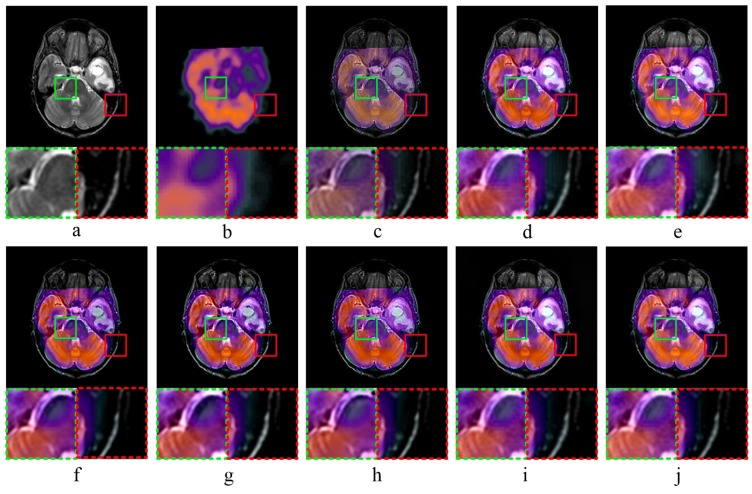

Figure 5 and Figure 6 show the results of the MR-PET image fusion experiments. In both Figure 5c and Figure 6c, the fused images obtained by the MST-SR method are too dark, which is not conducive to human visual observation. According to Figure 5d,e, as well as the partially magnified areas, the fused images obtained by the NSCT-PC and NSST-PCNN methods have high brightness, and the detailed image information is not clear. Figure 5f,g and Figure 6f,g show that the fused images obtained by the ASR and CT methods have low brightness, which means that these two methods perform poorly in preserving image details. As shown in Figure 5h, the fused image obtained by the KIM method has low sharpness, which means that the image is blurred. As shown in Figure 5i, the fused image obtained by the CNN-LIU method has low contrast, and the detailed edge information is not distinct. Both Figure 5j and Figure 6j show that the proposed fusion method preserves the detailed information of the source images well, which is conducive to the observation of medical images and the diagnosis of diseases.

Figure 5. MR-PET image fusion Experiment 1: (a,b) source images; and (c–j) the fused images obtained by MST-SR, NSCT-PC, NSST-PCNN, ASR, CT, KIM, CNN-LIU, and the proposed method, respectively. The two partially enlarged images marked by green and red dashed frames correspond to the regions surrounded by green and red frames in the fused image.

Figure 6. MR-PET image fusion Experiment 2: (a,b) source images; and (c–j) the fused images obtained by MST-SR, NSCT-PC, NSST-PCNN, ASR, CT, KIM, CNN-LIU, and the proposed method, respectively. The two partially enlarged images marked by green and red dashed frames correspond to the regions surrounded by green and red frames in the fused image.

Figure 7 and Figure 8 show the results of the MR-SPECT image fusion experiments. In Figure 7c, the fused image obtained by the MST-SR method has low contrast and unclear edge details. As shown in Figure 8d,e, some edge regions are too smooth in the fused images obtained by the NSCT-PC and NSST-PCNN methods, and the edge details are not clear. In Figure 7g and Figure 8g, the images obtained by the CT method have high contrast, and the CT method performs poorly in retaining the details of the source images. The fused images shown in Figure 7f,h and Figure 8f,h, which were obtained by the ASR and KIM methods, respectively, have low brightness and poor visual quality. As shown in Figure 7i,j and Figure 8i,j, the fused images obtained by both CNN-LIU and the proposed method have high brightness and good visual quality. Comparing all the fused results in Figure 7 and Figure 8, the fused images obtained by the proposed fusion method have high similarity to the source images, preserve the detailed structures of the source images well, and achieve good fusion performance.

Figure 7. MR-SPECT image fusion Experiment 1: (a,b) source images; and (c–j) the fused images obtained by MST-SR, NSCT-PC, NSST-PCNN, ASR, CT, KIM, CNN-LIU, and the proposed method, respectively. The two partially enlarged images marked by green and red dashed frames correspond to the regions surrounded by green and red frames in the fused image.

Figure 8. MR-SPECT image fusion Experiment 2: (a,b) source images; and (c–j) the fused images obtained by MST-SR, NSCT-PC, NSST-PCNN, ASR, CT, KIM, CNN-LIU, and the proposed method, respectively. The two partially enlarged images marked by green and red dashed frames correspond to the regions surrounded by green and red frames in the fused image.

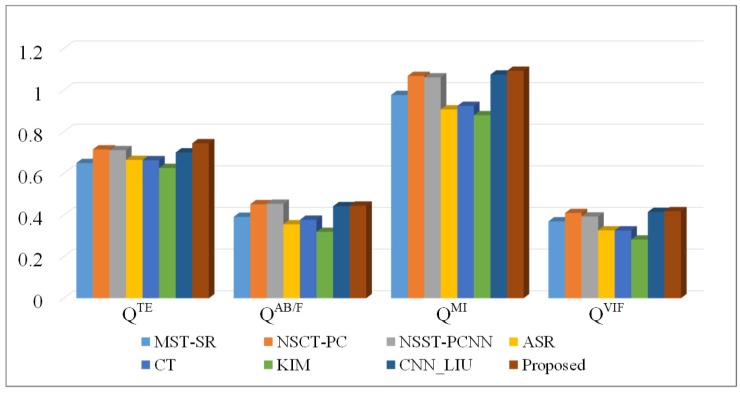

4.2. Evaluation of Objective Metrics

For image fusion, a single evaluation metric lacks objectivity, so a comprehensive analysis with multiple evaluation metrics is necessary. In this study, four objective evaluation metrics, namely Tsallis entropy [46,47], a gradient-based edge metric [29,48], mutual information [47], and an information ratio metric [29,49], were used to evaluate the performance of the different fusion methods. The first metric is the Tsallis entropy of the fused image; the entropy value represents the average amount of information contained in the fused image. The gradient-based quality indicator mainly measures the edge information of the fused image. The mutual information indicator measures the amount of information contained in the fused image. The last metric is the information ratio between the fused image and the source images, which evaluates the visual quality of the fused image as perceived by humans. The objective evaluation results of medical image fusion are shown in Figure 9. Among all the fusion results, the proposed method achieves good performance in all four objective evaluations. This confirms that the proposed method preserves the detailed structure information of the source images well and achieves good human visual effects.
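As an illustration of how an information-based fusion metric can be computed, the sketch below estimates mutual information from a joint grey-level histogram and sums it over the two source images. It is a generic textbook estimate under assumed settings (8 bins, 8-bit grey levels, base-2 logarithm, hypothetical function names) and is not claimed to match the exact definitions or normalisation used in the cited metric papers.

```python
import math
from collections import Counter


def mutual_information(image_x, image_y, bins=8, max_value=255):
    """Mutual information in bits between two equally sized grey-level images."""
    def level(value):
        return min(int(value * bins / (max_value + 1)), bins - 1)

    pairs = [(level(a), level(b))
             for row_x, row_y in zip(image_x, image_y)
             for a, b in zip(row_x, row_y)]
    n = len(pairs)
    joint = Counter(pairs)
    marginal_x = Counter(a for a, _ in pairs)
    marginal_y = Counter(b for _, b in pairs)
    return sum((c / n) * math.log2(c * n / (marginal_x[a] * marginal_y[b]))
               for (a, b), c in joint.items())


def fusion_mutual_information(source_a, source_b, fused):
    """Information the fused image shares with both sources."""
    return mutual_information(source_a, fused) + mutual_information(source_b, fused)


source_a = [[0, 255], [0, 255]]
source_b = [[0, 0], [255, 255]]
fused = [[0, 255], [0, 255]]
print(fusion_mutual_information(source_a, source_b, fused))
```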

Figure 9. Objective evaluation results of eight fusion methods.

Table 1 shows the values of the four objective metrics and the average processing time for the eight fusion methods. Compared with the seven other fusion methods, the fused image obtained by the proposed method has the highest Tsallis entropy and therefore contains more information than the others. For the gradient-based edge metric, the fused images obtained by NSCT-PC, NSST-PCNN, CNN-LIU, and the proposed method have high values, which means that these fused images preserve edge details well. The fused image obtained by KIM has a low value, which indicates that KIM does not preserve edge information well. For mutual information, the proposed method is slightly higher than the others, which means that more information from the source images is retained in the fused image and the ability to preserve source image details is strong. The proposed method also has the highest information ratio. Compared with CNN-LIU, the proposed method has a higher information ratio between the fused image and the source images and achieves a better human visual effect.

Table 1. Objective evaluations of medical image fusion comparative experiments.

| Method | Tsallis Entropy | Gradient-Based Edge Metric | Mutual Information | Information Ratio | Average Processing Time |
|---|---|---|---|---|---|
| MST-SR | 0.6495 | 0.3911 | 0.9764 | 0.3693 | 15.0541 |
| NSCT-PC | 0.7150 | 0.4515 | 1.0681 | 0.4092 | 3.7743 |
| NSST-PCNN | 0.7113 | 0.4537 | 1.0610 | 0.3924 | 6.1595 |
| ASR | 0.6643 | 0.3550 | 0.9072 | 0.3258 | 35.2493 |
| CT | 0.6631 | 0.3769 | 0.9240 | 0.3258 | 14.5846 |
| KIM | 0.6257 | 0.3188 | 0.8792 | 0.2820 | 59.1929 |
| CNN-LIU | 0.7003 | 0.4421 | 1.0745 | 0.4145 | 14.5846 |
| Proposed | 0.7445 | 0.4449 | 1.0925 | 0.4181 | 12.8667 |

4.3. Threshold Discussion

In this study, a similarity threshold T was defined to fuse the multi-scale sub-decomposed images. For the top layer of sub-decomposed images, the threshold was set to 0.6. For the remaining layers, the threshold was set to 3. Table 2 shows the values of the four objective metrics and the average processing time for the fusion framework with different thresholds. In terms of mutual information, the proposed setting is slightly lower than the other two. However, for Tsallis entropy, the gradient-based edge metric, and the information ratio, the proposed setting is higher than the others. This means that the fused image contains more average information and edge information and has a higher information ratio between the fused image and the source images. In addition, the three settings are close in time consumption. Overall, the proposed setting performs better across the objective metrics.

Table 2.

Objective evaluations of the fusion framework with different thresholds.

| Setting | $Q_{TE}$ | $Q^{AB/F}$ | MI | VIF | Average Processing Time |
|---|---|---|---|---|---|
| Threshold = 0.6 | 0.6973 | 0.4248 | 1.1165 | 0.4151 | 12.7256 |
| Threshold = 3 | 0.7289 | 0.4255 | 1.2540 | 0.4068 | 12.6652 |
| Proposed | 0.7445 | 0.4449 | 1.0925 | 0.4181 | 12.8667 |

5. Conclusions

This paper proposes a CNN-based medical image fusion solution. The proposed method uses a trained CNN to measure the activity level and assign weights, generating a weight map that integrates the pixel activity information of the source images. To obtain better visual effects, a multi-scale decomposition based on the contrast pyramid is used to fuse the corresponding image components in different spatial frequency bands. Meanwhile, the complementary and redundant information of the images is exploited by a local similarity strategy in an adaptive fusion mode. Comparative experiment results show that the fused images produced by the proposed method achieve high visual quality and strong objective metric values. In the future, we will continue to explore the great potential of deep learning techniques and apply them to other types of multi-modality image fusion, such as infrared-visible and multi-focus image fusion.
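
To make the summarized pipeline more concrete, the following is a minimal NumPy sketch of contrast-pyramid decomposition, per-level weighted fusion, and reconstruction. It is an illustration under stated assumptions, not the implementation used in the paper: 2×2 block averaging and pixel repetition stand in for the Gaussian reduce and expand filters, the `weights` argument is a hypothetical stand-in for the CNN-generated weight map, and the local similarity threshold rule is omitted entirely. All function names are invented for this sketch.

```python
import numpy as np


def downsample(img):
    """Halve resolution by 2x2 block averaging (stand-in for Gaussian REDUCE)."""
    h, w = img.shape
    return img.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))


def upsample(img):
    """Double resolution by pixel repetition (stand-in for Gaussian EXPAND)."""
    return np.repeat(np.repeat(img, 2, axis=0), 2, axis=1)


def contrast_pyramid(img, levels, eps=1e-6):
    """Return [C_0, ..., C_{levels-1}, G_levels]: contrast bands plus coarsest image.

    Each band is the ratio of a level to its expanded coarser level, minus one.
    Image sides must be divisible by 2**levels.
    """
    bands, current = [], img.astype(np.float64)
    for _ in range(levels):
        coarse = downsample(current)
        bands.append(current / (upsample(coarse) + eps) - 1.0)
        current = coarse
    bands.append(current)
    return bands


def reconstruct(pyramid, eps=1e-6):
    """Invert contrast_pyramid by undoing the ratio at each level, coarse to fine."""
    current = pyramid[-1]
    for band in reversed(pyramid[:-1]):
        current = (band + 1.0) * (upsample(current) + eps)
    return current


def weight_pyramid(weights, levels):
    """Downsample the weight map so every pyramid level has a matching weight."""
    out = [weights.astype(np.float64)]
    for _ in range(levels):
        out.append(downsample(out[-1]))
    return out


def fuse(img_a, img_b, weights, levels=3):
    """Blend two images level by level; weights in [0, 1] favour img_a."""
    pa = contrast_pyramid(img_a, levels)
    pb = contrast_pyramid(img_b, levels)
    pw = weight_pyramid(weights, levels)
    fused = [w * a + (1.0 - w) * b for a, b, w in zip(pa, pb, pw)]
    return reconstruct(fused)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    a = rng.random((32, 32)) + 0.1
    b = rng.random((32, 32)) + 0.1
    fused = fuse(a, b, np.full((32, 32), 0.5))
    print(fused.shape)
```

With a weight map of all ones the sketch returns the first image, and with all zeros the second, which is a convenient sanity check before substituting a learned weight map.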

Author Contributions

K.W. designed the proposed algorithm and wrote the paper; M.Z. and H.W. participated in the algorithm design, algorithm programming, and testing the proposed method; G.Q. participated in the algorithm design and paper writing processes; and Y.L. participated in the algorithm design, provided technical support, and revised the paper. All authors have read and approved the final manuscript.

Funding

This work was jointly supported by the National Natural Science Foundation of China under Grants 61803061 and 61906026; the National Nuclear Energy Development Project of China (Grant No. 18zg6103); the Science and Technology Research Program of Chongqing Municipal Education Commission (Grant No. KJQN201800603); the Chongqing Natural Science Foundation under Grant cstc2018jcyjAX0167; the Common Key Technology Innovation Special of Key Industries of Chongqing Science and Technology Commission under Grant Nos. cstc2017zdcy-zdyfX0067, cstc2017zdcy-zdyfX0055, and cstc2018jszx-cyzd0634; and the Artificial Intelligence Technology Innovation Significant Theme Special Project of Chongqing Science and Technology Commission under Grant Nos. cstc2017rgzn-zdyfX0014 and cstc2017rgzn-zdyfX0035.

Conflicts of Interest

The authors declare no conflict of interest.

References

- 1. Ganasala P., Kumar V. Feature-Motivated Simplified Adaptive PCNN-Based Medical Image Fusion Algorithm in NSST Domain. J. Digit. Imaging. 2016;29:73–85. doi: 10.1007/s10278-015-9806-4.

- 2. Li S., Kang X., Fang L., Hu J., Yin H. Pixel-level image fusion: A survey of the state of the art. Inf. Fusion. 2017;33:100–112. doi: 10.1016/j.inffus.2016.05.004.

- 3. James A.P., Dasarathy B.V. Medical image fusion: A survey of the state of the art. Inf. Fusion. 2014;19:4–19. doi: 10.1016/j.inffus.2013.12.002.

- 4. Zhu Z., Chai Y., Yin H., Li Y., Liu Z. A novel dictionary learning approach for multi-modality medical image fusion. Neurocomputing. 2016;214:471–482. doi: 10.1016/j.neucom.2016.06.036.

- 5. Li H., He X., Tao D., Tang Y., Wang R. Joint medical image fusion, denoising and enhancement via discriminative low-rank sparse dictionaries learning. Pattern Recognit. 2018;79:130–146. doi: 10.1016/j.patcog.2018.02.005.

- 6. Li Y., Sun Y., Zheng M., Huang X., Qi G., Hu H., Zhu Z. A novel multi-exposure image fusion method based on adaptive patch structure. Entropy. 2018;20:935. doi: 10.3390/e20120935.

- 7. Qi G., Wang J., Zhang Q., Zeng F., Zhu Z. An Integrated Dictionary-Learning Entropy-Based Medical Image Fusion Framework. Future Internet. 2017;9:61. doi: 10.3390/fi9040061.

- 8. Shen J., Zhao Y., Yan S., Li X. Exposure Fusion Using Boosting Laplacian Pyramid. IEEE Trans. Cybern. 2014;44:1579–1590. doi: 10.1109/TCYB.2013.2290435.

- 9. Zhu Z., Zheng M., Qi G., Wang D., Xiang Y. A Phase Congruency and Local Laplacian Energy Based Multi-Modality Medical Image Fusion Method in NSCT Domain. IEEE Access. 2019;7:20811–20824. doi: 10.1109/ACCESS.2019.2898111.

- 10. Li Y., Sun Y., Huang X., Qi G., Zheng M., Zhu Z. An Image Fusion Method Based on Sparse Representation and Sum Modified-Laplacian in NSCT Domain. Entropy. 2018;20:522. doi: 10.3390/e20070522.

- 11. Xu L., Gao G., Feng D. Multi-focus image fusion based on non-subsampled shearlet transform. IET Image Process. 2013;7:633–639. doi: 10.1049/iet-ipr.2012.0558.

- 12. Qu X.B., Yan J.W., Xiao H.Z., Zhu Z.Q. Image Fusion Algorithm Based on Spatial Frequency-Motivated Pulse Coupled Neural Networks in Nonsubsampled Contourlet Transform Domain. Acta Autom. Sin. 2008;34:1508–1514. doi: 10.3724/SP.J.1004.2008.01508.

- 13. Bhatnagar G., Wu J., Liu Z. Directive Contrast Based Multimodal Medical Image Fusion in NSCT Domain. IEEE Trans. Multimed. 2013;15:1014–1024. doi: 10.1109/TMM.2013.2244870.

- 14. Das S., Kundu M.K. A Neuro-Fuzzy Approach for Medical Image Fusion. IEEE Trans. Biomed. Eng. 2013;60:3347–3353. doi: 10.1109/TBME.2013.2282461.

- 15. Liu Z., Yin H., Chai Y., Yang S.X. A novel approach for multimodal medical image fusion. Expert Syst. Appl. 2014;41:7425–7435. doi: 10.1016/j.eswa.2014.05.043.

- 16. Wang L., Li B., Tian L. Multimodal Medical Volumetric Data Fusion Using 3-D Discrete Shearlet Transform and Global-to-Local Rule. IEEE Trans. Biomed. Eng. 2014;61:197–206. doi: 10.1109/TBME.2013.2279301.

- 17. Yang Y., Que Y., Huang S., Lin P. Multimodal Sensor Medical Image Fusion Based on Type-2 Fuzzy Logic in NSCT Domain. IEEE Sens. J. 2016;16:3735–3745. doi: 10.1109/JSEN.2016.2533864.

- 18. Liu Y., Liu S., Wang Z. A general framework for image fusion based on multi-scale transform and sparse representation. Inf. Fusion. 2015;24:147–164. doi: 10.1016/j.inffus.2014.09.004.

- 19. Yin M., Liu X., Liu Y., Chen X. Medical Image Fusion with Parameter-Adaptive Pulse Coupled Neural Network in Nonsubsampled Shearlet Transform Domain. IEEE Trans. Instrum. Meas. 2019;68:49–64. doi: 10.1109/TIM.2018.2838778.

- 20. Yin L., Zheng M., Qi G., Zhu Z., Jin F., Sim J. A Novel Image Fusion Framework Based on Sparse Representation and Pulse Coupled Neural Network. IEEE Access. 2019;7:98290–98305. doi: 10.1109/ACCESS.2019.2929303.

- 21. Zhang Q., Liu Y., Blum R.S., Han J., Tao D. Sparse representation based multi-sensor image fusion for multi-focus and multi-modality images: A review. Inf. Fusion. 2018;40:57–75. doi: 10.1016/j.inffus.2017.05.006.

- 22. Qi G., Zhang Q., Zeng F., Wang J., Zhu Z. Multi-focus image fusion via morphological similarity-based dictionary construction and sparse representation. CAAI TIT. 2018;3:83–94. doi: 10.1049/trit.2018.0011.

- 23. Wang K., Qi G., Zhu Z., Cai Y. A Novel Geometric Dictionary Construction Approach for Sparse Representation Based Image Fusion. Entropy. 2017;19:306. doi: 10.3390/e19070306.

- 24. Yang B., Li S. Multifocus Image Fusion and Restoration with Sparse Representation. IEEE Trans. Instrum. Meas. 2010;59:884–892. doi: 10.1109/TIM.2009.2026612.

- 25. Yang B., Li S. Pixel-level image fusion with simultaneous orthogonal matching pursuit. Inf. Fusion. 2012;13:10–19. doi: 10.1016/j.inffus.2010.04.001.

- 26. Kim M., Han D.K., Ko H. Joint patch clustering-based dictionary learning for multimodal image fusion. Inf. Fusion. 2016;27:198–214. doi: 10.1016/j.inffus.2015.03.003.

- 27. Li S., Yin H., Fang L. Group-Sparse Representation with Dictionary Learning for Medical Image Denoising and Fusion. IEEE Trans. Biomed. Eng. 2012;59:3450–3459. doi: 10.1109/TBME.2012.2217493.

- 28. Liu Y., Wang Z. Simultaneous image fusion and denoising with adaptive sparse representation. IET Image Process. 2015;9:347–357. doi: 10.1049/iet-ipr.2014.0311.

- 29. Zhu Z., Yin H., Chai Y., Li Y., Qi G. A novel multi-modality image fusion method based on image decomposition and sparse representation. Inf. Sci. 2018;432:516–529. doi: 10.1016/j.ins.2017.09.010.

- 30. Shen D., Wu G., Suk H.I. Deep Learning in Medical Image Analysis. Annu. Rev. Biomed. Eng. 2017;19:221–248. doi: 10.1146/annurev-bioeng-071516-044442.

- 31. Litjens G., Kooi T., Bejnordi B.E., Setio A.A.A., Ciompi F., Ghafoorian M., van der Laak J.A.W.M., van Ginneken B., Sánchez C.I. A Survey on Deep Learning in Medical Image Analysis. Med. Image Anal. 2017;42:60–88. doi: 10.1016/j.media.2017.07.005.

- 32. Zhu Z., Qi G., Li Y., Wei H., Liu Y. Image Dehazing by An Artificial Image Fusion Method based on Adaptive Structure Decomposition. IEEE Sens. J. 2020;42:1–11. doi: 10.1016/j.media.2017.07.005.

- 33. Qi G., Chang L., Luo Y., Chen Y., Zhu Z., Wang S. A Precise Multi-Exposure Image Fusion Method Based on Low-level Features. Sensors. 2020;20:1597. doi: 10.3390/s20061597.

- 34. Qi G., Zhu Z., Erqinhu K., Chen Y., Chai Y., Sun J. Fault-diagnosis for reciprocating compressors using big data and machine learning. Simul. Model. Pract. Theory. 2018;80:104–127. doi: 10.1016/j.simpat.2017.10.005.

- 35. Li D., Dong Y. Deep Learning: Methods and Applications. Found. Trends Signal Process. 2014;7:197–387. doi: 10.1561/2000000039.

- 36. Qi G., Wang H., Haner M., Weng C., Chen S., Zhu Z. Convolutional neural network based detection and judgement of environmental obstacle in vehicle operation. CAAI TIT. 2019;4:80–91. doi: 10.1049/trit.2018.1045.

- 37. Xia K.J., Yin H.S., Wang J.Q. A novel improved deep convolutional neural network model for medical image fusion. Cluster Comput. 2018;22:1515–1527. doi: 10.1007/s10586-018-2026-1.

- 38. Liu Y., Chen X., Cheng J., Peng H. A medical image fusion method based on convolutional neural networks; Proceedings of the 20th International Conference on Information Fusion (Fusion); Xi’an, China. 10–13 July 2017.

- 39. Li H., Li X., Yu Z., Mao C. Multifocus image fusion by combining with mixed-order structure tensors and multiscale neighborhood. Inf. Sci. 2016;349-350:25–49. doi: 10.1016/j.ins.2016.02.030.

- 40. Shen R., Cheng I., Basu A. Cross-Scale Coefficient Selection for Volumetric Medical Image Fusion. IEEE Trans. Biomed. Eng. 2013;60:1069–1079. doi: 10.1109/TBME.2012.2211017.

- 41. Singh R., Khare A. Fusion of multimodal medical images using Daubechies complex wavelet transform-A multiresolution approach. Inf. Fusion. 2014;19:49–60. doi: 10.1016/j.inffus.2012.09.005.

- 42. Zhu Z., Qi G., Chai Y., Li P. A Geometric Dictionary Learning Based Approach for Fluorescence Spectroscopy Image Fusion. Appl. Sci. 2017;7:161. doi: 10.3390/app7020161.

- 43. Bhatnagar G., Wu Q.M.J., Liu Z. A new contrast based multimodal medical image fusion framework. Neurocomputing. 2015;157:143–152. doi: 10.1016/j.neucom.2015.01.025.

- 44. Li H., Yu Z., Mao C. Fractional differential and variational method for image fusion and super-resolution. Neurocomputing. 2016;171:138–148. doi: 10.1016/j.neucom.2015.06.035.

- 45. Li H., Liu X., Yu Z., Zhang Y. Performance improvement scheme of multifocus image fusion derived by difference images. Signal Process. 2016;128:474–493. doi: 10.1016/j.sigpro.2016.05.015.

- 46. Cvejic N., Canagarajah C., Bull D. Image fusion metric based on mutual information and Tsallis entropy. Electron. Lett. 2006;42:626–627. doi: 10.1049/el:20060693.

- 47. Liu Z., Blasch E., Xue Z., Zhao J., Laganiere R., Wu W. Objective Assessment of Multiresolution Image Fusion Algorithms for Context Enhancement in Night Vision: A Comparative Study. IEEE Trans. Pattern Anal. Mach. Intell. 2012;34:94–109. doi: 10.1109/TPAMI.2011.109.

- 48. Petrović V. Subjective tests for image fusion evaluation and objective metric validation. Inf. Fusion. 2007;8:208–216. doi: 10.1016/j.inffus.2005.05.001.

- 49. Sheikh H., Bovik A. Image information and visual quality. IEEE Trans. Image Process. 2006;15:430–444. doi: 10.1109/TIP.2005.859378.