Abstract

When processing spoken language sentences, listeners continuously make and revise predictions about the upcoming linguistic signal. Unlike listeners, signers comprehending American Sign Language (ASL) must simultaneously attend to the unfolding linguistic signal and the surrounding scene through the visual modality. This may affect how signers activate potential lexical candidates and allocate visual attention as a sentence unfolds. To determine how signers resolve referential ambiguity during real-time comprehension of ASL adjectives and nouns, we presented deaf adults (n = 18, 19–61 years) and deaf children (n = 20, 4–8 years) with videos of ASL sentences in a visual world paradigm. Sentences had either an adjective-noun (“SEE YELLOW WHAT? FLOWER”) or a noun-adjective (“SEE FLOWER WHICH? YELLOW”) structure. The degree of ambiguity in the visual scene was manipulated at the adjective and noun levels (i.e., including one or more yellow items and one or more flowers in the visual array). We investigated effects of ambiguity and word order on target looking at early and late points in the sentence. Analysis revealed that adults and children made anticipatory looks to a target when it could be identified early in the sentence. Further, signers looked more to potential lexical candidates than to unrelated competitors in the early window, and more to matched than to unrelated competitors in the late window. Children’s gaze patterns largely aligned with those of adults, with some divergence. Together, these findings suggest that signers allocate referential attention strategically based on the amount and type of ambiguity at different points in the sentence when processing adjectives and nouns in ASL.

Keywords: American Sign Language, eye tracking, semantic processing, visual world, deaf

Introduction

A key task for individuals processing and comprehending language is to determine the referent of an utterance. In most cases, language is produced and perceived within a complex natural environment that contains multiple potential referents for every spoken or signed utterance, making referent resolution a difficult task. Studies of spoken language processing have revealed that listeners continuously make and revise predictions about upcoming information while the linguistic signal is unfolding. Listeners interpret a linguistic signal immediately and incrementally with respect to the visual context (Spivey et al. 2001, 2002). Similarly, deaf signers can identify a target object based on partial information in an unfolding ASL sentence (Lieberman et al. 2017). The fact that both linguistic and non-linguistic information are perceived visually may influence how signers allocate referential attention when the surrounding visual scene varies in the amount and type of ambiguity relative to an unfolding sentence. The current study addresses this possibility by examining incremental processing of adjectives and nouns in American Sign Language (ASL) among deaf children and adults. Before turning to the current study, we briefly review previous findings related to incremental processing of semantic information, integration of adjectives and nouns, and gaze patterns during ASL comprehension.

Incremental processing during sentence comprehension

Listeners often hear sentences in which semantic information produced at an early point in the sentence provides sufficient disambiguating information for the listener to identify the target. When this scenario is presented experimentally, listeners make rapid and anticipatory eye movements to a target picture as soon as they have sufficient information to identify it (e.g., Altmann and Kamide 2007). Frequently, however, the linguistic signal does not immediately provide sufficient information to identify the target. Instead, sentences are often initially ambiguous in relation to the visual context. In these cases, listeners have been shown to divide their attention between potential targets, activating and considering all possible referents until a single referent can be uniquely identified from information in the linguistic signal (Kamide et al. 2003). Listeners use cumulative and combinatory information over time to narrow down a set of possible referents, and must constantly update their mental representations of lexical candidates in order to successfully identify a single target word (Eberhard et al. 1995; Sedivy et al. 1999; Spivey et al. 2001).

Dynamic comprehension of semantic information has been studied in the case of adjective-noun combinations (Sedivy et al. 1999). Adjectives provide an interesting test case for incremental processing for several reasons: they range in the degree to which they characterize objects, they vary cross-linguistically in utterance position relative to the noun, and comprehension of adjectives shows protracted development in children learning language (Gasser and Smith 1998). Sedivy and colleagues (1999) carried out an initial investigation of incremental processing of sentences based on the amount of disambiguating information in a prenominal adjective. Participants were given instructions such as “Touch the blue pen” while sitting in front of visual arrays containing four objects in which either one or two of the objects were blue. Participants were significantly faster to gaze towards the target when there was only one blue object in the array, suggesting that adjective information can be used to predict a target noun.

When an adjective signals one or more referents in a visual scene, a key factor mediating incremental processing is the position of the adjective in the sentence relative to the noun (Ninio 2004). This can be studied by comparing languages in which the adjective is either pre- or post-nominal within the sentence. Rubio-Fernández and colleagues (2018) presented native speakers of Spanish and English with referential expressions such as ‘el triángulo azul’/‘the blue triangle’ that differ in their pre- or post-nominal adjective position, and asked them to click on the matching target in a visual array. The array included a target, a shape competitor, a color competitor, and an unrelated competitor. When searching for the blue triangle, English speakers divided their attention between the two blue objects until the noun was presented and the referent could be identified. In contrast, Spanish speakers shifted their attention to the two triangles until the target could be identified based on the post-nominal adjective. When Spanish-speaking bilinguals were presented with English input, their visual search pattern was similar to that observed for English speakers, suggesting that referential attention is guided by the constituent order of the language in which the input is presented. However, it remains unclear how individuals comprehending a language with variable word order process adjectives, or how the position of adjectives relative to nouns affects comprehension in such a language.

Incremental processing during development

A critical question related to incremental processing concerns the timeframe in which this comprehension strategy develops in childhood. Ninio (2004) suggested that integrating noun and adjective information presents a challenge to young children, particularly when there are four pictures with different amounts of ambiguity relative to the adjective and noun. In English, the ability to integrate adjective and noun information incrementally in simple sentences appears to develop during the third year. Fernald and colleagues (2010) presented 30- and 36-month-old English-speaking children with auditory stimulus sentences like “Can you find the blue car?” and visual scenes with two pictures, including a target and either a color competitor, an object competitor, or an unrelated competitor. The 30-month-olds waited to shift gaze to the target until they heard the noun, and did not use the information provided by the color adjective even when it was sufficient to identify the target. The 36-month-olds, in contrast, were able to integrate adjective and noun information and identified the correct target as soon as the linguistic signal provided sufficient information.

There is also evidence that the ability to combine semantic information to predict a target is available as early as two years of age (Mani and Huettig 2012) or three years of age (Borovsky et al. 2012). Borovsky and colleagues (2012) found that children between the ages of three and ten years, and adults, made rapid and anticipatory eye movements to a target based on combinatory information about an agent and action that could be used to predict a sentence-final object. In this case, vocabulary ability was a significant predictor of target looking speed for both children and adults, suggesting that language knowledge can be used to support incremental processing. However, when children were required to learn new agent-action-object relationships, 3- to 4-year-old children were not able to use combinatory information during incremental processing; only children ages five and older used such information to make anticipatory eye movements to a target (Borovsky et al. 2014).

With regard to word order effects, Weisleder and Fernald (2009) investigated whether language-specific differences in the sequential order of nouns and adjectives affect children’s ability to interpret adjective-noun or noun-adjective phrases. A group of Spanish-speaking 3-year-old children were directed to find objects using Spanish phrases in which the adjective followed the noun. The children interpreted noun-adjective phrases incrementally, providing some evidence that incremental processing is facilitated for noun-adjective phrases relative to the adjective-noun phrases heard by children acquiring languages such as English.

Gaze and information processing in sign language

In sign languages such as ASL, the linguistic signal and the visual context are both perceived through the same visual channel. This feature of sign language comprehension requires signers to develop specific referential strategies for alternating their attention between the linguistic signal and the visual scene. Recent research has shown that at the level of lexical recognition, signers use partial information from ASL signs to make rapid gaze shifts to pictures in a visual display (Lieberman et al. 2015; MacDonald et al. 2018). At the sentence level, deaf signers can also use semantic information from an ASL verb to identify a target sign when the verb uniquely constrains the target (Lieberman et al. 2017).

Deaf children acquiring ASL must learn to allocate attention to both a visual linguistic signal and the surrounding visual scene. For deaf children who are exposed to ASL from their deaf parents, these strategies appear to develop early, as parents scaffold interactions in a way that helps children learn when to shift attention (Swisher 2000; Waxman and Spencer 1997). By about 18 months of age, deaf children have been shown to alternate attention based on their own understanding of when and how to perceive linguistic information (Harris et al. 1989). By the age of two, deaf children of deaf parents make frequent and meaningful gaze shifts between linguistic input from their caregiver and the objects and pictures to which that input refers (Lieberman et al. 2014).

In a recent study of lexical recognition during ASL comprehension, MacDonald and colleagues (2018) found that deaf and hearing children acquiring ASL initiated gaze shifts toward a target sign even before sign offset, paralleling findings for adult signers. In our previous study with deaf children (Anonymous), we found that deaf children between the ages of four and eight years can use semantic information from an ASL verb to predict a sentence-final target. For example, if children saw a sentence that started with DRINK while only one drinkable object was on the screen, they would make anticipatory looks towards the target object before the target ASL sign was produced. However, in this study there was always only one possible target once the verb provided semantically constraining information. In contrast, when there is referential ambiguity in the visual scene such that the target can be narrowed down but not identified until later in the sentence, deaf children may manage and allocate visual attention more sequentially. Understanding children’s ability to handle referential ambiguity is one of the aims of the current study.

Across sign languages, there is variability and flexibility in the relative position of adjectives and nouns within an utterance (Kimmelman 2012; Sandler and Lillo-Martin 2006; Sutton-Spence and Woll 1999). ASL has pre-nominal and post-nominal adjectives, but their usage tends to vary between signers (Neidle and Nash 2012; Sandler and Lillo-Martin 2006). In particular, signers may be more likely to choose a pre-nominal adjective when the adjective is categorical, such as color, but may be more likely to use a post-nominal adjective for relative adjectives or when producing classifiers (Rubio-Fernández et al. 2019). Children acquiring ASL have been shown to use inconsistent word order in general (Chen Pichler 2011), suggesting that word order knowledge undergoes protracted development for children acquiring ASL. It is not clear how word order impacts processing of semantic information during ASL comprehension among deaf children. Thus, in the current study we manipulate word order in sentences presented to deaf children and adults to examine whether color adjectives produced either before or after a noun object facilitate target recognition.

The current study

We investigated real-time processing of ASL adjectives and nouns in deaf adults and children. Using a modified visual world paradigm (Tanenhaus et al. 1995), we examined the effects of referential ambiguity within a visual scene. Specifically, we asked how ambiguity relative to adjectives and nouns affects gaze patterns towards a target object in an unfolding ASL sentence. We presented deaf signers with ASL sentences with the structure VERB SIGN1 QUESTION-PARTICLE SIGN2, and manipulated the pictures on the screen so that the target could be uniquely identified either early in the sentence, i.e., at SIGN1, or only late in the sentence, i.e., at SIGN2. We predicted that signers would show anticipatory looks to the target object when there was sufficient information early in the sentence to identify the target, i.e., when SIGN1 uniquely identified a single target object in the visual scene. In contrast, if the information was not sufficient to identify the correct target, i.e., when SIGN1 was ambiguous in its reference, we expected signers to delay looks to the target until later in the sentence. To study word order effects, we included sentences where the adjective occurred at SIGN1 and the noun at SIGN2, and vice versa.

One way in which the current study goes beyond previous findings is that it allowed us to investigate how signers activate potential target candidates as the sentence unfolds. If signers, like speakers, shift gaze to potential targets even before the target can be uniquely identified, then we would expect to see increased looks to potential targets relative to unrelated competitors in the early window. For example, if the sentence starts with “SEE YELLOW…” then signers may look more at two yellow objects than at two non-yellow objects during this part of the sentence. This gaze pattern would suggest that semantic processing is a modality-independent process and that signers allocate gaze much as listeners do. However, this strategy, in which listeners gaze towards potential referents, may not be an efficient one for signers, as it requires them to shift attention away from the unfolding linguistic input, which is critical for further disambiguation of the referent. Thus, the visual attentional demands of perceiving sign language may require signers to continue attending to the sentence until the target can be uniquely identified.

Once the target sign has been even partially produced, we expected that the majority of fixations would be to the target picture. However, signers may also look more towards objects that match the target sign in either color or object. We investigated whether signers, like speakers, activate objects or features that match the target, even though the target had already been uniquely constrained. Thus, if signers see “SEE YELLOW WHAT? FLOWER”, we predicted that while most looks would be to the yellow flower, signers would show increased looks to a matched competitor (e.g., a red flower) relative to unrelated objects.

We first investigated processing in deaf adult ASL signers to shed light on the effects of word order and ambiguity on the processing of ASL sentences in adult signers with native or early sign experience. With this baseline information regarding typical adult gaze patterns, we investigated the same question in deaf children who had acquired ASL at birth or early in life. We predicted that children would show similar effects of ambiguity as adults, i.e., that they would make anticipatory gaze shifts to the target picture in trials in which the target could be identified early, although the effect might be smaller than that observed in adults. We further predicted that children might be more susceptible to adjective and noun competitors at both early and late points in the sentence, and thus would show increased fixations to related competitors overall. Finally, we predicted that older children would show more anticipatory looks than younger children, providing evidence for development of referential attention and the ability to integrate adjective and noun information throughout childhood.

Methods

Participants

Adult participants.

Eighteen deaf adults (11 males, 7 females) between 19 and 61 years of age (M = 32 years) participated. Nine participants had deaf parents and had been exposed to ASL from birth. The remaining nine participants had hearing parents and were first exposed to ASL before age 2 (N = 6), at age 5 (N = 1), or between ages 11 and 12 (N = 2). All participants had been using ASL as their primary form of communication for at least 19 years. One additional adult was tested but was unable to complete the eye-tracking task.

Ethical approval was provided by the Institutional Review Board at the participating university. All participants viewed study information in ASL or English and gave written consent prior to the experiment.

Child participants.

Twenty deaf children (12 females, 8 males) between the ages of 4;2 and 8;1 (M = 6;5, SD = 1;3) participated. Seventeen children had at least one deaf parent and were exposed to ASL from birth. The remaining three children had two hearing parents and were exposed to ASL by the age of two and a half. All parents reported that they used ASL as the primary form of communication at home. All but two of the children attended a state school for deaf children in which ASL was used as the primary language of instruction; the other two children attended a segregated classroom for deaf children within a public school program. One additional child was recruited but was unable to complete the eye-tracking task.

Stimuli

Experimental stimuli consisted of recorded ASL sentences presented with four pictures on a 17-inch LCD display. The pictures were 300 × 300 pixels and were presented in the four quadrants of the screen. Pictures were color photo-realistic images on a white background. The ASL sentence video was presented in the center of the display on a black background measuring 300 × 300 pixels.

The ASL sentence had the structure VERB SIGN1 QUESTION-PARTICLE SIGN2, where SIGN1 and SIGN2 included an adjective and a noun. Each sentence began with a verb prompt (e.g., LOOK-FOR, FIND, SEE). The placement of the adjective and noun varied to create two different constituent orders (noun-adjective and adjective-noun). The wh-question particle was included to enable standardization of the timing of critical points in the sentence (i.e., adjective and noun onsets), and to allow time for participants to fixate the surrounding pictures while processing the dynamic sentences.

The ASL stimulus sentences were recorded by two deaf native signers who produced the sentences with a positive affect and slightly child-directed prosody. Stimuli were edited using Adobe Premiere Pro. The videos were edited such that the last frame of the verb occurred at exactly 1000 ms from video onset. To do this, the first frame in which the signer’s hands began to transition away from the formation of the verb sign was identified, and the point 1000 ms before this frame was then set as the starting point. At the starting point, the signer’s hands were typically in the resting position by her sides. Next, the SIGN1 QUESTION-PARTICLE portion was edited to be exactly 2000 ms long; the first frame was the frame in which the signer’s hands transitioned from the verb sign, and the final frame came at the end of the question particle before the signer’s hands transitioned to SIGN2. If the SIGN1 QUESTION-PARTICLE segment was longer or shorter than 2000 ms, the video speed was adjusted slightly up or down. Finally, SIGN2 was produced, lasting approximately 1000 ms, after which the video ended.
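The timing normalization described above can be sketched as follows. This is a hypothetical illustration, not the editing procedure itself: the names `speed_factor` and `critical_onsets` are our own, and we assume that the playback-rate change was applied uniformly to the whole SIGN1 QUESTION-PARTICLE segment.

```python
# Hypothetical sketch of the stimulus timing normalization.
# Assumption: one playback-rate multiplier is applied to the whole
# SIGN1 + QUESTION-PARTICLE segment (editing-software details are not modeled).

VERB_END_MS = 1000          # last verb frame is placed at 1000 ms from video onset
SIGN1_QP_TARGET_MS = 2000   # normalized length of SIGN1 + QUESTION-PARTICLE


def speed_factor(segment_ms: float, target_ms: float = SIGN1_QP_TARGET_MS) -> float:
    """Playback-rate multiplier that makes a segment of `segment_ms` last `target_ms`.

    Values above 1 speed the segment up; values below 1 slow it down.
    """
    if segment_ms <= 0 or target_ms <= 0:
        raise ValueError("durations must be positive")
    return segment_ms / target_ms


def critical_onsets() -> dict:
    """Onsets (ms from video onset) of the critical signs after editing."""
    sign1_onset = VERB_END_MS                      # SIGN1 segment starts where the verb ends
    sign2_onset = sign1_onset + SIGN1_QP_TARGET_MS
    return {"SIGN1": sign1_onset, "SIGN2": sign2_onset}


# A raw 2100 ms segment has to be played about 5% faster.
print(round(speed_factor(2100), 3))  # 1.05
print(critical_onsets())             # {'SIGN1': 1000, 'SIGN2': 3000}
```

Under these assumptions every edited video places SIGN1 onset at 1000 ms and SIGN2 onset at 3000 ms, which is what allows fixed analysis windows across items.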

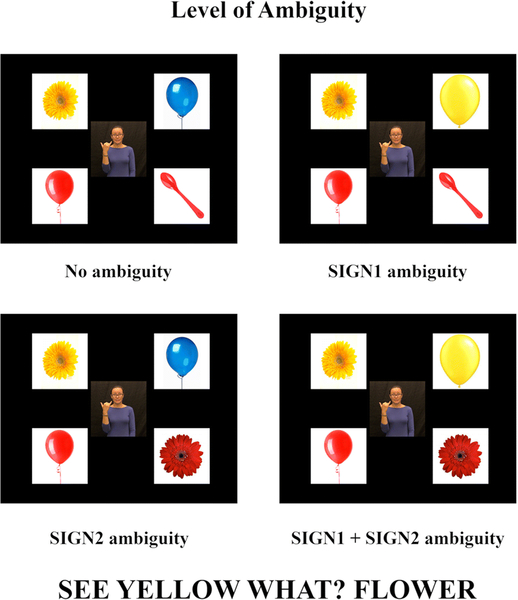

The pictures in the display consisted of one target and three non-target pictures. The non-target pictures were manipulated such that the number of potential referents varied at both early and late points in the sentence (Fig. 1). That is, the pictures included either a color competitor only, an object competitor only, a color and object competitor, or no related competitors. This resulted in four different conditions in each of two word orders (Table 1).

Fig. 1.

Example of stimuli in each condition. The target (e.g., yellow flower) is presented with three competitors and a signed sentence (e.g., “SEE YELLOW WHAT? FLOWER”).

Table 1.

Overview of picture displays presented for each word order in each condition varying the level of ambiguity

| Level of ambiguity | Adjective-Noun (SEE YELLOW WHAT? FLOWER) | Noun-Adjective (SEE FLOWER WHICH? YELLOW) |
|---|---|---|
| SIGN1 ambiguity | target, adjective competitor, two unrelated competitors | target, noun competitor, two unrelated competitors |
| SIGN2 ambiguity | target, noun competitor, two unrelated competitors | target, adjective competitor, two unrelated competitors |
| SIGN1 + SIGN2 ambiguity | target, adjective competitor, noun competitor, one unrelated competitor | target, noun competitor, adjective competitor, one unrelated competitor |
| No ambiguity | target, three unrelated competitors | target, three unrelated competitors |

Importantly, unrelated competitors shared non-target attributes with other pictures in the display. For example, if the target was a yellow flower, competitors might include a purple dress and a purple chair. In this way, participants could not distinguish trial types simply by observing whether any of the surrounding pictures shared either a color or object attribute.

The adjective in the sentence was always a color word; color words were chosen based on previous studies of adjective processing in young children (Fernald et al. 2010), and due to the fact that colors are categorical and salient (Rubio-Fernández et al. 2018). The nouns in the sentences were all chosen to be concrete, easily depicted and early acquired signs. Nouns were excluded if they were strongly associated with a specific color (e.g., frogs are typically green; bananas are yellow). Objects included clothing items, cars, furniture, and other household objects that can more freely vary in color.

There were six trials in each of eight conditions, for a total of 48 trials. To create the visual arrays, 12 color/object combinations were selected (see Appendix A for a full stimuli list). Each of the 12 color/object combinations was presented four times, each time with a different target, a different set of competitors, and in a different condition. Across versions of the experiment, the stimuli were counterbalanced such that any object and color was equally likely to appear as a target or a competitor. The position of the target on the screen was counterbalanced as well. The trial order was pseudorandomized and presented in three blocks of 16 trials, with a break between blocks.
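The design arithmetic (4 ambiguity levels × 2 word orders × 6 trials = 48 trials in 3 blocks of 16) can be illustrated with a short sketch. The balancing rules here are assumptions, not the study's actual constraints: we assume each block holds two trials of every condition, use a plain shuffle in place of the unspecified pseudorandomization constraints, and balance target quadrants over the full list (12 per quadrant). The function and condition labels are hypothetical.

```python
import itertools
import random
from collections import Counter

# Hypothetical design sketch; condition labels and balancing rules are assumptions.
AMBIGUITY = ["SIGN1", "SIGN2", "SIGN1+SIGN2", "none"]
WORD_ORDER = ["adj-noun", "noun-adj"]
TRIALS_PER_CONDITION = 6
N_BLOCKS = 3
QUADRANTS = ["top-left", "top-right", "bottom-left", "bottom-right"]


def build_trial_list(seed: int = 0) -> list:
    rng = random.Random(seed)
    conditions = list(itertools.product(AMBIGUITY, WORD_ORDER))  # 4 x 2 = 8 conditions
    per_block = TRIALS_PER_CONDITION // N_BLOCKS                 # 2 trials per condition per block
    trials = []
    for block in range(1, N_BLOCKS + 1):
        block_conditions = [c for c in conditions for _ in range(per_block)]
        rng.shuffle(block_conditions)                            # 16 trials per block
        trials += [{"block": block, "ambiguity": a, "word_order": w}
                   for a, w in block_conditions]
    # Balance target position over the whole list: 48 / 4 = 12 trials per quadrant.
    positions = QUADRANTS * (len(trials) // len(QUADRANTS))
    rng.shuffle(positions)
    for trial, position in zip(trials, positions):
        trial["target_position"] = position
    return trials


trials = build_trial_list()
print(len(trials))                          # 48
print(Counter(t["block"] for t in trials))  # 16 trials in each of the 3 blocks
```

A real pseudorandomization would typically add constraints such as limiting consecutive trials of the same condition; those are not specified in the text and are omitted here.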

Procedure

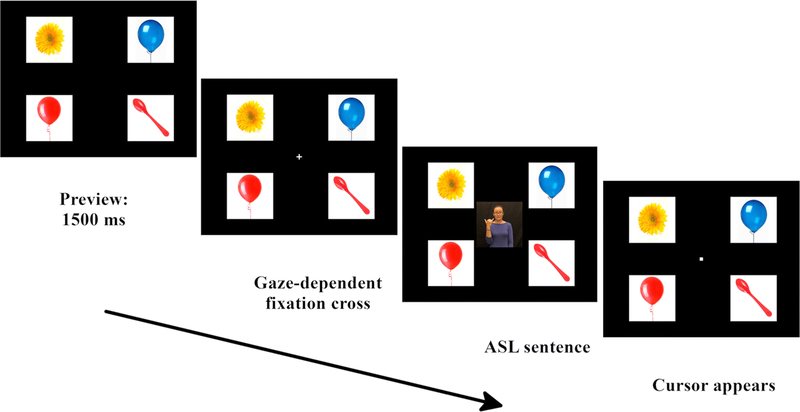

After obtaining written consent from the participants (adults) or parents (children), participants were seated in front of the screen and the eye-tracker. The stimuli were presented using Eyelink Experiment Builder software (SR Research). Participants watched a pre-recorded ASL instruction video explaining that they would see four pictures followed by an ASL sentence and that they should click on the matching picture when the cursor appeared on the screen. A 5-point calibration and validation sequence was conducted prior to starting the experiment. Additionally, a drift correction check was performed before each trial block. Participants were presented with three practice trials to familiarize them with the procedure.

Each trial started with a preview period of 1500 ms, in which the pictures appeared on the screen. Next, a gaze-contingent central fixation cross appeared, and the ASL video began once participants fixated the cross. At the end of the video, a cursor appeared in the center of the screen. Adult participants were instructed to click on the matching picture. The pictures remained on the screen until a picture was clicked. Participants were encouraged to guess if they did not know the answer (Fig. 2).

Fig. 2.

Structure of a single trial

The procedure for the child participants was identical to that for the adults, except that the children were instructed to point to the target instead of clicking with the mouse, so that they could remain focused on the screen without looking down at the mouse. A second experimenter sat next to the child and clicked the picture that corresponded to their point.

Eye movements were recorded using an Eyelink 1000 eye-tracker (SR Research) in a remote arm-mount configuration at 500 Hz. The eye tracker and the display were placed 580–620 mm from the participant’s face. On each trial, fixation recordings started at the initial presentation of the visual scene and continued throughout the ASL video until participants clicked on a picture. Data were binned offline into 50 ms time windows.
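At 500 Hz each sample covers 2 ms, so a 50 ms bin contains 25 samples. The sketch below shows one way such binning could work; it is an illustration under stated assumptions rather than the pipeline used in the study. The function name is hypothetical, and we assume that samples have already been labeled with an area of interest (AOI) and that trackloss samples (`None`) count toward each bin's denominator.

```python
from collections import Counter

SAMPLE_RATE_HZ = 500   # Eyelink 1000 sampling rate used in the study
BIN_MS = 50            # offline bin width


def bin_samples(aoi_samples, sample_rate_hz=SAMPLE_RATE_HZ, bin_ms=BIN_MS):
    """Bin per-sample AOI labels into fixed time bins (hypothetical helper).

    aoi_samples: one AOI label per sample ("target", "video", ...), None for trackloss.
    Returns one dict per bin with its start time and the proportion of the bin's
    samples on each AOI; trackloss samples are counted in the denominator.
    """
    samples_per_bin = sample_rate_hz * bin_ms // 1000   # 25 samples at 500 Hz
    bins = []
    for start in range(0, len(aoi_samples), samples_per_bin):
        chunk = aoi_samples[start:start + samples_per_bin]
        counts = Counter(label for label in chunk if label is not None)
        bins.append({
            "t_ms": start * 1000 // sample_rate_hz,     # bin start relative to recording onset
            "props": {aoi: n / len(chunk) for aoi, n in counts.items()},
        })
    return bins


# 100 ms of made-up data: 50 ms on the video, then 40 ms on the target plus 10 ms of trackloss.
samples = ["video"] * 25 + ["target"] * 20 + [None] * 5
for b in bin_samples(samples):
    print(b)
```

Whether trackloss should stay in the denominator is a design choice: keeping it makes per-bin proportions comparable across bins, while dropping it yields proportions of tracked time only.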

Picture naming task

Child participants were administered a picture naming task consisting of 174 pictures used to elicit single ASL signs. The pictures were primarily concrete nouns (N = 163) and color signs (N = 11) and consisted of items and colors used in the current task and in related experiments on deaf children’s ASL processing. Children were seated at a table next to an experimenter who presented the pictures on a laptop computer. Pictures were presented on PowerPoint slides with arrays of two to five pictures per slide. Children were instructed to name the objects in ASL, and their responses were video recorded for later coding. This task was administered following the eye-tracking task. The purpose of the picture naming task was to establish that children had a basic knowledge of ASL vocabulary items typically acquired by the time they enter kindergarten (Anderson and Reilly 2002). In addition, the task was used to evaluate knowledge of the specific items used in the eye-tracking task, so that trials with experimental stimulus signs that the child did not produce could later be excluded from analysis of gaze data.

Children’s responses to each item were counted as correct or incorrect. In order to be counted as correct, the child’s production had to match the target sign with minimal phonological variation (phonological substitutions typical of children acquiring ASL were allowed). All other cases were counted as incorrect. The mean performance on the task was 82% (range 32% to 97%). Of the 20 child participants, one participant completed only 117 (out of 174) items due to lack of attention. Importantly, over half of the participants scored at least 90% on the task, suggesting that children were largely familiar with the items used as stimuli in the experiment.

We used responses on the picture naming task to determine whether children knew the specific set of signs that were used in each stimulus sentence they viewed in the eye-tracking task. We matched responses to both SIGN1 and SIGN2, i.e., target object and color, and excluded trials in which the child did not produce both signs correctly. Using this approach, 158 (19.8 %) trials were excluded from final analyses. Additionally, we excluded any participant who, after removing trials for which the child did not produce the target object or color, contributed fewer than 10 of 48 trials. This resulted in excluding four participants from the dataset, leaving 16 children that were included in the final analyses. Although children’s production of the stimuli items may have underestimated their comprehension knowledge, we chose to use this conservative approach in order to ensure that gaze data could be interpreted with certainty that children were perceiving familiar labels.
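The two exclusion steps above (drop a trial unless the child produced both the color and the object sign; then drop any child left with fewer than 10 trials) can be expressed compactly. The sketch below is illustrative only: the data layout and the name `filter_by_naming` are our own assumptions.

```python
from collections import Counter

MIN_TRIALS = 10  # children contributing fewer usable trials are dropped


def filter_by_naming(trials, produced):
    """Apply the picture-naming exclusion criteria (hypothetical helper).

    trials:   list of dicts with "child", "color" and "object" keys, one per eye-tracking trial.
    produced: dict mapping each child to the set of signs produced correctly
              in the picture naming task.
    Returns the retained trials and the set of retained children.
    """
    # Step 1: keep a trial only if the child produced BOTH the color and the object sign.
    kept = [t for t in trials
            if {t["color"], t["object"]} <= produced.get(t["child"], set())]
    # Step 2: drop children left with fewer than MIN_TRIALS usable trials.
    per_child = Counter(t["child"] for t in kept)
    included = {child for child, n in per_child.items() if n >= MIN_TRIALS}
    return [t for t in kept if t["child"] in included], included


# Made-up example: child B produced "red" and "car" but not "yellow" or "flower",
# so only 5 of B's trials survive step 1 and B is excluded in step 2.
trials = ([{"child": "A", "color": "yellow", "object": "flower"}] * 12
          + [{"child": "B", "color": "yellow", "object": "flower"}] * 12
          + [{"child": "B", "color": "red", "object": "car"}] * 5)
produced = {"A": {"yellow", "flower"}, "B": {"red", "car"}}
kept, included = filter_by_naming(trials, produced)
print(included)   # {'A'}
print(len(kept))  # 12
```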

Results

Data cleaning

We assessed accuracy on the task and excluded trials from the analysis in which participants did not select the correct target picture. This resulted in removal of 19 (2.2 %) trials for adult participants and 47 (7.4 %) trials for child participants. Next, we excluded trials exceeding the trackloss threshold of 50% for adult participants, which led to removal of 14 (1.6 %) trials. For child participants, we used a lower trackloss threshold of 20%.¹ This led to removal of 10 trials (1.7 %).
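The two cleaning steps can be sketched as follows, assuming (our assumption, not stated in the text) that trackloss is the proportion of samples in a trial with no valid gaze position and that "exceeding" a threshold means strictly above it. Field and function names are hypothetical.

```python
TRACKLOSS_THRESHOLD = {"adult": 0.50, "child": 0.20}  # maximum proportion of lost samples


def clean_trials(trials, group):
    """Drop incorrect trials, then trials whose trackloss exceeds the group threshold.

    trials: dicts with "response", "target" and "aoi_samples"
            (one AOI label per sample, None where the tracker lost the eye).
    """
    threshold = TRACKLOSS_THRESHOLD[group]
    # Step 1: accuracy filter.
    correct = [t for t in trials if t["response"] == t["target"]]
    # Step 2: trackloss filter.
    kept = []
    for t in correct:
        samples = t["aoi_samples"]
        trackloss = sum(s is None for s in samples) / len(samples)
        if trackloss <= threshold:   # "exceeding" the threshold is taken to mean strictly above it
            kept.append(t)
    return kept


# Made-up trials: one incorrect, one with 30% trackloss, one with 10% trackloss.
trials = [
    {"response": "b", "target": "a", "aoi_samples": ["a"] * 10},
    {"response": "a", "target": "a", "aoi_samples": ["a"] * 7 + [None] * 3},
    {"response": "a", "target": "a", "aoi_samples": ["a"] * 9 + [None]},
]
print(len(clean_trials(trials, "adult")))  # 2
print(len(clean_trials(trials, "child")))  # 1
```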

Eye tracking results

Approach to eye tracking analysis

Eye movement data were analyzed in terms of fixations to five areas of interest (the four pictures and the video) across the sentence. Accuracy was calculated by dividing fixations to the target by the sum of fixations to all areas of interest for each participant and item. We performed permutation-based analyses (Maris and Oostenveld 2007) on divergences in the time course to examine effects of word order and ambiguity of the visual scene on target fixations.
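To make the permutation approach concrete, the sketch below implements a generic cluster-mass permutation test in the spirit of Maris and Oostenveld (2007) for a within-participant contrast such as word order. It is not the implementation used in this study. Assumptions: per-participant, per-bin target proportions are available for both conditions; a paired t statistic per bin with a cluster-forming threshold of |t| > 2.0 (our choice); and condition labels are permuted by flipping the sign of each participant's difference curve.

```python
import numpy as np


def paired_t(diff):
    """One-sample t statistic per time bin; diff has shape (n_participants, n_bins)."""
    n = diff.shape[0]
    sd = diff.std(axis=0, ddof=1)
    sd = np.where(sd == 0, np.finfo(float).eps, sd)   # guard against zero variance
    return diff.mean(axis=0) / (sd / np.sqrt(n))


def find_clusters(t, threshold):
    """Runs of adjacent bins with |t| > threshold and the same sign, as (start, end, mass)."""
    clusters, start = [], None
    for i, v in enumerate(np.append(t, 0.0)):          # trailing 0 closes an open run
        above = abs(v) > threshold
        if start is not None and (not above or np.sign(v) != np.sign(t[start])):
            clusters.append((start, i, float(t[start:i].sum())))  # end index is exclusive
            start = None
        if above and start is None:
            start = i
    return clusters


def cluster_permutation_test(cond_a, cond_b, threshold=2.0, n_perm=1000, seed=0):
    """Cluster-mass permutation test over time bins for two within-participant conditions.

    cond_a, cond_b: arrays of shape (n_participants, n_bins).
    Returns (start_bin, end_bin_exclusive, mass, p) for each observed cluster.
    """
    diff = np.asarray(cond_a, float) - np.asarray(cond_b, float)
    observed = find_clusters(paired_t(diff), threshold)
    rng = np.random.default_rng(seed)
    null_max = np.empty(n_perm)
    for k in range(n_perm):
        # Randomly swap the two condition labels within each participant.
        signs = rng.choice([-1.0, 1.0], size=(diff.shape[0], 1))
        perm = find_clusters(paired_t(diff * signs), threshold)
        null_max[k] = max((abs(m) for _, _, m in perm), default=0.0)
    return [(s, e, m, float(np.mean(null_max >= abs(m)))) for s, e, m in observed]


# Simulated data: 16 participants, 40 bins, a true difference in bins 10-20.
rng = np.random.default_rng(1)
a = rng.normal(0.0, 0.5, size=(16, 40))
b = rng.normal(0.0, 0.5, size=(16, 40))
a[:, 10:21] += 1.0
for start, end, mass, p in cluster_permutation_test(a, b):
    print(start, end, round(mass, 1), p)
```

Multiplying a bin index by 50 ms and adding the window onset converts clusters back to time ranges like those reported in the results.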

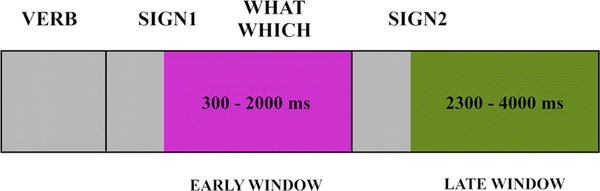

The next analysis addressed how signers allocate gaze depending on the degree of ambiguity in the pictures relative to the target. We divided the time course into two discrete time windows based on the onsets of SIGN1 and SIGN2 in the stimulus sentence (Fig. 3). The early window spans the part of the sentence from the onset of SIGN1 until the onset of SIGN2. To ensure that gaze shifts reflected responses to the unfolding stimuli, the first 300 ms of each time window was excluded from the analysis. Thus, the early window lasted 1700 ms, starting 300 ms after SIGN1 onset. The second time window, the late window, began 300 ms after SIGN2 onset and also lasted 1700 ms. The sentence-initial verb was excluded from the analysis because participants almost exclusively fixated the video stimulus during its presentation.

Fig. 3.

Schematic of time windows used for statistical analysis
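Given the stimulus timing (SIGN1 onset at 1000 ms, SIGN2 onset at 3000 ms from video onset), the window definitions above place the early window at 1300–3000 ms and the late window at 3300–5000 ms. The sketch below makes that arithmetic explicit and selects the 50 ms bins that fall entirely inside a window. The function names are hypothetical, and using the nominal onsets rather than per-video measured onsets is an assumption.

```python
EXCLUDE_MS = 300   # initial portion of each window discarded
WINDOW_MS = 1700   # analyzed length of each window


def analysis_windows(sign1_onset_ms=1000, sign2_onset_ms=3000):
    """(start, end) in ms from video onset for the early and late windows.

    Defaults follow the nominal stimulus timing; per-video onsets could be passed instead.
    """
    return {
        "early": (sign1_onset_ms + EXCLUDE_MS, sign1_onset_ms + EXCLUDE_MS + WINDOW_MS),
        "late": (sign2_onset_ms + EXCLUDE_MS, sign2_onset_ms + EXCLUDE_MS + WINDOW_MS),
    }


def bins_in_window(bin_starts_ms, window, bin_ms=50):
    """Start times of the bins that fall entirely inside a window."""
    start, end = window
    return [b for b in bin_starts_ms if b >= start and b + bin_ms <= end]


windows = analysis_windows()
print(windows)  # {'early': (1300, 3000), 'late': (3300, 5000)}
print(len(bins_in_window(range(0, 6000, 50), windows["early"])))  # 34
```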

In the early window, we asked whether signers made anticipatory looks to the target when the early window was unambiguous (e.g., the sentence “SEE YELLOW WHAT? FLOWER” in which there was only one yellow object on the screen). We computed mean log gaze probability ratios (Knoeferle et al. 2011; Knoeferle and Crocker 2009) for the target picture relative to the competitor pictures, $\log\left(\frac{P_{\text{target}} + 1}{\sum_{c} P_{c} + 1}\right)$, where $P_{\text{target}}$ is the proportion of looks to the target and $P_{c}$ is the proportion of looks to competitor $c$. Data were analyzed using a linear mixed-effects regression model (Barr 2008) fitted with the lme4 package in R (version 3.5.0), with a fixed effect of the ambiguity of the visual scene relative to SIGN1 (ambiguous, unambiguous) and random effects for participants and items (Baayen et al. 2008).
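The dependent measure can be computed per participant-item cell as below. This is a minimal sketch assuming the natural logarithm (the base is not stated in the text); the function name is hypothetical, and the mixed-effects model itself is not reproduced here.

```python
import math


def log_gaze_ratio(p_target, p_competitors):
    """log((P_target + 1) / (sum of P_competitor + 1)) for one participant-item cell.

    Natural log is assumed. The +1 terms keep the ratio defined when a
    proportion is zero; larger values mean relatively more target looks.
    """
    return math.log((p_target + 1.0) / (sum(p_competitors) + 1.0))


# Made-up cell: 60% of looks on the target, 20% spread over the three competitors.
print(round(log_gaze_ratio(0.6, [0.1, 0.1, 0.0]), 3))  # 0.288
```

Because three competitors are summed against one target, an even split of looks (0.25 each) yields a negative value, so the comparison of interest is between the ambiguous and unambiguous conditions rather than the sign of the ratio itself.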

To determine whether signers, like speakers, begin to activate and gaze towards potential targets as the sentence unfolds, we also looked at the subset of sentences in which the target referent was ambiguous in the early window. In these cases, we asked whether signers looked to the two potential targets more than to the other two pictures. For example, given the sentence “SEE YELLOW WHAT? FLOWER” with two yellow objects on the screen, we asked whether signers looked more to these yellow objects than to the other two objects. We compared the mean proportion of fixations to potential targets vs. unrelated competitors using a linear mixed-effects regression model.
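One way to operationalize this comparison is sketched below in Python. It assumes that each picture is coded by its object and color and that SIGN1 is coded as a (feature, value) pair such as ("color", "yellow"); pictures sharing SIGN1's feature (the target and the related competitor) are grouped as potential targets. The coding scheme and the function names are hypothetical.

```python
from statistics import mean


def classify_by_sign(pictures, sign):
    """pictures: {picture_id: {"object": ..., "color": ...}}
    sign: (feature, value), e.g. ("color", "yellow") for the sign YELLOW.
    Pictures that share the sign's feature are potential targets."""
    feature, value = sign
    return {
        pid: "potential_target" if attrs[feature] == value else "unrelated"
        for pid, attrs in pictures.items()
    }


def mean_proportion_by_category(proportions, categories):
    """proportions: {picture_id: proportion of early-window looks}.
    Returns the mean proportion of looks for each picture category."""
    grouped = {}
    for pid, category in categories.items():
        grouped.setdefault(category, []).append(proportions[pid])
    return {category: mean(vals) for category, vals in grouped.items()}
```

With a hypothetical display of a yellow flower, a yellow balloon, a red balloon, and a blue cup, SIGN1 = YELLOW marks the two yellow pictures as potential targets; the per-category means are what would then enter the mixed-effects comparison.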

Although looks to the target and competitors in the early window enabled us to investigate effects of early ambiguity, we were also interested in exploring whether signers activated lexical items that were produced late in the sentence, even when the target had already been identified. To do this, we categorized the pictures in the late window according to whether the competitor matched one of the properties (object or color) of SIGN2. For example, in the sentence “SEE YELLOW WHAT? FLOWER”, we compared looks to a red flower with looks to the two unrelated competitors. We compared the proportion of fixations to matched and unrelated competitors for the subset of trials in which there were two possible referents that matched SIGN2.
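The late-window categorization follows the same logic, now applied to SIGN2 and the non-target pictures. In this hypothetical Python sketch, with pictures again coded by object and color, a trial enters the analysis only when exactly two pictures match SIGN2, and the non-target match is labeled the matched competitor.

```python
def sign2_is_ambiguous(pictures, sign2):
    """True when exactly two pictures share SIGN2's feature value."""
    feature, value = sign2
    return sum(attrs[feature] == value for attrs in pictures.values()) == 2


def classify_late_competitors(pictures, target_id, sign2):
    """Label each non-target picture as matched (shares SIGN2's feature
    value with the target) or unrelated."""
    feature, value = sign2
    return {
        pid: "matched" if attrs[feature] == value else "unrelated"
        for pid, attrs in pictures.items()
        if pid != target_id
    }
```

For “SEE YELLOW WHAT? FLOWER” with a yellow flower as the target, SIGN2 is coded ("object", "flower"), so a red flower is labeled matched and the remaining two pictures unrelated.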

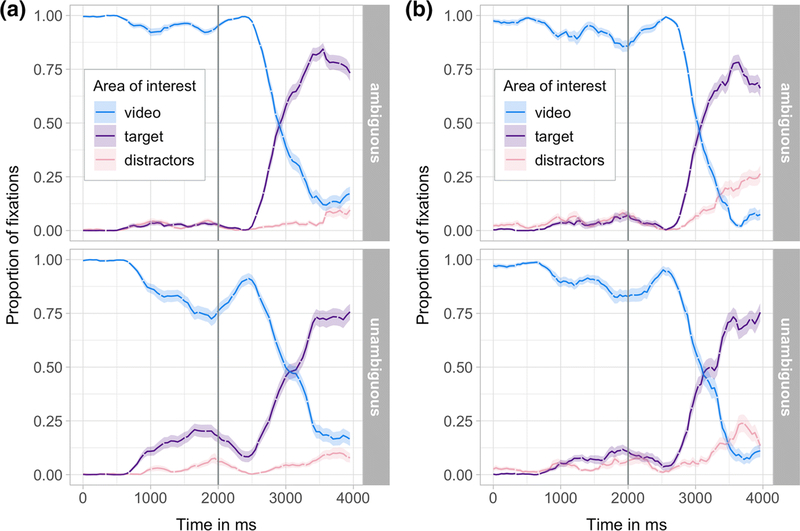

Time course of fixations

Adult participants.

We ran permutation-based analyses to compare effects of word order on target fixations in sentences with and without early ambiguity. Analysis revealed no differences between word orders in the time course for the ambiguous visual scene. In contrast, in trials in which the first sign was unambiguous, there was a word order effect between 1800 and 2150 ms (p < .05). Signers fixated the target significantly longer when the adjective preceded the noun. This is likely due to the salience of color: when signers could identify the target object by color early in the sentence, they spent longer fixating the target.

Having established these modest effects of word order, in the remaining analyses we collapsed across word order to investigate the effects of ambiguity. A permutation-based cluster analysis comparing the ambiguous and the unambiguous visual scenes revealed a significant divergence in the time course between 1150 and 2300 ms (p < .001). Adult signers shifted their gaze towards the target early in the sentence when the visual scene was unambiguous (Fig. 4). Following this initial shift to the target, signers shifted gaze back to the video to see the rest of the sentence and then directed a second fixation to the target picture. In contrast, when the visual scene was ambiguous, signers continued to watch the video, with few looks to the pictures, until the target sign was produced. There was a second divergence between conditions from 3050 to 3450 ms (p < .05), with more looks to the target in the early ambiguity conditions. Of note, this late divergence in target looks is based on dividing trials according to their early ambiguity, suggesting a possible carry-over effect of early ambiguity to later points in the sentence.

Fig. 4.

Time course of mean fixations to the video (blue), the target (purple), and the distractors (pink) from 0 to 4000 ms following the onset of SIGN1, shown separately for ambiguous (upper panel) and unambiguous (lower panel) visual scenes relative to SIGN1 for a) adult and b) child signers. The vertical line marks the onset of SIGN2.
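The cluster-based permutation logic of Maris and Oostenveld (2007) used in these time-course comparisons can be sketched in plain Python. This is a minimal illustration, not the authors' analysis code, and it rests on several assumptions: a within-participant contrast (e.g., ambiguous vs. unambiguous scenes) with each participant's target-looking curve already averaged over items and binned in time, a paired t statistic per bin, a hypothetical cluster-forming threshold of |t| > 2, sign-flipping permutations, summed t as the cluster statistic, and a +1 correction so that p is never exactly zero. All function names and parameter values are hypothetical.

```python
import math
import random


def paired_t(diffs):
    """One-sample t statistic of per-participant condition differences."""
    n = len(diffs)
    mean = sum(diffs) / n
    var = sum((d - mean) ** 2 for d in diffs) / (n - 1)
    return 0.0 if var == 0 else mean / math.sqrt(var / n)


def find_clusters(tvals, threshold):
    """Runs of adjacent bins whose |t| exceeds threshold with the same sign.
    Returns (first_bin, last_bin, mass) tuples; mass is the summed t."""
    found, start, run_sign = [], None, 0
    for i, t in enumerate(list(tvals) + [0.0]):  # sentinel closes a final run
        sign = (t > threshold) - (t < -threshold)
        if start is not None and sign != run_sign:
            found.append((start, i - 1, sum(tvals[start:i])))
            start = None
        if start is None and sign != 0:
            start, run_sign = i, sign
    return found


def cluster_permutation_test(cond_a, cond_b, threshold=2.0, n_perm=1000, seed=0):
    """cond_a, cond_b: one list per participant of target-look proportions per
    time bin (same participants, same bins). Returns observed clusters as
    (first_bin, last_bin, mass, p_value) tuples."""
    rng = random.Random(seed)
    diffs = [[a - b for a, b in zip(pa, pb)] for pa, pb in zip(cond_a, cond_b)]
    n_bins = len(diffs[0])

    def t_series(d):
        return [paired_t([row[j] for row in d]) for j in range(n_bins)]

    observed = find_clusters(t_series(diffs), threshold)
    null_max = []
    for _ in range(n_perm):
        # Under H0 the two condition labels are exchangeable within a
        # participant, so flipping the sign of a whole difference curve
        # yields one permutation of the data.
        flipped = []
        for row in diffs:
            s = rng.choice((-1, 1))
            flipped.append([s * x for x in row])
        masses = [abs(c[2]) for c in find_clusters(t_series(flipped), threshold)]
        null_max.append(max(masses, default=0.0))
    return [
        (first, last, mass,
         (1 + sum(m >= abs(mass) for m in null_max)) / (1 + n_perm))
        for first, last, mass in observed
    ]
```

Converting bin indices back to milliseconds depends on the bin width, which is not reported here; with hypothetical 50 ms bins, for instance, a cluster spanning bins 23 to 45 would correspond to 1150 to 2300 ms. Comparing each observed cluster against the distribution of the largest permuted cluster is what controls the family-wise error rate across time bins.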

Child participants.

To determine whether children used information early in the sentence to make anticipatory looks to a target, we ran a permutation-based cluster analysis comparing the ambiguous and the unambiguous visual scenes. Analysis revealed no significant divergences across the time course for the child participants. Visual inspection of the time course suggests that children shifted their gaze to the target when it could be identified early, but that they also shifted gaze when the target was still ambiguous (Fig. 4). Although the target cannot be identified at this point, these shifts likely reflect looks to potential targets, suggesting that children are quick to look for the target when they have even partial information. After this initial look, children also shifted back to the video to see the unfolding ASL sentence. Adding the factor of word order revealed a divergence in the time course by word order between 3050 and 3300 ms (p < .05). Children fixated the target longer when perceiving sentences in the noun-adjective order. This is the opposite of the pattern observed in adult signers, who fixated the target longer in the adjective-noun order. Importantly, however, the effect for adults was observed in the early window (i.e., in the adjective-noun order, the adjective portion of the sentence), while for children the effect was in the late window (i.e., in the noun-adjective order, also the adjective portion). Thus, for both populations, greater fixations to the target occurred at the point in the sentence at which the color adjective was produced. This suggests that properties of the adjective, such as the salience of color, might be at least partially responsible for these word order effects. In the remaining analyses, we collapsed across word order.

Window analysis

Target looking.

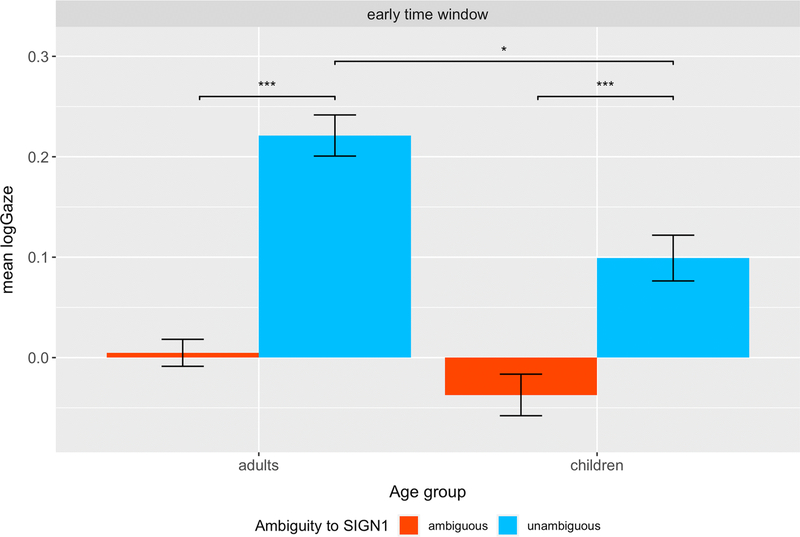

As described above, we calculated the mean log gaze probability ratio (mean logGaze) for the target picture relative to the competitor pictures in the early window (Fig. 5). Positive values indicate more fixations to the target picture, while negative values indicate more fixations to the competitors. We fit a linear mixed-effects regression to compare the mean logGaze in the early window, with a fixed effect of SIGN1 ambiguity and random effects of participant and item. For adult participants, analysis revealed a main effect of ambiguity (β = .21; SE = .02; t = 9.34; p < .001), suggesting that, as predicted, adult signers looked significantly more to the target picture in the early window when the visual scene was unambiguous vs. ambiguous in relation to SIGN1.

Fig. 5.

Mean logGaze transformation of adults’ and children’s fixations to the target relative to the competitor pictures by ambiguity of the visual scene for SIGN1 in the early window.

For child participants, we fit a linear mixed-effects regression on fixations in the early window with SIGN1 ambiguity as a fixed effect, age as a continuous covariate, and participant and item as random effects. We found a main effect of ambiguity (β = .14; SE = .03; t = 4.50; p < .001). Children fixated more on the target when it could be uniquely identified early in the sentence than when it could not yet be identified. There were no age effects.

To compare adult and child participants’ fixations in the early window, we fit a linear mixed-effects regression with SIGN1 ambiguity and age group (adults, children) as fixed effects and participant and item as random factors. The analysis showed a main effect of ambiguity (β = .21; SE = .02; t = 8.81; p < .001) and an interaction of ambiguity and age group (β = −.07; SE = .04; t = −1.97; p < .05). Planned comparisons revealed that adults fixated the target more than children did (β = .12; SE = .04; t = 3.05; p < .05) when the visual scene was unambiguous and the target could be uniquely identified in this early window.

Target and competitor looking for SIGN1 ambiguity.

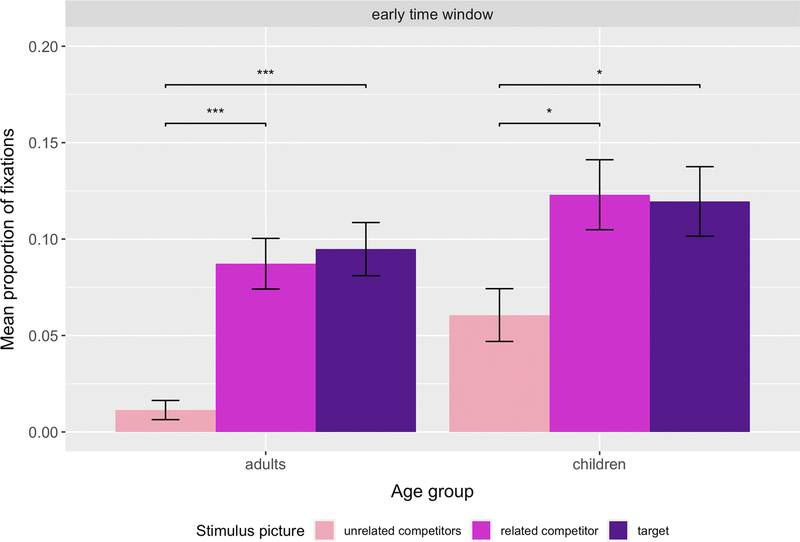

Next, we looked at the subset of trials in which the visual scene was ambiguous in relation to SIGN1 (Fig. 6) to determine whether signers begin fixating on potential targets more than on unrelated competitors as the sentence is unfolding. We calculated mean proportions of fixations to the two potential targets and the two unrelated competitors. Fitting a linear mixed-effects regression for the stimulus pictures revealed a main effect of picture type (β = −.17; SE = .02; t = −9.04; p < .001). Planned comparisons showed that adult signers looked more to the target and the related competitor than to the unrelated competitors (Table 2). As expected, participants were equally likely to fixate the target and the related competitor, as there was no way to determine during this window which of the two pictures would ultimately become the target.

Fig. 6.

Mean proportion of adults’ and children’s fixations to the target, related competitor, and the unrelated competitors for ambiguous visual scene relative to SIGN1 in the early window

Table 2.

Parameter estimates for contrasts of target, related competitor and unrelated competitor in the early time window in adult and child signers

| Contrast | Adults: Estimate | Adults: SE | Adults: t value | Children: Estimate | Children: SE | Children: t value |
|---|---|---|---|---|---|---|
| unrelated vs. related competitor | −.08 | .02 | −4.91** | −.06 | .02 | −2.66* |
| unrelated competitor vs. target | −.08 | .02 | −5.40** | −.06 | .02 | −2.51* |
| related competitor vs. target | −.01 | .02 | −0.49 | .003 | .02 | .15 |

\*\* p < .001; \* p < .05

For child participants, we ran a linear mixed-effects regression with picture type (potential targets, unrelated competitors) as the fixed effect and age as a continuous covariate. We found a main effect of picture type (β = .18; SE = .03; t = −6.47; p < .001). Planned comparisons revealed that children looked more to the target and the related competitor than to the unrelated competitors but, as expected, did not differ in their looks to the target and the related competitor (Table 2). Children’s increased looks to potential targets are likely responsible for the negative logGaze values in this condition. Age was not significant.

Comparing picture type for adults and children using a linear mixed-effects regression revealed a main effect of picture type (β = .17; SE = .02; t = 8.09; p < .001), no effect of age group, and no interaction. Thus, adults and children both looked more to the target and the related competitor than to the unrelated competitors.

Competitor looking in the late window.

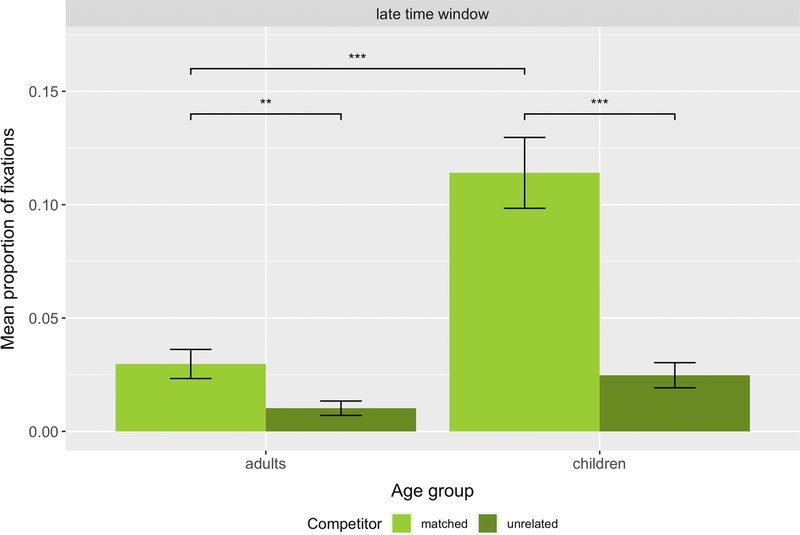

Finally, we analyzed looks to the competitor pictures in the late sentence window for the subset of trials in which the visual scene was ambiguous relative to SIGN2, regardless of whether the visual scene was ambiguous at the previously presented SIGN1 (Fig. 7). At this point in the sentence, the target could always be identified regardless of condition. We predicted that, although the majority of looks would be to the target, signers might look more to competitors that shared features with SIGN2. For example, for the sentence “SEE FLOWER WHICH? YELLOW”, we expected to see more looks to a yellow balloon (matching the target in color) than to a red balloon (no match with target features). Comparing matched and unrelated competitors using a linear mixed-effects regression revealed a significant effect (β = −.02; SE = .01; t = −2.79; p < .01), showing that adult signers fixated matched competitors more than unrelated competitors in an ambiguous context late in the sentence.

Fig. 7.

Mean proportion of adults’ and children’s fixations to matched and unrelated competitors for ambiguous visual scene for SIGN2 in the late time window

Among child participants, a linear mixed-effects regression revealed a main effect of competitor type (β = −.09; SE = .02; t = −5.41; p < .001). Children looked more to the matched than to the unrelated competitor late in the sentence (Fig. 7). There were no age effects.

Fitting a linear mixed-effects regression with competitor type and age group (adults, children) as fixed effects and participant and item as random effects showed a marginally significant effect of competitor type (β = −.02; SE = .01; t = −1.83; p = .06), a main effect of age group (β = .08; SE = .01; t = 6.40; p < .001), and a significant interaction between the two factors (β = −.07; SE = .02; t = −4.23; p < .001). Planned comparisons revealed that child signers looked more to the matched competitor than adult signers did (β = −.08; SE = .01; t = −6.39; p < .001).

Discussion

We tested adult and child signers of ASL to examine how ambiguous visual scenes affect referential attention while the linguistic signal is still unfolding. During sentence comprehension, signers made anticipatory looks to the target picture when the first adjective or noun in the sentence was sufficient to identify it. Children and adults both made these anticipatory looks, although children made fewer such looks than adults. This finding provides further evidence for incremental processing of ASL sentences in a manner parallel to spoken language semantic processing. However, signers also showed unique patterns that arose from processing in the visual modality: following the initial shift to the target, signers shifted their gaze back to the unfolding sentence to perceive the remainder of the video, even though the target had already been uniquely identified. Implications of this pattern are discussed below.

Allocating referential attention to language and visual information

As adult and child signers viewed an ASL sentence, they showed clear evidence for processing information incrementally. When the target could be uniquely identified early in the sentence, signers shifted gaze away from the video to look at the target. This pattern has been well established among listeners of spoken language (Sedivy et al. 1999; Spivey et al. 2001). However, perceiving a visual language could have motivated an alternative pattern, in which signers remained fixated towards a linguistic stimulus to ensure they did not miss any critical linguistic information. Instead, signers shifted gaze to the target as soon as they could identify it. This finding aligns with our previous findings using a similar paradigm in which a verb at the onset of a sentence constrained the target sign (Anonymous) and suggests that incremental processing is a modality-independent process.

After this initial gaze shift, a pattern unique to sign language comprehension occurred: signers quickly gazed back to the sentence video in order to perceive the remaining signs. We attribute this shift back to the video to signers’ sophisticated strategies for allocating referential attention. Signers, likely due to an accumulation of experience perceiving the world visually, can alternate gaze between an ongoing linguistic signal and the surrounding visual world in a way that maximizes perception of relevant linguistic information. Notably, signers tended to shift away from the video during the semantically light question particle in the middle of the sentence. Thus, they did not miss critical information during this well-timed shift.

Signers’ ability to carefully manage and allocate their own visual attention, and to do so by early childhood, may be rooted in a more domain-general advantage for deaf individuals in tasks involving selective visual attention (Bavelier et al. 2006). Deaf individuals show enhancements relative to hearing individuals on tasks that rely on specific aspects of visual attention, including detection of information in parafoveal vision (Neville and Lawson 1987). In the current task, all stimuli were visually accessible while participants fixated the signer, and we did not assess peripheral processing. However, we speculate that signers, when looking at a particular picture, were able to quickly shift gaze back to the unfolding linguistic stimulus when they detected additional meaningful information (i.e., the target sign). Deaf individuals’ ability to react quickly to visual stimuli (Pavani and Bottari 2012) likely has implications for their strategic allocation of attention. This ability may help signers navigate visual and linguistic information, as in the current task, but may also be advantageous when managing complex visual scenes or social interactions involving several interlocutors (Coates and Sutton-Spence 2001). Importantly, both adult and child signers in the current sample had lifelong exposure to ASL, and this experience with sign language is also a critical contributor to successful management of visual attention (Lieberman et al. 2014).

The overlap of color and object competitors in the current study allowed us to examine whether signers considered a limited set of potential target items when the target could be only partially constrained. In the early ambiguity conditions, signers saw a visual scene where the first critical sign constrained the possible targets to two of the four pictures. In studies with spoken language, listeners will start shifting gaze to the potential targets as soon as they have partial information from a color adjective (Sedivy et al. 1999). We expected that if signers behave like listeners, they should also gaze to potential targets at this point in the sentence. The current results support this interpretation. Although the majority of fixations remained on the sentence video in these ambiguous sentences, there were more fixations to potential targets (i.e., the target and a related competitor) than to unrelated pictures. As information is provided by the input, the set of potential referents is partially constrained, and signers respond by gazing at these possible referents. This pattern held true for the child signers. Once again, this pattern suggests that many of the strategies involved in matching language to its referents arise independent of the modality in which the language is being comprehended.

Finally, in the second, late portion of the sentence, we examined whether signers gazed towards a picture that matched the target in either color or object, even though the target had already been uniquely identified. Once again, this question addresses the influence of modality on the allocation of referential attention. Both child and adult signers showed continued activation of the signs that had been produced, fixating target-matched competitors more than unrelated competitors. Thus, competing lexical items remain activated even when the target has been uniquely constrained (Eberhard et al. 1995; Hanna et al. 2003; Sedivy 2003; Sedivy et al. 1999). Comparison across groups revealed that children fixated matched competitors more than adults did in this late window, suggesting that children are more susceptible to competitor activation even when the target can be identified. The natural drive to gaze at an object that has been named is indeed exploited throughout the visual world paradigm (Eberhard et al. 1995; Tanenhaus et al. 1995). Signers, like speakers, showed evidence in this paradigm of fixating objects as they are activated. This drive is strong enough to override the fact that the target had already been identified, which is particularly striking given that the language itself is perceived visually.

Referential attention in child signers

As a group, children showed evidence that they had largely developed the ability to allocate referential attention effectively based on the amount and specificity of the information provided by the linguistic signal. As with adults, even the youngest children showed an initial anticipatory gaze shift to a target when it could be identified early, although children’s shifting patterns were variable. When children perceived a sign at the end of the sentence that matched a non-target object on the screen, they fixated this matched competitor more than adult signers did in the same window. While children were clearly able to arrive at the target with high speed and accuracy, they were also more susceptible to activating these competitors. Children may thus still be developing the ability to integrate noun and adjective information across signs as they unfold in a single utterance (Ninio 2004; Weisleder and Fernald 2009).

Given the wide age range of the child participants in this study, we predicted that age would be a significant predictor of gaze patterns. This was not the case; age did not contribute to the variance in most of our analyses. It is possible that the lack of age effects was due to the relatively small number of participants at each age. We believe a more likely explanation is that the process studied here had already been fully acquired by the children in our sample. Previous studies have shown that hearing children can integrate noun and adjective information by the age of three (Fernald et al. 2010). Similarly, in their study of sentence processing requiring integration of an agent and an action, Borovsky and colleagues (2012) found that vocabulary ability was a more robust predictor than age of the speed with which the target was recognized. Individual differences in linguistic and cognitive skills are likely better predictors of performance than age for tasks involving semantic processing (Nation et al. 2003). In the current study, vocabulary scores were not obtained because of a lack of existing ASL assessment instruments for this age range.

Given that the children in the current study were all exposed to ASL before age two, and that ASL and English show similar developmental trajectories with regard to major linguistic milestones (Mayberry and Squires 2006), it is likely that the children in our study had already fully acquired the ability to process adjectives and nouns incrementally and combinatorially. Previous studies of hearing children have likewise found similar performance in children and adults. When presented with instructions like “Point to the big coin,” 5-year-old English-speaking children used the semantics of the scalar adjective and the referential context to identify the target referent in a visual array with four objects (Huang and Snedeker 2013). Three-year-old Dutch children process informative adjective-noun phrases (e.g., the small mouse) as fast as adult participants (Tribushinina and Mak 2016). In both studies, children identified the target shortly after the onset of the adjective and prior to the onset of the noun. Importantly, these studies all used syntactically simple sentences, in which the anticipatory looks are driven by lexical activation. In contrast, in studies where ambiguity arises from more complex syntactic structures, and thus involves higher-level syntactic processing, children are unable to integrate referential cues with equal success (Hurewitz et al. 2000; Snedeker and Trueswell 2004; Trueswell et al. 1999). The studies by Huang and Snedeker (2013) and Tribushinina and Mak (2016), as well as the present study, examine more basic lexical processing, suggesting that at this level children can exploit referential information during processing to the same extent as adults.

Effects of word order

Finally, we investigated whether the relative position of adjectives and nouns influenced gaze patterns across the sentence. Both adults and children showed a slight processing advantage for target recognition at the point in the sentence when the color adjective was produced. Adult signers showed more fixations to the target early in the sentence when the disambiguating sign was an adjective than when it was a noun (i.e., in the adjective-noun order). For children, a complementary pattern was observed: children fixated the target more in the noun-adjective order, but these increased fixations were seen only in the latter half of the time course. Thus, both children and adults showed an advantage in target fixations at the point in the sentence when the color adjective was presented, though the effect manifested early for adults and later for children.

Given that all of the adjectives in the stimuli were color signs, we cannot determine whether it was the presence of an adjective in general, or a particular feature of color adjectives, that drove this effect. Children may have processed noun-adjective sentences more efficiently, as has been found in Spanish-speaking children, for whom the adjective is typically post-nominal (Weisleder and Fernald 2009). At the same time, color is known to be a particularly salient feature in perceiving objects visually (Melkman et al. 1981; Odom and Guzman 1972), so it is possible that viewing a color sign attracted more attention and thus longer fixations. In future work it will be critical to vary the adjective type (e.g., categorical, relative) and its position relative to the noun to tease apart the influence of visual salience from a general strategy for processing adjectives and nouns. The current results do suggest that both adults and children respond quickly and robustly to color adjectives by shifting gaze to the matching object in the visual scene.

Conclusion

In sum, we found evidence for incremental processing of adjectives and nouns in deaf adult and child signers. Our findings point to a largely modality-independent process of activation of semantic information as it is presented in conjunction with a visual scene. Signers’ gaze patterns during sentence comprehension largely paralleled those of listeners perceiving spoken language, with a few notable exceptions that arise from the increased demands on visual attention when perceiving ASL. These findings add to our understanding of how language is processed incrementally across languages with different modalities, attentional constraints, and word orders. The ability to allocate attention between language and visual information is a robust and early-acquired skill that appears to adapt based on the nature and complexity of the surrounding visual scene.

Acknowledgments:

This work was funded by the National Institute on Deafness and Other Communication Disorders (NIDCD), grant number R01DC015272. We thank Rachel Mayberry and Arielle Borovsky for valuable feedback, and Marla Hatrak, Michael Higgins, and Valerie Sharer for help with data collection. We are grateful to all of the individuals who participated in this study.

Footnotes

Data availability statement

The datasets generated and/or analyzed during the current study are available from the corresponding author on reasonable request.

We used a more inclusive trackloss criterion for children than for adults to allow for the more variable gaze patterns typical of child participants during eye tracking. The 20% threshold for children is similar to that used in previous studies with young children (e.g., Borovsky et al. 2016; Nordmeyer and Frank 2014).

Conflict of interest statement

On behalf of all authors, the corresponding author states that there is no conflict of interest.

References

- Altmann GT, & Kamide Y. (2007). The real-time mediation of visual attention by language and world knowledge: Linking anticipatory (and other) eye movements to linguistic processing. Journal of Memory and Language, 57(4), 502–518. doi: 10.1016/j.jml.2006.12.004

- Anderson D, & Reilly J. (2002). The MacArthur communicative development inventory: normative data for American Sign Language. Journal of Deaf Studies and Deaf Education, 7(2), 83–106. doi: 10.1093/deafed/7.2.83

- Baayen RH, Davidson DJ, & Bates DM (2008). Mixed-effects modeling with crossed random effects for subjects and items. Journal of Memory and Language, 59(4), 390–412. doi: 10.1016/j.jml.2007.12.005

- Barr DJ (2008). Analyzing ‘visual world’ eyetracking data using multilevel logistic regression. Journal of Memory and Language, 59(4), 457–474. doi: 10.1016/j.jml.2007.09.002

- Bavelier D, Dye MW, & Hauser PC (2006). Do deaf individuals see better? Trends in Cognitive Sciences, 10(11), 512–518. doi: 10.1016/j.tics.2006.09.006

- Borovsky A, Elman JL, & Fernald A. (2012). Knowing a lot for one’s age: Vocabulary skill and not age is associated with anticipatory incremental sentence interpretation in children and adults. Journal of Experimental Child Psychology, 112(4), 417–436. doi: 10.1016/j.jecp.2012.01.005

- Borovsky A, Sweeney K, Elman JL, & Fernald A. (2014). Real-time interpretation of novel events across childhood. Journal of Memory and Language, 73, 1–14. doi: 10.1016/j.jml.2014.02.001

- Borovsky A, Ellis EM, Evans JL, & Elman JL (2016). Lexical leverage: Category knowledge boosts real-time novel word recognition in 2-year-olds. Developmental Science, 19(6), 918–932. doi: 10.1111/desc.12343

- Chen Pichler D. (2011). Using early ASL Word Order to Shed Light on Word Order Variability in Sign Language. In Anderssen M, Bentzen K, & Westergaard M. (Eds.), Variation in the Input (pp. 157–177). Dordrecht: Springer.

- Coates J, & Sutton-Spence R. (2001). Turn-taking patterns in deaf conversation. Journal of Sociolinguistics, 5(4), 507–529. doi: 10.1111/1467-9481.00162

- Eberhard KM, Spivey-Knowlton MJ, Sedivy JC, & Tanenhaus MK (1995). Eye Movements as a Window into Real-Time Spoken Language Comprehension in Natural Contexts. Journal of Psycholinguistic Research, 24(6), 409–436. doi: 10.1007/bf02143160

- Fernald A, Thorpe K, & Marchman VA (2010). Blue car, red car: Developing efficiency in online interpretation of adjective–noun phrases. Cognitive Psychology, 60(3), 190–217.

- Gasser M, & Smith LB (1998). Learning Nouns and Adjectives: A Connectionist Account. Language and Cognitive Processes, 13(2–3), 269–306. doi: 10.1080/016909698386537

- Hanna JE, Tanenhaus MK, & Trueswell JC (2003). The effects of common ground and perspective on domains of referential interpretation. Journal of Memory and Language, 49(1), 43–61. doi: 10.1016/s0749-596x(03)00022-6

- Harris M, Clibbens J, Chasin J, & Tibbitts R. (1989). The social context of early sign language development. First Language, 9(25), 81–97. doi: 10.1177/014272378900902507

- Huang YT, & Snedeker J. (2013). The use of lexical and referential cues in children’s online interpretation of adjectives. Developmental Psychology, 49(6), 1090–1102. doi: 10.1037/a0029477

- Hurewitz F, Brown-Schmidt S, Thorpe K, Gleitman LR, & Trueswell JC (2000). One Frog, Two Frog, Red Frog, Blue Frog: Factors Affecting Children’s Syntactic Choices in Production and Comprehension. Journal of Psycholinguistic Research, 29(6), 597–626. doi: 10.1023/A:1026468209238

- Kamide Y, Altmann GTM, & Haywood SL (2003). The time-course of prediction in incremental sentence processing: Evidence from anticipatory eye movements. Journal of Memory and Language, 49(1), 133–156. doi: 10.1016/S0749-596X(03)00023-8 [DOI] [Google Scholar]

- Kimmelman V. (2012). Word Order in Russian Sign Language. Sign Language Studies, 12(3), 414–445. doi: 10.1353/sls.2012.0001 [DOI] [Google Scholar]

- Knoeferle P, Carminati MN, Abashidze D, & Essig K. (2011). Preferential Inspection of Recent Real-World Events Over Future Events: Evidence from Eye Tracking during Spoken Sentence Comprehension. Frontiers in Psychology, 2, 376. doi: 10.3389/fpsyg.2011.00376 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Knoeferle P, & Crocker MW (2009). Constituent order and semantic parallelism in online comprehension: Eye-tracking evidence from German. The Quarterly Journal of Experimental Psychology, 62(12), 2338–2371. doi: 10.1080/17470210902790070 [DOI] [PubMed] [Google Scholar]

- Lieberman AM, Borovsky A, Hatrak M, & Mayberry RI (2015). Real-time Processing of ASL signs: Delayed First Language Acquisition Affects Organization of the Mental Lexicon. Journal of Experimental Psychology: Learning, Memory, and Cognition, 41(4), 1130–1139. doi: 10.1037/xlm0000088 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lieberman AM, Borovsky A, & Mayberry RI (2017). Prediction in a visual language: real-time sentence processing in American Sign Language across development. Language, Cognition and Neuroscience, 1–15. doi: 10.1080/23273798.2017.1411961

- Lieberman AM, Hatrak M, & Mayberry RI (2014). Learning to Look for Language: Development of Joint Attention in Young Deaf Children. Language Learning and Development, 10(1), 19–35. doi: 10.1080/15475441.2012.760381

- MacDonald K, LaMarr T, Corina D, Marchman VA, & Fernald A. (2018). Real-time lexical comprehension in young children learning American Sign Language. Developmental Science, 21(6), e12672. doi: 10.31234/osf.io/zht6g

- Mani N, & Huettig F. (2012). Prediction During Language Processing is a Piece of Cake—But Only for Skilled Producers. Journal of Experimental Psychology: Human Perception and Performance, 38(4), 843–847. doi: 10.1037/a0029284

- Maris E, & Oostenveld R. (2007). Nonparametric statistical testing of EEG- and MEG-data. Journal of Neuroscience Methods, 164(1), 177–190. doi: 10.1016/j.jneumeth.2007.03.024

- Mayberry RI, & Squires B. (2006). Sign Language: Acquisition. In Brown K. (Ed.), Encyclopedia of Language and Linguistics (pp. 291–296). Boston, MA: Elsevier.

- Melkman R, Tversky B, & Baratz D. (1981). Developmental trends in the use of perceptual and conceptual attributes in grouping, clustering, and retrieval. Journal of Experimental Child Psychology, 31(3), 470–486. doi: 10.1016/0022-0965(81)90031-x

- Nation K, Marshall CM, & Altmann GT (2003). Investigating individual differences in children’s real-time sentence comprehension using language-mediated eye movements. Journal of Experimental Child Psychology, 86(4), 314–329. doi: 10.1016/j.jecp.2003.09.001

- Neidle C, & Nash J. (2012). The noun phrase. In Pfau R, Steinbach M, & Woll B. (Eds.), Sign Language: An International Handbook (pp. 265–292). Berlin, Boston: De Gruyter Mouton.

- Neville HJ, & Lawson D. (1987). Attention to central and peripheral visual space in a movement detection task. III. Separate effects of auditory deprivation and acquisition of a visual language. Brain Research, 405(2), 284–294. doi: 10.1016/0006-8993(87)90297-6

- Ninio A. (2004). Young children’s difficulty with adjectives modifying nouns. Journal of Child Language, 31(2), 255–285. doi: 10.1017/S0305000904006191

- Nordmeyer AE, & Frank MC (2014). The role of context in young children’s comprehension of negation. Journal of Memory and Language, 77, 25–39. doi: 10.1016/j.jml.2014.08.002

- Odom RD, & Guzman RD (1972). Development of hierarchies of dimensional salience. Developmental Psychology, 6(2), 271–287. doi: 10.1037/h0032096

- Pavani F, & Bottari D. (2012). Visual abilities in individuals with profound deafness: A critical review. In Murray MM & Wallace MT (Eds.), The neural bases of multisensory processes (pp. 423–448). Boca Raton, FL: CRC Press/Taylor & Francis.

- Rubio-Fernández P, Mollica F, & Jara-Ettinger J. (2018). Why searching for a blue triangle is different in English than in Spanish. doi: 10.31234/osf.io/gf8qx

- Rubio-Fernández P, Wienholz A, Kirby S, & Lieberman AM (2019, March 31). ASL signers vary adjective position to maximize efficiency: A reference production study. Poster presented at the 32nd Annual CUNY Conference on Human Sentence Processing, Boulder, CO.

- Sandler W, & Lillo-Martin D. (2006). Sign Language and Linguistic Universals. Cambridge, UK: Cambridge University Press.

- Sedivy JC (2003). Pragmatic Versus Form-Based Accounts of Referential Contrast: Evidence for Effects of Informativity Expectations. Journal of Psycholinguistic Research, 32(1), 3–23. doi: 10.1023/A:1021928914454

- Sedivy JC, Tanenhaus MK, Chambers CG, & Carlson GN (1999). Achieving incremental semantic interpretation through contextual representation. Cognition, 71(2), 109–147. doi: 10.1016/s0010-0277(99)00025-6

- Snedeker J, & Trueswell JC (2004). The developing constraints on parsing decisions: The role of lexical-biases and referential scenes in child and adult sentence processing. Cognitive Psychology, 49(3), 238–299. doi: 10.1016/j.cogpsych.2004.03.001

- Spivey MJ, Tanenhaus MK, Eberhard KM, & Sedivy JC (2002). Eye movements and spoken language comprehension: Effects of visual context on syntactic ambiguity resolution. Cognitive Psychology, 45(4), 447–481. doi: 10.1016/s0010-0285(02)00503-0

- Spivey MJ, Tyler MJ, Eberhard KM, & Tanenhaus MK (2001). Linguistically mediated visual search. Psychological Science, 12(4), 282–286. doi: 10.1111/1467-9280.00352

- Sutton-Spence R, & Woll B. (1999). The linguistics of British Sign Language: An Introduction. Cambridge, UK: Cambridge University Press.

- Swisher MV (2000). Learning to converse: How deaf mothers support the development of attention and conversational skills in their young deaf children. In Spencer P, Erting CJ, & Marschark M. (Eds.), The deaf child in the family and at school (pp. 21–40). Mahwah, NJ: Erlbaum.

- Tanenhaus MK, Spivey-Knowlton MJ, Eberhard KM, & Sedivy JC (1995). Integration of visual and linguistic information in spoken language comprehension. Science, 268(5217), 1632–1634. doi: 10.1126/science.7777863

- Tribushinina E, & Mak WM (2016). Three-year-olds can predict a noun based on an attributive adjective: evidence from eye-tracking. Journal of Child Language, 43(2), 425–441. doi: 10.1017/s0305000915000173

- Trueswell JC, Sekerina I, Hill NM, & Logrip ML (1999). The kindergarten-path effect: studying on-line sentence processing in young children. Cognition, 73(2), 89–134. doi: 10.1016/S0010-0277(99)00032-3

- Waxman RP, & Spencer PE (1997). What Mothers Do to Support Infant Visual Attention: Sensitivities to Age and Hearing Status. Journal of Deaf Studies and Deaf Education, 2(2), 104–114. doi: 10.1093/oxfordjournals.deafed.a014311

- Weisleder A, & Fernald A. (2009). Real-time processing of postnominal adjectives by Latino children learning Spanish as a first language. In Proceedings of the 24th Annual Boston University Conference on Language Development (pp. 611–621).