Abstract

Forecasting the El Niño-Southern Oscillation (ENSO) has been a subject of vigorous research due to the important role of the phenomenon in climate dynamics and its worldwide socioeconomic impacts. Over the past decades, numerous models for ENSO prediction have been developed, among which statistical models approximating ENSO evolution by linear dynamics have received significant attention owing to their simplicity and comparable forecast skill to first-principles models at short lead times. Yet, due to highly nonlinear and chaotic dynamics (particularly during ENSO initiation), such models have limited skill for longer-term forecasts beyond half a year. To resolve this limitation, here we employ a new nonparametric statistical approach based on analog forecasting, called kernel analog forecasting (KAF), which avoids assumptions on the underlying dynamics through the use of nonlinear kernel methods for machine learning and dimension reduction of high-dimensional datasets. Through a rigorous connection with Koopman operator theory for dynamical systems, KAF yields statistically optimal predictions of future ENSO states as conditional expectations, given noisy and potentially incomplete data at forecast initialization. Here, using industrial-era Indo-Pacific sea surface temperature (SST) as training data, the method is shown to successfully predict the Niño 3.4 index in a 1998–2017 verification period out to a 10-month lead, which corresponds to an increase of 3–8 months (depending on the decade) over a benchmark linear inverse model (LIM), while significantly improving upon the ENSO predictability “spring barrier”. In particular, KAF successfully predicts the historic 2015/16 El Niño at initialization times as early as June 2015, which is comparable to the skill of current dynamical models. An analysis of a 1300-yr control integration of a comprehensive climate model (CCSM4) further demonstrates that the enhanced predictability afforded by KAF holds over potentially much longer leads, extending to 24 months versus 18 months in the benchmark LIM. Probabilistic forecasts for the occurrence of El Niño/La Niña events are also performed and assessed via information-theoretic metrics, showing an improvement of skill over LIM approaches, thus opening an avenue for environmental risk assessment relevant in a variety of contexts.

Subject terms: Climate sciences, Projection and prediction

Introduction

Previous studies on improving the skill of conventional LIMs1,2 have highlighted the importance of nonlinearity in ENSO dynamics, such as surface-subsurface interactions and surface winds3, which are usually underestimated in linear dynamics approximations. Adequate representation of such processes within statistical ENSO models is important to attain optimal long-term forecast skill, influencing two major components of model design, namely (i) the construction of predictor vectors to extract pertinent information about the state of the climate system; and (ii) the assumed evolution dynamics employed to make predictions beyond the training period. For instance, LIMs use the leading principal components from empirical orthogonal function (EOF) analysis as predictors, and approximate their tendencies by a Markov prediction model similar to a linear regression. A linear structure is imposed in two aspects here; that is, the linear predictors obtained by EOF analysis and the linear dynamical model treating these predictors as state vectors. This suggests that two corresponding types of improvement of conventional LIMs can be sought. Indeed, a number of studies3,4 have shown that inclusion of LIM residuals at the present and recent past states can implicitly capture subsurface forcing and its nonlinear interactions with SST, consequently contributing to more skillful ENSO predictions. Meanwhile, replacing EOF analysis by nonlinear dimension reduction techniques5 has also led to improved performance by LIMs under certain circumstances, especially for long-term ENSO forecasts.

Other statistical approaches for ENSO prediction6–9 have included methods based on Lorenz’s analog forecasting technique10 or neural networks (NNs)11. The core idea of analog methods6–8 is to employ observational or free-running (non-initialized) model data as libraries of states, through which forecasts can be made by identifying states in the library that closely resemble the observed data at forecast initialization, and following the evolution of the quantity of interest on these so-called analogs to yield a prediction. Advantages of analog forecasting include avoiding the need for an expensive, and potentially unstable, initialization system, as well as reducing structural model error if natural analogs are employed. For phenomena such as ENSO exhibiting a reasonably small number of effective degrees of freedom, the performance of analog techniques has been shown to be comparable to, and sometimes exceed, the skill of initialized forecasts by coupled general circulation models (CGCMs)7,8. Meanwhile, NN models build a representation of the predictand as a nonlinear function of the predictor variables by optimization within a high-dimensional parametric class of functions. A recent study9 utilizing convolutional NNs trained on GCM output and reanalysis data has demonstrated forecast skill for the 3-month averaged Niño 3.4 index extending out to 17 months.

The KAF approach12,13 employed in this work can be viewed as a generalization of conventional analog forecasting, utilizing nonlinear-kernel algorithms for statistical learning14 and operator-theoretic ergodic theory15,16 to optimally capture the evolution of a response variable (here, an ENSO index) under partially observed nonlinear dynamics. In particular, unlike conventional approaches, the output of KAF is not given by a local average over analog states (or linear combinations of states in the case of constructed analogs6), but is rather determined by a projection operation onto a function space of observables of high regularity, learned from high-dimensional training data. This hypothesis space has as a basis a set of eigenvectors of a judiciously constructed kernel matrix, which depends nonlinearly on the input data, thus potentially capturing richer patterns of variability than the linear principal components from EOF analysis. It should be noted, in particular, that the eigenvectors employed in KAF are not expressible as linear projections of the data onto corresponding EOFs. By virtue of this structure, the forecast function from KAF can be shown to converge in an asymptotic limit of large data to the conditional expectation of the response function, acted upon by the Koopman evolution operator of the dynamical system over the desired lead time, conditioned on the input (predictor) data at forecast initialization13. As is well known from statistics, the conditional expectation is optimal in the sense of minimal expected square error (L2 error) among all forecasts utilizing the same predictors.

Here, we build KAF models using lagged histories of Indo-Pacific SST fields as input data. Specifically, we employ the class of kernels introduced in the context of nonlinear Laplacian spectral analysis (NLSA)17,18 algorithms, whose eigenfunctions provably capture modes of high dynamical coherence19,20, including representations of ENSO, ENSO combination modes, and Pacific decal variability21–23. This capability is realized without ad hoc prefiltering of the input data through the use of delay-coordinate maps24 and a nonlinear (normalized Gaussian) kernel function25 measuring similarity between delay-embedded sequences of SST fields. A key advantage of the methodology employed here over LIMs or other parametric models is its nonparametric nature, in the sense that KAF does not impose explicit assumptions on the dynamical model structure (e.g., linearity), thus avoiding systematic model errors that oftentimes preclude parametric models from attaining useful skill at long lead times. Moreover, by employing delays, KAF enhances the information content of the predictor data beyond the information present in individual SST snapshots, allowing it to capture important contributors to ENSO predictability, such as upper-ocean heat content and surface winds3,26.

A distinguishing aspect of the approach presented here over recent analog7,8 and NN9 methods utilizing large, GCM-derived, multivariate datasets for training is that our observational forecasts are trained only on industrial-era observational/reanalysis SST fields. Indeed, due to the large number of parameters involved, NN methods cannot be adequately trained from industrial-era data alone9, and are trained instead using thousands of years of model output. In contrast, KAF is an intrinsically nonparametric method, involving only a handful of meta-parameters (such as embedding window and hypothesis space dimension), and thus able to yield robust ENSO forecasts from a comparatively modest training dataset spanning ~120 years. It should also be noted that KAF exhibits theoretical convergence and optimality guarantees13 which, to our knowledge, are not available for other comparable statistical ENSO forecasting methodologies.

Results

We present prediction results obtained via KAF and LIMs in a suite of prediction experiments for (i) the Niño 3.4 index in industrial-era HadISST data27; (ii) the Niño 3.4 index in a 1300-yr control integration of CCSM428, a CGCM known to exhibit fairly realistic ENSO dynamics and Pacific decadal variability29; and (iii) the probability of occurrence of El Niño/La Niña events in both HadISST and CCSM4. All experiments use SST fields from the respective datasets in a fixed Indo-Pacific domain as training data. The Niño 3.4 prediction skill is assessed using root-mean-square error (RMSE) and pattern correlation (PC) scores. We employ and 0.5 as representative thresholds separating useful from non-useful predictions. The probabilistic El Niño/La Niña experiments are assessed using the Brier skill score (BSS)30,31, as well as information-theoretic relative entropy metrics32–34. For consistency with an operational forecasting environment, all experiments employ disjoint, temporally consecutive portions of the available data for training and verification, respectively. In the CCSM4 experiments, Niño 3.4 indices are computed relative to monthly climatology of the training data; in HadISST we use anomalies relative to the 1981–2010 monthly climatology as per current convention in many modeling centers35.

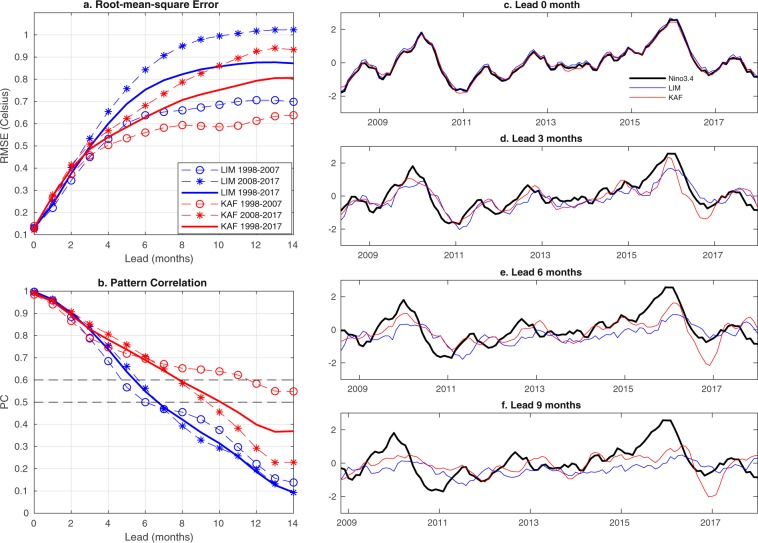

Figure 1 displays KAF and LIM prediction results for the Niño 3.4 index in the HadISST dataset over a 1998–2017 verification period, using 1870–1997 for training. In Fig. 1(a,b) it is clearly evident that even though there is significant decadal variability of skill, KAF consistently outperforms the LIM in terms of both RMSE and PC scores, with an apparent increase of 4–7 months of useful forecast horizon. In particular, KAF attains the longest useful skill in the first decade of the verification period, 1998–2007, despite an initially faster decrease of skill over 0–6 months. The KAF PC score for 1998–2007 is seen to cross the 0.6 threshold at approximately 12 months, and remains above 0.5 at least out to 14 months, compared to 5 and 6 months for LIM, respectively. In 2008–2017, the PC skill of KAF exhibits a more uniform rate of decay, crossing the threshold at a lead time of 9 months, compared to 6–7 months for LIM. When measured over the full, 20-year verification period, KAF provides 3 to 4 months of additional useful skill, as well as a markedly slower rate of skill reduction beyond 4 months. As a comparison with state-of-art dynamical forecasts, the skill scores in Fig. 1 are generally comparable, and in some cases exceed, recently reported scores from the North American Multimodel Ensemble36 (though it should be kept in mind that the verification periods of that study and ours are different).

Figure 1.

Forecasts of the Niño 3.4 index in industrial-era HadISST data during 1998–2017 obtained via KAF (red) and LIM (blue). (a,b) RMSE and PC scores, respectively, as a function of lead time, computed for 1998–2007 ( markers), 2008–2017 (* markers), and the full 1998–2017 verification period (solid lines). The 0.6 and 0.5 PC levels are highlighted in (b) for reference. (c–f) Running forecasts for representative lead times in the range 0–9 months. Black lines show the true signal at the corresponding verification times.

In separate calculations, we have verified that incorporating delays in the kernel is a significant contributor to the skill of KAF, particularly at lead times greater than 2 months. This is consistent with previous work, which has shown that delay embedding increases the capability of kernel algorithms to extract dynamically intrinsic coherent patterns in the spectrum of the Koopman operator19,20, including ENSO and its linkages with seasonal and decadal variability of the climate system21–23. Given that our main focus here is on the extended-range regime, Fig. 1 depicts KAF results for a single embedding window of 12 months. Operationally, however, one would employ different embedding windows at different leads to optimize performance.

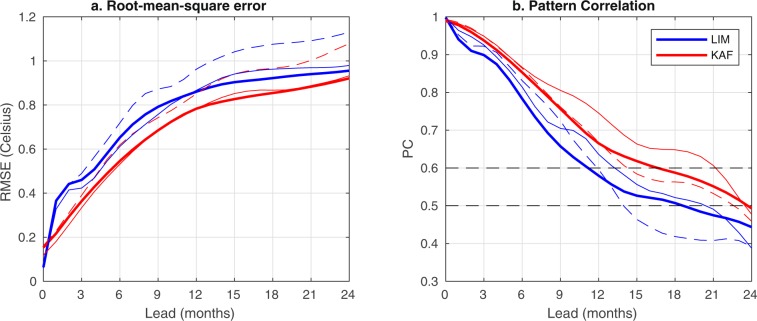

Next, in order to assess the potential skill of KAF and LIM approaches in an environment with more data available for training and verification (contributing to more robust model construction and skill assessment, respectively) than HadISST, we examine Niño 3.4 prediction results for the CCSM4 dataset, using the first 1100 years for training and the last 200 years for verification. Figure 2 demonstrates that while both KAF and LIM exhibit significantly higher skill compared to the HadISST results in Fig. 1(a,b), the performance of KAF is particularly strong, with PC scores computed over the 200-year verification period crossing the 0.6 and 0.5 levels at 17- and 24-month leads, respectively. The latter values correspond to an increase of predictability over HadISST by 9 and 14 months, measured with respect to the same PC thresholds. In comparison, the and 0.5 predictability horizons for LIM are 11 and 18 months, respectively, corresponding to an increase of 6 and 11 months over HadISST. As illustrated in Fig. 2, the RMSE and PC scores from individual decades in the CCSM4 verification period exhibit significant variability, and similarly to HadISST, KAF consistently outperforms LIM on individual verification decades. In general, KAF appears to benefit from the larger CCSM4 dataset more than LIM, which is consistent with the former method better leveraging the information content of large data sets due to its nonparametric nature and theoretical ability to consistently approximate an optimal prediction function (the conditional expectation). In contrast, LIM’s performance is limited to some extent by structural model error due to linear dynamics, and this type of error may not be possible to eliminate even with arbitrarily large training datasets.

Figure 2.

As in Fig. 1(a,b), but for a 200-year verification period corresponding to CCSM4 simulation years 1100–1300. Solid and dashed lines correspond to scores computed from the fourth and last decade of the verification period, respectively. Thick lines show scores computed from the full 200-year verification period.

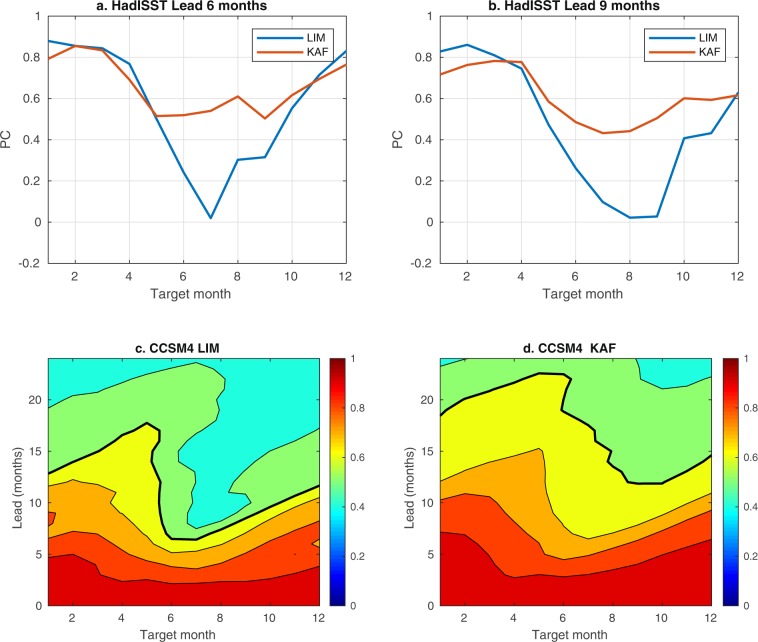

Another important consideration in ENSO prediction is the seasonal dependence of skill, exhibiting the so-called “spring barrier”37. Figure 3 shows the month-to-month distribution of the KAF and LIM Niño 3.4 PC scores, computed for 6- and 9-month leads in HadISST and 0–24-month leads in CCSM4 using the same training and verification datasets as in Figs. 1 and 2, respectively. These plots feature characteristic spring barrier curves, with the highest predictability occurring in late winter to early spring and a clear drop of skill in summer3,4. The diminished summer predictability is thought to be caused by the low amplitude of SST anomalies developing then, making ENSO dynamics more sensitive to high-frequency atmospheric noise3. As we have shown in previous work22, the class of kernels employed here for forecasting is adept at capturing the effects of atmospheric noise and its nonlinear impact on ENSO statistics, including positive SST anomaly skewness underlying El Niño/La Niña asymmetry. The method has also been found highly effective in capturing the phase locking of ENSO to the seasonal cycle through a hierarchy of combination modes21,23,38. Correspondingly, while both methods exhibit a reduction of skill during summer, KAF fares significantly better than the benchmark LIMs in both of the HadISST and CCSM4 datasets. More specifically, in the HadISST results in Fig. 3(a,b), the LIM’s PC score drops rapidly to small, 0–0.3 values between June and September, while KAF maintains scores of about 0.4 or greater over the same period. In particular, the June–September PC scores for KAF at 6-month leads hover between 0.5 and 0.6, which are values that one could consider as “beating” the spring barrier (albeit marginally). Similarly, in CCSM4 (Fig. 3(d)), the contour for KAF decreases less appreciably and abruptly over May–August than in the case of the LIM, indicating that KAF is better at maintaining skill during the transition from spring to summer. Since strong El Niño events are often triggered by westerly wind bursts in spring, advecting warm surface water to the east3, the better performance of KAF in summer suggests that it is more capable of capturing the triggering mechanism22, aiding its ability in predicting the onset of ENSO. Indeed, this is consistent with the running forecast time series for HadISST in Fig. 1(c,d), in which KAF often yields more accurate forecasts at the beginning of El Niño events, e.g., during 2009/10 and 2015/16.

Figure 3.

(a,b) Seasonal dependence of Niño 3.4 PC scores for KAF (red lines) and the benchmark LIM at 6- and 9-month leads in industrial-era HadISST data during 1998–2017. (c,d) LIM and KAF PC scores for 0–24-month leads in CCSM4 during simulation years 1100–1300. Contours are drawn at the levels. Thick black lines highlight contours for reference.

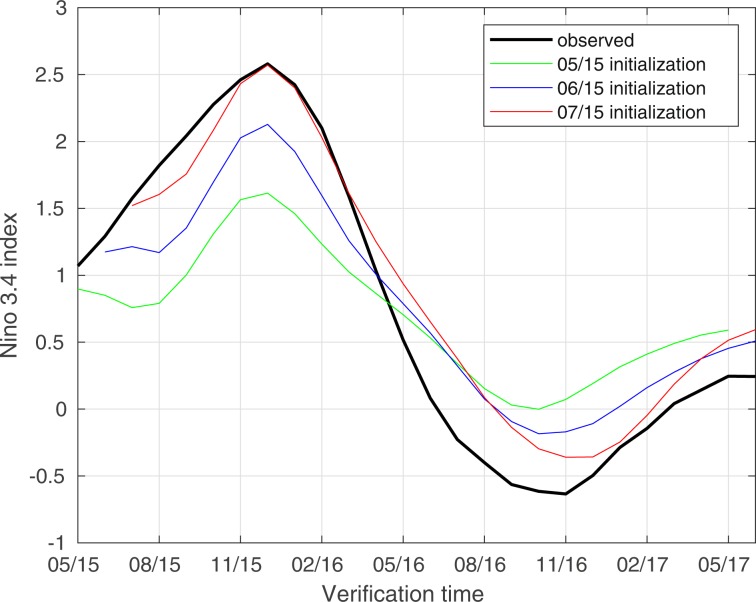

As a final deterministic hindcast experiment, we consider prediction of the 2015/16 El Niño event with KAF. This event has received considerable attention by researchers and stakeholders across the world35, in part due to it being the first major El Niño since the 1997/98 event, providing an important benchmark to assess the advances in modeling and observing systems in the nearly two intervening decades. Figure 4 shows KAF forecast trajectories of 3-month running means of the Niño 3.4 index in HadISST for the period May 2015 to May 2017, initialized in May, June, and July of 2015 (i.e., 7, 6, and 5 months prior to the December 2015 peak of the of the event). In this experiment, we use Indo-Pacific SST data from HadISST over the period January 1870 to December 2007 for training. Moreover, we perform prediction of 3-month-averaged Niño 3.4 indices to highlight seasonal evolution35. Prediction results for the instantaneous (monthly) Niño 3.4 index are broadly consistent with the results in Fig. 4.

Figure 4.

KAF hindcasts of the 3-month-averaged Niño 3.4 index in HadISST during the 2015/16 El Niño period. Hindcast trajectories are initialized in the May, June, and July preceding the December 2015 peak of the event.

We find that the KAF hindcast initialized in July 2015 accurately predicts the 3-month averaged Niño 3.4 evolution through February 2016, including the 2015/16 El Niño peak, yielding a moderate over-prediction over the ensuing months which nevertheless correlates well with the true signal. The trajectory initialized in June 2015 also predicts a strong (≥2 °C) El Niño event peaking in December 2015, but underestimates the true 2.5 °C anomaly peak by approximately 0.4 °C. Meanwhile, the trajectory initialized in May 2015 predicts a 1.5 °C positive anomaly in the ensuing December, which falls short of the true event by 1 °C but still qualifies as an El Niño event by conventional definitions. Overall, this level of performance compares favorably with many statistical model forecasts of the 2015/16 El Niño (which typically did not yield a possibility of a ≥2 °C event until August 201535). In fact, the forecast trajectories in Fig. 4 have comparable, and sometimes higher, skill than forecasts from many operational weather prediction systems, despite KAF utilizing far-lower numerical/observational resources and temporal resolution.

We now turn to the skill of probabilistic El Niño/La Niña forecasts. A distinguishing aspect of probabilistic prediction over point forecasts (e.g., the results in Figs. 1–3) is that it provides more direct information about uncertainty, and as a result more actionable information to decision makers. Probabilistic prediction with either first-principles or statistical models, or ensembles of models, is typically conducted by binning collections of point forecasts, e.g., realized by random draws from distributions of initial conditions and/or model parameters. Here, we employ an alternative approach, which to our knowledge has not been previously pursued, whereby KAF and LIM approaches are used to predict the probability of occurrence of El Niño or La Niña events directly, without generating ensembles of trajectories. Our approach is based on the fact that predicting conditional probability is equivalent to predicting the conditional expectation of a characteristic function indexing the event of interest13. As a result, this task can be carried out using the same KAF and LIM approaches described above, replacing the Niño 3.4 index by the appropriate characteristic function as the response variable.

Here, we construct characteristic functions for El Niño and La Niña events in the HadISST and CCSM4 data using the standard criterion requiring that at least five overlapping seasonal (3-month running averaged) Niño 3.4 indices to be greater (smaller) than 0.5 (−0.5) °C, respectively35. In this context, natural skill metrics are provided by the BSS30,31, as well as relative entropy from information theory32,33 (see Methods). For binary outcomes, such as occurrence vs. non-occurrence of El Niño or La Niña events, the BSS is equivalent to a climatology-normalized RMSE score for the corresponding characteristic function. As information-theoretic metrics, we employ two relative entropy functionals34, denoted and , which measure respectively the precision relative to climatology and ignorance (lack of accuracy) relative to the truth in a probabilistic forecast. For a binary outcome, attains a maximal value , corresponding to a maximally precise binary forecast relative to climatology. On the other hand, is unbounded, but has a natural scale corresponding to the ignorance of a probabilistic forecast based on random draws from the climatology; this makes a natural threshold indicating loss of skill. Overall, a skillful binary probabilistic forecast should simultaneously have , , and . Note that we only report values of these scores in the CCSM4 experiments, as we found that the HadISST verification is not sufficiently long for statistically robust computation of relative-entropy scores.

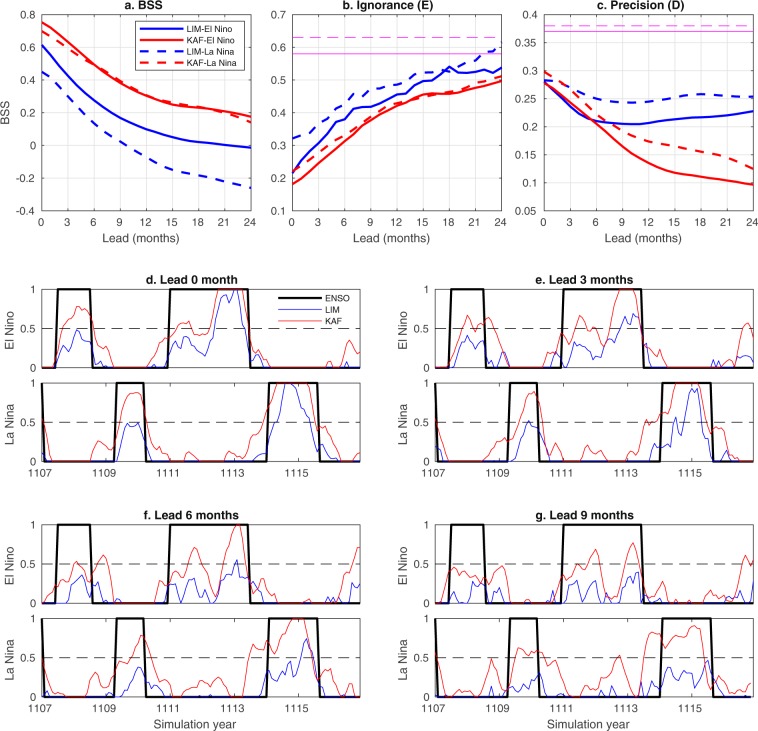

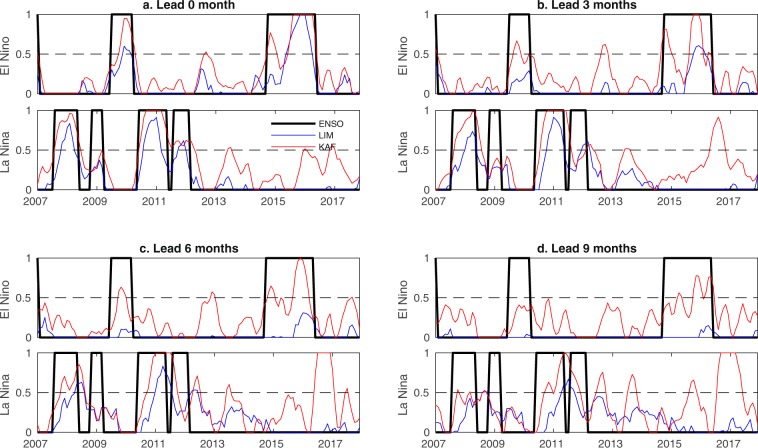

The results of probabilistic El Niño/La Niña prediction for CCSM4 and HadISST are shown in Figs. 5 and 6, respectively. As is evident in Fig. 5(a,b), in the context of CCSM4, KAF performs markedly better than LIM in terms of the BSS and metric for all examined lead times, and for both El Niño and La Niña events. The scores in Fig. 5(c) start from 0.3 at forecast initialization (zero lead) for both methods, and while the KAF scores exhibit a monotonic decrease with lead time, in the case of LIM they exhibit an oscillatory behavior, hovering around 0.25 values. The latter behavior, in conjunction with a steady decrease of BSS and seen in Fig. 5(a,b), is a manifestation of the fact that, as the lead time grows, LIM produces biases, likely due to dynamical model error. Note, in particular, that a forecast of simultaneously high ignorance (i.e., large error) and high precision, as the LIM forecast at late times, must necessarily be statistically biased as it underestimates uncertainty. In contrast, the KAF-derived results exhibit a simultaneous increase of ignorance and decrease of precision, as expected for an unbiased forecast under complex dynamics with intrinsic predictability limits. Turning to the HadISST results, Fig. 6 again demonstrates that KAF performs noticeably better than LIM for both El Niño and La Niña prediction. Note that the apparent “false positives” in the KAF-based La Niña results around 2017 are not necessarily unphysical. In particular, there are weak La Niña events documented during 2016–2017 and 2017–2018, which are excluded from the true characteristic function for La Niñas, but may exhibit residuals in the approximate characteristic function output by KAF.

Figure 5.

Probabilistic El Niño (solid lines) and La Niña (dashed lines) forecasts in CCSM4 data during simulation years 1100–1300, using KAF (red lines) and LIMs (blue lines). (a–c) BSS and information-theoretic ignorance () and precision () metrics, respectively, as a function of lead time. Magenta lines in (b,c) indicate the entropy thresholds and , respectively. (d–g) Running forecasts of the characteristic function for El Niño/La Niña events, representing conditional probability, for representative lead times in the range 0–9 months. Black lines show the true signal at the corresponding lead times.

Figure 6.

As in Fig. 5(d–g), but for probabilistic El Niño/La Niña forecasts in industrial-era HadISST data during 2007–2017.

In conclusion, this work has demonstrated the efficacy of KAF in statistical ENSO prediction, with a robust improvement in useful prediction horizon in observational data by 3–7 months over LIM approaches, and three appealing characteristics—namely, more skillful forecasts at long lead times with slower reduction of skill, reduced spring predictability barrier, and improved prediction of event onset. Moreover, in the setting of model data with larger sample sizes, the enhanced performance of KAF becomes more pronounced, with skill extending out to 24 month leads. Aside from the higher skill in predicting ENSO indices, the method is also considerably more skillful in probabilistic El Niño/La Niña prediction. We attribute these improvements to the nonparametric nature of KAF, which can consistently approximate optimal prediction functions via conditional expectation in the presence of nonlinear dynamics through a rigorous connection with Koopman operator theory. A combination of this approach with delay-coordinate maps further aids its capability to extract dynamically coherent predictor variables (through kernel eigenfunctions), including seasonal variability associated with ENSO combination modes, representations of higher-order ENSO statistics due to atmospheric noise, and Pacific decadal variability. Even though nonparametric ENSO prediction methods are not particularly common, this study shows that methods such KAF have high potential for skillful ENSO forecasts at long lead times, and can be naturally expected to be advantageous in forecasting other geophysical phenomena and their impacts, particularly in situations where the underlying dynamics is unknown or partially understood.

Methods

Datasets

The observational data used in this study consists of monthly averaged SST fields from the Hadley Centre Sea Ice and Sea Surface Temperature (HadISST) dataset27,39, sampled on a 1° × 1° latitude–longitude grid, and spanning the industrial era, 1870–2017. The modeled SST data are from a 1300-year, pre-industrial control integration of the Community Climate System Model version 4 (CCSM4)28,40, monthly averaged, and sampled on the model’s native ocean grid of approximately 1° nominal resolution. All experiments use SST on the Indo-Pacific longitude-latitude box 28°E–70°W, 30°S–20°N as input predictor data, and the corresponding Niño 3.4 indices as target (predictand) variables. The latter are defined as SST anomalies relative to a monthly climatology (to be specified below) computed from each dataset, spatially averaged over the region 5°N–5°S, 170°–120°W. The number p of spatial gridpoints in the HadISST and CCSM4 Indo-Pacific domains is 11,315 and 31,984, respectively.

Each dataset is divided into disjoint, temporally consecutive, training and verification periods. In the HadISST results in Figs. 1, 3 and 6, these periods are January 1870 to December 1997 and January 1998 to December 2017, respectively. The 2015/16 El Niño forecasts in Fig. 4 use January 1870 to December 2014 for training and May 2015 to May 2017 for verification. In the CCSM4 results in Figs. 2 and 5, the training and verification periods correspond to simulation years 1–1100 and 1101–1300, respectively. In separate calculations, we have removed portions of the training data to perform parameter tuning via hold-out validation. Note that we use hold-out validation as opposed to cross-validation in order to reduce the risk of overfitting the training data and estimating artificial skill in the verification phase. In the HadISST experiments, SST anomalies are computed by subtracting monthly means of the period 1981–2010. In CCSM4, anomalies are computed relative to the monthly means of the 900 yr training periods. See Eq. (8) ahead for an explicit formula relating SST data vectors to Niño 3.4 indices.

Kernel analog forecasting

Kernel analog forecasting (KAF)12,13 is a kernel-based nonparametric forecasting technique for partially observed, nonlinear dynamical systems. Specifically, it addresses the problem of predicting the value of a time series , where and are the forecast initialization and lead times, respectively, given a vector of predictor variables observed at forecast initialization, under the assumption that and are generated by an underlying dynamical system. To make a prediction of at lead time , where q is a non-negative integer and a fixed interval, it is assumed that a time-ordered dataset consisting of pairs , with

| 1 |

and , is available for training. In the examples studied here, is the Niño 3.4 index, is a lagged sequence of Indo-Pacific SST snapshots (to be defined in Eq. (4) below), Δt is equal to 1 month, and N is the number of samples in the training data.

For every such lead time , KAF constructs a forecast function , such that approximates . To that end, it treats and as the values of observables (functions of the state) along a trajectory of an abstract dynamical system (here, the Earth’s climate system), operating on a hidden state space Ω. On Ω, the dynamics is characterized by a flow map , such that corresponds to the state reached after dynamical evolution by time t, starting from a state . Moreover, there exist functions and such that, along a dynamical trajectory initialized at an arbitrary state , we have and . That is, in this picture, and are realizations of random variables, and , referred to as predictor and response variables, respectively, defined on the state space Ω.

It is a remarkable fact, first realized in the seminal work of Koopman41 and von Neumann42 in the 1930s, that the action of a general nonlinear dynamical system on observables such as and can be described in terms of intrinsically linear operators, called Koopman operators. In particular, taking to be the vector space of all real-valued observables on Ω (note that lies in ), the Koopman operator at time is defined as the linear operator mapping to , such that . From this perspective, the problem of forecasting at lead time becomes a problem of approximating the observable ; for, if were known, one could compute , where is the state initializing the observed dynamical trajectory. It should be kept in mind that while is by construction a linear space, on which acts linearly without approximation, the elements of may not (and in general, will not) be linear functions satisfying a relationship such as for and . In fact, in many applications is not a linear set (e.g., it could be a nonlinear manifold), in which case no elements of are linear functions.

In the setting of forecasting with initial data determined through , a practically useful approximation of must invariably be through a function that can be evaluated given values of alone. That is, we must have , where is a real-valued function on data space, referred to above as the forecast function, and . In real-world applications, including the ENSO forecasting problem studied here, the observed data are generally not sufficiently rich to uniquely infer the underlying dynamical state on Ω (i.e., is a non-invertible map). In that case, any forecast function will generally exhibit a form of irreducible error. The goal then becomes to construct optimally given the training data so as to incur minimal error with respect to a suitable metric. Effectively, this is a learning problem for a function in an infinite-dimensional function space, which KAF addresses using kernel methods from statistical learning theory14.

Following the basic tenets of statistical learning theory, and in particular kernel principal component regression, KAF searches for an optimal in a finite-dimensional hypothesis space , of dimension , which is a subspace of a reproducing kernel Hilbert space (RKHS), , of real-valued functions on data space . For our purposes, the key property that the RKHS structure of allows is that a set of orthonormal basis functions for can be constructed from the observed data , such that can be evaluated at any point , not necessarily lying in the set of training points . In particular, can be evaluated for the data observed at forecast initialization. As with every RKHS, is uniquely characterized by a symmetric, positive-definite kernel function , which can be intuitively thought of as a measure of similarity, or correlation, between data points. As a simple example, EOF analysis is based on a covariance kernel, , also known as a linear kernel, but note that the kernel used in our experiments is a Markov-normalized, nonlinear Gaussian kernel (whose construction will be described below).

Associated with any kernel function and the training data is an symmetric, positive-semidefinite kernel matrix K with elements , and a corresponding orthonormal basis of consisting of eigenvectors , satisfying

By convention, we order the eigenvalues in decreasing order. Assuming then that is strictly positive, the basis functions of the hypothesis space are given by

Given these basis functions, the KAF forecast function at lead time is expressed as the linear combination

where the expansion coefficients are determined by regression of the time-shifted response values against the eigenvectors , viz.

One can verify that with this choice of expansion coefficients , minimizes an empirical risk functional over functions in the hypothesis space .

A key aspect of the KAF forecast function is its asymptotic behavior as the number of training data N and the dimension of the hypothesis space grow. In particular, it can be shown13 that if the dynamics on Ω has an ergodic invariant probability measure (i.e., there is a well-defined notion of climatology, which can be sampled from long dynamical trajectories), and the eigenvalues of the kernel matrix K are all strictly positive for every , then under mild regularity assumptions on , , and the kernel (related to continuity and finite variance), converges in a joint limit of followed by to the conditional expectation of the response observable evolved under the Koopman operator for the desired lead time, conditioned on the data available at forecast initialization through X. In particular, it is a consequence of standard results from probability theory and statistics that is optimal in the sense of minimal RMSE among all finite-variance approximations of that depend on the values of X alone. Note that no linearity assumption on the dynamics was made in order to obtain this result. It should also be noted that while, as with many statistical forecasting techniques, stating analogous asymptotic convergence or optimality results in the absence of measure-preserving, ergodic dynamics is a challenging problem, the KAF formulation described above remains well-posed in quite general settings, including the long-term climate change trends present in the observational SST data studied in this work.

Choice of predictors and kernel

Our choice of predictor function X and kernel is guided by two main criteria: (i) X should contain relevant information to ENSO evolution beyond the information present in individual SST snapshots. (ii) should induce rich hypothesis spaces ; in particular, the number of positive eigenvalues (which controls the maximal dimension of ) should grow without bound as the size of the training dataset grows. First, note that the covariance kernel employed in EOF analysis is not suitable from the point of view of the latter criterion, since in that case the number of positive eigenvalues is bounded above by the dimension of the data space (which also bounds the number of linearly independent, linear functions of the input data). This means that one cannot increase the hypothesis space dimension to beyond , even if plentiful data is available, i.e., . In response, following earlier work17,18,43, to construct we start from a kernel of the form , where is a nonlinear shape function, set here to a Gaussian,

with , and a distance-like function on data space. We set d to an anisotropic modification of the Euclidean distance, shown to improve skill in capturing slow dynamical timescales43. Specifically, choosing the dimension of the predictor space to be an even number, and (for reasons that will become clear below) partitioning the predictor vectors into two -dimensional components, i.e., with and column vectors in , we define

| 2 |

Here, is a parameter lying in the interval , ||·|| is the standard (Euclidean) 2-norm, and Δx, , are vectors in given by

Moreover, and are the angles between Δx and and , respectively, so that

The kernel is then Markov-normalized to obtain k using the normalization procedure introduced in the diffusion maps algorithm25. This involves the following steps:

where are the predictor values from the training dataset. With this construction, all eigenvalues of K are positive if the bandwidth parameter is small-enough.

What remains is to specify the predictor function . For that, we follow the popular approach employed, among many techniques, in singular spectrum analysis (SSA)44, extended EOF analysis, and NLSA17,18, which involves augmenting the dimension of data space using the method of delay-coordinate maps24. Specifically, using to denote the function on state space such that

| 3 |

is equal to the values of the SST field sampled at the gridpoints on the Indo-Pacific domain corresponding to climate state , we set

where Q is the number of delays, and . It is well known from dynamical systems theory24 that for sufficiently large , generically becomes in one-to-one correspondence with , meaning that as grows, becomes a more informative predictor than . We then build our final predictor function by concatenating with its one-timestep lag, viz.

| 4 |

In this manner, the quantities and in the anisotropic distance in Eq. (2) measure the local time-tendencies of the predictor data, and due to the presence of the cos θ and cos θ′ terms, takes preferentially larger values on pairs of predictors where the mutual displacement Δx is aligned with the local time-tendencies. Together, the delay-embedding and anisotropic distance contribute to the ability of to identify states that evolve in a similar manner (i.e., are good analogs of one-another) over longer periods of time than kernels operating on individual snapshots.

Elsewhere19,20, it was shown that as increases, the eigenvectors of the corresponding kernel matrix K, and thus the hypothesis spaces , become increasingly adept at capturing intrinsic dynamical timescales associated with the spectrum of the Koopman operator of the dynamical system. In particular, it has been shown21–23 that for interannual embedding windows, , the leading eigenvectors of K become highly efficient at capturing coherent modes of variability associated with ENSO evolution, as well as its linkages with the seasonal cycle and decadal variability. Due to this property, the kernels employed in this work are expected to be useful for ENSO prediction since the associated eigenspaces can capture nonlinear functions of the input data, and within those eigenspaces, meaningful representations of ENSO dynamics are possible using a modest number of eigenfunctions.

Linear inverse models

Under the classical LIM ansatz1, the dynamics of ENSO can be well modeled as linear system driven by stochastic external forcing represented as temporally Gaussian white noise. The method used here to conduct LIM experiments follows closely the procedure described by Penland and Sardeshmukh2. Specifically, the dynamics is governed by a stochastic differential equation

| 5 |

where is the LIM state vector at time t, B is an matrix representing a stable dynamical operator, and W(t) is a Gaussian white noise process.

Let s(t) be an SST vector from Eq. (3), observed at forecast initialization time t, and be the corresponding anomaly vector relative to the monthly climatology at time (computed as described above for the HadISST and CCSM4 datasets). Then, the corresponding LIM state vector is given by projection onto the leading L EOFs , computed for the spatial covariance matrix C based on the anomalies in the training data, i.e.,

| 6 |

where S′ is the data matrix whose -th column is equal to .

The RMSE-optimal estimate for at an arbitrary lead time under the assumed dynamics in Eq. (5) is then given by the solution of the deterministic part with initial data , viz.

where . Given this estimate, the predicted Niño 3.4 index at time is given by

| 7 |

where is the l-th component of , and is the regression coefficient of the Niño 3.4 index against the -th SST principal component time series in the training phase,

Note that because the Niño 3.4 index is a linear function of the Indo-Pacific SST anomalies , forecasts via Eq. (7) are equivalent to (but numerically cheaper than) first computing LIM forecasts of the full Indo-Pacific SST anomalies , and then deriving from these forecasts a predicted Niño 3.4 index. Specifically, let denote the set of indices of the components of lying within the Niño 3.4 region, and the number of elements of . Then, we have

| 8 |

so that

where is the -th component of EOF . Noticing that the -th component of the LIM forecast is given by

it then follows that

| 9 |

form which we deduce that predicting using Eq. (7) is equivalent to Eq. (9). For completeness, we note that had one implemented KAF using the covariance kernel, the eigenvectors of the kernel matrix corresponding to nonzero eigenvalues would be principal components, given by linear projections of the data onto the corresponding EOF from Eq. (6); that is,

| 10 |

We emphasize that Eq. (10) is special to eigenvectors associated with covariance kernels, and in particular does not hold for the nonlinear Gaussian kernels employed in this work.

Returning to the LIM implementation, in practice, is first estimated at some time , , from the training SST data sampled at the times from (1),

| 11 |

and then is computed at the desired lead time by

| 12 |

All LIM-based forecasts reported in this paper were obtained via Eq. (7) with from Eq. (12). Note that under analogous ergodicity assumptions to those employed in KAF, the empirical time averages in Eq. (11) converge to climatological ensemble averages. It should also be noted that, among other approximations, the model structure in Eq. (5) assumes that the dynamics is seasonally independent.

Validation

The main tunable parameters of the KAF method employed here are the number of delays , the Gaussian kernel bandwidth , and the hypothesis space dimension . Here, we use throughout the values , , , and . As stated above, these values were determined by hold-out validation, i.e., by varying the parameters seeking optimal prediction skill in validation datasets. Note that this search was not particularly exhaustive, as we found fairly mild dependence of forecast skill under modest parameter changes around our nominal values.

The LIMs in this work have two tunable parameters, namely in Eq. (12) and the number of principal components employed. We set months and , using the same hold-out validation procedure as in KAF.

Probabilistic forecasting

Our approach for probabilistic El Niño/La Niña forecasting is based on the standard result from probability theory that the conditional probability of a certain event to occur is equal to the conditional expectation of its associated characteristic function. Specifically, let, as above, be the real-valued function on state space Ω such that is equal to the Niño 3.4 index corresponding to climate state . Let also be the 3-month running-averaged Niño 3.4 index, i.e.,

Here, we follow a standard definition for El Niño events35, which declares to be an El Niño state if for a period of five consecutive months about . This leads to the characteristic function , such that if there exists a set of five consecutive integers in the range , such that for all , and otherwise. Similarly, we define a characteristic function for La Niña events, requiring that for a five-month period about . With these definitions, the conditional probabilities for El Ninõ/La Niña, respectively, to occur at lead time , given the predictor vector at forecast initialization time , is equal to the conditional expectation of acted upon by the time- Koopman operator . In particular, because the values of are available to us over the training period, we can estimate using the KAF and LIM methodologies analogously to the Niño 3.4 predictions described above. All probabilistic forecast results reported in this paper were obtained in this manner.

Forecast skill quantification

Let , with and , be the climate states in Ω over a verification period consisting of samples, and and be the corresponding values of the predictor and response (Niño 3.4) functions at lead time . We assess the forecast skill of the prediction function for using the root-mean-square error (RMSE) and Pearson correlation (PC; also known as pattern correlation) scores, defined as

respectively. Here, () and () are the empirical means and standard deviations of (), respectively.

In the case of the probabilistic El Niño/La Niña forecasts, we additionally employ the BSS and relative-entropy scores, which are known to provide natural metrics for assessing the skill of statistical forecasts31–34. In what follows, we outline the construction of these scores in the special case of binary response functions, such as the characteristic functions for El Niño/La Niña events, taking values in the set . First, note that every probability measure on can be characterized by a single real number such that the probability to obtain 0 and 1 is given by and , respectively. Given two such measures characterized by , we define the quadratic distance function

Moreover, under the condition that only if , we define the relative entropy (Kullback-Leibler divergence)

where, by convention, we set or equal to zero whenever or is equal to zero, respectively. This quantity has the properties of being non-negative, and vanishing if and only if . Thus, can be thought of as a distance-like function on probability measures, though note that it is non-symmetric (i.e., in general, ), and does not obey the triangle inequality. Intuitively, can be interpreted as measuring the precision (additional information content) of the probability measure characterized by relative to that characterized by , or, equivalently, the ignorance (lack of information) of relative to .

In order to assess the skill of probabilistic El Niño forecasts, for each state in the verification dataset, we consider three probability measures on , characterized by (i) the predicted probability determined by KAF or LIM; (ii) the climatological probability , which is equal to the fraction of states in the verification dataset corresponding to El Niño events (i.e., those states for which ); and (iii) the true probability equal to the value of the characteristic function indexing the event. Using these probability measures, for each lead time , , we define the instantaneous scores

Among these, and measure the quadratic error of the probabilistic binary forecasts by and the climatological distribution , respectively, relative to the true probability . In particular, vanishes if and only if is equal to 1 whenever is equal to 1 (i.e., if whenever the forecast model predicts an El Niño event, the event actually occurs). Moreover, based on the interpretation of relative entropy stated above, measures the additional precision in the forecast distribution relative to climatology, and the ignorance of the forecast distribution relative to the truth. In our assessment of a forecasting framework such as KAF and LIM we consider time-averaged, aggregate scores over the verification dataset, viz.

Note that is equal to the mean square difference between the characteristic function and the forecast function evaluated on the verification dataset. Similarly, is equal to the mean square difference between and the constant function equal to the climatological probability . As is customary, we normalize by the climatological error , and subtract the result from 1, leading to the Brier skill score31

Note that in the expression above we have tacitly assumed that the climatological forecast has nonzero error, i.e., , which holds true apart from trivial cases. With these definitions, a skillful model for probabilistic El Niño prediction should have small values of , large values of , and small values of .

Let now be defined such that it is equal to 1 if , and 0 if . Note that the binary probability distribution represented by places all probability mass to the outcome in that is least probable with respect to the climatological distribution, which in the present context corresponds to an El Niño event. One can verify that the precision score is bounded above by the relative entropy . The latter quantity provides a natural scale for corresponding to the precision score of a probabilistic forecast that is maximally precise relative to climatology, in the sense of predicting with probability 1 the climatologically least likely outcome. To define a natural scale for , we consider the time-averaged relative entropy , i.e., the average ignorance of the climatological distribution relative to the truth. This quantity sets a natural threshold for useful probabilistic El Niño forecasts, in the sense that such forecasts should have . The BSS and relative entropy scores and thresholds for probabilistic La Niña prediction are derived analogously to their El Niño counterparts using the characteristic function .

Acknowledgements

D.G. received support by NSF grants DMS-1521775, 1551489, 1842538, and ONR YIP grant N00014-16-1-2649. X.W. was supported as a PhD student under the first NSF grant. J.S. was supported as a postdoctoral fellow under the second and third NSF grants.

Author contributions

X.W., J.S. and D.G. designed research. X.W. and J.S. performed numerical experiments. X.W., J.S. and D.G. analyzed the results. X.W. and D.G. wrote the main manuscript. All authors reviewed and edited the manuscript.

Data availability

The datasets analyzed during the current study are available in the Earth System Grid repository, https://www.earthsystemgrid.org/dataset/ucar.cgd.ccsm4.joc.b40.1850.track1.1deg.006.html, and the Haley Centre Ice and Sea Surface Temperature repository, http://www.metoffice.gov.uk/hadobs/hadisst/data/download.html. The datasets generated during the current study are available from the corresponding author on reasonable request.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Penland C, Magorian T. Prediction of Niño 3 sea surface temperatures using linear inverse modeling. J. Climate. 1993;6:1067–1076. doi: 10.1175/1520-0442(1993)006<1067:PONSST>2.0.CO;2. [DOI] [Google Scholar]

- 2.Penland C, Sardeshmukh PD. The optimal growth of tropical sea surface temperature anomalies. J. Climate. 1995;8:1999–2024. doi: 10.1175/1520-0442(1995)008<1999:TOGOTS>2.0.CO;2. [DOI] [Google Scholar]

- 3.Chapman D, Cane MA, Henderson N, Lee DE, Chen C. A vector autoregressive ENSO prediction model. J. Climate. 2015;28:8511–8520. doi: 10.1175/JCLI-D-15-0306.1. [DOI] [Google Scholar]

- 4.Kondrashov D, Kravtsov S, Robertson AW, Ghil M. A hierarchy of data-based ENSO models. J. Climate. 2005;18:4425–4444. doi: 10.1175/JCLI3567.1. [DOI] [Google Scholar]

- 5.Lima CH, Lall U, Jebara T, Barnston AG. Statistical prediction of ENSO from subsurface sea temperature using a nonlinear dimensionality reduction. J. Climate. 2009;22:4501–4519. doi: 10.1175/2009JCLI2524.1. [DOI] [Google Scholar]

- 6.Van den Dool H. Empirical Methods in Short-Term Climate Prediction. Oxford: Oxford University Press; 2006. [Google Scholar]

- 7.Ding H, Newman M, Alexander MA, Wittenberg AT. Skillful climate forecasts of the tropical Indo-Pacific Ocean using model-analogs. J. Climate. 2018;31:5437–5459. doi: 10.1175/JCLI-D-17-0661.1. [DOI] [Google Scholar]

- 8.Ding H, Newman M, Alexander MA, Wittenberg AT. Diagnosing secular variations in retrospective ENSO seasonal forecast skill using CMIP5 model-analogs. Geophys. Res. Lett. 2019;46:1721–1730. doi: 10.1029/2018GL080598. [DOI] [Google Scholar]

- 9.Ham Y-G, Kim J-H, Luo J-J. Deep learning for multi-year ENSO forecasts. Nature. 2019;573:568–572. doi: 10.1038/s41586-019-1559-7. [DOI] [PubMed] [Google Scholar]

- 10.Lorenz EN. Atmospheric predictability as revealed by naturally occurring analogues. J. Atmos. Sci. 1969;26:636–646. doi: 10.1175/1520-0469(1969)26<636:APARBN>2.0.CO;2. [DOI] [Google Scholar]

- 11.LeCun Y, Bengio Y, Hinton G. Deep learning. Nature. 2015;521:436–444. doi: 10.1038/nature14539. [DOI] [PubMed] [Google Scholar]

- 12.Zhao Z, Giannakis D. Analog forecasting with dynamics-adapted kernels. Nonlinearity. 2016;29:2888. doi: 10.1088/0951-7715/29/9/2888. [DOI] [Google Scholar]

- 13.Alexander, R. & Giannakis, D. Operator-theoretic framework for forecasting nonlinear time series with kernel analog techniques. Phys. D, https://arxiv.org/abs/1906.00464, In minor revision (2019).

- 14.Cucker F, Smale S. On the mathematical foundations of learning. Bull. Amer. Math. Soc. 2001;39:1–49. doi: 10.1090/S0273-0979-01-00923-5. [DOI] [Google Scholar]

- 15.Eisner, T., Farkas, B., Haase, M. & Nagel, R. Operator Theoretic Aspects of Ergodic Theory, vol. 272 of Graduate Texts in Mathematics (Springer, 2015).

- 16.Budisić M, Mohr R, Mezić I. Applied Koopmanism. Chaos. 2012;22:047510. doi: 10.1063/1.4772195. [DOI] [PubMed] [Google Scholar]

- 17.Giannakis D, Majda AJ. Comparing low-frequency and intermittent variability in comprehensive climate models through nonlinear Laplacian spectral analysis. Geophys. Res. Lett. 2012;39:L10710. [Google Scholar]

- 18.Giannakis D, Majda AJ. Nonlinear Laplacian spectral analysis for time series with intermittency and low-frequency variability. Proc. Natl. Acad. Sci. 2012;109:2222–2227. doi: 10.1073/pnas.1118984109. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Giannakis D. Data-driven spectral decomposition and forecasting of ergodic dynamical systems. Appl. Comput. Harmon. Anal. 2019;47:338–396. doi: 10.1016/j.acha.2017.09.001. [DOI] [Google Scholar]

- 20.Das S, Giannakis D. Delay-coordinate maps and the spectra of Koopman operators. J. Stat. Phys. 2019;175:1107–1145. doi: 10.1007/s10955-019-02272-w. [DOI] [Google Scholar]

- 21.Slawinska J, Giannakis D. Indo-Pacific variability on seasonal to multidecadal time scales. Part I: Intrinsic SST modes in models and observations. J. Climate. 2017;30:5265–5294. doi: 10.1175/JCLI-D-16-0176.1. [DOI] [Google Scholar]

- 22.Giannakis D, Slawinska J. Indo-Pacific variability on seasonal to multidecadal time scales. Part II: Multiscale atmosphere-ocean linkages. J. Climate. 2018;31:693–725. doi: 10.1175/JCLI-D-17-0031.1. [DOI] [Google Scholar]

- 23.Wang X, Giannakis D, Slawinska J. Antarctic circumpolar waves and their seasonality: Intrinsic traveling modes and ENSO teleconnections. Int. J. Climatol. 2019;39:1026–1040. doi: 10.1002/joc.5860. [DOI] [Google Scholar]

- 24.Sauer T, Yorke JA, Casdagli M. Embedology. J. Stat. Phys. 1991;65:579–616. doi: 10.1007/BF01053745. [DOI] [Google Scholar]

- 25.Coifman RR, Lafon S. Diffusion maps. Appl. Comput. Harmon. Anal. 2006;21:5–30. doi: 10.1016/j.acha.2006.04.006. [DOI] [Google Scholar]

- 26.Kleeman R, Moore AW, Neville RS. Assimilation of subsurface thermal data into a simple ocean model for the initialization of an intermediate tropical coupled ocean–atmosphere forecast model. J. Climate. 1995;123:3103–3113. [Google Scholar]

- 27.Rayner, N. A. et al. Global analyses of sea surface temperature, sea ice, and night marine air temperature since the late nineteenth century. J. Geophys. Res. 108 (2003).

- 28.Gent PR, et al. The Community Climate System Model version 4. J. Climate. 2011;24:4973–4991. doi: 10.1175/2011JCLI4083.1. [DOI] [Google Scholar]

- 29.Deser C, et al. ENSO and Pacific decadal variability in the Community Climate System Model Version 4. J. Climate. 2012;25:2622–2651. doi: 10.1175/JCLI-D-11-00301.1. [DOI] [Google Scholar]

- 30.Brier GW. Verification of forecasts expressed in terms of probability. Mon. Wea. Rev. 1950;78:1–3. doi: 10.1175/1520-0493(1950)078<0001:VOFEIT>2.0.CO;2. [DOI] [Google Scholar]

- 31.Weigel AP, Liniger MA, Appenzeller C. The discrete Brier and ranked probability skill scores. Mon. Wea. Rev. 2007;135:118–124. doi: 10.1175/MWR3280.1. [DOI] [Google Scholar]

- 32.Kleeman R. Measuring dynamical prediction utility using relative entropy. J. Atmos. Sci. 2002;59:2057–2072. doi: 10.1175/1520-0469(2002)059<2057:MDPUUR>2.0.CO;2. [DOI] [Google Scholar]

- 33.DelSole T, Tippett MK. Predictability: Recent insights from information theory. Rev. Geophys. 2007;45:RG4002. doi: 10.1029/2006RG000202. [DOI] [Google Scholar]

- 34.Giannakis D, Majda AJ, Horenko I. Information theory, model error, and predictive skill of stochastic models for complex nonlinear systems. Phys. D. 2012;241:1735–1752. doi: 10.1016/j.physd.2012.07.005. [DOI] [Google Scholar]

- 35.L’Heureux ML, et al. Observing and predicting the 2015/16 El Niño. Bull. Amer. Meteorol. Soc. 2017;98:1363–1382. doi: 10.1175/BAMS-D-16-0009.1. [DOI] [Google Scholar]

- 36.Barnston, A. G., Tippett, M. K., Ranganathan, M. & L’Heureux, M. L. Deterministic skill of ENSO predictions from the North American Multimodel Ensemble. Climate Dyn. 53, 7215–7234 (2019). [DOI] [PMC free article] [PubMed]

- 37.Barnston AG, Ropelewski CF. Prediction of ENSO episodes using canonical correlation analysis. J. Climate. 1991;5:1316–1345. doi: 10.1175/1520-0442(1992)005<1316:POEEUC>2.0.CO;2. [DOI] [Google Scholar]

- 38.Stuecker MF, Timmermann A, Jin F-F, McGregor S, Ren H-L. A combination mode of the annual cycle and the El Niño/Southern Oscillation. Nat. Geosci. 2013;6:540–544. doi: 10.1038/ngeo1826. [DOI] [Google Scholar]

- 39.HadISST. Hadley Centre Sea Ice and Sea Surface Temperature (HadISST1) data, http://www.metoffice.gov.uk/hadobs/hadisst/data/download.html, Accessed March 2019 (2013).

- 40.CCSM. Community Climate System Model Version 4 (CCSM4) data, https://www.earthsystemgrid.org/dataset/ucar.cgd.ccsm4.joc.b40.1850.track1.1deg.006.html, Accessed March 2019 (2010).

- 41.Koopman BO. Hamiltonian systems and transformation in Hilbert space. Proc. Natl. Acad. Sci. 1931;17:315–318. doi: 10.1073/pnas.17.5.315. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42.Kooopman BO, von Neumann J. Dynamical systems of continuous spectra. Proc. Natl. Acad. Sci. 1931;18:255–263. doi: 10.1073/pnas.18.3.255. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 43.Giannakis D. Dynamics-adapted cone kernels. SIAM J. Appl. Dyn. Sys. 2015;14:556–608. doi: 10.1137/140954544. [DOI] [Google Scholar]

- 44.Ghil, M. et al. Advanced spectral methods for climatic time series. Rev. Geophys. 40 (2002).

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Data Availability Statement

The datasets analyzed during the current study are available in the Earth System Grid repository, https://www.earthsystemgrid.org/dataset/ucar.cgd.ccsm4.joc.b40.1850.track1.1deg.006.html, and the Haley Centre Ice and Sea Surface Temperature repository, http://www.metoffice.gov.uk/hadobs/hadisst/data/download.html. The datasets generated during the current study are available from the corresponding author on reasonable request.